Abstract

We show how to apply a general theoretical approach to nonequilibrium statistical mechanics, called Maximum Caliber, originally suggested by E. T. Jaynes [Annu. Rev. Phys. Chem. 31, 579 (1980)], to a problem of two-state dynamics. Maximum Caliber is a variational principle for dynamics in the same spirit that Maximum Entropy is a variational principle for equilibrium statistical mechanics. The central idea is to compute a dynamical partition function, a sum of weights over all microscopic paths, rather than over microstates. We illustrate the method on the simple problem of two-state dynamics, A↔B, first for a single particle, then for M particles. Maximum Caliber gives a unified framework for deriving all the relevant dynamical properties, including the microtrajectories and all the moments of the time-dependent probability density. While it can readily be used to derive the traditional master equation and the Langevin results, it goes beyond them in also giving trajectory information. For example, we derive the Langevin noise distribution rather than assuming it. As a general approach to solving nonequilibrium statistical mechanics dynamical problems, Maximum Caliber has some advantages: (1) It is partition-function-based, so we can draw insights from similarities to equilibrium statistical mechanics. (2) It is trajectory-based, so it gives more dynamical information than population-based approaches like master equations; this is particularly important for few-particle and single-molecule systems. (3) It gives an unambiguous way to relate flows to forces, which has traditionally posed challenges. (4) Like Maximum Entropy, it may be useful for data analysis, specifically for time-dependent phenomena.

INTRODUCTION

While the theoretical foundations of statistical mechanics of the equilibrium state are well established,1 there seems to be no unique and generally accepted formulation of the nonequilibrium state.2, 3, 4 Rather, there are various well-understood approaches to nonequilibrium statistical mechanics, each of which is plagued by some deficiencies. For example, master-equation methods give differential equations that can be solved for time-dependent probabilities of states. However, in systems having only small numbers of particles, dynamical fluctuations can be so large that mean probabilities, which are smooth, continuous, and differentiable quantities, are not the natural language for the dynamics. Moreover, probability distribution-based methods do not give information about individual particle trajectories. The Langevin equation, on the other hand, does give trajectory information, but it is usually restricted in various ways. Analytical Langevin modeling is challenged by nonlinear dynamical problems2 and is usually based on assuming noise that is white and uncorrelated or Gaussian. Hence, as a matter of principle, it would be useful to have a single unified approach to nonequilibrium statistical mechanics (a) from which both distribution-based and trajectory-based approaches can be derived, (b) which is not restricted to near equilibrium, to linear systems, or simple kinds of noise, and (c) from which the properties of fluctuations can be derived rather than assumed. Furthermore, it is desirable to have a variational principle for dynamics that would serve the same role that Maximum Entropy and the Second Law serve for problems of equilibrium.

Here, we explore such a variational approach, called Maximum Caliber. It was originally suggested by Jaynes5 as a generalization of his Maximum Entropy Formulation. To illustrate its full range of predictions, we apply this approach to one of the simplest problems of dynamics, the two-state system, A↔B. Caliber may ultimately be useful for systems, such as in biology, nanotech, and single-molecule experiments, where the number of particles is small and where there is some interest in knowing the distribution of trajectories.6, 7

In this paper, we focus on dynamics, not statics. However, our strategy follows so closely the derivation of the Boltzmann distribution law of equilibrium statistical mechanics of Jaynes8, 9, 10 that we first show the Jaynes treatment of equilibria, called Maximum Entropy (MaxEnt). To derive the Boltzmann law, MaxEnt starts from a given set of equilibrium microstates j=1,2,3,…,N that are relevant to the problem at hand. We aim to compute the probabilities pj of those microstates in equilibrium. We define the entropy, S, of the system as

$$S = -k_B \sum_j p_j \ln p_j \tag{1.1}$$

where kB is Boltzmann’s constant. The equilibrium probabilities, pj*, are those values of pj that cause the entropy to be maximal, subject to two constraints:

$$\sum_j p_j = 1 \tag{1.2}$$

which is a normalization condition that ensures that the probabilities pj sum to one, and

$$\sum_j p_j E_j = \langle E \rangle \tag{1.3}$$

which says that the energies, when averaged over all the microstates, sum to the macroscopically observable average energy. This is equivalent to the statement that the temperature is constant. Introducing Lagrange multipliers μ and β to enforce constraints 1.2, 1.3, we maximize the function

$$-\sum_j p_j \ln p_j + \mu \sum_j p_j - \beta \sum_j p_j E_j \tag{1.4}$$

which leads to the equilibrium probabilities

$$p_j^{*} = \frac{e^{-\beta E_j}}{Q} \tag{1.5}$$

where Q=∑j exp(−βEj) is the partition function. By using the thermodynamic expression d⟨E⟩=TdS with 1.1, 1.3, we readily obtain β=1∕kBT. This MaxEnt derivation of the Boltzmann distribution law provides a simple, compact, and transparent variational principle for computing the equilibrium probabilities of the microstates. The basic idea is that, by maximizing Eq. 1.4, we select the distribution with the greatest multiplicity that agrees with the given information 1.2, 1.3.

Following this idea, the generalization of MaxEnt to time-dependent problems is—at least in principle—a straightforward matter.5, 11, 12 In this case we have some time-dependent quantities An with averages

$$\langle A_n \rangle = \sum_j p_j(t)\, A_{nj}, \qquad n = 1, \ldots, L \tag{1.6}$$

Instead of the equilibrium probability pj of a microstate in Eq. 1.4, the pj(t) now denote the probability of a microtrajectory, e.g., a specific single-particle trajectory. As a consequence, the resulting entropy ∝∑jpj(t)ln pj(t) will be a functional or path integral13 of the {pj(t)}. In direct analogy to the equilibrium case [Eq. 1.4], we construct the quantity

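$$C = -\sum_j p_j(t) \ln p_j(t) + \mu \sum_j p_j(t) + \sum_{n=1}^{L} \lambda_n \sum_j p_j(t)\, A_{nj} \tag{1.7}$$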

where Lagrange multipliers μ and λn enforce that the distribution is normalized and that the averages 1.6 are satisfied. Jaynes called this quantity “Caliber,” since it refers to the cross sectional area of a tube, which partly determines the flow in a dynamic process.5 To find the weights of the individual dynamical paths, pj(t), we maximize the Caliber 1.7 by setting δC∕δpj=0. This gives for the path weights

$$p_j = Q_d^{-1} \exp\{\lambda_1 A_{1j} + \cdots + \lambda_L A_{Lj}\} \tag{1.8}$$

where Qd=∑jexp{λ1A1j+⋯+λLALj} denotes the dynamical partition function. In complete analogy to MaxEnt, by maximizing Eq. 1.7, we select the path distribution with the greatest multiplicity that agrees with the given information 1.2, 1.6. This path distribution then determines the time evolution of all time-dependent observables of the system.

Here is how Caliber is applied to a given dynamical problem. First, we are given a set of trajectories (for example, from a model) and a set of values, Anj, characterizing the property An for trajectory j. We take as given (for example, from experiments) L first-moment quantities An. Maximizing the Caliber via δC∕δpj=0 gives L equations that can be solved for the L unknowns λn. Finally, substituting these quantities λn into Eq. 1.8 gives the dynamical partition function Qd and the trajectory populations pj. Those quantities, in turn, can then be used to obtain all the other dynamical distribution properties of interest.
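As a rough illustration of this recipe, the sketch below solves for a single Lagrange multiplier λ by bisection so that the path average of one observable matches a prescribed value, and then evaluates the path weights in the form of Eq. 1.8. The observable values `a_values`, the target mean, and all function names are hypothetical and chosen only for illustration; they are not taken from the two-state model treated below.

```python
import math

def path_probabilities(lam, a_values):
    """Path weights p_j = exp(lam * A_j) / Q_d for a single observable A."""
    # Subtracting the largest exponent leaves p_j unchanged but avoids overflow.
    shift = max(lam * a for a in a_values)
    weights = [math.exp(lam * a - shift) for a in a_values]
    q = sum(weights)
    return [w / q for w in weights]

def mean_observable(lam, a_values):
    """Path average <A> = sum_j p_j A_j at multiplier lam."""
    probs = path_probabilities(lam, a_values)
    return sum(p * a for p, a in zip(probs, a_values))

def solve_multiplier(target, a_values, lo=-50.0, hi=50.0, tol=1e-12):
    """Bisection on lam; <A> grows monotonically with lam (its slope is the variance of A)."""
    if not (min(a_values) < target < max(a_values)):
        raise ValueError("target must lie strictly between min(A_j) and max(A_j)")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean_observable(mid, a_values) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical data: eight paths, each labeled by one integer-valued observable.
a_values = [0, 1, 1, 2, 1, 2, 2, 3]
target_mean = 1.2

lam = solve_multiplier(target_mean, a_values)
probs = path_probabilities(lam, a_values)
```

With several constraints the same idea applies, but the L multipliers would have to be found together, for example with a multidimensional root finder.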

This derivation makes no assumptions that a system is near equilibrium, or about separations of time scales, or about the linearity or nonlinearity of relationships between forces and flows, or about the nature of distributions of noise or fluctuations. The Caliber method is quite general in principle, although for many problems, similar to equilibrium statistical mechanics, analytical solutions will not be possible and it may be necessary to resort to numerical methods of solution. The approach has been subject to some formal study,11, 12, 14, 15 but practical applications and tests of it have been largely unexplored. Only recently, the principle of Maximum Caliber has been experimentally verified for the problem of nanodiffusion16, 17 and for a single bead trapped in a double well potential.18 In this work, we illustrate the Caliber approach more specifically through application to two-state dynamical systems.

THE DYNAMICAL PARTITION FUNCTION

Definition

Consider a Brownian-driven classical two-state system A↔B. Consider, first, the trajectory of a single particle (Fig. 1). We divide time into discrete units Δt. Each possible trajectory has N time steps, so the time duration of each trajectory is t=NΔt.

Figure 1.

One possible trajectory of a single particle that alternates stochastically between states A and B as a function of time.

There are four rate quantities that are of interest: Nabj, the number of transitions (over the full course of the N time intervals from time 0 to t, of one particular trajectory j) that have occurred from state B to state A; Nbaj, the number of transitions from A to B along trajectory j; Naaj, the number of “transitions” from state A to state A; and Nbbj, the number of transitions from B to B during a trajectory. Once the populations pj of the individual trajectories are known, the average numbers of such transitions can be computed from

$$\langle N_{xy} \rangle = \sum_j p_j N_{xyj}, \qquad xy \in \{ab,\, ba,\, aa,\, bb\} \tag{2.1}$$

Hence, quantities such as ⟨Nab⟩∕N are rates; these are the numbers of such transitions per unit time. Other quantities are obtainable from these. For example, for a trajectory having N time steps, the fraction of time that the system spends in state A can be expressed as ⟨NA∕N⟩=⟨(Naa+Nab)∕N⟩. In our present simple example, we consider steady-state situations in which each such average rate is a fixed number and is not, itself, a time-varying quantity. However, as we show below (see Sec. 4C), the Caliber method is general and can treat arbitrary time dependencies.

For the two-state system, the path weights are given by Caliber [Eq. 1.8],

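$$p_j = Q_d^{-1}\, \gamma_{ab}^{N_{abj}}\, \gamma_{ba}^{N_{baj}}\, \gamma_{aa}^{N_{aaj}}\, \gamma_{bb}^{N_{bbj}} \tag{2.2}$$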

where, to keep the notation as simple as possible, we have converted to different variables, γab=eλ1, γba=eλ2, γaa=eλ3, and γbb=eλ4. The dynamical partition function

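$$Q_d = \sum_j \gamma_{ab}^{N_{abj}}\, \gamma_{ba}^{N_{baj}}\, \gamma_{aa}^{N_{aaj}}\, \gamma_{bb}^{N_{bbj}} \tag{2.3}$$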

is a sum over the dynamical weights of all the trajectories. Each dynamical weight is a product of factors describing that trajectory: γba is the probability that during the time interval Δt, the system was in state A and switches to state B, γab is the probability that the system was in state B and switches to state A, γaa is the probability that the system was in state A and stays in state A, and γbb is the probability of staying in state B. Without loss of generality, we will consider trajectories that start at time t=0 in state A. To illustrate, in a simple system involving only three time steps (N=3), there are eight possible paths, giving the following partition sum over those path weights:

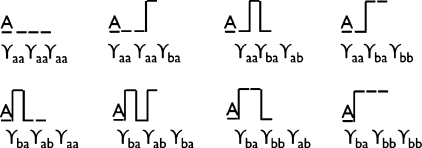

these paths and their weights are illustrated in Fig. 2.

Figure 2.

All the possible two-state trajectories of N=3 time steps for a system starting in state A, with their corresponding statistical weights.
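The N=3 partition sum can be reproduced by brute-force enumeration. The following is a minimal sketch, assuming the illustrative values γba=1∕10 and γab=1∕20 used later in the text; the dictionary `g`, keyed as `(to, from)` to mirror the subscript order of γ, and the function names are hypothetical.

```python
from itertools import product

def path_weight(path, g):
    """Product of one-step factors g[(to, from)] along a path such as 'AABA'."""
    w = 1.0
    for prev, nxt in zip(path, path[1:]):
        w *= g[(nxt, prev)]
    return w

def enumerate_paths(n_steps, g, start="A"):
    """Return {path: weight} for all 2**n_steps paths that begin in `start`."""
    weights = {}
    for tail in product("AB", repeat=n_steps):
        path = start + "".join(tail)
        weights[path] = path_weight(path, g)
    return weights

# Assumed illustrative values: gamma_ba = 1/10 (A -> B), gamma_ab = 1/20 (B -> A).
gamma_ba, gamma_ab = 0.10, 0.05
g = {
    ("A", "A"): 1.0 - gamma_ba,  # gamma_aa: stay in A
    ("B", "A"): gamma_ba,        # gamma_ba: switch A -> B
    ("A", "B"): gamma_ab,        # gamma_ab: switch B -> A
    ("B", "B"): 1.0 - gamma_ab,  # gamma_bb: stay in B
}

weights = enumerate_paths(3, g)                           # the eight N=3 paths
Q_d = sum(weights.values())                               # dynamical partition sum
P_A = sum(w for p, w in weights.items() if p[-1] == "A")  # weight of paths ending in A
```

Because the number of paths doubles with every time step, this direct enumeration is practical only for short trajectories.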

Collecting up the results above into a more compact matrix notation gives

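$$Q_d = \begin{pmatrix} 1 & 1 \end{pmatrix} G^N \begin{pmatrix} 1 \\ 0 \end{pmatrix} \tag{2.4}$$

with initial state (1, 0)ᵀ (start in A) and final state (1, 1) (end in A or B) and where

$$G = \begin{pmatrix} \gamma_{aa} & \gamma_{ab} \\ \gamma_{ba} & \gamma_{bb} \end{pmatrix} \tag{2.5}$$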

is the matrix of transition probabilities between the two states. Of these four variables, note that only two are independent because of the conservation relationships:

$$\gamma_{aa} + \gamma_{ba} = 1, \qquad \gamma_{ab} + \gamma_{bb} = 1 \tag{2.6}$$

That is, for example, if the particle is in state A at time t, then at time t+Δt, the particle must be either in state A or B.

What are the probabilities PA(t) and PB(t) that the system is in state A or state B, respectively, at time t? We can readily obtain these probabilities from the dynamical partition function. Suppose the system starts in state A at time t=0 with probability PA(0) and in B with probability PB(0). To compute the state populations at time t, we multiply by the propagator matrix G for each of the N time steps to get

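$$\begin{pmatrix} P_A(t) \\ P_B(t) \end{pmatrix} = G^N \begin{pmatrix} P_A(0) \\ P_B(0) \end{pmatrix} \tag{2.7}$$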

Since PA(t)+PB(t)=1, it follows from Eq. 2.7 that the partition function is normalized,

$$Q_d = 1 \tag{2.8}$$

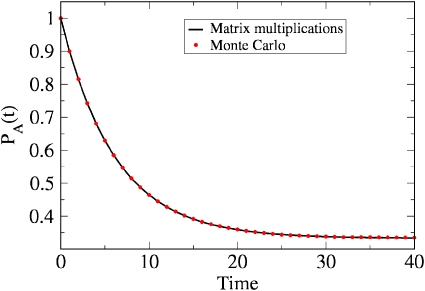

As an illustration, Fig. 3 shows the time evolution of PA(t), given that γba=1∕10 and γab=1∕20. As expected, PA(t) decays at a rate γba+γab=3∕20.

Figure 3.

Time evolution of population probability PA(t) as obtained from Eq. 2.7, starting from state A and assuming transition probabilities γba=ka=1∕10 and γab=kb=1∕20 for illustration. The system relaxes with a decay rate of γba+γab=3∕20 and approaches equilibrium, PA(∞)=1−⟨NB⟩eq∕N=γab∕(γba+γab)=1∕3, as expected. Also shown is the result of a dynamical Monte Carlo simulation (dotted line), which agrees well with the matrix multiplication method (solid line), when 10^6 trajectories are employed.
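The relaxation described above can be checked with a few lines of code. The sketch below applies the one-step propagator repeatedly, assuming Δt=1 and the same illustrative values of γba and γab; the function names are hypothetical. For comparison it also evaluates the closed form PA(t)=PA(∞)+[PA(0)−PA(∞)](1−γba−γab)^t, which is the standard solution of a two-state chain in discrete time and is added here as an aid, not quoted from the text.

```python
def step(p, gamma_ba, gamma_ab):
    """One time step: (P_A, P_B) -> G (P_A, P_B), with gamma_aa = 1 - gamma_ba, gamma_bb = 1 - gamma_ab."""
    p_a, p_b = p
    return ((1.0 - gamma_ba) * p_a + gamma_ab * p_b,
            gamma_ba * p_a + (1.0 - gamma_ab) * p_b)

def propagate(p0, n_steps, gamma_ba, gamma_ab):
    """List of (P_A, P_B) at t = 0, 1, ..., n_steps."""
    traj = [p0]
    for _ in range(n_steps):
        traj.append(step(traj[-1], gamma_ba, gamma_ab))
    return traj

def p_a_closed_form(t, gamma_ba, gamma_ab, p_a0=1.0):
    """Discrete-time solution; the per-step decay factor is 1 - (gamma_ba + gamma_ab)."""
    p_inf = gamma_ab / (gamma_ba + gamma_ab)
    return p_inf + (p_a0 - p_inf) * (1.0 - gamma_ba - gamma_ab) ** t

gamma_ba, gamma_ab = 0.10, 0.05
traj = propagate((1.0, 0.0), 400, gamma_ba, gamma_ab)
```

For small transition probabilities the per-step factor 1−(γba+γab) is close to exp[−(γba+γab)], which is the sense in which the decay rate is 3∕20.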

Another quantity of interest is the conditional probability, PA(t2∣t1), that the system is in state A at time t2, given that it was in state A at time t1:

| (2.9) |

Some properties are derivatives of the dynamical partition function

It is readily verified from Eq. 2.3 that various average quantities and higher moments can be calculated as derivatives of the partition function. For example, we can get the average number of switching transitions, Nba, from

$$\langle N_{ba} \rangle = \frac{\partial \ln Q_d}{\partial \ln \gamma_{ba}} \tag{2.10}$$

(and similarly for the other quantities Nab, Naa, and Nbb; see Appendix A). In the equilibrium limit, we can readily derive closed-form expressions for the moments. For example, NB∕N=(Nba+Nbb)∕N is the fraction of the time NΔt that the system spends in state B. Appendix A shows that in this limit, as N→∞, we have

| (2.11) |

| (2.12) |

It is worth noting that these equations imply detailed balance, i.e., ⟨NB⟩eqγab=⟨NA⟩eqγba.
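The derivative relation for ⟨Nba⟩ can be verified numerically. In the sketch below, the four γ factors are treated as independent variables while differentiating and the result is evaluated at normalized values, which is an assumption about how the derivative is meant to be taken. The derivative is approximated by a central difference in ln γba and compared with a direct count, the sum over time steps of PA times γba. Function names and the step size `h` are hypothetical.

```python
import math

def partition_function(n_steps, g_aa, g_ab, g_ba, g_bb):
    """Q_d: summed weight of all n_steps-step paths starting in A, with four independent factors."""
    w_a, w_b = 1.0, 0.0  # summed weights of partial paths currently ending in A or in B
    for _ in range(n_steps):
        w_a, w_b = g_aa * w_a + g_ab * w_b, g_ba * w_a + g_bb * w_b
    return w_a + w_b

def mean_n_ba_from_derivative(n_steps, g_aa, g_ab, g_ba, g_bb, h=1e-5):
    """Central-difference estimate of d ln Q_d / d ln gamma_ba."""
    up = partition_function(n_steps, g_aa, g_ab, g_ba * math.exp(h), g_bb)
    down = partition_function(n_steps, g_aa, g_ab, g_ba * math.exp(-h), g_bb)
    return (math.log(up) - math.log(down)) / (2.0 * h)

def mean_n_ba_direct(n_steps, g_ab, g_ba):
    """Expected number of A -> B switches: sum over steps of P_A(t) * gamma_ba."""
    p_a, p_b, total = 1.0, 0.0, 0.0
    for _ in range(n_steps):
        total += p_a * g_ba
        p_a, p_b = (1.0 - g_ba) * p_a + g_ab * p_b, g_ba * p_a + (1.0 - g_ab) * p_b
    return total

# Assumed illustrative values: gamma_ba = 1/10, gamma_ab = 1/20, N = 3 steps.
n_ba_deriv = mean_n_ba_from_derivative(3, 0.90, 0.05, 0.10, 0.95)
n_ba_direct = mean_n_ba_direct(3, 0.05, 0.10)
```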

Other derivatives of the dynamical partition function are also useful—mixed moments, for example. Central to equilibrium thermodynamics is the set of reciprocal relationships known as Maxwell’s relations, which involve equalities among mixed second derivatives of the partition function. The importance of Maxwell’s relations lies in the fact that we often want to know the quantity on one side of such equalities, but we are only able to measure the quantity on the other side. Here, we show that Caliber gives similar mixed second derivative equalities, except here it is for dynamical properties rather than for equilibria. For example,

| (2.13) |

Perhaps expressions such as Eq. 2.13 will be useful for dynamics in the same way that Maxwell’s relations are for equilibria.

A chemical fluctuation theorem

Of much interest in nonequilibrium statistical mechanics are fluctuation theorems.15, 17, 19, 20 A fluctuation theorem relates the probability Pf of a forward trajectory to the probability Pr of the corresponding reverse trajectory in a dynamical system. From Caliber, we can readily calculate such ratios for our two-state system. The dynamical partition function 2.3 gives the ratio of the populations of forward to reverse trajectories as

| (2.14) |

where we have assumed, for the purpose of calculation, that the forward trajectory starts in state A and ends in state B. Employing Eq. 2.11 for the equilibrium populations PA(∞) and PB(∞) gives

| (2.15) |

where SA and SB denote the entropies over the populations of states A and B, respectively. This simple derivation gives the fluctuation theorem for the two-state system,

| (2.16) |

showing the more favorable routes are exponentially more populated than their reverse trajectories.

Other dynamical quantities can be obtained from the dynamical partition function

Other properties that are not simple derivatives of Qd can also be obtained from the dynamical partition function. One such property is the probability P(NB,t) that the particle has spent exactly NB time steps in state B over the time course from time t′=0 to t. Another example is the probability P(Nba,t) that the particle has had exactly Nba switches during the trajectory. Or, because of its relationship to the equilibrium constant K=NB∕NA, we may be interested in the dynamical distribution of the quantity P(NB∕NA,t). Computing these properties requires a way to “pick out” certain specific trajectories from the partition sum. Expressed in terms of Kronecker delta functions, these are

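$$P(N_B, t) = \sum_j p_j\, \delta_{N_B,\, N_{Bj}} \tag{2.17}$$

$$P(N_{ba}, t) = \sum_j p_j\, \delta_{N_{ba},\, N_{baj}} \tag{2.18}$$

$$P(N_B/N_A, t) = \sum_j p_j\, \delta_{N_B/N_A,\, N_{Bj}/N_{Aj}} \tag{2.19}$$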

Recalling from Eq. 2.2 that the path weights pj depend on the variables Nabj, Nbaj, Naaj, and Nbbj, we can calculate, say, P(Nab,t), by simply summing over all the particular paths j that take on the particular value of interest, Nabj=Nab:

| (2.20) |

where gj=g(Nabj,Nbaj,Naaj,Nbbj) denotes the multiplicity of paths j that have these particular values of the four quantities.

Although the direct enumeration of paths is straightforward in principle, it becomes cumbersome for large N, since the number of paths grows exponentially with the length of the trajectory. In these cases, such averages can be obtained using a dynamical Monte Carlo scheme instead.21, 22, 23 In a direct generalization of standard equilibrium Monte Carlo, we can sample the nonequilibrium dynamics by comparing the rates of individual time steps with random numbers (see Ref. 23 for a recent review). For example, the Gillespie algorithm describes a random walk in state space that reproduces the correct distribution of the master equation of the process.21 For our single-particle two-state system, we can use a particularly simple dynamical Monte Carlo scheme. At each time step, we draw a random number r, which is compared to the transition probability γba (when the system is in state A) or γab (when it is in state B). If the transition probability is larger than r, the system makes a transition to the new state; otherwise, the system stays in its previous state. Figure 3 shows that the Monte Carlo approach gives good agreement with the matrix multiplication method, when 10^6 trajectories are employed.
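The single-particle scheme just described can be sketched as follows. This is a minimal illustration, not the code used for the figures: the function names, the seed, and the number of trajectories (far fewer than 10^6, to keep the run short) are assumptions, and r is taken to be uniform on [0, 1).

```python
import random

def simulate_trajectory(n_steps, gamma_ba, gamma_ab, rng, start="A"):
    """One stochastic path; at each step compare a uniform random number r with the switching probability."""
    state = start
    path = [state]
    for _ in range(n_steps):
        r = rng.random()
        p_switch = gamma_ba if state == "A" else gamma_ab
        if p_switch > r:  # switch only if the transition probability exceeds r
            state = "B" if state == "A" else "A"
        path.append(state)
    return path

def estimate_p_a(n_traj, n_steps, gamma_ba, gamma_ab, seed=0):
    """Fraction of trajectories found in state A at each time step."""
    rng = random.Random(seed)
    counts = [0] * (n_steps + 1)
    for _ in range(n_traj):
        path = simulate_trajectory(n_steps, gamma_ba, gamma_ab, rng)
        for t, s in enumerate(path):
            if s == "A":
                counts[t] += 1
    return [c / n_traj for c in counts]

# Assumed illustrative values: gamma_ba = 1/10, gamma_ab = 1/20.
p_a_mc = estimate_p_a(n_traj=20000, n_steps=20, gamma_ba=0.10, gamma_ab=0.05)
```

The same trajectories can be reused to histogram quantities such as NB or Nba, since each stored path contains the full sequence of states.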

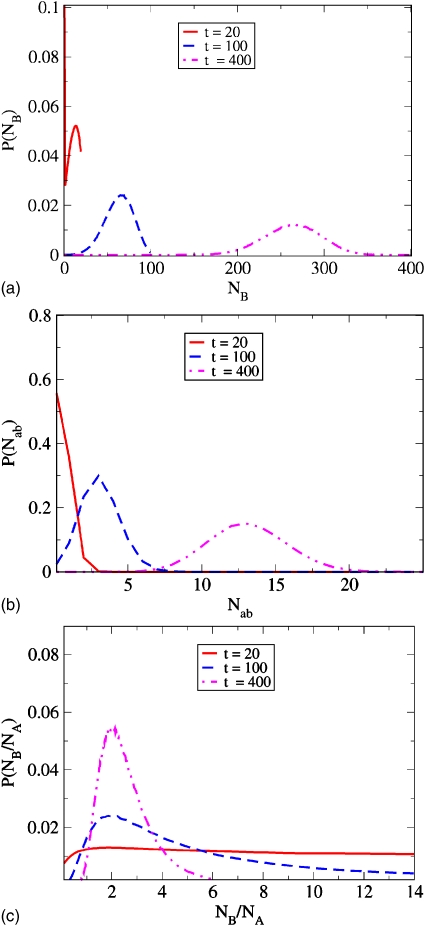

Adopting again our simple example with γba=1∕10 and γab=1∕20, Fig. 4 shows how the distributions P(NB,t), P(Nba,t), and P(NB∕NA,t) begin sharply peaked when the system is initiated in state A, and remain asymmetrical as they shift with time toward their equilibrium distributions (t≳400). Interestingly, we find a nonzero third moment of these distributions, even in the limit of long times. This implies that they are not exactly Gaussian, as the corresponding Langevin modeling would normally assume (although the deviation is quite small). For example, we obtain ⟨(Nba−⟨Nba⟩)^3⟩∕N=0.00686 and 0.00691 from Eq. A15 and the Monte Carlo simulations, respectively. At long times, we find that P(NB∕NA,t) peaks at the expected equilibrium coefficient value, NB∕NA=2.

Figure 4.

Time evolution of distributions P(NB,t) (top), P(Nab,t) (middle), and P(NB∕NA,t) (below).

DERIVING EQUATIONS OF MOTION FROM CALIBER

Our premise in this paper is to use Caliber as a foundational principle from which we can derive dynamical properties. A standard way to treat dynamics is through master equations and Langevin equations.

Master equation

Master equations are among the most common modeling approaches in nonequilibrium statistical mechanics. These are differential equations that express the governing dynamics of state probabilities, such as PA(t) or PB(t) in the two-state system. Here, we show how to derive the master equation for this problem from Caliber’s trajectory-based dynamical partition function. We aim to compute quantities such as dPA∕dt and dPB∕dt. For the single time step Δt=1 from t to t+1, Caliber Eq. 2.7 gives

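$$\begin{aligned} P_A(t+1) &= \gamma_{aa} P_A(t) + \gamma_{ab} P_B(t), \\ P_B(t+1) &= \gamma_{ba} P_A(t) + \gamma_{bb} P_B(t) \end{aligned} \tag{3.1}$$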

Converting from the γ notation to the more familiar rate-coefficient notation, ka and kb, gives

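$$\begin{aligned} P_A(t+1) &= (1 - k_a) P_A(t) + k_b P_B(t), \\ P_B(t+1) &= k_a P_A(t) + (1 - k_b) P_B(t) \end{aligned} \tag{3.2}$$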

leading to the well-known master equation for this problem

$$\frac{dP_A}{dt} = -k_a P_A + k_b P_B, \qquad \frac{dP_B}{dt} = k_a P_A - k_b P_B \tag{3.3}$$

where PA and PB on the right-hand side represent the state populations at time t−1 in the discrete time notation. While chemical master equations such as these are well-understood standard fare, they are limited; they do not give information about the underlying system trajectories. Thus, it is not straightforward to compute the distribution of dynamical quantities, which can be measured, e.g., in single molecule experiments. The advantage of the Caliber approach above is that it gives a deeper vantage point from which we can derive the dynamical properties of both the trajectories and the state densities, all within a single framework.

Switching from one-particle to multiple-particle systems

In the sections above, we have considered one particle that switches between states A and B. Now we generalize and treat a system of M particles. Each particle can switch stochastically between states A and B. We treat the case of independent particles to show how the dynamical partition function method simplifies such problems. Because of the particle independence, the dynamical partition function Qd,M for the total system factorizes into M single-particle partition functions Qd,1:

$$Q_{d,M} = \left( Q_{d,1} \right)^M \tag{3.4}$$

Hence, for the M-particle system, we obtain directly

| (3.5) |

which gives

| (3.6) |

where $\binom{M}{n}$ denotes the binomial coefficients and Pn(t) is the probability that n of the M particles are in state A at time t.

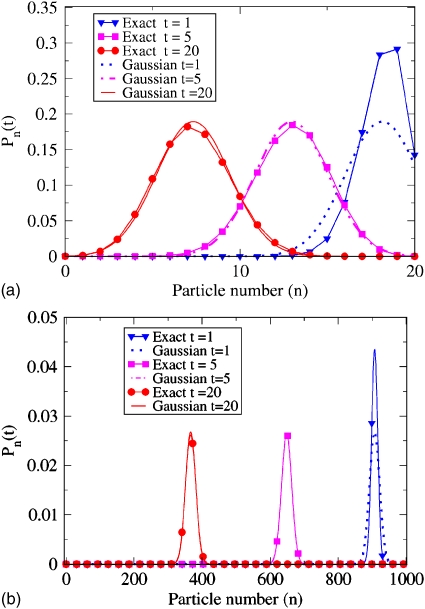

Here are some examples of how Eq. 3.6 can be useful. First, Eq. 3.6 gives the diffusional dynamics of the M-particle system (see Fig. 5). It is clear from the substantial width of this curve for M=20 particles that the mean value, PA(t)=(1∕M)∑_{n=1}^{M} n Pn(t)=⟨n⟩∕M, provides only a limited description of the time evolution of the system. The copy numbers of proteins inside biological cells are often not much greater than this, so such dynamical variance quantities will be important in such cases. Assuming M=1000 particles, on the other hand, the distributions are well localized at their mean values.

Figure 5.

Time evolution of probability distribution Pn(t) of number of particles, n, in state A, assuming ka=1∕10, kb=1∕20, and initial condition Pn(0)=δn,M. For various times, the exact binomial distribution is shown in solid lines along with the Gaussian distribution drawn with broken lines. In the upper panel M=20 total particles are considered, in the lower M=1000.
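The contrast between M=20 and M=1000 can be made quantitative with a short calculation. The sketch below assumes that Pn(t) is the binomial distribution with single-particle probability PA(t), as stated for independent particles, and uses the discrete-time closed form for PA(t) with Δt=1; the function names are hypothetical. It relies on `math.comb`, so it needs Python 3.8 or later, and it is intended for M up to roughly 1000, beyond which the binomial coefficients no longer fit in a float.

```python
from math import comb, sqrt

def p_a_of_t(t, k_a, k_b, p_a0=1.0):
    """Single-particle P_A(t) for time step 1 (closed form of the two-state chain)."""
    p_inf = k_b / (k_a + k_b)
    return p_inf + (p_a0 - p_inf) * (1.0 - k_a - k_b) ** t

def p_n(n, M, p_a):
    """Probability that n of M independent particles are in state A."""
    return comb(M, n) * p_a ** n * (1.0 - p_a) ** (M - n)

def mean_and_std(M, p_a):
    """Mean and standard deviation of n under the binomial P_n."""
    dist = [p_n(n, M, p_a) for n in range(M + 1)]
    mean = sum(n * p for n, p in enumerate(dist))
    var = sum((n - mean) ** 2 * p for n, p in enumerate(dist))
    return mean, sqrt(var)

k_a, k_b = 0.10, 0.05          # values quoted in the Fig. 5 caption
p_a = p_a_of_t(10, k_a, k_b)   # all particles start in A, evaluated at t = 10

mean_20, std_20 = mean_and_std(20, p_a)
mean_1000, std_1000 = mean_and_std(1000, p_a)
rel_width_20 = std_20 / mean_20
rel_width_1000 = std_1000 / mean_1000
```

The relative width scales as 1∕√M, which is why the mean alone is a poor summary for M=20 but a good one for M=1000.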

Second, Eq. 3.6 gives a simple way to derive the Poisson-like distribution for the M-particle equilibrium. At long times, we have PA(∞)=kb∕(ka+kb)=1−PB(∞). Substituting these relationships into the right-hand equality in Eq. 3.6 and defining the equilibrium constant as K=kb∕ka gives the Poisson-like distribution2

$$P_n(\infty) \propto \binom{M}{n} K^n \tag{3.7}$$

which is expected for independent particles at equilibrium; the proportionality constant is a function of M.

Third, Eq. 3.6 gives a simple way to derive the master equation for the M-particle A↔B reaction. We calculate Pn(t+Δt)−Pn(t) using Eq. 3.6 and expand to first order in the rate coefficients ka and kb to get the M-particle master equation

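$$\frac{dP_n}{dt} = k_a (n+1) P_{n+1} + k_b (M-n+1) P_{n-1} - \left[ k_a n + k_b (M-n) \right] P_n \tag{3.8}$$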

which accounts for the gains and losses in the n-particle “bin” to and from the adjacent n+1 and n−1 bins. Here again, this master equation is well-known; the virtue of this Caliber derivation is simply in showing that the state-population dynamics can be derived from a single unified framework that also gives trajectory properties.

Deriving the chemical Langevin equation from Caliber

Master equations describe quantities that are already integrated over the microscopic trajectories. The standard way to recapture information about dynamical trajectories is to use a Langevin equation instead. In the Langevin approach, the left-hand side of an expression, for example, is a differential equation for average forces, velocities, or rates for a particular dynamical problem. On the right-hand side is a fluctuating noise quantity, which is assumed to have certain statistical properties. Typically, the noise is assumed to be uncorrelated and white, or to obey a Gaussian distribution. Such approaches are known to fail, however, in various circumstances, such as when the dynamics is nonlinear.2 There is currently no deeper analytical approach that prescribes the nature of the noise when setting up a Langevin equation for complex problems. Here, we illustrate how to derive the chemical Langevin equation, for the two-state model, from Caliber, giving a principled way of treating the noise. We begin with the full trajectory distribution given by Caliber and show how to derive the appropriate fluctuations for the corresponding Langevin equation from it.

In Langevin terminology, for our two-state problem, let n(t) represent the instantaneous number of particles in state A at time t. Correspondingly, M−n(t) is the instantaneous number of particles in state B. Formally, the Langevin approach asserts that the fluctuating trajectory quantity n(t) can be expressed in terms of a differential equation2

$$\frac{dn}{dt} = -k_a n(t) + k_b \left[ M - n(t) \right] + F_t \tag{3.9}$$

where Ft is a fluctuating noise quantity that has particular properties.24 First, it is assumed that the average noise is zero, ⟨Ft⟩=0, so that averaging over trajectories recovers the correct macroscopic expression for the mean dynamics,

$$\frac{d\langle n \rangle}{dt} = -k_a \langle n \rangle + k_b \langle M - n \rangle \tag{3.10}$$

Since ⟨n⟩=MPA(t) and ⟨M−n⟩=MPB(t), this step of averaging over trajectories just recovers the master equation for this system. Now, rearranging Eq. 3.9 and replacing the derivative on the left side with the single time-step (Δt=1) quantity, n(t+1)−n(t), gives

$$F(t) = n(t+1) - n(t) + k_a n(t) - k_b \left[ M - n(t) \right] \tag{3.11}$$

Our aim here is to derive from Caliber the nature of this fluctuating quantity, F(t), rather than to assume that it is a Gaussian distribution, as is often done in Langevin modeling. We want to determine the various statistical moments of F(t). To do this, we need the joint probability distribution P(n(t+1),n(t)). Using the notation n(t+1)=m and n(t)=n, P(n(t+1),n(t)) can be expressed as

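$$P(m, n) = P_n(t) \sum_{i=0}^{n} \binom{n}{i} k_a^{\,i} (1-k_a)^{n-i} \binom{M-n}{m-n+i} k_b^{\,m-n+i} (1-k_b)^{M-m-i} \tag{3.12}$$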

where Pn(t) is given by Eq. 3.6. This expression contains two types of terms. First, given n particles in state A at time t, this sums over the trajectories in which i of them jump to state B at time t+1 (hence, n−i of them stay in state A). Second, given M−n particles in state B at time t, this sums over trajectories in which m−n+i of them jump into state A at time t+1 (so, M−m−i remain in state B).
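The two types of terms described above translate directly into a small numerical check. The sketch below builds the joint distribution from binomial factors (i of the n particles in A jump to B, and m−n+i of the M−n particles in B jump to A), takes Pn(t) to be binomial with single-particle probability PA, and evaluates moments of the noise F=n(t+1)−n(t)+ka n−kb(M−n). The parameter values (M=6, PA=0.8) and the function names are hypothetical, chosen to keep the double sum small.

```python
from math import comb

def binom_pmf(k, n, p):
    """Binomial probability of k successes in n trials; zero outside 0..n."""
    if k < 0 or k > n:
        return 0.0
    return comb(n, k) * p ** k * (1.0 - p) ** (n - k)

def joint(m, n, M, p_a, k_a, k_b):
    """P(n(t+1) = m, n(t) = n): sum over the number i of A -> B jumps."""
    total = 0.0
    for i in range(n + 1):
        # i of n particles leave A; m - n + i of the M - n particles in B enter A
        total += binom_pmf(i, n, k_a) * binom_pmf(m - n + i, M - n, k_b)
    return binom_pmf(n, M, p_a) * total

def noise_moment(order, M, p_a, k_a, k_b):
    """<F**order> with F = m - n + k_a * n - k_b * (M - n), averaged over the joint distribution."""
    acc = 0.0
    for n in range(M + 1):
        for m in range(M + 1):
            f = (m - n) + k_a * n - k_b * (M - n)
            acc += f ** order * joint(m, n, M, p_a, k_a, k_b)
    return acc

M, p_a, k_a, k_b = 6, 0.8, 0.10, 0.05  # hypothetical illustration values
first = noise_moment(1, M, p_a, k_a, k_b)
second = noise_moment(2, M, p_a, k_a, k_b)
```

The first moment vanishes to rounding error, while the second moment depends on PA and therefore on time.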

Based on this joint distribution, Appendix B derives the first three moments of the distribution over trajectories. For the first moment, Caliber gives ⟨F(t)⟩=0, as expected. For the second moment, we obtain

| (3.13) |

Clearly, since PA and PB are time dependent, this second moment is also time dependent. However, in the limit as t→∞, the second moment reduces to

| (3.14) |

via the substitution of PA(∞)=kb∕(ka+kb)=1−PB(∞) into Eq. 3.13. Correspondingly, the third moment is (in first order of ka and kb, see Appendix B)

| (3.15) |

which is time dependent and goes to zero in the limit of long times, ⟨F(∞)3⟩=0. Since the third moment is nonzero for short times, the standard assumption in Langevin modeling that the noise is Gaussian-distributed is not exact, but becomes an increasingly good approximation for long times. Also as a matter of principle, the implication of this derivation is that for more complex Langevin modeling, Caliber may provide a general way to derive the appropriate noise distributions, when the Gaussian assumption is known to fail.

Finally, to complete the Caliber derivation of the Langevin approach, we integrate Eq. 3.9 to put it into the form

| (3.16) |

where ⟨n(t)⟩=MPA(t). Next, we note that the underlying distribution Pn(t) is given by the binomial distribution Eq. 3.6, which we approximate as a Gaussian,

| (3.17) |

where

| (3.18) |

This derivation shows how, starting from Caliber, we recover the standard Langevin model assumption of Gaussian noise. Figures 5a, 5b show that the Gaussian curves accurately mimic the binomial distribution, and that the Gaussian differs from the exact Pn(t) only at very short times. Interestingly, the exact result shows that the width of the distribution is time dependent at short times. This, of course, is not recovered by the usual Langevin treatment.

CONSTRAINTS

How to choose constraints for Caliber modeling

What is the justification for the Caliber approach? Statistical mechanics is about making models. For dynamics, a model is a statement of a set of possible microscopic routes and some chosen set of microscopic parameters (the statistical weights, not known in advance), the number of which will typically be much smaller than the number of different trajectories. The Caliber strategy is simply a way to determine the values of those statistical weight parameters so as to satisfy the observable average flux quantities, and so as to otherwise assign no further favoritism to any one trajectory over any other. The essential idea is that all trajectories are equivalent intrinsically and are only weighted differently by virtue of the resultant statistical weight factors. Caliber then predicts other dynamical moments. If those other dynamical moments were then found to disagree with experiments, it would imply the need for a different model.

This raises the question of what types of constraints are appropriate in Eq. 1.7, 1.6, 2.1. In this regard, the Maximum Caliber approach to dynamics bears close resemblance to the Maximum Entropy approach to equilibrium, which involves satisfying constraints, such as Eq. 1.3, on certain equilibrium averages. In applying either Caliber to problems of dynamics or MaxEnt to problems of equilibrium, we are not at liberty to choose constraints arbitrarily. For example, for the two-state model of interest in this paper, there are many possible quantities that could have served as the “observables” of our trajectories, including ⟨Nab−Nba⟩, , and , or an infinite number of others. The resulting dynamical distribution function that would have been predicted from those various choices can differ depending on what constraints are chosen. So, what are the “right” constraints?

First, in physical problems, some quantities, like energy, momentum, mass, particle numbers, or volume, are conserved. They are extensive, or first-order homogeneous functions. In equilibrium thermodynamics, you can only predict a state of equilibrium if you maximize the entropy S(U,V,N) that is a function of extensive variables; you cannot predict equilibrium by maximizing the function S(T,V2,N∕U) of intensive variables, such as temperature, or other non-conserved quantities. Similarly, for dynamics, the constraints used in Caliber are only fluxes, such as ⟨Nab⟩, which are time derivatives of conserved first-moment quantities, not higher moments of fluxes. Second, any linear combination of flux quantities would also lead to the same prediction for the pj’s: hence, substituting ⟨NA⟩=⟨Naa⟩+⟨Nab⟩ for the quantities ⟨Naa⟩ or ⟨Nab⟩, for example, would give the same trajectory populations. Hence, there is freedom to choose among linear combinations of flux constraints those that are most convenient.

Third, what is the right number of constraints? In our present model, we have two, corresponding traditionally, say, to an equilibrium constant and a forward rate coefficient. In a two-state system of independent particles having stationary dynamics and no memory, this may be sufficient to account for bulk experiments. However, modern single-molecule measurements can also give the higher moments.17, 18, 25, 26 In those cases, it is found that no further statistical weight parameters are needed. If some additional microscopic process were operative that caused a further preference of some trajectories over others, additional measurements (constraints) would be needed to fix the values of the additional parameters required. In the sections below, we illustrate how Caliber can be applied to other conserved quantities (time, rather than flux), to time-dependent constraints, and to memory effects.

Constraining the time rather than the flux

An alternative way to describe the two-state system is through the waiting time distribution rather than through the flux distribution. Caliber can treat time distributions as simply as it can treat flux distributions. Often measured in single-molecule experiments are the waiting times τA and τB, i.e., the number of time steps the system spends in state A or B until it switches to the other state. The mean waiting time in state A is ⟨τA⟩=∑jpj(t)τAj. Now, switching from constraints on average fluxes to constraints on average times, this time-based Caliber formulation gives the path weights , where Qd is a dynamical partition sum over all the possible waiting times,

| (4.1) |

Hence, we obtain for the average waiting time

The average waiting time ⟨τA⟩=1∕ka is an observable, equal to the inverse of the rate constant. Hence, from this observable, we obtain λ, leading also to the well-known Poisson waiting time distribution for this system,

| (4.2) |

This simple derivation shows how a constraint on the mean waiting time gives, through the Caliber approach, the full waiting time distribution. Also, since the same holds independently for the waiting time distribution in state B, we obtain from these two constraints the weights

| (4.3) |

In short, there can be different ways to choose constraints for Caliber. We have shown the equivalence of fixing two flux quantities such as Nab and Naa or fixing, instead, two mean waiting times, for states A and B. One advantage of the latter is that the waiting times are decoupled and independent [Eq. 4.2], while the former quantities are interdependent and must be combined, as indicated in this paper, to give a proper distribution.
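As a minimal numerical sketch of this time-based formulation, the code below maximizes the path entropy subject to a fixed mean waiting time. It assumes that waiting times take the discrete values τ=1,2,3,… (in units of the time step) and that the weights have the form exp(−λτ)∕Qd; the function names and the value ka=0.1 are hypothetical choices for illustration. Under these assumptions the result is the geometric distribution ka(1−ka)^(τ−1), which approaches an exponential waiting time distribution for small ka.

```python
import math


def lagrange_multiplier(mean_tau):
    """Solve <tau> = 1 / (1 - exp(-lam)) for lam, assuming tau = 1, 2, 3, ..."""
    if mean_tau <= 1.0:
        raise ValueError("mean waiting time must exceed one time step")
    return -math.log(1.0 - 1.0 / mean_tau)


def waiting_time_distribution(mean_tau, tau_max):
    """Maximum-entropy weights p(tau) = exp(-lam * tau) / Q_d for tau = 1..tau_max."""
    lam = lagrange_multiplier(mean_tau)
    # Closed form of the sum of exp(-lam * tau) over all tau >= 1
    q_d = math.exp(-lam) / (1.0 - math.exp(-lam))
    return [math.exp(-lam * tau) / q_d for tau in range(1, tau_max + 1)]


k_a = 0.1  # hypothetical rate of leaving state A, per time step
p = waiting_time_distribution(1.0 / k_a, tau_max=2000)
mean_tau = sum(tau * p_tau for tau, p_tau in enumerate(p, start=1))
print(f"normalization = {sum(p):.6f}, mean waiting time = {mean_tau:.6f}")
```

The single constraint on the mean fixes λ, and with it the whole distribution; applying the same function with 1∕kb would give the independent distribution for state B.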

Time-dependent constraints

Consider now a two-state process, A↔B, in which the energy minima and barrier height vary with time. Now, the constraint quantities, such as ⟨Nab(t)⟩, will depend on time, as does the dynamical partition function Qd. The Caliber formulation remains the same;5, 11, 12 here is an illustration of how it is implemented in this case. First, consider a discrete piecewise time variation, whereby an observable A takes on a fixed value for some time interval, and a different value over the next time interval, τ≤t:

| (4.4) |

By fixing ⟨A(τ)⟩ for a number of times τ=τ1,…,τK, we obtain the Caliber function

| (4.5) |

It is clear that by taking the limit of small time intervals, this procedure can accommodate any arbitrary time dependence.

As an illustration, suppose the two-state system has two different rate constants over two different time regimes:

| (4.6) |

This corresponds to the time-dependent constraints

| (4.7) |

and similarly for the other flux quantities. As a consequence, each term in Eq. 2.20 is replaced by a double sum, e.g.,

| (4.8) |

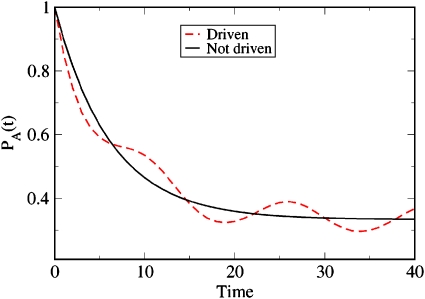

Figure 6 shows a calculation for a periodically forced two-state system. In this case, we use γab(t)=γab[1+0.5 cos(4γabt)]. To compute the dynamical properties of the system, we discretize the values of γab(t) for each time interval Δt and substitute each such value into its own G matrix, which we then multiply together to get the time-dependent partition function [see Eqs. 2.5, 2.6, 2.7]. The figure shows how the computed value of the population of A, PA(t), oscillates in time in this case.

Figure 6.

Time evolution of PA(t) for a periodically driven two-state system (dashed line) compared to the stationary nondriven case (solid line) treated previously.
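The discretization described for Fig. 6 can be sketched in a few lines. This is an illustration under stated assumptions, not the calculation used for the figure: the values γab=γba=0.1, the time step Δt=1, and the function names are hypothetical, and the code propagates the populations (PA, PB) with a normalized, column-stochastic G matrix at each step rather than evaluating the full path sum.

```python
import math


def g_matrix(gamma_ab, gamma_ba):
    """Column-stochastic 2x2 matrix: first column is 'from A', second is 'from B'."""
    return [[1.0 - gamma_ba, gamma_ab],
            [gamma_ba, 1.0 - gamma_ab]]


def propagate(p, g):
    """One time step: apply G to the population vector p = [P_A, P_B]."""
    return [g[0][0] * p[0] + g[0][1] * p[1],
            g[1][0] * p[0] + g[1][1] * p[1]]


def population_a(n_steps, gamma_ab0, gamma_ba, driven=True):
    """Return P_A(t) for t = 0..n_steps, starting in state A."""
    p = [1.0, 0.0]
    trace = [p[0]]
    for step in range(n_steps):
        t = float(step)  # time step of unit length (assumed)
        if driven:
            # Periodic forcing of the B -> A transition probability
            gamma_ab = gamma_ab0 * (1.0 + 0.5 * math.cos(4.0 * gamma_ab0 * t))
        else:
            gamma_ab = gamma_ab0
        # Each interval gets its own G matrix; the product builds up step by step
        p = propagate(p, g_matrix(gamma_ab, gamma_ba))
        trace.append(p[0])
    return trace


stationary = population_a(200, 0.1, 0.1, driven=False)
driven = population_a(200, 0.1, 0.1, driven=True)
print(f"P_A(200): stationary = {stationary[-1]:.4f}, driven = {driven[-1]:.4f}")
```

In the nondriven case the population relaxes to γab∕(γab+γba), while the driven trace keeps oscillating around it, qualitatively as in the figure.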

Memory effects

The Caliber approach also allows us to treat dynamics that is non-Markovian and involves memory effects. To account for the time history, we can make the substitution3

| (4.9) |

in master Eq. 3.3, where Kn(t) (n=A,B) is the memory function that accounts for the non-Markovian behavior of the system. A common form is Kn(t)=cn exp(−t∕τn), where τn represents the memory time.

There are different ways to treat memory effects in the Caliber formulation. First, consider the memory function

| (4.10) |

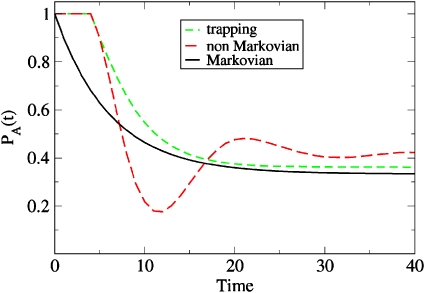

which describes a system that gets trapped in state n=A,B for tn time steps before it can exit. For this simple case, we again get the dynamical partition function 2.3 but augmented with the additional conditions that Naaj≥tA and Nbbj≥tB. Figure 7 shows the Caliber prediction for tA=tB=4. Starting in state A at t=0, we obtain PA(t)=1 for t≤4 by construction. For longer times, PA(t) looks similar to the Markovian case, although the system needs to wait each time it arrives at state A or B for at least four time steps.

Figure 7.

Two-state dynamics showing PA(t) in two systems having memory: The system gets trapped in state A for four cycles [light dashed line, Eq. 4.10] or is trapped with an exponential memory decay [dark dashed line, Eq. 4.12], both compared to simple Markovian relaxation (solid line).

Let us now consider the case that there are only first-neighbor effects in time. Hence, we need to go beyond PA(t) and PB(t) and consider the joint probabilities: PAA(t)=P(A,t∣A,t−Δt) that the system is in state A at time t given that it was in state A at time t−Δt; PAB(t)=P(A,t∣B,t−Δt) that the system is in state A at time t given that it was in state B at time t−Δt; etc. The only modification required of the simpler Caliber treatment above is now the need for a larger transition matrix G:

| (4.11) |

where γijk denotes the transition probability to state j from i, given that the system was in state k before. Instead of four numbers NA, NB, Nab, Nba to characterize the occurrences of the four transition probabilities γaa, γab, γba, γbb along a path of the Markovian two-state system, we now need eight statistical weights. As in Eq. 2.6, each column of transition matrix G in Eq. 4.11 sums up to one. In general, to treat longer memory processes, Caliber simply requires increasingly large G matrices, and additional statistical weights that characterize those variations.
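One way to picture the enlarged transition matrix is to let it act on pairs (previous state, current state). The sketch below builds such a 4×4 column-stochastic matrix; the ordering of the pair states, the helper names, and the parametrization by a "stay" probability are assumptions made for illustration and need not match the layout of Eq. 4.11.

```python
STATES = ("A", "B")
# Pair states (previous, current): AA, AB, BA, BB
PAIRS = [(prev, cur) for prev in STATES for cur in STATES]


def build_g(p_stay):
    """Build the 4x4 matrix from p_stay[(prev, cur)], the probability of
    remaining in `cur` given that the state before was `prev`."""
    index = {pair: n for n, pair in enumerate(PAIRS)}
    g = [[0.0] * 4 for _ in range(4)]
    for (prev, cur) in PAIRS:
        col = index[(prev, cur)]
        for nxt in STATES:
            stay = p_stay[(prev, cur)]
            # The pair (prev, cur) can only move to a pair of the form (cur, nxt)
            g[index[(cur, nxt)]][col] = stay if nxt == cur else 1.0 - stay
    return g


def population_a(g, n_steps):
    """P_A(t) for t = 0..n_steps-1, starting from the pair (A, A)."""
    p = [1.0, 0.0, 0.0, 0.0]
    trace = []
    for _ in range(n_steps):
        # P_A is the total weight of the pairs whose current state is A
        trace.append(sum(p[n] for n, (prev, cur) in enumerate(PAIRS) if cur == "A"))
        p = [sum(g[r][c] * p[c] for c in range(4)) for r in range(4)]
    return trace


# Hypothetical weights: a system that has just switched is more likely to switch back
memory = {("A", "A"): 0.95, ("B", "A"): 0.70, ("A", "B"): 0.70, ("B", "B"): 0.95}
g = build_g(memory)
print([round(x, 4) for x in population_a(g, 6)])
```

The eight entries (four stay probabilities and their complements) play the role of the eight statistical weights; if the stay probability does not depend on the previous state, the construction reduces to the Markovian two-state chain.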

As an example, Fig. 7 shows a memory process that combines trapping and exponential decay. Here, we take

| (4.12) |

with tn=τn=4. The calculation requires the construction of a G matrix according to Eq. 4.11 including M=4 memory steps. Again we find that the population PA(t) gets stuck in state A for t≤4. For longer times, however, the combination of trapping and exponentially decaying memory function results in an oscillatory decay of PA(t), until equilibrium is reached.

CONCLUSIONS

We have described the Maximum Caliber approach to nonequilibrium statistical mechanics, applied to a simple dynamical two-state system, A↔B. In this approach, experimentally observable average rates are taken as input to determine microscopic dynamical statistical weight quantities. This is done using a dynamical partition function, Qd, which is a sum over microscopic paths, resembling the way that equilibrium partition functions are sums over microstates. Caliber is quite general: It gives the time evolution of density- or population-based quantities, as master equations do, and it also gives trajectory quantities, as Langevin models do. Analytical Langevin models require assumptions about the nature of noise distributions and are typically limited to linear dynamics. In contrast, Caliber gives a deeper foundation from which those noise distributions can be derived and is not limited to linear systems. Also, in principle, Caliber is not limited to applications near equilibrium.

While this work has been restricted to two-state dynamics, several generalizations of the Caliber formulation are obvious. First, we have shown here that Caliber can readily treat more complex dynamics, for example, in which the energy landscape itself varies in time or involving non-Markovian memory. Hence, we can also describe nonequilibrium situations with a persisting external perturbation. Second, it is straightforward to extend the theory to a general N-state system, simply by increasing the dimensionality of the G matrix. In a similar vein, standard diffusion or random walk problems can be expressed through a G matrix and subsequently treated by Caliber. Finally, we can generalize from a discrete state space (e.g., states A and B) to a continuous state space (e.g., position space x). We obtain for the continuous time evolution of a continuous state variable x(t) the path probability p[x(t)] as a functional of the path x(t), and the dynamical partition function is given by the functional integral13, which sums up all continuous paths x(t) that start from x(0).

One of the main motivations for the Caliber approach is that it can treat single-molecule or few-particle systems, where it is of interest to know the dynamical distributions over trajectories. Also, in the same way that the MaxEnt method has found applications beyond equilibrium statistical mechanics, in signal and image processing applications, we believe that Maximum Caliber may be similarly useful for the analysis of dynamical data.

ACKNOWLEDGMENTS

We thank Rob Phillips, David Wu, Mandar Inamdar, and Frosso Seitaridou for many inspiring and helpful discussions and a long-standing collaboration, as well as Moritz Otten for helpful comments on the manuscript. This work has been supported by NIH Grant No. GM34993 and the Fonds der Chemischen Industrie.

APPENDIX A: ANALYTICAL RESULTS FOR THE TWO-STATE PROBLEM

We wish to derive analytic expressions for the first moments of the distributions P(NB,t) [Eq. 2.17] and P(Nab,t) [Eq. 2.18], where NB∕N is the fraction of the time the system spends in state B and Nab denotes the number of switches from state B to state A. To this end, we diagonalize the transition matrix G given in Eq. 2.5 in order to obtain a closed expression for the dynamical partition function Qd in Eq. 2.4. We denote the eigenvalues of G by λ1 (larger) and λ2 (smaller) and the corresponding eigenvectors as (e1a,e1b) and (e2a,e2b). Thus, upon inserting the complete set of eigenvectors, we can write Qd of Eq. 2.4 as

| (A1) |

In the limit of long times, we can approximate Eq. A1 as

| (A2) |

| (A3) |

that is, the partition function Qd depends only on the largest eigenvalue for t→∞. We note that this approximation of the equilibrium partition function is equivalent to the transfer matrix method, which is usually employed to solve Ising models.27 The corresponding eigenvector (e1a,e1b) can be written as

| (A4) |

Therefore, we can express the mean flows in terms of γaa, γbb, γab, and γba as

| (A5) |

| (A6) |

| (A7) |

| (A8) |

With ⟨NA⟩=⟨Naa⟩+⟨Nab⟩ and ⟨NB⟩=⟨Nbb⟩+⟨Nba⟩, we obtain explicit expressions for the flows at t→∞:

| (A9) |

| (A10) |
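The long-time strategy of this appendix can be checked numerically with a short sketch. It assumes that Qd is the sum over all N-step paths starting in A of the product of statistical weights, and that the mean count of a transition follows from the logarithmic derivative of Qd with respect to its weight, so that per time step it is approximately ∂ln λ1∕∂ln γ. The function names, the weight values, and the finite-difference step are hypothetical choices for illustration.

```python
import math


def largest_eigenvalue(g_aa, g_ab, g_ba, g_bb):
    """Larger eigenvalue of G = [[g_aa, g_ab], [g_ba, g_bb]]."""
    disc = (g_aa - g_bb) ** 2 + 4.0 * g_ab * g_ba
    return 0.5 * (g_aa + g_bb + math.sqrt(disc))


def log_partition_sum(g_aa, g_ab, g_ba, g_bb, n_steps):
    """ln Q_d for paths of n_steps that start in A, summed over the end state."""
    v_a, v_b = 1.0, 0.0
    log_q = 0.0
    for _ in range(n_steps):
        v_a, v_b = g_aa * v_a + g_ab * v_b, g_ba * v_a + g_bb * v_b
        norm = v_a + v_b  # rescale every step so the numbers stay finite
        v_a, v_b = v_a / norm, v_b / norm
        log_q += math.log(norm)
    return log_q


def mean_flux_per_step(weights, name, eps=1e-6):
    """Central finite difference of ln(lambda_1) with respect to ln(weights[name])."""
    up = dict(weights)
    up[name] *= math.exp(eps)
    down = dict(weights)
    down[name] *= math.exp(-eps)
    return (math.log(largest_eigenvalue(**up))
            - math.log(largest_eigenvalue(**down))) / (2.0 * eps)


# (i) ln(Q_d)/N approaches ln(lambda_1) for unnormalized, hypothetical weights
n = 500
print(log_partition_sum(1.0, 0.3, 0.2, 0.8, n) / n,
      math.log(largest_eigenvalue(1.0, 0.3, 0.2, 0.8)))

# (ii) Fluxes per step for normalized weights with k_a = 0.2, k_b = 0.3
k_a, k_b = 0.2, 0.3
weights = {"g_aa": 1.0 - k_a, "g_ab": k_b, "g_ba": k_a, "g_bb": 1.0 - k_b}
print(mean_flux_per_step(weights, "g_ab"), k_a * k_b / (k_a + k_b))
```

For the normalized weights the largest eigenvalue equals one, and the two switching fluxes per step come out equal, as they must in a stationary two-state system; the derivative is taken with the four weights treated as independent before the normalization is imposed.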

To derive similar results for the second moments, we use Eq. 2.3 to derive the expressions

| (A11) |

| (A12) |

Using the same strategy as described above, we then obtain

| (A13) |

| (A14) |

Similarly, the third moments can be calculated by taking the appropriate derivatives of the partition sum. Here we report the third moment of the stochastic variable Nba,

| (A15) |

APPENDIX B: NOISE DISTRIBUTION OF THE CHEMICAL LANGEVIN MODEL

Consider a single time step, Δt=1. In order to calculate the moments of Langevin noise 3.11, we first evaluate the distribution P(m,n) defined in Eq. 3.12. In leading order, only jumps with m=0,±1 are considered, which leads to

| (B1) |

where Pn(t) is given by Eq. 3.6. Using γaa=1−γba, γbb=1−γab, γba=ka, γab=kb, and expanding to first order in ka and kb, we obtain

| (B2) |

Insertion in Eq. 3.11 gives for the second moment of F(t)

| (B3) |

Using , this simplifies to

| (B4) |

In the last line we used that ⟨n⟩=MPA and ⟨M−n⟩=MPB and kept only terms to first order in ka and kb. Since PA(∞)=kb∕(ka+kb)=1−PB(∞), we obtain at long times

| (B5) |
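The size of the long-time noise can be cross-checked by exact enumeration for a small system. The sketch below rests on assumptions that are not taken from the equations above: n counts the particles in state A, in one time step each particle in A jumps to B independently with probability ka and each particle in B jumps to A with probability kb, and the noise F is the deviation of the one-step change in n from its conditional mean. The leading-order value 2Mkakb∕(ka+kb) used for comparison follows from the binomial variances under these assumptions; the function names and parameter values are hypothetical.

```python
from math import comb


def binom_pmf(n, p):
    """Binomial probabilities P(x) for x = 0..n."""
    return [comb(n, x) * p ** x * (1.0 - p) ** (n - x) for x in range(n + 1)]


def noise_second_moment(n, big_m, k_a, k_b):
    """<F^2> for one step at fixed n, with F = (change in n) - (mean change)."""
    leave = binom_pmf(n, k_a)          # x particles jump A -> B
    enter = binom_pmf(big_m - n, k_b)  # y particles jump B -> A
    mean_change = -k_a * n + k_b * (big_m - n)
    return sum(px * py * ((y - x) - mean_change) ** 2
               for x, px in enumerate(leave)
               for y, py in enumerate(enter))


def stationary_average(big_m, k_a, k_b):
    """Average <F^2> over the stationary (binomial) distribution of n."""
    p_a = k_b / (k_a + k_b)
    weights = binom_pmf(big_m, p_a)
    return sum(w * noise_second_moment(n, big_m, k_a, k_b)
               for n, w in enumerate(weights))


M, k_a, k_b = 20, 0.01, 0.02
exact = stationary_average(M, k_a, k_b)
leading_order = 2.0 * M * k_a * k_b / (k_a + k_b)
print(f"exact = {exact:.6f}, leading order = {leading_order:.6f}")
```

The two numbers differ only by terms of second order in ka and kb, which is the accuracy of the expansion used in this appendix.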

In complete analogy to the above derivation, we can also calculate higher moments of F(t) from Eq. B2. For example, the third moment reads

In leading order we then obtain

| (B6) |

which vanishes in the limit of long times

| (B7) |

References

- Callen H. B., Thermodynamics and an Introduction to Thermostatistics (Wiley, New York, 1985).

- Van Kampen N. G., Stochastic Processes in Physics and Chemistry (Elsevier, Amsterdam, 1997).

- Zwanzig R., Nonequilibrium Statistical Mechanics (Oxford University Press, Oxford, 2001).

- Mazo R. M., Brownian Motion (Clarendon, Oxford, 2002).

- Jaynes E. T., Annu. Rev. Phys. Chem. 31, 579 (1980). doi:10.1146/annurev.pc.31.100180.003051

- Blossey R., Computational Biology: A Statistical Mechanics Perspective (Chapman & Hall∕CRC, New York, 2006).

- Beard D. A. and Qian H., Chemical Biophysics: Quantitative Analysis of Cellular Systems (Cambridge University Press, Cambridge, 2008).

- Jaynes E. T., Phys. Rev. 106, 620 (1957). doi:10.1103/PhysRev.106.620

- See http://bayes.wustl.edu/ for numerous helpful references on the Maximum Entropy formulation.

- Dill K. and Bromberg S., Molecular Driving Forces: Statistical Thermodynamics in Chemistry and Biology (Garland Science, New York, 2003).

- Jaynes E. T., in The Maximum Entropy Formalism, edited by Levine R. D. and Tribus M. (MIT, Cambridge, MA, 1978), p. 15.

- Jaynes E. T., in Complex Systems–Operational Approaches, edited by Haken H. (Springer, Berlin, 1985), pp. 254–269.

- Schulman L. S., Techniques and Applications of Path Integration (Wiley, New York, 1981).

- Dougherty J. P., Philos. Trans. R. Soc. London, Ser. A 346, 259 (1994). doi:10.1098/rsta.1994.0022

- Dewar R. C., J. Phys. A 38, L371 (2005). doi:10.1088/0305-4470/38/21/L01

- Ghosh K., Dill K., Inamdar M. M., Seitaridou E., and Phillips R., Am. J. Phys. 74, 123 (2006). doi:10.1119/1.2142789

- Seitaridou E., Inamdar M., Phillips R., Ghosh K., and Dill K., J. Phys. Chem. 111, 2288 (2007).

- Wu D., Ghosh K., Inamdar M., Lee H., Fraser S., Dill K., and Phillips R. (to be published) (2008).

- Evans D. J., Cohen E. G. D., and Morriss G. P., Phys. Rev. Lett. 71, 2401 (1993). doi:10.1103/PhysRevLett.71.2401

- Wang G. M., Sevick E. M., Mittag E., Searles D. J., and Evans D. J., Phys. Rev. Lett. 89, 050601 (2002). doi:10.1103/PhysRevLett.89.050601

- Gillespie D. T., J. Phys. Chem. 81, 2340 (1977). doi:10.1021/j100540a008

- Gibson M. A. and Bruck J., J. Phys. Chem. A 104, 1876 (2000). doi:10.1021/jp993732q

- Gillespie D. T., Annu. Rev. Phys. Chem. 58, 35 (2007). doi:10.1146/annurev.physchem.58.032806.104637

- Zwanzig R., J. Phys. Chem. B 105, 6472 (2001). doi:10.1021/jp0034630

- Purohit P. K., Kondev J., and Phillips R., Proc. Natl. Acad. Sci. U.S.A. 100, 3173 (2003). doi:10.1073/pnas.0737893100

- Leite V. B. P., Onuchic J. N., Stell G., and Wang J., Biophys. J. 87, 3633 (2004). doi:10.1529/biophysj.104.046243

- Pathria R. K., Statistical Mechanics (Butterworth-Heinemann, Oxford, 1996).