Abstract

We present a new estimator for computing free energy differences and thermodynamic expectations as well as their uncertainties from samples obtained from multiple equilibrium states via either simulation or experiment. The estimator, which we call the multistate Bennett acceptance ratio estimator (MBAR) because it reduces to the Bennett acceptance ratio estimator (BAR) when only two states are considered, has significant advantages over multiple histogram reweighting methods for combining data from multiple states. It does not require the sampled energy range to be discretized to produce histograms, eliminating bias due to energy binning and significantly reducing the time complexity of computing a solution to the estimating equations in many cases. Additionally, an estimate of the statistical uncertainty is provided for all estimated quantities. In the large sample limit, MBAR is unbiased and has the lowest variance of any known estimator for making use of equilibrium data collected from multiple states. We illustrate this method by producing a highly precise estimate of the potential of mean force for a DNA hairpin system, combining data from multiple optical tweezer measurements under constant force bias.

INTRODUCTION

A recurring challenge in statistical physics, computational chemistry, and single-molecule experiments is the collection of a sufficient amount of data to estimate physical quantities of interest to adequate precision. In computer simulations of physical or chemical models, such quantities include potentials of mean force, phase coexistence curves, fluctuation or temperature-dependent properties, and free energy differences. In single-molecule experiments, these quantities might include potentials of mean force along a pulling coordinate or the distance between fluorescence probes during resonant energy transfer. For all of these problems, collection of sufficient statistics for a reliable estimate often requires multiple simulations at different thermodynamic states1 or measurements performed under different applied biasing potentials. In computer simulations, multistate techniques such as umbrella sampling,2 simulated3 and parallel tempering,4 and the use of alchemical intermediates in free energy calculations can greatly aid convergence; in experiments, data collected under constant applied force can help provide adequate sampling of conformations of interest.5

Even with these methods, it may require a large quantity of data to produce estimates with the desired precision. Computing the most precise estimate possible from the available data can therefore be critical in allowing these quantities to be estimated with reasonable computational or experimental effort. While the choice of thermodynamic states to sample can also greatly affect efficiency, we focus here on only the problem of statistically efficient estimation given samples from predetermined states.

Early methods for computing free energy differences6, 7 or equilibrium expectations2 relied on one-sided exponential averaging (EXP), which is formally exact but does not make the most efficient use of data when samples from more than one state are available.8 Subsequently, the Bennett acceptance ratio method (BAR)9, 10 greatly improved upon EXP for the computation of free energy differences, producing statistically optimal estimates of free energy differences when two states are sampled10 and yielding estimates that can be more than an order of magnitude more precise.8 More recently, multiple histogram reweighting methods11, 12 were proposed as a way to incorporate data from multiple states to produce superior estimates of free energy differences and equilibrium expectations for arbitrary thermodynamic states, including states not sampled.

While multiple histogram techniques, most notably the weighted histogram analysis method12 (WHAM), can produce statistically optimal estimates of the discretized densities of states11 or histogram occupation probabilities,13 they have several limitations for the treatment of continuous systems. First, the reliance on energy histograms of width sufficient to contain many samples (often many times the thermal energy) introduces a bias that can be substantial and is often difficult to assess.14 Second, unlike BAR, there are no direct expressions to estimate the statistical uncertainty in free energy differences or expectations obtained from WHAM. Third, application of WHAM to samples collected with a biasing potential that is not trivially scaled by a linear field parameter, such as the temperature or an applied electric field, requires a number of bins that grows exponentially in the number of states, making it computationally intractable for even modest numbers of states. While more recent maximum likelihood13 and Bayesian formulations15 mitigate the memory requirements, they do not remove the histogram bias effects, and they introduce a costly Markov chain Monte Carlo sampling procedure to estimate uncertainties.15, 16

Here, we use recent results from the field of statistical inference17, 18, 19, 20 to construct a statistically optimal estimator for computing free energy differences and equilibrium expectations at arbitrary thermodynamic states using equilibrium samples from multiple thermodynamic states. The resulting estimator, termed the multistate Bennett acceptance ratio estimator (MBAR) as it reduces to BAR when only two states are considered and shares several steps in its derivation, is equivalent to WHAM in the limit that histogram bin widths are shrunk to zero but is derived without the need to invoke histograms. Unlike WHAM, this estimator provides a direct assessment of uncertainties, critical in making comparison between experiment and theory, and the computational expense of computing the estimator remains modest across a wider variety of applications. Furthermore, it can easily be applied to data sampled from non-Boltzmann sampling schemes or to the analysis of single-molecule experiments in cases where an external bias potential is applied.

This paper is organized as follows. Section 2 recapitulates the literature on extended bridge sampling estimators used here as the basis for the MBAR estimator. Expressions for computing estimates of free energy differences (Sec. 3) and equilibrium expectations (Sec. 4) are then provided. Finally, we illustrate the method in Sec. 5 by applying it to the estimation of the potential of mean force (PMF) for a DNA hairpin system by combining data from multiple equilibrium optical force clamp experiments under different external biasing potentials.

EXTENDED BRIDGE SAMPLING ESTIMATORS

Suppose we obtain Ni uncorrelated equilibrium samples from each of K thermodynamic states within the same ensemble, such as NVT, NPT, or μVT (see Appendix A for more information on subsampling correlated time series data to produce uncorrelated samples). Each state is characterized by a specified combination of inverse temperature, potential energy function, pressure, and∕or chemical potential(s), depending on the ensemble. We define the reduced potential function ui(x) for state i to be

$$u_i(x) = \beta_i \left[ U_i(x) + p_i V(x) + \boldsymbol{\mu}_i^{T} \mathbf{n}(x) \right], \tag{1}$$

where x∊Γ denotes the configuration of the system within a configuration space Γ, with V(x) as volume (in the case of a constant pressure ensemble) and n(x) as the number of molecules of each of M components of the system [in the case of a (semi)grand ensemble]. For each state i, βi denotes the inverse temperature, Ui(x) the potential energy function (which may include biasing weights), pi the external pressure, and μi the vector of chemical potentials of the M system components.

Configurations from state i are sampled from the probability distribution

$$p_i(x) = c_i^{-1}\, q_i(x), \qquad c_i = \int_\Gamma dx\, q_i(x), \tag{2}$$

where qi(x) here is non-negative and represents an un-normalized density function, and ci is the (generally unknown) normalization constant (known in statistical mechanics as the partition function). In samples obtained from standard Metropolis Monte Carlo or thermostatted molecular dynamics simulations or from experiment, this un-normalized density is simply the Boltzmann weight qi(x)=exp[−ui(x)] but may, in general, differ in simulations employing non-Boltzmann weights, such as multicanonical simulations21 and those using Tsallis statistics.22

We wish to produce an estimator for the difference in dimensionless free energies

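$$\Delta f_{ij} \equiv f_j - f_i = -\ln\frac{c_j}{c_i} = -\ln\frac{\int_\Gamma dx\, q_j(x)}{\int_\Gamma dx\, q_i(x)} \tag{3}$$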

(where the fi are related to the dimensional free energies Fi by fi=βiFi) and the equilibrium expectations

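$$\langle A \rangle_i \equiv \int_\Gamma dx\, p_i(x)\, A(x) = \frac{\int_\Gamma dx\, A(x)\, q_i(x)}{\int_\Gamma dx\, q_i(x)}. \tag{4}$$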

These expectations can be computed as ratios of the normalization constants if we define new functions q(x)=A(x)qi(x), where the q(x) no longer need be non-negative for states from which no samples are collected.23

To construct an estimator for these ratios of normalization constants, we first note the identity

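$$c_i \langle \alpha_{ij} q_j \rangle_i = \int_\Gamma dx\, q_i(x)\, \alpha_{ij}(x)\, q_j(x) = c_j \langle \alpha_{ij} q_i \rangle_j, \tag{5}$$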

which holds for arbitrary choice of functions αij(x), provided all ci are nonzero.

Using this relation, summing over the index j, and substituting the empirical estimator for the expectations ⟨g⟩i, we obtain a set of K estimating equations

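$$\sum_{j=1}^{K} \frac{\hat{c}_i}{N_i} \sum_{n=1}^{N_i} \alpha_{ij}(x_{in})\, q_j(x_{in}) = \sum_{j=1}^{K} \frac{\hat{c}_j}{N_j} \sum_{n=1}^{N_j} \alpha_{ij}(x_{jn})\, q_i(x_{jn}) \tag{6}$$

for i=1,2,…,K, where $x_{in}$ denotes the nth sample from state i. Solving this set of equations for the $\hat{c}_i$ yields estimates of the $c_i$ from the sampled data, determined up to a scalar multiplier.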

Equation 6 defines a family of asymptotically unbiased estimators parametrized by the choice of functions αij(x), known in the statistics literature as extended bridge sampling estimators.20 By making the choice

$$\alpha_{ij}(x) = \frac{N_j\, \hat{c}_j^{-1}}{\sum_{k=1}^{K} N_k\, \hat{c}_k^{-1}\, q_k(x)}, \tag{7}$$

we obtain an estimator that has been proven to be optimal in the sense that it has the lowest variance for a large class of choices of αij(x) which includes all reweighting estimators in common use.20 This estimator is also asymptotically unbiased and guaranteed to have a unique solution (up to a multiplicative constant)20 and can also be derived from maximum-likelihood methods19, 24 or cast as a reverse logistic regression problem.19, 25

While a closed-form expression for the set of $\hat{c}_i$ cannot be obtained from Eqs. 6, 7, numerical values can nevertheless be computed easily by any suitable method for solving systems of coupled nonlinear equations. A simple self-consistent iteration method and an efficient Newton–Raphson solver are described in Appendix C.

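In the large sample limit, the error in the estimated ratios $\hat{c}_i/\hat{c}_j$ will be normally distributed,20 and the asymptotic covariance matrix, $\Theta_{ij} = \mathrm{cov}(\hat{\theta}_i, \hat{\theta}_j)$, where $\theta_i \equiv \ln c_i$, can be estimated by19

$$\hat{\Theta} = \mathbf{W}^{T} \left( \mathbf{I}_N - \mathbf{W} \mathbf{N} \mathbf{W}^{T} \right)^{+} \mathbf{W}, \tag{8}$$

where $\mathbf{I}_N$ is the N×N identity matrix (with $N = \sum_{k=1}^{K} N_k$ the total number of samples), and $\mathbf{N} = \mathrm{diag}(N_1, N_2, \ldots, N_K)$. The superscript + denotes a suitable generalized inverse, such as the standard Moore–Penrose pseudoinverse, since the quantity in parentheses will be rank-deficient. $\mathbf{W}$ denotes the N×K matrix of weights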

$$W_{ni} = \frac{\hat{c}_i^{-1}\, q_i(x_n)}{\sum_{k=1}^{K} N_k\, \hat{c}_k^{-1}\, q_k(x_n)}. \tag{9}$$

The samples are now indexed by a single index n=1,…,N, as the association of which samples xn came from which distribution pi(x) is no longer relevant. We note that this definition ensures $\sum_{n=1}^{N} W_{ni} = 1$ for all i=1,…,K and $\sum_{i=1}^{K} N_i W_{ni} = 1$ for all n=1,…,N. The computational cost of evaluating the pseudoinverse of an N×N matrix in computing $\hat{\Theta}$ can be reduced to that of computing the eigenvalue decomposition of a K×K matrix, and in many cases the covariance matrix can even be produced by operations only on K×K matrices (see Appendix D).

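The covariance of estimates of arbitrary functions ϕ(θ1,…,θK) and ψ(θ1,…,θK) of the log normalization constants θi can be estimated from $\hat{\Theta}$ by the first-order expansion

$$\mathrm{cov}(\hat{\phi}, \hat{\psi}) = \sum_{i,j=1}^{K} \frac{\partial \phi}{\partial \theta_i}\, \hat{\Theta}_{ij}\, \frac{\partial \psi}{\partial \theta_j}. \tag{10}$$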

FREE ENERGIES

When configurations are sampled with Boltzmann statistics, where qi(x)≡exp[−ui(x)], Eqs. 6, 7 produce the following estimating equations for the dimensionless free energies

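$$\hat{f}_i = -\ln \sum_{j=1}^{K} \sum_{n=1}^{N_j} \frac{\exp[-u_i(x_{jn})]}{\sum_{k=1}^{K} N_k \exp[\hat{f}_k - u_k(x_{jn})]}, \tag{11}$$

which must be solved self-consistently for the $\hat{f}_i$. Again, because the normalization constants are only determined up to a multiplicative constant, the estimated free energies are determined uniquely only up to an additive constant, so only differences $\Delta \hat{f}_{ij} = \hat{f}_j - \hat{f}_i$ will be meaningful.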

The uncertainty in the estimated free energy difference can be computed from Eqs. 8, 10 as

$$\delta^2 \Delta \hat{f}_{ij} = \hat{\Theta}_{ii} - 2 \hat{\Theta}_{ij} + \hat{\Theta}_{jj}. \tag{12}$$
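As a worked check, the variance of a free energy difference can be obtained from the covariance expansion of Eq. 10 in two lines. The steps below assume only the relations stated in the text, namely fi=−ln ci and θi≡ln ci, together with the symmetry of the covariance matrix; δ denotes the Kronecker delta.

```latex
\begin{aligned}
\Delta \hat{f}_{ij} &= \hat{f}_j - \hat{f}_i = \hat{\theta}_i - \hat{\theta}_j ,
\qquad
\frac{\partial\, \Delta f_{ij}}{\partial \theta_k} = \delta_{ki} - \delta_{kj} , \\
\delta^2 \Delta \hat{f}_{ij}
 &= \sum_{k,l=1}^{K} (\delta_{ki} - \delta_{kj})\, \hat{\Theta}_{kl}\, (\delta_{li} - \delta_{lj})
  = \hat{\Theta}_{ii} - 2\hat{\Theta}_{ij} + \hat{\Theta}_{jj} .
\end{aligned}
```

Because only the difference of two derivative vectors enters, any component of the covariance matrix along the vector of ones cancels, which is why the arbitrary additive constant in the free energies does not affect the reported uncertainty.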

Free energy differences and uncertainties between states not sampled are easily estimated by augmenting the set of states with additional reduced potentials ui(x) with the number of samples Ni=0. For these unsampled states, no additional self-consistent estimation is required, so free energy differences involving many such states can be estimated very efficiently.
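The following is a minimal sketch of solving the Boltzmann-weighted estimating equations by fixed-point iteration, including one unsampled state. It is not the authors' implementation: the function names, the harmonic toy model with reduced potentials κx²∕2, and the use of Gaussian quantile midpoints as deterministic stand-ins for uncorrelated samples are all assumptions made for illustration. The additive constant is fixed by setting the first free energy to zero.

```python
import math
from statistics import NormalDist


def logsumexp(values):
    """Stable ln(sum(exp(v))) obtained by factoring out the largest term."""
    c = max(values)
    return c + math.log(sum(math.exp(v - c) for v in values))


def mbar_free_energies(u_kn, N_k, tol=1e-10, max_iter=5000):
    """Fixed-point iteration for the dimensionless free energies.

    u_kn[k][n] is the reduced potential of state k at pooled sample n;
    N_k[k] is the number of samples drawn from state k (0 if unsampled).
    Returns estimates shifted so that the first state has f = 0.
    """
    K, N = len(u_kn), len(u_kn[0])
    sampled = [k for k in range(K) if N_k[k] > 0]
    f = [0.0] * K
    for _ in range(max_iter):
        # ln of the denominator sum_k N_k exp(f_k - u_k(x_n)) for each sample
        log_den = [logsumexp([math.log(N_k[k]) + f[k] - u_kn[k][n] for k in sampled])
                   for n in range(N)]
        f_new = [-logsumexp([-u_kn[i][n] - log_den[n] for n in range(N)])
                 for i in range(K)]
        f_new = [v - f_new[0] for v in f_new]  # fix the additive constant
        change = max(abs(a - b) for a, b in zip(f_new, f))
        f = f_new
        if change < tol:
            break
    return f


# Hypothetical toy model: u_k(x) = kappa_k * x**2 / 2,
# for which f_k - f_1 = ln(kappa_k / kappa_1) / 2 exactly.
kappas = [1.0, 4.0, 2.0]   # the third state is not sampled
N_k = [200, 200, 0]
normal = NormalDist()
samples = []
for kappa, n_samples in zip(kappas, N_k):
    sigma = 1.0 / math.sqrt(kappa)
    # quantile midpoints: deterministic stand-ins for uncorrelated samples
    samples += [sigma * normal.inv_cdf((m + 0.5) / n_samples) for m in range(n_samples)]
u_kn = [[0.5 * kappa * x * x for x in samples] for kappa in kappas]
f_hat = mbar_free_energies(u_kn, N_k)
exact = [0.5 * math.log(kappa / kappas[0]) for kappa in kappas]
```

The unsampled state contributes nothing to the denominators, so its free energy is obtained from the same sum as the others once the sampled states have converged; the sketch simply updates it alongside them for brevity.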

EQUILIBRIUM EXPECTATIONS

The equilibrium expectation of some mechanical observable A(x) that depends only on configuration x (and not momentum) is given by Eq. 4 and can be computed as a ratio of normalization constants cA∕ca by defining two additional “states” characterized by the functions23

$$q_A(x) = A(x)\, q(x), \qquad q_a(x) = q(x),$$

where again q(x)≡exp[−u(x)] if the expectation with respect to the Boltzmann weight is desired. Even though qA(x) may no longer be strictly non-negative, we can still make use of the extended bridge sampling estimator [Eq. 6] to estimate the expectation ⟨A⟩ since NA=Na=0.

Similarly, we augment the matrix W [Eq. 9] with columns WnA and Wna corresponding to qA(x) and qa(x), respectively:

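$$W_{nA} = \frac{\hat{c}_A^{-1}\, A(x_n)\, q(x_n)}{\sum_{k=1}^{K} N_k\, \hat{c}_k^{-1}\, q_k(x_n)}, \qquad W_{na} = \frac{\hat{c}_a^{-1}\, q(x_n)}{\sum_{k=1}^{K} N_k\, \hat{c}_k^{-1}\, q_k(x_n)}, \tag{13}$$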

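where the normalization constants $\hat{c}_A$ and $\hat{c}_a$ are defined in terms of self-consistent estimating equations as

$$\hat{c}_A = \sum_{n=1}^{N} \frac{A(x_n)\, q(x_n)}{\sum_{k=1}^{K} N_k\, \hat{c}_k^{-1}\, q_k(x_n)}, \qquad \hat{c}_a = \sum_{n=1}^{N} \frac{q(x_n)}{\sum_{k=1}^{K} N_k\, \hat{c}_k^{-1}\, q_k(x_n)}. \tag{14}$$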

We can then write the estimator of the expectation as

$$\hat{A} = \frac{\hat{c}_A}{\hat{c}_a} = \sum_{n=1}^{N} W_{na}\, A(x_n), \tag{15}$$

and an estimator for the uncertainty as

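$$\delta^2 \hat{A} = \hat{A}^2 \left( \hat{\Theta}_{AA} + \hat{\Theta}_{aa} - 2 \hat{\Theta}_{Aa} \right), \tag{16}$$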

where the covariance matrix $\hat{\Theta}$ is now computed from the augmented W. Covariances between estimates of ⟨A⟩ at different thermodynamic states, or between two observables ⟨A⟩ and ⟨B⟩, can also be constructed by adding the appropriate columns to the covariance matrix and applying Eq. 10 to estimate the desired uncertainty. If the dimensionless free energies $\hat{f}_i$ have already been determined, computation of $\hat{A}$ for any A(x) and any q(x) does not require additional iterative solution of the self-consistent estimating equations.
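A short sketch of the expectation estimator for an unsampled target state is given below. It assumes the free energies of the sampled states are already known; for the hypothetical harmonic toy model used here they are available analytically and stand in for converged estimates. The function name, argument layout, and quantile-midpoint samples are illustrative assumptions, not part of the published method.

```python
import math
from statistics import NormalDist


def mbar_expectation(A_n, u_n, u_kn, N_k, f_k):
    """Estimate <A> in the state with reduced potential u_n from pooled samples.

    A_n[n], u_n[n]: observable and target reduced potential at sample n.
    u_kn[k][n], N_k[k], f_k[k]: reduced potentials, sample counts (all > 0)
    and previously determined free energies of the sampled states.
    """
    log_w = []
    for n in range(len(A_n)):
        terms = [math.log(N_k[k]) + f_k[k] - u_kn[k][n] for k in range(len(N_k))]
        c = max(terms)
        log_den = c + math.log(sum(math.exp(t - c) for t in terms))
        log_w.append(-u_n[n] - log_den)      # ln of q(x_n) / denominator
    shift = max(log_w)
    w = [math.exp(v - shift) for v in log_w]  # unnormalized weights for the target
    total = sum(w)                            # proportional to c_a
    return sum(wi * a for wi, a in zip(w, A_n)) / total  # ratio c_A / c_a


# Hypothetical toy model: sampled states with kappa = 1 and 4, target kappa = 2.
kappas = [1.0, 4.0]
N_k = [200, 200]
f_k = [0.5 * math.log(k / kappas[0]) for k in kappas]  # analytic for this model
normal = NormalDist()
x = [normal.inv_cdf((m + 0.5) / n) / math.sqrt(k)
     for k, n in zip(kappas, N_k) for m in range(n)]
u_kn = [[0.5 * k * xn * xn for xn in x] for k in kappas]
u_target = [0.5 * 2.0 * xn * xn for xn in x]
A_n = [xn * xn for xn in x]
A_hat = mbar_expectation(A_n, u_target, u_kn, N_k, f_k)  # exact <x^2> is 1/kappa = 0.5
```

Normalizing by the sum of the weights is equivalent to dividing the two normalization constants, so no additional iteration is needed for each new observable or target state.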

APPLICATION TO LABORATORY EXPERIMENTS

The MBAR estimator is not limited in application to data produced from simulation—it can also be applied to combine data from multiple equilibrium experiments in the presence of externally applied fields. To illustrate, we estimate the potential of mean force (PMF) of a DNA hairpin attached by double-stranded DNA (dsDNA) linkers to glass beads along the distance between the beads. The collection of equilibrium trajectories under a variety of constant force loads (corresponding to a linear external potential along the extension coordinate) for the DNA hairpin system 20R55∕4T collected by an optical double trap experiment5 was reported earlier.26 The complete dataset was obtained from Michael Woodside (National Institute for Nanotechnology, NRC and Department of Physics, University of Alberta) and consists of 16 trajectories at 296.15 K, each 5 s in duration and sampled with a period of 0.1 ms, totaling 50 000 samples each. Each trajectory was collected under a different constant force load with force loads ranging from 12.35 to 14.41 pN, with an estimated 10% relative error in the measurement of this force value.

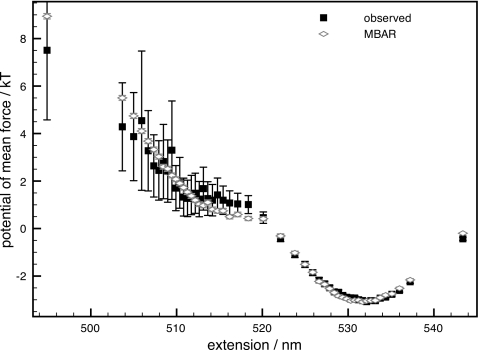

The data were analyzed to produce an optimal estimate of the PMF under a force load of 14.19 pN, a force load at which it is difficult to determine the entire PMF to high precision from the equilibrium trajectory collected at this force load alone (Fig. 1). The sampled extension range was divided into 50 unequally sized bins such that the number of samples per bin was equal in order to avoid regions with zero histogram counts, as would occur with equally spaced bins. Analysis with the MBAR estimator took 18 s on a standard 2.16 GHz Intel Core 2 Duo MacBook Pro, and the resulting error bars are more than an order of magnitude smaller than those derived from the single trajectory at this force load in the poorly sampled region of the PMF. Below, we describe how both types of analysis were performed.

Figure 1.

PMF of the DNA hairpin and dsDNA handles system 20R55∕4T under 14.19 pN external force. The PMF is computed directly from the data collected with that external force using the observed occupancy as described in the text (black filled squares) and also using the MBAR estimate with data from experiments from a range of external forces (unfilled gray diamonds), with error bars in the corresponding color. Note that in most cases, the MBAR error bars are less than the height of the corresponding symbol.

To estimate the PMF from the 14.19 pN trajectory alone (black filled squares in Fig. 1), the total number of counts Ni per histogram bin was determined, and the reduced PMF (in units of kT) fi computed up to an irrelevant additive constant from

$$f_i = -\ln \frac{N_i}{w_i}, \tag{17}$$

where wi is the relative width of bin i necessary to correct for the nonuniform bin sizes. The statistical uncertainty in the histogram count was estimated by standard methods (see Eq. (26) in Ref. 27),

| (18) |

where g is the statistical inefficiency of the extension time series, estimated from the extension autocorrelation function (see Sec. 5.2 of Ref. 27).

To estimate the PMF using the MBAR estimator, the dataset was first subsampled with an interval equal to the statistical inefficiency of each trajectory at constant force to produce a set of uncorrelated samples. The reduced potential energy for each state k under the experimental conditions corresponds to

$$u_k(x) = \beta \left[ U_0(x) + U_k(x) \right], \tag{19}$$

where U0(x) is the (unknown) potential energy function of the system in the absence of an externally applied biasing potential and $U_k(x)$ is the (known) externally applied biasing potential, given by

$$U_k(x) = -F_k\, z(x) + a_k, \tag{20}$$

where z(x) is the extension coordinate, Fk is the constant applied force along the positive z direction, and ak is a constant offset.

Because only differences ui(x)−uj(x) appear in the estimating equations [Eq. 11], the unknown components of the reduced potential energy cancel out and need not be considered:

$$u_i(x) - u_j(x) = -\beta (F_i - F_j)\, z(x) + \beta (a_i - a_j). \tag{21}$$

While the constant term β(ai−aj) involving the unknown zero potential intercepts will appear in the estimated state free energies, these do not influence computed expectations in Eq. 15, and are hence irrelevant.
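To make the cancellation concrete, the sketch below evaluates the reduced potential difference between two force loads at the experimental temperature of 296.15 K. It assumes a linear bias of the form −F z plus a constant (force along the positive z direction) and drops the constant offset term; the function names and the 10 nm extension are hypothetical values chosen only for illustration.

```python
K_B = 1.380649e-23   # Boltzmann constant in J/K (exact SI value)
PN_NM = 1.0e-21      # one pN*nm expressed in joules


def thermal_energy_pn_nm(temperature_kelvin):
    """Thermal energy kT in units of pN*nm."""
    return K_B * temperature_kelvin / PN_NM


def reduced_potential_difference(force_i, force_j, extension_nm,
                                 temperature_kelvin=296.15):
    """u_i(x) - u_j(x) for two constant-force biases, in units of kT.

    Assumes a bias of the form -F_k * z + a_k and omits the constant
    beta * (a_i - a_j), which does not affect computed expectations.
    Forces are in pN and the extension z is in nm.
    """
    beta = 1.0 / thermal_energy_pn_nm(temperature_kelvin)
    return -beta * (force_i - force_j) * extension_nm


kT = thermal_energy_pn_nm(296.15)
# Hypothetical sample: 10 nm extension, highest-precision target vs. lowest force load
du = reduced_potential_difference(14.19, 12.35, 10.0)
```

Only the measured extension and the known force loads enter, so the full set of reduced potential differences for all states can be tabulated directly from the recorded trajectories.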

The probability of finding the system in bin i under the conditions of interest is given by the expectation

$$p_i = \langle \chi_i(z) \rangle, \tag{22}$$

where χi(z) is an indicator function that assumes the value of 1 if the system is in bin i and zero otherwise. The PMF (in units of thermal energy kT) can then be computed from pi up to an irrelevant additive constant as

$$f_i = -\ln \frac{p_i}{w_i}, \tag{23}$$

and the uncertainties propagated by Eq. 10.

Because each PMF is only determined up to an arbitrary additive constant, the mean value of each PMF was subtracted before plotting. This is equivalent to choosing the additive constants so as to obtain an optimal least-squares rms fit between the two PMFs.
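The binning and PMF steps described above can be sketched as follows. This is an illustration under stated assumptions rather than the analysis code used for Fig. 1: the helper names are hypothetical, the number of samples is assumed to be divisible by the number of bins, and Gaussian quantile midpoints stand in for measured extensions. Bin probabilities are converted to a reduced PMF using the relative bin widths, and the mean is subtracted to fix the additive constant.

```python
import math
from statistics import NormalDist


def equal_count_edges(values, n_bins):
    """Bin edges chosen so that every bin holds the same number of samples."""
    ordered = sorted(values)
    per_bin = len(ordered) // n_bins   # assumes len(values) is divisible by n_bins
    edges = [ordered[0]]
    for b in range(1, n_bins):
        # place each interior edge halfway between neighbouring samples
        edges.append(0.5 * (ordered[b * per_bin - 1] + ordered[b * per_bin]))
    edges.append(ordered[-1])
    return edges


def reduced_pmf(probabilities, edges):
    """Reduced PMF -ln(p_i / w_i) with relative widths w_i, shifted to zero mean."""
    span = edges[-1] - edges[0]
    widths = [(hi - lo) / span for lo, hi in zip(edges[:-1], edges[1:])]
    f = [-math.log(p / w) for p, w in zip(probabilities, widths)]
    mean = sum(f) / len(f)
    return [v - mean for v in f]


# Hypothetical data: 1000 "extensions" drawn as quantile midpoints of a unit Gaussian
normal = NormalDist()
z = [normal.inv_cdf((m + 0.5) / 1000) for m in range(1000)]
edges = equal_count_edges(z, 10)
p = [0.1] * 10                 # equal occupancy by construction
pmf = reduced_pmf(p, edges)
```

Raw counts can be passed in place of probabilities, since the two differ only by a constant factor that the mean subtraction removes.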

It should be noted that this result corresponds to the PMF for the entire system connected to the glass beads, which includes not only the DNA hairpin but the two dsDNA linkers and their attachments to the glass beads. In other work, deconvolution or related methods have been applied to correct for the stretching of the linkers to estimate the PMF for the DNA hairpin alone.28

DISCUSSION

The MBAR estimator presented here provides a rapid and robust way to extract estimates of free energy differences and equilibrium expectations from multiple equilibrium samples of different thermodynamic states in a statistically optimal way. As the estimator is asymptotically efficient among a wide class of “bridge sampling” estimators,20 which includes EXP and BAR as members, the resulting estimates from MBAR will have the lowest (or equal) variance in the large sample limit.

While multiple histogram techniques11, 12, 13, 15 have been widely used for combining data from multistate simulations, the MBAR estimator supplants these methods in the majority of cases. Most importantly, it provides a reliable and inexpensive method for estimating the uncertainties in the resulting estimates and their correlations, which are critical for propagating uncertainties to quantities of interest. Additionally, the elimination of histograms avoids both the bias arising from discretization of continuous energies, as well as the computational overhead of constructing and storing high dimensional histograms.

In this framework, multiple histogram reweighting methods such as WHAM can be understood as a histogram kernel density estimator approximation to MBAR. In some applications, histograms can reduce the computational expense required for solving the estimating equations [Eq. 6] at the expense of introducing bias. When samples are distributed according to the Boltzmann weight, the estimator for the free energies [Eq. 11] is precisely Eq. (21) of Ref. 12 or Eq. 15 of Ref. 29, in both cases presented as a reduction of the histogram bin width to zero in the standard WHAM equations [Eqs. (19) and (20) of Ref. 12]. While the validity of this limit is dubious—the derivations in these references rely upon an estimate of the uncertainty in each histogram count which cannot be correct when the bins are nearly empty—the derivation of this equation from the extended bridge sampling estimator demonstrates for the first time that these equations are, in fact, asymptotically unbiased estimators of the true free energy differences.

The MBAR estimator can also be considered a multistate generalization of the BAR estimator.9 In deriving BAR, Bennett constructed an estimator from Eq. 5 directly, determining the single α(x) which minimized the variance in the estimator of the free energy difference between only two states. In deriving MBAR, summing over all states j and determining the functions αij(x) that minimize the covariance matrix of the estimator for ratios of normalization constants produces an optimal estimator for the multistate case. A proof of the equivalence of MBAR and BAR for two states can be found in Appendix E.

BAR and a recent pairwise multistate generalization of Bennett’s acceptance ratio method (which we shall refer to as PBAR) (Ref. 30) differ from MBAR in that they can also be applied to nonequilibrium work measurements between pairs of states, in addition to equilibrium reduced potential differences (instantaneous work measurements). However, PBAR constructs a total likelihood function from products of likelihood functions connecting pairs of states, assuming independence of all work measurements. For equilibrium samples, this means that a sampled configuration xn from a state i can only be used to provide information about the instantaneous work required to switch to a single other state j for use in the PBAR estimator, whereas in MBAR, each sampled xn can be used to provide information about all states. As a result, MBAR should require significantly fewer samples from each state to produce an estimate of equivalent precision with equilibrium data.

A Python implementation of the MBAR estimator described here is available under the GNU General Public License (GPL), and is provided online, along with several example applications, at https://simtk.org/home/pymbar.

ACKNOWLEDGMENTS

We are indebted to Michael Woodside for providing us with detailed datasets from Ref. 26 and helpful comments. We thank Evangelos A. Coutsias, Gavin E. Crooks, Fernando A. Escobedo, Edward H. Feng, Andrew Gelman, Andrew I. Jewett, Libusha Kelly, Jun S. Liu, David D. L. Minh, David L. Mobley, Frank M. Noé, Vijay S. Pande, Sanghyun Park, M. Scott Shell, Zhiqiang Tan, Matthew A. Wyczalkowski, and Huafeng Xu for enlightening discussions and constructive comments on this manuscript. J.D.C. gratefully acknowledges support from Ken A. Dill through NIH grant GM34993 and Vijay S. Pande through an NSF grant for Cyberinfrastructure (NSF CHE-0535616), and M.R.S. acknowledges support from Richard A. Friesner and an NIH NRSA Fellowship.

APPENDIX A: CORRELATED TIME SERIES DATA

While estimating equations based on Eq. 6 can be applied to correlated or uncorrelated datasets, provided that the empirical estimator

$$\langle g \rangle_i \approx \frac{1}{N_i} \sum_{n=1}^{N_i} g(x_{in}) \tag{A1}$$

remains asymptotically unbiased, the asymptotic covariance matrix estimator [Eq. 8] only produces sensible estimates when applied to uncorrelated datasets. Application to correlated datasets may produce severe underestimates of the true statistical uncertainty and should be avoided.

A set of uncorrelated configurations can be obtained from a correlated time series, such as is generated by a molecular dynamics or Metropolis Monte Carlo simulation, by subsampling the time series with an interval approximately equal to the equilibrium relaxation time for the system. Because the equilibrium relaxation time is difficult to compute for all but the simplest systems, we find the maximum of the statistical inefficiency g computed for several relevant observables [such as the reduced potential uk(x) in Boltzmann-weighted sampling, structural observables A(x) in the computation of potentials of mean force, etc.] provides a practical estimate useful for subsampling.

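The statistical inefficiency gA of the observable A(x) of a time series of length T is formally defined as (see Janke31 for a detailed exposition)

$$g_A \equiv 1 + 2\tau_A, \qquad \tau_A \equiv \sum_{t=1}^{T-1} \left( 1 - \frac{t}{T} \right) C_{AA}(t), \qquad C_{AA}(t) \equiv \frac{\langle A_n A_{n+t} \rangle - \langle A_n \rangle^2}{\langle A_n^2 \rangle - \langle A_n \rangle^2}, \tag{A2}$$

where τA denotes the integrated autocorrelation time, CAA(t) the normalized fluctuation autocorrelation function of the observable A, and $A_n \equiv A(x_n)$ the observable evaluated at the nth sample of the series.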

Direct application of these equations substituting the empirical estimator for the expectation can be problematic due to statistical noise. As a result, there exist a number of standard procedures27, 31, 32, 33 to improve the quality and stability of this estimate for physical systems, making use of properties such as stationarity. The fast method for estimating the integrated autocorrelation time described in Sec. 5.2 of Chodera et al.27 is implemented in the Python implementation of MBAR available online.
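A minimal sketch of estimating the statistical inefficiency and subsampling a series is shown below. The truncation of the autocorrelation sum at the first non-positive value is one common heuristic for controlling noise and is an assumption of this sketch; it is not necessarily the procedure of Ref. 27. The function names and the synthetic series (independent Gaussian values, each repeated five times to induce correlation) are likewise hypothetical.

```python
import math
import random


def statistical_inefficiency(series):
    """Estimate g = 1 + 2*tau from one time series.

    The sum over lags stops at the first lag whose normalized
    autocorrelation is not positive (a noise-control heuristic).
    """
    T = len(series)
    mean = sum(series) / T
    dev = [a - mean for a in series]
    var = sum(d * d for d in dev) / T
    if var == 0.0:
        raise ValueError("constant series has no defined autocorrelation")
    tau = 0.0
    for t in range(1, T - 1):
        c_t = sum(dev[n] * dev[n + t] for n in range(T - t)) / ((T - t) * var)
        if c_t <= 0.0:
            break
        tau += (1.0 - t / T) * c_t
    return max(1.0, 1.0 + 2.0 * tau)


def subsample(series, g):
    """Keep every ceil(g)-th element to obtain approximately uncorrelated samples."""
    return series[::math.ceil(g)]


rng = random.Random(2008)
base = [rng.gauss(0.0, 1.0) for _ in range(2000)]     # nearly uncorrelated
correlated = [v for v in base for _ in range(5)]      # each value held for 5 steps
g_base = statistical_inefficiency(base)
g_corr = statistical_inefficiency(correlated)
uncorrelated = subsample(correlated, g_corr)
```

For a series in which each independent value is held for five steps, the autocorrelation decays linearly over five lags, so the estimate should come out close to 5, while the unrepeated series should give a value close to 1.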

APPENDIX B: RECOMPUTATION OF REDUCED ENERGIES AT MULTIPLE STATES

Application of MBAR to simulation data requires uk(xn) to be evaluated for all K reduced potential functions uk(x) and all N uncorrelated sampled configurations xn, a total of KN reduced potential evaluations. In practice, this is not overly burdensome; the samples xn are generally produced by schemes that generate chains of highly correlated samples, such as thermostatted molecular dynamics or Monte Carlo simulations. Once the stored configurations are subsampled to eliminate correlations and produce an effectively uncorrelated sample (as described above), the number of remaining samples N≈T∕g is generally smaller than the number of samples T produced during the simulation by one or more orders of magnitude.

In cases where uk(x) differ only by a linear scaling parameter of one or more components (such as temperature or an external field parameter), computation of uk(xn) for all K states is a trivial operation. For other cases, such as when all samples are collected from thermodynamic states that only differ in the external biasing potential (e.g., linear or harmonic), we note that the reduced energy differences uk(x)−ui(x) involve only differences in the external biasing potential, which can often be rapidly computed. Section 5 contains an illustration of this in application to single-molecule pulling experiments.

APPENDIX C: EFFICIENT SOLUTION OF THE ESTIMATING EQUATIONS

A number of methods can be used to obtain a self-consistent solution to the free energy estimating equations obtained from combining Eqs. 6, 7

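$$\hat{c}_i = \sum_{n=1}^{N} \frac{q_i(x_n)}{\sum_{k=1}^{K} N_k\, \hat{c}_k^{-1}\, q_k(x_n)}, \tag{C1}$$

or in terms of the dimensionless free energies fi=−ln ci,

$$\hat{f}_i = -\ln \sum_{n=1}^{N} \frac{q_i(x_n)}{\sum_{k=1}^{K} N_k \exp[\hat{f}_k]\, q_k(x_n)}. \tag{C2}$$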

While any method capable of solving a coupled set of nonlinear equations may be employed, we describe two approaches to their solution: A straightforward yet reliable self-consistent iteration method and an efficient yet slightly less reliable Newton–Raphson method. Both methods are implemented in the Python implementation of the estimator available online.

Self-consistent iteration

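As in Ref. 12, the $\hat{f}_i$ can be obtained by self-consistent iteration of Eq. C2 (equivalently Eq. 11 for Boltzmann weights), using the last set of iterates $\hat{f}_i^{(n)}$ to produce a new estimated set of iterates $\hat{f}_i^{(n+1)}$,

$$\hat{f}_i^{(n+1)} = -\ln \sum_{m=1}^{N} \frac{q_i(x_m)}{\sum_{k=1}^{K} N_k \exp[\hat{f}_k^{(n)}]\, q_k(x_m)}, \tag{C3}$$

where m runs over the pooled samples. Convergence is assured regardless of the initial choice of $\hat{f}_i^{(0)}$, so it is sufficient to initialize the iteration by setting all $\hat{f}_i^{(0)} = 0$. Alternative choices of the initial reduced free energies may speed convergence. For example, we have found the choice

$$\hat{f}_k^{(0)} = -\frac{1}{N_k} \sum_{n=1}^{N_k} \ln q_k(x_{kn}), \tag{C4}$$

which, for Boltzmann weighting [qk(x)=exp[−uk(x)]] corresponds to the average reduced potential energy, usually works well. Additional inexpensive choices are possible, such as fixing $\hat{f}_1 = 0$ and estimating consecutive differences $\Delta \hat{f}_{k,k+1}$, k=1,2,…,K−1 using BAR.9, 10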

Cautions

For numerical reasons, it is convenient to constrain $\hat{f}_1 = 0$ during the course of iteration by subtracting $\hat{f}_1$ from the updated values in order to obtain a unique solution and prevent uncontrolled growth in the magnitude of the estimates. Iteration is terminated when the quantities of interest change by less than a fraction of the desired precision with additional iterations; a convenient rule of thumb is to terminate when the relative change in the estimated free energies between successive iterations falls below a small tolerance. Because the quantities of interest and the relative free energies can converge at different rates, it is advised that the former be monitored when possible.

It is also critical to avoid overflow in the computation of exponentials exp[a]. To compute log sums of the form $\ln \sum_{n=1}^{N} \exp[a_n]$, we can use the equivalent form

$$\ln \sum_{n=1}^{N} \exp[a_n] = c + \ln \sum_{n=1}^{N} \exp[a_n - c], \tag{C5}$$

where $c \equiv \max_n a_n$. To minimize underflow, the terms exp[an−c] can be summed in order from smallest to largest.
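The shifted log-sum can be written in a few lines. The sketch below is a plain standard-library illustration (the function name is an assumption); it factors out the largest exponent so that no term overflows and adds the shifted terms from smallest to largest.

```python
import math


def logsumexp(a):
    """ln(sum_n exp(a_n)) computed as c + ln(sum_n exp(a_n - c)) with c = max(a)."""
    c = max(a)
    # every shifted term lies in (0, 1]; add them from smallest to largest
    shifted = sorted(math.exp(v - c) for v in a)
    return c + math.log(sum(shifted))


big = logsumexp([1000.0, 1000.0])       # math.exp(1000.0) alone would overflow
small = logsumexp([-1000.0, -1000.0])   # a naive sum would underflow to ln(0)
```

Both calls return finite values, whereas exponentiating the arguments directly would fail in double precision.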

Newton–Raphson

A more efficient approach to determine the $\hat{f}_i$ is to employ a Newton–Raphson solver, which has the advantage of quadratic convergence (a near doubling of the number of digits of precision) with each iteration when sufficiently near the solution. Because each iteration requires inversion of a (K−1)×(K−1) matrix, this approach is only efficient if K is small, say fewer than 100 states, but this will be satisfied in a wide range of cases.

First, we write the estimating equations in terms of a set of functions gi(θ) such that the solution of the estimating equations [Eq. C2] corresponds to $g_i(\hat{\theta}) = 0$ for all i. Several such choices of both the function g(θ) and the parametrization (the normalization constants ci or their logarithms θi) are possible, and the efficiencies of approaches based on different choices may differ substantially, but we find it convenient to choose

$$g_i(\theta) \equiv N_i - N_i \sum_{n=1}^{N} W_{ni}(\theta), \tag{C6}$$

where Wni is defined in Eq. 9. It can easily be seen that $g_i(\theta) = 0$ is equivalent to the estimating equations:

$$1 = \sum_{n=1}^{N} W_{ni} = \sum_{n=1}^{N} \frac{\hat{c}_i^{-1}\, q_i(x_n)}{\sum_{k=1}^{K} N_k\, \hat{c}_k^{-1}\, q_k(x_n)}. \tag{C7}$$

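In Newton–Raphson, the function g(θ) is expanded about the current iterate θ(n) to first order

$$\mathbf{g}(\theta) \approx \mathbf{g}(\theta^{(n)}) + \mathbf{H}(\theta^{(n)}) \left( \theta - \theta^{(n)} \right), \tag{C8}$$

where H is the matrix of first derivatives of g,

$$H_{ij}(\theta) \equiv \frac{\partial g_i(\theta)}{\partial \theta_j}. \tag{C9}$$

We seek the next iterate θ(n+1) such that g(θ(n+1))=0, which yields the update equation

$$\theta^{(n+1)} = \theta^{(n)} - \left[ \mathbf{H}(\theta^{(n)}) \right]^{+} \mathbf{g}(\theta^{(n)}), \tag{C10}$$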

where + denotes the pseudoinverse. If all the qi(x) are unique and Ni>0 for all states, the standard matrix inverse may be substituted for the pseudoinverse.

Cautions

We only need to iterate over states for which Ni>0; the relative free energies of states where Ni=0, and expectation values at all states, can be determined after the self-consistent equations are solved to determine the relative free energies of states where Ni>0. Since we must constrain f1=0 to avoid drift during the process of free energy determination, we can simply use a modified form of Eq. C10 where rows and columns corresponding to the first state are omitted,

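$$\theta^{(n+1)}_{2:K} = \theta^{(n)}_{2:K} - \gamma \left[ \mathbf{H}(\theta^{(n)})_{(2:K,2:K)} \right]^{+} \mathbf{g}(\theta^{(n)})_{2:K}, \tag{C11}$$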

where γ∊(0,1] is a scalar multiplier that controls the rate of convergence. Since the initial iterate θ(1) may be far from the realm of quadratic convergence [i.e., outside the range at which the Taylor expansion in Eq. C8 holds], it is often safer to choose an initial γ⪡1. We have found γ=0.1 works well for the first step, with γ=1 used thereafter.

Even then, there are times when a reduced γ does not prevent numerical instability. The instability may be due to the initial iterate θ(0) being too far from the region of quadratic convergence, such that the first-order Taylor expansion in Eq. C8 is a poor approximation to g(θ). In this case, a better procedure for choosing the initial iterate may aid convergence. Starting with one or more iterations of the self-consistent method (Sec. C1), or using an initial estimate from application of BAR (Ref. 10) to sequential states, may be sufficient. Less commonly, failure to converge may result from limited numerical precision in the calculation of the pseudoinverse [H(θ(n))(2:K,2:K)]+. In all cases, we find that self-consistent iteration still works reliably to recover the estimator and can be used as a fallback procedure.

APPENDIX D: EFFICIENT COMPUTATION OF THE ASYMPTOTIC COVARIANCE MATRIX

Singular value decomposition

The N×K matrix W [Eq. 9 in the main paper] can be written in terms of its singular value decomposition

$$\mathbf{W} = \mathbf{U}\boldsymbol{\Sigma}\mathbf{V}^{T} \tag{D1}$$

where U is an N×N unitary matrix of left singular vectors (such that $\mathbf{U}\mathbf{U}^{T} = \mathbf{I}_N$), Σ is an N×K matrix containing L ⩽ K nonzero singular values along the diagonal, and V is a K×K unitary matrix of right singular vectors.

The estimator for the asymptotic covariance matrix [Eq. 8] can then be expanded to

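$$\hat{\boldsymbol{\Theta}} = \mathbf{W}^{T}(\mathbf{I}_N - \mathbf{W}\mathbf{N}\mathbf{W}^{T})^{+}\mathbf{W} = \mathbf{V}\boldsymbol{\Sigma}^{T}\mathbf{U}^{T}(\mathbf{I}_N - \mathbf{U}\boldsymbol{\Sigma}\mathbf{V}^{T}\mathbf{N}\mathbf{V}\boldsymbol{\Sigma}^{T}\mathbf{U}^{T})^{+}\mathbf{U}\boldsymbol{\Sigma}\mathbf{V}^{T} = \mathbf{V}\boldsymbol{\Sigma}^{T}(\mathbf{I}_N - \boldsymbol{\Sigma}\mathbf{V}^{T}\mathbf{N}\mathbf{V}\boldsymbol{\Sigma}^{T})^{+}\boldsymbol{\Sigma}\mathbf{V}^{T} \tag{D2}$$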

We partition the matrix of singular values Σ into a K×K diagonal region ΣK (of which only the first L⩽K diagonal entries will be nonzero) and an (N−K)×K zero matrix 0,

$$\boldsymbol{\Sigma} = \begin{bmatrix} \boldsymbol{\Sigma}_K \\ \mathbf{0} \end{bmatrix} \tag{D3}$$

We can then rewrite the above expression as

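$$\hat{\boldsymbol{\Theta}} = \mathbf{V}\boldsymbol{\Sigma}_K\,[\mathbf{I}_K - \boldsymbol{\Sigma}_K\mathbf{V}^{T}\mathbf{N}\mathbf{V}\boldsymbol{\Sigma}_K]^{+}\,\boldsymbol{\Sigma}_K\mathbf{V}^{T} \tag{D4}$$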

We note that pseudoinversion of the quantity in brackets now only requires $O(K^3)$ work, though this can be further reduced to $O(L^3)$ work if the reduced SVD is used.

The singular values ΣK and matrix of right singular vectors V can easily be computed from the eigenvalue decomposition of $\mathbf{W}^{T}\mathbf{W}$,

$$\mathbf{W}^{T}\mathbf{W} = \mathbf{V}\boldsymbol{\Sigma}_K^{2}\mathbf{V}^{T} \tag{D5}$$
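The route through the K×K eigenproblem can be sketched as below, assuming the covariance estimator has the form $\mathbf{W}^{T}(\mathbf{I}_N - \mathbf{W}\mathbf{N}\mathbf{W}^{T})^{+}\mathbf{W}$ (Eq. 8 of the main paper as understood here). The function names and the pseudoinverse cutoff of 1e-10 are hypothetical choices for illustration. The direct N×N form is included only to compare against on small inputs.

```python
import numpy as np

def covariance_svd(W, N_k, cutoff=1e-10):
    """Theta = V S [I_K - S V^T N V S]^+ S V^T, with W^T W = V S^2 V^T."""
    evals, V = np.linalg.eigh(W.T @ W)                  # symmetric K x K eigenproblem
    S = np.diag(np.sqrt(np.clip(evals, 0.0, None)))     # singular values of W
    inner = np.eye(len(N_k)) - S @ V.T @ np.diag(N_k) @ V @ S
    # second positional argument is the relative singular-value cutoff
    return V @ S @ np.linalg.pinv(inner, cutoff) @ S @ V.T

def covariance_direct(W, N_k, cutoff=1e-10):
    """Theta = W^T (I_N - W N W^T)^+ W; pseudoinverts an N x N matrix, for comparison only."""
    N = W.shape[0]
    return W.T @ np.linalg.pinv(np.eye(N) - W @ np.diag(N_k) @ W.T, cutoff) @ W
```

Only a K×K eigendecomposition and a K×K pseudoinverse are needed in `covariance_svd`, whereas `covariance_direct` pseudoinverts an N×N matrix.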

When W has full column rank

In the case that W has full column rank [because all qk(x), k=1,…,K are unique] we can make further progress. Using Eq. D4, we can write

$$\hat{\boldsymbol{\Theta}} = [(\mathbf{W}^{T}\mathbf{W})^{-1} - \mathbf{N}]^{+} \tag{D6}$$

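We note that $\mathbf{W}^{T}\mathbf{1}_N = \mathbf{1}_K$ and $\mathbf{W}\mathbf{N}\mathbf{1}_K = \mathbf{1}_N$, and so $\mathbf{W}^{T}\mathbf{W}\mathbf{N}\mathbf{1}_K = \mathbf{1}_K$. Multiplying by $(\mathbf{W}^{T}\mathbf{W})^{-1}$ gives $(\mathbf{W}^{T}\mathbf{W})^{-1}\mathbf{1}_K = \mathbf{N}\mathbf{1}_K$, and we observe that $[(\mathbf{W}^{T}\mathbf{W})^{-1} - \mathbf{N}]$ has rank K−1 with kernel $\mathbf{1}_K$.

We can supplement the quantity in brackets with $b\,\mathbf{1}_K\mathbf{1}_K^{T}$, where b is some nonzero scalar, without changing the covariance values computed from it, and make it invertible: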

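$$\hat{\boldsymbol{\Theta}} = [(\mathbf{W}^{T}\mathbf{W})^{-1} - \mathbf{N} + b\,\mathbf{1}_K\mathbf{1}_K^{T}]^{-1} \tag{D7}$$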

We choose $b = N^{-1}$ to ensure the inversion is well-conditioned (as in Ref. 19), producing

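$$\hat{\boldsymbol{\Theta}} = [(\mathbf{W}^{T}\mathbf{W})^{-1} - \mathbf{N} + N^{-1}\mathbf{1}_K\mathbf{1}_K^{T}]^{-1} \tag{D8}$$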
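For the full column rank case, the supplemented inverse can be sketched directly. The sketch assumes W has been evaluated at a converged solution, so that $\mathbf{W}^{T}\mathbf{1}_N = \mathbf{1}_K$ holds; it takes N to be the total number of samples, which is an assumption consistent with the later use of 1∕N. The function names and the optional argument `b` are hypothetical.

```python
import numpy as np

def covariance_full_rank(W, N_k, b=None):
    """Theta = [(W^T W)^{-1} - N + b 1 1^T]^{-1}; b defaults to 1/N (N = total samples)."""
    N_k = np.asarray(N_k, dtype=float)
    K = len(N_k)
    if b is None:
        b = 1.0 / N_k.sum()
    M = np.linalg.inv(W.T @ W) - np.diag(N_k) + b * np.ones((K, K))
    return np.linalg.inv(M)

def variance_of_differences(Theta):
    """var(f_i - f_j) = Theta_ii + Theta_jj - 2 Theta_ij for every pair (i, j)."""
    d = np.diag(Theta)
    return d[:, None] + d[None, :] - 2.0 * Theta
```

The individual entries of the returned matrix depend on b, but the variances of free energy differences computed from it should not, which gives a simple consistency check on an implementation.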

APPENDIX E: EQUIVALENCE OF MBAR AND BAR FOR TWO STATES

We start with Eq. 11. For ease of use, we define Δf=f2−f1, Δu(x)=u2(x)−u1(x), and M=ln N1∕N2. Without loss of generality, since the equations are symmetric, we examine the self-consistent equation for one of the two states,

| (E1) |

We make the additional observation that

which allows us to write Eq. E1 as

| (E2) |

which is precisely the equation for BAR presented in Shirts et al.10 with N1=nF, N2=nR, and the reduced potential difference in place of the work.
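The reduction from the two-state self-consistent equation to the BAR equation can be sketched as follows. This is a reconstruction under stated assumptions rather than the authors' own intermediate steps: it takes Eq. 11 in the form $\hat f_i = -\ln \sum_n e^{-u_i(x_n)} / \sum_k N_k e^{\hat f_k - u_k(x_n)}$, writes $F(x) = [1+e^{x}]^{-1}$ for the Fermi function, and introduces the shorthand $y_n = M + \Delta u(x_n) - \Delta f$ with $\Delta f = \hat f_2 - \hat f_1$.

```latex
\begin{align*}
\hat f_1 &= -\ln \sum_{n=1}^{N}
  \frac{e^{-u_1(x_n)}}{N_1 e^{\hat f_1 - u_1(x_n)} + N_2 e^{\hat f_2 - u_2(x_n)}} \\
\Rightarrow\quad 1 &= \sum_{n=1}^{N} \frac{1}{N_1 + N_2\, e^{\Delta f - \Delta u(x_n)}}
  = \frac{1}{N_1} \sum_{n=1}^{N} F(-y_n)
  && \text{(using } N_2/N_1 = e^{-M}\text{)} \\
\Rightarrow\quad \sum_{n \in \text{state 1}} \bigl[1 - F(-y_n)\bigr]
  &= \sum_{n \in \text{state 2}} F(-y_n)
  && \text{(using } N_1 = \textstyle\sum_{n \in \text{state 1}} 1\text{)} \\
\Rightarrow\quad 0 &= \sum_{n \in \text{state 1}} \frac{1}{1 + e^{\,M + \Delta u(x_n) - \Delta f}}
  - \sum_{n \in \text{state 2}} \frac{1}{1 + e^{-[M + \Delta u(x_n) - \Delta f]}}
  && \text{(using } 1 - F(-y) = F(y)\text{)}
\end{align*}
```

The last line has the BAR form, with the sum over the N1 samples from state 1 playing the role of the forward measurements and the sum over the N2 samples from state 2 that of the reverse measurements.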

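We now examine the expression for the variance limited to two states. When the two thermodynamic states are not identical, W will have full rank, and the asymptotic covariance matrix can be written as [see Eq. D7 above]

$$\hat{\boldsymbol{\Theta}} = [(\mathbf{W}^{T}\mathbf{W})^{-1} - \mathbf{N} + N^{-1}\mathbf{1}_K\mathbf{1}_K^{T}]^{-1}$$

where we have from Eq. 9

$$W_{ni} = \frac{\exp[\hat f_i - u_i(x_n)]}{\sum_{k=1}^{K} N_k \exp[\hat f_k - u_k(x_n)]}$$

Defining F as the Fermi function, $F(x) = [1+\exp(x)]^{-1}$, and $y_n = M + \Delta u(x_n) - (\hat f_2 - \hat f_1)$, then in the case of two states $W_{n1} = N_1^{-1}F(-y_n)$ and $W_{n2} = N_2^{-1}F(y_n)$.

The matrix $\mathbf{W}^{T}\mathbf{W}$ can then be written as

$$\mathbf{W}^{T}\mathbf{W} = \begin{bmatrix} N_1^{-2}\sum_{n=1}^{N} F(-y_n)^2 & (N_1N_2)^{-1}\sum_{n=1}^{N} F(y_n)F(-y_n) \\ (N_1N_2)^{-1}\sum_{n=1}^{N} F(y_n)F(-y_n) & N_2^{-2}\sum_{n=1}^{N} F(y_n)^2 \end{bmatrix} \tag{E3}$$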

If we represent the matrix elements as $(\mathbf{W}^{T}\mathbf{W})_{ij} = a_{ij}$, the determinant $|\mathbf{W}^{T}\mathbf{W}|$ will be $D = a_{11}a_{22} - a_{21}a_{12}$. The variance in ratios is actually independent of the multiplicative factor used in front of $\mathbf{1}_K\mathbf{1}_K^{T}$, as we will show below, so we will use b in place of 1∕N for generality. The inverse of the covariance matrix is then

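$$\hat{\boldsymbol{\Theta}}^{-1} = (\mathbf{W}^{T}\mathbf{W})^{-1} - \mathbf{N} + b\,\mathbf{1}_K\mathbf{1}_K^{T} = \begin{bmatrix} \dfrac{a_{22}}{D} - N_1 + b & -\dfrac{a_{12}}{D} + b \\ -\dfrac{a_{21}}{D} + b & \dfrac{a_{11}}{D} - N_2 + b \end{bmatrix} \tag{E4}$$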

The determinant will then be

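$$|\hat{\boldsymbol{\Theta}}^{-1}| = \frac{1}{D} - \frac{N_1 a_{11} + N_2 a_{22}}{D} + N_1 N_2 + \frac{b}{D}\left[a_{11} + a_{22} + a_{12} + a_{21} - (N_1 + N_2)D\right] \tag{E5}$$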

However, we note that $(\mathbf{W}^{T}\mathbf{W})^{-1} - \mathbf{N}$ is singular, and thus the sum of the first three terms in Eq. E5 equals zero. Additionally, because it has kernel $\mathbf{1}_K$, it must also satisfy $a_{22} - a_{21} - N_1 D = 0$ and $a_{11} - a_{12} - N_2 D = 0$. Because we know by symmetry that $a_{12} = a_{21}$, which we denote by simply a, this determinant then becomes

$$|\hat{\boldsymbol{\Theta}}^{-1}| = \frac{4ab}{D} \tag{E6}$$

We then obtain

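$$\hat{\boldsymbol{\Theta}} = \frac{1}{4ab}\begin{bmatrix} a + bD & a - bD \\ a - bD & a + bD \end{bmatrix} \tag{E7}$$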

The variance in f1−f2 will be Θ11+Θ22−2Θ12, which reduces to

$$\hat\Theta_{11} + \hat\Theta_{22} - 2\hat\Theta_{12} = \frac{D}{a} \tag{E8}$$

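which is indeed independent of b≠0. Since $a_{22} = N_1 D + a$ and $a_{11} = N_2 D + a$ (the kernel conditions above), given $D = a_{11}a_{22} - a^2$ (as noted above), we can find that $D = [1 - (N_1 + N_2)a]/(N_1 N_2)$. We then obtain

$$\hat\Theta_{11} + \hat\Theta_{22} - 2\hat\Theta_{12} = \frac{D}{a} = \frac{1}{N_1 N_2\,a} - \left(\frac{1}{N_1} + \frac{1}{N_2}\right) \tag{E9}$$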

This is the equation for the asymptotic covariance of free energies given for the BAR method in Shirts et al.10
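As a numerical illustration of the two-state case, the sketch below solves the BAR equation for Δf by bisection and evaluates the variance as D∕a, written as 1∕(N1N2a) − (N1+N2)∕(N1N2). It is a hedged example. The function names, the bisection bracket of ±50, and the input convention (Δu = u2 − u1 evaluated separately on the samples from each state) are assumptions made here.

```python
import numpy as np

def fermi(x):
    """Fermi function F(x) = 1 / (1 + exp(x))."""
    return 1.0 / (1.0 + np.exp(x))

def bar_two_state(du_1, du_2, lo=-50.0, hi=50.0, n_bisect=200):
    """du_1, du_2: Delta u = u2 - u1 on samples drawn from state 1 and state 2."""
    du_1 = np.asarray(du_1, dtype=float)
    du_2 = np.asarray(du_2, dtype=float)
    N1, N2 = len(du_1), len(du_2)
    M = np.log(N1 / N2)

    def residual(df):
        # two-state BAR equation; monotonically increasing in df
        return fermi(M + du_1 - df).sum() - fermi(-(M + du_2 - df)).sum()

    for _ in range(n_bisect):
        mid = 0.5 * (lo + hi)
        if residual(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    df = 0.5 * (lo + hi)

    # a = (W^T W)_12 with W_n1 = F(-y_n)/N1 and W_n2 = F(y_n)/N2
    y = M + np.concatenate([du_1, du_2]) - df
    a = (fermi(y) * fermi(-y)).sum() / (N1 * N2)
    variance = 1.0 / (N1 * N2 * a) - (N1 + N2) / (N1 * N2)
    return df, variance
```

Because the residual is monotonic in Δf, bisection needs no derivative information and cannot diverge, at the cost of more function evaluations than a Newton-type solver.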

References

1. Here, a thermodynamic state is defined by a combination of potential energy function (including any biasing potentials) and external thermodynamic parameters, such as temperature, pressure, and chemical potential, all within the same thermodynamic ensemble [e.g., canonical, isothermal-isobaric, and (semi)grand canonical].

2. Torrie G. M. and Valleau J. P., J. Comput. Phys. 23, 187 (1977). doi:10.1016/0021-9991(77)90121-8

3. Marinari E. and Parisi G., Europhys. Lett. 19, 451 (1992). doi:10.1209/0295-5075/19/6/002

4. Hukushima K. and Nemoto K., J. Phys. Soc. Jpn. 65, 1604 (1996). doi:10.1143/JPSJ.65.1604

5. Greenleaf W. J., Woodside M. T., Abbondanzieri E. A., and Block S. M., Phys. Rev. Lett. 95, 208102 (2005). doi:10.1103/PhysRevLett.95.208102

6. Zwanzig R. W., J. Chem. Phys. 22, 1420 (1954). doi:10.1063/1.1740409

7. Widom B., J. Chem. Phys. 39, 2808 (1963). doi:10.1063/1.1734110

8. Shirts M. R. and Pande V. S., J. Chem. Phys. 122, 144107 (2005). doi:10.1063/1.1873592

9. Bennett C. H., J. Comput. Phys. 22, 245 (1976). doi:10.1016/0021-9991(76)90078-4

10. Shirts M. R., Bair E., Hooker G., and Pande V. S., Phys. Rev. Lett. 91, 140601 (2003). doi:10.1103/PhysRevLett.91.140601

11. Ferrenberg A. M. and Swendsen R. H., Phys. Rev. Lett. 63, 1195 (1989). doi:10.1103/PhysRevLett.63.1195

12. Kumar S., Bouzida D., Swendsen R. H., Kollman P. A., and Rosenberg J. M., J. Comput. Chem. 13, 1011 (1992). doi:10.1002/jcc.540130812

13. Bartels C. and Karplus M., J. Comput. Chem. 18, 1450 (1997).

14. Kobrak M. N., J. Comput. Chem. 24, 1437 (2003). doi:10.1002/jcc.10313

15. Gallicchio E., Andrec M., Felts A. K., and Levy R. M., J. Phys. Chem. B 109, 6722 (2005). doi:10.1021/jp045294f

16. Park S., Ensign D. L., and Pande V. S., Phys. Rev. E 74, 066703 (2006). doi:10.1103/PhysRevE.74.066703

17. Vardi Y., Ann. Stat. 13, 178 (1985).

18. Gill R. D., Vardi Y., and Wellner J. A., Ann. Stat. 16, 1069 (1988).

19. Kong A., McCullagh P., Meng X.-L., Nicolae D., and Tan Z., J. R. Stat. Soc. Ser. B (Stat. Methodol.) 65, 585 (2003).

20. Tan Z., J. Am. Stat. Assoc. 99, 1027 (2004).

21. Mezei M., J. Comput. Phys. 68, 237 (1987). doi:10.1016/0021-9991(87)90054-4

22. Tsallis C., J. Stat. Phys. 52, 479 (1988). doi:10.1007/BF01016429

23. Doss H. makes this suggestion in the conference discussion of Ref. .

24. Bartels C., Chem. Phys. Lett. 331, 446 (2000).

25. Geyer C. J., School of Statistics, University of Minnesota, Minneapolis, Minnesota, Technical Report No. 568 (unpublished).

26. Woodside M. T., Behnke-Parks W. M., Larizadeh K., Travers K., Herschlag D., and Block S. M., Proc. Natl. Acad. Sci. U.S.A. 103, 6190 (2006). doi:10.1073/pnas.0511048103

27. Chodera J. D., Swope W. C., Pitera J. W., Seok C., and Dill K. A., J. Chem. Theory Comput. 3, 26 (2007). doi:10.1021/ct0502864

28. Woodside M. T., Anthony P. C., Behnke-Parks W. M., Larizadeh K., Herschlag D., and Block S. M., Science 314, 1001 (2006). doi:10.1126/science.1133601

29. Souaille M. and Roux B., Comput. Phys. Commun. 135, 40 (2001). doi:10.1016/S0010-4655(00)00215-0

30. Maragakis P., Spichty M., and Karplus M., Phys. Rev. Lett. 96, 100602 (2006). doi:10.1103/PhysRevLett.96.100602

31. Janke W., in Quantum Simulations of Complex Many-Body Systems: From Theory to Algorithms, edited by Grotendorst J., Marx D., and Muramatsu A. (John von Neumann Institute for Computing, Jülich, Germany, 2002), Vol. 10, pp. 423–445.

32. Swope W. C., Andersen H. C., Berens P. H., and Wilson K. R., J. Chem. Phys. 76, 637 (1982). doi:10.1063/1.442716

33. Flyvbjerg H. and Petersen H. G., J. Chem. Phys. 91, 461 (1989). doi:10.1063/1.457480