Abstract

How do comprehenders build up overall meaning representations of visual real-world events? This question was examined by recording event-related potentials (ERPs) while participants viewed short, silent movie clips depicting everyday events. In two experiments, it was demonstrated that presentation of the contextually inappropriate information in the movie endings evoked an anterior negativity. This effect was similar to the N400 component whose amplitude has been previously reported to inversely correlate with the strength of semantic relationship between the context and the eliciting stimulus in word and static picture paradigms. However, a second, somewhat later, ERP component—a posterior late positivity—was evoked specifically when target objects presented in the movie endings violated goal-related requirements of the action constrained by the scenario context (e.g., an electric iron that does not have a sharp-enough edge was used in place of a knife in a cutting bread scenario context). These findings suggest that comprehension of the visual real world might be mediated by two neurophysiologically distinct semantic integration mechanisms. The first mechanism, reflected by the anterior N400-like negativity, maps the incoming information onto the connections of various strengths between concepts in semantic memory. The second mechanism, reflected by the posterior late positivity, evaluates the incoming information against the discrete requirements of real-world actions. We suggest that there may be a tradeoff between these mechanisms in their utility for integrating across people, objects, and actions during event comprehension, in which the first mechanism is better suited for familiar situations, and the second mechanism is better suited for novel situations.

INTRODUCTION

When we see a neighbor working in her garden, or the host of a birthday party decorating the cake, we effortlessly integrate information about the individual people, objects, and actions to form coherent semantic representations of these events as a whole. The goal of the current study was to examine the neurocognitive processes underlying such visual event comprehension. Event-related potentials (ERPs) were recorded while participants watched depictions of real-world events in short, silent movie clips. The high temporal resolution of ERPs allowed the time course of the rapid comprehension mechanisms to be tracked in real time, and differences in the polarity and scalp topography of the ERPs made it possible to distinguish between the distinct neurocognitive processes engaged.

ERPs have been used extensively to study on-line semantic processing. A large body of experiments has examined the modulation of one particular ERP component, the N400, during language comprehension. The N400 is a negative-going waveform that starts at approximately 300 msec and peaks at approximately 400 msec after presentation of a content word. The amplitude of this waveform is larger to critical words that are semantically unrelated (vs. related) to preceding single words in semantic priming paradigms (e.g., Bentin, McCarthy, & Wood, 1985), and that are incongruous (vs. congruous) with their preceding sentence contexts (e.g., Kutas & Hillyard, 1980, 1984) or global discourse contexts (e.g., Camblin, Gordon, & Swaab, 2007; van Berkum, Zwitserlood, Hagoort, & Brown, 2003; van Berkum, Hagoort, & Brown, 1999). In addition to frank semantic anomalies (e.g., “He takes his tea with sugar and socks”), the N400 is sensitive to inconsistencies with comprehenders’ real-world knowledge of what is common (e.g., “He takes his tea with sugar and ginger”; Kutas & Hillyard, 1980) or factually true (e.g., “American school buses are blue”; Hagoort, Hald, Bastiaansen, & Petersson, 2004). Of particular relevance, the amplitude of the N400 is inversely correlated with the strength of associative and categorical semantic relationship between the eliciting word and a given context both in word lists and sentences (Grose-Fifer & Deacon, 2004; Federmeier & Kutas, 1999; Kutas & Hillyard, 1980, 1989). Taken together, these findings are consistent with the theory that comprehenders store information about the real world within semantic memory in a structured fashion such that representations of individual concepts have connections of varying strength, depending on factors such as their feature similarity or how often they have been experienced in the same context (e.g., Hutchison, 2003; Zacks & Tversky, 2001; Fischler & Bloom, 1985; Bower, Black, & Turner, 1979).
These graded connections in semantic memory networks are thought to be continuously accessed and used during on-line language comprehension, and the N400 waveform appears to reflect a process whereby the meaning of an incoming stimulus is mapped onto the corresponding field in semantic memory. The tighter the link between the representation of this eliciting item and the specific semantic-memory field activated by the preceding context, the less demanding this mapping process, and the smaller the amplitude of the N400.

Several studies have also examined the modulation of ERPs that are evoked by static visual images. Just as in language comprehension, increased N400s have been elicited to pictures of objects that are incongruous (vs. congruous) with a single picture prime (e.g., McPherson & Holcomb, 1999; Barrett & Rugg, 1990), with the context of a surrounding scene (Ganis & Kutas, 2003), and with a verbal sentence context (Federmeier & Kutas, 2001; Ganis, Kutas, & Sereno, 1996). A larger N400 has been elicited also to visual scenes that are incongruous (vs. congruous) with sequentially presented static pictures conveying stories (West & Holcomb, 2002). Furthermore, the amplitude of the N400 has been reported to be inversely correlated with the strength of associative and categorical semantic relationship between the context and the target picture (Federmeier & Kutas, 2001; McPherson & Holcomb, 1999). These results suggest that the comprehension of both language and the visual real world might rely on similar mechanisms that access graded semantic memory networks.

Despite many similarities between these negative-going ERPs to words and pictures, there are also differences. In some of the above picture studies, a second functionally similar but earlier negativity, the N300, has been reported to overlap with the more traditional N400 (e.g., McPherson & Holcomb, 1999). Moreover, the N300/N400 complex elicited by pictures has a more anterior scalp topography than the N400 observed in most language studies (e.g., McPherson & Holcomb, 1999). These distributional differences may reflect neuroanatomical distinctions between semantic memory networks accessed by pictures and words1 (e.g., Sitnikova, West, Kuperberg, & Holcomb, 2006; McPherson & Holcomb, 1999). Depictions of the real world conveyed in pictures might access their corresponding representations faster than more symbolic words.

For some time, the N400 was considered the primary ERP component indexing semantic processing. However, a series of recent language studies (e.g., Kuperberg, Kreher, Sitnikova, Caplan, & Holcomb, 2007; Kuperberg, Caplan, Sitnikova, Eddy, & Holcomb, 2006; van Herten, Chwilla, & Kolk, 2006; Kim & Osterhout, 2005; van Herten, Kolk, & Chwilla, 2005; Hoeks, Stowe, & Doedens, 2004; Kolk, Chwilla, van Herten, & Oor, 2003; Kuperberg, Sitnikova, Caplan, & Holcomb, 2003) have uncovered situations in which the N400 is strikingly insensitive to outright semantic incongruities. In many of these studies, ERPs were recorded to verb-argument violations, for example, “So as everyone gets a piece the cake must cut ....” The incongruence in such sentences lies in that their syntax assigns the thematic role of an agent (the entity that is performing the central action) around the target verb (e.g., “cut”) to an inanimate subject noun phrase (NP; e.g., “the cake”). However, an inanimate NP cannot occupy this role (e.g., an inanimate cake cannot execute a cutting action). These violations elicited a P600—a positive-going ERP component with a somewhat later time course than the N400, peaking at around 600 msec after word onset.

The neurocognitive processes reflected by this P600 evoked to verb-argument violations and the precise situations under which it is evoked are currently under debate (for a review, see Sitnikova, Holcomb, & Kuperberg, 2008; Kuperberg, 2007). Time-course and scalp-topography similarities between this effect and the P600 effect evoked by syntactic ambiguities and frank anomalies (e.g., Osterhout, Holcomb, & Swinney, 1994; Osterhout & Holcomb, 1992) suggest a possibility that verb-argument violations are recognized by the processing system as syntactic anomalies (e.g., in a sentence, “So as everyone gets a piece the cake must be cut ...”; Kim & Osterhout, 2005). An alternative possibility is that the positivity effect to verb-argument violations reflects modulation of another component (we will term it a late positivity) that is evoked by a semantic integration analysis. On this account, it might reflect the process whereby the semantic properties of NPs are evaluated against the minimal requirements of the verb (e.g., the verb “cut” requires that its agent be able to execute volitional actions; Sitnikova, Holcomb, & Kuperberg, 2008; Sitnikova, 2003). Thematic integration between the target verb and its NP arguments on the basis of certain verb-based semantic requirements has been suggested to be reflected by a posterior positivity evoked between approximately 200 and 600 msec after the verb presentation (e.g., Bornkessel, Schlesewsky, & Friederici, 2002, 2003). The positivity evoked by the verb-argument violations may reflect continuing efforts to integrate the target verb with the preceding subject NP. Of note, employing these discrete, “rule-like” semantic requirements of verbs is fundamentally different from the type of integration that accesses graded connections between concepts in semantic memory and that is reflected by the N400. 
This analysis takes into account only a subset of the semantic properties of NPs (previously termed “affordances”; e.g., Kaschak & Glenberg, 2000; Gibson, 1979) that enable the entity denoted by an NP to play its part in the action denoted by a verb2 (e.g., when integrating the verb “cut” with its subject NP, this mechanism would not consider differences between NPs “a butcher” and “a mailman”).

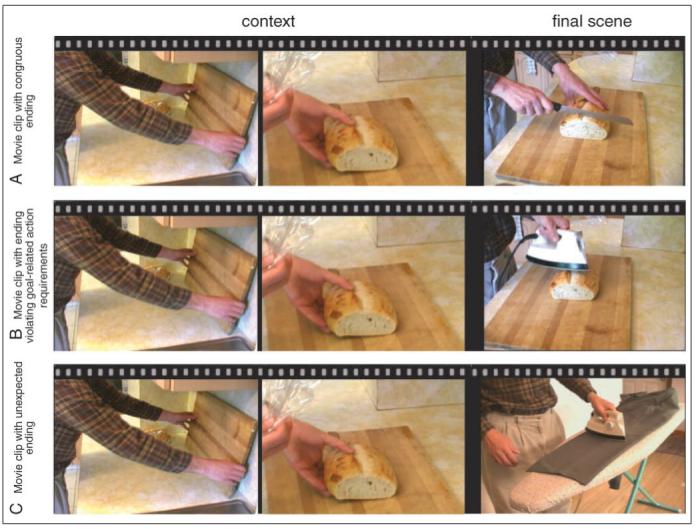

We recently conducted an ERP study that provided some support for the engagement of an analogous semantic integration analysis on the basis of minimal requirements of achieving actions’ goals in the absence of language, during the comprehension of short, silent video vignettes (Sitnikova, Kuperberg, & Holcomb, 2003). In this study, movie clips of common goal-directed, real-world activities ended either with a congruous or incongruous final event. For example, in one of the scenarios, the lead-up context depicted a man placing a cutting board and a loaf of bread on a kitchen counter. In the congruous condition, the final event involved the man cutting the bread with a knife (see Figure 1A). In the incongruous condition, the final scene showed the man sliding an electric iron across the loaf of bread (Figure 1B). As the target object introduced in the incongruous final scenes was unexpected in the conveyed contextual activity, it was not surprising that these scenes evoked an anteriorly distributed N400 effect relative to the congruous scenes where the context-appropriate object was used (analogous to the N400 effect previously seen in studies using static pictures). Of most interest, in addition to evoking an enhanced frontal N400, these incongruous scenes evoked a large posterior late positivity relative to congruous scenes. In our original report, we speculated that this increased late positivity might not reflect the unexpectedness of the target object within the context per se, but rather some additional semantic integration difficulty. Here, in light of the recently accumulated language literature reviewed above, we propose a more specific hypothesis: just as in language, visual real-world events evoke a late positivity reflecting a semantic integration analysis on the basis of discrete semantic representations of what is necessary to achieve the goal of the target action.
In our movie paradigm, the contextual events constrained the central action in the final scene, but the target object did not have an affordance for this action (e.g., the electric iron does not have a sharp-enough edge to be used in cutting bread).

Figure 1.

Frames taken from movie clips used in the study. Shown for each movie type are two frames illustrating events depicted as a context (“a man places a cutting board on a kitchen counter, and then places a loaf of bread on the cutting board”), followed by a single frame illustrating the congruous final scene (A: “the man cuts off a piece of bread with a knife”), the incongruous final scene that was both unexpected and violated goal-related action requirements (B: “the man slides an electric iron across the loaf of bread”), and the incongruous final scene that, even though unexpected, did not violate the goal-related requirements of its central action (C: “the man uses an electric iron to press wrinkles from his pants”). Note, the same set of congruous movies (Type A) was used both in Experiments 1 and 2, whereas incongruous movies of Types B and C were used in Experiments 1 and 2, respectively. Sample movie clips and verbal descriptions of the full movie set may be viewed at www.nmr.mgh.harvard.edu/~tatiana/movies.

The aim of the present study was to determine whether the neurocognitive processes reflected by the N400 and the late positivity during real-world comprehension can be dissociated. Based on the arguments put forth above, this should be possible if comprehension of visual real-world events is mediated by two separate processing streams. The first analysis, reflected by the N400, is proposed to map perceptual input onto graded connections between concepts in semantic memory. In contrast, the late positivity is proposed to reflect semantic integration on the basis of discrete goal-related requirements of real-world actions. In two experiments, ERPs were recorded to the final scenes in movie clips that we hypothesized would differentially engage these two neurocognitive processes.

In Experiment 1, just as in the Sitnikova et al. (2003) study, the incongruous movie scenes introduced a target object that was both semantically unrelated to the context and violated goal-related requirements of the contextually constrained target action. However, unlike in that earlier study, which used continuous depictions of real-world activities, each movie clip now included a cut between the context and the final movie scene. In Experiment 2, the incongruous scenes introduced a different type of anomaly in the same movie scenarios. These final events, although semantically unrelated to the preceding context, did not violate the requirements of their central action (e.g., the final scene might show a man use an electric iron to press wrinkles from his pants, presented after the contextual shot showing the man place a cutting board and a loaf of bread on a kitchen counter; see Figure 1C).

It was predicted that, because the N400 reflects the process of mapping the incoming information onto the corresponding fields in graded semantic memory networks, its amplitude would increase in response to both types of incongruous scenes relative to congruous movie endings. This is because, in both experiments, the incongruous final scenes introduced information that is inconsistent with the semantic representation of the preceding contextual events. In addition, it was predicted that, if the late positivity is specifically sensitive to the violation of goal-related requirements of actions (and not simply the unexpectedness of the target objects within their contextual scenarios), the amplitude of this ERP would be larger in response to the incongruous scenes with such violations (Experiment 1) than without such violations (Experiment 2).

EXPERIMENT 1: INCONGRUOUS SCENES WITH VIOLATIONS OF GOAL-RELATED REQUIREMENTS OF ACTIONS

In the original Sitnikova et al. (2003) study, ERPs were recorded to silent movie clips that showed real-world events continuously. After the initial contextual events were presented, the actor would reach out for something off-camera and bring a target object into view. This object would then be engaged in the final event’s central action. ERP recordings were time-locked to the moment of the first discernible appearance of the target object in the movie clip (e.g., when a fragment of a knife blade became visible). In the current experiment, a cinematographic cut was introduced between the context and the final (target) event. ERPs were time-locked to the onset of these final scenes.

The “cutting” technique was originally designed by cinematographers to mimic the viewers’ natural tendency to take in perceived events over separate intersaccade fixations that key on different aspects of the visual environment (e.g., Bobker & Marinis, 1973). Just as, across saccades, comprehenders tend to focus attention on different components of visual space (e.g., after taking in the global scene outline, they might focus on the hands of the person in the center), movie shots before and after a cut usually show different perspectives on the conveyed events. Cuts have been routinely used in commercial motion pictures and TV shows for several decades and are now recognized as an effective tool to enhance the comprehension of visual events (Schwan, Garsoffky, & Hesse, 2000; Carroll & Bever, 1976).

Cuts were used in the current experiment for two reasons. First, in the Sitnikova et al. (2003) paradigm that presented events continuously, the time-locking of ERPs to the cognitive processes of interest was imprecise. Because the critical objects were brought into view gradually, the timing of their appearance in a movie clip was variable across trials (i.e., the target object could become fully visible over a period as short as 100 msec [3 frames] or as long as 433 msec [13 frames]). Moreover, the onset of the central action in the final events, relative to the ERP time-locking point, also differed considerably across trials (233-1000 msec). As a result of this variable delay after the ERP time-locking point, the cognitive processes of interest underlying semantic integration might not have been well aligned across trials, which, in turn, would lead to ERP effects smeared over time. Indeed, this may be why an N300 effect, previously observed to static picture stimuli (McPherson & Holcomb, 1999), was not significant to continuously presented incongruous events in the Sitnikova et al. study (i.e., part of what was identified as the N400 might actually be due to N300 activity). In order to achieve better ERP time-locking to the evoked cognitive processes, the cuts were introduced in the present study so as to ensure that, immediately at the onset of the final scenes, the target object was fully visible as it was engaged in the central action (e.g., the man cuts the bread with a fully visible knife immediately at the onset of the final scene).
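The frame counts and durations quoted above are related through the playback rate. As a minimal sketch, assuming that the 30 frames-per-second rate reported in the Procedure also applied to the earlier continuous clips (the function name is ours, for illustration only):

```python
FRAME_RATE_HZ = 30  # assumed playback rate, taken from the Procedure section


def frames_to_msec(n_frames: int, frame_rate_hz: float = FRAME_RATE_HZ) -> int:
    """Convert a number of video frames to a duration in whole milliseconds."""
    return round(n_frames * 1000 / frame_rate_hz)


shortest_msec = frames_to_msec(3)   # fastest case: object fully visible within 3 frames
longest_msec = frames_to_msec(13)   # slowest case: object fully visible within 13 frames
```

Under this assumption, 3 and 13 frames correspond to the 100 and 433 msec bounds given in the text.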

The second reason between-shot cuts were used in the present experiment was to allow for a direct comparison of ERP effects evoked by the incongruous scenes violating goal-related action requirements to ERP effects elicited by the comparable incongruous final scenes without such violations (in Experiment 2). To be able to construct the movie clips of Experiment 2, two separate movie shots needed to be combined and so cuts were necessary. It was, therefore, important to examine any influence of the cut itself on ERP activity. By using movie clips with cuts in both Experiments 1 and 2, it was possible to control for any such effects across the two experiments.

If, as expected, the cuts do not disrupt naturalistic comprehension, then just as in the Sitnikova et al. (2003) study, incongruous final scenes presenting target objects that are semantically unrelated to the context and violate goal-related action requirements should evoke both an enhanced N400 and an enhanced late positivity relative to congruous final scenes. Moreover, presenting the target scenes after a cut should lead to a better alignment of ERP waveforms across the trials, and would allow a clearer characterization of the onset latencies of the observed ERP effects.

Methods

Participants

Fifteen right-handed, native English-speaking volunteers (8 men, 7 women; mean age = 19.5 years) who had normal or corrected-to-normal vision served as participants. Although we originally recruited sixteen participants, ERP data from one female participant were unusable due to excessive ocular artifact.

Materials

The stimuli were 80 pairs of color movie clips (see Figure 1A and B), filmed using a digital Canon GL1 video camera and edited using Adobe Premiere 6.0 software. In all clips, two or more events from a common goal-directed real-life activity (e.g., shaving, cooking) that involved a single main actor were presented as a context and were followed by a final scene showing the actor manipulating a target object. The context and final movie shots were separated by a cut, and were spliced together with no blank frames in between. We were careful to ensure that the scene change at the cut would appear natural and not disruptive: Across the cut, the events were shown from a different viewpoint (e.g., camera angle might shift from a long distance shot to a close-up). All target objects (e.g., a knife) were clearly visible and were engaged in the central action (e.g., cutting) at the onset of the final scene, but did not appear in the clip before the final scene. ERP recordings were time-locked to the onset of the final scenes.

The movie clips were constructed in pairs such that the same context was used with either a congruous final scene or an incongruous final scene. In the incongruous final scenes, the target object was not commonly used in the conveyed contextual activity and did not have an affordance for the central action constrained by the preceding events. This was confirmed in a pretest study: A separate group of 18 healthy participants (9 women, 9 men; mean age 19) watched 80 movie clips that showed only the context part of each scenario, and were asked to describe the event (action and engaged objects) that they would expect to take place next in each clip. Results of this pretest showed that the target objects used in the incongruous movie endings in the ERP experiment had “0” cloze probability within the clip context. Moreover, these objects did not have affordances for any of the actions that were listed by the pretest participants for a given contextual scenario.

The movie clips were counterbalanced such that an object used in the congruous final scene in one scenario was used in the incongruous final scene in another scenario. The clips were arranged into two sets, each consisting of 40 congruous and 40 incongruous items. The assignment of clips to sets was such that no context or final scene shot was included twice in one set, although across sets all contexts and all target objects appeared in both the congruous and incongruous conditions.
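One way to realize this kind of counterbalancing is a simple two-list split. The sketch below is illustrative only: the scenario indices, the choice of which half is congruous in Set 1, and the function name are assumptions, not the assignment actually used in the study.

```python
from typing import List, Tuple

Clip = Tuple[int, str]  # (scenario index, condition shown for that scenario)


def build_movie_sets(n_scenarios: int = 80) -> Tuple[List[Clip], List[Clip]]:
    """Split scenario pairs into two sets with complementary conditions.

    Each scenario (context) appears once per set, congruous in one set
    and incongruous in the other.
    """
    half = n_scenarios // 2
    set1 = [(s, "congruous" if s < half else "incongruous")
            for s in range(n_scenarios)]
    set2 = [(s, "incongruous" if s < half else "congruous")
            for s in range(n_scenarios)]
    return set1, set2


set1, set2 = build_movie_sets()
```

With 80 scenario pairs, each set contains 40 congruous and 40 incongruous clips, and no context is repeated within a set.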

Procedure

Participants were seated in a comfortable chair in a sound-attenuated room approximately 125 cm from a 17-in. computer monitor used for stimulus presentation. Eight participants viewed Movie Set 1 and eight participants viewed Set 2. (ERP data from one individual were unusable due to excessive ocular artifact. We analyzed the remaining data in two ways: (1) in 15 participants and (2) in 14 participants, so that each movie set was used the same number of times. Because no differences were found in the results, here we report the analysis in 15 participants.) Movie clips, subtending approximately 4° of visual angle and centered on a black background, were shown without sound at a rate of 30 frames per second and ranged between 4 and 29 sec in duration (mean = 11 sec); the final scene lasted 2 sec in all movie clips.
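For readers who wish to reproduce the display geometry, the on-screen extent implied by a given visual angle follows from the viewing distance. The sketch below is a worked calculation under the assumption that the 4° figure describes a single dimension of the clip viewed head-on; the text does not say whether it refers to width or height, and the function name is ours.

```python
import math


def stimulus_extent_cm(visual_angle_deg: float, viewing_distance_cm: float) -> float:
    """On-screen extent that subtends the given visual angle at the given distance."""
    return 2 * viewing_distance_cm * math.tan(math.radians(visual_angle_deg) / 2)


# Approximately 4 degrees viewed from approximately 125 cm: roughly 8.7 cm.
extent_cm = stimulus_extent_cm(4.0, 125.0)
```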

Participants progressed across the clips at their own pace and were instructed to keep their eyes in the center of the screen. At the “?” prompt that appeared 100 msec after the offset of each clip, participants were asked to press a “Yes” or “No” button depending on whether the presented goal-directed sequence of events would commonly be witnessed in everyday life. Six additional clips were used in a practice session.

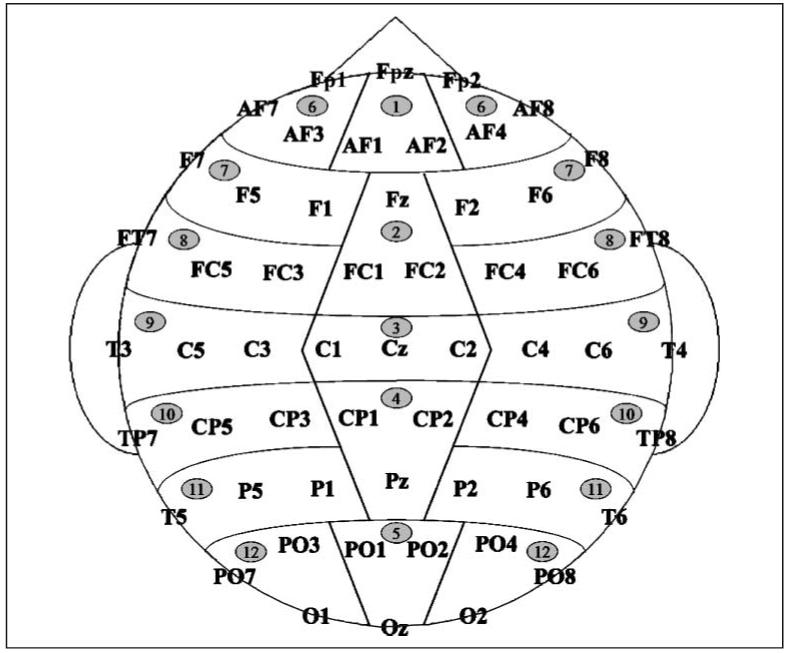

The electroencephalogram (bandpass, 0.01 to 40 Hz, 6 dB cutoffs; sampling rate, 200 Hz) was recorded from 57 active tin scalp electrodes (see diagram in Figure 2) held in place by an elastic cap (Electro-Cap International, Eaton, OH), and from the electrodes located below and at the outer canthus of each eye and over the right mastoid bone. All active electrodes were referenced to the left mastoid site.

Figure 2.

Electrode montage: 17 standard International 10-20 system locations and 24 extended 10-20 system locations were supplemented with 16 additional locations including AF3, AF1, AF2, and AF4 sites (placed at increments of 20% of the distance between AF7 and AF8); F5, F1, F2, and F6 sites (20% increments of F7-F8 distance); P5, P1, P2, and P6 sites (20% increments of T5-T6 distance); and PO1, PO2, PO3, and PO4 sites (20% increments of PO7-PO8 distance). Also shown are scalp region columns used in statistical analyses. The midline ANOVAs included five levels of region factor (1 = anterior-frontal; 2 = frontal; 3 = central; 4 = parietal; 5 = occipital). The lateral ANOVAs included seven levels of region factor (6 = anterior-frontal; 7 = frontal; 8 = fronto-central; 9 = central; 10 = centro-parietal; 11 = parietal; 12 = occipital), and two levels of hemisphere factor (left and right). Both midline and lateral analyses also included three levels of electrode factor, indicating precise electrode locations.

For the trials with correct behavioral responses on the movie classification task, the ERPs (epoch length = 100 msec before the final scene onset to 1600 msec after the final scene onset) were selectively averaged off-line across trials from each condition. The trials with ocular artifacts (activity >60 μV below eyes or at the eye canthi) were excluded: 10% of trials were rejected in the congruous condition and 11% in the incongruous condition. The average ERPs were re-referenced to a mean of the left and right mastoids.
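The averaging steps described above can be sketched as follows. This is an illustration under stated assumptions, not the analysis code used in the study: the data layout (one list of channels-by-samples per trial, in microvolts, already baseline-corrected), the use of peak absolute amplitude as the artifact criterion, and all names are ours. The re-referencing step relies on the fact that, under a left-mastoid reference, the left mastoid is zero by definition, so the mean of the two mastoids equals half the right-mastoid channel.

```python
from statistics import fmean
from typing import List, Sequence

Epoch = List[List[float]]  # one trial: channels x samples, in microvolts


def average_erp(epochs: Sequence[Epoch], correct: Sequence[bool],
                eog_channels: Sequence[int], right_mastoid: int,
                threshold_uv: float = 60.0) -> List[List[float]]:
    """Average correct, artifact-free trials and re-reference to mean mastoids."""
    kept = []
    for epoch, is_correct in zip(epochs, correct):
        # Assumed criterion: reject if any ocular channel exceeds the threshold.
        eog_peak = max(abs(v) for ch in eog_channels for v in epoch[ch])
        if is_correct and eog_peak <= threshold_uv:
            kept.append(epoch)
    if not kept:
        raise ValueError("no artifact-free trials with correct responses")
    n_channels, n_samples = len(kept[0]), len(kept[0][0])
    erp = [[fmean(trial[ch][t] for trial in kept) for t in range(n_samples)]
           for ch in range(n_channels)]
    # Mean-mastoid reference: subtract half of the right-mastoid waveform.
    half_rm = [0.5 * v for v in erp[right_mastoid]]
    return [[v - h for v, h in zip(channel, half_rm)] for channel in erp]
```

The function would be called once per participant and condition, so that congruous and incongruous averages are formed from separate trial lists.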

Analysis

Average ERPs were quantified by calculating the mean amplitudes (relative to the 100-msec baseline preceding the final scene) within two time windows (0-150 msec, 150-250 msec) corresponding to the early sensory/perceptual processes, and within three time windows (250-350 msec, 350-600 msec, 600-1000 msec) that roughly corresponded to the windows previously used in examining the N300, N400, and late positivity components. In addition, as the present effects were prolonged over time, we also included a later 1000-1500 msec epoch. For each of these time windows, two omnibus analyses of variance (ANOVAs) for repeated measures were conducted in order to examine midline and lateral columns of scalp regions: Scalp topography factors (region, hemisphere, and electrode) are defined in Figure 2. Each analysis also had a congruence factor (congruous and incongruous). Significant Congruence × Region interactions were parsed by examining the effect of congruence within each region. The Geisser-Greenhouse correction was applied to all repeated measures with more than one degree of freedom (Geisser & Greenhouse, 1959).
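The mean-amplitude measure can be illustrated with a short sketch. It assumes a single-channel average waveform sampled at 200 Hz that begins 100 msec before final scene onset, and it treats each window as half-open (start included, end excluded) so that adjacent windows do not share a sample; the window convention and all names are our assumptions.

```python
from statistics import fmean
from typing import Dict, Sequence, Tuple

SAMPLING_RATE_HZ = 200
EPOCH_START_MS = -100  # the waveform begins 100 msec before final scene onset
WINDOWS_MS = [(0, 150), (150, 250), (250, 350),
              (350, 600), (600, 1000), (1000, 1500)]


def sample_index(time_ms: float) -> int:
    """Index of the sample recorded at the given latency."""
    return round((time_ms - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000)


def mean_amplitudes(waveform: Sequence[float]) -> Dict[Tuple[int, int], float]:
    """Mean amplitude per window, relative to the 100-msec prestimulus baseline."""
    baseline = fmean(waveform[sample_index(EPOCH_START_MS):sample_index(0)])
    return {
        (start, end): fmean(waveform[sample_index(start):sample_index(end)]) - baseline
        for start, end in WINDOWS_MS
    }
```

The resulting values, one per window, electrode, condition, and participant, would then serve as the dependent measure in the repeated-measures ANOVAs.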

Results

Participants were highly accurate in deciding whether goal-directed event sequences presented in the movie clips would commonly be witnessed in everyday life. The accuracy rates were 90% in the congruous condition and 94% in the incongruous condition.

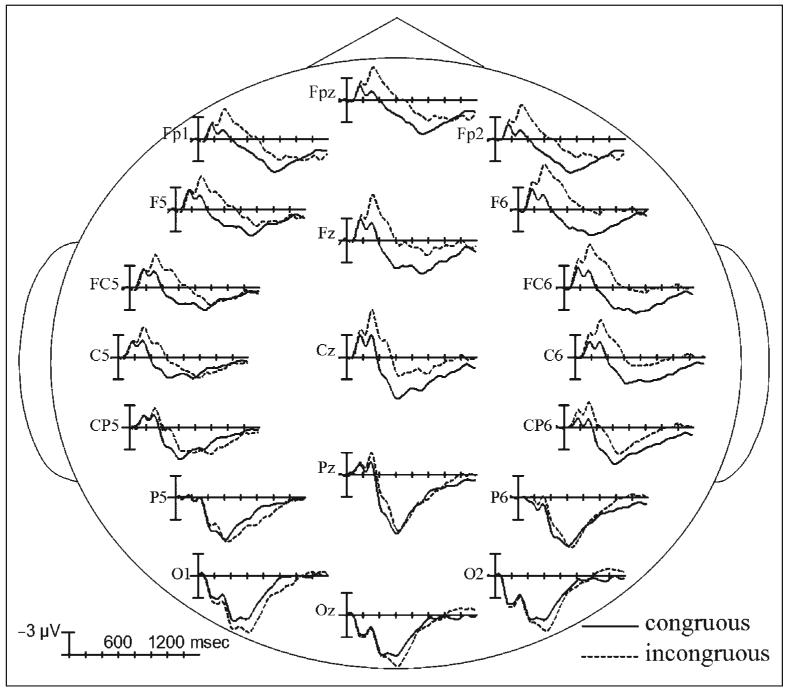

The mean ERPs time-locked to the presentation of final movie scenes are plotted in Figure 3. A series of early positive and negative peaks (P1 at 80 msec, N1 at 180 msec, and P2 at 220 msec) was followed by anteriorly distributed negative-going late components that peaked at approximately 315, 550, and 680 msec. These potentials overlapped with a widely distributed late positivity with a parietal maximum at about 650 msec. Onset latencies for ERP effects to the incongruous (vs. congruous) condition were: approximately 250 msec after the final scene onset for the increased negativity, and approximately 500 msec for the increased positivity. Table 1 presents the results of statistical analyses confirming these differences.

Figure 3.

ERPs time-locked to the incongruous final movie scenes that were both unexpected and violated goal-related action requirements, compared to ERPs time-locked to congruous final scenes. Shown are waveforms at representative electrode sites from each scalp region used in the statistical analyses.

Table 1.

Results of Statistical Analyses Contrasting ERPs Evoked by Incongruous Movie Endings Violating Goal-related Requirements of Real-world Actions and by Congruous Movie Endings in Experiment 1

| Region | Contrast | 250-350 msec | 350-600 msec | 600-1000 msec | 1000-1500 msec |
|---|---|---|---|---|---|
| Midline | | | | | |
| Omnibus | C | | 6.181* | | 12.242** |
| | C × R | | 8.079** | 66.298** | 27.053** |
| Planned comparisons | | | | | |
| Anterior-frontal | C | n/a | 21.963** | 36.355** | |
| Frontal | C | n/a | 7.637* | | |
| Central | C | n/a | | | 11.679** |
| Parietal | C | n/a | | 16.313** | 29.466** |
| Occipital | C | n/a | | 39.793** | 36.268** |
| Lateral | | | | | |
| Omnibus | C | | 7.194* | | 11.321** |
| | C × R | 5.365* | 22.682** | 73.090** | 29.393** |
| Planned comparisons | | | | | |
| Anterior-frontal | C | 14.859** | 33.401** | 48.929** | |
| Frontal | C | 5.225* | 21.650** | 33.234** | |
| | C × E | | | 10.416** | |
| Fronto-central | C | | 12.098** | 13.512** | |
| | C × E | | | 22.475** | |
| Central | C | | 4.960* | | 13.204** |
| | C × E | | | | 12.472** |
| Centro-parietal | C | | | 26.948** | 24.280** |
| | C × E | | | | 11.149** |
| Parietal | C | | | 40.633** | 31.983** |
| | C × E | | | | |
| Occipital | C | | | 47.791** | 31.383** |
| | C × E | | | | 11.850** |

Values are F statistics. C = main effect of congruence, degrees of freedom 1, 14; C × R = Congruence × Region interaction, degrees of freedom 4, 56 (midline) and 6, 84 (lateral); C × E = Congruence × Electrode interaction, degrees of freedom 2, 28.

*p < .05.

**p < .01.
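The degrees of freedom listed in the table note follow the usual rule for fully within-participant effects: the effect degrees of freedom are the product of (levels minus 1) over the factors involved, and the error degrees of freedom are that product multiplied by (participants minus 1). A brief check, using a helper name of our own choosing and uncorrected values (before any Geisser-Greenhouse adjustment):

```python
from typing import Tuple


def rm_anova_df(n_participants: int, *factor_levels: int) -> Tuple[int, int]:
    """Uncorrected (effect, error) degrees of freedom for a within-participant effect."""
    effect_df = 1
    for levels in factor_levels:
        effect_df *= levels - 1
    return effect_df, effect_df * (n_participants - 1)


congruence_df = rm_anova_df(15, 2)               # main effect of congruence
midline_interaction_df = rm_anova_df(15, 2, 5)   # Congruence x Region, midline
lateral_interaction_df = rm_anova_df(15, 2, 7)   # Congruence x Region, lateral
electrode_interaction_df = rm_anova_df(15, 2, 3) # Congruence x Electrode
```

With 15 participants, these reproduce the values 1, 14; 4, 56; 6, 84; and 2, 28 given in the note.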

In the earlier sensory/perceptual time windows (0-150 msec, 150-250 msec), ERPs were not significantly different between congruous and incongruous movie endings.

In the 250-350 msec epoch, ERPs were more negative to incongruous (vs. congruous) final scenes over the anterior scalp regions (Congruence × Region interaction in the lateral omnibus ANOVA; planned comparisons: significant differences in two lateral regions).

In the 350-600 msec time window, the increased anterior negativity to incongruous (vs. congruous) final scenes became more widespread (main effects of congruence and Congruence × Region interactions in midline and lateral omnibus ANOVAs; planned comparisons: significant differences in several midline and lateral regions).

In the 600-1000 msec epoch, different ERP patterns were observed between anterior and posterior scalp regions (Congruence × Region interactions in midline and lateral omnibus ANOVAs). Over more anterior regions, incongruous (vs. congruous) final scenes continued to evoke an increased negativity (planned comparisons: significant differences in anterior midline and lateral regions that were larger at the peripheral than medial sites, as shown by Congruence × Electrode interactions in lateral analyses). Over more posterior regions, incongruous (vs. congruous) scenes evoked a widespread positivity effect (planned comparisons: significant differences in posterior midline and lateral regions).

In the 1000-1500 msec epoch, only the posterior positivity effect remained, and it became even more widespread (main effects of congruence and Congruence × Region interactions in midline and lateral omnibus ANOVAs; planned comparisons: significant differences in several midline and lateral regions that were larger at the medial than peripheral scalp areas, as shown by Congruence × Electrode interactions in lateral analyses).

Discussion

This experiment examined neurophysiological processes evoked by final events in short movie clips in which the context and the critical scene were separated by a cut. Incongruous final events in this experiment involved a target object that was both semantically unrelated to the movie context and violated goal-related action requirements. Relative to the contextually appropriate movie endings, these incongruous events evoked both an increased anterior negativity at approximately 250 msec and an increased posterior positivity at approximately 500 msec after the final scene onset. Thus, these effects replicated the general pattern of the ERPs in the Sitnikova et al. (2003) study, in which participants were presented with continuous movie clips with no cuts. This suggests that the use of cuts does not disrupt semantic processing during visual-world comprehension.

One possible interpretation of the large positivity effect in the Sitnikova et al. (2003) study was that it reflected decision P3-like processes (see Donchin & Coles, 1988): Participants were able to classify the incongruous scenarios as such immediately after the detection of the anomaly, whereas no classification decision was possible at the comparable point in congruous continuous movies.3 In the current experiment, however, the cut between the context and final scenes clearly identified both incongruous and congruous target scenes at the comparable points in movie clips, making this explanation less likely.

An important advantage of using cuts in the current study was that it allowed for the abrupt presentation of target objects and actions at the onset of target scenes, improving the accuracy of ERP time-locking to the processes of interest. This might have contributed to our ability to record a significant N300 effect (between 250 and 350 msec) to incongruous (vs. congruous) movie endings—consistent with previous studies with static pictures (e.g., McPherson & Holcomb, 1999). As we suggested above, this early semantic congruence effect might reflect the rapid access that realistic visual images have to semantic memory networks.

On the other hand, the onset latency of the late positivity (at approximately 500 msec) in the present movies with cuts was similar to that seen in the Sitnikova et al. (2003) continuous movies. Accuracy of the ERP time-locking might be less critical for this rather large ERP effect. It is noteworthy that the latency of this effect did not change depending on whether the target object was first brought into view and then, at least 233 msec later, involved in the central action (Sitnikova et al., 2003) or was immediately involved in the central action (the current study). This suggests that the late positivity can be evoked by the analysis of object features alone, even without perceiving incongruous spatio-temporal parameters of the central action in the target event.

As in the Sitnikova et al. (2003) study, the offset of both the anterior N400 and the posterior late positivity (both lasting for over 1000 msec) was more prolonged than is typical of their counterparts evoked by written words (lasting between 200 and 400 msec). One reason for these extended effects might be the prolonged presentation of the incongruous information in movie scenes: The target objects were engaged in incongruous actions that unfolded over a few hundred milliseconds. Of note, this suggests that spatio-temporal information in real-world events is analyzed within both the N400 and the late positivity processing streams. In line with this explanation, similar prolonged N400s are observed in association with spoken language that also involves processing of critical information over time as the eliciting word unfolds phoneme by phoneme (e.g., Holcomb & Neville, 1991).

In conclusion, this experiment replicated both the anterior negativity and the posterior positivity findings of Sitnikova et al. (2003) in a different sample of participants using movie clips with cuts. It was hypothesized that the observed N300/N400 and late positivity might reflect distinct neurocognitive processes during visual-world comprehension, and that they can be dissociated from one another. The next experiment was designed to test this hypothesis.

EXPERIMENT 2: INCONGRUOUS SCENES WITHOUT VIOLATIONS OF GOAL-RELATED REQUIREMENTS OF ACTIONS

This second experiment examined the neurophysiological processes elicited by final movie scenes that were semantically unrelated to their preceding context, but that did not violate the goal-related requirements of their central action (e.g., Figure 1C: After placing a cutting board and a loaf of bread on a kitchen counter, a man uses an electric iron to press wrinkles from his pants). The same control congruous movie clips were used as in Experiment 1 (e.g., Figure 1A). The second aim was to directly compare the effects produced to those evoked by the incongruous scenes in Experiment 1.

It was predicted that mapping of these contextually inappropriate movie endings onto corresponding fields in graded semantic memory networks would be relatively effortful and would evoke an increased anterior N300/N400 effect, relative to the congruous condition. The critical question was whether these scenes would also evoke a late positivity effect. If, as hypothesized, the late positivity was evoked by the incongruous final events in Experiment 1 because they violated goal-related requirements of the contextually constrained target actions, this effect should be attenuated to the scenes that are merely unexpected and do not violate such requirements. If, however, these final scenes evoke a positivity effect similar to that observed in Experiment 1, this would support a more nonspecific account—for example, a decision-related P3 resulting from the behavioral task performed by participants.

Methods

Participants

Fifteen right-handed, native English-speaking volunteers (8 men, 7 women; mean age = 20 years) served as participants. Although we originally recruited 16 participants, ERP data from one female participant were unusable due to excessive ocular artifact.

Materials

The same set of congruous movie clips as in Experiment 1 was used in Experiment 2 (see Figure 1A). However, the incongruous clips were constructed differently (see Figure 1C) by resplicing together (with no blank frames in between) the context and final scene shots from two semantically unrelated clips from the congruous movie set. Based on the pretest described in Experiment 1, these incongruous final scenes had “0” cloze probability within the preceding movie context. Care was taken to ensure that, in all movie clips, it appeared that the same main actor took part both in the contextual and final events and there were no dramatic changes in the brightness or contrast between the two consecutive shots.

The clips were again arranged into two sets, each consisting of 40 congruous and 40 incongruous items. The assignment of clips to sets was such that no context or final scene shot was included twice in one set, although across sets all contexts and all final scenes appeared in both the congruous and incongruous conditions.

Procedure

The recording procedure was the same as in Experiment 1. ERP data from one individual were unusable due to excessive ocular artifact. We again analyzed the remaining data in two ways: (1) in 15 participants and (2) in 14 participants, so that each movie set was used the same number of times. Because the results did not differ, we report the analysis in 15 participants. In the 15-participant sample, 12% of ERP trials were rejected in the congruous condition and 11% of trials were rejected in the incongruous condition due to ocular artifacts.

Analysis

Averaged ERPs were analyzed using the time windows and two omnibus ANOVAs (midline and lateral) that were identical to the ones described in Experiment 1. We also conducted similar planned comparisons with the exception that in the 1000-1500 msec epoch, where the omnibus ANOVA revealed a Congruence × Region × Hemisphere interaction (but no Congruence × Region interaction), the effect of congruence was examined at each level of the region factor, separately for each hemisphere.

To directly compare the congruence effects (within four time windows: 250-350 msec, 350-600 msec, 600-1000 msec, and 1000-1500 msec after final scene onset) between the incongruous scenes with (Experiment 1) and without (Experiment 2) violations of goal-related action requirements, an additional set of mixed design ANOVAs was conducted. These analyses included an additional between-subject factor of experiment (Experiment 1 vs. Experiment 2) and examined interactions involving the factors of congruence and experiment. Congruence × Region × Experiment interactions were parsed by examining the effects within each level of the region factor.
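As an illustration of what the Congruence × Experiment interaction tests, the sketch below uses a simplified 2 × 2 case: one congruence effect per participant (incongruous minus congruous mean amplitude, averaged over whichever sites are of interest) and two independent groups. In that case the interaction F equals the squared pooled-variance t statistic comparing the two sets of difference scores. The function name and the numbers are hypothetical, and this is not the authors' analysis code; the reported ANOVAs also included scalp topography factors.

```python
from statistics import mean, variance


def congruence_by_experiment_f(diff_exp1, diff_exp2):
    """F and degrees of freedom for a Congruence x Experiment interaction.

    Each argument holds one congruence effect per participant
    (incongruous minus congruous mean amplitude). With two levels of
    congruence and two experiments, the interaction F equals the squared
    pooled-variance t statistic on these difference scores.
    """
    n1, n2 = len(diff_exp1), len(diff_exp2)
    df_error = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(diff_exp1)
                  + (n2 - 1) * variance(diff_exp2)) / df_error
    standard_error = (pooled_var * (1 / n1 + 1 / n2)) ** 0.5
    t = (mean(diff_exp1) - mean(diff_exp2)) / standard_error
    return t * t, (1, df_error)


# Hypothetical difference scores for 15 participants per experiment.
exp1_effects = [0.5 * i for i in range(15)]
exp2_effects = [0.5 * i - 2.0 for i in range(15)]
f_value, df = congruence_by_experiment_f(exp1_effects, exp2_effects)
print(df)  # (1, 28), matching the degrees of freedom reported for C x Exp
```

With 15 participants in each experiment the error term has 28 degrees of freedom, which is why the between-experiment interactions are reported with degrees of freedom 1, 28.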

Results

Participants were again highly accurate in deciding whether goal-directed event sequences presented in the movie clips would commonly be witnessed in everyday life. The accuracy rates were 96% in the congruous condition and 92% in the incongruous condition.

The mean ERPs time-locked to the presentation of final movie scenes are plotted in Figure 4. Again, early sensory/perceptual ERPs were followed by widely distributed, slow negative-going and positive-going deflections that were most prominent over the anterior and parietal scalp regions, respectively. In this experiment, differences in ERPs between the incongruous and congruous conditions became evident already at approximately 150 msec. Table 2 presents the results of statistical analyses confirming these differences.

Figure 4.

ERPs time-locked to the incongruous final movie scenes that, although unexpected, did not violate goal-related requirements of their central action, compared to ERPs time-locked to congruous final scenes. Shown are waveforms at representative electrode sites from each scalp region used in the statistical analyses.

Table 2.

Results of Statistical Analyses Contrasting ERPs Evoked by Incongruous Movie Endings without Violations of Goal-related Action Requirements and by Congruous Movie Endings in Experiment 2

|

F |

||||||

|---|---|---|---|---|---|---|

| Region | Contrast | 150-250 msec | 250-350 msec | 350-600 msec | 600-1000 msec | 1000-1500 msec |

| Midline | ||||||

| Omnibus | C | 9.342** | 36.929** | 20.539** | 6.833* | 5.634* |

| C × R | 10.590** | 14.561** | 10.608** | |||

| Planned comparisons | ||||||

| Anterior-frontal | C | n/a | 26.393** | 22.263** | 16.781** | n/a |

| Frontal | C | n/a | 49.490** | 32.009** | 13.466** | n/a |

| Central | C | n/a | 43.051** | 32.836** | 10.538** | n/a |

| Parietal | C | n/a | 14.824** | 9.164** | n/a | |

| Lateral | ||||||

| Omnibus | C | 13.947** | 40.839** | 31.047** | 8.918* | 4.677* |

| C × R | 3.964* | 13.944** | 21.253** | 18.410** | ||

| C × R × H | 9.925** | |||||

| Planned comparisons | ||||||

| Anterior-frontal | C | 24.996** | 25.888** | 18.937** | ||

| C × H | 5.470* | 6.482* | 7.888* | |||

| Frontal | C | 9.495** | 36.208** | 36.222** | 25.165** | 17.203**R |

| C × H | 9.855** | 8.815* | 10.503** | 12.444** | n/a | |

| C × E | 8.053* | |||||

| Fronto-central | C | 25.997** | 64.514** | 65.519** | 27.879** | 19.568**R |

| C × H | 9.210** | 10.202** | 11.292** | 10.432** | n/a | |

| C × E | 7.892* | |||||

| Central | C | 30.245** | 62.927** | 72.563** | 17.367** | 23.985**R |

| C × H | 7.935* | 8.777* | 11.627** | 10.386** | n/a | |

| C × E | 14.061** | 6.053* | ||||

| Centro-parietal | C | 13.466** | 22.221** | 21.674** | 12.721**R | |

| C × H | 5.782* | n/a | ||||

| C × E | 11.689** | 6.892* | ||||

| Parietal | C | 5.548*R | ||||

| Occipital | C | 6.260* | ||||

| C × H | 6.069* | |||||

C = main effect of congruence, degrees of freedom 1, 14; C × R = Congruence × Region interaction, degrees of freedom 4, 56 (midline) and 6, 84 (lateral); C × R × H = Congruence × Region × Hemisphere interaction, degrees of freedom 6, 84; C × H = Congruence × Hemisphere interaction, degrees of freedom 1, 14; C × E = Congruence × Electrode interaction, degrees of freedom 2, 28; R = this analysis was conducted in a right-hemisphere lateral region.

*p < .05.

**p < .01.

In the early sensory time window, between 0 and 150 msec after target scene onset, there were no significant differences between congruous and incongruous conditions.

In the early visual feature perception time window, between 150 and 250 msec, ERPs were more negative to incongruous (vs. congruous) movie endings over frontal and central scalp regions, primarily in the right hemisphere (main effects of congruence in midline and lateral omnibus ANOVAs and a Congruence × Region interaction in the lateral analysis; planned comparisons: significant differences in several lateral analyses that were right-lateralized—Congruence × Hemisphere interactions).

A similar pattern of results continued across the 250-350 msec and 350-600 msec time windows, but the negativity effect became more bilateral and widespread over anterior and central regions (main effects of congruence and Congruence × Region interactions in midline and lateral omnibus ANOVAs; planned comparisons: significant differences in several midline and lateral analyses that were larger over the right than the left hemisphere, as shown by Congruence × Hemisphere interactions in lateral analyses, and were also larger over medial than peripheral sites, as shown by Congruence × Electrode interactions in lateral analyses).

In the 600-1000 msec time window, the anterior negativity effect was still evident, but a different pattern was present at the most posterior scalp regions (main effects of congruence and Congruence × Region interactions in midline and lateral omnibus ANOVAs). The negativity effect was evoked over the anterior sites (planned comparisons: significant differences in anterior midline and lateral analyses that were right-lateralized; Congruence × Hemisphere interactions in lateral analyses). Over the most posterior sites, incongruous (vs. congruous) final scenes evoked a small positivity effect (planned comparisons: a significant difference only in the lateral occipital region that was left-lateralized; Congruence × Hemisphere interaction).

In the 1000-1500 msec time window, the negativity effect to incongruous (vs. congruous) movie endings became strongly right-lateralized, being mostly limited to the right-hemisphere sites (main effects of congruence in both midline and lateral omnibus ANOVAs, but a Congruence × Region × Hemisphere interaction, with no Congruence × Region interaction, in the lateral omnibus ANOVA; planned comparisons: significant differences in several right-hemisphere lateral analyses but no significant differences involving the congruence factor in left-hemisphere lateral analyses).

COMPARISON BETWEEN EXPERIMENTS 1 AND 2

Difference waves obtained by subtracting ERPs to congruous movie endings from ERPs to incongruous movie endings in Experiments 1 and 2 are shown in Figure 5. Corresponding voltage maps demonstrating the spatial topography of the observed effects within four time windows (250-350, 350-600, 600-1000, and 1000-1500 msec) are shown in Figure 6. Table 3 shows results of statistical analyses directly comparing the ERP effects between Experiments 1 and 2.
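The difference-wave computation and the averaging within time windows can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the 10-msec sampling step, the amplitude values, the function names, and the half-open treatment of window boundaries are all assumptions made for the example. Only the four window ranges come from the text.

```python
# Analysis windows (msec after final-scene onset) named in the text.
WINDOWS_MS = [(250, 350), (350, 600), (600, 1000), (1000, 1500)]


def difference_wave(incongruous, congruous):
    """Subtract the congruous ERP from the incongruous ERP, sample by sample."""
    if len(incongruous) != len(congruous):
        raise ValueError("both ERPs must have the same number of samples")
    return [inc - con for inc, con in zip(incongruous, congruous)]


def window_means(wave, times_ms, windows=WINDOWS_MS):
    """Mean amplitude of `wave` within each [start, end) window."""
    means = {}
    for start, end in windows:
        samples = [v for v, t in zip(wave, times_ms) if start <= t < end]
        means[(start, end)] = sum(samples) / len(samples)
    return means


# Hypothetical single-site averages sampled every 10 msec (illustrative values only).
times_ms = list(range(0, 1500, 10))
congruous = [0.0 for _ in times_ms]
incongruous = [-2.0 if 250 <= t < 600 else 1.5 if t >= 600 else 0.0
               for t in times_ms]

effect = window_means(difference_wave(incongruous, congruous), times_ms)
```

In this toy example a negative value in a window corresponds to a negativity effect (incongruous more negative than congruous) and a positive value to a positivity effect.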

Figure 5.

Difference waves obtained by subtracting the ERPs time-locked to congruous final scenes from the ERPs time-locked to incongruous final scenes with (Experiment 1) and without (Experiment 2) violations of goal-related action requirements. Shown are waveforms at representative electrode sites from each scalp region used in the statistical analyses.

Figure 6.

Voltage maps corresponding to the difference waves in Figure 5 averaged across four time windows (created using the EMSE Data Editor software; Source Signal Imaging, San Diego, CA).

Table 3.

Results of Statistical Analyses Contrasting Congruence Effects between Experiments 1 and 2

|

F |

|||||

|---|---|---|---|---|---|

| Region | Contrast | 250-350 msec | 350-600 msec | 600-1000 msec | 1000-1500 msec |

| Midline | |||||

| Omnibus | C × Exp | 6.712* | 7.568* | 17.112** | |

| C × R × Exp | 4.285* | 4.207* | 9.471** | ||

| Planned comparisons | |||||

| Frontal | C × Exp | n/a | 6.048* | 5.878* | 7.263* |

| Central | C × Exp | n/a | 8.840** | 12.366** | 18.618** |

| Parietal | C × Exp | n/a | 8.197** | 25.715** | |

| Occipital | C × Exp | n/a | 4.754* | 22.121** | |

| Lateral | |||||

| Omnibus | C × Exp | 11.157** | 5.769* | 9.588** | 15.759** |

| C × R × Exp | 3.876* | 4.858* | 10.236** | ||

| Planned comparisons | |||||

| Fronto-central | C × Exp | n/a | 11.132** | 7.916** | 7.768** |

| C × H × Exp | n/a | 8.827** | |||

| C × E × Exp | n/a | 6.857* | 14.424** | ||

| Central | C × Exp | n/a | 15.496** | 20.486** | 21.713** |

| C × H × Exp | n/a | 5.056* | 11.854** | ||

| C × E × Exp | n/a | 11.394** | |||

| Centro-parietal | C × Exp | n/a | 9.013** | 18.050** | 24.919** |

| C × H × Exp | n/a | 5.318* | 16.228** | ||

| C × E × Exp | n/a | 11.632** | |||

| Parietal | C × Exp | n/a | 9.307** | 24.371** | |

| C × H × Exp | n/a | 6.996* | 14.271** | ||

| C × E × Exp | n/a | 7.025** | |||

| Occipital | C × Exp | n/a | 4.644* | 19.014** | |

| C × H × Exp | n/a | 10.446** | |||

| C × E × Exp | n/a | 6.764** | |||

These analyses specifically focused on interactions involving factors of congruence and experiment; main effects of congruence are not reported.

C × Exp = Congruence × Experiment interaction, degrees of freedom 1, 28; C × R × Exp = Congruence × Region × Experiment interaction, degrees of freedom 4, 112 (midline) and 6, 168 (lateral); C × H × Exp = Congruence × Hemisphere × Experiment interaction, degrees of freedom 1, 28; C × E × Exp = Congruence × Electrode × Experiment interaction, degrees of freedom 2, 56.

*p < .05.

**p < .01.
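The degrees of freedom listed in the table notes can be checked with simple arithmetic. The worked values below assume 15 participants per experiment (stated for Experiment 2 and implied for Experiment 1 by the reported 1, 14), five midline and seven lateral regions (inferred from the region labels in the tables), and three electrode levels per lateral region (inferred from the reported 2, 28).

$$
\begin{aligned}
\text{within one experiment } (n = 15):\quad & df_{\text{error}} = df_{\text{effect}} \times (n - 1) = df_{\text{effect}} \times 14 \\
\text{across experiments } (N = 30,\ g = 2):\quad & df_{\text{error}} = df_{\text{effect}} \times (N - g) = df_{\text{effect}} \times 28 \\
C \times Exp:\quad & (2-1)(2-1) = 1, \qquad 1 \times 28 = 28 \\
C \times R \times Exp\ (\text{midline}):\quad & (2-1)(5-1)(2-1) = 4, \qquad 4 \times 28 = 112 \\
C \times R \times Exp\ (\text{lateral}):\quad & (2-1)(7-1)(2-1) = 6, \qquad 6 \times 28 = 168 \\
C \times H \times Exp:\quad & (2-1)(2-1)(2-1) = 1, \qquad 1 \times 28 = 28 \\
C \times E \times Exp:\quad & (2-1)(3-1)(2-1) = 2, \qquad 2 \times 28 = 56
\end{aligned}
$$

The same rule with a multiplier of 14 reproduces the within-experiment values in the notes to Tables 1 and 2, for example 4, 56 for the midline Congruence × Region interaction.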

Across the 250-350 msec and 350-600 msec time windows, the anterior negativity effect to incongruous (vs. congruous) final scenes was larger in Experiment 2 (without violations of goal-related action requirements) than in Experiment 1 (with such violations; Congruence × Experiment interactions in the midline omnibus ANOVA between 250 and 350 msec and lateral omnibus ANOVAs in both time windows). In the 350-600 msec epoch, this difference was primarily evident over central scalp regions (Congruence × Region × Experiment interactions in midline and lateral omnibus ANOVAs; planned comparisons: significant differences in central midline and lateral regions but no interactions involving the congruence and experiment factors in the most anterior regions).

In the 600-1000 msec epoch, the ERP patterns varied across the anterior, central, and posterior regions (Congruence × Experiment interactions but also Congruence × Region × Experiment interactions in midline and lateral omnibus ANOVAs). At the anterior sites, the negativity effect to incongruous (vs. congruous) final scenes was comparable between the experiments (planned comparisons: no significant interactions involving the congruence and experiment factors). In the central regions, incongruous scenes in Experiment 1 (with violations of goal-related action requirements) evoked a positivity effect but incongruous scenes in Experiment 2 (without such violations) mainly evoked a negativity effect (planned comparisons: significant differences in central midline and lateral analyses). Most importantly, at the posterior regions, the positivity effect to incongruous (vs. congruous) final scenes was larger in Experiment 1 (when these scenes included violations of goal-related action requirements) than in Experiment 2 (when they did not—planned comparisons: significant differences in posterior midline and lateral analyses; note the significant differences between the experiments at the lateral occipital region, in which the positivity effect reached significance in Experiment 2). The differences between the experiments were larger in the right than in the left hemisphere (Congruence × Experiment × Hemisphere interactions in lateral planned comparisons).

In the 1000-1500 msec epoch, incongruous scenes evoked a large posterior positivity effect in Experiment 1 (with violations of goal-related action requirements), but a right-lateralized negativity effect in Experiment 2 (without such violations—Congruence × Experiment and Congruence × Region × Experiment interactions in midline and lateral omnibus ANOVAs; planned comparisons: significant differences in several midline and lateral analyses that were larger in the right than in the left hemisphere—Congruence × Hemisphere × Experiment interactions in lateral regions).

Post Hoc Analyses

It is unlikely that ERP differences between Experiments 1 and 2 can be accounted for by some general variable such as spurious individual differences across participants or general differences in strategies taken to perform the behavioral task. If this was the case, the ERPs evoked to congruous movie endings would be expected to be different between the experiments. We examined whether there were any such differences by conducting mixed design ANOVAs (within 0-150 msec, 150-250 msec, 250-350 msec, 350-600 msec, 600-1000 msec, and 1000-1500 msec time windows) for the midline and lateral columns of scalp regions. These analyses included a between-subject factor of experiment and within-subject scalp topography factors as shown in Figure 2. None of the effects involving the experiment factor were statistically significant.

A potential difficulty in interpreting the between-experiment differences in the posterior positivity effect to incongruous (vs. congruous) movie endings was that, in the incongruous condition, changes in the scene background across the context and final movie shots were more dramatic in Experiment 2 than in Experiment 1. This might modulate demands on accessing semantic memory networks, leading to a disproportional increase in the negativity effect to incongruous (vs. congruous) movie endings in Experiment 2, which in turn might lead to attenuation of the overlapping posterior positivity effect (i.e., in Experiment 2, a large late positivity effect might have been present but cancelled out by an overlapping effect of the opposite polarity). We obtained evidence against this possibility by demonstrating that background scene changes influence the ERPs primarily at the anterior and central sites. Among the congruous movie clips, changes across the context and final scenes were of two types: (1) merely zoom-in or zoom-out, for example, Figure 1A; (2) background change, for example, the context shows the kitchen sink background: the actor fills up a watering can; the final scene shows a plant on a window sill: the actor waters the plant. We selectively reaveraged ERPs across congruous trials of each type in both experiments. Difference waves obtained by subtracting ERPs to “zoom-change” movie endings from ERPs to “background-change” movie endings, combined between Experiments 1 and 2, are shown in Figure 7A. Corresponding voltage maps demonstrating the spatial topography of the observed differences are shown in Figure 7B. In mixed design midline and lateral ANOVAs, including a between-subject factor of experiment, a within-subject factor of scene (zoom-change vs. background-change), and within-subject scalp topography factors as shown in Figure 2, we confirmed that the negativity effect to “background-change” (vs. “zoom-change”) movie endings was present in all time windows after 150 msec, but was limited to anterior and central scalp regions (Table 4: Scene × Region interactions in both midline and lateral ANOVAs that were parsed to reveal significant differences in several anterior regions but no effects involving the scene factor in the parietal or occipital regions; also note that no effects involving the experiment factor were significant). Thus, this anteriorly distributed negativity effect due to background scene change, even if contributing to the ERP differences between the incongruous and congruous movie endings in Experiment 2, would be unlikely to modulate a positivity effect at the more posterior (parietal and occipital) scalp regions. Note, in all post hoc analyses, the Geisser-Greenhouse correction was applied to all repeated measures with more than one degree of freedom (Geisser & Greenhouse, 1959).
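For readers unfamiliar with the Geisser-Greenhouse correction mentioned above, the sketch below shows one common way to estimate the correction factor epsilon from a participants × conditions data matrix and to scale the degrees of freedom by it. The function names and example data are hypothetical, and the source does not say which software or exact procedure the authors used.

```python
def greenhouse_geisser_epsilon(data):
    """Estimate epsilon from `data`: one row per participant, one value per condition."""
    n, k = len(data), len(data[0])
    col_means = [sum(row[j] for row in data) / n for j in range(k)]
    # Sample covariance matrix of the k repeated measures.
    cov = [[sum((row[i] - col_means[i]) * (row[j] - col_means[j]) for row in data)
            / (n - 1) for j in range(k)] for i in range(k)]
    row_means = [sum(cov[i]) / k for i in range(k)]
    grand_mean = sum(row_means) / k
    # Double-center the covariance matrix (it is symmetric, so row means = column means).
    centered = [[cov[i][j] - row_means[i] - row_means[j] + grand_mean
                 for j in range(k)] for i in range(k)]
    trace = sum(centered[i][i] for i in range(k))
    sum_of_squares = sum(centered[i][j] ** 2 for i in range(k) for j in range(k))
    return trace ** 2 / ((k - 1) * sum_of_squares)


def corrected_df(df_effect, df_error, epsilon):
    """Scale both degrees of freedom by epsilon before looking up the p value."""
    return epsilon * df_effect, epsilon * df_error


# Hypothetical mean amplitudes for five participants at three levels of a factor.
example = [[1.0, 2.0, 3.0], [2.0, 4.0, 9.0], [3.0, 3.0, 1.0],
           [5.0, 9.0, 4.0], [0.0, 1.0, 7.0]]
eps = greenhouse_geisser_epsilon(example)
```

Epsilon lies between 1/(k - 1) and 1 for a factor with k levels, and it always equals 1 when the factor has only two levels, which is why the correction matters only for effects with more than one degree of freedom.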

Figure 7.

Difference waves at five representative electrode sites obtained by subtracting the ERPs time-locked to “zoom-change” congruous final scenes from the ERPs time-locked to “background-change” congruous final scenes, combined across Experiments 1 and 2 (A; shaded in purple are 600-1000 msec and 1000-1500 msec time windows, in which a large posterior positivity effect to incongruous (vs. congruous) movie endings was evident in Experiment 1), and corresponding voltage maps averaged across four time windows (B; the maps were created using the EMSE software).

Table 4.

Results of Statistical Post hoc Analyses Contrasting ERPs Evoked by Congruous Movie Endings including Zoom and Background Changes in Experiments 1 and 2

|

F |

||||||

|---|---|---|---|---|---|---|

| Region | Contrast | 150-250 msec | 250-350 msec | 350-600 msec | 600-1000 msec | 1000-1500 msec |

| Midline | ||||||

| Omnibus | S × R | 3.311* | 4.263* | 8.256** | 3.699* | 4.977* |

| Planned comparisons | ||||||

| Anterior-frontal | S | 4.614* | 6.958* | 5.181* | 7.285* | |

| Frontal | S | 5.306* | 8.468** | |||

| Lateral | ||||||

| Omnibus | S | 4.964* | 6.210* | |||

| S × R | 6.435** | 7.760** | 12.363** | 5.713* | 6.863** | |

| Planned comparisons | ||||||

| Anterior-frontal | S | 5.578* | 6.053* | 9.180** | 6.101* | 8.556** |

| Frontal | S | 5.046* | 5.689* | 7.755** | 7.401* | 9.666** |

| Fronto-central | S | 4.782* | 4.743* | 6.635* | 8.716** | 11.186** |

| Central | S | 6.405* | 7.711* | |||

S = main effect of scene, degrees of freedom 1, 28; S × R = Scene × Region interaction, degrees of freedom 4, 112 (midline) and 6, 168 (lateral).

*p < .05.

**p < .01.

Discussion

This experiment examined processing of final movie scenes that are semantically unrelated to the preceding contextual events but do not violate goal-related requirements of real-world actions. Relative to the contextually appropriate movie endings, these unexpected scenes evoked a robust N300/N400 effect that was maximal over anterior electrode sites. However, unlike in Experiment 1, only a handful of occipital electrode sites revealed a small positivity effect between 600 and 1000 msec to these scenes, although participants again made active decisions about the movie clips regarding whether the presented goal-directed event sequences would commonly be witnessed in everyday life. This finding provides evidence for the specificity of the late positivity evoked to incongruous movie endings with violations of goal-related action requirements in Experiment 1, suggesting that this effect is not simply a decision-related P3 (Donchin & Coles, 1988). At least in part, this large late positivity in Experiment 1 must be explained by differences in the semantic content of the incongruous final scenes between the two experiments. These findings and their implications will be discussed in further detail in the General Discussion. Here we focus on the anterior negativity findings.

There were clear similarities in the N300/N400 effects to incongruous (vs. congruous) scenes across Experiments 1 and 2. Throughout their prolonged time course, both these effects had similar anterior topography across the scalp. Therefore, it is unlikely that the anterior distribution of the N300/N400 in Experiment 1 was merely due to its overlap with a large posterior positivity effect (i.e., due to a cancellation of this effect at the more posterior sites by an overlapping effect of the opposite polarity).

There were, however, two aspects of the negativity effect to incongruous (vs. congruous) scenes in Experiment 2 that differed from Experiment 1. First, this effect in Experiment 2 started at approximately 150 msec after the final scene onset, approximately 100 msec earlier than in Experiment 1. One possible explanation for this earlier onset could be modulation of the visual feature analysis as a result of repetition priming by the preceding movie context in the congruous relative to incongruous final scenes (see Eddy, Schmid, & Holcomb, 2006). This could be because, in Experiment 2, the scene background had more dramatic changes across the context and final movie shots in the incongruous than in the congruous conditions.4 In contrast, in Experiment 1, the background changes were comparable between the congruous and incongruous final scenes (the target object rather than the entire final scene was manipulated between the conditions), which would account for the absence of the visual feature priming effect.

The second difference between the N300/N400 effects to incongruous (vs. congruous) scenes evoked in the two current experiments was in their hemispheric lateralization. Whereas in Experiment 1 the effect was similar across the left and right hemispheres, in Experiment 2, it was strongly right-lateralized. This right lateralization was evident throughout its entire time course but was most marked within the later, 1000-1500 msec epoch. One explanation for this right-sided distribution might be that it reflects integrating meaning that transcends individual events to form a global representation of the viewed movie clip—analogous to the previously proposed hypothesis that the right hemisphere is important for integrating across sentences to form a global representation of verbal discourse (e.g., Robertson et al., 2000; St George, Kutas, Martinez, & Sereno, 1999; Rehak et al., 1992). This observation is also consistent with ERP studies demonstrating a right-lateralized N400 effect to words (e.g., Camblin et al., 2007; van Berkum et al., 1999, 2003) and images (West & Holcomb, 2002) that, although acceptable within their local sentence/picture context, are incongruous with the overall contextual scenario. One possible mechanism of the right-hemisphere contributions to such global-level integration might be by coding weakly associated concepts in broad semantic fields (see Coulson & Wu, 2005; Jung-Beeman, 2005). In Experiment 2, participants might have attempted to form a global representation of each incongruous movie clip by accessing such weak associations between individual events (e.g., between “slicing bread” and “ironing clothes”).

GENERAL DISCUSSION

The results of the present two experiments support our hypothesis that comprehension of real-world visual events might be supported by two neurophysiologically distinct semantic integration mechanisms. The first mechanism, reflected by the anterior N300/N400, evident in both Experiments 1 and 2, may access graded connections between concepts in semantic memory. The second, somewhat slower mechanism, reflected by the posterior late positivity, which was robust only in Experiment 1, may access knowledge of discrete goal-related requirements of real-world actions.

Below we further discuss the properties of these N300/N400 and late positivity effects. Also noted are similarities and differences between these effects and their counterparts previously reported during the comprehension of language. We consider the possible roles that each of these mechanisms might play in visual event comprehension, and briefly suggest how these cognitive mechanisms may be supported in the brain.

The Anterior N300/N400

Both experiments described here replicate previous ERP findings in visual comprehension in several ways. Whereas those earlier studies used static pictures as stimuli (Ganis & Kutas, 2003; West & Holcomb, 2002; Federmeier & Kutas, 2001; McPherson & Holcomb, 1999; Ganis et al., 1996), here similar effects are demonstrated with dynamic visual images.

First, the dynamic visual images in movie clips evoked an N400-like negativity that was functionally similar to the N400 evoked by language stimuli. In both Experiments 1 and 2, the amplitude of this negativity was larger when the eliciting scene included information semantically unrelated to the preceding contextual events. Thus, this effect may reflect mapping of the perceived visual input onto the corresponding fields in graded semantic memory networks, a process that is functionally similar to mapping words in language. Because incongruous information unfolded over time in dynamic movie scenes, it was not surprising that the overall time course of this negativity was more prolonged than that typical of the N400 evoked by abruptly presented written words. Second, the scalp distribution of the negativity effect evoked by movie scenes was more anterior than that of the N400 to words. This supported the hypothesis that visual images activate graded semantic memory networks that are neuroanatomically distinct from those activated by words. Third, movie scenes evoked an anterior N300 effect (in the 250-350 msec epoch), in addition to the later anterior N400 effect, suggesting that visual images might access their corresponding representations in semantic memory networks faster than more symbolic words. Finally, we demonstrated that semantic integration within visual scenes elicits a bilateral N300/N400 (Experiment 1, consistent with static picture findings by Ganis & Kutas, 2003), whereas integration between the scenes is reflected by a right-lateralized N300/N400 (Experiment 2, consistent with static picture findings by West & Holcomb, 2002). Because N400s evoked by words that are incongruous within their global verbal discourse context also tend to be right-lateralized (Camblin et al., 2007; van Berkum et al., 1999, 2003), we suggest that this pattern of results is consistent with the possibility that integration across individual events might especially depend on broadly coded semantic representations within the right hemisphere (Coulson & Wu, 2005; Jung-Beeman, 2005).

Mapping the perceptual input onto graded connections between concepts within semantic memory is thought to be especially useful in familiar circumstances, such as a traditional birthday party (e.g., Bower et al., 1979). In such situations, perceiving only a few details allows comprehenders to access representations of the related concepts and, as a result, to rapidly grasp the likely overall meaning of events and to prepare for what would be expected to come next. Such a mechanism, however, is not as efficient in less familiar situations and cannot explain how people are able to build veridical representations of events that include entities and actions that have not been previously experienced together. We suggest that accurate and flexible comprehension of events in the real world depends on a second semantic mechanism that utilizes knowledge of what is necessary to achieve action goals in the real world.

The Late Positivity

The selective late positivity to incongruous movie endings in Experiment 1 relative to Experiment 2 supported the hypothesis that this effect is related to the presence of violations of goal-related action requirements in these scenes. As a result, it demonstrated functional similarity of this effect to the late positivity that has recently been described when verb-based semantic requirements are violated during language comprehension (e.g., “the cake must cut”; Kuperberg et al., 2003). These two positivity effects evoked in movie clips and sentences resemble each other in their time course: They become evident, peak, and offset later than the N400 waveform (although, like the N400, the late positivity is more prolonged to movie scenes than to written words). Furthermore, these effects are comparable in their scalp topography: Both are widespread across the posterior recording sites and have a parietal maximum. Taken together, these similarities suggest that the late positivity may reflect a common neurocognitive mechanism that is engaged across language and visual-world events. The functional properties of this waveform are consistent with the interpretation that it reflects a process that evaluates affordances of real-world entities (in language described by NPs) against goal-related requirements of real-world actions (in language expressed by verbs; Sitnikova et al., 2008; Sitnikova, 2003). Such an account is consistent with behavioral evidence that comprehenders are able to rapidly evaluate affordances of their visual real-world environment against semantic requirements of auditorily presented target verbs in sentences (e.g., Chambers, Tanenhaus, & Magnuson, 2004) and to construct perceptual-motor simulations during reading (e.g., Stanfield & Zwaan, 2001; Kaschak & Glenberg, 2000; Barsalou, 1999).

This analysis of goal-related action requirements may play an important part in flexible visual real-world comprehension by enabling viewers to understand relationships in novel combinations between entities and actions. In the visual-world domain, a set of discrete requirements including the semantic properties of entities and the spatio-temporal relationships between them can uniquely constrain specific actions. For example, the cutting action requires that the entity in the agent role be able to perform cutting (e.g., 〈have ability for volitional actions〉), the entity in the instrument role have physical properties necessary for cutting (e.g., 〈have a sturdy sharp edge〉), and the entity in the patient role be cuttable (e.g., 〈unsturdy〉). In addition, there are minimal spatio-temporal requirements for the cutting action (e.g., 〈the instrument and the patient must come in physical contact〉). In comprehension of a visual event, the correspondence between the perceptual input and the goal-related requirements of a given real-world action would allow viewers to identify the event’s central action and to assign the roles to the engaged entities. Thus, when viewing a person wriggle a stretch of tape measure across a cake (an unusual event that might happen at an office birthday party), observers would likely understand that the tape measure, which has a sturdy sharp edge, is used as an instrument in a cutting action.
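The requirement-matching account described above can be made concrete with a small sketch. The code below is purely illustrative and is not a model used in the study: the property labels (`volitional`, `sturdy_sharp_edge`, `unsturdy`, `hot_surface`), the `CUTTING` dictionary, and the `violations` helper are all assumptions introduced here to show how a proposed role assignment might be checked against discrete requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    name: str
    properties: frozenset  # illustrative property labels, not taken from the study


# Hypothetical encoding of the discrete requirements of the cutting action.
CUTTING = {
    "roles": {
        "agent": {"volitional"},
        "instrument": {"sturdy_sharp_edge"},
        "patient": {"unsturdy"},
    },
    # Pairs of roles whose fillers must come into physical contact.
    "contact": [("instrument", "patient")],
}


def violations(action, assignment, contacts):
    """List every requirement of `action` that the role assignment fails."""
    failed = []
    for role, required in action["roles"].items():
        missing = required - assignment[role].properties
        failed.extend((role, prop) for prop in sorted(missing))
    for role_a, role_b in action["contact"]:
        pair = frozenset((assignment[role_a].name, assignment[role_b].name))
        if pair not in contacts:
            failed.append(("contact", f"{role_a}-{role_b}"))
    return failed


person = Entity("person", frozenset({"volitional"}))
tape = Entity("tape measure", frozenset({"sturdy_sharp_edge"}))
iron = Entity("electric iron", frozenset({"hot_surface"}))
cake = Entity("cake", frozenset({"unsturdy"}))
bread = Entity("bread", frozenset({"unsturdy"}))

# Novel but acceptable event: a tape measure used to cut a cake.
tape_event = violations(
    CUTTING,
    {"agent": person, "instrument": tape, "patient": cake},
    contacts={frozenset(("tape measure", "cake"))},
)
# Violation event: an electric iron slid across a loaf of bread.
iron_event = violations(
    CUTTING,
    {"agent": person, "instrument": iron, "patient": bread},
    contacts={frozenset(("electric iron", "bread"))},
)
print(tape_event)  # []
print(iron_event)  # [('instrument', 'sturdy_sharp_edge')]
```

In this toy encoding, the tape measure satisfies every requirement although it is an unusual instrument, whereas the iron fails only the instrument requirement, which is the kind of violation present in the Experiment 1 movie endings.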

Comprehenders of the visual world may evaluate the perceptual input against requirements of several alternative actions, and this may contribute to the prolonged time course of the late positivity. In the present Experiment 1, the late positivity might reflect the evaluation of the target scenes against the goal-related requirements of actions constrained by the preceding contextual events (e.g., of the scene “a man sliding an electric iron across a loaf of bread” against the requirements of the cutting action). In addition, it might reflect attempts to re-evaluate whether the entities and their spatio-temporal relationships might meet the requirements for an alternative action that is also acceptable in a given scenario (e.g., the scene “a man sliding an electric iron across a loaf of bread” meets the requirements for the action of “defrosting”—the electric iron has a hot surface that could be used to defrost a loaf of bread).

The Two Underlying Neural Mechanisms: A Hypothesis

The morphological, temporal, and spatial differences between the N300/N400 complex and the late positivity suggest that the cognitive mechanisms they reflect are mediated by anatomically distinct neural processes (Holcomb, Kounios, Anderson, & West, 1999; Kutas, 1993). Interestingly, in the nonhuman primate brain, it is well established that there is a functional dichotomy of structures along the anterior-posterior dimension: The posterior cortex appears to specialize in representing sensory information, whereas the frontal lobe is devoted to representing and executing actions. A similar division of labor has been proposed in the human brain (e.g., Fuster, 1997). From this perspective, it seems possible that graded semantic representations reflecting all prior perceptual experiences are mediated primarily within the posterior cortex, whereas requirements specific to real-world actions are mediated within the prefrontal cortex. To examine this hypothesis, it will be important to use techniques such as functional magnetic resonance imaging and magnetoencephalography that can localize the neural activity in the brain with superior spatial resolution to ERPs.

Some insights into the neural mechanisms that can support graded semantic representations and discrete goal-related action requirements come from research in computational neuroscience. In connectionist networks, acquisition and use of graded semantic knowledge have been simulated by means of variation in synaptic weights that represent connection strengths (based on feature similarity or association strength) between the learned concepts (for a review, see Hutchison, 2003). However, more recent studies demonstrated that the neurobiological mechanisms specific to the prefrontal cortex (that support updating of active maintenance contingent on the presence of a reward) can lead to self-organization of discrete, rule-like representations coded by patterns of activity (distinct sets of units with high synaptic weights) rather than by changes in synaptic weights (e.g., Rougier, Noelle, Braver, Cohen, & O’Reilly, 2005). We suggest that, through breadth of learning experience with real-world actions that achieved or failed to achieve their goal (i.e., either coupled or not with a “reward” signal), these prefrontal mechanisms can identify the pattern of activity present across all instances of achieving a specific goal. In comprehension, searching for such a pattern of activity, representing the parameters required for a given action, would allow flexibility in recognizing actions in novel circumstances, and eliminate the need to learn a new set of connection strengths. For example, very different objects such as a plate, a stretched tape measure, or a knife, if used to cut a cake, would access the same pattern of neuronal activity coding the requirement 〈must have a sturdy sharp edge〉 and can be assigned the instrument role around a cutting action. Intriguingly, electrophysiological studies in nonhuman primates have obtained evidence that prefrontal neurons display such a discrete pattern of response to categories of visual stimuli that are defined by their functional relevance (Freedman, Riesenhuber, Poggio, & Miller, 2001, 2002, 2003; for reviews, see Miller, Nieder, Freedman, & Wallis, 2003; Miller, Freedman, & Wallis, 2002).
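The contrast between graded connection strengths and discrete, rule-like requirements can be illustrated with a toy sketch. It is not a connectionist simulation and does not reproduce the Rougier et al. (2005) model: the association values, property labels, and the functions `graded_fit` and `discrete_fit` are hypothetical, chosen only to show why a novel instrument can score poorly on a graded measure yet still satisfy a discrete requirement.

```python
# Hypothetical association strengths (0 to 1) between a context and an object,
# standing in for learned connection weights in semantic memory.
ASSOCIATION = {
    ("cutting cake", "knife"): 0.9,
    ("cutting cake", "plate"): 0.3,
    ("cutting cake", "tape measure"): 0.05,
    ("cutting cake", "electric iron"): 0.05,
}

# Hypothetical object properties and the discrete requirement on the instrument.
PROPERTIES = {
    "knife": {"sturdy_sharp_edge"},
    "plate": {"sturdy_sharp_edge"},
    "tape measure": {"sturdy_sharp_edge"},
    "electric iron": {"hot_surface"},
}
REQUIRED = {"cutting cake": {"sturdy_sharp_edge"}}


def graded_fit(context, obj):
    """Graded measure: how strongly the object is associated with the context."""
    return ASSOCIATION.get((context, obj), 0.0)


def discrete_fit(context, obj):
    """Discrete measure: does the object meet every required property?"""
    return REQUIRED[context] <= PROPERTIES[obj]


for obj in ("knife", "plate", "tape measure", "electric iron"):
    print(obj, graded_fit("cutting cake", obj), discrete_fit("cutting cake", obj))
```

Under these assumed values, the tape measure and the electric iron are equally weakly associated with cutting a cake, but only the tape measure passes the discrete check, so no new set of connection strengths is needed to accept it as an instrument.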

Summary and Conclusion

In two experiments, we aimed to dissociate the N300/N400 and the late positivity ERP components evoked during real-world visual comprehension. It was demonstrated that the N300/N400 was evoked by the presentation of contextually inappropriate information in final events in movie clips, independently of whether the events violated goal-related requirements of real-world actions. In contrast, the late positivity was selectively increased to scenes with such violations. These findings support the hypothesis that comprehension of the visual real world might be mediated by two complementary semantic integration mechanisms. The first mechanism, reflected by the anterior N300/N400, maps the incoming information onto the graded connections between concepts in semantic memory. The second mechanism, reflected by the late positivity, evaluates the incoming information against the discrete goal-related requirements of real-world actions. We suggest that there may be a tradeoff between these mechanisms in their utility for integrating the people, objects, and actions during event comprehension, in which the first mechanism is better suited for familiar situations, and the second mechanism is better suited for novel situations. Future research will address questions of how these mechanisms are supported in the brain and interact in real time.

Acknowledgments

This research was conducted in partial fulfillment of the requirements for the PhD degree at Tufts University, August 2003. Tatiana Sitnikova thanks the dissertation committee chair, Phillip J. Holcomb, as well as the other members of the dissertation committee, W. Caroline West, Gina Kuperberg, and Lisa Shin for their stimulating contributions. We thank David R. Hughes and Sonya Jairaj for their assistance in preparing the materials and collecting the data. This research was supported by a grant HD25889 to P. J. H., a grant from the MGH Fund for Medical Discovery to T. S., NARSAD (with the Sidney R. Baer, Jr., Foundation) grants to T. S. and G. K., grants MH02034 and MH071635 to G. K., and by the Institute for Mental Illness and Neuroscience Discovery (MIND).

Footnotes

Depending on the type of stimuli, the N400 has been reported to change in scalp topography and time course. Topographic differences in the ERPs are generally taken to reflect distinctions in the underlying neural generators of the evoked cognitive processes (Holcomb et al., 1999; Kutas, 1993). Changes in the time course of a given ERP component are assumed to merely reflect modulation of the timing of the underlying neurocognitive processing due to factors such as processing load.

In the General Discussion, we will consider evidence from computational neuroscience and nonhuman primate electrophysiology that provides insights on how these discrete, rule-like requirements of real-world actions can be supported in the brain.

Using the original Sitnikova et al. (2003) continuous movie clips, we obtained statistically indistinguishable positivity effects with two instructions: (1) when we asked participants to actively decide whether each event sequence would commonly be witnessed in real life, and (2) when we asked participants to simply watch the movies passively (Sitnikova, 2003). This insensitivity to changes in the behavioral task argues against the interpretation that this positivity is similar to the decision-related P3.