Abstract

Phonological processing was examined in school-age children who stutter (CWS) by assessing their performance and recording event-related brain potentials (ERPs) in a visual rhyming task. CWS had lower accuracy on rhyming judgments, but the cognitive processes that mediate the comparisons of the phonological representations of words, as indexed by the rhyming effect (RE) ERP, were similar for the stuttering and normally fluent groups. Thus the lower behavioral accuracy of rhyming judgments by the CWS could not be attributed to that particular stage of processing. Instead, the neural functions for processes preceding the RE, indexed by the N400 and CNV elicited by the primes and the N400 elicited by the targets, suggest atypical processing that may have resulted in less efficient, less accurate rhyming judgments by the CWS. Based on the present results, it seems likely that the neural processes related to phonological rehearsal and target word anticipation, as indexed by the CNV, are distinctive for CWS at this age. Further, it is likely that the relative contributions of the left and right hemispheres differ in CWS at the stage of processing when linguistic integration occurs, as indexed by the N400. Taken together, these results suggest that CWS may be less able to form and retain a stable neural representation of the prime onset and rime as they anticipate the target presentation, which may lead to lower rhyming judgment accuracy.

Keywords: phonology, event-related brain potentials, children who stutter, language, CNV, N400

Introduction

Developmental stuttering is a disorder in which the forward flow and rhythm of speaking are involuntarily disrupted even though the individual knows precisely what he/she wants to say. Developmental stuttering affects approximately 4–5% of preschool children, with approximately 25% of these children developing chronic stuttering that persists into adulthood (Yairi & Ambrose, 1999). Many earlier studies have focused on speech motor control variables as they relate to overt fluent and non-fluent speech production. These studies examined how disrupted motor commands to the articulatory, laryngeal, and respiratory systems lead to stuttering (e.g., Denny & Smith, 1992; McClean & Runyan, 2000; Peters & Boves, 1988; Smith et al., 1993; Zimmerman, 1980; Zocchi et al., 1990). Later studies of adults who stutter (AWS) focused on motor performance in the face of increased linguistic demands and have suggested strong bi-directional language/motor interactions (Kleinow & Smith, 2000; van Lieshout, Starkweather, Hulstijn, & Peters, 1995).

We have examined processing differences in AWS in paradigms that do not require overt speech production. These studies, employing measures of event-related brain potentials (ERPs), revealed that language processing is subtly altered in AWS, even in the absence of any speech production requirements (Weber-Fox, 2001; Cuadrado & Weber-Fox, 2003; Weber-Fox, Spencer, Spruill & Smith, 2004). Converging evidence from this series of studies indicates that AWS display atypical ERPs associated with later-latency cognitive components (N280, N400, P600) for various aspects of linguistic processing, including semantic and syntactic information. These studies suggest that speech motor control in stuttering is not independent of language formulation processes. The language processing results are consistent with recent kinematic studies examining the interaction between speech motor control and language formulation demands in normal individuals (see Smith & Goffman, 2004 for review). Kinematic parameters of speech implementation have been shown to be affected by the language demands of an utterance in typically developing children and adults, as well as in AWS (Goffman & Smith, 1999; Kleinow & Smith, 2000; Kleinow & Smith, 2006; van Lieshout et al., 1995). Thus, the use of ERPs to examine the disorder of stuttering offers valuable insights into the neural functions for language processing in the absence of overt speech planning or production. Additionally, the study of people who stutter offers an opportunity to examine how neural functions for language processing can be affected by interactions with an atypically developing speech motor system.

Phonological Processing in Adults Who Stutter

In an earlier experiment from our laboratory, we investigated phonological processing in AWS employing a visual rhyming judgment paradigm. We found that the neural systems engaged for phonological processing in AWS are, for the most part, very similar to those of normally fluent speakers (Weber-Fox et al., 2004). However, longer reaction times (RTs) on the most difficult rhyming judgment task indicated that AWS may be more vulnerable to the additional task complexity imposed by orthographic interference (e.g., words that look similar but do not rhyme, “GOWN, OWN”). Further, the amplitude of the later cognitive neural activity associated with the rhyme decision (derived from the Rhyming Effect (RE) difference wave) was larger over the right hemisphere for AWS but more symmetrical for the normally fluent controls. These results did not support the hypothesis of a core phonological processing deficit in AWS (Kolk & Postma, 1997; Postma & Kolk, 1993; Wingate, 1988); however, they suggested that AWS are more vulnerable to increased task demands and that they display greater right hemisphere involvement in late cognitive processes for the rhyming task.

Phonological Processing in Children Who Stutter

The current study examines phonological processing in children who stutter (CWS). Behavioral evidence indicates that young children who exhibited persistent stuttering showed greater delays in phonological development than those who recovered from stuttering (Paden, Yairi & Ambrose, 1999). It has also been suggested that phonological disorders occur at a higher rate among CWS (as high as 30–40%) than in the general population (2–6%) (Beitchman, Nair, Clegg, & Patel, 1986; Conture, Louko, & Edwards, 1993; Louko, 1995; Melnick & Conture, 2000; Ratner, 1995; Wolk, 1998). The rates of co-occurrence of stuttering and phonological disorders vary considerably across studies, possibly due in part to methodological differences among studies, including varied criteria for identifying phonological disorders (Nippold, 2001; 2002). Nonetheless, there continues to be a general consensus that phonological disorders and/or subclinical differences in phonological processing co-occur with fluency disorders in children (see Nippold, 2002 for review).

Employing a Rhyming Paradigm to Investigate Phonological Processing

The ability to judge whether two words rhyme has been established as one measure of phonological awareness (Gathercole, Willis, & Baddeley, 1991; Høien, Lundberg, Stanovich, & Bjaalid, 1995). We employed a well-studied visual rhyming task to examine behavioral responses and event-related brain potentials (ERPs) elicited by briefly presented prime and target words. Evidence from articulatory suppression paradigms (for example, repeating a word such as “the, the, the…” while performing visual rhyming judgments) suggests that rhyming judgments involve encoding orthographic information into phonological representations, and such judgments are thought to activate the “articulatory loop” or “inner voice” (Arthur, Hitch, & Halliday, 1994; Baddeley, 1986; Baddeley & Hitch, 1974; Besner, 1987; Johnston & McDermott, 1986; Richardson, 1987; Wilding & White, 1985). The processes involved in completing the rhyming judgments are therefore thought to include retrieving the phonological representation of the prime word, holding it in working memory via the articulatory loop, and segmenting it into its onset and rime elements. Similar processes are assumed to occur for the target word in the pair (Besner, 1987). The rhyming judgment is then produced by comparing the rime element of the target to that of the prime word (Besner, 1987).
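The onset/rime account above can be illustrated with a toy comparison. This is a minimal sketch, not a model of the study's stimuli or of the cognitive process itself: the ARPAbet-style transcriptions in `PRON` are hand-coded assumptions, only one-syllable words are handled, and the helper names `split_onset_rime` and `rhymes` are hypothetical.

```python
# Toy illustration of the onset/rime comparison described above.
# Assumption: hand-coded ARPAbet-style transcriptions (stress marks omitted)
# for a few one-syllable example words; not the study's stimulus coding.
VOWELS = {"AA", "AE", "AH", "AO", "AW", "AY", "EH", "ER",
          "EY", "IH", "IY", "OW", "OY", "UH", "UW"}

PRON = {
    "OWN": ["OW", "N"],
    "GOWN": ["G", "AW", "N"],
    "CONE": ["K", "OW", "N"],
    "THROWN": ["TH", "R", "OW", "N"],
    "CAKE": ["K", "EY", "K"],
}


def split_onset_rime(phonemes):
    """Split a one-syllable word at its vowel: onset = consonants before
    the vowel, rime = the vowel plus everything after it."""
    for i, phoneme in enumerate(phonemes):
        if phoneme in VOWELS:
            return phonemes[:i], phonemes[i:]
    raise ValueError(f"no vowel in {phonemes}")


def rhymes(prime, target):
    """Segment prime and target, then compare their rimes only."""
    _, prime_rime = split_onset_rime(PRON[prime])
    _, target_rime = split_onset_rime(PRON[target])
    return prime_rime == target_rime


for prime in ["THROWN", "CONE", "GOWN", "CAKE"]:
    print(prime, "OWN", rhymes(prime, "OWN"))
```

The GOWN, OWN pair shows why orthography can mislead: the spellings end alike, but the rimes (/aʊn/ vs. /oʊn/) differ, so only the phonological comparison gives the correct answer.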

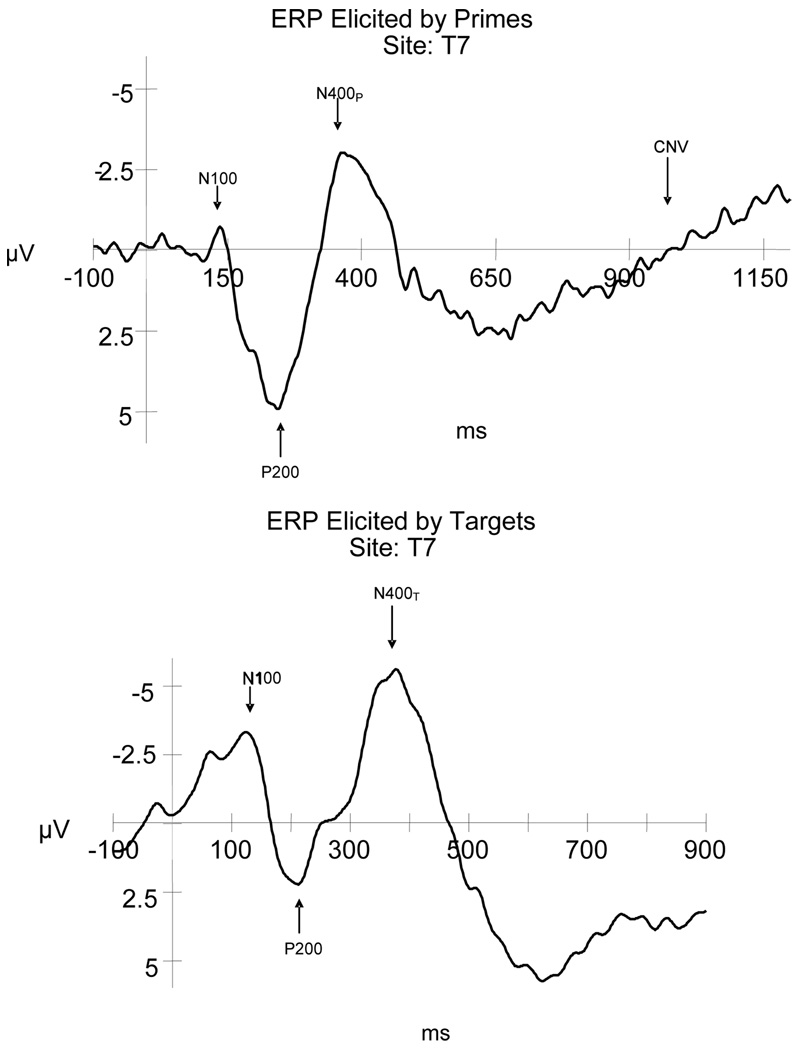

Rhyming paradigms employing ERP measures have been used to map developmental changes in accuracy, RT, and neural functions for phonological processing in normally developing individuals aged 7 years through adulthood (Coch, Grossi, Coffey-Corina, Holcomb, & Neville, 2002; Grossi, Coch, Coffey-Corina, Holcomb, & Neville, 2001; Kramer & Donchin, 1987; Polich, McCarthy, Wang, & Donchin, 1983; Rugg, 1984; Rugg & Barrett, 1987; Weber-Fox, Spencer, Cuadrado, & Smith, 2003). In each of these studies, a reliable rhyming ERP index has been observed. Figure 1 illustrates the ERP components typically elicited by the prime and target words (congruent non-rhyme), presented here for normally fluent children aged 9–13 years. Across rhyming studies, the ERPs were characterized by an increase in the amplitude of the negative peak approximately 350–400 ms post stimulus onset for nonrhyming compared to rhyming target words. This ERP index has been found to be specific to the rhyming judgment task, because it was not elicited by a visual matching task that used identical stimuli but did not require transforming the orthography into phonological representations (Polich et al., 1983). The difference between the non-rhyme and rhyme elicited waveforms (formed by subtracting the waveforms elicited by the rhyming conditions from those elicited by the nonrhyming conditions) has been called the “rhyming effect (RE)” (Grossi et al., 2001). The RE is a broadly distributed negative component that peaks around 400 ms and is considered a member of the family of N400-like potentials that have been shown to be sensitive to contextual information (Grossi et al., 2001; Rugg & Barrett, 1987). It has been hypothesized that the RE reflects cognitive processes mediating the comparisons of the phonological representations of words, that is, the processes that underlie the ability to determine whether words rhyme or not (Grossi et al., 2001).
A stable, adult-like RE was observed in the ERPs of normally developing children as young as 7 years of age performing a rhyming task (Coch et al., 2002; Grossi et al., 2001; Weber-Fox et al., 2003). However, orthographic-phonological interference produced greater delays in RE peak latency in school-age children (aged 9–10 years) than in young adults (Weber-Fox et al., 2003).
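The subtraction that defines the RE can be sketched in a few lines. This is an illustration under stated assumptions: each condition's average is a NumPy array for one participant and one electrode on a shared time axis, condition keys use ASCII hyphens (`"R-O-"` standing for R−O−), and `rhyming_effects` is a hypothetical helper name. The congruent and incongruent pairings follow the Method section (R−O− minus R+O+, R−O+ minus R+O−).

```python
import numpy as np


def rhyming_effects(averages):
    """Return (congruent_re, incongruent_re) difference waves.

    `averages` maps condition labels to averaged ERP waveforms (microvolts)
    that share one time axis. Each RE is nonrhyme minus rhyme, so a larger
    N400-like negativity to nonrhyming targets yields negative RE values.
    """
    congruent = np.asarray(averages["R-O-"]) - np.asarray(averages["R+O+"])
    incongruent = np.asarray(averages["R-O+"]) - np.asarray(averages["R+O-"])
    return congruent, incongruent


# Toy three-sample waveforms, purely illustrative.
toy = {
    "R+O+": np.array([0.0, -1.0, 0.5]),
    "R+O-": np.array([0.0, -1.5, 0.5]),
    "R-O+": np.array([0.0, -4.0, 0.0]),
    "R-O-": np.array([0.0, -3.0, 0.5]),
}
congruent_re, incongruent_re = rhyming_effects(toy)
print(congruent_re, incongruent_re)
```

Because the same target words appear in every condition, the subtraction removes activity common to rhyme and nonrhyme trials and leaves the part that depends on the rhyme decision.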

Figure 1.

Illustration of typical grand average ERP waveforms (from the CWNF, aged 9–13 years) highlighting the key components elicited by prime and target word (congruent non-rhyme) stimuli in the rhyming task.

Another aspect of ERPs that has been studied in relation to rhyming paradigms is a late, slow negative deflection elicited by the prime words. Rugg (1984) noted similarities between this late slow deflection and the contingent negative variation (CNV), which reflects expectation or anticipation of a subsequent stimulus (Walter, Cooper, Aldridge, McCallum, & Winter, 1964). Rugg hypothesized that the CNV elicited in the rhyming paradigm reflects working memory retention and silent rehearsal of the phonological sequence contained in the prime word as the subject awaits the expected presentation of the target word. Additionally, developmental studies in both visual and auditory modalities indicate that the late slow deflection associated with prime words in a rhyming task may also reflect processes related to the task-specific requirements of preparing for the target presentation (Coch et al., 2002; Grossi et al., 2001). For example, a visual but not an auditory rhyming task elicited a CNV with a left frontal distribution, which was thought to be related to the need for phonological encoding of orthographic symbols (Coch et al., 2002; Grossi et al., 2001). Visual rhyming studies of normally developing children indicate that the CNV exhibits developmental changes: CNV amplitude over anterior electrode sites increased with age, especially over the left hemisphere (Grossi et al., 2001).

Hypotheses and Predictions

The association between phonological deficits and stuttering has been derived from studies of young children (Conture et al., 1993; Louko, 1995; Melnick & Conture, 2000; Paden et al., 1999; Ratner, 1995; Wolk, 1998; Yaruss & Conture, 1996), but these investigations have not included any indices of the neural processing that may underlie subclinical differences in phonological abilities in CWS. Therefore, a critical question is whether CWS exhibit atypical phonological processing even in tasks that involve no speech production planning or execution. We chose to replicate our earlier visual rhyming experiment in AWS (Weber-Fox et al., 2004) with school-age CWS. This paradigm is well suited to the question because it provides clear indices of the neural processes involved in the rhyming judgment task and also provides a window on the effects of increased cognitive loads in making the rhyming judgment.

Based on previous behavioral findings in CWS (Beitchman, et al., 1986; Conture, et al., 1993; Louko, 1995; Melnick & Conture, 2000; Paden et al., 1999; Ratner, 1995; Wolk, 1998), we hypothesize that at least some aspects of phonological processing function less effectively in CWS, and we predict that these differences will be reflected in poorer performance and distinctive neural functions elicited by tasks that require phonological encoding, such as the rhyming paradigm described above. However, the earlier behavioral results do not indicate which aspects of phonological processing operate atypically in CWS. Therefore, we did not make predictions regarding specific ERP components, but rather designed the experiment to shed light on this question. The findings in adults suggest that increased cognitive loads on phonological processing may enhance differences between normally fluent speakers and those who stutter (Weber-Fox et al., 2004), and we hypothesize that the same is true for CWS. Our second prediction, therefore, is that differences between CWS and children who are normally fluent (CWNF) in behavioral accuracy, reaction time, and ERP measures will be greatest for the conditions with the greatest cognitive loads, that is, the conditions in which the orthographic information conflicts with the phonological decision.

Method

Participants and Screening Procedures

Participants, aged between 9;4 and 13;9 (years;months), were 10 children who stutter (CWS) and 10 children who are normally fluent (CWNF). Participants were matched according to age (within 12 months) and gender (Table 1). All children were right-handed as determined by an abbreviated version of the Edinburgh Handedness Inventory (Oldfield, 1971). Handedness was tested by observing which hand was used in handling objects (pencil and paper, scissors and paper, small Nerf ball, plastic spoon, toothbrush) placed on a table at midline in front of the participant. The participants were then asked to perform a task using each item, such as writing their name, drawing, cutting a piece of paper with the scissors, throwing the ball to the examiner, or pretending to eat or brush their teeth. All participants were native English speakers with no reported history of neurological, language, reading, or hearing impairments. At the time of testing, the Stuttering Severity Instrument for Children and Adults (SSI-3) was administered to each of the CWS (Riley, 1994; see Table 1). It should be noted that the SSI severity measures were based on only one standard sample of conversation and reading and therefore do not capture the variation in stuttering severity observed across situations. All of the CWS reported a history of treatment for their stuttering; however, the types of treatment, treatment durations, and ages of participation in treatment varied considerably across the children.

Table 1.

Characteristics of Participants

| ID# | CWS age | CWS gender | CWS SSI severity | CWNF age | CWNF gender |
|---|---|---|---|---|---|
| 1 | 9;8 | M | Moderate | 10;0 | M |
| 2 | 13;1 | M | Severe | 12;10 | M |
| 3 | 10;10 | M | Mild | 10;10 | M |
| 4 | 12;9 | F | Moderate | 13;9 | F |
| 5 | 12;4 | F | Moderate-Severe | 12;7 | F |
| 6 | 12;3 | M | Severe | 12;11 | M |
| 7 | 12;6 | M | Moderate | 12;7 | M |
| 8 | 11;8 | M | Very Severe | 11;4 | M |
| 9 | 13;2 | M | * | 12;8 | M |
| 10 | 9;4 | M | Mild | 9;7 | M |
| Mean | 11;6 | | | 11;7 | |
| S.D. | 1;1 | | | 1;2 | |

Note: Ages are given in years;months. * The SSI score for this participant was lost when our laboratory facility moved.

The language abilities of the children were screened to ensure that all participants demonstrated age-appropriate language abilities, using the Clinical Evaluation of Language Fundamentals-Screening Test (CELF-R, Semel, Wiig, & Secord, 1989; CELF-3, Psychological Corporation, 1996). One of the CWS (#8 in Table 1) fell 2 points below the criterion score for the CELF (he received a 32; his criterion score was 34). He also demonstrated very severe stuttering during the language screening test. In reviewing his language performance, we determined that his disfluencies, particularly in the sentence repetition task, affected his score. Normal language skills for the oldest child were confirmed with four subtests of the Test of Adolescent and Adult Language, namely the listening and speaking grammar and vocabulary subtests (TOAL-3, Hammill, Brown, Larsen, & Wiederholt, 1994). Oral structures and nonspeech oral motor skills were within normal limits for all participants as assessed by the Oral Speech Mechanism Screening Evaluation – Revised (OSMSE-R, St. Louis & Ruscello, 1987). All participants also exhibited normal bilateral hearing, as confirmed by hearing screenings at 20 dB HL at 250, 500, 1000, 2000, 4000, 6000, and 8000 Hz presented via headphones. Each participant also had normal or corrected-to-normal vision according to parental report, confirmed by a visual acuity screening of each eye using a standard eye chart.

Additionally, each participant’s performance was measured on the nonword repetition task described by Dollaghan and Campbell (1998). This task was included because it requires processing phonological information without semantic or syntactic constraints and includes the encoding processes necessary to reproduce the phonological information accurately. The nonword stimuli ranged from one to four syllables. Participants were instructed to repeat each stimulus item as accurately as possible after hearing each recorded token once. Administration and scoring followed the procedures outlined by Dollaghan and Campbell (1998): accuracy is based on the number of phonemes in each nonword that are produced correctly and is reported in Table 3 as percent phonemes correct. Accordingly, this task measured production performance that relies on phonological processing capabilities and complemented the rhyming judgment task used in the ERP paradigm.
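Percent phonemes correct can be sketched as a simple ratio per syllable length. The per-length phoneme totals below (12, 20, 28, and 36, i.e., four items of 3, 5, 7, and 9 phonemes) are our assumption about the standard list and should be checked against Dollaghan and Campbell (1998); they are consistent with values in Table 3 (e.g., 26 of 28 phonemes gives 92.9%). Pooling all phonemes for the total, rather than averaging the four percentages, is likewise an assumption. The function name is hypothetical.

```python
# Assumed phoneme totals for the 16-item list (4 items per length):
# 3, 5, 7, and 9 phonemes per item for 1- to 4-syllable nonwords.
PHONEMES_PER_LENGTH = {1: 12, 2: 20, 3: 28, 4: 36}


def percent_phonemes_correct(correct_by_length):
    """Convert counts of correctly produced phonemes (summed over the items
    of each syllable length) into percent phonemes correct per length and
    overall, where the overall score pools all phonemes."""
    scores = {}
    for n_syll, available in PHONEMES_PER_LENGTH.items():
        correct = correct_by_length[n_syll]
        if not 0 <= correct <= available:
            raise ValueError(f"{correct} correct out of {available} phonemes")
        scores[f"PC{n_syll}"] = 100 * correct / available
    total_correct = sum(correct_by_length[n] for n in PHONEMES_PER_LENGTH)
    scores["Total"] = 100 * total_correct / sum(PHONEMES_PER_LENGTH.values())
    return scores


example = percent_phonemes_correct({1: 12, 2: 20, 3: 26, 4: 30})
print({name: round(value, 1) for name, value in example.items()})
```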

Stimuli for Rhyming Judgment Task

Stimuli were 124 rhyming word pairs and 124 nonrhyming word pairs that were used previously in studies of typically developing children and adults and of AWS (Weber-Fox et al., 2003; 2004). Each word pair consisted of a prime followed by a target. Rhyming (R+) pairs consisted of two conditions: 62 orthographically similar (R+O+, e.g., THROWN, OWN) and 62 orthographically dissimilar (R+O−, e.g., CONE, OWN) pairs. Nonrhyming (R−) pairs also consisted of two conditions: 62 orthographically similar (R−O+, e.g., GOWN, OWN) and 62 orthographically dissimilar (R−O−, e.g., CAKE, OWN) pairs. Thus, for the rhyming and nonrhyming pairs, orthography was either congruent (R+O+ and R−O−) or incongruent (R+O− and R−O+) with the phonologically based rhyming decision (Table 2). The word pairs were balanced so that every target was matched with a prime in each of the four conditions; consequently, the lists of target words in the four conditions were identical. The primes in the R−O− condition were randomly selected from the primes used in the other conditions (see Weber-Fox et al., 2003, for a complete list of stimulus items). All but one of the target words were open-class words, which are known to elicit an N350 component (Hagoort, Brown, & Osterhout, 1999). The mean (SD) word frequencies per million for the R+O+, R−O+, R+O−, and R−O− primes were 384 (1499), 634 (1853), 886 (4804), and 602 (1784), respectively (Francis & Kucera, 1982). As stated above, the target words were the same across conditions, with a mean (SD) frequency per million of 248 (518). No significant differences in word frequency were found across the primes and targets, F(4, 291) = .553, p = .70. Therefore, differences in accuracy, RT, and ERP responses across conditions could not be attributed to frequency effects.

Table 2.

Examples of Phonological and Orthographic Combinations for Prime and Target Word Pairs

| Phonology | Orthography similar (O+) | Orthography dissimilar (O−) |
|---|---|---|
| Rhyme (R+) | Congruent (R+O+) | Incongruent (R+O−) |
| | TOWN, CROWN | NOUN, CROWN |
| | HOME, DOME | FOAM, DOME |
| | DOVE, LOVE | OF, LOVE |
| Nonrhyme (R−) | Incongruent (R−O+) | Congruent (R−O−) |
| | SHOWN, CROWN | POUR, CROWN |
| | COME, DOME | IDEA, DOME |
| | MOVE, LOVE | TOES, LOVE |

Note: See Weber-Fox et al., 2003 for the complete list of word pair stimuli.
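The 2 × 2 design in Table 2 can be expressed as a labeling rule. The sketch below is illustrative only: it approximates orthographic similarity (O+) as identical spelling from the first vowel letter onward, which is our simplifying assumption rather than the study's criterion, and it takes phonological rhyme status as a hand-coded input. ASCII hyphens stand in for the minus signs in the condition names, and both function names are hypothetical.

```python
VOWEL_LETTERS = set("AEIOU")  # assumption: Y is not treated as a vowel


def orthographic_body(word):
    """Spelling from the first vowel letter to the end, e.g. GOWN -> OWN."""
    upper = word.upper()
    for i, letter in enumerate(upper):
        if letter in VOWEL_LETTERS:
            return upper[i:]
    return upper


def condition_label(prime, target, rhyme):
    """Combine hand-coded rhyme status with the orthographic rule."""
    similar = orthographic_body(prime) == orthographic_body(target)
    return ("R+" if rhyme else "R-") + ("O+" if similar else "O-")


# Example pairs from Table 2 with their hand-coded rhyme status.
pairs = [("TOWN", "CROWN", True), ("NOUN", "CROWN", True),
         ("SHOWN", "CROWN", False), ("POUR", "CROWN", False)]
for prime, target, rhyme in pairs:
    print(prime, target, condition_label(prime, target, rhyme))
```

Congruent pairs are those where the two flags agree (R+O+, R−O−); in incongruent pairs the spelling points toward the wrong rhyme decision.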

Electroencephalographic Recordings

Electrical activity at the scalp was recorded from electrodes secured in an elastic cap (Quik-cap, Compumedics Neuroscan). Twenty-eight electrodes were positioned over homologous locations of the two hemispheres and over the midline according to the criteria of the International 10-10 system (American Electroencephalographic Society, 1994). Locations were as follows: lateral sites F7/F8, FT7/FT8, T7/T8, TP7/TP8, P7/P8; mid-lateral sites FP1/FP2, F3/F4, FC3/FC4, CP3/CP4, P3/P4, O1/O2; and midline sites FZ, FCZ, CZ, CPZ, PZ, OZ. Recordings were referenced to linked electrodes placed on the left and right mastoids.¹ Horizontal eye movement was monitored via electrodes placed over the left and right outer canthi, and vertical eye movement via electrodes over the left inferior and superior orbital ridges. All electrode impedances were adjusted to 5 kOhms or less. The electrical signals were amplified with a bandpass of 0.1–100 Hz and digitized on-line (Neuroscan 4.0) at a rate of 500 Hz.
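The hemisphere factor used in the analyses follows the standard 10-10 naming convention: odd-numbered sites lie over the left hemisphere, even-numbered sites over the right, and a "Z" suffix marks the midline. A small sketch of that bookkeeping follows; the helper names are hypothetical, and it simply checks that the montage listed above splits into 11 left, 11 right, and 6 midline sites, with every lateral site having a homologue in the set.

```python
from collections import Counter

# The 28 scalp sites listed above (labels as written in the text).
SITES = ["F7", "F8", "FT7", "FT8", "T7", "T8", "TP7", "TP8", "P7", "P8",
         "FP1", "FP2", "F3", "F4", "FC3", "FC4", "CP3", "CP4", "P3", "P4",
         "O1", "O2", "FZ", "FCZ", "CZ", "CPZ", "PZ", "OZ"]


def hemisphere(site):
    """10-10 convention: odd number = left, even number = right, Z = midline."""
    label = site.upper()
    if label.endswith("Z"):
        return "midline"
    digits = "".join(ch for ch in label if ch.isdigit())
    if not digits:
        raise ValueError(f"unrecognized site label: {site}")
    return "left" if int(digits) % 2 == 1 else "right"


def homologue(site):
    """Mirror-image site over the other hemisphere (e.g. F7 <-> F8)."""
    label = site.upper()
    if hemisphere(label) == "midline":
        raise ValueError(f"midline site has no homologue: {site}")
    prefix = label.rstrip("0123456789")
    number = int(label[len(prefix):])
    return f"{prefix}{number + 1 if number % 2 == 1 else number - 1}"


print(Counter(hemisphere(s) for s in SITES))
```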

Procedures

Each participant and a parent completed consent forms. The parent of each child also completed a case history form. All participants were encouraged to ask questions throughout the course of the experiment. The children watched a video during the capping procedure. Once appropriate impedance levels were obtained (< 5 kOhms), they were seated comfortably in a sound-attenuating room, 160 cm from a 47.5-cm monitor, and the experimental task was explained.

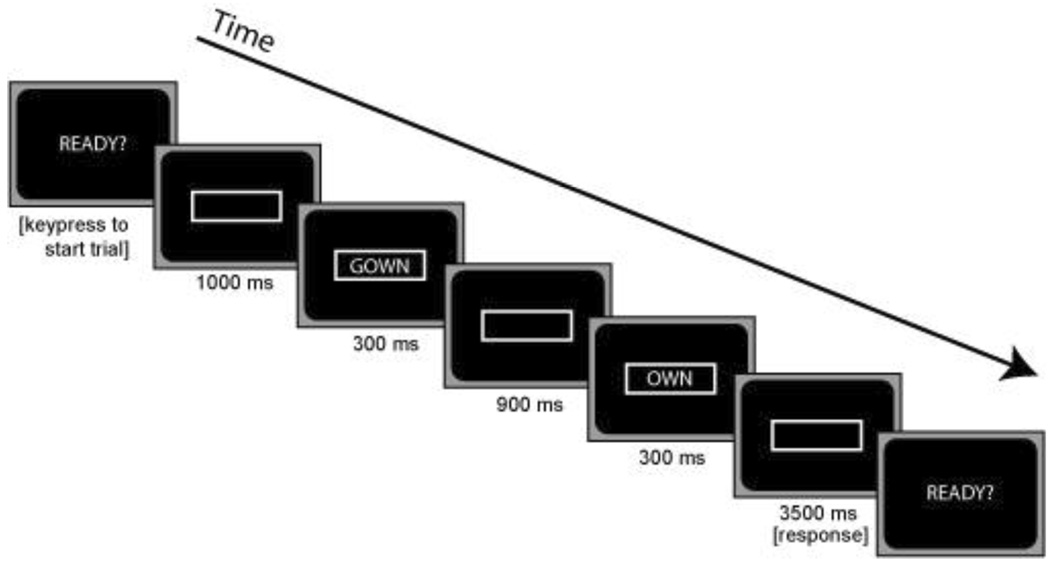

Participants were instructed to make the rhyming judgment “as quickly and accurately as possible” and to refrain from blinking during trials. Because the experiment was self-paced, the participant triggered the beginning of each trial with a button press. A trial began with a centered, white rectangular border appearing on the screen. Following a delay of 1000 ms, a prime was presented for 300 ms. After a 900-ms interstimulus interval, a target was presented for 300 ms. The time course of the prime and target presentation is illustrated in Figure 2. The word stimuli subtended visual angles of 0.5 to 5 degrees horizontally and 0.5 degrees vertically. Following the presentation of the target, participants pressed the “yes” button if the two words rhymed or the “no” button if they did not. The response hands corresponding to the “yes” and “no” buttons were counterbalanced across participants within each group. The rectangular border remained on the screen for an additional 3500 ms following target offset, at which point the word “READY?” appeared in the center of the screen.
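The reported visual angles can be related to on-screen size with the standard formula θ = 2·arctan(s / 2d), where s is stimulus size and d is viewing distance. The sketch below assumes the 160-cm viewing distance given above; letter sizes in centimetres were not reported, so the values it produces are implied sizes, not measurements from the study. Function names are hypothetical.

```python
import math

VIEWING_DISTANCE_CM = 160.0  # viewing distance from the Procedures section


def visual_angle_deg(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Visual angle (degrees) subtended by a stimulus of the given size."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Inverse: physical size that subtends the given visual angle."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


for angle in (0.5, 5.0):
    print(f"{angle} deg -> {size_for_angle_cm(angle):.2f} cm")
```

At this distance, 0.5° corresponds to about 1.4 cm and 5° to about 14 cm on the screen.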

Figure 2.

Illustration of the time course of prime and target presentation used to elicit ERPs and rhyming judgments. A prompt, “READY?”, appears on the screen. The participant then presses a button to initiate the trial (time = 0). A white rectangular border is then presented for 1000 ms, followed by a prime word (e.g., “GOWN”) for 300 ms. The white border remains on the screen during the 900-ms interstimulus interval, followed by a target word (e.g., “OWN”) that remains on the screen for 300 ms. After the target appears, participants press a button as quickly as possible to indicate whether the prime and target words rhymed. During the response interval, the white border remains on the screen for 3500 ms, followed by the “READY?” prompt, at which point the participant can initiate the next trial.
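The event times implied by the Figure 2 sequence can be derived by accumulating the stated durations. This sketch assumes that the 900-ms interstimulus interval runs from prime offset to target onset; `TrialTiming` and `trial_events` are hypothetical names. Under that assumption the prime-to-target onset asynchrony is 1200 ms, which matches the 1200-ms post-prime epoch used for the prime ERPs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrialTiming:
    """Durations (ms) from the trial description; time 0 = button press."""
    border_ms: int = 1000    # border alone before the prime
    prime_ms: int = 300      # prime on screen
    isi_ms: int = 900        # assumed: prime offset to target onset
    target_ms: int = 300     # target on screen
    response_ms: int = 3500  # border after target offset, then READY?


def trial_events(timing=TrialTiming()):
    """Onset/offset times (ms) of each event, plus the prime-target SOA."""
    prime_on = timing.border_ms
    prime_off = prime_on + timing.prime_ms
    target_on = prime_off + timing.isi_ms
    target_off = target_on + timing.target_ms
    return {
        "prime_onset": prime_on,
        "prime_offset": prime_off,
        "target_onset": target_on,
        "target_offset": target_off,
        "ready_prompt": target_off + timing.response_ms,
        "soa": target_on - prime_on,
    }


print(trial_events())
```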

Prior to the test blocks, a practice session consisting of 10 word pairs was carried out. Participants then performed two test blocks, each containing 124 prime and target pairs. The word pairs were pseudo-randomized across blocks with equal representation from each of the four conditions. Trials with identical target words were separated by at least five intervening trials. The order of the blocks was counterbalanced across participants. Each block lasted approximately 18 minutes and varied slightly depending on the pace of individual participants.
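The spacing constraint on repeated targets can be checked mechanically, and one simple way to satisfy it is to reshuffle until it holds. The sketch below is an assumption about how such a list could be built (rejection sampling), not the procedure used in the study; `min_gap_ok` and `shuffle_with_gap` are hypothetical helpers, and the example block of 62 targets with two primes each is made up for illustration.

```python
import random


def min_gap_ok(targets, min_intervening=5):
    """True if every repeated target has at least `min_intervening`
    other trials between consecutive occurrences."""
    last_seen = {}
    for i, target in enumerate(targets):
        if target in last_seen and i - last_seen[target] - 1 < min_intervening:
            return False
        last_seen[target] = i
    return True


def shuffle_with_gap(trials, key, min_intervening=5, max_attempts=10_000, seed=None):
    """Rejection sampling: reshuffle until the spacing constraint holds."""
    rng = random.Random(seed)
    order = list(trials)
    for _ in range(max_attempts):
        rng.shuffle(order)
        if min_gap_ok([key(t) for t in order], min_intervening):
            return order
    raise RuntimeError("no valid order found; relax the constraint or build it constructively")


# Hypothetical block: 62 targets, each paired with two different primes.
block = [(f"target{t}", f"prime{t}_{c}") for t in range(62) for c in range(2)]
order = shuffle_with_gap(block, key=lambda trial: trial[0], seed=1)
print(min_gap_ok([trial[0] for trial in order]))
```

For larger blocks or stricter spacing, rejection sampling can become slow, and a constructive ordering algorithm would be preferable.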

Data Analyses

Behavioral Measures

Rhyming judgment accuracy and reaction time (RT) were obtained from signals generated by the response pad. RT, the time from target onset to the button press, was calculated for correct trials with responses at least 200 ms but less than 1800 ms after target onset; this criterion eliminated spurious button presses (6%). Previous RT findings indicate that this range captures the time needed to respond rapidly to a complex stimulus such as a visual rhyming task (e.g., Grossi et al., 2001). Rhyming judgment accuracies and RTs, averaged across trials for each participant in each condition, were compared using mixed-effects ANOVAs with repeated measures that included a between-subject factor (group: CWS, CWNF) and a within-subject factor (condition: R+O+, R−O+, R+O−, R−O−). Using the MS-error terms of the repeated measures analysis, post hoc comparisons were made with the Tukey HSD method to determine which comparisons contributed to significant effects (Hays, 1994).
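The per-condition behavioral scores that feed the group × condition ANOVA can be sketched as follows. Assumptions: each trial is a record with a condition label, a correctness flag, and an RT in milliseconds from target onset; accuracy is computed over all trials, while mean RT uses only correct trials within the 200–1800 ms window described above. The record layout and the `condition_scores` name are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def condition_scores(trials, rt_min_ms=200, rt_max_ms=1800):
    """Percent correct per condition, plus mean RT for correct trials whose
    RT falls in [rt_min_ms, rt_max_ms)."""
    n_trials = defaultdict(int)
    n_correct = defaultdict(int)
    kept_rts = defaultdict(list)
    for trial in trials:
        cond = trial["condition"]
        n_trials[cond] += 1
        if trial["correct"]:
            n_correct[cond] += 1
            rt = trial["rt_ms"]
            if rt is not None and rt_min_ms <= rt < rt_max_ms:
                kept_rts[cond].append(rt)
    return {
        cond: {
            "accuracy_pct": 100 * n_correct[cond] / n_trials[cond],
            "mean_rt_ms": mean(kept_rts[cond]) if kept_rts[cond] else None,
        }
        for cond in n_trials
    }


trials = [
    {"condition": "R-O+", "correct": True, "rt_ms": 950},
    {"condition": "R-O+", "correct": True, "rt_ms": 150},    # too fast: RT excluded
    {"condition": "R-O+", "correct": False, "rt_ms": 1100},  # error: RT excluded
    {"condition": "R-O+", "correct": True, "rt_ms": 1050},
]
print(condition_scores(trials))
```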

ERP Measures

Trials with excessive eye movement or other forms of artifact (14%) were excluded from further analyses of the ERP responses. The rejected trials were equally distributed across the four conditions. The remaining trials were averaged by condition for each participant. Averaging epochs began 100 ms before the prime and target onsets and extended 1200 ms after prime onset and 800 ms after target onset. The 100-ms interval before stimulus onset served as the measure of baseline activity, and each EEG epoch was baseline corrected (Neuroscan 4.2) prior to averaging. Peak latencies of ERP components were computed relative to the trigger point (0 ms) marking stimulus onset. Peaks were detected automatically with Neuroscan 4.2 software within specified temporal windows that capture the ERP components elicited in this paradigm, as described below.
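Baseline correction and averaging as described can be sketched with NumPy. Assumptions: a single continuous channel sampled at 500 Hz, onsets given as sample indices, and artifact rejection already decided and passed in as trial indices. This is not Neuroscan's implementation, and `baseline_corrected_average` is a hypothetical name; the synthetic signal at the end only demonstrates that a constant offset is removed while a post-onset response survives.

```python
import numpy as np

FS_HZ = 500        # sampling rate given in the text
BASELINE_MS = 100  # prestimulus baseline interval given in the text


def baseline_corrected_average(eeg, onsets, post_ms, fs=FS_HZ,
                               pre_ms=BASELINE_MS, rejected=()):
    """Average single-channel epochs time-locked to `onsets` (sample indices).

    Each epoch spans -pre_ms up to (but not including) post_ms; its mean over
    the prestimulus samples is subtracted before averaging. Trials listed in
    `rejected`, and epochs running past either end of the recording, are skipped.
    """
    pre = round(pre_ms * fs / 1000)
    post = round(post_ms * fs / 1000)
    skip = set(rejected)
    epochs = []
    for i, onset in enumerate(onsets):
        if i in skip or onset - pre < 0 or onset + post > len(eeg):
            continue
        epoch = np.asarray(eeg[onset - pre:onset + post], dtype=float)
        epochs.append(epoch - epoch[:pre].mean())
    if not epochs:
        raise ValueError("no usable epochs")
    times_ms = np.arange(-pre, post) * 1000 / fs
    return times_ms, np.mean(epochs, axis=0)


# Synthetic check: constant 5 uV offset plus a 2 uV, 100-ms response.
eeg = np.full(3000, 5.0)
onsets = [400, 1200, 2000]
for onset in onsets:
    eeg[onset:onset + 50] += 2.0
times, avg = baseline_corrected_average(eeg, onsets, post_ms=800)
```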

For ERPs elicited by both primes and targets, the peak amplitudes and latencies of the N100, N190, and P200 components were measured within temporal windows of 50–200 ms, 100–250 ms, and 150–250 ms, respectively. The peak latencies, peak amplitudes, and mean amplitudes of the N400P (primes) and N400T (targets) were measured within temporal windows of 300–600 ms and 250–600 ms post stimulus onset, respectively. These windows were selected by centering each window on the N400P and N400T observed in the grand averages and then confirming that individual responses fell within those windows. They were similar to those used previously in adults (Weber-Fox et al., 2004) but slightly later, to capture the time course of the children’s ERPs. In addition, for the prime words, the mean amplitude of the contingent negative variation (CNV) was measured within the temporal window of 600–1200 ms (Grossi et al., 2001). Figure 1 (top panel) illustrates the polarity and time course of these ERPs for typically developing children aged 9–13 years. An additional measure for the target ERPs was obtained by computing difference waves, subtracting the rhyme from the nonrhyme averages for the orthographically congruent (R−O− minus R+O+) and incongruent (R−O+ minus R+O−) conditions. This difference wave is referred to as the rhyming effect (RE) because it isolates the differences between the rhyme and nonrhyme elicited waveforms (Grossi et al., 2001). The peak and mean amplitudes and peak latencies of the RE were measured within a temporal window of 300–800 ms; this window is similar to the one used previously in adults (Weber-Fox et al., 2004) but slightly later and longer, to better capture the RE amplitudes of the children’s responses.
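The window-based measures can be sketched as a simple search within each temporal window. The windows are those given above; the peak rule (largest point of the expected polarity, with no check that it is a local extremum) is our simplifying assumption and may differ from the Neuroscan 4.2 peak detector. `WINDOWS` and `window_measures` are hypothetical names, and the Gaussian test waveform is synthetic.

```python
import numpy as np

# (start_ms, end_ms, polarity) from the windows described above;
# polarity -1 = most negative point, +1 = most positive point.
WINDOWS = {
    "N100": (50, 200, -1),
    "N190": (100, 250, -1),
    "P200": (150, 250, +1),
    "N400P": (300, 600, -1),
    "N400T": (250, 600, -1),
    "CNV": (600, 1200, -1),  # only the mean amplitude is used for the CNV
    "RE": (300, 800, -1),
}


def window_measures(wave, times_ms, component):
    """Mean amplitude, peak amplitude, and peak latency within a window."""
    start, end, polarity = WINDOWS[component]
    in_window = (times_ms >= start) & (times_ms <= end)
    if not in_window.any():
        raise ValueError(f"no samples between {start} and {end} ms")
    segment = wave[in_window]
    seg_times = times_ms[in_window]
    peak = int(np.argmax(polarity * segment))
    return {
        "mean_uv": float(segment.mean()),
        "peak_uv": float(segment[peak]),
        "peak_latency_ms": float(seg_times[peak]),
    }


# Synthetic target ERP: a -5 uV negativity centred on 400 ms (2-ms samples).
times = np.arange(-100, 800, 2.0)
wave = -5.0 * np.exp(-((times - 400) / 60) ** 2)
print(window_measures(wave, times, "N400T"))
```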

ERP amplitudes and peak latencies were compared with mixed-effects ANOVAs with repeated measures including a between-subject factor of group (CWS, CWNF) and three within-subject factors: condition (R+O+, R+O−, R−O+, R−O−), hemisphere (left, right), and electrode site. The subset of electrode sites used for the comparisons was chosen on the basis of visual inspection of the elicited waveforms, to determine where reliable peaks could be measured, and of findings from previous studies (Grossi et al., 2001; Weber-Fox et al., 2003). Lateral and mid-lateral electrode sites were selected for the repeated measures analyses because they sample ERPs from the left and right hemispheres, allowing examination of distributional effects within and between hemispheres. The electrode subsets for the components of interest were: N100 and P200 (F7/F8, FC3/FC4, FT7/FT8, T7/T8), N190 (O1/O2), and N400P, CNV, and N400T (F7/F8, FC3/FC4, FT7/FT8, T7/T8, TP7/TP8, CP3/CP4). Mixed-effects ANOVAs with repeated measures were also applied to the difference waves (RE), with a between-subject factor of group (CWS, CWNF) and three within-subject factors: condition (congruent and incongruent subtractions), hemisphere (left, right), and electrode site (F7/F8, FC3/FC4, FT7/FT8, T7/T8, TP7/TP8, CP3/CP4). For clarity and conciseness, the peak amplitude statistics for the N400P, CNV, and N400T are not included in the present report, because they mirrored those of the mean amplitude measures, and mean amplitude measures are more resistant to artifacts such as latency jitter (Luck, 2005). Significance was set at p < .05. For all repeated measures with more than one degree of freedom in the numerator, Huynh-Feldt (H–F) adjusted p-values were used to determine significance (Hays, 1994).
Effect sizes, indexed by the partial eta-squared statistic (ηp²), are reported for all significant effects. Tukey HSD post hoc comparisons, which use the MS error term from the original repeated measures ANOVA, were calculated for significant interactions involving multiple variables to determine which comparisons contributed to the significant F values (Hays, 1994).
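Partial eta squared can be recovered from a reported F ratio and its degrees of freedom, because ηp² = SS_effect / (SS_effect + SS_error) and F = (SS_effect / df_effect) / (SS_error / df_error), which together give ηp² = F·df_effect / (F·df_effect + df_error). The sketch below (with a hypothetical function name) checks this identity against the accuracy results reported below: F(1, 18) = 8.684 gives .325, and F(3, 54) = 20.01 gives .526. Because the Huynh-Feldt correction multiplies both degrees of freedom by the same ε, it leaves this ratio unchanged.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom:
    F * df_effect / (F * df_effect + df_error)."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


print(round(partial_eta_squared(8.684, 1, 18), 3))   # 0.325, group effect on accuracy
print(round(partial_eta_squared(20.01, 3, 54), 3))   # 0.526, condition effect on accuracy
```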

Results

Behavioral

Phonological Awareness

The CWS and CWNF performed similarly on the Dollaghan and Campbell (1998) “Non-Word Repetition Task”: Group, F(1, 18) = 1.12, p = .304; Condition × Group, F(4, 72) < 1. The scores obtained by both groups were similar to those reported previously for 30 typically developing children in this age range (Weber-Fox et al., 2003) and showed the same effect of number of syllables on accuracy, Condition, F(4, 72) = 71.23, H–F p < .001. As shown in Table 3, the children repeated four-syllable nonwords less accurately than one-, two-, and three-syllable nonwords.

Table 3.

Performance on Non-Word Repetition Task

| ID# | CWS PC1 | CWS PC2 | CWS PC3 | CWS PC4 | CWS Total | CWNF PC1 | CWNF PC2 | CWNF PC3 | CWNF PC4 | CWNF Total |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 100 | 100 | 92.9 | 83.3 | 91.6 | 100 | 100 | 100 | 94.4 | 97.9 |
| 2 | 100 | 100 | 96.4 | 91.6 | 95.8 | 100 | 100 | 96.4 | 83.3 | 92.7 |
| 3 | 100 | 100 | 100 | 80.5 | 92.7 | 100 | 100 | 100 | 83.3 | 93.8 |
| 4 | 100 | 100 | 96.4 | 83.3 | 92.7 | 83.3 | 100 | 100 | 75 | 88.5 |
| 5 | 100 | 100 | 100 | 88.8 | 95.8 | 100 | 100 | 100 | 97.2 | 98.9 |
| 6 | 83.3 | 85 | 78.6 | 58.3 | 72.9 | 100 | 100 | 100 | 91.7 | 96.8 |
| 7 | 100 | 100 | 100 | 80.5 | 92.7 | 100 | 100 | 93.9 | 72.2 | 87.5 |
| 8 | 91.7 | 100 | 96.4 | 69.4 | 86.5 | 100 | 100 | 100 | 86.1 | 94.8 |
| 9 | 100 | 100 | 96.4 | 91.6 | 95.8 | 100 | 100 | 100 | 83.3 | 93.8 |
| 10 | 100 | 100 | 100 | 80.5 | 92.7 | 100 | 100 | 100 | 77.8 | 91.7 |
| Mean | 97.5 | 98.5 | 95.7 | 80.8 | 90.9 | 98.3 | 100 | 99 | 84.4 | 93.6 |
| S.D. | 5.6 | 4.7 | 6.4 | 10.3 | 6.9 | 5.3 | 0 | 2.1 | 8.2 | 3.7 |

Note. Values are percent phonemes correct (PC) for 1-, 2-, 3-, and 4-syllable nonwords. CWS = children who stutter; CWNF = children who are normally fluent.
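The Total column in Table 3 combines the four syllable lengths. A minimal sketch of that pooling, assuming (this is not stated in the present section) that the nonword repetition task contains four nonwords at each length with 3, 5, 7, and 9 phonemes per item, so that longer nonwords contribute more phonemes to the total. Under that assumption the listed totals are reproduced to within rounding; the constant and function names are hypothetical.

```python
# Assumed task layout: 4 nonwords per length, with 3, 5, 7, and 9 phonemes per
# 1-, 2-, 3-, and 4-syllable item respectively (96 phonemes in total).
PHONEMES_BY_LENGTH = {1: 4 * 3, 2: 4 * 5, 3: 4 * 7, 4: 4 * 9}


def total_pcc(pcc_by_length):
    """Pool per-length percent-phonemes-correct values, weighting by phoneme count."""
    total = sum(PHONEMES_BY_LENGTH.values())
    correct = sum(
        PHONEMES_BY_LENGTH[n_syll] * pcc / 100 for n_syll, pcc in pcc_by_length.items()
    )
    return 100 * correct / total


# CWS participant 1 in Table 3: PC1-PC4 = 100, 100, 92.9, 83.3
print(round(total_pcc({1: 100, 2: 100, 3: 92.9, 4: 83.3}), 1))  # 91.7
```

The sketch gives 91.7 where Table 3 lists 91.6, which suggests, though it does not establish, that the table's totals were truncated rather than rounded.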

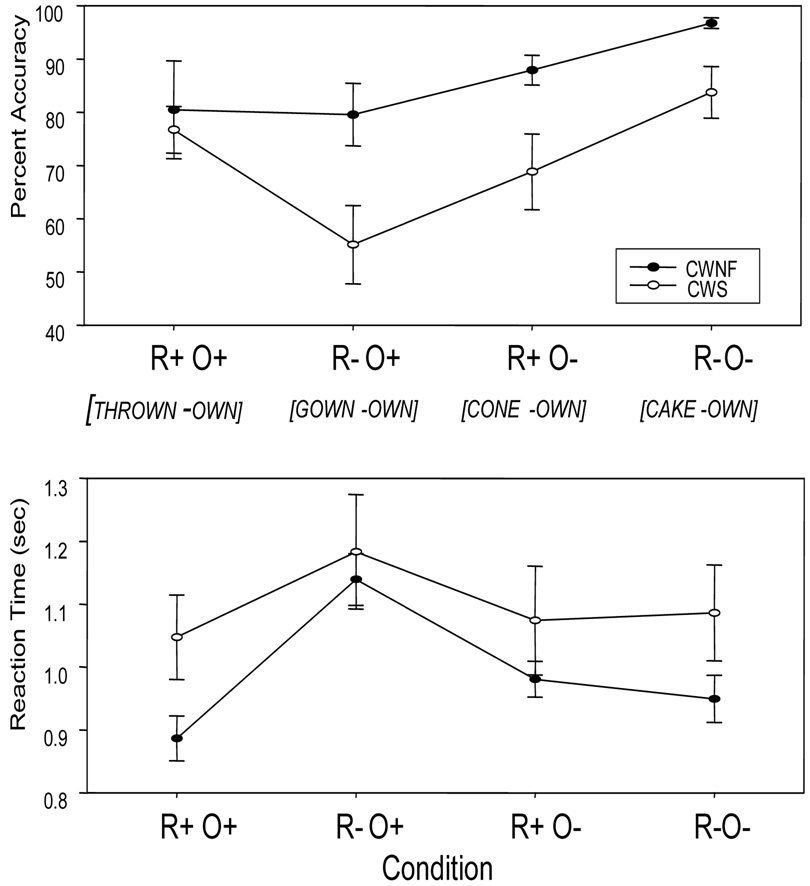

Rhyming Judgments – Accuracy

The accuracy of rhyming judgments was significantly lower for the CWS than for the CWNF, F (1, 18) = 8.684, p = .009, ep2 = .325 (Figure 3, upper panel). The mean (SE) percent correct across all conditions combined was 88.5 (4.2) for the CWNF and 71.1 (4.2) for the CWS. Accuracy also varied by condition, F (3, 54) = 20.01, H–F p < .001, ep2 = .526. Post hoc comparisons revealed that accuracy was significantly lower for the R−O+ condition than for the other three conditions, Tukey HSD p < .05. This condition required encoding different phonological representations for words with similar orthography (e.g., GOWN–OWN). Further, accuracy for the R+O− condition was lower than for the R−O− condition, Tukey HSD p < .05. This condition required encoding the same phonological representation for words with different orthography (e.g., CONE–OWN). There was no interaction between group and condition, F (3, 54) = 1.59, H–F p = .21.
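The four conditions cross phonological rhyme (R+/R−) with orthographic similarity of the spelled rime (O+/O−). The toy sketch below makes that 2 × 2 labeling explicit. It is not the authors' stimulus-construction procedure: the pronunciations are hypothetical ARPAbet-style entries, the "first vowel letter onward" spelling rule is a rough simplification that only suits monosyllables, and the pairs BONE–CONE and BELL–OWN are illustrative additions; only GOWN–OWN and CONE–OWN come from the text. ASCII "-" stands in for the minus sign used in the condition names.

```python
VOWEL_LETTERS = set("AEIOU")

# Hypothetical pronunciations: the phonological rime (vowel plus coda) of each word.
PHONOLOGICAL_RIME = {
    "OWN": "OW N",
    "CONE": "OW N",
    "BONE": "OW N",
    "GOWN": "AW N",
    "BELL": "EH L",
}


def orthographic_rime(word):
    """Spelling from the first vowel letter onward (a rough rule for monosyllables)."""
    word = word.upper()
    for i, letter in enumerate(word):
        if letter in VOWEL_LETTERS:
            return word[i:]
    return word


def condition(prime, target):
    """Label a prime-target pair by rhyme (R+/R-) crossed with spelling (O+/O-)."""
    rhymes = PHONOLOGICAL_RIME[prime] == PHONOLOGICAL_RIME[target]
    looks_alike = orthographic_rime(prime) == orthographic_rime(target)
    return "R" + ("+" if rhymes else "-") + "O" + ("+" if looks_alike else "-")


for pair in [("BONE", "CONE"), ("CONE", "OWN"), ("GOWN", "OWN"), ("BELL", "OWN")]:
    print(pair, condition(*pair))
# ('BONE', 'CONE') R+O+
# ('CONE', 'OWN') R+O-
# ('GOWN', 'OWN') R-O+
# ('BELL', 'OWN') R-O-
```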

Figure 3.

Rhyming judgment behavioral accuracies and reaction times (means and SEs) for children who are normally fluent (CWNF) and children who stutter (CWS).

Rhyming Judgments - Reaction Time

RTs did not differ between groups overall, F (1, 18) = 1.708, p = .21. Figure 3 (lower panel) shows the effect of condition on RT, F (3, 54) = 23.29, H–F p < .001, ep2 = .564. RTs were slower for the R−O+ condition than for the other three conditions, and post hoc comparisons confirmed this observation, Tukey HSD p < .05. The interaction between group and condition did not reach significance, F (3, 54) = 2.237, H–F p = .12.

ERPs

Early Potentials Elicited by Primes

N100, N190, P200

The mean peak latencies and amplitudes of the early potentials were not affected differentially by the prime conditions, F (3, 54) < 2.09, H–F p > .11. Nor did the peak latencies and amplitudes of the early potentials elicited by the primes differentiate the CWS and CWNF groups (see Footnote 2), F (1, 18) < 3.38, p > .08.

Later Potentials Elicited by Primes

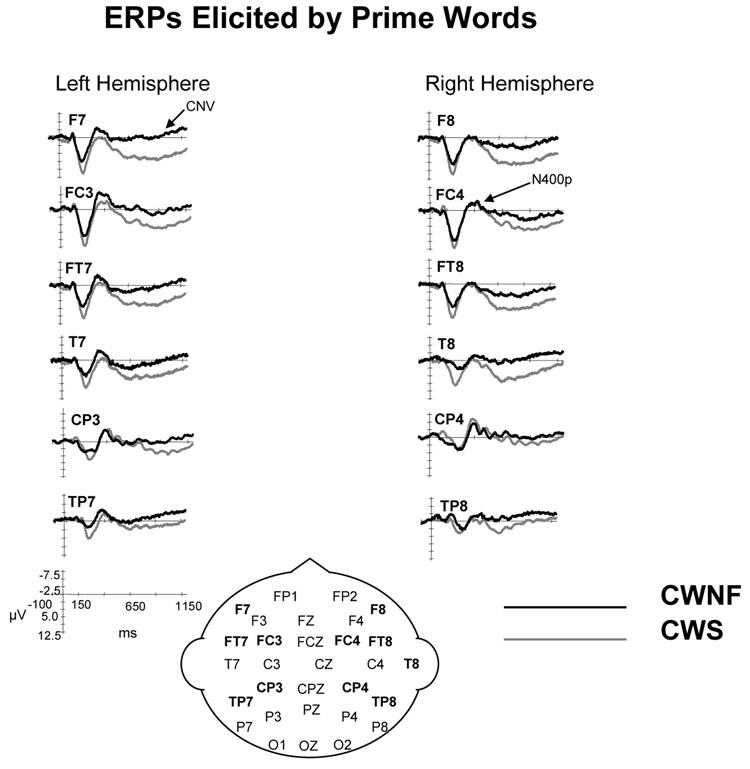

N400P

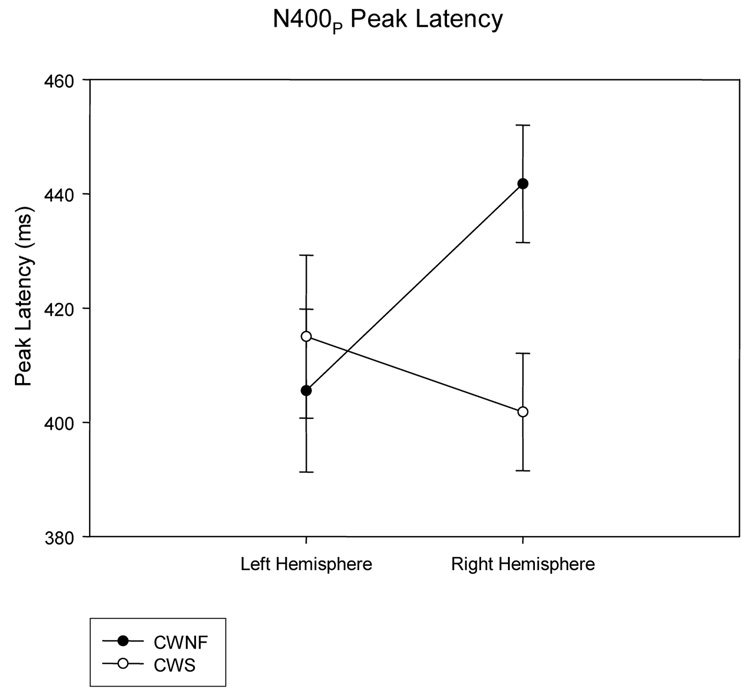

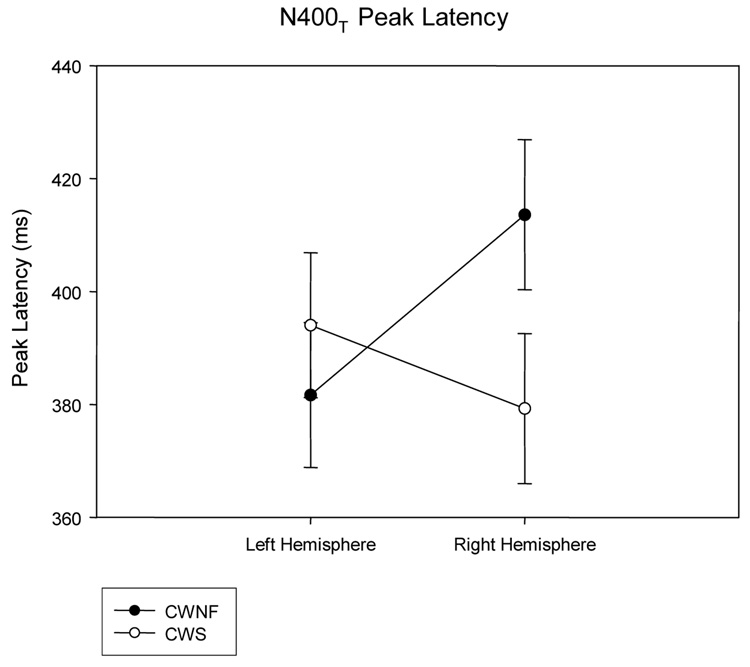

The N400P for the CWNF and CWS can be observed in the ERP waveforms illustrated in Figure 4. The mean (SE) peak latency of this second negative component was 408.4 (11.3) ms for the CWS and 423 (11.3) ms for the CWNF and did not differ significantly between the groups, F (1, 18) < 1. However, there was a significant interaction between hemisphere and group, F (1, 18) = 11.579, p = .003, ep2 = .391. As shown in Figure 5, the N400P peaked earlier over the left hemisphere than over the right hemisphere for the CWNF, Tukey HSD p < .05. For the CWS, the N400P peak latency was similar over the two hemispheres. In addition, the N400P peaked earlier over the right hemisphere in the CWS than in the CWNF, Tukey HSD p < .05.

Figure 4.

ERP grand averages elicited by the prime words for children who are normally fluent (CWNF) and children who stutter (CWS). The ERPs are shown for electrode sites over the left and right hemispheres from anterior to posterior locations. In this and subsequent ERP figures, negative potentials are plotted upward. This figure illustrates a reduced CNV in the CWS compared to their typically developing peers.

Figure 5.

Peak latencies of the N400P elicited by prime words (means and SEs) are plotted for the left and right hemispheres for the children who are normally fluent (CWNF) and the children who stutter (CWS). The N400P peaked earlier over the left hemisphere compared to the right for the CWNF. Further, compared to the CWNF, the N400P elicited in CWS peaked earlier over the right hemisphere.

The mean amplitude of the N400P did not differ between the two groups of children, F (1, 18) = 1.042, p = .32. The condition effect was significant for the mean amplitude measure, F (3, 54) = 3.011, H–F p = .038, ep2 = .143. Post hoc comparisons revealed that this effect was due to a larger negative mean amplitude for primes in the incongruent rhyme condition than in the congruent rhyme condition (Tukey HSD p < .05). No other condition comparisons reached significance.

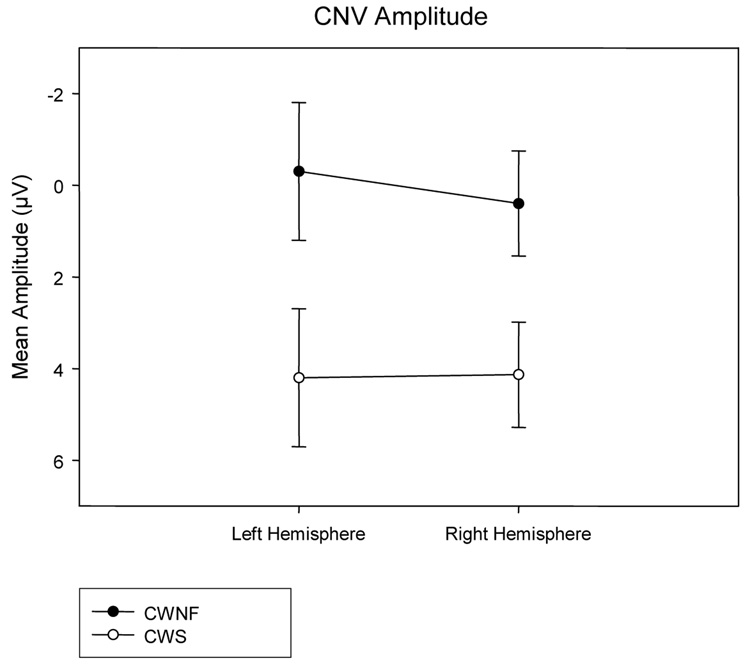

CNV

The mean amplitude of the CNV was reduced in the CWS compared to the CWNF, F (1, 18) = 5.410, p = .032, ep2 = .231. This can be observed in the ERP waveforms in Figure 4 and is illustrated across electrode sites for each hemisphere in Figure 6. The amplitude of the CNV was similar across conditions, F (3, 54) = 2.014, H–F p = .134. A significant effect of electrode site, F (5, 90) = 9.825, H–F p < .001, ep2 = .353, revealed that mean negative amplitude was greatest at TP7/8 and CP3/4 (Tukey HSD p < .05).

Figure 6.

Mean amplitudes of the CNV elicited by prime words (means and SEs) are plotted for the left and right hemispheres for the children who are normally fluent (CWNF) and the children who stutter (CWS). While the CWNF exhibited a typical CNV, the CNV was reduced in the CWS.

Summary for ERPs Elicited by Primes

The ERPs elicited by the prime words revealed two main differences between the CWS and the CWNF. The peak latency of the N400P was earlier over the left compared to the right hemisphere for the CWNF, a pattern not seen in the CWS. In fact, the peak latency of the N400P was earlier for the CWS over the right hemisphere compared to the CWNF. Furthermore, the CNV amplitude was reduced in the CWS compared to the CWNF.

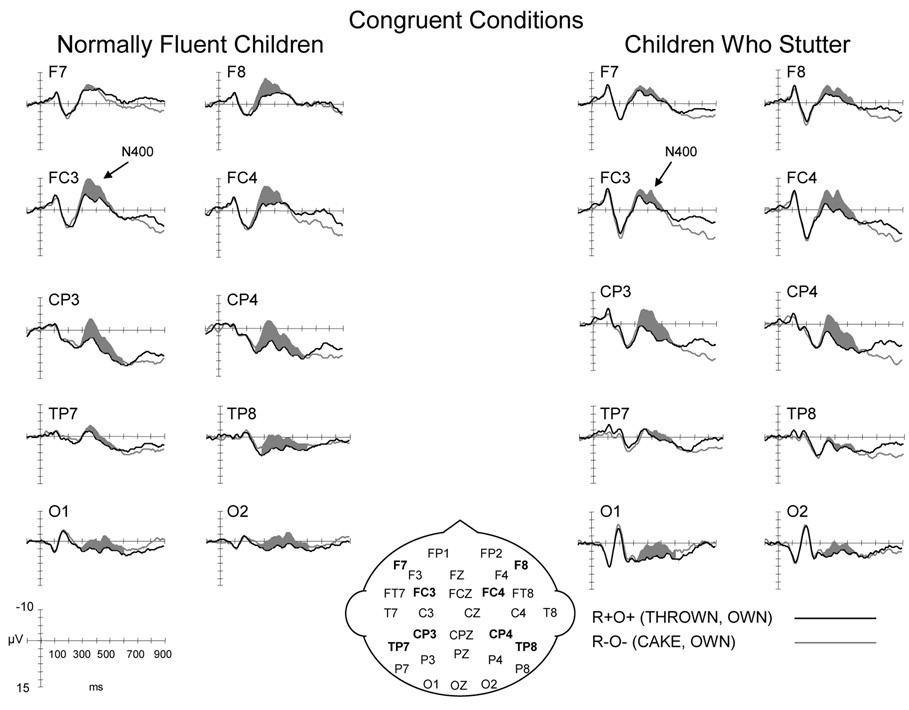

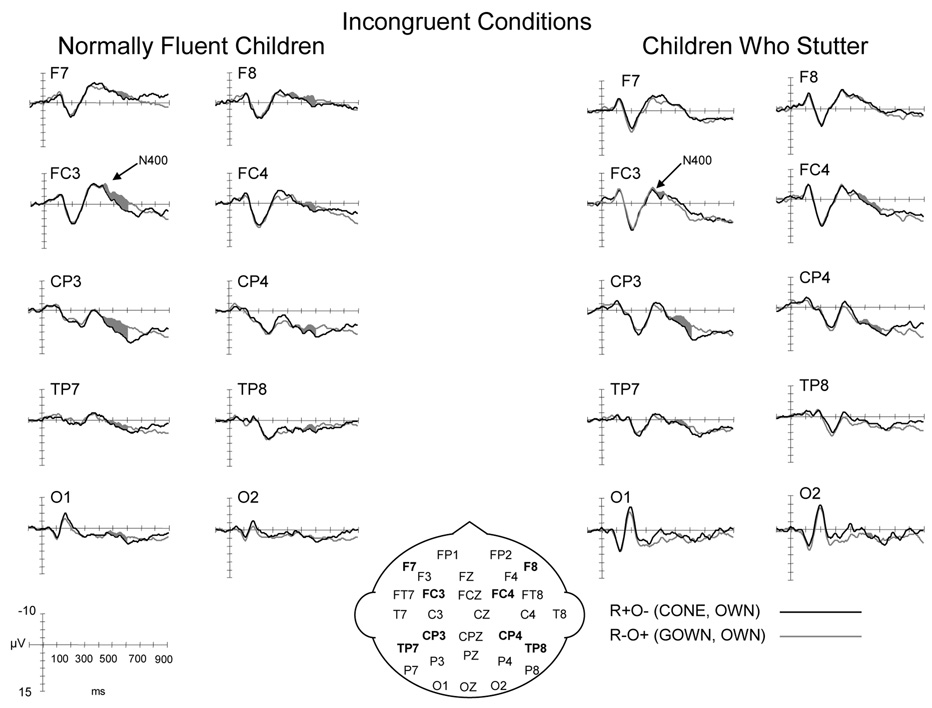

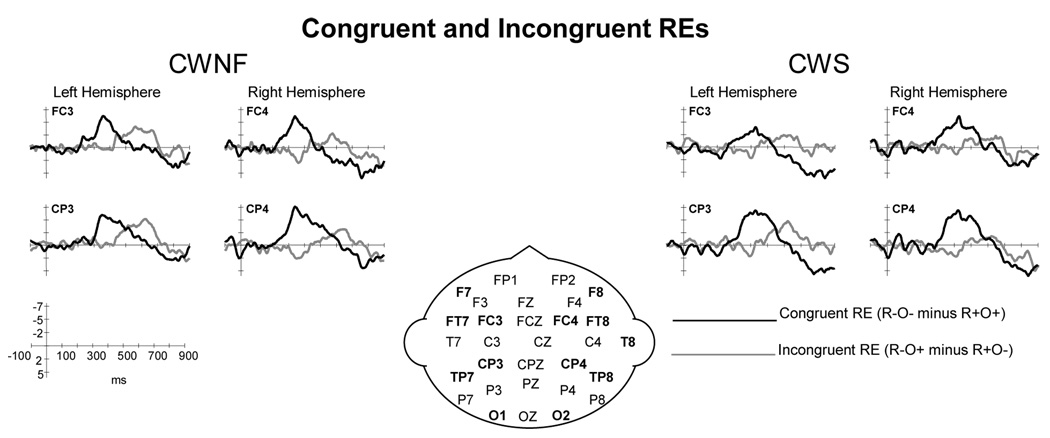

Targets

The ERPs elicited by the targets are illustrated in Figure 7 and Figure 8. As can be observed in Figure 7, both groups exhibited the expected increase in the N400T amplitude for the non-rhyme relative to the rhyme condition in the congruent conditions. There is also evidence of a prolonged negativity for incongruent non-rhymes relative to the incongruent rhymes for both groups (Figure 8). The similarities and differences in the ERP waveforms for the CWS and CWNF are summarized below.

Figure 7.

ERP grand averages elicited by the target words in the congruent rhyme (R+O+) and congruent non-rhyme (R−O−) conditions for children who are normally fluent (CWNF) and children who stutter (CWS). The ERPs are shown for electrode sites over the left and right hemispheres from anterior to posterior locations. The shaded areas indicate the increased negativity of the N400T elicited by the non-rhyme condition relative to the rhyme condition.

Figure 8.

ERP grand averages elicited by the target words in the incongruent rhyme (R+O−) and incongruent non-rhyme (R−O+) conditions for children who are normally fluent (CWNF) and children who stutter (CWS). The ERPs are shown for electrode sites over the left and right hemispheres from anterior to posterior locations. The shaded areas indicate the increased negativity of the N400T elicited by the non-rhyme condition relative to the rhyme condition.

Early Potentials Elicited by Targets

N100, N190, P200

The mean peak latencies and amplitudes of the early potentials elicited by the targets were not affected differentially by condition, F (3, 54) < 2.54, H–F p > .065. Nor did the peak latencies and amplitudes of these early potentials differentiate the CWS and CWNF groups, F (1, 18) < 3.26, p > .085.

Later Potentials Elicited by Targets

N400T

The N400T can be observed for the CWNF and the CWS in the ERP waveforms displayed in Figure 7 and Figure 8. The mean (SE) peak latency of this second negative component was 386.7 (11.3) ms for the CWS and 397.6 (11.3) ms for the CWNF and did not differ between groups, F (1, 18) < 1. However, there was a significant interaction between hemisphere and group, F (1, 18) = 6.307, p = .02, ep2 = .259. As shown in Figure 9, the N400T tended to peak earlier over the left hemisphere than over the right hemisphere for the CWNF, Tukey HSD p = .10. For the CWS, the N400T peak latency was similar over the two hemispheres. In addition, the N400T over the right hemisphere tended to peak earlier in the CWS than in the CWNF, Tukey HSD p = .07.

Figure 9.

Peak latencies of the N400T elicited by target words (means and SEs) are plotted for the left and right hemispheres for the children who are normally fluent (CWNF) and the children who stutter (CWS). Consistent with the findings for the N400P, the N400T tended to peak earlier over the left hemisphere compared to the right for the CWNF, a pattern not seen for the CWS. Further, compared to the CWNF, the N400T elicited in the CWS tended to peak earlier over the right hemisphere.

The mean amplitude of the N400T did not differ between the two groups of children, F (1, 18) < 1. As evident in Figure 7, the N400T mean amplitude was affected by condition, F (3, 54) = 4.91, H–F p = .004, ep2 = .214. Post hoc comparisons revealed that this effect was due to a larger amplitude for the congruent non-rhyme than for the congruent rhyme condition, Tukey HSD p < .05. An interaction between condition and electrode site, F (15, 270) = 2.516, H–F p = .012, ep2 = .123, indicated that the larger N400T amplitude for the congruent non-rhyme relative to the congruent rhyme condition was significant over the mid-lateral sites FC3/4 and CP3/4, Tukey HSD p < .05. The mean amplitudes of the N400T for the incongruent non-rhyme (R−O+, e.g., GOWN–OWN) and the incongruent rhyme (R+O−, e.g., CONE–OWN) did not differ. However, as illustrated in Figure 8, the N400T elicited by the incongruent non-rhyme extended beyond the N400T for the incongruent rhyme. These findings are consistent with previous findings for children (Weber-Fox et al., 2003) and are reflected quantitatively in the RE analyses of the difference waves described below.

Rhyming Effect (RE) - Difference Wave Analyses

The REs (rhyming effect) derived from the difference waves of both groups of children are illustrated in Figure 10. The peak latencies of the congruent as well as the incongruent REs were similar for both groups, F (1, 18) < 1. Consistent with previous findings (Weber-Fox et al., 2003), the peak latencies of the REs were longer for the incongruent subtractions compared to the congruent ones, F (1, 18) = 27.375, p < .001, ep2 = .603. The peak latencies averaged across the electrode sites for the congruent difference waves, 454.35 (19.9) ms, were on average 119.4 ms earlier than those of the incongruent difference waves, 573.73 (15.9) ms.

Figure 10.

Grand average difference waves isolating the rhyming effect (RE) for the congruent (R−O− minus R+O+) and incongruent (R−O+ minus R+O−) subtractions. The REs are shown for children who are normally fluent (CWNF) and children who stutter (CWS) from electrode sites over mid-lateral electrode locations where the RE was most prominent.

The mean amplitudes of the REs also did not differ between the CWS and the CWNF, F (1, 18) < 1. The congruent RE was larger in amplitude than the incongruent RE, F (1, 18) = 10.45, p = .005, ep2 = .367, as is evident in the difference waveforms in Figure 10. An interaction between RE subtraction condition and hemisphere, F (1, 18) = 7.22, p = .015, ep2 = .286, revealed that this amplitude difference was significant over the right hemisphere. The RE was largest over the mid-lateral electrode sites FC3/4 and CP3/4, F (5, 90) = 4.58, H–F p = .004, ep2 = .203, Tukey HSD p < .05.
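The RE measures rest on two steps: subtracting the rhyme ERP from the non-rhyme ERP point by point (R−O− minus R+O+ for the congruent subtraction, R−O+ minus R+O− for the incongruent one), then measuring the peak latency and mean amplitude of the resulting negativity. A minimal pure-Python sketch of those steps follows. The function names, the 300–700 ms window, and the synthetic waveforms are hypothetical; they are not the study's analysis parameters or data.

```python
def difference_wave(nonrhyme, rhyme):
    """Point-by-point subtraction of two averaged ERPs sampled at the same times."""
    return [a - b for a, b in zip(nonrhyme, rhyme)]


def _window(times_ms, start_ms, end_ms):
    """Indices of the samples that fall inside [start_ms, end_ms]."""
    idx = [i for i, t in enumerate(times_ms) if start_ms <= t <= end_ms]
    if not idx:
        raise ValueError("no samples in the measurement window")
    return idx


def mean_amplitude(wave, times_ms, start_ms, end_ms):
    """Average voltage across every sample in the window."""
    idx = _window(times_ms, start_ms, end_ms)
    return sum(wave[i] for i in idx) / len(idx)


def negative_peak_latency(wave, times_ms, start_ms, end_ms):
    """Time of the most negative sample in the window (the RE is a negativity)."""
    idx = _window(times_ms, start_ms, end_ms)
    return times_ms[min(idx, key=lambda i: wave[i])]


# Synthetic example: 4-ms samples, a flat rhyme ERP, and a non-rhyme ERP with an
# extra negativity of -5 microvolts peaking at 452 ms.
times = list(range(-100, 1000, 4))
rhyme = [0.0 for _ in times]
nonrhyme = [-max(0.0, 5.0 - abs(t - 452) / 20) for t in times]
re_wave = difference_wave(nonrhyme, rhyme)
print(negative_peak_latency(re_wave, times, 300, 700))  # 452
print(mean_amplitude(re_wave, times, 300, 700) < 0)  # True
```

Because the mean amplitude averages every sample in the window rather than reading off a single extreme point, it is less affected when the component's latency varies from trial to trial or child to child.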

Summary for ERPs Elicited by Targets

The ERPs elicited by the target words revealed similar findings for the N400T as those for the N400P. The peak latency of the N400T tended to be earlier over the left compared to the right hemisphere for the CWNF, a pattern not seen in the CWS. Moreover, the peak latency of the N400T tended to be earlier for the CWS over the right hemisphere compared to the CWNF.

Discussion

The converging evidence from the behavioral and electrophysiological measures in this experiment indicates that specific neural processes related to the phonological encoding that mediates rhyming decisions operate atypically in CWS as a group, and it provides clues about which stages of processing differ. These results are consistent with the hypothesis that the functioning of the language system in CWS may be an important factor in the development of stuttering for some individuals (e.g., Smith, 1990; Smith & Kelly, 1997). Moreover, the ERP findings point to specific stages of rhyming judgment that differ in CWS: the rehearsal period before the target words appear and the interval during which lexical integration is thought to occur.

Behavioral Accuracy

It is noteworthy that the CWS and CWNF performed similarly on the Dollaghan and Campbell (1998) non-word repetition task. This result indicates that the phonological awareness and production skills of the two groups were comparable as measured by this test. However, it should be noted that many of the participants performed at or near ceiling. Since this test was developed to elucidate processing differences in younger school-age children (6;0–9;9 years;months) with specific language impairment (Dollaghan & Campbell, 1998), it is possible that a non-word repetition task containing more phonologically complex stimuli (e.g., containing consonant clusters) may have distinguished the groups in the current study.

Despite phonological awareness and production abilities that were similar as measured by the nonword repetition test (Dollaghan & Campbell, 1998), rhyming judgment accuracy was lower for the CWS than for the CWNF. The differences in behavioral accuracy cannot be attributed to reading difficulties, because both groups reported normal, age-appropriate reading skills with no history of reading problems in school. What, then, might the reduced rhyming judgment accuracy of the CWS reflect? It is important to consider the demands of the rhyming task used in the present study. The task required rapid as well as accurate responses, because the children were instructed to respond “as quickly as possible”. It therefore imposed temporal constraints both in the rate of stimulus presentation and in formulating a response. In addition, the orthographically incongruent conditions added a level of interference to the rhyming judgments. This task thus challenged the phonological processing system in ways that may not be apparent in everyday reading or standard rhyming tasks, and it is possible that the reduced rhyming accuracy of the CWS reflects a fairly subtle processing deficit. As predicted, the CWS performed more poorly on the rhyming task, supporting the hypothesis that at least some aspects of phonological processing function less effectively in CWS. However, our prediction that group differences in performance would be enhanced for the more complex conditions containing orthographic and phonological incongruence was not supported by the data. Unlike the previous findings for AWS, who performed less accurately than normally fluent speakers only in the most difficult condition, the profile for the CWS was more uniform across conditions. The behavioral findings are thus consistent with the idea that the phonological processing systems of persons who stutter change with maturation.
The ERPs elicited in the CWS and CWNF, discussed below, suggest that differences in specific stages of phonological processing may have contributed to the lower rhyming judgment accuracy of the CWS.

N400P and N400T

Consistent with previous findings in AWS (Weber-Fox et al., 2004) and in a large group of normally developing children (Weber-Fox et al., 2003), both the CWS and the CWNF exhibited typical rhyme responses, characterized by larger N400T amplitudes for non-rhyming than for rhyming targets. As previously described, the N400T elicited in rhyming paradigms is thought to be a member of the family of N400-like potentials that are sensitive to contextual information (Grossi et al., 2001; Rugg & Barrett, 1987). In the current study, as in many other rhyming studies (e.g., Grossi et al., 2001; Kramer & Donchin, 1987; Polich et al., 1983; Rugg & Barrett, 1987; Weber-Fox et al., 2003; 2004), the amplitude of the N400T elicited by the target words was sensitive to the context imposed by the preceding prime words. Thus, the neural functions of the CWS showed a sensitivity to phonological and orthographic contextual priming during target word processing similar to that of the CWNF.

Interestingly, the N400T tended to peak earlier over the left hemisphere than over the right for the CWNF, whereas the N400T peak latency of the CWS was similar over the two hemispheres and tended to be earlier over the right hemisphere than that of the CWNF. The same relative difference in hemispheric latencies was observed, and reached significance, for the N400P elicited by the prime words, suggesting that the difference in timing was not specific to target word processing. Thus, for this prime-target silent rhyming task, a difference in the timing of neural functions between the two hemispheres distinguished the CWS, and this difference was specific to the later endogenous cognitive potential, the N400, which is known to index integrative language functions (see Kutas, 1997, for review). The findings suggest that the relative timing of left and right hemisphere contributions may differ in CWS.

Previous studies in adults have suggested that AWS may engage right hemisphere functions differently than normally fluent speakers, even for tasks that do not require overt speaking (e.g., Braun et al., 1997; Fox et al., 1996; 2000; Ingham, Fox, Ingham, & Zamarripa, 2000; Preisbach et al., 2003; Weber-Fox et al., 2004). Preisbach and colleagues (2003) suggested that atypical right hemisphere activity in AWS may reflect compensatory processes for the reduction of white matter underlying the left sensorimotor cortex (tongue and larynx regions) (Sommer, Koch, Paulus, Weiller, & Büchel, 2002). Very little is known, however, about the neural anatomy and function of CWS, as almost all studies have been conducted with adult participants. One structural imaging study of CWS aged 9–12 indicates that anatomical differences related to speech and language may distinguish the brains of children who exhibit persistent stuttering (Chang, Erickson, & Ambrose, 2005). The anatomical differences were reduced gray matter volume in the left inferior frontal cortex (Broca’s area), increased gray matter in the right insula region, and decreased white matter in the arcuate fasciculus. Based on the previous work in adults and the preliminary imaging findings in CWS, it is possible that the differences in the relative timing of left and right hemisphere functions in CWS are due in part to atypical growth and functioning of cortical regions mediating speech and language. More studies of CWS and their typically developing peers are needed to better understand underlying differences in neuroanatomy and to discover the patterns of neural function for processing different kinds of linguistic and non-linguistic information.

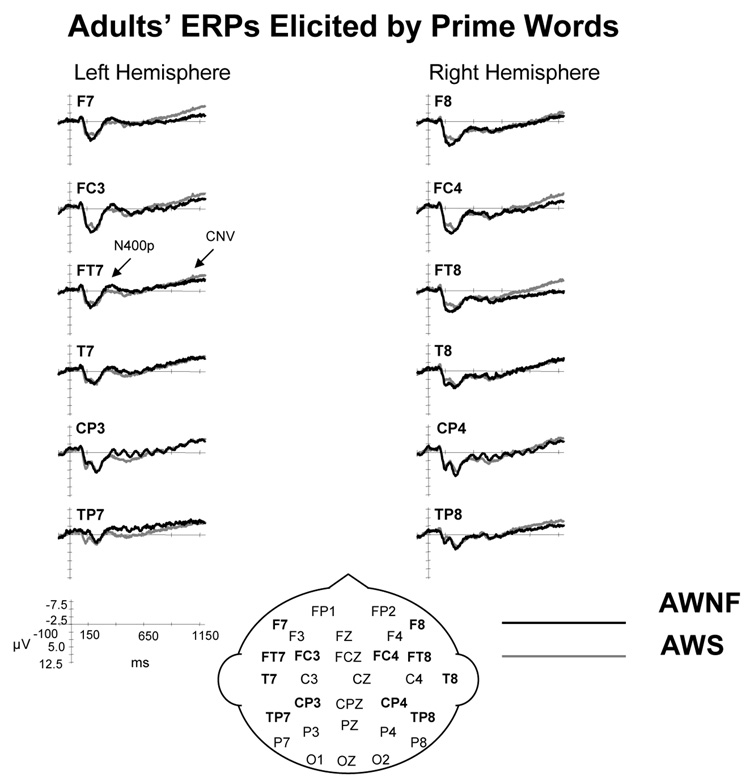

Contingent Negative Variation (CNV)

The CNV was reduced in the CWS relative to the CWNF. As previously reviewed, the CNV elicited by the primes is thought to index working memory retention and silent rehearsal of the phonological sequence contained in the prime word as the participant awaits the expected presentation of the target word (Rugg, 1984). The CNV elicited in this kind of visual rhyming task has also been shown to change with maturation, increasing in amplitude over anterior sites of the left hemisphere as children age (Grossi et al., 2001). It is important to note that our earlier study found no differences between the CNV amplitudes of AWS and AWNF. The CNV results for the prime words were not reported in that study (Weber-Fox et al., 2004) because no group differences were found for the prime words and the analysis focused on the ERPs elicited by the target words. The CNVs elicited in AWS and AWNF are overlaid in Figure 11; both groups exhibit a typical CNV to the prime words. These earlier findings suggest that the diminished CNV in the CWS may be a developmental phenomenon; that is, some aspects of the CNV are atypical in CWS, but these differences resolve as they mature. A cross-sectional study of typically developing children by Grossi and colleagues (2001) indicates that the ages of our participants spanned a point at which CNV amplitude increases rapidly. Their findings reveal low, flat CNV amplitudes from age 7 through age 10 at frontal, anterior-temporal, and temporal sites, with marked increases in CNV amplitude for the 11–12 and 13–14 year old children. Because of the small number of children in the present study, we could not examine how the CNV changed with age, but our findings suggest that the CNV elicited in the CWS is less mature.
Given that the CNVs elicited in AWS and AWNF do not differ, we conclude that at some later point in development, the processing indexed by the CNV of CWS matures and “catches-up” to that of the CWNF. More work is needed to chart the developmental time course of the CNV in CWS relative to their typically developing peers.

Figure 11.

Grand average ERPs elicited by prime words for adults who stutter (AWS) and adults who are normally fluent (AWNF), tested in a previous study (Weber-Fox et al., 2004). The ERPs are shown for electrode sites over the left and right hemispheres from anterior to posterior locations. This figure illustrates that, unlike the findings for the CWS, the ERPs elicited by the prime words were similar for the AWS and AWNF.

Rhyming Effect – (RE)

Both the CWNF and the CWS exhibited typical REs, with no significant group differences in the latency or amplitude of the RE. These findings suggest that the cognitive processes that mediate the comparison of the phonological representations of words, that is, the processes underlying the ability to determine whether words rhyme (Grossi et al., 2001), functioned similarly in the CWS and the CWNF. Thus, the decreased behavioral accuracy of rhyming judgments cannot be attributed to this particular stage of processing. Instead, as described above, the patterns of neural function for processes preceding the RE, indexed by the N400P, CNV, and N400T, suggest atypical processing that may have resulted in less efficient, less accurate rhyme detection by the CWS. Based on the present results, it seems likely that the neural functions related to phonological rehearsal and target anticipation, as indexed by the CNV, are immature in CWS. It is also likely that the relative contributions of left and right hemisphere functions differ in CWS at the stage of processing when linguistic integration occurs, as indexed by the N400P and N400T. Taken together, these results suggest that CWS are less able to form and retain a stable neural representation of the prime onset and rime as they anticipate the target presentation, which may lead to lower rhyming judgment accuracy.

Developmental Implications

Overall, the behavioral and ERP differences between CWS and their normally fluent peers were qualitatively different from, and much more pronounced than, those observed for AWS in an earlier study from our laboratory (Weber-Fox et al., 2004). These findings reinforce the need for developmental research on stuttering, because the present study demonstrates that differences in neural functions associated with stuttering change over the course of development. Thus, it will be important for future studies to use neuroanatomical and functional neuroimaging techniques with CWS to better understand how brain organization and function may contribute to the development of the disorder. Further, the study of even younger CWS, who are closer to stuttering onset, will be vital to determine with greater certainty how atypical neural processing may contribute to stuttering behavior.

It should be noted that the decreased task performance and atypical ERPs observed for the CWS may also be related to the processing demands of the task. Our previous findings for AWS indicate that increased processing demands, while affecting performance for both AWS and normally fluent speakers, accentuated the poorer performance of the AWS (Cuadrado & Weber-Fox, 2003; Weber-Fox et al., 2004). In the current study, increased processing demands related to orthographic and phonological incongruence did not accentuate the group differences in performance or ERP effects. However, it is possible that, unlike for the AWS, the inherent task demands common across conditions were sufficient to produce excessive processing loads for the CWS. More work is needed to determine whether simplifying the overall task demands for phonological processing would result in similar performances and ERPs for CWS and CWNF.

Acknowledgments

We thank William Murphy, M.A., CCC-SLP, for his help in recruiting participants who stutter. We also thank Janna Berlin for scheduling the participants, and Bridget Walsh and Neeraja Sagagopan for help with the speech, language, and hearing testing of some of the participants. Portions of this study were presented at the American Speech-Language-Hearing Association convention (Weber-Fox, Spruill, Spencer, & Smith, 2004). This work was funded by a grant (DC00559) from the National Institute on Deafness and Other Communication Disorders.

Footnotes

1. The use of physically linked mastoids, while removing bias toward either hemisphere, may reduce hemispheric asymmetries in the ERPs (Luck, 2005).

2. The P200 appears larger for the CWS than for the CWNF (Figure 4); however, this difference did not reach significance. Inspection of individual participant records indicated that the P200 amplitudes of two of the CWS were much larger than those of the other participants.

References

- American Electroencephalographic Society. Guideline thirteen: Guidelines for standard electrode placement nomenclature. Journal of Clinical Neurophysiology. 1994;11:111–113.

- Arthur TAA, Hitch GJ, Halliday MS. Articulatory loop and children’s reading. British Journal of Psychology. 1994;85:283–300.

- Baddeley AD. Working Memory. Oxford: Oxford University Press; 1986.

- Baddeley AD, Hitch GJ. Working memory. In: Bower G, editor. Recent Advances in the Psychology of Learning and Motivation. vol. VIII. New York: Academic Press; 1974.

- Beitchman JH, Nair R, Clegg M, Patel PC. Prevalence of speech and language disorders in 5-year-old kindergarten children in the Ottawa-Carleton region. Journal of Speech and Hearing Disorders. 1986;51:98–110. doi: 10.1044/jshd.5102.98.

- Besner D. Phonology, lexical access in reading, and articulatory suppression: A critical review. Quarterly Journal of Experimental Psychology. 1987;39A:467–478.

- Braun AR, Varga M, Stager S, Schulz G, Selbie S, Maisog JM, Carson RE, Ludlow CL. Altered patterns of cerebral activity during speech and language production in developmental stuttering: An H2 15O positron emission tomography study. Brain. 1997;120:761–784. doi: 10.1093/brain/120.5.761.

- Chang S-E, Erickson K, Ambrose N. Regional gray and white matter volumetric growth differences in children with persistent vs. recovered stuttering: A VBM study; Presented at the Society for Neuroscience Conference; Washington, D.C. 2005.

- Coch D, Grossi G, Coffey-Corina S, Holcomb P, Neville H. A developmental investigation of ERP auditory rhyming effects. Developmental Science. 2002;5:467–489.

- Conture EG, Louko LJ, Edwards ML. Simultaneously treating stuttering and disordered phonology in children: Experimental treatment, preliminary findings. American Journal of Speech-Language Pathology. 1993;2:72–81.

- Cuadrado E, Weber-Fox C. Atypical syntactic processing in individuals who stutter: Evidence from event-related brain potentials and behavioral measures. Journal of Speech, Language, and Hearing Research. 2003;46:960–976. doi: 10.1044/1092-4388(2003/075).

- Denny M, Smith A. Gradations in a pattern of neuromuscular activity associated with stuttering. Journal of Speech and Hearing Research. 1992;35:1216–1229. doi: 10.1044/jshr.3506.1216.

- Dollaghan C, Campbell TF. Nonword repetition and child language impairment. Journal of Speech, Language, and Hearing Research. 1998;41:1136–1146. doi: 10.1044/jslhr.4105.1136.

- Fox PT, Ingham RJ, Ingham JC, Hirsch TB, Downs JH, Martin C, et al. A PET study of the neural systems of stuttering. Nature. 1996;382:158–162. doi: 10.1038/382158a0.

- Fox PT, Ingham RJ, Ingham JC, Zamarripa F, Xiong J-H, Lancaster J. Brain correlates of stuttering and syllable production: A PET performance-correlation analysis. Brain. 2000;123:1985–2004. doi: 10.1093/brain/123.10.1985.

- Francis WN, Kucera H. Frequency Analysis of English Usage: Lexicon and Grammar. Boston: Houghton Mifflin Company; 1982.

- Gathercole SE, Willis C, Baddeley AD. Differentiating phonological memory and awareness of rhyme: Reading and vocabulary development in children. British Journal of Psychology. 1991;82:387–406.

- Goffman L, Smith A. Development and phonetic differentiation of speech movement patterns. Journal of Experimental Psychology: Human Perception and Performance. 1999;25:649–660. doi: 10.1037//0096-1523.25.3.649.

- Grossi G, Coch D, Coffey-Corina S, Holcomb PJ, Neville HJ. Phonological processing in visual rhyming: A developmental ERP study. Journal of Cognitive Neuroscience. 2001;13(5):610–625. doi: 10.1162/089892901750363190.

- Hagoort P, Brown C, Osterhout L. The neurocognition of syntactic processing. In: Brown CM, Hagoort P, editors. The neurocognition of language. New York: Oxford University Press; 1999. pp. 273–316.

- Hammill DD, Brown VL, Larsen SC, Wiederholt JL. Test of Adolescent and Adult Language – Third Edition (TOAL-3) Austin, TX: Pro-Ed.; 1994.

- Hays WL. Statistics. Fifth Edition. Fort Worth, TX: Harcourt Brace College Publishers; 1994.

- Høien T, Lundberg I, Stanovich KE, Bjaalid I-K. Components of phonological awareness. Reading and Writing. 1995;7(2):171–188.

- Ingham RJ, Fox PT, Ingham JC, Zamarripa F. Is overt stuttered speech a prerequisite for the neural activation associated with chronic developmental stuttering? Brain and Language. 2000;75:163–194. doi: 10.1006/brln.2000.2351.

- Johnston R, McDermott EA. Suppression effects in rhyme judgment tasks. Quarterly Journal of Experimental Psychology. 1986;38A:111–124.

- Kleinow J, Smith A. Influences of length and syntactic complexity on the speech motor stability of the fluent speech of adults who stutter. Journal of Speech, Language, and Hearing Research. 2000;43:548–559. doi: 10.1044/jslhr.4302.548.

- Kleinow J, Smith A. Potential interactions among linguistic, autonomic, and motor factors in speech. Developmental Psychobiology. 2006;48:275–287. doi: 10.1002/dev.20141.

- Kolk H, Postma A. Stuttering as a covert repair phenomenon. In: Curlee RF, Siegel GM, editors. Nature and Treatment of Stuttering: New Directions. New York: Allyn & Bacon; 1997. pp. 182–203.

- Kramer AF, Donchin E. Brain potentials as indices of orthographic and phonological interaction during word matching. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1987;13(1):76–86. doi: 10.1037//0278-7393.13.1.76.

- Kutas M. Views on how the electrical activity that the brain generates reflects the functions of different language structures. Psychophysiology. 1997;34:383–398. doi: 10.1111/j.1469-8986.1997.tb02382.x.

- Levelt WJM. Speaking: From intention to articulation. Cambridge, MA: MIT Press; 1998.

- Louko LJ. Phonological characteristics of young children who stutter. Topics in Language Disorders. 1995;15:48–59.

- Luck SJ. An Introduction to the Event-Related Potential Technique. Cambridge, MA: MIT Press; 2005.

- McClean MD, Runyan CM. Variations in the relative speeds of orofacial structures with stuttering severity. Journal of Speech, Language, and Hearing Research. 2000;43:1524–1531. doi: 10.1044/jslhr.4306.1524.

- Melnick KS, Conture EG. Relationship of length and grammatical complexity to the systematic and non-systematic speech errors and stuttering of children who stutter. Journal of Fluency Disorders. 2000;25:21–45.

- Nippold MA. Phonological disorders and stuttering in children: What is the frequency of co-occurrence? Clinical Linguistics and Phonetics. 2001;15(3):219–228.

- Nippold MA. Stuttering and phonology: Is there an interaction? American Journal of Speech-Language Pathology. 2002;11:99–110.

- Oldfield RC. The Assessment and Analysis of Handedness: The Edinburgh Inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Paden EP, Yairi E, Ambrose NG. Early childhood stuttering II: Phonology and stuttering. Journal of Speech, Language, and Hearing Research. 1999;42:1113–1124. doi: 10.1044/jslhr.4205.1113.

- Peters HFM, Boves L. Coordination of aerodynamic and phonatory processes in fluent speech of stutterers. Journal of Speech and Hearing Research. 1988;31:352–361. doi: 10.1044/jshr.3103.352.

- Polich J, McCarthy G, Wang WS, Donchin E. When words collide: Orthographic and phonological interference during word processing. Biological Psychology. 1983;16:155–180. doi: 10.1016/0301-0511(83)90022-4.

- Postma A, Kolk HHJ. The covert repair hypothesis: Prearticulatory repair processes in normal and stuttered disfluencies. Journal of Speech and Hearing Research. 1993;36:472–487.

- Preibisch C, Neumann K, Raab P, Euler HA, von Gudenberg AW, Lanfermann H, Giraud A-L. Evidence for compensation for stuttering by the right frontal operculum. NeuroImage. 2003;20:1356–1364. doi: 10.1016/S1053-8119(03)00376-8.

- Psychological Corporation. Clinical Evaluation of Language Fundamentals-3: Screening Test. San Antonio: Harcourt Brace & Company; 1996.

- Ratner N. Treating the child who stutters with concomitant language or phonological impairment. Language, Speech, and Hearing Services in Schools. 1995;26:180–186.

- Richardson TE. Phonology and reading: The effects of articulatory suppression upon homophony and rhyme judgments. Language and Cognitive Processes. 1987;2:229–244.

- Riley GD. A Stuttering Severity Instrument for Children and Adults – Third Edition (SSI-3). Austin, TX: Pro-Ed; 1994.

- Rugg MD. Event-related potentials and the phonological processing of words and nonwords. Neuropsychologia. 1984;22(4):435–443. doi: 10.1016/0028-3932(84)90038-1.

- Rugg MD, Barrett SE. Event-related potentials and the interaction between orthographic and phonological information in a rhyme-judgment task. Brain and Language. 1987;32:336–361. doi: 10.1016/0093-934x(87)90132-5.

- Semel E, Wiig EH, Secord W. Clinical Evaluation of Language Fundamentals–Revised: Screening Test. New York: Psychological Corporation; 1989.

- Smith A. Factors in the etiology of stuttering. American Speech-Language-Hearing Association Reports, Research Needs in Stuttering: Roadblocks and Future Directions. 1990;18:39–47.

- Smith A, Goffman L. Interaction of language and motor factors in speech production. In: Maassen B, Kent RD, Peters HFM, van Lieshout PHHM, Hulstijn W, editors. Speech Motor Control in Normal and Disordered Speech. Oxford University Press; 2004.

- Smith A, Kelly E. Stuttering: A dynamic multifactorial model. In: Curlee RF, Siegel GM, editors. Nature and treatment of stuttering: New directions. 1997. pp. 204–217.

- Smith A, Luschei E, Denny M, Wood J, Hirano M, Badylak S. Spectral analyses of laryngeal and orofacial muscles in stutterers. Journal of Neurology, Neurosurgery, and Psychiatry. 1993;56:1303–1311. doi: 10.1136/jnnp.56.12.1303.

- Sommer M, Koch MA, Paulus W, Weiller C, Büchel C. Disconnection of speech-relevant brain areas in persistent developmental stuttering. The Lancet. 2002;360:380–383. doi: 10.1016/S0140-6736(02)09610-1.

- St. Louis KO, Ruscello DM. Oral Speech Mechanism Screening Exam – Revised (OSMSE-R). Austin, TX: Pro-Ed; 1987.

- Van Lieshout PHHM, Starkweather CW, Hulstijn W, Peters HFM. Effects of linguistic correlates of stuttering on EMG activity in nonstuttering speakers. Journal of Speech and Hearing Research. 1995;38:360–372. doi: 10.1044/jshr.3802.360.

- Walter WG, Cooper R, Aldridge V, McCallum WC, Winter AL. Contingent negative variation: An electrical sign of sensorimotor association and expectancy in the human brain. Nature. 1964;203:380–384. doi: 10.1038/203380a0.

- Weber-Fox C. Neural systems for sentence processing in stuttering. Journal of Speech, Language, and Hearing Research. 2001;44:814–825. doi: 10.1044/1092-4388(2001/064).

- Weber-Fox C, Spencer R, Cuadrado E, Smith A. Development of neural processes mediating rhyme judgments: Phonological and orthographic interactions. Developmental Psychobiology. 2003;43(2):128–145. doi: 10.1002/dev.10128.

- Weber-Fox C, Spencer R, Spruill JE III, Smith A. Phonological processing in adults who stutter: Electrophysiological and behavioral evidence. Journal of Speech, Language, and Hearing Research. 2004;47:1244–1258. doi: 10.1044/1092-4388(2004/094).

- Weber-Fox C, Spruill JE III, Spencer R, Smith A. Neurophysiological indices of phonological processing in children who stutter. Presented at the American Speech-Language-Hearing Association Convention; Philadelphia, PA; 2004.

- Wilding J, White W. Impairment of rhyme judgments by silent and overt articulatory suppression. Quarterly Journal of Experimental Psychology. 1985;37A:95–107.

- Wingate M. The Structure of Stuttering: A Psycholinguistic Analysis. New York: Springer-Verlag; 1988.

- Wolk L. Intervention strategies for children who exhibit co-existing phonological and fluency disorders: A clinical note. Child Language Teaching and Therapy. 1998;14:69–82.

- Yairi E, Ambrose NG. Early childhood stuttering I: Persistency and recovery rates. Journal of Speech, Language, and Hearing Research. 1999;42:1097–1112. doi: 10.1044/jslhr.4205.1097.

- Yaruss SJ, Conture EG. Stuttering and phonological disorders in children: Examination of the covert repair hypothesis. Journal of Speech and Hearing Research. 1996;39:349–364. doi: 10.1044/jshr.3902.349.

- Zimmerman GN. Articulatory behaviors associated with stuttering: A cineradiographic analysis. Journal of Speech and Hearing Research. 1980;23:108–121. doi: 10.1044/jshr.2301.108.

- Zocchi L, Estenne M, Johnston S, Del Ferro L, Ward ME, Macklem PT. Respiratory muscle incoordination in stuttering speech. American Review of Respiratory Disease. 1990;141:1510–1515. doi: 10.1164/ajrccm/141.6.1510.