Abstract

The visual and auditory systems frequently work together to facilitate the identification and localization of objects and events in the external world. Experience plays a critical role in establishing and maintaining congruent visual–auditory associations, so that the different sensory cues associated with targets that can be both seen and heard are synthesized appropriately. For stimulus location, visual information is normally more accurate and reliable and provides a reference for calibrating the perception of auditory space. During development, vision plays a key role in aligning neural representations of space in the brain, as revealed by the dramatic changes produced in auditory responses when visual inputs are altered, and is used throughout life to resolve short-term spatial conflicts between these modalities. However, accurate, and even supra-normal, auditory localization abilities can be achieved in the absence of vision, and the capacity of the mature brain to relearn to localize sound in the presence of substantially altered auditory spatial cues does not require visuomotor feedback. Thus, while vision is normally used to coordinate information across the senses, the neural circuits responsible for spatial hearing can be recalibrated in a vision-independent fashion. Nevertheless, early multisensory experience appears to be crucial for the emergence of an ability to match signals from different sensory modalities and therefore for the outcome of audiovisual-based rehabilitation of deaf patients in whom hearing has been restored by cochlear implantation.

Keywords: sound localization, spatial hearing, multisensory integration, auditory plasticity, behavioural training, vision

1. Introduction

Our perception of the external world relies principally on vision and hearing. An ability to determine accurately and rapidly the location of a sound source is of great importance in the lives of many species. This is equally the case for animals seeking potential mates or prey as for those trying to avoid and escape from approaching predators. The process of localizing sounds also plays a key role in directing attention towards objects or events of interest, so that they can be registered by other senses, most commonly vision. Perhaps of most value in humans, spatial hearing can significantly improve the detection and, in turn, the discrimination of sounds of interest in noisy situations, such as a restaurant or bar. Consequently, preservation of this ability is of considerable importance in the rehabilitation of the hearing impaired. This review will consider the role of learning and plasticity in the development and maintenance of auditory localization and, in particular, the contribution of vision to this process.

2. Determining the direction of a sound source

In contrast to the visual and somatosensory systems, where stimulus location is encoded by the distribution of activity across the receptor surface in the retina or the skin, respectively, localizing a sound source is a highly complex, computational process that takes place within the brain. Because auditory space cannot be mapped onto the cochlea in the inner ear in the same way, the direction of a sound source has to be inferred from acoustical cues generated by the interaction of sound waves with the head and external ears (Blauert 1997; King et al. 2001). The separation of the ears on either side of the head is key to this, as sounds originating from a source located to one side of the head will arrive at each ear at slightly different times. Moreover, by shadowing the far ear from the sound source, the head produces a difference in amplitude level at the two ears. The level of the sound is also altered by the direction-specific filtering by the external ears, giving rise to spectral localization cues.

Taken alone, each of these spatial cues is potentially ambiguous and is informative only for certain types of sound and regions of space. Thus, interaural time differences are used for localizing low-frequency sounds (less than approx. 1500 Hz in humans), whereas interaural level differences are more important at high frequencies. For narrow-band sounds, both binaural cues are spatially ambiguous, since the same cue value can arise from multiple directions, which together lie on so-called ‘cones of confusion’ (Blauert 1997; King et al. 2001). Similarly, spectral cues are ineffective unless the sound has a broad frequency content (Butler 1986), in which case they enable the front–back confusions in the binaural cues to be resolved. Spectral cues also provide the basis for localization in the vertical plane and even allow some capacity to localize sounds in azimuth using one ear alone (Slattery & Middlebrooks 1994; Van Wanrooij & Van Opstal 2004). However, because this involves detection of the peaks and notches imposed on the sound spectrum by the external ear, the sound must have a sufficiently high amplitude for these features to be discerned (Su & Recanzone 2001). Moreover, under monaural conditions, these cues no longer provide reliable spatial information if there is uncertainty in the stimulus spectrum (Wightman & Kistler 1997). In everyday listening conditions, auditory localization cues are also likely to be distorted by echoes or other sounds. Accurate localization can therefore be achieved and maintained only if the information provided by the different cues is combined appropriately within the brain.
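The dependence of the interaural time difference (ITD) on source direction, and the front–back ambiguity it leaves unresolved, can be illustrated with a minimal sketch. This is not taken from the studies cited above: it assumes the classical Woodworth rigid-sphere approximation for a distant source in the horizontal plane, and the head radius, the speed of sound and the function names are illustrative assumptions.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 degrees C
HEAD_RADIUS_M = 0.0875      # assumption: nominal adult human head radius


def fold_to_frontal(azimuth_deg):
    """Map an azimuth in [-180, 180] onto its front-hemifield mirror image.

    For a symmetric spherical head, a source behind the listener produces
    the same ITD as its mirror image in front (front-back confusion).
    """
    if not -180.0 <= azimuth_deg <= 180.0:
        raise ValueError("azimuth must lie in [-180, 180] degrees")
    if azimuth_deg > 90.0:
        return 180.0 - azimuth_deg
    if azimuth_deg < -90.0:
        return -180.0 - azimuth_deg
    return azimuth_deg


def woodworth_itd(azimuth_deg, head_radius_m=HEAD_RADIUS_M,
                  speed_of_sound=SPEED_OF_SOUND_M_S):
    """Approximate ITD in seconds (positive = source on the positive-azimuth side)."""
    theta = math.radians(fold_to_frontal(azimuth_deg))
    # Woodworth formula: extra path length around a rigid sphere.
    return (head_radius_m / speed_of_sound) * (theta + math.sin(theta))


if __name__ == "__main__":
    for az in (0, 30, 90, 150):
        print(f"azimuth {az:4d} deg -> ITD {woodworth_itd(az) * 1e6:6.1f} microseconds")
```

Under these assumptions the ITD is zero on the midline and largest for a source directly to one side, while sources at 30° and 150° yield identical values, which is the horizontal-plane case of the ambiguity described above. A real head is not a sphere, so measured ITDs differ between individuals and vary with frequency.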

The values of the localization cues vary not only with the properties of the sound, but also with the dimensions of the head and external ears. Thus, during development, the cue values associated with any given direction in space will gradually change while these structures are growing (Moore & Irvine 1979; Clifton et al. 1988; Schnupp et al. 2003; Campbell et al. 2008; figure 1). Consequently, representations of sound-source location in the brain cannot become fully mature until the auditory periphery has stopped growing. Moreover, the adult values attained once development is complete will vary from one individual to another according to differences in the size, shape and separation of the ears. This implies that listeners must learn by experience to localize with their own ears, a view that is supported by the finding that humans can localize headphone signals that simulate real sound sources more accurately when these are derived from measurements made from their own ears than from the ears of other individuals (Wenzel et al. 1993; Middlebrooks 1999).

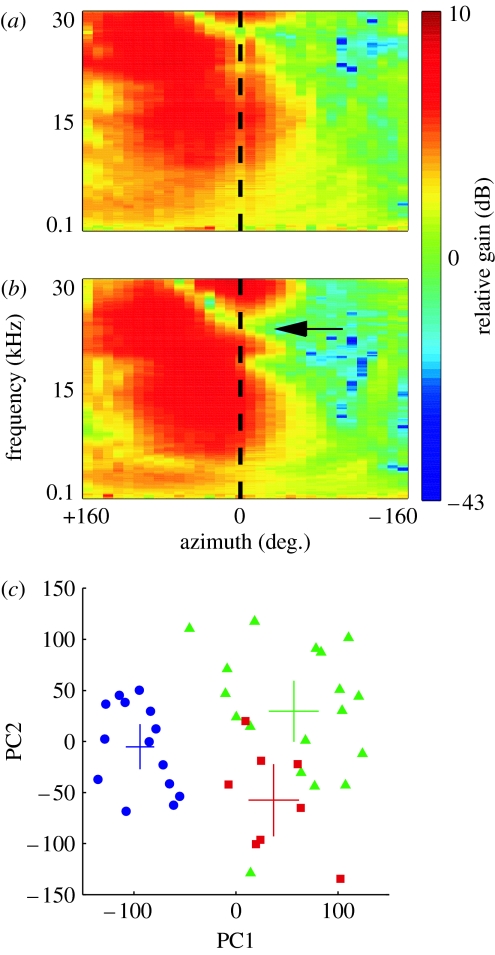

Figure 1.

The acoustical cues that provide the basis for auditory localization change in value as the head and ears grow. Directional transfer functions (DTFs) from (a) an infant (post-natal day (P) 33) and (b) an adult ferret (Mustela putorius). Each plot shows how the gain in decibels of the external ear varies as a function of sound azimuth (x) and frequency (y) at a constant elevation (the animal's horizon). The difference in gain on either side of the midline (0°) is due to the acoustic shadowing effect of the head. The infant ear is less directional and features present in the adult, such as the high-frequency notch (arrowed in (b)), are shifted to higher frequencies. (c) Age-related differences are quantified for a large number of animals by converting each DTF to a vector and performing a principal components analysis on the population of DTF vectors. Data are plotted along the first two principal components (which cumulatively explain 56% of the variance). Points are labelled according to the age of the animal and each animal is represented by two data points, one for each ear. The large crosses indicate the mean and 95% confidence intervals for each distribution. There is a clear distinction between the infant (blue, P33–P37) and adult points (green) with no overlap between the distributions. Juvenile animals (red, aged approx. P50 (P49–P51)) have intermediate DTFs that overlap with the adult distribution, indicating that spectral cues approach maturity three to four weeks after the onset of hearing in this species. Adapted from Campbell et al. (2008).

Accurate auditory localization relies on non-acoustic factors too. Because the coordinates of auditory space are centred on the head and ears, information must be provided by the vestibular and proprioceptive senses about the orientation and motion of these structures (Goossens & Van Opstal 1999; Vliegen et al. 2004). Moreover, a congruent representation of the external world has to be provided by the different senses, so that the objects registered by more than one modality can be reliably localized and identified. In the case of vision and hearing, this means that activation of a specific region of the retina corresponds to a particular combination of monaural and binaural localization cue values. Because most animals can move their eyes, that relationship is not fixed. Consequently, the neural processing and perception of auditory spatial information is also influenced by the direction of gaze (Zwiers et al. 2004; Bulkin & Groh 2006; Razavi et al. 2007).
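Why eye position matters can be seen from a deliberately simplified, one-dimensional sketch. It assumes that azimuths simply subtract, ignoring the offset of the eyes from the centre of the head and any rotation outside the horizontal plane; the function names are hypothetical and are not drawn from the cited studies.

```python
def head_to_eye_centred(target_azimuth_head_deg, eye_in_head_deg):
    """Convert a head-centred azimuth to an eye-centred (retinal) azimuth.

    Assumption: a purely horizontal, small-angle geometry in which the
    direction of gaze is subtracted from the head-centred target direction.
    """
    return target_azimuth_head_deg - eye_in_head_deg


def eye_to_head_centred(target_azimuth_eye_deg, eye_in_head_deg):
    """Inverse conversion: retinal azimuth back to head-centred azimuth."""
    return target_azimuth_eye_deg + eye_in_head_deg


if __name__ == "__main__":
    sound_azimuth = 20.0  # head-centred, as specified by the acoustic cues
    for gaze in (0.0, 15.0, -10.0):
        retinal = head_to_eye_centred(sound_azimuth, gaze)
        print(f"gaze {gaze:+5.1f} deg -> same source falls at {retinal:+5.1f} deg on the retina")
```

The same sound source, with unchanged acoustic cue values, corresponds to a different retinal location each time the eyes move, so a fixed pairing between cue values and retinal position cannot hold without a signal carrying eye position.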

3. Visual influences on the accuracy of auditory localization

Although sound sources can obviously be localized on the basis of auditory cues alone, localization accuracy improves if the target is also visible to the subject (Shelton & Searle 1980; Stein et al. 1989). This is an example of a more general phenomenon by which the central nervous system can combine inputs across the senses to enhance the detection, localization and discrimination of stimuli and speed up reactions to them. Cross-modal interactions also occur when conflicting information is provided by different senses. For instance, it is frequently the case when listening to someone's voice that we also see their lips moving, which, particularly in noisy situations, can improve speech intelligibility (Sumby & Pollack 1954). But when the visual and auditory signals no longer match, as occurs when viewing someone articulating one speech syllable while listening to another, listeners typically report hearing a third syllable that represents a combination of what was seen and heard (the ‘McGurk effect’; McGurk & MacDonald 1976).

Conflicting visual cues can also influence the perceived location of a sound source. Thus, the presentation of synchronous but spatially disparate visual and auditory targets tends to result in mislocalization of the auditory stimulus, which is perceived to originate from near the location of the visual stimulus (Bertelson & Radeau 1981). Visual capture of sound-source location forms the basis for the ventriloquist's illusion and explains why we readily associate sounds with their corresponding visual events on a television or cinema screen, rather than with the loudspeakers located to one side. This is usually thought to arise because, in contrast to the ambiguous and relatively imprecise cues that underlie auditory localization, the retina provides the brain with high-resolution and reliable information about the visual world. However, if visual stimuli are blurred so that they become harder to localize, the illusion can work in reverse, with sound capturing vision (Alais & Burr 2004). Along with other studies (Ernst & Banks 2002; Battaglia et al. 2003; Heron et al. 2004), this finding suggests that rather than vision having an inherent advantage in spatial processing, the weighting afforded to different sensory cues when they are integrated by the brain varies according to how reliable they are.
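The reliability-based weighting described above is commonly formalized as maximum-likelihood integration of independent Gaussian estimates, in which each cue is weighted by its inverse variance. The following sketch illustrates that rule under those assumptions; the numerical values are invented for illustration and are not data from the cited studies.

```python
import math


def integrate_cues(x_visual, sigma_visual, x_auditory, sigma_auditory):
    """Combine two location estimates by inverse-variance weighting.

    Assumes independent, unbiased Gaussian noise on each cue.
    Returns (combined_estimate, visual_weight, combined_sigma).
    """
    if sigma_visual <= 0 or sigma_auditory <= 0:
        raise ValueError("standard deviations must be positive")
    reliability_v = 1.0 / sigma_visual ** 2
    reliability_a = 1.0 / sigma_auditory ** 2
    weight_v = reliability_v / (reliability_v + reliability_a)
    weight_a = 1.0 - weight_v
    estimate = weight_v * x_visual + weight_a * x_auditory
    # The combined estimate is never less precise than the better single cue.
    combined_sigma = math.sqrt(1.0 / (reliability_v + reliability_a))
    return estimate, weight_v, combined_sigma


if __name__ == "__main__":
    # Illustrative values only: light at 0 deg, sound at 10 deg.
    sharp = integrate_cues(0.0, 1.0, 10.0, 8.0)     # well-focused visual target
    blurred = integrate_cues(0.0, 20.0, 10.0, 8.0)  # heavily blurred visual target
    print("sharp vision:   estimate %.2f deg, visual weight %.2f" % sharp[:2])
    print("blurred vision: estimate %.2f deg, visual weight %.2f" % blurred[:2])
```

With a precise visual cue the combined estimate lies close to the visual location, as in the ventriloquist's illusion, whereas with a sufficiently degraded visual cue the estimate is drawn towards the sound instead.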

Nevertheless, visual localization is normally more accurate than sound localization and therefore tends to dominate conflicts between the two modalities, thereby enabling, at least within certain limits, spatially misaligned cues to be perceived as if they originate from the same object or event. Repeated presentation of consistently misaligned cues results in a shift in the perception of auditory space that can last for tens of minutes once the visual stimulus is removed (figure 2). This is known as the ventriloquism after-effect and has been observed in both human (Radeau & Bertelson 1974; Recanzone 1998; Lewald 2002) and non-human (Woods & Recanzone 2004) primates. Short-term changes in auditory localization can also be induced in humans by compressing the central part of the visual field for 3 days with 0.5× lenses (Zwiers et al. 2003). These studies highlight the dynamic nature of auditory spatial processing in the mature brain, which allows the perceived location of sound sources to be modified in order to conform to changes in visual space.
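One simple way to quantify such an after-effect is to regress post-exposure localization judgments on pre-exposure judgments, so that a uniform shift in perceived auditory space appears as a non-zero intercept with a slope near one. The sketch below uses ordinary least squares on synthetic numbers generated purely for illustration; it does not reproduce the analysis or the data of any cited study.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


if __name__ == "__main__":
    # Synthetic, noise-free judgments (degrees azimuth); NOT experimental data.
    pre = [-20.0, -10.0, 0.0, 10.0, 20.0]
    post_shifted = [p + 4.0 for p in pre]  # hypothetical uniform 4 deg shift
    post_control = list(pre)               # hypothetical no-mismatch control
    print("shifted: slope %.2f, intercept %.2f deg" % fit_line(pre, post_shifted))
    print("control: slope %.2f, intercept %.2f deg" % fit_line(pre, post_control))
```

In this toy example the intercept recovers the imposed shift, and the control condition returns a line indistinguishable from the identity.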

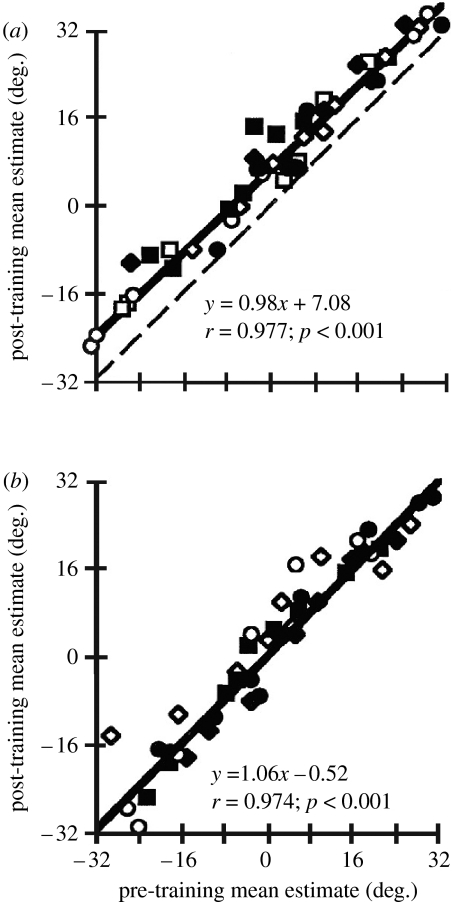

Figure 2.

The ventriloquism after-effect. Regression of mean auditory localization judgments taken before (pre-training, x-axis) and after (post-training, y-axis) exposing human subjects to spatially misaligned auditory and visual stimuli. (a) Data obtained after conditioning with an 8° mismatch between the stimuli. The dashed line indicates perfect correlation between the pre- and post-training estimates. In this case, the auditory localization estimates have been shifted in the direction of the previously present visual stimulus, so the regression line lies above the dashed line. (b) Data obtained after conditioning with a 0° mismatch between the stimuli. There is no difference between pre- and post-training estimates and the dashed line is hidden by the thicker solid regression line. Adapted and reproduced with permission from Recanzone (1998). Copyright (1998) National Academy of Sciences, USA. Open and filled squares, subject 1; open and filled diamonds, subject 2; open and filled circles, subject 3 (open symbols, 750 Hz; filled symbols, 3 kHz).

4. Visual–auditory interactions in the brain

Revealing where and how multisensory information is combined and integrated in the brain is critical to understanding the basis by which visual inputs influence auditory perception and behaviour. Until recently, multisensory convergence was thought to be the preserve of specific cortical and subcortical brain regions. It is now clear, however, that many cortical regions receive afferent inputs from more than one of the senses, including primary areas that were previously thought to be modality specific. This seems to be particularly the case in the auditory cortex, where sensitivity to visual stimulation has been demonstrated in humans (Calvert et al. 1999; Giard & Peronnet 1999; Molholm et al. 2002), non-human primates (Schroeder & Foxe 2002; Brosch et al. 2005; Ghazanfar et al. 2005; Kayser et al. 2007), ferrets (Bizley et al. 2007) and rats (Wallace et al. 2004a).

One likely function for these multisensory interactions has been revealed by Ghazanfar et al. (2005) who found that responses to vocalizations in monkey auditory cortex could be either enhanced or suppressed when the animals viewed the corresponding facial expressions, whereas this was less likely to be the case if the image of the face was replaced by a disc that was flashed on and off to mimic the mouth movements. It has been suggested that visual and somatosensory inputs can modulate the phase of oscillatory activity in the auditory cortex, potentially amplifying the response to related auditory signals (Schroeder et al. 2008). A facilitatory role for these inputs in sound localization has also been proposed (Schroeder & Foxe 2005), a possibility supported by the finding that visual inputs can increase the amount of spatial information conveyed by neurons in auditory cortex (Bizley & King 2008). As the cortex is necessary for normal sound localization (Recanzone & Sutter 2008), it seems likely that such interactions could underlie the visual capture of auditory space perception. Indeed, the observation that the ventriloquism after-effect does not seem to transfer across sound frequency (Recanzone 1998; Lewald 2002; Woods & Recanzone 2004, but see also Frissen et al. 2005 for a different result) implies that early, tonotopically organized regions are involved.

5. Visual guidance of auditory spatial processing during development

In addition to recalibrating auditory space whenever temporary spatial mismatches occur, vision plays an important role in guiding the maturation of the auditory spatial response properties of neurons in certain regions of the brain. This has been demonstrated most clearly in the superior colliculus (SC) in the midbrain, where visual, auditory and tactile inputs are organized into topographically aligned spatial maps (King 2004). This arrangement allows each of the sensory inputs associated with a particular event to be transformed into appropriate motor signals that control the direction of gaze. Where individual SC neurons receive converging multisensory inputs, the strongest responses can often be generated to combinations of stimuli that occur in close temporal and spatial proximity, which, in turn, appears to improve the accuracy of orienting responses (Stein & Stanford 2008). As with the perception of space, maintenance of intersensory map alignment in the SC requires the incorporation of eye-position information in order to allow for differences in the reference frames used to specify visual and auditory spatial signals (Jay & Sparks 1987; Hartline et al. 1995; Populin et al. 2004).

The dominant role played by vision in aligning the sensory maps in the SC has been demonstrated by altering the spatial relationship between auditory localization cues and directions in visual space. This has been achieved by surgically inducing a persistent change in eye position (King et al. 1988), by the use of prisms that laterally displace the visual field representation (Knudsen & Brainard 1991; figure 3), and, most recently, by maintaining young animals in the dark and periodically exposing them to temporally coincident but spatially incongruent visual and auditory stimuli (Wallace & Stein 2007). In each case, a corresponding shift in the neural representation of auditory space is produced, which, at least in prism-reared owls, involves a rewiring of connections within the midbrain (DeBello et al. 2001). In addition to these long-lasting effects on auditory spatial tuning, behavioural studies in owls have shown that prism experience induces equivalent changes in the accuracy of auditory head-orienting responses (Knudsen & Knudsen 1990; figure 3).

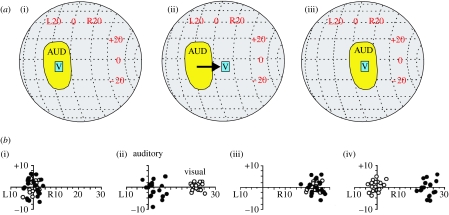

Figure 3.

Visual experience shapes the developing auditory localization pathway in the barn owl (Tyto alba). (a) Effects of prism rearing on the auditory spatial receptive fields of neurons in the optic tectum. (i) Visual (V) and auditory (AUD) receptive fields are normally in close correspondence. (ii) Placing prisms that displace the visual field to the right by 23° disrupts the alignment of these receptive fields. (iii) After young owls have worn the prisms for eight weeks, the auditory receptive field has shifted so that it becomes realigned with the visual receptive field. Adapted from Knudsen & Brainard (1991). (b) Adjustment of auditory orienting responses in a prism-reared owl. Head-orienting responses to visual (open circles) or auditory (filled circles) targets plotted with respect to the location of the stimulus. Owls normally make accurate head turns towards either stimulus. Prisms immediately shift the visual responses, but have no effect on the auditory responses. However, after 42 days of experience with the prisms, the auditory responses have shifted to match the optical displacement of the visual field, presumably as a consequence of the changes that take place in the optic tectum. When the prisms are removed, normal visual responses are restored, although it takes several weeks for the auditory orienting responses to recover ((i) before prisms, (ii) after 1 day with prisms, (iii) after 42 days with prisms and (iv) prisms removed). Adapted from Knudsen & Knudsen (1990).

Studies in human infants have shown that certain multisensory abilities emerge at different stages within the first year of life (Neil et al. 2006; Kushnerenko et al. 2008). However, the capacity to integrate different sensory cues in a statistically optimal fashion emerges much later, at approximately 8 years of age (Gori et al. 2008; Nardini et al. 2008). Before this, one or other sense seems to dominate, which is potentially useful if one sense is used during development to calibrate another, as in the influence of vision on auditory spatial processing.

6. Auditory spatial learning in adulthood

Early studies in barn owls showed that altered vision results in adaptive auditory plasticity during a sensitive period of development, but not in older animals (Knudsen & Knudsen 1990; Knudsen & Brainard 1991). This is consistent with other studies, including those in mammals, which have explored the role of experience in the development and maintenance of sound localization mechanisms (King et al. 2000). Nevertheless, more recent experiments in owls have shown that the capacity for prism experience to induce long-term modifications of both the auditory space map (Brainard & Knudsen 1998; Knudsen 1998; Bergan et al. 2005) and localization behaviour (Brainard & Knudsen 1998) can be extended to older animals under certain conditions.

It is also now clear that the mature mammalian brain is capable of relearning to localize sound in the presence of substantially altered auditory spatial cues. This has been demonstrated in humans by placing moulds into the external ears in order to alter the spectral cues corresponding to different directions in space (Hofman et al. 1998; Van Wanrooij & Van Opstal 2005). As expected, this manipulation immediately impaired localization in the vertical plane. However, response accuracy subsequently recovered over the course of a few weeks. No after-effect was observed following removal of the moulds, indicating that this recalibration in the neural processing of spectral cues did not interfere with the capacity of the brain to use the cue values previously experienced by the subjects. In a similar vein, Kacelnik et al. (2006) showed that altering binaural cues by reversible occlusion of one ear greatly reduces the accuracy with which adult ferrets can localize sounds in the horizontal plane. Again, performance rapidly improved over the next week or so, but only if the animals received auditory localization training after the earplug had been introduced. Indeed, the extent and rate of improvement were determined by the frequency of training (figure 4). Compared with the initial pattern of errors induced by the earplug, only a very small and transient after-effect was seen following its removal. This indicates that plasticity in this task is unlikely to be attributable to the animals learning a new association between the altered binaural cues and directions in space. Instead, it appears that the brain is capable of reweighting the different auditory cues according to how reliable or consistent they are, in a manner that resembles the optimal integration of multisensory cues in ventriloquism.

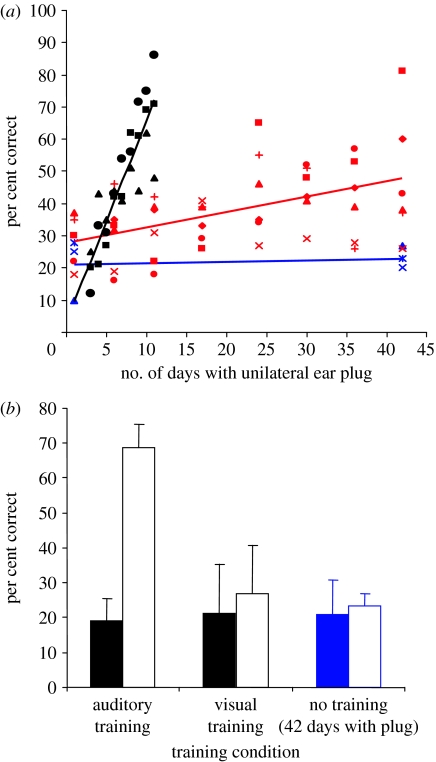

Figure 4.

Auditory localization adaptation is accelerated by modality-specific training. (a) Change in performance (averaged across all speaker locations) over time in three groups of ferrets with unilateral earplugs. Each symbol represents a different animal. No change was found in trained ferrets that received an earplug for six weeks, but were tested only at the start and end of this period (n=3, blue symbols and regression line). Two groups of animals received an equivalent amount of auditory training while the left ear was occluded. Although the earplug was in place for less time, a much faster rate of improvement was observed in the animals that received daily training (n=3, black symbols and regression line) compared with those that were trained every 6 days (n=6, red symbols and regression line). (b) Mean (±s.d.) auditory localization scores at the start (filled bars) and end (open bars) of the plugging period for the animals that received daily auditory training for 10 days (black symbols in (a)), another group of auditory-trained ferrets that performed a visual localization task every day while the left ear was occluded for the same period (n=3), and the three ferrets that received no training during a six-week period of monaural occlusion (blue symbols in (a)). The only significant improvement in sound localization was found for the auditory-trained animals. Adapted from Kacelnik et al. (2006).

Given the evidence that vision can recalibrate auditory localization both during infancy and in later life, it seems reasonable to assume that visual cues provide a possible source of sensory feedback about the accuracy of acoustically guided behaviour, which might therefore guide the plasticity observed when localization cues are altered. Indeed, smaller adaptive changes occur in the tuning of midbrain neurons in owls raised with one ear occluded if the birds are also deprived of vision (Knudsen & Mogdans 1992). Moreover, normally sighted birds that have adapted to monaural occlusion fail to recover normal localization behaviour following earplug removal if vision is prevented at the same time (Knudsen & Knudsen 1985).

However, visual feedback is neither sufficient nor required for recalibrating auditory space in response to altered binaural cues in adult mammals. Kacelnik et al. (2006) found that ferrets with unilateral earplugs do not recover auditory localization accuracy if they are trained on a visual, rather than an auditory, localization task (figure 4b), highlighting the requirement for modality-specific training, while normal plasticity was seen in response to auditory training in animals that had been visually deprived from infancy (figure 5). It has been suggested that these results can be accounted for by unsupervised sensorimotor learning, in which the dynamic acoustic inputs associated with an animal's own movements help build up a stable representation of auditory space (Aytekin et al. 2008). Although vision is not essential for the relearning of accurate sound localization by monaurally occluded ferrets, it is certainly possible that training with congruent multisensory cues might result in faster learning than that with auditory cues alone, as recently demonstrated in humans for a motion detection task (Kim et al. 2008).

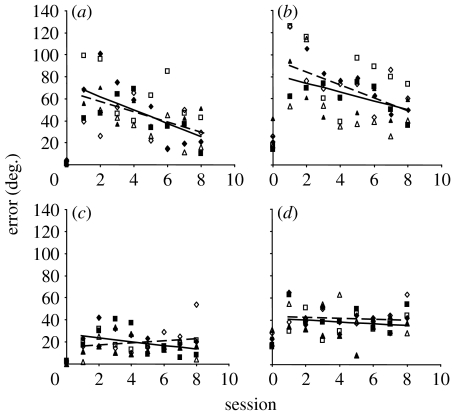

Figure 5.

Relearning to localize sound in the presence of a unilateral conductive hearing loss does not require visual feedback. Data from three normally sighted (open symbols) and three visually deprived (filled symbols) ferrets showing the magnitude of the unsigned errors averaged across speaker location in the left ((a,b) side of plugged ear) and right ((c,d) side of open (unplugged) ear) hemifields. Each symbol represents a different animal. Data are shown for the session prior to the insertion of the earplug (session 0) and for eight sessions, carried out at 6-day intervals, with the left ear occluded (sessions 1–8). (a,c) 1000 ms, (b,d) 40 ms. Adapted from Kacelnik et al. (2006).

7. Visual–auditory interactions following sensory deprivation: implications for cochlear implantation

There is both behavioural and physiological evidence that early loss of vision can interfere with the maturation of certain aspects of auditory spatial processing (Withington-Wray et al. 1990; King & Carlile 1993; Zwiers et al. 2001; Wallace et al. 2004b). Nevertheless, there is no doubt that accurate sound localization can develop in the absence of vision (figure 5) and, in some cases, even surpass the performance of individuals with normal sight (King & Parsons 1999; Röder et al. 1999; Gougoux et al. 2005; Lewald 2007). Enhanced auditory capacities could result from changes within the auditory pathway (Korte & Rauschecker 1993; Elbert et al. 2002), perhaps reflecting the greater attention paid to the auditory modality. However, the recruitment of visual cortex also seems to be involved in the superior auditory localization performance of blind individuals (Weeks et al. 2000; Gougoux et al. 2005), which is presumably made possible either by establishing novel functional connections from auditory to visual brain areas or by unmasking connections that are now known to exist normally.

Although the emergence of heightened abilities in the use of the remaining senses in the blind or deaf is clearly advantageous, this has important implications for the capacity of the brain to process inputs from the missing sense, should these be restored, and to coordinate those inputs with the intact sensory modalities. Thus, while cross-modal reorganization of auditory cortex appears to facilitate the perception of visual speech in the deaf, it may limit the capacity of these individuals to make use of restored auditory inputs provided by cochlear implants (Doucet et al. 2006; Lee et al. 2007). Another important consideration is the effect of early sensory deprivation on the subsequent capacity to bind visual and auditory signals. Early loss of vision has been reported to impair the ability of the central nervous system to combine and integrate multisensory cues (Wallace et al. 2004b; Putzar et al. 2007). Synthesis of auditory and visual information can be achieved, however, if sensory function is restored early enough. Thus, congenitally deaf children fitted with cochlear implants within the first two and a half years of life exhibit the McGurk effect, whereas, after this age, auditory and visual speech cues can no longer be fused (Schorr et al. 2005). Interestingly, patients with cochlear implants received following postlingual deafness—who presumably benefitted from multisensory experience early in life—are better than listeners with normal hearing at fusing visual and auditory signals, thereby improving speech intelligibility in situations where both sets of cues are present (Rouger et al. 2007).

While cochlear implantation has enabled many profoundly deaf patients to recover substantial auditory function, including an ability to converse by telephone, they usually have difficulty in localizing sounds and perceiving speech in noisy conditions. This is because, in the vast majority of cases, only one ear is implanted. However, if hearing is restored bilaterally by implanting both ears, these functions can be recovered or, in the case of the congenitally deaf, established for the first time (Litovsky et al. 2006; Long et al. 2006). On the basis of the highly dynamic way in which auditory spatial information is processed in the brain, it seems certain that the capacity of patients to interpret the distorted signals provided by bilateral cochlear implants will be enhanced by experience and by training strategies that encourage their use in localization tasks. Moreover, provided that they can integrate multisensory inputs, training with congruent auditory and visual stimuli should be particularly useful for promoting adaptive plasticity in the auditory system of these individuals.

Acknowledgements

The author is supported by a Wellcome Trust Principal Research Fellowship.

Footnotes

One contribution of 12 to a Theme Issue ‘Sensory learning: from neural mechanisms to rehabilitation’.

References

- Alais D., Burr D. The ventriloquist effect results from near-optimal bimodal integration. Curr. Biol. 2004;14:257–262. doi: 10.1016/j.cub.2004.01.029. doi:10.1016/j.cub.2004.01.029 [DOI] [PubMed] [Google Scholar]

- Aytekin M., Moss C.F., Simon J.Z. A sensorimotor approach to sound localization. Neural. Comput. 2008;20:603–635. doi: 10.1162/neco.2007.12-05-094. doi:10.1162/neco.2007.12-05-094 [DOI] [PubMed] [Google Scholar]

- Battaglia P.W., Jacobs R.A., Aslin R.N. Bayesian integration of visual and auditory signals for spatial localization. J. Opt. Soc. Am. A Opt. Image. Sci. Vis. 2003;20:1391–1397. doi: 10.1364/josaa.20.001391. doi:10.1364/JOSAA.20.001391 [DOI] [PubMed] [Google Scholar]

- Bergan J.F., Ro P., Ro D., Knudsen E.I. Hunting increases adaptive auditory map plasticity in adult barn owls. J. Neurosci. 2005;25:9816–9820. doi: 10.1523/JNEUROSCI.2533-05.2005. doi:10.1523/JNEUROSCI.2533-05.2005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bertelson P., Radeau M. Cross-modal bias and perceptual fusion with auditory-visual spatial discordance. Percept. Psychophys. 1981;29:578–584. doi:10.3758/bf03207374

- Bizley J.K., King A.J. Visual-auditory spatial processing in auditory cortical neurons. Brain Res. 2008;1242:24–36. doi:10.1016/j.brainres.2008.02.087

- Bizley J.K., Nodal F.R., Bajo V.M., Nelken I., King A.J. Physiological and anatomical evidence for multisensory interactions in auditory cortex. Cereb. Cortex. 2007;17:2172–2189. doi:10.1093/cercor/bhl128

- Blauert J. Spatial hearing: the psychophysics of human sound localization. Cambridge, MA: MIT Press; 1997.

- Brainard M.S., Knudsen E.I. Sensitive periods for visual calibration of the auditory space map in the barn owl optic tectum. J. Neurosci. 1998;18:3929–3942. doi:10.1523/JNEUROSCI.18-10-03929.1998

- Brosch M., Selezneva E., Scheich H. Nonauditory events of a behavioral procedure activate auditory cortex of highly trained monkeys. J. Neurosci. 2005;25:6797–6806. doi:10.1523/JNEUROSCI.1571-05.2005

- Bulkin D.A., Groh J.M. Seeing sounds: visual and auditory interactions in the brain. Curr. Opin. Neurobiol. 2006;16:415–419. doi:10.1016/j.conb.2006.06.008

- Butler R.A. The bandwidth effect on monaural and binaural localization. Hearing Res. 1986;21:67–73. doi:10.1016/0378-5955(86)90047-X

- Calvert G.A., Brammer M.J., Bullmore E.T., Campbell R., Iversen S.D., David A.S. Response amplification in sensory-specific cortices during crossmodal binding. Neuroreport. 1999;10:2619–2623. doi:10.1097/00001756-199908200-00033

- Campbell R.A.A., King A.J., Nodal F.R., Schnupp J.W.H., Carlile S., Doubell T.P. Virtual adult ears reveal the roles of acoustical factors and experience in auditory space map development. J. Neurosci. 2008;28:11 557–11 570. doi:10.1523/JNEUROSCI.0545-08.2008

- Clifton R.K., Gwiazda J., Bauer J.A., Clarkson M.G., Held R.M. Growth in head size during infancy: implications for sound localization. Dev. Psychol. 1988;24:477–483. doi:10.1037/0012-1649.24.4.477

- DeBello W.M., Feldman D.E., Knudsen E.I. Adaptive axonal remodeling in the midbrain auditory space map. J. Neurosci. 2001;21:3161–3174. doi:10.1523/JNEUROSCI.21-09-03161.2001

- Doucet M.E., Bergeron F., Lassonde M., Ferron P., Lepore F. Cross-modal reorganization and speech perception in cochlear implant users. Brain. 2006;129:3376–3383. doi:10.1093/brain/awl264

- Elbert T., Sterr A., Rockstroh B., Pantev C., Müller M.M., Taub E. Expansion of the tonotopic area in the auditory cortex of the blind. J. Neurosci. 2002;22:9941–9944. doi:10.1523/JNEUROSCI.22-22-09941.2002

- Ernst M.O., Banks M.S. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi:10.1038/415429a

- Frissen I., Vroomen J., de Gelder B., Bertelson P. The after effects of ventriloquism: generalization across sound-frequencies. Acta Psychol. (Amst) 2005;118:93–100. doi:10.1016/j.actpsy.2004.10.004

- Ghazanfar A.A., Maier J.X., Hoffman K.L., Logothetis N.K. Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. J. Neurosci. 2005;25:5004–5012. doi:10.1523/JNEUROSCI.0799-05.2005

- Giard M.H., Peronnet F. Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J. Cogn. Neurosci. 1999;11:473–490. doi:10.1162/089892999563544

- Gori M., Del Viva M., Sandini G., Burr D.C. Young children do not integrate visual and haptic form information. Curr. Biol. 2008;18:694–698. doi:10.1016/j.cub.2008.04.036

- Goossens H.H., Van Opstal A.J. Influence of head position on the spatial representation of acoustic targets. J. Neurophysiol. 1999;81:2720–2736. doi:10.1152/jn.1999.81.6.2720

- Gougoux F., Zatorre R.J., Lassonde M., Voss P., Lepore F. A functional neuroimaging study of sound localization: visual cortex activity predicts performance in early-blind individuals. PLoS Biol. 2005;3:e27. doi:10.1371/journal.pbio.0030027

- Hartline P.H., Vimal R.L.P., King A.J., Kurylo D.D., Northmore D.P.M. Effects of eye position on auditory target localization and neural representation of space in the superior colliculus of cat. Exp. Brain Res. 1995;104:402–408. doi:10.1007/BF00231975

- Heron J., Whitaker D., McGraw P.V. Sensory uncertainty governs the extent of audio-visual interaction. Vision Res. 2004;44:2875–2884. doi:10.1016/j.visres.2004.07.001

- Hofman P.M., Van Riswick J.G., Van Opstal A.J. Relearning sound localization with new ears. Nat. Neurosci. 1998;1:417–421. doi:10.1038/1633

- Jay M.F., Sparks D.L. Sensorimotor integration in the primate superior colliculus. II. Coordinates of auditory signals. J. Neurophysiol. 1987;57:35–55. doi:10.1152/jn.1987.57.1.35

- Kacelnik O., Nodal F.R., Parsons C.H., King A.J. Training-induced plasticity of auditory localization in adult mammals. PLoS Biol. 2006;4:e71. doi:10.1371/journal.pbio.0040071

- Kayser C., Petkov C.I., Augath M., Logothetis N.K. Functional imaging reveals visual modulation of specific fields in auditory cortex. J. Neurosci. 2007;27:1824–1835. doi:10.1523/JNEUROSCI.4737-06.2007

- Kim R.S., Seitz A.R., Shams L. Benefits of stimulus congruency for multisensory facilitation of visual learning. PLoS ONE. 2008;3:e1532. doi:10.1371/journal.pone.0001532

- King A.J. The superior colliculus. Curr. Biol. 2004;14:R335–R338. doi:10.1016/j.cub.2004.04.018

- King A.J., Carlile S. Changes induced in the representation of auditory space in the superior colliculus by rearing ferrets with binocular eyelid suture. Exp. Brain Res. 1993;94:444–455. doi:10.1007/BF00230202

- King A.J., Parsons C.H. Improved auditory spatial acuity in visually deprived ferrets. Eur. J. Neurosci. 1999;11:3945–3956. doi:10.1046/j.1460-9568.1999.00821.x

- King A.J., Hutchings M.E., Moore D.R., Blakemore C. Developmental plasticity in the visual and auditory representations in the mammalian superior colliculus. Nature. 1988;332:73–76. doi:10.1038/332073a0

- King A.J., Parsons C.H., Moore D.R. Plasticity in the neural coding of auditory space in the mammalian brain. Proc. Natl Acad. Sci. USA. 2000;97:11 821–11 828. doi:10.1073/pnas.97.22.11821

- King A.J., Schnupp J.W.H., Doubell T.P. The shape of ears to come: dynamic coding of auditory space. Trends Cogn. Sci. 2001;5:261–270. doi:10.1016/S1364-6613(00)01660-0

- Knudsen E.I. Capacity for plasticity in the adult owl auditory system expanded by juvenile experience. Science. 1998;279:1531–1533. doi:10.1126/science.279.5356.1531

- Knudsen E.I., Brainard M.S. Visual instruction of the neural map of auditory space in the developing optic tectum. Science. 1991;253:85–87. doi:10.1126/science.2063209

- Knudsen E.I., Knudsen P.F. Vision guides the adjustment of auditory localization in young barn owls. Science. 1985;230:545–548. doi:10.1126/science.4048948

- Knudsen E.I., Knudsen P.F. Sensitive and critical periods for visual calibration of sound localization by barn owls. J. Neurosci. 1990;10:222–232. doi:10.1523/JNEUROSCI.10-01-00222.1990

- Knudsen E.I., Mogdans J. Vision-independent adjustment of unit tuning to sound localization cues in response to monaural occlusion in developing owl optic tectum. J. Neurosci. 1992;12:3485–3493. doi:10.1523/JNEUROSCI.12-09-03485.1992

- Korte M., Rauschecker J.P. Auditory spatial tuning of cortical neurons is sharpened in cats with early blindness. J. Neurophysiol. 1993;70:1717–1721. doi:10.1152/jn.1993.70.4.1717

- Kushnerenko E., Teinonen T., Volein A., Csibra G. Electrophysiological evidence of illusory audiovisual speech percept in human infants. Proc. Natl Acad. Sci. USA. 2008;105:11 442–11 445. doi:10.1073/pnas.0804275105

- Lee H.J., Truy E., Mamou G., Sappey-Marinier D., Giraud A.L. Visual speech circuits in profound acquired deafness: a possible role for latent multimodal connectivity. Brain. 2007;130:2929–2941. doi:10.1093/brain/awm230

- Lewald J. Rapid adaptation to auditory–visual spatial disparity. Learn. Mem. 2002;9:268–278. doi:10.1101/lm.51402

- Lewald J. More accurate sound localization induced by short-term light deprivation. Neuropsychologia. 2007;45:1215–1222. doi:10.1016/j.neuropsychologia.2006.10.006

- Litovsky R.Y., Johnstone P.M., Godar S.P. Benefits of bilateral cochlear implants and/or hearing aids in children. Int. J. Audiol. 2006;45:S78–S91. doi:10.1080/14992020600782956

- Long C.J., Carlyon R.P., Litovsky R.Y., Downs D.H. Binaural unmasking with bilateral cochlear implants. J. Assoc. Res. Otolaryngol. 2006;7:352–360. doi:10.1007/s10162-006-0049-4

- McGurk H., MacDonald J. Hearing lips and seeing voices. Nature. 1976;264:746–748. doi:10.1038/264746a0

- Middlebrooks J.C. Virtual localization improved by scaling nonindividualized external-ear transfer functions in frequency. J. Acoust. Soc. Am. 1999;106:1493–1510. doi:10.1121/1.427147

- Molholm S., Ritter W., Murray M.M., Javitt D.C., Schroeder C.E., Foxe J.J. Multisensory auditory–visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain Res. Cogn. Brain Res. 2002;14:115–128. doi:10.1016/S0926-6410(02)00066-6

- Moore D.R., Irvine D.R.F. A developmental study of the sound pressure transformation by the head of the cat. Acta Otolaryngol. 1979;87:434–440. doi:10.3109/00016487909126447

- Nardini M., Jones P., Bedford R., Braddick O. Development of cue integration in human navigation. Curr. Biol. 2008;18:689–693. doi:10.1016/j.cub.2008.04.021

- Neil P.A., Chee-Ruiter C., Scheier C., Lewkowicz D.J., Shimojo S. Development of multisensory spatial integration and perception in humans. Dev. Sci. 2006;9:454–464. doi:10.1111/j.1467-7687.2006.00512.x

- Populin L.C., Tollin D.J., Yin T.C.T. Effect of eye position on saccades and neuronal responses to acoustic stimuli in the superior colliculus of the behaving cat. J. Neurophysiol. 2004;92:2151–2167. doi:10.1152/jn.00453.2004

- Putzar L., Goerendt I., Lange K., Rösler F., Röder B. Early visual deprivation impairs multisensory interactions in humans. Nat. Neurosci. 2007;10:1243–1245. doi:10.1038/nn1978

- Radeau M., Bertelson P. The after-effects of ventriloquism. Q. J. Exp. Psychol. 1974;26:63–71. doi:10.1080/14640747408400388

- Razavi B., O'Neill W.E., Paige G.D. Auditory spatial perception dynamically realigns with changing eye position. J. Neurosci. 2007;27:10 249–10 258. doi:10.1523/JNEUROSCI.0938-07.2007

- Recanzone G.H. Rapidly induced auditory plasticity: the ventriloquism aftereffect. Proc. Natl Acad. Sci. USA. 1998;95:869–875. doi:10.1073/pnas.95.3.869

- Recanzone G.H., Sutter M.L. The biological basis of audition. Annu. Rev. Psychol. 2008;59:119–142. doi:10.1146/annurev.psych.59.103006.093544

- Röder B., Teder-Sälejärvi W., Sterr A., Rösler F., Hillyard S.A., Neville H.J. Improved auditory spatial tuning in blind humans. Nature. 1999;400:162–166. doi:10.1038/22106

- Rouger J., Lagleyre S., Fraysse B., Deneve S., Deguine O., Barone P. Evidence that cochlear-implanted deaf patients are better multisensory integrators. Proc. Natl Acad. Sci. USA. 2007;104:7295–7300. doi:10.1073/pnas.0609419104

- Schnupp J.W.H., Booth J., King A.J. Modeling individual differences in ferret external ear transfer functions. J. Acoust. Soc. Am. 2003;113:2021–2030. doi:10.1121/1.1547460

- Schorr E.A., Fox N.A., Van Wassenhove V., Knudsen E.I. Auditory-visual fusion in speech perception in children with cochlear implants. Proc. Natl Acad. Sci. USA. 2005;102:18 748–18 750. doi:10.1073/pnas.0508862102

- Schroeder C.E., Foxe J.J. The timing and laminar profile of converging inputs to multisensory areas of the macaque neocortex. Brain Res. Cogn. Brain Res. 2002;14:187–198. doi:10.1016/S0926-6410(02)00073-3

- Schroeder C.E., Foxe J. Multisensory contributions to low-level, ‘unisensory’ processing. Curr. Opin. Neurobiol. 2005;15:454–458. doi:10.1016/j.conb.2005.06.008

- Schroeder C.E., Lakatos P., Kajikawa Y., Partan S., Puce A. Neuronal oscillations and visual amplification of speech. Trends Cogn. Sci. 2008;12:106–113. doi:10.1016/j.tics.2008.01.002

- Shelton B.R., Searle C.L. The influence of vision on the absolute identification of sound-source position. Percept. Psychophys. 1980;28:589–596. doi:10.3758/bf03198830

- Slattery W.H., Middlebrooks J.C. Monaural sound localization: acute versus chronic unilateral impairment. Hearing Res. 1994;75:38–46. doi:10.1016/0378-5955(94)90053-1

- Stein B.E., Stanford T.R. Multisensory integration: current issues from the perspective of the single neuron. Nat. Rev. Neurosci. 2008;9:255–266. doi:10.1038/nrn2331

- Stein B.E., Meredith M.A., Huneycutt W.S., McDade L. Behavioral indices of multisensory integration: orientation to visual cues is affected by auditory stimuli. J. Cogn. Neurosci. 1989;1:12–24. doi:10.1162/jocn.1989.1.1.12

- Su T.I., Recanzone G.H. Differential effect of near-threshold stimulus intensities on sound localization performance in azimuth and elevation in normal human subjects. J. Assoc. Res. Otolaryngol. 2001;2:246–256. doi:10.1007/s101620010073

- Sumby W.H., Pollack I. Visual contributions to speech intelligibility in noise. J. Acoust. Soc. Am. 1954;26:212–215. doi:10.1121/1.1907309

- Van Wanrooij M.M., Van Opstal A.J. Contribution of head shadow and pinna cues to chronic monaural sound localization. J. Neurosci. 2004;24:4163–4171. doi:10.1523/JNEUROSCI.0048-04.2004

- Van Wanrooij M.M., Van Opstal A.J. Relearning sound localization with a new ear. J. Neurosci. 2005;25:5413–5424. doi:10.1523/JNEUROSCI.0850-05.2005

- Vliegen J., Van Grootel T.J., Van Opstal A.J. Dynamic sound localization during rapid eye–head gaze shifts. J. Neurosci. 2004;24:9291–9302. doi:10.1523/JNEUROSCI.2671-04.2004

- Wallace M.T., Stein B.E. Early experience determines how the senses will interact. J. Neurophysiol. 2007;97:921–926. doi:10.1152/jn.00497.2006

- Wallace M.T., Ramachandran R., Stein B.E. A revised view of sensory cortical parcellation. Proc. Natl Acad. Sci. USA. 2004;101:2167–2172. doi:10.1073/pnas.0305697101

- Wallace M.T., Perrault T.J., Jr, Hairston W.D., Stein B.E. Visual experience is necessary for the development of multisensory integration. J. Neurosci. 2004;24:9580–9584. doi:10.1523/JNEUROSCI.2535-04.2004

- Weeks R., Horwitz B., Aziz-Sultan A., Tian B., Wessinger C.M., Cohen L.G., Hallett M., Rauschecker J.P. A positron emission tomographic study of auditory localization in the congenitally blind. J. Neurosci. 2000;20:2664–2672. doi:10.1523/JNEUROSCI.20-07-02664.2000

- Wenzel E.M., Arruda M., Kistler D.J., Wightman F.L. Localization using nonindividualized head-related transfer functions. J. Acoust. Soc. Am. 1993;94:111–123. doi:10.1121/1.407089

- Wightman F.L., Kistler D.J. Monaural sound localization revisited. J. Acoust. Soc. Am. 1997;101:1050–1063. doi:10.1121/1.418029

- Withington-Wray D.J., Binns K.E., Keating M.J. The maturation of the superior collicular map of auditory space in the guinea pig is disrupted by developmental visual deprivation. Eur. J. Neurosci. 1990;2:682–692. doi:10.1111/j.1460-9568.1990.tb00458.x

- Woods T.M., Recanzone G.H. Visually induced plasticity of auditory spatial perception in macaques. Curr. Biol. 2004;14:1559–1564. doi:10.1016/j.cub.2004.08.059

- Zwiers M.P., Van Opstal A.J., Cruysberg J.R. A spatial hearing deficit in early-blind humans. J. Neurosci. 2001;21:1–5. doi:10.1523/JNEUROSCI.21-09-j0002.2001

- Zwiers M.P., Van Opstal A.J., Paige G.D. Plasticity in human sound localization induced by compressed spatial vision. Nat. Neurosci. 2003;6:175–181. doi:10.1038/nn999

- Zwiers M.P., Versnel H., Van Opstal A.J. Involvement of monkey inferior colliculus in spatial hearing. J. Neurosci. 2004;24:4145–4156. doi:10.1523/JNEUROSCI.0199-04.2004