Abstract

One of the most critical problems we face in the study of biological systems is building accurate statistical descriptions of them. This problem has been particularly challenging because biological systems typically contain large numbers of interacting elements, which precludes the use of standard brute force approaches. Recently, though, several groups have reported that there may be an alternate strategy. The reports show that reliable statistical models can be built without knowledge of all the interactions in a system; instead, pairwise interactions can suffice. These findings, however, are based on the analysis of small subsystems. Here, we ask whether the observations will generalize to systems of realistic size, that is, whether pairwise models will provide reliable descriptions of true biological systems. Our results show that, in most cases, they will not. The reason is that there is a crossover in the predictive power of pairwise models: If the size of the subsystem is below the crossover point, then the results have no predictive power for large systems. If the size is above the crossover point, then the results may have predictive power. This work thus provides a general framework for determining the extent to which pairwise models can be used to predict the behavior of large biological systems. Applied to neural data, the size of most systems studied so far is below the crossover point.

Author Summary

Biological systems are exceedingly complicated: They consist of a large number of elements, those elements interact in nonlinear and highly unpredictable ways, and collective interactions typically play a critical role. It would seem surprising, then, that one could build a quantitative description of biological systems based only on knowledge of how pairs of elements interact. Yet, that is what a number of studies have found. Those studies, however, focused on relatively small systems. Here, we ask the question: Do their conclusions extend to large systems? We show that the answer depends on the size of the system relative to a crossover point: Below the crossover point the results on the small system have no predictive power for large systems; above the crossover point the results on the small system may have predictive power. Moreover, the crossover point can be computed analytically. This work thus provides a general framework for determining the extent to which pairwise models can be used to predict the behavior of large biological systems. It also provides a useful heuristic for designing experiments: If one is interested in understanding truly large systems via pairwise interactions, then make sure that the system one studies is above the crossover point.

Introduction

Many fundamental questions in biology are naturally treated in a probabilistic setting. For instance, deciphering the neural code requires knowledge of the probability of observing patterns of activity in response to stimuli [1]; determining which features of a protein are important for correct folding requires knowledge of the probability that a particular sequence of amino acids folds naturally [2],[3]; and determining the patterns of foraging of animals and their social and individual behavior requires knowledge of the distribution of food and species over both space and time [4]–[6].

Constructing these probability distributions is, however, hard. There are several reasons for this: i) biological systems are composed of large numbers of elements, and so can exhibit a huge number of configurations—in fact, an exponentially large number, ii) the elements typically interact with each other, making it impossible to view the system as a collection of independent entities, and iii) because of technological considerations, the descriptions of biological systems have to be built from very little data. For example, with current technology in neuroscience, we can record simultaneously from only about 100 neurons out of approximately 100 billion in the human brain. So, not only are we faced with the problem of estimating probability distributions in high dimensional spaces, we must do this based on a small fraction of the neurons in the network.

Despite these apparent difficulties, recent work has suggested that the situation may be less bleak than it seems, and that an accurate statistical description of systems can be achieved without having to examine all possible configurations [2], [3], [7]–[11]. One merely has to measure the probability distribution over pairs of elements and use those to build the full distribution. These “pairwise models” potentially offer a fundamental simplification, as the number of pairs is quadratic in the number of elements, not exponential. However, support for the efficacy of pairwise models has, necessarily, come from relatively small subsystems—small enough that the true probability distribution could be measured experimentally [7]–[9],[11]. While these studies have provided a key first step, a critical question remains: will the results from the analysis of these small subsystems extrapolate to large ones? That is, if a pairwise model predicts the probability distribution for a subset of the elements in a system, will it also predict the probability distribution for the whole system? Here we find that, for a biologically relevant class of systems, this question can be answered quantitatively and, importantly, generically—independent of many of the details of the biological system under consideration. And the answer is, generally, “no.” In this paper, we explain, both analytically and with simulations, why this is the case.

Results

The extrapolation problem

To gain intuition into the extrapolation problem, let us consider a specific example: neuronal spike trains. Fig. 1A shows a typical spike train for a small population of neurons. Although the raw spike times provide a complete description, they are not a useful representation, as they are too high-dimensional. Therefore, we divide time into bins and re-represent the spike train as 0s and 1s: 0 if there is no spike in a bin; 1 otherwise (Fig. 1B) [7]–[9],[11]. For now we assume that the bins are independent (an assumption whose validity we discuss below, and in more detail in the section “Is there anything wrong with using small time bins?”). The problem, then, is to find $p_{\mathrm{true}}(\mathbf{r}) \equiv p_{\mathrm{true}}(r_1, r_2, \ldots, r_N)$, where $r_i$ is a binary variable indicating no spike ($r_i = 0$) or one or more spikes ($r_i = 1$) on neuron $i$. Since this, too, is a high dimensional problem (though less so than the original spike time representation), suppose that we instead construct a pairwise approximation to $p_{\mathrm{true}}$, which we denote $p_{\mathrm{pair}}$, for a population of size $N$. (The pairwise model derives its name from the fact that it has the same mean and pairwise correlations as the true model; see Eq. (15).) Our question, then, is: if $p_{\mathrm{pair}}$ is close to $p_{\mathrm{true}}$ for small $N$, what can we say about how close the two distributions are for large $N$?

Figure 1. Transforming spike trains to spike count.

(A) Spike rasters. Tick marks indicate spike times; different rows correspond to different neurons. The horizontal dashed lines are the bin boundaries. (B) Spike count in each bin. In this example the bins are small enough that there is at most one spike per bin, but this is not necessary—one could use bigger bins and have larger spike counts.
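The binning step described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: the function name `binarize_spike_trains`, the spike times, and the 20 ms bin are all hypothetical choices made for the example.

```python
import numpy as np

def binarize_spike_trains(spike_times, t_start, t_stop, bin_size):
    """Return an (n_neurons, n_bins) array of 0s and 1s.

    spike_times: list of 1-D arrays of spike times (seconds), one per neuron.
    An entry is 1 if the neuron fired one or more spikes in that bin.
    """
    n_bins = int(round((t_stop - t_start) / bin_size))
    edges = t_start + bin_size * np.arange(n_bins + 1)
    words = np.zeros((len(spike_times), n_bins), dtype=np.uint8)
    for i, times in enumerate(spike_times):
        counts, _ = np.histogram(times, bins=edges)
        words[i] = counts > 0          # collapse spike counts to 0/1
    return words

# Hypothetical spike times (seconds) for three neurons.
spikes = [np.array([0.012, 0.051, 0.053]),
          np.array([0.033]),
          np.array([])]
r = binarize_spike_trains(spikes, t_start=0.0, t_stop=0.08, bin_size=0.02)
print(r)   # each column is one binary population word
```

Each column of `r` is one sample of the binary vector whose distribution the models below try to capture. Note that the two spikes of the first neuron that fall in the same bin collapse to a single 1.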

To answer this question quantitatively, we need a measure of distance. The measure we use, denoted $\Delta_N$, is defined in Eq. (3) below, but all we need to know about it for now is that if $\Delta_N = 0$ then $p_{\mathrm{pair}} = p_{\mathrm{true}}$, and if $\Delta_N$ is near one then $p_{\mathrm{pair}}$ is far from $p_{\mathrm{true}}$. In terms of $\Delta_N$, our main results are as follows. First, for small $N$, in what we call the perturbative regime, $\Delta_N$ is proportional to $N-2$. In other words, as the population size increases, the pairwise model becomes a worse and worse approximation to the true distribution. Second, this behavior is entirely generic: for small $N$, $\Delta_N$ increases linearly, no matter what the true distribution is.

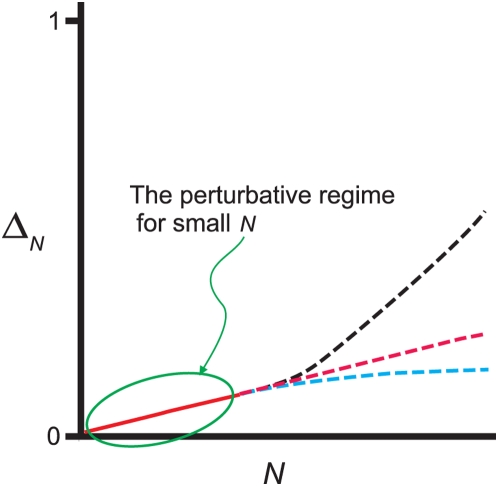

This is illustrated schematically in Fig. 2, which shows the generic behavior of $\Delta_N$. The solid red part of the curve is the perturbative regime, where $\Delta_N$ is a linearly increasing function of $N$; the dashed curves show possible behavior beyond the perturbative regime.

Figure 2. Cartoon illustrating the dependence of $\Delta_N$ on $N$.

For small $N$ there is always a perturbative regime in which $\Delta_N$ increases linearly with $N$ (solid red line). When $N$ becomes large, $\Delta_N$ may continue increasing with $N$ (red and black dashed lines) or it may plateau (cyan dashed line), depending on $p_{\mathrm{true}}$. The observation that $\Delta_N$ increases linearly with $N$ does not, therefore, provide much, if any, information about the large $N$ behavior.

These results have an important corollary: if one does an experiment and finds that $\Delta_N$ is increasing linearly with $N$, then one has no information at all about the true distribution. The flip side of this is more encouraging: if one can measure the true distribution for sufficiently large $N$ that $\Delta_N$ saturates, as for the dashed cyan line in Fig. 2, then there is a chance that extrapolation to large $N$ is meaningful. The implication for the interpretation of experiments is, therefore, that one can gain information about large $N$ behavior only if one can analyze data past the perturbative regime.

Under what conditions is a subsystem in the perturbative regime? The answer turns out to be simple: the size of the system, $N$, times the average probability of observing a spike in a bin, must be small compared to 1. For example, if the average probability is 1/100, then a system will be in the perturbative regime if the number of neurons is small compared to 100. This last observation would seem to be good news: if we divide the spike trains into sufficiently small time bins and ignore temporal correlations, then we can model the data very well with a pairwise distribution. The problem with this, though, is that temporal correlations can be ignored only when time bins are large compared to the autocorrelation time. This leads to a kind of catch-22: pairwise models are guaranteed to work well (in the sense that they describe spike trains in which temporal correlations are ignored) if one uses small time bins, but small time bins are the one regime where ignoring temporal correlations is not a valid approximation.
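To get a feel for the condition just described, the following sketch computes the expected number of spikes per bin from the whole population. The function name `spikes_per_bin`, the 5 Hz rate and the bin sizes are hypothetical, and the per-neuron spike probability is approximated by rate times bin size, which is reasonable only for small bins.

```python
def spikes_per_bin(n_neurons, mean_rate_hz, bin_size_s):
    """Average number of spikes the whole population emits in one time bin.

    For small bins, mean_rate_hz * bin_size_s approximates the probability
    that a single neuron spikes in a bin (an assumption of this sketch).
    """
    return n_neurons * mean_rate_hz * bin_size_s

# Hypothetical recording: 5 Hz mean rate. A 2 ms bin gives a spike
# probability of about 1/100 per neuron per bin, as in the example above.
mean_rate_hz = 5.0
for bin_size_s in (0.002, 0.020):
    for n_neurons in (10, 100):
        x = spikes_per_bin(n_neurons, mean_rate_hz, bin_size_s)
        print(f"bin = {bin_size_s * 1e3:4.0f} ms, N = {n_neurons:3d}: "
              f"spikes per bin = {x:.2f}")
```

With 2 ms bins, 10 neurons give about 0.1 spikes per bin (small compared to 1), whereas 100 neurons give about 1. Shrinking the bin always lowers this number, which is why small bins push a data set into the perturbative regime.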

In the next several sections we quantify the qualitative picture presented above: we write down an explicit expression for $\Delta_N$, explain why it increases linearly with $N$ when $N$ is small, and provide additional tests, besides assessing the linearity of $\Delta_N$, to determine whether or not one is in the perturbative regime.

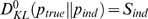

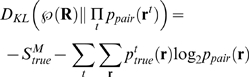

Quantifying how well the pairwise model explains the data

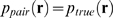

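A natural measure of the distance between $p_{\mathrm{true}}$ and $p_{\mathrm{pair}}$ is the Kullback-Leibler (KL) divergence [12], denoted $D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}})$ and defined as

$$D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}}) = \sum_{\mathbf{r}} p_{\mathrm{true}}(\mathbf{r}) \log_2 \frac{p_{\mathrm{true}}(\mathbf{r})}{p_{\mathrm{pair}}(\mathbf{r})}. \tag{1}$$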

The KL divergence is zero if the two distributions are equal; otherwise it is nonzero.

Although the KL divergence is a very natural measure, it is not easy to interpret (except, of course, when it is exactly zero). That is because a nonzero KL divergence tells us that $p_{\mathrm{pair}} \neq p_{\mathrm{true}}$, but it does not give us any real handle on how good, or bad, the pairwise model really is. To make sense of the KL divergence, we need something to compare it to. A reasonable reference quantity, used by a number of authors [7]–[9], is the KL divergence between the true distribution and the independent one, the latter denoted $p_{\mathrm{ind}}$. The independent distribution, as its name suggests, is a distribution in which the variables are taken to be independent,

$$p_{\mathrm{ind}}(\mathbf{r}) = \prod_{i=1}^{N} p_i(r_i), \tag{2}$$

where $p_i(r_i)$ is the distribution of the response of the $i$th neuron, $r_i$. With this choice for a comparison, we define a normalized distance measure (a measure of how well the pairwise model explains the data) as

$$\Delta_N = \frac{D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}})}{D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{ind}})}. \tag{3}$$

Note that the denominator in this expression, $D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{ind}})$, is usually referred to as the multi-information [7],[13],[14].

The quantity $\Delta_N$ lies between 0 and 1, and measures how well a pairwise model does relative to an independent model. If it is 0, the pairwise model is equal to the true model ($p_{\mathrm{pair}} = p_{\mathrm{true}}$); if it is near 1, the pairwise model offers little improvement over the independent model; and if it is exactly 1, the pairwise model is equal to the independent model ($p_{\mathrm{pair}} = p_{\mathrm{ind}}$), and so offers no improvement.
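For a population small enough to enumerate all $2^N$ binary words, the quantities defined above can be computed directly. The sketch below is illustrative only: the helper names are hypothetical, the "true" distribution is a random one drawn for the example, and base-2 logarithms are assumed so that results are in bits.

```python
import itertools
import numpy as np

def all_states(n):
    """All 2**n binary words, one per row."""
    return np.array(list(itertools.product([0, 1], repeat=n)))

def independent_model(p_true, states):
    """Product of the single-neuron marginals of p_true."""
    p_spike = p_true @ states                  # P(r_i = 1) for each neuron
    return np.prod(np.where(states == 1, p_spike, 1.0 - p_spike), axis=1)

def entropy_bits(p):
    nz = p > 0
    return -np.sum(p[nz] * np.log2(p[nz]))

def kl_bits(p, q):
    """D_KL(p || q) in bits; states with p = 0 contribute nothing."""
    nz = p > 0
    return np.sum(p[nz] * np.log2(p[nz] / q[nz]))

def normalized_distance(p_true, p_pair, p_ind):
    """Ratio of the two KL divergences (the normalized distance)."""
    return kl_bits(p_true, p_pair) / kl_bits(p_true, p_ind)

# Hypothetical 3-neuron distribution (random numbers, for illustration only).
states = all_states(3)
rng = np.random.default_rng(0)
p_true = rng.dirichlet(np.ones(len(states)))
p_ind = independent_model(p_true, states)

multi_info = kl_bits(p_true, p_ind)
print("multi-information (bits):", multi_info)
print("S_ind - S_true    (bits):", entropy_bits(p_ind) - entropy_bits(p_true))
```

The two printed numbers should agree up to rounding, since the multi-information equals the entropy difference between the independent and true distributions. The limiting cases of the normalized distance can be checked the same way: passing `p_true` as the model gives 0, and passing `p_ind` gives 1.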

How do we attach intuitive meaning to the two divergences $D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}})$ and $D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{ind}})$? For the latter, we use the fact that, as is easy to show,

$$D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{ind}}) = S_{\mathrm{ind}} - S_{\mathrm{true}}, \tag{4}$$

where $S_{\mathrm{ind}}$ and $S_{\mathrm{true}}$ are the entropies [15],[16] of $p_{\mathrm{ind}}$ and $p_{\mathrm{true}}$, respectively, defined, as usual, to be $S[p] = -\sum_{\mathbf{r}} p(\mathbf{r}) \log_2 p(\mathbf{r})$. For the former, we use the definition of the KL divergence to write

$$D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}}) = -\sum_{\mathbf{r}} p_{\mathrm{true}}(\mathbf{r}) \log_2 p_{\mathrm{pair}}(\mathbf{r}) - S_{\mathrm{true}} \equiv \tilde{S}_{\mathrm{pair}} - S_{\mathrm{true}}. \tag{5}$$

The quantity $\tilde{S}_{\mathrm{pair}}$ has the flavor of an entropy, although it is a true entropy only when $p_{\mathrm{pair}}$ is maximum entropy as well as pairwise (see Eq. (6) below). For other pairwise distributions, all we need to know is that $\tilde{S}_{\mathrm{pair}}$ lies between $S_{\mathrm{true}}$ and $S_{\mathrm{ind}}$. A plot illustrating the relationship between $\Delta_N$, the two entropies $S_{\mathrm{ind}}$ and $S_{\mathrm{true}}$, and the entropy-like quantity $\tilde{S}_{\mathrm{pair}}$, is shown in Fig. 3.

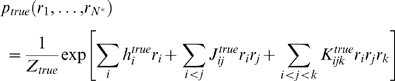

Figure 3. Schematic plot of $S_{\mathrm{ind}}$ (black line), $\tilde{S}_{\mathrm{pair}}$ (cyan line) and $S_{\mathrm{true}}$ (red line).

The better the pairwise model, the closer $\tilde{S}_{\mathrm{pair}}$ is to $S_{\mathrm{true}}$. This is reflected in the normalized distance measure, $\Delta_N$, which is the distance between the red and cyan lines divided by the distance between the red and black lines.

Note that for pairwise maximum entropy models (or maximum entropy models for short), $\Delta_N$ has a particularly simple interpretation, since in this case $\tilde{S}_{\mathrm{pair}}$ really is an entropy. Using $S_{\mathrm{maxent}}$ to denote the pairwise entropy of a maximum entropy model, for this case we have

$$\Delta_N = \frac{S_{\mathrm{maxent}} - S_{\mathrm{true}}}{S_{\mathrm{ind}} - S_{\mathrm{true}}}, \tag{6}$$

as is easy to see by inserting Eqs. (4) and (5) into (3). This expression has been used previously by a number of authors [7],[9].
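As a concrete illustration of the entropy form of $\Delta_N$, the sketch below fits a pairwise maximum entropy model to a tiny, fully enumerated population and evaluates the entropy ratio. It is a sketch under stated assumptions, not the fitting procedure used in the paper: the plain gradient-ascent moment matching, the function names, and the parameters of the hypothetical third order "true" model are all choices made for the example, and exact enumeration is feasible only for small $N$.

```python
import itertools
import numpy as np

def pairwise_features(states):
    """Columns: r_i for each neuron, then r_i * r_j for each pair i < j."""
    n = states.shape[1]
    pairs = [states[:, i] * states[:, j]
             for i, j in itertools.combinations(range(n), 2)]
    return np.column_stack([states] + pairs).astype(float)

def fit_pairwise_maxent(p_true, states, step=0.5, tol=1e-10, max_iter=100_000):
    """Match means and pairwise moments by gradient ascent (exact enumeration)."""
    feats = pairwise_features(states)
    target = p_true @ feats                    # moments the model must reproduce
    theta = np.zeros(feats.shape[1])
    for _ in range(max_iter):
        logits = feats @ theta
        p_model = np.exp(logits - logits.max())
        p_model /= p_model.sum()
        gap = target - p_model @ feats         # gradient of the log-likelihood
        if np.max(np.abs(gap)) < tol:
            break
        theta += step * gap
    return p_model

def entropy_bits(p):
    nz = p > 0
    return -np.sum(p[nz] * np.log2(p[nz]))

# Hypothetical "true" model for 3 neurons with a third order interaction.
states = np.array(list(itertools.product([0, 1], repeat=3)))
log_w = (-1.0 * states.sum(axis=1)
         + 0.5 * pairwise_features(states)[:, 3:].sum(axis=1)
         + 1.0 * states.prod(axis=1))
p_true = np.exp(log_w)
p_true /= p_true.sum()

p_maxent = fit_pairwise_maxent(p_true, states)
p_spike = p_true @ states
p_ind = np.prod(np.where(states == 1, p_spike, 1.0 - p_spike), axis=1)

s_true, s_maxent, s_ind = map(entropy_bits, (p_true, p_maxent, p_ind))
delta = (s_maxent - s_true) / (s_ind - s_true)
print(f"Delta_N = {delta:.4f}")
```

Because the fitted model reproduces the first and second moments of `p_true`, the KL divergence from the true distribution to the fitted one equals `s_maxent - s_true`, which is what makes the entropy ratio a valid shortcut in the maximum entropy case.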

$\Delta_N$ in the perturbative regime

The extrapolation problem discussed above is the problem of determining $\Delta_N$ in the large $N$ limit. This is hard to do in general, but there is a perturbative regime in which it is possible. The small parameter that defines this regime is the average number of spikes produced by the whole population of neurons in each time bin. It is given quantitatively by $N\bar{\nu}\,\delta t$, where $\delta t$ is the bin size and $\bar{\nu}$ the average firing rate,

$$\bar{\nu} = \frac{1}{N}\sum_{i=1}^{N} \nu_i, \tag{7}$$

with $\nu_i$ the firing rate of neuron $i$.

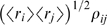

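The first step in the perturbation expansion is to compute the two quantities that make up $\Delta_N$: $D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{ind}})$ and $D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}})$. As we show in the section “Perturbative Expansion” (Methods), these are given by

$$D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{ind}}) = D^{0}_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{ind}}) + \mathcal{O}\big((N\bar{\nu}\,\delta t)^3\big), \tag{8a}$$

$$D_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}}) = D^{0}_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}}) + \mathcal{O}\big((N\bar{\nu}\,\delta t)^4\big), \tag{8b}$$

where

$$D^{0}_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{ind}}) = g_{\mathrm{ind}}\, N(N-1)\,(\bar{\nu}\,\delta t)^2, \tag{9a}$$

$$D^{0}_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}}) = g_{\mathrm{pair}}\, N(N-1)(N-2)\,(\bar{\nu}\,\delta t)^3. \tag{9b}$$

Here and in what follows we use $\mathcal{O}\big((N\bar{\nu}\,\delta t)^n\big)$ to denote terms that are proportional to $(N\bar{\nu}\,\delta t)^n$ in the limit $N\bar{\nu}\,\delta t \to 0$. The scaling in Eq. (9a) has been noted previously [7], although the authors did not compute the prefactor, $g_{\mathrm{ind}}$.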

The prefactors $g_{\mathrm{ind}}$ and $g_{\mathrm{pair}}$, which are given explicitly in Eqs. (42) and (44), depend on the low order statistics of the spike trains: $g_{\mathrm{ind}}$ depends on the second order normalized correlation coefficients, $g_{\mathrm{pair}}$ depends on the second and third order normalized correlation coefficients (the normalized correlation coefficients are defined in Eq. (16) below), and both depend on the firing rates of the individual cells. The details of that dependence, however, are not important for now; what is important is that $g_{\mathrm{ind}}$ and $g_{\mathrm{pair}}$ are independent of $N$ and $\bar{\nu}\,\delta t$ (at least on average; see next section).

Inserting Eq. (8) into Eq. (3) (the definition of $\Delta_N$) and using Eq. (9), we arrive at our main result,

$$\Delta_N = \Delta_N^{0} + \mathcal{O}\big((N\bar{\nu}\,\delta t)^2\big), \tag{10a}$$

$$\Delta_N^{0} = \frac{g_{\mathrm{pair}}}{g_{\mathrm{ind}}}\,(N-2)\,\bar{\nu}\,\delta t. \tag{10b}$$

Note that in the regime $N\bar{\nu}\,\delta t \ll 1$, $\Delta_N$ is necessarily small. This explains why, in an analytic study of a non-pairwise model in which $N\bar{\nu}\,\delta t$ was small, Shlens et al. found that $\Delta_N$ was rarely greater than 0.1 [8].
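The algebra behind this step can be sketched as follows. The zeroth order forms used here, $g_{\mathrm{ind}} N(N-1)(\bar{\nu}\,\delta t)^2$ for the multi-information and $g_{\mathrm{pair}} N(N-1)(N-2)(\bar{\nu}\,\delta t)^3$ for the pairwise divergence, are assumptions of this sketch (they are what the stated linear scaling of $\Delta_N$ with $N-2$ requires), as are the numbers in the worked example.

$$\Delta_N^{0} = \frac{D^{0}_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}})}{D^{0}_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{ind}})} = \frac{g_{\mathrm{pair}}\, N(N-1)(N-2)\,(\bar{\nu}\,\delta t)^3}{g_{\mathrm{ind}}\, N(N-1)\,(\bar{\nu}\,\delta t)^2} = \frac{g_{\mathrm{pair}}}{g_{\mathrm{ind}}}\,(N-2)\,\bar{\nu}\,\delta t .$$

The common factor $N(N-1)(\bar{\nu}\,\delta t)^2$ cancels, leaving a quantity linear in $N-2$. As a hypothetical numerical example, with $\bar{\nu}\,\delta t = 0.01$ and $N = 12$ the factor $(N-2)\,\bar{\nu}\,\delta t$ equals $0.1$, so $\Delta_N^{0}$ is one tenth of the ratio $g_{\mathrm{pair}}/g_{\mathrm{ind}}$.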

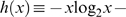

We refer to quantities with a superscript zero as “zeroth order.” Note that, via Eqs. (4) and (5), we can also define zeroth order entropies,

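$$S^{0}_{\mathrm{true}} = S_{\mathrm{ind}} - D^{0}_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{ind}}), \tag{11a}$$

$$\tilde{S}^{0}_{\mathrm{pair}} = S^{0}_{\mathrm{true}} + D^{0}_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}}). \tag{11b}$$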

These quantities are important primarily because differences between them and the actual entropies indicate a breakdown of the perturbation expansion (see in particular Fig. 4 below).

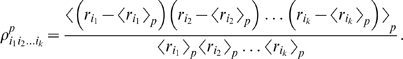

Figure 4. Cartoon showing extrapolations of the zeroth order KL divergences and entropies (see Eqs. (9) and (11)).

These extrapolations illustrate why the two natural quantities derived from them, $N_{\mathrm{ind}}$ and $N_{\mathrm{pair}}$, occur beyond the point at which the extrapolation is meaningful. (A) Extrapolations on a log-log scale. Black: $S_{\mathrm{ind}}$; green: $D^{0}_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{ind}})$; cyan: $D^{0}_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}})$. The red points are the data. The points $N_{\mathrm{ind}}$ and $N_{\mathrm{pair}}$ label the intersections of the two extrapolations with the independent entropy, $S_{\mathrm{ind}}$. (B) Extrapolation of the entropies rather than the KL divergences, plotted on a linear-linear scale. The data, again shown in red, are barely visible in the lower left hand corner. Black: $S_{\mathrm{ind}}$; solid orange: $S^{0}_{\mathrm{true}}$; solid maroon: $\tilde{S}^{0}_{\mathrm{pair}}$. The dashed orange and maroon lines are the extrapolations of the true entropy and true pairwise “entropy”, respectively.

Assuming, as discussed in the next section, that $g_{\mathrm{ind}}$ and $g_{\mathrm{pair}}$ are approximately independent of $N$, $\bar{\nu}$, and $\delta t$, Eq. (10) tells us that $\Delta_N$ scales linearly with $N$ in the perturbative regime, that is, the regime in which $N\bar{\nu}\,\delta t \ll 1$. The key observation about this scaling is that it is independent of the details of the true distribution, $p_{\mathrm{true}}$. This has a very important consequence, one that has major implications for experimental data: if one does an experiment with small $N$ and finds that $\Delta_N$ is proportional to $N-2$, then the system is, with very high probability, in the perturbative regime, and one does not know whether $p_{\mathrm{pair}}$ will remain close to $p_{\mathrm{true}}$ as $N$ increases. What this means in practical terms is that if one wants to know whether a particular pairwise model is a good one for large systems, it is necessary to consider values of $N$ that are significantly greater than $N_c$, where

$$N_c \equiv \frac{1}{\bar{\nu}\,\delta t}. \tag{12}$$

We interpret $N_c$ as the value at which there is a crossover in the behavior of the pairwise model. Specifically, if $N \ll N_c$, the system is in the perturbative regime and the pairwise model is not informative about the large $N$ behavior, whereas if $N \gg N_c$, the system is in a regime in which it may be possible to make inferences about the behavior of the full system.

The prefactors, $g_{\mathrm{ind}}$ and $g_{\mathrm{pair}}$

As we show in Methods (see in particular Eqs. (42) and (44)), the prefactors $g_{\mathrm{ind}}$ and $g_{\mathrm{pair}}$ depend on which neurons out of the full population are used. Consequently, these quantities fluctuate around their true values (in the sense that different subpopulations produce different values of $g_{\mathrm{ind}}$ and $g_{\mathrm{pair}}$), where “true” refers to an average over all possible $N$-neuron sub-populations. Here we assume that the $N$ neurons are chosen randomly from the full population, so any set of $N$ neurons provides unbiased estimates of $g_{\mathrm{ind}}$ and $g_{\mathrm{pair}}$. In our simulations, the fluctuations were small, as indicated by the small error bars on the blue points in Fig. 5. However, in general the size of the fluctuations is determined by the range of firing rates and correlation coefficients, with larger ranges producing larger fluctuations.

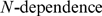

Figure 5. The $N$ dependence of the KL divergences and the normalized distance measure, $\Delta_N$.

Data was generated from a third order model, as explained in the section “Generating synthetic data” (Methods), and fit to pairwise maximum entropy models and independent models. All data points correspond to averages over marginalizations of the true distribution (see text for details). The red points were computed directly using Eqs. (1), (3) and (4); the blue points are the zeroth order estimates, $D^{0}_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{ind}})$, $D^{0}_{\mathrm{KL}}(p_{\mathrm{true}}\,\|\,p_{\mathrm{pair}})$, and $\Delta_N^{0}$, in rows 1, 2 and 3, respectively. The three columns correspond to $\bar{\nu}\,\delta t$ = […], 0.029, and 0.037, from left to right. (A, B, C) ([…]). Predictions from the perturbative expansion are in good agreement with the measurements up to […], indicating that the data is in the perturbative regime. (D, E, F) ([…]). Predictions from the perturbative expansion are in good agreement with the measurements up to […], indicating that the data is only partially in the perturbative regime. (G, H, I) ([…]). Predictions from the perturbative expansion are not in good agreement with the measurements, even for small $N$, indicating that the data is outside the perturbative regime.

Because $N$ does not affect the mean values of $g_{\mathrm{ind}}$ and $g_{\mathrm{pair}}$, it is reasonable to think of these quantities (or at least their true values) as being independent of $N$. They are also independent of $\bar{\nu}$, again modulo fluctuations. Finally, as we show in the section “Bin size and the correlation coefficients” (Methods), $g_{\mathrm{ind}}$ and $g_{\mathrm{pair}}$ are independent of $\delta t$ in the limit that $\delta t$ is small compared to the width of the temporal correlations among neurons. We will assume this limit applies here. In sum, then, to first approximation, $g_{\mathrm{ind}}$ and $g_{\mathrm{pair}}$ are independent of our three important quantities: $N$, $\bar{\nu}$, and $\delta t$. Thus, we treat them as effectively constant throughout our analysis.

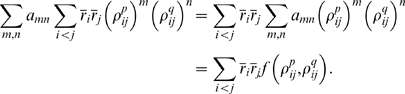

The dangers of extrapolation

Although the behavior of $\Delta_N$ in the perturbative regime does not tell us much about its behavior at large $N$, it is possible that other quantities that can be calculated in the perturbative regime, $g_{\mathrm{ind}}$, $g_{\mathrm{pair}}$, and $S_{\mathrm{ind}}$ (the last one exactly), are informative, as others have suggested [7]. Here we show that this is not the case: they also are uninformative.

The easiest way to relate the perturbative regime to the large $N$ regime is to ignore the corrections in Eqs. (8a) and (8b), extrapolate the expressions for the zeroth order terms, and ask what their large $N$ behavior tells us. Generic versions of these extrapolations, plotted on a log-log scale, are shown in Fig. 4A, along with a plot of the independent entropy, $S_{\text{ind}}$ (which is necessarily linear in $N$). The first thing we notice about the extrapolations is that they do not, technically, have a large $N$ behavior: one terminates at the point labeled  , which is where   (and thus, via Eq. (0a),  ; continuing the extrapolation implies negative true zeroth order entropy); the other at the point labeled  , which is where   (and thus, via Eq. (5) and the fact that  ,  ).

Despite the fact that the extrapolations end abruptly, they still might provide information about the large $N$ regime. For example,   and/or   might be values of $N$ at which something interesting happens. To see if this is the case, in Fig. 4B we plot the naive extrapolations of $S_{\text{true}}$ and $S_{\text{pair}}$ (that is, the zeroth order quantities given in Eq. (11),   and  ), on a linear-linear plot, along with $S_{\text{ind}}$. This plot contains no new information compared to Fig. 4A, but it does elucidate the meaning of the extrapolations. Perhaps its most striking feature is that the naive extrapolation of   has a decreasing portion. As is easy to show mathematically, entropy cannot decrease with $N$ (intuitively, that is because observing one additional neuron cannot decrease the entropy of previously observed neurons). Thus,  , which occurs well beyond the point where the naive extrapolation of   is decreasing, has essentially no meaning, something that has been pointed out previously by Bethge and Berens [10]. The other potentially important value of $N$ is  . This, though, suffers from a similar problem: when  ,   is negative.
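The statement that entropy cannot decrease with $N$ follows from the chain rule for entropy. The lines below are the standard identity, written with generic symbols ($S$ for entropy, $r_1, \dots, r_N$ for the binary responses) that are assumptions of this sketch and need not match the notation used in the figures:

```latex
\begin{align}
S(r_1, \dots, r_{N+1}) &= S(r_1, \dots, r_N) + S(r_{N+1} \mid r_1, \dots, r_N) \\
                       &\ge S(r_1, \dots, r_N),
\end{align}
```

The inequality holds because the conditional entropy of a discrete variable is never negative. Any extrapolated entropy curve that decreases with $N$ therefore cannot correspond to a real distribution in that range.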

How do the naively extrapolated entropies (the solid lines in Fig. 4B) compare to the actual entropies? To answer this, in Fig. 4B we show the true behavior of $S_{\text{true}}$ and $S_{\text{pair}}$ versus $N$ (dashed lines). Note that $S_{\text{true}}$ is asymptotically linear in $N$, even though the neurons are correlated, a fact that forces $S_{\text{pair}}$ to be linear in $N$, as it is sandwiched between $S_{\text{true}}$ and $S_{\text{ind}}$. (The asymptotically linear behavior of $S_{\text{true}}$ is typical, even in highly correlated systems. Although this is not always appreciated, it is easy to show; see the section “The behavior of the true entropy in the large N limit,” Methods.) Comparing the dashed and solid lines, we see that the naively extrapolated and true entropies, and thus the naively extrapolated and true values of $\Delta_N$, have extremely different behavior. This further suggests that there is very little connection between the perturbative and large $N$ regimes.

In fact, these observations follow directly from the fact that $g_{\text{ind}}$ and $g_{\text{pair}}$ depend only on correlation coefficients up to third order (see Eqs. (42) and (44)) whereas the large $N$ behavior depends on correlations at all orders. Thus, since $g_{\text{ind}}$ and $g_{\text{pair}}$ tell us very little, if anything, about higher order correlations, it is not surprising that they tell us very little about the behavior of $\Delta_N$ in the large $N$ limit.

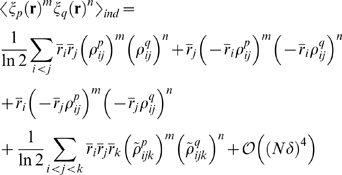

Numerical simulations

To check that our perturbation expansions, Eqs. (8–10), are correct, and to investigate the regime in which they are valid, we performed numerical simulations. We generated, from synthetic data, a set of true distributions, computed the true distance measures,  ,  , and   numerically, and compared them to the zeroth order ones,  ,  , and  . If the perturbation expansion is valid, then the true values should be close to the zeroth order values whenever $N\bar{\nu}\delta t$ is small. The results are shown in Fig. 5, and that is, indeed, what we observed. Before discussing that figure, though, we explain our procedure for constructing true distributions.

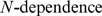

The set of true distributions we used was generated from a third order model (so named because it includes up to third order interactions). This model has the form

| (13) |

where   is a normalization constant, chosen to ensure that the probability distribution sums to 1, and the sums over  ,   and   run from 1 to $N$. The parameters   and   were chosen by sampling from distributions (see the section “Generating synthetic data,” Methods), which allowed us to generate many different true distributions. In all of our simulations we calculate the relevant quantities directly from Eq. (13). Consequently, we do not have to worry about issues of finite data, as would be the case in realistic experiments.

For a particular simulation (corresponding to a column in Fig. 5), we generated a true distribution with $N = 15$, randomly chose 5 neurons, and marginalized over them. This gave us a 10-neuron true distribution. True distributions with $N < 10$ were constructed by marginalizing over additional neurons within our 10-neuron population. To achieve a representative sample, we considered all possible marginalizations (of which there are 10 choose $N$, or $10!/[N!(10-N)!]$). The results in Fig. 5 are averages over these marginalizations.
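The construction just described can be sketched in a few lines of Python. This is a minimal illustration, not the code used for Fig. 5. It assumes the third order model is an exponential-family distribution over binary patterns with single, pairwise, and triplet terms (sums restricted to $i<j<k$). The Gaussian parameter distributions, the seed, and the helper names `all_states`, `third_order_distribution`, and `marginalize` are hypothetical; the actual sampling distributions are those given in Methods.

```python
import itertools
import numpy as np

def all_states(n):
    """All 2**n binary patterns, shape (2**n, n), first neuron varying slowest."""
    return np.array(list(itertools.product([0, 1], repeat=n)), dtype=float)

def third_order_distribution(h, J, K):
    """Exact probabilities for an assumed third order model over binary patterns.

    Only entries J[i, j] with i < j and K[i, j, k] with i < j < k are used.
    """
    n = len(h)
    r = all_states(n)
    log_p = r @ h
    for i, j in itertools.combinations(range(n), 2):
        log_p += J[i, j] * r[:, i] * r[:, j]
    for i, j, k in itertools.combinations(range(n), 3):
        log_p += K[i, j, k] * r[:, i] * r[:, j] * r[:, k]
    p = np.exp(log_p - log_p.max())  # subtract the max for numerical stability
    return p / p.sum()               # dividing by the sum plays the role of the normalization constant

def marginalize(p, n, keep):
    """Sum out every neuron not in `keep`; result is ordered like all_states(len(keep))."""
    drop = tuple(i for i in range(n) if i not in keep)
    return p.reshape((2,) * n).sum(axis=drop).reshape(-1)

rng = np.random.default_rng(0)
n_full, n_keep = 15, 10
h = rng.normal(-2.0, 0.5, size=n_full)           # hypothetical parameter distributions
J = rng.normal(0.0, 0.3, size=(n_full,) * 2)
K = rng.normal(0.0, 0.1, size=(n_full,) * 3)

p_full = third_order_distribution(h, J, K)
keep = sorted(rng.choice(n_full, size=n_keep, replace=False).tolist())
p_10 = marginalize(p_full, n_full, keep)         # the 10-neuron true distribution

# Smaller populations: every way of keeping n of the 10 neurons (10 choose n of them).
n = 5
subsets = list(itertools.combinations(range(n_keep), n))
marginals = [marginalize(p_10, n_keep, s) for s in subsets]
```

Because all $2^{15}$ patterns are enumerated, every quantity is computed exactly from the distribution. This mirrors the point made above about avoiding finite-data issues.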

For neural data, the most commonly used pairwise model is the maximum entropy model. Therefore, we use that one here. To emphasize the maximum entropy nature of this model, we replace the label “pair” that we have been using so far with “maxent.” The maximum entropy distribution has the form

| (14) |

Fitting this distribution requires that we choose the $h_i$ and $J_{ij}$ so that the first and second moments match those of the true distribution. Quantitatively, these conditions are

| (15a) |

| (15b) |

where the angle brackets, $\langle \cdots \rangle_{\text{maxent}}$ and $\langle \cdots \rangle_{\text{true}}$, represent averages with respect to $p_{\text{maxent}}$ and $p_{\text{true}}$, respectively. Once we have $h_i$ and $J_{ij}$ that satisfy Eq. (15), we calculate the KL divergences, Eqs. (1) and (4), and use those to compute $\Delta_N$.
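For populations small enough to enumerate all $2^N$ patterns, the fitting step and the resulting normalized distance can be sketched as follows. This is an illustrative sketch under stated assumptions, not the procedure used for Fig. 5. The pairwise distribution is taken to be proportional to $\exp(\sum_i h_i r_i + \sum_{i<j} J_{ij} r_i r_j)$. The moments are matched by plain gradient ascent, since the text does not specify a fitting algorithm. The normalized distance is taken as the ratio of the two KL divergences. The toy true distribution and all function names are hypothetical.

```python
import itertools
import numpy as np

def all_states(n):
    """All 2**n binary patterns, shape (2**n, n)."""
    return np.array(list(itertools.product([0, 1], repeat=n)), dtype=float)

def kl_bits(p, q):
    """KL divergence D(p || q) in bits; assumes q > 0 wherever p > 0."""
    mask = p > 0
    return float(np.sum(p[mask] * np.log2(p[mask] / q[mask])))

def independent_model(p_true, r):
    """Product of single-neuron marginals with the same mean activities as p_true."""
    m = p_true @ r
    return np.prod(np.where(r == 1, m, 1 - m), axis=1)

def fit_pairwise_maxent(p_true, r, steps=20000):
    """Match first and second moments by gradient ascent on the log likelihood."""
    n = r.shape[1]
    pairs = list(itertools.combinations(range(n), 2))
    feats = np.column_stack([r] + [r[:, i] * r[:, j] for i, j in pairs])
    target = p_true @ feats                 # moments of the true distribution
    theta = np.zeros(feats.shape[1])        # stacked fields and couplings
    lr = 4.0 / feats.shape[1]               # conservative step: each 0/1 feature has variance <= 1/4
    for _ in range(steps):
        log_q = feats @ theta
        q = np.exp(log_q - log_q.max())
        q /= q.sum()
        theta += lr * (target - q @ feats)  # gradient = true moments - model moments
    log_q = feats @ theta
    q = np.exp(log_q - log_q.max())
    return q / q.sum(), theta[:n], dict(zip(pairs, theta[n:]))

# Toy "true" distribution on 4 neurons with a triplet term (arbitrary values).
n = 4
r = all_states(n)
count = r.sum(axis=1)
log_p = (-1.0 * count
         + 0.3 * count * (count - 1) / 2
         + 0.5 * count * (count - 1) * (count - 2) / 6)
p_true = np.exp(log_p) / np.exp(log_p).sum()

p_ind = independent_model(p_true, r)
p_maxent, h_fit, J_fit = fit_pairwise_maxent(p_true, r)
delta_N = kl_bits(p_true, p_maxent) / kl_bits(p_true, p_ind)
print(f"normalized distance: {delta_N:.4f}")
```

Gradient ascent is used only because it is short and transparent. For larger $N$ exhaustive enumeration is infeasible, and a different fitting scheme would be needed.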

The results are shown in Fig. 5. The rows correspond to our three quantities of interest:  ,  , and   (top to bottom). The columns correspond to different values of $\bar{\nu}\delta t$, with the smallest $\bar{\nu}\delta t$ on the left and the largest on the right. Red circles are the true values of these quantities; blue ones are the zeroth order predictions from Eqs. (9) and (10b).

As suggested by our perturbation analysis, the smaller the value of $\bar{\nu}\delta t$, the larger the value of $N$ for which agreement between the true and zeroth order values is good. Our simulations corroborate this: the left column of Fig. 5 has  , and agreement is almost perfect out to  ; the middle column has  , and agreement is almost perfect out to  ; and the right column has  , and agreement is not good for any value of $N$. Note that the perturbation expansion breaks down for values of   well below   (defined in Eq. (12)): in the middle column of Fig. 5 it breaks down when  , and in the right column it breaks down when  . This is not, however, especially surprising, as the perturbation expansion is guaranteed to be valid only if  .

These results validate the perturbation expansions in Eqs. (8) and (10), and show that those expansions provide sensible predictions, at least for some parameters. They also suggest a natural way to assess the significance of one's data: plot  ,  , and   versus $N$, and look for agreement with the predictions of the perturbation expansion. If agreement is good, as in the left column of Fig. 5, then one is in the perturbative regime, and one knows very little about the true distribution. If, on the other hand, agreement is bad, as in the right column, then one is out of the perturbative regime, and it may be possible to extract meaningful information about the relationship between the true and pairwise models.

That said, the qualifier “at least for some parameters” is an

important one. This is because the perturbation expansion is essentially an

expansion that depends on the normalized correlation coefficients, and there is

an underlying assumption that they don't exhibit pathological behavior.

The   order normalized correlation coefficient for the distribution  , denoted  , is written

| (16) |

A potentially problematic feature of the correlation coefficients is that the denominator is a product over mean activities. If the mean activities are small, the denominator can become very small, leading to very large correlation coefficients. Although our perturbation expansion is always valid for sufficiently small time bins (because the correlation coefficients eventually become independent of bin size; see the section “Bin size and the correlation coefficients,” Methods), “sufficiently small” can depend in detail on the parameters. For instance, at the maximum population size tested ($N = 10$) and for the true distributions that had  , the absolute error of the prediction had a median of approximately 16%. However, about 11% of the runs had errors larger than 60%. Thus, the exact size of the small parameter at which the perturbative expansion breaks down can depend on the details of the true distribution.

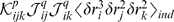

External fields and pairwise couplings have a simple dependence on firing rates and correlation coefficients in the perturbative regime

Estimation of the KL divergences and $\Delta_N$ from real data can be hard, in the sense that it takes a large amount of data for them to converge to their true values. In addition, as discussed above, in the section “The prefactors $g_{\text{ind}}$ and $g_{\text{pair}}$”, there are fluctuations in   associated with finite subsampling of the full population of neurons. Those fluctuations tend to keep   from being purely linear, as can be seen, for example, in the blue points in Fig. 5F and 5I. We therefore provide a second set of relationships that can be used to determine whether or not a particular data set is in the perturbative regime. These relationships are between the parameters of the maximum entropy model, the $h_i$ and $J_{ij}$, and the mean activity and normalized second order correlation coefficient (the latter defined in Eq. (19) below).

Since the quantity $N\bar{\nu}\delta t$ plays a central role in our analysis, we replace it with a single parameter, which we denote $\delta$,

| (17) |

In terms of this parameter, we find (using the same perturbative approach that led us to Eqs. (8–10); see the section “External fields, pairwise couplings and moments,” Methods), that

| (18a) |

| (18b) |

where $\rho_{ij}$, the normalized second order correlation coefficient, is defined in Eq. (16) with  ; it is given explicitly by

| (19) |

(We don't need a superscript on $\rho_{ij}$ or a subscript on the angle brackets because the first and second moments are the same under the true and pairwise distributions.) Equation (18a) can be reconstructed from the low firing rate limit of the analysis carried out by Sessak and Monasson [17], as can the first three terms in the expansion of the log in Eq. (18b).

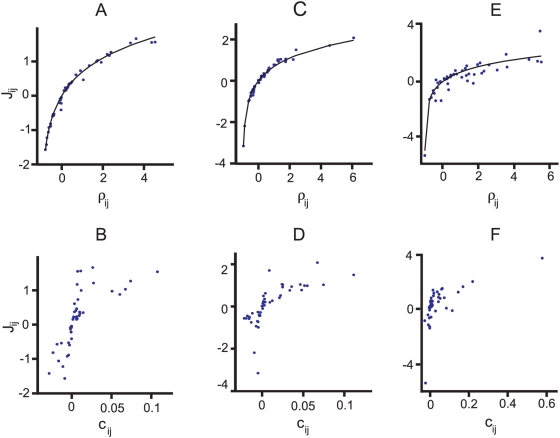

Equation (18) tells us that the $N$-dependence of the $h_i$ and $J_{ij}$, the external fields and pairwise couplings, is very weak. In Fig. 6 we confirm this through numerical simulations. Equation (18b) also provides additional information: it gives us a functional relationship between the pairwise couplings and the normalized pairwise correlation function, $\rho_{ij}$. In Fig. 7A–C we plot the pairwise couplings, $J_{ij}$, versus the normalized pairwise correlation coefficient, $\rho_{ij}$ (blue dots), along with the prediction from Eq. (18b) (black line). Consistent with our predictions, the data in Fig. 7A–C essentially follows a line, namely the line given by Eq. (18b).

Figure 6. The true external fields and pairwise interactions compared with the predictions of the perturbation expansion.

The top row shows the true external fields, $h_i$, versus those predicted from Eq. (18a), and the bottom row shows the true pairwise interaction, $J_{ij}$, versus those predicted from Eq. (18b). Values of $N$ ranging from 5 to 10 are shown, with different colors corresponding to different $N$. For each value of $N$, data is shown for 45 realizations of the true distribution. Insets show the $N$-dependence of the mean external fields (top) and mean pairwise interactions (bottom). The three columns correspond exactly to the columns in Fig. 5. (A, B) ( ). There is a very good match between the true and predicted values of both external fields and pairwise interactions. (C, D) ( ). Even though $\bar{\nu}\delta t$ has increased, the match is still good. (E, F) ( ). The true and predicted external fields and pairwise interactions do not match as well as the cases shown in (A, B, C, D). There is also now a stronger $N$-dependence in the mean external fields compared to (A) and (B). The $N$-dependence of the pairwise interactions in (F) is weaker than that of the external fields, but still notably stronger than the ones in (B) and (D). This indicates that the perturbative expansion is starting to break down.

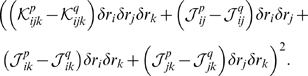

Figure 7. The relation between pairwise couplings and pairwise correlations.

This figure shows that there is a simple relation between $J_{ij}$ and $\rho_{ij}$, but not between $J_{ij}$ and  . (A, C, E) $J_{ij}$ versus the normalized coefficients, $\rho_{ij}$ (blue points), along with the predicted relationship, via Eq. (18b) (black line). (B, D, F) $J_{ij}$ versus the Pearson correlation coefficients,  , Eq. (26) (blue points). The three columns correspond exactly to the columns in Fig. 5 from left to right; that is,   for (A, B),   for (C, D), and   for (E, F). The prediction in the top row (black line) matches the data well, even in the rightmost column.

A relationship between the pairwise couplings and the correlation coefficients has been sought previously, but for the more standard Pearson correlation coefficient [7],[9],[11]. Our analysis explains why it was not found. The Pearson correlation coefficient, denoted  , is given by

| (20) |

In the small   limit (the limit of interest) the right hand side of Eq. (20) is approximately equal to  . Because   depends on the external fields, $h_i$ and $h_j$ (see Eq. (18a)) and there is a one-to-one relationship between $\rho_{ij}$ and $J_{ij}$ (Eq. (18b)), there can't be a one-to-one relationship between   and  . We verify the lack of a relationship in Fig. 7D and 7E, where we again plot $J_{ij}$, but this time versus the standard correlation coefficient,  . As predicted, the data in Fig. 7D and 7E is scattered over two dimensions. This suggests that $\rho_{ij}$, not  , is the natural measure of the correlation between two neurons when they have a binary representation, something that has also been suggested by Amari based on information-geometric arguments [18].
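The difference between the two coefficients can be made concrete with a small numerical sketch. It assumes that the normalized second order coefficient is the covariance divided by the product of the mean activities (consistent with the description of the denominator above) and that the Pearson coefficient is the covariance divided by the product of standard deviations, with variance $m(1-m)$ for a binary variable of mean $m$. The function names and the numbers are hypothetical.

```python
import numpy as np

def normalized_coeff(m_i, m_j, m_ij):
    """Covariance divided by the product of the mean activities (assumed form)."""
    return (m_ij - m_i * m_j) / (m_i * m_j)

def pearson_coeff(m_i, m_j, m_ij):
    """Pearson coefficient for binary variables, whose variance is m * (1 - m)."""
    return (m_ij - m_i * m_j) / np.sqrt(m_i * (1 - m_i) * m_j * (1 - m_j))

# Two hypothetical pairs with the same normalized coefficient (0.5) but different mean activities.
for m in (0.01, 0.04):
    m_ij = 1.5 * m * m                          # joint activity chosen so that the normalized coefficient is 0.5
    rho = normalized_coeff(m, m, m_ij)
    pearson = pearson_coeff(m, m, m_ij)
    small_mean_approx = np.sqrt(m * m) * rho    # leading-order Pearson value when the means are small
    print(f"mean={m:.2f}  normalized={rho:.3f}  pearson={pearson:.5f}  approx={small_mean_approx:.5f}")
```

Under these assumptions the Pearson coefficient equals the normalized coefficient multiplied by $\sqrt{m_i m_j}/\sqrt{(1-m_i)(1-m_j)}$. Two pairs with identical normalized coefficients therefore have different Pearson coefficients whenever their mean activities differ, which is the scatter described above.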

Note that the lack of a simple relationship between the pairwise couplings and the standard correlation coefficient has been a major motivation in building maximum entropy models [7],[11]. This is for good reason: if there is a simple relationship, knowing the $J_{ij}$ adds essentially nothing. Thus, plotting $J_{ij}$ versus $\rho_{ij}$ (but not  ) is an important test of one's data, and if the two quantities fall on the curve predicted by Eq. (18b), the maximum entropy model is adding very little information, if any.

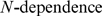

As an aside, we should point out that the $N$-dependence is a function of the variables used to represent the firing patterns. Here we use 0 for no spike and 1 for one or more spikes, but another, possibly more common, representation, derived from the Ising model and used in a number of studies [7],[9],[11], is to use −1 and +1 rather than 0 and 1. This amounts to making the change of variables  . In terms of  , the maximum entropy model has the form   where   and   are given by

| (21a) |

| (21b) |

The second term on the right side of Eq. (21a) is proportional to  , which means the external fields in the Ising representation acquire a linear $N$-dependence that was not present in our 0/1 representation. The two studies that reported the $N$-dependence of the external fields [7],[9] used this representation, and, as predicted by our analysis, the external fields in those studies had a component that was linear in $N$.
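The origin of the extra $N$-dependence can be checked numerically. The sketch below assumes the pairwise model has exponent $\sum_i h_i r_i + \sum_{i<j} J_{ij} r_i r_j$ in the 0/1 representation and substitutes $r_i = (1 + s_i)/2$ with $s_i = \pm 1$. The symbol $s_i$, the function names, and the convention of summing over $i<j$ are assumptions of this example, so the factors may differ from those in Eq. (21) if a different convention is used there.

```python
import itertools
import numpy as np

def to_ising(h, J):
    """Map 0/1 fields and couplings to the -1/+1 representation via r = (1 + s) / 2.

    J is read from its upper triangle (i < j). Returns the new fields, the new
    couplings, and the constant offset that is absorbed by the normalization.
    """
    J_upper = np.triu(J, 1)
    J_sym = J_upper + J_upper.T
    h_ising = h / 2 + J_sym.sum(axis=1) / 4   # second term sums over the other N - 1 neurons
    J_ising = J_upper / 4
    const = h.sum() / 2 + J_upper.sum() / 4
    return h_ising, J_ising, const

def exponent(x, h, J_upper):
    """sum_i h_i x_i + sum_{i<j} J_ij x_i x_j for one pattern x."""
    return x @ h + x @ J_upper @ x

rng = np.random.default_rng(1)
n = 5
h = rng.normal(size=n)
J = rng.normal(size=(n, n))
h_ising, J_ising, const = to_ising(h, J)

# The two representations must agree on every pattern, up to the constant offset.
max_gap = 0.0
for pattern in itertools.product([0, 1], repeat=n):
    r = np.array(pattern, dtype=float)
    s = 2 * r - 1
    gap = exponent(r, h, np.triu(J, 1)) - (exponent(s, h_ising, J_ising) + const)
    max_gap = max(max_gap, abs(gap))

# With identical couplings J0 and zero 0/1 fields, the Ising field is (N - 1) * J0 / 4.
J0 = 0.2
shift = [to_ising(np.zeros(N), np.full((N, N), J0))[0][0] for N in range(2, 7)]
```

In this convention each Ising field collects a quarter of the couplings to the other $N-1$ neurons, so even $N$-independent 0/1 fields and couplings produce Ising fields that grow linearly with $N$.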

Is there anything wrong with using small time bins?

An outcome of our perturbative approach is that our normalized distance measure, $\Delta_N$, is linear in bin size (see Eq. (10b)). This suggests that one could make the pairwise model look better and better simply by making the bin size smaller and smaller. Is there anything wrong with this? The answer is yes, for reasons discussed above (see the section “The extrapolation problem”); here we emphasize and expand on this issue, as it is an important one for making sense of experimental results.

The problem arises because what we have been calling the “true” distribution is not really the true distribution of spike trains. It is the distribution assuming independent time bins, an assumption that becomes worse and worse as we make the bins smaller and smaller. (We use this potentially confusing nomenclature primarily because all studies of neuronal data carried out so far have assumed temporal independence, and compared the pairwise distribution to the temporally independent—but still correlated across neurons—distribution [7]–[9],[11]. In addition, the correct name “true under the assumption of temporal independence,” is unwieldy.) Here we quantify how much worse. In particular, we show that if one uses time bins that are small compared to the characteristic correlation time in the spike trains, the pairwise model will not provide a good description of the data. Essentially, we show that, when the time bins are too small, the error one makes in ignoring temporal correlations is larger than the error one makes in ignoring correlations across neurons.

As usual, we divide time into bins of size $\delta t$. However, because we are dropping the independence assumption, we use  , rather than  , to denote the response in bin  . The full probability distribution over all time bins is denoted  . Here   is the number of bins; it is equal to   for spike trains of length  . If time bins are approximately independent and the distribution of   is the same for all   (an assumption we make for convenience only, but do not need; see the section “Extending the normalized distance measure to the time domain,” Methods), we can write

| (22) |

Furthermore, if the pairwise model is a good one, we have

| (23) |

Combining Eqs. (22) and (23) then gives us an especially simple expression for the full probability distribution:  .

The problem with small time bins lies in Eq. (22): the right hand side is a good approximation to the true distribution when the time bins are large compared to the spike train correlation time, but it is a bad approximation when the time bins are small (because adjacent time bins become highly correlated). To quantify how bad, we compare the error one makes assuming independence across time to the error one makes assuming independence across neurons. The ratio of those two errors, denoted  , is given by

| (24) |

It is relatively easy to compute   in the limit of small time bins (see the section “Extending the normalized distance measure to the time domain,” Methods), and we find that

| (25) |

As expected, this reduces to our old result,  , when there is only one time bin ( ). When  is larger than 1, however, the second term is always at least one, and for small bin size, the third term is much larger than one. Consequently, if we use bins that are small compared to the temporal correlation time of the spike trains, the pairwise model will do a very bad job describing the full, temporally correlated spike trains.
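To make the cost of assuming temporal independence concrete, here is a minimal toy sketch. It is not the ratio defined in Eq. (24): it considers a single binary neuron in two adjacent time bins and uses the Kullback-Leibler divergence (in bits) as the error measure, which is an assumption made for illustration. The function names, the per-bin spike probability `q`, and the bin-to-bin correlation coefficient `c` are all hypothetical.

```python
import math


def joint_two_bins(q, c):
    """Joint distribution of one binary neuron over two adjacent time bins.

    q: spike probability in each bin (illustrative parameter).
    c: correlation coefficient between the two bins, 0 <= c <= 1.
    Both marginals equal q by construction.
    """
    cov = c * q * (1.0 - q)
    return {
        (1, 1): q * q + cov,
        (1, 0): q * (1.0 - q) - cov,
        (0, 1): q * (1.0 - q) - cov,
        (0, 0): (1.0 - q) * (1.0 - q) + cov,
    }


def kl_to_independent(q, c):
    """KL divergence (bits) from the joint to the product of its marginals.

    This is the error incurred by treating the two bins as independent.
    """
    joint = joint_two_bins(q, c)
    marginal = {1: q, 0: 1.0 - q}
    total = 0.0
    for (a, b), p in joint.items():
        if p > 0.0:  # terms with zero probability contribute nothing
            total += p * math.log2(p / (marginal[a] * marginal[b]))
    return total


if __name__ == "__main__":
    for c in (0.0, 0.1, 0.5, 0.9):
        print(f"c = {c:.1f}  error = {kl_to_independent(0.05, c):.4f} bits")
```

In this toy setting the error is zero when the bins are uncorrelated and grows as the correlation between adjacent bins increases, which is the qualitative behavior described above for bins that are small compared to the correlation time.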

Discussion

Probability distributions over the configurations of biological systems are extremely important quantities. However, because of the large number of interacting elements comprising such systems, these distributions can almost never be determined directly from experimental data. Using parametric models to approximate the true distribution is the only existing alternative. While such models are promising, they are typically applied only to small subsystems, not the full system. This raises the question: are they good models of the full system?

We answered this question for a class of parametric models known as pairwise models. We focused on a particular application, neuronal spike trains, and our main result is as follows: if one were to record spikes from multiple neurons, use sufficiently small time bins and a sufficiently small number of cells, and assume temporal independence, then a pairwise model will almost always succeed in matching the true (but temporally independent) distribution—whether or not it would match the true (but still temporally independent) distribution for large time bins or a large number of cells. In other words, pairwise models in the “sufficiently small” regime, what we refer to as the perturbative regime, have almost no predictive value for what will happen with large populations. This makes extrapolation from small to large systems dangerous.

This observation is important because pairwise models, and in particular pairwise maximum entropy models, have recently attracted a great deal of attention: they have been applied to salamander and guinea pig retinas [7], primate retina [8], primate cortex [9], cultured cortical networks [7], and cat visual cortex [11].

These studies have mainly operated close to the perturbative regime. For example, Schneidman et al. [7] had  (for the data set described in their Fig. 5), Tang et al. [9] had  to 0.4 (depending on the preparation), and Yu et al. [11] had  . For these studies, then, it would be hard to justify extrapolating to large populations.

The study by Shlens et al. [8], on the other hand, might be more amenable to extrapolation. This is because spatially localized visual patterns were used to stimulate retinal ganglion cells, for which a nearest neighbor maximum entropy model provided a good fit to their data. (Nearest neighbor means  is zero unless neuron  and neuron  are adjacent.) Our analysis still applies, but, since all but the nearest neighbor correlations are zero, many of the terms that make up  and  vanish (see Eqs. (42) and (44)). Consequently, the small parameter in the perturbative expansion becomes  (rather than  ), where  is the number of nearest neighbors. Since  is fixed, independent of the population size, the small parameter will not change as the population size increases. Thus, Shlens et al. may have tapped into the large population behavior even though they considered only a few cells at a time in their analysis. Indeed, they have recently extended their analysis to more than 100 neurons, and they still find that nearest neighbor maximum entropy models provide very good fits to the data [19].

Time bins and population size

That the pairwise model is always good if  is sufficiently small has strong implications: if we want to build a good model for a particular  , we can simply choose a bin size that is small compared to  . However, one of the assumptions in all pairwise models used on neural data is that bins at different times are independent. This produces a tension between small time bins and temporal independence: small time bins essentially ensure that a pairwise model will provide a close approximation to a model with independent bins, but they make adjacent bins highly correlated. Large time bins come with no such assurance, but they make adjacent bins independent. Unfortunately, this tension is often unresolvable in large populations, in the sense that pairwise models are assured to work only up to populations of size  where τ_corr is the typical correlation time. Given that  is at least several Hz, for experimental paradigms in which the correlation time is more than a few hundred ms,  is about one, and this assurance does not apply to even moderately sized populations of neurons.
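The arithmetic behind this last statement can be sketched as follows. This is a rough illustration only: it assumes that the relevant quantity is the product of the mean firing rate and the correlation time τ_corr, and that the population size up to which pairwise models are assured to work scales as the inverse of that product. Both are assumptions made for this sketch, and the function names and example values are hypothetical.

```python
def rate_times_correlation(rate_hz, tau_corr_s):
    """Dimensionless product of firing rate (Hz) and correlation time (s)."""
    return rate_hz * tau_corr_s


def assured_population_size(rate_hz, tau_corr_s):
    """Order-of-magnitude population size for which the assurance holds.

    ASSUMPTION: the bound scales as 1 / (rate * tau_corr); constants of
    order one are ignored.
    """
    return 1.0 / rate_times_correlation(rate_hz, tau_corr_s)


if __name__ == "__main__":
    # (rate in Hz, correlation time in seconds): illustrative values only
    examples = [(5.0, 0.2), (5.0, 0.02), (20.0, 0.3)]
    for rate_hz, tau_corr_s in examples:
        product = rate_times_correlation(rate_hz, tau_corr_s)
        bound = assured_population_size(rate_hz, tau_corr_s)
        print(f"rate = {rate_hz} Hz, tau_corr = {tau_corr_s} s: "
              f"product = {product:.2f}, assured size ~ {bound:.1f}")
```

Under these assumptions, a rate of a few Hz combined with a correlation time of a few hundred ms gives a product of about one, so the assured population size is on the order of a single neuron; only much shorter correlation times leave room for larger populations.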

These observations are especially relevant for studies that use small time bins to model spike trains driven by natural stimuli. This is because the long correlation times inherent in natural stimuli are passed on to the spike trains, so the assumption of independence across time (which is required for the factorization in Eq. (22) to be valid) breaks down badly. Knowing that these models are successful in describing spike trains under the independence assumption, then, does not tell us whether they will be successful in describing full, temporally correlated spike trains. Note that for studies that use stimuli with short correlation times (e.g., non-natural stimuli such as white noise), the temporal correlations in the spike trains are likely to be short, and using small time bins may be perfectly valid.

The only study that has investigated the issue of temporal correlations in maximum entropy models does indeed support the above picture [9]: for the parameters used in that study ( to 0.4), the pairwise maximum entropy model provided a good fit to the data ( was typically smaller than 0.1), but it did not do a good job modeling the temporal structure of the spike trains.

Other systems—Protein folding

As mentioned in the Introduction, in addition to the studies on neuronal data, studies on protein folding have also emphasized the role of pairwise interactions [2],[3]. Briefly, proteins consist of strings of amino acids, and a major question in structural biology is: what is the probability distribution of amino acid strings in naturally folding proteins? One way to answer this is to approximate the full probability distribution of naturally folding proteins from knowledge of single-site and pairwise distributions. One can show that there is a perturbative regime for proteins as well. This can be readily seen using the celebrated HP protein model [20], where a protein is composed of only two types of amino acids. If, at each site, one amino acid type is preferred and occurs with high probability, say  with  , then a protein of length shorter than  will be in the perturbative regime, and, therefore, a good match between the true distribution and the pairwise distribution for such a protein is virtually guaranteed.

Fortunately, the properties of real proteins generally prevent this from happening: at the majority of sites in a protein, the distribution of amino acids is not sharply peaked around one amino acid. Even for those sites that are sharply peaked (the evolutionarily conserved sites), the probability of the most likely amino acid,  , rarely exceeds 90% [21],[22]. This puts proteins consisting of only a few amino acids out of the perturbative regime, and puts longer proteins (the ones usually studied using pairwise models) well out of it.