Abstract

Variable selection is fundamental to high-dimensional statistical modeling. Many variable selection techniques may be implemented by maximum penalized likelihood using various penalty functions. Optimizing the penalized likelihood function is often challenging because it may be nondifferentiable and/or nonconcave. This article proposes a new class of algorithms for finding a maximizer of the penalized likelihood for a broad class of penalty functions. These algorithms operate by perturbing the penalty function slightly to render it differentiable, then optimizing this differentiable function using a minorize-maximize (MM) algorithm. MM algorithms are useful extensions of the well-known class of EM algorithms, a fact that allows us to analyze the local and global convergence of the proposed algorithm using some of the techniques employed for EM algorithms. In particular, we prove that when our MM algorithms converge, they must converge to a desirable point; we also discuss conditions under which this convergence may be guaranteed. We exploit the Newton-Raphson-like aspect of these algorithms to propose a sandwich estimator for the standard errors of the estimators. Our method performs well in numerical tests.

Keywords: AIC, BIC, EM algorithm, LASSO, MM algorithm, penalized likelihood, oracle estimator, SCAD

1. Introduction

Fan and Li (2001) discuss a family of variable selection methods that adopt a penalized likelihood approach. This family includes well-established methods such as AIC and BIC as well as more recent methods such as bridge regression (Frank and Friedman, 1993), LASSO (Tibshirani, 1996), and SCAD (Antoniadis and Fan, 2001). What all of these methods share is the fact that they require the maximization of a penalized likelihood function. Even when the log-likelihood itself is relatively easy to maximize, the penalized version may present numerical challenges. For example, in the case of SCAD or LASSO or bridge regression, the penalized log-likelihood function is nondifferentiable; with SCAD or bridge regression, the function is also nonconcave. To perform the maximization, Fan and Li (2001) propose a new and generic algorithm based on local quadratic approximation (LQA). In this article, we demonstrate and explore a connection between the LQA algorithm and minorization-maximization (MM) algorithms (Hunter and Lange, 2000), which shows that many different variable-selection techniques may be accomplished using the same algorithmic techniques.

MM algorithms exploit an optimization technique that extends the central idea of EM algorithms (Dempster et al., 1977) to situations not necessarily involving missing data nor even maximum likelihood estimation. The connection between LQA and MM enables us to analyze the convergence of the local quadratic approximation algorithm using techniques related to EM algorithms (Wu, 1983; Meng, 1994; Lange, 1995; Meng and Van Dyk, 1997). Furthermore, we extend the local quadratic approximation idea here by forming a slightly perturbed objective function to maximize. This perturbation solves two problems at once. First, it renders the objective function differentiable, which allows us to prove results regarding the convergence of the MM algorithms discussed here. Second, it repairs one of the drawbacks that the LQA algorithm shares with forward variable selection: Namely, if a covariate is deleted at any step in the LQA algorithm, it will necessarily be excluded from the final selected model. We discuss how to decide a priori how large a perturbation to choose when implementing this method and make specific comments about the price paid for using this perturbation.

The new algorithm we propose retains virtues of the Newton-Raphson algorithm, which among other things allows us to compute a standard error for the resulting estimator via a sandwich formula. It is also numerically stable and is never forced to delete a covariate permanently in the process of iteration. The general convergence results known for MM algorithms imply among other things that the newly proposed algorithm converges correctly to the maximizer of the perturbed penalized likelihood whenever this maximizer is the unique local maximum. The linear rate of convergence of the algorithm is governed by the largest eigenvalue of the derivative of the algorithm map.

The rest of the article is organized as follows. Section 2 briefly introduces the variable selection problem and the penalized likelihood approach. After providing some background on MM algorithms, Section 3 explains their connection to the LQA idea, then provides a modification to LQA that may be shown to be an MM algorithm for maximizing a perturbed version of the penalized likelihood. Various convergence properties of this new MM algorithm are also covered in Section 3. Section 4 describes a method of estimating covariances and presents numerical tests of the algorithm on a set of four diverse problems. Finally, Section 5 discusses the numerical results and offers some broad comparisons among the competing methods studied in Section 4. Some proofs appear in the Appendix.

2. Variable selection via maximum penalized likelihood

Suppose that $\{(x_i, y_i): i = 1, \ldots, n\}$ is a random sample with conditional log-likelihood $\ell_i(\beta, \phi) \equiv \ell_i(x_i^T\beta, y_i, \phi)$ given $x_i$. Typically, the $y_i$ are response variables that depend on the predictors $x_i$ through a linear combination $x_i^T\beta$, and $\phi$ is a dispersion parameter. Some of the components of β may be zero, which means that the corresponding predictors do not influence the response. The goal of variable selection is to identify those components of β that are zero. A secondary goal in this article will be to estimate the nonzero components of β.

In some variable selection applications, such as standard Poisson or logistic regression, no dispersion parameter φ exists. In other applications, such as linear regression, φ is to be estimated separately after β is estimated. Therefore, the penalized likelihood approach does not penalize φ, so we simplify notation in the remainder of this article by eliminating explicit reference to φ. In particular, ℓi(β, φ) will be written ℓi(β). This is standard practice in the variable selection literature; see, for example, Frank and Friedman (1993), Tibshirani (1996), Fan and Li (2001) or Miller (2002).

Many variable selection criteria arise as special cases of the general formulation discussed in Fan and Li (2001), where the penalized likelihood function takes the form

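$$Q(\beta) = \sum_{i=1}^{n} \ell_i(\beta) - n\sum_{j=1}^{d} \lambda_j\, p_j(|\beta_j|) = \ell(\beta) - n\sum_{j=1}^{d} \lambda_j\, p_j(|\beta_j|). \tag{2.1}$$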

In equation (2.1), the pj(·) are given nonnegative penalty functions, d is the dimension of the covariate vector xi, and the λj are tuning parameters controlling model complexity. The selected model based on the maximized penalized likelihood (2.1) satisfies pj(|βj|) = 0 for certain βj’s, which accordingly are not included in this final model, and so model estimation is performed at the same time as model selection. Often, the λj may be chosen by a data-driven approach such as cross-validation or generalized cross-validation (Craven and Wahba, 1979).

The penalty functions pj(·) and the tuning parameters λj are not necessarily the same for all j. This allows one to incorporate hierarchical prior information for the unknown coefficients by using different penalty functions and taking different values of λj for the different regression coefficients. For instance, one may not be willing to penalize important factors in practice. For ease of presentation, we assume throughout this article that the same penalization is applied to every component of β and write λjpj(|βj|) as pλ (|βj|), which implies that the penalty function is allowed to depend on λ. Extensions to situations with different penalty functions for each component of β do not involve any extra difficulties except more tedious notation.

Many well-known variable selection criteria are special cases of the penalized likelihood of equation (2.1). For instance, consider the L0 penalty $p_\lambda(|\beta|) = 0.5\lambda^2 I(|\beta| \neq 0)$, also called the entropy penalty in the literature, where I(·) is an indicator function. Note that the dimension or the size of a model equals $\sum_j I(|\beta_j| \neq 0)$, the number of nonzero regression coefficients in the model. In other words, the penalized likelihood (2.1) with the entropy penalty can be rewritten as

$$\ell(\beta) - 0.5\, n\lambda^2 |M|,$$

where $|M| = \sum_j I(|\beta_j| \neq 0)$ is the size of the underlying candidate model. Hence, several popular variable selection criteria can be derived from the penalized likelihood (2.1) by choosing different values of λ. For instance, the AIC (or Cp) and BIC criteria correspond to $\lambda = \sqrt{2/n}$ and $\lambda = \sqrt{(\log n)/n}$, respectively, although these criteria were motivated from different principles. Similar in its effect to the entropy penalty function is the hard thresholding penalty function (see Antoniadis, 1997) given by

$$p_\lambda(|\beta|) = \lambda^2 - (|\beta| - \lambda)^2\, I(|\beta| < \lambda).$$

Recently, many authors have been working on penalized least squares with the Lq penalty $p_\lambda(|\beta|) = \lambda|\beta|^q$. Indeed, bridge regression is the solution of penalized least squares with the Lq penalty (Frank and Friedman, 1993). It is well known that ridge regression is the solution of penalized likelihood with the L2 penalty. The L1 penalty results in LASSO, proposed by Tibshirani (1996). Finally, there is the smoothly clipped absolute deviation (SCAD) penalty of Fan and Li (2001). For fixed a > 2, the SCAD penalty is the continuous function $p_\lambda(\cdot)$ defined by $p_\lambda(0) = 0$ and, for β ≠ 0,

$$p'_\lambda(|\beta|) = \lambda\, I(|\beta| \le \lambda) + \frac{(a\lambda - |\beta|)_+}{a - 1}\, I(|\beta| > \lambda), \tag{2.2}$$

where throughout this article $p'_\lambda(|\beta|)$ denotes the derivative of $p_\lambda(\cdot)$ evaluated at |β|.

Letting $p'_\lambda(|\beta|+)$ denote the limit of $p'_\lambda(x)$ as x → |β| from above, the MM algorithms introduced in the next section are shown to apply to any continuous penalty function $p_\lambda(\beta)$ that is nondecreasing and concave on (0, ∞) such that $p'_\lambda(0+) < \infty$. The previously mentioned penalty functions that satisfy these criteria are hard thresholding, SCAD, LASSO, and Lq with 0 < q ≤ 1. Therefore, the methods presented in this article enable a wide range of variable selection algorithms.
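As a concrete illustration of the penalties just listed, the following minimal Python sketch evaluates the right derivatives $p'_\lambda(\theta+)$ for the L1, hard thresholding, and SCAD penalties, together with the SCAD penalty itself obtained by integrating its derivative. The function names are hypothetical and chosen only for this example; they do not come from the article.

```python
def l1_deriv(theta, lam):
    """Right derivative of the L1 (LASSO) penalty lam * theta for theta >= 0."""
    return lam


def hard_deriv(theta, lam):
    """Right derivative of lam^2 - (theta - lam)^2 * I(theta < lam) for theta >= 0."""
    return 2.0 * (lam - theta) if theta < lam else 0.0


def scad_deriv(theta, lam, a=3.7):
    """SCAD derivative: lam on [0, lam], then (a*lam - theta)_+ / (a - 1)."""
    if theta <= lam:
        return lam
    return max(a * lam - theta, 0.0) / (a - 1.0)


def scad_penalty(theta, lam, a=3.7):
    """SCAD penalty, i.e. the integral of scad_deriv from 0 to |theta|."""
    t = abs(theta)
    if t <= lam:
        return lam * t
    if t <= a * lam:
        return -(t * t - 2.0 * a * lam * t + lam * lam) / (2.0 * (a - 1.0))
    return (a + 1.0) * lam * lam / 2.0
```

All three derivatives are finite and strictly positive at zero (λ, 2λ, and λ respectively) and nonincreasing on (0, ∞), which is the shape the criteria above require.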

Nonetheless, there are some common penalty functions that do not meet our criteria. The entropy penalty is excluded because it is discontinuous, and in fact maximizing the AIC- or BIC-penalized likelihood in cases other than linear regression often requires exhaustive fitting of all possible models. For q > 1, the Lq penalty is excluded because it is not concave on (0, ∞); however, the fact that $p_\lambda(|\beta|) = \lambda|\beta|^q$ is everywhere differentiable suggests that the penalized likelihood function may be susceptible to gradient-based methods and hence alternatives such as MM may be of limited value. In particular, the special case of ridge regression (q = 2) admits a closed-form maximizer, a fact we allude to following equation (3.17). But there is a more subtle reason for excluding Lq penalties with q > 1. Note that $p'_\lambda(0+) > 0$ for any of our nonexcluded penalty functions. As Fan and Li (2001) point out, this fact (which they call singularity at the origin because it implies a discontinuous derivative at zero) ensures that the penalized likelihood has the sparsity property: The resulting estimator is automatically a thresholding rule that sets small estimated coefficients to zero, thus reducing model complexity. Sparsity is an important property for any penalized likelihood technique that is to be useful in a variable selection setting.

3. Maximized penalized likelihood via MM

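It is sometimes a challenging task to find the maximum penalized likelihood estimate. Fan and Li (2001) propose a local quadratic approximation for the penalty function: Suppose that we are given an initial value $\beta^{(0)}$. If $\beta_j^{(0)}$ is very close to 0, then set $\hat\beta_j = 0$; otherwise, the penalty function is locally approximated by a quadratic function using

$$\frac{\partial}{\partial\beta_j}\{p_\lambda(|\beta_j|)\} = p'_\lambda(|\beta_j|+)\,\mathrm{sgn}(\beta_j) \approx \frac{p'_\lambda(|\beta_j^{(0)}|+)}{|\beta_j^{(0)}|}\,\beta_j$$

when $\beta_j^{(0)} \neq 0$. In other words,

$$p_\lambda(|\beta_j|) \approx p_\lambda(|\beta_j^{(0)}|) + \frac{\{\beta_j^2 - (\beta_j^{(0)})^2\}\; p'_\lambda(|\beta_j^{(0)}|+)}{2|\beta_j^{(0)}|} \tag{3.1}$$

for $\beta_j \approx \beta_j^{(0)}$. With the aid of this local quadratic approximation, a Newton-Raphson algorithm (for example) can be used to maximize the penalized likelihood function, where each iteration updates the local quadratic approximation.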

In this section, we show that this local quadratic approximation idea is an instance of an MM algorithm. This fact enables us to study the convergence properties of the algorithm using techniques applicable to MM algorithms in general. Throughout this section, we refrain from specifying the form of pλ(·), since the derivations apply equally to any one of hard thresholding, LASSO, bridge regression using Lq with 0 < q ≤ 1, SCAD, or any other method with penalty function pλ(·) satisfying the conditions of Proposition 3.1.

3.1. Local Quadratic Approximation as an MM algorithm

MM stands for Majorize-Minimize or Minorize-Maximize, depending on the context (Hunter and Lange, 2000). EM algorithms (Dempster et al., 1977), in which the E-step may be shown to be equivalent to a minorization step, are the most famous examples of MM algorithms, though there are many examples of MM algorithms that involve neither maximum likelihood nor missing data. Heiser (1995) and Lange et al. (2000) give partial surveys of work in this area. The apparent ambiguity in allowing MM to have two different meanings is harmless, since any maximization problem may be viewed as a minimization problem by changing the sign of the objective function.

Consider the penalty term −n Σjpλ(|βj|) of equation (2.1), ignoring its minus sign for the moment. Mimicking the idea of Equation (3.1), we define the function

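$$\Phi_{\theta_0}(\theta) = p_\lambda(|\theta_0|) + \frac{(\theta^2 - \theta_0^2)\; p'_\lambda(|\theta_0|+)}{2|\theta_0|}. \tag{3.2}$$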

We assume that $p_\lambda(\cdot)$ is piecewise differentiable so that $p'_\lambda(|\theta|+)$ exists for all θ. Thus, $\Phi_{\theta_0}(\theta)$ is a well-defined quadratic function of θ for all real $\theta_0$ except for $\theta_0 = 0$. Section 3.2 remedies the problem that $\Phi_{\theta_0}(\theta)$ is undefined when $\theta_0 = 0$.

We are interested in penalty functions pλ(θ) for which

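$$\Phi_{\theta_0}(\theta) \ge p_\lambda(|\theta|) \ \text{ for all } \theta, \text{ with equality when } \theta = \theta_0. \tag{3.3}$$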

A function Φθ0(θ) satisfying condition (3.3) is said to majorize pλ(|θ|) at θ0. If the direction of the inequality in condition (3.3) were reversed, then Φθ0(θ) would be said to minorize pλ(|θ|) at θ0.

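The driving force behind an MM algorithm is the fact that condition (3.3) implies

$$\Phi_{\theta_0}(\theta) - \Phi_{\theta_0}(\theta_0) \ge p_\lambda(|\theta|) - p_\lambda(|\theta_0|),$$

which in turn gives the descent property

$$\Phi_{\theta_0}(\theta) < \Phi_{\theta_0}(\theta_0) \quad\text{implies}\quad p_\lambda(|\theta|) < p_\lambda(|\theta_0|). \tag{3.4}$$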

In other words, if θ0 denotes the current iterate, any decrease in the value of Φθ0(θ) guarantees a decrease in the value of pλ(|θ|). If θk denotes the estimate of the parameter at the kth iteration, then an iterative minimization algorithm would exploit the descent property by constructing the majorizing function Φθk(θ), then minimizing it to give θk+1 — hence the name “majorize-minimize algorithm”. Proposition 3.1 gives sufficient conditions on the penalty function pλ(·) in order that Φθ0(θ) majorizes pλ (|θ|). Several different penalty functions that satisfy these conditions are depicted in Figure 1 along with their majorizing quadratic functions.

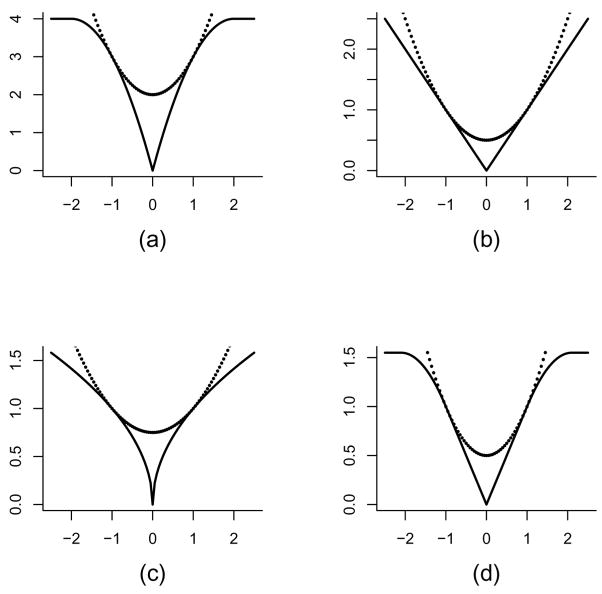

Fig. 1.

Majorizing functions Φθ0 (θ) for various penalty functions are shown as dotted curves; the penalty functions are shown as solid curves. The four penalties are (a) hard thresholding with λ = 2; (b) L1 with λ = 1; (c) L0.5 with λ = 1; and (d) SCAD with a = 2.1 and λ = 1. In each case, θ0 = 1.

Proposition 3.1

Suppose that on (0, ∞), $p_\lambda(\cdot)$ is piecewise differentiable, nondecreasing, and concave. Furthermore, $p_\lambda(\cdot)$ is continuous at 0 and $p'_\lambda(0+) < \infty$. Then for all $\theta_0 \neq 0$, $\Phi_{\theta_0}(\theta)$ as defined in equation (3.2) majorizes $p_\lambda(|\theta|)$ at the points $\pm|\theta_0|$. In particular, conditions (3.3) and (3.4) hold.
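The majorization claimed in Proposition 3.1 can be checked numerically. The sketch below builds the quadratic of equation (3.2) for two of the penalties shown in Figure 1 (L1 with λ = 1 and hard thresholding with λ = 2, both at θ0 = 1) and evaluates the gap between the quadratic and the penalty on a grid. The helper names and the grid are assumptions made only for this illustration.

```python
def majorizer(theta, theta0, penalty, deriv):
    """Quadratic Phi_{theta0}(theta) of equation (3.2); requires theta0 != 0."""
    t0 = abs(theta0)
    return penalty(t0) + (theta * theta - theta0 * theta0) * deriv(t0) / (2.0 * t0)


# L1 penalty with lambda = 1 (panel (b) of Figure 1).
def l1(t):
    return abs(t)


def l1_d(t):
    return 1.0


# Hard thresholding penalty with lambda = 2 (panel (a) of Figure 1).
def hard(t):
    t = abs(t)
    return 4.0 - (t - 2.0) ** 2 if t < 2.0 else 4.0


def hard_d(t):
    return 2.0 * (2.0 - t) if t < 2.0 else 0.0


grid = [i / 100.0 for i in range(-400, 401)]
gap_l1 = [majorizer(t, 1.0, l1, l1_d) - l1(t) for t in grid]
gap_hard = [majorizer(t, 1.0, hard, hard_d) - hard(t) for t in grid]
```

Both gap lists are nonnegative over the grid and vanish at θ = ±1, which is the content of condition (3.3) together with the symmetry noted in the proposition.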

Next, suppose that we wish to employ the local quadratic approximation idea in an iterative algorithm, where $\beta^{(k)}$ denotes the value of β at the kth iteration. Appending negative signs to $p_\lambda(|\beta_j|)$ and to $\Phi_{\beta_j^{(k)}}(\beta_j)$ to turn majorization into minorization, we obtain the following corollary from Proposition 3.1 and equation (2.1):

Corollary 3.1

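Suppose that $\beta_j^{(k)} \neq 0$ for all j and that $p_\lambda(\theta)$ satisfies the conditions given in Proposition 3.1. Then

$$S_k(\beta) = \ell(\beta) - n\sum_{j=1}^{d} \Phi_{\beta_j^{(k)}}(\beta_j) \tag{3.5}$$

minorizes Q(β) at $\beta^{(k)}$.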

By the ascent property (the analogue, for minorizing functions, of the descent property (3.4)), Corollary 3.1 suggests that given $\beta^{(k)}$, we should define $\beta^{(k+1)}$ to be the maximizer of $S_k(\beta)$, thereby ensuring that $Q(\beta^{(k+1)}) > Q(\beta^{(k)})$. The benefit of replacing one maximization problem by another in this way is that $S_k(\beta)$ is susceptible to a gradient-based scheme such as Newton-Raphson, unlike the non-differentiable function Q(β). Since the sum in equation (3.5) is a quadratic function of β (in fact, the Hessian matrix of this sum is a diagonal matrix), this sum presents no difficulties for maximization. Therefore, the difficulty of maximizing $S_k(\beta)$ is determined solely by the form of ℓ(β). For example, in the special case of a linear regression model with normally distributed errors, the log-likelihood function ℓ(β) is itself quadratic, which implies that $S_k(\beta)$ may be maximized analytically.

If some of the components of $\beta^{(k)}$ equal zero (or in practice, if some of them are close to zero), the algorithm proceeds by simply setting the final estimates of those components to be zero, deleting them from consideration, then defining the function $S_k(\tilde\beta)$ as in equation (3.5), where $\tilde\beta$ is the vector composed of the nonzero components of β. The weakness of this scheme is that once a component is set to zero, it may never reenter the model at a later stage of the algorithm. The modification proposed in Section 3.2 eliminates this weakness.

3.2. An improved version of local quadratic approximation

The drawback of Φθ0(θ) in equation (3.2) is that when θ0 = 0, the denominator 2|θ0| makes Φθ0(θ) undefined. We therefore replace 2|θ0| by 2(ε + |θ0|) for some ε > 0. The resulting perturbed version of Φθ0(θ), which is defined for all real θ0, is no longer a majorizer of pλ(θ) as required by the MM theory. Nonetheless, we may show that it majorizes a perturbed version of pλ(θ), which may therefore be used to define a new objective function Qε(β) that is similar to Q(β). To this end, we define

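$$p_{\lambda,\varepsilon}(|\theta|) = p_\lambda(|\theta|) - \varepsilon\int_0^{|\theta|} \frac{p'_\lambda(t)}{\varepsilon + t}\,dt \tag{3.6}$$

and

$$Q_\varepsilon(\beta) = \ell(\beta) - n\sum_{j=1}^{d} p_{\lambda,\varepsilon}(|\beta_j|). \tag{3.7}$$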

The next proposition shows that an MM algorithm may be applied to the maximization of Qε(β) and suggests that a maximizer of Qε(β) should be close to a maximizer of Q(β) as long as ε is small and Q(β) is not too flat in the neighborhood of the maximizer.

Proposition 3.2

Suppose that pλ (·) satisfies the conditions of Proposition 3.1. For ε > 0, define

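$$\Phi_{\theta_0,\varepsilon}(\theta) = p_{\lambda,\varepsilon}(|\theta_0|) + \frac{(\theta^2 - \theta_0^2)\; p'_\lambda(|\theta_0|+)}{2(\varepsilon + |\theta_0|)}. \tag{3.8}$$

Then (a) For any fixed ε > 0,

$$S_{k,\varepsilon}(\beta) = \ell(\beta) - n\sum_{j=1}^{d} \Phi_{\beta_j^{(k)},\varepsilon}(\beta_j) \tag{3.9}$$

minorizes $Q_\varepsilon(\beta)$ at $\beta^{(k)}$.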

(b) As ε↓ 0, |Qε (β) − Q(β)| → 0 uniformly on compact subsets of the parameter space.
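For the L1 penalty the perturbed penalty has a closed form, which makes both parts of the proposition easy to inspect. Assuming the perturbation takes the form $p_{\lambda,\varepsilon}(|\theta|) = p_\lambda(|\theta|) - \varepsilon\int_0^{|\theta|} p'_\lambda(t)/(\varepsilon+t)\,dt$, setting $p'_\lambda(t) = \lambda$ gives $\lambda|\theta| - \varepsilon\lambda\log(1 + |\theta|/\varepsilon)$. The sketch below, with hypothetical function names, evaluates this penalty and the corresponding perturbed quadratic, which remains defined at θ0 = 0.

```python
import math


def l1_perturbed(theta, lam, eps):
    """Perturbed L1 penalty: lam*|theta| - eps*lam*log(1 + |theta|/eps)."""
    t = abs(theta)
    return lam * t - eps * lam * math.log1p(t / eps)


def majorizer_eps(theta, theta0, lam, eps):
    """Perturbed quadratic for L1: denominator 2*(eps + |theta0|), so theta0 = 0 is allowed."""
    t0 = abs(theta0)
    return l1_perturbed(t0, lam, eps) + (theta * theta - theta0 * theta0) * lam / (2.0 * (eps + t0))


def max_gap(lam, eps, radius=5.0):
    """Largest value of p_lambda - p_{lambda,eps} on [-radius, radius] (attained at the endpoints)."""
    return lam * radius - l1_perturbed(radius, lam, eps)


grid = [i / 100.0 for i in range(-500, 501)]
gaps = [max_gap(1.0, eps) for eps in (1e-2, 1e-4, 1e-6)]
```

The list `gaps` shrinks as ε decreases, in line with part (b), while the quadratic stays above the perturbed penalty for every θ0, including θ0 = 0, in line with part (a).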

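Note that when the MM algorithm converges and $S_{k,\varepsilon}(\beta)$ is maximized by $\beta^{(k)}$, it follows by straightforward differentiation that

$$\frac{\partial S_{k,\varepsilon}(\beta^{(k)})}{\partial\beta_j} = \frac{\partial\ell(\beta^{(k)})}{\partial\beta_j} - n\,p'_\lambda(|\beta_j^{(k)}|+)\,\mathrm{sgn}(\beta_j^{(k)})\,\frac{|\beta_j^{(k)}|}{\varepsilon + |\beta_j^{(k)}|} = 0.$$

Thus, when ε is small, the resulting estimator β̂ approximately satisfies the penalized likelihood equation

$$\frac{\partial Q(\hat\beta)}{\partial\beta_j} = \frac{\partial\ell(\hat\beta)}{\partial\beta_j} - n\,\mathrm{sgn}(\hat\beta_j)\,p'_\lambda(|\hat\beta_j|+) = 0. \tag{3.10}$$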

Suppose that $\hat\beta_\varepsilon$ denotes a maximizer of $Q_\varepsilon(\beta)$ and $\hat\beta_0$ denotes a maximizer of Q(β). In general, it is impossible to bound $\|\hat\beta_\varepsilon - \hat\beta_0\|$ as a function of ε because Q(β) may be quite flat near its maximum. However, suppose that $Q_\varepsilon(\beta)$ is upper compact, which means that $\{\beta: Q_\varepsilon(\beta) \ge c\}$ is a compact subset of $R^d$ for any real constant c. In this case, we may obtain the following corollary of Proposition 3.2(b).

Corollary 3.2

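Suppose that $\hat\beta_\varepsilon$ denotes a maximizer of $Q_\varepsilon(\beta)$. If $Q_\varepsilon(\beta)$ is upper compact for all ε ≥ 0, then under the conditions of Proposition 3.1, any limit point of the sequence $\{\hat\beta_\varepsilon\}_{\varepsilon\downarrow 0}$ is a maximizer of Q(β).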

Both Proposition 3.2(b) and Corollary 3.2 give results as ε ↓ 0, which suggests the use of an algorithm in which ε is allowed to go to zero as the iterations progress. Certainly it would be possible to implement such an algorithm. However, in this article we interpret these results merely as theoretical justification for using the ε perturbation in the first place, and instead we hold ε fixed throughout the algorithms we discuss. The choice of this fixed ε is the subject of the next subsection.

3.3. Choice of ε

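Essentially, we want to solve the penalized likelihood equation (3.10) for $\hat\beta_j \neq 0$ (recall that $p_\lambda(|\beta_j|)$ is not differentiable at $\beta_j = 0$). Suppose, therefore, that convergence is declared in a numerical algorithm whenever $|\partial Q(\hat\beta)/\partial\beta_j| < \tau$ for a predetermined small tolerance τ. Our algorithm accomplishes this by declaring convergence whenever $|\partial Q_\varepsilon(\hat\beta)/\partial\beta_j| < \tau/2$, where ε satisfies

$$\left|\frac{\partial Q_\varepsilon(\hat\beta)}{\partial\beta_j} - \frac{\partial Q(\hat\beta)}{\partial\beta_j}\right| < \frac{\tau}{2}. \tag{3.11}$$

Since $p'_\lambda(\theta)$ is nonincreasing on (0, ∞), equation (3.6) implies

$$\left|\frac{\partial Q_\varepsilon(\hat\beta)}{\partial\beta_j} - \frac{\partial Q(\hat\beta)}{\partial\beta_j}\right| = \frac{n\varepsilon\,p'_\lambda(|\hat\beta_j|+)}{|\hat\beta_j| + \varepsilon} < \frac{n\varepsilon\,p'_\lambda(0+)}{|\hat\beta_j|}$$

for $\hat\beta_j \neq 0$. Thus, to ensure that inequality (3.11) is satisfied, simply take

$$\varepsilon \le \frac{\tau\,|\hat\beta_j|}{2n\,p'_\lambda(0+)}.$$

This may of course lead to a different value of ε each time β changes; therefore, in our implementations we fix

$$\varepsilon = \frac{\tau}{2n\,p'_\lambda(0+)}\,\min\{|\beta_j^{(0)}| : \beta_j^{(0)} \neq 0\}. \tag{3.12}$$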

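When the algorithm converges, if $|\partial Q(\hat\beta)/\partial\beta_j| > \tau$, then $\beta_j$ is presumed to be zero: such a component satisfies the perturbed likelihood equation but not the unperturbed one, which happens only when $\hat\beta_j$ lies so close to zero that the perturbation matters. In the numerical examples of Section 4, we take $\tau = 10^{-8}$.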
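The fixed choice of ε described in this subsection amounts to a one-line computation. The sketch below assumes the rule ε = τ · min{|βj(0)| : βj(0) ≠ 0} / (2n p′λ(0+)), which is what the bound on ε derived above gives when it is applied to the smallest nonzero starting coefficient. The function name and argument names are hypothetical.

```python
def choose_epsilon(beta0, n, deriv_at_zero, tau=1e-8):
    """Fixed perturbation: tau * min nonzero |beta0_j| / (2 * n * p'_lambda(0+)).

    beta0          initial coefficient vector (e.g. the unpenalized MLE)
    n              sample size
    deriv_at_zero  p'_lambda(0+), which equals lambda for L1 and SCAD
    tau            convergence tolerance on the penalized likelihood equation
    """
    nonzero = [abs(b) for b in beta0 if b != 0.0]
    if not nonzero:
        raise ValueError("beta0 must have at least one nonzero component")
    return tau * min(nonzero) / (2.0 * n * deriv_at_zero)


eps = choose_epsilon([3.0, 0.0, -1.5], n=100, deriv_at_zero=0.5)
```

With these inputs the smallest nonzero starting value is 1.5, so ε is 1.5 × 10⁻⁸ divided by 2 · 100 · 0.5 = 100.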

3.4. The algorithm

By the ascent property of an MM algorithm, β(k+1) should be defined at the kth iteration so that

$$S_{k,\varepsilon}(\beta^{(k+1)}) > S_{k,\varepsilon}(\beta^{(k)}). \tag{3.13}$$

Note that if $\beta^{(k+1)}$ satisfies inequality (3.13) without actually maximizing $S_{k,\varepsilon}(\beta)$, we still refer to the algorithm as an MM algorithm, even though the second M (for “maximize”) isn’t quite accurate. Alternatively, we could adopt the convention used for EM algorithms by Dempster et al. (1977) and refer to such algorithms as generalized MM, or GMM, algorithms; however, in this article we prefer to require extra duty of the label MM and avoid further crowding the field of acronym-named algorithms.

From equation (3.9) we see that Sk,ε (β) consists of two parts, ℓ(β) and the sum of quadratic functions of the components of β. The latter part is easy to maximize directly; thus, the difficulty of maximizing Sk,ε(β), or at least attaining inequality (3.13), is solely determined by the form of ℓ(β). In general, when ℓ(β) is easy to maximize then so is Sk,ε(β), which distinguishes Sk,ε(β) from the (ε-perturbed) penalized likelihood Qε(β). Even if ℓ(β) is not easily maximized, at least if it is differentiable then so is Sk,ε(β), which means inequality (3.13) may be attained using standard gradient-based maximization methods such as Newton-Raphson. The function Qε(β), though differentiable, is not easily optimized using gradient-based methods because it is very close to the nondifferentiable function Q(β).

Although it is impossible to detail all possible forms of likelihood functions ℓ(β) to which these methods apply, we begin with the completely general Newton-Raphson-based algorithm

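$$\beta^{(k+1)} = \beta^{(k)} - \alpha_k\,[\nabla^2 S_{k,\varepsilon}(\beta^{(k)})]^{-1}\,\nabla S_{k,\varepsilon}(\beta^{(k)}), \tag{3.14}$$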

where ∇2Sk,ε(·) and ∇Sk,ε(·) denote the Hessian matrix and gradient vector, respectively, and αk is some positive scalar. Using the definition (3.9) of Sk,ε(β), algorithm (3.14) becomes

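$$\beta^{(k+1)} = \beta^{(k)} - \alpha_k\,[\nabla^2\ell(\beta^{(k)}) - nE_k]^{-1}\,[\nabla\ell(\beta^{(k)}) - nE_k\beta^{(k)}], \tag{3.15}$$

where $E_k$ is the diagonal matrix with (j, j)th entry $p'_\lambda(|\beta_j^{(k)}|+)/(\varepsilon + |\beta_j^{(k)}|)$. We take the ordinary maximum likelihood estimate to be the initial value $\beta^{(0)}$ in the numerical examples of Section 4.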

There are some important special cases. First, we consider perhaps the simplest case but by far the most important case because of its ubiquity — the linear regression model with normal homoscedastic errors, for which

$$\nabla\ell(\beta) = X^T y - X^T X\beta \quad\text{and}\quad \nabla^2\ell(\beta) = -X^T X, \tag{3.16}$$

where $X = (x_1, \ldots, x_n)^T$, the design matrix of the regression model, and y is the response vector consisting of $y_i$. As pointed out at the beginning of Section 2, we omit mention of the error variance parameter $\sigma^2$ here because this parameter is to be estimated using standard methods once β has been estimated. In this case, equation (3.15) with $\alpha_k = 1$ gives a closed-form maximizer of $S_{k,\varepsilon}(\beta)$ because $S_{k,\varepsilon}(\beta)$ is exactly a quadratic function. The resulting algorithm

$$\beta^{(k+1)} = \{X^T X + nE_k\}^{-1} X^T y \tag{3.17}$$

may be viewed as iterative ridge regression. In the case of LASSO, which uses the L1 penalty, this algorithm is guaranteed to converge to the unique maximizer of Qε(β) (see Corollary 3.3).
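The iterative ridge regression just described can be sketched in a few lines of NumPy. The sketch assumes the L1 penalty, the log-likelihood ℓ(β) = −½‖y − Xβ‖² (error variance omitted, as in the text), the update β ← (XᵀX + nE)⁻¹Xᵀy with E diagonal with entries λ/(ε + |βj|), and the closed-form perturbed penalty λ|θ| − ελ log(1 + |θ|/ε). The function names, the simulated data, and the values of λ and ε are illustrative assumptions, not the settings used in the article.

```python
import numpy as np


def q_eps_l1(beta, X, y, lam, eps):
    """Perturbed penalized log-likelihood Q_eps for linear regression with L1 penalty."""
    n = X.shape[0]
    resid = y - X @ beta
    t = np.abs(beta)
    penalty = lam * t - eps * lam * np.log1p(t / eps)
    return -0.5 * resid @ resid - n * penalty.sum()


def mm_lasso(X, y, lam, eps=1e-6, max_iter=500, tol=1e-10):
    """Iterative ridge regression: each step solves a ridge problem with weights E_k."""
    n, _ = X.shape
    XtX, Xty = X.T @ X, X.T @ y
    beta = np.linalg.solve(XtX, Xty)              # ordinary least squares start
    trace = [q_eps_l1(beta, X, y, lam, eps)]      # objective values, for the ascent check
    for _ in range(max_iter):
        E = np.diag(lam / (eps + np.abs(beta)))   # diagonal matrix E_k
        beta_new = np.linalg.solve(XtX + n * E, Xty)
        trace.append(q_eps_l1(beta_new, X, y, lam, eps))
        converged = np.max(np.abs(beta_new - beta)) < tol
        beta = beta_new
        if converged:
            break
    return beta, trace


rng = np.random.default_rng(0)
X = rng.standard_normal((100, 5))
beta_true = np.array([3.0, 0.0, 1.5, 0.0, 0.0])
y = X @ beta_true + rng.standard_normal(100)
beta_hat, trace = mm_lasso(X, y, lam=0.2)
```

Recording the objective in `trace` follows the debugging advice given in this section: the sequence of perturbed objective values should never decrease, and a violation would point to a programming error rather than a property of the algorithm.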

The slightly more general case of generalized linear models with canonical link includes common procedures such as logistic regression and Poisson regression. In these cases, the Hessian matrix is

$$\nabla^2\ell(\beta) = -X^T V X,$$

where V is a diagonal matrix whose (i, i)th entry is given by $v(x_i^T\beta)$ and v(·) is the variance function. Therefore, the Hessian matrix is negative definite provided that X is of full rank. This means that the vector $-[\nabla^2 S_{k,\varepsilon}(\beta^{(k)})]^{-1}\,\nabla S_{k,\varepsilon}(\beta^{(k)})$ in equation (3.14) is a direction of ascent (unless of course $\nabla S_{k,\varepsilon}(\beta^{(k)}) = 0$), which guarantees the existence of a positive $\alpha_k$ such that $\beta^{(k+1)}$ satisfies inequality (3.13). A simple way of determining $\alpha_k$ is the practice of step-halving: Try $\alpha_k = 2^{-\nu}$ for ν = 0, 1, 2, … until the resulting $\beta^{(k+1)}$ satisfies inequality (3.13). For large samples in practice, ℓ(β) tends to be nearly quadratic, particularly in the vicinity of the MLE (which is close to the penalized MLE for large samples), so step-halving does not need to be employed very often. Nonetheless, in our experience it is always wise to check that inequality (3.13) is satisfied; even when the truth of this inequality is guaranteed as in the linear regression model, checking the inequality is often a useful debugging tool. Indeed, whenever possible it is a good idea to check that the objective function itself satisfies $Q_\varepsilon(\beta^{(k+1)}) > Q_\varepsilon(\beta^{(k)})$; for even though this inequality is guaranteed theoretically by inequality (3.13), in practice many a programming error is caught using this simple check.
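The step-halving rule can be illustrated for logistic regression, where the variance function gives V entries μ(1 − μ). The sketch below assumes the L1 penalty and drops from the surrogate the additive terms that do not depend on β, which leaves ℓ(β) − (n/2) Σj wj βj² with wj = λ/(ε + |βj(k)|). All function names, the simulated data, the starting value, and the tuning constants are assumptions made for the example.

```python
import numpy as np


def loglik(beta, X, y):
    """Logistic log-likelihood: sum of y*eta - log(1 + exp(eta))."""
    eta = X @ beta
    return y @ eta - np.logaddexp(0.0, eta).sum()


def surrogate(beta, beta_k, X, y, lam, eps):
    """Minorizing surrogate at beta_k for the L1 penalty, up to an additive constant."""
    n = X.shape[0]
    w = lam / (eps + np.abs(beta_k))
    return loglik(beta, X, y) - 0.5 * n * np.sum(w * beta ** 2)


def mm_newton_step(beta_k, X, y, lam, eps, max_halvings=30):
    """One Newton-type step on the surrogate, halving alpha until the surrogate increases."""
    n = X.shape[0]
    mu = 1.0 / (1.0 + np.exp(-(X @ beta_k)))
    E = np.diag(lam / (eps + np.abs(beta_k)))
    grad = X.T @ (y - mu) - n * E @ beta_k
    hess = -(X.T * (mu * (1.0 - mu))) @ X - n * E    # -X^T V X - n E_k
    direction = -np.linalg.solve(hess, grad)
    s_old = surrogate(beta_k, beta_k, X, y, lam, eps)
    for nu in range(max_halvings + 1):
        alpha = 2.0 ** (-nu)
        beta_new = beta_k + alpha * direction
        if surrogate(beta_new, beta_k, X, y, lam, eps) > s_old:
            return beta_new, alpha
    return beta_k, 0.0                               # no ascent found: treat as converged


rng = np.random.default_rng(1)
X = rng.standard_normal((200, 4))
beta_true = np.array([1.5, 0.0, -1.0, 0.0])
y = (rng.random(200) < 1.0 / (1.0 + np.exp(-(X @ beta_true)))).astype(float)

beta = np.full(4, 0.1)     # arbitrary nonzero start; the article starts from the MLE
alphas = []
for _ in range(25):
    beta, alpha = mm_newton_step(beta, X, y, lam=0.05, eps=1e-6)
    alphas.append(alpha)
```

Returning the accepted α alongside the new iterate makes it easy to see how often halving was needed; a returned value of 0 signals that no tested step increased the surrogate, which in this sketch is treated as numerical convergence.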

In still more general cases, the Hessian matrix $\nabla^2\ell(\beta)$ may not be negative definite or it may be difficult to compute. If the Fisher information matrix I(β) is known, then −nI(β) may be used in place of $\nabla^2\ell(\beta)$. This leads to

$$\beta^{(k+1)} = \beta^{(k)} + \alpha_k\,[nI(\beta^{(k)}) + nE_k]^{-1}\,[\nabla\ell(\beta^{(k)}) - nE_k\beta^{(k)}],$$

and the positive definiteness of the Fisher information will ensure that step-halving will always lead to an increase in $S_{k,\varepsilon}(\beta)$.

Finally, we mention the possibility of applying an MM algorithm to a penalized partial likelihood function. Consider the example of the Cox proportional hazards model (Cox, 1975), which is the most popular model in survival data analysis. The variable selection methods of Section 2 are extended to the Cox model by Fan and Li (2002). Let $T_i$, $C_i$ and $x_i$ be respectively the survival time, the censoring time and the vector of covariates for the ith individual. Correspondingly, let $Z_i = \min\{T_i, C_i\}$ be the observed time and $\delta_i = I(T_i \le C_i)$ be the censoring indicator. It is assumed that $T_i$ and $C_i$ are conditionally independent given $x_i$ and that the censoring mechanism is noninformative. Under the proportional hazards model, the conditional hazard function $h(t_i|x_i)$ of $T_i$ given $x_i$ is given by

$$h(t_i|x_i) = h_0(t_i)\exp(x_i^T\beta),$$

where $h_0(t)$ is the baseline hazard function. This is a semiparametric model with parameters $h_0(t)$ and β. Denote the ordered uncensored failure times by $t_1^0 \le \cdots \le t_N^0$, and let (j) provide the label for the item falling at $t_j^0$ so that the covariates associated with the N failures are $x_{(1)}, \ldots, x_{(N)}$. Let $R_j$ denote the risk set right before the time $t_j^0$. A partial likelihood is given by

$$\ell_P(\beta) = \sum_{j=1}^{N}\Big[x_{(j)}^T\beta - \log\Big\{\sum_{i\in R_j}\exp(x_i^T\beta)\Big\}\Big].$$

Fan and Li (2002) consider variable selection via maximization of the penalized partial likelihood

$$\ell_P(\beta) - n\sum_{j=1}^{d} p_\lambda(|\beta_j|).$$

It can be shown that the Hessian matrix of ℓP(β) is negative definite provided that X is of full rank. Under certain regularity conditions, it can further be shown that in the neighborhood of a maximizer, the partial likelihood is nearly quadratic for large n.
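To make the role of the risk sets concrete, the following sketch evaluates a Cox log partial likelihood in plain Python, taking the risk set at a failure time to be all individuals whose observed time is at least that failure time. It assumes there are no tied failure times; the function name and the toy data are hypothetical.

```python
import math


def cox_partial_loglik(beta, Z, delta, X):
    """Log partial likelihood: sum over failures of x_j'beta - log(sum_{i in R_j} exp(x_i'beta)).

    Z      observed times, min(T_i, C_i)
    delta  1 if the failure was observed (T_i <= C_i), 0 if censored
    X      list of covariate vectors, one per individual
    Assumes no tied failure times.
    """
    eta = [sum(b * x for b, x in zip(beta, xi)) for xi in X]
    total = 0.0
    for j, (zj, dj) in enumerate(zip(Z, delta)):
        if not dj:
            continue                                  # censored: contributes only via risk sets
        risk = [math.exp(eta[i]) for i, zi in enumerate(Z) if zi >= zj]
        total += eta[j] - math.log(sum(risk))
    return total


# Two individuals, both failures observed, one scalar covariate.
value_at_zero = cox_partial_loglik([0.0], Z=[1.0, 2.0], delta=[1, 1], X=[[1.0], [0.0]])
```

At β = 0 every individual in a risk set is equally likely to fail, so the first failure contributes −log 2 and the second (alone in its risk set) contributes 0. Subtracting n Σj pλ(|βj|) from this function gives the penalized criterion to which the MM steps apply.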

3.5. Convergence

It is not possible to prove that a generic MM algorithm converges at all, and when an MM algorithm does converge, there is no guarantee that it converges to a global maximum. For example, there are well-known pathological examples in which EM algorithms — or generalized EM algorithms, as discussed following inequality (3.13) — converge to saddle points or fail to converge (McLachlan and Krishnan, 1997). Nonetheless, it is often possible to obtain convergence results in specific cases.

We define a stationary point of the function $Q_\varepsilon(\beta)$ to be any point β at which the gradient vector is zero. Because the differentiable function $S_{k,\varepsilon}(\beta)$ is tangent to $Q_\varepsilon(\beta)$ at the point $\beta^{(k)}$ by the minorization property, the gradient vectors of $S_{k,\varepsilon}(\beta)$ and $Q_\varepsilon(\beta)$ are equal when evaluated at $\beta^{(k)}$. Thus, when using the method of Section 3.4 to maximize $S_{k,\varepsilon}(\beta)$, we see that fixed points of the algorithm (i.e., points with gradient zero) coincide with stationary points of $Q_\varepsilon(\beta)$. Letting M(β) denote the map implicitly defined by the algorithm that takes $\beta^{(k)}$ to $\beta^{(k+1)}$ for any point $\beta^{(k)}$, inequality (3.13) states that $S_{k,\varepsilon}\{M(\beta)\} > S_{k,\varepsilon}(\beta)$. The limit points of the set $\{\beta^{(k)}: k = 0, 1, 2, \ldots\}$ are characterized by the following slightly modified version of Lyapunov’s theorem (Lange, 1995).

Proposition 3.3

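Given an initial value $\beta^{(0)}$, let $\beta^{(k)} = M^k(\beta^{(0)})$. If $Q_\varepsilon(\beta) = Q_\varepsilon\{M(\beta)\}$ only for stationary points β of $Q_\varepsilon$ and if $\beta^*$ is a limit point of the sequence $\{\beta^{(k)}\}$ such that M(β) is continuous at $\beta^*$, then $\beta^*$ is a stationary point of $Q_\varepsilon(\beta)$.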

Equation (3.14) with αk = 1 gives

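$$M(\beta^{(k)}) = \beta^{(k)} - [\nabla^2 S_{k,\varepsilon}(\beta^{(k)})]^{-1}\,\nabla Q_\varepsilon(\beta^{(k)}), \tag{3.18}$$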

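where equation (3.18) uses the fact that $\nabla S_{k,\varepsilon}(\beta^{(k)}) = \nabla Q_\varepsilon(\beta^{(k)})$. As discussed in Lange (1995) and Lange et al. (2000), the derivative of M(β) gives insight into the local convergence properties of the algorithm. Suppose that $\nabla Q_\varepsilon(\beta^{(k)}) = 0$, so $\beta^{(k)}$ is a stationary point. In this case, differentiating equation (3.18) gives

$$\nabla M(\beta^{(k)}) = [\nabla^2 S_{k,\varepsilon}(\beta^{(k)})]^{-1}\,\{\nabla^2 S_{k,\varepsilon}(\beta^{(k)}) - \nabla^2 Q_\varepsilon(\beta^{(k)})\}.$$

It is possible to write $\nabla^2 S_{k,\varepsilon}(\beta^{(k)}) - \nabla^2 Q_\varepsilon(\beta^{(k)})$ in closed form as $nA_k$, where $A_k = \mathrm{diag}\{a(\beta_1^{(k)}), \ldots, a(\beta_d^{(k)})\}$ and

$$a(t) = \frac{|t|}{\varepsilon + |t|}\left\{p''_\lambda(|t|+) - \frac{p'_\lambda(|t|+)}{\varepsilon + |t|}\right\}.$$

Under the conditions of Proposition 3.2, $p''_\lambda(|t|+) \le 0$ and $p'_\lambda(|t|+) \ge 0$, and thus $nA_k$ is negative semidefinite, a fact that may also be interpreted as a consequence of the minorization of $Q_\varepsilon(\beta)$ by $S_{k,\varepsilon}(\beta)$. Furthermore, $\nabla^2\ell(\beta^{(k)})$ is often negative definite, as pointed out in Section 3.4, which implies that $\nabla^2 S_{k,\varepsilon}(\beta^{(k)})$ is negative definite. This fact, together with the fact that $\nabla^2 S_{k,\varepsilon}(\beta^{(k)}) - \nabla^2 Q_\varepsilon(\beta^{(k)})$ is negative semidefinite, implies that the eigenvalues of $\nabla M(\beta^{(k)})$ are all contained in the interval [0, 1) (Hestenes, 1981). Ostrowski’s theorem (Ortega, 1990) thus implies that the MM algorithm defined by equation (3.18) is locally attracted to $\beta^{(k)}$ and that the rate of convergence to $\beta^{(k)}$ in a neighborhood of $\beta^{(k)}$ is linear with rate equal to the largest eigenvalue of $\nabla M(\beta^{(k)})$. In other words, if $\beta^*$ is a stationary point and ρ < 1 is the largest eigenvalue of $\nabla M(\beta^*)$, then for any δ > 0 such that ρ + δ < 1, there exists a neighborhood $N_\delta$ containing $\beta^*$ such that for all $\beta \in N_\delta$,

$$\|M(\beta) - \beta^*\| \le (\rho + \delta)\,\|\beta - \beta^*\|.$$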

Further details about the rate of convergence for similar algorithms may be found in Lange (1995) and Lange et al. (2000).
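The eigenvalue claim can be examined numerically in the simplest setting, linear regression with the L1 penalty. The sketch below rests on assumptions that should be checked against the definitions in Section 3.2: differentiating the perturbed L1 penalty twice gives a diagonal difference between the two Hessians with entries −nλ|βj|/(ε + |βj|)², so that the derivative of the algorithm map is (XᵀX + nE)⁻¹ · n · diag{λ|βj|/(ε + |βj|)²}. The eigenvalues are computed from a symmetric matrix similar to this product so that they come out real. Function names and data are hypothetical.

```python
import numpy as np


def rate_eigenvalues(beta, X, lam, eps):
    """Eigenvalues of the derivative of the MM map for linear regression with L1 penalty."""
    n = X.shape[0]
    t = np.abs(beta)
    E = lam / (eps + t)                     # diagonal of E_k
    D = lam * t / (eps + t) ** 2            # diagonal of -A_k when p'' = 0 and p' = lam
    H = X.T @ X + n * np.diag(E)            # minus the Hessian of the surrogate
    L_inv = np.linalg.inv(np.linalg.cholesky(H))
    sym = L_inv @ (n * np.diag(D)) @ L_inv.T   # similar to H^{-1} (n D), but symmetric
    return np.linalg.eigvalsh(sym)


rng = np.random.default_rng(0)
X = rng.standard_normal((100, 4))
y = X @ np.array([2.0, 0.0, -1.0, 0.0]) + rng.standard_normal(100)
lam, eps, n = 0.2, 1e-6, 100

beta = np.linalg.solve(X.T @ X, X.T @ y)    # least squares start
for _ in range(200):                        # iterative ridge updates toward a fixed point
    E_k = np.diag(lam / (eps + np.abs(beta)))
    beta = np.linalg.solve(X.T @ X + n * E_k, X.T @ y)

eigs = rate_eigenvalues(beta, X, lam, eps)
```

The largest entry of `eigs` plays the role of ρ in the linear rate statement above; values close to 1 indicate slow local convergence.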

Lyapunov’s theorem (Proposition 3.3) gives a necessary condition for a point to be a limit point of a particular MM algorithm. To conclude this section, we consider a sufficient condition for the existence of a limit point. Suppose that the function $Q_\varepsilon(\beta)$ is upper compact, as defined in Section 3.2. Then given the initial parameter vector $\beta^{(0)}$, the set $B = \{\beta \in R^d: Q_\varepsilon(\beta) \ge Q(\beta^{(0)})\}$ is compact; furthermore, by equations (3.6) and (3.7), $Q_\varepsilon(\beta) \ge Q(\beta)$ so that B contains the entire sequence $\{\beta^{(k)}\}_{k=0}^{\infty}$. This guarantees that the sequence has at least one limit point, which must therefore be a stationary point of $Q_\varepsilon(\beta)$ by Proposition 3.3. If in addition there is no more than one stationary point (for example, if $Q_\varepsilon(\beta)$ is strictly concave), then we may conclude that the algorithm must converge to the unique stationary point.

Upper compactness of Qε(β) follows as long as Qε(β) → −∞ whenever ||β|| → ∞; this is often not difficult to prove for specific examples. In the particular case of the L1 penalty (LASSO), strict concavity also holds as long as ℓ (β) is strictly concave, which implies the following corollary.

Corollary 3.3

If $p_\lambda(|\theta|) = \lambda|\theta|$ and ℓ(β) is strictly concave and upper compact, then the MM algorithm of equation (3.15) gives a sequence $\{\beta^{(k)}\}$ converging to the unique maximizer of $Q_\varepsilon(\beta)$ for any ε > 0.

In particular, Corollary 3.3 implies that using our algorithm with the ε-perturbed LASSO penalty guarantees convergence to the maximum penalized likelihood estimator for any full-rank generalized linear model or (say) Cox proportional hazards model. However, strict concavity of Qε(β) is not typical for other penalty functions presented in this article in light of the requirement in Proposition 3.2 that pλ(·) be concave — and hence that −pλ(·) be convex — on (0, ∞). This fact means that when an MM algorithm using some penalty function other than L1 converges, then it may converge to a local, rather than a global, maximizer of Qε(β). This can actually be an advantage, since one might like to know if the penalized likelihood has multiple local maxima.

4. Numerical Examples

Since Fan and Li (2001) have already compared the performance of LASSO with SCAD using a local quadratic approximation and other existing methods, in the following four numerical examples we focus on assessing the performance of the proposed algorithms using the SCAD penalty (2.2). Namely, we compare the unmodified LQA to our modified version (both using SCAD), where ε is chosen according to equation (3.12) with $\tau = 10^{-8}$. For SCAD, $p'_\lambda(0+) = \lambda$, and this tuning parameter λ is chosen using generalized cross-validation, or GCV (Craven and Wahba, 1979). As suggested by Fan and Li (2001), we take a = 3.7 in the definition of SCAD.

The Newton-Raphson algorithm (3.15) enables a standard error estimate via a sandwich formula:

| (4.1) |

where

Naturally, another estimate may be formed if −nI(β̂) is substituted for ∇ℓ(β̂) in equation (4.1). Fan and Peng (2004) establish the consistency of this sandwich formula for related problems, and their method of proof may be adapted to this situation, though we do not do so in this article.

For the simulated examples, Examples 1 through 3, we compare the performance of the proposed procedures along with AIC and BIC in terms of model error and model complexity. With μ(x) = E(Y|x), model error (ME) is defined as E{μ̂(x) − μ(x)}², where the expectation is taken with respect to a new observation x. The MEs of the underlying procedures are divided by that of the ordinary maximum likelihood estimate, so we report relative model error (RME).

Example 1 (Linear regression)

In this example, we generated 500 data sets, each of which consists of 100 observations from the model

$$ y = x^T\beta + \varepsilon, $$

where β is a 12-dimensional vector whose first, fifth and ninth components are 3, 1.5 and 2, respectively, and whose other components equal 0. The components of x and ε are standard normal, and the correlation between xi and xj (i ≠ j) is taken to be ρ. In our simulation, we consider three cases: ρ = 0.1, ρ = 0.5, and ρ = 0.9. In this case, there is a closed form for the model error, namely ME(β̂) = (β̂ − β)ᵀ cov(x)(β̂ − β). The median of the relative model error (RME) over the 500 simulated data sets is summarized in Table 1. The average number of zero coefficients is also reported in Table 1, in which the column labelled “C” gives the average number of coefficients, of the nine true zeros, correctly set to zero and the column labelled “I” gives the average number of the three true nonzeros incorrectly set to zero.
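The simulation design and the closed-form model error can be sketched as below. This is an illustration under stated assumptions, not the authors' code: the equicorrelated covariates are produced by a shared-factor construction (one common normal draw plus independent noise, valid for ρ ≥ 0), and the function names are hypothetical.

```python
import math
import random


def equicorrelated_row(d, rho, rng):
    """One covariate vector with N(0, 1) margins and pairwise correlation rho."""
    shared = rng.gauss(0.0, 1.0)
    return [math.sqrt(rho) * shared + math.sqrt(1.0 - rho) * rng.gauss(0.0, 1.0)
            for _ in range(d)]


def simulate_dataset(n, beta, rho, rng):
    """Draw n observations from y = x^T beta + eps with standard normal eps."""
    X = [equicorrelated_row(len(beta), rho, rng) for _ in range(n)]
    y = [sum(x * b for x, b in zip(row, beta)) + rng.gauss(0.0, 1.0) for row in X]
    return X, y


def model_error(beta_hat, beta, rho):
    """(beta_hat - beta)^T cov(x) (beta_hat - beta), cov(x) = (1 - rho) I + rho 11^T."""
    diff = [bh - b for bh, b in zip(beta_hat, beta)]
    return (1.0 - rho) * sum(v * v for v in diff) + rho * sum(diff) ** 2


# True coefficient vector of Example 1: components 1, 5 and 9 are nonzero.
beta = [0.0] * 12
beta[0], beta[4], beta[8] = 3.0, 1.5, 2.0

rng = random.Random(1)
X, y = simulate_dataset(100, beta, 0.5, rng)
```

Dividing `model_error` for a selection procedure by the same quantity for the ordinary least squares fit gives the relative model error for one data set; the tables report the median over data sets.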

Table 1.

Relative Model Errors for Linear Regression

| Method | RME median (ρ = 0.9) | Zeros C (ρ = 0.9) | Zeros I (ρ = 0.9) | RME median (ρ = 0.5) | Zeros C (ρ = 0.5) | Zeros I (ρ = 0.5) | RME median (ρ = 0.1) | Zeros C (ρ = 0.1) | Zeros I (ρ = 0.1) |
|---|---|---|---|---|---|---|---|---|---|
| New | .437 | 8.346 | 0 | .215 | 8.708 | 0 | .238 | 8.292 | 0 |
| LQA | .590 | 7.772 | 0 | .237 | 8.680 | 0 | .269 | 8.272 | 0 |
| BIC | .337 | 8.644 | 0 | .335 | 8.652 | 0 | .328 | 8.656 | 0 |
| AIC | .672 | 7.358 | 0 | .673 | 7.324 | 0 | .668 | 7.374 | 0 |
| Oracle | .201 | 9.000 | 0 | .202 | 9 | 0 | .211 | 9 | 0 |

In Table 1, New and LQA refer to the newly proposed algorithm and the local quadratic approximation algorithm of Fan and Li (2001). AIC and BIC stand for the best subset variable selection procedures that minimize AIC scores and BIC scores. Finally, “Oracle” stands for the oracle estimate computed by using the true model y = β1x1 + β5x5 + β9x9 + ε. When the correlation among the covariates is small or moderate, we see that the new algorithm performs the best in terms of model error and LQA also performs very well; their RMEs are both very close to those of the oracle estimator. When the covariates are highly correlated, the new algorithm outperforms LQA in terms of both model error and model complexity. The performance of BIC and AIC remains almost the same for the three cases in this example; Table 1 indicates that BIC performs better than AIC.

We now test the accuracy of the proposed standard error formula. The standard deviation of the estimated coefficients for the 500 simulated data sets, denoted by SD, can be regarded as the true standard deviation except for Monte Carlo error. The average of the estimated standard errors for the 500 simulated data sets, denoted by SE, and their standard deviation, denoted by std(SE), gauge the overall performance of the standard error formula. Table 2 presents the SD, SE, and std(SE) for β̂1 only; the results for the other coefficients are similar. In Table 2, LSE stands for the ordinary least squares estimate; other notation is the same as that in Table 1. The differences between SD and SE are less than twice std(SE), which suggests that the proposed standard error formula works fairly well. However, the SE appears to consistently underestimate the SD, a common phenomenon (see Kauermann and Carroll, 2001), so it may benefit from some slight modification.

Table 2.

Standard deviations and standard errors of β̂1 in the linear regression model

| Method | SD (ρ = 0.9) | SE (std(SE)) (ρ = 0.9) | SD (ρ = 0.5) | SE (std(SE)) (ρ = 0.5) | SD (ρ = 0.1) | SE (std(SE)) (ρ = 0.1) |
|---|---|---|---|---|---|---|
| LSE | .339 | .322(.035) | .152 | .144(.016) | .114 | .109(.012) |
| New | .303 | .260(.036) | .129 | .120(.017) | .104 | .098(.014) |
| LQA | .315 | .265(.037) | .128 | .120(.017) | .105 | .098(.014) |
| BIC | .295 | .269(.028) | .133 | .124(.013) | .105 | .101(.010) |
| AIC | .322 | .278(.029) | .145 | .128(.013) | .109 | .101(.010) |
| Oracle | .270 | .264(.027) | .126 | .124(.013) | .103 | .102(.010) |
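The two claims made about Table 2 (that SD and SE differ by less than twice std(SE), and that SE falls below SD throughout) can be checked directly from the tabulated values. The short script below simply transcribes Table 2; the variable and function names are illustrative.

```python
# (SD, SE, std(SE)) for the first coefficient, transcribed from Table 2.
TABLE2 = {
    "rho=0.9": {
        "LSE": (.339, .322, .035), "New": (.303, .260, .036),
        "LQA": (.315, .265, .037), "BIC": (.295, .269, .028),
        "AIC": (.322, .278, .029), "Oracle": (.270, .264, .027),
    },
    "rho=0.5": {
        "LSE": (.152, .144, .016), "New": (.129, .120, .017),
        "LQA": (.128, .120, .017), "BIC": (.133, .124, .013),
        "AIC": (.145, .128, .013), "Oracle": (.126, .124, .013),
    },
    "rho=0.1": {
        "LSE": (.114, .109, .012), "New": (.104, .098, .014),
        "LQA": (.105, .098, .014), "BIC": (.105, .101, .010),
        "AIC": (.109, .101, .010), "Oracle": (.103, .102, .010),
    },
}


def within_two_std(sd, se, std_se):
    """True when the gap between SD and SE is below twice std(SE)."""
    return abs(sd - se) < 2.0 * std_se


checks = {(rho, method): within_two_std(*row)
          for rho, rows in TABLE2.items() for method, row in rows.items()}
se_below_sd = all(se < sd for rows in TABLE2.values() for sd, se, _ in rows.values())
```

Both checks hold for every row, which matches the text: the formula is accurate to within Monte Carlo variation, but its error is consistently in the direction of underestimation.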

Example 2 (Logistic regression)

In this example, we assess the performance of the proposed algorithm for a logistic regression model. We generated 500 data sets, each of which consists of 200 observations, from the logistic regression model

$$ Y \mid x \sim \mathrm{Bernoulli}\{p(x^T\beta)\}, \qquad p(u) = \frac{\exp(u)}{1 + \exp(u)}, \tag{4.2} $$

where β is a 9-dimensional vector whose first, fourth and seventh components are 3, 1.5 and 2 respectively, and whose other components equal 0. The components of x are standard normal, where the correlation between xi and xj is ρ. In our simulation, we consider two cases, ρ = 0.25 and ρ = 0.75. Unlike the model error for linear regression models, there is no closed form of ME for the logistic regression model in this example. The ME, summarized in Table 3, is estimated by 50,000 Monte Carlo simulations. Notation in Table 3 is the same as that in Table 1. It can be seen from Table 3 that the newly proposed algorithm performs better than LQA in terms of model error and model complexity. We further test the accuracy of the standard error formula derived by using the sandwich formula (4.1). The results are similar to those in Table 2 — the proposed standard error formula works fairly well — so they are omitted here.

Table 3.

Relative Model Errors for Logistic Regression

| Method | RME median (ρ = 0.25) | Zeros C (ρ = 0.25) | Zeros I (ρ = 0.25) | RME median (ρ = 0.75) | Zeros C (ρ = 0.75) | Zeros I (ρ = 0.75) |
|---|---|---|---|---|---|---|
| New | .277 | 5.922 | 0 | .528 | 5.534 | 0.222 |
| LQA | .368 | 5.728 | 0 | .644 | 4.970 | 0.090 |
| BIC | .304 | 5.860 | 0 | .399 | 5.796 | 0.304 |
| AIC | .673 | 4.930 | 0 | .683 | 4.860 | 0.092 |
| Oracle | .241 | 6 | 0 | .216 | 6 | 0 |

Best variable subset selection using the BIC criterion is seen in Table 3 to perform quite well relative to other methods. However, best subset selection in this example requires an exhaustive search over all possible subsets, and therefore it is computationally expensive. The methods we propose can dramatically reduce computational cost. To demonstrate this point, random samples of size 200 were generated from model (4.2) with β being a d-dimensional vector whose first, fourth and seventh components are 3, 1.5 and 2, respectively, and whose other components equal 0. Table 4 gives the average computing time for each simulation with d = 8, …, 11 and indicates that computing times for the BIC and AIC criteria increase exponentially with the dimension d, making these methods impractical for parameter sets much larger than those tested here. Given the increasing importance of variable selection problems in fields like genetics and data mining, where the number of variables is measured in the hundreds or even thousands, efficiency of algorithms is an important consideration.

Table 4.

Computing Time for the Logistic Model (Seconds per Simulation)

| ρ | d | New | LQA | BIC | AIC |
|---|---|---|---|---|---|
| 0.25 | 8 | 0.287 | 0.142 | 2.701 | 2.699 |
| 0.25 | 9 | 0.316 | 0.151 | 5.702 | 5.694 |
| 0.25 | 10 | 0.348 | 0.180 | 11.761 | 11.754 |
| 0.25 | 11 | 0.424 | 0.199 | 26.702 | 26.576 |
| 0.75 | 8 | 0.395 | 0.199 | 2.171 | 2.166 |
| 0.75 | 9 | 0.438 | 0.205 | 4.554 | 4.553 |
| 0.75 | 10 | 0.452 | 0.225 | 9.499 | 9.518 |
| 0.75 | 11 | 0.532 | 0.244 | 19.915 | 19.959 |
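The exponential growth is a direct consequence of exhaustive search: with d candidate variables there are 2^d subsets to fit. The sketch below enumerates them and compares the count with the BIC timings transcribed from Table 4 for ρ = 0.25; the names are illustrative.

```python
from itertools import combinations


def all_subsets(d):
    """Yield every subset of {0, ..., d-1}; there are 2**d of them."""
    for k in range(d + 1):
        yield from combinations(range(d), k)


# Number of candidate models that best subset selection must fit.
counts = {d: sum(1 for _ in all_subsets(d)) for d in range(8, 12)}

# BIC timings in seconds per simulation (rho = 0.25), transcribed from Table 4.
bic_seconds = {8: 2.701, 9: 5.702, 10: 11.761, 11: 26.702}
ratios = [bic_seconds[d + 1] / bic_seconds[d] for d in range(8, 11)]
```

Each added variable doubles the number of subsets, and the timing ratios in `ratios` are each a little above 2, consistent with that doubling.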

Example 3 (Cox model)

In this example, we investigate the performance of the proposed algorithm for the Cox proportional hazards model. We simulated 500 data sets each for sample sizes n = 40, 50 and 60 from the exponential hazard model

| (4.3) |

where β = (0.8, 0, 0, 1, 0, 0, 0.6, 0)ᵀ. This model is used in the simulation study of Fan and Li (2002). The covariates x_u were marginally standard normal, and the correlation between x_u and x_v was ρ^{|u−v|} with ρ = 0.5. The distribution of the censoring time is an exponential distribution with mean U exp(xᵀβ0), where U is randomly generated from the uniform distribution over [1,3] for each simulated data set so that 30% of the data are censored. Here β0 = β is regarded as a known constant so that the censoring scheme is noninformative. The model error E{μ̂(x) − μ(x)}² is estimated by 50,000 Monte Carlo simulations and is summarized in Table 5. The performance of the newly proposed algorithm is similar to that of LQA. Both the new algorithm and LQA perform better than best subset variable selection with the AIC or BIC criteria. Note that the BIC criterion is a consistent variable selection criterion. Therefore, as the sample size increases, its performance becomes closer to that of the nonconcave penalized partial likelihood procedures. We also test the accuracy of the standard error formula derived by using the sandwich formula (4.1). The results are similar to those in Table 2; the proposed standard error formula works fairly well.
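A data-generating step of this kind might be sketched as follows. Two points are assumptions rather than statements from the text: the hazard is taken to be exp(xᵀβ) with unit baseline (model (4.3) is not reproduced here), and the covariates are generated by a first-order autoregressive recursion, which yields correlation ρ^{|u−v|}. The function names are hypothetical.

```python
import math
import random

BETA = [0.8, 0.0, 0.0, 1.0, 0.0, 0.0, 0.6, 0.0]


def ar1_row(d, rho, rng):
    """Covariates with N(0, 1) margins and corr(x_u, x_v) = rho ** |u - v|."""
    x = [rng.gauss(0.0, 1.0)]
    for _ in range(d - 1):
        x.append(rho * x[-1] + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0))
    return x


def simulate_cox(n, beta, rho, rng):
    """Return a list of (covariates, observed time, event indicator) triples."""
    u = rng.uniform(1.0, 3.0)  # one draw of U per simulated data set
    data = []
    for _ in range(n):
        x = ar1_row(len(beta), rho, rng)
        eta = sum(xj * bj for xj, bj in zip(x, beta))
        t = rng.expovariate(math.exp(eta))               # assumed hazard exp(eta)
        c = rng.expovariate(1.0 / (u * math.exp(eta)))   # censoring mean U * exp(eta)
        data.append((x, min(t, c), t <= c))
    return data


sample = simulate_cox(60, BETA, 0.5, random.Random(2))
censored_fraction = sum(1 for _, _, event in sample if not event) / len(sample)
```

Each triple records the observed time min(T, C) and whether the event was observed, which is the input a partial likelihood fit needs.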

Table 5.

Relative Model Errors for the Cox Model

| Method | RME median (n = 40) | Zeros C (n = 40) | Zeros I (n = 40) | RME median (n = 50) | Zeros C (n = 50) | Zeros I (n = 50) | RME median (n = 60) | Zeros C (n = 60) | Zeros I (n = 60) |
|---|---|---|---|---|---|---|---|---|---|
| New | .173 | 4.790 | 1.396 | .288 | 4.818 | 1.144 | .324 | 4.882 | .904 |
| LQA | .174 | 4.260 | .626 | .296 | 4.288 | .440 | .303 | 4.332 | .260 |
| BIC | .247 | 4.492 | .606 | .337 | 4.564 | .442 | .344 | 4.624 | .272 |
| AIC | .470 | 3.880 | .358 | .551 | 3.948 | .240 | .577 | 3.986 | .160 |
| Oracle | .103 | 5 | 0 | .152 | 5 | 0 | .215 | 5 | 0 |

As in Example 2, we take β to be a d-dimensional vector whose first, fourth and seventh components are nonzero (0.8, 1.0, and 0.6, respectively) and whose other components equal 0. Table 6 shows that the proposed algorithm and LQA require far less computing time than best subset selection with AIC or BIC, and that the gap widens as d grows.

Table 6.

Computing Time for the Cox Model (Seconds per Simulation)

| n | d | New | LQA | BIC | AIC |
|---|---|---|---|---|---|
| 40 | 8 | 0.248 | 0.147 | 0.415 | 0.418 |
| 40 | 9 | 0.258 | 0.140 | 0.843 | 0.843 |
| 40 | 10 | 0.299 | 0.149 | 1.711 | 1.712 |
| 40 | 11 | 0.327 | 0.162 | 3.680 | 3.675 |
| 50 | 8 | 0.320 | 0.197 | 0.588 | 0.591 |
| 50 | 9 | 0.364 | 0.200 | 1.218 | 1.225 |
| 50 | 10 | 0.406 | 0.217 | 2.532 | 2.531 |
| 50 | 11 | 0.466 | 0.211 | 5.189 | 5.171 |
| 60 | 8 | 0.417 | 0.263 | 0.820 | 0.827 |
| 60 | 9 | 0.474 | 0.268 | 1.795 | 1.768 |
| 60 | 10 | 0.513 | 0.279 | 3.454 | 3.454 |
| 60 | 11 | 0.574 | 0.288 | 7.219 | 7.162 |

As a referee pointed out, it is of interest to investigate the performance of variable selection algorithms when the “full” model is misspecified. Model misspecification is a concern for all variable selection procedures, not merely those discussed in this article. To address this issue, we generated a random sample from model (4.3) with β = (0.8, 0, 0, 1, 0, 0, 0.6, 0, β9, β10)ᵀ, where β9 = β10 = 0.2 or 0.4. The first 8 components of x are the same as those in the above simulation. We take , and . In our model fitting, the “full” model uses only the first 8 components of x. Thus, we misspecified the full model by ignoring the last two components. Variable selection procedures are then carried out based on this “full” model. The oracle procedure uses (x1, x4, x7, x9, x10)ᵀ to fit the data. The model error E{μ̂(x) − μ(x)}², where μ(x) is the mean function of the true model including all 10 components of x, is estimated by 50,000 Monte Carlo simulations and is summarized in Table 7. Every relative model error in Table 7 is below 1, so all variable selection procedures outperform the full model. This implies that selecting significant variables can dramatically reduce both model error and model complexity. Table 7 also shows that both the newly proposed algorithm and LQA achieve substantially lower model error than best subset variable selection using AIC or BIC.

Table 7.

Relative Model Errors for Misspecified Cox Models

| (β9, β10) | Method | RME (n = 40) | # of Zeros (n = 40) | RME (n = 50) | # of Zeros (n = 50) | RME (n = 60) | # of Zeros (n = 60) |
|---|---|---|---|---|---|---|---|
| (0.2, 0.2) | New | 0.155 | 6.276 | 0.259 | 6.142 | 0.298 | 5.906 |
| (0.2, 0.2) | LQA | 0.146 | 4.992 | 0.251 | 4.822 | 0.294 | 4.662 |
| (0.2, 0.2) | BIC | 0.244 | 5.186 | 0.356 | 5.020 | 0.487 | 4.932 |
| (0.2, 0.2) | AIC | 0.441 | 4.336 | 0.564 | 4.162 | 0.657 | 4.104 |
| (0.2, 0.2) | Oracle | 0.254 | 5 | 0.194 | 5 | 0.239 | 5 |
| (0.4, 0.4) | New | 0.205 | 6.544 | 0.323 | 6.384 | 0.479 | 6.132 |
| (0.4, 0.4) | LQA | 0.197 | 5.166 | 0.324 | 4.924 | 0.469 | 4.806 |
| (0.4, 0.4) | BIC | 0.345 | 5.352 | 0.477 | 5.156 | 0.593 | 5.110 |
| (0.4, 0.4) | AIC | 0.560 | 4.444 | 0.670 | 4.236 | 0.755 | 4.240 |
| (0.4, 0.4) | Oracle | 0.268 | 5 | 0.228 | 5 | 0.273 | 5 |

Example 4 (Environmental data)

In this example, we illustrate the proposed algorithm in the context of analysis of an environmental data set. This data set consists of the number of daily hospital admissions for circulation and respiration problems and daily measurements of air pollutants. It was collected in Hong Kong from January 1, 1994 to December 31, 1995 (courtesy of T. S. Lau). Of interest is the association between levels of pollutants and the total number of daily hospital admissions for circulatory and respiratory problems. The response is the number of admissions and the covariates X1 to X3 are the levels (in μg/m³) of the pollutants sulfur dioxide, nitrogen dioxide, and dust. Because the response is count data, it is reasonable to use a Poisson regression model with mean μ(x) to analyze this data set. To reduce modeling bias, we include all linear, quadratic and interaction terms among the three air pollutants in our full model. Since empirical studies show that there may be a trend over time, we allow an intercept depending on time t, the date on which observations were collected. In other words, we consider the following model:

We further parameterize the intercept function by a cubic spline

where the knots kj are chosen to be the 10th, 25th, 50th, 75th and 90th percentiles of t. Thus, we are dealing with a Poisson regression model with 18 variables. To avoid numerical instability, the time variable and the air pollutant variables are standardized.
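One way to arrive at 18 variables is sketched below, assuming the cubic spline is written in the truncated power basis (1, t, t², t³ and one term (t − kj)³₊ per knot) and counting the constant term. The basis choice, the percentile rule, the placeholder pollutant values and all function names are assumptions made for illustration.

```python
def truncated_power_basis(t, knots):
    """Assumed cubic spline basis: 1, t, t^2, t^3 and (t - k_j)_+^3 per knot."""
    return [1.0, t, t ** 2, t ** 3] + [max(t - k, 0.0) ** 3 for k in knots]


def percentile(sorted_values, q):
    """Linear-interpolation percentile (q in [0, 100]) of pre-sorted data."""
    pos = (len(sorted_values) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_values) - 1)
    return sorted_values[lo] + (pos - lo) * (sorted_values[hi] - sorted_values[lo])


def pollutant_terms(x1, x2, x3):
    """Linear, quadratic and pairwise interaction terms of three pollutants."""
    return [x1, x2, x3, x1 * x1, x2 * x2, x3 * x3, x1 * x2, x1 * x3, x2 * x3]


# Two years of daily observations (1994 and 1995 each have 365 days).
days = [float(i) for i in range(730)]
mean = sum(days) / len(days)
sd = (sum((v - mean) ** 2 for v in days) / (len(days) - 1)) ** 0.5
t_std = [(v - mean) / sd for v in days]          # standardized time variable

knots = [percentile(t_std, q) for q in (10, 25, 50, 75, 90)]

# One design row; the pollutant values here are placeholders, not real data.
row = truncated_power_basis(t_std[100], knots) + pollutant_terms(0.3, -1.2, 0.5)
```

Under this assumed parameterization the design row has 9 spline terms and 9 pollutant terms, for 18 columns in total.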

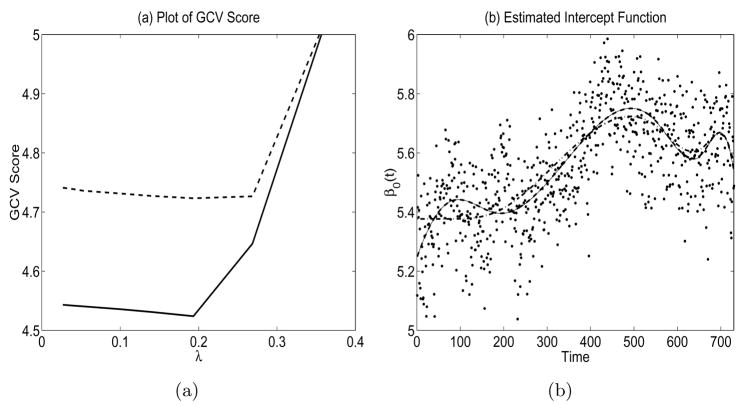

Generalized cross-validation is used to select the tuning parameter λ for the new algorithm using SCAD. The plot of the GCV scores against λ is depicted in Figure 2(a), and the selected λ equals 0.1933. In Table 8, we see that all linear terms are very significant, whereas the quadratic terms of SO2 and dust and the SO2 × dust interaction are not significant. The plot of the estimated intercept function β0(t) is depicted in Figure 2(b) along with the estimated intercept function under the full model. The two estimated intercept functions are almost identical and capture the time trend very well, but the new algorithm saves two degrees of freedom by deleting the t³ and terms from the intercept function.

Fig. 2.

In (a), the solid line indicates the GCV scores for SCAD using the new algorithm, and the dashed line indicates the GCV scores for the LQA algorithm. In (b), the solid and thick dashed lines that nearly coincide indicate the estimated intercept functions for the new algorithm and the full model, respectively; the dash-dotted line is for LQA. The dots are the full-model residuals log(y) − xᵀβ̂_MLE.

Table 8.

Estimated Coefficients and their Standard Errors

| Covariate | MLE | New | LQA |
|---|---|---|---|
| SO2 | 0.0082 (0.0041) | 0.0043 (0.0024) | 0.0090 (0.0029) |
| NO2 | 0.0238 (0.0051) | 0.0260 (0.0037) | 0.0311 (0.0033) |
| Dust | 0.0195 (0.0054) | 0.0173 (0.0037) | 0.0043 (0.0026) |
| (SO2)² | −0.0029 (0.0013) | 0 (0.0009) | −0.0025 (0.0010) |
| (NO2)² | 0.0204 (0.0043) | 0.0118 (0.0029) | 0.0157 (0.0031) |
| Dust² | 0.0042 (0.0025) | 0 (0.0015) | 0.0060 (0.0018) |
| SO2 × NO2 | −0.0120 (0.0039) | −0.0050 (0.0021) | −0.0074 (0.0022) |
| SO2 × Dust | 0.0086 (0.0047) | 0 (< 0.00005) | 0 (NA) |
| NO2 × Dust | −0.0305 (0.0061) | −0.0176 (0.0037) | −0.0262 (0.0041) |

Note: NA stands for “Not available”.

For the purpose of comparison, SCAD using the LQA is also applied to this data set. The plot of the GCV scores against λ is also depicted in Figure 2(a), and the selected λ again equals 0.1933. In this case, not only the t³ and terms but also the t and t² terms are deleted from the intercept function. The estimated intercept function is the dash-dotted curve in Figure 2(b); it looks dramatically different from the one estimated under the full model, and it appears to do a poor job of capturing the overall trend. The LQA estimates shown in Table 8 are quite different from those obtained using the new algorithm, even though both use the same tuning parameter. Recall that LQA suffers the drawback that once a parameter is deleted, it cannot reenter the model, which appears to have had a major impact on the LQA model estimates in this case. Standard errors in Table 8 are available for the deleted coefficients under the new algorithm but not under LQA because the two algorithms use different deletion criteria.

5. Discussion

We have shown how a particular class of MM algorithms may be applied to variable selection. In modifying previous work on variable selection using penalized least squares and penalized likelihood by Fan and Li (2001, 2002, 2004) and Cai et al. (2004), we have shown how a slight perturbation of the penalty function can eliminate the possibility of mistakenly excluding variables too soon while simultaneously enabling certain convergence results. While the numerical tests given here deal with four very diverse models, the range of possible applications of this method is even broader. Generally speaking, the MM algorithms of this article may be applied to any situation where an objective function (whether a likelihood or not) is penalized using a penalty function such as the one in equation (2.1). If the goal is to maximize the penalized objective function and pλ(·) satisfies the conditions of Proposition 3.1, then an MM algorithm may be applicable. If the original (unpenalized) objective function is concave, then the modified Newton-Raphson approach of Section 3.4 holds promise. In Section 2, we list several distinct classes of penalty functions in the literature that satisfy the conditions of Proposition 3.1, but there may also be other useful penalties to which our method applies.

The numerical tests of Section 4 indicate that the modified SCAD penalty we propose performs well on simulated data sets. This algorithm has comparable relative model error (sometimes quite a bit better, sometimes slightly worse) to BIC and the unmodified SCAD penalty implemented using LQA, and it consistently outperforms AIC. Our proposed algorithm tends to result in more parsimonious models than the LQA algorithm, typically identifying more actual zeros correctly but also eliminating too many nonzero coefficients. This fact is surprising in light of the drawback that LQA can exclude variables too soon during the iterative process, a drawback that our algorithm corrects. The particular choice of ε, addressed in Section 3.3, may warrant further study because of its influence on the complexity of the final model chosen.

An important difference between our algorithm and both AIC and BIC is the fact that the latter two methods are often not computationally efficient, with computing time scaling exponentially in the number of candidate variables whenever it becomes necessary to search exhaustively over the whole model space. This means that in problems with hundreds or thousands of candidate variables, AIC and BIC can be difficult if not impossible to implement. Such problems are becoming more and more prevalent in the statistical literature as topics such as microarray data and data mining increase in popularity.

Finally, we have seen in one example involving the Cox proportional hazards model that both our method and LQA perform well when the model is misspecified, even outperforming the oracle method for samples of size 40. Although questions of model misspecification are largely outside the scope of this article, it is useful to remember that although simulation studies can aid our understanding, the model assumed for any real dataset is only an approximation of reality.

Acknowledgments

The authors thank the referees for constructive suggestions. Li’s research was supported by NSF grants DMS-0348869 and CCF-0430349, and NIH grant NIDA 1-P50-DA10075.

Appendix: Proofs of some results in Section 3

Proof of Proposition 3.1

The proof uses the following Lemma:

Lemma A.1

Under the assumptions of Proposition 3.1, p′λ(θ+)/(ε + θ) is a nonincreasing function of θ on (0, ∞) for any nonnegative ε.

The proof of the lemma is immediate: Both p′λ(θ+) and (ε + θ)⁻¹ are positive and nonincreasing on (0, ∞), so their product is nonincreasing. For any θ > 0, we see that

| (A.1) |

Furthermore, since pλ(·) is nondecreasing and concave on (0, ∞) (and continuous at 0), it is also continuous on [0, ∞). Thus, Φθ0(θ) − pλ(|θ|) is an even function, piecewise differentiable and continuous everywhere. Taking ε = 0 in Lemma A.1, equation (A.1) implies that Φθ0(θ) − pλ(|θ|) is nonincreasing for θ ∈ (0, |θ0|) and nondecreasing for θ ∈ (|θ0|, ∞); this function is therefore minimized on (0, ∞) at |θ0|. Since it is clear that Φθ0(|θ0|) = pλ(|θ0|) and Φθ0(−|θ0|) = pλ (−|θ0|), condition (3.3) is satisfied for θ0 = ±|θ0|.
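The monotonicity step can be spelled out as follows. This is a sketch under an assumption: the quadratic majorizer is taken to have the local quadratic approximation form Φθ0(θ) = pλ(|θ0|) + (θ² − θ0²) p′λ(|θ0|+)/(2|θ0|), whose definition belongs to Section 3 and is not restated in this appendix.

```latex
\[
\frac{d}{d\theta}\bigl\{\Phi_{\theta_0}(\theta) - p_\lambda(\theta)\bigr\}
  = \theta\,\frac{p'_\lambda(|\theta_0|+)}{|\theta_0|} - p'_\lambda(\theta+)
  = \theta\left[\frac{p'_\lambda(|\theta_0|+)}{|\theta_0|}
      - \frac{p'_\lambda(\theta+)}{\theta}\right],
  \qquad \theta > 0 .
\]
```

If p′λ(θ+)/θ is nonincreasing on (0, ∞), the bracketed term is nonpositive for θ < |θ0| and nonnegative for θ > |θ0|, so the difference decreases and then increases, with its minimum at |θ0|.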

Proof of Proposition 3.2

For part (a), it suffices to show that Φθ0,ε(θ) majorizes pλ,ε(|θ|) at θ0. It follows by definition that Φθ0,ε(θ0) = pλ,ε(|θ0|). Furthermore, Lemma A.1 shows, as in the proof of Proposition 3.1, that the even function Φθ0,ε(θ) − pλ,ε(|θ|) is decreasing on (0, |θ0|) and increasing on (|θ0|, ∞), giving the desired result.

To prove part (b), it is sufficient to show that |pλ, ε(|θ|) − pλ(|θ|)| → 0 uniformly on compact subsets of the parameter space as ε ↓ 0. Since is nonincreasing on (0, ∞),

and because , the right side of the above inequality tends to 0 uniformly on compact subsets of the parameter space as ε ↓ 0.

Proof of Corollary 3.2

Let β̂ denote a maximizer of Q(β) and put B = {β ∈ ℝ^d: Qε0(β) ≥ Q(β̂)} for some fixed ε0 > 0. Then B is compact and contains all β̂ε for 0 ≤ ε < ε0. Thus, Proposition 3.2 shows that for ε < ε0,

If β* is a limit point of {β̂ε}ε↓ 0, then by the continuity of Q(β), |Q(β*)− Q(β̂)| = 0 and so β* is a maximizer of Q(β).

Proof of Proposition 3.3

Given an initial value β(0), let β(k) = M^k(β(0)) for k ≥ 1; i.e., {β(k)} is the sequence of points that the MM algorithm generates starting from β(0). Let Λ denote the set of limit points of this sequence. For any β* ∈ Λ, passing to a subsequence we have β(kn) → β*. The quantity Qε(β(kn)), since it is increasing in n and bounded above, converges to a limit as n → ∞. Thus, taking limits in the inequalities

gives Qε(β*) = Qε{lim_{n→∞} M(β(kn))}, assuming this limit exists. Of course, if M(β) is continuous at β*, then we have Qε(β*) = Qε{M(β*)}, which implies that β* is a stationary point of Qε(β).

Note that Λ is not necessarily nonempty in the above proof. However, we know that each β(k) lies in the set {β: Qε(β) ≥ Qε(β(1))}, so if this set is compact, as is often the case, we may conclude that Λ is indeed nonempty.

Contributor Information

David R. Hunter, Department of Statistics, The Pennsylvania State University, University Park, Pennsylvania 16802–2111. E-mail: dhunter@stat.psu.edu

Runze Li, Department of Statistics, The Pennsylvania State University, University Park, Pennsylvania 16802–2111. E-mail: rli@stat.psu.edu

References

- 1. Antoniadis A. Wavelets in statistics (with discussion). Journal of the Italian Statistical Association. 1997;6:97–144.
- 2. Antoniadis A, Fan J. Regularization of wavelets approximations (with discussion). Journal of American Statistical Association. 2001;96:939–967.
- 3. Cai J, Fan J, Li R, Zhou H. Variable selection for multivariate failure time data. Biometrika. 2004. doi: 10.1093/biomet/92.2.303. In press.
- 4. Cox DR. Partial likelihood. Biometrika. 1975;62:269–276.
- 5. Craven P, Wahba G. Smoothing noisy data with spline functions: estimating the correct degree of smoothing by the method of generalized cross-validation. Numer Math. 1979;31:377–403.
- 6. Dempster AP, Laird NM, Rubin DB. Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society, Series B. 1977;39:1–38.
- 7. Fan J, Peng H. Nonconcave penalized likelihood with a diverging number of parameters. The Annals of Statistics. 2004;32:928–961.
- 8. Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of American Statistical Association. 2001;96:1348–1360.
- 9. Fan J, Li R. Variable selection for Cox’s proportional hazards model and frailty model. The Annals of Statistics. 2002;30:74–99.
- 10. Fan J, Li R. New estimation and model selection procedures for semiparametric modeling in longitudinal data analysis. Journal of American Statistical Association. 2004;99:710–723.
- 11. Frank IE, Friedman JH. A statistical view of some chemometrics regression tools. Technometrics. 1993;35:109–148.
- 12. Heiser WJ. Convergent computing by iterative majorization: theory and applications in multidimensional data analysis. In: Krzanowski WJ, editor. Recent Advances in Descriptive Multivariate Analysis. Clarendon Press; Oxford: 1995. pp. 157–189.
- 13. Hestenes MR. Optimization Theory: The Finite Dimensional Case. Wiley; New York: 1975.
- 14. Hunter DR, Lange K. Rejoinder to discussion of “Optimization transfer using surrogate objective functions”. Journal of Computational and Graphical Statistics. 2000;9:52–59.
- 15. Kauermann G, Carroll RJ. A note on the efficiency of sandwich covariance matrix estimation. 2001;96:1387–1396.
- 16. Lange K. A gradient algorithm locally equivalent to the EM algorithm. Journal of the Royal Statistical Society, Series B. 1995;57:425–437.
- 17. Lange K, Hunter DR, Yang I. Optimization transfer using surrogate objective functions. Journal of Computational and Graphical Statistics. 2000;9:1–59.
- 18. Lehmann EL. Theory of Point Estimation. Wadsworth & Brooks/Cole; Pacific Grove, California: 1983.
- 19. Meng XL. On the rate of convergence of the ECM algorithm. The Annals of Statistics. 1994;22:326–339.
- 20. Meng XL, Van Dyk DA. The EM algorithm: an old folk song sung to a fast new tune (with discussion). Journal of the Royal Statistical Society, Series B. 1997;59:511–567.
- 21. McLachlan G, Krishnan T. The EM Algorithm and Extensions. Wiley; New York: 1997.
- 22. Miller AJ. Subset Selection in Regression. 2nd ed. Chapman and Hall; London: 2002.
- 23. Ortega JM. Numerical Analysis: A Second Course. Society for Industrial and Applied Mathematics; Philadelphia: 1990.
- 24. Tibshirani R. Regression shrinkage and selection via the LASSO. Journal of the Royal Statistical Society, Series B. 1996;58:267–288.
- 25. Wu CFJ. On the convergence properties of the EM algorithm. The Annals of Statistics. 1983;11:95–103.