Abstract

The present study explored constraints on mid-fusiform activation during object discrimination. In three experiments, participants performed a matching task on simple line configurations, nameable objects, three-dimensional (3-D) shapes, and colors. Significant bilateral mid-fusiform activation emerged when participants matched objects and 3-D shapes, as compared to when they matched two-dimensional (2-D) line configurations and colors, indicating that the mid-fusiform is engaged more strongly for processing structural descriptions (e.g., comparing 3-D volumetric shape) than perceptual descriptions (e.g., comparing 2-D or color information). In two of the experiments, the same mid-fusiform regions were also modulated by the degree of structural similarity between stimuli, implicating a role for the mid-fusiform in fine differentiation of similar visual object representations. Importantly, however, this process of fine differentiation occurred at the level of structural, but not perceptual, descriptions. Moreover, mid-fusiform activity was more robust when participants matched shape compared to color information using the identical stimuli, indicating that activity in the mid-fusiform gyrus is not driven by specific stimulus properties, but rather by the process of distinguishing stimuli based on shape information. Taken together, these findings further clarify the nature of object processing in the mid-fusiform gyrus. This region is engaged specifically in structural differentiation, a critical component process of object recognition and categorization.

INTRODUCTION

Efficient object recognition confers an obvious survival advantage and is essential to normal functioning in everyday human life. It is therefore important to study the behavioral and neural mechanisms of this essential and complex function. Although single-cell recordings in animals and brain imaging studies in humans have revealed a clear picture of retinotopic mapping in the early visual cortex (Wang, Tanifuji, & Tanaka, 1998; Engel, Glover, & Wandell, 1997; Sereno et al., 1995), it remains uncertain what specific neural correlates are involved and how they are organized functionally for higher levels of object recognition. Some researchers propose that there are functionally encapsulated areas in the ventral processing stream (VPS) specialized for processing different categories of objects or other visual entities (Cohen et al., 2002; Polk et al., 2002; Epstein & Kanwisher, 1998; Kanwisher, McDermott, & Chun, 1997). Specific stimulus properties that are unique to a particular object category may dictate whether a particular functional region is engaged versus another. Yet others suggest that the underlying principle of functional organization is not based on the type of stimulus or its properties but is driven instead by the perceptual and cognitive demands involved in accessing or differentiating object descriptions (Rogers, Hocking, Mechelli, Patterson, & Price, 2005; Joseph, 2001; Gauthier, Tarr, Anderson, Skudlarski, & Gore, 1999). For example, Rogers et al. (2005) showed that classifying animals at an intermediate (or basic) level of categorization (e.g., dog or car) activated regions of the fusiform gyrus bilaterally, but classifying vehicles at this intermediate level did not activate these regions. However, when the same objects were classified at a more specific level of categorization (e.g., Labrador or BMW), fusiform activation was observed for both categories of objects.
Consequently, these regions of the fusiform gyrus are involved in fine differentiation of object representations rather than being driven by taxonomic category differences or different stimulus properties.

The process of fine differentiation of visually homogeneous categories may also explain why the mid-fusiform gyrus is consistently activated for faces compared to some other object categories across studies (Joseph, 2001; Kanwisher et al., 1997). Faces represent the extreme end of a structural similarity continuum in that they share the same overall structure of two eyes, two ears, a nose, and a mouth (Arguin, Bub, & Dudek, 1996; Bruce & Humphreys, 1994; Damasio, Damasio, & Van Hoesen, 1982). Mid-fusiform activation is not reserved only for the class of faces (Rogers et al., 2005; Joseph & Gathers, 2002; Gauthier et al., 1999); thus, it is important to explore a more basic principle, such as fine differentiation of structural descriptions, that may drive activation in the fusiform gyrus.

Whereas some studies have implicated the mid-fusiform gyrus in processing of shape descriptions and other studies have implicated the mid-fusiform in the process of fine differentiation of object descriptions, no studies have integrated such findings into a unified processing account. The novel contribution of the present study is the integration of findings that the mid-fusiform gyrus not only processes abstract shape representations but that these pre-semantic representations are important for making fine differentiations among visually similar objects. We presently define the “mid-fusiform” gyrus based on Joseph’s (2001) review of category-specific brain activations in which the mid-fusiform gyrus was defined by a y Talairach coordinate (Talairach & Tournoux, 1988) ranging from −41 to −70. Fusiform regions outside of this range will be referred to as “anterior” or “posterior” fusiform regions.

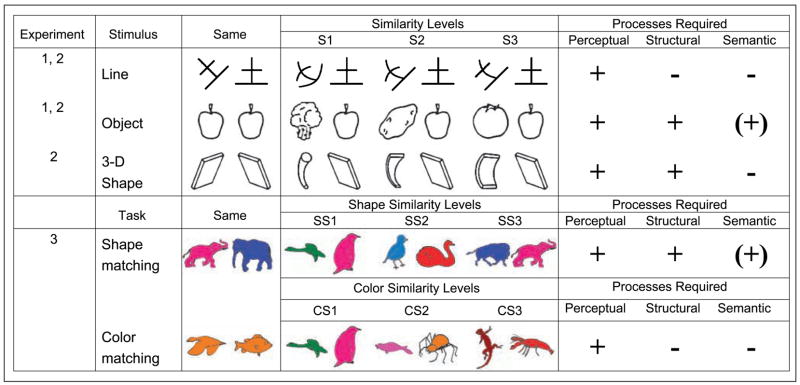

Three major questions guided the present research. First, does the mid-fusiform gyrus process structural or perceptual descriptions of objects? Second, is the mid-fusiform gyrus involved in fine differentiation of objects at the structural or perceptual level? Third, is activation in the mid-fusiform gyrus driven by specific stimulus properties or the process of differentiating objects at the level of structural descriptions? Object recognition was measured by performance on a matching task that required deciding whether two stimuli were the same or different. Line drawings of nameable objects and 3-D shapes (Figure 1) were presumed to tap into processing of structural descriptions, whereas line configurations and the color information in line drawings were presumed to tap into perceptual but not structural processing. Matching of objects may also involve semantic processing, but it is not required for the matching task (in Figure 1, semantic processing is parenthesized). The degree of structural and perceptual similarity between nonmatching stimuli was parametrically varied as in Joseph and Farley (2004) and Joseph and Gathers (2003) to tap into the process of fine differentiation. Higher levels of similarity were expected to induce higher functional magnetic resonance imaging (fMRI) signal in brain regions involved in fine differentiation.

Figure 1.

Sample stimuli used in all three experiments. In Experiments 1 and 2, P stimuli tapped into perceptual processing and PS and PSS stimuli tapped into structural processing. Within each processing type, three similarity levels were manipulated (S1–S3). In Experiment 3, the task required matching based on shape or color information of the same stimuli. Similarity level was parametrically varied (SS1–SS3 for shape matching and CS1–CS3 for color matching). Shape and color similarity were factorially combined, but not all permutations are shown. Hypothesized processes required for each manipulation are indicated in the rightmost columns.

The first question is whether the process of differentiation in the mid-fusiform gyrus occurs at the level of perceptual or structural object descriptions. According to some theories of object recognition, structural descriptions specify the 3-D volumetric configuration of individual parts of an object (Biederman, 1987; Marr & Nishihara, 1978). The arrangement and relative sizes of the primitive components define the structure of an object. A structural description is an abstract representation that mediates between perceptual and semantic processing. It allows the visual system to map different images or exemplars of an object onto the same percept, which is critical for accessing the relatively more stable and unchanging semantic representation of an object. Perceptual descriptions, in contrast, specify the edges in an image as well as the layout of surfaces (e.g., primal and 2 1/2-D sketches; Marr, 1982), but they do not specify the 3-D volumetric configuration of an object as structural descriptions do. Starrfelt and Gerlach (2007), Gerlach, Law, and Paulson (2006), and Gerlach et al. (2002) have suggested that mid-fusiform regions are involved in the process of shape configuration, defined as the integration of visual elements into whole objects. Mid-fusiform regions may also process 3-D structure that is not necessarily associated with meaningful objects (Op de Beeck, Beatse, Wagemans, Sunaert, & Van Hecke, 2000; Schacter et al., 1995). In addition, Hayworth and Biederman (2006) recently showed that anterior aspects of the lateral occipital complex (Malach et al., 1995) do not respond to local image features, but instead, this region responds to the component parts of an object, which is a critical aspect of a structural description. Therefore, mid-fusiform regions may be engaged by stimuli or tasks that involve processing the structural, rather than perceptual, descriptions of objects. 
The first hypothesis of the present study was that processing of objects, 3-D shapes, and shape information in colored drawings would induce more mid-fusiform activation than processing of line configurations or color information.

The second question is whether making fine distinctions among visually similar objects is specifically associated with mid-fusiform activation (Rogers et al., 2005; Gerlach, Law, & Paulson, 2004; Joseph & Farley, 2004; Joseph & Gathers, 2003; Price, Noppeney, Phillips, & Devlin, 2003; Gauthier et al., 1999; Gerlach, Law, Gade, & Paulson, 1999; Damasio, Grabowski, Tranel, Hichwa, & Damasio, 1996). Objects within the same category tend to overlap at the level of structural descriptions; consequently, the process of fine differentiation of objects (e.g., distinguishing different breeds of dogs) will require making fine distinctions among structural descriptions. Differentiating objects at a more basic or intermediate level may proceed from perceptual information such as the presence of straight or curved edges which may support, for example, the differentiation of natural versus manufactured objects. The present study explored this possibility further by parametrically varying the degree of similarity among objects at both the structural and perceptual levels. Two previous studies (Joseph & Farley, 2004; Joseph & Gathers, 2003) showed that fMRI signal in the mid-fusiform gyrus is parametrically modulated by degree of structural similarity between objects. Specifically, fMRI signal increased as the degree of structural similarity between two objects increased. This indicates that the mid-fusiform gyrus is engaged when making fine distinctions among similar objects. The finding by Rogers et al. (2005) that the mid-fusiform gyrus is engaged when classifying objects at a more specific level is also in line with this idea. However, previous studies have not determined whether making fine distinctions among object representations occurs at the perceptual or structural level. 
The second hypothesis of the present study was that the brain regions that are implicated in structural processing will also show greater modulation by structural similarity than by perceptual similarity. Specifically, greater degrees of structural similarity (Similarity level 3; Figure 1) are expected to induce higher levels of activation in the mid-fusiform gyrus, whereas greater degrees of perceptual similarity are not.

The third question is whether mid-fusiform activation during fine discrimination of objects is process- or stimulus-driven. Rogers et al. (2005) showed that the very same vehicle stimuli that engaged the mid-fusiform gyrus during specific categorization (e.g., distinguishing BMW from Morris) did not engage the mid-fusiform during intermediate categorization (e.g., distinguishing cars from dogs). Consequently, mid-fusiform activation during object processing is not driven by a specific configuration of visual features. Experiment 3 used colored line drawings and required matching based on the shape or color information as a way to control perceptual input while varying demands on structural (shape-matching) or perceptual (color-matching) processing. The third hypothesis of the present study was that shape matching will engage the mid-fusiform gyrus more strongly than will color matching.

EXPERIMENT 1

Methods

Participants

Twelve right-handed, native English speakers (M = 24.8 years, SD = 6.8; 8 women) with normal or corrected-to-normal vision were recruited from the local community and compensated for participation. A signed informed consent form approved by the University of Kentucky Institutional Review Board was obtained from each participant prior to the experiment.

Stimuli

The stimuli were pairs of objects (e.g., animals, fruits) or novel line configurations, presented above and below a fixation cross on the center of the screen. For all stimuli, the contours were black on a white background and each image subtended an approximate visual angle of 8.5°. The objects were line drawings used in previous studies (e.g., Joseph & Gathers, 2003; Joseph, 1997). The objects and line configuration pairs varied across three levels of similarity (Figure 1). For the object pairs (referred to as PSS stimuli), similarity levels were determined in a previous study (Joseph, 1997) in which participants rated the similarity of the two items of a pair in terms of 3-D volumetric structure. The distribution of structural similarity ratings determined the assignment of object pairs to each of the three similarity levels (PSS1–PSS3) in the present study, with 10 pairs per PSS level. The line configurations (referred to as P stimuli) consisted of the same three straight lines but in 12 different configurations. Each of these line configurations was then modified to create a distracter stimulus in which one, two, or three of the straight lines were replaced by a curved line (see Figure 1). If only one line was changed, then this distracter had the highest similarity (P3) with the original configuration, and if all three lines were changed, this distracter had the lowest similarity (P1). Because this was a same–different matching task, “same” stimulus pairs were also created. For the line configurations, a match consisted of the same line configuration in different orientations (with disparity between the two ranging from 15° to 45°). For the objects, a match consisted of two different exemplars, views, or positions of the same object.

Design and Procedures

The two main variables were processing type (P = line configurations and PSS = objects) and similarity level (1, 2, 3). Processing type was manipulated across functional runs (two runs of each type) and similarity level was manipulated across blocks within a run (three blocks of each similarity level counterbalanced within subjects). The order of the tasks was counterbalanced across subjects. Each run consisted of 112 trials, organized in a block fashion. Each block consisted of eight trials, with four match and four mismatch pairs randomly mixed together. Experimental blocks alternated with a block of four fixation trials in which a crosshair continuously appeared in the center of the screen for a total of 16 sec. Each trial lasted for 4000 msec, starting with a target display for 400 msec and a blank screen for 3600 msec. The target display was relatively short to minimize the effect of eye movements and gaze shift. However, participants were allowed 2000 msec to respond from the onset of the target display.
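The block and trial counts above can be cross-checked with a little arithmetic. The following is a minimal sketch, not the authors' procedure: the constant names are hypothetical, and the number of fixation blocks per run is inferred from the reported totals rather than stated in the text.

```python
# Timing and trial counts as reported in the Design and Procedures section.
TRIAL_MS = 4000
TARGET_MS, BLANK_MS = 400, 3600
TRIALS_PER_RUN = 112
TRIALS_PER_TASK_BLOCK = 8
TRIALS_PER_FIXATION_BLOCK = 4
SIMILARITY_LEVELS = 3
BLOCKS_PER_LEVEL = 3
RUNS = 4        # two runs for each of the two processing types
TR_MS = 4000    # repetition time reported under Image Acquisition

# Target display plus blank screen should fill one trial.
trial_is_consistent = (TARGET_MS + BLANK_MS == TRIAL_MS)

# Nine task blocks of eight trials each.
task_blocks = SIMILARITY_LEVELS * BLOCKS_PER_LEVEL
task_trials = task_blocks * TRIALS_PER_TASK_BLOCK

# Inferred (assumption): the remaining trials are fixation trials.
fixation_trials = TRIALS_PER_RUN - task_trials
fixation_blocks = fixation_trials // TRIALS_PER_FIXATION_BLOCK
fixation_block_sec = TRIALS_PER_FIXATION_BLOCK * TRIAL_MS // 1000

run_sec = TRIALS_PER_RUN * TRIAL_MS // 1000
volumes_per_run = TRIALS_PER_RUN * TRIAL_MS // TR_MS
total_volumes = volumes_per_run * RUNS

print(task_trials, fixation_blocks, fixation_block_sec, run_sec, total_volumes)
```

Under these assumptions, each run contains 9 task blocks and 10 fixation blocks of 16 sec each, which is what one would expect if a run both began and ended with fixation. The four runs together give 448 volumes, matching the number of EPI images reported under Image Acquisition.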

Given the nature of the matching used in “same” trials for objects, prior to entering the scanner, individuals were trained to identify which pairs of objects constituted a match to reduce any ambiguity about “same” responses. After learning the matches, participants received practice on same–different matching for both object and line pairs by pressing buttons on a serial response box, similar to how they would respond in the scanner. During training and during the actual scanning session, participants were asked to respond as accurately and quickly as possible. No feedback was given on performance. During the scanning session, stimuli were presented using a high-resolution rear-projection system and participants viewed the stimuli via a reflection mirror mounted on the head coil. Participants responded with the index (“same”) and middle finger (“different”) of their right hand, and responses were recorded with an MR-compatible response pad. A desktop computer running E-Prime (Version 1.1 SP3, Psychology Software Tools, Pittsburgh, PA) controlled stimulus presentation and the recording of responses. The timing of stimulus presentation was synchronized with the magnet trigger pulses.

Image Acquisition

A 1.5-T Siemens Vision magnetic resonance imaging system at the University of Kentucky Medical Center equipped for echo-planar imaging (EPI) was used for data acquisition. A total of 448 EPI images were acquired (TR = 4000 msec, TE = 40 msec, flip angle = 90°), each consisting of 44 contiguous axial slices (matrix = 64 × 64, in-plane resolution = 3.56 × 3.56 mm², thickness = 3 mm, gap = 0.6 mm). A high-resolution T1-weighted MP-RAGE anatomical set (150 sagittal slices, matrix = 256 × 256, field-of-view = 256 × 256 mm², slice thickness = 1 mm, no gap) was collected for each participant.

Behavioral Data Analysis

Reaction time and error rates were recorded from participants performing the matching task in the scanner. To ensure that the reaction time variable was normally distributed (to meet the assumptions of a multivariate approach), the log transformation (LogRTs) of individual reaction times was used. LogRTs from individual trials more than three standard deviations from the overall group mean were considered outliers (no outliers emerged in this experiment). Only correct LogRTs were submitted to analyses (79% of the data). Each dependent variable was analyzed in separate repeated measures analyses of variance (ANOVAs) using the multivariate approach (O’Brien & Kaiser, 1985). Results from multivariate tests are reported when sphericity assumptions are violated; otherwise, results from the univariate tests are reported (Hertzog & Rovine, 1985). Data from 11 subjects were analyzed. One subject’s responses were not completely recorded due to a malfunction of the response device during scanning.
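The reaction time screening described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the function name is hypothetical, the natural logarithm is assumed (the base is not reported), and the mean and standard deviation are assumed to be computed over all trials pooled across participants.

```python
import math
import statistics

def screen_log_rts(rts_ms, correct, n_sd=3.0):
    """Log-transform reaction times, then keep only correct trials whose
    LogRT lies within n_sd standard deviations of the pooled mean."""
    log_rts = [math.log(rt) for rt in rts_ms]   # natural log: an assumption
    mean = statistics.mean(log_rts)
    sd = statistics.stdev(log_rts)
    return [
        log_rt
        for log_rt, is_correct in zip(log_rts, correct)
        if is_correct and abs(log_rt - mean) <= n_sd * sd
    ]

# Toy data: 20 ordinary trials plus one extreme trial; one trial is incorrect.
rts = [800.0] * 20 + [60000.0]
acc = [True] * 19 + [False] + [True]
kept = screen_log_rts(rts, acc)
```

In this toy example the extreme trial lies more than three standard deviations from the mean and is dropped along with the incorrect trial, leaving 19 LogRTs for analysis.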

fMRI Data Analysis

For the first-level analysis on individual subjects’ data, the first four volumes of each run were discarded to allow the magnetic resonance signal to reach steady state. Using FMRIB’s FSL package (www.fmrib.ox.ac.uk/fsl), images in each participant’s time series were motion corrected, spatially smoothed with a 3-D Gaussian kernel (full width at half maximum = 7.5 mm), and temporally filtered using a high-pass filter (360 sec). Customized square waveforms (on/off) were generated for each participant according to the order in which he or she completed the experimental conditions. In a second analysis, a parametric linear regressor was created to model an ascending similarity function to detect regions that were sensitive to the process of fine differentiation. These waveforms were then convolved with a double-gamma hemodynamic response function. For each participant, FILM (FMRIB’s Improved Linear Model) was used to estimate the hemodynamic parameters for different explanatory variables and to generate statistical contrast maps of interest. After statistical analysis for each participant’s time series, contrast maps were normalized into common stereotaxic space before mixed-effects group analyses were performed. The average EPI image was registered to the MP-RAGE volume, and the MP-RAGE volume to the ICBM152 T1 template, using FMRIB’s Linear Image Registration Tool (FLIRT) module in FSL.
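The two kinds of explanatory variables described above, an on/off square waveform and an ascending parametric similarity regressor, can be sketched in a few lines. This is an illustrative sketch only, not FSL's implementation: the block order is hypothetical, the double-gamma parameters (shapes 6 and 16, undershoot ratio 1/6) are a commonly used form and are assumed rather than taken from the paper, and the regressors are sampled once per volume.

```python
import math

TR = 4.0  # seconds, from the acquisition parameters

def double_gamma_hrf(t):
    """Illustrative double-gamma response: an early peak minus a late undershoot."""
    if t <= 0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0

def convolve(signal, kernel):
    """Causal discrete convolution, truncated to the length of the signal."""
    out = []
    for i in range(len(signal)):
        n_terms = min(i + 1, len(kernel))
        out.append(sum(signal[i - j] * kernel[j] for j in range(n_terms)))
    return out

# Hypothetical order of similarity levels across the nine task blocks of one run.
block_levels = [1, 3, 2, 2, 1, 3, 3, 2, 1]

boxcar, parametric = [], []
for level in block_levels:
    boxcar += [0.0] * 4 + [1.0] * 8              # 4 fixation volumes, 8 task volumes
    parametric += [0.0] * 4 + [level - 2.0] * 8  # centered weights -1, 0, +1
boxcar += [0.0] * 4                              # closing fixation block
parametric += [0.0] * 4

kernel = [double_gamma_hrf(k * TR) for k in range(8)]  # response sampled over 0-28 sec
boxcar_regressor = convolve(boxcar, kernel)
parametric_regressor = convolve(parametric, kernel)
```

Centering the similarity weights keeps the parametric regressor from simply duplicating the on/off waveform, so that it captures the ascending trend across similarity levels rather than overall task activation.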

Second-level analyses were performed to pool over the two runs of each processing type for individual subjects. At the third level, spatially normalized contrast maps from individual participants were entered into mixed-effects group analysis. Regions of interest (ROIs) were defined as clusters of 30 or more contiguous voxels (Xiong, Gao, Lancaster, & Fox, 1995), in which parameter estimate values differed significantly from zero (p < .01, two-tailed). The Mintun peak algorithm (Mintun, Fox, & Raichle, 1989) located the local peaks (maximal activation) within each ROI. Additional analyses were performed based on the average activation intensity extracted from the ROIs identified at the group level. The present focus is on regions in the fusiform gyrus and the inferior temporal cortex, but other regions are reported in the Appendix.
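The ROI definition above combines a height threshold with a cluster extent criterion. A minimal sketch of that logic is given below. It is not the software used in the study: the function name and the dictionary representation of the statistical map are hypothetical, face-to-face (6-neighbor) contiguity is an assumption, and only one direction of a contrast is examined, with the two-tailed p < .01 criterion expressed as z > 2.58.

```python
from collections import deque

Z_THRESHOLD = 2.58      # corresponds to p < .01, two-tailed
MIN_CLUSTER_SIZE = 30   # contiguous voxels

NEIGHBOR_OFFSETS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0),
                    (0, -1, 0), (0, 0, 1), (0, 0, -1))

def find_clusters(z_map, z_threshold=Z_THRESHOLD, min_size=MIN_CLUSTER_SIZE):
    """z_map maps (x, y, z) voxel indices to z statistics.
    Returns the clusters (sets of voxel indices) of face-connected
    suprathreshold voxels that contain at least min_size voxels."""
    remaining = {voxel for voxel, z in z_map.items() if z > z_threshold}
    clusters = []
    while remaining:
        seed = remaining.pop()
        cluster, queue = {seed}, deque([seed])
        while queue:                      # breadth-first flood fill
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBOR_OFFSETS:
                neighbor = (x + dx, y + dy, z + dz)
                if neighbor in remaining:
                    remaining.remove(neighbor)
                    cluster.add(neighbor)
                    queue.append(neighbor)
        if len(cluster) >= min_size:
            clusters.append(cluster)
    return clusters

# Toy map: one 32-voxel cluster and one 8-voxel cluster, well separated.
toy_map = {(x, y, z): 3.0 for x in range(4) for y in range(4) for z in range(2)}
toy_map.update({(x, y, z): 4.0
                for x in range(10, 12) for y in range(10, 12) for z in range(10, 12)})
rois = find_clusters(toy_map)
```

In the toy map only the 32-voxel cluster survives; the 8-voxel cluster exceeds the height threshold but fails the extent criterion.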

Results and Discussion

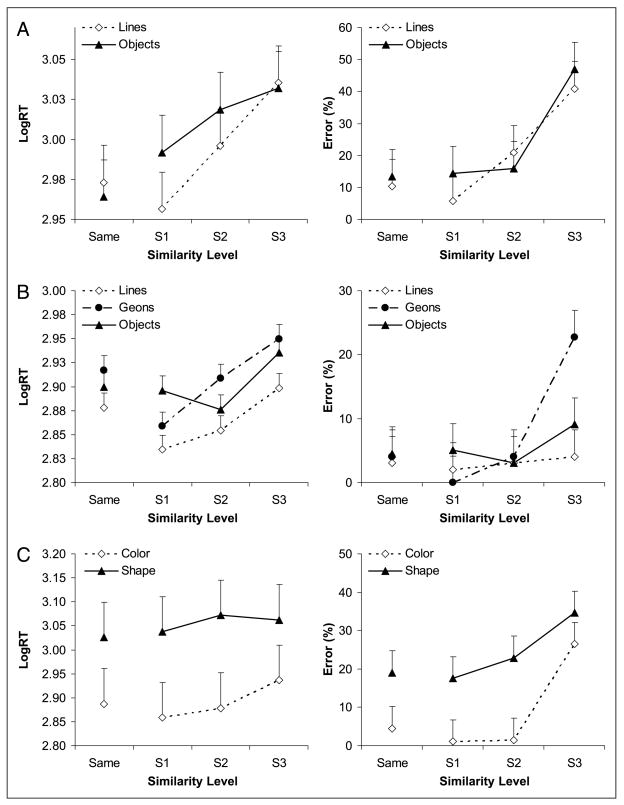

Matching performance is plotted as a function of processing type and similarity level in Figure 2A. Object matching and line configuration matching were not different for LogRT [F(1, 10) = 1.2, p = .30] or errors [F(1, 10) = 2.7, p = .13]. However, objects or line configurations that were greater in similarity induced longer reaction times [F(3, 8) = 17.2, p < .001] and higher error rates [F(3, 8) = 41.0, p < .0001]. The Processing type × Similarity level interaction was significant for LogRT [F(3, 30) = 4.9, p < .05], but the simple main effects of perceptual and structural similarity (on “different” trials) and linear trends were significant (all p < .05).

Figure 2.

(A–C) Behavioral performance for Experiments 1 through 3, respectively. In C, each color similarity level response is averaged across all shape similarity levels, and the converse for shape similarity. Error bars in this figure and Figures 3, 4, and 5 are within-subject confidence intervals for the similarity effect (Loftus & Masson, 1994).

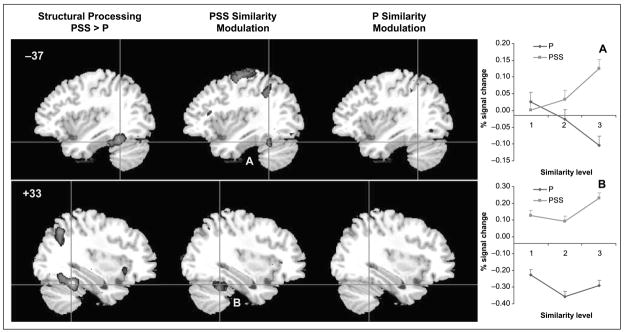

To identify the regions that are recruited by the processing of structural information, the brain areas significantly activated by the objects as compared to the line configurations were determined (Table 1). Activity in the bilateral mid-fusiform gyrus was significantly greater for discrimination of objects compared to line configurations (Figure 3). Discrimination of line configurations did not recruit any VPS regions more than object discrimination.

Table 1.

Fusiform and Inferior Temporal Activation in Experiment 1

| Region | BA | Size | MNI x | MNI y | MNI z | Max. Z value |
|---|---|---|---|---|---|---|
| Object > Line Configuration | | | | | | |
| R Fusiform | 37 | 1019 | +38 | −46 | −24 | 4.44 |
| L Inferior temporal | 20 | 896 | −46 | −48 | −14 | 4.04 |
| PSS-similarity Modulated Regions | | | | | | |
| R Fusiform | 37 | 127 | +42 | −62 | −14 | 3.22 |
| L Fusiform | 37 | 482 | −42 | −62 | −6 | 3.81 |

BA = Brodmann’s area; R = right; L = left.

Figure 3.

Activation maps for the analysis of processing type (structural vs. perceptual processing) and similarity modulation in Experiment 1 (z > 2.58). Plots A–B correspond to Regions A and B.

To determine whether mid-fusiform regions are modulated by degree of structural similarity, a second analysis was conducted in which similarity level was treated as a single regressor with three levels (i.e., PSS1–PSS3 for the two object runs or P1–P3 for the two line configuration runs). The mid-fusiform regions that were implicated in structural processing also show modulation by structural similarity (Figure 3). The similarity modulated regions are subregions within those implicated in structural processing. No fusiform regions were modulated by perceptual similarity. A repeated measures ANOVA on percent signal change from resting baseline as a function of processing type (structural, perceptual) and similarity level (S1–S3) confirmed this for the left mid-fusiform region. The Processing type × Similarity interaction was significant [F(2, 22) = 3.5, p = .05] and indicates that fMRI signal was greatest for the highest level of structural similarity but not for the equally difficult condition of high perceptual similarity (Figure 3A). The right fusiform region did not show significant similarity modulation (Figure 3B).

In this experiment, robust bilateral activation emerged in the mid-fusiform gyrus when participants discriminated object pairs, which are associated with structural descriptions, as compared to pairs of meaningless line configurations, which are only associated with perceptual descriptions. This mid-fusiform activation for object discrimination reflects the processing of structural information given that both line configurations and objects are associated with perceptual descriptions. The comparable behavioral performance for these two processing types indicates that the mid-fusiform activation was not driven by task difficulty. The line configurations were as difficult to discriminate as the objects, but the object discrimination task more robustly recruited the mid-fusiform gyrus. In addition, in agreement with Joseph and Farley (2004) and Joseph and Gathers (2003), fMRI signal in the left mid-fusiform gyrus was systematically modulated by structural similarity level but not by perceptual similarity. Consequently, the left mid-fusiform gyrus is involved in fine differentiation of structural descriptions. The right mid-fusiform gyrus was not as strongly implicated in this differentiation process but it was associated with structural processing.

EXPERIMENT 2

A clear difference between line configurations and objects is the presence of structural object information in the latter, but the two stimulus types also differ in terms of semantic information. Objects have rich semantic representations, including information about their attributes, category membership, and names, whereas line configurations are semantically impoverished. Although semantic information may not be required by the present matching task, it may be implicitly activated (Pins, Meyer, Humphreys, & Boucart, 2004; Joseph, 1997; Joseph & Proffitt, 1996). Therefore, the mid-fusiform activation attributed to structural processing in Experiment 1 may instead reflect semantic processing of the objects during matching. In addition, mid-fusiform or other visual processing regions may show different responses to the processing of 3-D structure associated with meaningful and nameable entities versus processing of 3-D structure of semantically impoverished stimuli. In the present experiment, a third stimulus type was added—primitive 3-D shapes which require structural processing but should make minimal demands on semantic processing (see Figure 1).

Methods

Participants

Fourteen right-handed, native English speakers (M = 25 years, SD = 5.5; 6 women), with normal or corrected-to-normal vision, were recruited from the local community and compensated for participation. None of the subjects participated in the other two experiments. A signed informed consent form approved by the University of Kentucky Institutional Review Board was obtained from each participant prior to the experiment. Data from two participants were excluded due to excessive head motion.

Stimuli

In addition to the stimuli used in Experiment 1, a third stimulus type was added—basic 3-D geometric shapes defined as geons by Biederman (1987) (Figure 1). The 3-D shape stimuli (referred to as PS stimuli) were 12 drawings of 3-D shapes scanned in from Biederman in which the 3-D shapes were classified along four dimensions, or nonaccidental properties: (a) curved or straight edge of the cross-section of the shape; (b) both rotational and reflectional symmetry or only reflectional symmetry of the cross-section; (c) constant or expanded size of the cross-section along the longitudinal axis of the shape; and (d) curved or straight longitudinal axis of the shape. Ten shape pairs were created for each of three similarity levels and the number of overlapping nonaccidental properties determined the similarity level. For the 3-D shape stimuli, “same” pairs consisted of an item and its left–right mirror image. As shown in Figure 1, 3-D shapes were expected to engage both perceptual and structural processing but minimal degrees of semantic processing.
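The idea that similarity depends on the number of overlapping nonaccidental properties can be made concrete with a short sketch. The coding below is hypothetical: the field names and the three example shapes are illustrative only, and the mapping from overlap counts to the three similarity levels is not specified in the text, so it is not reproduced here.

```python
from typing import NamedTuple

class Shape3D(NamedTuple):
    """Hypothetical binary coding of the four nonaccidental properties (a)-(d)."""
    curved_edge: bool       # (a) curved vs. straight edge of the cross-section
    full_symmetry: bool     # (b) rotational and reflectional vs. reflectional only
    constant_size: bool     # (c) constant vs. expanded cross-section size
    curved_axis: bool       # (d) curved vs. straight longitudinal axis

def shared_properties(first, second):
    """Count the nonaccidental properties on which two shapes agree (0 to 4)."""
    return sum(a == b for a, b in zip(first, second))

# Illustrative shapes (assumed codings, not the study's stimulus list).
brick = Shape3D(curved_edge=False, full_symmetry=True, constant_size=True, curved_axis=False)
cylinder = Shape3D(curved_edge=True, full_symmetry=True, constant_size=True, curved_axis=False)
cone = Shape3D(curved_edge=True, full_symmetry=True, constant_size=False, curved_axis=False)

overlap_brick_cylinder = shared_properties(brick, cylinder)
overlap_brick_cone = shared_properties(brick, cone)
```

Under this coding, the brick and cylinder differ on one property and the brick and cone on two, so the first pair would count as the more structurally similar of the two.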

Design, Procedures, and Analyses

In this second experiment, an additional processing type was added (3-D shapes) to the two processing types used in Experiment 1 (line configurations and objects), with one functional run for each processing type. Similarity level was again manipulated across blocks of each functional run. Within each experimental block, two match and six mismatch pairs were randomly mixed. The order of the runs was counterbalanced across subjects. All procedures and analyses were the same as in Experiment 1, except that no second-level analysis was necessary to pool over runs within each subject because participants performed only one run for each processing type.

Results and Discussion

Behavioral data from 11 subjects were analyzed. One subject’s responses were not completely recorded due to malfunction of the response device during scanning. Outliers comprised 0.25% of the LogRT data and were omitted from analyses. Only correct LogRTs (94% of the data) were submitted to analyses. Figure 2B shows matching performance as a function of processing type and similarity level. Object and 3-D shape matching were more difficult than matching line configurations, as indicated by a significant main effect of processing type for LogRT [F(2, 20) = 7.6, p < .01]. The effect of processing type was marginal for errors [F(2, 20) = 3.6, p = .058], and matching of objects was not necessarily more difficult than matching of line configurations [F(1, 10) = 3.6, p > .05]. Performance was modulated by similarity level, with greater degrees of similarity inducing longer reaction times [F(3, 8) = 77.8, p < .0001] and more errors [F(3, 8) = 12.7, p < .005]. There was no interaction between processing type and similarity level for either LogRT or error rates, and no speed–accuracy tradeoff.

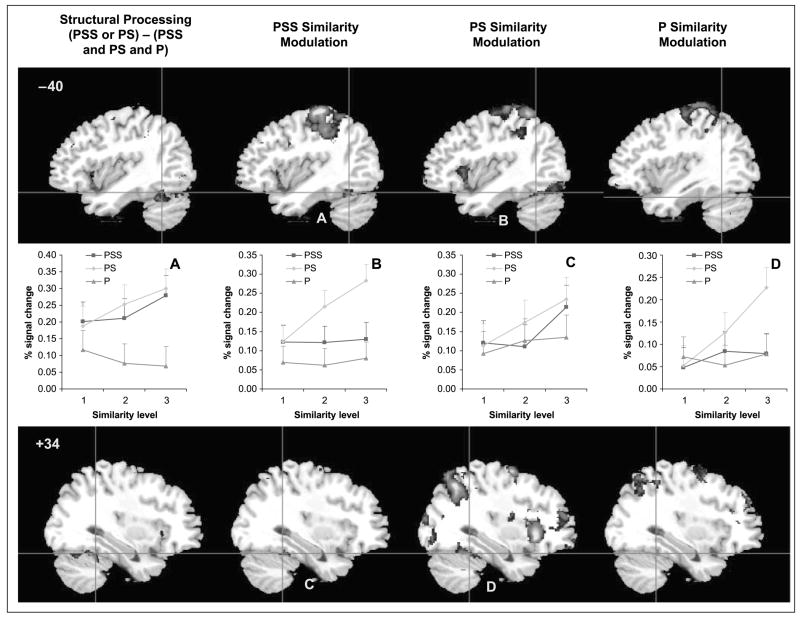

The first analysis isolated the regions that were involved in structural processing using the contrasts of PSS > P and PS > P. Although the mid-fusiform gyrus was activated according to these contrasts, the regions were quite small (Table 2). Next, the activation map for the objects versus line configuration (PSS > P) was combined with the map for 3-D shapes versus line configurations (PS > P) using arithmetic operators (addition for logical “OR” and multiplication for logical “AND”) to implement logical operations (also see Joseph, Partin, & Jones, 2002). The reasoning was that both objects and 3-D shapes tap into structural processing, whereas line configurations only tap into perceptual processing. The group activation maps were combined as follows: [(PSS > P) or (PS > P)] − [(PSS > fixation) and (PS > fixation) and (P > fixation)]. The first part of the equation isolates any voxels that are activated either by objects or 3-D shapes relative to line configurations, as an index of structural processing. The second part of the equation removes any voxels that are activated by all stimuli relative to fixation, as an index of perceptual processing. The voxels isolated by this equation are thus associated with structural but not perceptual processing. Using this combination of activation maps, the bilateral mid-fusiform gyrus was activated when participants were performing object or 3-D shape discrimination as compared to discriminating line configurations (Figure 4 and Table 2). This bilateral mid-fusiform activation was similar to the activation in Experiment 1. Other brain regions that emerged from these contrasts are listed in the Appendix.
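The map combination described above can be illustrated on binarized maps. The sketch below is a simplified stand-in for the actual image arithmetic: the function and argument names are hypothetical, each map is represented as a list of 0/1 flags (one per voxel), the sum used for "OR" is clipped to 1, and negative values after subtraction are set to 0, both of which are assumptions.

```python
def structural_only_mask(pss_gt_p, ps_gt_p, pss_gt_fix, ps_gt_fix, p_gt_fix):
    """Compute [(PSS > P) OR (PS > P)] - [(PSS > fix) AND (PS > fix) AND (P > fix)]
    voxel by voxel on thresholded, binarized maps."""
    result = []
    for a, b, c, d, e in zip(pss_gt_p, ps_gt_p, pss_gt_fix, ps_gt_fix, p_gt_fix):
        either = min(a + b, 1)      # addition (clipped) implements logical OR
        common = c * d * e          # multiplication implements logical AND
        result.append(max(either - common, 0))
    return result

# Four toy voxels.
mask = structural_only_mask(
    pss_gt_p=[1, 0, 1, 0],
    ps_gt_p=[0, 0, 1, 1],
    pss_gt_fix=[1, 1, 1, 0],
    ps_gt_fix=[1, 1, 1, 0],
    p_gt_fix=[0, 1, 1, 0],
)
```

In the toy example, the first and last voxels are retained because they respond to objects or 3-D shapes more than to line configurations without being activated by all three stimulus types relative to fixation. The third voxel is removed because all stimuli activate it relative to fixation.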

Table 2.

Fusiform, Occipital, and Inferior Temporal Activation in Experiment 2

| Region | BA | Size | MNI x | MNI y | MNI z | Max. Z value |
|---|---|---|---|---|---|---|
| Structural Processing: PSS > P | | | | | | |
| L Fusiform | 37 | 35 | −38 | −56 | −20 | 3.01 |
| L Posterior fusiform | 19 | 63 | −30 | −70 | −14 | 3.25 |
| Structural Processing: PS > P | | | | | | |
| R Fusiform | 37 | 65 | +30 | −56 | −18 | 3.21 |
| L Middle occipital | 37 | 55 | −40 | −70 | +2 | 3.05 |
| Structural Processing: [(PSS > P) OR (PS > P)] − [(PSS > Fixation) AND (PS > Fixation) AND (P > Fixation)] | | | | | | |
| R Fusiform/cerebellum | 37 | 665 | +26 | −54 | −22 | 4.10 |
| L Fusiform | 37 | 589 | −34 | −54 | −24 | 3.67 |
| PSS-similarity Modulated Regions | | | | | | |
| R Fusiform | 37 | 35 | +34 | −40 | −24 | 3.45 |
| R Fusiform | 37 | 27 | +40 | −56 | −16 | 3.22 |
| R Inferior temporal | 37 | 10 | +58 | −58 | −16 | 2.92 |
| L Fusiform | 37 | 307 | −34 | −54 | −22 | 3.88 |
| PS-similarity Modulated Regions | | | | | | |
| R Inferior temporal | 37 | 944 | +50 | −60 | −2 | 3.79 |
| L Fusiform | 37 | 1151 | −44 | −62 | −22 | 3.57 |

BA = Brodmann’s area; R = right; L = left.

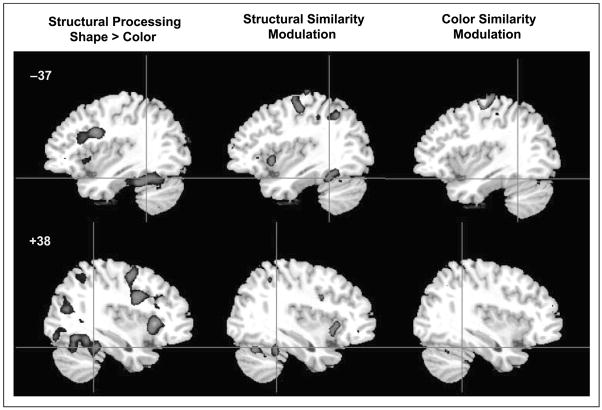

Figure 4.

Activation maps for the analysis of processing type (structural vs. perceptual processing) and similarity modulation in Experiment 2 (z > 2.58). Plots A–D correspond to Regions A–D.

To determine whether mid-fusiform regions activated by structural descriptions are implicated in fine differentiation of shape, a second analysis was conducted in which similarity level was treated as a single regressor with three levels (PSS1–PSS3 for the object run, PS1–PS3 for the 3-D shape run, or P1–P3 for the line configuration run). The mid-fusiform regions that were modulated by structural similarity (either PSS similarity or PS similarity) are illustrated in Figure 4 and listed in Table 2. As in Experiment 1, the same mid-fusiform regions that are implicated in structural processing are also modulated by structural similarity of either 3-D shapes or objects. The PS- and PSS-similarity modulation occurred in slightly different, but highly overlapping, regions. Importantly, these regions were modulated more by increasing structural similarity than by increasing perceptual similarity (see panels A–D in Figure 4). The relatively anterior regions in both hemispheres (panels A and C) were modulated by both PSS and PS similarity, whereas the relatively more posterior regions (panels B and D) were modulated primarily by PS similarity. Repeated measures ANOVAs on percent signal change from resting baseline as a function of processing type (PSS, PS, P) and similarity level (S1–S3) were conducted in each of the six structural similarity modulated regions in Table 2. All six regions showed significant effects of similarity level (p ≤ .055), but the Processing type × Similarity interaction did not reach significance in these regions.
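One common way to treat similarity level as a single regressor is a block-wise parametric boxcar whose height tracks the similarity level of each block. The sketch below illustrates that general idea only; it is not the analysis pipeline used in this study. Mean-centering the levels, the block and rest lengths (in volumes), and the function name `similarity_regressor` are all assumptions made for illustration. Convolution with a hemodynamic response function is omitted.

```python
import numpy as np

def similarity_regressor(block_levels, block_len, rest_len):
    """Boxcar regressor whose height is the mean-centered similarity level of each block.

    block_levels: similarity level (1, 2, or 3) of each block, in presentation order.
    block_len, rest_len: block and rest durations in volumes (hypothetical values).
    """
    levels = np.asarray(block_levels, dtype=float)
    weights = levels - levels.mean()  # mean-center so the regressor sums to zero
    segments = []
    for w in weights:
        segments.append(np.zeros(rest_len))      # rest period before each block
        segments.append(np.full(block_len, w))   # task block weighted by similarity
    segments.append(np.zeros(rest_len))          # final rest period
    return np.concatenate(segments)

# Three blocks at similarity levels 1, 3, and 2 (order is arbitrary here).
reg = similarity_regressor([1, 3, 2], block_len=4, rest_len=2)
print(reg)
```

A voxel whose signal increases with similarity level would receive a positive weight on such a regressor, which is the sense in which a region is described as "modulated" by similarity.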

In this experiment, the bilateral mid-fusiform gyrus was more strongly activated when participants discriminated objects and 3-D shapes than when they discriminated 2-D line configurations. The 3-D shapes have clear 3-D structure but minimal semantic information, whereas the objects have both 3-D structure and semantic associations. Joseph and Gathers (2003) showed that name agreement for these 3-D shapes is much lower (35.6%) than the name agreement for object stimuli (85.2%), and these same stimuli were used in this study. Therefore, the semantic information associated with these 3-D shapes is minimal. Given that mid-fusiform activation emerged for both object and 3-D shape discrimination, semantic engagement may not be necessary for this activation to occur. In addition, fMRI signal in the bilateral mid-fusiform gyrus in this experiment was greater for high structural similarity, which indicates that this region is engaged for fine differentiation of objects at the level of structural descriptions.

EXPERIMENT 3

Previous studies have suggested that mid-fusiform activation may not be driven by different stimulus properties related to different visual categories, but may be largely dependent on the type of processing engaged for an object recognition or categorization task. In particular, the requirement to process object form (Cant & Goodale, 2007; Starrfelt & Gerlach, 2007; Gerlach et al., 2002, 2006; Hayworth & Biederman, 2006) or the demand for greater differentiation of object representations (Rogers et al., 2005; Gerlach et al., 2004; Joseph & Farley, 2004; Joseph & Gathers, 2003; Price et al., 2003; Gauthier et al., 1999; Damasio et al., 1996) may be a stronger factor predicting mid-fusiform activation than would differences in stimulus properties associated with different visual categories. In this experiment, this idea is explored further by using the same set of stimuli for two different tasks—color matching and shape matching. Keeping the stimuli the same across the two tasks controls for the bottom–up influence of specific stimulus properties on mid-fusiform activation. Shape matching is expected to more strongly recruit the mid-fusiform gyrus than will color matching. This difference in activation cannot be attributed to different perceptual inputs because the same stimuli are used for each task.

Methods

Participants

Fourteen right-handed, native English speakers (M = 23.7 years, SD = 3.8; 5 women) with normal or corrected-to-normal vision and normal color vision were recruited from the local community and compensated for participation. None of the subjects participated in the other two experiments. A signed informed consent form approved by the University of Kentucky Institutional Review Board was obtained from each participant prior to the experiment. Data from one participant were excluded because they were incomplete.

Stimuli

The stimuli were line drawings of animals painted in artificial colors (Figure 1). Animal pairs were constructed to yield three levels of similarity, similar to Experiments 1 and 2 and Joseph and Gathers (2003). The colors used for the animal stimuli were selected based on a preliminary rating experiment in which 27 individual color patches (constructed from all possible combinations of red, green, or blue values set to 0, 128, or 255) were paired with each other to yield 351 total color pairs. Participants rated the similarity of the two colors in a pair by pressing one of 11 buttons on the keyboard (Button 1 indicated lowest similarity and Button 11 indicated highest similarity). Twenty participants viewed all color pairs twice in a random order. The mean rating for each pair was computed and the resulting distribution was divided into three equal groups to determine three similarity levels for the colors. The colors that were assigned to the animals were drawn from the three similarity levels. The final color pairs were not matched for luminance or saturation because the goal was to manipulate a stimulus property that would be perceptual in nature and would not require processing of object structure. The goal was not to isolate wavelength from luminance or saturation information.
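The stimulus counts above follow from simple combinatorics: three values on each of three channels give 3³ = 27 colors, and 27 colors give 27 × 26 / 2 = 351 unordered pairs. The sketch below reproduces those counts and shows one possible reading of "three equal groups", namely an equal-count (tercile) split of the mean ratings. That reading is an assumption, as is the helper name `tercile_bins`; the placeholder ratings are synthetic and stand in for the real rating data, which are not reported here.

```python
from itertools import combinations, product

levels = (0, 128, 255)
colors = list(product(levels, repeat=3))  # all (R, G, B) combinations: 27 colors
pairs = list(combinations(colors, 2))     # all unordered color pairs: 351 pairs

def tercile_bins(mean_ratings):
    """Assign each pair to similarity level 1, 2, or 3 by rank of its mean rating.

    Assumes an equal-count split; ties are broken by original order.
    """
    order = sorted(range(len(mean_ratings)), key=lambda i: mean_ratings[i])
    n = len(order)
    bins = [0] * n
    for rank, idx in enumerate(order):
        bins[idx] = min(3 * rank // n, 2) + 1
    return bins

# Synthetic placeholder ratings (NOT data from the study), one per pair.
placeholder_ratings = [float(i % 11 + 1) for i in range(len(pairs))]
bins = tercile_bins(placeholder_ratings)
print(len(colors), len(pairs), [bins.count(k) for k in (1, 2, 3)])
```

With 351 pairs, an equal-count split yields 117 pairs per similarity level.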

Both structural and color similarity of the animal pairs were manipulated simultaneously so that the same set of stimuli could be used for both the color and shape-matching tasks. A preliminary study indicated that animal matching was more difficult than matching color patches, so we attempted to use animal stimuli that yielded the lowest reaction times within each similarity level (based on previous studies). “Same” responses for color pairs consisted of different animals in the same color. “Same” responses for animal pairs consisted of two exemplars, positions, or orientations of the same animal in different colors. As in Experiment 1, participants were trained to identify the same pairs prior to the scanning session.

Design, Procedures, and Analyses

Three variables were manipulated orthogonally in this experiment: color similarity (CS), shape similarity (SS; Figure 1), and the stimulus dimension to which participants were asked to respond, which is referred to as processing type (i.e., color or structural processing). Two runs of each processing type were presented in counterbalanced order across subjects. Color and shape similarity levels were manipulated across blocks for each matching task. Within each of nine experimental blocks, two “same” and six “different” pairs were randomly mixed. Of the six “different” pairs, two pairs consisted of stimuli that were matched in the task-irrelevant dimension (e.g., two exemplars of the same animal in different colors for the color-matching task). This setup enabled us to manipulate the similarity levels of the task-relevant dimension across blocks while using the same set of stimuli for both the color- and shape-matching tasks. In both the behavioral and fMRI data analyses, the effect of the relevant dimension’s similarity was collapsed across the irrelevant dimension’s similarity. All data acquisition parameters and analyses were the same as in Experiment 1.
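The block structure can be sketched as follows. The text does not state how the nine blocks map onto similarity levels, so the sketch assumes they correspond to the 3 × 3 crossing of color and shape similarity. The trial labels, the function name `build_run`, and the seeded shuffle are hypothetical and serve only to make the counts concrete.

```python
import random
from itertools import product

def build_run(seed=0):
    """Return nine blocks, one per (color similarity, shape similarity) combination.

    Assumption: nine blocks = 3 CS levels x 3 SS levels. Each block holds
    2 "same" pairs and 6 "different" pairs, 2 of which are matched on the
    task-irrelevant dimension.
    """
    rng = random.Random(seed)
    blocks = []
    for cs, ss in product((1, 2, 3), repeat=2):
        trials = (
            ["same"] * 2
            + ["different_irrelevant_matched"] * 2
            + ["different"] * 4
        )
        rng.shuffle(trials)  # pairs are randomly mixed within a block
        blocks.append({"color_similarity": cs, "shape_similarity": ss, "trials": trials})
    return blocks

blocks = build_run()
print(len(blocks), len(blocks[0]["trials"]))
```

Collapsing across the irrelevant dimension then amounts to averaging, for each level of the relevant dimension, over the three blocks that share that level.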

Results and Discussion

Data from 13 subjects were analyzed. Outliers comprised 0.03% of the LogRT data and were omitted from analyses. The correct LogRTs (84% of the data) and error rates are plotted as a function of processing type and similarity level for the relevant task dimension (Figure 2C). Structural processing (collapsed across color-matching conditions) was harder than color processing (collapsed across shape-matching conditions), as shown by the significant main effect of processing type for LogRT [F(1, 12) = 294.2, p < .0001] and errors [F(1, 12) = 15.7, p < .005]. Also, greater degrees of similarity were more difficult to resolve than lower levels, as shown by the significant main effect of similarity level for LogRT [F(3, 36) = 27.7, p < .0001] and errors [F(3, 10) = 27.7, p < .0001]. The Processing type × Similarity level interaction was significant for LogRT [F(3, 36) = 16.3, p < .0001], but the simple main effects of color and shape similarity (on “different” trials) were significant, as were the linear trends (p < .05). Given that both the LogRTs and error rates showed similar patterns, there was no speed–accuracy tradeoff.

As in the analysis used in Experiment 1, brain regions that were activated more by structural processing than by color processing were isolated (Table 3). The mid-fusiform and inferior temporal gyri were more strongly recruited for structural processing (i.e., shape matching) than for color processing (i.e., color matching; Figure 5). Color processing did not recruit any VPS regions more strongly than structural processing (but see the Appendix for non-VPS regions that were activated in this experiment).

Table 3.

Fusiform and Inferior Temporal Activation in Experiment 3

| Region | BA | Size | MNI x | MNI y | MNI z | Max. Z value |
|---|---|---|---|---|---|---|
| Shape Matching > Color Matching | | | | | | |
| R Fusiform | 37 | 48 | +36 | −40 | −24 | 3.75 |
| L Inferior temporal | 37 | 613 | −54 | −70 | −12 | 5.05 |
| R Fusiform | 37 | 474 | +42 | −62 | −14 | 5.03 |
| SS-modulated Regions | | | | | | |
| R Fusiform | 37 | 382 | +34 | −42 | −22 | 4.30 |
| L Fusiform | 37 | 305 | −34 | −46 | −24 | 4.09 |

BA = Brodmann’s area; R = right; L = left.

Figure 5.

Activation maps for the analysis of processing type (structural vs. color processing) and similarity modulation in Experiment 3 (z > 3.3).

To determine whether mid-fusiform regions activated by structural descriptions are implicated in fine differentiation of shape, a second analysis was conducted in which similarity level was treated as a single regressor with three levels (SS1–SS3 for the shape-matching runs, CS1–CS3 for the color-matching runs). The mid-fusiform regions that were modulated by structural similarity overlap with the regions recruited for structural processing, whereas the regions modulated by color similarity do not (Figure 5). In fact, no fusiform regions were modulated by color similarity. Repeated measures ANOVAs on percent signal change from resting baseline as a function of processing type (SS, CS) and similarity level (S1–S3) were conducted in the two structural similarity modulated regions in Table 3. These regions, however, did not show significant effects of ascending similarity.

This experiment showed that the mid-fusiform gyrus and the surrounding inferior temporal cortex were more strongly activated when participants performed shape matching than when they performed color matching on the exact same stimuli. Because the perceptual input was identical for both tasks, the mid-fusiform activation reflects demands on processing object structure rather than being driven by specific stimulus properties in a bottom–up fashion.

COMPARISON ACROSS EXPERIMENTS 1 TO 3

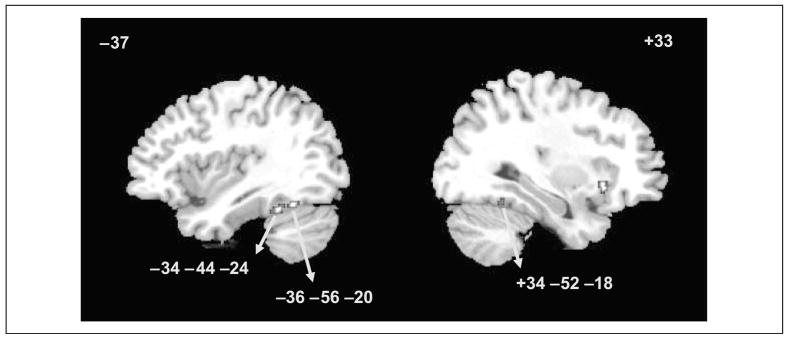

The overlapping voxels for structural processing across all three experiments were determined (Figure 6). The bilateral mid-fusiform gyrus was commonly activated, with more voxels in the left than in the right hemisphere. These foci of activation are very much in line with previous studies showing parametric modulation of fMRI signal by degree of structural similarity during object discrimination (Joseph & Farley, 2004; Joseph & Gathers, 2003). The right insula was also commonly activated across all three experiments.

Figure 6.

Common voxels activated by structural processing in all three experiments: object versus line matching in Experiment 1, object or 3-D shape matching versus line matching in Experiment 2, and shape matching versus color matching in Experiment 3 (z > 2.58).

GENERAL DISCUSSION

The first hypothesis of the present study was confirmed in that the bilateral mid-fusiform gyrus was activated for structural processing across three different experiments. The processing that occurred in this region was not strictly perceptual in nature given that matching of 2-D line configurations or colors embedded in objects did not produce significant mid-fusiform activation. In addition, mid-fusiform activation could not be explained by task difficulty for object matching because the object and line configuration-matching tasks were equated for difficulty in Experiment 1 and in some conditions of Experiments 2 and 3. The second hypothesis was also confirmed in two of the three experiments: Similarity modulation emerged in the left mid-fusiform in Experiment 1, in the bilateral mid-fusiform in Experiment 2, and not at all in Experiment 3. The third hypothesis was also confirmed: Processing of object structure but not object color activates the mid-fusiform gyrus in Experiment 3. This is in line with recent proposals that the requirement to disambiguate objects that are similarly shaped is a stronger influence on mid-fusiform activation than processing specific stimulus properties (Rogers et al., 2005; Gerlach et al., 1999, 2004; Joseph & Gathers, 2003; Gauthier et al., 1999).

An alternative explanation for the strong bilateral mid-fusiform gyrus response to objects and 3-D shapes is that these stimuli are more complex than line configurations. However, the similarity modulation results show that the mid-fusiform gyrus responds more strongly to higher levels of structural similarity than to lower levels of similarity. Importantly, the low structural similarity pairs are as complex as the high structural similarity pairs (see Figure 1), but the high similarity pairs activate the mid-fusiform gyrus more strongly (Figures 3 and 4). Therefore, complexity of the stimuli cannot explain the greater activation by high similarity pairs because high and low similarity pairs are equally complex.

Although the left mid-fusiform was most commonly implicated for structural processing across the three experiments (Figure 6), there may be a difference between left and right fusiform activation. In Experiment 2, the left mid-fusiform gyrus was more strongly activated for object matching, but the right mid-fusiform gyrus was more strongly activated for matching 3-D shapes. There are at least two different, but not mutually exclusive, explanations for this lateralization effect. First, the left mid-fusiform activation for objects may be related to the fact that objects are meaningful and nameable entities with strong semantic associations. The left hemisphere activation may reflect automatic processing of semantic information associated with objects even though semantic processing may not have been required by the matching task. A related idea is that the left mid-fusiform gyrus may process structural descriptions that are specifically associated with objects, whereas the right mid-fusiform gyrus may process 3-D object structure that is not related to meaningful objects. Second, previous studies have found a left–right distinction in fusiform regions, such that the left fusiform shows fMRI adaptation for different viewpoints or exemplars. In contrast, the right hemisphere (Burgund & Marsolek, 2000) or, more specifically, the right fusiform gyrus (Simons, Koutstaal, Prince, Wagner, & Schacter, 2003; Vuilleumier, Henson, Driver, & Dolan, 2002; Koutstaal et al., 2001) does not show viewpoint or exemplar adaptation. Structural descriptions, as originally conceived by Marr and Nishihara (1978), are abstract representations of object structure that are also viewpoint independent. Consequently, the structural processing in left mid-fusiform regions may reflect the processing of abstract, viewpoint-independent descriptions. The right mid-fusiform activation for 3-D shapes in the present study could be explained by the need to match the shapes across different viewpoints (which was only a minimal demand for the object stimuli).

Experiment 3 showed that activation in the mid-fusiform is not driven in a bottom–up fashion by specific stimulus properties, but rather by the demand to process structural (or shape) information. In addition, in Experiments 1 and 2, the demand to differentiate objects that were high in structural similarity induced greater fMRI signal in the mid-fusiform gyrus. This finding, coupled with previous findings (Joseph & Farley, 2004; Joseph & Gathers, 2003), suggests that mid-fusiform regions are recruited to make fine distinctions among structurally similar objects or shapes. However, similarity modulation of fMRI signal did not emerge in Experiment 3. We suggest that the processing type effect (i.e., shape versus color matching) likely overwhelmed the effects of structural similarity. Hence, similarity modulation did not emerge as a strong factor in Experiment 3. The behavioral results also bear this out. In Experiment 3 (but not in Experiments 1 and 2), the effect of processing type was much stronger on both reaction time and error performance than was the effect of similarity, as indicated by a comparison of F values for these effects. In Experiments 1 and 2, the effect of similarity was stronger than the effect of processing type on behavior. Similarity modulation of fMRI signal seems to emerge only when the behavioral effects of similarity are stronger than the behavioral effects of processing type. Joseph and Farley (2004) demonstrated a similar outcome in that measures of response bias, which showed the strongest behavioral effect of similarity, predicted fMRI signal in the mid-fusiform gyrus, whereas reaction time, errors, and sensitivity did not.

In conclusion, the current study adds to the growing body of research showing that the bilateral mid-fusiform gyrus is engaged when objects are processed at the level of structural descriptions, which are abstract, modality-independent, and viewpoint-independent representations of object shape that mediate between perceptual and semantic descriptions and can be accessed through visual or tactile modalities (e.g., Amedi, Malach, Hendler, Peled, & Zohary, 2001). Such representations are critical for making fine distinctions among visually similar stimuli, as with members of the same taxonomic categories and faces. Faces share the same basic shape or structure; thus, individuating faces from each other requires discriminating subtle differences in the arrangement of features (i.e., detecting differences in spacing of features, or second-order configural information) or discriminating differences in the size or shape of components (i.e., detecting differences in the features of a face). The present findings also demonstrate that the stimulus properties themselves do not drive the activation patterns in a bottom–up manner because the mere presentation of 3-D objects was not sufficient to activate the mid-fusiform gyrus. Instead, demands on processing structural descriptions predicted mid-fusiform activation. Additional questions for future research include distinguishing semantic and structural descriptions more clearly to separate the contribution of each processing type to mid-fusiform activation. This approach could lead to a better understanding of the factors that contribute to left versus right ventral occipito-temporal activation in object discrimination.

Acknowledgments

This research was supported by grants from the National Science Foundation (BCS-0224240) and the National Institutes of Health (R01 MH063817; P20 RR015592). We thank Agnes Bognar, Christine Corbly, and Gerry Piper for their technical assistance.

Appendix

Additional brain regions activated in Experiments 1 through 3

| Region | BA | Cluster Size | MNI x | MNI y | MNI z | Maximum Z value |
|---|---|---|---|---|---|---|
| Experiment 1: Object > Line Configuration | | | | | | |
| R Anterior fusiform | 20 | 39 | +40 | −16 | −24 | 2.98 |
| R Inf. occipital | 17/18 | 76 | +28 | −100 | 0 | 3.24 |
| L Middle occipital | 17/18 | 196 | −18 | −100 | +2 | 3.77 |
| R Inf. frontal | 45 | 542 | +42 | +28 | +16 | 4.12 |
| L Inf. frontal | 45 | 195 | −50 | +38 | +2 | 3.30 |
| R Inf. orbito-frontal | 11 | 147 | +24 | +24 | −14 | 3.73 |
| R Angular gyrus | 7 | 279 | +34 | −62 | +44 | 3.78 |
| R Cerebellum | | 119 | +42 | −70 | −28 | 3.21 |
| Vermis | | 227 | 0 | −56 | −32 | 3.40 |
| L Globus pallidus | | 191 | −14 | +8 | +2 | 3.41 |
| R Globus pallidus | | 39 | +14 | +6 | +4 | 2.93 |
| Experiment 2: Object > Line Configuration | | | | | | |
| L Inf. frontal | 45 | 50 | −46 | +26 | +16 | 3.02 |
| R Insula | 48 | 44 | +38 | +18 | −8 | 3.17 |
| L Cerebellum | | 72 | −26 | −46 | −32 | 3.19 |
| Experiment 2: 3-D Shape > Line Configuration | | | | | | |
| L Calcarine sulcus | 18 | 212 | −12 | −72 | +18 | 3.29 |
| R Lingual gyrus | 30 | 57 | +12 | −40 | −10 | 3.06 |
| L Superior occipital | 17 | 62 | −10 | −94 | +4 | 3.01 |
| L Parahippocampal | 30 | 35 | −16 | −28 | −10 | 2.96 |
| L Inf. frontal | 45 | 36 | −54 | +22 | +22 | 3.30 |
| R Postcentral gyrus | 43 | 37 | +62 | −6 | +32 | 3.16 |
| R Insula | 47 | 121 | +38 | +22 | −6 | 4.34 |
| L Insula | 48 | 75 | −34 | +2 | +14 | 3.17 |
| L Temporal pole | 34 | 188 | −18 | +4 | −20 | 3.71 |
| L Inf. parietal | 40 | 85 | −40 | −44 | +34 | 3.35 |
| L Superior parietal | 7 | 147 | −28 | −70 | +40 | 3.55 |
| R Cerebellum | | 63 | +14 | −46 | −26 | 3.16 |
| Vermis | | 64 | 0 | −54 | −40 | 3.20 |
| Experiment 2: Object > 3-D Shape | | | | | | |
| R Inf. frontal | 45 | 47 | +58 | +34 | +4 | 3.24 |
| Experiment 2: Structural Processing: [(PSS > P) OR (PS > P)] − [(PSS > Fixation) AND (PS > Fixation) AND (P > Fixation)] | | | | | | |
| L Insula | 47 | 157 | −38 | +16 | −8 | 3.36 |
| R Insula | 47 | 30 | +32 | +22 | +2 | 2.94 |
| L Putamen | | 64 | −24 | −8 | +2 | 3.28 |
| L Precentral | 6 | 389 | −60 | −24 | +60 | 3.64 |
| R Postcentral | | 31 | +66 | −2 | +38 | 3.28 |
| L Cingulate | 24 | 65 | −2 | +16 | +40 | 3.46 |
| L Inf. parietal | 40 | 51 | −32 | −36 | +38 | 3.31 |
| L Cingulate | | 39 | −2 | −6 | +50 | 3.56 |
| R Precentral | 6 | 73 | +46 | −6 | +58 | 3.40 |
| L Postcentral | 4 | 31 | −44 | −24 | +68 | 4.21 |
| Experiment 3: Shape Matching > Color Matching | | | | | | |
| R Middle occipital | 19 | 147 | +30 | −74 | +28 | 4.28 |
| R Insula | 47 | 377 | +32 | +22 | +2 | 5.81 |
| L Insula | 47 | 50 | −30 | +20 | −2 | 4.57 |
| L Inf. frontal | 44 | 259 | −42 | +10 | +28 | 4.69 |
| R Precentral gyrus | 44 | 537 | +42 | +6 | +30 | 4.80 |
| R Precentral gyrus | 6 | 80 | +36 | −4 | +50 | 4.28 |
| R Supramarginal | 32 | 401 | +4 | +14 | +46 | 4.65 |
| L Superior parietal | 7 | 225 | −28 | −66 | +52 | 4.28 |
| R Superior parietal | 7 | 223 | +30 | −62 | +52 | 4.28 |
| L Cerebellum | | 142 | −8 | −76 | −26 | 4.56 |
| R Cerebellum | | 162 | +14 | −72 | −24 | 4.25 |
| Experiment 3: Color Matching > Shape Matching | | | | | | |
| L Inf. parietal | 40 | 54 | −60 | −42 | +46 | 4.11 |
| R Temporal pole | 38 | 33 | +36 | +18 | −32 | 3.78 |
| L Temporal pole | 38 | 118 | −30 | +14 | −28 | 4.04 |

BA = Brodmann’s area; R = right; L = left; Inf. = inferior.

References

- Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nature Neuroscience. 2001;4:324–330. doi: 10.1038/85201. [DOI] [PubMed] [Google Scholar]

- Arguin M, Bub D, Dudek G. Shape integration for visual object recognition and its implication in category-specific visual agnosia. Visual Cognition. 1996;3:221–275. [Google Scholar]

- Biederman I. Recognition-by-components: A theory of human image understanding. Psychological Review. 1987;94:115–147. doi: 10.1037/0033-295X.94.2.115. [DOI] [PubMed] [Google Scholar]

- Bruce V, Humphreys GW. Recognizing objects and faces. Visual Cognition. 1994;1:141–180. [Google Scholar]

- Burgund ED, Marsolek CJ. Viewpoint-invariant and viewpoint-dependent object recognition in dissociable neural subsystems. Psychonomic Bulletin & Review. 2000;7:480–489. doi: 10.3758/bf03214360. [DOI] [PubMed] [Google Scholar]

- Cant JS, Goodale MA. Attention to form or surface properties modulates different regions of occipitotemporal cortex. Cerebral Cortex. 2007;17:713–731. doi: 10.1093/cercor/bhk022. [DOI] [PubMed] [Google Scholar]

- Cohen L, Lehericy S, Chochon F, Lemer C, Rivaud S, Dehaene S. Language-specific tuning of visual cortex? Functional properties of the visual word form area. Brain. 2002;125:1054–1069. doi: 10.1093/brain/awf094. [DOI] [PubMed] [Google Scholar]

- Damasio AR, Damasio H, Van Hoesen GW. Prosopagnosia: Anatomic basis and behavioral mechanisms. Neurology. 1982;32:331–341. doi: 10.1212/wnl.32.4.331. [DOI] [PubMed] [Google Scholar]

- Damasio H, Grabowski TJ, Tranel D, Hichwa RD, Damasio AR. A neural basis for lexical retrieval. Nature. 1996;380:499–505. doi: 10.1038/380499a0. [DOI] [PubMed] [Google Scholar]

- Engel S, Glover GH, Wandell B. Retinotopic organization in human visual cortex and the spatial precision of functional MRI. Cerebral Cortex. 1997;7:181–192. doi: 10.1093/cercor/7.2.181. [DOI] [PubMed] [Google Scholar]

- Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392:598–601. doi: 10.1038/33402. [DOI] [PubMed] [Google Scholar]

- Gauthier I, Tarr MJ, Anderson AW, Skudlarski P, Gore JC. Activation of the middle fusiform “face area” increases with expertise in recognizing novel objects. Nature Neuroscience. 1999;2:568–573. doi: 10.1038/9224. [DOI] [PubMed] [Google Scholar]

- Gerlach C, Aaside CT, Humphreys GW, Gade A, Paulson OB, Law I. Brain activity related to integrative processes in visual object recognition: Bottom–up integration and the modulatory influence of stored knowledge. Neuropsychologia. 2002;40:1254–1267. doi: 10.1016/s0028-3932(01)00222-6. [DOI] [PubMed] [Google Scholar]

- Gerlach C, Law I, Gade A, Paulson OB. Perceptual differentiation and category effects in normal object recognition. Brain. 1999;122:2159–2170. doi: 10.1093/brain/122.11.2159. [DOI] [PubMed] [Google Scholar]

- Gerlach C, Law I, Paulson OB. Structural similarity and category-specificity: A refined account. Neuropsychologia. 2004;42:1543–1553. doi: 10.1016/j.neuropsychologia.2004.03.004. [DOI] [PubMed] [Google Scholar]

- Gerlach C, Law I, Paulson OB. Shape configuration and category specificity. Neuropsychologia. 2006;44:1247–1260. doi: 10.1016/j.neuropsychologia.2005.09.010. [DOI] [PubMed] [Google Scholar]

- Hayworth KJ, Biederman I. Neural evidence for intermediate representations in object recognition. Vision Research. 2006;46:4024–4031. doi: 10.1016/j.visres.2006.07.015. [DOI] [PubMed] [Google Scholar]

- Hertzog C, Rovine M. Repeated-measures analysis of variance in developmental research: Selected issues. Child Development. 1985;56:787–809. [PubMed] [Google Scholar]

- Joseph JE. Color processing in object verification. Acta Psychologica. 1997;97:95–127. doi: 10.1016/s0001-6918(97)00026-7. [DOI] [PubMed] [Google Scholar]

- Joseph JE. Functional neuroimaging studies of category specificity in object recognition: A critical review and meta-analysis. Cognitive, Affective and Behavioral Neuroscience. 2001;1:119–136. doi: 10.3758/cabn.1.2.119. [DOI] [PubMed] [Google Scholar]

- Joseph JE, Farley AB. Cortical regions associated with different aspects of performance in object recognition. Cognitive, Affective & Behavioral Neuroscience. 2004;4:364–378. doi: 10.3758/cabn.4.3.364. [DOI] [PubMed] [Google Scholar]

- Joseph JE, Gathers AD. Natural and manufactured objects activated the “fusiform face area”. NeuroReport. 2002;13:935–938. doi: 10.1097/00001756-200205240-00007. [DOI] [PubMed] [Google Scholar]

- Joseph JE, Gathers AD. Effects of structural similarity on neural substrates for object recognition. Cognitive, Affective, & Behavioral Neuroscience. 2003;3:1–16. doi: 10.3758/cabn.3.1.1. [DOI] [PubMed] [Google Scholar]

- Joseph JE, Partin DJ, Jones KM. Hypothesis testing for selective, differential, and conjoined brain activation. Journal of Neuroscience Methods. 2002;118:129–140. doi: 10.1016/s0165-0270(02)00122-x. [DOI] [PubMed] [Google Scholar]

- Joseph JE, Proffitt DR. Semantic versus perceptual influences of color in object recognition. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1996;22:407–429. doi: 10.1037//0278-7393.22.2.407. [DOI] [PubMed] [Google Scholar]

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17:4301–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Koutstaal W, Wagner AD, Rotte M, Maril A, Buckner RL, Schacter DL. Perceptual specificity in visual object priming: Functional magnetic resonance imaging evidence for a laterality difference in fusiform cortex. Neuropsychologia. 2001;39:184–199. doi: 10.1016/s0028-3932(00)00087-7. [DOI] [PubMed] [Google Scholar]

- Loftus GR, Masson MEJ. Using confidence intervals in within-subject designs. Psychonomic Bulletin & Review. 1994;1:476–490. doi: 10.3758/BF03210951. [DOI] [PubMed] [Google Scholar]

- Malach R, Reppas JB, Benson RR, Kwong KK, Jiang H, Kennedy WA, et al. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proceedings of the National Academy of Sciences, USA. 1995;92:8135–8139. doi: 10.1073/pnas.92.18.8135. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marr D. Vision: A computational investigation into human representation and processing of visual information. San Francisco: Freeman; 1982. [Google Scholar]

- Marr D, Nishihara HK. Representation and recognition of the spatial organization of three-dimensional shapes. Proceedings of the Royal Society of London, Series B. 1978;200:269–294. doi: 10.1098/rspb.1978.0020. [DOI] [PubMed] [Google Scholar]

- Mintun MA, Fox PT, Raichle ME. A highly accurate method of localizing regions of neuronal activation in the human brain with positron emission tomography. Journal of Cerebral Blood Flow and Metabolism. 1989;9:96–103. doi: 10.1038/jcbfm.1989.13. [DOI] [PubMed] [Google Scholar]

- O’Brien R, Kaiser M. MANOVA method for analyzing repeated measures designs: An extensive primer. Psychological Bulletin. 1985;97:316–333.

- Op de Beeck H, Beatse E, Wagemans J, Sunaert S, Van Hecke P. The representation of shape in the context of visual object categorization tasks. Neuroimage. 2000;12:28–40. doi: 10.1006/nimg.2000.0598.

- Pins D, Meyer ME, Humphreys GW, Boucart M. Neural correlates of implicit object identification. Neuropsychologia. 2004;42:1247–1259. doi: 10.1016/j.neuropsychologia.2004.01.005.

- Polk TA, Stallcup M, Aguirre GK, Alsop D, D’Esposito M, Detre JA, et al. Neural specialization for letter recognition. Journal of Cognitive Neuroscience. 2002;14:145–159. doi: 10.1162/089892902317236803.

- Price CJ, Noppeney U, Phillips J, Devlin JT. How is the fusiform gyrus related to category-specificity? Cognitive Neuropsychology. 2003;20:561–574. doi: 10.1080/02643290244000284.

- Rogers TT, Hocking J, Mechelli A, Patterson K, Price C. Fusiform activation to animals is driven by the process, not by the stimulus. Journal of Cognitive Neuroscience. 2005;17:434–445. doi: 10.1162/0898929053279531.

- Schacter DL, Reiman E, Uecker A, Polster MR, Yun LS, Cooper LA. Brain regions associated with retrieval of structurally coherent visual information. Nature. 1995;376:587–590. doi: 10.1038/376587a0.

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, et al. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science. 1995;268:889–893. doi: 10.1126/science.7754376.

- Simons JS, Koutstaal W, Prince S, Wagner AD, Schacter DL. Neural mechanisms of visual object priming: Evidence for perceptual and semantic distinctions in fusiform cortex. Neuroimage. 2003;19:613–626. doi: 10.1016/s1053-8119(03)00096-x.

- Starrfelt R, Gerlach C. The visual what for area: Words and pictures in the left fusiform gyrus. Neuroimage. 2007;35:334–342. doi: 10.1016/j.neuroimage.2006.12.003.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme; 1988. p. 122.

- Vuilleumier P, Henson RN, Driver J, Dolan RJ. Multiple levels of visual object constancy revealed by event-related fMRI of repetition priming. Nature Neuroscience. 2002;5:491–499. doi: 10.1038/nn839.

- Wang G, Tanifuji M, Tanaka K. Functional architecture in monkey inferotemporal cortex revealed by in vivo optical imaging. Neuroscience Research. 1998;32:33–46. doi: 10.1016/s0168-0102(98)00062-5.

- Xiong J, Gao JH, Lancaster JL, Fox PT. Clustered pixels analysis for functional MRI activation studies of the human brain. Human Brain Mapping. 1995;3:287–301.