Summary:

A key challenge in clinical proteomics of cancer is the identification of biomarkers that could allow detection, diagnosis and prognosis of the diseases. Recent advances in mass spectrometry and proteomic instrumentations offer unique chance to rapidly identify these markers. These advances pose considerable challenges, similar to those created by microarray-based investigation, for the discovery of pattern of markers from high-dimensional data, specific to each pathologic state (e.g. normal vs cancer). We propose a three-step strategy to select important markers from high-dimensional mass spectrometry data using surface enhanced laser desorption/ionization (SELDI) technology. The first two steps are the selection of the most discriminating biomarkers with a construction of different classifiers. Finally, we compare and validate their performance and robustness using different supervised classification methods such as Support Vector Machine, Linear Discriminant Analysis, Quadratic Discriminant Analysis, Neural Networks, Classification Trees and Boosting Trees. We show that the proposed method is suitable for analysing high-throughput proteomics data and that the combination of logistic regression and Linear Discriminant Analysis outperform other methods tested.

Keywords: mass spectrometry, Wilcoxon’s test, logistic regression, supervised classifications

Introduction

Over recent years, scientific knowledge in cancer biology has progressed considerably. However, the practical impact of this research on screening, diagnosis, prognosis and monitoring remains limited. New methods must be developed to identify the physiological and pathological mechanisms in the origin and spread of tumors. Such approaches are essential for the discovery, identification and validation of new bio-markers. Recently, progress in mass spectrometry system, such as surface enhanced laser desorption/ionization time-of-flight (SELDI-TOF), has opened up interesting perspectives for identifying these markers or establishing specific protein profiles that may be used for cancer diagnosis (Adam, 2002; Petricoin, 2002; Zhang, 2004; Solassol, 2006). In this work, we considered SELDI raw data in attempt to discriminate cancer from benign diseases. After protein ionization and desorption with a laser, the mass spectrum is represented by the intensity of the proteins fixed on the chip (y-coordinate) as a function of the mass-to-charge (m/z) ratio (x-coordinate). From the spectra, the initial pre-processing steps are (a) the normalization and calibration to limit any bias caused by the instruments or the operator, (b) baseline subtraction, (c) peak detection, and (d) peak alignment to allow the same x-coordinate in all the spectra. One of the best challenges and the most important steps is then to reduce the high- dimension of these spectra to extract the discriminatory features or the best combination of markers capable of differentiating between two classes of interest (Duda, 2001). For this last step, spectra are processed using computerized algorithms based on multivariate statistical analyses. Several different mathematical algorithms have been applied to elucidate statistically significant differences such as cluster analysis, genetic algorithms, discriminate analysis, neural networks or hierarchical classification (Bauer, 1999; Petricoin, 2002; Vlahou, 2003; Wu, 2003).

In this work, we developed a three-step strategy to extract markers or combination of markers from high-dimensional SELDI data. From the pre-processing step, we detected 228 peaks, but among them some were not characteristic of the disease and were identically expressed in the two considered groups (cancer and benign disease). To allow an optimal identification of differentially expressed peaks, a preselection strategy of discriminating biomarkers combinations was chosen, rather than a simple filtering of the data by only a two-sided statistical test which was not taking into account the biomarkers inter-correlation. Next, we focused on different supervised classification methods due to the consideration that an a priori information coming from the training sample can allow the identification of the optimal diagnostic combinations. We compared the performance and the robustness of these various supervised classification methods and discussed their respective strengths and weaknesses.

Data Set and Pre-Processing

The study involved a total of 170 serum samples collected at the Arnaud de Villeneuve University Hospital (Montpellier, France) with institutional approval: 147 patients with pathologically confirmed cancer and 23 patients suffering from a benign disease in the related organ. Whole blood was collected during fasting and all samples were processed within 1 h of collection and rapidly frozen at −80 °C before analysis. An anion-exchange fractionation procedure was performed before surface-enhanced laser desorption/ionisation time-of-flight mass spectrometry analysis. Serum samples were thus separated into six different pH gradient elution fractions, referred as to F1, F2, F3, F4, F5 and F6. Each fraction was randomly applied to a weak cation exchange ProteinChip array surface (CM10) in a 96-well format. F2 was not subjected to analysis due to the weak number of peaks detected in preliminary experiments. Arrays were read on a Protein Biological System II ProteinChip reader (Ciphergen Biosystem). Peak detection was performed using the ProteinChip Biomarker software (version 3.2.0, Ciphergen Biosystem Inc.). Spectra were background subtracted and the peak intensities were normalized to the total ion current of m/z between 2.5 and 50 kDa. Automatic peak detection was performed in the range of 2.5 to 50 kDa with the following settings: i) signal-to-noise ratio at 4 for the first pass and 2 for the second pass, ii) minimal peak threshold at 15% of all spectra, iii) cluster mass window at 0.5% of mass. The resulting CSV file containing absolute intensity and m/z ratio was exported into Microsoft Excel (Microsoft, Redmont) for subsequent analysis.

Biomarkers Selection

Initially, a selection of the most discriminating biomarkers was carried out. The 228 peaks detected by Ciphergen software are aligned. A peak is defined as discriminating when the intensities of the individuals of the cancer group are significantly different than the reference group. Initially the peaks differentially expressed in the two groups were selected using the two-sided Wilcoxon’s test. After this preselection, a combination of discriminating peaks is required by using a logistic regression (Pepe, 2006).

Wilcoxon’s test

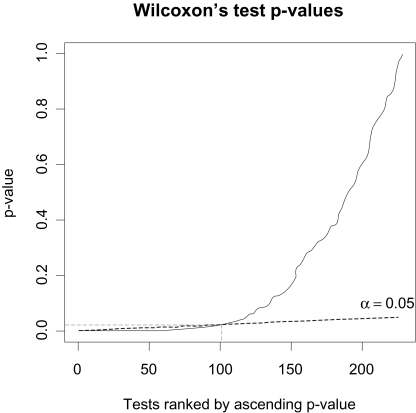

The assumption that each peak intensity follows a normal distribution has been rejected using a Shapiro-Wilk normality test in each group. A two-sided Wilcoxon’s test was employed to test the H0 assumption of equality of the intensities in the two groups. We correct the loss of power induced by multiple tests by the false discovery rate (FDR) approach (Verhoeven, 2005; Benjamini, 1995). FDR is the expected proportion of type I errors among all significant results (V/r), where V is the number of type I errors (“false discoveries”), and r is the number of significant tests. A procedure to control FDR at level α was proposed by Benjamini and Hochberg (1995). That consists initially of ranking by ascending order the 228 p-values that we note now by : p(1) ≤ p(2) ≤ . . . ≤ p(228), and H(i) denote the null hypothesis corresponding to p(i). The second stage consists of the search for k which is largest i for which:

This resulting p-value p(k) is the threshold p-value for each test taken individually, such as we reject all the null assumptions H(1), …, H(k) (Fig. 1). The null assumption has been rejected for k = 100 biomarkers.

Figure 1.

Application of Benjamini and Hochberg FDR control on the 228 Wilcoxon’s test p-values ranked by ascending order.

Binary logistic regression

The Wilcoxon’s retains the most discriminating peaks. On the other hand, the logistic regression combines several biomarkers to find the best model allowing classification in cancer/control groups. Let us consider the diagnosis variable Y to be modelled, which takes two values:

Y = 1 for all the individuals that belong to the cancer group.

Y = 0 for all the individuals that belong to the control group.

The outputs to be modelled Yi|xi follows a Bernouilli distribution of parameter πi = P(Yi = 1|xi), where xi is a vector line of the actual values for the explanatory variables. The logit of the multiple logistic regression (Hosmer, 2000) is given by

where (x1,…, xp) is a collection of p biomarkers selected in the model.

The classical model-building strategy is to find the most parsimonious model that explains the data. This provides a general and numerically stable model. To study the robustness of the logistic regression predictor’s selection, the two strategies of models-building forward and stepwise were employed. The significance level of the score chi-square for entering an effect into the model was fixed at 0.05 in the forward and stepwise logistic regressions. A significance level of 0.05 is considered in the Wald chi-square test to test if an effect must stay in the stepwise logistic regression which was implemented with SAS software (version 8.1). A weight of 1 and 147/23 was affected to the cancer and control groups, respectively. This weighting was employed because the control sample is subsampled.

The estimated logit in the forward selection is given by the following expression:

The estimated logit in the stepwise selection is given by the following expression:

where designed the jth peak in the original biomarkers matrix which contains 228 peaks and Fk indicates that this peak was detected in the kth fraction. The AUC for the two models were 0.988 for the forward strategy and 0.995 for the stepwise strategy. The forward and stepwise logistic regression modelled the logit with 6 and 8 biomarkers ranging from 3 to 48 kDa. The most stable peaks were the 5 common peaks of the two models i.e. , , , , . The model with these peaks is estimated by:

for an AUC of 0.973.

These three models produced above were used in the comparison of the supervised classification techniques. For the moment, the AUC is the only criterion which makes it possible to evaluate the model in term of classification.

Overview of the Supervised Classifications Used

Supervised classification techniques consist in a definition of a classification rule based on a training set for which the true class-label is known. The matrix x denotes the biomarkers matrix with n = 169 lines (i.e. individuals) and p columns, where p is the number of biomarkers retained in the different logistic regressions. Also, x1,…, xp denote the p biomarkers contained in the columns of x. The training data consist of N pairs {(x(1), y(1)), (x(2), y(2)), …, (x(N), y(N))} with x(i) ∈ ℜp and the N class-labels corresponding y(i) ∈{ −1,1}. In the training set, the true class-label y(i) adopted in the next sections are the following:

y(i) = −1, if the sample point x(i) belongs to the cancer group,

y(i) = 1, if the sample point x(i) belongs to the control group.

where, the ith line of the matrix x(i) represents the p coordinates of the ith training sample point.

Support vector machine

A Support Vector Machine (SVM) is a supervised learning technique that constructs an optimal separating hyperplane from the training set with an aim of classifying the test set (Vapnik, 1998; Hastie, 2001; Lee, 2004; Li, 2004). When the data are not linearly separable, one solution for the classification problem is to map the data into the feature space that is usually a higher-dimensional space using a function φ usually non linear. Thus, for x(i) the ith vector in the original input space φ(x(i)) is the corresponding vector in the feature space. The value of the kernel function K on (x(i), x(j)) computes the inner product of φ(x(i)) and φ(x(j)) in the feature space. The radial basis kernel is employed in this article. Its formulation is the following:

radial basis: K(x(i), x(j)) = exp,(−‖x(i)–x(j)‖2/c),

where c > 0 is a scalar.

The search of the discriminant function f (x) = φ(x)T β + β0 is formulated into the following optimization problem

subject to ξi ≥ 0,y(i) (φ(x(i))T β + β0) ≥ 1–ξi,∀l, where γ > 0 is a constant and ξi are the slack variables. This optimization problem is solved by maximizing the Lagrangian dual objective function. The solution β̂ for β has the form of linear combination of the terms y(i) φ (x(i)) and β̂0 is the common value that solve y(i)[φ(x(i))Tβ̂+ β̂0] = 1 for each i. The decision function can be written as

In other words, if Ĝ(x(j)) = −1 then j th observation x(j) of the sample test has a class-label y(j) equal to −1 and will belong to the cancer group, else the class-label is equal to 1 and the individual x(j) will belong to the control group.

Linear discriminant analysis and quadratic discriminant analysis

Suppose that fk (x) is the density of the observations x in the k th class, and πk denote the prior probability of class k, where . The Bayes theorem gives us

The classification rule for the test set is to affect the observation x′ at the k th class with maximal probability P(Y = k|X = x'). For linear and quadratic discriminant analysis, the densities fk are modelled as p-multivariate Gaussian (Webb, 2002; Lee, 2004). To compare the two classes k and l, the log-ratio was defined as

- Quadratic discriminant analysis (QDA) is the general discriminant problem, where the decision boundary {x: δk (x) = δl (x)} between the two classes is a quadratic equation in x. The quadratic discriminant function is defined as

Linear discriminant analysis (LDA) arises when the covariance matrix ∑k and ∑l are assume equals ∑k = ∑, ∀k. Then, the decision boundary between classes k and l is an equation linear in x in a p-dimensions hyperplane.

In practice, the true parameters of the Gaussian distributions are not known, but we can estimate them using the training set. Also, an estimate δ̂k of δk can be obtained and the decision rule can be written as

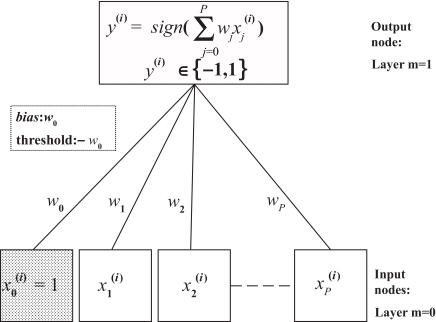

Single-layer neural network

Artificial neural networks (ANN) are learning algorithms that are modelled on the neural activity of the brain (Hastie, 2001; Dreyfus, 2002; Chen, 2004; Lee, 2004). Each node represents a neuron, and the connections represent the synapses (Fig. 2). A constant entry x0 = 1N is included in the whole perceptron entries, affected of a weight w0. The constant w0 is often referred as the bias and –w0 is called the threshold. Also, x = (x0, x1, x2, …, xp) denote the input variables such as xj ∈ ℜN; w = (w0, w1, w2, …, wp) denote the associated weight vector. The training set is used to find the appropriate values of the synaptic weights vector (w0, w1, w2, …, wp) in neural networks to solve the classification problem. If the two classes are linearly separable, it exists a decision boundary {x: wT x = 0}. If wT x > 0, is in the first class and if wTx > 0, x is in the second class. A decision rule Ĝ(x) can be defined in terms of a linear function of the input x as follows

where sign(z) denotes the sign of the quantity z. Let the risk function R(w) measures the success of a decision rule by comparing the true labels y(i) with the predicted labels Ĝ(x(i)). The weight vector w is chosen to minimize the risk function. A current choice for the risk function is the sum of squared errors. The gradient descent procedure can be used to find optimum weights ŵ in term of risk, and the decision rule can be written as

Figure 2.

Schematic of a single-layer neural network.

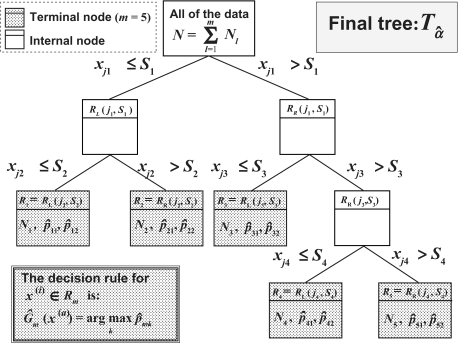

Classification trees

A classification tree is a multi-stage decision process that divides successively the whole of the N training sample observations in two homogeneous segments with regard to the class-labels by using the p biomarkers x1, …, xp (Hastie, 2001;Yang, 2005). The algorithm needs to select automatically a splitting rule for each internal node. This means determining a splitting variable with an associated threshold Sl that has been used to partition the data set at each node in two regions : RL(jl, sl) = {x|xjl ≤ sl} and RR(jl, sl) = {x|xjl > sl}. For each splitting variable xjl, the threshold sl is determined by scanning through all of the inputs , and the determination of the best pair (jl, sl) in term of maximization of the decrease in the node impurity function. In this article, the decrease in the node impurity function is expressed according to the Gini criterion. The splitting process is repeated on each of the two resulting regions of the previous step, and this until the stopping rule stops the process. The splitting process (Nakache, 2003) is stopped when the segment is pure (it contains subjects of the same class), if it contains identical observations, or if it contains a small number of subjects. Then this large tree is pruned using cost-complexity pruning. The final tree retained is noted by Tâ (Fig. 3). For the K class (here K = 2) and the M nodes in the final tree Tâ, the proportion of class k observations in terminal node m was computed as

where Nmis the size of the training sample in the region Rm. For x ∈ Rm the decision rule is to affect x in the majority class in node m, and it can be written as

In other words, in the particular case where K = 2 for each final node m of the final tree the assignment rule can be also written in the following term:

Figure 3.

Schematic of an example of classification tree with 5 terminal nodes.

If p̂mk ≥ 0 5 then the individuals of the node m are assigned to the class k, else they are assigned to the remaining class.

Boosting trees

The purpose of boosting is to apply M times the weak classification algorithm on the weighted training data, so as to produce a sequence of weak classifiers Gm(x), m = 1,2, …, M (Hastie, 2001; Fushiki, 2006). Then, a strong classifier is built by making a linear combination of the weighted sequence of weak classifiers. For a vector variables X, a classifier Gm(X) produces a prediction of the class-label Y that belongs to {−1,1}. The error rate on the training sample is

where is the weight associated to the i th observation of the training sample at the mth step. A weak classifier is one whose error rate is only slightly better than random guessing. The weights are initialized with . For each iteration m = 2,3,…, M the observation weights are modified and the classification algorithm is reapplied to the weighted observations. The error rate εm is computed and the weights of the observations at the m + 1th step are recomputed as

where αm = log((1–εm)/εm). In other words, at the step m the observations misclassified at the previous step have their weights increased (Nakache, 2003), and on the contrary the weights of the well classified observations are decreased. The predictions from all of them are then combined trough a weighted majority vote to produce the final prediction:

Cross-validation

The cross-validation is applied on biomarker selections combined with different classification methods. The logistic regression, which took part at the preselection step, was also used as a discriminant analysis method in the cross-validation. The aim of this step is to validate our method of marker selections, while comparing the predictive power of the different supervised classification methods with this selection method. We applied the holdout method for the cross-validation. That consists on repeating the algorithm of decision rules construction described below, and to estimate their performances. First, the cross-validation consists on a random drawing of a training sample. The training sample size N was varied from 40%, 60%, and 80% of the total sample size n = 169. The remaining sample is named test sample. The features number of the training sample is limited to these p most discriminating features described above, such as x ∈ ℜ N×p. The decision rule G(x) is evaluated using the training set whose class-labels are known, and that for the different supervised classification techniques studied in this article. The class-label of each test sample observation is predicted using the decision rules G(x). The class-labels of the test sample being known, the predictions of the different methods can be evaluated by the calculation of TP, TN, FP, FN, where TP, TN, FP, FN means the number of true positive, true negative, false positive and false negative samples, respectively. These numbers are computed in each test set of the 1000 iterations of the cross-validation and summed. For each classification method, the sensitivity, the specificity and the accuracy was calculated to compare them. The sensitivity is defined as TP/(TP+FN) which represents the ability of a classification method to classify correctly the patients reached of cancer, and the specificity defined as TN/(TN+FP) the percentage of observation of the control sample correctly classified. The accuracy is defined as (TP+TN)/(TP+TN+FP+FN) and measures the percentage of whole of observations correctly classified. The cross-validation was applied to the three biomarkers selections using, under the R software, the package CaMassClass (www.r-project.org) dedicated to the treatment of Protein Mass Spectra (SELDI) Data.

Results and Discussion

The goal of our article was to detect biomarkers and to assess their discriminating capacity using the different several supervised classification methods. In this way, we believe that cross-validation can answer to this question. We developed a three-step strategy to extract markers or combination of markers and to evaluate the robustness of these classifiers. First, protein peaks from 228 protein clusters were selected by a Wilcoxon test. Then, logistic regression models were used to construct two discriminating subsets of features composed of 6 and 8 protein peaks, ranging from 3 to 48 kDa, using forward (Table 1) and stepwise (Table 2) logistic regressions respectively. A third subset of discriminating markers (Table 3) was built by taking the intersection of the two first. Since there was no gold standard method for classification of mass spectrometry data, we were interested in comparing the performance and the robustness of different classification approaches. The unsupervised and supervised classification methods have been evaluated, but only the latter that showed the most satisfying results was presented in this paper.

Table 1.

Cross-validation forward.

| Training sample size (%) | TP | TN | FP | FN | Sensitivity | Specificity | Accuracy | |

|---|---|---|---|---|---|---|---|---|

| Binary logistic regression | 0.4 | 82371 | 11148 | 2852 | 5629 | 0.9360 | 0.7963 | 0.9169 |

| 0.6 | 53525 | 7547 | 1453 | 4475 | 0.9228 | 0.8386 | 0.9115 | |

| 0.8 | 26657 | 4289 | 711 | 2343 | 0.9192 | 0.8578 | 0.9102 | |

| SVM | 0.4 | 83580 | 9666 | 4334 | 4420 | 0.9498 | 0.6904 | 0.9142 |

| 0.6 | 55035 | 6761 | 2239 | 2965 | 0.9489 | 0.7512 | 0.9223 | |

| 0.8 | 27677 | 3868 | 1132 | 1323 | 0.9544 | 0.7736 | 0.9278 | |

| LDA | 0.4 | 82465 | 11603 | 2397 | 5535 | 0.9371 | 0.8288 | 0.9222 |

| 0.6 | 54676 | 7510 | 1490 | 3324 | 0.9427 | 0.8344 | 0.9281 | |

| 0.8 | 27483 | 4159 | 841 | 1517 | 0.9477 | 0.8318 | 0.9306 | |

| QDA | 0.4 | 82687 | 6647 | 7353 | 5313 | 0.9396 | 0.4748 | 0.8758 |

| 0.6 | 52177 | 6695 | 2305 | 5823 | 0.8996 | 0.7439 | 0.8787 | |

| 0.8 | 25860 | 4064 | 936 | 3140 | 0.8917 | 0.8128 | 0.8801 | |

| Neural Networks | 0.4 | 86408 | 10132 | 4830 | 1592 | 0.9819 | 0.6772 | 0.9376 |

| 0.6 | 56932 | 6819 | 2181 | 1068 | 0.9816 | 0.7577 | 0.9515 | |

| 0.8 | 28535 | 3828 | 1172 | 465 | 0.9840 | 0.7656 | 0.9519 | |

| Classification Trees | 0.4 | 80945 | 3508 | 10492 | 7055 | 0.9198 | 0.2506 | 0.8280 |

| 0.6 | 53202 | 2554 | 6446 | 4798 | 0.9173 | 0.2838 | 0.8322 | |

| 0.8 | 26804 | 1354 | 3646 | 2196 | 0.9243 | 0.2708 | 0.8282 | |

| Boosting Trees | 0.4 | 84585 | 4921 | 9153 | 3415 | 0.9612 | 0.3497 | 0.8769 |

| 0.6 | 55461 | 3278 | 5918 | 2539 | 0.9562 | 0.3565 | 0.8741 | |

| 0.8 | 27708 | 1668 | 4580 | 1292 | 0.9554 | 0.2670 | 0.8334 |

Table 2.

Cross-validation stepwise.

| Training sample size (%) | TP | TN | FP | FN | Sensitivity | Specificity | Accuracy | |

|---|---|---|---|---|---|---|---|---|

| Binary logistic regression | 0.4 | 78024 | 9594 | 4406 | 9976 | 0.8866 | 0.6853 | 0.8590 |

| 0.6 | 50115 | 6878 | 2122 | 7885 | 0.8641 | 0.7642 | 0.8506 | |

| 0.8 | 24871 | 3942 | 1058 | 4129 | 0.8576 | 0.7884 | 0.8474 | |

| SVM | 0.4 | 81876 | 7211 | 6789 | 6124 | 0.9304 | 0.5151 | 0.8734 |

| 0.6 | 53604 | 5122 | 3878 | 4396 | 0.9242 | 0.5691 | 0.8765 | |

| 0.8 | 26802 | 2941 | 2059 | 2198 | 0.9242 | 0.5882 | 0.8748 | |

| LDA | 0.4 | 79379 | 10253 | 3747 | 8621 | 0.9020 | 0.7324 | 0.8787 |

| 0.6 | 52929 | 6682 | 2318 | 5071 | 0.9126 | 0.7424 | 0.8897 | |

| 0.8 | 26691 | 3614 | 1386 | 2309 | 0.9204 | 0.7228 | 0.8913 | |

| QDA | 0.4 | 83320 | 3909 | 10091 | 4680 | 0.9468 | 0.2792 | 0.8552 |

| 0.6 | 52004 | 5052 | 3948 | 5996 | 0.8966 | 0.5613 | 0.8516 | |

| 0.8 | 25690 | 3219 | 1781 | 3310 | 0.8859 | 0.6438 | 0.8503 | |

| Neural Networks | 0.4 | 85758 | 7750 | 6694 | 2242 | 0.9745 | 0.5366 | 0.9128 |

| 0.6 | 56802 | 5317 | 3683 | 1198 | 0.9793 | 0.5908 | 0.9271 | |

| 0.8 | 28527 | 2891 | 2541 | 473 | 0.9837 | 0.5322 | 0.9125 | |

| Classification Trees | 0.4 | 81399 | 3459 | 10541 | 6601 | 0.9250 | 0.2471 | 0.8319 |

| 0.6 | 53351 | 2592 | 6408 | 4649 | 0.9198 | 0.2880 | 0.8350 | |

| 0.8 | 26680 | 1388 | 3612 | 2320 | 0.9200 | 0.2776 | 0.8255 | |

| Boosting Trees | 0.4 | 80072 | 3654 | 7799 | 1401 | 0.9828 | 0.3190 | 0.9010 |

| 0.6 | 52540 | 2480 | 5605 | 849 | 0.9841 | 0.3067 | 0.8950 | |

| 0.8 | 26102 | 1408 | 5322 | 455 | 0.9829 | 0.2092 | 0.8264 |

Table 3.

Cross-validation for common peaks.

| Training sample size (%) | TP | TN | FP | FN | Sensitivity | Specificity | Accuracy | |

|---|---|---|---|---|---|---|---|---|

| Binary logistic regression | 0.4 | 80169 | 10970 | 3030 | 7831 | 0.9110 | 0.7836 | 0.8935 |

| 0.6 | 52459 | 7542 | 1458 | 5541 | 0.9045 | 0.8380 | 0.8955 | |

| 0.8 | 26258 | 4256 | 744 | 2742 | 0.9054 | 0.8512 | 0.8975 | |

| SVM | 0.4 | 81890 | 9144 | 4856 | 6110 | 0.9306 | 0.6531 | 0.8925 |

| 0.6 | 54293 | 6543 | 2457 | 3707 | 0.9361 | 0.7270 | 0.9080 | |

| 0.8 | 27333 | 3769 | 1231 | 1667 | 0.9425 | 0.7538 | 0.9148 | |

| LDA | 0.4 | 81219 | 11258 | 2742 | 6781 | 0.9229 | 0.8041 | 0.9066 |

| 0.6 | 54271 | 7380 | 1620 | 3729 | 0.9357 | 0.8200 | 0.9202 | |

| 0.8 | 27320 | 4021 | 979 | 1680 | 0.9421 | 0.8042 | 0.9218 | |

| QDA | 0.4 | 78654 | 8663 | 5337 | 9346 | 0.8938 | 0.6188 | 0.8560 |

| 0.6 | 50593 | 6991 | 2009 | 7407 | 0.8723 | 0.7768 | 0.8595 | |

| 0.8 | 25080 | 4048 | 952 | 3920 | 0.8648 | 0.8096 | 0.8567 | |

| Neura Networks | 0.4 | 86002 | 9173 | 5197 | 1998 | 0.9773 | 0.6383 | 0.9297 |

| 0.6 | 56730 | 6180 | 2820 | 1270 | 0.9781 | 0.6867 | 0.9390 | |

| 0.8 | 28511 | 3341 | 1659 | 489 | 0.9831 | 0.6682 | 0.9368 | |

| Classification Trees | 0.4 | 80836 | 3530 | 10470 | 7164 | 0.9186 | 0.2521 | 0.8271 |

| 0.6 | 53349 | 2623 | 6377 | 4651 | 0.9198 | 0.2914 | 0.8354 | |

| 0.8 | 26795 | 1439 | 3561 | 2205 | 0.9240 | 0.2878 | 0.8304 | |

| Boosting Trees | 0.4 | 79569 | 2546 | 9603 | 1164 | 0.9856 | 0.2096 | 0.8841 |

| 0.6 | 52099 | 1478 | 8392 | 852 | 0.9839 | 0.1497 | 0.8529 | |

| 0.8 | 25924 | 708 | 9136 | 440 | 0.9833 | 0.0719 | 0.7355 |

The mean performance (accuracy, sensibility and specificity) of our classifiers on 1000 randomly generated 80:20, 60:40 and 40:60 set of samples were evaluated using different class-prediction models. The results showed that the forward logistic regression is better than the stepwise logistic regression in terms of accuracy and specificity. Interestingly, 5 protein peaks were common to the two models. A classifier with the protein peaks common to these two model selection methods allowed a more parsimonious model, as effective as the forward logistic regression (Table 3). Then it can be pointed out that the specificity was lower than the sensitivity and did not exceed the 0.86. The least effective supervised classification methods was the classification trees and the boosting trees that failed to correctly classify individuals of the control group. Although the sensitivity of both methods was acceptable, it was not the case for the specificity that was found lower than 0.36. Comparing to Quadratic Discriminant Analysis, the Linear Discriminant Analysis gave the best performance result to discriminate both samples achieving a mean classification accuracy of 0.93, a sensitivity of 0.95, and a specificity of 0.83 with a 80:20 cross-validation set samples (Table 1). The Linear Discriminant Analysis was slightly better than the Logistic Regression in terms of accuracy, and sensitivity. The results from SVM and Neural Networks were similar in terms of mean performance but showed a lower mean specificity (0.78) compared to Discriminant Analysis and Logistic Regression methods (0.86). Finally, the model selection robustness was confirmed by using different training sample sizes that varied from 40 to 80%. Interestingly, all the selection methods were stable with all the training sample size tested, except for Quadratic Discriminant Analysis. The Linear Discriminant Analysis remained the most robust method with a mean specificity ranging from 0.82 to 0.83, and sensitivity from 0.93 to 0.95 with the different sample sizes tested (Table 1).

We showed that Linear Discriminant Analysis, Quadratic Discriminant Analysis, Logistic Regression, Support Vector Machine and Neural Networks were the five most robust supervised classification methods in our study. The combination of the two-sided Wilcoxon’s test and the Logistic Regression for the markers pre-selection and the Linear Discriminant Analysis seemed to be the more effective in term of classification of samples in control and cancer groups. We observed that once the most discriminating markers are selected, the results of sensitivity, specificity and accuracy can be radically different from one method to another. The choice of these classification methods depends on the data, on the choice made for the pre-selection and on the problem that has to be solved. Also, it is essential to test several classification methods on the selected biomarkers. The question of the bias between the selection method and the Discriminant Analysis can arise. Accordingly we evaluated the whole method (i.e. the preselection stage combined with the Discriminant Analysis) in a 5-fold cross-validation (Ambroise, 2002). If we consider the preselection method with the logistic regression forward, we found an accuracy of 0.8763, a sensitivity of 0.9027, and a specificity of 0.7. The combination of this selection method and this classification method is robust. But these performances could be better if the difference between sizes of the two groups had not been so important. The relatively low specificity obtained with our data could be explained by this strong imbalance in the size of both sample groups, or by the choice of a control group with high-risk of developping cancer. This last condition could explain the very low specificity observed with the Classification Trees and the Boosting Trees classification methods, which uses thresholds. In conclusion, this biomarkers selection method should be employed on other studies, to validate its robustness. It also would be interesting to ensure a medium term follow-up of this control group population to allow the reappraisal of benign condition and rule out the possibility of infra clinical and radiological cancer development in this group of patient. In this case, it could allow a correct reallocation of the patient in the correct group and a more efficient re-evaluation of the different classification methods. Finally, the potential markers selected should be clearly identified and annotated using extra purification such as standard chromatography and/or electrophoresis and analysis by peptide mass fingerprint using more resolutive MS techniques or peptide sequencing via tandem MS analysis. This identification presents several interesting features, particularly during the discovery phase, by adding a supplementary validation phase using independent immunological methods, such as ELISA, and by increasing the predictive value of the molecular signature.

Footnotes

Authors Contributions

Both Nadège Dossat and Alain Mangé contributed equally to this study

Please note that this article may not be used for commercial purposes. For further information please refer to the copyright statement at http://www.la-press.com/copyright.htm

References

- Adam BL, Qu Y, Davis JW, et al. Serum protein fingerprinting coupled with a pattern-matching algorithm distinguishes prostate cancer from benign prostate hyperplasia and healthy men. Cancer Res. 2002;62:3609–14. [PubMed] [Google Scholar]

- Ambroise C, McLachlan GJ. Selection bias in gene extraction on the basis of microarray gene-expression data. Proc. Natl. Acad. Sci. U.S.A. 2002;99(10):6562–6. doi: 10.1073/pnas.102102699. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bauer E, Kohavi R. An empirical comparison of voting classification algorithms: bagging, boosting, and variants. Machine Learning. 1999;36:105–39. [Google Scholar]

- Benjamini Y, Hochberg Y. Controlling the false discovery rate – a pratical and powerful approach to multiple testing. J.R. Stat. Soc B. 1995;57:289–300. [Google Scholar]

- Chen Y-D, Zheng S, Yu J-K, et al. Artificial neural networks analysis of surface-enhanced laser desorption/ionization mass spectra of serum protein pattern distinguishes colorectal cancer from healthy population. Clin. Cancer Res. 2004;10(04):8380–5. doi: 10.1158/1078-0432.CCR-1162-03. [DOI] [PubMed] [Google Scholar]

- Dreyfus G, Martinez J-M, Samuelides M, et al. Réseaux de neurones: méthodologie et application. Paris: Eyrolles; 2002. [Google Scholar]

- Duda RO, Hart PE, Stork DG. Pattern classification. John Wiley and son, Inc; New York: 2001. [Google Scholar]

- Fushiki T, Fujisawa H, Eguchi S. Identification of biomarkers from mass spectrometry data using a “common” peak approach. B.M.C. Bioinformatics. 2006;7:358. doi: 10.1186/1471-2105-7-358. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning. New York: Springer; 2001. [Google Scholar]

- Hosmer DW, Lemeshow S. Applied Logistic Regression. Second Edition. New York; Chichester: Wiley; 2000. [Google Scholar]

- Lee M-L, T . Analysis of Microarray Gene Expression Data. Boston: Kluwer Academic Publishers; 2004. [Google Scholar]

- Li L, Tang H, Wu Z, Gong J, et al. Data mining techniques for cancer detection using serum proteomic profiling. Artif. Intell. Med. 2004;32(2):71–83. doi: 10.1016/j.artmed.2004.03.006. [DOI] [PubMed] [Google Scholar]

- Nakache J-P, Confais J. Statistique explicative appliquée. Paris: Technip; 2003. [Google Scholar]

- Pepe MS, Cai T, Longton G. Combining predictors for classification using the area under the receiver operating characteristic curve. Biometrics. 2006;62(1):221–9. doi: 10.1111/j.1541-0420.2005.00420.x. [DOI] [PubMed] [Google Scholar]

- Petricoin EF, Ardekani AM, Hitt BA, et al. Use of proteomic patterns in serum to identify ovarian cancer. Lancet. 2002;359:572–7. doi: 10.1016/S0140-6736(02)07746-2. [DOI] [PubMed] [Google Scholar]

- Solassol J, Jacot W, Lhermitte L, et al. Clinical proteomics and mass spectrometry profiling for cancer detection. Expert Rev. Proteomics. 2006;3:311–20. doi: 10.1586/14789450.3.3.311. [DOI] [PubMed] [Google Scholar]

- Vapnik V. Statistical learning theory. New York: Wiley; 1998. [Google Scholar]

- Vlahou A, Schorge JO, Gregory BW, et al. Diagnosis of ovarian cancer using decision tree classification of mass spectral data. J. Biomed. Biotechnolo. 2003;5:308–14. doi: 10.1155/S1110724303210032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Verhoeven KJF, Simonsen KL, McIntyre LM. Implementing false discovery rate control: increasing your power. Oikos. 2005;108(3):643–47. [Google Scholar]

- Webb A. Statistical Pattern Recognition. Second Edition. Wiley; 2002. [Google Scholar]

- Wu B, Abbott T, Fishman D, et al. Comparison of statistical methods for classification of ovarian cancer using mass spectrometry data. Bioinformatics. 2003;19(13):1636–43. doi: 10.1093/bioinformatics/btg210. [DOI] [PubMed] [Google Scholar]

- Yang S-Y, Xiao X-Y, Zhang W-G. Application of serum SELDI proteomic patterns in diagnosis of lung cancer. BMC cancer. 2005;5:83. doi: 10.1186/1471-2407-5-83. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhang Z, Bast RC, Jr, Yu Y, et al. Three biomarkers identified from serum proteomic analysis for the detection of early stage ovarian cancer. Cancer Res. 2004;64:5882–90. doi: 10.1158/0008-5472.CAN-04-0746. [DOI] [PubMed] [Google Scholar]