Abstract

How much attention is needed to produce implicit learning? Previous studies have found inconsistent results, with some implicit learning tasks requiring virtually no attention and others relying on it. In this study we examine the degree of attentional dependency in implicit learning of repeated visual search context. Observers searched for a target among distractors that were either highly similar or dissimilar to the target. We found that repeating either type of distractor produced contextual cueing of comparable size, even though attention dwelled much longer on distractors highly similar to the target. We suggest that, beyond a minimal amount, further increases in attentional dwell time do not contribute significantly to implicit learning of repeated search context.

Keywords: implicit learning, visual attention, spatial context learning, contextual cueing, selective attention

Introduction

Extensive cognitive research has shown that selective attention dictates conscious perception and explicit memory. When asked to distinguish new shapes from previously exposed ones, observers often pick out previously attended shapes but not previously ignored shapes (Rock & Gutman, 1981). Similarly, unexpected visual objects often go unnoticed, a phenomenon known as “inattentional blindness” (Mack & Rock, 1998; Simons & Chabris, 1999). Furthermore, attention has a graded effect on the quality of conscious perception and memory: the less attention is paid to a distracting event, the more inattentional blindness increases (Cartwright-Finch & Lavie, 2007; Simons & Chabris, 1999).

Does attention similarly affect implicit processes? The answer is apparently “no,” at least for some implicit processes. Ignored visual input often leads to priming, facilitating or delaying later processing of the same stimulus (Tipper & Cranston, 1985). Additionally, words presented in the neglected hemifield of patients with hemispatial neglect can facilitate lexical decisions on another word (McGlinchey-Berroth, Milberg, Verfaellie, Alexander, & Kilduff, 1993). Thus, attention is not needed for some unconscious processing. However, other implicit processes rely on attention. Implicit learning of a sequence of visual locations is reduced by a concurrent tone-counting task (Nissen & Bullemer, 1987; see review by Jiménez, 2003). Visual statistical learning of frequently paired shapes increases with attention (Turk-Browne, Junge, & Scholl, 2005). Because different implicit learning tasks engage different cognitive processes, their reliance on attention also varies. Whether implicit learning depends on attention must therefore be addressed separately for each paradigm.

The current study aims to delineate the role of attention in implicit learning of repeated search context. This kind of learning, known as contextual cueing, is often studied in visual search tasks (e.g., search for T among Ls). Unknown to the observers, some search displays occasionally repeat in the experiment. Although observers cannot recognize the repeated displays, visual search on those displays is faster than on unrepeated displays (Chun & Jiang, 1998).

Although contextual cueing is implicit, it does not bypass the gating of selective attention. When searching for a black T among black and white Ls, for example, the white Ls can be filtered out preattentively, but the black Ls are eliminated only with focal attention (Kaptein, Theeuwes, & Van der Heijden, 1995). In this task, repetition of the attended distractors (the black Ls) facilitates search as much as repetition of the entire search array, whereas repetition of the ignored distractors (the white Ls) produces no facilitation (Jiang & Chun, 2001; Jiang & Leung, 2005). But does attention have a graded effect on contextual cueing? That is, does a search context that receives more attention also lead to greater contextual cueing?

The graded-attention hypothesis is consistent with existing data. Whether selection is achieved on the basis of color (Jiang & Chun, 2001) or shape (Chang & Cave, 2006), search context rejected preattentively results in little or no contextual cueing, while search context receiving focal attention leads to robust cueing. The graded-attention hypothesis also captures observations made in explicit perceptual tasks, where the degree of conscious perception of distractors correlates with the amount of attention available for processing them (Cartwright-Finch & Lavie, 2007; Simons & Chabris, 1999).

We test the graded-attention hypothesis by measuring contextual cueing in repeated search displays that require different amounts of attention. We rely on Duncan and Humphreys’ (1989) observation that the similarity between target and distractors dictates the degree of attentional engagement: more attention is required to reject distractors that are more similar to the target. Consequently, the slope of search RT as a function of set size is steeper for similar distractors than for dissimilar distractors. In our experiments, subjects searched for a T among Ls. We employed two types of Ls that differed in their similarity to the target. We confirmed that the search slope was steeper when searching for a T among similar distractors than among dissimilar distractors, suggesting that more attention was devoted to similar distractors. If the graded-attention hypothesis is correct, then contextual cueing should be greater when the similar Ls repeat their locations than when the dissimilar Ls repeat their locations.

Experiment 1

In this experiment we placed the two types of distractors (similar or dissimilar to the target) on separate search trials. We also manipulated search set size to verify that the two types of distractors engaged attention to different degrees.

Method

Participants

Fifteen participants (mean age 23 years) from Harvard University completed this experiment for payment or course credit. All had normal or corrected-to-normal visual acuity. They were tested individually in a normally lit room and sat unrestrained at about 57cm from the screen.

Materials

Participants searched for a T rotated to the left or right among L-shaped distractors rotated in the four cardinal directions. The items were white and were presented on a gray background. They were randomly placed in an imaginary 10×10 grid (33.8°×33.8°). Each item was composed of two orthogonal line segments (length 35 pixels; 1.5°). For the T, one segment bisected the other. Ls were created by displacing one segment toward the end of the other. The offset at the junction of the two segments was 8 pixels for similar Ls and 2 pixels for dissimilar Ls (Figure 1).
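The stimulus geometry can be made concrete with a short Python sketch. It is not the authors' code, and the function names are hypothetical. It rests on one reading of the Method that we label as an assumption: the "offset at the junction" is taken to be how far the second segment is shifted inward from the end of the first, so a larger offset (8 pixels) moves the L toward the T's bisected junction and makes it more T-like than a small offset (2 pixels). The angles assigned to "left" and "right" Ts are also assumptions.

```python
import math

SEGMENT_PX = 35                  # segment length in pixels (Method)
PX_PER_DEG = SEGMENT_PX / 1.5    # 35 px spans 1.5 deg at ~57cm (Method)
# Assumed reading: inward shift of the stem from the end of the bar, in pixels.
OFFSET_PX = {"similar": 8, "dissimilar": 2}


def px_to_deg(px):
    """Convert pixels to degrees of visual angle using the Method's 35 px = 1.5 deg."""
    return px / PX_PER_DEG


def rotate(point, degrees):
    """Rotate a point about the origin by a multiple of 90 degrees."""
    if degrees % 90:
        raise ValueError("only cardinal rotations are used")
    rad = math.radians(degrees)
    c, s = round(math.cos(rad)), round(math.sin(rad))  # exact 0/1/-1 values
    x, y = point
    return (x * c - y * s, x * s + y * c)


def make_item(stem_x, degrees):
    """Two orthogonal segments: a horizontal bar and a vertical stem touching it at stem_x."""
    half = SEGMENT_PX / 2
    bar = ((-half, 0.0), (half, 0.0))
    stem = ((stem_x, 0.0), (stem_x, float(SEGMENT_PX)))
    return [tuple(rotate(p, degrees) for p in seg) for seg in (bar, stem)]


def make_T(direction):
    """The stem bisects the bar; 'left'/'right' = 90/270 degrees is an assumption."""
    return make_item(0.0, 90 if direction == "left" else 270)


def make_L(kind, degrees):
    """The stem starts at one end of the bar and is shifted inward by the junction offset."""
    return make_item(-SEGMENT_PX / 2 + OFFSET_PX[kind], degrees)


print(make_L("similar", 0))
```

Under this reading the similar L's stem sits 9.5 pixels from the bar's center and the dissimilar L's stem 15.5 pixels from it, while the T's stem sits at the center.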

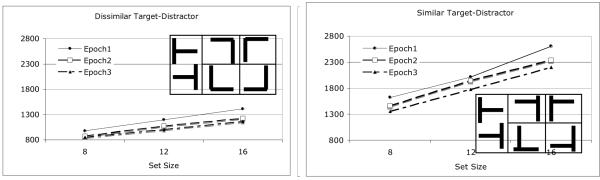

Figure 1.

Training RT in Experiment 1. The targets and distractors are shown in the inset. We binned 5 adjacent blocks into one epoch.

Procedure

Each trial began with a fixation point (0.2°) for 500msec. The search display was then presented until participants pressed a key to indicate the target’s orientation. There was always a T; its orientation was randomly selected on each trial. Participants were instructed to respond as accurately and as quickly as possible. A sad face icon followed each incorrect response.

Design

Each participant completed three phases of the experiment consecutively: training, transfer, and recognition.

In the training phase, participants completed 15 blocks of 60 trials each. The 60 trials used unique visual displays, and the same displays were repeated in every block (15 times in total). The displays were randomly and evenly divided among three set sizes (8, 12, or 16 items in total) and two types of distractors (similar vs. dissimilar), yielding 10 displays per condition. Because of the large number of trials this experiment required, we did not include unrepeated trials during training.
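A minimal sketch of the training design in Python, for illustration only. It assumes that set size counts the target, that items occupy distinct cells of the 10×10 grid, and that trial order is shuffled within each block (the Method does not state this); item orientations are omitted. The names `make_display` and `make_training` are hypothetical.

```python
import itertools
import random

GRID = 10                      # 10 x 10 imaginary grid (Method)
SET_SIZES = (8, 12, 16)        # total items per display; assumed to include the target
SIMILARITY = ("similar", "dissimilar")
DISPLAYS_PER_CELL = 10         # 60 displays / (3 set sizes x 2 similarity levels)
N_BLOCKS = 15


def make_display(set_size, similarity, rng):
    """One display: a target cell plus set_size - 1 distractor cells, all distinct."""
    cells = rng.sample([(r, c) for r in range(GRID) for c in range(GRID)], set_size)
    return {"set_size": set_size, "similarity": similarity,
            "target": cells[0], "distractors": cells[1:]}


def make_training(seed=0):
    """Generate the 60 unique displays once and present all of them in every block."""
    rng = random.Random(seed)
    displays = [make_display(n, sim, rng)
                for n, sim in itertools.product(SET_SIZES, SIMILARITY)
                for _ in range(DISPLAYS_PER_CELL)]
    blocks = []
    for _ in range(N_BLOCKS):
        order = displays[:]    # the same 60 display objects each block
        rng.shuffle(order)     # assumption: order randomized within a block
        blocks.append(order)
    return displays, blocks


displays, blocks = make_training(seed=1)
print(len(displays), "displays x", len(blocks), "blocks")
```

Because every block reuses the same display objects, any speed-up across blocks mixes procedural learning with learning of the repeated contexts, which is why the transfer phase adds unrepeated contexts.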

In the transfer phase, each block of 120 trials was randomly and evenly divided among three set sizes (8, 12, or 16), two types of distractors (similar vs. dissimilar), and two types of search context (repeated or unrepeated). Repeated search contexts were the same as those used in the training phase. Unrepeated search contexts combined newly generated distractor locations with trained target locations. Because 10 trials per condition were too few to obtain a stable RT, we presented the same 120 trials in each of 4 transfer blocks.
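A sketch of how the transfer trials could be derived from the trained displays. It assumes, as an interpretation, that each of the 60 trained displays contributes one repeated trial and one unrepeated trial (its trained target cell with freshly sampled distractor cells), giving 120 trials, and that the same 120 trials are reshuffled in each of the 4 blocks. `new_context` and `make_transfer` are hypothetical names.

```python
import random

GRID = 10


def new_context(display, rng):
    """Unrepeated context: keep the trained target cell, resample every distractor cell."""
    free = [(r, c) for r in range(GRID) for c in range(GRID) if (r, c) != display["target"]]
    return dict(display, distractors=rng.sample(free, len(display["distractors"])))


def make_transfer(trained, rng, n_blocks=4):
    """Build the 120 transfer trials once, then present the same set in every block."""
    base = [dict(d, context="repeated") for d in trained]
    base += [dict(new_context(d, rng), context="unrepeated") for d in trained]
    blocks = []
    for _ in range(n_blocks):
        order = base[:]        # identical trials in each block, new order
        rng.shuffle(order)
        blocks.append(order)
    return blocks
```

Reusing one set of unrepeated displays across blocks keeps the comparison balanced, although it means those displays are themselves seen up to four times during transfer.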

Finally, in the recognition phase, observers viewed 120 displays constructed as in a transfer block and judged whether each display had been shown in the training phase. Only accuracy was emphasized.

Results

Accuracy in the training and transfer phases of the experiment was high (over 97%). It was significantly higher when observers searched among dissimilar distractors than similar distractors (ps < .01). Otherwise accuracy was not significantly influenced by experimental conditions, ps > .05. In the RT analysis, we excluded incorrect trials and trials faster than 200msec.
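The exclusion rule amounts to a filter applied before averaging. The sketch below keeps correct trials with RTs of at least 200msec and averages RT per condition; the trial field names (`correct`, `rt`) and the helper `condition_means` are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def condition_means(trials, keys, min_rt_ms=200):
    """Mean RT per condition after dropping incorrect trials and RTs below min_rt_ms."""
    kept = [t for t in trials if t["correct"] and t["rt"] >= min_rt_ms]
    groups = defaultdict(list)
    for t in kept:
        groups[tuple(t[k] for k in keys)].append(t["rt"])
    return {cond: mean(rts) for cond, rts in groups.items()}
```

For example, with trials keyed by a similarity field, an error trial and a 150msec anticipation are discarded before the condition mean is taken.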

1. Training

Figure 1 shows mean RT in the training phase, where each epoch contained 5 blocks. An ANOVA on target-distractor (T-D) similarity, set size, and epoch showed significant main effects of all three factors, all ps < .001. Not only was search RT slower with similar distractors than with dissimilar distractors, but the search slope was also steeper, resulting in a significant interaction between similarity and set size, F(2, 28) = 46.25, p < .001. By epoch 3, the search slope was 39msec/item for dissimilar T-D trials and 106msec/item for similar T-D trials. Thus, we succeeded in manipulating the degree of attention required by the two types of distractors. There was also a significant interaction between T-D similarity and epoch, F(2, 28) = 46.25, p < .001. For dissimilar T-D search, the reduction in RT was most obvious from epoch 1 to epoch 2; for similar T-D search, RT decreased throughout training. No other interactions were significant, ps > .05.
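Search slope is the least-squares slope of mean RT against set size, expressed in msec/item, and epochs are consecutive groups of 5 blocks. The sketch below illustrates both computations; `search_slope` and `epoch_of` are hypothetical helpers, and the RT values in the usage line are hypothetical, chosen only so that the slope equals 39msec/item.

```python
def search_slope(set_sizes, mean_rts):
    """Ordinary least-squares slope of mean RT (msec) on set size (items), in msec/item."""
    n = len(set_sizes)
    mx = sum(set_sizes) / n
    my = sum(mean_rts) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(set_sizes, mean_rts))
    sxx = sum((x - mx) ** 2 for x in set_sizes)
    return sxy / sxx


def epoch_of(block, blocks_per_epoch=5):
    """Map a 1-based block number to a 1-based epoch (blocks 1-5 -> epoch 1, ...)."""
    return (block - 1) // blocks_per_epoch + 1


# Hypothetical mean RTs at set sizes 8, 12, 16.
print(search_slope([8, 12, 16], [800, 956, 1112]))
```

Here `search_slope([8, 12, 16], [800, 956, 1112])` returns 39.0, and `epoch_of(15)` returns 3, matching the three epochs formed from 15 training blocks.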

2. Transfer

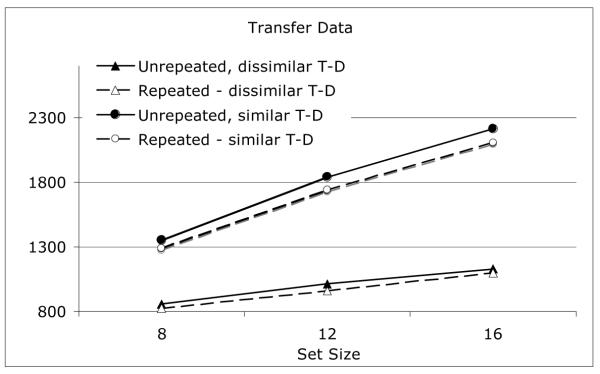

Was contextual cueing greater for repeated search context that received more attention? Figure 2 shows the transfer data.

Figure 2.

Transfer RT in Experiment 1.

An ANOVA on context repetition (repeated or unrepeated), set size (8, 12, or 16), and target-distractor similarity (similar vs. dissimilar) revealed a significant main effect of context repetition, with faster RTs on repeated displays, F(1, 14) = 5.05, p < .04. The main effects of set size and T-D similarity were also significant, ps < .001. However, context repetition interacted neither with T-D similarity, F < 1, nor with set size, F < 1. The only significant interaction was between set size and T-D similarity, reflecting steeper search slopes for similar than for dissimilar T-D displays, F(2, 28) = 55.70, p < .001.

Follow-up tests showed that contextual cueing was highly significant on dissimilar T-D trials, F(1, 14) = 9.71, p < .008. Averaged across set sizes, cueing was 40msec, a facilitation of 4% relative to baseline RT. Contextual cueing was less statistically robust on similar T-D trials, F(1, 14) = 2.97, p = .11, although its absolute magnitude was larger: 89msec averaged across set sizes, a facilitation of 5% in RT. t tests showed that the size of contextual cueing was comparable for the two types of distractors, whether measured as the absolute cueing effect, p > .80, or as the percentage saving in RT, p > .25.
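Cueing can be expressed in msec or as a percentage of baseline. The sketch assumes that "baseline" means the RT on unrepeated-context trials; `cueing` is a hypothetical helper, and the RTs in the usage note are hypothetical, chosen only to reproduce the arithmetic of the reported 40msec (4%) and 89msec (5%) effects.

```python
def cueing(rt_unrepeated, rt_repeated):
    """Contextual cueing in msec and as a percentage of the unrepeated (baseline) RT."""
    effect = rt_unrepeated - rt_repeated
    return effect, 100.0 * effect / rt_unrepeated


# Hypothetical condition means (msec), e.g., for one participant.
print(cueing(1000, 960))
print(cueing(1780, 1691))
```

`cueing(1000, 960)` returns `(40, 4.0)` and `cueing(1780, 1691)` returns `(89, 5.0)`. The percentage measure is useful here because it scales each effect by its own baseline, and the two similarity conditions differ greatly in baseline RT; in practice it would be computed per participant before averaging.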

3. Recognition

Table 1 shows recognition accuracy. In no case was hit rate (correctly recognizing a repeated display as repeated) significantly higher than false alarm rate (incorrectly recognizing an unrepeated display as repeated), ps > .30. This confirms that learning was implicit.

Table 1.

Recognition results from Experiment 1. False alarm: likelihood that observers incorrectly recognize an unrepeated display as repeated; Hit: likelihood that observers correctly identify a repeated display as repeated

| Set size | Dissimilar T-D: False alarm | Dissimilar T-D: Hit | Similar T-D: False alarm | Similar T-D: Hit |
|---|---|---|---|---|
| 8 | .37 | .30 | .54 | .35 |
| 12 | .49 | .47 | .50 | .53 |
| 16 | .51 | .48 | .61 | .53 |
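Table 1's group means can be summarized as hit rate minus false-alarm rate per cell, where a positive difference would indicate above-chance recognition. This is only a descriptive sketch: the reported tests compared hits and false alarms across participants, which group means alone cannot reproduce. `hit_minus_fa` is a hypothetical helper.

```python
# Group-mean proportions copied from Table 1: (false alarm, hit) per cell.
TABLE_1 = {
    ("dissimilar", 8): (.37, .30),
    ("dissimilar", 12): (.49, .47),
    ("dissimilar", 16): (.51, .48),
    ("similar", 8): (.54, .35),
    ("similar", 12): (.50, .53),
    ("similar", 16): (.61, .53),
}


def hit_minus_fa(table):
    """Return hit rate minus false-alarm rate for every (similarity, set size) cell."""
    return {cell: round(hit - fa, 2) for cell, (fa, hit) in table.items()}


diffs = hit_minus_fa(TABLE_1)
for cell, d in sorted(diffs.items()):
    print(cell, f"{d:+.2f}")
```

Only one of the six cells (similar distractors, set size 12) shows a positive difference, and it is just .03.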

Discussion

Results from Experiment 1 were inconsistent with the graded-attention hypothesis, according to which contextual cueing is stronger when the repeated search context receives more attention. Even though context from distractors highly similar to the target received more attention, it did not result in greater or more robust contextual cueing.

Experiment 2

Experiment 2 used a different design to provide converging evidence for the results of Experiment 1. In Experiment 1, the two types of distractors were placed on different search trials, making it possible for observers to develop different search strategies. For instance, observers may have used a passive search mode on dissimilar trials and an active search mode on similar trials. Given that contextual cueing is more reliable when observers adopt a passive search mode (Lleras & von Muhlenen, 2004), strategic differences may have overridden a graded attention effect. In addition, because search RT on similar T-D trials was much longer than on dissimilar T-D trials, it is difficult to compare the size of contextual cueing directly across the two conditions. To overcome these limitations, in Experiment 2 we presented similar and dissimilar distractors on the same search trial. On each trial observers searched for a T among 16 Ls, half of which were highly similar to the T while the rest were dissimilar. Importantly, we repeated the dissimilar set, the similar set, or both sets in the transfer phase. Because similar Ls require more attention than dissimilar Ls, the graded-attention hypothesis predicts more contextual cueing from repetition of the similar Ls.

Method

Participants

Forty observers (mean age 21 years), drawn from a subject pool similar to that of Experiment 1, completed this experiment.

Design

Participants were tested in training, transfer, and recognition phases consecutively.

In the training phase observers completed 28 blocks, each consisting of 16 unique search trials. On each trial one target (a left or right T) was presented among 16 distractors, half of which were highly similar to the target and half of which were dissimilar to it (see Figure 1). Each of the 16 displays was presented once per block, for a total of 28 repetitions.

In the transfer phase, we tested 4 conditions, each involving 16 trials derived from the training displays. Target locations were repeated in all conditions. In the both-repeated condition, the search display was identical to the training display. In the unrepeated condition, the search display shared only its target location with the trained display; the distractor locations were randomly generated. In the similar-distractor-repeated condition, the 8 Ls similar to the target were placed at their trained locations, but the 8 dissimilar Ls were placed at randomly selected locations. The opposite manipulation was used in the dissimilar-distractor-repeated condition.
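The four transfer conditions differ only in which distractor subset keeps its trained cells. The sketch below is an illustration under stated assumptions: each trained display is stored as a target cell plus two lists of 8 cells in the 10×10 grid, and re-randomized distractors may land in any cell not already occupied (the Method does not say whether they were barred from their old cells). `KEPT` and `transfer_display` are hypothetical names.

```python
import random

GRID = 10
ALL_CELLS = [(r, c) for r in range(GRID) for c in range(GRID)]

# Which distractor subsets keep their trained cells in each transfer condition.
KEPT = {
    "both-repeated": ("similar", "dissimilar"),
    "similar-distractor-repeated": ("similar",),
    "dissimilar-distractor-repeated": ("dissimilar",),
    "unrepeated": (),
}


def transfer_display(trained, condition, rng):
    """Derive one transfer display from a trained display.

    trained: {"target": cell, "similar": [8 cells], "dissimilar": [8 cells]}.
    The target cell is always kept; subsets not listed in KEPT[condition]
    are moved to randomly chosen unoccupied cells.
    """
    kept = KEPT[condition]
    display = {"condition": condition, "target": trained["target"]}
    occupied = {trained["target"]}
    for subset in kept:                      # place repeated subsets first
        display[subset] = list(trained[subset])
        occupied.update(trained[subset])
    for subset in ("similar", "dissimilar"):
        if subset not in kept:               # re-randomize the other subset(s)
            free = [cell for cell in ALL_CELLS if cell not in occupied]
            display[subset] = rng.sample(free, len(trained[subset]))
            occupied.update(display[subset])
    return display
```

Placing the repeated subsets before sampling the new locations guarantees that no two items share a cell in any condition.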

Finally, in the recognition phase, observers were presented with both-repeated, similar-distractor-repeated, dissimilar-distractor-repeated, and unrepeated trials and decided whether each search display had been repeated during training. Only accuracy was emphasized.

Results

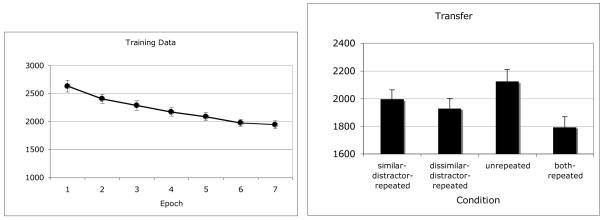

Overall accuracy for training and transfer was high (above 97%) and was not significantly affected by conditions. Figure 3 shows the training and the transfer phase RT. Only correct trials and trials with RT over 200msec were included.

Figure 3.

Results from Experiment 2. Left: Training phase (each epoch = 4 adjacent blocks). Right: Transfer data. Error bars show standard error of the mean.

In the training phase, RT progressively got faster as the experiment went on, F(6, 234) = 39.11, p < .001. This improvement reflected a combination of general procedural learning and specific learning of repeated search context.

In the transfer phase, we obtained a significant contextual cueing effect: RT was faster in the both-repeated than in the unrepeated condition, t(39) = 4.55, p < .001. Repeating the similar-distractor set produced an intermediate RT: slower than in the both-repeated condition, t(39) = 3.51, p < .001, but marginally faster than in the unrepeated condition, t(39) = 1.78, p < .08. Repeating the dissimilar-distractor set also produced an RT that was slower than both-repeated, t(39) = 2.43, p < .02, and faster than unrepeated, t(39) = 2.71, p < .01. Crucially, the similar-distractor-repeated condition was not faster than the dissimilar-distractor-repeated condition, t(39) < 1, so there was no evidence that the distractor context receiving more attention led to greater contextual cueing. Assuming a medium effect size, the power of this analysis was .64. Given this power, and given that the mean difference went in the direction opposite to that predicted by the graded-attention hypothesis, it is highly unlikely that an effect consistent with the hypothesis was present but simply went undetected.
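The transfer comparisons are paired-samples t tests across the 40 observers, which is why they carry 39 degrees of freedom. The sketch below computes the t statistic from per-participant condition means; `paired_t` is a hypothetical helper, and the p value, which needs the t distribution, is left out to keep the example dependency-free (`scipy.stats.ttest_rel` returns both the statistic and its p value).

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom for per-participant means a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 participants")
    diffs = [x - y for x, y in zip(a, b)]
    se = stdev(diffs) / math.sqrt(len(diffs))   # standard error of the mean difference
    return mean(diffs) / se, len(diffs) - 1
```

With 40 participants, `paired_t` returns 39 degrees of freedom, matching the t(39) values reported above.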

Finally, there was no evidence for explicit awareness of the repetition. The hit rate for correctly identifying a repeated display (55%) was not significantly different from the false alarm rate for misidentifying an unrepeated display as repeated (52%), t(39) < 1. The rates at which the two partially repeated display types were judged repeated (52% for dissimilar-distractor-repeated, 53% for similar-distractor-repeated) also did not differ from the hit or false alarm rates, ps > .10.

Discussion

In Experiment 2 we showed two types of distractors on each trial: distractors highly similar to the target and distractors dissimilar to it. Both types of distractors received some attention: in Experiment 1, it took approximately 100msec/item to reject the highly similar distractors and 39msec/item to reject the dissimilar distractors. The additional attention, however, did not produce greater contextual cueing for the similar distractors. The dissimilar and similar distractor contexts were learned equally well, and their combination (both-repeated) produced the largest contextual cueing effect.

General Discussion

Humans search for a target more quickly on search displays that occasionally repeat. This facilitation is known as contextual cueing, because the repeated search context provides a cue to the target’s location (Chun & Jiang, 1998). Previous studies show that selective attention modulates contextual cueing. Repetition of distractors that are rejected preattentively produces little or no contextual cueing (Jiang & Chun, 2001; Jiang & Leung, 2005). In contrast, distractors that require serial attentional scrutiny produce robust contextual cueing.

In this study we tested the hypothesis that selective attention exerts a graded effect on contextual cueing: the greater the amount of attention on a repeated context, the larger the contextual cueing effect from that context. This hypothesis is plausible because it characterizes the effects of attention on explicit visual processes (Cartwright-Finch & Lavie, 2007; Simons & Chabris, 1999), and it is consistent with existing data on contextual cueing. However, data from the current study do not support the graded-attention hypothesis. Distractors that are more similar to the target, and thus require more attentional scrutiny, do not lead to greater contextual cueing when they repeat. These results held whether the degree of attention varied across search trials (Experiment 1) or within a search trial (Experiment 2).

If the graded-attention hypothesis does not capture the relationship between selective attention and contextual cueing, what does? Previous studies show that search context receiving little or no attention does not lead to contextual cueing (Chang & Cave, 2006; Jiang & Chun, 2001; Jiang & Leung, 2005). In those studies, the ignored search context is rejected preattentively on the basis of a salient color feature, so attentional dwell time on each ignored distractor is probably less than 10msec. In the current study, we show that a dwell time of 39msec/item is sufficient to produce a contextual cueing effect comparable to that produced by a dwell time of about 100msec/item. From these results we speculate that, beyond a threshold amount, additional attentional dwell time does not further contribute to contextual cueing. This speculation should be tested in future studies that parametrically manipulate attentional dwell time on each item and measure the resulting contextual cueing.
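The contrast between the graded-attention hypothesis and the threshold speculation can be stated as two toy prediction rules. This is a hypothetical illustration, not a fitted model: the gain, the threshold (placed at 20msec only for concreteness, since the data merely bracket it between a preattentive dwell of probably under 10msec and 39msec), the 5msec stand-in for preattentive dwell, and the arbitrary units of "cueing" are all assumptions.

```python
# Per-item dwell estimates (msec) from the text: <10 for preattentively rejected
# distractors (5 is a hypothetical stand-in), 39 for dissimilar Ls, 106 for similar Ls.
DWELL_MS = {"preattentive": 5.0, "dissimilar L": 39.0, "similar L": 106.0}


def graded_prediction(dwell_ms, gain=0.01):
    """Graded-attention hypothesis: cueing grows linearly with dwell time (gain is hypothetical)."""
    return gain * dwell_ms


def threshold_prediction(dwell_ms, threshold_ms=20.0, full_effect=1.0):
    """Threshold speculation: no cueing below threshold_ms, a fixed effect at or above it.

    threshold_ms is hypothetical; the data only bracket it between ~10 and 39 msec.
    """
    return full_effect if dwell_ms >= threshold_ms else 0.0


for label, dwell in DWELL_MS.items():
    print(f"{label:>13}: graded={graded_prediction(dwell):.2f}  "
          f"threshold={threshold_prediction(dwell):.2f}")
```

Under the graded rule, similar Ls should yield roughly 2.7 times the cueing of dissimilar Ls (106/39); under the threshold rule both yield the same effect, which is the pattern observed in Experiments 1 and 2.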

Why doesn’t contextual cueing increase monotonically with attentional dwell time (see also Hodsoll & Humphreys, 2005)? To answer this question, it is important to realize that contextual cueing depends on two processes: learning a repeated context and expressing that learning during search. The strength of learning relies on processing the spatial relationship between the target and its search context. Some amount of attentional dwell time may be needed to register this spatial relationship, but further increasing the time spent inspecting each distractor may not strengthen learning, because the extra time is not used to build associations.

Even if the strength of learning does not scale with attentional dwell time, one might still expect a greater contextual cueing effect on trials that take longer to complete. If search is a horse race between standard serial search and memory-based search (Logan, 1988), memory-based search should win more often when standard serial search is slow, as when the distractors are similar to the target or when a display contains many items. This, however, is not what we found. Contextual cueing was not greater on similar T-D trials than on dissimilar ones, and it was not greater on large set size trials than on small set size trials. How should these results be accounted for? This is a difficult question that researchers are currently debating (Brady & Chun, in press; Kunar, Flusberg, Horowitz, & Wolfe, in press). There are several possibilities. First, the strength of learning may actually be weaker on trials associated with longer RTs. For example, displays with larger set sizes may be less distinguishable from one another (Hodsoll & Humphreys, 2005), leading to less learning than displays with smaller set sizes. Alternatively, the simple “horse race” model of contextual cueing may be incorrect. For example, Kunar et al. proposed that the search process itself is unaffected by repetition of search context; instead, contextual cueing results from greater confidence in making a response on repeated trials. A third possibility is that search is indeed a horse race between standard serial search and memory-based search, but the two processes interact: prolonged search may interfere with the use of memory-based information. These hypotheses remain to be tested.
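The horse-race intuition can be checked with a small Monte Carlo sketch. It is a generic race between two finishing times, not Logan's full instance theory, and every parameter is a hypothetical assumption: normally distributed finishing times with SD equal to 30% of the mean, floored at 1msec, and a memory process whose speed does not depend on the display. `simulate_race` is a hypothetical name.

```python
import random


def simulate_race(serial_mean, memory_mean, n_trials=20000, cv=0.3, seed=0):
    """Monte Carlo sketch of a race between serial search and memory retrieval.

    Returns (mean RT from serial search alone, mean RT when memory races it,
    proportion of trials won by memory). Finishing times are drawn from normal
    distributions with SD = cv * mean, floored at 1 msec (both assumptions).
    """
    rng = random.Random(seed)

    def finish_time(mu):
        return max(1.0, rng.gauss(mu, cv * mu))

    total_serial = total_race = 0.0
    memory_wins = 0
    for _ in range(n_trials):
        serial = finish_time(serial_mean)
        memory = finish_time(memory_mean)
        total_serial += serial              # RT without a memory trace (unrepeated)
        total_race += min(serial, memory)   # repeated display: the faster process responds
        memory_wins += int(memory < serial)
    return total_serial / n_trials, total_race / n_trials, memory_wins / n_trials


# Hypothetical means (msec): a fast and a slow serial search, same memory process.
for label, serial_mean in (("fast search", 1000.0), ("slow search", 1800.0)):
    base, race, p_mem = simulate_race(serial_mean, memory_mean=1200.0)
    print(f"{label}: cueing = {base - race:.0f} msec, memory wins {p_mem:.0%} of trials")
```

Under these assumptions memory wins far more often, and the simulated cueing is several times larger, when serial search is slow; this is the prediction that the present data did not bear out.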

In summary, although selective attention modulates implicit learning of repeated search context (Jiang & Chun, 2001), its effect on learning is not graded. Increasing attentional dwell time on individual search elements does not always increase contextual cueing from these elements. Future studies must delineate exactly how attention contributes to associative learning in contextual cueing.

Acknowledgements

This research was supported by NIH MH071788, ARO 46926-LS, and ONR YIP 2005 to Y.V.J. It was conducted while V.R. was visiting Jiang Lab. We thank Tim Vickery and Won Mok Shim for comments and suggestions.

References

- Brady TF, Chun MM. Spatial constraints on learning in visual search: Modeling contextual cueing. Journal of Experimental Psychology: Human Perception & Performance. in press. doi: 10.1037/0096-1523.33.4.798.

- Cartwright-Finch U, Lavie N. The role of perceptual load in inattentional blindness. Cognition. 2007;102:321–340. doi: 10.1016/j.cognition.2006.01.002.

- Chang K, Cave K. When is a stimulus configuration learned as a context? Poster presented at OPAM; 2006.

- Chun MM, Jiang Y. Contextual cueing: Implicit learning and memory of visual context guides spatial attention. Cognitive Psychology. 1998;36:28–71. doi: 10.1006/cogp.1998.0681.

- Duncan J, Humphreys GW. Visual search and stimulus similarity. Psychological Review. 1989;96:433–458. doi: 10.1037/0033-295x.96.3.433.

- Hodsoll JP, Humphreys GW. Preview search and contextual cueing. Journal of Experimental Psychology: Human Perception & Performance. 2005;31:1346–1358. doi: 10.1037/0096-1523.31.6.1346.

- Jiang Y, Chun MM. Selective attention modulates implicit learning. Quarterly Journal of Experimental Psychology. 2001;54A:1105–1124. doi: 10.1080/713756001.

- Jiang Y, Leung AW. Implicit learning of ignored visual context. Psychonomic Bulletin & Review. 2005;12:100–106. doi: 10.3758/bf03196353.

- Jiménez L. Attention and Implicit Learning. Amsterdam: John Benjamins Publishing Co.; 2003.

- Kaptein NA, Theeuwes J, Van der Heijden AHC. Search for a conjunctively defined target can be selectively limited to a color-defined subset of elements. Journal of Experimental Psychology: Human Perception & Performance. 1995;21:1053–1069.

- Kunar MA, Flusberg S, Horowitz TS, Wolfe JM. Does contextual cueing guide the deployment of attention? Journal of Experimental Psychology: Human Perception & Performance. in press. doi: 10.1037/0096-1523.33.4.816.

- Lleras A, von Muhlenen A. Spatial context and top-down strategies in visual search. Spatial Vision. 2004;17:465–482. doi: 10.1163/1568568041920113.

- Logan GD. Toward an instance theory of automatization. Psychological Review. 1988;95:492–527.

- Mack A, Rock I. Inattentional Blindness. Cambridge, MA: MIT Press; 1998.

- McGlinchey-Berroth R, Milberg WP, Verfaellie M, Alexander M, Kilduff PT. Semantic processing in the neglected visual field: Evidence from a lexical decision task. Cognitive Neuropsychology. 1993;10:79–108.

- Nissen MJ, Bullemer P. Attentional requirements of learning: Evidence from performance measures. Cognitive Psychology. 1987;19:1–32.

- Rock I, Gutman D. The effect of inattention on form perception. Journal of Experimental Psychology: Human Perception & Performance. 1981;7:275–285. doi: 10.1037//0096-1523.7.2.275.

- Simons DJ, Chabris CF. Gorillas in our midst: Sustained inattentional blindness for dynamic events. Perception. 1999;28:1059–1074. doi: 10.1068/p281059.

- Tipper SP, Cranston M. Selective attention and priming: Inhibitory and facilitatory effects of ignored primes. Quarterly Journal of Experimental Psychology. 1985;37A:591–611. doi: 10.1080/14640748508400921.

- Turk-Browne NB, Junge J, Scholl BJ. The automaticity of visual statistical learning. Journal of Experimental Psychology: General. 2005;134:552–564. doi: 10.1037/0096-3445.134.4.552.