Abstract

Two masked priming experiments investigated the time course of the activation of sub-phonemic information during visual word recognition. EEG was recorded as participants read targets with voiced and unvoiced final consonants (e.g., fad and fat), preceded by nonword primes that were incongruent or congruent in voicing and vowel duration (e.g., fap or faz). Experiment 1 used a long duration mask (100 ms) between prime and target, whereas Experiment 2 used a short mask (22 ms). Phonological feature congruency began modulating the amplitude of brain potentials by 80 ms; the feature incongruent condition evoked greater negativity than the feature congruent condition in both Experiments. The early onset of the congruency effect indicates that skilled readers initially activate sub-phonemic feature information during word identification. Congruency effects also appeared in the middle and late periods of word recognition, suggesting that readers use phonological representations in multiple aspects of visual word recognition.

Research over the past twenty years established that phonological processing deficits underlie most difficulties in learning to read (for reviews see: Adams, 1990; Goswami, 2007; Liberman et al., 1989; Snowling, 1998), yet the role that phonological processing plays in skilled reading is far from being completely understood. Numerous studies have demonstrated that skilled readers activate phonological representations during visual word recognition (for reviews see: Frost, 1998; Rastle and Brysbaert, 2006), but the nature and function of those representations remain largely unknown. The present study addresses theoretical questions about the input and output of lexical access processes. It investigates when phonological features become relevant in visual word recognition, and whether initial phonological processing is constrained by the lexicality of the prime.

Phonological features are the constituents of phonemes that differentiate entries in the lexicon. Whereas phonological features describe a listener’s internal representation of a spoken word form, phonetic features are the parts of the acoustic signal that indicate the underlying phonological form. Several types of phonological features exist, including manner of articulation, place of articulation, and voicing. The voicing feature, for example, differentiates the /b/ phoneme from the /p/ phoneme, even though these two sounds have the same manner and place of articulation. Thus, slab and slap differ in final consonant voicing. Because final consonant voicing lengthens the preceding vowel, minimal pairs with a final consonant voicing contrast necessarily differ along two dimensions of phonetic features (i.e., voicing and vowel duration). The present experiments manipulated these dimensions in order to investigate whether skilled readers process phonological feature information during visual word recognition.

Many studies have demonstrated phonological priming in visual word recognition using homophones, pseudohomophones, and orthographically matched non-homophone primes (for reviews see: Frost, 1998; Grainger et al., 2006; Rastle and Brysbaert, 2006). These experiments are informative about phonological processing, but they do not reveal how specific the phonological representations used in reading are, because pseudohomophones prime all aspects of phonology (e.g., consonant and vowel segments, phonemes, and phonological features).

Other experiments have investigated the specific nature of phonological representations used in visual word recognition. Several studies report that these representations include syllable information (Ashby and Martin, 2008; Ashby and Rayner, 2004; Carreiras et al., 1993; Carreiras and Perea, 2002; Chen et al., 2003; Ferrand et al., 1997; Hutzler et al., 2005), and there is some evidence for the representation of onset-rime units (Ashby et al., 2006; Treiman et al., 1995) and lexical stress information (Ashby and Clifton, 2005; Columbo, 1992). Thus, previous research indicates that skilled readers’ phonological representations include several levels of phonological information beyond the level of phoneme segments.

The question of whether readers’ phonological representations include sub-phonemic feature information has been investigated recently in two lexical decision studies (Lukatela et al., 2001; 2004). Lukatela et al. (2001) used a four-field masked priming paradigm (i.e., mask-prime-mask-target) to present nonword primes that differed from their targets by one or two features (ZEA-sea vs. VEA-sea). In the 57 ms stimulus-onset asynchrony (SOA) conditions, lexical decision times were faster when the prime differed from the target by one feature than when it differed by two features. In a subsequent paper, Lukatela et al. (2004) varied the phonetic properties of vowel duration by manipulating final consonant voicing, in order to test whether vowel duration information affected lexical decision times. In these masked priming experiments, words like pleat and plead were preceded by a variety of primes: three experiments contrasted an identical word condition (plead/plead) with a condition where the prime’s final consonant differed in voicing (pleat/plead), a condition with a dissimilar lexical prime (roach/plead), or a control string (XXXXX/plead). In the most relevant experiments, lexical decision times were consistently slower for the long vowel duration targets than the short vowel duration targets, replicating an effect reported initially by Abramson and Goldinger (1997). Further, identity priming effects were larger for long vowel duration words than short vowel duration words. The vowel length effect suggests that a detailed phonological representation is accessed during lexical decision. Lukatela et al. (2004) claimed that differences in the identity priming effects for short and long duration words indicate an early role for phonological features in word recognition; they concluded that phonetic and phonological information are activated during lexical access. 
However, lexical decision is a measure of binary decision making (word/not word) and not necessarily a measure of word recognition in terms of identifying a unique lexical entry (Balota and Chumbley, 1984). In addition, reaction times measure the end-point of processing and offer little information about the time-course of phonological effects. Thus, the data from Lukatela and colleagues suggest that phonological features are represented during visual word recognition but do not specify when this activation occurs.

Establishing the time course of activation in visual word recognition is central to understanding how phonological processes operate during reading. According to one conception, skilled readers activate semantic and syntactic processes primarily from direct access of the orthographic form, at least for familiar words (Shaywitz, 2003; Smith, 1999). Were this the case, it would be surprising to find evidence that skilled readers represent specific phonetic correlates of a phonological contrast (i.e., phonological features) early in visual word recognition. Rather, one might expect phonology to become more salient later at the point of lexical identification, as a full phonological representation is activated and used to integrate words into sentences (Frost, 1998). Alternatively, it is possible that phonology plays an early role in the visual word recognition of skilled readers (Perfetti et al., 1988; Perfetti, 1999; Pollatsek et al., 1992; Rayner and Pollatsek, 1989). Readers might generate production codes quickly from the orthographic form, and thereby activate the underlying phonological representation (Ashby, 2006). If readers used orthographic information to activate an initial representation that resembles the acoustic information available in speech, one would expect that production representation to include phonological and phonetic detail.

Previous ERP studies have examined the time-course of phonological processing in visual word recognition, but the large time range of effects (200–500 ms) does not indicate with any precision when phonology first impacts lexical processing. Phonological effects typically appeared between 300 and 500 ms in early ERP studies that required semantic, rhyming, or sentence acceptability judgments (Kutas and Van Petten, 1990; Rugg, 1984; Rugg and Barrett, 1987). However, Kramer and Donchin (1987) reported phonological effects in the 200–300 ms window in a rhyme judgment experiment. More recent studies also tend to find phonological effects between 200 and 300 ms. For example, Newman and Connolly (2004) investigated phonological and orthographic processing using an RSVP paradigm that presented highly predictable sentence contexts to raise readers’ expectations of a particular orthographic word form. When the target word violated readers’ orthographic expectations, they found larger N270 amplitudes than in the congruent word condition that met readers’ expectations. Newman and Connolly (2004) claim that the N270 suggests the operation of phonological recoding processes that are important for sentence integration. Experiments using an oddball paradigm, which required explicit judgments for non-target items, found phonological effects as early as 290 ms (Bentin et al., 1999; Simon et al., 2004). One study that examined orthography-to-phonetic translation processes reported that ERPs differentiated legal from illegal letter strings by 150 ms (Proverbio et al., 2004). However, this effect cannot be attributed solely to phonological/phonetic processing, as string legality was both orthographic and phonological in nature.

In most previous ERP studies, participants read target words that appeared on the screen suddenly, without any prior word form information. Sudden-onset presentations might give rise to word recognition processes that differ from those occurring in more natural reading situations. During silent reading, the extension of the perceptual span to the right of the fixated word (in English) allows readers to obtain word form information before a word is processed foveally during fixation. Eye movement studies of silent reading indicate that skilled readers routinely use this parafoveal form information to facilitate word recognition, even though they are not consciously aware of doing so (see Rayner, 1998 for a review). Therefore, the availability of word form information prior to foveal processing might be key to tapping the time-course of phonological processing during a typical reading experience. Surprisingly, it appears that parafoveal presentation of the advance information is not necessary to obtain these effects; experiments report phonological priming effects when foveal primes are briefly presented during the initial moments of fixation during silent reading (H.W. Lee et al., 1999; Y.A. Lee et al., 1999). Therefore, there is some reason to expect that brief masked primes that present advance information foveally and below the threshold of conscious detection might yield earlier phonological effects in ERPs.

A few ERP studies have used a masked priming paradigm to investigate phonological priming. In a masked-priming study by Grainger et al. (2006), participants read targets (e.g., BRAIN) preceded by pseudohomophone primes (e.g., brane) and orthographic control primes (e.g., brant). Brain potentials were more negative between 250 and 350 ms, at anterior sites only, for the orthographic control condition compared to the pseudohomophone condition. Ashby and Martin (2008) conducted a masked-priming study with syllable congruent and incongruent nonword primes that also yielded an increased negativity to the incongruent condition between 250 and 350 ms. The time course of effects in these two studies suggests that masked priming results in somewhat earlier phonological effects than are reported in most previous ERP studies (i.e., before 300 ms). However, the timing of these effects is still difficult to reconcile with previous eye movement research.

Phonological effects appearing around 250 ms in ERPs would be too late to affect fixation durations during silent reading (Rayner, 1998; Sereno and Rayner, 2003). Given that the phonological similarity of parafoveal and foveal representations consistently decreases fixation durations (Ashby and Rayner, 2004; Pollatsek et al., 1992), phonological effects on fixation duration should occur within 100 – 130 ms after the start of the fixation. This is because there is a 120 – 150 ms delay between programming an eye movement and making an eye movement. Some ERP studies have reported early effects in word recognition that are consistent with this time line. Hauk et al. (2006) found effects of word length and letter frequency around 110 ms and Sereno et al. (1998) found effects of word frequency as early as 130 ms. However, these studies necessarily compared different target words and so possible early effects could be confounded with low level visual differences (e.g., in the letter composition of high and low frequency words). Overall, the results of eye movement studies and some ERP experiments suggest that prelexical phonological priming effects should appear by around 100 ms.
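The timing argument in this paragraph reduces to a subtraction, which the following minimal sketch makes explicit. The 120–150 ms saccade-programming delay comes from the text; the typical fixation duration of about 250 ms is an assumption introduced here for illustration (the text only implies it by treating effects at 250 ms as too late), and the function name is hypothetical.

```python
# Assumption (not stated in the text): a typical fixation lasts about 250 ms.
TYPICAL_FIXATION_MS = 250
# From the text: delay between programming and executing an eye movement.
SACCADE_PROGRAMMING_MS = (120, 150)


def latest_influence_window(fixation_ms, programming_ms):
    """Return (earliest, latest) deadline in ms after fixation onset by which
    a process must act in order to change when the eyes leave the word."""
    shortest, longest = programming_ms
    return fixation_ms - longest, fixation_ms - shortest


window = latest_influence_window(TYPICAL_FIXATION_MS, SACCADE_PROGRAMMING_MS)
print(window)  # (100, 130)
```

Under that assumed fixation duration, the result matches the 100–130 ms window given in the text.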

The present experiments investigated how phonological features are processed in visual word recognition. We examined the ERPs elicited by printed words with voiced final consonants (fad) and unvoiced final consonants (fat) preceded by nonword primes that were congruent or incongruent in terms of voicing and vowel duration (fak/faz). With this design, the congruent and incongruent primes and targets were visually identical; congruency depended on the relationship between the two. The predictions for the present study are straightforward. If readers process feature information, such as voicing, in order to form an initial phonological representation, then the ERP waveforms elicited when a prime is phonologically congruent with the subsequent target should differ from waveforms elicited by the phonologically incongruent condition early in word recognition. However, if skilled readers primarily rely on a visual route to directly access familiar words in the lexicon, then incongruent phonological information should be irrelevant early in word recognition and one would not expect to find any early differences in the congruent and incongruent ERP waveforms.

Methods

Participants

Twenty students at the University of Massachusetts participated in each experiment and were paid $18.00 or received 3 experimental credits. Two students who participated in Experiment 1 also participated in Experiment 2. All participants were right-handed, native English speakers with normal, or corrected to normal, vision. Data from an additional six participants in Experiment 1 and three participants in Experiment 2 were excluded from the grand averages due to technical problems.

Apparatus and procedure

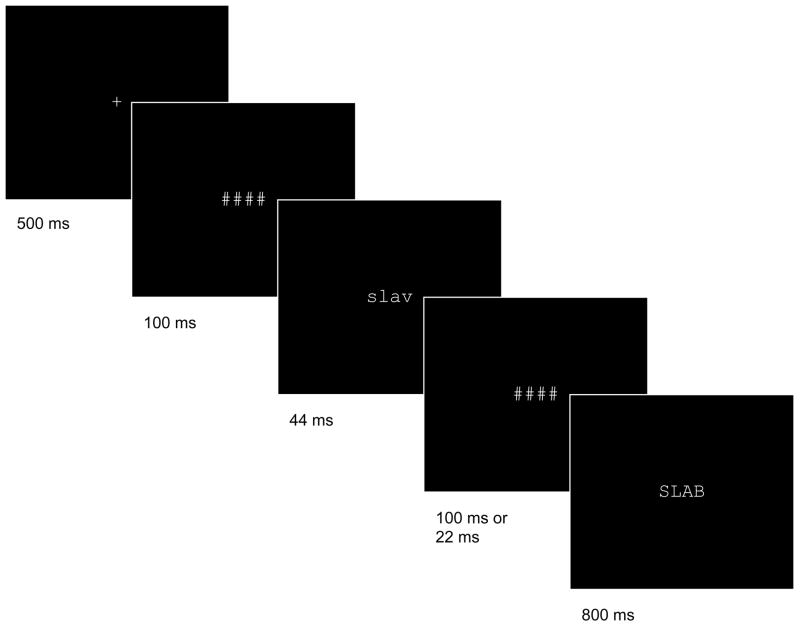

EEG was recorded continuously as participants read single words silently. A 21″ NEC Multisync monitor running at 90 Hz displayed the words in cyan letters on a black background.¹ Participants first saw a ready signal, and initiated the trial with a keypress that was followed by a fixation cross in the center of the screen for 500 ms (Fig. 1). Target words were presented in a four-field masked priming paradigm consisting of a forward mask (100 ms), a lowercase nonword prime (44 ms), a backward mask (100 ms in Experiment 1, 22 ms in Experiment 2), and then the target word in uppercase (800 ms). On each trial, all four fields contained the same number of characters. Most participants reported seeing a row of hash marks (#####) followed by the target word, and a few reported seeing a flash. No one reported seeing letter strings before the targets appeared. Semantic judgments were made on half of the filler trials via keypress. Questions that tapped five semantic categories were presented after the target disappeared from the screen (e.g., is it clothing, does it fly, is it bigger than a shoebox). E-prime software (www.psnet.com) presented the materials and recorded manual responses. The experiment was completed in one session of approximately 60 minutes.

The procedures in the two experiments were identical except for the viewing conditions, the font size, and the duration of the mask intervening between prime and target. Experiment 1 trials were viewed on a fully visible computer screen, whereas in Experiment 2 trials appeared within a 3″ × 5″ window cut into black coreboard that masked the rest of the screen. The font size was reduced from 14 point Courier in Experiment 1 to 10 point Monaco in Experiment 2. The most substantial difference between the two experiments was the use of a long duration backward mask (100 ms) in Experiment 1 and a short duration backward mask (22 ms) in Experiment 2.

Fig. 1.

The masked priming paradigm. A fixation point was followed by a forward mask, a nonword prime, a backward mask, and a word target. All images were shown in cyan on a black background. The duration of the backward mask for Experiment 2 was 22 ms (prime-target SOA = 66 ms) and for Experiment 1 was 100 ms (prime-target SOA = 144 ms).
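The field durations and SOAs reported above follow from simple arithmetic, sketched below. The durations and the 90 Hz refresh rate are taken from the text; the idea that each duration corresponds to a whole number of screen refreshes is an assumption made here for illustration, and the helper names are hypothetical.

```python
REFRESH_HZ = 90  # monitor refresh rate reported in the text

# Field durations in ms, as reported for the four-field paradigm.
PRIME_MS = 44
BACKWARD_MASK_MS = {"Experiment 1": 100, "Experiment 2": 22}


def to_frames(duration_ms, refresh_hz=REFRESH_HZ):
    """Nearest whole number of screen refreshes for a nominal duration.
    Assumption: stimulus timing was locked to the refresh cycle."""
    return round(duration_ms * refresh_hz / 1000)


def prime_target_soa(backward_mask_ms, prime_ms=PRIME_MS):
    """Prime-target SOA = prime duration + backward mask duration."""
    return prime_ms + backward_mask_ms


soas = {name: prime_target_soa(ms) for name, ms in BACKWARD_MASK_MS.items()}
frames = {ms: to_frames(ms) for ms in (100, 44, 22)}

print(soas)    # {'Experiment 1': 144, 'Experiment 2': 66}
print(frames)  # {100: 9, 44: 4, 22: 2}
```

At 90 Hz one refresh lasts about 11.1 ms, so the nominal 44 ms and 22 ms fields sit close to four and two refreshes, and the 100 ms mask is exactly nine.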

Materials and design

The materials for Experiments 1 and 2 were identical. Participants read 48 words with a final unvoiced consonant (e.g., fat) and 48 words with a final voiced consonant (e.g., fad), preceded by masked nonword primes that were incongruent or congruent with the target in terms of the voicing of the final consonant. The nonword primes differed from targets by one letter, which corresponded to the final consonant phoneme. Twelve targets were preceded by incongruent primes that differed from the target only in voicing and vowel duration (e.g., cobe/cope). The remaining incongruent primes differed from their targets by at least one additional feature (e.g., neek/need). Many of our target words were taken from the materials in Lukatela et al. (2004). Target words ranged from 3–5 letters in length (mean = 3.9 letters), with voiced and unvoiced targets matched for length and initial bigram. Unvoiced and voiced targets had mean frequencies of 31 and 20 occurrences per million, respectively (Francis and Kucera, 1982).

Participants read these 96 target words interspersed with 318 unrelated filler items that were also preceded by masked primes. One half of the filler items were similar in length, frequency, and morphology to the experimental items. The other half comprised longer, morphologically complex words. Unvoiced and voiced target words were presented in a different random order for each participant. Each participant read every target word once, with half of the targets preceded by incongruent primes and the other half preceded by congruent primes. For a given participant, each pair of unvoiced and voiced targets was preceded by the identical masked prime, once in the incongruent condition and once in the congruent condition. Material lists and participant groups were fully counterbalanced.
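The congruency manipulation can be illustrated with a toy classifier. This is only a sketch under strong assumptions: it treats the final consonant letter as a stand-in for the final consonant phoneme, ignores a trailing silent e, and uses a small hypothetical letter-to-voicing table. It is not the procedure used to construct the materials.

```python
# Toy letter-to-voicing table (an assumption for illustration only);
# real materials would be classified from phonemic transcriptions.
VOICED = set("bdgvz")
UNVOICED = set("ptkfs")


def final_consonant(letter_string):
    """Final consonant letter, ignoring a trailing silent e (as in cope)."""
    stem = letter_string.lower().rstrip("e")
    if not stem or stem[-1] not in VOICED | UNVOICED:
        raise ValueError(f"cannot classify final consonant of {letter_string!r}")
    return stem[-1]


def is_voiced(letter_string):
    return final_consonant(letter_string) in VOICED


def congruency(prime, target):
    """Congruent when prime and target agree in final consonant voicing."""
    return "congruent" if is_voiced(prime) == is_voiced(target) else "incongruent"


# The same prime serves both members of a target pair, once per condition.
for target in ("fat", "fad"):
    print("faz", target.upper(), congruency("faz", target))
# faz FAT incongruent
# faz FAD congruent
```

Because one prime is paired with both targets, the prime and target sets are the same across conditions, and only the prime-target relationship changes.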

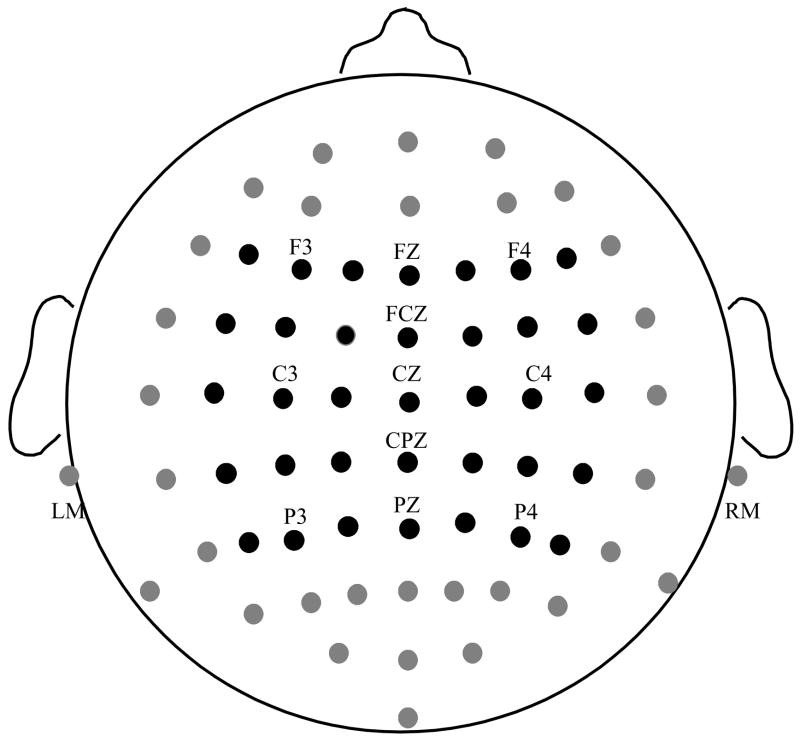

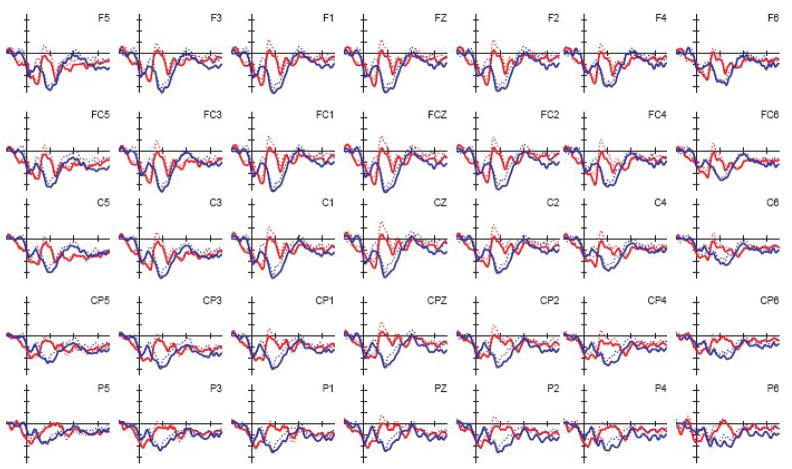

ERP methods and data analysis

EEG was recorded reference-free in DC from sixty-four Ag/AgCl active electrodes arranged in the 10–20 international system (Fig. 2). EOG electrodes were placed on the outer canthi as well as above and below the left eye. EEG and EOG potentials were amplified by Biosemi Active-Two amplifiers and sampled at a rate of 512 Hz. The EEG signals for each subject were band-pass filtered at 0.16 – 30 Hz and referenced to averaged mastoids offline using EEProbe software (www.ant-neuro.com). Parts of the signal that contained EOG deflections of +/− 30 μV were rejected automatically and trials with low frequency shifts and other artifacts were rejected by hand. The EEG was segmented into epochs from the first mask (−244 ms in Experiment 1, −166 ms in Experiment 2) to 700 ms post-target onset. Next, the EEG for each electrode was averaged across trials for each participant in each condition, yielding individual averages that were baseline corrected for either 82 ms (Experiment 1) or 84 ms (Experiment 2) during the forward mask preceding the prime. Participant averages were then processed to produce the grand average for each condition.
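The averaging steps described above can be sketched with toy numbers. The sampling rate, epoch limits, and baseline lengths come from the text; the order of operations follows the paragraph (trial average, then baseline correction, then grand average). Placing the baseline window at the start of the epoch is an assumption, the function names are hypothetical, and the voltages are invented for illustration. The sketch does not reproduce the EEProbe processing.

```python
SAMPLE_RATE_HZ = 512  # sampling rate reported in the text


def ms_to_samples(ms, rate_hz=SAMPLE_RATE_HZ):
    """Nearest whole number of samples spanning a duration in ms."""
    return round(ms * rate_hz / 1000)


def average(waveforms):
    """Point-by-point mean of equal-length waveforms."""
    return [sum(points) / len(waveforms) for points in zip(*waveforms)]


def baseline_correct(waveform, n_baseline):
    """Subtract the mean of the first n_baseline samples from every sample.
    Assumption: the baseline window begins at the start of the epoch."""
    base = sum(waveform[:n_baseline]) / n_baseline
    return [v - base for v in waveform]


# Epoch and baseline lengths in samples (Experiment 1, Experiment 2).
print(ms_to_samples(244 + 700), ms_to_samples(166 + 700))  # 483 443
print(ms_to_samples(82), ms_to_samples(84))                # 42 43

# Toy data: two participants, two trials each, four samples per epoch.
trials = {
    "p1": [[1.0, 3.0, 5.0, 7.0], [3.0, 5.0, 7.0, 9.0]],
    "p2": [[0.0, 0.0, 2.0, 4.0], [0.0, 0.0, 4.0, 8.0]],
}
participant_avgs = [baseline_correct(average(t), n_baseline=2)
                    for t in trials.values()]
grand = average(participant_avgs)
print(grand)  # [-0.5, 0.5, 3.0, 5.5]
```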

Fig. 2.

Approximate location of 64 scalp electrodes. Data from electrodes shown in black were included in analyses. ANOVAs included two electrode position factors: Rows (5 levels) and Columns (7 levels).

Thirty-five electrodes were selected a priori to create complete rows and columns that coded for electrode position in the ANOVAs, and these data were included in the analyses.² The ANOVAs for each experiment included four within participant factors: Congruency between prime and target (incongruent or congruent), Target type (voiced or unvoiced), and two Electrode position factors (Rows 1–5 and Columns 1–7). As the voicing differences in the targets were confounded with physical differences in letters in the two sets of targets (slab vs. slap), we present only congruency effects evident across the full set of items. Experiment was treated as a between participants factor in omnibus ANOVAs that tested 20 ms bins. Statistical analyses for three time periods (early: 80–180 ms, middle: 200–520 ms, and late: 520–700 ms) were guided by congruency effects (or congruency by electrode position interactions) in consecutive bins. The N1 was identified as the first negative peak visible after target onset in each experiment. We report Greenhouse-Geisser adjusted p-values for contrasts with more than 1 degree of freedom. Effects with p-values at the .05 level or less were considered statistically significant. Significant interactions with congruency and electrode position were followed up with simple effects tests.
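As a simplified illustration of the bin-wise tests, the sketch below computes the main effect of a two-level within-participant factor in one time bin. It relies on the standard identity that, for two levels, F(1, n − 1) equals the squared paired t statistic. It omits the electrode position factors and the Greenhouse-Geisser adjustment used in the reported ANOVAs, the function names are hypothetical, and the amplitudes are invented.

```python
from statistics import mean, stdev


def bins(start_ms, stop_ms, width_ms=20):
    """Consecutive (start, end) analysis bins covering a time window."""
    return [(t, t + width_ms) for t in range(start_ms, stop_ms, width_ms)]


def paired_f(cond_a, cond_b):
    """F(1, n - 1) for a two-level within-participant factor,
    computed as the squared paired t statistic."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / n ** 0.5)
    return t * t, (1, n - 1)


print(bins(80, 180))
# [(80, 100), (100, 120), (120, 140), (140, 160), (160, 180)]

# Invented mean amplitudes (microvolts) in one bin for four participants.
incongruent = [-2.0, -1.0, -3.0, -2.0]
congruent = [-1.0, -1.0, -1.0, -1.0]
f_value, df = paired_f(incongruent, congruent)
print(round(f_value, 6), df)  # 6.0 (1, 3)
```

With twenty participants per experiment, the same calculation yields the F(1,19) form reported for the congruency main effects.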

Results

Experiment 1

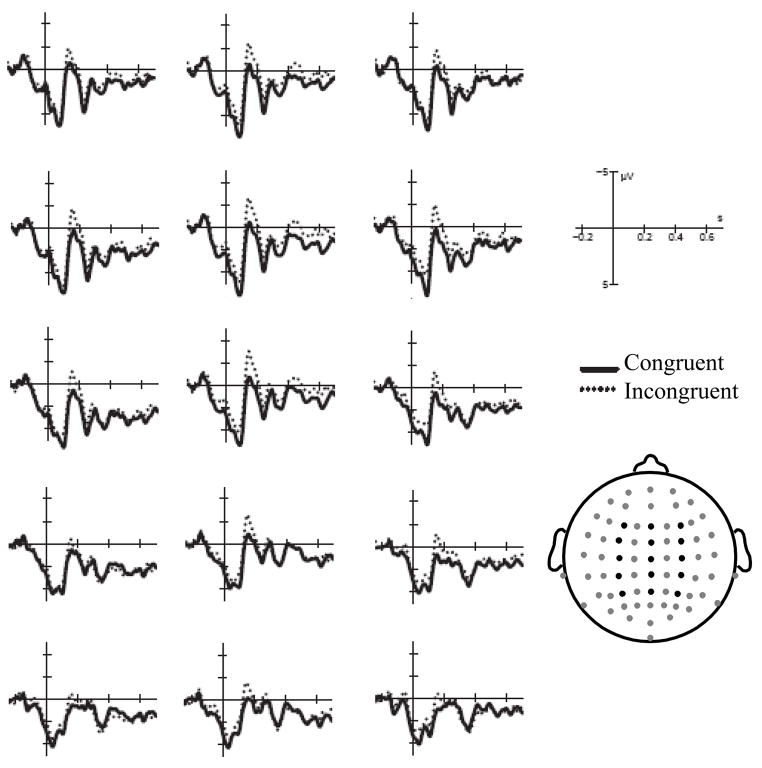

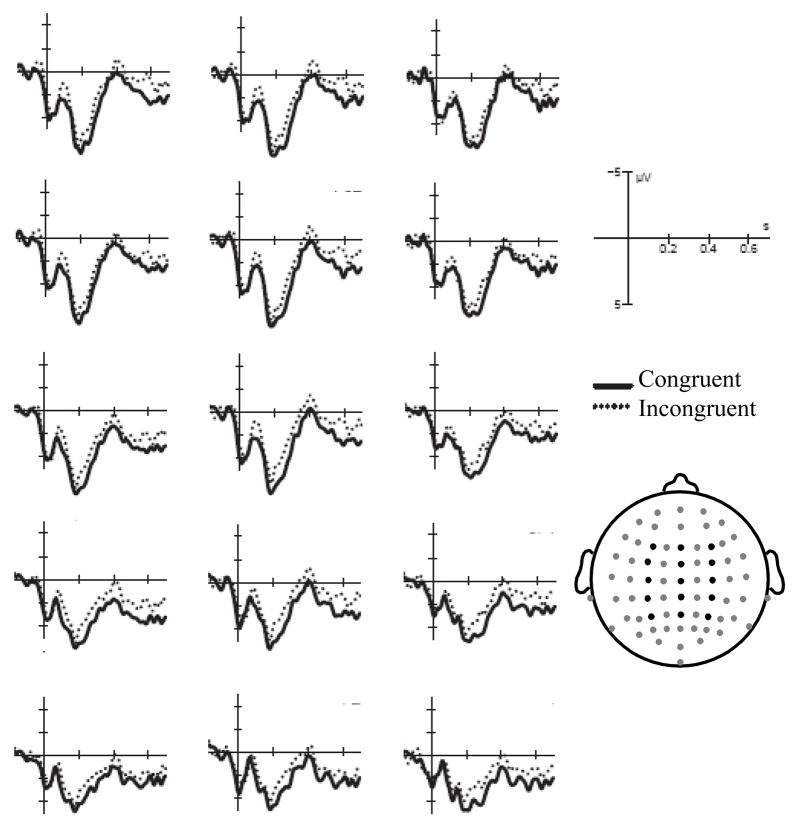

Figure 3 presents the effects of feature congruency between the prime and the target for Experiment 1 (the long backward mask). Early in word recognition, the feature-incongruent condition elicited a more negative waveform on average than did the feature-congruent condition in the 80 – 180 ms time range, F(1,19)= 8.33, p=.01. The effect appeared somewhat stronger at midline sites (Fig. 6), F(6,114)= 3.14, p=.03. To determine the onset of this effect, significance was tested in two additional time windows: 60 – 80 ms, F<1, and 80– 100 ms, F(1,19)= 5.21, p=.03. The offset of the congruency effect was determined by testing the 180 – 200 ms window, F(1,19)= 3.22, p=.09. The 80 –180 ms window best captures the largest difference between conditions in the early period. Measurement of the waveform at FCZ (averaged over subjects) indicates that the first negative-going wave (N1) peaked at 148 ms.

Fig. 3.

Feature congruency effects in Experiment 1. Targets preceded by congruent primes (solid line) evoke a smaller negativity than those in the incongruent condition (dotted line) between 80 –180 ms.

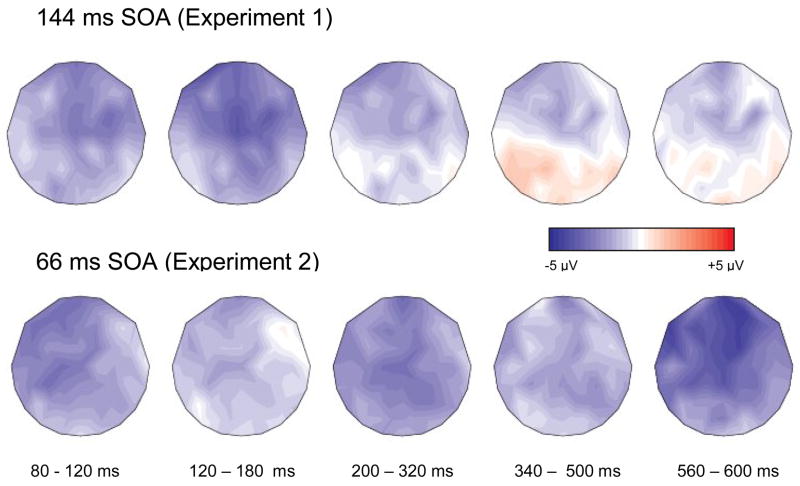

Fig. 6.

Topographic maps illustrate the early congruency effect in Experiments 1 and 2. The longer duration of the congruency effect in the long SOA experiment, relative to the short SOA experiment, is evident.

Neither the congruency effect nor any interactions were significant between 200 and 320 ms, F(1,19)= 1.04, p=.32. Congruency interacted with electrode position in the 320–520 ms time range, F(4,76)= 4.52, p=.02. Fig. 6 illustrates the anterior congruency effect. As in the previous period, scalp potentials were more negative in the incongruent feature condition than in the congruent condition. The onset of the congruency by site interaction was tested in two time windows: 300–320 ms, F(4,76)=2.07, p=.16, and 320–340 ms, F(24,456)= 2.02, p=.045. Measurement of the grand average waveform at FCZ indicates that this anterior negativity peaked at 447 ms. The offset of the congruency effect was determined by testing the 520–540 ms bin, Fs<1. After 520 ms, no congruency effects were significant, Fs<1.

Experiment 2

Figure 4 presents the effects of feature congruency between the prime and the target for Experiment 2 (the short backward mask). The feature-incongruent condition elicited a more negative waveform than did the feature-congruent condition in the 80 –120 ms window, F(1,19)= 10.41, p=.004. The onset of this congruency effect was tested in two consecutive time windows: 60– 80 ms, F<1, and 80–100 ms, F(1,19)= 10.39, p=.004. The offset of the congruency effect was determined by testing the 120 –140 ms window, F(1,19)= 1.31, p=.27. Inspection of the scalp distribution of potentials (Fig. 6) in the 120 – 140 ms window suggests a lingering left-lateralized congruency effect that tended to be stronger at anterior sites, F(4,76)= 3.12, p=.06. The 80 –120 ms time range best captures the main effect of congruency in the early period. Measurement of the waveform at FCZ (averaged over subjects) indicates that the first negative going wave (N1) peaked at 100 ms.

Fig. 4.

Feature congruency effects in Experiment 2. Targets preceded by congruent primes (solid line) evoke a smaller negativity than those in the incongruent condition (dotted line) between 80 –120 ms.

The congruency effect was significant in the middle period of word recognition in the 200 –320 ms time range, F(1,19)= 8.28, p=.01. As with the earlier congruency effect, scalp potentials were more negative in the incongruent feature condition than in the congruent condition. The onset of this main effect was determined by testing for significance in two time bins: 180 – 200 ms, F(1,19)= 1.42, p=.24, and 200 – 220 ms, F(1,19)= 7.20, p=.015. The offset of the feature congruency effect was determined by testing the 320 – 340 ms window, F(1,19)= 3.25, p=.09. The 200 – 320 ms time range best captures the feature congruency effect in the middle period of word recognition.

In the later period of word recognition, a main effect of feature congruency reached significance in the 560 – 600 ms time range, F(1,19)= 4.45, p=.048, as well as a congruency by electrode row interaction, F(4,76)= 3.49, p=.045. Inspection of the waveforms suggests that the effect appeared weaker at the posterior sites tested. We determined the time range that best captured the congruency effect by testing for significance in two time bins: 540 – 560 ms, F(1,19)= 3.09, p=.10, and 560 –580 ms, F(1,19)= 3.48, p=.08. The marginal significance of the congruency effect in the latter window suggests a gradual onset. The offset of the effect was tested in the 600 – 620 ms bin, F(1,19)= 1.61, p=.22. The congruency by row interaction was not significant at any of these time windows (Fs<1.5). The 560 – 600 ms time range best captures the main effect of feature congruency in the late period of word recognition. Neither a main effect of congruency, F(1,19)= 2.50, p=.13, nor any interactions with electrode position (Fs<1) were significant between 600 – 700 ms.

Experiments 1 and 2

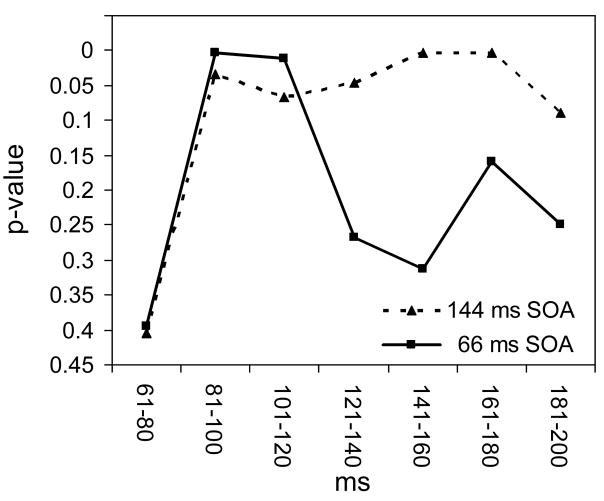

Omnibus ANOVAs tested for the effect of Experiment. The possible contributors to the Experiment factor are discussed further in the Discussion section. In addition to different participants, the experiments also differed in the duration of the mask that appeared between the prime and the target (Experiment 1 mask=100 ms, Experiment 2 mask= 22 ms). Before 320 ms we observed a main effect of Experiment in each time range: 80–180 ms, F(1,38)=5.53, p=.024, and 200–320 ms, F(1,38)=7.58, p=.009. The main effect of Experiment was not significant in the following time ranges: 340–520 ms, F<.5; 520–600 ms, F<0.2; 600–700 ms, F<0.6. The waveforms presented in Figure 5 visually contrast the brain potentials in Experiment 1 and Experiment 2. Inspection of the waveforms supports statistical tests indicating that similar congruency effects occurred in both experiments until 320 ms, and illustrates substantial differences in the waveforms evoked in each experiment.

Fig. 5.

Feature congruency effects in Experiment 1 (shown in red) and 2 (shown in blue). Waveforms in the congruent conditions are drawn in solid lines. Main effects of Experiment are evident 0 – 320 ms after target onset, but no Experiment by Congruency interaction appeared until after this window.

The Experiment factor did interact with congruency and electrode position in the 340 – 500 ms time range, F(4,152)= 3.51, p=.04. This interaction suggests that the presence of a congruency effect at anterior sites in Experiment 1 and the absence of a significant effect in Experiment 2 in this time range is the single reliable difference in congruency effects between Experiments.

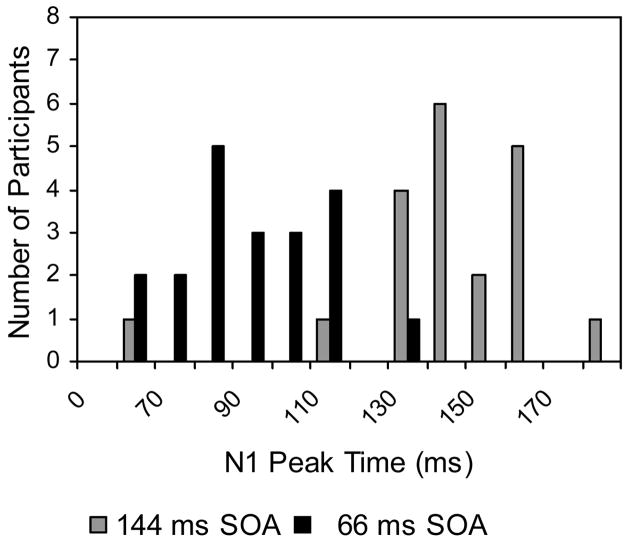

Summary of Results

Significant feature-congruency effects appeared by 100 ms after target onset in both experiments (Fig. 8). The congruency effect consistently manifested as more negative-going potentials in the incongruent condition as compared to the congruent condition. In both experiments, the congruency effect appeared on the N1, irrespective of its timing. However, the feature congruency effect does not appear to be time-locked to the N1, as its onset preceded the N1 in the first experiment, which used the longer mask. Figure 5 suggests that mask duration did not affect the initial timing of congruency effects, but did affect the timing of the N1 in the grand average. Figure 6 illustrates the relative durations of the early congruency effect in each experiment. The distribution of mean N1 peak times for participants (Fig. 7) indicates that the short mask duration decreased the latency of the N1 peaks as compared to the long mask duration. In the middle period, a main effect of congruency appeared between 200 and 320 ms with the short mask in Experiment 2, but was not significant in Experiment 1. The Experiment factor (mask duration) interacted with the congruency effect and electrode position between 340 and 500 ms. As seen in Fig. 6, a congruency effect is evident at anterior sites in Experiment 1, whereas no significant effect was observed in Experiment 2 during this time. Later in word recognition, a congruency effect did appear in Experiment 2 between 560 and 600 ms.

Fig. 8.

Significance tests for the early congruency effects. The feature congruency effect onset around 80 ms in both experiments. In Experiment 2 (66 ms SOA), the effect was significant for a narrower time window (80 – 120 ms) relative to Experiment 1 (80 – 180 ms). In Experiment 1, the main effect of congruency was marginal between 100 – 120 ms, F(1,19) = 3.82, p=.066, and a congruency by electrode position interaction was significant between 120 –140 ms, F(6,114) = 2.99, p=.046.

Fig. 7.

N1 peak times for participants in Experiments 1 and 2. The distribution of the N1 peak times shifted to the left in the short SOA experiment (shown in black) as compared to the distribution of N1 peak times in the long SOA experiment (shown in grey).

Discussion

Two masked priming experiments investigated phonological processing in visual word recognition, shedding light on how readers use sub-phonemic information. The central result of this study is a phonological feature congruency effect that was significant in three periods: early (80 –180 ms), middle (200 – 520 ms), and late (520 – 700 ms). These periods encompass the first, second, and third instance of observed congruency effects. In each period, the brain potentials of skilled readers were more negative when reading fat preceded by faz (the voicing incongruent condition) as compared to when fat was preceded by fak (the voicing congruent condition). The initial feature congruency effect onset around 80 ms in both experiments, which is the earliest phonological activation to be replicated in ERPs. The congruency effect is the first electrophysiological evidence to suggest that phonological processing in visual word recognition involves the activation of sub-phonemic feature information. The early onset of the effect also indicates a potential temporal alignment of ERP and eye movement accounts of phonological processing in visual word recognition. The appearance of a congruency effect in several periods of word recognition indicates that feature activation percolates through the cognitive system, affecting several aspects of the word identification process (Holcomb and Grainger, 2007).

In terms of the nature of the phonological representations used in word recognition, the congruency effect suggests that skilled readers automatically activate sub-phonemic information to form highly detailed phonological, and possibly phonetic, representations. One could claim, however, that in this particular study participants needed to activate feature information in order to access a particular lexical entry and distinguish fat from fad. Were such minimal pairs the only items in our experiments, the congruency effect might be an artifact of strategic processing. Given that participants saw these words in the context of three hundred other words which were not minimal pairs, we propose that the activation of phonological features is automatic in word recognition.

Experiments 1 and 2 provide electrophysiological evidence that converges with existing behavioral data for the role of phonological features in visual word recognition (Lukatela et al., 2001, 2004). Unfortunately, the present experiments cannot determine whether phonetic or phonological processing is the main contributor to our congruency effect. This is because in our materials, as in those of Lukatela et al. (2004), vowel duration is the phonetic correlate of underlying differences in phonological features (e.g., voicing) in English. Thus, we refer to our effect as phonological because phonological similarity necessarily contributes to the congruency effect, but phonetic processing may also be involved.

The high temporal resolution of the present ERP data contributes new information about the time course of feature processing. The onset of the feature congruency effect around 80 ms suggests that readers process features in the initial phases of visual word recognition. We attribute the congruency effect to the fundamental nature of features in language processing (Lahiri and Reetz, 2003; Lukatela and Turvey, 2001; Pulvermuller et al., 2006). It would be unexpected to find such early priming effects for suprasegmental phonological information (e.g., rime, initial syllable, or lexical stress), as they comprise feature information that is processed initially.

The early congruency effect holds implications for understanding the front-end processes involved in lexical access. In terms of the input to visual word recognition, little is known about how the visual stimulus is converted to a language representation. It is possible that initial letter processing activates phonological representations without phonetic activation, and the congruency effect is consistent with this claim. The early onset of the congruency effect suggests that when congruent phonological feature information is activated by the prime letter string, it facilitates the initial activation of the target’s phonological features during word recognition. Alternatively, it is possible that skilled readers might activate a phonetic representation that serves as the “acoustic input” to subsequent word recognition processes (Ashby, 2006). The disjunction of the congruency effect onset (around 80 ms) and the N1 peak times in Experiment 1 (Fig. 7) could suggest that feature congruent primes facilitate initial phonetic activation that is utilized by, but is separable from, lexical access processes. However, this conclusion would depend on a close link between N1 latency and a particular stage of lexical access.

We now discuss the priming effects we observed in relation to previous ERP studies of phonological priming in visual word recognition. Previous studies typically find phonological effects between 200 – 500 ms. Two recent studies used a masked-priming paradigm similar to the one used in the present experiments. Grainger et al. (2006) found increased negativity to targets preceded by orthographic control primes as compared to pseudohomophone primes between 250 – 350 ms at anterior sites (N250). When Ashby and Martin (2008) used syllable congruent and incongruent nonword primes, they also found an increased negativity in the incongruent condition compared to the congruent condition between 250 – 350 ms. The phonological effects in these experiments are comparable to the congruency effects observed in Experiment 2 between 200 – 320 ms and in Experiment 1 between 320 – 520 ms (respectively). The effects tended to be stronger at anterior sites and consistently showed more negative-going potentials in the phonologically incongruent condition. However, our congruency effects were evoked by nonword letter strings, whereas Grainger et al. used pseudohomophone primes that are entries in the phonological lexicon. Thus, the present data suggest that feature congruency effects in visual word recognition are not dependent on the lexicality of the prime. The similarity in the time course of Newman and Connolly’s N270, the effects in previous masked priming ERP studies, and the present congruency effects in the middle period suggest that the phonological representations used for sentence integration could include feature and syllable information.

Studies of phonological priming in auditory word recognition are also useful in interpreting our results. Cognitive psychologists have posited an interface between spoken language and reading processes for quite some time (Abramson and Goldinger, 1997; Huey, 1908; Lukatela et al., 2004; Rayner and Pollatsek, 1989). Recently, Poldrack et al. (2001) reported initial fMRI evidence for a neuro-anatomical locus for an interface between speech and reading in the pars triangularis. Therefore, theoretical and empirical justification exists for considering our results in the context of auditory studies of phonological priming.

In this volume, Newman and Connolly report a phonological mapping negativity (PMN) peaking around 290 ms that tended to be more negative at anterior sites and was not affected by lexicality of the target. As the PMN was elicited by a phoneme deletion task, it is difficult to link it with the feature congruency effect that peaked around 270 ms in our Experiment 2. Still, the feature congruency effect appears similar to the PMN in terms of its distribution, polarity, and direction of effect. An auditory ERP study by Praamstra et al. (1994) may be relevant to the late N400-type response observed in the present Experiment 1 (long mask duration). In Praamstra et al., participants listened to pairs of single syllable words; targets were preceded by alliterating and nonalliterating words and nonwords. This study found a phonological N400-type response to nonalliterating words and nonwords, relative to alliterating pairs, that started as early as 250 ms and peaked around 450 ms. Praamstra et al.’s effect is temporally similar to the later negativity we observed in Experiment 1 that peaked around 450 ms. Another similarity between the two sets of waveforms is the long window of negativity before the N450 peak in the present study and in Praamstra et al.’s. However, the greatest difference in this preceding window appeared at posterior sites in Praamstra et al., whereas the slow onset of this congruency effect in Experiment 1 tended to be stronger at anterior sites. Praamstra et al. suggest that the alliterative congruency effect is earlier than the rhyming effect in their first experiment because the salient parts of the primes occurred in early positions. Although this may be correct in terms of speech perception, our data indicate that feature differences located at the end of words can give rise to early priming effects as well.

In summary, previous ERP research in visual and auditory word recognition has yielded effects similar to those found in the present study. Previous studies observed that brain potentials were more negative in the incongruent condition than in the congruent condition between 200 – 500 ms. The present congruency effects appeared in this time range and were in a similar direction. Some studies reported that this negativity tended to be stronger at anterior sites, as we observed in the present study. Auditory studies found that this negativity is independent of target lexicality, and our congruency effects were elicited by nonword primes. Thus, it seems that the congruency effects in this middle period of word recognition are at least superficially similar to the phonological effects reported in previous ERP research.

An additional result that may interest some readers is the effect of backward mask duration on target word processing. We manipulated the duration of the backward mask in order to investigate the effect of prime-target SOA (stimulus-onset asynchrony) on phonological priming processes. Experiment 1 used a long mask between prime and target (SOA of 144 ms), whereas Experiment 2 used a short mask (SOA of 66 ms).³ A main effect of Experiment was evident before 320 ms, and SOA interacted with the congruency effect later in word recognition (340 – 500 ms). Inspection of the data in Figs. 5 and 7 suggests that a shorter prime-target SOA affected three aspects of the waveform in Experiment 2 as compared to Experiment 1: the latency of the N1, the latency of congruency effects in the middle period, and the absence of a significant N450-type component. These neurophysiological differences may contribute to the behavioral priming effect observed by Lukatela et al. (2001).
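As a rough arithmetic check on the timing described above, the sketch below recovers the prime duration implied by each reported SOA and backward mask duration. It assumes that the backward mask fills the whole interval between prime offset and target onset, so that SOA = prime duration + mask duration. The function name and the dictionary layout are illustrative and are not part of the original analysis.

```python
# Reported timing values in ms: SOAs from this section, mask durations from the abstract.
EXPERIMENTS = {
    "Experiment 1": {"soa_ms": 144, "backward_mask_ms": 100},
    "Experiment 2": {"soa_ms": 66, "backward_mask_ms": 22},
}


def implied_prime_duration(soa_ms, backward_mask_ms):
    """Prime duration implied by the ASSUMED relation SOA = prime + backward mask."""
    return soa_ms - backward_mask_ms


for name, timing in EXPERIMENTS.items():
    prime_ms = implied_prime_duration(timing["soa_ms"], timing["backward_mask_ms"])
    print(f"{name}: implied prime duration = {prime_ms} ms")
```

Under this assumption both experiments imply the same prime duration (44 ms), which would be consistent with a design in which only the mask duration differed between experiments.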

The shorter SOA in Experiment 2 accompanied a decreased latency of the N1 (Figs. 6 and 7). We interpret this difference in N1 latency between Experiment 1 (around 150 ms) and Experiment 2 (around 100 ms) as a decrease because the N1 timing in Experiment 1 has been observed in previous masked priming ERP studies (Ashby and Martin, 2008; Grainger et al., 2006), as well as in experiments using an RSVP paradigm (Hauk et al., 2006; Sereno et al., 1998). Finding a decreased N1 latency at a shorter prime-target SOA is consistent with a previous study that manipulated SOA within subjects. Holcomb and Grainger (2007) conducted a masked repetition priming experiment that held prime duration constant at 40 ms and varied the prime-target SOA (e.g., 60, 180, 300, 420 ms). A visual comparison of the CZ waveforms (their Figure 1, A and B) suggests that the grand average N1 peaked before 100 ms in the 60 ms SOA condition and peaked around 150 ms in the 180 ms SOA condition. Although the SOAs differ somewhat in the present Experiments 1 and 2 (144 ms and 66 ms, respectively), we essentially replicate the pattern of a shorter SOA decreasing the latency of the anterior N1.⁴ Holcomb and Grainger (2007) also noted that their repetition priming effect was significant over a wider time range in the 180 ms SOA condition, relative to the 60 ms SOA condition. Similarly, the early congruency effect was significant over a 100 ms time window in our longer SOA experiment, but it was defined by a 40 ms window in our shorter SOA experiment. The shorter SOA also decreased the latency of the subsequent congruency effect in the middle period; this effect appeared between 200 – 320 ms in Experiment 2 but between 320 – 520 ms in Experiment 1.
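The window comparisons in this paragraph reduce to simple interval arithmetic. The sketch below tabulates the reported significance windows and their widths. The window boundaries are taken from the text, whereas the data structure and the helper name are purely illustrative.

```python
# Reported significance windows in ms (start, end), as stated in the text.
WINDOWS = {
    ("early", "Experiment 1"): (80, 180),
    ("early", "Experiment 2"): (80, 120),
    ("middle", "Experiment 1"): (320, 520),
    ("middle", "Experiment 2"): (200, 320),
}


def width_ms(window):
    """Width of a (start, end) time window in ms."""
    start, end = window
    return end - start


for (period, experiment), window in WINDOWS.items():
    print(f"{period:>6} | {experiment} | {window[0]}-{window[1]} ms | width {width_ms(window)} ms")

# Difference in onset of the middle-period effect between the two experiments.
onset_shift = WINDOWS[("middle", "Experiment 1")][0] - WINDOWS[("middle", "Experiment 2")][0]
print(f"Middle-period onset is {onset_shift} ms earlier in Experiment 2")
```

The early windows come out at 100 ms and 40 ms wide, matching the values given above, and the middle-period effect begins 120 ms earlier at the shorter SOA.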

Two mechanisms could account for the relationship between shorter SOA and the decreased N1 latency. One possibility is that the long SOA more effectively masked the prime, and made it less available to influence target processing. Were that the case, then congruency effects should shift with the N1 and that was not observed. Rather, our early congruency effects onset around 80 ms irrespective of SOA. Another possibility is that the long SOA extended the time available for processing the prime, allowing higher-level representations to develop that affected target processing. In terms of this study, higher order activation could entail competition between the word neighbors of our nonword primes and our targets. The observation of an N450 in the long SOA experiment is consistent with this interpretation. By comparison, the shorter SOA would minimize the processing time of the prime. This would account for the decreased latency of the N1 as well as the absence of later competition effects in Experiment 2.

The absence of an N450-like competition effect in Experiment 2 was indicated by a three-way interaction between 340 – 500 ms (see Fig. 6). The interaction was characterized by a centro-anterior N450-type congruency effect that was evident in the long SOA experiment, but not in the short SOA experiment. The observation of an N450 in Experiment 1 is consistent with the interpretation of this component being phonological in origin, as it was evoked by nonword primes that would be unlikely to generate semantic competition. It is tempting to explain this interaction in terms of the longer mask in Experiment 1 allowing time for competition effects to arise, but the Grainger et al. experiment reported a reduced N400 to targets in the pseudohomophone condition relative to the control even though they used a brief mask (17 ms). Therefore, it seems that the lack of a significant N450 in Experiment 2 cannot be attributed to the brief mask duration. However, it is possible that the activation of nonword primes in our experiment might spread more slowly than activation of familiar phonological forms (i.e., the pseudohomophones in Grainger et al.). In that case, a longer prime-target SOA would be needed to elicit a phonological N450, and this would explain why we did not find that effect in the short prime-target SOA experiment.

The waveforms in the shorter SOA experiment exhibit a decreased N1 latency, a decreased congruency effect latency in the middle period, and an absence of N450-type competition effects, as compared to the longer SOA waveforms. This constellation of differences could indicate that more efficient word recognition processes were operating with a short SOA. As we did not measure the speed of word recognition in this study, future experiments might explore this idea. The brief prime-target interstimulus interval (ISI) in Experiment 2 (22 ms) might elicit more efficient word recognition processes because this ISI falls within the time range of a typical saccade. Skilled readers are practiced at maintaining advance information about a word for the duration of a saccade, as they routinely integrate parafoveal and foveal information when silently reading text (Rayner, 1998). In that sense, a short prime-target interval might mimic saccade durations during silent reading, and permit readers to engage early phonological processes that are often used for integrating word form information. Whether the congruency effect is actually a result of the integration of parafoveal and foveal information is a topic of future research. Given that the prime-target ISI in Experiment 2 is a closer approximation of saccade durations during natural reading than the ISI in Experiment 1, we consider Experiment 2 the more ecologically valid of the two.

In reference to eye movements during silent reading, our data coincide with the time line of eye movements and ERPs proposed by Sereno and colleagues (1998, 2003). Initial processing of phonological feature information by 80 ms would enable this information to impact fixation durations before programming the eye movement to the next word. The middle period congruency effect in Experiment 2 (200 – 320 ms) would appear as word identification completes and around the time the eye leaves the word. The later congruency effect observed between 560 – 600 ms in Experiment 2 suggests late phonological recoding processes that are consistent with word recognition theories proposing a post-lexical, spell-check phase that could occur after the eye has moved to the next word (Paap and Johansen, 1994; Van Orden et al., 1988). The potential alignment of congruency effects and eye movement programming time is less straightforward for the long SOA presentation in Experiment 1. The timing of the first congruency effect would allow parafoveal feature information to impact fixation durations, but the second effect (320 – 520 ms) would onset after the eyes left the target. This suggests that the shorter ISI of Experiment 2 more closely mimics parafoveal processing in natural reading.

The phonological congruency effects reported here indicate that ERPs can index very early effects in word recognition. In our opinion, the most important factor in accounting for the early onset of sub-phonemic congruency effects is the fundamental nature of features in language processing. We also expect that paradigms that allow readers to process advance word form information without conscious awareness, such as masked priming, are more likely to elicit early effects.

The present study has several limitations. Future experiments are needed in order to generalize our congruency effect to more normal reading situations. Whereas masked priming presents advance information to readers in a way that may mimic parafoveal preview, the masked priming paradigm is more than one step away from silent reading as it usually occurs. The use of nonword primes and the screen flicker noticed by some participants are two potentially important differences between our study and real-world reading. Generalizing to items also might be problematic, given that our materials nearly exhausted the supply of minimal pairs in English that could be matched for word length. Lastly, the present materials do not differentiate between phonetic and phonological activation, and so it is difficult to determine the precise locus of our congruency effects.

Despite the limitations mentioned above, we have several reasons to believe that skilled readers activate feature information early in word recognition. Most importantly, the design of our study controlled for visual similarity of primes and targets in the congruent and incongruent conditions. Thus, the early effects we observed are unlikely to result from visual differences. Secondly, the early congruency effects onset around 80 ms in both experiments. Because previous ERP studies of phonological priming have not reported effects in this time window, the replication of the feature congruency effect in Experiment 2 was necessary to verify it. Thirdly, the novel early feature congruency effect preceded fairly typical congruency effects in the middle period of word recognition. These effects were similar in direction, onset time, and scalp distribution to previously reported effects (Ashby and Martin, 2008; Grainger et al., 2006; Newman and Connolly, 2004, in press). Therefore, it appears that the early congruency effect is not an anomaly.

This initial demonstration and replication of a feature congruency effect is noteworthy for three reasons. First, the appearance of a congruency effect based on phonological features suggests that features play a fundamental role in visual word recognition as well as spoken word recognition. Second, if features are fundamental in recognizing familiar written words, then the present study offers further evidence for an early interface between written language and spoken language processes. Lastly, the observation of congruency effects that onset around 80 ms provides an initial link between ERP and eye movement accounts of the time course of phonological processing in visual word recognition.

Acknowledgments

This research was supported by NIH grant HD051700, which provided funding for the primary and secondary authors. We appreciate the use of many target items from Lukatela, Eaton, Sabadini, and Turvey (2004) as well as Charles Clifton, Jr.’s comments on an earlier version of this manuscript. We especially thank Laura Giffin for her help collecting and managing the data.

Footnotes

1. Because of equipment changes, the materials were presented at a 75 Hz refresh rate for four participants in Experiment 1 and six participants in Experiment 2. This slower refresh rate increased the duration of the forward mask by 6 ms, the prime by 10 ms, and the backward mask in Experiment 2 by 3 ms, relative to the timing with a 90 Hz presentation. Visual comparison of the grand averaged waveforms recorded at each refresh rate yielded no apparent differences. Accuracy rates for the 75 Hz presentation were 93% and 96% for Experiments 1 and 2, respectively. Accuracy for the 90 Hz presentation was 96% in both Experiments. The designated baseline in each experiment was common to all the participants.

2. Seven data files in Experiment 1 required interpolation of one or two channels: F6 (2 participants), F4 (1 participant), F3 (1 participant), FC6 (1 participant), FC4 (2 participants), C3 (1 participant), C1 (1 participant), CP2 (1 participant). Four data files in Experiment 2 required interpolation of one channel: F5 (1 participant), F6 (1 participant), CP3 (2 participants). Interpolations were calculated by EEProbe software using a spherical-spline formula.

3. We recognize that effects of Experiment could be attributed to differences in participant groups as well as differences in the prime-target SOA. We attribute effects of Experiment to differences in prime-target SOA, since group differences in brain potentials would be expected to persist throughout word recognition. A design that manipulates mask duration within subjects, in the manner of Holcomb and Grainger (2007), would offer more conclusive evidence about the effects of SOA on phonological priming.

4. Differences between Experiments 1 and 2 in viewing the display, font size, or participants may also contribute to the decreased latency of the N1.

Contributor Information

Jane Ashby, Department of Psychology.

Lisa D. Sanders, Department of Psychology.

John Kingston, Department of Linguistics, University of Massachusetts at Amherst.

References

- Abramson M, Goldinger S. What the reader’s eye tells the mind’s ear: Silent reading activates inner speech. Perception and Psychophysics. 1997;59:1059–1068. doi: 10.3758/bf03205520.

- Adams MJ. Beginning to Read: Thinking and learning about print. MIT Press; Cambridge, MA: 1990.

- Ashby J. Prosody in silent skilled reading: Evidence from eye movements. Journal of Research in Reading, Special Issue: Prosodic Sensitivity in Reading Development. 2006;29:318–333.

- Ashby J, Clifton CE., Jr The prosodic property of lexical stress affects eye movements during silent reading. Cognition. 2005;96:B89–B100. doi: 10.1016/j.cognition.2004.12.006.

- Ashby J, Martin AE. Prosodic phonological representations early in visual word recognition. Journal of Experimental Psychology: Human Perception and Performance. 2008;34:224–236. doi: 10.1037/0096-1523.34.1.224.

- Ashby J, Rayner K. Representing syllable information during silent reading: Evidence from eye movements. Language and Cognitive Processes. 2004;19:391–426.

- Ashby J, Treiman R, Kessler B, Rayner K. Vowel processing during silent reading: Evidence from eye movements. Journal of Experimental Psychology: Learning, Memory and Cognition. 2006;32:416–424. doi: 10.1037/0278-7393.32.2.416.

- Balota DA, Chumbley JI. Are lexical decisions a good measure of lexical access – The role of word frequency in the neglected decision stage. Journal of Experimental Psychology-Human Perception and Performance. 1984;10(3):340–357. doi: 10.1037//0096-1523.10.3.340.

- Bentin S, Mouchetant-Rostaing Y, Giard MH, Echallier JF, Pernier J. ERP manifestations of processing printed words at different psycholinguistic levels. Journal of Cognitive Neuroscience. 1999;11:35–60. doi: 10.1162/089892999563373.

- Carreiras M, Alvarez CJ, de Vega M. Syllable frequency and visual word recognition in Spanish. Journal of Memory and Language. 1993;32:766–780.

- Carreiras M, Perea M. Masked priming effects with syllabic neighbors in a lexical decision task. Journal of Experimental Psychology: Human Perception and Performance. 2002;28:1228–1242.

- Chen JY, Lin WC, Ferrand L. Masked priming of the syllable in Mandarin Chinese Speech Production. Chinese Journal of Psychology. 2003;45:107–120.

- Colombo L. Lexical stress effect and its interaction with frequency in word production. Journal of Experimental Psychology: Human Perception and Performance. 1992;18:987–1003.

- Ferrand L, Segui J, Humphreys GW. The syllable’s role in word naming. Memory & Cognition. 1997;25:458–470. doi: 10.3758/bf03201122.

- Francis WN, Kucera HK. Frequency Analysis of English Usage. Houghton-Mifflin; Boston: 1982.

- Frost R. Toward a strong phonological theory of visual word recognition: True issues and false trails. Psychological Bulletin. 1998;123:71–99. doi: 10.1037/0033-2909.123.1.71.

- Goswami U. Typical reading development and developmental dyslexia across languages. In: Coch D, Dawson G, Fischer KW, editors. Human Behavior, Learning and the Developing Brain: Atypical Development. Guilford Press; New York: 2007. pp. 145–167.

- Grainger J, Kiyonaga K, Holcomb PJ. The time course of orthographic and phonological code activation. Psychological Science. 2006;17:1021–1026. doi: 10.1111/j.1467-9280.2006.01821.x.

- Hauk O, Davis MH, Ford M, Pulvermuller F, Marslen-Wilson WD. The time course of visual word recognition as revealed by linear regression analysis of ERP data. NeuroImage. 2006;30:1383–1400. doi: 10.1016/j.neuroimage.2005.11.048.

- Holcomb PJ, Grainger J. Exploring the temporal dynamics of visual word recognition in the masked repetition priming paradigm using event-related potentials. Brain Research. 2007;1180:39–58. doi: 10.1016/j.brainres.2007.06.110.

- Huey EB. The psychology and pedagogy of reading. Macmillan; New York: 1908.

- Hutzler F, Conrad M, Jacobs AM. Effects of syllable frequency in lexical decision and naming: An eye movement study. Brain and Language. 2005;92:138–152. doi: 10.1016/j.bandl.2004.06.001.

- Kramer AF, Donchin E. Brain potentials as indices of orthographic and phonological interaction during word matching. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1987;13:76–86. doi: 10.1037//0278-7393.13.1.76.

- Kutas M, Van Petten C. Electrophysiological perspectives on comprehending written language. New Trends and Advances-- Techniques in Clinical Neurophysiology. 1990;41:155–167. doi: 10.1016/b978-0-444-81352-7.50020-0.

- Lahiri A, Reetz H. Underspecified recognition. In: Gussenhoven L, Werner N, Rietuld T, editors. Labphon 7. Mouton; Berlin: 2003. pp. 637–676.

- Lee HW, Rayner K, Pollatsek A. The time course of phonological, semantic, and orthographic coding in reading: Evidence from the fast-priming technique. Psychonomic Bulletin and Review. 1999;6:624–634. doi: 10.3758/bf03212971.

- Lee YA, Binder KS, Kim JO, Pollatsek A, Rayner K. Activation of phonological codes during eye fixations in reading. Journal of Experimental Psychology: Human Perception and Performance. 1999;25:948–964. doi: 10.1037//0096-1523.25.4.948.

- Liberman IY, Shankweiler D, Liberman AM. The alphabetic principle and learning to read. In: Shankweiler D, Liberman IY, editors. Phonology and reading disability: Solving the reading puzzle. University of Michigan Press; Ann Arbor: 1989. pp. 2–33.

- Lukatela G, Eaton T, Lee C, Turvey MT. Does visual word identification involve a sub-phonemic level? Cognition. 2001;78:41–52. doi: 10.1016/s0010-0277(00)00121-9.

- Lukatela G, Eaton T, Sabadini L, Turvey MT. Vowel duration affects visual word identification: Evidence that the mediating phonology is phonetically informed. Journal of Experimental Psychology: Human Perception and Performance. 2004;30:151–162. doi: 10.1037/0096-1523.30.1.151.

- Newman RL, Connolly JF. Determining the role of phonology in silent reading using event-related brain potentials. Cognitive Brain Research. 2004;21:94–105. doi: 10.1016/j.cogbrainres.2004.05.006.

- Paap KR, Johansen LS. The case of the vanishing frequency effect – a retest of the verification model. Journal of Experimental Psychology-Human Perception and Performance. 1994;20:1129–1157.

- Perfetti CA. Comprehending written language: a blueprint of the reader. In: Brown CM, Hagoort P, editors. The Neurocognition of Language. Oxford University Press; New York: 1999. pp. 167–210.

- Perfetti CA, Bell LC, Delaney SM. Automatic (prelexical) phonetic activation in silent word reading: Evidence from backward masking. Journal of Memory and Language. 1988;27:59–70.

- Poldrack RA, Temple E, Protopapas A, Nagarajan S, Tallal P, Merzenich M, Gabrieli JDE. Relations between the neural bases of dynamic auditory processing and phonological processing: Evidence from fMRI. Journal of Cognitive Neuroscience. 2001;13:687–697. doi: 10.1162/089892901750363235.

- Pollatsek A, Lesch MF, Morris RK, Rayner K. Phonological codes are used in integrating information across saccades in word identification and reading. Journal of Experimental Psychology: Human Perception and Performance. 1992;18:148–162. doi: 10.1037//0096-1523.18.1.148.

- Praamstra P, Meyer AS, Levelt WJ. Neurophysiological manifestations of phonological processing: Latency variation of a negative ERP component timelocked to phonological mismatch. Journal of Cognitive Neuroscience. 1994;6:204–219. doi: 10.1162/jocn.1994.6.3.204.

- Proverbio AM, Vecchi L, Zani A. From orthography to phonetics: ERP measures of grapheme-to-phoneme conversion mechanisms in reading. Journal of Cognitive Neuroscience. 2004;16:301–317. doi: 10.1162/089892904322984580.

- Pulvermuller F, Huss M, Kherif F, Martin F, Hauk O, Shtyrov Y. Motor cortex maps articulatory features of speech sounds. Proceedings of the National Academy of Sciences of the United States of America. 2006;103:7865–7870. doi: 10.1073/pnas.0509989103.

- Rastle K, Brysbaert M. Masked phonological priming effects in English: Are they real? Do they matter? Cognitive Psychology. 2006;53:97–145. doi: 10.1016/j.cogpsych.2006.01.002.

- Rayner K. Eye movements in reading and information processing: 20 years of research. Psychological Bulletin. 1998;124:372–422. doi: 10.1037/0033-2909.124.3.372.

- Rayner K, Pollatsek A. Psychology of Reading. Lawrence Erlbaum; Englewood Cliffs, NJ: 1989.

- Rugg MD. Event-related potentials in phonological matching tasks. Brain and Language. 1984;23:225–240. doi: 10.1016/0093-934x(84)90065-8.

- Rugg MD, Barrett S. Event-related potentials and the interaction between orthographic and phonological information in a rhyme judgment task. Brain and Language. 1987;32:336–361. doi: 10.1016/0093-934x(87)90132-5.

- Sereno SC, Rayner K. Measuring word recognition in reading: Eye movements and event-related potentials. Trends in Cognitive Sciences. 2003;7:489–493. doi: 10.1016/j.tics.2003.09.010.

- Sereno SC, Rayner K, Posner MI. Establishing a time-line of word recognition: Evidence from eye movements and event-related potentials. NeuroReport. 1998;9:2195–2200. doi: 10.1097/00001756-199807130-00009.

- Shaywitz SE. Overcoming Dyslexia. Knopf; New York: 2003.

- Simon G, Bernard C, Largy P, Lalonde R, Rebai M. Chronometry of visual word recognition during passive and lexical decision tasks: An ERP investigation. International Journal of Neuroscience. 2004;114:1401–1432. doi: 10.1080/00207450490476057.

- Smith F. Why systematic phonics and phonemic awareness instruction constitute an educational hazard. Language Arts. 1999;77:150–155.

- Snowling M. Dyslexia as a phonological deficit: evidence and implications. Child Psychology & Psychiatry Review. 1998;3:4–11.

- Treiman R, Fowler C, Gross J, Berch D, Weatherston S. Syllable structure or word structure? Evidence for onset and rime units with disyllabic and trisyllabic stimuli. Journal of Memory and Language. 1995;34:132–155.

- Van Orden GC, Johnston JC, Hale BL. Word identification in reading proceeds from spelling to sound to meaning. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1988;14:371–386. doi: 10.1037//0278-7393.14.3.371.