Abstract

The gestures children produce predict the early stages of spoken language development. Here we ask whether gesture is a global predictor of language learning, or whether particular gestures predict particular language outcomes. We observed 52 children interacting with their caregivers at home, and found that gesture use at 18 months selectively predicted lexical versus syntactic skills at 42 months, even with early child speech controlled. Specifically, number of different meanings conveyed in gesture at 18 months predicted vocabulary at 42 months, but number of gesture+speech combinations did not. In contrast, number of gesture+speech combinations, particularly those conveying sentence-like ideas, produced at 18 months predicted sentence complexity at 42 months, but meanings conveyed in gesture did not. We can thus predict particular milestones in vocabulary and sentence complexity at age 3 1/2 by watching how children move their hands two years earlier.

Introduction

The average 10-month-old child does not yet produce intelligible speech but does communicate – through gesture (Bates, 1976; Bates, Benigni, Bretherton, Camaioni & Volterra, 1979). Moreover, early gesture use is linked to later word learning – the more a child gestures early on, the larger the child's vocabulary later in development (Acredolo & Goodwyn, 1988; Rowe, Özçaliskan & Goldin-Meadow, 2006). In fact, we can predict which lexical items will enter a child's verbal vocabulary by looking at the objects that child indicated in gesture several months earlier (Iverson & Goldin-Meadow, 2005).

Gesture use continues to precede, and to predict, children's language development as they enter the two-word stage. Children who cannot yet combine two words within a single utterance can nevertheless express a two-word idea using gesture and speech together (e.g. point at cup+‘mommy’, referring to mommy's cup; Butcher & Goldin-Meadow, 2000; Capirci, Iverson, Pizzuto & Volterra, 1996; Greenfield & Smith, 1976). Interestingly, the age at which children first produce this type of gesture+speech combination reliably predicts the age at which they first produce two-word utterances (Goldin-Meadow & Butcher, 2003; Iverson & Goldin-Meadow, 2005; Iverson, Capirci, Volterra & Goldin-Meadow, 2008).

Gesture thus forecasts the earliest stages of language learning. But why? Early gesture use might be an early index of global communicative skill. For example, children who convey a large number of different meanings in their early gestures might be generally verbally facile. If so, not only should these children have large vocabularies later in development, but their sentences ought to be relatively complex as well. Alternatively, particular types of early gesture use could be specifically related to particular aspects of later spoken language use. For example, a child who conveys a large number of different meanings via gesture early in development might be expected to have a relatively large vocabulary several years later, but the child might not necessarily produce complex sentences. In contrast, a child who frequently combines gesture and speech to create sentence-like meanings (e.g. point at hat+‘dada’ = ‘that's dada's hat’) early in development might be expected to produce relatively complex spoken sentences several years later, but not necessarily to have a large vocabulary.

Our goal in this study was to test gesture's ability to selectively predict later language learning. We calculated two distinct gesture measures early in development (18 months) and explored how well each measure predicted two different language measures – vocabulary size and sentence complexity – later in development (42 months).

Method

Participants

Fifty-two typically developing children (27 males, 25 females) participated in the study. Children were drawn from a larger sample of families in a longitudinal study of language development. These families were selected to be representative of the greater Chicago area in terms of ethnicity and income levels. All children were being raised as monolingual English speakers.

Procedure and measures

Parent–child dyads were visited in the home every 4 months beginning when the children were 14 months, and videotaped for 90 minutes engaging in their ordinary activities. A typical session included toy play, book reading, and meal or snack time. Our analyses were based on naturalistic data from observations at 18 and 42 months. In addition, children were given a standardized language assessment at 42 months, which was included in the analyses.

Transcription conventions

All speech and gestures on the videotapes were transcribed. The unit of transcription was the utterance, defined as any sequence of words and/or gestures preceded and followed by a pause, a change in conversational turn, or a change in intonational pattern. Transcription reliability was established by having a second coder transcribe 20% of the videotapes; reliability was assessed at the utterance level and was achieved when coders agreed on 95% of transcription decisions.
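The reliability criterion above can be read as simple percent agreement over utterance-level decisions. A minimal sketch under that assumption follows; the function name and the example codes are hypothetical and are not taken from the study's coding manual.

```python
def percent_agreement(coder_a, coder_b):
    """Share of utterance-level decisions on which two coders agree."""
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must code the same utterances")
    if not coder_a:
        raise ValueError("no decisions to compare")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


# Hypothetical codes for 20 utterances; the coders disagree on the last one.
coder_a = ["point:dog"] * 19 + ["word:ball"]
coder_b = ["point:dog"] * 19 + ["word:doll"]

agreement = percent_agreement(coder_a, coder_b)
print(f"agreement = {agreement:.0%}")
```

Percent agreement does not correct for chance agreement; a chance-corrected index would need a different calculation.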

Speech

All dictionary words, as well as onomatopoeic sounds (e.g. woof-woof) and evaluative sounds (e.g. uh-oh), were counted as words. The number of word types (number of different intelligible word roots) served as a control measure of spoken vocabulary. At 18 months children produced an average of 40 different vocabulary words (SD = 33.4). Mean length of utterance (MLU) in words served as a control measure of spoken syntactic skill. At 18 months children produced an average MLU in words of 1.16 (SD = 0.16). Child word types and MLU at 18 months were positively, though only marginally, related to one another (r = .24, p = .08).
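The two speech controls can be illustrated with a short sketch. It assumes each utterance has already been transcribed as a list of intelligible word roots, and that utterances containing no words (gesture only) are left out of the MLU denominator; both are assumptions made for illustration, and the sample session is invented.

```python
def word_types(utterances):
    """Number of different word roots across all utterances (case-insensitive)."""
    return len({word.lower() for utt in utterances for word in utt})


def mlu_in_words(utterances):
    """Mean number of words per utterance, skipping utterances with no words."""
    spoken = [utt for utt in utterances if utt]
    if not spoken:
        return 0.0
    return sum(len(utt) for utt in spoken) / len(spoken)


# Hypothetical session: four spoken utterances and one gesture-only utterance.
session = [["dog"], ["uh-oh"], ["more", "juice"], ["dog"], []]

print(word_types(session))    # distinct roots: dog, uh-oh, more, juice
print(mlu_in_words(session))  # 5 words over 4 spoken utterances
```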

Gesture

Children produced gestures indicating objects, people, or locations in the surrounding context (e.g. point at dog), gestures depicting attributes or actions of concrete or abstract referents via hand or body movements (e.g. flapping arms to represent flying), and gestures having pre-established meanings associated with particular gesture forms (e.g. shaking the head ‘no’). Other actions or hand movements that involved manipulating objects (e.g. turning the page of a book) or were part of a ritualized game (e.g. itsy-bitsy spider) were not considered gestures.

We focused on two measures of gesture use during the early stages of language learning. (1) Gesture vocabulary: the number of different meanings the child conveyed via gesture (e.g. point at dog = dog; shake head = no). At 18 months, children produced an average of 33.6 different vocabulary items via gesture (SD = 21.8). (2) Gesture+speech combinations conveying sentence-like ideas: the number of gesture+speech combinations in which gesture conveyed one idea and speech another (e.g. point at cup+‘mommy’). The onset of these types of gesture+speech combinations has been found to precede, and predict, the onset of two-word utterances in both English-learning (Goldin-Meadow & Butcher, 2003; Iverson & Goldin-Meadow, 2005) and Italian-learning (Iverson et al., 2008) children. At 18 months, children produced an average of 11.0 combinations of this type (SD = 11.4). The two gesture measures were positively associated with one another (r = .60, p < .001). We used 18-month (rather than 14-month) measures as predictors because some children did not produce any words at all at 14 months, and fewer than half were producing gesture+speech combinations; in contrast, by 18 months, all of the children produced words (which allowed us to control for the size of the children's early spoken vocabularies in our analyses), and 85% produced at least one gesture+speech combination.
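The difference between the two gesture measures can be sketched as follows. The `Utterance` record, the function names, and the rule that a combination counts only when the gesture's meaning is absent from the accompanying speech are hypothetical simplifications of the coding described above, not the study's actual coding scheme.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Utterance:
    gesture_meaning: Optional[str]  # e.g. "dog" for a point at the dog; None if no gesture
    speech: Tuple[str, ...] = ()    # spoken words, possibly empty


def gesture_vocabulary(utterances):
    """Number of different meanings conveyed in gesture."""
    return len({u.gesture_meaning for u in utterances if u.gesture_meaning is not None})


def sentence_like_combinations(utterances):
    """Gesture+speech combinations where gesture adds an idea not in the speech."""
    return sum(
        1
        for u in utterances
        if u.gesture_meaning is not None
        and u.speech
        and u.gesture_meaning not in u.speech
    )


# Hypothetical session.
session = [
    Utterance("dog"),                 # point at dog, no speech
    Utterance("no"),                  # head shake
    Utterance("cup", ("mommy",)),     # point at cup + 'mommy': sentence-like
    Utterance("dog", ("dog",)),       # point at dog + 'dog': gesture reinforces speech
    Utterance(None, ("ball",)),       # speech only
]

print(gesture_vocabulary(session))          # meanings: dog, no, cup
print(sentence_like_combinations(session))  # only cup + 'mommy' qualifies
```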

Later measures of vocabulary size and sentence complexity

Children's scores on the Peabody Picture Vocabulary Test (PPVT III; Dunn & Dunn, 1997), administered at 42 months, served as the outcome measure for later vocabulary skill. The PPVT is a widely used measure of vocabulary comprehension with published norms. The average normed PPVT score for our sample was 106 (SD = 17.12).

Children's scores on the Index of Productive Syntax (IPSyn; Scarborough, 1990) were used as the outcome measure for later sentence complexity. IPSyn scores were calculated based on the spoken language each child produced during the 42-month videotaped session. The IPSyn gives children credit for producing different types of noun phrases, verb phrases, and sentences and does not measure tokens (i.e. it does not calculate the number of times each type is produced). It is therefore a measure of the range of structures the child is able to produce at a particular point in time (see Scarborough, 1990, for a description of scoring procedures). The average IPSyn score for our sample was 72 (SD = 10.6). Scores on the PPVT and IPSyn were positively related to one another (r = .37, p < .01).

Results

We conducted a series of multiple regression analyses using children's early gesture vocabulary and gesture+speech combinations at 18 months to predict vocabulary (PPVT) and sentence complexity (IPSyn) at 42 months. In order to determine whether including gesture improves our ability to predict later language above and beyond early speech predictors, we controlled for number of spoken word types produced at 18 months in our analyses of vocabulary size, and MLU at 18 months in our analyses of sentence complexity.

Vocabulary size

Model 1 in Table 1 displays the effect of early spoken vocabulary (word types at 18 months) on later vocabulary comprehension (PPVT at 42 months). The standardized parameter estimate indicates that every standard deviation change in word types that the child produced at 18 months is positively associated with a 0.41 standard deviation difference in PPVT scores at 42 months (p < .01). In this model, spoken vocabulary at 18 months explains 16.7% of the variation in PPVT scores at 42 months.
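As a consistency check on Model 1: in a regression with a single predictor, the standardized coefficient equals the Pearson correlation, so R² is its square (0.41² ≈ 0.168, in line with the reported 16.7% given rounding of β). The sketch below illustrates this identity on synthetic data; the helper name, the simulated values, and the effect size used to generate them are assumptions for illustration and are not the study's data.

```python
import numpy as np


def standardized_betas(X, y):
    """OLS coefficients and R^2 after z-scoring every predictor and the outcome."""
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    yz = (y - y.mean()) / y.std(ddof=1)
    # No intercept is needed: all z-scored variables have mean zero.
    betas, *_ = np.linalg.lstsq(Xz, yz, rcond=None)
    residuals = yz - Xz @ betas
    r2 = 1.0 - np.sum(residuals ** 2) / np.sum(yz ** 2)
    return betas, r2


# Synthetic stand-ins for word types at 18 months and PPVT at 42 months.
rng = np.random.default_rng(0)
n = 52
word_types_18 = rng.normal(size=n)
ppvt_42 = 0.4 * word_types_18 + rng.normal(size=n)

betas, r2 = standardized_betas(word_types_18[:, None], ppvt_42)
r = np.corrcoef(word_types_18, ppvt_42)[0, 1]

print(f"beta = {betas[0]:.3f}, r = {r:.3f}, R^2 = {r2:.3f}, r^2 = {r ** 2:.3f}")
```

With two or more correlated predictors this identity no longer holds, which is why the coefficient for spoken vocabulary changes once a gesture measure is added.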

Table 1.

A series of regression models predicting child vocabulary comprehension skill (PPVT) at 42 months based on early gesture measures (18 months), controlling for early spoken vocabulary (n = 52)

| PPVT 42 months, β (standardized) | Model 1 | Model 2 | Model 3 |
|---|---|---|---|
| Spoken vocabulary | 0.41** | 0.28* | 0.22 |
| Gesture vocabulary | | 0.40** | |
| Gesture+Speech combinations | | | 0.27 |
| R² statistic (%) | 16.7 | 30.9 | 20.4 |

Note: * p < .05; ** p < .01.

In Model 2 (Table 1) we include gesture vocabulary at 18 months as an additional predictor. In this model, the effect of spoken vocabulary at 18 months reduces in strength, and gesture vocabulary at 18 months is a strong, positive predictor of PPVT scores at 42 months (p < .001). Controlling for spoken vocabulary at 18 months, every additional standard deviation change in meanings that the child conveys in gesture at 18 months is positively associated with a 0.40 standard deviation difference in PPVT scores at 42 months. In this model, spoken vocabulary and gesture vocabulary at 18 months combine to explain 30.9% of the variation in PPVT scores at 42 months.¹

Model 3 (Table 1) shows that gesture+speech combinations at 18 months do not predict PPVT scores at 42 months, again controlling for early spoken words.²

In sum, controlling for early spoken vocabulary, gesture vocabulary is a strong, reliable positive predictor of PPVT scores at 42 months, whereas gesture+speech combinations are not. Thus, early gesture selectively predicts later vocabulary skill.

But is it gesture per se that predicts later vocabulary size? Perhaps children who produce many different types of gestures early in development also understand a relatively large number of spoken words (cf. Fenson, Dale, Reznick, Bates, Thal & Pethick, 1994). If so, early spoken comprehension might be driving the tight relation we see between early gesture and later vocabulary. To explore this possibility, we examined vocabulary comprehension scores from the MacArthur Communicative Development Inventory (CDI), which was administered to a subset of the children in the study at 14 months (n = 15). Interestingly, we found no relation between CDI comprehension at 14 months and PPVT at 42 months (r = −.39, p = .16). However, we did find a significant, positive relation between gesture vocabulary at 14 months and PPVT at 42 months, even when 14-month performance on the CDI was controlled (r = .59, p < .05). These findings underscore two points: (1) early gesture vocabulary over a period of at least 4 months can be used to predict later vocabulary comprehension, and (2) the number of words the child understands during this early period does not drive the relation between early gesture and later vocabulary.
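The text does not say how 14-month CDI performance was "controlled"; one common reading is a first-order partial correlation. The sketch below shows that calculation under this assumption, using synthetic data in place of the 15 children's scores. The function names are hypothetical, and the check at the end confirms that the closed-form formula matches the correlation between regression residuals.

```python
import numpy as np


def partial_corr(x, y, z):
    """Correlation between x and y with the linear effect of z removed from both."""
    r_xy = np.corrcoef(x, y)[0, 1]
    r_xz = np.corrcoef(x, z)[0, 1]
    r_yz = np.corrcoef(y, z)[0, 1]
    return (r_xy - r_xz * r_yz) / np.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def residualize(v, z):
    """Residuals of v after a simple linear regression on z."""
    slope, intercept = np.polyfit(z, v, 1)
    return v - (slope * z + intercept)


# Synthetic stand-ins: gesture vocabulary (x), PPVT (y), CDI comprehension (z).
rng = np.random.default_rng(1)
n = 15
cdi_14 = rng.normal(size=n)
gesture_14 = 0.3 * cdi_14 + rng.normal(size=n)
ppvt_42 = 0.6 * gesture_14 + rng.normal(size=n)

via_formula = partial_corr(gesture_14, ppvt_42, cdi_14)
via_residuals = np.corrcoef(
    residualize(gesture_14, cdi_14), residualize(ppvt_42, cdi_14)
)[0, 1]

print(f"partial r = {via_formula:.3f} (residual method: {via_residuals:.3f})")
```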

Sentence complexity

Table 2 presents regression analyses predicting sentence complexity (IPSyn) at 42 months. Model 1 shows that there is no relation between early spoken sentence complexity (MLU at 18 months) and IPSyn at 42 months. We retained MLU in subsequent models as a control for early spoken sentences, but results remain the same even if MLU is not included.

Table 2.

A series of regression models predicting child syntax production (IPSyn) at 42 months based on early gesture measures (18 months), controlling for early spoken syntactic ability (MLU) (n = 52)

| IPSyn 42 months, β (standardized) | Model 1 | Model 2 | Model 3 |
|---|---|---|---|
| Spoken MLU | 10.05 | 9.58 | 10.05 |
| Gesture+Speech combinations | | 0.13* | |
| Gesture vocabulary | | | 0.07 |
| R² statistic (%) | 1.8 | 12.7 | 5.7 |

Note: * p < .05.

Model 2 (Table 2) includes gesture+speech combinations at 18 months as an additional predictor. In this model, gesture+speech combinations are a significant positive predictor of IPSyn scores at 42 months (p < .05). Controlling for MLU, every additional standard deviation change in gesture+speech combinations produced by the child at 18 months is positively associated with a 0.13 standard deviation difference in IPSyn scores at 42 months. In this model, MLU and gesture+speech combinations combine to explain 12.7% of the variation in IPSyn scores at 42 months.

Model 3 (Table 2) shows that gesture vocabulary at 18 months does not predict IPSyn scores at 42 months, again controlling for early MLU.

In sum, controlling for early verbal syntactic skill, early gesture+speech combinations predict later IPSyn scores, but early gesture vocabulary does not.³ Thus, early gesture selectively predicts later syntactic skill.

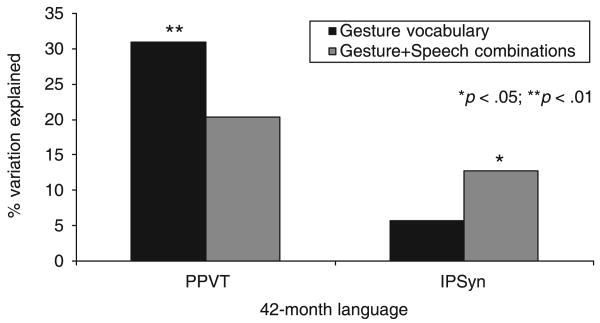

Figure 1 summarizes the regression findings. The figure displays the amount of variation in vocabulary (PPVT, left bars) and sentence complexity (IPSyn, right bars) at 42 months that can be explained by the two gesture measures (gesture vocabulary, gesture+speech combinations) taken at 18 months (including speech controls). The important point to note is that, for PPVT scores, the model including gesture vocabulary (black bar; F = 10.95; p < .0001) explains more of the variation (R² = 30.9%) than the model including gesture+speech combinations (gray bar; F = 6.27; p < .01; R² = 20.4%).⁴ Furthermore, controlling for spoken vocabulary at 18 months, gesture vocabulary is a significant predictor of PPVT scores at 42 months (p < .01), whereas gesture+speech combinations are not. In contrast, for IPSyn scores, the model including gesture+speech combinations (gray bar; F = 3.55; p < .05) explains more of the variation (R² = 12.7%) than the model including gesture vocabulary (black bar; F = 1.48, p < .24; R² = 5.7%). And, controlling for spoken sentence complexity at 18 months, gesture+speech combinations are a significant predictor of IPSyn scores at 42 months (p < .05), whereas gesture vocabulary is not.

Figure 1.

Percent of variation in PPVT and IPSyn scores at 42 months explained by gesture vocabulary (black bars) and gesture+speech combinations (gray bars) at 18 months, controlling for early child spoken abilities (n = 52).
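The F statistics reported with Figure 1 can be approximately recovered from the R² values, the sample size (n = 52), and the number of predictors in each model (k = 2: one speech control plus one gesture measure), using the standard overall F test for a regression. The sketch below assumes that this is the test behind the reported values; the function name and the dictionary labels are hypothetical.

```python
def f_from_r2(r2, n, k):
    """Overall F statistic for an OLS model with k predictors fit to n cases."""
    return (r2 / k) / ((1.0 - r2) / (n - k - 1))


N = 52  # children in the sample

# (R^2 as a proportion, F as reported in the text) for each two-predictor model.
reported = {
    "PPVT ~ spoken vocabulary + gesture vocabulary": (0.309, 10.95),
    "PPVT ~ spoken vocabulary + gesture+speech combinations": (0.204, 6.27),
    "IPSyn ~ MLU + gesture+speech combinations": (0.127, 3.55),
    "IPSyn ~ MLU + gesture vocabulary": (0.057, 1.48),
}

for label, (r2, f_reported) in reported.items():
    f = f_from_r2(r2, N, k=2)
    print(f"{label}: F = {f:.2f} (reported {f_reported})")
```

The recomputed values agree with the reported ones to within a few hundredths; the small gaps are what one would expect from R² being rounded to one decimal place.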

Discussion

Previous research had shown that gesture predicts later linguistic skills. In particular, pointing gestures predict the nature of a child's subsequent spoken vocabulary and gesture+speech combinations predict later two-word combinations. But, until our study, the specificity of these predictions had not been explored. Children's pointing gestures could, after all, also predict their two-word combinations, and their gesture+speech combinations could predict their spoken vocabulary.

The findings from our study make it clear that the gesture predictions are indeed finely-tuned. Children's gesture vocabulary (the number of different meanings expressed via gesture) at 18 months is a strong predictor of verbal vocabulary size at 42 months, but their gesture+speech ‘sentences’ (combinations in which gesture conveys one idea and speech another) are not. In contrast, the gesture+speech sentences children produce at 18 months are a strong predictor of verbal sentence complexity at 42 months, but their gesture vocabulary is not. Verbal vocabulary and syntax have been shown to be related abilities in language-learning children (e.g. Marchman & Bates, 1994). However, the two skills are not identical. To the degree that the skills differ during the preschool years, we can see those differences in children's early uses of gesture.

Why does early gesture use selectively predict later spoken vocabulary size and sentence complexity? One possibility is that gesture use reflects two separate abilities (word learning and sentence making) on which later linguistic abilities can be built. Using gesture in specific ways (i.e. to indicate objects in the environment, or to add arguments to a verbal utterance) allows children to express communicative meanings at a time when they are unable to express those meanings in speech. Expressing many different meanings in gesture early in development could be nothing more than an early sign that the child is going to be a good vocabulary learner. Similarly, expressing many gesture+speech combinations early in development could be nothing more than an early sign that the child is going to be a good sentence learner. In other words, the early gestures that children produce could reflect their potential for learning particular aspects of language, but play no role in helping them realize that potential.

Alternatively, the act of expressing vocabulary meanings in gesture could be playing an active role in helping children become better vocabulary learners, just as the act of expressing sentence-like meanings in gesture+speech combinations could be playing an active role in helping children become better sentence learners. This active role could be driven by the child's interaction with an adult, or by the child's own gestures (Goldin-Meadow, 2003).

Gesture is often used in episodes of joint attention between a child and adult, and the frequency of those joint attention episodes is positively associated with language outcomes (Tomasello & Farrar, 1986), perhaps because child gesture elicits verbal responses from parents that facilitate language learning (see, for example, Goldin-Meadow, Goodrich, Sauer & Iverson, 2007). Consider a child who does not yet know the word ‘dog’ and refers to the animal by pointing at it. If mother and child are engaged in an episode of joint attention, mother is likely to respond, ‘yes, that's a dog’, thus supplying the child with just the word he is looking for. Or consider a child who points at her mother while saying the word ‘hat’. Her mother may reply, ‘that's mommy's hat’, thus translating the child's gesture+word combination into a simple sentence. Because they are responses to the child's gestures and therefore finely-tuned to the child's current state, maternal responses of this sort could be particularly effective in teaching children how an idea is expressed in the language they are learning. Gesturing thus elicits responses from others that have the potential to facilitate language learning.

Gesture might also play an active role in language learning by giving children opportunities to practice specific constructions before they can be produced in speech. Children who produce many gesture+speech combinations may be practicing conveying sentence-like meanings and, in this way, facilitating their own transition to two-word speech. Indeed, in studies of older children learning how to solve a math problem, children who are told to practice a correct problem-solving strategy in gesture are significantly more likely to learn how to solve the problem than children who are told to practice the same problem-solving strategy in speech (Cook, Mitchell & Goldin-Meadow, 2007; see also Broaders, Cook, Mitchell & Goldin-Meadow, 2007). Thus, the act of gesturing may itself promote language learning.

Whether or not gesture plays an active role in learning, our results underscore three points about early child gesture: (1) It reflects the child's potential for later language learning. (2) It predicts later language learning in a finely-tuned fashion – gesture vocabulary predicts later verbal vocabulary, not sentence complexity; and gesture+speech sentences predict later sentence complexity, not verbal vocabulary. (3) It predicts later language learning over and above early child speech. Early gesture, or its lack, may, in the end, be a more sensitive – and more targeted – indicator of potential language delay than early speech production.

In conclusion, we have found that early child gesture predicts language skills later in development and is not a global index of language-learning skill, but rather reflects specific skills on which later linguistic abilities can be built.

Acknowledgments

This research was supported by grants from NICHD: F32HD045099 to MR, and P01HD40605 to SGM. We thank the participating families for sharing their child's language development with us; K. Brasky, L. Chang, E. Croft, K. Duboc, J. Griffin, S. Gripshover, K. Harden, L. King, C. Meanwell, E. Mellum, M. Nikolas, J. Oberholtzer, C. Rousch, L. Rissman, B. Seibel, M. Simone, K. Uttich, and J. Wallman for help in collecting and transcribing the data; Seyda Özçaliskan and Eve Sauer for gesture coding; Kristi Schonwald and Jason Voigt for administrative and technical assistance.

Footnotes

¹ An additional model, not shown, indicates that gesture vocabulary alone explains 24.2% of the variance in PPVT scores.

² Spoken vocabulary is not significant in Model 3 because spoken vocabulary and gesture+speech combinations are collinear (r = .69, p < .001). Spoken vocabulary and gesture vocabulary at 18 months are also related (r = .33, p < .05).

³ This result holds when we add in gesture+speech combinations in which gesture reinforces the meaning conveyed in speech (point at dog + ‘dog’).

⁴ When gesture vocabulary and gesture+speech combinations at 18 months are pitted against one another (along with the spoken vocabulary control) in a single model explaining PPVT scores (not shown), the effect of gesture vocabulary is significant (p < .01), but the effect of gesture+speech combinations is not (p = .85) [F = 7.17, p < .001, R² = 30.9%].

References

- Acredolo LP, Goodwyn SW. Symbolic gesturing in normal infants. Child Development. 1988;59:450–466.
- Bates E. Language and context. Orlando, FL: Academic Press; 1976.
- Bates E, Benigni L, Bretherton I, Camaioni L, Volterra V. The emergence of symbols: Cognition and communication in infancy. New York: Academic Press; 1979.
- Broaders SC, Cook SW, Mitchell Z, Goldin-Meadow S. Making children gesture brings out implicit knowledge and leads to learning. Journal of Experimental Psychology: General. 2007;136(4):539–550. doi: 10.1037/0096-3445.136.4.539.
- Butcher C, Goldin-Meadow S. Gesture and the transition from one- to two-word speech: when hand and mouth come together. In: McNeill D, editor. Language and gesture. New York: Cambridge University Press; 2000. pp. 235–258.
- Capirci O, Iverson JM, Pizzuto E, Volterra V. Communicative gestures during the transition to two-word speech. Journal of Child Language. 1996;23:645–673.
- Cook SW, Mitchell Z, Goldin-Meadow S. Gesturing makes learning last. Cognition. 2007;106:1047–1058. doi: 10.1016/j.cognition.2007.04.010.
- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test. 3rd ed. Circle Pines, MN: American Guidance Service; 1997.
- Fenson L, Dale PS, Reznick JS, Bates E, Thal DJ, Pethick SJ. Variability in early communicative development. Monographs of the Society for Research in Child Development. 1994;59(5, Serial No. 242).
- Goldin-Meadow S. Hearing gesture: How our hands help us think. Cambridge, MA: Harvard University Press; 2003.
- Goldin-Meadow S, Butcher C. Pointing toward two-word speech in young children. In: Kita S, editor. Pointing: Where language, culture, and cognition meet. Mahwah, NJ: Erlbaum; 2003. pp. 85–107.
- Goldin-Meadow S, Goodrich W, Sauer E, Iverson J. Young children use their hands to tell their mothers what to say. Developmental Science. 2007;10:778–785. doi: 10.1111/j.1467-7687.2007.00636.x.
- Greenfield PM, Smith JH. The structure of communication in early language development. New York: Academic Press; 1976.
- Iverson JM, Capirci O, Volterra V, Goldin-Meadow S. Learning to talk in a gesture-rich world: early communication in Italian vs. American children. First Language. 2008;28:164–181. doi: 10.1177/0142723707087736.
- Iverson JM, Goldin-Meadow S. Gesture paves the way for language development. Psychological Science. 2005;16:368–371. doi: 10.1111/j.0956-7976.2005.01542.x.
- Marchman VA, Bates E. Continuity in lexical and morphological development: a test of the critical mass hypothesis. Journal of Child Language. 1994;21:339–366. doi: 10.1017/s0305000900009302.
- Rowe ML, Özçaliskan S, Goldin-Meadow S. The added value of gesture in predicting vocabulary growth. In: Bamman D, Magnitskaia T, Zaller C, editors. Proceedings of the 30th Annual Boston University Conference on Language Development. Somerville, MA: Cascadilla Press; 2006. pp. 501–512.
- Scarborough HS. Index of productive syntax. Applied Psycholinguistics. 1990;11:1–22.
- Tomasello M, Farrar MJ. Joint attention and early language. Child Development. 1986;57:1454–1463.