Abstract

Objective:

Our purpose was to develop a geographically localized, multi-institution strategy for improving enrollment in a trial of secondary stroke prevention.

Methods:

We invited 11 Connecticut hospitals to participate in a project named the Local Identification and Outreach Network (LION). Each hospital provided the names of patients with stroke or TIA, identified from electronic admission or discharge logs, to researchers at a central coordinating center. After obtaining permission from personal physicians, researchers contacted each patient to describe the study, screen for eligibility, and set up a home visit for consent. Researchers traveled throughout the state to enroll and follow participants. Outside the LION, investigators identified trial participants using conventional recruitment strategies. We compared recruitment success for the LION and other sites using data from January 1, 2005, through June 30, 2007.

Results:

The average monthly randomization rate from the LION was 4.0 participants, compared with 0.46 at the 75 other Insulin Resistance Intervention after Stroke (IRIS) sites that randomized at least one patient. The LION randomized on average 1.52/1,000 beds/month, compared with 0.76/1,000 beds/month at other IRIS sites (p = 0.03). The average cost to randomize and follow one participant was $8,697 for the LION, compared with $7,198 for other sites.

Conclusion:

A geographically based network of institutions, served by a central coordinating center, randomized substantially more patients per month compared with sites outside of the network. The high enrollment rate was a result of surveillance at multiple institutions and greater productivity at each institution. Although the cost per patient was higher for the network, compared with nonnetwork sites, cost savings could result from more rapid completion of research.

GLOSSARY

- BMI = body mass index;
- HIPAA = Health Insurance Portability and Accountability Act;
- HOMA = homeostasis model assessment of insulin resistance;
- ICD-9 = International Classification of Diseases, 9th Revision;
- IRB = institutional review board;
- IRIS = Insulin Resistance Intervention after Stroke;
- LION = Local Identification and Outreach Network.

The randomized clinical trial is the preferred scientific strategy for measuring the effectiveness and safety of new therapies for stroke patients. Unfortunately, many recent trials in stroke have been hampered by slow patient accrual.1,2 Trials that are designed to last 3 to 4 years are taking much longer. Trials designed for one or two countries have grown into multinational efforts. Among the serious consequences of slow accrual are increased costs and delayed completion.3,4

The current paradigm for most large clinical trials in stroke includes recruitment from multiple, independent hospitals. Each hospital establishes and staffs its own research operation and seeks patients only within its own walls. Within this paradigm, reasons for slow accrual, other than exclusion factors,5 seem to include 1) inadequate numbers of eligible patients seeking care at a recruitment venue, 2) failure to identify and approach all eligible patients, 3) barriers to participation that result in patient refusal, and 4) lack of adequately trained research personnel. Many small and nonacademic hospitals cannot support this paradigm and do not participate in stroke trials.

In this article, we describe a novel approach that addresses these basic reasons for slow accrual. The approach, termed Local Identification and Outreach Networks (LIONs), was first developed to improve recruitment for the Hemorrhagic Stroke Project6 during 1994–2000 and the Women's Estrogen for Stroke Trial during 1993–2001.7 It was reconstructed in 2004 for the Insulin Resistance Intervention after Stroke (IRIS) trial, which is examining the effectiveness of pioglitazone compared with placebo for prevention of stroke and myocardial infarction among patients with insulin resistance and a recent ischemic stroke or TIA. This article is based on our experience with the Connecticut LION in the IRIS trial.

The Connecticut LION includes multiple collaborating hospitals. Each works within US federal guidelines governing human subjects research and the Health Insurance Portability and Accountability Act (HIPAA)8 to provide the names of patients with stroke or TIA to researchers at a central coordinating center. After obtaining permission from personal physicians, the researchers contact each patient to describe the study, screen for eligibility, and set up a home visit. Researchers from the center travel throughout the state to enroll and follow participants.

METHODS

Hospital and investigator eligibility.

General hospitals in the state of Connecticut were selected for possible participation if they had more than 300 beds. We also approached selected smaller hospitals, such as rehabilitation hospitals, that had features indicating a high volume of ischemic stroke patients and a staff neurologist or physiatrist who was willing to serve as site principal investigator.

Recruitment protocol in the Connecticut LION.

We petitioned the institutional review board (IRB) at each hospital for permission to use active surveillance. For purposes of this research, active surveillance involves efforts by researchers to identify and contact every eligible patient using a system that may be reasonably expected to achieve complete capture. In our preferred strategy, staff from the Network Coordinating Center had access to admission or discharge logs for patients with stroke and TIA. If this strategy was not accepted by the governing IRB, we negotiated an alternative. Identification of patients by clinicians during routine care with subsequent referral to the Network Coordinating Center was not considered active surveillance.

Active surveillance required a waiver of HIPAA research authorization. Under current law, such waivers are permitted when research cannot be practicably conducted without access to and use of protected health information.8

Once a potentially eligible patient was identified, a research associate reviewed the medical records, if available, to confirm eligibility. If eligibility was confirmed or remained uncertain, a research associate or an IRIS investigator obtained permission from an attending or personal physician to contact the patient.

If permission was granted, a research associate spoke with the patient by telephone or in person (if still in the hospital). The associate explained the IRIS trial and screened for eligibility. If the patient screened eligible, the associate invited the patient to learn more during an in-person visit by a research nurse. If the patient agreed, an appointment was made for the nurse to meet the patient in the patient's home or another location if preferred by the patient.

During the in-person visit, the nurse explained the IRIS protocol, invited questions, reconfirmed eligibility, and invited the patient to provide written informed consent. In addition to the written informed consent, each eligible patient signed a Research Authorization Form allowing researchers access to additional personal health information. Further enrollment activities, such as the baseline interview, phlebotomy, and randomization, were completed during a second visit.

The role of the site principal investigator at each member institution was to consult on local research implementation. He or she edited an IRB application that was drafted by researchers at the Network Coordinating Center. Once the application was approved, the site principal investigator's role was to periodically review trial operations, assist in annual IRB reapprovals, assist in eligibility determination for specific patients, and continually evaluate and improve research implementation. Research associates from the Network Coordinating Center were accountable to the site principal investigator for their performance. The site principal investigator at most sites received a stipend that was based on enrollment.

Recruitment protocols at IRIS sites outside the Connecticut LION.

Outside the Connecticut LION, site investigators typically recruited from one hospital; a few recruited from two or three. Site investigators were encouraged but not required to use active surveillance and to identify potential participants from electronic rosters that would provide near-complete capture. Not all hospital IRBs, however, would approve strategies in which patients were identified from such rosters. Coordinators outside the Connecticut LION rarely performed home visits and typically worked on more than one study. The main differences between the LION in Connecticut and other sites, therefore, were that in Connecticut the research staff from a central coordinating center covered multiple hospitals, worked exclusively on the IRIS trial, routinely made first contact with patients, usually by telephone, and enrolled patients during home visits.

IRIS protocol.

Essential eligibility criteria include a TIA or ischemic stroke more than 2 weeks but less than 6 months before baseline evaluation, age older than 40 years, absence of diabetes, ability to provide informed consent, and insulin resistance. Insulin resistance is determined by the homeostasis model assessment of insulin resistance (HOMA) index, calculated from measurements of fasting insulin and glucose. Eligible participants are randomly assigned to placebo or pioglitazone 45 mg. Surveillance for safety, outcome events, risk factor status, cotherapy, and adherence is completed during regular telephone calls and annual in-person visits. Patients remain on study drug for at least 3 years. The primary outcome is time to stroke or myocardial infarction.
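The HOMA index mentioned above is commonly computed with the standard HOMA-IR approximation. The sketch below assumes that formula (fasting insulin in µU/mL multiplied by fasting glucose in mmol/L, divided by 22.5, or divided by 405 when glucose is in mg/dL); it is an illustration, not the IRIS laboratory procedure. The cutoff used to define insulin resistance in IRIS is not stated in this article, so the hypothetical helper `is_insulin_resistant` takes the threshold as an argument rather than assuming a value.

```python
def homa_ir(fasting_insulin_uU_per_mL: float, fasting_glucose: float,
            glucose_units: str = "mmol/L") -> float:
    """Standard HOMA-IR approximation (assumed formula, see lead-in)."""
    if fasting_insulin_uU_per_mL < 0 or fasting_glucose <= 0:
        raise ValueError("insulin must be >= 0 and glucose must be > 0")
    if glucose_units == "mmol/L":
        return fasting_insulin_uU_per_mL * fasting_glucose / 22.5
    if glucose_units == "mg/dL":
        # 1 mmol/L glucose is about 18 mg/dL, so the divisor becomes 22.5 * 18 = 405.
        return fasting_insulin_uU_per_mL * fasting_glucose / 405.0
    raise ValueError(f"unsupported glucose units: {glucose_units!r}")


def is_insulin_resistant(homa: float, threshold: float) -> bool:
    """Hypothetical helper: classify using a caller-supplied cutoff (not given in this article)."""
    return homa > threshold


if __name__ == "__main__":
    # Illustrative laboratory values only, not IRIS data.
    value = homa_ir(12.0, 5.5)
    print(f"HOMA-IR = {value:.2f}")
```

Keeping the unit conversion inside the function avoids a common error when laboratories report glucose in mg/dL rather than mmol/L.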

Data management and analysis.

For this report, we included data on all LION institutions in Connecticut for which an IRB application or research committee application was submitted.

For each institution that approved the IRIS protocol, electronic data were stored in files created with Microsoft® Office Access 2003 (Redmond, WA). For patients who consented to participate, data originally collected on paper forms were converted to electronic format using Cardiff TeleForm® software, version 10.1 (Vista, CA). Data were analyzed using SAS statistical software, version 9.1 (Cary, NC).

Recruitment and follow-up costs were calculated as total cost per randomized patient. For the Connecticut LION, costs included salaries for personnel at the Network Coordinating Center from the date of hire in 2004 through June 30, 2007, staff travel costs within the state, stipends for investigators at member institutions, wages for personnel at member institutions, and 30.4% facilities and administration fees. Personnel included three full-time research associates (two registered nurses), a physician–investigator (5% effort), a project manager (3% effort), a business manager (5% effort), an IRB application specialist (100% effort during the first 2 years only), and an administrative assistant (0–30% effort, varying over time). For sites outside the LION, costs included all fees paid from the beginning of the project in 2004 through June 30, 2007, and the cost of contract management (30.4% on the first $25,000 paid to sites). Indirect costs at these sites averaged 24% (range 8%–46%).
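The cost definitions above reduce to simple arithmetic. The sketch below is one reading of them under stated assumptions: the 30.4% facilities and administration rate is applied to the full LION direct-cost total, the contract-management fee is charged on the first $25,000 paid to each non-LION site, and site indirect costs are already included in the fees paid to those sites. Function names and all dollar amounts in the example are hypothetical, not IRIS figures.

```python
from typing import Iterable

FA_RATE = 0.304             # facilities and administration rate reported above
CONTRACT_FEE_BASE = 25_000  # fee applies to the first $25,000 paid (assumed per site)


def lion_cost_per_patient(direct_costs: Iterable[float], n_randomized: int,
                          fa_rate: float = FA_RATE) -> float:
    """Salaries, travel, stipends, and local wages plus F&A, per randomized patient.

    Assumes F&A is charged on the full direct-cost total.
    """
    if n_randomized <= 0:
        raise ValueError("need at least one randomized patient")
    return sum(direct_costs) * (1 + fa_rate) / n_randomized


def site_total_cost(payments_to_site: float, fee_rate: float = FA_RATE,
                    fee_base: float = CONTRACT_FEE_BASE) -> float:
    """Fees paid to one non-LION site plus contract management on the first fee_base dollars."""
    return payments_to_site + fee_rate * min(payments_to_site, fee_base)


def other_sites_cost_per_patient(payments_by_site: Iterable[float], n_randomized: int) -> float:
    """Total cost across non-LION sites, per randomized patient."""
    if n_randomized <= 0:
        raise ValueError("need at least one randomized patient")
    return sum(site_total_cost(p) for p in payments_by_site) / n_randomized


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    print(round(lion_cost_per_patient([300_000, 40_000, 20_000], n_randomized=60), 2))
    print(round(other_sites_cost_per_patient([30_000, 12_000, 8_000], n_randomized=8), 2))
```

Because the contract-management fee is capped, it adds proportionally less to the cost of sites that are paid more, whereas the F&A charge scales with every dollar of LION direct cost.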

RESULTS

LION assembly.

Among 28 acute care not-for-profit hospitals in Connecticut, we identified 12 with more than 300 beds. One was not invited to participate because, based on past experience, we believed it would decline. Two chose to participate as independent sites outside of the LION project. We added 2 hospitals with fewer than 300 beds: a rehabilitation hospital and an acute care hospital with a strong record in stroke research. A total of 11 hospitals, therefore, were invited to participate and initially accepted.

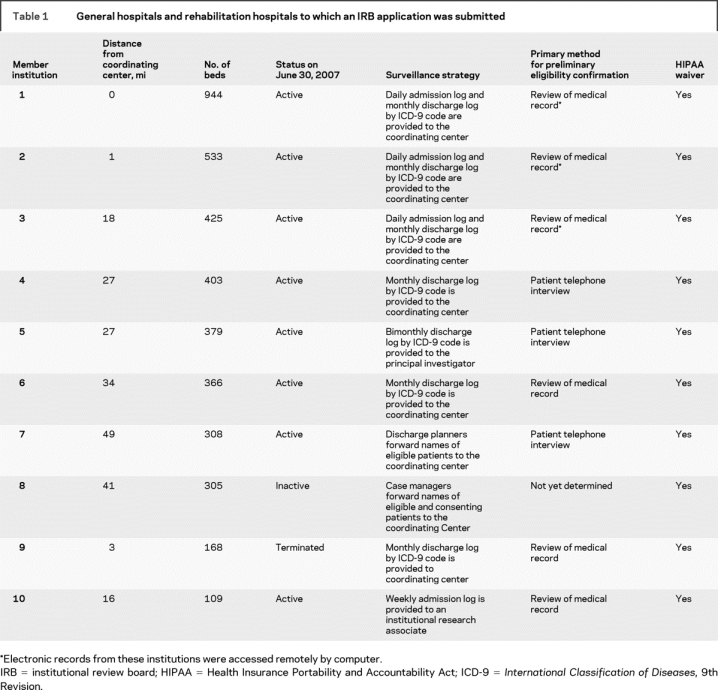

An IRB application was ultimately filed at 10 of the 11 institutions. One of the 11 was excluded when its Research Review Committee objected to the LION concept and would not authorize subsequent review by its IRB. By June 30, 2007, 8 of the remaining 10 hospitals were active and 2 were not. One small hospital was terminated when active surveillance revealed few eligible patients. Another had not begun to identify potential IRIS participants. Essential features of the 10 institutions are described in table 1.

Table 1 General hospitals and rehabilitation hospitals to which an IRB application was submitted

Surveillance systems.

The IRBs at all 10 institutions were asked to approve the surveillance system described above, which would give personnel from the Network Coordinating Center access to logs of patients admitted or discharged with the diagnosis of stroke or TIA, including their names, the names of their physicians, and contact information. Approval was granted at 5 institutions and denied at 5. The 5 denying institutions approved strategies involving surveillance by local hospital staff and referral to the Network Coordinating Center. This strategy succeeded at 2 institutions and failed at 3. At the successful sites, surveillance was completed by discharge planners who identified eligible patients during their regular work (institution 7) or by a research associate who worked for the facility but was paid separately for work on the IRIS trial (institution 10).

At the 3 other institutions, surveillance was attempted by diagnosis coders (institution 4), the principal neurologist (institution 6), or case managers (institution 8) during their routine work activities. After data showed slow recruitment or incomplete surveillance, the IRBs at institutions 4 and 6 approved the originally requested surveillance system. The IRB at institution 8 approved a strategy similar to that for institution 10 in which a member of the hospital staff was paid to perform active surveillance for IRIS, but work had not started by June 30, 2007.

Thus, the preferred (template) protocol for patient identification by which patient lists were provided to researchers in the Network Coordinating Center was ultimately approved by 7 of the 10 hospitals participating in the LION project. The other 3 hospitals require employees of the institution to locally review admission or discharge logs and medical records to preselect eligible patients for referral to the Network Coordinating Center.

Preliminary eligibility was checked before direct patient contact for patients from most LION hospitals. Only the minimum information necessary was gathered. Strategies included remote review of electronic medical records by IRIS staff (3 institutions), on-site review of paper medical records (1 institution), and on-site review of medical records by in-house personnel (2 institutions).

Nine of the 10 hospitals approved the preferred (template) strategy for first and subsequent contact of potential participants by IRIS staff. Hospital 9 required us to send a letter to patients in advance of our telephone contact; patients could call to opt out of the telephone call.

Recruitment performance.

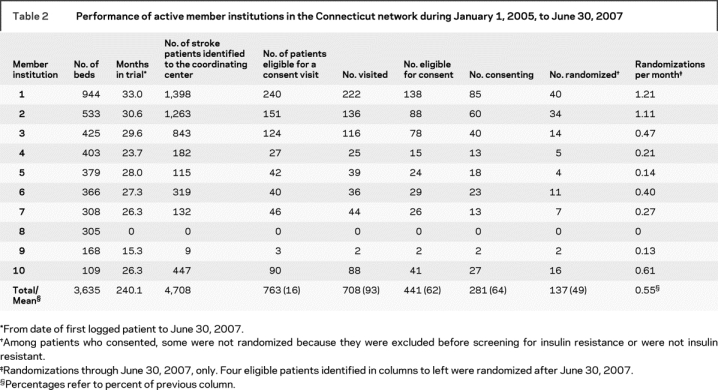

Performance data for the Connecticut LION and for each of the 10 active, inactive, or terminated member institutions are reported in table 2. A total of 4,708 patients were identified to the Network Coordinating Center, among whom 763 (16%) were determined to be eligible for a consent visit. Of the 763 patients, 708 (93%) agreed to an in-person consent visit, usually in their home. As a result of the consent visit, 441 of 708 (62%) were found to be eligible for enrollment. A high proportion of eligible patients consented to participate in the trial (281/441 = 64%).
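The recruitment funnel above can be checked with a few lines of arithmetic. This sketch uses only the counts reported in the preceding paragraph (the stage labels are paraphrased); it reproduces the stage-to-stage percentages of 16%, 93%, 62%, and 64%, and shows that about 6% of all identified patients ultimately consented.

```python
# Counts reported for the Connecticut LION, January 1, 2005, to June 30, 2007.
FUNNEL = [
    ("identified to the Network Coordinating Center", 4708),
    ("eligible for a consent visit", 763),
    ("agreed to an in-person consent visit", 708),
    ("eligible for enrollment after the visit", 441),
    ("consented to participate", 281),
]


def stage_conversions(funnel):
    """Return (stage, n, n_previous, percent of previous stage) for each step after the first."""
    return [
        (name, n, prev_n, 100.0 * n / prev_n)
        for (_, prev_n), (name, n) in zip(funnel, funnel[1:])
    ]


def overall_yield(funnel):
    """Percent of identified patients who reached the final stage."""
    return 100.0 * funnel[-1][1] / funnel[0][1]


if __name__ == "__main__":
    for name, n, prev_n, pct in stage_conversions(FUNNEL):
        print(f"{name}: {n}/{prev_n} = {pct:.0f}%")
    # prints 16%, 93%, 62%, and 64% for the four stages
    print(f"overall: {overall_yield(FUNNEL):.1f}% of identified patients consented")
    # prints 6.0%
```

Expressing each stage relative to the previous one makes clear that the largest loss occurred at initial screening (16% of identified patients were eligible for a consent visit), not at the point of consent.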

Table 2 Performance of active member institutions in the Connecticut network during January 1, 2005, to June 30, 2007

Member institutions in the Connecticut LION were, on average, more productive in terms of randomizations than other institutions in the IRIS network. Among 104 institutions outside Connecticut that participated in the trial at any time before June 30, 2007, the average monthly randomization rate was 0.33 participants (range 0–1.88). Adjusted for bed size of enrolling institutions, the average monthly randomizations per 1,000 beds was 0.56 (range 0–4.53). Among the 75 institutions outside Connecticut that enrolled at least one patient, the average monthly randomization rate was 0.46 participants (range 0.03–1.88); average monthly randomizations per 1,000 beds was 0.76 (range 0.04–4.53). Among the nine active or terminated sites in the Connecticut LION, the average monthly randomization rate was 0.51 participants (range 0.13–1.21); average monthly randomizations per 1,000 beds was 1.52 (range 0.38–5.58). After adjusting for bed size, hospitals in Connecticut remained more productive compared with the 75 outside Connecticut that have enrolled at least one patient (1.52 randomizations/1,000 beds/month compared with 0.76 randomizations/1,000 beds/month, Wilcoxon rank-sum comparison p = 0.03). If the Connecticut LION is considered as one site, the average monthly enrollment is 4.0.
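The two computations behind this comparison, a monthly randomization rate scaled to 1,000 beds and a two-sided Wilcoxon rank-sum test between groups of sites, can be sketched as follows. The authors used SAS; this is an independent, standard-library-only Python sketch using the normal approximation with tie-corrected variance and no continuity correction, so p-values may differ slightly from software that applies a continuity correction or an exact test. The site values in the usage example are hypothetical, not IRIS data.

```python
import math
from typing import Sequence, Tuple


def monthly_rate_per_1000_beds(randomized: int, months_active: float, beds: int) -> float:
    """Randomizations per month, scaled to a hospital of 1,000 beds."""
    return randomized / months_active / (beds / 1000.0)


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Two-sided Wilcoxon rank-sum test (normal approximation, tie-corrected variance).

    Returns (W, z, p), where W is the sum of the ranks of sample x.
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    n = n1 + n2
    ranks = [0.0] * n
    tie_term = 0.0  # sum of (t^3 - t) over groups of tied values
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        average_rank = (i + j) / 2.0 + 1.0  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = average_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean_w = n1 * (n + 1) / 2.0
    var_w = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w <= 0:
        raise ValueError("all values are tied; the test is undefined")
    z = (w - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value from the standard normal
    return w, z, p


if __name__ == "__main__":
    # Hypothetical (randomized, months active, beds) per hospital; not IRIS data.
    network = [(10, 24, 450), (6, 20, 320), (15, 30, 600)]
    others = [(3, 24, 700), (1, 12, 400), (5, 30, 900), (2, 18, 350)]
    x = [monthly_rate_per_1000_beds(*s) for s in network]
    y = [monthly_rate_per_1000_beds(*s) for s in others]
    w, z, p = rank_sum_test(x, y)
    print(f"W = {w:.1f}, z = {z:.2f}, two-sided p = {p:.3f}")
```

A rank-based test suits this comparison because per-site rates are skewed (many sites near zero, a few much higher), which would distort a comparison of means.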

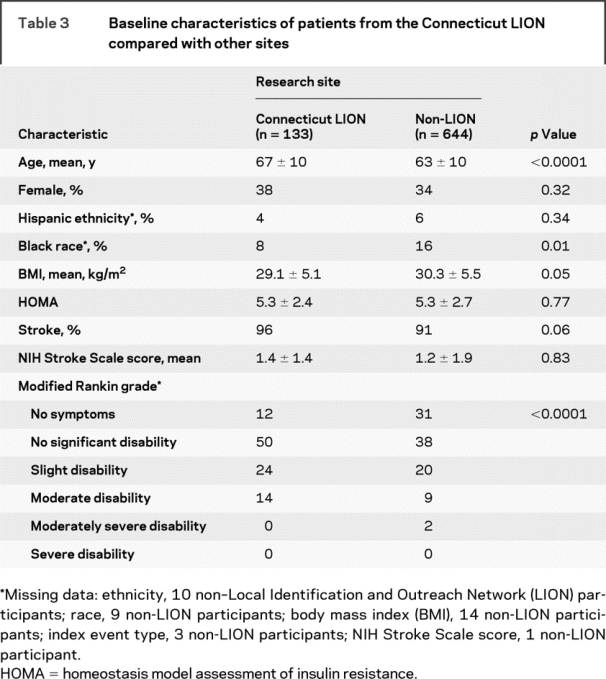

Table 3 displays essential demographic and clinical characteristics of patients enrolled at the Connecticut LION compared with other sites. Patients from Connecticut were older, more disabled, and less likely to be black. Patients from Connecticut were also more likely to enter the trial with a stroke, rather than a TIA, although the difference was not significant.

Table 3 Baseline characteristics of patients from the Connecticut LION compared with other sites

Experience with home visits.

Among 708 patient visits performed by research nurses in the Connecticut LION, almost all took place at home. Nurses never found themselves in situations they perceived to be dangerous. One nurse sustained injuries in a car accident while returning from an assignment.

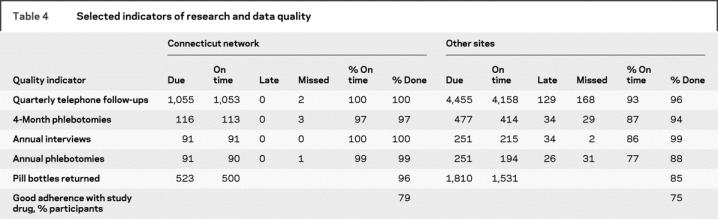

Data quality.

Key indicators of data quality for the Connecticut LION, compared with other IRIS sites, are shown in table 4. Data quality was good at all sites, but trends favored the Connecticut LION, particularly for completion of annual phlebotomies and pill bottle returns. Returned bottles were used to calculate medication adherence. Not shown in table 3 is average time from stroke or TIA to randomization, which was 71 days for the Connecticut LION, compared with 86 days for other sites (p < 0.0001).
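The article does not state how adherence was calculated from returned bottles. A common pill-count approach, assumed here and not confirmed as the IRIS method, divides the number of pills presumed taken (dispensed minus returned) by the number of doses expected over the interval. The function name, the once-daily default, and the example dates are hypothetical.

```python
from datetime import date


def pill_count_adherence(dispensed: int, returned: int, start: date, end: date,
                         doses_per_day: int = 1) -> float:
    """Fraction of expected doses taken, estimated from a returned pill bottle.

    Assumes every pill not returned was taken; values above 1.0 suggest
    over-use or lost pills rather than better-than-perfect adherence.
    """
    if returned > dispensed:
        raise ValueError("cannot return more pills than were dispensed")
    days = (end - start).days
    if days <= 0:
        raise ValueError("end date must be after start date")
    expected_doses = days * doses_per_day
    return (dispensed - returned) / expected_doses


if __name__ == "__main__":
    # Hypothetical bottle: 100 pills dispensed January 1, 2006; 10 returned April 1, 2006 (90 days).
    print(f"{pill_count_adherence(100, 10, date(2006, 1, 1), date(2006, 4, 1)):.0%}")
    # prints 100%
```

Pill counts depend on bottles actually being returned, which is one reason the completion rate for pill bottle returns is itself a useful data-quality indicator.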

Table 4 Selected indicators of research and data quality

Cost.

The average total cost to randomize and follow one participant from the start of funding on July 1, 2004, through June 30, 2007, including facilities and administration costs, was $8,697 for the Connecticut network, compared with $7,198 for the other sites.

Adverse events.

Among 4,708 patients identified and more than 2,000 direct contacts, we encountered only 2 patients or relatives who expressed discomfort with our approach. The son of 1 patient had privacy concerns that were quickly resolved when we explained the IRB approval process and our compliance with HIPAA. Another potential participant became upset after learning we could not pay for his participation. No complaints against the trial have been filed with member institutions or their IRBs.

DISCUSSION

The Connecticut LION entered participants into the IRIS trial at an average rate of 4.0 per month, compared with 0.46 at more conventional sites. The high enrollment rate was the result of surveillance at multiple institutions and greater average productivity at each, as indicated by enrollment rates adjusted for bed size. The quality of data from the Connecticut network, including medication adherence, was comparable to data from conventional sites. The cost to enroll and follow each patient, however, was higher in the Connecticut LION. It is possible that greater per-patient costs will be offset by savings related to earlier completion of the research.

The Connecticut LION enrolled patients into the IRIS trial from a wide geographic area, served by some institutions and physicians who were not routinely involved in stroke research. As a result, patients who would not otherwise have been offered the chance to participate in research were identified and enrolled. Special outreach strategies, furthermore, minimized barriers to research participation imposed by limited access to transportation, inconvenience, or disability.

For investigators who create their own LION, we recommend asking IRBs to approve specific key strategies for patient surveillance and enrollment, and providing assurance regarding privacy, personnel qualifications, and quality oversight. The key strategies include timely access to accurate admission or discharge logs, access to medical records for eligibility confirmation, and direct contact with eligible patients after approval from treating physicians. Remote access to electronic medical records creates additional efficiency. All work should be performed by dedicated research staff from a central coordinating center. Personnel qualifications can be documented by formal training in HIPAA regulations and human subjects research. When IRBs will not agree to one or more of the key features, investigators should be prepared to accommodate local preferences. However, when an IRB requires patient surveillance by local personnel, investigators must ensure that they are properly trained, are committed to the project, and have access to complete admission rosters.

Seven of 10 LION institutions (70%) approved the preferred systems for active surveillance by research staff from a central coordinating center (table 1). Our experience at these 7 institutions indicated that active surveillance by a centralized staff can succeed in achieving enrollment, meeting institutional expectations, safeguarding patient privacy, and garnering patient acceptance.

Systems of local surveillance by institutional employees also succeeded at 2 institutions where the employees acted as agents of the IRIS trial. Compared with staff from the Network Coordinating Center, these institutional employees seemed to afford their IRBs a measure of comfort and control, sometimes as a first interface with patients. Our data, however, show no benefit from institutional employees in terms of safety or efficacy (i.e., enrollment rate).

Our experience with the LION strategy indicates that it can succeed in Connecticut, but further proof of the concept will require testing in other locations and with different trials. It should be noted that the member institutions in the Connecticut LION had prior experience in collaborative research that may have fostered the trust required for centralized research administration. Collaborating neurologists in the Connecticut network were strongly supportive of research, had no concern that the project would result in market competition, and trusted that the project would not limit the care they could provide to their patients.

AUTHOR CONTRIBUTIONS

Statistical analysis was performed by C.M. Viscoli.

APPENDIX

Steering committee:

Walter N. Kernan, MD (Chair), Department of Medicine, Yale School of Medicine, CT; Harold P. Adams, MD, Department of Neurology, University of Iowa, IA; Bruce M. Coull, MD, Department of Neurology, University of Arizona, Tucson, AZ; Karen Furie, MD, Department of Neurology, Harvard Medical School, MA; Mark Gorman, MD, Department of Neurology, University of Vermont, VT; Peter D. Guarino, PhD, West Haven Veterans' Administration Medical Center, CT; Ralph I. Horwitz, MD, Department of Medicine, Stanford University, CA; Silvio E. Inzucchi, MD, Department of Medicine, Yale School of Medicine, CT; Anne M. Lovejoy, PA-C, Department of Medicine, Yale School of Medicine, CT; Peter N. Peduzzi, PhD, Department of Epidemiology and Public Health, Yale School of Medicine, CT; Gregory Schwartz, MD, PhD, Department of Medicine, University of Colorado, CO; David Spence, MD, Department of Neurology, Robarts Research Institute, Ontario, Canada; David Tanne, Department of Neurology and Sagol Neuroscience Center, Chaim Sheba Medical Center, Tel Aviv, Israel; Catherine M. Viscoli, PhD, Department of Medicine, Yale School of Medicine, CT; Lawrence H. Young, MD, Department of Medicine, Yale School of Medicine, CT.

Data and safety monitoring board:

Michael D. Walker, MD (Chair), John B. Buse, MD, Department of Medicine, University of North Carolina at Chapel Hill, NC; Lloyd Chambless, PhD, Department of Biostatistics, University of North Carolina at Chapel Hill, NC; David Faxon, MD, Department of Medicine, Brigham and Women's Hospital, MA; Jennifer K. Pary, MD, CNOS, Dakota Dunes, SD.

Investigators:

In addition to the authors, the following investigators participated in this study:

United States:

Alabama: University of Alabama, J. Halsey; University of South Alabama, R. Zweifler, I. Lopez; Arizona: University of Arizona, B. Coull; California: Cedars-Sinai Medical Center, D. Palestrant; Stanford University, M. Lansberg, G. Albers; UC-Davis Medical Center, P. Verro; UCSF (Fresno), T. Warwick, L. Nguyen; University of California, Los Angeles, B. Ovbiagele; University of California, San Diego, B. Meyer; University of California, San Francisco, S. Johnston; Colorado: Denver Health and Hospital Authority, R. Hughes; Connecticut: Hartford Hospital, I. Silverman; Yale University, J. Schindler; Florida: Florida Neurovascular Institute, E. Albakri; University of Florida, S. Silliman; Illinois: Cook County (Stroger) Hospital, M. Kelly, L. Singleton, J. Kramer; OSF Saint Francis Medical Center, D. Wang; University of Illinois, Chicago, L. Pedelty; Indiana: Indiana University, J. Fleck; Iowa: Ruan Neuroscience Center/Mercy Medical Center, M. Jacoby; University of Iowa, E. Leira, R. Wallace; Kansas: Via Christi Regional Medical Center, A. Ehtisham; Kentucky: University of Kentucky Research Foundation, L. Pettigrew; Louisiana: LSU Health Sciences Center, A. Minagar; Maine: Penobscot Bay Neurology, B. Sigsbee; Maryland: Johns Hopkins University, R. Wityk; Massachusetts: Beth Israel Deaconess, S. Kumar; Boston Medical Center Corp., C. Kase; Massachusetts General Hospital/General Hospital Corp., K. Furie; Michigan: Henry Ford Health Sciences Center, P. Mitsias; Michigan State University, A. Majid; Missouri: Washington University, A. Nassief, C. Hsu; Nebraska: University of Nebraska, P. Fayad; New Hampshire: Dartmouth, T. Lukovits, R. Clark; New Mexico: University of New Mexico, G. Graham; New York: Burke Medical Research Institute, P. Fonzetti, B. Volpe; New York Methodist Hospital, M. Salgado, R. Birkhahn; University of Rochester, W. Burgin; Ohio: Case Western Reserve University, S. Sundararajan; Cleveland Clinic Foundation, I. Katzan; Metrohealth Medical Center, J. Hanna; Ohio State University, A. Slivka; University of Cincinnati, D. Kleindorfer; University of Toledo, G. Tietjen; Oregon: Oregon Health and Science University, W. Clark; Pennsylvania: Abington Memorial Hospital, B. Diamond; Allegheny Singer Research Institute, A. Tayal; Lankenau Institute for Medical Research, G. Friday; Temple University, N. Gentile; Thomas Jefferson University, R. Bell; University of Pittsburgh, K. Uchino, N. Vora; Puerto Rico: University of Puerto Rico, F. Santiago; Rhode Island: Rhode Island Hospital, E. Feldmann, J. Easton; Tennessee: Vanderbilt University, A. O'Duffy; Texas: Methodist Hospital Research Institute, J. Ling; University of Texas, San Antonio, R. Hart; University of Texas, Southwestern, M. Johnson; Utah: University of Utah, E. Skalabrin; Vermont: University of Vermont, M. Gorman; Virginia: University of Virginia, N. Solenski, K. Johnston; Washington: Providence Medical Research Center, M. Geraghty; Washington, DC: National Rehabilitation Hospital, A. Dromerick; Wisconsin: Medical College of Wisconsin, M. Torbey.

Canada:

Alberta: Center for Neurologic Research, T. Winder; University of Alberta, A. Shuaib; British Columbia: Vancouver Island Health Research Centre, A. Penn; Ontario: Intermountain Research Consultants, S. Malik, F. Sher; Robarts Research Institute, R. Chan, J. Spence; St. Michaels Hospital, University of Toronto, N. Bayer; Quebec: Centre Hospitalier Affilie Universitaire de Quebec, A. Mackey, S. Verreault; CHUM-Centre de recherche, Hopital Notre-Dame, A. Durocher, L. Lebrun; Hopital Charles LeMoyne, L. Berger, J. Boulanger; McGill University–Jewish General, J. Minuk; McGill University–Montreal General, R. Cote.

Address correspondence and reprint requests to Dr. Walter N. Kernan, Suite 515, 2 Church St. South, New Haven, CT 06519 walter.kernan@yale.edu.

*See appendix for list of participating investigators.

†Died March 8, 2006.

Supported by the National Institute of Neurological Disorders and Stroke (U01 NS044876) and by a grant from Takeda Pharmaceuticals North America, Inc.

Disclosure: Dr. Inzucchi has received honoraria from Takeda Pharmaceuticals (manufacturer of pioglitazone) and other manufacturers of diabetic medications.

Received September 2, 2008. Accepted in final form February 5, 2009.

REFERENCES

1. Marler JR. NINDS clinical trials in stroke: lessons learned and future directions. Stroke 2007;38:3302–3307.

2. Hobson RW, Brott TG, Roubin GS, Silver FL, Barnett HJM. Carotid artery stenting: meeting the recruitment challenge of a clinical trial. Stroke 2005;36:1314–1315.

3. Hunninghake DB, Darby CA, Probstfield JL. Recruitment experience in clinical trials: literature summary and annotated bibliography. Controlled Clin Trials 1987;8:6S–30S.

4. Embi PJ, Jain A, Clark J, Bizjack S, Hornung R, Harris CM. Effect of a clinical alert system on physician participation in trial recruitment. Arch Intern Med 2005;165:2272–2280.

5. Elkins JS, Khatabi T, Fung L, Rootenberg J, Johnston SC. Recruiting subjects for acute stroke trials: a meta-analysis. Stroke 2006;37:123–128.

6. Kernan WN, Viscoli CM, Brass LM, et al. Phenylpropanolamine and the risk of hemorrhagic stroke. N Engl J Med 2000;343:1826–1832.

7. Viscoli CM, Brass LM, Kernan WN, Sarrel PM, Suissa S, Horwitz RI. Estrogen replacement after ischemic stroke: report of the Women's Estrogen for Stroke Trial (WEST). N Engl J Med 2001;345:1243–1249.

8. Annas GJ. Medical privacy and medical research: judging the new federal regulations. N Engl J Med 2002;346:216–220.