Abstract

The research examined whether performance by adult cochlear implant recipients on a variety of recognition and appraisal tests derived from real-world music could be predicted from technological, demographic, and life experience variables, as well as speech recognition scores. A representative sample of 209 adults implanted between 1985 and 2006 participated. Using multiple linear regression models and generalized linear mixed models, sets of optimal predictor variables were selected that effectively predicted performance on a test battery that assessed different aspects of music listening. These analyses established the importance of distinguishing between the accuracy of music perception and the appraisal of musical stimuli when using music listening as an index of implant success. Importantly, neither device type nor processing strategy predicted music perception or music appraisal. Speech recognition performance was not a strong predictor of music perception, and primarily predicted music perception when the test stimuli included lyrics. Additionally, limitations in the utility of speech perception in predicting musical perception and appraisal underscore the utility of music perception as an alternative outcome measure for evaluating implant outcomes. Music listening background, residual hearing (i.e., hearing aid use), cognitive factors, and some demographic factors predicted several indices of perceptual accuracy or appraisal of music.

Keywords: Cochlear implant, cognitive, music, speech perception

The cochlear implant (CI) is a prosthetic hearing device developed primarily to assist persons who are severely to profoundly deaf with verbal communication. The device picks up acoustic signals through an externally worn microphone, and these signals are then processed to filter and extract those components of sound critically important for speech perception. Those components are conveyed via electrical signals to an array of electrodes in the cochlea, resulting in electrical stimulation of the auditory nerve. This signal is then transmitted to the central auditory pathway for interpretation. Although the device does not provide an exact replica of normal hearing, the majority of postlingually deafened implant recipients using modern CIs score above 80% on high-context sentences in quiet listening conditions, even without visual cues (Wilson, 2000).

Although CIs have been quite successful in providing implant recipients with speech perception, they are less effective in transmitting the fine structural features of sound that contribute to music perception (e.g., Gfeller et al, 2000a, 2002a, 2003, 2005, 2007; Leal et al, 2003; Kong et al, 2004; McDermott, 2004). Because people often listen to music for pleasure, perceptual accuracy (e.g., pitch perception, melody recognition) alone is not a sufficient index of implant benefit; the quality of the sound, or how enjoyable the sound is, is also an important component of implant benefit.

When considering the evaluation of music perception and enjoyment by CI users, it is important to recognize that the word music represents a diverse body of styles and structural combinations of individual pitches played sequentially (melodies) and concurrently (harmony) in different rhythmic patterns and tempos, by one or more timbres (e.g., instrumental solos and blends, such as bands or orchestras). Thus, one cannot presume that one single measure of music perception would fully represent the perceptual accuracy or appraisal (ratings of sound quality or enjoyment) experienced by cochlear implant recipients for music perception and/or enjoyment. Just as the assessment of speech recognition has involved a range of stimuli and response tasks (e.g., perception of isolated computer-generated sounds, closed-set word recognition, open-set word recognition, phoneme recognition, recognition of connected discourse), there are a variety of measures that reflect perceptual accuracy on several key structural features of music (e.g., pitch, melody, harmony, timbre, and combinations thereof).

Research indicates that some structural features of music are more effectively perceived than others through the implant. For example, CI recipients perform with similar accuracy as normal-hearing adults with regard to simple rhythmic patterns presented at a moderate rate (Dorman et al, 1991; Gfeller and Lansing, 1991, 1992; Schultz and Kerber, 1994; Gfeller et al, 1997); however, implant recipients are significantly less accurate than normal-hearing adults on pitch perception, including detecting pitch change (frequency difference limens) (McDermott, 2004; Gfeller et al, 2002a), identifying direction of pitch change (higher or lower) (Gfeller et al, 2002a; Laneau et al, 2004; McDermott, 2004), and discrimination of brief pitch patterns (Gfeller and Lansing, 1991, 1992). In most cases, recipients of CIs require considerably larger frequency differences than adults with normal hearing for detecting pitch change and direction of pitch change (e.g., Gfeller et al, 2002a, 2007; Kong et al, 2004; Looi et al, 2004; McDermott, 2004).

Because melodies and harmonies are composed of sequential pitch patterns and several concurrently presented pitches, respectively, the poor transmission of pitch has negative implications for recognition of melodies with or without harmony (Schultz and Kerber, 1994; Pijl, 1997; Fujita and Ito, 1999; Gfeller et al, 2002a; Leal et al, 2003; Kong et al, 2004; McDermott, 2004). Thus, it is not surprising that implant recipients as a group are significantly less accurate than normal-hearing nonmusicians in closed- and open-set recognition of melodies, especially when lyrics or rhythmic cues are unavailable (Gfeller et al, 2002a, 2005; Kong et al, 2004). In addition, CI recipients are less accurate than normal-hearing listeners on timbre recognition (recognizing musical instruments by sound alone), and they tend to rate (appraise) the tone quality as less pleasant than do normal-hearing adults (Schultz and Kerber, 1994; Gfeller et al, 1998, 2000b; Fujita and Ito, 1999).

Thus, to illustrate how an everyday listening activity could be compromised for an implant user, a well-known song such as “Happy Birthday” may sound monotonic (only one pitch) or like random pitches or sounds and thus be unrecognizable. In addition, the tone quality may sound tinny, unnatural, or essentially like noise. Because music is a pervasive art form and a valued part of cultural rituals, social events, and emotional expression (Nielzen and Cesarec, 1982; Gfeller, 2001; Huron, 2004), and because implant recipients are likely to be exposed to music on a regular basis, improved music perception is often an expressed interest of CI users.

While the majority of implant recipients have significantly poorer accuracy and appraisal of music than normal-hearing people (e.g., Gfeller et al, 1997, 1998, 2002a; McDermott, 2004), there is considerable intersubject variability among implant recipients for various measures of music perception and appraisal. For example, in one experiment examining recognition of “real-world” musical excerpts (Gfeller et al, 2005), some individuals scored 0% correct, and the mean score for 79 participants was 15.6%. However, there were individuals, sometimes referred to as “star” users, who achieved 94.1% correct on that same test, a level of accuracy that seems nearly impossible given the technical limitations of the device with regard to transmission of fine spectral features.

In addition to individual differences demonstrated in specific measures of music perception and appraisal, there can be significant intrasubject variability across indices of music perception. That is, individual CI recipients may do very poorly on pitch perception but show better performance on recognition of musical instruments (Gfeller et al, 1997, 1998, 2002b, 2005, 2006; Gfeller and Lansing, 1991, 1992).

Understanding the factors that contribute to the enormous variability among CI recipients with respect to music perception and enjoyment could have important implications for implant design as well as advising CI users with respect to musical opportunities and benefits. Thus, the purpose of this research was to identify those factors that are most strongly associated with greater perceptual accuracy and enjoyment of music by adult cochlear implant recipients. Although prior studies have examined the relations between a given outcome (e.g., melody recognition) and individual predictor variables (e.g., the relation between cognitive processing, hearing history, and melody recognition; Gfeller et al, 2002a), they have not provided a more comprehensive examination of the relations among multiple musical variables and multiple subject characteristics. Such multivariate considerations may help us better understand individual differences in music perception by CI users. Thus, this paper will examine the perception of real-world musical stimuli in a more comprehensive manner, using multiple linear regression and generalized linear mixed models to help identify those factors that, in combination, best predict performance in the recognition and appraisal of excerpts of musical stimuli of the sort heard in everyday life.

These analyses utilize data from a battery of tests used in prior experiments that examined perceptual accuracy and appraisal of a variety of stimuli ranging from simple and highly controlled computer-generated stimuli (i.e., pure tones) to complex blends of musical sounds (i.e., excerpts of “real-world” music on radio or in recordings) often heard in everyday life. We have also gathered data on individual difference variables (e.g., length of profound deafness; age at time of testing; speech and cognitive measures), particulars regarding the assistive hearing device used (e.g., type of cochlear implant and strategy used; whether hearing aids are used), and life experiences (e.g., musical training and listening habits) that theoretically should have important relations with success in music perception and enjoyment. Thus, the factors within these analyses fall into three primary categories: individual differences, technical differences, and environmental influences (i.e., life experiences). These relationships were examined using multiple linear regression and generalized linear mixed models. The analyses were not intended to focus on or model the hearing mechanism per se or the relative contribution of peripheral and central auditory pathways. Rather, they were designed to evaluate the relative importance of external factors that interface with the hearing mechanism and implant technology in determining music perception and appraisal.

METHODS

Participants

The participants in this study included 209 adult cochlear implant recipients with at least nine months implant experience (mean months of use = 42.3, SD = 45.4). The sample included 97 male and 112 female implant recipients ranging in age from 23.7 to 92.5 years (M = 60.2, SD = 15.4) with less than one year to 58 years (M = 10.8, SD = 12.9) of bilateral profound deafness prior to implantation. Age at time of testing, length of profound deafness, months of use, and year when implanted were all included as demographic predictor variables in the tested models.

Recipients used one of the following device types: CI22, CI24M, Contour, Ineraid, Clarion, HiFocus, CIIHF1/2, 90K (all 22 mm electrodes). The following speech processing strategies were used as appropriate to the device: MPEAK, SPEAK, ACE, Analog SAS, CIS, HiRes, and Conditioner (High-rate pseudospontaneous conditioner as described in Rubinstein et al, 1999; Hong and Rubinstein, 2003). The specific breakdown of device by strategy is shown in Table 1. In addition to including the different device and strategy types as independent variables, we also examined whether or not the individual was a bilateral CI user (Bilat, yes or no) and whether or not the individual used a hearing aid in addition to their CI (HA, yes or no).

Table 1.

Sample Sizes for the Devices and Strategies Present in the Sample

| Device | ACE | Analog | CIS | Cond* | HiRes | MPEAK | SAS | SPEAK | Total |
|---|---|---|---|---|---|---|---|---|---|
| 90K | - | - | - | - | 9 | - | - | - | 9 |
| CI22 | - | - | - | - | - | 1 | - | 19 | 20 |
| CI24M | 21 | - | 3 | - | - | - | - | 26 | 50 |
| CIIHF1 | - | - | 18 | 2 | 12 | - | 6 | - | 38 |
| CIIHF2 | - | - | 1 | - | 10 | - | - | - | 11 |
| Clarion | - | - | 28 | - | - | - | - | - | 28 |
| Contour | 29 | - | 1 | - | - | - | - | 6 | 36 |
| HiFocus | - | - | 3 | - | - | - | - | - | 3 |
| Ineraid | - | 2 | 10 | - | - | - | - | - | 12 |
| Total | 50 | 2 | 64 | 2 | 31 | 1 | 6 | 51 | 207 |

Note: Strategy data is missing for two participants.

*Cond = Conditioner.

The CI recipients were tested during their annual visit to our center. All were native English-speaking adults culturally affiliated with the United States, an important factor given that recognition of and response to particular musical selections is influenced by exposure to particular styles of music. Most of the implant recipients described themselves as having been informal music listeners prior to deafness. Only two of the participants had college-level musical training, as determined through the Iowa Music Background Questionnaire (Gfeller et al, 2000a).

The sample in the present study was drawn from all adult CI recipients enrolled in a clinical trial from 1985 through 2006 who had participated in extensive pre- and post-implant assessment on measures of speech and cognition, as well as measures of music perception; measures of speech perception were made available to this project. This sample did not include those patients using short electrodes, which are based on a different principle of stimulation (acoustic plus electric), or persons with known severe intellectual deficits or other sensory limitations (e.g., blindness) that could preclude testing.

The CI recipients participated in different experiments for several projects within our center (audiology, electrophysiology, music) that had to be completed during one- or two-day visits. Because of personal circumstances, acute illnesses, and scheduling conflicts, not all participants completed all of the measures included in the present analyses. Importantly, because data are essentially missing at random and not due to the factors under investigation, the sample can be considered unselected with respect to the variables of interest in this research and representative of CI recipients at this center. Although some participants providing data for the present study were included in previously published papers investigating music perception by implant recipients, the full sample has not been described in previous papers.

Test Protocols

General descriptions of the music and cognitive and speech measures are provided below. The test content and protocols for many of the individual measures are described in full in previously published articles as noted.

Music Perception and Appraisal Tests

A number of tests were used to reflect different aspects of music perception and appraisal (liking or enjoyment), from highly controlled isolated elements to more complex and combined elements of music that more closely resemble real-world musical sounds.

Pitch Perception Test

A Pitch Ranking Test (PRT) provided a measure of pitch ranking for pure tones ranging from 131 to 1048 Hz (Gfeller et al, 2007). The PRT was administered in a sound field using a computer-controlled, two-alternative, forced-choice (2AFC) adaptive procedure. The participant was asked to determine if the second pitch in a pair of two pitches was higher or lower than the first pitch. The presentation level of the test tones (average level of 87 dB SPL) was randomized for each presentation over an 8 dB range to minimize loudness cues that might have resulted from standing waves in the double-walled sound booth. The mean presentation level for the test tones was adjusted individually for each cochlear implant participant at each test frequency to ensure that the tones were presented at least 20 dB above the participant’s sound-field threshold. The number of correct responses on pitch discrimination was determined as a function of interval size (1 to 5 semitones) and frequency (131 to 1048 Hz). An average number correct over all interval sizes and base frequencies was calculated, yielding an average range of 0 to 6 correct.
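To make the interval sizes concrete, the following minimal sketch converts a semitone interval into a comparison frequency. It assumes equal-tempered tuning, which the source does not state explicitly, and the function name `comparison_frequency` is hypothetical rather than part of the test software.

```python
def comparison_frequency(base_hz: float, semitones: float) -> float:
    """Frequency lying `semitones` equal-tempered semitones above base_hz.

    Assumption: equal temperament, where each semitone multiplies the
    frequency by 2 ** (1 / 12).
    """
    return base_hz * 2.0 ** (semitones / 12.0)


if __name__ == "__main__":
    # Interval sizes of 1 to 5 semitones above the lowest base frequency.
    for interval in range(1, 6):
        print(interval, round(comparison_frequency(131.0, interval), 1))
    # Three octaves (36 semitones) above 131 Hz reaches the top of the range.
    print(comparison_frequency(131.0, 36))
```

Under this assumption, the stated 131 to 1048 Hz range spans exactly three octaves.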

Melody Recognition

Two measures of melody recognition were included in the analysis: a Familiar Melody Recognition test (FMR) (described in detail in Gfeller et al, 2002a) and a Musical Excerpt Recognition Test (MERT) (described in detail in Gfeller et al, 2005). The FMR tested recognition of 12 well-known children’s or folk melodies that were prepared using MIDI (musical instrument digital interface) software and synthesizers. Administration of this test required prior familiarity with 75% of the test items (as determined through a 27-item check list of songs well known in U.S. culture). Each item (melody only and melody plus harmony conditions, no lyrics) was heard two times, and response was open set. Scores were recorded as the percent correct of those songs known prior to testing (range of 0–100%) (Gfeller et al, 2002a).

The MERT tested recognition of excerpts of real-world melodies of the sort heard on the radio or in recordings. The stimuli for the MERT were excerpts from 24 real-world recordings of musical items of three different styles (pop, country, classical) determined through media rankings to be highly familiar to the U.S. population. Each participant listened to eight songs without lyrics (MERT-I) and 16 songs with lyrics (MERT-L). After listening to each song, they attempted to identify whether or not they recognized the item (coded as success or failure). Administration of this test required the participant to have prior familiarity with at least 25% of the test items (as determined through a 79-item check list). The analyses included the data for only those songs that the participant reported knowing beforehand so that different participants were tested on a differing number of songs. Separate scores (percent correct) were obtained for excerpts with and without lyrics (Gfeller et al, 2005).
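Both melody measures are scored only over items the participant reported knowing beforehand. A minimal sketch of that scoring rule follows; the function name and the example responses are hypothetical and are not taken from the test software.

```python
from typing import Optional, Sequence


def percent_correct_known(
    known: Sequence[bool], correct: Sequence[bool]
) -> Optional[float]:
    """Percent correct over only the items reported as known beforehand.

    Returns None when no item was known, because the score is undefined.
    """
    if len(known) != len(correct):
        raise ValueError("known and correct must have the same length")
    # Keep the responses for known items; unknown items are not scored.
    scored = [is_correct for is_known, is_correct in zip(known, correct) if is_known]
    if not scored:
        return None
    return 100.0 * sum(scored) / len(scored)


if __name__ == "__main__":
    # Hypothetical listener: 4 of 8 excerpts known, 1 of those recognized.
    known = [True, True, True, True, False, False, False, False]
    correct = [True, False, False, False, True, True, False, False]
    print(percent_correct_known(known, correct))
```

Because the denominator differs across participants, the resulting data are unbalanced, which matters for the choice of statistical model.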

Timbre Recognition

The Timbre Recognition test (TR; described in detail in Gfeller et al, 2002c) presented recordings of eight real musical instruments playing a standardized solo melody of seven notes in length. Scores reflected the percent correct in a closed-set test (16 options) of those instruments the subjects reported knowing prior to testing (Gfeller et al, 2002c). Participation in this test required reporting prior familiarity with at least 50% of the instruments included in the test.

Appraisal Measure

A Musical Excerpt Appraisal Measure (MEAM) (described in detail in Gfeller et al, 2003) was designed to measure appraisal of the sound quality of real-world music when transmitted through the CI. Each participant listened to eight songs without lyrics (MEAM-I) and 16 songs with lyrics (MEAM-L). This measure yielded ratings for the pleasantness or likeability of the same excerpts presented in the MERT, that is, excerpts of real-world recordings of pop, country, and classical melodies. The mean appraisal score was obtained using a visual analog scale with a range of 0–100.

The FMR, MERT, TR, and MEAM were administered in a sound-treated room via a Mac computer (model M3409) and Altec Lansing external speakers (model ACS 340). Participants responded using a Sony touch screen monitor (model CPD-2000SF). The test stimuli were transmitted through the microphone of the speech processor, which approximates to a considerable extent everyday listening experiences in quiet. The sound level was averaged at 70 dB SPL; however, implant recipients were permitted to adjust their processor for maximum comfort.

Musical Background Questionnaire (MBQ)

The extent and type of formal musical training prior to implantation, as well as listening habits and enjoyment prior to and following implantation, were quantified through the MBQ (described in detail in Gfeller et al, 2000a). The questionnaire yielded four scores that were included in the model: two scores for formal musical training (one for elementary school [MT1] and a second for secondary education and beyond [MT2]), a score for music listening habits and enjoyment prior to implantation (MLE-pre), and a score for music listening habits and enjoyment following implantation (at the time of testing) (MLE-post).

Cognitive Measures

Because implant recipients must learn to use a new signal, there has long been an interest in identifying cognitive factors that might predict implant outcome. Because evidence regarding the role of general intelligence in implant outcome has been mixed (cf. Knutson et al, 1991; Waltzman et al, 1995), we have focused our efforts on specific cognitive and attentional processes that might underlie the task confronted by an implant user attempting to perceive music. These processes include the ability to extract target features from an array of sequentially presented information, associative memory, working memory, and the ability to process signal changes quickly and accurately. In the present study we have included several such cognitive tests to determine whether some measures of cognitive abilities, when combined with other predictive variables, enhance the multivariate prediction of the accuracy of music perception and the appraisal of music. All of the tests are administered in a visual modality so that scores are not confounded with audition.

Tests of Sequential Processing

Two tests were included to assess the ability of participants to accurately identify sequential information without placing a premium on speed of responding. The first is the Sequence Completion Task (SCT; Simon and Kotovsky, 1963). The SCT presents participants with a graded series of items, each of which presents a sequence of letters that evidences a discernible pattern. Correct identification of the pattern is evidenced by a participant correctly providing the next four letters in the sequence. The second test of sequence recognition is the Raven Progressive Matrices (RPM; Raven et al, 1977), a test in which the participant must correctly identify a two-dimensional progression and select the correct item that would be placed in a missing cell. Although developed as a nonverbal test of intelligence, the RPM was selected for use on the basis of studies of eye tracking which established that performance on the RPM reflects the accurate identification of sequential patterns (Carpenter et al, 1990).

Visual Monitoring Task (VMT)

The VMT (Knutson et al, 1991) also requires participants to correctly identify sequential information, but the VMT requires rapid responding and working visual memory. On the VMT, participants view single numbers presented on a computer monitor at either a one/sec rate or a one/2 sec rate. When the currently presented number, combined with the preceding two numbers, produces an even-odd-even pattern, the participant is required to strike the space bar on a PC keyboard. Thus, a participant must continuously update their recollection of preceding sequences and respond to the current stimulus. Participant performance reflects correct responses and correct rejections as well as erroneous responses (i.e., false positive responses) and failures to respond (i.e., false rejections). These four response types are aggregated into a single score using receiver operating characteristic (ROC) analysis to provide a measure of signal discrimination that is independent of the participant’s response biases and decision criterion. One commonly used measure of signal detectability is the proportion of the area of the graph that lies below the ROC curve (Gescheider, 1997), which is a distribution-free measure of signal detection (Green and Swets, 1988). In the present study, this area is computed from the empirical ROC points using the trapezoidal rule. Thus, for each presentation rate, the participant’s score is the trapezoidal area under the ROC curve. The mean score from the two presentation rates was used in the predictive models tested.
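As a minimal sketch of this scoring, the code below computes the trapezoidal area for one presentation rate and then averages the two rates. It assumes each rate yields a single empirical ROC point (one hit rate and one false-alarm rate), joined to the corners (0, 0) and (1, 1); the source does not state how many points were used. The function names and the trial counts are hypothetical.

```python
def trapezoidal_auc(
    hits: int, misses: int, false_alarms: int, correct_rejections: int
) -> float:
    """Trapezoidal area under an ROC curve with one empirical point.

    The curve runs (0, 0) -> (false-alarm rate, hit rate) -> (1, 1).
    """
    signal_trials = hits + misses
    noise_trials = false_alarms + correct_rejections
    if signal_trials == 0 or noise_trials == 0:
        raise ValueError("need at least one signal trial and one noise trial")
    hit_rate = hits / signal_trials
    fa_rate = false_alarms / noise_trials
    points = [(0.0, 0.0), (fa_rate, hit_rate), (1.0, 1.0)]
    area = 0.0
    # Sum the trapezoids between consecutive ROC points.
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


def mean_vmt_score(fast_counts, slow_counts) -> float:
    """Mean of the per-rate areas; each argument is a 4-tuple of counts
    ordered (hits, misses, false_alarms, correct_rejections)."""
    return (trapezoidal_auc(*fast_counts) + trapezoidal_auc(*slow_counts)) / 2.0


if __name__ == "__main__":
    # Hypothetical counts for the one/sec and one/2 sec presentation rates.
    print(mean_vmt_score((14, 6, 10, 70), (18, 2, 5, 75)))
```

With a single operating point the area reduces to (1 + hit rate - false-alarm rate) / 2, so chance performance gives 0.5 and perfect discrimination gives 1.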

Speech Measures

Speech perception data have been collected using a variety of speech measures since the inception of our clinical research center in 1985. The two tests included in this study were the Consonant-Nucleus-Consonant Test (CNC) (Tillman and Carhart, 1966) and the Hearing in Noise Test (HINT) (Nilsson et al, 1994). These two measures have been used most frequently over this time span and are commonly used measures of speech reception among CI centers. Speech perception was measured in quiet using recorded CNC monosyllabic words and HINT sentences. CNC scoring was based on percent-correct performance at the word level, and the HINT sentences were scored by dividing the total number of key words correctly identified by the total number of key words possible. Two lists of CNC words and four lists of HINT sentences were presented to each participant. All speech perception lists were randomized between participants, and no participant received two of the same lists during this study. Speech recognition tests were presented at 70 dB(C) or 60 dB(A).

Statistical Analysis

Multiple linear regression models were used to analyze the relations among the independent predictor variables and each of the continuous dependent variables. These dependent variables were PRT, FMR, TR, MEAM-L, and MEAM-I. In order to represent categorical variables in the models, dummy variables were introduced. For these models there were 27 possible predictor variables. We wanted to identify the variables that best predicted each of the dependent variables. With the large number of predictor variables involved, we used univariate tests as a screening measure and selected only the variables that had p-values less than .15 for further study. These univariate tests were simple linear regression analyses for the continuous variables, t tests for dichotomous variables, and ANOVA tests for categorical variables. Variables that passed the screening measure were included in the multiple regression analyses. To identify the influential variables in the multiple regression analysis, and to eliminate the extraneous variables, we selected the best regression models on the basis of Akaike’s information criterion (AIC; Akaike, 1973). The top-ranked AIC-selected model for each outcome was chosen as the final model for interpretation.
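The AIC step can be illustrated with a short, dependency-free sketch. It assumes an exhaustive search over subsets of the already screened predictors and the common least-squares form of AIC, n ln(RSS/n) + 2k, with k counting the intercept. Neither detail is stated in the source, the univariate screening step is not implemented here, and all function names are hypothetical.

```python
import itertools
import math
from typing import Dict, Sequence, Tuple


def ols_rss(columns: Sequence[Sequence[float]], y: Sequence[float]) -> float:
    """Residual sum of squares from regressing y on an intercept plus columns."""
    n = len(y)
    design = [[1.0] + [col[i] for col in columns] for i in range(n)]
    p = len(design[0])
    # Normal equations (X'X) b = X'y, stored as an augmented matrix.
    aug = [
        [sum(design[i][r] * design[i][c] for i in range(n)) for c in range(p)]
        + [sum(design[i][r] * y[i] for i in range(n))]
        for r in range(p)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular design matrix")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(p):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    beta = [aug[r][p] / aug[r][r] for r in range(p)]
    return sum(
        (y[i] - sum(b * x for b, x in zip(beta, design[i]))) ** 2 for i in range(n)
    )


def aic(rss: float, n: int, n_params: int) -> float:
    """Least-squares AIC up to an additive constant (assumed form)."""
    return n * math.log(rss / n) + 2 * n_params


def best_subset_by_aic(
    predictors: Dict[str, Sequence[float]], y: Sequence[float]
) -> Tuple[Tuple[str, ...], float]:
    """Return the predictor subset (possibly empty) with the lowest AIC."""
    names = sorted(predictors)
    best = None
    for size in range(len(names) + 1):
        for subset in itertools.combinations(names, size):
            rss = ols_rss([predictors[name] for name in subset], y)
            score = aic(rss, len(y), len(subset) + 1)  # +1 for the intercept
            if best is None or score < best[1]:
                best = (subset, score)
    return best


if __name__ == "__main__":
    # Hypothetical data: y depends on x1; x2 carries no information about y.
    x1 = [-7, -5, -3, -1, 1, 3, 5, 7]
    x2 = [-7, 5, 7, 3, -3, -7, -5, 7]
    y = [-13.3, -9.9, -6.3, -2.5, 1.5, 5.7, 10.1, 14.7]
    print(best_subset_by_aic({"x1": x1, "x2": x2}, y))
```

Adding a predictor lowers the residual sum of squares but costs two AIC units, so an uninformative variable such as `x2` in the toy data is dropped.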

Generalized linear mixed models (GLMMs) were used to analyze the relations between the independent predictor variables and musical excerpt recognition variables (MERT-L and MERT-I). GLMMs can be used in circumstances where the outcome measure is not normally distributed (e.g., categorical) and where there are repeated measurements per participant. In the present case, the tested model is also unbalanced because of the different number of excerpts used for each participant. The possibility of testing an unbalanced model is another benefit of the flexibility of a GLMM. The excerpts that were chosen were meant to be a representative sample of any excerpt with or without lyrics; thus, the variable excerpt was treated as a random effect in the analyses. The tested model included both fixed effects and random effects for a dichotomous outcome repeated over time; thus, it is a generalized linear mixed model with random effects for participant and song. Because there is no corresponding model selection criterion for this type of model, stepwise variable selection was used.
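As a hedged illustration, a model of this kind can be written as follows, where Y_ij equals 1 if participant i recognized excerpt j and x_i holds that participant's predictor values. The logit link and the normally distributed, crossed random intercepts are assumptions made for this sketch; the source states neither the link function nor the random-effects distribution, and the symbols are introduced here only for illustration.

$$
\operatorname{logit}\,\Pr(Y_{ij} = 1 \mid u_i, v_j) = \mathbf{x}_i^{\top}\boldsymbol{\beta} + u_i + v_j,
\qquad u_i \sim N(0, \sigma_u^2), \quad v_j \sim N(0, \sigma_v^2)
$$

Here β collects the fixed effects, u_i is the random effect for participant, and v_j is the random effect for excerpt (song).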

Because of the nature of patient participation and the testing format described previously, many patients did not have time to take at least one of the tests. There is, therefore, a large degree of item nonresponse, and only 72 of the 209 participants took every test. Each selected model was then fit using all available cases for that analysis; in other words, we used every participant with measurements for the outcome and for all variables relevant to that model. This approach resulted in substantially larger sample sizes than were used in model selection. The regression (PROC REG) and GLMM (PROC GLIMMIX) analyses were performed using SAS 9.1 software (SAS Institute, 2004).
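The difference between the two samples can be sketched as follows, assuming that model selection used participants with complete data and that each final model used every participant with the variables that model needs. The helper name, the variable lists, and the records are hypothetical and serve only to illustrate the filtering.

```python
from typing import Dict, List, Optional, Sequence

Record = Dict[str, Optional[float]]


def available_cases(records: Sequence[Record], variables: Sequence[str]) -> List[Record]:
    """Keep records with no missing value (None) among the named variables."""
    return [r for r in records if all(r.get(v) is not None for v in variables)]


# Hypothetical participants; None marks a test that was not taken.
RECORDS: List[Record] = [
    {"PRT": 360.0, "VMT": 0.7, "MT2": 1.0, "CNC": 0.5, "FMR": 25.0, "HA": 0.0},
    {"PRT": 400.0, "VMT": 0.8, "MT2": 0.0, "CNC": 0.6, "FMR": None, "HA": 1.0},
    {"PRT": None, "VMT": 0.6, "MT2": 2.0, "CNC": 0.4, "FMR": 30.0, "HA": None},
    {"PRT": 300.0, "VMT": None, "MT2": 0.0, "CNC": 0.3, "FMR": 10.0, "HA": 0.0},
]

if __name__ == "__main__":
    all_variables = ["PRT", "VMT", "MT2", "CNC", "FMR", "HA"]
    prt_model = ["PRT", "VMT", "MT2", "CNC"]
    # Complete cases (every test taken) versus cases usable for one model.
    print(len(available_cases(RECORDS, all_variables)))
    print(len(available_cases(RECORDS, prt_model)))
```

Restricting the filter to one model's variables keeps participants who missed unrelated tests, which is why the final fits can use more cases than the selection step.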

RESULTS

Descriptive statistics summarizing the dependent and independent variables for the total sample of CI users contributing to the analyses are presented in Table 2. Preliminary analyses of the implant devices and strategies used by the individuals in the sample (see Table 1) failed to identify any statistically significant influence of device or strategy on music perception or appraisal. Thus, it is important to note that neither device nor strategy was an influential variable in any of the models that were tested.

Table 2.

Summary of the Variables Used for Model Selection

| Variable | Meaning | Obs. | Mean | Min. | Max. |
|---|---|---|---|---|---|
| Independent Variables | | | | | |
| Device | Cochlear implant hardware | 209 | - | - | - |
| Strategy | Cochlear implant software | 207 | - | - | - |
| Bilat | Bilateral CI user (1 = yes, 0 = no) | 209 | 0.12 | 0 | 1 |
| HA | Hearing aid user (1 = yes, 0 = no) | 147 | 0.21 | 0 | 1 |
| LPD | Length of profound deafness (years) | 176 | 10.75 | 0 | 58 |
| YrImplnt | Year of implant | 209 | 1997.73 | 1984 | 2004 |
| MOU | Months of use | 209 | 42.26 | 9 | 255 |
| MLE-pre | Music listening experience prior to implantation | 204 | 5.12 | 0 | 8 |
| MLE-post | Music listening experience after implantation | 204 | 3.71 | 0 | 8 |
| MT1 | Amount of music training (elementary school) | 194 | 5.35 | 0 | 27 |
| MT2 | Amount of music training (high school & adult) | 180 | 1.20 | 0 | 20 |
| VMT1 | Visual Monitoring Test 1 | 166 | 0.70 | 0 | 1 |
| VMT2 | Visual Monitoring Test 2 | 165 | 0.63 | 0 | 1 |
| RPM | Raven Progressive Matrices | 166 | 48.64 | 11 | 78 |
| SCT | Sequence Completion Task | 168 | 21.03 | 0 | 48 |
| CNC | Consonant-Nucleus-Consonant Test | 192 | 0.46 | 0 | 0.92 |
| HINT | Hearing in Noise Test | 195 | 0.76 | 0 | 1 |
| Age | Age at time of test | 209 | 60.18 | 23.7 | 92.5 |
| Dependent Variables | | | | | |
| PRT | Pitch Ranking Test (total out of 540) | 95 | 363.94 | 214 | 493 |
| FMR | Familiar melody recognition (percent correct) | 147 | 25.73 | 0 | 87.5 |
| MERT-I | Melody recognition, instrumental (percent correct) | 134 | 4.48 | 0 | 50 |
| MERT-L | Melody recognition, lyrics present (percent correct) | 134 | 13.82 | 0 | 87.5 |
| TR | Timbre recognition (percent correct) | 177 | 43.88 | 6.7 | 100 |
| MEAM-I | Melody appraisal, instrumental (range 0–100) | 160 | 49.04 | 7.1 | 94.5 |
| MEAM-L | Melody appraisal, with lyrics (range 0–100) | 160 | 60.50 | 28.3 | 96.4 |

The results of the model selection procedures are shown in Table 3. For each outcome, the number of individuals used for the final analysis is presented, along with the coefficient of multiple determination (r2), the p-value for the overall F test, the variables present in the AIC-selected model, their regression coefficients, and the p-value of the t test for the individual parameters. Note that the GLMM analyses have neither corresponding r2 values nor an overall F test. All models were significant at the .05 level. In the results from the individual models that follow, it is worth noting that, because the models were selected using a subset of the subjects that are used to fit the resulting model (see “Statistical Analysis” section for details), some of the models selected contain covariates with nonsignificant p-values (p > .05). This is likely caused by random variation between the samples, but it could be due to systematic differences between the two sets of subjects with regard to the relationship in question. In either case, there is statistical support for the position that these variables are at least moderately linked to the outcome.

Table 3.

AIC-Selected Regression Models

| Dependent variable | n | r² | P-value (overall F test) | Predictor variable | β | P-value (t test) |
|---|---|---|---|---|---|---|
| PRT | 98 | 0.209 | <0.001 | VMT | 70.38 | <0.001 |
| | | | | MT2 | 2.45 | 0.115 |
| | | | | CNC | 50.56 | 0.019 |
| FMR | 86 | 0.314 | <0.001 | HA | 9.44 | 0.083 |
| | | | | MT2 | 1.66 | 0.014 |
| | | | | VMT | 42.74 | <0.001 |
| MERT-I | 89 | | | HA | 0.12 | 0.01 |
| | | | | MT2 | 0.02 | <0.001 |
| | | | | Age | −0.01 | <0.001 |
| MERT-L | 129 | | | MOU | 0.00 | 0.028 |
| | | | | MLE-post | 0.02 | 0.022 |
| | | | | HINT | 0.21 | 0.006 |
| | | | | Age | −0.01 | <0.001 |
| TR | 149 | 0.169 | <0.001 | MLE-post | 1.87 | 0.029 |
| | | | | MT2 | 1.46 | 0.011 |
| | | | | HINT | 21.43 | <0.001 |
| MEAM-I | 144 | 0.068 | 0.007 | MLE-post | 1.61 | 0.014 |
| | | | | VMT | 7.97 | 0.076 |
| MEAM-L | 110 | 0.177 | 0.0004 | MLE-pre | 0.87 | 0.096 |
| | | | | Bilat | 6.25 | 0.028 |
| | | | | HINT | 10.88 | 0.02 |
| | | | | HA | 5.21 | 0.065 |

r² = coefficient of multiple determination; β = regression coefficient
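For the linear models, the overall F statistic can be recovered from the coefficient of multiple determination. The sketch below does this for two rows of Table 3, assuming that each model was fitted with an intercept and that the tabled n is the fitting sample; neither detail is stated in the table, so the results are a consistency check rather than reported values. The function name `overall_f` is hypothetical.

```python
def overall_f(r2, n, k):
    """Overall F statistic for a linear model with k predictors plus an intercept."""
    df_model = k
    df_error = n - k - 1
    return (r2 / df_model) / ((1.0 - r2) / df_error)


# Values taken from Table 3: PRT (three predictors) and MEAM-I (two predictors).
f_prt = overall_f(r2=0.209, n=98, k=3)
f_meam_i = overall_f(r2=0.068, n=144, k=2)
print(round(f_prt, 2), round(f_meam_i, 2))
```

Under these assumptions the statistics are roughly 8.3 on (3, 94) degrees of freedom and 5.1 on (2, 141) degrees of freedom, which is in line with the small overall p-values reported for those two models.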

Pitch Ranking Test (PRT)

The t tests for the individual parameters of the regression model show that high school/adult music training (p = .115), Visual Monitoring Task score (p < .001), and CNC score (p = .019) formed the best set of predictors of pitch ranking. Higher scores on all three of these measures were associated with higher Pitch Ranking Test scores. For example, if all other covariates remained the same, an increase of 10 percentage points in an individual’s VMT score (0.10 on the proportion scale implied by the coefficient) increases the expected value of his/her PRT score by approximately seven points.

Familiar Melody Recognition (FMR)

The regression analysis for melody recognition reveals that wearing a hearing aid (p = .083), high school/adult music training (p = .014), and visual monitoring task score (p < .001) yielded the best set of predictors of melody recognition. Higher FMR scores were associated with users who wore hearing aids, had more musical training, and had higher visual monitoring task scores. For example, adjusted for VMT scores and musical training, hearing aid users scored about 9.4 percentage points higher on familiar melody recognition than nonusers.
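The worked examples given for the PRT and FMR models both follow from multiplying a regression coefficient by the change in its predictor while the other covariates stay fixed. A minimal sketch using the coefficients in Table 3 is shown here; the helper name `expected_change` is hypothetical, and the treatment of VMT as a proportion (so that 10 points equals 0.10) is inferred from the size of the coefficient rather than stated in the text.

```python
def expected_change(beta, delta):
    """Change in the expected outcome when one predictor moves by `delta`,
    with all other covariates held constant."""
    return beta * delta


# PRT model: VMT coefficient 70.38, VMT raised by 0.10 (10 percentage points).
prt_change = expected_change(70.38, 0.10)

# FMR model: hearing aid use coded 0/1, coefficient 9.44.
fmr_change = expected_change(9.44, 1)

print(round(prt_change, 2), round(fmr_change, 2))
```

These reproduce the "approximately seven points" on the PRT and the roughly 9.4-point difference in FMR percent correct between hearing aid users and nonusers.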

Musical Excerpt Recognition Test (MERT-I)—Instrumental

For song recognition without lyrics, use of a hearing aid (p = .01), high school/adult music training (p < .001), and age (p < .001) proved to be the best model. Recognition of excerpts declined with age and improved with hearing aid use and musical training.

Musical Excerpt Recognition Test (MERT-L)—Lyrics Present

For the recognition of songs with lyrics, the covariates months of use (p = .028), music listening experience after implantation (p = .022), speech perception (HINT sentence scores) (p = .006), and age (p < .001) comprised the best model. Recognition of excerpts with lyrics declined with age but improved with increased months of CI use, postimplantation music listening experience, and better speech perception.
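The MERT coefficients come from GLMMs, so they are not on the same scale as the linear-model coefficients. The link function is not stated in this section; if, purely as an assumption, a logit link was used, each coefficient would be a change in log odds of a correct response, and exponentiating it would give an odds ratio. The sketch below shows that conversion for two MERT-L coefficients from Table 3; the function name `odds_ratio` is hypothetical.

```python
import math


def odds_ratio(beta, delta=1.0):
    """Odds ratio for a change of `delta` in a predictor.

    ASSUMPTION: the GLMM uses a logit link, which this section does not state.
    """
    return math.exp(beta * delta)


# MERT-L model: HINT coefficient 0.21 (HINT scored 0 to 1), age coefficient -0.01 per year.
or_hint_full_range = odds_ratio(0.21, 1.0)   # HINT from 0 to 1
or_age_per_decade = odds_ratio(-0.01, 10.0)  # ten additional years of age

print(round(or_hint_full_range, 3), round(or_age_per_decade, 3))
```

If the models used a different link, these numbers would not apply, but the direction of each effect (positive for HINT, negative for age) would be unchanged.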

Timbre Recognition (TR)

Music listening experience after implantation (p = .029), high school/adult music training (p = .011), and speech perception (as measured by HINT sentence scores) (p < .001) were found to be the best predictors of timbre recognition. Higher measures for all three covariates were associated with increased timbre recognition.

Musical Excerpt Appraisal Measure (MEAM-I)—Instrumental

The predictor variables in the best model for instrumental song appraisal were music listening experience after implantation (p = .014) and the average visual monitoring task score (p = .076). Higher measures of both variables were linked to more favorable appraisal of instrumental music.

Musical Excerpt Appraisal Measure (MEAM-L)—Lyrics Present

Music listening experience prior to implantation (p = .096), bilateral implantation (p = .028), speech perception (HINT sentence scores) (p = .02), and use of a hearing aid (p = .065) proved to be the predictors yielding the best model for appraisal of music containing lyrics. Hearing aid use and bilateral implantation were both associated with higher appraisal of music containing lyrics. Higher appraisal of music with lyrics was also associated with more accurate speech perception and more music listening experience prior to implantation.

DISCUSSION

When speech perception is the criterion for implant benefit, accuracy of perception is the standard of measurement. When music perception is the criterion of implant benefit, accuracy of perception is only one criterion. The other criterion is the appraisal of the percept. That is, although the ability to recognize a musical excerpt can reflect an important outcome of implantation, whether the implant user evaluates the musical signal positively will determine whether they choose to listen to music and whether they evaluate the outcome of the implant favorably. For that reason, the current analyses distinguished between the ability to correctly identify musical stimuli and the subject’s appraisal of those musical stimuli. By adopting a multiple regression strategy, it was possible to determine whether combinations of variables were better than individual predictors, but the strategy also made it possible to eliminate individual predictors that provide essentially redundant information. Importantly, for most of these analyses, the significant predictors of percept accuracy were not predictors of appraisal. Similarly, variables that predicted appraisal were not necessarily significant predictors of accuracy. Thus, not only are perceptual accuracy and perceptual appraisal different indices of implant benefit, these indices are predicted by different combinations of variables. These results not only add to the justification for considering accuracy and appraisal differently, they underscore the possibility that different processes may underlie the different aspects of musical perception by implant users.

The relations between the dependent and predictor variables, and the clinical implications of these relations, are clarified by classifying predictors into three broad categories: technical factors, individual differences, and life experiences. With regard to technical factors (see Figure 1), neither device nor strategy were significant predictors of any of the five dependent variables, nor did they account for added variance in multiple regression analyses. Thus, the notion that some devices and some strategies would afford implant users greater capacity for musical perception and appreciation was not supported in these analyses. The use of hearing aids, however, was a significant contributing factor for several measures of recognition and appraisal of music, with and without lyrics. These results are consistent with research regarding bimodal stimulation for those CI users with a long electrode array who have usable acoustic hearing (most typically in the nonimplanted ear). Preliminary studies with such recipients indicate that the usable acoustic hearing (e.g., in a bimodal condition of a CI plus a contralateral hearing aid) can enhance some aspects of music listening such as pitch perception or melody recognition (Büchler et al, 2004; Dillier, 2004; Kong et al, 2005). With regard to more conventional internal arrays, this finding does suggest that implant users who can also use a hearing aid in either ear might enjoy enhanced music appreciation relative to persons who only use an implant. From the standpoint of evolving implant design, this finding can be seen as supporting recent efforts to develop implant designs or surgical techniques that help to preserve residual hearing (James et al, 2006).

Figure 1.

Relations between dependent variables and factors related to technical differences. The arrows indicate the presence of statistically significant relations between the dependent variables and predictor variables classified as technical differences. The p value is indicated by the type of arrow. NS indicates variables are not significant.

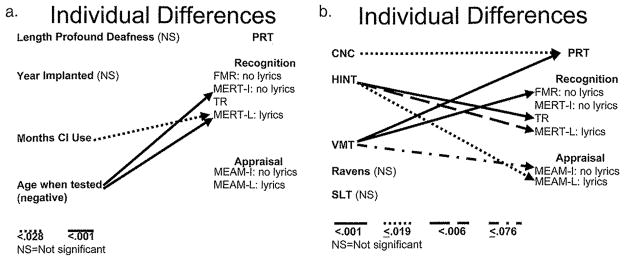

With regard to individual differences (see Figures 2a and 2b) predicting music perception, those variables related to hearing history (e.g., length of implant experience) show an interesting contrast relative to speech reception data, in which improved speech perception scores are associated with more implant experience (cf. Tyler and Summerfield, 1996). As the present data indicate, greater length of implant experience with the CI is a predictor only for recognition of real-world melodies that also have linguistic information. In short, when musical stimuli have lyrics, some factors that have been shown to influence speech perception also influence accuracy of music perception. Greater age at time of testing being negatively correlated with recognition of the real-world melodies in this protocol merits further investigation. Although it might be assumed that older adults would have less familiarity with the selections in the music tests, the selections in those tests were based on considerable evidence that the melodies would be familiar to the population studied (Gfeller et al, 2005). Thus, while replication with other melodies might be considered, it is likely that other processes associated with aging might account for the results.

Figure 2.

Relations between dependent variables and factors related to individual differences. The arrows indicate the presence of statistically significant relations between the dependent variables and predictor variables classified as individual differences. The p value is indicated by the type of arrow. NS indicates variables are not significant.

The limited contribution of performance on speech perception tests in predicting music perception and appraisal reinforces the notion that performance on music perception tests does not provide redundant information in the evaluation of implant success. As the analyses indicate, speech perception (i.e., performance on the HINT) contributes primarily to the prediction of those tests that include lyrics. Although the association between better speech perception and the perception of musical excerpts with lyrics is not surprising, it is the general absence of an association between speech perception and musical perception that is important to note. Quite simply, in isolation or in combination with other variables, strong performance on speech perception measures largely did not predict music perceptual accuracy or positive appraisal of music in the absence of lyrics.

There were two exceptions to the poor prediction of music perception without lyrics. The HINT scores combined with musical training and postimplant listening experience contributed to timbre recognition, and the CNC scores combined with a cognitive measure and musical training contributed to the prediction of pitch ranking. These results may point to some general ability to respond to acoustic events that is tapped by both speech perception tests and music perception tests. The link between speech perception and music perception involving lyrics and the absence of a link between speech perception and music perception without lyrics is quite consistent with research that distinguished between those features of speech and music that are more salient to understanding, in particular, spectral information being more important for perception of pitch and timbre, and broad temporal features being adequate for perception of speech (e.g., Qin and Oxenham, 2003; Kong et al, 2004). Yet, the prediction of timbre and pitch ranking by speech perception measures might seem to be inconsistent with such findings. It is important to note, however, that neither the HINT nor the CNC scores alone accounted for much variance in timbre recognition or pitch ranking. Thus, it seems probable that these measures all reflect a fundamental ability to respond to acoustic events.

Of the three cognitive measures we included in the tested models, the Visual Monitoring Task (VMT) emerged as the best predictor—perhaps because it not only requires correct identification of sequential information, as do the RPM and the SLT, but it also requires rapid responding and working memory, which may be more reflective of the task demands facing a CI recipient trying to extract meaningful information from a complex, rapidly changing but degraded musical signal. To the extent that the VMT has been an effective predictor of speech perception (cf. Knutson et al, 1991; Knutson, 2006), the utility of the VMT in contributing to the prediction of music perception and appraisal in the absence of lyrics suggests that some specialized cognitive processing and general working memory is critically important for the realization of maximum benefit from the existing implant designs. Although other research with pediatric implant recipients has implicated working memory in speech perception outcomes (e.g., Pisoni and Geers, 2000; Cleary et al, 2002; Pisoni and Cleary, 2003), that research has caused Cleary et al (2002) to argue that auditory working memory rather than general working memory underlies implant benefit. In the present study, using cross-modality (vision and hearing) prediction from the VMT, there is evidence that adult implant recipients with better visual working memory are likely to realize greater benefits from the implant, especially when other favorable predictors are present. Thus, additional work on the role of general and modality-specific working memory in implant benefit is indicated.

With regard to life experience (see Figure 3), while general music education in grade school was not a significant predictor, we did find formal music training in high school, college, and beyond to be a significant predictor for four dependent variables with no lyrics. Importantly, in previous studies that we have conducted with smaller samples, we did not find formal training prior to implantation to be significantly correlated with greater accuracy on a number of measures of music perception. In the more comprehensive analyses with a larger sample size in the present study, formal music training at a more advanced level has now emerged as a strong predictor of success on tasks with no lyrics, in which the listener must rely more on spectral information. From a rehabilitative perspective, it is encouraging to note that more time spent listening to music after implantation is associated with greater accuracy and more favorable appraisal of real-world music with and without lyrics. It is also important to note that the positive experience that comes with better accuracy and appraisal of music might also enhance the willingness of implant users to engage in music listening opportunities.

Figure 3.

Relations between dependent variables and factors related to life experiences. The arrows indicate the presence of statistically significant relations between the dependent variables and predictor variables classified as life experiences. The p value is indicated by the type of arrow. NS indicates variables are not significant.

The key findings of this study have several implications for future research initiatives in the implant field. First of all, it is important to recognize that factors can contribute differentially to the variability among adult CI recipients with regard to music perception and music enjoyment. These results underscore the importance of evaluating both the accuracy of music perception and the degree of music enjoyment as distinguishable indices of implant outcome. Because perceptual acuity and appraisal emerged as two distinct aspects of music listening, they cannot be assessed with a single omnibus measure of music listening and perception.

The presence or absence of sung lyrics as an important moderator variable is not surprising, given that the CI has been designed primarily to assist in communication of linguistic information. It is important to acknowledge, however, that not all CI recipients can extract the lyrics from music. To some extent, one might consider instrumental accompaniment that often accompanies a vocalist to be the equivalent of background noise (especially in the case of larger and louder accompaniments), since it can mask and thus compete with the perception of target lyrics. In addition, some studies have shown that word recognition of sung lyrics can be more difficult than spoken lyrics (Hsiao et al, 2006); thus the changes in phoneme production that are common in singing (Vongpaisal et al, 2005) may result in reduced word recognition. In short, the extent of success in music listening by CI users will vary depending upon a variety of factors including age of the subject, the response task (open or closed set), the familiarity or difficulty of the vocabulary, the hearing history of the listener, cognitive skills, and whether a musical accompaniment essentially overpowers the singer.

The emergence of acuity, appraisal, and lyrics as distinct aspects determining music perception by CI users has implications for selecting test stimuli as well as for the response tasks that might be incorporated in rehabilitative training programs. Furthermore, life experiences or individual characteristics significantly influenced all seven of the music perception indices examined in this study. While CI users tend to show significant improvements in speech recognition as a result of everyday listening experiences, we do not see significant improvements in music perception and enjoyment as a result of incidental exposure to music in everyday life. This has implications for the provision of counseling of our CI patients with regard to device benefit and making judicious choices for listening experiences, as well as the provision of training to help people optimize CI benefit for music. Because not all CI recipients have useable residual hearing and long electrodes still remain the primary commercial option for implant recipients, actual postimplant training may be one of the more viable options for improving music perception and enjoyment for those implant recipients who use contemporary technology and aspire to having some music in their lives.

Acknowledgments

This study was supported by grant 2 P50 DC00242 from the National Institute on Deafness and Other Communication Disorders, NIH; grant M01-RR-59 from the General Clinical Research Centers Program, National Center for Research Resources, NIH; Lions Clubs International Foundation; and Iowa Lions Foundation.

Abbreviations

- AIC: Akaike’s information criterion

- CI: cochlear implant

- CNC: Consonant-Nucleus-Consonant Test

- FMR: Familiar Melody Recognition test

- GLMM: generalized linear mixed model

- HINT: Hearing in Noise Test

- MBQ: Musical Background Questionnaire

- MEAM-I: Musical Excerpt Appraisal Measure, no lyrics

- MEAM-L: Musical Excerpt Appraisal Measure, lyrics

- MERT-I: Musical Excerpt Recognition Test, no lyrics

- MERT-L: Musical Excerpt Recognition Test, lyrics

- ROC: receiver operating characteristics

- RPM: Raven Progressive Matrices

- SLT: Sequence Learning Test

- VMT: Visual Monitoring Task

Footnotes

Portions of this article were presented in the keynote address for Music Perception for Cochlear Implant Workshops, University of Washington, Seattle, October 17, 2006, and at the 9th International Conference on Cochlear Implants, Vienna, Austria, June 16, 2006.

References

- Akaike H. Information theory and an extension of the maximum likelihood principle. In: Petrov BN, Csaki F, editors. Second International Symposium on Information Theory. 2. Budapest, Hungary: Akadémiai Kiadó; 1973. pp. 267–281.

- Büchler M, Lai WK, Dillier N. Music perception with cochlear implants. Poster presented at ZNZ Symposium; Zurich. 2004. www.unizh.ch/orl/publications/

- Carpenter PA, Just MA, Shell P. What one intelligence test measures: a theoretical account of the processing in the Raven Progressive Matrices Test. Psychol Rev. 1990;97(3):404–431.

- Cleary M, Pisoni DB, Kirk KI. Working memory spans as predictors of spoken word recognition and receptive vocabulary in children with cochlear implants. Volta Rev. 2002;102:259–280.

- Dillier N. Combining cochlear implants and hearing instruments. Proceedings of the Third International Pediatric Conference; Nov 2004; Chicago, IL, Phonak. 2004. pp. 163–172.

- Dorman M, Basham K, McCandless G, Dove H. Speech understanding and music appreciation with the Ineraid cochlear implant. Hear J. 1991;44(6):32–37.

- Fujita S, Ito J. Ability of Nucleus cochlear implantees to recognize music. Ann Otol Rhinol Laryngol. 1999;108:634–640. doi: 10.1177/000348949910800702.

- Gescheider GA. Psychophysics: The Fundamentals. 3. Mahwah, NJ: L. Erlbaum Associates; 1997.

- Gfeller KE. Aural rehabilitation of music listening for adult cochlear implant recipients: addressing learner characteristics. Music Ther Perspect. 2001;19:88–95.

- Gfeller K, Christ A, Knutson J, Witt S, Mehr M. The effects of familiarity and complexity on appraisal of complex songs by cochlear implant recipients and normal-hearing adults. J Music Ther. 2003;40(2):78–112. doi: 10.1093/jmt/40.2.78.

- Gfeller K, Christ A, Knutson J, Witt S, Murray K, Tyler R. Musical backgrounds, listening habits and aesthetic enjoyment of adult cochlear implant recipients. J Am Acad Audiol. 2000a;11:390–406.

- Gfeller K, Knutson JF, Woodworth G, Witt S, DeBus B. Timbral recognition and appraisal by adult cochlear implant users and normal-hearing adults. J Am Acad Audiol. 1998;9:1–19.

- Gfeller K, Lansing C. Melodic, rhythmic and timbral perception of adult cochlear implant users. J Speech Hear Res. 1991;34:916–920. doi: 10.1044/jshr.3404.916.

- Gfeller K, Lansing C. Musical perception of cochlear implant users as measured by the Primary Measure of Music Audiation: an item analysis. J Music Ther. 1992;29(1):18–39.

- Gfeller K, Olszewski C, Rychener M, Sena K, Knutson JF, Witt S, Macpherson B. Recognition of “real-world” musical excerpts by cochlear implant recipients and normal-hearing adults. Ear Hear. 2005;26(3):237–250. doi: 10.1097/00003446-200506000-00001.

- Gfeller K, Olszewski C, Turner C, Gantz B, Oleson J. Music perception with cochlear implants and residual hearing. Audiol Neurootol. 2006;11(Suppl 1):12–15. doi: 10.1159/000095608.

- Gfeller K, Turner C, Oleson J, Zhang X, Gantz B, Froman R, Olszewski C. Accuracy of cochlear implant recipients on pitch perception, melody recognition and speech reception in noise. Ear Hear. 2007;28(3):412–423. doi: 10.1097/AUD.0b013e3180479318.

- Gfeller K, Turner C, Woodworth G, Mehr M, Fearn R, Witt S, Stordahl J. Recognition of familiar melodies by adult cochlear implant recipients and normal-hearing adults. Cochlear Implants Int. 2002a;3:31–55. doi: 10.1179/cim.2002.3.1.29.

- Gfeller K, Witt S, Adamek M, Mehr M, Rogers J, Stordahl J. The effects of training on timbre recognition and appraisal by postlingually deafened cochlear implant recipients. J Am Acad Audiol. 2002b;13:132–145.

- Gfeller K, Witt S, Stordahl J, Mehr M, Woodworth G. The effects of training on melody recognition and appraisal by adult cochlear implant recipients. J Acad Rehabil Audiol. 2000b;33:115–138.

- Gfeller K, Witt S, Woodworth G, Mehr M, Knutson JF. Effects of frequency, instrumental family, and cochlear implant type on timbre recognition and appraisal. Ann Otol Rhinol Laryngol. 2002c;111:349–356. doi: 10.1177/000348940211100412.

- Gfeller K, Woodworth G, Robin D, Witt S, Knutson JF. Perception of rhythmic and sequential patterns by normally hearing adults and adult cochlear implant users. Ear Hear. 1997;18:252–260. doi: 10.1097/00003446-199706000-00008.

- Green DM, Swets JA. Signal Detection Theory and Psychophysics. Los Altos, CA: Peninsula Publishing; 1988. (Orig. pub. 1966)

- Hong RS, Rubinstein JT. High-rate conditioning pulse trains in cochlear implants: dynamic range measures with sinusoidal stimuli. J Acoust Soc Am. 2003;114(6):3327–3342. doi: 10.1121/1.1623785.

- Hsiao F, Gfeller K, Huang T, Hsu H. Perception of familiar melodies by Taiwanese pediatric cochlear implant recipients who speak a tonal language. Paper presented at the 9th International Conference on Cochlear Implants; Vienna, Austria. 2006.

- Huron D. Is music an evolutionary adaptation? In: Peretz I, Zatorre R, editors. The Cognitive Neuroscience of Music. Oxford: Oxford University Press; 2004. pp. 57–78.

- James CJ, Fraysse B, Deguine O, Lenarz T, Mawman D, Ramos A, Ramsden R, Sterkers O. Combined electroacoustic stimulation in conventional candidates for cochlear implantation. Audiol Neurootol. 2006;11(Suppl 1):57–62. doi: 10.1159/000095615.

- Knutson JF. Psychological aspects of cochlear implantation. In: Cooper H, Craddock L, editors. Cochlear Implants: A Practical Guide. 2. London: Whurr Publisher Limited; 2006. pp. 151–178.

- Knutson J, Hinrichs J, Tyler R, Gantz B, Schartz H, Woodworth G. Psychological predictors of audiological outcomes of multichannel cochlear implants: preliminary findings. Ann Otol Rhinol Laryngol. 1991;100:817–822. doi: 10.1177/000348949110001006.

- Kong Y, Cruz R, Jones J, Zeng F. Music perception with temporal cues in acoustic and electric hearing. Ear Hear. 2004;25(2):173–186. doi: 10.1097/01.aud.0000120365.97792.2f.

- Kong Y, Stickney G, Zeng F. Speech and melody recognition in binaurally combined acoustic and electric hearing. J Acoust Soc Am. 2005;117:1351–1361. doi: 10.1121/1.1857526.

- Laneau J, Wouters J, Moonen M. Relative contributions of temporal and place pitch cues to fundamental frequency discrimination in cochlear implantees. J Acoust Soc Am. 2004;116(6):3606–3619. doi: 10.1121/1.1823311.

- Leal M, Shin Y, Laborde M, Calmels M, Vergas S, Lugardon S, Andrieu S, Deguine O, Fraysse B. Music perception in adult cochlear implant recipients. Acta Otolaryngol. 2003;123:826–835. doi: 10.1080/00016480310000386.

- Looi V, McDermott H, McKay C, Hickson L. Pitch discrimination and melody recognition by cochlear implant users. Paper presented at the VIII Cochlear Implant Conference; Indianapolis, Indiana. 2004.

- McDermott H. Music perception with cochlear implants: a review. Trends Amplif. 2004;8(2):49–81. doi: 10.1177/108471380400800203.

- Nielzen S, Cesarec Z. Emotional experiences of music as a function of musical structure. Psychol Music. 1982;10:81–85s.

- Nilsson M, Soli SD, Sullivan JA. Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am. 1994;95:1085–1099. doi: 10.1121/1.408469.

- Pijl S. Labeling of musical interval size by cochlear implant patients and normally hearing subjects. Ear Hear. 1997;18:364–372. doi: 10.1097/00003446-199710000-00002.

- Pisoni DB, Cleary M. Measures of working memory span and verbal rehearsal speed in deaf children after cochlear implantation. Ear Hear. 2003;24:106S–120S. doi: 10.1097/01.AUD.0000051692.05140.8E.

- Pisoni DB, Geers A. Working memory in deaf children with cochlear implants: correlations between digit span and measures of spoken language processing. Ann Otol Rhinol Laryngol. 2000;109:92–93. doi: 10.1177/0003489400109s1240.

- Qin MK, Oxenham AJ. Effects of simulated cochlear-implant processing on speech reception in fluctuating maskers. J Acoust Soc Am. 2003;114:446–454. doi: 10.1121/1.1579009.

- Raven JC, Court JH, Raven J. Manual for Raven’s Progressive Matrices and Vocabulary Scales. London: Lewis; 1977.

- Rubinstein JT, Wilson BS, Finley CC, Abbas PJ. Pseudospontaneous activity: stochastic independence of auditory nerve fibers with electrical stimulation. Hear Res. 1999;127(1–2):108–118. doi: 10.1016/s0378-5955(98)00185-3.

- SAS Institute. SAS/STAT 9.1 User’s Guide. Cary, NC: SAS Institute; 2004.

- Schulte E, Kerber M. Music perception with the MED-EL implants. In: Hochmair-Desoyer LJ, Hochmair EC, editors. Advances in Cochlear Implants. Vienna, Austria: Menz; 1994. pp. 326–332.

- Simon H, Kotovsky K. Human acquisition of concepts for sequential patterns. Psychol Rev. 1963;70:543–546. doi: 10.1037/h0043901.

- Tillman TW, Carhart R. An Expanded Test for Speech Discrimination Utilizing CNC Monosyllabic Words. Northwestern University Auditory Test No. 6 (Technical Report No. SAM-TR-66-55) Brooks Air Force Base, TX: USAF School of Aerospace Medicine; 1966.

- Tyler RS, Summerfield AQ. Cochlear implantation: relationships with the research on auditory deprivation and acclimatization. Ear Hear. 1996;17:38S–50S. doi: 10.1097/00003446-199617031-00005.

- Vongpaisal T, Trehub S, Schellenberg G. Challenges of decoding the words of songs. Paper presented at 10th Symposium on Cochlear Implants in Children; Dallas. 2005.

- Waltzman SB, Fisher SG, Niparko JK, Cohen NL. Predictors of postoperative performance with cochlear implants. Ann Otol Rhinol Laryngol. 1995;165(Suppl):15–18.

- Wilson B. Cochlear implant technology. In: Kirk KI, Niparko JK, Mellon NK, Robbins AM, Tucci DL, Wilson BS, editors. Cochlear Implants: Principles and Practices. New York: Lippincott, Williams and Wilkins; 2000. pp. 109–118.