Abstract

The neural response to stimulus repetition is not uniform across brain regions, stimulus modalities, or task contexts. For instance, many functional magnetic resonance imaging (fMRI) studies have observed that stimulus repetition sometimes leads to a relative reduction in neural activity (repetition suppression), whereas in other cases repetition results in a relative increase in activity (repetition enhancement). In the present study, we hypothesized that in the context of a verbal short-term recognition memory task, repetition-related “increases” should be observed in the same posterior temporal regions that have previously been associated with “persistent activity” in working memory rehearsal paradigms. We used fMRI and a continuous recognition memory paradigm with short lags to examine repetition effects in the posterior and anterior regions of the superior temporal cortex. Consistent with our hypothesis, the 2 posterior temporal regions consistently associated with working memory maintenance also showed repetition increases during short-term recognition memory. In contrast, a region in the anterior superior temporal lobe showed repetition suppression effects, consistent with previous work on perceptual adaptation in the auditory–verbal domain. We interpret these results in light of recent theories of functional specialization along the anterior–posterior axis of the superior temporal lobe.

Keywords: auditory–verbal working memory, continuous recognition, fMRI, recognition memory, working memory, reactivation, repetition suppression

Introduction

Recent neuroscience research has uncovered 2 basic neural signatures associated with memory for items or events: “repetition suppression” and “reactivation” (Desimone 1996). Many studies have shown that brain activity in a variety of areas may be attenuated when a stimulus is repeated, a phenomenon that has been linked to the psychological notion of “priming” (Henson 2003; Grill-Spector et al. 2006) and is thought to be one of the central mechanisms underlying implicit memory (Schacter et al. 2004). Reactivation, in contrast, refers to a phenomenon in which the same cortical area that is activated during the initial perception of a stimulus is also activated when the stimulus is later brought to mind in the act of remembering. This idea has a long history in neuroscience and psychology (an outline of it can be found at least as far back as the writings of the neurologist Wernicke [1874]) but has only recently received solid empirical support in human memory research. For instance, Wheeler et al. (2000) and Nyberg et al. (2000) have shown that cued associative recall of visual and auditory stimuli from long-term memory causes activation increases within the same cortical regions that were involved in the initial perception of those events. Polyn et al. (2005) showed, moreover, that the pattern of activity in object-selective areas of visual cortex, such as the fusiform and parahippocampal gyri, could be used to predict the category (e.g., face or object) of a retrieved item during a free recall task. Damasio (1989) has argued that conscious perceptual memory, in both recall and recognition, emerges from the reactivation, or cortical “retroactivation,” of neural ensembles in “sensory” cortices that represent the sensory features of a stimulus.
Moreover, according to this view, cortical reactivation is not the result of isolated neural activity at a single brain region but rather the result of sustained multiregional coactivation between reciprocally connected unimodal sensory and multimodal “convergence” zones primarily in the parietal and frontal lobes.

This type of multiregional neural activation is thought to occur in the context of short-term memory maintenance, where regions in frontoparietal “control” areas as well as posterior sensory regions have been observed to show persistent activity across a short (∼10 s) memory delay (e.g., Postle et al. 2003). In the case of short-term memory maintenance, however, reactivation is mediated by an internal rehearsal process or sensorimotor loop (Fuster 1997; Baddeley 2003) rather than by an external cue or stimulus. Johnson and colleagues have referred to this kind of top-down control of memory representations as “refreshing” (Raye et al. 2002; Johnson et al. 2007; Yi et al. 2008); it may be viewed as a special case of reactivation in which neural representations are triggered by an internal control process (that is, “active maintenance”) rather than by an external sensory event.

Although it is well known that active maintenance is associated with sustained neural activity in perceptual regions during working memory (Druzgal and D'Esposito 2003; Rama et al. 2004; Buchsbaum et al. 2005; Postle 2006), less is known about how external stimuli modulate activity in perceptual regions during short-term recognition memory. One intriguing possibility is that reactivation in posterior sensory regions elicited by stimulus repetition has a similar underlying mechanism to the kind of reactivation that occurs during working memory maintenance—except for one crucial difference: in working memory, reactivation is mediated via top-down control signals that presumably arise from the prefrontal and parietal cortices, whereas in short-term recognition memory, reactivation is triggered by sensory stimulus processing. According to this view, active memory traces residing in posterior sensory cortex can be “reactivated” either from a bottom-up sensory signal or by way of a top-down control signal.

Much of the neuroimaging research examining the effect of stimulus repetition over short intervals, however, has revealed repetition suppression effects rather than reactivation (Grill-Spector et al. 2006), though such studies have not typically required an explicit recognition memory judgment (but see Druzgal and D'Esposito 2001). Nevertheless, on the basis of the many studies demonstrating repetition suppression effects in perceptual areas over short intervals, one might hypothesize that relative decreases in activation, rather than increases, serve as the predominant “memory signal” in perceptual processing regions during short-term recognition memory. If, however, as we have suggested above, a similar mechanism underlies reactivation during active maintenance in working memory and in recognition memory, then one would predict that the same perceptual regions that show delay period activity during working memory maintenance would also show reactivation effects during short-term recognition memory. In the present study, we test this prediction by examining how sensory/perceptual cortical areas in a well-characterized verbal working memory network are modulated by stimulus repetition in the context of a short-term recognition memory paradigm.

Recent neuroimaging studies have consistently shown that posterior superior temporal regions are involved in the active maintenance of acoustic–phonological representations (Buchsbaum et al. 2001, 2005; Hickok et al. 2003; Rama et al. 2004; Feredoes et al. 2007), whereas more anterior superior temporal regions are typically not active during working memory maintenance but do exhibit neural adaptation effects in auditory stimulus repetition paradigms (Cohen et al. 2004; Dehaene-Lambertz et al. 2006). Moreover, it has been argued that these anterior and posterior superior temporal regions constitute divergent auditory processing pathways: an anterior/ventral stream involved in the identification and categorization of acoustic patterns or objects (a “what” pathway) and a posterior/dorsal stream involved in auditory perception in the context of phonological analysis and speech production (a “how” pathway). Within the posterior superior temporal cortex, in the auditory dorsal stream, 2 regions consistently show delay period activation during verbal working memory maintenance (Buchsbaum and D'Esposito 2008): the posterior superior temporal sulcus (pSTS), bilaterally, and a region at the posterior end of the left sylvian fissure, area Spt (sylvian–parietal–temporal). We hypothesized that, insofar as reactivation in short-term recognition memory is mediated by a process similar to the one underlying sustained activation during working memory, these posterior temporal regions should show enhanced activation during repetition of verbal stimuli. In contrast, we expected to see repetition suppression in the mid-anterior superior temporal gyrus (STG)/superior temporal sulcus (STS), consistent with previous studies of auditory repetition and with the absence of delay period activation in this area during working memory maintenance.

We tested these hypotheses using a verbal continuous recognition paradigm with short lags (1–5 items), in which subjects made “old”/“new” recognition judgments for a series of verbal stimuli. Although our primary interest was in the auditory domain, we presented words in both auditory–verbal and visual–verbal modalities in order to assess the extent to which the modality of the probe modulated the repetition effect. Thus, for instance, if the posterior STG mediates phonological retrieval, as has been suggested (Hickok et al. 2000; Buchsbaum et al. 2001; Indefrey and Levelt 2004), we would predict that either an auditory–verbal or a visual–verbal probe should give rise to mnemonic reactivation during recognition memory. If, however, the anterior STG is primarily involved in the representation of acoustic (as opposed to phonological) features (Liebenthal et al. 2005), then it should not show repetition effects when an item encoded auditorily is repeated “visually.”

Materials and Methods

Participants

Forty-three healthy subjects (24 females; age, 19–36 years) gave informed consent according to procedures approved by the University of California. All were right handed, were native English speakers with normal hearing, had normal or corrected-to-normal vision, and had at least 12 years of education. None of the subjects had a history of neurological or psychiatric disease nor were they taking any psychoactive medications. Two subjects were eliminated from statistical analyses due to poor performance and an additional 2 subjects were eliminated due to excessive (>5 mm) head motion, leaving 39 subjects (22 females).

Experimental Stimuli

Auditory recordings of 666 nouns of 2 or 3 syllables were generated with a text-to-speech synthesizer (Nuance Speechify software, female voice). The words were selected from the MRC psycholinguistic database (Coltheart 1981) so as to exclude words with very high (>600) imageability ratings. Other relevant indices for the word set are as follows: average Kucera–Francis written frequency, mean = 43.8, standard deviation (SD) = 64.6; number of syllables, mean = 2.46, SD = 0.5; number of letters, mean = 7.1, SD = 1.59; imageability index, mean = 474.9, SD = 97.9. There were no statistically significant differences in these word indices across experimental conditions.

Functional Magnetic Resonance Imaging Scanning Methods

Functional images were acquired during 8 sessions lasting 348 s each. T2*-weighted echo-planar images (EPIs) sensitive to blood oxygenation level-dependent (BOLD) contrast were acquired at 4 Tesla with a Varian (Palo Alto, CA) INOVA MR scanner and a transverse electromagnetic send-and-receive radiofrequency head coil (MR instruments, Minneapolis, MN) using a 2-shot gradient-echo EPI sequence (22.4 × 22.4 cm field of view with a 64 × 64 matrix size, resulting in an in-plane resolution of 3.5 × 3.5 mm for each of 20 3.5-mm axial slices with a 1-mm interslice gap; repetition time, 1 s per one-half of k-space [2 s total]; echo time, 28 ms; flip angle, 20°). High-resolution gradient-echo multislice T1-weighted scans, coplanar with the EPIs, as well as whole-brain MP Flash 3-dimensional T1-weighted scans were acquired for anatomical localization.

Presentation software (Neurobehavioral Systems, Inc., Albany, CA) was used for all stimulus delivery. Sound stimuli were delivered through MR-Confon headphones. Subjects also wore earplugs for additional sound attenuation of the scanner background noise. Auditory word stimuli were presented at approximately 10–15 dB above the scanner noise. Visual word stimuli were presented in 150-point Times Roman font with a liquid crystal display projector on a screen suspended in the scanner bore above the subject's midsection, approximately 15 inches away from the mirror. Subjects viewed the screen via a mirror mounted inside the radiofrequency coil. The projector was tilted so that the visually presented words appeared in the center of the screen where subjects could easily read them.

Functional Magnetic Resonance Imaging Task

Subjects performed a continuous recognition paradigm with auditory– and visual–verbal stimuli (Fig. 1). During each of the eight 6-min scanning runs, subjects were presented with a total of 134 words, half of which were shown visually and half of which were delivered auditorily. The stimulus-onset asynchrony was a constant 2.5 s (onset to onset), and auditory and visual stimuli were ordered pseudorandomly with the constraint that exactly half (67 words per run) were visual and half were auditory. For each presented stimulus, subjects judged whether the current item had been previously encountered, pressing the left button if they judged that an item was old (previously encountered) and the right button if they judged that it was new (not previously encountered). In each run, 60 of the 134 (44.8%) words were repeated items (REPEAT) and 74 were novel (NOVEL) items. Of the 74 novel words, 14 were “filler” items that were shown once but not repeated, whereas the remaining 60 were novel items that would be repeated once. From the standpoint of the subject, however, filler and novel items were indistinguishable. Items were repeated either in the same modality (visual → visual or auditory → auditory), cross-modally (visual → auditory or auditory → visual), or not at all (filler items). Each of the 4 possibilities was enumerated for the subject before practicing the task for the first time. Subjects were instructed that for an item to be classified as old, it need not be presented in the same modality as when first encountered. Subjects were also instructed that no item was ever repeated across runs.

Figure 1.

Illustration of the task design. Each box represents a stimulus, presented in either the auditory (A) or visual (V) modality. The letter subscripts indicate whether the item is novel (n) or repeated (r). Stimuli were separated by a constant 2.5-s stimulus-onset asynchrony, and the modality of each item was pseudorandomly ordered. The arrows connecting the boxes represent novel–repeat stimulus pairs at different lags. Thus, “Aud:Aud:4” refers to an auditory–auditory repetition at lag 4. In the full task, all 4 combinations of encoding and probe modalities were equally represented (Aud:Aud, Aud:Vis, Vis:Aud, Vis:Vis). The lag between the first presentation of an item and its repetition was varied from 1 to 5. This factor was crossed with encoding modality and probe modality, yielding 20 unique stimulus repetition conditions.

We refer to the modality of the first presentation of an item as the “encoding modality” (ENC-MOD, with levels ENC-MOD_AUD and ENC-MOD_VIS) and the modality of the second (repeated) presentation as the “probe modality” (PROBE-MOD, with levels PROBE-MOD_AUD and PROBE-MOD_VIS). For shorthand, we refer to each of the 4 cells derived from crossing the ENC-MOD and PROBE-MOD factors as Aud:Aud, Aud:Vis, Vis:Vis, and Vis:Aud, where in each case the encoding modality is specified first and the probe modality last. Thus, Vis:Aud (long name: ENC-MOD_VIS:PROBE-MOD_AUD) refers to an item that was first presented visually but repeated auditorily; Aud:Vis (long name: ENC-MOD_AUD:PROBE-MOD_VIS) refers to an item that was first presented auditorily but then repeated visually. The last manipulated factor was the lag (LAG) between the first and second presentations of a repeated item, which was varied from 1 (immediate repetition) to 5 (4 items intervening between the first and second presentations). An item that was, for instance, encoded auditorily and repeated auditorily at LAG 1 is referred to as Aud:Aud:1. In each run, LAG was distributed pseudorandomly with the constraint that there were 12 trials for each LAG level, evenly distributed across the ENC-MOD and PROBE-MOD factors. Subjects were informed that repeated items would be drawn only from the relatively recent past (approximately 30 s) and that no repetitions would carry over across scanning runs. They were not told, however, that the maximum lag was exactly 5 items. Across the full experiment (8 scanning runs of 134 trials each), there were 592 new items and 480 old items; among the 480 old items, there were 120 trials per ENC-MOD/PROBE-MOD combination, and within each ENC-MOD by PROBE-MOD condition there were 24 trials for each of the 5 lags.
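The experiment-wide trial counts follow directly from the per-run design. The short Python sketch below reproduces that bookkeeping from the per-run numbers stated in the Task section; the constant and function names are our own and are used only for illustration.

```python
# Per-run design parameters from the Task section (names are illustrative)
N_RUNS = 8
TRIALS_PER_RUN = 134
REPEATS_PER_RUN = 60   # second presentations (REPEAT trials)
FILLERS_PER_RUN = 14   # first presentations that are never repeated
LAGS = range(1, 6)     # LAG 1..5
MODALITY_PAIRS = ("Aud:Aud", "Aud:Vis", "Vis:Aud", "Vis:Vis")


def design_counts():
    """Derive experiment-wide trial counts from the per-run design."""
    novel_per_run = TRIALS_PER_RUN - REPEATS_PER_RUN
    # Every repeated item needs one first presentation; fillers make up the rest.
    assert novel_per_run == REPEATS_PER_RUN + FILLERS_PER_RUN
    total_repeat = N_RUNS * REPEATS_PER_RUN
    per_pair = total_repeat // len(MODALITY_PAIRS)
    return {
        "per_modality_per_run": TRIALS_PER_RUN // 2,
        "repeats_per_lag_per_run": REPEATS_PER_RUN // len(LAGS),
        "novel_total": N_RUNS * novel_per_run,
        "repeat_total": total_repeat,
        "per_modality_pair": per_pair,
        "per_pair_per_lag": per_pair // len(LAGS),
    }


print(design_counts())
```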

Data Processing

Processing in k-space was performed with in-house software. EPI data from different slices were sinc interpolated in time to correct for slice-timing skew. The data were then linearly interpolated in k-space across subsequent shots of the same order (first shot or second shot) to yield an effectively higher sampling rate, nominally twice the original (1 interpolated volume per second). When Fourier transformed, this yielded a total of 342 images for each of the 8 scanning runs. For each subject, images were motion corrected and realigned to the first image of the first run of the session using the AFNI (Cox 1996) program 3dVolreg. The functional images were then smoothed with a 5-mm full-width at half-maximum Gaussian kernel. All statistical analyses were performed on these smoothed and realigned images.
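The shot-wise k-space interpolation can be sketched in NumPy for a single slice. This is a minimal illustration under stated assumptions, not the in-house software: we assume shot A holds the even phase-encode lines and is acquired at even seconds, shot B holds the odd lines and is acquired at odd seconds, and time points outside an acquired range simply reuse the nearest acquired shot. The function names are hypothetical.

```python
import numpy as np


def interpolate_two_shot(shot_a, shot_b):
    """Linearly interpolate each shot across time, then interleave into full k-space.

    shot_a: complex array (n_pairs, ny_half, nx), acquired at t = 0, 2, 4, ... s
            and assumed to hold the even phase-encode lines.
    shot_b: complex array (n_pairs, ny_half, nx), acquired at t = 1, 3, 5, ... s
            and assumed to hold the odd phase-encode lines.
    Returns complex k-space of shape (2 * n_pairs, 2 * ny_half, nx), one volume per second.
    """
    n_pairs, ny_half, nx = shot_a.shape
    n_t = 2 * n_pairs
    t_out = np.arange(n_t, dtype=float)

    def interp_in_time(data, t_acq):
        # Interpolate real and imaginary parts of every k-space sample along time;
        # np.interp holds the first/last acquired value outside the sampled range.
        flat = data.reshape(len(t_acq), -1)
        out = np.empty((n_t, flat.shape[1]), dtype=complex)
        for j in range(flat.shape[1]):
            out[:, j] = (np.interp(t_out, t_acq, flat[:, j].real)
                         + 1j * np.interp(t_out, t_acq, flat[:, j].imag))
        return out.reshape(n_t, ny_half, nx)

    t_a = 2.0 * np.arange(n_pairs)        # acquisition times of shot A
    t_b = 2.0 * np.arange(n_pairs) + 1.0  # acquisition times of shot B
    kspace = np.empty((n_t, 2 * ny_half, nx), dtype=complex)
    kspace[:, 0::2, :] = interp_in_time(shot_a, t_a)
    kspace[:, 1::2, :] = interp_in_time(shot_b, t_b)
    return kspace


def to_images(kspace):
    """Centered 2-D inverse FFT of each volume's k-space, returning magnitude images."""
    axes = (-2, -1)
    img = np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(kspace, axes=axes), axes=axes), axes=axes)
    return np.abs(img)
```

At every second, one half of k-space is measured and the other half is the average of the same shot's neighboring acquisitions, so passing the output to `to_images` yields one image per second rather than one per 2 s.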

Each subject's high-resolution anatomical scan was normalized to Montreal Neurological Institute (MNI) stereotaxic space with a 12-parameter affine transformation using the FSL program FLIRT. An additional 6-parameter rigid-body registration of each subject's gradient-echo multislice sequence (GEMS) image (which was coplanar with the EPIs and acquired immediately before the first EPI volume) to his or her high-resolution MRI was carried out. These 2 linear transformations were then concatenated (GEMS → native space MRI → MNI) to derive a transformation between each subject's native EPI space and the normalized template space. Spatial normalization for the purpose of random effects group analyses was then carried out on the t-statistic maps generated from regression analyses performed on native space EPI time series data.
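Concatenating linear registrations amounts to multiplying 4 × 4 homogeneous matrices, with the transform applied first placed on the right. The NumPy sketch below uses made-up matrices and assumes both map world (mm) coordinates; FLIRT's own matrix files are expressed in FSL's scaled-voxel coordinates, so real matrices would need converting before being combined this way.

```python
import numpy as np


def rigid_body(rx, ry, rz, tx, ty, tz):
    """6-parameter rigid-body transform: rotations (radians) about x, y, z, then translation (mm)."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    rot_x = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    rot_y = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    rot_z = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    m = np.eye(4)
    m[:3, :3] = rot_z @ rot_y @ rot_x
    m[:3, 3] = [tx, ty, tz]
    return m


def apply_affine(m, xyz):
    """Map a 3-D point through a 4x4 homogeneous matrix."""
    return (m @ np.append(np.asarray(xyz, dtype=float), 1.0))[:3]


# Hypothetical matrices for one subject (world mm -> world mm)
gems_to_t1 = rigid_body(0.02, -0.01, 0.03, 1.5, -2.0, 0.5)
t1_to_mni = np.array([
    [1.10, 0.02, 0.00, -2.0],
    [0.00, 1.05, 0.01, -18.0],
    [0.00, 0.00, 0.95, 12.0],
    [0.00, 0.00, 0.00, 1.0],
])  # stands in for a 12-parameter affine

# GEMS -> native MRI -> MNI: the transform applied first goes on the right
gems_to_mni = t1_to_mni @ gems_to_t1
print(apply_affine(gems_to_mni, [10.0, -20.0, 30.0]))
```

Because the EPIs are coplanar with the GEMS image, the single composed matrix can carry each native-space t-map to template space in one resampling step.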

Functional Magnetic Resonance Imaging Statistical Analysis

Single-subject regression modeling was carried out using the AFNI program 3dDeconvolve. Each of the 20 repetition conditions (ENC-MOD[AUD, VIS] × PROBE-MOD[AUD, VIS] × LAG[1,2,3,4,5]) was modeled with a separate regressor generated by convolving a gamma function with a binary vector indicating the event onset times for that condition (1 at event onset, 0 otherwise). Regressors for NOVEL:Aud and NOVEL:Vis events were generated in the same way (see below). An additional set of nuisance regressors (a constant term plus linear, quadratic, and cubic polynomial terms) was included for each scanning run to model low-frequency noise in the time series data. From a conceptual standpoint, NOVEL items could be modeled using only 2 regressors, one for auditory and one for visual items. However, the prior stimulus context differed systematically across repeat trials (Aud:Aud:1 trials always immediately followed an auditory stimulus, whereas Aud:Aud:2 trials always followed an auditory stimulus that occurred 2 items earlier, etc.). NOVEL trials were therefore split into subsets selected to best match the preceding stimulus context of each of the 20 repetition conditions. This was achieved with a simple algorithm that iterated through all the REPEAT trials and, for each one, selected the unassigned NOVEL trial with the most similar prior stimulus context (where context was defined as the modality of the last 5 stimuli before the current item). The context of each REPEAT trial was compared with that of each NOVEL trial by comparing the modalities of the prior 5 items in the 2 sequences and creating a binary vector indicating, for each position, whether the stimulus modalities did not match (value = 1) or matched (value = 0). A weighted sum of this vector, with weights decaying exponentially from position 1 to position 5, was then computed, yielding a composite index of the dissimilarity of the local prior contexts of the 2 items.
Thus, if the 5 items preceding the 2 trials matched in modality at every position, the index would be zero; if they differed at every position, the index would be maximal. Because the greedy solution depends on the order of iteration through the REPEAT trials, the matching algorithm was run for 1000 randomly selected trial orderings, and the best of these (the one minimizing the summed difference in preceding stimulus context) was used to divide the NOVEL trials into 20 disjoint sets of 24 trials. The repetition effect for each REPEAT condition was estimated as a contrast with the matched subset of NOVEL trials. Thus, for instance, the contrast Aud:Aud:1 > NOVEL:Aud is a contrast of the 24 Aud:Aud:1 REPEAT trials with 24 NOVEL:Aud trials that were matched for prestimulus context.
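The context-matching step can be sketched as a greedy assignment with random restarts. In this Python sketch, a context is a string of 'A'/'V' codes for the 5 preceding items (most recent first), a mismatch at position k (k = 1 to 5) is weighted by exp(−(k − 1)), and each NOVEL trial can be assigned at most once so that the resulting sets are disjoint. The decay constant, the function names, and the tie-breaking rule are our assumptions; the text does not specify them.

```python
import math
import random


def context_dissimilarity(ctx_a, ctx_b, decay=1.0):
    """Weighted count of modality mismatches over the preceding items.

    ctx_a, ctx_b: strings such as "AVVAA" (most recent item first).
    A mismatch at position k (0-based) contributes exp(-decay * k), so
    identical contexts score 0 and fully opposite contexts score the maximum.
    """
    return sum(math.exp(-decay * k)
               for k, (a, b) in enumerate(zip(ctx_a, ctx_b)) if a != b)


def greedy_match(repeat_ctx, novel_ctx, order):
    """Assign each REPEAT trial (visited in `order`) its best unused NOVEL trial."""
    unused = set(range(len(novel_ctx)))
    assignment, total = {}, 0.0
    for r in order:
        # sorted() makes ties resolve to the lowest NOVEL index
        best = min(sorted(unused),
                   key=lambda n: context_dissimilarity(repeat_ctx[r], novel_ctx[n]))
        unused.remove(best)
        assignment[r] = best
        total += context_dissimilarity(repeat_ctx[r], novel_ctx[best])
    return total, assignment


def best_matching(repeat_ctx, novel_ctx, n_orders=1000, seed=0):
    """Run the greedy matcher over random REPEAT orderings; keep the lowest total."""
    rng = random.Random(seed)
    order = list(range(len(repeat_ctx)))
    best = None
    for _ in range(n_orders):
        rng.shuffle(order)
        result = greedy_match(repeat_ctx, novel_ctx, order)
        if best is None or result[0] < best[0]:
            best = result
    return best


# Toy example: 2 REPEAT trials, 3 candidate NOVEL trials
repeat_ctx = ["AAAAA", "VVVVV"]
novel_ctx = ["VVVVV", "AVAVA", "AAAAA"]
total, assignment = best_matching(repeat_ctx, novel_ctx, n_orders=10)
print(total, assignment)
```

Each REPEAT trial's matched NOVEL trial is then pooled into that REPEAT trial's condition, which is how the disjoint NOVEL subsets arise. The random restarts matter because a greedy pass can spend a good NOVEL match early on a REPEAT trial that had other near-equal options.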

Statistical contrasts at the single-subject level were computed as weighted sums of the estimated beta coefficients divided by an estimate of the standard error, yielding a t-statistic for each voxel in the image volume. Random effects group analyses were then carried out on the spatially normalized single-subject t-statistics using repeated-measures analysis of variance (ANOVA) for hypotheses involving more than one level and with a one-sample t-test (against 0) for simple hypotheses. We chose to use t-statistics in group-level analyses, rather than the raw beta coefficients, because of recent work showing that the latter are often not normally distributed at the group level (Thirion et al. 2007).
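For a single voxel, the contrast t-statistic described above is, under ordinary least squares, t = c'b / sqrt(s² c'(X'X)⁻¹ c), where b holds the estimated betas, s² is the residual variance, and c is the contrast vector. The NumPy sketch below builds gamma-convolved regressors for one REPEAT condition and its matched NOVEL subset, adds cubic polynomial drift terms, and computes the REPEAT > NOVEL t. It is an illustration only: the gamma parameters, onsets, and simulated data are invented, and it assumes white noise rather than reproducing 3dDeconvolve's exact options.

```python
import math
import numpy as np


def gamma_hrf(t, shape=6.0, scale=1.0):
    """Gamma-density response, peak-normalized (parameters are illustrative)."""
    t = np.clip(np.asarray(t, dtype=float), 0.0, None)
    h = t ** (shape - 1) * np.exp(-t / scale) / (math.gamma(shape) * scale ** shape)
    return h / h.max()


def make_regressor(onsets_s, n_scans, tr=1.0, hrf_len_s=20.0):
    """Convolve a binary onset vector (1 at event onset, 0 otherwise) with the gamma response."""
    sticks = np.zeros(n_scans)
    sticks[(np.asarray(onsets_s) / tr).astype(int)] = 1.0
    hrf = gamma_hrf(np.arange(0.0, hrf_len_s, tr))
    return np.convolve(sticks, hrf)[:n_scans]


def contrast_t(y, X, c):
    """OLS contrast t = c'b / sqrt(s^2 * c'(X'X)^-1 c)."""
    beta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (X.shape[0] - rank)
    var_c = sigma2 * (c @ np.linalg.pinv(X.T @ X) @ c)
    return float(c @ beta / np.sqrt(var_c))


# Simulated single-run example (1 volume per second, 342 volumes)
rng = np.random.default_rng(1)
n = 342
x_repeat = make_regressor(np.arange(10, 330, 25), n)  # hypothetical REPEAT onsets
x_novel = make_regressor(np.arange(22, 330, 25), n)   # hypothetical matched NOVEL onsets
drift = np.linspace(-1.0, 1.0, n)
X = np.column_stack([x_repeat, x_novel, np.ones(n), drift, drift**2, drift**3])
y = 1.0 * x_repeat + 0.4 * x_novel + rng.normal(0.0, 0.5, n)
c = np.array([1.0, -1.0, 0.0, 0.0, 0.0, 0.0])  # REPEAT > matched NOVEL
print(contrast_t(y, X, c))
```

Dividing by the standard error puts every subject's contrast on a common, unitless scale, which is what makes the t-maps usable as inputs to the group-level tests.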

Analysis of Behavioral Data

Trials were scored as a “hit” for repeated items correctly classified as old and as a “correct rejection” for novel items correctly classified as new. The mean accuracy across subjects was 0.913 (SD = 0.053). Accuracy scores for 2 subjects deviated from the group mean by more than 3 SDs; these subjects were considered outliers and removed from all subsequent behavioral and fMRI analyses.
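The scoring and exclusion rule can be written compactly. This Python sketch assumes accuracy is the proportion of all trials answered correctly (hits plus correct rejections) and uses the sample SD; the text does not state which SD estimator was used, and the function names and toy scores are hypothetical.

```python
import statistics


def accuracy(hits, correct_rejections, n_trials):
    """Proportion correct: hits on REPEAT trials plus correct rejections on NOVEL trials."""
    return (hits + correct_rejections) / n_trials


def flag_outliers(scores, n_sd=3.0):
    """Indices of subjects whose accuracy lies more than n_sd SDs from the group mean."""
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)  # sample SD (n - 1 denominator)
    return [i for i, s in enumerate(scores) if abs(s - mean) > n_sd * sd]


# Toy group: 20 subjects near ceiling and one performing poorly
scores = [0.92] * 20 + [0.50]
print(flag_outliers(scores))
```

Note that an extreme subject also inflates the SD used to judge it, so in very small groups a 3-SD rule can fail to flag anyone.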

Results

Behavioral Performance

A 3-way repeated-measures ANOVA was computed with encoding modality (ENC-MOD), probe modality (PROBE-MOD), and LAG as within-subjects factors and SUBJECT modeled as a random effect. Main effects of LAG (F = 71, P < 0.0001, degrees of freedom [df] = 4,168) and ENC-MOD (F = 69.2, P < 0.0001, df = 1,42), but not PROBE-MOD (F = 2.5, P = 0.12, df = 1,42), were statistically significant. Several interactions were also significant, including LAG by ENC-MOD (F = 19.4, P < 0.0001, df = 4,168), ENC-MOD by PROBE-MOD (F = 54.7, P < 0.0001, df = 1,42), and LAG by ENC-MOD by PROBE-MOD (F = 15.2, P < 0.0001, df = 4,168). As can be seen in the plot of accuracy data in Figure 2, overall performance was better for items encoded auditorily (mean accuracy = 0.947) than for items encoded visually (mean accuracy = 0.894). In addition, the deleterious effect of increasing LAG on recognition memory was smallest for items that were both encoded and re-presented in the auditory modality (Aud:Aud; linear coefficient = −0.0045, t = −2.26, P = 0.025). In contrast, items that were encoded visually and re-presented auditorily (Vis:Aud) showed the steepest decline in accuracy (linear coefficient = −0.05131, t = −13.2, P < 0.0001).
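The text does not specify how the linear LAG coefficients and their t values were obtained. One simple possibility, sketched hypothetically in Python below, is to fit an ordinary least-squares slope of accuracy on LAG for each subject and test those slopes against zero across subjects; the reported statistics may instead come from a different model (e.g., a polynomial contrast within the ANOVA), and the accuracy values here are invented.

```python
import math
import statistics


def ols_slope(x, y):
    """Least-squares slope of y on x."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def one_sample_t(values):
    """t statistic for the mean of `values` against 0 (df = n - 1)."""
    se = statistics.stdev(values) / math.sqrt(len(values))
    return statistics.mean(values) / se


lags = [1, 2, 3, 4, 5]
# Hypothetical per-subject accuracies at each lag for one condition
subject_acc = [
    [0.98, 0.95, 0.93, 0.90, 0.88],
    [0.97, 0.96, 0.92, 0.91, 0.86],
    [0.99, 0.94, 0.94, 0.89, 0.87],
]
slopes = [ols_slope(lags, acc) for acc in subject_acc]
print(slopes, one_sample_t(slopes))
```

A negative slope means accuracy drops as more items intervene, so a condition with a slope near zero (as reported for Aud:Aud) is relatively resistant to lag.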

Figure 2.

Behavioral performance data. (A) Graph of accuracy (y axis, measured as percent correct) plotted against LAG (x axis) for each of the 4 repetition conditions. (B) Graph of reaction time (y axis, in ms) plotted against lag (x axis).

Functional Magnetic Resonance Imaging Results

Our first goal was to identify auditory-responsive regions that show repetition sensitivity during short-term recognition memory. Following Wheeler et al. (2000), we define reactivation as activity in a region that is engaged both during perceptual encoding and during subsequent retrieval. In addition, such retrieval-related activation must be greater than the baseline level of encoding activation, defined as the mean level of activity for NOVEL stimulus events. Thus, repetition effects were expressed as a relative increase in activation with respect to NOVEL trials for a given modality. Similarly, we define repetition suppression as activity in a region that is engaged during stimulus encoding and shows a “decrease” (relative to encoding) upon stimulus repetition. We use the term “repetition reduction” to indicate regions that show smaller responses to repeated items than to novel items but do not necessarily show a significant response during encoding.

Sensitivity to stimulus modality may be assessed by examining the main effect of modality (Modality:Aud > Modality:Vis) collapsed across all other conditions. Auditory reactivation, then, is defined as the conjunction: [Modality:Aud > Modality:Vis] ∩ [Aud:Aud > Novel:Aud] or the conjunction: [Modality:Aud > Modality:Vis] ∩ [Aud:Vis > Novel:Vis]. Auditory repetition suppression is defined by the complementary set of conjunctions: [Modality:Aud > Modality:Vis] ∩ [Novel:Aud > Aud:Aud] or the conjunction: [Modality:Aud > Modality:Vis] ∩ [Novel:Vis > Aud:Vis]. Thus, either a visual or auditory probe stimulus can elicit auditory reactivation or suppression. The reason for this is that reactivation and suppression effects are defined in terms of the encoding modality, not the probe modality. An additional set of contrasts would define repetition suppression and reactivation for visually encoded items; however, our analysis was focused on repetition effects in auditory cortex, so we do not report the set of visual repetition effects.

Main Effects of Auditory and Visual Processing

To identify regions that were broadly sensitive to either auditory or visual word stimuli, we compared activity between all auditory and visual trials (Modality:Aud > Modality:Vis) with a one-sample t-test (Supplementary Fig. 1). Unsurprisingly, this contrast showed that the superior temporal cortex, bilaterally, was more responsive to auditory stimuli, whereas regions in the ventral and dorsal occipital cortex were more responsive to visual stimuli. In addition, several areas in frontal cortex, notably the precentral and superior frontal gyri, were also more sensitive to visual than to auditory stimuli.

Previous neuroimaging studies investigating auditory–verbal repetition effects showed suppression effects in a region in the mid-anterior portion of the STS (Cohen et al. 2004: MNI coordinates: −60, −8, −4; Dehaene-Lambertz et al. 2006: MNI coordinates: −60, −12, −3). As can be seen in Figure 3 (top left image), we also observe repetition suppression effects for auditory word repetition (Aud:Aud) in the mid-anterior superior temporal gyrus (STG) extending into the STS (MNI maximum: −61.5, −7, −9). The replication of a repetition suppression effect in this relatively anterior superior temporal region shows that the effect is present not just during passive listening, as previous studies have shown, but also when subjects make recognition memory judgments. In addition to the auditory repetition suppression effect, we observed repetition increases in the posterior superior temporal gyrus (pSTG), the inferior and posterior parietal cortices, the middle frontal gyrus, and a more anterior prefrontal region (see Fig. 4, yellow colors). Of the regions showing a repetition enhancement effect, however, only the region in the posterior superior temporal cortex exhibited reactivation (red color), as we have defined it, insofar as it shows preferential auditory stimulus sensitivity and greater activity to repeated than to novel items.

Figure 3.

Conjunction analyses showing auditory-sensitive regions that also show repetition effects. (A) Repetition effects for the Aud:Vis condition. Green colors are areas with greater activation for auditory stimuli than for visual stimuli (Modality:Aud > Modality:Vis). Yellow colors show areas with greater activation for Aud:Vis trials than for novel trials (Aud:Vis > Novel:Vis). Red colors show the reactivation conjunction (Modality:Aud > Modality:Vis ∩ Aud:Vis > Novel:Vis). Blue colors show the suppression conjunction (Modality:Aud > Modality:Vis ∩ Novel:Vis > Aud:Vis). (B) Repetition effects for the Aud:Aud condition. The color scheme is the same as in the top panel, except that “Repeat” (see legend) refers to Aud:Aud trials and “Novel” refers to Novel:Aud.

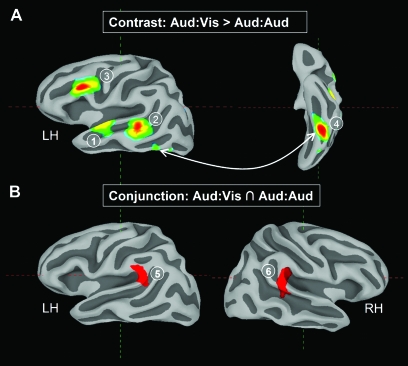

Figure 4.

Contrast and conjunction of within-modality (Aud:Aud) and cross-modality (Aud:Vis) repetition effects. (A) Areas in which repetition-related activity is greater in the cross-modal (Aud:Vis) condition than in the within-modal (Aud:Aud) condition. (B) Auditory-sensitive areas (Modality:Aud > Modality:Vis) that show repetition effects in both the Aud:Aud and Aud:Vis conditions (Modality:Aud > Modality:Vis ∩ Aud:Aud > Novel:Aud ∩ Aud:Vis > Novel:Vis). Region labels are as follows: (1) mid-anterior STG/STS, (2) pSTS, (3) IFG, (4) ventral occipital, (5) left STG/IPL, and (6) right STG/IPL.

Repetition Suppression and Reactivation Effects

To formally isolate regions showing repetition suppression or reactivation effects, we performed a set of conjunction analyses (Nichols et al. 2005). For the 2 contrasts involving auditorily encoded items (Aud:Aud and Aud:Vis), we carried out separate conjunctions with the stimulus modality contrast (Modality:Aud > Modality:Vis). To identify repetition suppression effects, we searched for voxels with a significant negative score (t < −3.56, P < 0.001) on the repetition contrast (Aud:Aud > Novel:Aud or Aud:Vis > Novel:Vis) and a significant positive score on the stimulus modality contrast (Modality:Aud > Modality:Vis, P < 0.001). Reactivation effects were identified by searching for voxels with a significant positive score (t > 3.56, P < 0.001) on the repetition contrast and a significant positive score on the stimulus modality contrast. The results of these analyses are displayed in Figure 3, where one can see that repetition suppression is observed in the mid-anterior STG/STS only for the Aud:Aud condition (top left image, blue color), whereas reactivation is observed in a region at the boundary of the pSTG and inferior parietal lobe (IPL) for both the Aud:Aud condition and the Aud:Vis condition (red color).
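The minimum-statistic logic of these conjunctions (Nichols et al. 2005) can be sketched in NumPy: a voxel survives only if every contrast, after flipping its sign where a negative effect is required, exceeds the threshold, which is equivalent to requiring that the smallest signed statistic exceed it. The function name and the toy maps are ours, and applying the same t > 3.56 cutoff to every map is an assumption for illustration.

```python
import numpy as np

T_CRIT = 3.56  # voxelwise threshold quoted in the text (P < 0.001)


def conjunction(t_maps, signs, t_crit=T_CRIT):
    """Boolean mask of voxels where every signed t-map exceeds t_crit.

    t_maps: sequence of same-shaped arrays of t-statistics.
    signs:  +1 where a positive effect is required, -1 where a negative one is.
    """
    signed = np.stack([s * np.asarray(m, dtype=float) for s, m in zip(signs, t_maps)])
    return signed.min(axis=0) > t_crit


# Toy 4-voxel maps (hypothetical values)
t_repeat = np.array([5.0, -4.0, 2.0, 6.0])    # e.g., Aud:Aud > Novel:Aud
t_modality = np.array([4.0, 5.0, 5.0, -4.0])  # Modality:Aud > Modality:Vis

reactivation = conjunction([t_repeat, t_modality], [+1, +1])
suppression = conjunction([t_repeat, t_modality], [-1, +1])
print(reactivation, suppression)
```

The same function covers the 3-way conjunctions used for domain-general effects: pass all 3 maps with their required signs.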

Cross-Modality Repetition Effects

As can be seen in Figure 3, there appears to be a region of activation in the pSTS exhibiting a reactivation effect in the cross-modality Aud:Vis condition but not in the within-modality Aud:Aud repetition condition. To confirm this observation, a direct contrast (paired t-test) was performed between Aud:Aud and Aud:Vis conditions (Aud:Vis > Aud:Aud). This contrast evaluated the extent to which repetition effects for auditorily encoded items are sensitive to the modality of the probe. As can be seen in Figure 4, activity in a number of regions was greater for the cross-modal probe condition (Aud:Vis) than for the within-modality probe condition (Aud:Aud). A few areas (data not shown), including the ventral premotor cortex/anterior insula and post-central gyrus, bilaterally, showed the reverse effect (Aud:Aud > Aud:Vis).

There were 2 separate clusters of activation in the left superior temporal lobe in which activation during recognition memory depended on the modality of the probe (see Fig. 4). The first region, located in the mid-anterior STG/STS, clearly corresponds to the region showing a large repetition suppression effect for Aud:Aud items (compare with the blue cluster in Fig. 3). The second region, located in the pSTS, did not show a significant Aud:Aud repetition suppression effect (see Fig. 4, top panel). Two additional regions, the left inferior frontal sulcus and the left ventral occipital cortex, also showed greater activity for the cross-modal probe condition (Aud:Vis) than for the within-modality probe condition (Aud:Aud).

Domain General Verbal Repetition Effects

To identify regions with reactivation or suppression effects that were robust across both probe modality conditions, we performed 2 three-way conjunction analyses, one for reactivation: [Aud:Aud > NOVEL:Aud] ∩ [Aud:Vis > NOVEL:Aud] ∩ [Modality:Aud > Modality:Vis] and one for suppression: [NOVEL:Aud > Aud:Aud] ∩ [NOVEL:Aud > Aud:Vis] ∩ [Modality:Aud > Modality:Vis]. No regions were observed that showed repetition suppression for both probe modalities. The STG/IPL bilaterally, however, did show auditory reactivation that was present irrespective of the modality of the probe (see Fig. 4, bottom panel).

Summarizing the results so far, we found 3 regions within the auditory-sensitive superior temporal lobe that showed significant repetition effects: the left mid-anterior STG/STS, the left pSTS, and the bilateral STG/IPL. Figure 5 shows these 3 regions together with their patterns of activation across all 4 encoding/probe modality combinations (Aud:Aud, Aud:Vis, Vis:Aud, Vis:Vis). As the plot of mean t-statistics shows, the mid-anterior STG/STS has a repetition suppression effect that is confined to the Aud:Aud condition. The pSTS shows a strong reactivation effect for the Aud:Vis condition only. It also shows a weak Aud:Aud repetition suppression effect that does not survive the P < 0.001 whole-brain significance threshold. Finally, the pSTG/IPL, bilaterally, shows reactivation for all conditions except Vis:Vis.

Figure 5.

Three superior temporal regions and their pattern of effects across all 4 encoding/probe modality conditions. The mid-anterior STG/STS (blue cluster) and the pSTS (cyan cluster) were defined as the contiguous voxels with significant conjoint activity for Aud:Vis > Aud:Aud ∩ Modality:Aud > Modality:Vis.

Significant superior temporal lobe repetition suppression effects were observed only in the Aud:Aud condition. Nevertheless, examination of the Aud:Vis, Vis:Aud, and Vis:Vis contrasts at P < 0.05 reveals some degree of repetition reduction in the vicinity of the anterior STS/MTG in each condition. As discussed earlier, we define repetition reduction as a decrease in activity to repeated items, without the further requirement that the region show a significant positive response during encoding. The conservative conjunction method of Nichols et al. (2005) requires every contrast to exceed P < 0.001. Under this method, no region qualifies as showing repetition reduction in all 4 main conditions. We also applied the method of Friston et al. (2005), which tests against a global null hypothesis and adjusts the significance threshold to reflect the number of contrasts entering the conjunction. Under this method, a number of regions, including a cluster in the anterior STS and the hippocampus, showed a significant repetition reduction effect across all 4 conditions (see Fig. 6). The region in the anterior STS/middle temporal gyrus (MTG) (MNI: −57, −5, −24) was located just lateral and inferior to the auditory-sensitive portion of the superior temporal cortex. This area should be distinguished from the region in the mid-anterior STG/STS (MNI: −66, −7, −9; blue cluster in Fig. 5A). That region lies approximately 15 mm superiorly and showed a repetition suppression effect only in the Aud:Aud condition.
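A little arithmetic makes the difference between the two conjunction tests concrete. Assume the k contrasts are independent. Under the global null, a voxel then passes a per-contrast threshold p_u in all k contrasts with probability p_u^k. Setting that probability to alpha gives p_u = alpha^(1/k). For alpha = 0.001 and k = 4, this is roughly 0.18 per contrast, compared with 0.001 per contrast under the conjunction null. The independence assumption is a simplification, because the contrasts here share data. The helper names below are hypothetical and the voxel p-values are invented. Rejecting the global null indicates only that at least one contrast shows an effect. This is why Nichols et al. (2005) favor the more conservative conjunction null.

```python
def global_null_threshold(alpha, k):
    """Per-contrast uncorrected p threshold for a k-way conjunction tested
    against the global null (hypothetical helper). If the k contrasts are
    independent, P(all k p-values < p_u | global null) = p_u ** k, so setting
    that probability to alpha gives p_u = alpha ** (1 / k)."""
    return alpha ** (1.0 / k)


def passes(p_values, threshold):
    """A voxel passes the conjunction if its largest p-value is below the
    threshold, i.e. its weakest contrast still clears it."""
    return max(p_values) < threshold


alpha, k = 0.001, 4
p_global = global_null_threshold(alpha, k)
print(round(p_global, 4))  # 0.1778

# Invented p-values for one voxel across the 4 repetition contrasts.
voxel_p = [0.04, 0.01, 0.03, 0.12]
print(passes(voxel_p, p_global))  # True: survives the global-null test
print(passes(voxel_p, alpha))     # False: fails the conjunction-null test
```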

Figure 6.

Supramodal repetition suppression effects in the STS and hippocampus. (A) Surface view showing the cluster in the anterior STS/MTG. (B) Axial slice showing the hippocampus and left anterior STS/MTG clusters. (C) Sagittal slice showing the anterior STS/MTG cluster. (D) Plot of group mean t-statistics in the anterior STS/MTG cluster for all 4 encoding/probe conditions. The largest repetition suppression effect is in the Aud:Aud condition, but all conditions show some degree of suppression.

Lag Effects

The 3 temporal lobe regions examined above were defined on the basis of repetition effects collapsed across LAG. There might be additional areas, however, that respond differently across the 5 lags and would not be detected in the main effect analysis. To examine condition-specific lag effects, we carried out a set of one-way ANOVAs (within-subject variable: LAG; random effect: SUBJECT) separately for the auditory encoding conditions (Aud:Aud and Aud:Vis). We also computed tests for linear and quadratic trends, which yielded t-statistics. We restricted the search space for these condition-specific voxelwise ANOVAs to the auditory-sensitive cortex of the temporal lobe. At a threshold of P < 0.001, only one region showed a significant effect of lag for the Aud:Aud condition. This region was located in the left temporal pole at the level of the MTG (Brodmann area [BA] ∼ 21; MNI: −52, 7, −23). It showed a positive linear trend across lag (t = 3.66, P < 0.001) but no significant quadratic effect. The plot of means (Supplementary Fig. 2) shows that this region has a repetition suppression effect at lag 1 only. For the Aud:Vis condition, a significant lag effect was observed at the posterolateral edge of the STG, extending into the parietal operculum (BA ∼ 42; MNI: −63, −34, 19). This cluster was contained within the reactivation cluster observed in the conjunction of the Aud:Aud and Aud:Vis main effects (refer to Fig. 4, bottom panel). Both linear (t = −3.22, P < 0.01) and quadratic (t = −4.77, P < 0.001) trends were significant. The plot of cell means (see Supplementary Fig. 2) shows that the effects are significantly positive for all lags except lag 4.
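Trend tests over 5 equally spaced lags are commonly computed with orthogonal polynomial weights. One simple way to obtain a trend t-statistic is to compute a trend score for each subject and then run a one-sample t-test on those scores. The sketch below uses this approach for illustration only. The text reports trend tests within a repeated-measures ANOVA, whose pooled error term can give somewhat different values. The function name `trend_t` is hypothetical, and the effect values are invented.

```python
import numpy as np

# Orthogonal polynomial contrast weights for 5 equally spaced levels (lags 1-5).
LINEAR = np.array([-2, -1, 0, 1, 2], dtype=float)
QUADRATIC = np.array([2, -1, -2, -1, 2], dtype=float)


def trend_t(subject_by_lag, weights):
    """One-sample t-test on per-subject trend scores (hypothetical helper).

    subject_by_lag : array of shape (n_subjects, 5), one repetition effect per lag
    weights        : zero-sum contrast weights over the 5 lags
    """
    scores = np.asarray(subject_by_lag, dtype=float) @ weights  # one score per subject
    n = scores.size
    return scores.mean() / (scores.std(ddof=1) / np.sqrt(n))


# Invented repetition effects (repeated minus novel) for 3 subjects.
effects = np.array([
    [-1.0, -0.5, 0.0, 0.5, 1.0],
    [-0.8, -0.4, 0.1, 0.4, 0.9],
    [-1.1, -0.6, 0.0, 0.4, 1.1],
])

t_lin = trend_t(effects, LINEAR)
t_quad = trend_t(effects, QUADRATIC)
print(round(t_lin, 1), round(t_quad, 1))  # 13.8 1.0
```

Because the linear and quadratic weights are orthogonal (their dot product is 0), the two trend components are estimated independently of each other.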

A whole-brain analysis was also carried out to examine LAG effects collapsed across encoding and probe modalities. The largest LAG effects were observed in the left inferior frontal gyrus (IFG) and in the lateral inferior parietal cortex, bilaterally (Fig. 7). These 2 regions showed roughly opposite patterns: activity in the IFG increased as a function of LAG, whereas activity in the lateral parietal cortex decreased. However, no main effects of LAG were observed in any auditory-sensitive region of the superior temporal lobe.

Figure 7.

Main effect of LAG. (A) Left and right surface views showing (linear) parametric lag effects, where blue areas denote decreasing trends (from lag 1 to lag 5), and red areas denote increasing trends (from lag 1 to lag 5). (B) Plot of mean t-statistics in the IFG and lateral inferior parietal cortex as a function of LAG for each of the 4 encoding/probe modality combinations.

Discussion

We have examined how the neural signatures of short-term verbal recognition memory vary as a function of the modality of both the target and probe items and the lag between the first and second presentations of an item. In 3 anatomically distinct regions in the superior temporal lobe—the mid-anterior STG/STS, the pSTS, and the pSTG/IPL—we observed different patterns of activation during recognition memory. Consistent with previous studies of auditory stimulus repetition (Cohen et al. 2004; Dehaene-Lambertz et al. 2006; Hara et al. 2007), we observed decreases in activity (relative to novel items) in the mid-anterior STG/STS when both the encoded and repeated items were delivered in the auditory modality. This study builds on previous studies in showing that auditory–verbal repetition suppression effects in the mid-anterior STG/STS are present in the context of an explicit recognition memory judgment. In contrast to the more anterior superior temporal region, the pSTG/IPL showed greater activation (reactivation) during correct positive recognition judgments when the modality at encoding was auditory. Finally, a region in the posterior portion of the STS showed a large reactivation effect that was confined to the Aud:Vis condition, that is, when an auditorily encoded item was tested with a visual probe. Taken together, the pattern of repetition effects observed in auditory-sensitive superior temporal cortex reveals a neuroanatomical dissociation in which the mid-anterior STG/STS shows auditory-specific repetition suppression effects and 2 posterior superior temporal regions show enhancement effects—although in the case of the pSTS enhancement, effects were restricted to cross-modal item-probe pairs (Aud:Vis), whereas in the STG/IPL, enhancement effects were observed for both auditory and visual probes (Aud:Aud and Aud:Vis). 
Conjunction analyses revealed domain general repetition suppression effects in the anterior STS, in a region located just inferior and anterior to the region showing maximal Aud:Aud repetition suppression, as well as in the hippocampus and parahippocampal gyrus. In contrast, domain general repetition enhancement effects were observed in dorsal frontal and parietal regions, consistent with previous neuroimaging research, using a variety of stimulus materials, on old > new effects in recognition memory (Kahn et al. 2004; Shannon and Buckner 2004).
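The relative positions described here and in the Results can be checked against the reported peak coordinates. The short Python sketch below computes the offset of the anterior STS/MTG peak relative to the mid-anterior STG/STS peak. It uses only the two MNI peaks reported in the Results and ignores cluster extent. The variable names are illustrative.

```python
import math

# Peak MNI coordinates (x, y, z) in mm, as reported in the Results.
anterior_sts_mtg = (-57, -5, -24)      # supramodal repetition reduction
mid_anterior_stg_sts = (-66, -7, -9)   # Aud:Aud-specific repetition suppression

# Offset of the anterior STS/MTG peak relative to the mid-anterior STG/STS peak.
# MNI axes: +x = rightward, +y = anterior, +z = superior. In the left
# hemisphere, a positive x offset therefore means closer to the midline.
offsets = tuple(a - b for a, b in zip(anterior_sts_mtg, mid_anterior_stg_sts))
distance = math.dist(anterior_sts_mtg, mid_anterior_stg_sts)  # Python 3.8+

print(offsets, round(distance, 1))  # (9, 2, -15) 17.6
```

The 15 mm inferior offset matches the "approximately 15 mm" figure given in the Results. The straight-line distance between the two peaks is about 17.6 mm, because it also includes the 9 mm mediolateral and 2 mm anteroposterior offsets.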

Repetition Suppression

It is well known that repetition suppression effects in visual object processing regions in the inferior temporal cortex are not always sensitive to task demands. For instance, Miller and Desimone (1994) recorded single units in the inferotemporal cortex of the macaque. They showed that suppression effects were equally large for repeated stimuli that were behaviorally irrelevant and for stimuli to which the monkey had to make a “matching” response. In their task, a visual sample stimulus (A) was followed by one or more sequential test stimuli (BCDEA), and the monkey had to respond when presented with an item that matched the sample. In trials referred to as “ABBA,” a nonmatching distracter stimulus (B) was repeated in the interval between the sample stimulus and the correctly matching test stimulus. Only cells showing a reactivation (or “match enhancement”) effect distinguished between a repeated distracter stimulus (B) and the correct test probe. Miller and Desimone (1994) concluded that automatic repetition suppression and match enhancement reflect 2 different short-term memory mechanisms. In their view, the latter class of responses indicates storage processes associated with active, or working, memory. Our observation of a regional dissociation between repetition suppression and repetition enhancement may likewise reflect 2 different memory mechanisms. On this view, repetition suppression arises automatically as a result of stimulus processing, whereas repetition enhancement is explicitly associated with active memory processing.

Xu et al. (2007) have shown that the repetition suppression response to scenes in the parahippocampal gyrus is equivalent across different perceptual tasks, even when repetition has opposite effects on behavioral performance in the 2 tasks. Consistent with these findings, Sayres and Grill-Spector (2006) have shown that repetition suppression in object-selective visual cortex is driven by perceptual processes that occur during stimulus recognition itself, rather than at a later postperceptual or task-driven processing stage. Desimone (1996) has proposed that repetition suppression in sensory/perceptual processing regions is primarily a bottom-up phenomenon. On this proposal, suppression occurs because neural tuning leaves a smaller pool of neurons responding to the repeated stimulus. Our results show that the repetition suppression effect, at least in the anterior superior temporal region, persists even in the context of a recognition memory judgment. This is consistent with the notion that repetition suppression primarily reflects implicit processes, which are not necessarily sensitive to explicit task demands such as a memory judgment (Henson 2003; Schott et al. 2006).

Scott and colleagues (Scott et al. 2000; Spitsyna et al. 2006) have shown that the mid-anterior STG/STS is sensitive to the phonetic features of speech even when they are embedded in an unintelligible acoustic medium (e.g., speech that has been filtered by spectral inversion of the acoustic signal). In contrast, an adjacent region in the STS (anterior and inferior to the STG region) is sensitive to speech that is “intelligible.” This sensitivity of the anterior STS to intelligibility holds across auditory and visual modalities (Spitsyna et al. 2006). In the visual case, activation in the anterior STS was greater during the viewing of written text than during the viewing of a false font. Our study extends these findings to the domain of short-term recognition memory and the repetition suppression effect. The finding of auditory-specific repetition suppression in the mid-anterior STG/STS is consistent with this region playing a role in the perception of acoustic–phonetic features. This effect may be akin to the presemantic auditory subsystem identified by Schacter and Church (1992) in the context of implicit memory paradigms. The further observation of a supramodal repetition suppression effect in the anterior STS is consistent with the work of Scott and colleagues. It argues for a more abstract level of representation in this region that is relatively insensitive to the modality of stimulus input.

Repetition Enhancement

Whereas repetition suppression effects were found in the anterior portion of the superior temporal lobe, reactivation effects were observed in more posterior regions. Some previous reports have shown repetition suppression and enhancement effects in different neurons within the same cortical region (Miller and Desimone 1994; Rainer and Miller 2000). Others have shown both effects in the same cortical region under different conditions (Turk-Browne et al. 2007). For instance, Turk-Browne et al. (2007) found that the visual quality of a repeating scene stimulus determined whether the parahippocampal place area would show repetition enhancement or repetition suppression. In the present study, however, the dissociation occurred for the same probe stimuli across distant regions in auditory association cortex. Matching probes elicited categorically different responses in anterior and posterior superior temporal regions. This difference seems to call for an explanation that draws on recent work on the functional neuroanatomical differences between anterior and posterior information processing streams in auditory cortex (Romanski et al. 1999; Tian et al. 2001). A number of recent articles (Hickok and Poeppel 2004, 2007; Scott and Wise 2004) have argued that the pathway emerging from the posterior portion of auditory cortex constitutes an auditory “dorsal stream.” This stream is specialized for the processing of speech and other imitable sounds in the context of auditory–motor integration. Part of the motivation for positing such a system comes from lesion evidence. Unilateral lesions to the posterior temporal cortex tend to cause deficits in speech production more often than deficits in speech perception (Hickok and Poeppel 2000). 
Indeed, lesions to the pSTG and IPL are commonly associated with profound impairments in auditory–verbal repetition, short-term memory, and spontaneous speech production, typically in patients with Wernicke's or conduction aphasia (Damasio and Damasio 1980; Selnes et al. 1985; Shallice and Vallar 1990; Goodglass 1993). The posterior portion of the STG has strong neuroanatomical connections with the posterior prefrontal cortex, including BA 44/6. This region is known to be important for the motor/articulatory processes underlying speech output (Romanski et al. 1999; Buchsbaum et al. 2005; Catani et al. 2005) as well as for tasks that require some degree of phonological awareness (Zatorre et al. 1992; Hickok and Poeppel 2004). The evidence thus supports a role for the pSTG in speech production and auditory–motor integration. In light of this evidence, one explanation for our observation of repetition enhancement in the STG/IPL is that the activation reflects phonological retrieval processes that occur automatically during successful verbal item recognition. On this account, the reactivation effects observed during recognition memory in these posterior temporal and inferior frontal regions, which are known to be involved in working memory, may reflect maintenance processes that are necessary for explicit judgments about the current contents of memory. The mechanism underlying working memory maintenance is commonly thought to involve persistent neural activity in both prefrontal and posterior perceptual regions (Wang 2001). The present task differs from standard working memory tasks in that subjects are not engaged in rehearsal (i.e., retaining a fixed set of items across a delay). Rather, they are continually making recognition decisions about a constantly changing probe item. 
Nevertheless, we observed increased activity for positive probes (hits) in the same posterior temporal region that is active during auditory–verbal working memory maintenance. This observation suggests that a similar neural mechanism might underlie the 2 phenomena. On this view, in the case of repetition enhancement, the probe stimulus makes contact with a recently stored memory trace and reactivates it. The difference between reactivation in recognition memory and the persistent activity associated with working memory maintenance may be only a matter of what triggers it. In the former case, the activating “trigger” is an external stimulus. In the latter, the reactivation is driven internally by prefrontally mediated, top-down attentional processes (Gazzaley et al. 2005; Johnson et al. 2007).

In the STG/IPL, we observed auditory repetition effects irrespective of the probe modality. In contrast, in the pSTS we observed repetition effects that were strongly dependent on the modality of the probe stimulus. Aud:Aud repetitions produced a weak repetition suppression effect that was not statistically reliable, whereas a strong and significant reactivation effect was observed in the Aud:Vis condition. This enhanced activation for visual probes was also observed in the inferior frontal sulcus and ventral temporal cortex. The pSTS has long been known to have polysensory response properties (Bruce et al. 1981; Hikosaka et al. 1988). Functional neuroimaging studies have also shown it to be sensitive to a variety of stimulus modalities, including visual, auditory, and tactile (Beauchamp 2005). In the speech domain, the pSTS has been shown to be more active when simultaneously presented visual and auditory items are congruent than when they are incongruent (Wright et al. 2003; Macaluso et al. 2004; van Atteveldt et al. 2004). An example of a congruent pair is the sound of the letter “A” paired with a visual presentation of the letter “A.” This suggests one explanation for our observation of a large Aud:Vis reactivation effect in the pSTS. During recognition of a congruent but cross-modal item–probe pair, the pSTS may integrate the current visual item with the previously presented auditory item. According to this interpretation, the increase in activation observed for simultaneously presented auditory and visual stimulus pairs also holds when the 2 stimuli are presented at different moments in time, as in the current study. Note, however, that we did not find a reactivation effect for the reverse (Vis:Aud) condition, a finding that complicates the cross-modal integration hypothesis.

Memory Lag

We found large and consistent effects (across all item/probe modality combinations) of repetition lag in the prefrontal and parietal cortices. In the IFG and in the intraparietal sulcus, regions routinely associated with controlled attention (LaBar et al. 1999; Pessoa et al. 2002; Marklund et al. 2007), activity increased as a function of lag. The opposite pattern was observed, however, in the lateral parietal cortex in both hemispheres, where activity decreased as a function of lag. As the behavioral data indicate, recognizing a probe that was repeated 5 items ago is more difficult than detecting an immediate (lag 1) repetition. One way of explaining the increased attentional demand at longer lags is in terms of the strength of the memory signal elicited by the probe stimulus. Older memory traces are “weaker” owing to either decay or retroactive interference. Thus, as the interval between item and probe increases, one might expect the amount of bottom-up trace reactivation to decline, placing a greater burden on prefrontal retrieval processes. According to this view, then, there is an inverse relationship between mnemonic trace strength and the extent to which controlled memory search processes are recruited (see Cabeza et al. 2008 for a similar view).

If the pattern of activity in the IFG and IPS reflects the deployment of top-down retrieval mechanisms, the pattern of activity in the lateral parietal cortex is a natural candidate for the representation of mnemonic trace strength (Klimesch et al. 2006). On this interpretation, the inverse patterns of activation in the IFG and lateral parietal cortex indicate a trade-off between top-down retrieval mechanisms and mnemonic trace strength. This interpretation is supported by the existing literature on the neural basis of recognition memory. A number of studies have shown that activity in the lateral parietal cortex covaries with several measures of episodic memory strength (Wagner et al. 2005). For instance, in the remember/know paradigm, activity in the lateral parietal cortex is greater for items classified as explicitly recollected (remember) than for items classified as old but not explicitly recollected (know) (Wheeler and Buckner 2004). The lateral parietal cortex has also been shown to be more active when a subject can recall an item and its source than when he or she can remember the item but not its source (Kahn et al. 2004). In addition, activity is modulated by the amount of information retrieved: when a subject can recall more information about a remembered event, activity in the lateral parietal cortex increases (Vilberg and Rugg 2007). Our study adds to the growing literature on the role of the lateral parietal cortex in memory retrieval. It shows that activation in this area is not confined to episodic memory, traditionally construed as a long-term memory phenomenon, but extends to memory paradigms involving very short delays.

Conclusions

This experiment examined patterns of repetition suppression and reactivation in the auditory-sensitive cortex of the superior temporal lobe during a short-term verbal continuous recognition paradigm. We observed repetition suppression effects in the mid-anterior STG/STS and reactivation effects in the STG/IPL. These observations support a 2-process model of auditory–verbal recognition memory in which stimulus identification and categorization are primarily mediated by an anterior auditory stream, and phonological retrieval is mediated by a dorsal auditory stream. The difference in the direction of the effects may indicate a fundamental difference between 2 kinds of memory processes. Automatic, or implicit, processes are associated with low-level stimulus identification. Later-occurring explicit processes operate in the context of phonological retrieval. Taken together, these results further our understanding of how repetition suppression and reactivation may both contribute to short-term auditory–verbal recognition memory.

Supplementary Material

Supplementary Figures 1 and 2 can be found at: http://www.cercor.oxfordjournals.org/.

Funding

Funding to pay the Open Access publication charges for this article was provided by the National Institutes of Health (grant MH63901).

Acknowledgments

Conflict of interest: None declared.

References

- Baddeley A. Working memory: looking back and looking forward. Nat Rev Neurosci. 2003;4:829–839. doi: 10.1038/nrn1201.

- Beauchamp MS. See me, hear me, touch me: multisensory integration in lateral occipital-temporal cortex. Curr Opin Neurobiol. 2005;15:145–153. doi: 10.1016/j.conb.2005.03.011.

- Bruce C, Desimone R, Gross CG. Visual properties of neurons in a polysensory area in superior temporal sulcus of the macaque. J Neurophysiol. 1981;46:369–384. doi: 10.1152/jn.1981.46.2.369.

- Buchsbaum B, Hickok G, Humphries C. Role of left superior temporal gyrus in phonological processing for speech perception and production. Cogn Sci. 2001;25:663–678.

- Buchsbaum BR, D'Esposito M. The search for the phonological store: from loop to convolution. J Cogn Neurosci. 2008;20:762–768. doi: 10.1162/jocn.2008.20501.

- Buchsbaum BR, Olsen RK, Koch P, Berman KF. Human dorsal and ventral auditory streams subserve rehearsal-based and echoic processes during verbal working memory. Neuron. 2005;48:687–697. doi: 10.1016/j.neuron.2005.09.029.

- Cabeza R, Ciaramelli E, Olson IR, Moscovitch M. The parietal cortex and episodic memory: an attentional account. Nat Rev Neurosci. 2008;9:613–625. doi: 10.1038/nrn2459.

- Catani M, Jones DK, ffytche DH. Perisylvian language networks of the human brain. Ann Neurol. 2005;57:8–16. doi: 10.1002/ana.20319.

- Cohen L, Jobert A, Le Bihan D, Dehaene S. Distinct unimodal and multimodal regions for word processing in the left temporal cortex. Neuroimage. 2004;23:1256–1270. doi: 10.1016/j.neuroimage.2004.07.052.

- Coltheart M. The MRC psycholinguistic database. Quart J Exp Psychol. 1981;33A:497–505.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Damasio AR. Time-locked multiregional retroactivation: a systems-level proposal for the neural substrates of recall and recognition. Cognition. 1989;33:25–62. doi: 10.1016/0010-0277(89)90005-x.

- Damasio H, Damasio AR. The anatomical basis of conduction aphasia. Brain. 1980;103:337–350. doi: 10.1093/brain/103.2.337.

- Dehaene-Lambertz G, Dehaene S, Anton JL, Campagne A, Ciuciu P, Dehaene GP, Denghien I, Jobert A, Lebihan D, Sigman M, et al. Functional segregation of cortical language areas by sentence repetition. Hum Brain Mapp. 2006;27:360–371. doi: 10.1002/hbm.20250.

- Desimone R. Neural mechanisms for visual memory and their role in attention. Proc Natl Acad Sci USA. 1996;93:13494–13499. doi: 10.1073/pnas.93.24.13494.

- Druzgal TJ, D'Esposito M. Activity in fusiform face area modulated as a function of working memory load. Brain Res Cogn Brain Res. 2001;10:355–364. doi: 10.1016/s0926-6410(00)00056-2.

- Druzgal TJ, D'Esposito M. Dissecting contributions of prefrontal cortex and fusiform face area to face working memory. J Cogn Neurosci. 2003;15:771–784. doi: 10.1162/089892903322370708.

- Feredoes E, Tononi G, Postle BR. The neural bases of the short-term storage of verbal information are anatomically variable across individuals. J Neurosci. 2007;27:11003–11008. doi: 10.1523/JNEUROSCI.1573-07.2007.

- Friston KJ, Penny WD, Glaser DE. Conjunction revisited. Neuroimage. 2005;25:661–667. doi: 10.1016/j.neuroimage.2005.01.013.

- Fuster JM. Network memory. Trends Neurosci. 1997;20:451–459. doi: 10.1016/s0166-2236(97)01128-4.

- Gazzaley A, Cooney JW, McEvoy K, Knight RT, D'Esposito M. Top-down enhancement and suppression of the magnitude and speed of neural activity. J Cogn Neurosci. 2005;17:507–517. doi: 10.1162/0898929053279522.

- Goodglass H. Understanding aphasia. San Diego (CA): Academic Press; 1993.

- Grill-Spector K, Henson R, Martin A. Repetition and the brain: neural models of stimulus-specific effects. Trends Cogn Sci. 2006;10:14–23. doi: 10.1016/j.tics.2005.11.006.

- Hara NF, Nakamura K, Kuroki C, Takayama Y, Ogawa S. Functional neuroanatomy of speech processing within the temporal cortex. Neuroreport. 2007;18:1603–1607. doi: 10.1097/WNR.0b013e3282f03f39.

- Henson RN. Neuroimaging studies of priming. Prog Neurobiol. 2003;70:53–81. doi: 10.1016/s0301-0082(03)00086-8.

- Hickok G, Buchsbaum B, Humphries C, Muftuler T. Auditory-motor interaction revealed by fMRI: speech, music, and working memory in area Spt. J Cogn Neurosci. 2003;15:673–682. doi: 10.1162/089892903322307393.

- Hickok G, Erhard P, Kassubek J, Helms-Tillery AK, Naeve-Velguth S, Strupp JP, Strick PL, Ugurbil K. A functional magnetic resonance imaging study of the role of left posterior superior temporal gyrus in speech production: implications for the explanation of conduction aphasia. Neurosci Lett. 2000;287:156–160. doi: 10.1016/s0304-3940(00)01143-5.

- Hickok G, Poeppel D. Towards a functional neuroanatomy of speech perception. J Cogn Neurosci. 2000:45–45. doi: 10.1016/s1364-6613(00)01463-7.

- Hickok G, Poeppel D. Dorsal and ventral streams: a framework for understanding aspects of the functional anatomy of language. Cognition. 2004;92:67–99. doi: 10.1016/j.cognition.2003.10.011.

- Hickok G, Poeppel D. The cortical organization of speech processing. Nat Rev Neurosci. 2007;8:393–402. doi: 10.1038/nrn2113.

- Hikosaka K, Iwai E, Saito H, Tanaka K. Polysensory properties of neurons in the anterior bank of the caudal superior temporal sulcus of the macaque monkey. J Neurophysiol. 1988;60:1615–1637. doi: 10.1152/jn.1988.60.5.1615.

- Indefrey P, Levelt WJ. The spatial and temporal signatures of word production components. Cognition. 2004;92:101–144. doi: 10.1016/j.cognition.2002.06.001.

- Johnson MR, Mitchell KJ, Raye CL, D'Esposito M, Johnson MK. A brief thought can modulate activity in extrastriate visual areas: top-down effects of refreshing just-seen visual stimuli. Neuroimage. 2007;37:290–299. doi: 10.1016/j.neuroimage.2007.05.017.

- Kahn I, Davachi L, Wagner AD. Functional-neuroanatomic correlates of recollection: implications for models of recognition memory. J Neurosci. 2004;24:4172–4180. doi: 10.1523/JNEUROSCI.0624-04.2004.

- Klimesch W, Hanslmayr S, Sauseng P, Gruber W, Brozinsky CJ, Kroll NE, Yonelinas AP, Doppelmayr M. Oscillatory EEG correlates of episodic trace decay. Cereb Cortex. 2006;16:280–290. doi: 10.1093/cercor/bhi107. [DOI] [PubMed] [Google Scholar]

- LaBar KS, Gitelman DR, Parrish TB, Mesulam M. Neuroanatomic overlap of working memory and spatial attention networks: a functional MRI comparison within subjects. Neuroimage. 1999;10:695–704. doi: 10.1006/nimg.1999.0503. [DOI] [PubMed] [Google Scholar]

- Liebenthal E, Binder JR, Spitzer SM, Possing ET, Medler DA. Neural substrates of phonemic perception. Cereb Cortex. 2005;15:1621–1631. doi: 10.1093/cercor/bhi040.

- Macaluso E, George N, Dolan R, Spence C, Driver J. Spatial and temporal factors during processing of audiovisual speech: a PET study. Neuroimage. 2004;21:725–732. doi: 10.1016/j.neuroimage.2003.09.049.

- Marklund P, Fransson P, Cabeza R, Larsson A, Ingvar M, Nyberg L. Unity and diversity of tonic and phasic executive control components in episodic and working memory. Neuroimage. 2007;36:1361–1373. doi: 10.1016/j.neuroimage.2007.03.058.

- Miller EK, Desimone R. Parallel neuronal mechanisms for short-term memory. Science. 1994;263:520–522. doi: 10.1126/science.8290960.

- Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25:653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- Nyberg L, Habib R, McIntosh AR, Tulving E. Reactivation of encoding-related brain activity during memory retrieval. Proc Natl Acad Sci USA. 2000;97:11120–11124. doi: 10.1073/pnas.97.20.11120.

- Pessoa L, Gutierrez E, Bandettini PA, Ungerleider L. Neural correlates of visual working memory: fMRI amplitude predicts task performance. Neuron. 2002;35:975–987. doi: 10.1016/s0896-6273(02)00817-6.

- Polyn SM, Natu VS, Cohen JD, Norman KA. Category-specific cortical activity precedes retrieval during memory search. Science. 2005;310:1963–1966. doi: 10.1126/science.1117645.

- Postle BR. Working memory as an emergent property of the mind and brain. Neuroscience. 2006;139:23–38. doi: 10.1016/j.neuroscience.2005.06.005.

- Postle BR, Druzgal TJ, D'Esposito M. Seeking the neural substrates of visual working memory storage. Cortex. 2003;39:927–946. doi: 10.1016/s0010-9452(08)70871-2.

- Rainer G, Miller EK. Effects of visual experience on the representation of objects in the prefrontal cortex. Neuron. 2000;27:179–189. doi: 10.1016/s0896-6273(00)00019-2.

- Rama P, Poremba A, Sala JB, Yee L, Malloy M, Mishkin M, Courtney SM. Dissociable functional cortical topographies for working memory maintenance of voice identity and location. Cereb Cortex. 2004;14:768–780. doi: 10.1093/cercor/bhh037.

- Raye CL, Johnson MK, Mitchell KJ, Reeder JA, Greene EJ. Neuroimaging a single thought: dorsolateral PFC activity associated with refreshing just-activated information. Neuroimage. 2002;15:447–453. doi: 10.1006/nimg.2001.0983.

- Romanski LM, Tian B, Fritz J, Mishkin M, Goldman-Rakic PS, Rauschecker JP. Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci. 1999;2:1131–1136. doi: 10.1038/16056.

- Sayres R, Grill-Spector K. Object-selective cortex exhibits performance-independent repetition suppression. J Neurophysiol. 2006;95:995–1007. doi: 10.1152/jn.00500.2005.

- Schacter DL, Church BA. Auditory priming: implicit and explicit memory for words and voices. J Exp Psychol Learn Mem Cogn. 1992;18:915–930. doi: 10.1037//0278-7393.18.5.915.

- Schacter DL, Dobbins IG, Schnyer DM. Specificity of priming: a cognitive neuroscience perspective. Nat Rev Neurosci. 2004;5:853–862. doi: 10.1038/nrn1534.

- Schott BH, Richardson-Klavehn A, Henson RN, Becker C, Heinze HJ, Duzel E. Neuroanatomical dissociation of encoding processes related to priming and explicit memory. J Neurosci. 2006;26:792–800. doi: 10.1523/JNEUROSCI.2402-05.2006.

- Scott SK, Blank CC, Rosen S, Wise RJ. Identification of a pathway for intelligible speech in the left temporal lobe. Brain. 2000;123:2400–2406. doi: 10.1093/brain/123.12.2400.

- Scott SK, Wise RJ. The functional neuroanatomy of prelexical processing in speech perception. Cognition. 2004;92:13–45. doi: 10.1016/j.cognition.2002.12.002.

- Selnes OA, Knopman DS, Niccum N, Rubens AB. The critical role of Wernicke's area in sentence repetition. Ann Neurol. 1985;17:549–557. doi: 10.1002/ana.410170604.

- Shallice T, Vallar G. The impairment of auditory-verbal short-term storage. In: Vallar G, Shallice T, editors. Neuropsychological impairments of short-term memory. Cambridge: Cambridge University Press; 1990. pp. 11–53.

- Shannon BJ, Buckner RL. Functional-anatomic correlates of memory retrieval that suggest nontraditional processing roles for multiple distinct regions within posterior parietal cortex. J Neurosci. 2004;24:10084–10092. doi: 10.1523/JNEUROSCI.2625-04.2004.

- Spitsyna G, Warren JE, Scott SK, Turkheimer FE, Wise RJ. Converging language streams in the human temporal lobe. J Neurosci. 2006;26:7328–7336. doi: 10.1523/JNEUROSCI.0559-06.2006.

- Thirion B, Pinel P, Meriaux S, Roche A, Dehaene S, Poline JB. Analysis of a large fMRI cohort: statistical and methodological issues for group analyses. Neuroimage. 2007;35:105–120. doi: 10.1016/j.neuroimage.2006.11.054.

- Tian B, Reser D, Durham A, Kustov A, Rauschecker JP. Functional specialization in rhesus monkey auditory cortex. Science. 2001;292:290–293. doi: 10.1126/science.1058911.

- Turk-Browne NB, Yi DJ, Leber AB, Chun MM. Visual quality determines the direction of neural repetition effects. Cereb Cortex. 2007;17:425–433. doi: 10.1093/cercor/bhj159.

- van Atteveldt N, Formisano E, Goebel R, Blomert L. Integration of letters and speech sounds in the human brain. Neuron. 2004;43:271–282. doi: 10.1016/j.neuron.2004.06.025.

- Vilberg KL, Rugg MD. Dissociation of the neural correlates of recognition memory according to familiarity, recollection, and amount of recollected information. Neuropsychologia. 2007;45:2216–2225. doi: 10.1016/j.neuropsychologia.2007.02.027.

- Wagner AD, Shannon BJ, Kahn I, Buckner RL. Parietal lobe contributions to episodic memory retrieval. Trends Cogn Sci. 2005;9:445–453. doi: 10.1016/j.tics.2005.07.001.

- Wang XJ. Synaptic reverberation underlying mnemonic persistent activity. Trends Neurosci. 2001;24:455–463. doi: 10.1016/s0166-2236(00)01868-3.

- Wernicke C. Der aphasische Symptomencomplex: eine psychologische Studie auf anatomischer Basis. In: Eggert GH, editor. Wernicke's works on aphasia: a sourcebook and review. The Hague (The Netherlands): Mouton; 1874. pp. 91–145.

- Wheeler ME, Buckner RL. Functional-anatomic correlates of remembering and knowing. Neuroimage. 2004;21:1337–1349. doi: 10.1016/j.neuroimage.2003.11.001.

- Wheeler ME, Petersen SE, Buckner RL. Memory's echo: vivid remembering reactivates sensory-specific cortex. Proc Natl Acad Sci USA. 2000;97:11125–11129. doi: 10.1073/pnas.97.20.11125.

- Wright TM, Pelphrey KA, Allison T, McKeown MJ, McCarthy G. Polysensory interactions along lateral temporal regions evoked by audiovisual speech. Cereb Cortex. 2003;13:1034–1043. doi: 10.1093/cercor/13.10.1034.

- Xu Y, Turk-Browne NB, Chun MM. Dissociating task performance from fMRI repetition attenuation in ventral visual cortex. J Neurosci. 2007;27:5981–5985. doi: 10.1523/JNEUROSCI.5527-06.2007.

- Yi DJ, Turk-Browne NB, Chun MM, Johnson MK. When a thought equals a look: refreshing enhances perceptual memory. J Cogn Neurosci. 2008;20:1371–1380. doi: 10.1162/jocn.2008.20094.

- Zatorre RJ, Evans AC, Meyer E, Gjedde A. Lateralization of phonetic and pitch discrimination in speech processing. Science. 1992;256:846–849. doi: 10.1126/science.1589767.
