The hypothesis that dopamine (DA) receptor transmission mediates reinforced learning [162] by strengthening the synaptic connections between corticostriatal inputs and striatal outputs active at the time that a reward is encountered [21] was based largely upon analysis of dopamine antagonist effects on reinforced learning, and preceded the pioneering work by Schultz and colleagues demonstrating that phasic midbrain DA neurons respond to reward and reward-paired stimuli [130, 134]. Later models of DA-mediated reinforcement learning [22, 99, 131, 156, 158] incorporated data regarding electrophysiological responses of substantia nigra (SN) and ventral tegmental (VTA) DA cells to reward-related stimuli, and work in slice preparations demonstrating DA-dependent synaptic plasticity in the striatum [28, 30, 32, 157]. Today, available data help to shed light on the nature of input-output connectivity in the striatum, and the types of information likely transmitted by cortical inputs to striatal output cells. This paper will consider 1) the types of events signaled by phasic midbrain DA neurons to their striatal recipients, 2) DA-mediated plasticity at corticostriatal synapses, 3) the informational codes received and transmitted by striatal output neurons, and 4) simple models of striatal-based learning that incorporate these data.

Phasic electrophysiological responses of midbrain DA neurons

Midbrain dopamine neurons of the VTA and SN send projections to a number of target regions, with particularly strong projections to the dorsal and ventral striatum and the prefrontal cortex (PFC) [20, 44, 106, 152]. A large body of evidence suggests that these DA cells undergo phasic activation in response to primary and conditioned rewards that are not fully-predicted by earlier events or that are of higher-than-expected value [45, 134, 146].

Increases in midbrain DA discharge rate produce elevations in DA release that are disproportionally large during burst-mode firing compared to release during firing of individual action potentials [142]. The phasic burst activation of DA cells following reward delivery has been postulated to provide a ‘teaching’ signal that promotes synaptic strengthening of those cortico-striatal and limbic-striatal glutamate (GLU) synapses whose activity is coincident in time with the phasic increase in synaptic DA concentration [21, 131, 159].

The magnitude of the phasic DA response to reward is inversely related to the animal’s reward expectation at the time the reward is delivered [45, 131]. Phasic responses of DA neurons can thus be said to code the discrepancy between predicted and actual reward, that is, a reward prediction error [99, 131]. Like the midbrain DA response, associative learning is greatest when presentation of the unconditioned stimulus (US) is unexpected [74, 91]. Thus, the conditions under which DA-reward activations are observed make these phasic activations particularly well-suited to contribute to reward-related learning [131].
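The prediction-error idea can be illustrated with a minimal sketch. It assumes a simple delta-rule update of reward expectation; the learning rate, the linear form of the update, and the function names are illustrative assumptions rather than details taken from the studies cited above.

```python
def prediction_error(reward: float, expected: float) -> float:
    """Discrepancy between delivered and predicted reward."""
    return reward - expected


def update_expectation(expected: float, reward: float,
                       learning_rate: float = 0.2) -> float:
    """Move the expectation a fraction of the way toward the delivered reward.

    The learning rate is an assumed, illustrative value.
    """
    return expected + learning_rate * prediction_error(reward, expected)


# Repeated delivery of the same reward: the error (the hypothesized phasic
# DA response) is largest on the first trial and shrinks as the reward
# becomes predicted.
expected = 0.0
errors = []
for trial in range(20):
    errors.append(prediction_error(1.0, expected))
    expected = update_expectation(expected, 1.0)

# Omission of a fully expected reward yields a negative error.
omission_error = prediction_error(0.0, 1.0)
```

In this sketch the error is positive for a better-than-expected reward, approaches zero as the reward becomes predicted, and is negative when an expected reward is omitted.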

Midbrain dopamine neurons can also show phasic activation responses to salient stimuli without primary or conditioned reward value [63, 66, 90, 141]. However, the characteristics of these DA responses differ from those seen following presentation of primary or conditioned rewards. While both rewards and salient non-rewards can produce phasic DA activation with onset latencies of approximately 70 ms, the phasic DA activations in response to salient non-rewards are sustained for approximately 100 ms and are then followed by a period of inhibition in which the DA cell ceases to fire. In contrast, the DA activations elicited by primary and conditioned rewards last several hundreds of milliseconds and are not followed by a period of inhibition [65, 132].

The onset of the DA response to a visual cue signaling reward delivery occurs prior to the visual saccade that would permit foveation of the visual stimulus, and therefore before the reward vs. non-reward status of the event would be expected to have been evaluated [118, 120]. Evidence suggests that one source of this rapid activation of DA cells may be the superior colliculus [35, 36, 96]. In light of the similar onset latencies for DA activation responses to reward-related and salient non-reward stimuli, and the sustained response observed to reward-related stimuli, it is possible that DA cells respond rapidly to salient and novel stimuli, and if the event is subsequently (within hundreds of ms) determined to be reward-related, the DA excitatory response is sustained; if not, a period of inhibition follows.
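The two response profiles described above can be summarized in a small sketch. The onset latency (about 70 ms) and the duration of the non-reward activation (about 100 ms) come from the text; the 300 ms sustained duration and the 100 ms pause duration are assumed placeholder values, and the function name is hypothetical.

```python
def da_response(t_ms: float, is_reward: bool,
                onset: float = 70.0, burst: float = 100.0,
                sustained: float = 300.0, pause: float = 100.0) -> str:
    """Return the DA cell's state at t_ms after stimulus onset.

    `sustained` and `pause` are assumed durations chosen only for illustration.
    """
    if t_ms < onset:
        return "baseline"
    elapsed = t_ms - onset
    if is_reward:
        # Reward-related events: the initial activation is sustained.
        return "burst" if elapsed < sustained else "baseline"
    if elapsed < burst:
        # Salient non-reward: same rapid, non-discriminating onset.
        return "burst"
    if elapsed < burst + pause:
        # Non-reward status determined: activation gives way to inhibition.
        return "pause"
    return "baseline"
```

At 100 ms after stimulus onset the two event types are indistinguishable in this sketch; they diverge only after the initial activation window has elapsed.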

A strong candidate source of the inhibitory input to DA cells following evaluation of the non-reward status of a salient sensory event is the lateral habenula. Cells in the lateral habenula send inhibitory projections to both VTA and SN DA cells [34, 59, 92], and respond to the presentation of non-reward-related visual target stimuli (interspersed with reward-related target trials) approximately 40 ms prior to the onset of the DA inhibitory response to salient non-reward stimuli [83, 92].

The rapid non-discriminating activation onset of the DA response may serve to provide a relatively precise timestamp for modulation of corticostriatal synapses [118, 119], strengthening those striatal input-output connections active at the time the reward stimulus was presented. It has been suggested that the post-excitatory inhibitory phase associated with DA responses to non-reward stimuli may serve to ‘cancel-out’ the preceding phasic DA signal within striatal target regions [73]. It is possible that a slower DA activation onset would permit the maladaptive strengthening of corticostriatal synapses that become active at a later moment in time, i.e., strengthening striatal input-output connections that do not correspond closely to the reward-procuring behavior or to the stimulus conditions that elicited the behavior.

The term ‘DA reward signal’, employed often throughout this paper, is not meant to suggest that DA activity mediates all aspects of reward [23, 125], but rather that the phasic DA signal conveys information regarding the occurrence of reward and reward-predicting stimuli to striatal target cells. While DA plays an important role in behavioral functions that affect response expression [23, 102, 125] via DA modulation of real-time glutamate transmission at cortico- and limbic-striatal synapses [64], the focus here is on models of DA-mediated striatal plasticity and learning.

DA and striatal plasticity

Corticostriatal synapses can express both long-term potentiation (LTP) and long-term depression (LTD) [28, 33, 157]. It has been suggested that the direction of change in synaptic strength may depend upon several factors, including activity of NMDA receptors, dopamine concentrations, and activation of D1 and/or D2 receptors [2, 28, 29, 157, 159]. Cortical high-frequency stimulation (HFS) has most frequently been observed to produce LTD of corticostriatal synapses [29, 159]. However, when DA is applied in brief pulses coinciding with the time of pre-synaptic stimulation and post-synaptic depolarization of the striatal cell, corticostriatal synapses show potentiation rather than depression [157].

In rats that had previously been trained to lever press for intracranial self-stimulation (ICSS), electrical stimulation of the SN using the animal’s optimal ICSS stimulation parameters produced corticostriatal synaptic potentiation that was prevented by D1 receptor blockade [121]. Strikingly, the magnitude of corticostriatal potentiation induced by SN stimulation within a given animal was correlated with its previously-observed rate of ICSS lever-press acquisition. These data suggest that DA promotes synaptic potentiation in corticostriatal synapses, and that DA-mediated strengthening of corticostriatal synapses is related to operant learning. DA modulation of corticostriatal plasticity appears not to occur when the pulsatile application of DA is delayed with respect to the arrival of the corticostriatal excitatory input [159], an observation that gives added functional significance to the rapid onset of the midbrain DA phasic activation described above. It seems likely that phasic burst responses of midbrain DA cells produce a pulsatile increase in synaptic DA concentrations (above tonic levels) which, via D1 receptor binding, promotes LTP in currently-active corticostriatal synapses. Because D1 receptors have low affinity for the DA molecule, synaptic DA concentrations during tonic single-spike firing of DA neurons are unlikely to lead to D1 receptor binding [52, 71, 122].

D1-dependent LTP [27, 78, 121] is observed only in the population of striatal cells that express D1 receptors [139], i.e., striatal cells that send direct projections to the GPi/SNr and give rise to ‘direct’ basal ganglia circuits. Via these direct basal ganglia circuits, sometimes referred to as the ‘go system’, increased cortico-striatal transmission leads to increased thalamocortical activity, and an increased likelihood of behavioral response expression [6, 10, 48, 97]. D1-mediated LTP is therefore observed in those striatal cells for which strengthened corticostriatal synapses would be expected to lead to an increased likelihood of thalamocortical output when current patterns of corticostriatal input reoccur in the future. Corticostriatal synapses of direct-pathway circuits that are activated in the absence of D1 binding undergo LTD [139]. A DA reward signal is therefore likely to promote strengthening of currently active corticostriatal synapses for striatal cells of the direct pathway via D1-mediated LTP. In the absence of high DA concentrations, e.g., under conditions of tonic rather than phasic DA release, corticostriatal synapses for D1-expressing striatal cells would be expected to undergo LTD, reducing the likelihood that the striatal output cells will fire when the same corticostriatal inputs occur in the future.
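The plasticity rule for direct-pathway synapses described above can be written as a three-factor update: pre-synaptic input, post-synaptic activation, and phasic DA. The following is a minimal sketch; the rate constants, the weight ceiling, and the function name are illustrative assumptions, not measured quantities.

```python
def d1_synapse_update(weight: float, pre_active: bool, post_active: bool,
                      phasic_da: bool, ltp_rate: float = 0.1,
                      ltd_rate: float = 0.05, w_max: float = 1.0) -> float:
    """Update one corticostriatal synapse onto a D1-expressing striatal cell.

    All numeric parameters are assumed values for illustration only.
    """
    if not (pre_active and post_active):
        # Inactive synapses are left unchanged in this sketch.
        return weight
    if phasic_da:
        # Active synapse coincident with a phasic DA pulse: LTP, saturating
        # at w_max.
        return weight + ltp_rate * (w_max - weight)
    # Active synapse without phasic DA (tonic release only): LTD.
    return weight - ltd_rate * weight
```

For example, starting from a weight of 0.5, an active synapse moves to 0.55 when phasic DA is present and to 0.475 when it is absent.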

Synapses between corticostriatal afferents and D2 receptor-expressing striatal output neurons also undergo plasticity, but the rules governing plasticity of these synapses differ from those described above. For example, corticostriatal synapses of D2-expressing striatal cells undergo D2-facilitated LTD [139]. While such plasticity is likely to play an important complementary role in behavioral learning, it falls outside the focus of this paper. Here, DA-mediated plasticity at corticostriatal synapses refers to plasticity at synapses between corticostriatal afferents and D1-expressing striatal output neurons, i.e., those striatal neurons contributing to direct basal ganglia circuits.

Simple models of striatal stimulus-response (S-R) and response-outcome (R-O) learning

The following section describes simple models of DA-mediated stimulus-response (S-R) and response-outcome (R-O; below designated O-R) or ‘goal-directed’ learning in light of the types of information coded by striatal cells. The simple learning mechanisms described here are meant to apply generally to learning mediated by the caudate, putamen, and ventral striatum. In a later section, particular focus will be placed upon the putamen where input-output connectivity has been especially well characterized. In rats, caudate and putamen are typically referred to as dorsomedial and dorsolateral (DLS) striatum, respectively. When discussing anatomical, electrophysiological data and/or proposed learning mechanisms that apply to both the primate putamen and rat DLS, the latter term will often be employed. The following discussion will concentrate upon learning-related properties of medium spiny output cells, which comprise greater than 95% of neurons in the striatum, and for the sake of focus, not on the activity and learning-related changes observed in tonically-active interneurons in the striatum [13, 160].

The dorsal striatum receives glutamate inputs [50, 94] from virtually all regions of the cerebral cortex and from midline and intralaminar nuclei of the thalamus [77, 85, 88, 95, 138], with most of these inputs terminating on spines of medium spiny output neurons. Convergence of cortical projections onto target neurons is estimated at between 30:1 in rats and 80:1 in monkeys [53].

In the general models in this section, corticostriatal sensory inputs may be taken to refer to somatosensory, visual, auditory, and/or olfactory input, depending upon the striatal region [77, 85, 88, 95, 138]. Output activity of the striatal cell is assumed here to contribute to selection of particular behavioral actions, without specifying the basal ganglia-cortical circuitry that mediates action selection beyond the level of the striatum. For consideration of basal ganglia circuitry involved in action selection, the reader is referred to a number of excellent reviews [5, 48, 54, 56, 67, 68, 98]. Consideration of behavioral action representations as inputs to striatal cells, rather than simply as output consequences of their firing, is reserved for the final section of this paper.

S-R learning

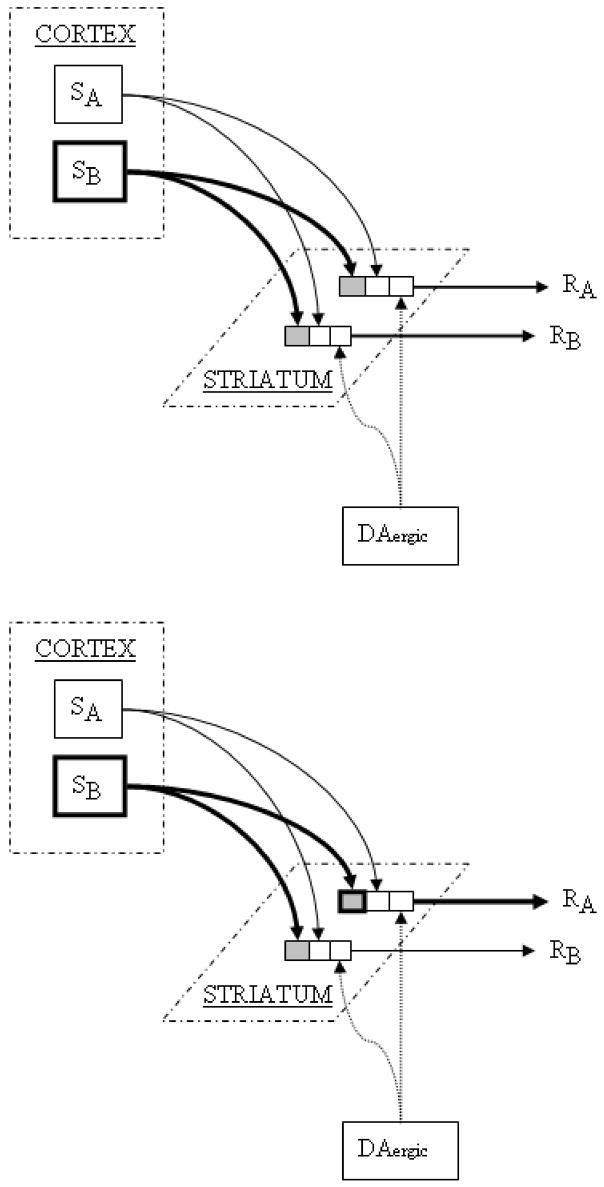

Generally, in models of S-R learning, inputs coding for sensory stimuli and outputs coding motor responses active at the time of a reinforcement signal are strengthened [21, 65, 131, 159]. In outcome-mediated learning, a behavioral response becomes associated, through learning, to a representation of a particular outcome. Upon future activation of a representation of the desired outcome, the behavioral response that produces the outcome is selected. As figures 1A and 1B illustrate, these very different types of learned behavior may be conceptualized in similar ways.

Figure 1.

Figure 1A. Corticostriatal inputs (SA, SB) coding for sensory events synapse upon striatal output cells coding for behavioral responses (RA, RB). A behavioral response (RA) leading to delivery of a reward or reward-predicting stimulus causes a phasic increase in midbrain DA activity and a consequent increase in striatal DA release. DA-mediated LTP strengthens the currently-active synapses (SB to RA; bottom diagram, bold box in striatum) and increases the likelihood that the reward-procuring behavioral response (RA) will occur in response to the same sensory stimulus (SB) in the future. If a striatal output cell is activated by convergent inputs from cortical cells representing two sensory modalities, DA activation will increase the synaptic strength of both inputs to the striatal cell. Such a cell will come to show preferential activity in response to the conjunction of the two sensory conditions, e.g., to a visual stimulus only when presented in proximity to a particular tactile field [55].

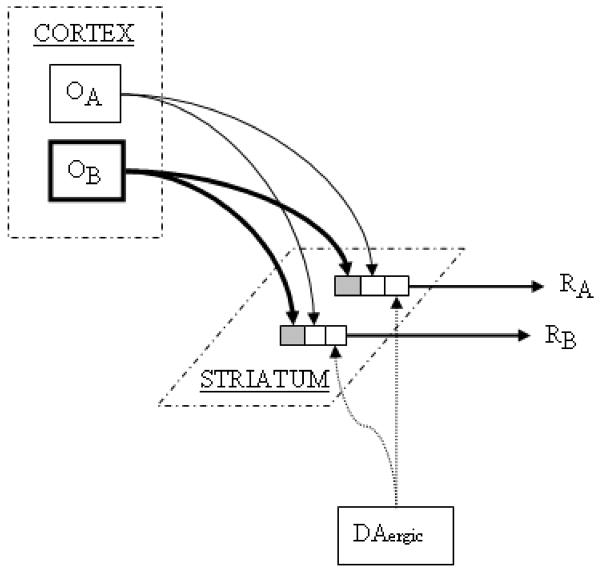

Figure 1B. Corticostriatal inputs (OA, OB) coding for expected reward outcomes synapse upon striatal output cells coding for behavioral responses (RA, RB). Selective strengthening of O-R synapses occurs in a manner identical to that depicted for S-R synapses in figure 1A.

Assume that output cells of the striatum (figure 1A, RA and RB) code particular behavioral responses, e.g., low-level codes to activate specific muscle groups associated with a movement segment, and/or higher-order codes to move a limb to a target position. During S-R learning, DA-mediated promotion of LTP when an animal encounters a less-than-fully-predicted primary or conditioned reward may strengthen the currently-active striatal synapses, i.e., those for which cortical sensory inputs (figure 1A, SB) produced activation of striatal outputs corresponding to the reward-procuring behavior (RA). In this model, the S-R striatal synapse that is active at the time the DA-mediated reward signal arrives (SB to RA) continues to strengthen (bottom diagram in 1A, bold box in the striatum) with repeated reinforcement trials, until an asymptotic level of synaptic strength is reached. S-R synapses that become activated in the absence of the phasic DA-reward signal (SB to RB) undergo LTD, reducing the likelihood that the same sensory input will depolarize the post-synaptic output cell sufficiently to produce neuronal firing in the future. As would be predicted by this type of model, sensory responses are acquired in striatal cells over the course of reward-related learning, and dopamine depletion prevents the acquisition of these striatal sensory responses [12].
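The S-R mechanism in figure 1A can be sketched as a small simulation. It assumes that a single sensory input (SB) drives two output cells (RA, RB), that the cell that fires is chosen in proportion to synaptic strength, and that every rewarded trial produces a phasic DA signal. The last assumption ignores the decline of the DA response as reward becomes predicted. Rate constants and the function name are hypothetical.

```python
import random


def simulate_sr_learning(n_trials: int = 200, ltp_rate: float = 0.1,
                         ltd_rate: float = 0.05, seed: int = 0) -> dict:
    """Simulate strengthening of SB->RA and weakening of SB->RB."""
    rng = random.Random(seed)
    # Synaptic weights from sensory input SB onto two striatal output cells.
    weights = {"RA": 0.5, "RB": 0.5}
    for _ in range(n_trials):
        # The output cell that fires is chosen in proportion to its weight.
        response = rng.choices(list(weights),
                               weights=list(weights.values()))[0]
        if response == "RA":
            # RA procures reward: phasic DA arrives and the active synapse
            # is potentiated toward an asymptote of 1.0.
            weights["RA"] += ltp_rate * (1.0 - weights["RA"])
        else:
            # RB is unrewarded: no phasic DA, so the active synapse is
            # depressed.
            weights["RB"] -= ltd_rate * weights["RB"]
    return weights


final_weights = simulate_sr_learning()
```

Because SB to RA can only strengthen and SB to RB can only weaken in this sketch, the rewarded connection ends up stronger than the unrewarded one regardless of the particular sequence of responses.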

It has been proposed that further selectivity of learning and performance may involve lateral inhibition networks [158] (not depicted in the figure) mediated by the inhibitory synaptic contacts that striatal GABAergic output neurons make with one another via local axon collaterals [84, 140, 150, 166]. Through such a mechanism, strongly activated striatal cells may inhibit potential striatal competitor cells from becoming activated. DA-mediated reinforcement of active S-R synapses corresponding to reinforced behavioral performance should, with each reinforced trial, progressively increase the ability of these striatal output cells to exert lateral inhibition on other striatal cells, producing a highly selective activation of those striatal cells whose output corresponds to the successful behavioral response under current stimulus conditions. The number of striatal cells showing task-related activations decreases dramatically over the course of operant S-R learning, as focused activity time-locked to task-related events emerges in a select group of striatal cells [18]. These data may reflect LTD in non-reinforced striatal input-output synapses and/or the increasing lateral inhibitory strength exerted by those cells that have undergone learning-related LTP.
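A minimal sketch of the proposed lateral inhibition follows. It assumes that each output cell is inhibited in proportion to the summed excitatory drive of the other cells and that activity cannot fall below zero; the inhibition gain of 0.5 and the function name are assumptions made for illustration.

```python
def lateral_inhibition(drives: list, inhibition: float = 0.5) -> list:
    """Net activation of each striatal cell after mutual inhibition.

    `drives` holds each cell's excitatory corticostriatal drive; the
    inhibition gain is an assumed value.
    """
    total = sum(drives)
    # Each cell is inhibited by the summed drive of all other cells.
    return [max(0.0, d - inhibition * (total - d)) for d in drives]


# Before learning: equal drive, both cells partially active.
before = lateral_inhibition([0.5, 0.5])   # [0.25, 0.25]
# After LTP at one cell and LTD at the other: the competitor is silenced.
after = lateral_inhibition([1.0, 0.25])   # [0.875, 0.0]
```

The sketch shows how strengthening one input-output connection can reduce the number of active cells even without further weakening of the competitor's own synapses.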

An O-R model of goal-directed learning

S-R and O-R learning may be modeled in a similar manner, except that for O-R learning, cortical inputs to striatal cells represent expected reward outcomes (figure 1B, OA and OB) rather than sensory stimuli. Cells coding for expected reward outcomes are found in orbitofrontal cortex (OFC), and in regions of the PFC and amygdala [11, 48, 103, 133, 137, 145, 153], i.e., in regions that strongly innervate striatal output cells [76, 95, 128, 138]. Striatal cells showing reward expectation coding and/or coding for the conjunction of reward expectation and movement conditions are found in the DLS, dorsomedial striatum and ventral striatum [39, 40, 61, 113, 126, 147], although there are data to suggest that the dorsomedial striatum plays a particularly important role in outcome-mediated learning and performance [164, 165].

Imagine that at a particular moment in time, a cortical cell representing the expectation of juice reward is activated (OB), and that the cell makes synaptic contact with a number of striatal output cells, each representing a different motor response (RA and RB). If activation of one of these output cells (say RA) leads to a reward-procuring behavioral response, DA neurons will become activated in response to the reward, and DA-mediated LTP will strengthen the active corticostriatal synapses (OB to RA; selective strengthening of this corticostriatal synapse is not depicted in the figure). However, if the cortical outcome expectation cell activates a striatal output cell corresponding to a behavior that does not lead to a reward-related event (say RB), DA neurons will not become activated, and during later stages of learning will show an inhibitory response at the time when the absent reward was expected to have occurred (see above). The currently-active synapse (in this case, OB to RB) will undergo LTD. Over the course of successive learning trials, cortical cells coding for the outcome representation will become increasingly likely to activate striatal output cells associated with the reward-procuring behavioral response, and less likely to activate other striatal output cells.

If, as depicted in figure 1B, an individual corticostriatal input coding for reward expectation makes synaptic contact with a number of striatal output cells, each coding for a different behavioral movement (or as described below, promoting sustained activity of frontal cells whose activation is necessary for an upcoming movement, via pallido-thalamo-cortical circuits), one would expect to observe striatal cells whose activation occurs only when a specific behavioral response occurs under a specific reward expectation condition. That is, conjunctive coding for reward expectation and movement. As noted above, output cells in the dorsolateral, dorsomedial, and ventral striatum do code for reward expectation [39, 40, 61, 113, 126, 147], movement [7, 72, 79, 126, 143], and importantly, for the conjunction of the two [40, 57, 61, 113, 126, 147].

Striatal cells coding the conjunction of reward and movement conditions are activated, for instance, prior to the execution of an arm movement - but only when the animal expects to receive food reward as a result of the movement [61]. These cells show very little activity when the animal expects to receive reward in the absence of an arm movement, or in relation to an arm movement for which it expects presentation of only an auditory stimulus signaling progression to the next trial. Other striatal neurons are activated in relation to the arm movement only when the animal expects the movement to result in presentation of the auditory stimulus rather than primary reward. Some cells code for expected outcomes based upon the relative value of the expected reward compared to alternative reward outcomes. Some cells coding for reward value show preferential activation in response to the more-preferred reward, while others respond preferentially to the less-preferred reward [39, 126]. Striatal cells may code for the specific reward expected during a trial (e.g., apple juice versus raspberry juice) [57], although in light of the studies cited above it is possible that such responses reflect the relative value of that expected outcome rather than its sensory properties. Thus, striatal cells often show robust neural responses only to the conjunction of specific movement and reward expectation conditions [40, 57, 61, 113, 126, 147].

The precise sources of reward expectation input to striatal cells remain to be elucidated [64, 134, 137]. However, such activity in striatal cells is unlikely to reflect inputs from midbrain DA cells. The sustained striatal neuronal response during reward anticipation has a pattern (gradual build up of sustained activity) and duration (often greater than 2 seconds) that closely mirrors that observed in reward-coding cells in the orbitofrontal cortex [137]. These response properties are dissimilar to those of midbrain DA neurons, which show activations of a much shorter-duration (lasting hundreds of milliseconds), tightly bound to the time of the reward or reward-predicting stimulus [137]. This suggests that the reward expectation activity seen in striatal cells is not caused by striatal DA release.

As noted above, representation of expected reward outcome is observed in cells of the OFC and PFC, as well as amygdala [11, 48, 103, 133, 137, 145]. Cells in these regions typically respond to reward expectation independent of the animal’s movement, suggesting that cortical reward expectation inputs to striatal cells are not yet conjoined to specific movement conditions. According to the model depicted in figure 1B, the conjunctive coding of expected reward outcomes and behavioral responses that are observed in striatal cells reflects cortical input activity representing reward expectation and striatal output activity corresponding to the behavioral response. The strength of this input-output connection for a given corticostriatal synapse depends upon its history of activation at the time of reward delivery and DA-mediated LTP.

An attribute of this type of O-R model of goal-directed learning is therefore that it operates according to principles that are nearly identical to those typically invoked to underlie S-R learning. That is to say, the models depicted in S-R figure 1A and O-R figure 1B operate by DA-mediated strengthening of currently-active corticostriatal synapses; they differ only with respect to the type of information transmitted to the striatum via cortico-striatal (and/or limbic-striatal) glutamatergic afferents. The O-R model is simple and parsimonious in this respect. In the following section, we examine how the model accounts for “goal devaluation”, a key characteristic of goal-directed learning and performance.

Goal devaluation and the O-R model

A fundamental criterion for designating a behavior ‘goal directed’ is that, after the behavior has been acquired, changes in the value of the expected outcome lead to changes in the rate at which the animal will perform a behavioral response to receive that outcome, i.e., goal-directed or outcome-mediated behaviors are sensitive to ‘goal devaluation’ (see [16] for review). Suppose, for example, that an animal is trained to perform a behavioral response (RA, left lever-press) for one reward outcome (OA, juice), and to perform a different behavioral response (RB, right lever-press) for another reward outcome (OB, raisin). One of these outcomes, say OA, is then devalued either by associating it with an aversive state such as LiCl-induced illness or by permitting the subject to consume OA to satiety during a free-feeding session just prior to a subsequent operant test session. During the test session, the subject is given the choice of performing either RA or RB. Importantly, because neither outcome is presented during this ‘extinction’ test session, changes in operant response rate cannot be due to changes in the reinforcing impact of the outcome but rather reflect a change in the animal’s expectation of outcome value. Behavioral sensitivity to outcome value is assessed by the relative reduction in performing the behavioral response that had been associated with the devalued (OA) compared to the still-valued (OB) outcome.

The O-R model depicted in figure 1B accounts for this devaluation effect. Imagine that during an initial training session, a single response manipulandum was operative and a single outcome was presented so that the animal could perform RA to receive OA. As a result of the first few OA presentations, the cortical representation of OA is active (the animal expects to receive OA) when it performs operant response RA corresponding to striatal RA. The operant response leads to reward (OA) delivery, causing a DA-mediated strengthening of the OA to RA synapse as described in the section above. As the strength of the OA to RA synapse becomes progressively stronger, the likelihood that expectation of OA will lead to activation of striatal RA (and performance of RA) increases. During a second session, the animal is permitted to perform RB to receive OB, and OB to RB synapses are similarly strengthened via DA-mediated plasticity. Subsequent devaluation of OA leads to decreased activation of the cortical OA representation, and therefore to a reduced likelihood of activating striatal RA and the corresponding motor act. The still-valued OB expectation continues to generate normal RB output.
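The account of devaluation given above can be made concrete with a short sketch. It assumes that striatal drive is the product of the cortical outcome activation and the O-R synaptic weight, and that devaluation lowers the activation of the cortical OA representation from 1.0 to 0.2. These values, the multiplicative form, and the function names are illustrative assumptions.

```python
def train_session(n_trials: int, weight: float = 0.1,
                  ltp_rate: float = 0.1, w_max: float = 1.0) -> float:
    """Strengthen one O-R synapse over a session of rewarded trials."""
    for _ in range(n_trials):
        # Each rewarded trial produces DA-mediated LTP, saturating at w_max.
        weight += ltp_rate * (w_max - weight)
    return weight


def response_drive(outcome_activation: float, weight: float) -> float:
    """Drive onto the striatal output cell from the cortical outcome cell."""
    return outcome_activation * weight


# Separate sessions: RA for OA, then RB for OB.
w_oa_ra = train_session(30)
w_ob_rb = train_session(30)

# Extinction test after devaluation of OA. The synaptic weights are
# unchanged; only the activation of the cortical OA representation is reduced.
drive_ra_before = response_drive(1.0, w_oa_ra)
drive_ra_after = response_drive(0.2, w_oa_ra)
drive_rb_after = response_drive(1.0, w_ob_rb)
```

In this sketch the reduced drive onto RA arises without any change in the OA to RA synapse, which is consistent with devaluation effects being observed in an extinction test where no reinforcement is delivered.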

After devaluation, it is the value of OA, rather than its expectation, that has been reduced. According to the model, then, corticostriatal neurons representing expected outcomes must be capable of coding not only outcome identity, but also outcome value. Cells coding for the relative magnitude and/or preference (i.e., the ‘value’) of an expected outcome are seen in the OFC [123, 129, 148], PFC [11, 154], and amygdala [103, 104, 114, 117], and as noted above, cells in these regions project strongly to the striatum. If the activity of outcome value-coding cells within one or more of these anatomical regions were to modulate the likelihood (rate) of goal-directed behavioral responding via their corticostriatal projections, lesion or inactivation of the cells should render behavioral response expression insensitive to changes in the value (e.g., devaluation) of the expected outcome. Disruption of activity of cells in the basolateral amygdala impairs behavioral sensitivity to outcome devaluation [17, 37, 58, 101, 109] but see [116]. However, cells in this region appear to critically mediate changes in the value that an organism assigns to an expected outcome (e.g., devaluation), rather than the effect of this changed outcome on subsequent behavioral expression, i.e., if the BLA is inactivated after outcome devaluation has occurred, behavioral expression remains sensitive to the outcome devaluation [155]. Outcome value-coding cells depicted in the O-R model above should not only undergo changes in output as a result of changed value, but should also critically mediate the effect of this changed outcome expectation value on goal-directed behavioral expression.

In contrast, intact OFC activity does appear to be critical in order for outcome devaluation to reduce the likelihood of goal-directed behavioral response expression [49, 70, 115]. Involvement of cells in the OFC may be restricted, however, to modulating expression of simple goal-directed approach responses rather than operant responses such as lever presses [108]. While cells in anatomical regions that innervate the striatum code for outcome value, as required by the O-R model, the precise source(s) of outcome value-code input that mediate(s) the effects of altered outcome value on operant response expression remain(s) to be determined.

Limitation of the O-R model: acquisition of multiple interspersed O-R associations

According to the O-R model, what would happen if, when a particular cortical outcome representation (say OB) activates a striatal output cell coding movement (say RA), the reward outcome is different from what was expected? (An early stage of learning is assumed here, i.e., a stage when DA neurons become activated by the primary reward, which is not yet fully-predicted on the basis of earlier events.) If the reward value is less than expected, the dopamine neuron should be inhibited rather than activated [146], weakening the connection between the outcome expectation input and the striatal output associated with the movement. On the other hand, the model makes the prediction that if the subject has a cortically-represented expectation of a raisin reward (figure 1B, say OB), which drives activation of a striatal output cell (say RA) leading to a lever press that results in the delivery of an equally- or more-preferred juice reward, DA-mediated strengthening of the currently-active corticostriatal synapses (OB to RA) would in fact lead to an increased likelihood of the lever press, even though the raisin reward was expected and the juice reward was delivered.

Further, imagine that the expected raisin (OB) and juice (OA) rewards are equally valued, and the subject must learn to press left for the raisin (figure 1B, OB to RB) and press right for the juice (OA to RA), and that left-raisin and right-juice trials are interspersed during the acquisition sessions. The striatal O-R mechanism should be unable to carry out this learning of the two distinct response-outcome associations (as assessed subsequently by devaluation of one outcome prior to an extinction test session), for the phasic DA response does not depend upon the stimulus characteristics of the reward [134], but on the reward’s value compared to that which was expected [45, 146]. Therefore, in the scenario above, during early stages of learning, even if the animal expects juice reward (OA) and incorrectly presses the left lever (RB) delivering the raisin reward, DA neurons should be activated by the equally-valued raisin reward, strengthening the incorrect OA to RB synapse.

An O-R learning system such as that depicted in figure 1B should therefore be unable to learn to perform two distinct behavioral responses for two distinct outcomes when the two distinct response-outcome contingencies are in effect during the same behavioral learning session. On the other hand, as described above, such a system can learn multiple distinct O-R associations so long as each O-R association is acquired during a separate session. According to the model in 1B, separate O-R training sessions would be necessary to acquire each O-R association during early stages of learning, i.e., until the synaptic connections between O inputs and their corresponding R outputs are sufficiently strengthened to permit OA to activate RA with a higher probability than RB. At that point, even with interspersed trials, further strengthening of individual O-R associations through DA-mediated reinforcement should occur. Note, in contrast, that learning distinct S-R associations (in figure 1A, SA-RA and SB-RB) should not be problematic, even with interspersed trials early in learning, for reward presentation depends upon activation of the correct S-R synapses. If the animal performs a particular response to an incorrect discriminative stimulus, no reinforcement is delivered (task contingencies ensure that reward is delivered only following SA to RA or SB to RB, but never following SA to RB or SB to RA). If the reinforcer were shifted to a different but equally-valued reinforcer during task acquisition, discriminative S-R learning should be unimpaired for the phasic DA response will continue to occur following, and only following, the correct behavioral response to the discriminative stimulus.
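The contrast between interspersed S-R and O-R training can be illustrated with a deterministic sketch. It assumes that, early in learning, every input-response pairing occurs equally often, and that phasic DA accompanies any delivered reward that is not yet fully predicted. The update rule, rate constants, and names are hypothetical.

```python
def update(weight: float, phasic_da: bool,
           ltp_rate: float = 0.1, ltd_rate: float = 0.05) -> float:
    """DA-gated update of an active corticostriatal synapse (assumed rates)."""
    if phasic_da:
        return weight + ltp_rate * (1.0 - weight)
    return weight * (1.0 - ltd_rate)


def run_interspersed(rewarded, n_blocks: int = 50) -> dict:
    """Train all four input->response synapses with interspersed trials.

    `rewarded(inp, resp)` returns True when the pairing is followed by a
    reward, and hence by a phasic DA signal.
    """
    weights = {(i, r): 0.5 for i in "AB" for r in "AB"}
    for _ in range(n_blocks):
        # Every input-response pairing is sampled once per block.
        for pair in weights:
            weights[pair] = update(weights[pair], rewarded(*pair))
    return weights


# S-R task: reward follows only the correct response to each stimulus.
sr_weights = run_interspersed(lambda s, r: s == r)
# O-R task: either lever delivers an equally valued reward, so DA is
# released whatever outcome was expected when the response was made.
or_weights = run_interspersed(lambda o, r: True)
```

Under these assumptions the S-R weights become selective (SA to RA exceeds SA to RB), whereas the O-R weights for correct and incorrect pairings are strengthened equally, so no response-outcome selectivity emerges.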

Learning to respond differently for two distinct but equally-valued rewards might be accomplished by a neural system for which a match between the stimulus properties of the expected and delivered outcomes produces synaptic strengthening between cells representing the expected outcome (e.g., raisin) and those representing spatial location of the manipulandum (left or right side of the cage). (One might imagine that hippocampal systems, which contribute to spatial as opposed to behavioral response learning [110, 111], might be able to acquire the appropriate associations under these conditions.) If, however, the animal were required to perform a joint flexion versus extension upon the same (spatially-located) manipulandum for an equally-valued raisin versus juice reward with interspersed R1-O1 and R2-O2 trials, distinct response-outcome associations should be difficult or impossible to acquire. Yet such R1-O1 and R2-O2 associations are acquired even when R1 and R2 are directed toward a response manipulandum in a fixed spatial location, and with interspersed trials [43].

The O-R model, then, may underlie the acquisition of O-R associations acquired during independent learning episodes and the expression of goal-directed behavior, even for multiple distinct outcomes. However, an additional mechanism is needed in order to acquire multiple distinct O-R associations with interspersed trials. The additional mechanism need operate only during early stages of learning (to ensure that the correct response is selected during activation of a corresponding outcome representation). So long as the organism performs the correct response with the corresponding outcome representation active, DA-mediated strengthening of active O-R synapses will eventually permit the O representation to select the correct striatal R representation. Because the prelimbic cortex critically mediates sensitivity to outcome devaluation only during early stages of training [16, 107], it is tempting to speculate that this region may play a role in circuits that guide early-stage activation of outcome representations and response selection, while correct corticostriatal O-R connections are being established.

Implications of putamen/DLS movement codes for striatal learning

In the S-R and O-R models above, movement coding in striatal cells is assumed to reflect the behavioral response associated with striatal output, rather than movement-related inputs to the cell. However, many striatal neurons do receive movement-related inputs. This section describes movement-related responses of cells in the rat DLS and monkey putamen, both a) pre-movement activity of the cells and b) activity beginning approximately at the time of movement initiation, and considers the significance of both types of striatal movement coding for learning.

DLS responses during movement

Primary sensory and motor areas of the cortex project topographically onto the DLS of rats and lateral putamen of primates. Cortical regions representing the head and face project to a ventromedial region, those representing hind limbs project to a more dorsal zone, and those representing forelimbs project to a still more dorsal and lateral region of the DLS/putamen [25, 46, 56, 85, 95, 144]. Cells in these regions of the striatum often show neuronal activation in response to the movement of specific joints of the body. The body part represented by these striatal movement codes corresponds to the body part represented by the sensorimotor cortical afferents to the region [4, 31]. It therefore appears that movement-tied activations in these striatal regions reflect the influence of their cortical afferents.

Striatal movement codes that occur around the time of the movement itself [4, 61, 81] are likely to reflect cortical input information regarding a movement that has already been initiated. Most cells that are activated around the time of movement become active only after the appearance of task-related EMG activity [80]. This delay in movement-tied activity in putamen cells is consistent with the nature of their motor cortical inputs. Primary motor cortical (M1) inputs to the putamen include both direct projections, and axon collaterals branching from M1 axons as they descend to the brain stem and/or spinal cord [100, 112]. Cortical cells projecting directly to the putamen show movement-related activity that occurs considerably later than that seen in pyramidal tract neurons within the same frontal motor regions [19]. Thus both direct and collateral inputs from the motor cortex to the putamen are likely to convey information about the movement that has already been initiated rather than the next movement to be selected.

Projections from regions of M1 representing a given body part converge with those from primary somatosensory cortex representing the same body part onto discrete regions of the putamen [47]. These somatosensory inputs include those originating in cortical area 3a [46] which contains cells coding for muscle sensation [167] and area 3b [46] which contains cells coding for the direction of movement of tactile stimuli across a particular body part (e.g., digit) [42]. There is a convergence of cortical projections from areas 3a and 3b to subregions of the putamen that receive inputs from M1 areas representing the same body part [46]. Such somatosensory inputs are likely to account for the observation that a large proportion (40–50%) of putamen cells coding for a particular limb movement are activated even during passive movement of the limb [9, 41].

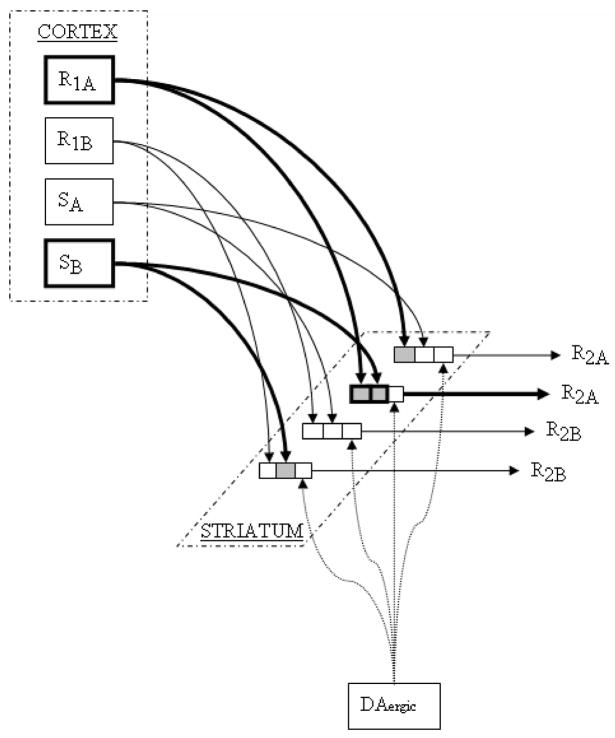

Convergence of a) motor copies via corticospinal collaterals and late-firing direct cortical projections with b) corticostriatal somatosensory information coding muscle activation and tactile results of the just-initiated movement would permit the striatal output cell to become selectively activated just after the initiation of a well-designated body movement. This type of conjunctive somatosensory-movement code would serve well as a discriminative stimulus for generating the next segment of a reinforced movement sequence (figure 2). If combinations of motor and somatosensory inputs designate the just-performed behavioral response (figure 2, R1A and SB), and the output of the cell contributes to selection of the to-be-performed movement (one of the two R2A cells depicted in figure 2), then a DA-mediated strengthening of these input-output connections following unsignaled primary or conditioned reward would increase the likelihood that the first movement (R1A/SB) is followed by the second (R2A). Such a cell would be expected to show single-unit activity correlated with the first movement (for the input signals coding the first movement would be necessary in order to activate the cell) but only when followed by the second movement (the output consequence of cell firing). Striatal cells receiving specific input coding for a tactile stimulus to a particular body part (say SB) may show activation associated with that stimulus only when followed by a particular movement (R2A). These types of neuronal coding are observed in striatal cells [82, 87, 151]. According to the model, the proportion of cells showing this type of conjunctive coding should grow as a function of the number of trials for which that particular sensory/motor combination or movement sequence has been rewarded.

Figure 2.

Corticostriatal inputs from primary sensorimotor cortex carry somatosensory (SB) and movement-related (R1A) inputs representing the just-initiated behavior to striatal output cells corresponding with the to-be-initiated movement (one of the two R2A cells). If the animal initiates a behavioral response leading to reward delivery, the currently active synapses (SB/R1A to R2A) are strengthened, increasing the likelihood that the reward-procuring behavior (R2A) will occur in response to the same somatosensory and motor input conditions (SB/R1A) in the future.

The acquisition and performance of learned movement sequences are severely disrupted by lesions or temporary inactivation of the DLS [38, 75], and by striatal DA depletion [93], consistent with the notion that the establishment of striatal sequence coding requires DA-mediated strengthening of input-output connections corresponding to consecutive segments of a behavioral sequence. According to this model, DA loss (or pharmacological disruption during acquisition) should prevent the emergence of striatal units displaying sequence coding (perhaps with the exception of cells for which maximally strong input-output connections may be innately wired).
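The two predictions of this section (that the proportion of conjunctively coding cells grows with the number of rewarded trials, and that DA loss prevents their emergence) can be made concrete with a toy population. This is only a sketch under stated assumptions: each model cell sums a movement-related (R1A) and a somatosensory (SB) input and fires when the sum reaches a threshold, initial synaptic strengths are spread evenly across cells, the output cell is taken to be active on every rewarded trial, and each rewarded trial adds a fixed DA-scaled increment to both active synapses. The function name and all parameter values are hypothetical.

```python
def fraction_sequence_coding(n_rewarded_trials, da=1.0, n_cells=100,
                             lr=0.02, threshold=1.0):
    """Fraction of model cells driven to threshold by the R1A + SB conjunction."""
    count = 0
    for k in range(n_cells):
        # Assumed spread of initial strengths; each synapse starts in [0, 0.5).
        w_move = w_touch = 0.5 * k / n_cells
        for _ in range(n_rewarded_trials):
            # Both inputs are active on each rewarded trial; DA gates the change.
            w_move += lr * da
            w_touch += lr * da
        if w_move + w_touch >= threshold:  # conjunction now activates the cell
            count += 1
    return count / n_cells


for n in (0, 10, 20, 30):
    print(n, fraction_sequence_coding(n), fraction_sequence_coding(n, da=0.0))
```

In this sketch the fraction of cells responding to the conjunction rises with the number of rewarded trials when the DA signal is intact, and stays at zero when `da` is set to 0, which stands in for DA depletion or receptor blockade during acquisition.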

DLS responses during movement preparation

Many putamen cells, particularly within anterior regions of the putamen [8], become activated during periods leading up to the movement and ending with the onset of the movement [4, 7, 79, 80, 136]. Often these activation responses are observed during the delay between the presentation of an instruction cue designating movement direction and a trigger stimulus eliciting movement. These striatal activations typically correspond to the direction of the animal’s upcoming movement [4, 8], and are therefore unlikely to reflect inputs representing the just-performed movement. Cells in M1 and the supplementary motor area (SMA), which strongly innervate output cells of the putamen [6, 56], show movement preparatory activity with similar characteristics [7], and with onset of activation occurring approximately 100–200 ms before similar activations are seen in putamen cells [8]. Striatal movement coding during these pre-movement periods is therefore likely to reflect the pre-movement activity of frontal cortical inputs to the striatum. While particular focus is placed here upon movement preparation coding in putamen cells, such coding is also seen in cells within the caudate and ventral striatum [14, 60, 80, 149].

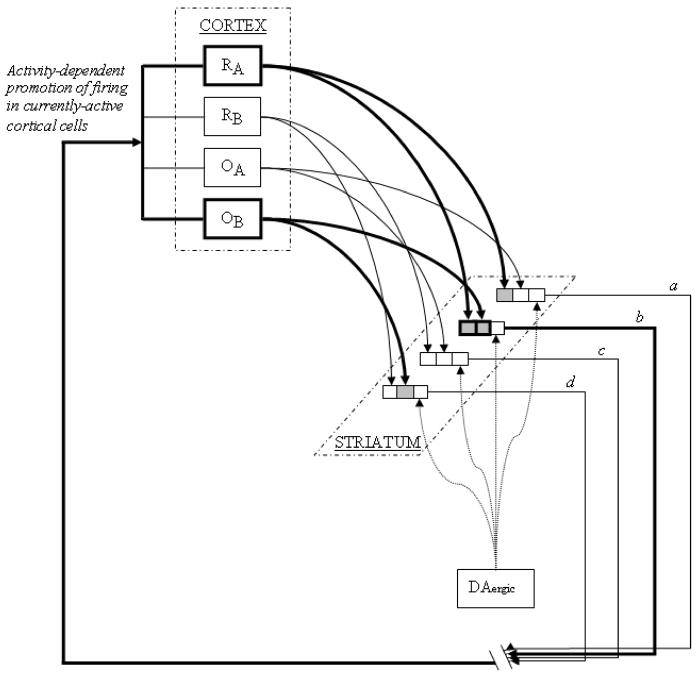

An important output consequence of striatal activity during pre-movement delay periods, via basal ganglia outputs directed to the frontal cortex [3, 6, 127], may be to maintain ongoing neuronal activity in the very cells that send corticostriatal inputs to the striatum [15, 127], i.e., those cortical cells coding movement direction during the pre-movement delay period (see figure 3). As noted above, cortical inputs carrying these movement-direction codes to putamen cells (RA, RB) are likely to originate in M1 and the SMA [8]. Putamen cells (a, b, c, d) send projections to internal segments of the globus pallidus (GPi) which project to specific thalamic nuclei (including VLo and VAmc), which, in turn, project to M1 and SMA [1, 6, 62, 124]. Point-to-point closed loop circuitry is unnecessary for striatal-mediated persistent activity in frontal cortical cells so long as thalamocortical input to M1 and SMA excites (or prevents inhibition of) those cortical cells that are already active, i.e., maintains the activity of currently-active sets of cortical cells [127]. DA-mediated strengthening of currently-active corticostriatal synapses (figure 3, RA/OB to b) would be expected to increase the future likelihood that the cortical movement preparation signal will lead to striatal output activation. This may permit persistent reverberatory activity of the cortical cells coding movement direction until the time when the trigger stimulus occasions movement initiation. (It has been suggested that movement-related activity of frontal cortical cells during pre-movement delay periods is terminated by thalamocortical signals reflecting corollary discharge that corresponds to movement initiation [26, 127, 163].)

Figure 3.

Cortical M1 and SMA inputs coding the direction of an upcoming limb movement (RA, RB) and cortical cells coding expected reward outcome (OA, OB) send converging projections to striatal output cells (a, b, c, d) which, via striato-pallido-thalamo-cortical pathways promote persistence of activity in the cortical cells. If the animal initiates a behavioral response leading to a reward or reward-predicting cue, the currently active synapses (RA/OB to b) are strengthened by DA-mediated LTP, increasing the likelihood that the same cortical inputs will produce reverberatory activity in the circuit in the future. Once the relevant corticostriatal synapses have been strengthened by DA responses to the reward-predicting cue (e.g., a trigger stimulus associated with response-contingent reward delivery), the likelihood of future reverberatory activity through those synapses will be increased even if future inputs arrive earlier in the trial (e.g., seconds prior to the trigger stimulus).

Adding plausibility to this type of model, thalamostriatal inputs appear to generate ‘upstates’ in striatal cells [127], i.e., stable states of membrane depolarization just below action potential threshold that are necessary in order for incoming glutamate signals to generate action potentials in the striatal cell [105, 161]. Therefore, after the striatal output cell has been activated by its cortical inputs, driving its pallido-thalamo-cortical circuit, projections from thalamus to striatum may promote the maintenance of the striatal upstate until the next corticostriatal signal arrives. Selective facilitation of the activity of currently-active cortical cells may similarly result from thalamocortical promotion of their upstate maintenance [127].

A similar reverberatory mechanism may maintain the activity of frontal cortical cells coding reward expectation [11, 48, 133, 137, 145], cells that, as noted above, are found within frontal regions that project widely to the striatum [95, 128, 138]. Striatal firing to the conjunction of movement and reward expectation conditions during these pre-movement periods [40, 57, 61, 126, 147] is assumed to reflect convergent input from cortical cells representing reward expectation and those representing upcoming movement direction onto individual striatal output neurons. The maintenance of reverberatory activity by striatal cells receiving convergent reward expectation (figure 3, OB) and movement direction (RA) inputs may be of particular significance, for conjunctive coding for reward expectation and movement is observed much more frequently in striatal cells activated during movement preparation compared to those activated during the movement itself [57, 61].

While the focus here has been on cells coding reward expectation/arm movement conjunctions in the putamen, caudate neurons coding for visual saccades show pre-movement (i.e. pre-saccade) activity [60] as well as conjunctive coding of saccade direction and reward expectation [69, 75, 86] similar to that described for arm movements above. The output of these cells corresponds to parameters of the upcoming saccade [69]. DA-mediated corticostriatal synapse-strengthening and promotion of reverberatory corticostriatal activity, in a manner similar to that depicted in figure 3, may underlie the sustained pre-movement activity of these saccade-related cells and the ability of the subject to make rapid saccades in a particular direction even with a delay between the instruction cue signaling movement direction and the trigger stimulus occasioning response initiation.

As noted above, the observation of cortical and striatal preparatory movement activity typically comes from tasks in which a visual instruction stimulus designates the correct movement, a variable delay is introduced, and then a trigger stimulus for movement initiation is presented [14, 60, 80, 149]. Frontal cortical and striatal pre-movement activity typically terminates when the trigger stimulus is presented [80], often several seconds prior to presentation of the primary reward. While the activity of striatal cells coding movement direction would have terminated long before a phasic dopamine response to primary reward, the striatal cell typically is still active at the time of the trigger stimulus [80]; the trigger stimulus has been shown to elicit a phasic DA response under similar behavioral paradigms [89, 135], presumably because the trigger stimulus is a reliable predictor of primary reward delivery.

To the extent that the time intervals between trigger stimulus and reward delivery are narrowly distributed, the DA response should shift from the primary reward to the trigger stimulus; i.e., the DA response to food itself is attenuated to the extent that it is well predicted by the earlier sensory stimulus [45]. To the extent that these time intervals are widely distributed (e.g., with trigger-to-reward intervals ranging from very short to very long), the shift in the DA response from the primary reward to the trigger should be reduced. DA-mediated strengthening of the input-output connections postulated here to underlie the sustained activity of cortical movement-coding cells depends upon a phasic DA response to the trigger stimulus. Without a DA response to this reward-related stimulus, strengthening of the input-output connections between cortical movement preparation input codes and striatal outputs serving to maintain the activity of these cortical inputs should not be observed. One would therefore expect that in a task with trigger-to-reward intervals that vary greatly (say from 0.5 sec to 10 sec with a flat distribution of delay probabilities), sustained preparatory movement activity should be reduced not only in striatal cells, but also in frontal cortical cells that normally show activity during the instruction-to-trigger delay period. Sustained preparatory movement activity in frontal cortical cells should also be reduced by intrastriatal infusion of dopamine antagonists or temporary striatal inactivation during task acquisition.

In this model, a DA reward response to a trigger cue after a pre-movement delay strengthens currently-active input-output synapses in the striatum (figure 3, RA/OB to b), giving persistence to the reverberatory activity through these synapses. However, given that the DA-eliciting trigger cue only occurs at the end of the delay period, how does it promote reverberatory activity, i.e., sustained movement-direction (and reward expectation) coding, well in advance of the trigger? Currently-active corticostriatal synapses will be strengthened by DA only at the time of the trigger stimulus, but the resulting facilitation of transmission across these synapses will promote input-output transmission regardless of when future inputs arrive, i.e., even if the future arrivals of the corticostriatal inputs occur well before the trigger stimulus. It would therefore be predicted that during early stages of training, striatal cells should show pre-movement activity in close proximity to the trigger stimulus, and only after a number of reinforced trials will earlier-onset pre-movement activation (between instruction cue and trigger stimulus) be observed in striatal cells.

According to this model, however, there should be a limit on the maximum duration possible for such pre-movement activity in striatal cells. While corticostriatal input at the time of the reward-related trigger should lead to increased strength of the relevant input-output synapses, corticostriatal inputs through these strengthened synapses that, during subsequent trials, are initiated at an earlier time (with respect to the trigger) drive corticostriatal activity in the absence of a phasic DA response; this should cause weakening of the relevant input-output synapses. Therefore, there is likely to be a limit on the duration of time that such reverberatory activity can be sustained prior to presentation of the trigger, and perhaps a limit on the duration of time between instruction and trigger for which the animal can prepare behaviorally for, or as seen below, withhold, the instructed movement.
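The trade-off described in the two preceding paragraphs, with potentiation at the trigger and weakening during DA-free reverberation, can be sketched numerically. This is a toy illustration rather than a fitted model, and every quantitative choice in it is an assumption: the duration of pre-trigger activity is taken to be proportional to synaptic strength (capped by the instruction-to-trigger delay), each trial adds a fixed DA-dependent increment at the trigger, and each second of activity without DA subtracts a fixed decrement. The function name and parameters (`a`, `b`, `gain`, `w_max`, `da_at_trigger`) are hypothetical.

```python
def sustained_duration(delay_s, da_at_trigger=1.0, n_trials=500,
                       a=0.05, b=0.02, gain=2.0, w_max=5.0):
    """Seconds of pre-trigger activity after training (toy model)."""
    w = 0.0  # strength of the relevant corticostriatal synapse
    for _ in range(n_trials):
        # Assumed: stronger synapses sustain reverberation for longer,
        # up to the full instruction-to-trigger delay.
        t_active = min(delay_s, gain * w)
        # Strengthening at the trigger (needs a DA burst) minus weakening
        # accumulated during DA-free activity earlier in the delay.
        w += a * da_at_trigger - b * t_active
        w = min(max(w, 0.0), w_max)
    return min(delay_s, gain * w)


for delay in (1.0, 2.0, 5.0, 10.0):
    print(delay, round(sustained_duration(delay), 3))
```

With these illustrative parameters, activity fills the whole delay when the delay is short, but for long delays it settles where potentiation and weakening balance (at `a / b` = 2.5 s here), so extending the delay further does not extend the sustained activity. Setting `da_at_trigger=0.0`, as might apply when the trigger is a poor predictor of reward timing, leaves no sustained activity at all.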

In the model described above, the net result of DA-mediated strengthening of corticostriatal synapses coding for movement direction is to allow movement-direction coding cells in the frontal cortex to persist until the trigger stimulus signals the moment of “permissible” response initiation. However, the same mechanism may be applied to cortical signal generators (R1B) that act to inhibit, rather than permit, the movement [24]. In the instruction-trigger paradigm, successful performance requires withholding the movement until the trigger stimulus is presented. DA-mediated strengthening of currently active input-output connections would promote the acquisition of persistent activity in frontal cortical cells whose sustained activity is necessary in order to restrain movement during the instruction-to-trigger delay period. Indeed, very high mean rates of overall firing in M1 may be observed in the absence of behavioral movement [51]. Taha et al. make the insightful observation that greater response suppression is required during trials in which a premature movement must be withheld in order to obtain a reward compared to trials in which little reward is available, consistent with the frequently observed activity of striatal cells that are activated preferentially during pre-movement periods when the movement is expected to result in the delivery of reward [39, 113].

In summary, it is proposed that pre-movement activity of striatal cells may serve to maintain ongoing cortico-striatal basal ganglia circuit activity between presentation of an instruction cue and a trigger stimulus [15], and that DA-mediated strengthening of currently active synapses following presentation of the trigger increases the strength of these synapses, permitting reverberatory activity to occur. The behavioral consequence of this persistent activity may be to maintain activity in movement direction-coding cells in the cortex until the time that the movement trigger is presented.

Conclusion

This paper builds upon previous models of DA-mediated learning in which a DA reward signal strengthens currently-active input-output synapses of the striatum [22, 99, 131, 156, 158]. Current data support the notion that while midbrain DA neurons show phasic activation onset responses to both reward-predicting and salient non-reward events, activation responses to reward-predicting stimuli are sustained for several hundreds of milliseconds beyond those elicited by salient non-rewards. The rapid onset of the phasic DA response may be critical to producing selective strengthening of input-output synapses in the striatum associated with the successful reward-procuring behavior. Similar models involving DA-mediated plasticity in corticostriatal synapses may be employed for both stimulus-response and response-outcome learning, with the key difference being the nature of the corticostriatal input information (stimulus- versus outcome-related) that becomes tied to movement-related striatal outputs. It is suggested that DA-mediated strengthening of corticostriatal synapses in DLS regions receiving afferents from primary sensorimotor cortex serves to bind inputs representing the previously-emitted movement segment to striatal outputs contributing to the selection of the next movement segment in a behavioral sequence.

Within the striatum, more generally, output neurons often show pre-movement activation that requires the conjunction of upcoming movement direction and reward expectation conditions. It is suggested that this sustained neuronal activity reflects convergent inputs from distinct regions of the frontal cortex that code for movement direction and reward expectancy independently. DA-mediated strengthening of active corticostriatal synapses carrying these information codes occurs when the animal encounters a reward-related stimulus. The strengthening of these corticostriatal input-output connections, via downstream basal ganglia consequences of striatal output activity, promotes the maintenance of activity in the very cortical cells that drive corticostriatal input, leading to the establishment of sustained reverberatory loops that permit cortical movement-related cells to maintain activity until the appropriate time of movement initiation.

Acknowledgments

I gratefully acknowledge Rosa Isabel Caamaño Tubío who created the figures for this paper.

References

- 1.Akkal D, Dum RP, Strick PL. Supplementary motor area and presupplementary motor area: targets of basal ganglia and cerebellar output. J Neurosci. 2007;27(40):10659–73. doi: 10.1523/JNEUROSCI.3134-07.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Akopian G, et al. Functional state of corticostriatal synapses determines their expression of short- and long-term plasticity. Synapse. 2000;38(3):271–80. doi: 10.1002/1098-2396(20001201)38:3<271::AID-SYN6>3.0.CO;2-A. [DOI] [PubMed] [Google Scholar]

- 3.Albin RL, Young AB, Penney JB. The functional anatomy of basal ganglia disorders. Trends Neurosci. 1989;12(10):366–75. doi: 10.1016/0166-2236(89)90074-x. [DOI] [PubMed] [Google Scholar]

- 4.Alexander GE. Selective neuronal discharge in monkey putamen reflects intended direction of planned limb movements. Exp Brain Res. 1987;67(3):623–34. doi: 10.1007/BF00247293. [DOI] [PubMed] [Google Scholar]

- 5.Alexander GE. Basal ganglia-thalamocortical circuits: their role in control of movements. J Clin Neurophysiol. 1994;11(4):420–31. [PubMed] [Google Scholar]

- 6.Alexander GE, Crutcher MD. Functional architecture of basal ganglia circuits: neural substrates of parallel processing. Trends Neurosci. 1990;13(7):266–71. doi: 10.1016/0166-2236(90)90107-l. [DOI] [PubMed] [Google Scholar]

- 7.Alexander GE, Crutcher MD. Neural representations of the target (goal) of visually guided arm movements in three motor areas of the monkey. J Neurophysiol. 1990;64(1):164–78. doi: 10.1152/jn.1990.64.1.164. [DOI] [PubMed] [Google Scholar]

- 8.Alexander GE, Crutcher MD. Preparation for movement: neural representations of intended direction in three motor areas of the monkey. J Neurophysiol. 1990;64(1):133–50. doi: 10.1152/jn.1990.64.1.133. [DOI] [PubMed] [Google Scholar]

- 9.Alexander GE, DeLong MR. Microstimulation of the primate neostriatum. II. Somatotopic organization of striatal microexcitable zones and their relation to neuronal response properties. J Neurophysiol. 1985;53(6):1417–30. doi: 10.1152/jn.1985.53.6.1417. [DOI] [PubMed] [Google Scholar]

- 10.Alexander GE, DeLong MR, Strick PL. Parallel organization of functionally segregated circuits linking basal ganglia and cortex. Annu Rev Neurosci. 1986;9:357–81. doi: 10.1146/annurev.ne.09.030186.002041. [DOI] [PubMed] [Google Scholar]

- 11.Amemori K, Sawaguchi T. Contrasting effects of reward expectation on sensory and motor memories in primate prefrontal neurons. Cereb Cortex. 2006;16(7):1002–15. doi: 10.1093/cercor/bhj042. [DOI] [PubMed] [Google Scholar]

- 12.Aosaki T, Graybiel AM, Kimura M. Effect of the nigrostriatal dopamine system on acquired neural responses in the striatum of behaving monkeys. Science. 1994;265(5170):412–5. doi: 10.1126/science.8023166. [DOI] [PubMed] [Google Scholar]

- 13.Aosaki T, Kimura M, Graybiel AM. Temporal and spatial characteristics of tonically active neurons of the primate’s striatum. J Neurophysiol. 1995;73(3):1234–52. doi: 10.1152/jn.1995.73.3.1234. [DOI] [PubMed] [Google Scholar]

- 14.Apicella P, et al. Neuronal activity in monkey striatum related to the expectation of predictable environmental events. Journal of Neurophysiology. 1992;68(3):945–60. doi: 10.1152/jn.1992.68.3.945. [DOI] [PubMed] [Google Scholar]

- 15.Ashby FG, et al. FROST: a distributed neurocomputational model of working memory maintenance. J Cogn Neurosci. 2005;17(11):1728–43. doi: 10.1162/089892905774589271. [DOI] [PubMed] [Google Scholar]

- 16.Balleine BW, Dickinson A. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37(4–5):407–419. doi: 10.1016/s0028-3908(98)00033-1. [DOI] [PubMed] [Google Scholar]

- 17.Balleine BW, Killcross AS, Dickinson A. The effect of lesions of the basolateral amygdala on instrumental conditioning. J Neurosci. 2003;23(2):666–75. doi: 10.1523/JNEUROSCI.23-02-00666.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Barnes TD, et al. Activity of striatal neurons reflects dynamic encoding and recoding of procedural memories. Nature. 2005;437(7062):1158–61. doi: 10.1038/nature04053. [DOI] [PubMed] [Google Scholar]

- 19.Bauswein E, Fromm C, Preuss A. Corticostriatal cells in comparison with pyramidal tract neurons: contrasting properties in the behaving monkey. Brain Res. 1989;493(1):198–203. doi: 10.1016/0006-8993(89)91018-4. [DOI] [PubMed] [Google Scholar]

- 20.Beckstead RM, V, Domesick B, Nauta WJ. Efferent connections of the substantia nigra and ventral tegmental area in the rat. Brain Research. 1979;175(2):191–217. doi: 10.1016/0006-8993(79)91001-1. [DOI] [PubMed] [Google Scholar]

- 21.Beninger RJ. The role of dopamine in locomotor activity and learning. Brain Res. 1983;287(2):173–96. doi: 10.1016/0165-0173(83)90038-3. [DOI] [PubMed] [Google Scholar]

- 22.Beninger RJ, Miller R. Dopamine D1-like receptors and reward-related incentive learning. Neurosci Biobehav Rev. 1998;22(2):335–45. doi: 10.1016/s0149-7634(97)00019-5. [DOI] [PubMed] [Google Scholar]

- 23.Berridge KC. Food reward: brain substrates of wanting and liking. Neuroscience & Biobehavioral Reviews. 1996;20(1):1–25. doi: 10.1016/0149-7634(95)00033-b. [DOI] [PubMed] [Google Scholar]

- 24.Brotchie P, Iansek R, Horne MK. Motor function of the monkey globus pallidus. 2. Cognitive aspects of movement and phasic neuronal activity. Brain. 1991;114( Pt 4):1685–702. doi: 10.1093/brain/114.4.1685. [DOI] [PubMed] [Google Scholar]

- 25.Brown LL, Sharp FR. Metabolic mapping of rat striatum: somatotopic organization of sensorimotor activity. Brain Res. 1995;686(2):207–22. doi: 10.1016/0006-8993(95)00457-2. [DOI] [PubMed] [Google Scholar]

- 26.Brunel N, Wang XJ. Effects of neuromodulation in a cortical network model of object working memory dominated by recurrent inhibition. J Comput Neurosci. 2001;11(1):63–85. doi: 10.1023/a:1011204814320. [DOI] [PubMed] [Google Scholar]

- 27.Calabresi P, et al. Dopamine and cAMP-regulated phosphoprotein 32 kDa controls both striatal long-term depression and long-term potentiation, opposing forms of synaptic plasticity. J Neurosci. 2000;20(22):8443–51. doi: 10.1523/JNEUROSCI.20-22-08443.2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Calabresi P, et al. Long-term synaptic depression in the striatum: physiological and pharmacological characterization. J Neurosci. 1992;12(11):4224–33. doi: 10.1523/JNEUROSCI.12-11-04224.1992. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Calabresi P, et al. Dopamine-mediated regulation of corticostriatal synaptic plasticity. Trends Neurosci. 2007;30(5):211–9. doi: 10.1016/j.tins.2007.03.001. [DOI] [PubMed] [Google Scholar]

- 30.Calabresi P, et al. Role of dopamine receptors in the short- and long-term regulation of corticostriatal transmission. Nihon Shinkei Seishin Yakurigaku Zasshi. 1997;17(2):101–4. [PubMed] [Google Scholar]

- 31.Carelli RM, West MO. Representation of the body by single neurons in the dorsolateral striatum of the awake, unrestrained rat. J Comp Neurol. 1991;309(2):231–49. doi: 10.1002/cne.903090205. [DOI] [PubMed] [Google Scholar]

- 32.Centonze D, et al. Dopaminergic control of synaptic plasticity in the dorsal striatum. Eur J Neurosci. 2001;13(6):1071–7. doi: 10.1046/j.0953-816x.2001.01485.x. [DOI] [PubMed] [Google Scholar]

- 33.Choi S, Lovinger DM. Decreased probability of neurotransmitter release underlies striatal long-term depression and postnatal development of corticostriatal synapses. Proc Natl Acad Sci U S A. 1997;94(6):2665–70. doi: 10.1073/pnas.94.6.2665.

- 34.Christoph GR, Leonzio RJ, Wilcox KS. Stimulation of the lateral habenula inhibits dopamine-containing neurons in the substantia nigra and ventral tegmental area of the rat. J Neurosci. 1986;6(3):613–9. doi: 10.1523/JNEUROSCI.06-03-00613.1986.

- 35.Coizet V, et al. Phasic activation of substantia nigra and the ventral tegmental area by chemical stimulation of the superior colliculus: an electrophysiological investigation in the rat. Eur J Neurosci. 2003;17(1):28–40. doi: 10.1046/j.1460-9568.2003.02415.x.

- 36.Comoli E, et al. A direct projection from superior colliculus to substantia nigra for detecting salient visual events. Nat Neurosci. 2003;6(9):974–80. doi: 10.1038/nn1113.

- 37.Corbit LH, Balleine BW. Double dissociation of basolateral and central amygdala lesions on the general and outcome-specific forms of pavlovian-instrumental transfer. J Neurosci. 2005;25(4):962–70. doi: 10.1523/JNEUROSCI.4507-04.2005.

- 38.Cromwell HC, Berridge KC. Implementation of action sequences by a neostriatal site: a lesion mapping study of grooming syntax. J Neurosci. 1996;16(10):3444–58. doi: 10.1523/JNEUROSCI.16-10-03444.1996.

- 39.Cromwell HC, Hassani OK, Schultz W. Relative reward processing in primate striatum. Exp Brain Res. 2005;162(4):520–5. doi: 10.1007/s00221-005-2223-z.

- 40.Cromwell HC, Schultz W. Effects of expectations for different reward magnitudes on neuronal activity in primate striatum. J Neurophysiol. 2003;89(5):2823–38. doi: 10.1152/jn.01014.2002.

- 41.Crutcher MD, DeLong MR. Single cell studies of the primate putamen. I. Functional organization. Exp Brain Res. 1984;53(2):233–43. doi: 10.1007/BF00238153.

- 42.DiCarlo JJ, Johnson KO. Receptive field structure in cortical area 3b of the alert monkey. Behav Brain Res. 2002;135(1–2):167–78. doi: 10.1016/s0166-4328(02)00162-6.

- 43.Dickinson A, et al. Bidirectional instrumental conditioning. Q J Exp Psychol B. 1996;49(4):289–306. doi: 10.1080/713932637.

- 44.Fallon JH, Moore RY. Catecholamine innervation of the basal forebrain. IV. Topography of the dopamine projection to the basal forebrain and neostriatum. J Comp Neurol. 1978;180(3):545–80. doi: 10.1002/cne.901800310.

- 45.Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299(5614):1898–902. doi: 10.1126/science.1077349.

- 46.Flaherty AW, Graybiel AM. Corticostriatal transformations in the primate somatosensory system. Projections from physiologically mapped body-part representations. J Neurophysiol. 1991;66(4):1249–63. doi: 10.1152/jn.1991.66.4.1249.

- 47.Flaherty AW, Graybiel AM. Two input systems for body representations in the primate striatal matrix: experimental evidence in the squirrel monkey. J Neurosci. 1993;13(3):1120–37. doi: 10.1523/JNEUROSCI.13-03-01120.1993.

- 48.Frank MJ, Claus ED. Anatomy of a decision: striato-orbitofrontal interactions in reinforcement learning, decision making, and reversal. Psychol Rev. 2006;113(2):300–26. doi: 10.1037/0033-295X.113.2.300.

- 49.Gallagher M, McMahan RW, Schoenbaum G. Orbitofrontal cortex and representation of incentive value in associative learning. J Neurosci. 1999;19(15):6610–4. doi: 10.1523/JNEUROSCI.19-15-06610.1999.

- 50.Girault JA, et al. Enhancement of glutamate release in the rat striatum following electrical stimulation of the nigrothalamic pathway. Brain Res. 1986;374(2):362–6. doi: 10.1016/0006-8993(86)90430-0.

- 51.Goldberg JA, et al. Enhanced synchrony among primary motor cortex neurons in the 1-methyl-4-phenyl-1,2,3,6-tetrahydropyridine primate model of Parkinson’s disease. J Neurosci. 2002;22(11):4639–53. doi: 10.1523/JNEUROSCI.22-11-04639.2002.

- 52.Gonon F. Prolonged and extrasynaptic excitatory action of dopamine mediated by D1 receptors in the rat striatum in vivo. J Neurosci. 1997;17(15):5972–8. doi: 10.1523/JNEUROSCI.17-15-05972.1997.

- 53.Graybiel AM. The basal ganglia and chunking of action repertoires. Neurobiol Learn Mem. 1998;70(1–2):119–36. doi: 10.1006/nlme.1998.3843.

- 54.Graybiel AM. Habits, rituals, and the evaluative brain. Annu Rev Neurosci. 2008;31:359–87. doi: 10.1146/annurev.neuro.29.051605.112851.

- 55.Graziano MS, Gross CG. A bimodal map of space: somatosensory receptive fields in the macaque putamen with corresponding visual receptive fields. Exp Brain Res. 1993;97(1):96–109. doi: 10.1007/BF00228820.

- 56.Haber SN. The primate basal ganglia: parallel and integrative networks. J Chem Neuroanat. 2003;26(4):317–30. doi: 10.1016/j.jchemneu.2003.10.003.

- 57.Hassani OK, Cromwell HC, Schultz W. Influence of expectation of different rewards on behavior-related neuronal activity in the striatum. J Neurophysiol. 2001;85(6):2477–89. doi: 10.1152/jn.2001.85.6.2477.

- 58.Hatfield T, et al. Neurotoxic lesions of basolateral, but not central, amygdala interfere with Pavlovian second-order conditioning and reinforcer devaluation effects. J Neurosci. 1996;16(16):5256–65. doi: 10.1523/JNEUROSCI.16-16-05256.1996.

- 59.Herkenham M, Nauta WJ. Efferent connections of the habenular nuclei in the rat. J Comp Neurol. 1979;187(1):19–47. doi: 10.1002/cne.901870103.

- 60.Hikosaka O, Sakamoto M, Usui S. Functional properties of monkey caudate neurons. I. Activities related to saccadic eye movements. J Neurophysiol. 1989;61(4):780–98. doi: 10.1152/jn.1989.61.4.780.

- 61.Hollerman JR, Tremblay L, Schultz W. Influence of reward expectation on behavior-related neuronal activity in primate striatum. J Neurophysiol. 1998;80(2):947–63. doi: 10.1152/jn.1998.80.2.947.

- 62.Hoover JE, Strick PL. The organization of cerebellar and basal ganglia outputs to primary motor cortex as revealed by retrograde transneuronal transport of herpes simplex virus type 1. J Neurosci. 1999;19(4):1446–63. doi: 10.1523/JNEUROSCI.19-04-01446.1999.

- 63.Horvitz JC. Mesolimbocortical and nigrostriatal dopamine responses to salient non-reward events. Neuroscience. 2000;96(4):651–656. doi: 10.1016/s0306-4522(00)00019-1.

- 64.Horvitz JC. Dopamine gating of glutamatergic sensorimotor and incentive motivational input signals to the striatum. Behav Brain Res. 2002;137(1–2):65–74. doi: 10.1016/s0166-4328(02)00285-1.

- 65.Horvitz JC, et al. A “good parent” function of dopamine: transient modulation of learning and performance during early stages of training. Ann N Y Acad Sci. 2007;1104:270–88. doi: 10.1196/annals.1390.017.

- 66.Horvitz JC, Stewart T, Jacobs BL. Burst activity of ventral tegmental dopamine neurons is elicited by sensory stimuli in the awake cat. Brain Res. 1997;759(2):251–8. doi: 10.1016/s0006-8993(97)00265-5.

- 67.Houk JC, et al. Action selection and refinement in subcortical loops through basal ganglia and cerebellum. Philos Trans R Soc Lond B Biol Sci. 2007;362(1485):1573–83. doi: 10.1098/rstb.2007.2063.

- 68.Humphries MD, Stewart RD, Gurney KN. A physiologically plausible model of action selection and oscillatory activity in the basal ganglia. J Neurosci. 2006;26(50):12921–42. doi: 10.1523/JNEUROSCI.3486-06.2006.

- 69.Itoh H, et al. Correlation of primate caudate neural activity and saccade parameters in reward-oriented behavior. J Neurophysiol. 2003;89(4):1774–83. doi: 10.1152/jn.00630.2002.

- 70.Izquierdo A, Suda RK, Murray EA. Bilateral orbital prefrontal cortex lesions in rhesus monkeys disrupt choices guided by both reward value and reward contingency. J Neurosci. 2004;24(34):7540–8. doi: 10.1523/JNEUROSCI.1921-04.2004.

- 71.Jaber M, et al. Dopamine receptors and brain function. Neuropharmacology. 1996;35(11):1503–19. doi: 10.1016/s0028-3908(96)00100-1.

- 72.Jaeger D, Gilman S, Aldridge JW. Neuronal activity in the striatum and pallidum of primates related to the execution of externally cued reaching movements. Brain Res. 1995;694(1–2):111–27. doi: 10.1016/0006-8993(95)00780-t.

- 73.Kakade S, Dayan P. Dopamine: generalization and bonuses. Neural Netw. 2002;15(4–6):549–59. doi: 10.1016/s0893-6080(02)00048-5.

- 74.Kamin LJ. Selective association and conditioning. In: Mackintosh NJ, Honig WK, editors. Fundamental issues in associative learning. Dalhousie University Press; Halifax, Nova Scotia: 1969.

- 75.Kawagoe R, Takikawa Y, Hikosaka O. Expectation of reward modulates cognitive signals in the basal ganglia. Nat Neurosci. 1998;1(5):411–6. doi: 10.1038/1625.

- 76.Kelley AE, Domesick VB, Nauta WJ. The amygdalostriatal projection in the rat--an anatomical study by anterograde and retrograde tracing methods. Neuroscience. 1982;7(3):615–30. doi: 10.1016/0306-4522(82)90067-7.

- 77.Kemp JM, Powell TP. The cortico-striate projection in the monkey. Brain. 1970;93(3):525–46. doi: 10.1093/brain/93.3.525.

- 78.Kerr JN, Wickens JR. Dopamine D-1/D-5 receptor activation is required for long-term potentiation in the rat neostriatum in vitro. J Neurophysiol. 2001;85(1):117–24. doi: 10.1152/jn.2001.85.1.117.

- 79.Kimura M. Behaviorally contingent property of movement-related activity of the primate putamen. J Neurophysiol. 1990;63(6):1277–96. doi: 10.1152/jn.1990.63.6.1277.

- 80.Kimura M. Role of basal ganglia in behavioral learning. Neurosci Res. 1995;22(4):353–8. doi: 10.1016/0168-0102(95)00914-f.

- 81.Kimura M, et al. Neural information transferred from the putamen to the globus pallidus during learned movement in the monkey. J Neurophysiol. 1996;76(6):3771–86. doi: 10.1152/jn.1996.76.6.3771.

- 82.Kimura M, et al. Goal-directed, serial and synchronous activation of neurons in the primate striatum. Neuroreport. 2003;14(6):799–802. doi: 10.1097/00001756-200305060-00004.

- 83.Kimura M, Satoh T, Matsumoto N. What does the habenula tell dopamine neurons? Nat Neurosci. 2007;10(6):677–8. doi: 10.1038/nn0607-677.

- 84.Koos T, Tepper JM, Wilson CJ. Comparison of IPSCs evoked by spiny and fast-spiking neurons in the neostriatum. J Neurosci. 2004;24(36):7916–22. doi: 10.1523/JNEUROSCI.2163-04.2004.

- 85.Kunzle H. Bilateral projections from precentral motor cortex to the putamen and other parts of the basal ganglia. An autoradiographic study in Macaca fascicularis. Brain Res. 1975;88(2):195–209. doi: 10.1016/0006-8993(75)90384-4.

- 86.Lau B, Glimcher PW. Action and outcome encoding in the primate caudate nucleus. J Neurosci. 2007;27(52):14502–14. doi: 10.1523/JNEUROSCI.3060-07.2007.

- 87.Lidsky TI, Manetto C, Schneider JS. A consideration of sensory factors involved in motor functions of the basal ganglia. Brain Res. 1985;356(2):133–46. doi: 10.1016/0165-0173(85)90010-4.

- 88.Liles SL. Cortico-striatal evoked potentials in the monkey (Macaca mulatta). Electroencephalogr Clin Neurophysiol. 1975;38(2):121–9. doi: 10.1016/0013-4694(75)90221-7.

- 89.Ljungberg T, Apicella P, Schultz W. Responses of monkey midbrain dopamine neurons during delayed alternation performance. Brain Res. 1991;567(2):337–41. doi: 10.1016/0006-8993(91)90816-e.

- 90.Ljungberg T, Apicella P, Schultz W. Responses of monkey dopamine neurons during learning of behavioral reactions. J Neurophysiol. 1992;67(1):145–63. doi: 10.1152/jn.1992.67.1.145.

- 91.Mackintosh NJ. A theory of attention: variations in the associability of stimuli with reinforcement. Psychol Rev. 1975;82:276–298.

- 92.Matsumoto M, Hikosaka O. Lateral habenula as a source of negative reward signals in dopamine neurons. Nature. 2007;447(7148):1111–5. doi: 10.1038/nature05860.