Abstract

We discuss behavioral studies directed at understanding how probability information is represented in motor and economic tasks. By formulating the behavioral tasks in the language of statistical decision theory, we can compare performance in equivalent tasks in different domains. Subjects in traditional economic decision-making tasks often misrepresent the probability of rare events and typically fail to maximize expected gain. In contrast, subjects in mathematically equivalent movement tasks often choose movement strategies that come close to maximizing expected gain. We discuss the implications of these different outcomes, noting the evident differences between the source of uncertainty and how information about uncertainty is acquired in motor and economic tasks.

Keywords: decision making, risk, neuroeconomics, movement planning under risk, Bayesian decision theory, expected utility theory

Risky decisions and movement planning

Uncertainty plays a fundamental role in perception, cognition and motor control, and a wide variety of biological tasks can be formulated in statistical terms. How the organism combines sensory information from many different sources (“cues”) is currently an active area of research and several groups have proposed [1, 2] that perceptual estimation of properties of the environment can be framed within Bayesian decision theory, a special case of statistical decision theory [3]. We will show that framing behavioral tasks in the language of statistical decision theory enables a comparison of performance between motor tasks and decision making under risk.

Research concerning decision-making seeks to understand how subjects choose between discrete plans of action that have economic consequences [4]. A subject might be given a choice between a 10% chance of $5000 (and otherwise nothing) and a 95% chance of $300 (and otherwise nothing). These possible choices can be written in compact form as lotteries L1 = [0.1,$5000; 0.9,$0] and L2 = [0.95,$300; 0.05,$0]. If subjects are told probabilities, they are making “decisions under risk” and otherwise, “decisions under uncertainty” [5]. Here, we are concerned primarily with the former.
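To make the expected-gain benchmark used throughout concrete, the sketch below computes the expected value of the two example lotteries. The list-of-pairs representation and the function name `expected_value` are illustrative choices, not notation from the text; exact fractions avoid floating-point round-off.

```python
from fractions import Fraction

# A lottery is a list of (probability, payoff in dollars) pairs,
# mirroring the notation L = [p1, x1; p2, x2] used in the text.
Lottery = list[tuple[Fraction, int]]

def expected_value(lottery: Lottery) -> Fraction:
    """Probability-weighted sum of payoffs, i.e. the lottery's expected gain."""
    assert sum(p for p, _ in lottery) == 1, "probabilities must sum to 1"
    return sum((p * x for p, x in lottery), Fraction(0))

L1 = [(Fraction(1, 10), 5000), (Fraction(9, 10), 0)]
L2 = [(Fraction(95, 100), 300), (Fraction(5, 100), 0)]

print(expected_value(L1), expected_value(L2))  # prints: 500 285
```

A decision maker who maximizes expected gain should therefore prefer L1 ($500 vs. $285), even though L2 is far more likely to pay out.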

Of course, most subjects would prefer to receive $5000 rather than $300, or $300 rather than $0. The key difficulty in making such decisions is that no plan of action (lottery) available to the subject guarantees any specific outcome.

We review recent experimental work in movement planning [6–9] in which humans perform speeded movements towards displays with regions which, if touched, lead to monetary rewards and penalties (Box 1). Our work shows that humans do very well in making these complex decisions in motor form. This outcome is particularly surprising since humans typically do not do well in equivalent economic decision-making tasks, as we describe next.

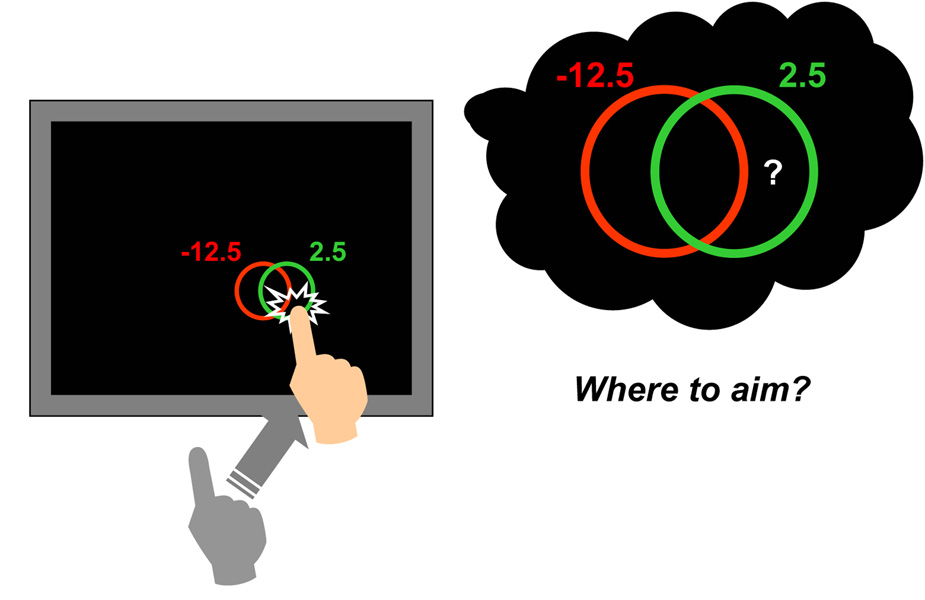

Box 1: Constructing Motor Lotteries

In the main text, we described a movement task equivalent to decision making under risk. On each trial, subjects reach out and touch a computer screen within a short period of time (e.g. 700 ms). Hits inside a green target region displayed on a computer screen yield a gain of 2.5 cents; accidental hits inside a nearby red penalty region incur losses of 12.5 cents. Movements that do not reach the screen within the time limit are heavily penalized (following training they almost never occur). The subject does not have complete control over the movement outcome [43]. The black dots in the figures mark simulated outcomes of attempts to hit the diamond marking the target center (Figure 1a) or the diamond closer to the target edge (Figure 1b).

A movement that reaches the screen within the time limit can end in one of four possible regions: penalty only (region $R_1$, gain $G_1 = -12.5$), target/penalty overlap (region $R_2$, gain $G_2 = -10$), target only (region $R_3$, gain $G_3 = 2.5$), or neither/background (region $R_4$, gain $G_4 = 0$), with all gains in cents. In evaluating movement plans in this task, visuo-motor plans that lead to a touch on the screen within the time limit differ only to the extent that they affect the probability $P_S(R_i)$ of hitting each of the four regions $R_i$, $i = 1, \dots, 4$. The combination of event probabilities $P_S(R_i)$ resulting from a particular visuo-motor plan (aim point) $S$ and the associated gains $G_i$ forms a lottery,

$$L(S) = [\,P_S(R_1), G_1;\ P_S(R_2), G_2;\ P_S(R_3), G_3;\ P_S(R_4), G_4\,] \qquad (1)$$

An alternative visuo-motor plan S′ corresponds to a second lottery,

$$L(S') = [\,P_{S'}(R_1), G_1;\ P_{S'}(R_2), G_2;\ P_{S'}(R_3), G_3;\ P_{S'}(R_4), G_4\,] \qquad (2)$$

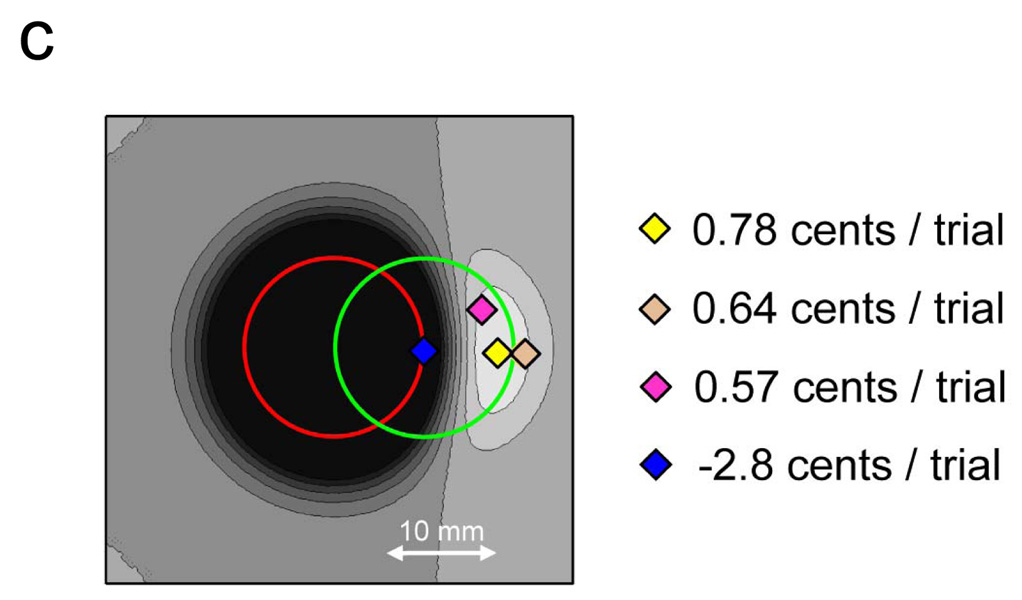

Each lottery corresponds to an aim point. The lottery corresponding to the aim point in Figure 1a has an expected gain of −2.8 cents per trial (the subject loses money on average), whereas the expected gain associated with the aim point in Figure 1b is 0.78 cents per trial. Obviously, the aim point in Figure 1b offers higher expected gain. In planning movement in this task, subjects effectively choose not just between these two aim points but among infinitely many aim points (lotteries). They are engaged in a continuous decision-making task of extraordinary complexity, one that is performed every time they move.
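The expected gains quoted above follow from the standard definition: writing $P_S(R_i)$ for the probability that aim point $S$ ends in region $R_i$ and $G_i$ for that region's gain, the expected gain of the lottery is the probability-weighted sum of the gains. Because the overlap gain $G_2 = -10$ is exactly the sum of the target reward and the penalty, the same quantity can be regrouped in terms of the probabilities of hitting the whole green and whole red circles:

$$
\begin{aligned}
EG(S) &= \sum_{i=1}^{4} P_S(R_i)\,G_i = -12.5\,P_S(R_1) - 10\,P_S(R_2) + 2.5\,P_S(R_3) \\
&= 2.5\,\big[P_S(R_2) + P_S(R_3)\big] - 12.5\,\big[P_S(R_1) + P_S(R_2)\big].
\end{aligned}
$$

The bracketed terms are the probabilities of landing anywhere in the green circle and anywhere in the red circle, respectively.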

In Figure 1c we plot the expected gain associated with every possible aim point and highlight four of them. The aim point corresponding to the yellow diamond maximizes expected gain for this subject in this task.
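A map like Figure 1c can be approximated by simulation. The sketch below is a minimal Monte Carlo version restricted to horizontal aim points, assuming isotropic Gaussian end-point scatter with the 5.6 mm standard deviation of the representative subject and the 9 mm circles from the Box 1 figure. The penalty-circle offset (`PENALTY_OFFSET_MM`, 9 mm to the left of the target center) is a hypothetical value chosen for illustration, and the code is not the authors' implementation.

```python
import numpy as np

# Task values from the Box 1 figure (units: mm, cents).
RADIUS_MM = 9.0         # radius of the target and penalty circles
SIGMA_MM = 5.6          # end-point SD of the representative subject
GAIN_TARGET = 2.5       # reward for landing in the green circle
GAIN_PENALTY = -12.5    # penalty for landing in the red circle
# Hypothetical geometry: target centered at the origin, penalty circle centered
# PENALTY_OFFSET_MM to its left (offsets varied across experimental conditions).
PENALTY_OFFSET_MM = 9.0

def expected_gain(aim_x: float, n: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo expected gain (cents/trial) for the aim point (aim_x, 0)."""
    rng = np.random.default_rng(seed)  # same seed for every aim point
    ends = rng.normal(loc=(aim_x, 0.0), scale=SIGMA_MM, size=(n, 2))
    in_target = np.hypot(ends[:, 0], ends[:, 1]) <= RADIUS_MM
    in_penalty = np.hypot(ends[:, 0] + PENALTY_OFFSET_MM, ends[:, 1]) <= RADIUS_MM
    # Gains add in the overlap: 2.5 - 12.5 = -10 cents, matching G2 in the text.
    gains = GAIN_TARGET * in_target + GAIN_PENALTY * in_penalty
    return float(gains.mean())

# Scan horizontal aim points from the target center rightward, away from the penalty.
aims = np.linspace(0.0, 15.0, 61)
curve = np.array([expected_gain(a) for a in aims])
best_aim = float(aims[np.argmax(curve)])
print(f"aim at center: {curve[0]:.2f} cents/trial; best aim x = {best_aim:.2f} mm")
```

Reusing one seed for every aim point (common random numbers) keeps the simulated gain curve smooth, so the arg-max is not driven by sampling noise. A full 2-D map would scan vertical offsets as well.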

Sub-optimal economic decisions

Human performance in decision making under risk is markedly sub-optimal and fraught with cognitive biases [4] that result in serious deficits in performance. Patterned deviations from maximizing expected gain include a tendency to frame outcomes in terms of losses and gains with an exaggerated aversion to losses [10] and to exaggerate the weight given to low-probability outcomes [11, 12]. This latter property parallels the human tendency to overestimate the relative frequencies of rare events [13, 14]. This exaggeration of the frequency of low-frequency events is observed in many but not all decision-making studies [15]. These behaviors are typically modeled by Prospect Theory by introducing a probability weighting function and by assuming that subjects maximize a tradeoff between losses and gains [10, 12].
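As an illustration of such a probability weighting function, the sketch below implements the one-parameter form proposed in cumulative prospect theory [12], $w(p) = p^\gamma / (p^\gamma + (1-p)^\gamma)^{1/\gamma}$, with $\gamma = 0.61$, the median estimate for gains reported there. Other functional forms are in use; this one is shown only because it is widely cited, and the function name `tk_weight` is illustrative.

```python
def tk_weight(p: float, gamma: float = 0.61) -> float:
    """Tversky-Kahneman (1992) probability weighting function (gamma = 0.61 for gains)."""
    num = p ** gamma
    return num / (num + (1.0 - p) ** gamma) ** (1.0 / gamma)

# Compare decision weights with the stated probabilities.
for p in (0.01, 0.1, 0.5, 0.9, 0.99):
    print(f"p = {p:4.2f}  ->  w(p) = {tk_weight(p):.3f}")
```

With $\gamma < 1$ the curve is inverse-S shaped: small probabilities are overweighted ($w(p) > p$) and large ones underweighted ($w(p) < p$), the pattern described above.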

Motor tasks equivalent to decision making under risk

Recent work in motor control [9] formulates movement planning in terms of statistical decision theory, effectively converting the problem of movement planning to a decision among lotteries that is mathematically equivalent to decision making under risk. We can compare performance in economic decision-making tasks with performance in equivalent motor tasks and also study how organisms represent value and uncertainty and make decisions in very different domains [16–22].

In Figure 1a, we illustrate the task and show one of the target-penalty configurations used in [6]. The rules of the task are very simple. The configuration appears on a display screen a short distance in front of the subject. The subject must reach out and hit somewhere on the display screen in a short period of time. The subject knows that hits within the green circle result in a monetary payoff but hits within the red result in a loss of money. The amounts vary with experimental condition but in the example in Figure 1a they are 2.5 cents and 12.5 cents, respectively.

Figure 1.

a) An example of a stimulus configuration presented on a display screen. The subject must rapidly reach out and touch the screen. If the screen is hit within the green circle, 2.5 cents is awarded. If within the red, there is a penalty of 12.5 cents. The circles are small (9 mm radius) and the subject can’t completely control this rapid movement. In Box 1 we explain what the subject should do to maximize winnings. b) A comparison of subjects’ performance to the performance that would maximize expected gain. The shift of subjects’ mean movement end points from the center of the green target region is plotted as a function of the shift of mean movement end point that would maximize expected gain for 5 different subjects (indicated by 5 different symbols) and the 6 different target-penalty configurations shown in Fig. 2a (replotted from [6], Figure 5a).

At the speed the subject is forced to move, movement can’t be completely controlled: even if the subject aims at the center of the green circle there’s a real chance of missing it. And, if the subject aims too close to the center of the green circle, there is a risk of hitting inside the red. Where should the subject aim?

In Box 1, we show how to interpret the subject’s choice of aim point as a choice among lotteries and how to determine the aim point that maximizes expected gain. An economist would use the term “utility” where a researcher in motor control would opt for “cost” or “biological cost” and a statistician would use “loss” or “loss function.” Adopting a field-neutral term, we refer to rewards and penalties associated with outcomes as “gains”, positive or negative.

We were surprised to discover that, in this decision task in motor form, participants typically chose visuo-motor plans that came close to maximizing expected gain. In Figure 1b we plot the subjects’ displacement in the horizontal direction away from the center of the green circle versus the displacement that would maximize expected gain, combining data across several experimental conditions varying the penalty amount and distance between target and penalty circles [6, 7].

Additional research has extended this conclusion to tasks that involve precise timing and tradeoff between movement time and reward [23, 24] and to tasks involving rapid choices between possible movement targets [9]. Generally, human subjects choose strategies that come close to maximizing expected gain in motor tasks with changing stochastic variability [8, 25] or combining noisy sensory input with prior information [26–29].

These results have implications for our understanding of movement planning and motor control. Typical computational approaches to modeling movement planning take the form of an optimization problem in which the cost function to be minimized is biomechanical and the optimization goal is to minimize some measure of stress on the muscles and joints. These models differ primarily in the choice of the cost function. Possible biomechanical cost functions include measures of joint mobility [30, 31], muscle tension changes [32], mean squared rate of change of acceleration [33], mean torque change [34], total energy expenditure [35] and peak work [36]. These biomechanical models have successfully been applied to modeling reaching movements as following a nearly straight path with a bell-shaped velocity profile and also capture the human ability to adapt to forces applied during movement execution [37]. We emphasize that these models cannot be used to predict subjects’ performance in our movement decision tasks where performance also depends on externally imposed rewards and penalties. Moreover, subjects came close to maximizing expected gain with arbitrary, novel penalties and rewards imposed on outcomes by the experimenter.
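For concreteness, the sketch below evaluates the closed-form solution of one of these models, the minimum-jerk model [33], for a single coordinate of a point-to-point reach (a simplification; the original model treats planar hand paths). The 30 cm distance and 700 ms duration are hypothetical. Note that the predicted trajectory depends only on start point, end point and duration: nothing in the cost function refers to rewards or penalties, which is why such models cannot predict where subjects aim in the tasks described here.

```python
import numpy as np

def minimum_jerk(x0: float, xf: float, duration: float, n: int = 101):
    """Minimum-jerk position and velocity for a point-to-point reach:
    x(t) = x0 + (xf - x0) * (10 s^3 - 15 s^4 + 6 s^5), with s = t / duration."""
    t = np.linspace(0.0, duration, n)
    s = t / duration
    pos = x0 + (xf - x0) * (10 * s**3 - 15 * s**4 + 6 * s**5)
    # Time derivative of pos: (xf - x0) / duration * (30 s^2 - 60 s^3 + 30 s^4).
    vel = (xf - x0) / duration * (30 * s**2 - 60 * s**3 + 30 * s**4)
    return t, pos, vel

# Hypothetical reach: 0.3 m in 0.7 s.
t, pos, vel = minimum_jerk(0.0, 0.3, 0.7)
print(f"peak speed {vel.max():.3f} m/s at t = {t[np.argmax(vel)]:.2f} s")
```

The velocity profile is bell-shaped and symmetric, peaking at the midpoint of the movement at 1.875 times the average speed.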

Subjects do not always come close to maximizing expected gain in movement planning, e.g. when the number of penalty/reward regions is increased [38] and when the reward or penalty received is stochastic rather than determined by outcome of the subject’s movement [39]. Furthermore, when the penalty is so high that the aim point maximizing expected gain lies outside of the target, results suggest that subjects prefer not to aim outside of the target that they are trying to hit [8]. Thus, while there is a collection of motor tasks, described above, where performance is remarkably good, we cannot simply claim that performance in any task with a speeded motor response will come close to maximizing expected gain. Further work is needed to delimit the range of movement planning tasks where subjects do well.

One evident question is “Are subjects maximizing expected gain gradually, by a process of trial and error?”

Learning probabilities vs. practicing the task

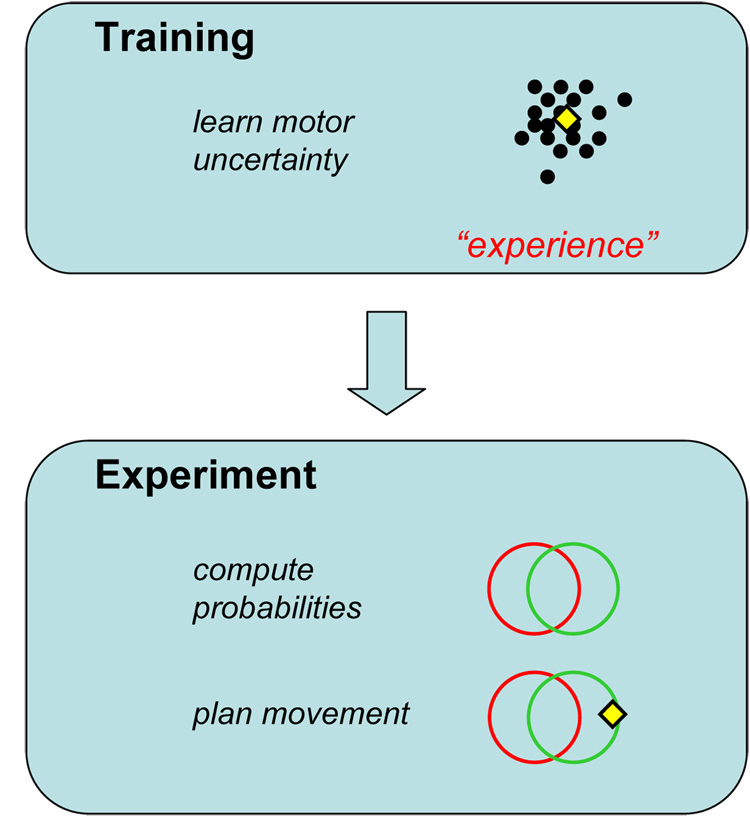

We were surprised to learn that subjects do not show trends consistent with a gradual approach to maximizing expected gain. The design of the studies of Trommershäuser et al. [6–8] and related work [23] had a peculiar structure. Before the “decision making” phase of the experiment, subjects practiced the speeded motor task extensively by repeatedly touching single circular targets. During this initial training period, the experimenter monitored their motor performance until it stabilized and the experimenter could measure each subject’s residual motor variability.

Only after training did subjects learn about the gains and losses assigned to each region in the experimental condition. They were not explicitly told to take into account the spatial locations of reward and penalty regions and the magnitude of penalty and reward, but their highly efficient performance indicates that they did so from the first trial in which rewards and penalties were specified.

To summarize, in these experiments subjects were first trained to be “motor experts” in a simple task where they were instructed to touch targets on the screen. Only then were they given a task involving tradeoffs between rewards and penalties. There were no obvious trends in subjects’ aim points [6, 7] that would suggest that subjects were modifying their decision-making strategy as they gained experience with the decision-making task (Figure 2a).

Figure 2.

a) Trial-by-trial deviation of movement end point (in the horizontal direction) from the mean movement end point in that condition as a function of trial number after introduction of rewards and penalties (reward: 2.5 cents; penalty −12.5 cents); the six different lines correspond to the six different spatial conditions of target and penalty offset as shown on the right (data replotted from [6], Figure 7). b) Trend of a hypothetical simple learning model in which a subject changes motor strategy gradually in response to rewards and penalties incurred. The subject initially aims at the center of the green circle. Before the subject’s first trial in the decision-making phase of the experiment, the subject is instructed that red circles carry penalties and green circles carry rewards. Subjects may approach the aim point maximizing expected gain by slowly shifting the aim point away from the center of the green circle until the winnings match the maximum expected gain. However, the data shown in a) do not support this learning model.

To see how unusual this outcome is, consider applying a simple reinforcement-learning model according to which the aim point is adjusted gradually in response to rewards and penalties incurred [40–42]. Before any reward or penalty has been incurred, a learning model based on reward and penalty would predict that the subject should aim at the center of the green circle, just as in the training trials. The subject would then gradually alter the aim point in response to rewards and penalties incurred until the final aim point maximized expected gain (Figure 2b).
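The hypothetical learner of Figure 2b can be sketched as a simple policy-gradient rule. The version below swaps in a REINFORCE-style update with a running-average baseline, treating motor noise as exploration; it is one instance of the reinforcement-learning family cited above, not a model fitted in [6, 7] or taken from [40–42]. The task geometry (9 mm circles, 5.6 mm end-point SD, penalty circle 9 mm to the left of the target) and the learning rates `alpha` and `beta` are assumptions, and only the horizontal aim coordinate is learned.

```python
import numpy as np

RADIUS_MM, SIGMA_MM = 9.0, 5.6    # circle radius and end-point SD (Box 1 values)
PENALTY_OFFSET_MM = 9.0           # hypothetical: penalty circle centered 9 mm left of the target
GAIN_TARGET, GAIN_PENALTY = 2.5, -12.5

def trial_gain(end_x: float, end_y: float) -> float:
    """Gain (cents) for one end point; target at the origin, gains add in the overlap."""
    g = 0.0
    if np.hypot(end_x, end_y) <= RADIUS_MM:
        g += GAIN_TARGET
    if np.hypot(end_x + PENALTY_OFFSET_MM, end_y) <= RADIUS_MM:
        g += GAIN_PENALTY
    return g

def simulate_learner(n_trials: int = 3000, alpha: float = 0.05,
                     beta: float = 0.05, seed: int = 1) -> np.ndarray:
    """Hypothetical trial-and-error learner (REINFORCE-style): after each trial,
    aim += alpha * (gain - baseline) * (end_x - aim) / SIGMA_MM**2."""
    rng = np.random.default_rng(seed)
    aim, baseline = 0.0, 0.0          # start at the target center, as in training
    history = np.empty(n_trials)
    for k in range(n_trials):
        end_x, end_y = rng.normal((aim, 0.0), SIGMA_MM)   # executed end point
        g = trial_gain(end_x, end_y)
        aim += alpha * (g - baseline) * (end_x - aim) / SIGMA_MM**2
        baseline += beta * (g - baseline)                 # running average of gains
        history[k] = aim
    return history

history = simulate_learner()
print(f"aim after trial 1: {history[0]:.2f} mm; mean of last 500: {history[-500:].mean():.2f} mm")
```

A learner of this kind starts at the target center and drifts rightward over many trials, tracing the gradual trend of Figure 2b; the immediate shift seen in Figure 2a is what such a model fails to capture.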

However, examination of the initial trials of the decision phase of the experiment suggests that subjects immediately changed their aim point from that used in training to that required to trade off the probabilities of hitting the reward and penalty regions (Figure 2a). This apparent lack of learning is of great interest in that it suggests that, while subjects certainly learned to carry out the motor task in the training phases of these experiments, and learned their own motor uncertainty, they seemed not to need further experience with the decision-making task to perform as well as they did, applying the knowledge of that motor uncertainty to new situations. The trends in performance found with repetition of economic-decision tasks seem absent in equivalent movement-planning tasks.

The contrast between success in “movement planning under risk” and sub-optimal performance in decision making under risk is heightened by the realization that, in decision making under risk, subjects are told the exact probabilities of outcomes and thus have perfect knowledge of how their choice changes the probability of attaining each outcome. In the equivalent motor tasks, probabilities are never communicated explicitly, so the subject’s knowledge of them can at best equal, and never exceed, the knowledge available in decision making under risk. Yet the lack of learning in these motor tasks suggests that humans are able to estimate, for any given aim point, the probability of each outcome induced by their own motor uncertainty, and make use of this knowledge to improve their performance [8]. There is mounting evidence that decision makers behave differently if knowledge of probabilities is gained through “experience” (see Box 2). Our results add a new dimension to what kinds of experience lead to enhanced decision making.

Box 2: Decisions from Experience

There are several factors that may have contributed to the remarkable performance of subjects in movement planning under risk [6–9, 44]. In these experiments, the subject makes a long series of choices and over the course of the experiment his/her accumulated winnings increase. In contrast, subjects in economic decision-making experiments typically make a single “one-shot” choice, choosing among a small set of lotteries. Indeed, when economic decision makers are faced with a series of decisions they tend to move closer to maximum expected gain (see e.g. [45]; “the house money effect:” [46]).

Recent work suggests that subjects who are allowed to simulate a decision task learn from their experience [47] and, together with the studies just cited, it is likely that decision making improves with repetition. However, in the motor tasks discussed here the learning phase does not involve explicit probabilities or values or tradeoffs between risk and reward. In the experimental phase (Main text, Figure 2), they show no evidence of learning. This outcome suggests that they can explicitly transfer experience with motor uncertainty to the decision task (Figure 1), computing probabilities and planning movements on demand. While subjects likely learn from experience in these motor tasks, experience does not involve simple practice or simulation of the actual decision task.
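The two-stage account in this Box can be sketched as follows, under stated assumptions: end points scatter isotropically with Gaussian noise, the training phase is used only to estimate the standard deviation, and the decision phase then computes hit probabilities for any proposed aim point without further practice. Under these assumptions the distance from the end point to a circle's center follows a Rice distribution, whose density is integrated numerically here. The true SD (5.6 mm) and circle radius (9 mm) come from the Box 1 figure; the penalty offset (9 mm left of the target) is hypothetical. Because the overlap gain equals the sum of reward and penalty, expected gain is 2.5 P(hit green) − 12.5 P(hit red).

```python
import numpy as np

RADIUS_MM = 9.0
PENALTY_OFFSET_MM = 9.0   # hypothetical penalty offset (left of the target center)
GAIN_TARGET, GAIN_PENALTY = 2.5, -12.5

def estimate_sigma(endpoints: np.ndarray) -> float:
    """Pooled per-axis SD of training end points about their mean (isotropic scatter assumed)."""
    dev = endpoints - endpoints.mean(axis=0)
    return float(np.sqrt((dev ** 2).sum() / (2 * (len(endpoints) - 1))))

def p_hit_circle(dist: float, radius: float, sigma: float, n: int = 4001) -> float:
    """P(end point lands within `radius` of a center `dist` mm from the aim point).
    The distance to that center is Rice-distributed; integrate its density numerically."""
    r = np.linspace(0.0, radius, n)
    pdf = r / sigma**2 * np.exp(-(r**2 + dist**2) / (2 * sigma**2)) * np.i0(r * dist / sigma**2)
    return float(np.sum(0.5 * (pdf[1:] + pdf[:-1]) * np.diff(r)))  # trapezoid rule

def expected_gain(aim_x: float, sigma: float) -> float:
    """Expected gain (cents/trial) for aim point (aim_x, 0); target centered at the origin."""
    p_target = p_hit_circle(abs(aim_x), RADIUS_MM, sigma)
    p_penalty = p_hit_circle(abs(aim_x + PENALTY_OFFSET_MM), RADIUS_MM, sigma)
    return GAIN_TARGET * p_target + GAIN_PENALTY * p_penalty

# Training phase (simulated): aim at single targets and record end points.
rng = np.random.default_rng(2)
training_endpoints = rng.normal(0.0, 5.6, size=(500, 2))  # hypothetical true SD 5.6 mm
sigma_hat = estimate_sigma(training_endpoints)

# Decision phase: evaluate candidate aim points on demand, with no further practice.
aims = np.linspace(0.0, 15.0, 151)
best_aim = float(aims[np.argmax([expected_gain(a, sigma_hat) for a in aims])])
print(f"estimated SD {sigma_hat:.2f} mm; best aim x = {best_aim:.1f} mm")
```

Nothing in the decision phase uses feedback from rewards or penalties: once the SD is estimated, every aim point can be scored directly, which is the kind of transfer the Box attributes to subjects.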

There is growing interest in analyzing brain activity in response to manipulations of various components of decision-making under risk or uncertainty in human subjects (for more extensive reviews, see [16, 17, 19, 20]). The work described here effectively opens a second window on neural processing of uncertainty and value by allowing us to present exactly the same decision problems in different guises.

Statistical decision theory: future directions

The motor tasks we have considered are very simple, a reaching movement to touch a target. Even these very simple motor tasks correspond to complicated choices among lotteries. We close by illustrating that the underlying statistical framework, statistical decision theory, can be used to model complex movement tasks shaped by externally imposed rewards and penalties and where visual uncertainty can play a larger part (Box 3).

Box 3: Statistical Decision Theory and Sensory-Motor Control

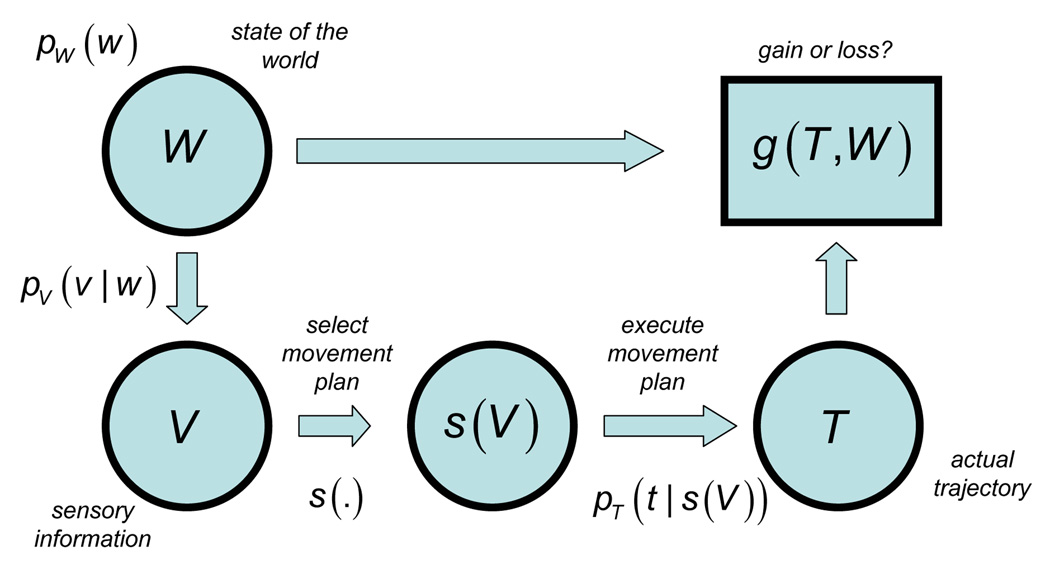

Statistical decision theory [48] is a remarkably general framework for modeling tasks in cognition, perception and planning of movement [3]. In its simplest forms it is the mathematical basis for signal detection theory and common models of optimal visual classification [49]. The models of simple movement tasks considered here are examples of its application. In Figure 1a, we illustrate its application to a more complex movement task that involves both visual and motor uncertainty.

A dinner guest intends to pick up a salt shaker at the center of the table with his right hand. We will follow this movement from initial planning to eventual social disaster (Figure 1b) or success (Figure 1c).

One possible plan of action is schematized as a solid line, sketching out the path of the hand that the guest plans to take. An actual movement plan would specify joint movements throughout the reach. His planning should take into account uncertainty in his estimates of object location as well as his accuracy in movement. If his sensory information is poor under candlelight, he might do well to choose a path that gives the wine glass a wide berth and proceed very slowly. If he moves very slowly though, he may never get through his meal. The potential costs and benefits are measured in units of disgrace, esteem and dry cleaning charges. Statistical decision theory allows us to determine the best possible choice of movement plan, i.e., the one that maximizes expected gain.

In detail, a movement strategy is a mapping from sensory input V to a movement plan s(V) (Figure 2). The expected gain associated with the choice of strategy s(V) is given by

$$EG(s) = \iiint g(t, w)\, p_T\big(t \mid s(v)\big)\, p_V(v \mid w)\, p_W(w)\; dt\, dv\, dw \qquad (3)$$

where $W$ is the random state of the world (i.e., positions of arm, salt shaker, wine glass, etc.) with prior distribution $p_W(w)$ based on past sensory information and knowledge of how a table is laid out, $V$ is current sensory information about the state of the world with likelihood distribution $p_V(v \mid w)$, and $T$ is the stochastic movement trajectory resulting from the executed movement plan $s(V)$, with distribution $p_T(t \mid s(v))$. The term $g(t, w)$ specifies the gain resulting from an actual trajectory $t$ in the actual state of the world $w$. In the example given, it includes costs incurred by hitting objects while reaching through the dinner scene and possible rewards for successfully grasping the salt shaker. The movement strategy that maximizes Eq. 3 is the one that maximizes expected gain.
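Eq. 3 rarely has a closed form, but it can be estimated by sampling each factor in turn. The sketch below shows a generic Monte Carlo estimator plus a deliberately tiny 1-D stand-in for the dinner scene, in which the only decision is how much clearance `m` to leave around the perceived position of the wine glass. The scene, its distributions, the cost of 10 units for a collision and 0.5 per unit of clearance, and the strategy family $s(v) = v + m$ are all hypothetical; movement speed, which the text also mentions, is not modeled.

```python
import numpy as np

def expected_gain(strategy, gain, sample_world, sample_sense, sample_move,
                  n=100_000, seed=0):
    """Monte Carlo estimate of Eq. 3: draw w ~ p_W, v ~ p_V(.|w), t ~ p_T(.|s(v)),
    then average the gain g(t, w) over the draws."""
    rng = np.random.default_rng(seed)   # same seed for every strategy: common random numbers
    w = sample_world(rng, n)
    v = sample_sense(rng, w)
    t = sample_move(rng, strategy(v))
    return float(np.mean(gain(t, w)))

def make_toy_scene(visual_sd, motor_sd=1.0):
    """Hypothetical 1-D dinner scene; every number here is invented for illustration.
    w: lateral position of the wine glass; t: lateral position at which the hand passes it."""
    def sample_world(rng, n):            # prior p_W(w)
        return rng.normal(0.0, 2.0, n)
    def sample_sense(rng, w):            # likelihood p_V(v | w): noisier under candlelight
        return w + rng.normal(0.0, visual_sd, w.shape)
    def sample_move(rng, plan):          # motor noise around the planned path
        return plan + rng.normal(0.0, motor_sd, plan.shape)
    def gain(t, w):                      # knocking the glass costs 10; clearance costs 0.5 per unit
        clearance = np.abs(t - w)
        return -10.0 * (clearance < 1.0) - 0.5 * clearance
    return sample_world, sample_sense, sample_move, gain

def best_margin(visual_sd, margins=np.arange(0.0, 8.01, 0.25)):
    """Search the hypothetical strategy family s(v) = v + m (pass the perceived glass at clearance m)."""
    world, sense, move, gain = make_toy_scene(visual_sd)
    scores = [expected_gain(lambda v, m=m: v + m, gain, world, sense, move) for m in margins]
    return float(margins[int(np.argmax(scores))])

print(best_margin(visual_sd=0.2), best_margin(visual_sd=2.0))  # good light vs candlelight
```

With noisier vision the search should favor a larger clearance, matching the intuition in the text that under candlelight the guest should give the wine glass a wider berth.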

Using the methods described here, we can translate visuo-motor and economic decision-making tasks into a common mathematical language. We can frame movement in economic terms or translate economic tasks into equivalent visuo-motor tasks. Given the societal consequences associated with failures of decision making in economic, military, medical and legal contexts, it is worth investigating decision tasks in domains where we seem to do very well.

Box 1’s Figure 1.

Equivalence of a movement task and decision making under risk. Subjects must touch a computer screen within a short period of time (e.g. 700 ms). Subjects can win 2.5 cents by hitting inside the green circle, lose 12.5 cents by hitting inside the red circle, lose 10 cents by hitting where green and red circle overlap or win nothing by hitting outside the stimulus configuration. Each possible aim point on the computer screen corresponds to a lottery. a) Expected gain for a subject aiming at the center of the green target (aim point indicated by the diamond). Black points indicate simulated end points for a representative subject (with 5.6 mm end point standard deviation); target and penalty circles have radii of 9 mm. This motor strategy yields an expected loss of 2.8 cents/trial. The numbers shown below the target configuration describe the lottery corresponding to this aim point, i.e., the probabilities for hitting inside each region and the associated gain. b) Expected gain for a subject with the same motor uncertainty as in a). Here, we simulate the same subject aiming towards the right of the target center (the diamond) to avoid accidental hits inside the penalty circle. This strategy results in an expected gain of 0.78 cents/trial and corresponds to the strategy (aim point) maximizing expected gain. c) Each possible aim point corresponds to a lottery and has a corresponding expected gain, shown by the grayscale background with four particular aim points highlighted.

Box 2’s Figure 1. Motor decisions from experience.

In the learning phase of the experiment subjects learn to hit targets. Their performance improves until their movement variability has reached a plateau. During training they have the opportunity to learn their own motor uncertainty but nothing about the training task requires that they do so. In the experimental phase subjects plan movements that trade off the risk of incurring penalties against the possible reward of hitting targets. They show little evidence of learning and perform well in the task. This suggests that they can convert what they learned in the training phase into the information needed to plan effective movements under risk: the equivalent of estimating the probabilities of the various outcomes associated with any proposed aim point, followed by a computation of expected gain.

Box 3’s Figure 1.

Example of applying statistical decision theory to modeling goal-directed movement under visual and motor uncertainty. a) A dinner guest, whose arm is shown, intends to pick up the salt shaker at the center of the table with his right hand. An intended trajectory is shown along with a “confidence interval” to indicate the range of other trajectories that might occur. b) The actual executed movement may deviate from the intended and, instead of grasping the salt shaker, the guest may accidentally knock over his full wine glass. c) If executed successfully, the dinner guest will pick up the salt shaker without experiencing social disaster. (Drawings by Andreas Olsson)

Box 3’s Figure 2.

Application of statistical decision theory to complex visuo-motor tasks. The goal is a mapping from sensory input V to a movement plan s(V). Gains and losses g(t,w) are determined by the actual trajectory t executed in the actual state of the world w. The movement plan that maximizes expected gain depends on both visual uncertainty and motor uncertainty. (Here, we follow the convention that random variables are in upper case, e.g. X, while the corresponding specific values that those variables can take on are in lower case, e.g. x.)

Acknowledgments

We thank Marisa Carrasco, Nathaniel Daw, Karl Gegenfurtner and Paul Glimcher for helpful discussion and Andreas Olsson for the drawings. This work was supported by the Deutsche Forschungsgemeinschaft (DFG, Emmy-Noether-Programm, grant TR 528/1–2; 1–3) and NIH EY08266.

References

- 1. Knill DC, Kersten D, Yuille A. Introduction: A Bayesian formulation of visual perception. In: Knill DC, Richards W, editors. Perception as Bayesian Inference. New York: Cambridge University Press; 1996. pp. 1–21.

- 2. Landy MS, et al. Measurement and modeling of depth cue combination: in defense of weak fusion. Vis. Res. 1995;35(3):389–412. doi: 10.1016/0042-6989(94)00176-m.

- 3. Maloney LT. Statistical decision theory and biological vision. In: Heyer D, Mausfeld R, editors. Perception and the Physical World: Psychological and Philosophical Issues in Perception. New York: Wiley; 2002. pp. 145–189.

- 4. Kahneman D, Tversky A. Choices, Values, and Frames. New York: Cambridge University Press; 2000.

- 5. Knight FH. Risk, Uncertainty and Profit. New York: Houghton Mifflin; 1921.

- 6. Trommershäuser J, Maloney LT, Landy MS. Statistical decision theory and the selection of rapid, goal-directed movements. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 2003;20(7):1419–1433. doi: 10.1364/josaa.20.001419.

- 7. Trommershäuser J, Maloney LT, Landy MS. Statistical decision theory and trade-offs in the control of motor response. Spat. Vis. 2003;16(3–4):255–275. doi: 10.1163/156856803322467527.

- 8. Trommershäuser J, et al. Optimal compensation for changes in task-relevant movement variability. J. Neurosci. 2005;25(31):7169–7178. doi: 10.1523/JNEUROSCI.1906-05.2005.

- 9. Trommershäuser J, Landy MS, Maloney LT. Humans rapidly estimate expected gain in movement planning. Psychol. Sci. 2006;17(11):981–988. doi: 10.1111/j.1467-9280.2006.01816.x.

- 10. Kahneman D, Tversky A. Prospect Theory: An analysis of decision under risk. Econometrica. 1979;47:263–291.

- 11. Allais M. Le comportement de l'homme rationnel devant le risque: critique des postulats et axiomes de l'école Américaine. Econometrica. 1953;21:503–546.

- 12. Tversky A, Kahneman D. Advances in Prospect Theory: Cumulative Representation of Uncertainty. J. Risk Uncertain. 1992;5(4):297–323.

- 13. Attneave F. Psychological probability as a function of experienced frequency. J. Exp. Psychol. 1953;46(2):81–86. doi: 10.1037/h0057955.

- 14. Lichtenstein S, et al. Judged frequency of lethal events. J. Exp. Psychol. Hum. Learn. 1978;4:551–578.

- 15. Sedlmeier P, Hertwig R, Gigerenzer G. Are judgments of the positional frequencies of letters systematically biased due to availability? J. Exp. Psychol. Learn. Memory Cogn. 1998;24(3):754–770.

- 16. Daw ND, Doya K. The computational neurobiology of learning and reward. Curr. Opin. Neurobiol. 2006;16(2):199–204. doi: 10.1016/j.conb.2006.03.006.

- 17. Glimcher PW, Rustichini A. Neuroeconomics: the consilience of brain and decision. Science. 2004;306(5695):447–452. doi: 10.1126/science.1102566.

- 18. Gold JI, Shadlen MN. Banburismus and the brain: decoding the relationship between sensory stimuli, decisions, and reward. Neuron. 2002;36(2):299–308. doi: 10.1016/s0896-6273(02)00971-6.

- 19. Montague PR, King-Casas B, Cohen JD. Imaging valuation models in human choice. Annu. Rev. Neurosci. 2006;29:417–448. doi: 10.1146/annurev.neuro.29.051605.112903.

- 20. O'Doherty JP. Reward representations and reward-related learning in the human brain: insights from neuroimaging. Curr. Opin. Neurobiol. 2004;14(6):769–776. doi: 10.1016/j.conb.2004.10.016.

- 21. Sugrue LP, Corrado GS, Newsome WT. Matching behavior and the representation of value in the parietal cortex. Science. 2004;304(5678):1782–1787. doi: 10.1126/science.1094765.

- 22. Sugrue LP, Corrado GS, Newsome WT. Choosing the greater of two goods: neural currencies for valuation and decision making. Nat. Rev. Neurosci. 2005;6(5):363–375. doi: 10.1038/nrn1666.

- 23. Dean M, Wu SW, Maloney LT. Trading off speed and accuracy in rapid, goal-directed movements. J. Vis. 2007;7(5):10, 1–12. doi: 10.1167/7.5.10.

- 24. Hudson TE, Maloney LT, Landy MS. Optimal compensation for temporal uncertainty in movement planning. PLoS Biol. 2008.

- 25. Baddeley RJ, Ingram HA, Miall RC. System identification applied to a visuomotor task: near-optimal human performance in a noisy changing task. J. Neurosci. 2003;23(7):3066–3075. doi: 10.1523/JNEUROSCI.23-07-03066.2003.

- 26. Körding KP, Wolpert DM. Bayesian integration in sensorimotor learning. Nature. 2004;427(6971):244–247. doi: 10.1038/nature02169.

- 27. Schlicht EJ, Schrater PR. Impact of coordinate transformation uncertainty on human sensorimotor control. J. Neurophysiol. 2007;97(6):4203–4214. doi: 10.1152/jn.00160.2007.

- 28. Tassinari H, Hudson TE, Landy MS. Combining priors and noisy visual cues in a rapid pointing task. J. Neurosci. 2006;26(40):10154–10163. doi: 10.1523/JNEUROSCI.2779-06.2006.

- 29. Vaziri S, Diedrichsen J, Shadmehr R. Why does the brain predict sensory consequences of oculomotor commands? Optimal integration of the predicted and the actual sensory feedback. J. Neurosci. 2006;26(16):4188–4197. doi: 10.1523/JNEUROSCI.4747-05.2006.

- 30. Kaminsky T, Gentile AM. Joint control strategies and hand trajectories in multijoint pointing movements. J. Mot. Behav. 1986;18:261–278. doi: 10.1080/00222895.1986.10735381.

- 31. Soechting JF, Lacquaniti F. Invariant characteristics of a pointing movement in man. J. Neurosci. 1981;1(7):710–720. doi: 10.1523/JNEUROSCI.01-07-00710.1981.

- 32. Dornay M, Uno Y, Kawato M, Suzuki R. Minimum Muscle-Tension Change Trajectories Predicted by Using a 17-Muscle Model of the Monkey's Arm. J. Mot. Behav. 1996;28(2):83–100. doi: 10.1080/00222895.1996.9941736.

- 33. Flash T, Hogan N. The coordination of arm movements: an experimentally confirmed mathematical model. J. Neurosci. 1985;5(7):1688–1703. doi: 10.1523/JNEUROSCI.05-07-01688.1985.

- 34. Uno Y, Kawato M, Suzuki R. Formation and control of optimal trajectory in human multijoint arm movement. Minimum torque-change model. Biol. Cybern. 1989;61(2):89–101. doi: 10.1007/BF00204593.

- 35. Alexander RM. A minimum energy cost hypothesis for human arm trajectories. Biol. Cybern. 1997;76(2):97–105. doi: 10.1007/s004220050324.

- 36. Soechting JF, et al. Moving effortlessly in three dimensions: does Donders' law apply to arm movement? J. Neurosci. 1995;15(9):6271–6280. doi: 10.1523/JNEUROSCI.15-09-06271.1995.

- 37. Burdet E, et al. The central nervous system stabilizes unstable dynamics by learning optimal impedance. Nature. 2001;414(6862):446–449. doi: 10.1038/35106566.

- 38. Wu SW, et al. Limits to human movement planning in tasks with asymmetric gain landscapes. J. Vis. 2006;6(1):53–63. doi: 10.1167/6.1.5.

- 39. Maloney LT, Trommershäuser J, Landy MS. Questions without words: A comparison between decision making under risk and movement planning under risk. In: Gray W, editor. Integrated Models of Cognitive Systems. New York: Oxford University Press; 2007. pp. 297–315.

- 40. Daw ND, et al. Cortical substrates for exploratory decisions in humans. Nature. 2006;441(7095):876–879. doi: 10.1038/nature04766.

- 41. Dayan P, Balleine BW. Reward, motivation, and reinforcement learning. Neuron. 2002;36(2):285–298. doi: 10.1016/s0896-6273(02)00963-7.

- 42. Sutton RS, Barto AG. Reinforcement Learning: An Introduction. Cambridge, MA: MIT Press; 1998.

- 43. Meyer DE, et al. Optimality in human motor performance: ideal control of rapid aimed movements. Psychol. Rev. 1988;95(3):340–370. doi: 10.1037/0033-295x.95.3.340.

- 44. Seydell A, et al. Learning stochastic reward distributions in a speeded pointing task. J. Neurosci. 2008;28:4356–4367. doi: 10.1523/JNEUROSCI.0647-08.2008.

- 45. Redelmeier DA, Tversky A. On the Framing of Multiple Prospects. Psychol. Sci. 1992;3(3):191–193.

- 46. Thaler R, Johnson EJ. Gambling with the house money and trying to break even: The effects of prior outcomes on risky choice. Manage. Sci. 1990;36:643–660.

- 47. Hertwig R, et al. Decisions from experience and the effect of rare events in risky choice. Psychol. Sci. 2004;15(8):534–539. doi: 10.1111/j.0956-7976.2004.00715.x.

- 48. Blackwell D, Girshick MA. Theory of Games and Statistical Decisions. New York: Wiley; 1954.

- 49. Duda RO, Hart PE, Stork DG. Pattern Classification. 2nd ed. New York: Wiley; 2001.