Abstract

When making choices under uncertainty, people usually consider both the expected value and the risk of each option, and choose the option with the highest utility. Expected value increases the expected utility of an option for all individuals. Risk increases the utility of an option for risk-seeking individuals, but decreases it for risk-averse individuals. In 2 separate experiments, one involving imperative (no-choice) situations and the other involving choice situations, we investigated how predicted risk and expected value aggregate into a common reward signal in the human brain. Blood oxygen level-dependent responses in lateral regions of the prefrontal cortex increased monotonically with increasing reward value in the absence of risk in both experiments. Risk enhanced these responses in risk-seeking participants, but reduced them in risk-averse participants. The aggregate value and risk responses in lateral prefrontal cortex contrasted with pure value signals independent of risk in the striatum. These results demonstrate an aggregate risk and value signal in the prefrontal cortex that would be compatible with basic assumptions underlying the mean-variance approach to utility.

Keywords: decision making, fMRI, utility, mean-variance approach, neuroeconomics

Subjective value is a central concept in microeconomic and finance theories of decision making (1–8). Decisions occur between choice alternatives (options) that can be certain or uncertain; uncertain options are “risky” when the probabilities of outcomes are known, and “ambiguous” when probabilities are not completely known. Two parameters that influence the subjective value of a risky option are its expected outcome value (first moment of a probability distribution of outcomes) and its risk (e.g., variance as second central moment of such a probability distribution). Theories of economic decision making usually assume that subjective value increases monotonically with expected value. The influence of risk on subjective value may depend on individual risk attitude. Risk-averse individuals assign higher subjective value to an option with lower risk than to an option with higher risk. Risk-seeking individuals show the opposite preference. For example, for a risk-averse individual, a sure gain of 100 dollars has higher subjective value than an option with an equal probability of winning 200 dollars or nothing; the inverse is true for a risk-seeking individual. Risk-averse individuals accept reductions in expected value for reductions in risk, whereas risk-seeking individuals do so for increments in risk. Importantly, according to this scheme, both risk-averse and risk-seeking individuals integrate expected value and risk to choose the option with the highest subjective value. This scheme appears to follow the basic assumptions underlying the mean-variance approach of finance theory, which expresses subjective value in terms of expected value and risk (3).
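To make the example concrete, the short Python sketch below computes the two moments named above, expected value (first moment) and variance (second central moment), for the sure gain of 100 dollars and for the 50/50 gamble between 200 dollars and nothing. It is an illustration only; the helper name `moments` and the (amount, probability) representation are our own choices, not part of the study.

```python
def moments(outcomes):
    """Return (expected value, variance) of a discrete option.

    `outcomes` is a list of (amount, probability) pairs; probabilities must sum to 1.
    """
    if abs(sum(p for _, p in outcomes) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    ev = sum(p * x for x, p in outcomes)               # first moment
    var = sum(p * (x - ev) ** 2 for x, p in outcomes)  # second central moment
    return ev, var


sure_gain = [(100, 1.0)]          # 100 dollars with certainty
gamble = [(200, 0.5), (0, 0.5)]   # 200 dollars or nothing, equal probability

print(moments(sure_gain))  # (100.0, 0.0)
print(moments(gamble))     # (100.0, 10000.0)
```

Both options have the same expected value, so they differ only in risk; whether the sure gain or the gamble has the higher subjective value then depends on risk attitude.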

Expected utility theory and prospect theory provide alternative descriptions of subjective value, and measure it formally through individual preferences between choice options (1, 2, 4). Indeed, these theories often assume that choice provides all necessary information about subjective value. However, it is unclear whether valuation also occurs in “imperative” situations in which the agent is simply assigned one option and no choice is possible. In economics, choice-dependent decision utility has superseded experienced utility, which does not necessarily require choice (9), and the 2 forms of utility can differ (10). However, it cannot be ruled out that utility indicated in the absence of choice might serve as input for decision utility. In behavioral neuroscience, the study of no-choice situations can reveal potential inputs for decision mechanisms. For example, with increasing reward delay, option-related neuronal responses decrease similarly in no-choice and choice situations (11, 12). Thus, these responses could provide a common mechanism for processing reward delays irrespective of whether choice is required or not. However, it is unknown whether the crucial subjective value parameters, expected value and risk, follow a similar scheme.

Separate studies of expected value and risk point to a role of prefrontal cortex and striatum in coding these 2 reward parameters. Expected value coding is indicated by positive monotonic increases of prefrontal and striatal activation with reward magnitude, probability, and their combination (13–17). Risk coding is suggested by increasing prefrontal and striatal activations with reward uncertainty, variance, or volatility (16–21). Lesions of the prefrontal cortex alter behavior in risky situations (22–26). However, it is unclear whether the prefrontal cortex or the striatum is capable of integrating expected value and risk, in either choice or imperative situations. The lateral part of prefrontal cortex appears to be a likely candidate, because it not only processes expected value and risk separately, but is also sensitive to individual differences in attitude toward ambiguity and risk (17, 26–28). Thus, this region appears not only to be sensitive to each of the 2 main components of a utility-like signal, expected value and risk, but also to process risk in a subject-specific way. Here, we tested the possibility that lateral prefrontal cortex integrates these components into a common signal that covaries with both value and risk and depends on the subjective risk attitude.

Results

We used fMRI in 2 separate experiments to investigate whether and how the prefrontal cortex integrates expected value and risk in a risk-attitude-dependent fashion. In experiment 1, we aimed to investigate the basic parameters of expected value and variance as potential inputs to neural decision processes. We used an imperative situation in which we had full control over these parameters (Fig. 1A). Single visual stimuli, associated with different levels of expected value and risk, appeared in one quadrant of the monitor. Participants indicated the quadrant of stimulus appearance with a button press. Experiment 1 used 4 levels of expected value, each with a low- and a high-risk option. In experiment 2, we investigated whether the potential inputs identified in experiment 1 would be used during decisions under uncertainty (Fig. 1B). In each trial, participants chose between a risky and a safe option. Experiment 2 used 2 levels of expected value, each with a low- and a high-risk variant. To reflect the main difference between the 2 experiments, we used well-established imperative and choice-based measures of risk attitude in experiments 1 and 2, respectively. These measures were taken for the levels of expected value and risk used in the task, during or immediately after the task. Thus, we accounted for the possibility that experience may affect risk attitudes (29), and obtained a quantitative measure of risk attitude reflecting individual differences in processing the risk parameters used in the task.

Fig. 1.

Experimental design and behavioral measures of expected value and risk attitude. (A) Imperative task. Single stimuli were presented randomly in one of the 4 quadrants of a monitor for 1.5 s. Participants responded by indicating the quadrant in which stimuli appeared with a button press. Stimuli were associated with different combinations of reward magnitude and probability. (B) Choice task. A safe and a risky monetary choice option were presented randomly on the right and left side of a monitor for 5.5 s. Gambles with 2 levels of expected value and risk were used. The safe option on the left consists of a 100% gain of 45 £; the risky option on the right consists of a 50% gain of either 30 or 90 £ (expected value = 60 £; 2 numbers are used for the safe option to keep visual stimulation comparable with the risky option). Participants chose an option with a button press on presentation of a go-signal. (C) Average change in pleasantness rating resulting from the imperative procedure in all participants as a function of expected value (imperative task; 15 participants, error bars represent SEM). The scale ranged from −5 (very unpleasant) to +5 (very pleasant). (D) Risk attitudes of individual participants in experiment 1. (E) Average certainty equivalents of low and high expected value options with same risk in experiment 2 (14 participants, error bars represent SEM). (F) Risk attitudes of individual participants in experiment 2. Risk attitude was measured as difference between certainty equivalents of the low- and high-risk options.

Behavioral Performance.

In experiment 1, we measured the pleasantness of stimuli before and after the experiment. Pleasantness ratings did not vary across stimuli associated with different reward expected value and risk before the experiment (ANOVA: F1,11 < 0.77, P > 0.67; regression: |r| < 0.12, P > 0.29), but did afterward (F1,11 = 10.01, P < 0.0001), as a function of expected value (r = 0.53, P < 0.0001) (Fig. 1C). Ratings did not vary within pairs of stimuli that had the same expected value but different risk (t14 = −0.67, P > 0.51; paired t test on ratings for low- versus high-risk stimuli, averaged separately for each participant). Reaction time was significantly shorter for the highest compared with the lowest expected reward value (587 ms versus 601 ms, t14 = 3.1, P < 0.05; paired t test). Risk attitudes were quantified by comparing the postexperimental pleasantness ratings of (p = 0.25 + p = 0.75) with that of (p = 1.0) (30). If the first expression is smaller than the second, participants are risk averse; if it is larger, they are risk seeking. Of the 15 participants, 4 were risk averse, 8 risk seeking, and 3 risk neutral [average risk aversion (± SEM): risk averters 4.1 (± 0.3); risk seekers −1.5 (± 0.5); Fig. 1D]. The rating-based (imperative) measure of risk attitude correlated with an independent choice-based measure of risk attitude (r = 0.59, P < 0.05). Thus, participants discriminated the stimuli according to expected value, and differed in their risk attitudes.
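The rating-based classification rule above can be sketched as follows. This is a minimal illustration, not the authors' analysis code: the function name is hypothetical, the ratings in the usage lines are invented, and we infer the sign convention (positive index = risk averse) from the reported group means (risk averters 4.1, risk seekers −1.5). With the default tolerance of zero, only an exact tie counts as risk neutral; how near-ties were handled in the study is not stated here.

```python
def rating_risk_aversion(r_p025, r_p075, r_p100, tol=0.0):
    """Risk-aversion index from postexperimental pleasantness ratings (-5 to +5).

    Compares the rating of the p = 1.0 stimulus with the summed ratings of the
    p = 0.25 and p = 0.75 stimuli. If the sum is smaller, the index is positive
    (risk averse); if it is larger, the index is negative (risk seeking).
    """
    index = r_p100 - (r_p025 + r_p075)
    if index > tol:
        label = "risk averse"
    elif index < -tol:
        label = "risk seeking"
    else:
        label = "risk neutral"
    return index, label


# Hypothetical ratings for three invented participants.
print(rating_risk_aversion(1, 3, 5))  # (1, 'risk averse')
print(rating_risk_aversion(2, 4, 4))  # (-2, 'risk seeking')
print(rating_risk_aversion(1, 3, 4))  # (0, 'risk neutral')
```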

In experiment 2, we measured points of equal preference (certainty equivalents) between adjusted safe options and risky options to quantify the subjective value each participant assigned to risky options (31). Certainty equivalents significantly increased with expected value, keeping risk constant (t13 = 34.4, P < 0.0001) (Fig. 1E). To assess risk attitudes, we used the difference in certainty equivalents between low- and high-risk options, keeping expected value constant. Two participants were risk seeking and 12 risk averse (Fig. 1F). Thus, participants' choice preferences were influenced by both expected value and risk.
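As an illustration of how the certainty-equivalent difference indexes risk attitude, the sketch below assumes, purely for the example, that a participant values options by a mean-variance rule U = EV − b · Var (the approach discussed in the Discussion). Because a safe amount has zero variance, the certainty equivalent of a risky option then equals its utility, and the difference between the low-risk (variance 400 £²) and high-risk (variance 900 £²) options at an expected value of £60 reduces to b × 500. The variances come from the Fig. 3 caption; the weight b and the function names are hypothetical.

```python
def ce_risk_attitude(ce_low_risk, ce_high_risk):
    """Risk attitude as CE(low risk) - CE(high risk), with expected value held constant.

    Positive values indicate risk aversion, negative values risk seeking.
    """
    return ce_low_risk - ce_high_risk


def mean_variance_ce(ev, var, b):
    """Certainty equivalent under an assumed mean-variance utility U = EV - b * Var.

    A safe amount has zero variance, so its utility is the amount itself;
    the safe amount judged equal to a risky option is therefore U.
    """
    return ev - b * var


EV, VAR_LOW, VAR_HIGH = 60.0, 400.0, 900.0  # experiment 2 values (Fig. 3 caption)

for b in (0.01, -0.004):                    # hypothetical risk weights
    attitude = ce_risk_attitude(mean_variance_ce(EV, VAR_LOW, b),
                                mean_variance_ce(EV, VAR_HIGH, b))
    print(b, round(attitude, 6))            # equals b * 500
```

Under this assumption a positive b (risk averse) yields a positive CE difference and a negative b (risk seeking) a negative one, matching the sign convention used for Fig. 1F.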

Value Coding Irrespective of Risk in Lateral Prefrontal Cortex.

First, we reasoned that an expected value-coding region should show increasing activity to safe options with increasing expected value in the absence of any risk. To test this prediction in experiment 1, we regressed responses to stimuli predicting reward of different magnitudes with certainty (p = 1.0) on reward magnitude, and found a significant increase in lateral prefrontal regions (Fig. 2A; P < 0.05, small volume correction in frontal lobe). Activations increased similarly, and did not differ significantly, for risk-seeking and risk-averse individuals (Fig. 2B; P < 0.05 for both risk-averse and risk-seeking participants; P = 0.97 for difference between the 2 groups). In experiment 2, we compared brain activation when participants chose safe options with high as opposed to low magnitude. To control for the possibility of outcome-related activation contaminating choice-related activation, we did not show the outcomes of each choice in this experiment. As with imperative trials of experiment 1, lateral prefrontal activations were significantly stronger for high compared with low magnitude options (Fig. 3A; Fig. S1A; P < 0.001 for all participants). Activations increased similarly for both risk-seeking and risk-averse participants. However, because of the small number of risk-seeking participants (n = 2), the power to make meaningful inferences is very limited; therefore, we show data comparing risk-seeking with risk-averse groups for experiment 2 only in the SI. The lateral prefrontal regions identified in experiments 1 and 2 overlapped (Fig. 3B). Indeed, the activations found in experiment 2 were significant within a region of interest (ROI) defined by only the significantly activated voxels of experiment 1 (P < 0.05, small volume correction). Thus, activations in similar lateral prefrontal regions increase with expected value in the absence of risk, in both imperative and choice situations.
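The parametric test for value coding with safe options used a linear contrast over the 3 safe magnitudes (0, 100, and 200 points; Fig. 2A caption). A minimal sketch of how such zero-sum contrast weights can be built and applied to per-condition estimates is below. The function names and the beta values are hypothetical, and this is a generic illustration rather than the fMRI software pipeline, which is described in the SI.

```python
def linear_contrast(levels):
    """Zero-sum linear contrast weights for parametric levels.

    Weights are mean-centred (so they sum to 0) and scaled so the largest
    absolute weight is 1.
    """
    mean = sum(levels) / len(levels)
    centred = [x - mean for x in levels]
    scale = max(abs(c) for c in centred)
    if scale == 0:
        raise ValueError("levels must not all be equal")
    return [c / scale for c in centred]


def contrast_value(weights, betas):
    """Weighted sum of per-condition estimates (e.g., GLM betas) for one voxel."""
    if len(weights) != len(betas):
        raise ValueError("need one estimate per condition")
    return sum(w * b for w, b in zip(weights, betas))


weights = linear_contrast([0, 100, 200])         # safe options, in points
print(weights)                                   # [-1.0, 0.0, 1.0]
print(contrast_value(weights, [1.0, 2.0, 4.0]))  # hypothetical betas -> 3.0
```

A positive contrast value indicates a response that increases with magnitude; for 3 equally spaced levels the weights reduce to the familiar [−1, 0, 1].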

Fig. 2.

Integrated value and risk coding in lateral prefrontal cortex. (A) Value coding with safe options in experiment 1. Activation in lateral prefrontal cortex increasing with safe reward (peaks at 42/30/10 and 34/44/10; P < 0.05, small volume correction with false discovery rate in frontal lobe). Activations were identified by a linear contrast of the 3 safe options: 0, 100, and 200 points. (B) Increase of BOLD response with expected value in safe options irrespective of risk attitude in experiment 1. Peak activations from cluster shown in A covarying with expected value for both risk-averse and risk-seeking individuals (P < 0.05, simple regressions). The difference between the 2 groups was not significant (P = 0.97, unpaired t test). (C) Risk attitude-dependent activation or suppression of responses to risky options in lateral prefrontal cortex in imperative experiment 1. Time courses of responses were extracted from circled cluster shown in A. Responses to stimuli associated with different levels of risk (average variance of low- and high-risk stimuli = 2.5-k and 20-k points²) were averaged separately for risk-averse and risk-seeking participants and across the 4 levels of expected value of interest (50, 100, 150, and 200 points; average, 125 points). (D) Peak activations from time course analysis shown in A to different pairs of stimuli with same expected value but different risk, averaged separately for risk-averse and risk-seeking participants. Activations were higher with higher risk in risk-seeking participants, and lower in risk-averse participants (risk-averse participants: t = −8.6, P < 0.01; risk-seeking participants: t = 3.1, P < 0.05). Activations increased with expected values (left to right; for all: R² > 0.64, P < 0.06). The groups of participants were split according to the sign of risk attitude.

Fig. 3.

Control analyses. (A) Value coding with safe options in experiment 2. Lateral prefrontal cortex region with stronger activation for higher expected value (peak at 30/52/10; P < 0.05, small volume correction in 10-mm sphere around circled peak voxel at 34/44/10 shown in Fig. 2A defined by experiment 1). (B) Overlap of activations from experiment 1 and 2. To show the full extent of the overlap, activations from A and E were plotted at P < 0.05. Activations from experiment 2 were significant (P < 0.05, small volume correction) within significant voxels of experiment 1. (C and D) Correlation of risk attitude with coding of risk, but not expected value in lateral prefrontal cortex. For this analysis, we varied risk and kept expected value constant or varied expected value and kept risk constant. In clusters shown in A and in Fig. 2A, the high-risk option elicited stronger activation than the low-risk option in risk-seeking individuals, but weaker activation in risk-averse individuals. These activation differences correlated with the degree of individual risk aversion (open squares), in both imperative (C; experiment 1) and choice situations (D; experiment 2) (for experiment 1, the variance of low- and high-risk options was 7.5-k and 22.5-k points², the expected value of both options was 150 points; R² = 0.49, P < 0.01; for experiment 2, the variance of low- and high-risk options was 400 and 900 £², the expected value of both options was 60 £; R² = 0.81, P < 0.0001). Conversely, higher expected value elicited an increase in lateral prefrontal activation that did not covary with risk attitude (filled diamonds; for experiment 1, the expected value of low- and high-value options was 50 and 150 points, the variance of both options was 7.5-k points²; R² = 0.01, P = 0.64; difference between correlations: P = 0.05, z test; for experiment 2, the expected value of low- and high-value options was 30 and 60 £, the variance of both options was 400 £²; R² = 0.06, P = 0.39; difference between correlations: P < 0.01, z test). (E) Comparison of different risk attitude-weighted risk terms in lateral prefrontal cortex in experiment 1. Paired t tests revealed a significantly better fit of brain activation with risk-attitude-weighted variance than skewness (t = 3.34, P < 0.01). The comparisons of skewness with coefficient of variation and SD did not reach significance (t = 1.74, P = 0.11 and t = 2.05, P = 0.06 for coefficient of variation and SD, respectively). To compare different risk terms, we normalized each one of them before multiplying it with negative individual risk attitude in experiment 1 and searching for covariation with brain activation. The analysis was restricted to risk-averse and risk-seeking participants (n = 12), because the equivalent regressors in risk-neutral participants consisted only of zeros and, thus, did not discriminate between different risk terms. Error bars correspond to SEM.
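The variances quoted in this caption follow from the standard formulas for simple gambles, and the sketch below checks them. For experiment 2, the 50% gamble of £30 or £90 is taken from Fig. 1B. The other gambles (for example, winning 200 points with p = 0.25 or p = 0.75, or 300 points with p = 0.5) are our assumptions: they are one set of magnitude and probability combinations consistent with the stated expected values and variances, not stimuli confirmed by the text. The function names are hypothetical.

```python
def binary_gamble(magnitude, p):
    """Win `magnitude` with probability p, otherwise nothing: return (EV, variance)."""
    ev = p * magnitude
    var = magnitude ** 2 * p * (1 - p)
    return ev, var


def fifty_fifty(low, high):
    """Equal chances of `low` or `high`: return (EV, variance)."""
    ev = (low + high) / 2
    var = ((high - low) / 2) ** 2
    return ev, var


# Experiment 1 (points); magnitude/probability pairs are assumed, not given in the text.
print(binary_gamble(200, 0.25))  # (50.0, 7500.0)
print(binary_gamble(200, 0.75))  # (150.0, 7500.0)
print(binary_gamble(300, 0.5))   # (150.0, 22500.0)

# Experiment 2 (pounds); 30/90 is from Fig. 1B, 40/80 and 10/50 are assumed.
print(fifty_fifty(30, 90))  # (60.0, 900.0)
print(fifty_fifty(40, 80))  # (60.0, 400.0)
print(fifty_fifty(10, 50))  # (30.0, 400.0)
```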

Integration of Expected Value and Risk According to Subjective Risk Attitude in Lateral Prefrontal Cortex.

Next, we investigated how the addition of risk would influence the lateral prefrontal activations related to expected value. We therefore not only regressed activations on expected value, but also searched for stronger activations for the high-risk than for the low-risk options in risk-seeking individuals, and weaker activations in risk-averse individuals. Activations in the previously identified lateral region of prefrontal cortex fulfilled this response profile (P < 0.05, small volume correction in frontal lobe). Time course analyses revealed that the high-risk options elicited stronger activations than the low-risk options in the risk-seeking individuals, but weaker activation in the risk-averse individuals (Fig. 2C). The time courses for the low-risk options were similar in the 2 participant groups. Activations increased with expected value in both participant groups (Fig. 2D). The increased risk of the high-risk options boosted or suppressed activations in risk-seeking and risk-averse participants, respectively (Fig. 2D). These results suggest that activations in lateral prefrontal cortex combine expected value and risk.

We asked whether expected value and risk would also combine in the choice situation of experiment 2. The increased risk of the high-risk option resulted in moderate activation increases when chosen by risk-seeking individuals, but in activation suppressions when chosen by risk-averse individuals (Fig. S1B). Activations increased with expected value in both groups of individuals (Fig. S1C). Thus, lateral prefrontal cortex activation appears to combine expected value and risk not only in imperative but also in choice situations.

Risk Coding Dependent on Subjective Risk Attitude in Lateral Prefrontal Cortex.

Above, we observed substantial influences of risk attitude on the integrated expected value and risk signal. Utility theory suggests that risk attitude should primarily influence risk rather than expected value processing. Therefore, utility-related activation should correlate with individual differences in risk attitude only for options that differ in terms of risk but not in terms of expected value. We tested this requirement and found it met by the previously identified lateral prefrontal region (Fig. 3C). The difference in activation elicited by 2 options differing in risk but not expected value correlated negatively with risk aversion (r = −0.70, P < 0.01). Conversely, the differential activation arising from 2 options differing in expected value but not risk did not correlate with risk attitude (r = −0.10, P = 0.64; Fig. 3C). These data suggest that risk, but not expected value coding by lateral prefrontal cortex, is sensitive to risk attitude.

Next we asked whether risk attitude would also primarily affect risk rather than expected value processing in the choice situations of experiment 2. As with the imperative experiment 1, the differential lateral prefrontal activation elicited by 2 options differing in risk, but not expected value correlated negatively with risk aversion (r = −0.90, P < 0.0001; Fig. 3D). Inspection of the correlation showed that lateral prefrontal activation to higher risk decreased continuously with increasing risk aversion. Conversely, the activation difference between 2 options with the same risk, but different expected value did not correlate with risk attitude (r = −0.24, P = 0.39; Fig. 3D). These data indicate that lateral prefrontal activation related to risk depends on risk attitude irrespective of whether choice is a formal task requirement or not.

Risk can be defined, for example, as SD, variance, skewness, and coefficient of variation (SD divided by expected value) (3, 32, 33). Thus, risk attitude could influence different risk terms differentially, and an integrated expected value and risk signal could theoretically be constructed with various risk terms. In experiment 1, we used enough different options to allow at least partial distinction between some of the proposed risk terms. Activation in the lateral prefrontal region showing individual risk-attitude dependent risk coding was similarly responsive to variance, SD, and coefficient of variation, and marginally more responsive to these risk terms than to skewness (Fig. 3E). Importantly, irrespective of the precise risk term used, the basic rationale of the current study holds in that increasing risk decreases the utility of an option for a risk-averse individual, but increases it for a risk-seeking individual.
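To illustrate the risk terms compared here, the sketch below computes variance, SD, coefficient of variation, and skewness for a discrete option, and then builds a risk-attitude-weighted regressor as described in the Fig. 3E caption (each term normalized, then multiplied by the negative individual risk attitude). The caption does not say how the terms were normalized; z-scoring across options is our assumption. The example options reuse assumed gambles consistent with the Fig. 3 caption, and all names are hypothetical.

```python
import math


def risk_terms(outcomes):
    """Variance, SD, coefficient of variation and skewness of a discrete option.

    `outcomes` is a list of (amount, probability) pairs.
    """
    ev = sum(p * x for x, p in outcomes)
    var = sum(p * (x - ev) ** 2 for x, p in outcomes)
    sd = math.sqrt(var)
    cv = sd / ev if ev != 0 else math.nan        # SD divided by expected value
    third = sum(p * (x - ev) ** 3 for x, p in outcomes)
    skew = third / sd ** 3 if sd > 0 else 0.0    # standardized third moment
    return {"variance": var, "sd": sd, "cv": cv, "skewness": skew}


def zscore(values):
    """Normalize values across options (assumed method: population z-score)."""
    mean = sum(values) / len(values)
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    if sd == 0:
        raise ValueError("values must vary across options")
    return [(v - mean) / sd for v in values]


def weighted_regressor(term_values, risk_aversion):
    """Normalized risk term multiplied by the negative risk-aversion index.

    With risk aversion > 0, higher risk lowers the predicted response; with
    risk aversion < 0 (risk seeking), higher risk raises it.
    """
    return [-risk_aversion * z for z in zscore(term_values)]


options = [[(200, 0.25), (0, 0.75)],  # assumed gambles, in points
           [(200, 0.75), (0, 0.25)],
           [(300, 0.5), (0, 0.5)]]
terms = [risk_terms(o) for o in options]
for name in ("variance", "sd", "cv", "skewness"):
    print(name, [round(t[name], 3) for t in terms])
print(weighted_regressor([t["variance"] for t in terms], risk_aversion=2.0))
```

Note that a risk-neutral participant (risk aversion of 0) yields an all-zero regressor, which is why the caption restricts this comparison to risk-averse and risk-seeking participants.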

Contrast with Posterior Striatum.

Previous research reported expected value coding in the striatum (14, 17). To replicate and extend these findings, we asked whether striatal expected value signals would show risk-attitude-dependent changes with risky options. We found that striatal activations increased with expected value irrespective of risk level and risk attitude in the imperative situations of experiment 1 (Fig. 4 A–C). The identified region was located in the posterior striatum, at the border of the globus pallidus and putamen. We also tested for pallidal/putamen expected value and risk coding in the choice situations of experiment 2. As with experiment 1, we found phasic increases in ventral pallidum/putamen activation with increasing expected value, irrespective of risk level and attitude (Fig. S2). These data suggest that ventral pallidum/putamen activity reflects risk-independent expected value in both imperative and choice situations. Importantly, in contrast to the lateral prefrontal cortex, the phasic expected value-related activations in striatum are not modulated by risk, suggesting that not every expected value-sensitive region also displays dependence on risk and risk attitude.

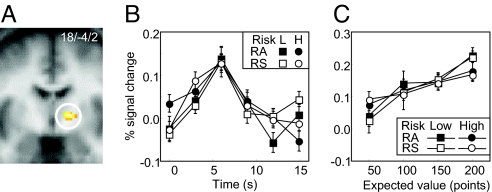

Fig. 4.

Value coding irrespective of risk in striatum. (A) Activation in posterior striatum covarying with increasing expected value (peak at 18/-4/2; P < 0.05, small volume correction with false discovery rate in striatum). (B) Time courses of risk-independent responses in cluster shown in A, averaged separately for risk-averse and risk-seeking participants. Activations were averaged across the 4 levels of expected value (50, 100, 150, and 200 points; average, 125 points). (C) Activation in cluster shown in A to different pairs of stimuli with same expected value but different risk, averaged separately for risk-averse and risk-seeking participants. Peak activations were similar for low and high risk, irrespective of risk attitude (P > 0.66), and increased with expected value (left to right; for all 4 groups: R² > 0.86, P < 0.05). RA, risk averse; RS, risk seeking; L, low; H, high.

Discussion

The present study shows that expected value signals in the lateral prefrontal cortex are reduced by risk in risk-averse individuals, but increase with risk in risk-seeking individuals. Although previous data showed separate coding of expected value and risk, the present results uncover a remarkable integration of risk into expected value signals. Moreover, the integration of expected value and risk in prefrontal reward signals was not restricted to choices, but also occurred in choice-free (imperative) situations. In contrast to the prefrontal integration, expected value signals in the striatum appeared to be insensitive to risk. Together with separate risk signals identified in previous studies, this suggests that striatal mechanisms code the expected value and risk components separately.

Relation to Utility Theory.

The present experiments showed similar ventrolateral prefrontal activations in both imperative and choice situations (experiments 1 and 2, respectively). Formal measurement of expected utility is traditionally based on preferences and therefore requires choice situations. However, it is conceivable that microeconomic decision signals are based on more basic input signals reflecting simple reward parameters such as expected value and risk. As an analogy, sensory systems may give rise to perceptual decision signals based on neuronal correlates of basic sensory parameters such as visual motion or tactile vibration (34, 35). The current data suggest that the lateral prefrontal cortex processes a combined signal of expected value and risk not only in imperative but also in choice situations. Thus, for the lateral prefrontal cortex, similar valuation processes may occur in the absence and presence of overt choice and decision, and valuation may not necessarily require choice. This finding may suggest that experienced and decision utility (10) can rely on similar neuronal mechanisms.

In the mean-variance approach to decision utility developed by finance theory, the expected utility of an option corresponds to its expected value minus its risk-attitude-weighted risk (8, 36, 37). The weight that corresponds to risk attitude assumes positive values for risk-averse and negative values for risk-seeking individuals. The mean-variance approach appears to provide a useful approximation to expected utility and even to prospect theory (32, 36–39). Also, with some classes of utility functions (e.g., quadratic), the expected utility is characterized completely by mean and variance, although these functions have some unrealistic properties. Finally, with normally distributed outcomes, variance is a valid measure of risk and the mean-variance approach to expected utility holds (32), whereas with non-normally distributed outcomes higher moments such as skewness and kurtosis are needed (5). Thus, several lines of evidence suggest that the mean-variance approach to utility is viable, at least under some conditions. The aggregate signal of expected value and risk currently found in the lateral prefrontal cortex could form a neuronal basis for this accepted normative theory of economic decision making.
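The mean-variance rule, and the statement that quadratic utility depends only on mean and variance, can both be checked numerically. In this sketch (all names hypothetical), `b` is the risk-attitude weight described above and `a` is the curvature of an illustrative quadratic utility u(x) = x − a·x². For such a u, E[u(x)] = E[x] − a(Var[x] + E[x]²), so any two options with equal mean and variance have equal expected utility. The example reuses the comparison from the Introduction between a sure 100 and a 50/50 chance of 200 or nothing.

```python
def mean_variance_utility(ev, var, b):
    """U = EV - b * Var. b > 0: risk averse; b < 0: risk seeking; b = 0: risk neutral."""
    return ev - b * var


def expected_quadratic_utility(outcomes, a):
    """E[u(x)] for the illustrative quadratic utility u(x) = x - a * x**2.

    This u only increases for x < 1 / (2 * a), one of the unrealistic
    properties of quadratic utility.
    """
    return sum(p * (x - a * x ** 2) for x, p in outcomes)


def quadratic_from_moments(ev, var, a):
    """The same expected utility computed from mean and variance alone."""
    return ev - a * (var + ev ** 2)


sure = (100.0, 0.0)        # (EV, variance) of 100 for certain
gamble = (100.0, 10000.0)  # 200 or nothing, 50/50

for b in (0.001, -0.001):  # hypothetical risk-attitude weights
    u_sure = mean_variance_utility(*sure, b)
    u_gamble = mean_variance_utility(*gamble, b)
    print(b, "prefers", "sure gain" if u_sure > u_gamble else "gamble")

print(expected_quadratic_utility([(200, 0.5), (0, 0.5)], a=0.001),
      quadratic_from_moments(*gamble, a=0.001))
```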

Standard economic and prospect theories treat utility as a scalar value rather than separating it into constituent elements as endorsed by the mean-variance approach (1, 2, 4). We are agnostic about the specific form of the utility function used by our participants. Any of the utility functions mentioned above is possible; in each of them, an increase in the mean without a change in the variance increases utility. The standard utility theories would explain the presently found behavioral and neuronal sensitivity to risk with considerable nonlinearities of utility functions (i.e., deviations from linear expected value coding). The present results are fully compatible with expected utility coding by the lateral prefrontal cortex. Indeed, although we used only 3 different magnitudes, the responses to safe options (Fig. 2B) tended to show the typical concave and convex functions of reward magnitude in risk-averse and risk-seeking participants, respectively, as would be suggested by expected utility or prospect theory.
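A standard expected utility account attributes risk attitude to the curvature of the utility function. As an illustration only (the text is agnostic about the functional form), the sketch below uses a power utility u(x) = x**alpha, a common parametric family, and computes the certainty equivalent of the 50% gamble of £30 or £90 from Fig. 1B. A concave function (alpha < 1) gives a certainty equivalent below the £60 expected value, that is, risk aversion; a convex one (alpha > 1) gives a certainty equivalent above it. The function name and the alpha values are hypothetical.

```python
def power_utility_ce(outcomes, alpha):
    """Certainty equivalent of a gamble under u(x) = x**alpha (x >= 0, alpha > 0).

    alpha < 1 gives a concave utility (risk averse), alpha > 1 a convex one
    (risk seeking), and alpha = 1 a linear one (risk neutral).
    """
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    expected_utility = sum(p * x ** alpha for x, p in outcomes)
    return expected_utility ** (1 / alpha)  # invert u to get the equivalent sure amount


gamble = [(30, 0.5), (90, 0.5)]  # Fig. 1B risky option, expected value 60 pounds
for alpha in (0.5, 1.0, 1.5):    # hypothetical curvature parameters
    print(alpha, round(power_utility_ce(gamble, alpha), 2))
```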

The mean-variance approach to utility defines risk as variance, whereas in standard economic approaches to utility, the definition of risk depends on the class of utility functions used (40). We compared several alternative risk terms, such as standard deviation, coefficient of variation (33), and skewness, and found comparable relations between lateral prefrontal activation and these terms when weighted with individual risk attitude, except for skewness. Thus, for the presently reported region in lateral prefrontal cortex, the general scheme of integrating expected value and risk may hold irrespective of the exact risk term used, as long as higher moments such as skewness are not considered.

Individual Differences in Risk Processing.

Although other species such as bumblebees and juncos show primarily situation-specific differences in risk attitude (41), the degree to which risk attitudes differ across individuals in similar situations appears to be particularly pronounced in humans. In the present study, individuals differed substantially in their risk attitudes. These differences were expressed in lateral prefrontal activation. The correlation of behavioral and lateral prefrontal blood-oxygen-level-dependent (BOLD) responses to risk concurs with the finding that stimulation of lateral prefrontal regions alters risk attitudes (27, 28). Thus, the present data add to the notion that the analysis of individual differences in brain activation is not only viable but also elucidates basic mechanisms of risky decision making.

In the present study, we measured risk attitudes in imperative and choice tasks. Because these tasks might in principle yield different risk attitudes, it may be important to keep conclusions based on BOLD activity separate for imperative and choice tasks. Nevertheless, for experiment 1, we found that risk attitude correlated well between imperative (rating) and choice situations. This result suggests that, at least in some situations, risk attitude is similar whether it is measured with imperative or choice procedures. One could argue that a task-independent measure of risk attitude, such as a questionnaire about hypothetical gambles, would be more appropriate. However, behavioral economics has become reluctant to use hypothetical and task-independent measures, because experience of the task can profoundly affect risk attitudes (29). Future research is needed to determine the most appropriate measure of risk attitude.

Comparison with Striatum.

Previous research reported increasing striatal responses to increases in expected value and its components, magnitude and probability (14, 16, 17, 42–46). Expected value is a risk-independent measure of the value of choice options. We now show that the currently observed expected value-related striatal activations are insensitive to risk and risk-attitude. It is worth noting that these striatal activations were detected with regression models that tested for phasic activations. Longer-lasting, more tonic activations reflecting risk occur in the striatum, but it is unclear whether they are related to risk-attitude (16). The present designs used short intervals between stimuli and outcomes (experiment 1) or no outcomes at all (experiment 2). The designs were optimized for the detection of phasic signals, more similar to the expected value than risk signals emitted by dopamine neurons (47). With these designs, we identified activations in posterior striatal regions, extending into the globus pallidus. The globus pallidus codes reward, receives inputs primarily from the ventral striatum, and sends reward-related information to the lateral habenula, which in turn innervates dopamine neurons (48, 49). Critically, the distinction between striatal and lateral prefrontal responses suggests that not all expected value-sensitive regions integrate risk. Instead, striatal expected value signals are coded separately from risk, and could form the basis for lateral prefrontal integration of expected value and risk.

Anatomical and functional considerations suggest that the lateral prefrontal cortex may be ideally suited to assign an integrated expected value and risk signal to choice options. It receives input from inferior temporal, orbitofrontal, and cingulate cortex, the amygdala, and the dopaminergic midbrain (50–54). Sensory and object-related information could arrive from inferior temporal cortex (55, 56). Expected value and risk-related information could arrive from orbitofrontal and posterior cingulate cortex, the amygdala, and the dopaminergic midbrain (57–63). In turn, the lateral prefrontal cortex projects to dorsolateral prefrontal and premotor regions and could thus influence behavioral output (51, 54). Neurophysiological studies have shown that single lateral prefrontal neurons use reward information to enhance spatial discrimination, encode reward-based stimulus categories, and integrate reward and response history (64–66). Together, these findings on expected reward value and risk processing in the lateral prefrontal cortex underline the role of this structure as a key component of the brain's decision system. By showing that the lateral prefrontal cortex integrates expected reward value and risk, our data suggest that this region may provide a building block for the computation of utility, as specified by finance and risk-sensitive foraging theories.
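To make the mean-variance scheme referred to above concrete, the following minimal Python sketch scores a gamble as its expected value minus a weighted variance, U = EV − b·Var. The class and function names and the risk weight b are illustrative assumptions, not the model fitted in this study; b > 0 stands for risk aversion, b < 0 for risk seeking, and b = 0 for risk neutrality.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Gamble:
    """A discrete gamble: possible outcomes and their probabilities."""
    outcomes: tuple[float, ...]
    probs: tuple[float, ...]

    def __post_init__(self) -> None:
        if len(self.outcomes) != len(self.probs):
            raise ValueError("outcomes and probs must have the same length")
        if not math.isclose(sum(self.probs), 1.0):
            raise ValueError("probabilities must sum to 1")

    def expected_value(self) -> float:
        # First moment of the outcome distribution.
        return sum(x * p for x, p in zip(self.outcomes, self.probs))

    def variance(self) -> float:
        # Second central moment, used here as the measure of risk.
        ev = self.expected_value()
        return sum(p * (x - ev) ** 2 for x, p in zip(self.outcomes, self.probs))


def mean_variance_utility(gamble: Gamble, b: float) -> float:
    """Illustrative mean-variance utility U = EV - b * Var.

    b > 0 models risk aversion (risk lowers utility), b < 0 risk seeking
    (risk raises utility), and b = 0 risk neutrality (utility equals EV).
    """
    return gamble.expected_value() - b * gamble.variance()


# Hypothetical example options: a sure gain and a 50/50 gamble with equal EV.
sure = Gamble(outcomes=(100.0,), probs=(1.0,))
risky = Gamble(outcomes=(200.0, 0.0), probs=(0.5, 0.5))
for b in (0.001, 0.0, -0.001):
    print(b, mean_variance_utility(sure, b), mean_variance_utility(risky, b))
```

Using the sure gain of 100 dollars and the 50/50 gamble of 200 dollars or nothing from the introduction, both options have an expected value of 100, but the gamble's variance of 10,000 lowers its utility to 90 when b = 0.001 and raises it to 110 when b = −0.001.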

Materials and Methods

For details, see SI Methods.

Fifteen right-handed healthy participants (mean age, 27 years; range, 20–41 years; 8 females) took part in experiment 1, and 14 (mean age, 25 years; range, 20–30 years; 6 females) in experiment 2. Participants were recruited through an advertisement on a local community web site.

Experiment 1 consisted of an imperative paradigm, which allowed us to study the processing of expected value and risk independent of choice. At the beginning of each trial in the main paradigm, a single visual stimulus appeared for 1.5 s in one of the 4 quadrants of the monitor. Participants pressed one of 4 buttons corresponding to the quadrant in which the stimulus appeared. Outcomes appeared 1 s after the stimulus and were shown for 0.5 s. Points served as reward; 4% of the points were paid out to participants as British pence at the end of the experiment. Throughout the experiment, the total of accumulated points was displayed and updated after each reward delivery. We used 4 levels of expected value, which varied between 50 and 200 points in steps of 50. For each of these levels, we used a high- and a low-risk variant with the same expected value, resulting in 8 different stimuli. Trial types alternated randomly. Participants rated the pleasantness of the visual stimuli before and after the experiment on a scale ranging from 5 = very pleasant to −5 = very unpleasant. We quantified probabilistic risk aversion by comparing the postexperimental ratings for (p = 0.25 + p = 0.75) and p = 1.0 (30).

Experiment 2 varied expected value and risk in a choice situation. In each trial, a risky and a safe option appeared for 5.5 s on the right and left side of a fixation cross in the middle of the screen. The risky options consisted of a 50% chance of gaining either a larger or a smaller amount. The safe options consisted of a 100% chance of gaining an intermediate amount. To equate visual stimulation between the safe and the risky options, the same number was displayed twice for the safe option. Participants then chose 1 of these options by button press. No outcome was shown. At the end of the experiment, one trial was chosen randomly and played out to determine participants' payoff in British pounds. For the risky options, we used 2 levels of expected value, £30 and £60, each presented in a low- and a high-risk version. To determine risk attitude, we identified for each risky option the safe amount for which participants were indifferent between the risky and the safe option (certainty equivalent) (31).
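The certainty-equivalent logic of experiment 2 can be summarized in a short Python sketch for 50/50 options. The risk premium (expected value minus certainty equivalent) is positive for risk-averse and negative for risk-seeking participants; if one additionally assumes a mean-variance valuation, CE = EV − b·Var can be solved for the risk weight b. The class and function names and the monetary amounts below are hypothetical, since the specific option values used in experiment 2 are not given here.

```python
from typing import NamedTuple


class FiftyFifty(NamedTuple):
    """Hypothetical risky option paying `high` or `low`, each with probability 0.5."""
    high: float
    low: float

    @property
    def expected_value(self) -> float:
        return (self.high + self.low) / 2

    @property
    def variance(self) -> float:
        # Two equiprobable outcomes: Var = ((high - low) / 2) ** 2.
        return ((self.high - self.low) / 2) ** 2


def risk_premium(option: FiftyFifty, certainty_equivalent: float) -> float:
    """Expected value minus certainty equivalent."""
    return option.expected_value - certainty_equivalent


def implied_variance_weight(option: FiftyFifty, certainty_equivalent: float) -> float:
    """Solve CE = EV - b * Var for b, assuming a mean-variance valuation."""
    if option.variance == 0:
        raise ValueError("a riskless option has zero variance; b is undefined")
    return risk_premium(option, certainty_equivalent) / option.variance


def classify(premium: float, tol: float = 1e-9) -> str:
    """Positive premium: risk averse; negative: risk seeking."""
    if premium > tol:
        return "risk averse"
    if premium < -tol:
        return "risk seeking"
    return "risk neutral"


# Hypothetical option (EV = 30) and certainty equivalents of two participants.
option = FiftyFifty(high=40.0, low=20.0)
for ce in (27.0, 32.0):
    premium = risk_premium(option, ce)
    print(ce, premium, implied_variance_weight(option, ce), classify(premium))
```

For the option paying £40 or £20 (expected value £30, variance 100), a certainty equivalent of £27 gives a risk premium of £3 and b = 0.03 (risk averse), whereas £32 gives a premium of −£2 and b = −0.02 (risk seeking).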

In both experiments, we acquired gradient-echo T2*-weighted echo-planar images (EPIs) with BOLD contrast on a Siemens Sonata 1.5 Tesla scanner (33 slices per volume; repetition time, 2.97 s; echo time, 50 ms; field of view, 192 mm). The in-plane resolution was 3 × 3 mm, with a slice thickness of 2 mm and an interslice gap of 1 mm. Statistical Parametric Mapping (SPM2 and SPM5; Functional Imaging Laboratory, London, U.K.) served to spatially realign the functional data, normalize them to a standard EPI template, and smooth them with an isotropic Gaussian kernel with a full width at half-maximum of 10 mm.

Data were analyzed by constructing a set of stick functions at the time of stimulus presentation (experiment 1) or option presentation (experiment 2). In both experiments, participant-specific movement parameters were modeled as covariates of no interest. The general linear model served to compute trial type-specific betas. Using random-effects analysis, contrasts were entered into t tests and simple regressions. Following the mean-variance approach, contrasts tested for activation that increased with expected value and was further enhanced by higher risk in risk-seeking participants or suppressed by higher risk in risk-averse participants. Correlations of separate expected value and risk contrasts with risk attitude were computed as simple regressions. To control for false positives due to multiple comparisons, we used small volume correction within the frontal lobe (false discovery rate, FDR, P < 0.05). Coordinates of reported voxels are given in Montreal Neurological Institute (MNI) space. The right side of images corresponds to the right side of the brain.
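The first-level analysis described above (stick functions at event onsets convolved with a hemodynamic response function, plus movement covariates and a constant, fitted by least squares) can be sketched in Python with NumPy. This is not SPM code: the double-gamma HRF only approximates SPM's canonical shape, and the 0.1 s sampling grid, the function names, and the simulated onsets are assumptions. Only the repetition time (2.97 s) and the 4 expected value levels of experiment 1 come from the text; for simplicity, the sketch collapses the two risk variants, and its linear contrast weights follow from mean-centering the expected values 50, 100, 150, and 200 points.

```python
import math
import numpy as np


def canonical_hrf(dt: float, length: float = 32.0) -> np.ndarray:
    """Double-gamma HRF sampled every `dt` seconds (approximation, not SPM code).

    A gamma peak (shape 6) minus 1/6 of a gamma undershoot (shape 16).
    """
    t = np.arange(0.0, length, dt)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    hrf = peak - undershoot / 6.0
    return hrf / hrf.sum()  # samples sum to 1


def stick_regressor(onsets, n_scans: int, tr: float, dt: float = 0.1) -> np.ndarray:
    """Unit sticks at event onsets, convolved with the HRF, sampled once per scan."""
    n_fine = int(round(n_scans * tr / dt))
    sticks = np.zeros(n_fine)
    for onset in onsets:
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:  # ignore events outside the acquisition window
            sticks[idx] += 1.0
    response = np.convolve(sticks, canonical_hrf(dt))[:n_fine]
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    return response[scan_idx]


def design_matrix(onsets_by_condition, motion, n_scans: int, tr: float) -> np.ndarray:
    """Columns: one regressor per trial type, motion covariates, constant."""
    task = [stick_regressor(o, n_scans, tr) for o in onsets_by_condition]
    return np.column_stack(task + [motion, np.ones(n_scans)])


def fit_glm(bold: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Ordinary least-squares betas for one voxel time series (or many columns)."""
    betas, *_ = np.linalg.lstsq(X, bold, rcond=None)
    return betas


# Mean-centred expected values (-75, -25, 25, 75 points) divided by 25.
EV_CONTRAST = np.array([-3.0, -1.0, 1.0, 3.0])

if __name__ == "__main__":
    # Hypothetical single-voxel simulation: onsets, motion and betas are invented.
    rng = np.random.default_rng(0)
    tr, n_scans = 2.97, 200
    onsets = 5.0 + np.cumsum(rng.uniform(4.0, 10.0, size=80))
    onsets = onsets[onsets < n_scans * tr - 30.0]
    labels = rng.integers(0, 4, size=onsets.size)  # 4 expected value levels
    X = design_matrix([onsets[labels == k] for k in range(4)],
                      rng.normal(scale=0.1, size=(n_scans, 6)), n_scans, tr)
    true_betas = np.r_[1.0, 2.0, 3.0, 4.0, np.zeros(6), 100.0]
    betas = fit_glm(X @ true_betas, X)
    print("EV contrast:", EV_CONTRAST @ betas[:4])
```

Because the simulated data are noise-free, the least-squares fit should recover the invented betas, and the expected value contrast should come out close to 10 (−3·1 − 1·2 + 1·3 + 3·4). In a real analysis, such per-participant contrast values would then enter the group-level t tests and regressions on risk attitude.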


Acknowledgments.

We thank Peter Bossaerts, Shunsuke Kobayashi, and Krishna Miyapuram for helpful discussions. This work was supported by the Wellcome Trust, the University of Cambridge Behavioral and Clinical Neuroscience Institute funded by a joint award from the Medical Research Council and the Wellcome Trust, the Swiss National Science Foundation, the Roche Research Foundation, and the Greek Government Scholarship Foundation. R.J.D. and W.S. are supported by Wellcome Trust Program grants, and W.S. by a Wellcome Trust Principal Research fellowship.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/cgi/content/full/0809599106/DCSupplemental.

References

- 1. Bernoulli D. Exposition of a new theory on the measurement of risk (Latin original, 1738). Econometrica. 1954;22:23–36.

- 2. Von Neumann J, Morgenstern O. Theory of Games and Economic Behavior. Princeton: Princeton Univ Press; 1944.

- 3. Markowitz HM. Portfolio Selection: Efficient Diversification of Investments. New York: John Wiley and Sons; 1959.

- 4. Kahneman D, Tversky A. Prospect theory: An analysis of decision under risk. Econometrica. 1979;47:263–291.

- 5. Huang C-F, Litzenberger RH. Foundations for Financial Economics. Upper Saddle River, NJ: Prentice-Hall; 1988.

- 6. Glimcher PW, Rustichini A. Neuroeconomics: The consilience of brain and decision. Science. 2004;306:447–452. doi: 10.1126/science.1102566.

- 7. Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9:545–556. doi: 10.1038/nrn2357.

- 8. Tobin J. Liquidity preference as behavior towards risk. Rev Econ Stud. 1958;25:65–86.

- 9. Stigler GJ. The Development of Utility Theory. I. J Polit Econ. 1950;58:307–327.

- 10. Kahneman D, Wakker P, Sarin R. Back to Bentham? Explorations of Experienced Utility. Q J Econ. 1997;112:375–405.

- 11. Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci. 2007;10:1615–1624. doi: 10.1038/nn2013.

- 12. Kobayashi S, Schultz W. Influence of reward delays on responses of dopamine neurons. J Neurosci. 2008;28:7837–7846. doi: 10.1523/JNEUROSCI.1600-08.2008.

- 13. Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. J Neurosci. 2005;25:4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005.

- 14. Yacubian J, et al. Dissociable systems for gain- and loss-related value predictions and errors of prediction in the human brain. J Neurosci. 2006;26:9530–9537. doi: 10.1523/JNEUROSCI.2915-06.2006.

- 15. Plassmann H, O'Doherty J, Rangel A. Orbitofrontal cortex encodes willingness to pay in everyday economic transactions. J Neurosci. 2007;27:9984–9988. doi: 10.1523/JNEUROSCI.2131-07.2007.

- 16. Preuschoff K, Bossaerts P, Quartz S. Neural differentiation of expected reward and risk in human subcortical structures. Neuron. 2006;51:381–390. doi: 10.1016/j.neuron.2006.06.024.

- 17. Tobler PN, O'Doherty JP, Dolan RJ, Schultz W. Reward value coding distinct from risk attitude-related uncertainty coding in human reward systems. J Neurophysiol. 2007;97:1621–1632. doi: 10.1152/jn.00745.2006.

- 18. Critchley HD, Mathias CJ, Dolan RJ. Neural activity in the human brain relating to uncertainty and arousal during anticipation. Neuron. 2001;29:537–545. doi: 10.1016/s0896-6273(01)00225-2.

- 19. Huettel SA, Song AW, McCarthy G. Decisions under uncertainty: Probabilistic context influences activation of prefrontal and parietal cortices. J Neurosci. 2005;25:3304–3311. doi: 10.1523/JNEUROSCI.5070-04.2005.

- 20. Huettel SA, Stowe CJ, Gordon EM, Warner BT, Platt ML. Neural signatures of economic preferences for risk and ambiguity. Neuron. 2006;49:765–775. doi: 10.1016/j.neuron.2006.01.024.

- 21. Behrens TE, Woolrich MW, Walton ME, Rushworth MF. Learning the value of information in an uncertain world. Nat Neurosci. 2007;10:1214–1221. doi: 10.1038/nn1954.

- 22. Bechara A, Tranel D, Damasio H. Characterization of the decision-making deficit of patients with ventromedial prefrontal cortex lesions. Brain. 2000;123:2189–2202. doi: 10.1093/brain/123.11.2189.

- 23. Mobini S, et al. Effects of lesions of the orbitofrontal cortex on sensitivity to delayed and probabilistic reinforcement. Psychopharmacol. 2002;160:290–298. doi: 10.1007/s00213-001-0983-0.

- 24. Sanfey AG, Hastie R, Colvin MK, Grafman J. Phineas gauged: Decision-making and the human prefrontal cortex. Neuropsychologia. 2003;41:1218–1229. doi: 10.1016/s0028-3932(03)00039-3.

- 25. Clark L, Cools R, Robbins TW. The neuropsychology of ventral prefrontal cortex: Decision-making and reversal learning. Brain Cogn. 2004;55:41–53. doi: 10.1016/S0278-2626(03)00284-7.

- 26. Floden D, Alexander MP, Kubu CS, Katz D, Stuss DT. Impulsivity and risk-taking behavior in focal frontal lobe lesions. Neuropsychologia. 2008;46:213–223. doi: 10.1016/j.neuropsychologia.2007.07.020.

- 27. Knoch D, et al. Disruption of right prefrontal cortex by low-frequency repetitive transcranial magnetic stimulation induces risk-taking behavior. J Neurosci. 2006;26:6469–6472. doi: 10.1523/JNEUROSCI.0804-06.2006.

- 28. Fecteau S, et al. Activation of prefrontal cortex by transcranial direct current stimulation reduces appetite for risk during ambiguous decision making. J Neurosci. 2007;27:6212–6218. doi: 10.1523/JNEUROSCI.0314-07.2007.

- 29. Hertwig R, Barron G, Weber EU, Erev I. Decisions from experience and the effect of rare events in risky choice. Psychol Sci. 2004;15:534–539. doi: 10.1111/j.0956-7976.2004.00715.x.

- 30. Wakker P. Separating marginal utility and probabilistic risk aversion. Theory Decis. 1994;36:1–44.

- 31. Luce RD. Utility of Gains and Losses. Mahwah, NJ: Lawrence Erlbaum; 2000.

- 32. Rothschild M, Stiglitz JE. Increasing risk: I. A definition. J Econ Theory. 1970;2:225–243.

- 33. Weber EU, Shafir S, Blais AR. Predicting risk sensitivity in humans and lower animals: Risk as variance or coefficient of variation. Psychol Rev. 2004;111:430–445. doi: 10.1037/0033-295X.111.2.430.

- 34. Newsome WT, Britten KH, Movshon JA. Neuronal correlates of a perceptual decision. Nature. 1989;341:52–54. doi: 10.1038/341052a0.

- 35. Romo R, Hernandez A, Zainos A. Neuronal correlates of a perceptual decision in ventral premotor cortex. Neuron. 2004;41:165–173. doi: 10.1016/s0896-6273(03)00817-1.

- 36. Levy H, Markowitz HM. Approximating expected utility by a function of mean and variance. Am Econ Rev. 1979;69:308–317.

- 37. Caraco T. On foraging time allocation in a stochastic environment. Ecology. 1980;61:119–128.

- 38. Pratt JW. Risk aversion in the small and in the large. Econometrica. 1964;32:122–136.

- 39. Levy H, Levy M. Prospect theory and mean-variance analysis. Rev Financ Stud. 2004;17:1015–1041.

- 40. Ingersoll JE. Theory of Financial Decision Making. Lanham, MD: Rowman and Littlefield Publishers; 1987.

- 41. Caraco T, et al. Risk-sensitivity: Ambient temperature affects foraging choice. Anim Behav. 1990;39:338–345.

- 42. Knutson B, Adams CM, Fong GW, Hommer D. Anticipation of increasing monetary reward selectively recruits nucleus accumbens. J Neurosci. 2001;21:RC159. doi: 10.1523/JNEUROSCI.21-16-j0002.2001.

- 43. Delgado MR, Locke HM, Stenger VA, Fiez JA. Dorsal striatum responses to reward and punishment: Effects of valence and magnitude manipulations. Cogn Affect Behav Neurosci. 2003;3:27–38. doi: 10.3758/cabn.3.1.27.

- 44. Abler B, Walter H, Erk S, Kammerer H, Spitzer M. Prediction error as a linear function of reward probability is coded in human nucleus accumbens. NeuroImage. 2006;31:790–795. doi: 10.1016/j.neuroimage.2006.01.001.

- 45. Dreher JC, Kohn P, Berman KF. Neural coding of distinct statistical properties of reward information in humans. Cereb Cortex. 2006;16:561–573. doi: 10.1093/cercor/bhj004.

- 46. Kable JW, Glimcher PW. The neural correlates of subjective value during intertemporal choice. Nat Neurosci. 2007;10:1625–1633. doi: 10.1038/nn2007.

- 47. Schultz W. Multiple dopamine functions at different time courses. Annu Rev Neurosci. 2007;30:259–288. doi: 10.1146/annurev.neuro.28.061604.135722.

- 48. Pessiglione M, et al. How the brain translates money into force: A neuroimaging study of subliminal motivation. Science. 2007;316:904–906. doi: 10.1126/science.1140459.

- 49. Hong S, Hikosaka O. The globus pallidus sends reward-related signals to the lateral habenula. Neuron. 2008;60:720–729. doi: 10.1016/j.neuron.2008.09.035.

- 50. Ilinsky IA, Jouandet ML, Goldman-Rakic PS. Organization of the nigrothalamocortical system in the rhesus monkey. J Comp Neurol. 1985;236:316–330. doi: 10.1002/cne.902360304.

- 51. Preuss TM, Goldman-Rakic PS. Connections of the ventral granular frontal cortex of macaques with perisylvian premotor and somatosensory areas: Anatomical evidence for somatic representation in primate frontal association cortex. J Comp Neurol. 1989;282:293–316. doi: 10.1002/cne.902820210.

- 52. Ungerleider LG, Gaffan D, Pelak VS. Projections from inferior temporal cortex to prefrontal cortex via the uncinate fascicle in rhesus monkeys. Exp Brain Res. 1989;76:473–484. doi: 10.1007/BF00248903.

- 53. Barbas H, De Olmos J. Projections from the amygdala to basoventral and mediodorsal prefrontal regions in the rhesus monkey. J Comp Neurol. 1990;300:549–571. doi: 10.1002/cne.903000409.

- 54. Petrides M, Pandya DN. Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. Eur J Neurosci. 2002;16:291–310. doi: 10.1046/j.1460-9568.2001.02090.x.

- 55. Tanaka K. Neuronal mechanisms of object recognition. Science. 1993;262:685–688. doi: 10.1126/science.8235589.

- 56. Kreiman G, et al. Object selectivity of local field potentials and spikes in the macaque inferior temporal cortex. Neuron. 2006;49:433–445. doi: 10.1016/j.neuron.2005.12.019.

- 57. McCoy AN, Crowley JC, Haghighian G, Dean HL, Platt ML. Saccade reward signals in posterior cingulate cortex. Neuron. 2003;40:1031–1040. doi: 10.1016/s0896-6273(03)00719-0.

- 58. McCoy AN, Platt ML. Risk-sensitive neurons in macaque posterior cingulate cortex. Nat Neurosci. 2005;8:1220–1227. doi: 10.1038/nn1523.

- 59. Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF. Neural systems responding to degrees of uncertainty in human decision-making. Science. 2005;310:1680–1683. doi: 10.1126/science.1115327.

- 60. Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- 61. Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–870. doi: 10.1038/nature04490.

- 62. Tobler PN, Fiorillo CD, Schultz W. Adaptive coding of reward value by dopamine neurons. Science. 2005;307:1642–1645. doi: 10.1126/science.1105370.

- 63. Herry C, et al. Processing of temporal unpredictability in human and animal amygdala. J Neurosci. 2007;27:5958–5966. doi: 10.1523/JNEUROSCI.5218-06.2007.

- 64. Kobayashi S, Lauwereyns J, Koizumi M, Sakagami M, Hikosaka O. Influence of reward expectation on visuospatial processing in macaque lateral prefrontal cortex. J Neurophysiol. 2002;87:1488–1498. doi: 10.1152/jn.00472.2001.

- 65. Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- 66. Pan X, Sawa K, Tsuda I, Tsukada M, Sakagami M. Reward prediction based on stimulus categorization in primate lateral prefrontal cortex. Nat Neurosci. 2008;11:703–712. doi: 10.1038/nn.2128.
