Abstract

Bimodal bilinguals are hearing individuals who know both a signed and a spoken language. Effects of bimodal bilingualism on behavior and brain organization are reviewed, and an fMRI investigation of the recognition of facial expressions by ASL-English bilinguals is reported. The fMRI results reveal separate effects of sign language and spoken language experience on activation patterns within the superior temporal sulcus. In addition, the strong left-lateralized activation for facial expression recognition previously observed for deaf signers was not observed for hearing signers. We conclude that both sign language experience and deafness can affect the neural organization for recognizing facial expressions, and we argue that bimodal bilinguals provide a unique window into the neurocognitive changes that occur with the acquisition of two languages.

Keywords: Bimodal bilingualism, American Sign Language, Facial expression

1. Introduction

Bimodal bilinguals, fluent in a signed and a spoken language, exhibit a unique form of bilingualism because their two languages access distinct sensory-motor systems for comprehension and production. In contrast, when a bilingual’s languages are both spoken, the two languages compete for articulation (only one language can be spoken at a time), and both languages are perceived by the same perceptual system: audition. Differences between unimodal and bimodal bilinguals have profound implications for how the brain might be organized to control, process, and represent two languages. Bimodal bilinguals allow us to isolate those aspects of the bilingual brain that arise from shared sensory-motor systems from those aspects that are more general and independent of language modality. In addition, bimodal bilinguals provide a new perspective on the interplay between non-linguistic functions and second language acquisition. Acquiring a signed language has been found to enhance several non-linguistic visuospatial abilities that are directly tied to processing requirements for sign language (see Emmorey, 2002, for review). In this article, we discuss the effects of bimodal bilingualism on both behavior and brain organization and report an fMRI study that investigated the perception of facial expression, a domain where experience with sign language is likely to affect functional neural organization.

For unimodal bilinguals, a second language is commonly acquired during childhood because the family moves to another country where a different language is spoken. However, the sociolinguistic, genetic, and environmental factors that lead to acquisition of a signed language are quite different. While the majority of signed language users are deaf, hearing people may acquire a signed language from their deaf signing parents or as a late-acquired second language through instruction and/or immersion in the Deaf¹ community. Moreover, a hearing child exposed from birth to a sign language by deaf family members is typically also exposed to a spoken language from birth by hearing relatives and the surrounding hearing community. Acquisition of a signed language in childhood, but not from birth, is quite rare, as there is no common situation in which a hearing child from a non-signing, hearing family would naturally acquire a signed language. Thus, the majority of research with bimodal bilinguals has been conducted with Children of Deaf Adults (“Codas”), who simultaneously acquired a signed and a spoken language from birth (also known as hearing native signers).²

1.1. Effects of sign language experience on motion processing

In determining whether experience with a signed language impacts non-linguistic visuospatial abilities, it is critical to investigate both deaf and hearing signers to rule out effects that might be due to deafness, rather than to linguistic experience. For example, deaf signers, but not hearing signers, exhibit enhanced visual–spatial abilities in the periphery of vision. Compared to hearing individuals (both signers and non-signers), deaf individuals are better able to detect motion in the periphery (Bavelier et al., 2000, 2001) and to switch attention toward the visual periphery (Proksch & Bavelier, 2002). Thus, although processing sign language critically involves motion processing in the periphery, enhanced processing in this region is linked to auditory deprivation, rather than to sign language experience. This behavioral enhancement is likely due to the fact that deaf individuals must rely more heavily on monitoring peripheral vision in order to detect new information entering their environment.

Although auditory deprivation leads to an enhanced ability to detect and attend to motion in the visual periphery, acquisition of a sign language leads to atypical lateralization of motion processing within the brain. Motion processing is associated with area MT/MST within the dorsal visual pathway, and processing within this region tends to be bilateral, or slightly right-lateralized. However, several studies have found that both hearing and deaf signers exhibit a left hemisphere asymmetry for motion processing (Bavelier et al., 2001; Bosworth & Dobkins, 2002; Neville & Lawson, 1987). Neville (1991) hypothesized that the increased role of the left hemisphere for ASL signers may arise from the temporal coincidence of motion perception and the acquisition of ASL. That is, the acquisition of ASL requires the child to make linguistically significant distinctions based on movement. If the left hemisphere plays a critical role in acquiring ASL, the left hemisphere may come to preferentially mediate the perception of non-linguistic motion as well as linguistically relevant motion.

1.2. Effects of sign language experience on mental imagery

Both hearing and deaf signers exhibit a superior ability to generate and transform mental images. Using versions of the classic Shepard and Metzler (1971) mental rotation task, McKee (1987) and Emmorey, Kosslyn, and Bellugi (1993) found that hearing and deaf signers outperformed hearing non-signers at all degrees of rotation. Further, Talbot and Haude (1993) showed that mental rotation ability was correlated with skill level in American Sign Language (ASL). Emmorey, Klima, and Hickok (1998) hypothesized that enhanced mental rotation skills arise from the need to spatially transform locations within signing space to understand topographic descriptions. In ASL and other signed languages, spatial descriptions are most commonly produced from the signer’s perspective, such that the addressee, who is usually facing the signer, must perform what amounts to a 180° rotation. Recently, Keehner and Gathercole (2007) investigated whether hearing late learners of British Sign Language (BSL) exhibit enhanced performance on a task that simulated this type of spatial transformation. Proficient late learners of BSL were found to outperform matched non-signers on a version of the Corsi block spatial memory task that required 180° mental rotation. In a second experiment, BSL-English bilinguals did not show the typical processing cost associated with mental transformation of these spatial arrays, in contrast to non-signing English speakers. Keehner and Gathercole (2007) concluded that “sign language experience, even when acquired in adulthood by hearing people, can give rise to adaptations in cognitive processes associated with the manipulation of visuospatial information (p. 752).”

In addition, Emmorey et al. (1993) found that both deaf and hearing signers outperformed hearing non-signers on an image generation task in which participants were asked to mentally generate images of a block letter and decide whether a probe X would be covered by the letter, if the letter were actually present. Image generation is an important process that underlies several aspects of sign language processing. For example, within a role shift, signers must direct certain verbs toward a referent that is conceptualized as present, e.g., directing the sign ASK downward toward an imagined child or seated person (Liddell, 1990). ASL classifier constructions that express location and motion information often involve a relatively detailed representation of visual–spatial relationships within a scene (cf. Talmy, 2003), and such explicit encoding may require the generation of detailed visual images.

Using a visual hemifield technique, Emmorey and Kosslyn (1996) replicated the enhanced image generation abilities of Deaf signers and presented evidence suggesting that the enhancement is linked to right hemisphere processing. Consistent with this finding, Emmorey et al. (2005) found that ASL-English bilinguals recruit right parietal cortices when producing English spatial prepositions in a spatial-relations naming task, whereas activation for monolingual English speakers was primarily in left parietal cortex (Damasio et al., 2001). In ASL, spatial relations are most often expressed with locative classifier constructions, rather than lexical prepositions. In these constructions, the hand configuration is morphemic and specifies object type, while the location of the hand in space represents the location of objects in an analogue manner (Emmorey & Herzig, 2003). Both deaf and hearing signers engage parietal cortex bilaterally when producing ASL classifier constructions, and Emmorey et al. (2002) hypothesized that activation within the right parietal cortex is due to the spatial transformation required to map visually perceived or imagined object locations onto the locations of the hands in signing space. Emmorey et al. (2005) argued that the right hemisphere activation observed for bimodal bilinguals when they were speaking English was due to their life-long experience with spatial language in ASL. That is, ASL-English bilinguals, unlike English monolinguals, may process spatial relationships for encoding in ASL, even when the task is to produce English prepositions.

Overall, the evidence indicates that the functional neural organization for both linguistic and non-linguistic processing can be affected by knowledge and use of a signed language. In addition, the bimodal bilingual brain appears to be uniquely organized such that neural organization sometimes patterns with that of deaf signers and sometimes with that of monolingual speakers.

1.3. Effects of sign language experience on face processing: the present study

In ASL, as in many sign languages, facial expressions can be grammaticized and used to mark linguistic structure (Liddell, 1980; Zeshan, 2004). For example, in ASL, raised eyebrows and a slight head tilt mark conditional clauses, while furrowed brows mark wh-clauses. Distinct mouth configurations convey a variety of adverbial meanings when produced simultaneously with an ASL verb (Anderson & Reilly, 1998). Unlike emotional or social facial expressions, grammatical facial expressions have a clear and sharp onset that co-occurs with the beginning of the relevant grammatical structure (Reilly, McIntire, & Bellugi, 1991). During language comprehension, signers must be able to rapidly discriminate among many distinct types of facial expressions. Furthermore, signers fixate on the face of their addressee, rather than track the hands (Siple, 1978). The fact that signers focus on the face and must rapidly identify and discriminate linguistic and affective facial expressions during language perception appears to lead to an enhancement of certain aspects of face processing.

Several studies have shown that both deaf and hearing ASL signers exhibit superior performance on the Benton Test of Face Recognition, compared to non-signers (Bellugi et al., 1990; Bettger, 1992; Bettger, Emmorey, McCullough, & Bellugi, 1997; Parasnis, Samar, Bettger, & Sathe, 1996). The Benton Faces Test assesses the ability to match the canonical view of an unknown face by discriminating among a set of distractor faces presented in different orientations and lighting conditions.

Based on a series of experiments, McCullough and Emmorey (1997) argued that signers’ enhanced performance on the Benton Faces Test is likely due to an enhanced ability to discriminate local facial features. They found that signers did not exhibit superior performance on a task that requires gestalt face processing (the Mooney Faces test) or on a task that requires recognition of a previously seen face (the Warrington Face Recognition test). However, signers outperformed hearing non-signers on a task that required discrimination of subtle differences in local facial features. Both deaf and hearing ASL signers were better able to discriminate between faces that were identical except for a change in the eye and eyebrow configuration. In contrast, the deaf signers outperformed both hearing groups (signers and non-signers) in discriminating changes in mouth configuration, suggesting that lip-reading skill and experience with grammatical facial expressions combine to enhance sensitivity to the shape of the mouth. McCullough and Emmorey (1997) argued that the enhanced face processing skills of signers are most strongly tied to the ability to discriminate among faces that are very similar (as in the Benton Faces Test) and to recognize subtle changes in specific facial features. These skills are most closely tied to recognizing and interpreting linguistic facial expressions (either in ASL or in lip-reading).

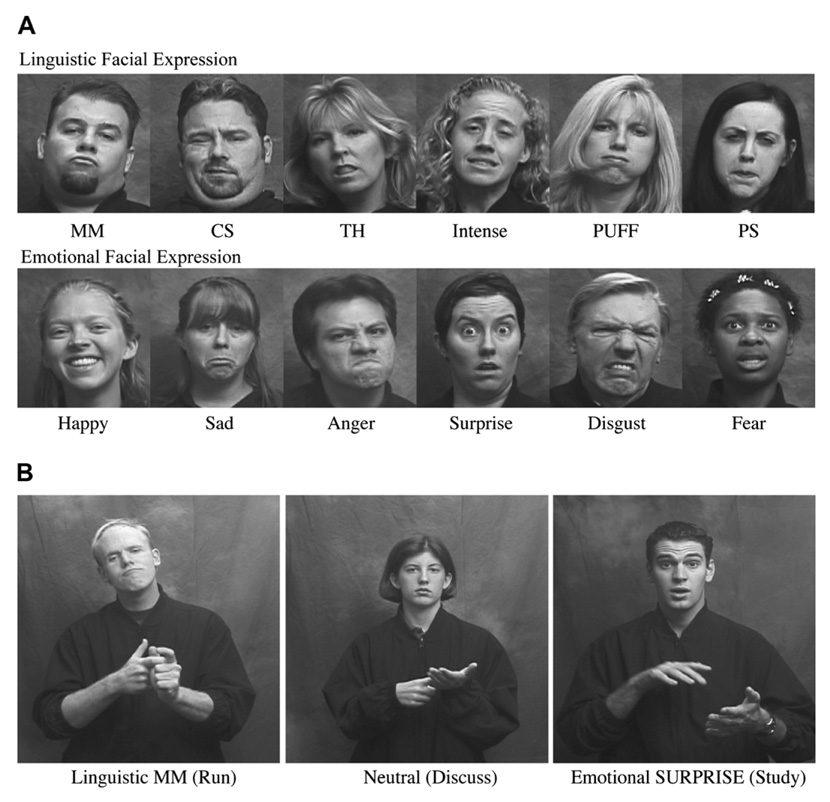

McCullough, Emmorey, and Sereno (2005) investigated the neural regions that support the recognition of linguistic and emotional facial expressions by deaf signers and hearing non-signers. While undergoing functional magnetic resonance imaging (fMRI), participants made same/different judgments to sequentially presented pairs of facial expressions produced by different individuals. The facial expressions were static and blocked by function: linguistic (adverbial markers) or emotional (happy, sad, angry, surprised, fearful). The facial expressions were presented alone (just the face) or in a verb context in which the models produced ASL verbs with linguistic, emotional, or neutral facial expressions (see Fig. 1). Again these were static images, but the verbs were easily recognizable by signers. The latter condition was included because adverbial facial expressions in ASL are bound morphemes that cannot occur in isolation. In the baseline task, participants viewed the same models with neutral facial expressions in the same conditions (face only or while producing a verb) and made same/different judgments based on gender. Two cortical regions of interest (ROIs) that have been strongly associated with face processing were examined: the superior temporal sulcus (STS) and the fusiform gyrus (Haxby, Hoffman, & Gobbini, 2000; Kanwisher, McDermott, & Chun, 1997).

Fig. 1.

(A) Illustration of linguistic and emotional facial expressions in the face only condition. (B) Illustration of linguistic, neutral, and emotional facial expressions in the verb context condition.

McCullough et al. (2005) found strong left hemisphere lateralization for deaf signers within the superior temporal sulcus for linguistic facial expressions, but only when linguistic expressions occurred in the context of a manual verb. Hearing non-signers generally exhibited a right hemisphere asymmetry for processing both linguistic and emotional facial expressions within STS. Somewhat surprisingly, for emotional facial expressions within a verb context, deaf signers exhibited symmetrical activation in STS, which contrasted with the right-lateralized activation observed for hearing non-signers. Since the verb was presented in both the gender-judgment baseline task and in the facial expression judgment task, bilateral activation cannot be due to the presence of the ASL verb. Rather, McCullough et al. (2005) argued that for deaf signers, linguistic processing involves the detection of emotional expressions during narrative discourse (see Reilly, 2000), which may lead to more left hemisphere activation when recognizing emotional facial expressions in a language context. However, it is also possible that the experience that deaf people have in interpreting and recognizing facial affect may differ from that of hearing people, leading to the change in neural organization. For example, the inability to perceive speech and speech prosody may heighten a deaf person’s attention to facial affect when interacting with hearing non-signers, and it could be this experience that leads to left hemisphere activation when recognizing emotional facial expressions in a language/communication context. Therefore, in the study reported here, we used the same experimental stimuli and paradigm to investigate the hemispheric lateralization patterns for hearing native signers.

Within the fusiform gyrus, McCullough et al. (2005) found that deaf signers exhibited a left hemisphere asymmetry for both emotional and linguistic facial expressions in both the face only and the verb context conditions. In contrast, hearing non-signers exhibited bilateral activation with very slight trends toward a rightward asymmetry for all conditions. In addition, Weisberg (2007) found that deaf signers differed from hearing non-signers in the pattern of laterality within the fusiform gyrus when viewing unknown faces. Although no group differences were observed in the left fusiform gyrus, activation within the right fusiform gyrus was significantly lower for deaf signers than for hearing non-signers. McCullough et al. (2005) hypothesized that the local featural processing and categorization demands of interpreting linguistic facial expressions may lead to a left hemisphere dominance for facial expression processing within the fusiform gyrus. Local and global face processing have been shown to preferentially engage the left and the right fusiform gyrus, respectively (Hillger & Koenig, 1991; Rossion, Dricot, Devolder, Bodart, & Crommelinck, 2000). Monitoring and decoding both linguistic and emotional facial expressions during everyday signed conversations may induce a constant competition between local and global processing demands for attentional resources, thus altering efficiency and laterality within the fusiform gyrus for native signers. However, to determine whether the altered lateralization observed for deaf signers is indeed linked to sign language processing, it is necessary to investigate hearing signers who were exposed to ASL from birth.

In sum, within the superior temporal sulcus we predict that hearing native signers will exhibit a right hemisphere asymmetry for emotional facial expressions when presented in isolation, but no asymmetry when presented in a language context—if experience with affective expressions in ASL narratives causes a shift in laterality, as hypothesized by McCullough et al. (2005). We also predict a left hemisphere asymmetry for linguistic facial expressions when presented in a verbal context, paralleling the results with deaf native signers. Within the fusiform gyrus, we predict left-lateralized activation for both expression types in both presentation conditions—if experience with local featural processing of ASL linguistic expressions leads to left hemisphere dominance for face processing in the fusiform.

2. Methods

2.1. Participants

Eleven hearing native ASL signers (4 male; 7 female; mean age = 27.2 years) participated in the experiment. All were born into Deaf signing families, learned ASL from birth, and used ASL in their daily lives (eight were professional interpreters). All participants had attended college, and no participant had a history of neurological illness or affective disorder. All participants were right-handed and had normal or corrected-to-normal vision.

2.2. Materials

The static facial expression stimuli were from McCullough et al. (2005). Sign models produced six linguistic facial expressions, six emotional expressions, and a neutral facial expression (see Fig. 1). The linguistic expressions were: MM (meaning effortlessly), CS (recently), TH (carelessly), INTENSE, PUFF (a great deal or a large amount), and PS (smoothly). The six emotional expressions were happy, sad, anger, disgust, surprise, and fear. The sign models also signed ten different ASL verbs while producing the facial expressions: WRITE, DRIVE, READ, BICYCLE, SIGN, STUDY, RUN, CARRY, DISCUSS, and OPEN. These verbs can be used naturally in conjunction with all of the emotional and linguistic facial expressions.

An LCD video projector and PsyScope software (Cohen, MacWhinney, Flatt, & Provost, 1993) running on an Apple PowerBook were used to back-project the stimuli onto a translucent screen placed inside the scanner. The stimuli were viewed through an adjustable 45° mirror and subtended a visual angle of 10° horizontally and vertically.

2.3. Procedure

The procedure was the same as that used by McCullough et al. (2005). Participants decided whether two sequentially presented facial expressions (produced by different sign models) were the same or different using a button press response. For the baseline task, participants decided whether two sequentially presented sign models (producing neutral facial expressions) were the same or different gender. The face only and verb context conditions were blocked and counter-balanced across participants.

The facial expression task condition (same/different facial expression decision) alternated with the baseline task condition (same/different gender decision). Expression type (emotional or linguistic) was grouped by run, with two runs for each expression type in each stimulus condition (face only and verb context). Each run consisted of eight 32-s blocks alternating between experimental and baseline blocks. Each trial sequentially presented a pair of stimuli, each presented for 850 ms with a 500-ms ISI. At the start of each block, either the phrase ‘facial expression’ or the word ‘gender’ was presented for 1 s to inform participants of the upcoming task. Each run lasted 4 min and 16 s.
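The timing figures in this paragraph can be checked with a few lines of arithmetic. The sketch below is illustrative only: the variable names are ours, and it assumes that the 1-s task cue falls inside each 32-s block and that a trial consists simply of stimulus, ISI, stimulus. The inter-trial interval is not reported, so the trial count per block is an upper bound, not the actual number of trials.

```python
# Block-design timing check (illustrative; names are not from the original study).
N_BLOCKS = 8      # blocks per run, alternating experimental and baseline
BLOCK_S = 32      # block duration in seconds
CUE_S = 1         # task cue at block onset (assumed to be part of the block)
STIM_MS = 850     # duration of each stimulus in a pair
ISI_MS = 500      # interval between the two stimuli of a pair

# Total run length, split into minutes and seconds.
run_s = N_BLOCKS * BLOCK_S
minutes, seconds = divmod(run_s, 60)

# Minimum time occupied by one trial: stimulus + ISI + stimulus.
trial_ms = 2 * STIM_MS + ISI_MS

# Upper bound on trials per block, ignoring any inter-trial interval.
max_trials_per_block = ((BLOCK_S - CUE_S) * 1000) // trial_ms

print(f"run length: {minutes} min {seconds} s")
print(f"minimum trial length: {trial_ms} ms")
print(f"upper bound on trials per block: {max_trials_per_block}")
```

The run length computed this way agrees with the 4 min 16 s stated above, which suggests that no additional lead-in or lead-out period was counted as part of the run.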

2.4. Data acquisition

All structural MRI and fMRI data were obtained using a 1.5-T Siemens Vision MR scanner with a standard clinical whole head coil. Each participant’s head was firmly padded with foam cushions inside the head coil to minimize head movement. For each participant, two high-resolution structural images (voxel dimensions: 1 × 1 × 1 mm) were acquired prior to the fMRI scans using T1-weighted MPRAGE with TR = 11.4, TE = 4.4, FOV 256, and 10° flip angle. These T1-weighted images were averaged post hoc to create a high quality individual anatomical data set for registration and spatial normalization to the atlas of Talairach and Tournoux (1988).

For functional imaging, we acquired 24 contiguous, 5 mm thick coronal slices extending from occipital lobe to mid-frontal lobe (T2*-weighted interleaved multi-slice gradient-echo echo-planar imaging; TR = 4 s, TE = 44 ms, FOV 192, flip angle 90°, 64 × 64 matrix, 3 × 3 × 5 mm voxels). The first two volumes (acquired prior to equilibrium magnetization) from each functional scan were discarded.
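As a consistency check on the functional acquisition parameters, the in-plane resolution and voxel volume can be derived from the field of view and matrix size. This is a minimal sketch with hypothetical variable names; it assumes the FOV is given in millimetres, which the text does not state explicitly.

```python
# Functional acquisition geometry (values from the text; FOV unit assumed to be mm).
FOV_MM = 192
MATRIX = 64
SLICE_THICKNESS_MM = 5
N_SLICES = 24

# In-plane voxel size is the field of view divided by the matrix dimension.
in_plane_mm = FOV_MM / MATRIX

# Volume of a single voxel in cubic millimetres.
voxel_volume_mm3 = in_plane_mm * in_plane_mm * SLICE_THICKNESS_MM

# Extent covered by the contiguous coronal slices along the slice axis.
coverage_mm = N_SLICES * SLICE_THICKNESS_MM

print(f"in-plane resolution: {in_plane_mm} mm")
print(f"voxel volume: {voxel_volume_mm3} mm^3")
print(f"slice-axis coverage: {coverage_mm} mm")
```

Under that assumption, the derived in-plane size matches the reported 3 × 3 × 5 mm voxels, and the slice coverage is consistent with a slab running from the occipital lobe to the mid-frontal lobe, not the whole brain.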

2.5. fMRI data pre-processing and analysis

All fMRI data analyses were carried out with Analysis of Functional Neuroimages (AFNI) software (Cox, 1996). The data were pre-processed and analyzed using the identical protocols described in McCullough et al. (2005) with one exception: AFNI 3dDeconvolve was used instead of 3dfim for individual fMRI statistics. The normalization of the structural images was performed manually by identifying the anterior and posterior commissures, and placing markers on the extreme points of the cortex before transforming the image into Talairach space (see Cox, 1996, for more details). Each participant’s anatomical dataset in the Talairach atlas was later used to delineate the region of interest (ROI) boundaries for the superior temporal sulcus (STS) and the fusiform gyrus (FG) in both hemispheres. We defined the superior temporal sulcus ROI as the area encompassing the upper and lower bank of STS, extending from the temporo-occipital line to the posterior part of temporal pole. The fusiform gyrus was defined as the area bounded by the anterior and posterior transverse collateral sulci, medial occipito-temporal sulcus, and the lateral occipito-temporal sulcus.

All functional scans were corrected for inter-scan head motion using an iterative least-squares procedure that aligns all volumes to the reference volume (the first functional scan acquired immediately after the last structural MRI scan). All volumes were then concatenated and spatially smoothed with a 5-mm FWHM Gaussian kernel prior to the analysis.

The AFNI program 3dDeconvolve, a multiple linear regression analysis program, was used to calculate the estimated impulse response function between the reference functions and the fMRI time series using a time shift of 1–2 TR at each voxel. In addition to the reference functions, nine regressors were included to account for the baseline, linear and quadratic trends, and intra-scan head motion (three translational and three rotational directions). The resulting statistical map was thresholded at p < .001. A combination of AlphaSim (http://www.afni.nimh.nih.gov) and AFNI’s 3dclust was used to derive clusters of significantly activated voxels within the regions of interest (superior temporal sulcus and fusiform gyrus). Monte Carlo simulations generated by AlphaSim determined that a minimum cluster size of 7 voxels (315 mm³) corresponded to p < .05, corrected for multiple comparisons. Voxels surviving this analysis were submitted to statistical comparison of extent of activation. In addition to the ROI analysis using individual data, we created group-level t-maps for each condition. The maps were created by transforming the beta coefficients from all individual functional scans into the Talairach atlas and analyzing them with AFNI’s 3dANOVA program. Response accuracy was recorded via PsyScope software (Cohen et al., 1993).
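To make the structure of the regression model concrete, the sketch below builds a design matrix with one reference function plus the nine nuisance regressors listed above (baseline, linear trend, quadratic trend, and six motion parameters) and fits it by ordinary least squares. It is not AFNI's 3dDeconvolve and does not estimate an impulse response function; it is a plain NumPy illustration. The motion columns and the voxel time series are synthetic, the block order (experimental first) is an assumption, and a one-TR shift of a boxcar is used as a crude stand-in for hemodynamic delay. The last lines reproduce the cluster-volume figure from the voxel dimensions.

```python
import numpy as np

TR_S, BLOCK_S, N_BLOCKS = 4, 32, 8
n_vols = N_BLOCKS * BLOCK_S // TR_S        # volumes in one 256-s run
vols_per_block = BLOCK_S // TR_S           # volumes per 32-s block

# Boxcar reference: 1 during experimental blocks, 0 during baseline blocks.
# ASSUMPTION: each run starts with an experimental block (not stated in the text).
one_cycle = np.r_[np.ones(vols_per_block), np.zeros(vols_per_block)]
boxcar = np.tile(one_cycle, N_BLOCKS // 2)

# Shift by one TR as a rough proxy for hemodynamic lag.
reference = np.roll(boxcar, 1)
reference[0] = 0.0

# Nuisance regressors: baseline, linear trend, quadratic trend.
t = np.linspace(-1.0, 1.0, n_vols)
drift = np.column_stack([np.ones(n_vols), t, t**2])

# SYNTHETIC motion parameters (three translations, three rotations).
rng = np.random.default_rng(0)
motion = rng.normal(scale=0.1, size=(n_vols, 6))

# Full design matrix: 1 reference function + 9 nuisance regressors.
X = np.column_stack([reference, drift, motion])
n_nuisance = X.shape[1] - 1

# SYNTHETIC noise-free voxel time series with a known task effect of 2.0.
true_beta = np.r_[2.0, 100.0, 0.5, -0.3, np.zeros(6)]
y = X @ true_beta

# Ordinary least-squares fit; beta_hat[0] is the task-effect estimate.
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)

# Minimum cluster volume: 7 voxels of 3 x 3 x 5 mm each.
cluster_mm3 = 7 * (3 * 3 * 5)

print(f"nuisance regressors: {n_nuisance}")
print(f"estimated task effect: {beta_hat[0]:.3f}")
print(f"minimum cluster volume: {cluster_mm3} mm^3")
```

Because the synthetic series contains no noise, the fit recovers the task effect exactly up to floating-point error; with real data, the corresponding t statistic would be the quantity thresholded at p < .001.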

3. Results

3.1. Behavioral results

All participants performed well, achieving a mean accuracy of 79% or greater in all conditions (see Table 1). The accuracy levels for hearing ASL signers did not differ significantly from the deaf signers’ and hearing non-signers’ accuracy levels reported in McCullough et al. (2005), F < 1.

Table 1.

Percent correct for same/different judgments of expressions produced by different models

| Group | Emotional: face only | Emotional: verb context | Linguistic: face only | Linguistic: verb context |
|---|---|---|---|---|
| Hearing signers | 83.2 | 82.5 | 79.3 | 82.1 |
| Deaf signers | 81.8 | 83.8 | 79.6 | 85.4 |
| Hearing non-signers | 80.6 | 85.3 | 79.1 | 81.6 |

Data for the deaf signers and hearing non-signers are from McCullough et al. (2005).

3.2. Imaging results

A separate 2 (hemisphere) × 2 (expression type) ANOVA was conducted for each ROI for each stimulus condition using extent of activation with voxel-wise probability ≤ .001 as the dependent measure, following McCullough et al. (2005). Table 2 provides the Talairach coordinates, based on the mean of the activation centroids in each region, and the mean activation volumes for each ROI in each condition. For comparison, data from the deaf native signers and hearing non-signers from McCullough et al. (2005) were reprocessed using the same fMRI analysis protocol (i.e., AFNI’s 3dDeconvolve instead of 3dfim) and are presented as well. As can be seen in Table 2, hearing signers showed smaller activation extents than the other two groups. The reason for this difference is unclear, but it does not affect the relative levels of activation across the two hemispheres, which is the focus of our comparisons.

Table 2.

Brain regions activated when perceiving emotional and linguistic facial expressions relative to baseline, in the face only and verb context conditions (ROI analyses)

| Expression | Region | Hemisphere | Group | Face only: BA | Face only: x | Face only: y | Face only: z | Face only: Vol. (mm³) | Verb context: BA | Verb context: x | Verb context: y | Verb context: z | Verb context: Vol. (mm³) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Emotional | STS/MTG | L | Deaf signers | 22/21 | −55 | −41 | 7 | 1105 | 22/21 | −55 | −38 | 8 | 1608 |
| Emotional | STS/MTG | L | Hearing signers | 22/21 | −57 | −44 | 7 | 378 | 22/21 | −43 | −59 | 7 | 900 |
| Emotional | STS/MTG | L | Hearing non-signers | 22/21 | −53 | −44 | 7 | 986 | 22/21 | −54 | −40 | 5 | 1169 |
| Emotional | STS/MTG | R | Deaf signers | 22/21 | 51 | −43 | 10 | 1539 | 22/21 | 48 | −41 | 10 | 1792 |
| Emotional | STS/MTG | R | Hearing signers | 22/21 | 43 | −45 | 11 | 684 | 22/21 | 52 | −51 | 8 | 1035 |
| Emotional | STS/MTG | R | Hearing non-signers | 22/21 | 53 | −37 | 6 | 2364 | 22/21 | 49 | −38 | 5 | 1828 |
| Emotional | Fusiform gyrus | L | Deaf signers | 37 | −49 | −36 | −10 | 1204 | 37 | −36 | −56 | −12 | 1358 |
| Emotional | Fusiform gyrus | L | Hearing signers | 37 | −44 | −50 | −13 | 198 | 37 | −43 | −43 | −14 | 594 |
| Emotional | Fusiform gyrus | L | Hearing non-signers | 37 | −39 | −54 | −11 | 605 | 37 | −40 | −56 | −12 | 815 |
| Emotional | Fusiform gyrus | R | Deaf signers | 37 | 35 | −57 | −12 | 541 | 37 | 31 | −57 | −11 | 689 |
| Emotional | Fusiform gyrus | R | Hearing signers | 37 | 39 | −44 | −13 | 360 | 37 | 42 | −55 | −17 | 810 |
| Emotional | Fusiform gyrus | R | Hearing non-signers | 37 | 40 | −52 | −14 | 799 | 37 | 43 | −51 | −10 | 830 |
| Linguistic | STS/MTG | L | Deaf signers | 22/21 | −54 | −40 | 8 | 1612 | 22/21 | −54 | −42 | 8 | 2637 |
| Linguistic | STS/MTG | L | Hearing signers | 22/21 | −53 | −59 | 11 | 774 | 22/21 | −53 | −58 | 8 | 828 |
| Linguistic | STS/MTG | L | Hearing non-signers | 22/21 | −55 | −44 | 5 | 934 | 22/21 | −53 | −44 | 7 | 1016 |
| Linguistic | STS/MTG | R | Deaf signers | 22/21 | 50 | −40 | 7 | 1822 | 22/21 | 51 | −42 | 8 | 1071 |
| Linguistic | STS/MTG | R | Hearing signers | 22/21 | 50 | −50 | 4 | 963 | 22/21 | 48 | −50 | 15 | 999 |
| Linguistic | STS/MTG | R | Hearing non-signers | 22/21 | 53 | −47 | 7 | 1685 | 22/21 | 54 | −38 | 5 | 2222 |
| Linguistic | Fusiform gyrus | L | Deaf signers | 37 | −38 | −58 | −13 | 1365 | 37 | −36 | −62 | −12 | 1661 |
| Linguistic | Fusiform gyrus | L | Hearing signers | 37 | −41 | −41 | −14 | 540 | 37 | −46 | −53 | −14 | 396 |
| Linguistic | Fusiform gyrus | L | Hearing non-signers | 37 | −39 | −57 | −12 | 1038 | 37 | −39 | −55 | −13 | 1035 |
| Linguistic | Fusiform gyrus | R | Deaf signers | 37 | 34 | −61 | −11 | 839 | 37 | 35 | −65 | −13 | 681 |
| Linguistic | Fusiform gyrus | R | Hearing signers | 37 | 42 | −38 | −12 | 567 | 37 | 43 | −40 | −14 | 423 |
| Linguistic | Fusiform gyrus | R | Hearing non-signers | 37 | 39 | −54 | −13 | 1233 | 37 | 40 | −53 | −13 | 1209 |

Data for the deaf signers and hearing non-signers were recomputed from McCullough et al. (2005).

3.3. Face only condition

In the superior temporal sulcus (STS), there was a significant main effect of hemisphere with greater right than left hemisphere activation, F(1,10) = 15.57, p = .003. There was no main effect of expression type, F(1,10) = 1.8, p = .199. Although the interaction between hemisphere and expression type did not reach significance, F < 1, planned pairwise comparisons revealed significantly greater right hemisphere activation only for emotional facial expressions, t = 3.503, p = .006. Interestingly, within the STS, the activation peak for linguistic facial expressions was substantially more posterior for hearing ASL signers than that observed for either deaf signers or hearing non-signers (see Table 2).

In the fusiform gyrus, activation was bilateral with no main effect of hemisphere, F < 2, or of expression type, F < 2, and no interaction between these factors, F(1,10) = 3.179, p = .105. Planned pairwise comparisons, however, revealed significantly greater right hemisphere activation for emotional facial expressions, t = 2.47, p = .03.

3.4. Verb context condition

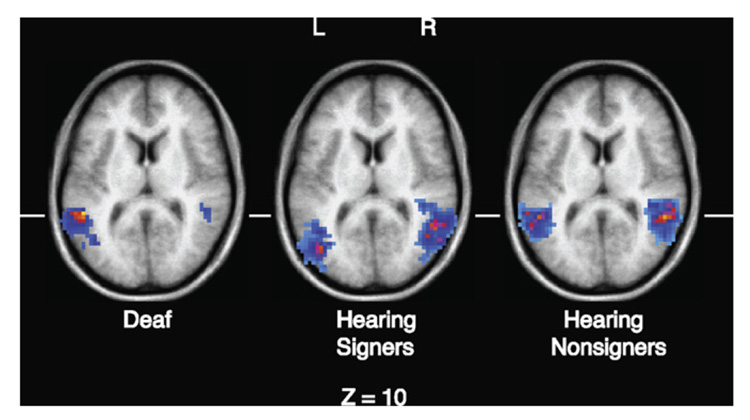

In the superior temporal sulcus, there was no significant main effect of hemisphere, F(1,10) = 4.11, p = .07, or of expression type, F < 1, and no significant interaction, F < 1. Planned comparisons also revealed no significant hemispheric asymmetry for either emotional or linguistic facial expressions, t < 2 for each expression type. However, the STS activation peak for both linguistic and emotional facial expressions was more posterior for hearing signers than for either deaf signers or hearing non-signers (see Table 2). Fig. 2 illustrates this more posterior STS activation for linguistic expressions in the verb context, comparing hearing signers with deaf signers and hearing non-signers. For clarity, only neural activation within the STS/MTG regions is shown.

Fig. 2.

Illustration of the more posterior activation for hearing signers within the superior temporal sulcus for linguistic facial expressions in the verb context condition, from the whole brain analysis. For a more accurate comparison of the three groups, the data for the deaf signers and hearing non-signers from McCullough et al. (2005) were reprocessed using the identical fMRI analysis protocol.

In the fusiform gyrus, there was a significant main effect of hemisphere: activation was right-lateralized for both types of facial expression, F(1,10) = 7.48, p < .05. Although there was no interaction between hemisphere and facial expression type, F(1,10) = 2.914, p = .119, planned pairwise comparisons revealed a significant right hemisphere asymmetry for emotional facial expressions, t = 2.63, p < .05, but no asymmetry for linguistic facial expressions, t < 1.

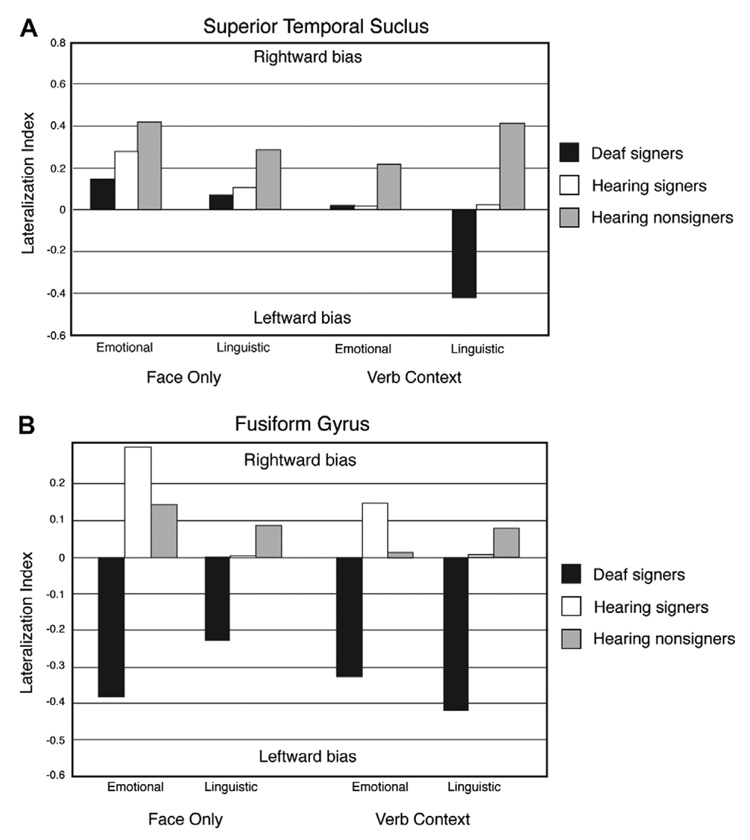

Finally, to illustrate the pattern of hemispheric asymmetries for hearing signers in comparison to deaf signers and hearing non-signers, we calculated a lateralization index for each ROI based on the number of activated voxels above a threshold of p < .001 (see Table 2 and Fig. 3) following McCullough et al. (2005). The lateralization index (LI) for each ROI was computed according to the formula LI = (VolR − VolL)/(VolR + VolL), where VolL and VolR represent the mean numbers of activated voxels across the group in the left and right hemispheres. These laterality indices, depicted in Fig. 3, thus provide a visual illustration of group differences in hemispheric asymmetry across conditions. Positive and negative index values represent rightward and leftward bias, respectively.

Fig. 3.

Lateralization indices for the total number of voxels in the left vs. right STS (A) and left vs. right fusiform gyrus (B) for each participant group. The indices are calculated using the group means from Table 1.
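The lateralization index defined above can be sketched in a few lines of Python. This is a minimal illustration of the formula LI = (VolR − VolL)/(VolR + VolL) only, not the analysis code used in the study; the function name and the voxel counts are hypothetical and chosen purely to show how the sign of the index maps onto rightward and leftward bias.

```python
def lateralization_index(vol_left: float, vol_right: float) -> float:
    """Return LI = (VolR - VolL) / (VolR + VolL).

    vol_left and vol_right are the mean numbers of activated voxels
    in the left and right hemisphere ROI, respectively.
    """
    total = vol_left + vol_right
    if total == 0:
        # With no activated voxels in either hemisphere the index is undefined.
        raise ValueError("no activated voxels in either hemisphere; LI is undefined")
    return (vol_right - vol_left) / total


# Hypothetical voxel counts (left, right), for illustration only;
# these are NOT values from the study.
examples = {
    "right-biased": (100.0, 300.0),
    "left-biased": (300.0, 100.0),
    "bilateral": (200.0, 200.0),
}

for label, (vol_l, vol_r) in examples.items():
    print(f"{label}: LI = {lateralization_index(vol_l, vol_r):+.2f}")
```

The index is bounded between −1 (all activated voxels in the left hemisphere) and +1 (all in the right hemisphere), with 0 indicating equal voxel counts in the two hemispheres.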

4. Discussion

The results suggest that both sign language experience and deafness can affect the neural organization for recognizing facial expressions. All groups tended toward a right hemisphere asymmetry in the superior temporal sulcus for facial expressions when they were presented in isolation (i.e., with just a face). When recognizing emotional facial expressions in an ASL verb context, hearing signers (bimodal bilinguals) patterned like deaf ASL signers and exhibited no hemispheric asymmetry within STS (see Fig. 3A). In contrast, non-signers showed a right hemisphere bias in this condition. These findings lend support to McCullough et al.’s (2005) hypothesis that processing emotional expressions within a sign language context engages the left hemisphere for signers.

However, it was surprising that hearing signers did not show the same strong left hemisphere asymmetry that McCullough et al. (2005) observed for deaf signers when linguistic facial expressions occurred in a verb context. On the other hand, hearing signers did not show the right hemisphere asymmetry observed for the non-signing group in this condition either. Thus, their lateralization pattern fell “in-between” that of deaf signers (leftward asymmetry) and hearing non-signers (rightward asymmetry). One possible explanation is that linguistic processing of ASL facial expressions reduces the general right hemisphere preference for face processing, but does not create a full shift to left hemisphere preferential processing. For deaf signers, left hemisphere processing of linguistic facial expressions may be more strongly left-lateralized because deaf signers must attend to the mouth both for ASL adverbial expressions (see Fig. 1) and for lip-reading. It is possible that when a facial expression is clearly linguistic (as in the verb context), deaf signers tend to engage the left hemisphere more strongly than hearing signers because of their more extensive experience visually processing linguistic mouth patterns (either lip-reading English or perceiving ASL).

As can be seen in Fig. 2 and in Table 2, left hemisphere activation in the STS for linguistic facial expressions was 14–19 mm more posterior for hearing signers compared to both deaf signers and hearing non-signers. In addition, left hemisphere activation for emotional expressions within a linguistic context was more posterior by 19–21 mm compared to the other two groups. In contrast, when emotional expressions were presented in isolation (face only), the location of activation was quite similar for all three groups (see Table 2), although the groups were not contrasted statistically. This pattern of activation suggests that when hearing native signers interpret language-related facial expressions, they recruit more posterior regions within left STS. This hypothesis is consistent with the results of MacSweeney et al. (2002), who reported that hearing native signers demonstrated less extensive activation within left superior temporal regions when comprehending British Sign Language compared to deaf native signers. MacSweeney et al. (2002) argued that auditory processing of speech has privileged access to more anterior regions within left superior temporal cortex, such that hearing signers engage these regions much less strongly during sign language processing. In contrast, these anterior regions are recruited for sign language processing by deaf native signers for whom auditory input has been absent since birth (see also Petitto et al., 2000). Thus, we suggest that activation within STS for sign language-related facial expressions is shifted posteriorly for hearing signers compared to deaf signers because more anterior regions may preferentially process auditory language input.

We speculate that hearing non-signers do not exhibit such posterior activation because facial expressions are not interpreted as language related. That is, hearing non-signers do not need to segregate the neural processing of sign-related facial expressions from other facial expressions, and therefore more anterior regions within STS can support both auditory speech processing and the perception of facial expressions (either emotional or unfamiliar ASL expressions). Capek et al. (2008) found that sign-related facial expressions (mouth patterns within ‘echo phonology,’ Woll, 2001) activated more posterior regions within STS than speech-related mouthings for deaf signers. We hypothesize that hearing signers, unlike either hearing non-signers or deaf signers, exhibit more posterior activation for sign-related facial expressions (particularly those that involve the mouth) because of the need to distinguish between speech-related expressions and expressions within a sign language context. Although deaf signers who lip-read also must make a distinction between speech-related expressions and sign-related expressions (see Capek et al., 2008), they are free to recruit more anterior STS regions relative to hearing signers when they comprehend sign-related expressions because these regions do not compete with auditory speech processing. For hearing signers, the preference within anterior STS for auditory language processing found by MacSweeney et al. (2002) may create a posterior shift along left STS, such that processing sign-related facial expressions (either linguistic expressions or emotional expressions produced with an ASL verb) engages more posterior regions compared to deaf signers and also compared to hearing non-signers who do not need to segregate two types of language-related facial expressions (speech vs. sign).

Within the fusiform gyrus, the neural response for hearing signers was not left-lateralized across conditions and expression types, as was found for deaf signers (see Fig. 3B). Rather, the lateralization pattern for hearing signers was similar to that of non-signers, with an even stronger right hemisphere bias for emotional facial expressions. Thus, the left hemisphere asymmetry found for deaf signers does not arise solely from their experience with local featural processing of ASL facial expressions. The behavioral data from McCullough and Emmorey (1997) indicated that deaf signers outperformed both hearing signers and non-signers when identifying changes in mouth configuration, suggesting that training with lip-reading may be pivotal to enhancing processing of facial features (the mouth, in particular). A greater reliance on local featural processing of both faces and facial expressions within the left fusiform gyrus for deaf signers may be due to their extensive experience with lip-reading, perhaps in conjunction with their experience processing ASL linguistic facial expressions.

5. General discussion

Bimodal bilingualism can uniquely affect brain organization for language and non-linguistic cognitive processes. Our findings along with those of MacSweeney et al. (2002) suggest that bimodal bilinguals (hearing signers) recruit more posterior regions within left superior temporal cortex than deaf signers when comprehending sign language. This different neural organization is hypothesized to arise from preferential processing of auditory speech within more anterior STS regions and possibly from the need to segregate auditory speech processing from sign language processing within this region (see also Capek et al., 2008). Thus, the neural substrate that supports sign language comprehension for bimodal bilinguals is not identical to that of deaf signers. It is not clear whether bimodal bilingualism affects the neural organization for the perception of spoken language, but as noted in the introduction, the neural systems engaged during the production of spatial language in English (prepositions) can be affected by native knowledge and use of ASL (Emmorey et al., 2005). Furthermore, Neville et al. (1998) found that when reading English, bimodal bilinguals exhibited a distinct neural response compared to monolingual English speakers, displaying less robust activation in left posterior temporal regions. It appears that ASL-English bilinguals exhibit patterns of neural activation during language processing that are unique, differing from both monolingual English speakers and from deaf ASL signers.

In addition, our results suggest that within the superior temporal sulcus, bimodal bilinguals process emotional facial expressions more bilaterally compared to monolingual non-signers, but only when emotional expressions appear in a language context. In contrast, within the fusiform gyrus, bimodal bilinguals pattern more like monolingual English speakers, exhibiting bilateral or right hemisphere biased activation for recognizing facial expressions. Only deaf signers exhibit a consistent left hemisphere bias for facial expression recognition (see Fig. 3B). In this case, neural reorganization appears to arise from experiences associated with deafness, which may involve a greater reliance on lip-reading and perhaps greater attention to the face for social cues in all contexts. In contrast, bimodal bilinguals can attend to the vocal prosody of spoken language and may rely less on facial cues, compared to deaf signers.

In a broader context, bimodal bilinguals offer an unusual opportunity to investigate the nature of the bilingual brain and the cognitive neuroscience of second language acquisition. Thus far, almost all research has been conducted with sign-speech bilinguals who acquired both languages early in life (Codas). The one existing study comparing hearing native and late-learners of ASL found that acquisition of ASL from birth was associated with recruitment of the right angular gyrus during sign language comprehension (Newman, Bavelier, Corina, Jezzard, & Neville, 2002). What remains to be determined is whether late acquisition of a sign language leads to other specific functional changes in the brain within either linguistic or non-linguistic cognitive domains. In addition, we can ask whether structural changes occur with sign language acquisition that are similar to those found for unimodal bilinguals (e.g., Mechelli et al., 2004). It is possible that the changes observed for unimodal bilinguals (e.g., increased gray matter in the left inferior parietal lobule) arise in part because the sensory-motor systems are shared for comprehension and production in the two languages. On the other hand, changes may arise because of the acquisition of a large new lexicon and/or the acquisition of new morpho-syntactic processes. Clearly, hearing signers should not be viewed simply as a language control group for deaf signers. Rather, the study of bimodal bilinguals is likely to give rise to novel insights into the plasticity and specialization of brain structures that support language.

Footnotes

This research was supported by NIH Grants R01 HD13249 and R01 HD047736 awarded to Karen Emmorey and San Diego State University. We thank Jill Weisberg for helpful comments on an earlier draft of this article, and we are particularly grateful to all of the bimodal bilinguals who participated in the study.

Following convention, lowercase deaf refers to audiological status, and uppercase Deaf is used when membership in the Deaf community and use of a signed language is at issue.

Members of this group of bimodal bilinguals commonly identify themselves as “Codas,” a term which indicates a cultural identity defined in part by shared experiences growing up in a Deaf family (e.g., Preston, 1994).

References

- Anderson DE, Reilly J. PAH! The acquisition of adverbials in ASL. Sign Language and Linguistics. 1998;1–2:117–142.

- Bavelier D, Brozinsky C, Tomann A, Mitchell T, Neville H, Liu G. Impact of early deafness and early exposure to sign language on the cerebral organization for motion processing. Journal of Neuroscience. 2001;21(22):8931–8942. doi: 10.1523/JNEUROSCI.21-22-08931.2001.

- Bavelier D, Tomann A, Hutton C, Mitchell T, Corina D, Liu G, et al. Visual attention to the periphery is enhanced in congenitally deaf individuals. Journal of Neuroscience. 2000;20(17):RC93. doi: 10.1523/JNEUROSCI.20-17-j0001.2000.

- Bellugi U, O’Grady L, Lillo-Martin D, O’Grady M, van Hoek K, Corina D. Enhancement of spatial cognition in deaf children. In: Volterra V, Erting C, editors. From gesture to language in hearing and deaf children. New York: Springer-Verlag; 1990. pp. 278–298.

- Bettger J. The effects of experience on spatial cognition: Deafness and knowledge of ASL. Champaign-Urbana: University of Illinois; 1992.

- Bettger J, Emmorey K, McCullough S, Bellugi U. Enhanced facial discrimination: Effects of experience with American Sign Language. Journal of Deaf Studies & Deaf Education. 1997;2(4):223–233. doi: 10.1093/oxfordjournals.deafed.a014328.

- Bosworth RG, Dobkins KR. Visual field asymmetries for motion processing in deaf and hearing signers. Brain and Cognition. 2002;49(1):170–181. doi: 10.1006/brcg.2001.1498.

- Capek CM, Waters D, Woll B, MacSweeney M, Brammer M, McGuire P, et al. Hand and mouth: Cortical correlates of lexical processing in British Sign Language and speech reading English. Journal of Cognitive Neuroscience. 2008;20(7):1–15. doi: 10.1162/jocn.2008.20084.

- Cohen JD, Macwhinney B, Flatt M, Provost J. Psyscope: A new graphic interactive environment for designing psychology experiments. Behavioral Research Methods, Instruments, and Computers. 1993;25:257–271.

- Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Damasio H, Grabowski TJ, Tranel D, Ponto LL, Hichwa RD, Damasio AR. Neural correlates of naming actions and of naming spatial relations. NeuroImage. 2001;13(6 Pt 1):1053–1064. doi: 10.1006/nimg.2001.0775.

- Emmorey K. Language, cognition, and the brain: Insights from sign language research. Mahwah, NJ: Lawrence Erlbaum Associates, Inc; 2002.

- Emmorey K, Damasio H, McCullough S, Grabowski T, Ponto LL, Hichwa RD, et al. Neural systems underlying spatial language in American Sign Language. NeuroImage. 2002;17(2):812–824.

- Emmorey K, Grabowski T, McCullough S, Ponto LL, Hichwa RD, Damasio H. The neural correlates of spatial language in English and American Sign Language: A PET study with hearing bilinguals. NeuroImage. 2005;24(3):832–840. doi: 10.1016/j.neuroimage.2004.10.008.

- Emmorey K, Herzig M. Categorical versus gradient properties of classifier constructions in ASL. In: Emmorey K, editor. Perspectives on classifier constructions in signed languages. Mahwah, NJ: Lawrence Erlbaum Associates, Inc.; 2003. pp. 222–246.

- Emmorey K, Klima E, Hickok G. Mental rotation within linguistic and non-linguistic domains in users of American Sign Language. Cognition. 1998;68(3):221–246. doi: 10.1016/s0010-0277(98)00054-7.

- Emmorey K, Kosslyn S. Enhanced image generation abilities in deaf signers: A right hemisphere effect. Brain and Cognition. 1996;32:28–44. doi: 10.1006/brcg.1996.0056.

- Emmorey K, Kosslyn SM, Bellugi U. Visual-imagery and visual spatial language: Enhanced imagery abilities in deaf and hearing ASL signers. Cognition. 1993;46(2):139–181. doi: 10.1016/0010-0277(93)90017-p.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4(6):223–233. doi: 10.1016/s1364-6613(00)01482-0.

- Hillger LA, Koenig O. Separable mechanisms in face processing: Evidence from hemispheric specialization. Journal of Cognitive Neuroscience. 1991;3:42–58. doi: 10.1162/jocn.1991.3.1.42.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17(11):4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Keehner M, Gathercole SE. Cognitive adaptations arising from non-native experiences of sign language in hearing adults. Memory & Cognition. 2007;35(4):752–761. doi: 10.3758/bf03193312.

- Liddell S. American Sign Language syntax. New York: Mouton; 1980.

- Liddell S. Four functions of a locus: Re-examining the structure of space in ASL. In: Lucas C, editor. Sign language research, theoretical issues. Washington, DC: Gallaudet University Press; 1990. pp. 176–198.

- MacSweeney M, Woll B, Campbell R, McGuire PK, David AS, Williams SC, et al. Neural systems underlying British Sign Language and audio-visual English processing in native users. Brain. 2002;125(Pt 7):1583–1593. doi: 10.1093/brain/awf153.

- McCullough S, Emmorey K. Face processing by deaf ASL signers: Evidence for expertise in distinguishing local features. Journal of Deaf Studies & Deaf Education. 1997;2(4):212–222. doi: 10.1093/oxfordjournals.deafed.a014327.

- McCullough S, Emmorey K, Sereno M. Neural organization for recognition of grammatical and emotional facial expressions in deaf ASL signers and hearing nonsigners. Cognitive Brain Research. 2005;22(2):193–203. doi: 10.1016/j.cogbrainres.2004.08.012.

- McKee D. An analysis of specialized cognitive functions in deaf and hearing signers. University of Pittsburgh; 1987.

- Mechelli A, Crinion J, Noppeney U, O’Doherty J, Ashburner J, Frackowiak RS, et al. Structural plasticity in the bilingual brain. Nature. 2004;431:757. doi: 10.1038/431757a.

- Neville HJ. Whence the specialization of the language hemisphere? In: Mattingly IG, Studdert-Kennedy M, editors. Modularity and the motor theory of speech perception. Hillsdale, NJ: Lawrence Erlbaum Associates; 1991. pp. 269–294.

- Neville H, Bavelier D, Corina D, Rauschecker J, Karni A, Lalwani A, et al. Cerebral organization for language in deaf and hearing subjects: Biological constraints and effects of experience. Proceedings of the National Academy of Sciences of the United States of America. 1998;95:922–929. doi: 10.1073/pnas.95.3.922.

- Neville HJ, Lawson D. Attention to central and peripheral visual space in a movement detection task: An event-related potential and behavioral study. II. Congenitally deaf adults. Brain Research. 1987;405(2):268–283. doi: 10.1016/0006-8993(87)90296-4.

- Newman AJ, Bavelier D, Corina D, Jezzard P, Neville HJ. A critical period for right hemisphere recruitment in American Sign Language processing. Nature Neuroscience. 2002;5(1):76–80. doi: 10.1038/nn775.

- Parasnis I, Samar VJ, Bettger J, Sathe K. Does deafness lead to enhancement of visual spatial cognition in children? Negative evidence from deaf nonsigners. Journal of Deaf Studies & Deaf Education. 1996;1:145–152. doi: 10.1093/oxfordjournals.deafed.a014288.

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, Evans AC. Speech-like cerebral activity in profoundly deaf people processing signed languages: Implications for the neural basis of human language. Proceedings of the National Academy of Sciences of the United States of America. 2000;97(25):13961–13966. doi: 10.1073/pnas.97.25.13961.

- Preston P. Mother Father Deaf: Living between sound and silence. Cambridge, MA: Harvard University Press; 1994.

- Proksch J, Bavelier D. Changes in the spatial distribution of visual attention after early deafness. Journal of Cognitive Neuroscience. 2002;14(5):687–701. doi: 10.1162/08989290260138591.

- Reilly JS. Bringing affective expression into the service of language: Acquiring perspective marking in narratives. In: Emmorey K, Lane H, editors. The signs of language revisited: An anthology to honor Ursula Bellugi and Edward Klima. Mahwah: Lawrence Erlbaum Associates; 2000. pp. 415–432.

- Reilly JS, McIntire ML, Bellugi U. Baby face: A new perspective on universals in language acquisition. In: Siple P, Fischer SD, editors. Theoretical issues in sign language research. Vol. 2. Chicago, IL: The University of Chicago Press; 1991. pp. 9–23.

- Rossion B, Dricot L, Devolder A, Bodart JM, Crommelinck M. Hemispheric asymmetries for whole-based and part-based face processing in the human fusiform gyrus. Journal of Cognitive Neuroscience. 2000;12:793–802. doi: 10.1162/089892900562606.

- Shepard R, Metzler J. Mental rotation of three-dimensional objects. Science. 1971;171:701–705. doi: 10.1126/science.171.3972.701.

- Siple P. Visual constraints for sign language communication. Sign Language Studies. 1978;19:97–112.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme; 1988.

- Talbot KF, Haude RH. The relationship between sign language skill and spatial visualization ability: Mental rotation of three-dimensional objects. Perceptual and Motor Skills. 1993;77:1387–1391. doi: 10.2466/pms.1993.77.3f.1387.

- Talmy L. The representation of spatial structure in spoken and signed language. In: Emmorey K, editor. Perspectives on classifier constructions in sign languages. Mahwah, NJ: Lawrence Erlbaum & Associates; 2003. pp. 169–195.

- Weisberg J. The functional anatomy of spatial and object processing in deaf and hearing populations. Washington, DC: Georgetown University; 2007.

- Woll B. The sign that dares to speak its name: Echo phonology in British Sign Language (BSL). In: Boyes-Braem P, Sutton-Spence R, editors. The Hands are the Head of the Mouth: The mouth as articulator in sign languages. Vol. 39. Hamburg: Signum-Verlag; 2001. pp. 87–98.

- Zeshan U. Interrogative constructions in signed languages: Crosslinguistic perspectives. Language. 2004;80(1):7–39.