Abstract

Two experiments investigated categorical perception (CP) effects for affective facial expressions and linguistic facial expressions from American Sign Language (ASL) for Deaf native signers and hearing non-signers. Facial expressions were presented in isolation (Experiment 1) or in an ASL verb context (Experiment 2). Participants performed ABX discrimination and identification tasks on morphed affective and linguistic facial expression continua. The continua were created by morphing end-point photo exemplars into 11 images, changing linearly from one expression to another in equal steps. For both affective and linguistic expressions, hearing non-signers exhibited better discrimination across category boundaries than within categories for both experiments, thus replicating previous results with affective expressions and demonstrating CP effects for non-canonical facial expressions. Deaf signers, however, showed significant CP effects only for linguistic facial expressions. Subsequent analyses indicated that order of presentation influenced signers' response time performance for affective facial expressions: viewing linguistic facial expressions first slowed response time for affective facial expressions. We conclude that CP effects for affective facial expressions can be influenced by language experience.

Keywords: Categorical perception, Facial expressions, American Sign Language, Deaf signers, Visual discrimination

1. Introduction

In his book “The Expression of the Emotions in Man and Animals”, Darwin (1872) noted that Laura Bridgman, a woman who had been deaf and blind since early childhood, spontaneously produced a wide range of affective facial expressions that she had never been able to observe in others. This case was one of the intriguing arguments Darwin put forward in support of the evolutionary underpinnings of human facial expressions. Since then, an extensive body of research, including seminal cross-cultural studies by Ekman and colleagues (Ekman, 1994; Ekman & Friesen, 1971; Ekman et al., 1987), has provided empirical support for the evolutionary basis of affective facial expression production. Yet the developmental and neural mechanisms underlying the perception of facial expressions are still not fully understood. The ease and speed with which facial expressions are perceived and categorized suggest a highly specialized system or systems and raise several questions. Is the ability to recognize and categorize facial expressions innate? If so, is this ability limited to affective facial expressions? Can the perception of affective facial expressions be modified by experience? If so, how and to what extent? This study attempts to address some of these questions by investigating categorical perception of facial expressions in Deaf¹ users of American Sign Language, a population for whom the use of facial expression is required for language production and comprehension.

Categorical perception (CP) is a psychophysical phenomenon that occurs when a uniform, continuous change along a continuum of perceptual stimuli is perceived as a series of discontinuous variations. More specifically, stimuli are perceived as qualitatively similar within categories and as different across categories. A familiar example of categorical perception is the demarcation of hues in the rainbow spectrum: humans with normal vision perceive discrete color categories within a continuum of uniform, linear changes in light wavelength (Bornstein & Korda, 1984). The difference between a green and a yellow hue is more easily perceived than the difference between two shades of yellow, even when the wavelength differences are exactly the same. Livingston, Andrews, and Harnad (1998) argued that this phenomenon results from compression (differences among stimuli in the same category are minimized) and expansion (differences among stimuli in different categories are exaggerated) in the perceived stimuli relative to a perceptual baseline. These compression and expansion effects may reduce continuous perceptual stimuli into simple, relevant, and manageable chunks for further cognitive processing and concept formation.
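To make the compression and expansion account concrete, the toy sketch below passes a physical continuum through a logistic warping and compares the perceived differences for two pairs that are equally far apart physically. The logistic form, the boundary at 0.5, and the steepness value are illustrative assumptions, not a model proposed by Livingston et al. (1998).

```python
import math


def perceived_position(x: float, boundary: float = 0.5, steepness: float = 10.0) -> float:
    """Map a physical position x in [0, 1] to a hypothetical perceived position.

    The logistic curve is flat far from the boundary (compression) and steep
    near it (expansion). Boundary and steepness values are illustrative only.
    """
    return 1.0 / (1.0 + math.exp(-steepness * (x - boundary)))


def perceived_difference(x1: float, x2: float) -> float:
    """Perceived distance between two stimuli under the toy warping."""
    return abs(perceived_position(x2) - perceived_position(x1))


# Two pairs with the same physical separation (0.2 units).
within = perceived_difference(0.0, 0.2)  # both stimuli on the same side of 0.5
across = perceived_difference(0.4, 0.6)  # the pair straddles the boundary at 0.5
print(f"within-category: {within:.3f}  across-category: {across:.3f}")
```

With these settings the across-category pair comes out roughly 0.462 apart and the within-category pair roughly 0.041 apart, so equal physical steps are perceived as very unequal once they cross the boundary.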

Recently, several studies have indicated that linguistic category labels play a role in categorical perception (Gilbert, Regier, Kay, & Ivry, 2006; Roberson, Damjanovic, & Pilling, 2007; Roberson, Pak, & Hanley, 2008). Roberson and Davidoff (2000) found that verbal interference eliminates CP effects for color, although this effect was not observed when participants were unable to anticipate the verbal interference task (Pilling, Wiggett, Özgen, & Davies, 2003). Roberson et al. (2008) found CP effects in Korean speakers, but not in English speakers, for color categories that correspond to standard color words in Korean but not in English. Roberson et al. (2007) proposed that linguistic labels may activate a category prototype, which biases perceptual judgments of similarity (see also Huttenlocher, Hedges, & Vevea, 2000).

Investigation of the CP phenomenon in various perceptual and cognitive domains has provided insights into the development and workings of the mechanisms underlying different cognitive processes. For example, our understanding of the development of speech perception continues to evolve through a large body of CP studies involving voice-onset time (VOT). VOT studies have shown that English listeners have a sharp phoneme boundary between /ba/ and /pa/, which differ primarily in the onset time of laryngeal vibration (Eimas, Siqueland, Jusczyk, & Vigorito, 1971; Liberman, Harris, Hoffman, & Griffith, 1957). Other speech CP studies have shown that 6-month-old infants can easily discern phonemic boundaries from languages not spoken by their families. For example, infants from Japanese-speaking families can distinguish /l/ and /r/ sounds, which are difficult for adult speakers of Japanese to distinguish; however, by the time they reach 1 year of age, this ability diminishes (Eimas, 1975). Bates, Thal, Finlay, and Clancy (2003) argue that the decline in the ability to discern phonemic contrasts that are not in one's native language coincides neatly with the first signs of word comprehension, suggesting that language learning can result in low-level perceptual changes. In addition, Iverson et al. (2003) used multidimensional scaling to analyze the perception of phonemic contrasts by Japanese, German, and American native speakers and found that the perceptual sensitivities formed within the native language corresponded directly to group differences in perceptual saliency for within- and between-category acoustic variation in English /r/ and /l/ segments.

Similar effects of linguistic experience on categorical perception have been found for phonological units in American Sign Language (ASL). Using computer-generated continua of ASL hand configurations, Emmorey, McCullough, and Brentari (2003) found that Deaf ASL signers exhibited CP effects for visually presented, phonologically contrastive handshapes, whereas hearing individuals with no knowledge of sign language showed no evidence of CP effects (see Brentari, 1998, for discussion of sign language phonology). Baker, Idsardi, Golinkoff, and Petitto (2005) replicated these findings using naturally produced stimuli and an additional set of contrastive ASL handshapes. Because categorical perception occurred only for specific learned hand configurations, these studies show that CP effects can be induced by learning a language in a different modality and that these effects emerge independently of low-level perceptual contours or sensitivities.

1.1. Categorical perception for facial expression

Many studies have found that affective facial expressions are perceived categorically when presented in an upright, canonical orientation (Calder, Young, Perrett, Etcoff, & Rowland, 1996; Campanella, Quinet, Bruyer, Crommelinck, & Guerit, 2002; de Gelder, Teunisse, & Benson, 1997; Etcoff & Magee, 1992; Herpa et al., 2007; Kiffel, Campanella, & Bruyer, 2005; Roberson et al., 2007). Campbell, Woll, Benson, and Wallace (1999) undertook a study to investigate whether CP effects for facial expressions extend to learned facial actions. They examined whether Deaf signers, hearing signers, and hearing non-signers exhibited CP effects for syntactic facial expressions marking yes-no and Wh-questions in British Sign Language (BSL) and for similar affective facial expressions: surprised and puzzled. Syntactic facial expressions are specific linguistic facial expressions that signal grammatical contrasts. Yes-no questions in BSL (and in ASL) are marked by raised brows, and Wh-questions are marked by furrowed brows. Campbell et al. (1999) found no statistically significant CP effects for these BSL facial expressions for any group. However, when participants from each group were analyzed individually, 20% of the non-signers, 50% of the Deaf signers, and 58% of the hearing signers demonstrated CP effects for BSL syntactic facial expressions. Campbell et al. (1999) suggested that CP effects for BSL expressions may be present but weak for both signers and non-signers. In contrast, all groups showed clear CP effects for the continuum of surprised-puzzled facial expressions.

Campbell et al. (1999) acknowledged several possible methodological problems with their study. First, the groups differed significantly in the age at which BSL was acquired: the mean age of acquisition was 20 years for the hearing signers and 7 years for the Deaf signers. Several studies have shown that the age at which a sign language is acquired is critical and has a lifelong impact on language proficiency (e.g., Mayberry, 1993; Newport, 1990). Second, and perhaps more importantly, only six images were used to create the stimulus continua (two end-points and four intermediates). With so few images, the steps between adjacent stimuli were large, which makes the category boundaries and CP effects observed somewhat suspect.

1.2. Facial expressions in American Sign Language

The present studies address these methodological issues and investigate whether categorical perception extends to linguistic facial expressions from American Sign Language. Although ASL and BSL are mutually unintelligible languages, both use facial expressions to signal grammatical structures such as Wh-questions, yes-no questions, and conditional clauses, as well as adverbials (Baker-Shenk, 1983; Liddell, 1980; Reilly, McIntire, & Bellugi, 1990a). Linguistic facial expressions differ from affective expressions in their scope, their timing, and the facial muscles that are used (Reilly, McIntire, & Bellugi, 1990b). Linguistic facial expressions have a clear onset and offset and are tightly coordinated with specific parts of the signed sentence. These expressions are critical for interpreting the syntactic structure of many ASL sentences. For example, both Wh-questions and Wh-phrases are accompanied by a furrowed brow, which must be timed to co-occur with the manually produced clause, and syntactic scope is indicated by the location and duration of the facial expression. Similarly, yes-no questions are distinguished from declarative sentences by raised eyebrows, which must be timed with the onset of the question.

Naturally, ASL signers also use their face to convey affective information. Thus, when perceiving visual linguistic input, signers must be able to quickly discriminate and process different linguistic and affective facial expressions concurrently to understand signed sentences. As a result, ASL signers have a very different perceptual and cognitive experience with the human face compared to non-signers. Indeed, behavioral studies have suggested that this experience leads to specific enhancements in face processing abilities. ASL signers (both deaf and hearing) performed significantly better than non-signers when memorizing faces (Arnold & Murray, 1998), discriminating faces under different spatial orientations and lighting conditions (Bettger, Emmorey, McCullough, & Bellugi, 1997), and discriminating local facial features (McCullough & Emmorey, 1997). Given these differences in the perceptual and cognitive processing of faces, we hypothesize that, because of their unique experience with human faces, ASL signers may have developed internal representations of facial expressions that differ from those of non-signers.

Using computer-morphing software, we conducted two experiments investigating categorical perception of facial expressions by Deaf signers and hearing non-signers. We hypothesized that both groups would exhibit categorical perception for affective facial expressions and that only Deaf signers would exhibit a CP effect for linguistic facial expressions. If the hearing group also demonstrates CP effects for linguistic facial expressions, this would suggest that ASL facial expressions may originate from natural categories of facial expression, perhaps based on affective or social facial displays. If neither group demonstrates a CP effect for linguistic facial expressions, this would suggest that categorical perception of facial expressions is restricted to affective expressions, whose perception may be innate and domain specific.

2. Experiment 1: Yes-no/Wh-questions and angry/disgust facial expressions

2.1. Method

2.1.1. Participants

Seventeen Deaf native signers (4 males, 13 females; mean age = 23 years) and 17 hearing non-signers (5 males, 12 females; mean age = 20 years) participated. All of the Deaf native signers had Deaf parents and learned ASL from birth. All were prelingually deaf and used ASL as their preferred means of communication. None of the hearing participants had any knowledge of ASL. All participants were tested either at Gallaudet University in Washington, DC, or at the Salk Institute for Biological Studies in San Diego, California.

2.1.2. Materials

Black-and-white photographs were taken of a model certified in the Facial Action Coding System (FACS; Ekman & Friesen, 1978) producing two affective facial expressions (angry, disgust) and two linguistic facial expressions (Wh-question, yes-no question). These photographs were then used as end-points for creating the morphed facial expression continua (see Figs. 1 and 2). The affective expressions angry and disgust were chosen because they involve similar changes in facial musculature, and because the angry expression involves furrowed brows, like the linguistic expression marking Wh-questions. The linguistic facial expressions (Fig. 2) are normally produced with accompanying manual signs; however, they were presented with just the face (no upper body) in order to replicate and extend previous studies that used face-only stimuli in categorical perception paradigms.

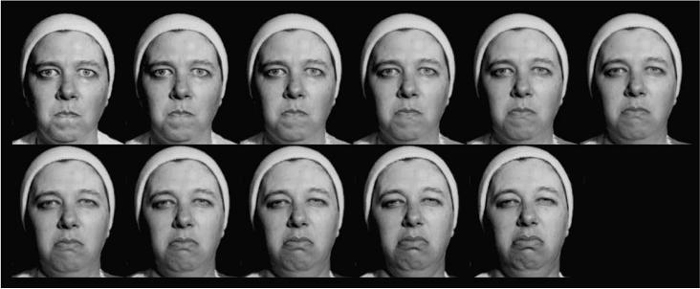

Fig. 1.

Illustration of morphed affective facial expression continuum: angry/disgust.

Fig. 2.

Illustration of morphed linguistic facial expression continuum: Wh-question/yes-no question.

2.1.2.1. Morphing procedure

VideoFusion™ software was used to produce 11-image continua of equally spaced morphs, each consisting of the two original end-point images and nine intermediate images. The protocol for setting the image control points was nearly identical to the procedure described in Beale and Keil (1995). A total of 240 control points were positioned manually on the end-point images for the linguistic facial expressions, and approximately the same number was used for the affective facial expressions. Anatomical landmarks (e.g., the corners of the mouth or eyes) served as locations for control point placement. See Beale and Keil (1995) for details concerning control point placement and morphing procedures.
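The geometric core of a landmark-based morph can be sketched as linear interpolation of matched control points, with blend weights k/10 for k = 0, ..., 10 yielding the 11 images. This is a minimal sketch that assumes purely linear interpolation; it omits the image warping and intensity cross-dissolve that a full morph also performs, it does not reproduce VideoFusion's actual algorithm, and the landmark coordinates are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def morph_weights(n_images: int = 11) -> List[float]:
    """Blend weights for an n-image continuum that includes both end-points."""
    return [k / (n_images - 1) for k in range(n_images)]


def interpolate_control_points(start: List[Point], end: List[Point], t: float) -> List[Point]:
    """Move each control point a fraction t of the way from start to end."""
    if len(start) != len(end):
        raise ValueError("end-point images need the same number of control points")
    return [((1 - t) * xa + t * xb, (1 - t) * ya + t * yb)
            for (xa, ya), (xb, yb) in zip(start, end)]


# Hypothetical pixel coordinates for two landmarks (e.g., a mouth corner and a brow point).
angry = [(120.0, 210.0), (95.0, 80.0)]
disgust = [(118.0, 220.0), (95.0, 90.0)]

# One list of control-point positions per image in the 11-image continuum.
continuum = [interpolate_control_points(angry, disgust, t) for t in morph_weights()]
```

Because the weights are evenly spaced, each landmark moves the same distance between consecutive images, which is what "equally spaced" means for the geometry of the continuum.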

2.1.3. Procedure

Two tasks, discrimination and identification, were used to determine whether participants demonstrated CP effects for a specific continuum, and the overall task design was identical to that of Beale and Keil (1995). Instructions and stimuli were presented on an Apple PowerMac with a 17-in. monitor using PsyScope software (Cohen, MacWhinney, Flatt, & Provost, 1993). For the Deaf signers, instructions were also presented in ASL by an experimenter who was a fluent ASL signer. The presentation order of the linguistic and affective continua was counterbalanced across participants. The discrimination task (an “ABX” matching-to-sample paradigm) was always presented before the identification task. Instructions and practice trials were given before each task.

On each trial, participants were shown three images in succession. The first two images (A and B) were always two steps apart along the linear continuum (e.g., 1-3, 5-7) and were displayed for 750 ms each. The third image (X) was always identical to either the first or the second image and was displayed for 1 s. A 1-s inter-stimulus interval (ISI; a blank white screen) separated consecutive stimuli. Participants pressed either the “A” or the “B” key on the computer keyboard to indicate whether the third image was more similar to the first image (A) or to the second image (B). All nine two-step pairings of the 11 images were presented in each of four orders (ABA, ABB, BAA, and BAB), resulting in 36 combinations. Each combination was presented twice to each participant, and the resulting 72 trials were fully randomized within each continuum. The relatively long ISI and two-step pairings were chosen to maximize the probability of obtaining categorical perception effects in the discrimination task.
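The ABX trial structure described above can be generated as follows. This is a hypothetical sketch: the function names, the fixed random seed, and the convention that A denotes the lower-numbered image of each pair are illustrative choices, and the experiment itself was run in PsyScope rather than with this code.

```python
import random
from typing import List, Tuple

Trial = Tuple[int, int, int]  # (first image, second image, probe X)


def abx_trials(n_images: int = 11, step: int = 2, repetitions: int = 2,
               seed: int = 0) -> List[Trial]:
    """Build a shuffled ABX trial list for one continuum."""
    # Two-step pairs along the continuum: (1, 3), (2, 4), ..., (9, 11).
    pairs = [(i, i + step) for i in range(1, n_images - step + 1)]
    trials: List[Trial] = []
    for low, high in pairs:
        # Orders ABA, ABB, BAA, BAB, taking A as the lower-numbered image.
        orders = [(low, high, low), (low, high, high),
                  (high, low, low), (high, low, high)]
        trials.extend(orders * repetitions)
    random.Random(seed).shuffle(trials)  # full randomization within the continuum
    return trials


def correct_key(trial: Trial) -> str:
    """'A' if X repeats the first image shown, otherwise 'B'."""
    first, _second, probe = trial
    return "A" if probe == first else "B"


trials = abx_trials()
print(len(trials), len(set(trials)))  # 72 trials built from 36 distinct combinations
```

Counting the output reproduces the arithmetic in the text: 9 pairs × 4 orders = 36 combinations, each presented twice for 72 trials.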

The identification task consisted of a binary forced-choice categorization. Before performing the identification task for each continuum, participants were shown the two end-point images, labeled 1 and 2. On each trial, participants were presented with a single image randomly selected from the 11-image continuum and were asked to decide quickly which of the two end-points (image 1 or 2) most closely resembled the target image. For example, participants pressed the “1” key if the target image most resembled the angry facial expression and the “2” key if it most resembled the disgust facial expression. Stimuli were presented for 750 ms, followed by a blank white screen, and each image was presented eight times, resulting in 88 randomly ordered trials for each continuum. In the linguistic facial expression continuum, the end-point images labeled 1 and 2 were recognized by all signers as linguistic facial expressions. Hearing non-signers had no difficulty recognizing the difference between the end-point facial expressions; however, these expressions carried no grammatical or linguistic meaning for them.
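The identification block, and the per-image labeling percentages plotted in the top panels of Figs. 3 and 4, can be sketched as below. The function names, the seed, and the simulated responder (who labels images 7 to 11 as end-point 2) are hypothetical and serve only to show how the 88 trials map onto an identification curve.

```python
import random
from collections import defaultdict
from typing import Dict, List


def identification_trials(n_images: int = 11, presentations: int = 8,
                          seed: int = 0) -> List[int]:
    """Each image number appears `presentations` times, in shuffled order."""
    trials = [image for image in range(1, n_images + 1) for _ in range(presentations)]
    random.Random(seed).shuffle(trials)
    return trials


def identification_curve(trials: List[int], responses: List[int]) -> Dict[int, float]:
    """Percentage of '2' responses per image, e.g., % 'disgust' on angry/disgust."""
    shown: Dict[int, int] = defaultdict(int)
    labeled_two: Dict[int, int] = defaultdict(int)
    for image, response in zip(trials, responses):
        shown[image] += 1
        if response == 2:
            labeled_two[image] += 1
    return {image: 100.0 * labeled_two[image] / shown[image] for image in sorted(shown)}


# Hypothetical responder with a sharp boundary between images 6 and 7.
trials = identification_trials()
responses = [2 if image >= 7 else 1 for image in trials]
curve = identification_curve(trials, responses)
```

Real data would produce a graded sigmoid rather than this step function, but the bookkeeping is the same.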

The sigmoidal identification function was then used to predict performance on the discrimination task. Following Beale and Keil (1995), we assessed whether the stimuli within a continuum were perceived categorically by defining the category boundary as the region that yielded labeling percentages between 33% and 66% on the identification task. An image whose score fell within this range was identified as the category boundary. If two images yielded scores within this range, the category boundary was defined as the image with the slowest mean response time for category labeling. If the stimuli along a continuum were perceived categorically, discrimination accuracy should peak for the two-step pair that straddles the boundary. Planned comparisons were therefore performed on accuracy at the predicted peaks: for each continuum, the mean accuracy for the pair that straddled the boundary was contrasted with the mean accuracy for all other pairs combined.
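The boundary rule and the predicted discrimination peak can be expressed as two small functions. This sketch assumes that the 33% and 66% limits are inclusive, that the straddling two-step pair is the one centred on the boundary image (e.g., boundary 6 gives pair 5-7), and that ties among more than two candidates are broken the same way as ties between two; the labeling percentages and response times are invented for illustration.

```python
from typing import Dict, Optional, Tuple


def category_boundary(pct: Dict[int, float], mean_rt: Dict[int, float],
                      lower: float = 33.0, upper: float = 66.0) -> Optional[int]:
    """Image whose labeling percentage falls between lower and upper (inclusive).

    Ties are broken by the slowest mean labeling response time. Returns None
    when no image qualifies, a case the rule in the text does not address.
    """
    candidates = [image for image, p in pct.items() if lower <= p <= upper]
    if not candidates:
        return None
    return max(candidates, key=lambda image: mean_rt[image])


def predicted_peak_pair(boundary: int, n_images: int = 11, step: int = 2) -> Tuple[int, int]:
    """Two-step discrimination pair centred on the boundary image."""
    half = step // 2
    low, high = boundary - half, boundary + half
    if low < 1 or high > n_images:
        raise ValueError("boundary too close to an end-point for a straddling pair")
    return low, high


# Hypothetical group data: % "disgust" labels and mean labeling RT (ms) per image.
pct = {1: 0, 2: 2, 3: 5, 4: 10, 5: 25, 6: 45, 7: 60, 8: 85, 9: 95, 10: 98, 11: 100}
rt = {i: 800.0 for i in pct}
rt.update({6: 950.0, 7: 900.0})
boundary = category_boundary(pct, rt)
print(boundary, predicted_peak_pair(boundary))  # 6 (5, 7)
```

Here images 6 and 7 both fall in the 33% to 66% band, and image 6 wins the tie because it has the slower labeling time.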

In addition to the planned comparisons of accuracy scores, participants' response times in both discrimination and identification tasks were analyzed using a repeated-measures ANOVA with three factors: group (Deaf signers, hearing non-signers), facial expression (affective, linguistic), and order of conditions (linguistic expressions first, affective expressions first).

2.2. Results

2.2.1. Assessment of categorical perception performance

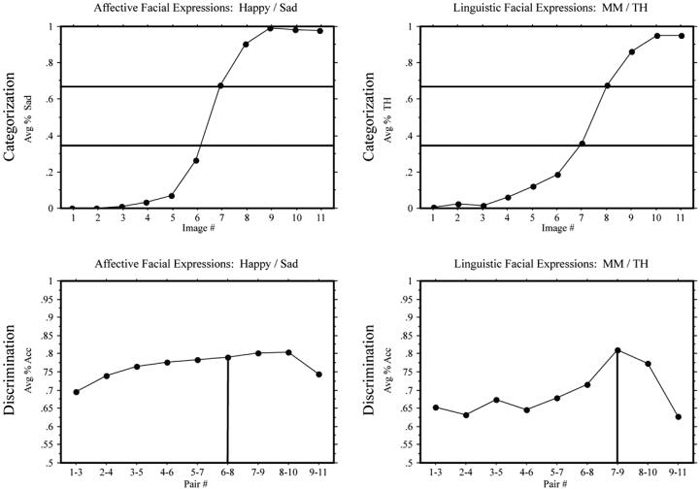

In the identification task, both Deaf signers and hearing non-signers showed category boundaries, i.e., a sigmoidal shift in category labeling, for both the affective (angry/disgust) and the linguistic (Wh-question/yes-no question) facial expression continua (see top graphs of Figs. 3 and 4). In the discrimination task, hearing non-signers, as expected, showed a CP effect for affective expressions, F(1,144) = 8.22, p < .005, but, surprisingly, Deaf signers did not, F(1,144) = 1.59, ns. Also unexpectedly, a CP effect for linguistic expressions was found both for hearing non-signers, F(1,144) = 9.84, p < .005, and for Deaf signers, F(1,144) = 10.88, p < .005 (see bottom graphs of Figs. 3 and 4).
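The planned comparison can be written as a linear contrast within a one-way ANOVA over the nine discrimination pairs, with weight 8 on the boundary pair and -1 on each of the others. The reported degrees of freedom are consistent with this layout (9 pairs × 17 participants gives 153 scores, and 153 - 9 = 144 error df), but the text does not state how the error term was computed, so treat this as a plausible reconstruction rather than the authors' analysis. The accuracy values in the example are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple

Pair = Tuple[int, int]


def planned_contrast_f(scores: Dict[Pair, List[float]], peak: Pair) -> Tuple[float, int, int]:
    """F for the peak pair versus the average of all other pairs.

    Treats pairs as levels of a one-way design with equal n per level and uses
    the pooled within-pair variance as the error term. Returns (F, 1, df_error).
    """
    pairs = sorted(scores)
    k = len(pairs)
    n = len(scores[pairs[0]])
    if any(len(v) != n for v in scores.values()):
        raise ValueError("this sketch assumes equal n per pair")
    # Contrast weights: k - 1 on the peak pair, -1 on every other pair (they sum to zero).
    weights = {p: (k - 1.0 if p == peak else -1.0) for p in pairs}
    means = {p: mean(scores[p]) for p in pairs}
    psi = sum(weights[p] * means[p] for p in pairs)  # contrast estimate
    ss_contrast = psi ** 2 / sum(w ** 2 / n for w in weights.values())
    df_error = k * n - k
    ss_within = sum((x - means[p]) ** 2 for p in pairs for x in scores[p])
    return ss_contrast / (ss_within / df_error), 1, df_error


# Hypothetical accuracies: 3 pairs x 2 participants, small enough to check by hand.
toy = {(1, 3): [0.5, 0.7], (2, 4): [0.9, 0.7], (3, 5): [0.5, 0.3]}
print(planned_contrast_f(toy, peak=(2, 4)))  # F is about 6.0 here, with df = (1, 3)
```

With nine pairs and 17 scores per pair, the same function returns 144 error degrees of freedom, matching the F(1,144) values reported above.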

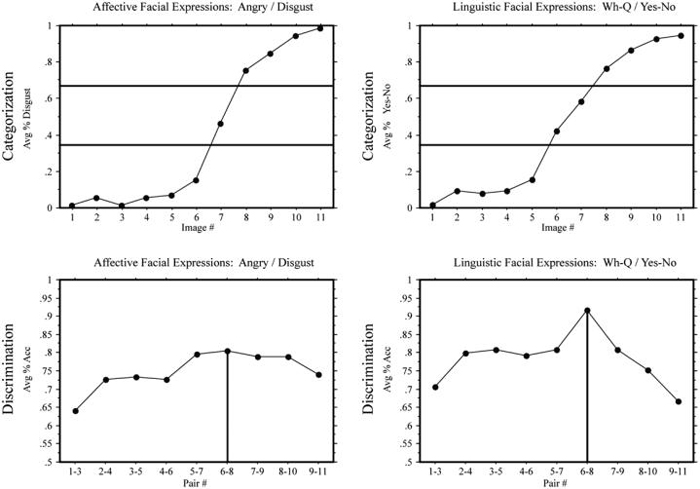

Fig. 3.

Experiment 1: Deaf signers. Top: mean response percentages for the identification tasks: percentage “disgust” responses in the angry/disgust continuum and percentage “yes-no” responses in the Wh-question/yes-no question continuum. The horizontal lines indicate the 33% and 66% boundaries (see text). Bottom: mean accuracy in the discrimination tasks: the vertical lines indicate the predicted peaks in accuracy.

Fig. 4.

Experiment 1: Hearing non-signers. Top: mean response percentages for the identification tasks: percentage “disgust” responses in the angry/disgust continuum and percentage “yes-no” responses in the Wh-question/yes-no question continuum. The horizontal lines indicate the 33% and 66% boundaries (see text). Bottom: mean accuracy in the discrimination tasks: the vertical lines indicate the predicted peaks in accuracy.

2.2.2. Discrimination task response time

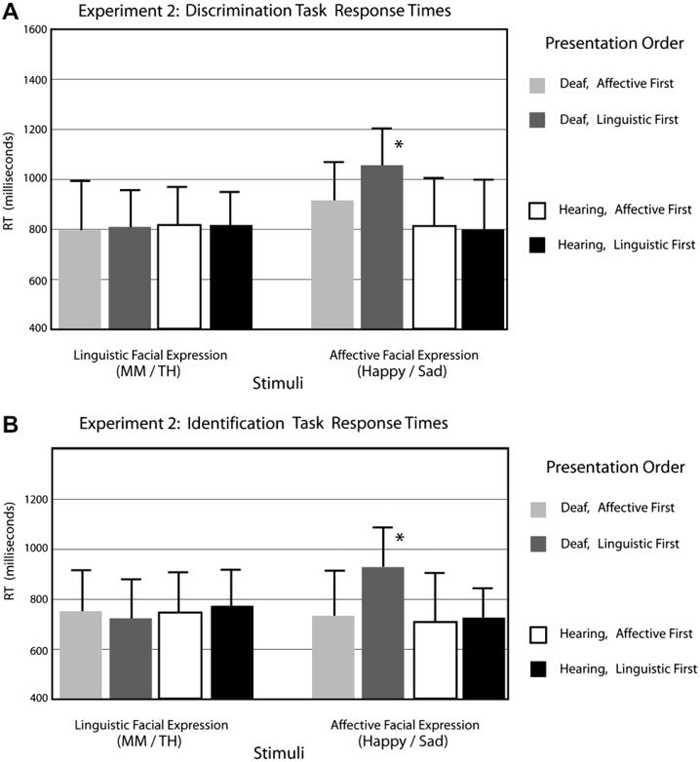

Response time data are presented in Fig. 5A. A repeated-measures ANOVA showed no overall group difference in response time, F(1,30) < 1. Both groups responded more slowly to affective than to linguistic facial expressions, F(1,30) = 27.70, p < .0001. There was a significant interaction between group and expression type, F(1,30) = 8.02, p < .01: the Deaf group was significantly slower than hearing non-signers for affective facial expressions, t(16) = 4.08, p < .001. There was also a significant three-way interaction among group, expression type, and presentation order, F(1,30) = 9.77, p < .005. Deaf participants responded significantly more slowly to affective expressions when linguistic expressions were presented first, t(15) = 2.72, p < .05, but not when affective expressions were presented first. In contrast, hearing participants were unaffected by the initial presentation of linguistic expressions.

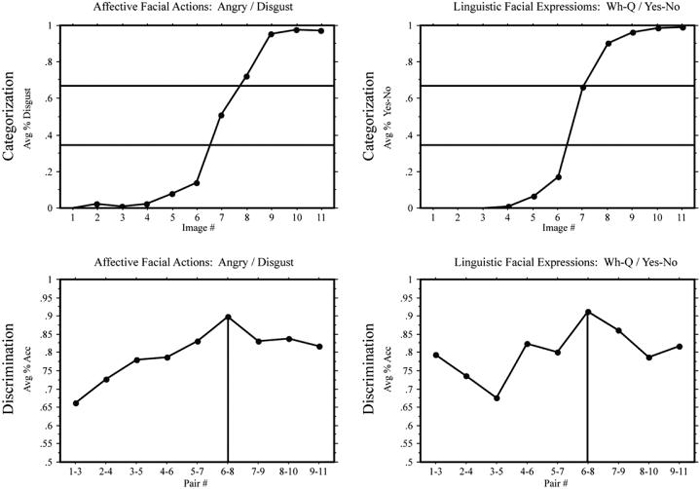

Fig. 5.

Each histogram shows the mean response time (in milliseconds) for (A) the ABX discrimination task and (B) the identification task. The group labeled “affective first” initially performed the discrimination task with the affective facial expression continua. The group labeled “linguistic first” initially performed the discrimination task with the linguistic facial expression continua. The asterisk indicates a significant three-way interaction between group, facial expression, and presentation order.

2.2.3. Identification task response time

The response time pattern for the identification task paralleled that of the discrimination task (see Fig. 5B). There was no overall group difference, F(1,30) = 2.39, ns. Affective facial expressions were categorized more slowly than linguistic expressions, F(1,30) = 13.172, p = .001. There was a significant interaction between group and expression type, F(1,30) = 30.73, p < .0001; again, the Deaf group was significantly slower for affective facial expressions, t(16) = 4.264, p < .0001. A significant three-way interaction among group, facial expression, and presentation order, F(1,30) = 8.417, p < .001, indicated that the hearing group categorized facial expressions similarly regardless of the order of presentation, whereas the Deaf group was affected by presentation order. Identification response times for Deaf signers were similar to those of hearing non-signers for affective facial expressions when affective expressions were presented first; however, signers were significantly slower when affective expressions were presented after linguistic facial expressions, t(15) = 2.391, p < .05.

2.3. Discussion

We predicted that both Deaf signers and hearing non-signers would exhibit categorical perception for affective facial expressions, and that only Deaf signers would exhibit a CP effect for linguistic facial expressions. The results, however, were somewhat unexpected and suggested a more complex interplay among the mechanisms involved in affective and linguistic facial expression perception. We explore this interaction below, discussing the results for affective and linguistic facial expressions separately.

2.3.1. Affective facial expressions

Both hearing non-signers and Deaf signers, as predicted, exhibited a sigmoidal shift in the identification task for the affective facial expression continuum. The sigmoidal curves observed in this experiment are similar to the curves observed in previous affective facial expression CP studies (de Gelder et al., 1997; Etcoff & Magee, 1992). As expected, hearing non-signers showed a CP effect for affective facial expressions in their discrimination task performance (see the bottom graph in Fig. 4). However, Deaf signers did not show a clear CP effect for the same facial expression continuum (bottom graph in Fig. 3). The ABX discrimination task used in our study involves a memory load that might encourage participants to linguistically label the emotional expressions, which may enhance CP effects. In fact, Roberson and Davidoff (2000) have argued that verbal coding is the mechanism that gives rise to categorical perception. However, both ASL and English contain lexical signs/words for angry and disgust, and both signers and speakers spontaneously use language to encode information in working memory (e.g., Bebko, Bell, Metcalfe-Haggert, & McKinnon, 1998; Wilson & Emmorey, 1997). Thus, the absence of a CP effect for Deaf signers is surprising, given that both signers and speakers have available linguistic labels for emotions and both groups have reasonably similar experiences with affective facial expressions.

We propose that the observed difference may be due to signers' unique experience with ASL. Specifically, ASL exposure during a critical period in early development may have significantly altered Deaf signers' internal representations of affective facial expressions. In a longitudinal analysis of affective and linguistic facial expression development in young Deaf children, Reilly et al. (1990b) found that Deaf 1-year-olds did not differ significantly from their hearing peers in achieving developmental milestones for the interpretation and expression of affective states. However, in the early stages of Deaf children's sign language acquisition, lexical signs for emotions were often accompanied by very specific facial expressions, as if the sign and the facial expression were unified into a gestalt, e.g., a sad facial expression with the manual sign SAD. When these children reached approximately two and a half years of age, their signs and facial expressions bifurcated, often resulting in uncoordinated and asynchronous production of facial expressions and signs. Only after affective facial expressions had been successfully de-contextualized from signs and incorporated into the linguistic system did facial expressions again become tightly coordinated with signs or sentences. At this later point in development, the affective facial expressions accompanying lexical signs for emotions are controlled independently and can be selectively omitted.

It is plausible that during this critical developmental transition, internal representations of affective facial expressions may be altered in the same way that internal representations of speech sounds can be modified by linguistic experience as shown in speech perception studies (e.g., Eimas, 1975; Iverson et al., 2003). A change in facial expression representations during early development may also explain why the Deaf BSL signers in the Campbell et al. (1999) study did exhibit CP effects for affective facial expressions. Unlike the Deaf ASL signers in our study who were exposed to ASL from birth, the Deaf British participants in the Campbell et al. (1999) study acquired sign language at a much later age. Thus, their internal affective facial expression representations could not have been affected by early sign language exposure.

2.3.2. Linguistic facial expressions

Deaf signers and hearing non-signers demonstrated sigmoidal performance in the identification task for linguistic facial expressions, and both groups also demonstrated CP effects. The performance of the Deaf group was expected given their linguistic experience; however, the CP effect for the hearing non-signers was unexpected and suggests that linguistic knowledge of ASL facial expressions marking Wh-questions and yes-no questions is not required for categorical perception.

There are at least two plausible explanations for the hearing non-signers' CP performance for ASL facial expressions. First, the linguistic facial expressions we selected may have been encoded and processed as affective facial expressions by the non-signers. For example, the yes-no question expression could be interpreted as “surprised” and the Wh-question expression as “angry” (see Fig. 2), and non-signers may even have internally labeled these expressions as such. Second, the linguistic facial expressions used in this study may be part of common, learned, non-linguistic social facial displays. For example, raised eyebrows indicate openness to communicative interaction for English speakers (Janzen & Shaffer, 2002; Stern, 1977). In either case, the results of Experiment 1 lend support to Campbell et al.'s (1999) conclusion that CP effects for facial expressions are not necessarily limited to the universal, canonical affective facial expressions, i.e., happiness, sadness, anger, surprise, disgust, and fear (Ekman & Friesen, 1971).

2.3.3. Presentation order effects

Overall, Deaf signers responded more slowly than hearing non-signers to affective facial expressions; however, signers' response times in both the identification and discrimination tasks were contingent upon presentation order (see Fig. 5). Specifically, Deaf signers' response times for affective facial expressions were similar to those of hearing non-signers when affective facial expressions were presented first, but were slower when affective expressions were presented after the linguistic expressions. Hearing non-signers' response times, on the other hand, were unaffected by presentation order. The slowing effect that processing linguistic expressions had on Deaf signers' recognition and discrimination of affective facial expressions lends plausibility to our hypothesis that signers' linguistic experience may alter how affective facial expressions are processed. In addition, a post-hoc analysis separating the Deaf signers into two subgroups by presentation order revealed that the participants who viewed affective facial expressions first showed a slight trend toward a CP effect for affective expressions, t(9) = 1.89, p = .06 (one-tailed), whereas the Deaf signers who first viewed linguistic facial expressions showed no hint of a CP effect for affective expressions, t(8) = .67.

However, the design of Experiment 1 had two shortcomings that may have affected the results. First, the facial expressions were presented with just the face, but linguistic facial expressions are normally accompanied by manual signs. Linguistic expressions constitute bound morphemes and do not occur in isolation. Thus, viewing linguistic facial expressions alone without accompanying manual signs was a highly atypical experience for Deaf signers. It is possible that those experimentally constrained images may have affected the CP and response time performance for signers for both types of facial expressions. Second, as previously discussed, the linguistic facial expressions used in Experiment 1 may have been viewed by hearing non-signers as affective facial expressions. Specifically, the Wh-question facial expression shares features with the universal expression of anger (furrowed brows), while the yes-no question expression shares features with the expression of surprise and social openness (raised eyebrows).

Therefore, we conducted a second experiment with different affective and linguistic facial expression continua, and we presented the stimuli in the context of a manual sign. Adverbial facial expressions were chosen for the linguistic continuum because hearing non-signers are less likely to view them as belonging to the set of affective facial expressions. Adverbial expressions cannot be easily named: there are no lexical signs (or words) that label these facial expressions. In addition, both linguistic and affective expressions were presented with a manual sign in order to make the linguistic stimuli more ecologically valid for Deaf signers. The MM adverbial expression (meaning “effortlessly”) and the TH expression (meaning “carelessly”) were selected from a large set of ASL adverbial facial expressions because they bear little resemblance to universal affective facial expressions. For the affective continuum, the positive and negative expressions happy and sad were chosen as end-points. These expressions were selected specifically to investigate whether the absence of a CP effect that we observed for Deaf signers would also hold for a more salient and contrastive continuum of affective facial expressions.

3. Experiment 2: MM/TH adverbial expressions and happy/sad affective facial expressions

3.1. Method

3.1.1. Participants

Twenty Deaf signers (8 males, 12 females; mean age = 26 years) and 24 hearing non-signers (13 males, 11 females; mean age = 20.5 years) participated. All of the Deaf signers had Deaf parents and learned ASL from birth. All were prelingually deaf and used ASL as their preferred means of communication. None of the hearing non-signers had any knowledge of ASL. Participants were tested either at Gallaudet University in Washington, DC, or at the Salk Institute for Biological Studies in San Diego, California.

3.1.2. Materials

The end-point images, which show both the face and upper body, were selected from a digital videotape in which a FACS-certified sign model produced either affective (happy or sad) or linguistic facial signals (the adverbials MM “effortlessly” or TH “carelessly”) while producing the sign WRITE (see Figs. 6 and 7). A native ASL signer reviewed the end-point images for the linguistic continuum and selected those that best represented the prototype of each adverbial expression. The morphing procedure applied to the selected images was identical to that of Experiment 1.
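The abstract describes each continuum as 11 images that change linearly, in equal steps, from one end-point expression to the other. The sketch below illustrates only that equal-step weighting, assuming a simple pixel-wise cross-dissolve between two same-sized grayscale arrays. The helper name `linear_continuum` is hypothetical, and the morphing software actually used also interpolates facial geometry, which this sketch does not model.

```python
import numpy as np

def linear_continuum(start, end, n_steps=11):
    """Return n_steps images blending `start` into `end` in equal weight steps.

    Hypothetical helper: a pixel-wise cross-dissolve only. Real face morphing
    also interpolates landmark positions (shape), which is omitted here.
    """
    start = np.asarray(start, dtype=float)
    end = np.asarray(end, dtype=float)
    if start.shape != end.shape:
        raise ValueError("end-point images must have the same shape")
    # Weights 0.0, 0.1, ..., 1.0 for 11 steps; image k = (1 - w) * start + w * end
    weights = np.linspace(0.0, 1.0, n_steps)
    return [(1.0 - w) * start + w * end for w in weights]

# Tiny synthetic "images" (2x2 arrays standing in for photographs)
happy = np.zeros((2, 2))
sad = np.full((2, 2), 100.0)
frames = linear_continuum(happy, sad)
print(len(frames))      # 11
print(frames[5][0, 0])  # 50.0 (the midpoint image)
```

Equal spacing is what allows a discrimination peak at the category boundary to be read as categorical rather than as a side effect of uneven physical steps along the continuum.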

Fig. 6.

Illustration of morphed affective (happy/sad) facial expression continuum.

Fig. 7.

Illustration of morphed linguistic (MM/TH) facial expression continuum.

3.1.3. Procedure

The procedure was identical to Experiment 1.

3.2. Results

3.2.1. Assessment of categorical perception performance

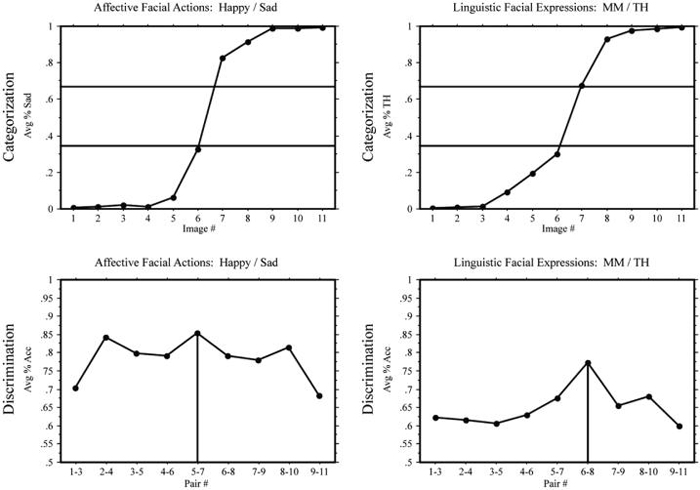

In the identification task, Deaf signers and hearing non-signers again showed a sigmoidal shift for both the linguistic (MM/TH) and the affective (happy/sad) facial expression continua (see the top graphs in Figs. 8 and 9). The category boundaries for the discrimination tasks were determined by the method described in Experiment 1. Both hearing non-signers and Deaf signers exhibited a CP effect for adverbial linguistic expressions, F(1,207) = 12.60, p < .001 and F(1,171) = 11.88, p < .001, respectively. However, as in Experiment 1, only hearing non-signers showed a CP effect for affective expressions, F(1,207) = 5.68, p < .05 (see the bottom graphs in Figs. 8 and 9).
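The figure captions indicate that boundaries were read off the identification curves at the 33% and 66% levels, with peaks in discrimination accuracy predicted between them. The sketch below shows one way such a within- versus between-category comparison could be operationalized; it is not the procedure of Experiment 1 or the ANOVA reported above. The linear interpolation of crossing points, the rule that a pair is "between-category" if it overlaps the 33% to 66% region, the two-step ABX pairs, and all function names are hypothetical, and the numbers in the usage example are made up for illustration, not data from this study.

```python
import numpy as np

def crossing_point(steps, pct, threshold):
    """Continuum position where a rising identification curve first reaches
    `threshold` percent, by linear interpolation between adjacent steps."""
    for i in range(1, len(pct)):
        if pct[i - 1] < threshold <= pct[i]:
            frac = (threshold - pct[i - 1]) / (pct[i] - pct[i - 1])
            return steps[i - 1] + frac * (steps[i] - steps[i - 1])
    raise ValueError("identification curve never reaches the threshold")

def label_pairs(pairs, lower, upper):
    """Hypothetical rule: a pair is 'between' if it overlaps [lower, upper]."""
    labels = []
    for a, b in pairs:
        lo, hi = min(a, b), max(a, b)
        labels.append("between" if hi >= lower and lo <= upper else "within")
    return labels

def cp_contrast(accuracy, labels):
    """Mean ABX accuracy for between-category pairs minus within-category pairs."""
    acc = np.asarray(accuracy, dtype=float)
    lab = np.asarray(labels)
    return acc[lab == "between"].mean() - acc[lab == "within"].mean()

# Illustrative, made-up values for an 11-step continuum (not study data)
steps = np.arange(1, 12)
pct_sad = [0, 2, 5, 10, 20, 45, 75, 90, 96, 99, 100]  # % "sad" responses
lower = crossing_point(steps, pct_sad, 33)            # about 5.52
upper = crossing_point(steps, pct_sad, 66)            # about 6.70
pairs = [(k, k + 2) for k in range(1, 10)]            # hypothetical two-step ABX pairs
labels = label_pairs(pairs, lower, upper)
accuracy = [0.55, 0.56, 0.60, 0.78, 0.82, 0.75, 0.58, 0.57, 0.55]
print(labels)
print(round(cp_contrast(accuracy, labels), 3))        # 0.215
```

A positive contrast means that between-category pairs were discriminated more accurately than physically equidistant within-category pairs, which is the signature of a CP effect; whether the difference is reliable still requires an inferential test such as the F tests reported above.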

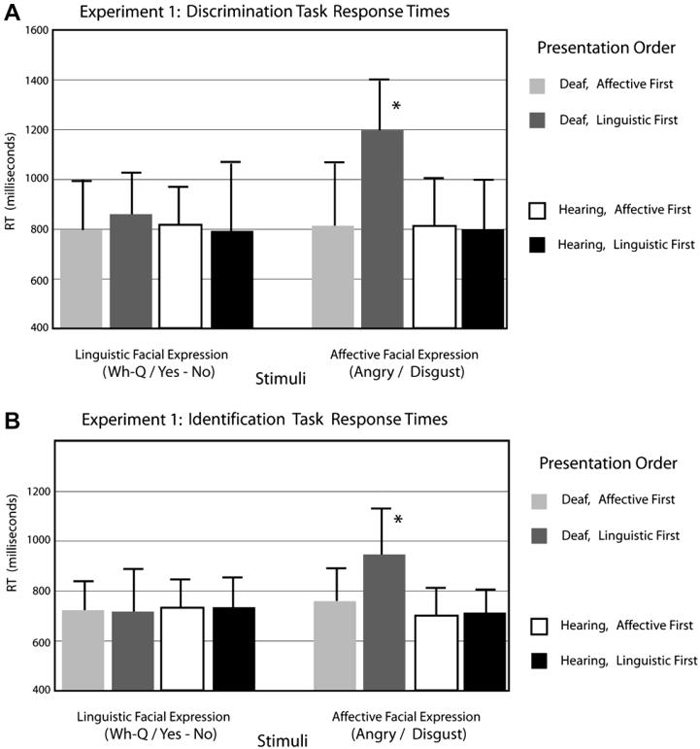

Fig. 8.

Experiment 2: Deaf participants. Top: mean response percentages for the identification tasks: percentage “sad” responses for the happy/sad continuum and percentage “TH” responses for the MM/TH continuum. The horizontal lines indicate the 33% and 66% boundaries. Bottom: mean accuracy in the discrimination tasks; the vertical lines indicate the predicted peaks in accuracy.

Fig. 9.

Experiment 2: Hearing participants. Top: mean response percentages for the identification tasks: percentage “sad” responses for the happy/sad continuum and percentage “TH” responses for the MM/TH continuum. The horizontal lines indicate the 33% and 66% boundaries. Bottom: mean accuracy in the discrimination tasks; the vertical lines indicate the predicted peaks in accuracy.

3.2.2. Discrimination task response time

Response time data are presented in Fig. 10A. A repeated-measures ANOVA revealed a pattern of results similar to that of Experiment 1. There was no group difference in discrimination task response time, F(1,40) < 1. However, across groups, the affective continuum was discriminated more slowly, F(1,40) = 21.79, p < .0001. There was also a significant interaction between group and facial expression type, F(1,40) = 15.60, p < .001: Deaf signers were significantly slower than hearing non-signers only for affective facial expressions, t(19) = 4.9, p < .0001. Finally, there was a three-way interaction between group, facial expression type, and presentation order, F(1,40) = 4.50, p < .05. Deaf signers discriminated the affective facial expressions more slowly when they were presented after the linguistic facial expressions, t(18) = 2.173, p < .05, but hearing non-signers were again unaffected by presentation order.

Fig. 10.

Each bar graph shows the mean response time (in milliseconds) for (A) the ABX discrimination task and (B) the identification task. The group labeled “affective first” initially performed the discrimination task with the affective facial expression continuum; the group labeled “linguistic first” initially performed the discrimination task with the linguistic facial expression continuum. The asterisk indicates a significant three-way interaction between group, facial expression, and presentation order.

3.2.3. Identification task response times

As in Experiment 1, the response time pattern for the identification task paralleled that for the discrimination task (see Fig. 10B). There was no significant group difference in response time for the identification task, F(1,40) = 1.15, p = .28. Affective facial expressions were again categorized more slowly by both groups, F(1,40) = 4.9, p < .05. There was a two-way interaction between group and facial expression type, F(1,40) = 25.83, p = .0001: the Deaf group responded more slowly than the hearing group only for the affective facial expressions, t(19) = 3.482, p < .005. There was also a three-way interaction of group, facial expression, and presentation order, similar to that found in Experiment 1, F(1,40) = 12.39, p < .005. The Deaf group was significantly slower to categorize affective expressions when they were presented after the linguistic facial expressions, t(18) = 2.343, p < .05.

3.3. Discussion

As in Experiment 1, Deaf signers did not exhibit a CP effect for affective facial expressions, even though the affective stimuli in Experiment 2 were more salient and contrastive (happy versus sad). Both groups categorized the affective stimuli similarly (see the top graphs in Figs. 8 and 9), but only the hearing group showed evidence of a CP effect (see the bottom graph in Fig. 9). Also replicating the results of Experiment 1, both groups exhibited CP effects for ASL linguistic expressions, and presentation order significantly affected the Deaf signers' response times (see Fig. 10). Specifically, prior exposure to ASL linguistic facial expressions slowed responses to affective expressions. As in Experiment 1, hearing non-signers were unaffected by presentation order. We again conducted a post-hoc analysis with the Deaf signers separated by presentation order. Participants who had not yet performed the task with linguistic facial expressions again showed a slight trend toward a CP effect for affective facial expressions, t(10) = 1.78, p = .07, whereas there was no hint of a CP effect for affective facial expressions for participants who first viewed linguistic expressions, t(10) = .56.

4. General discussion

The fact that hearing non-signers exhibited discontinuous perceptual boundaries for ASL linguistic facial expressions in both experiments demonstrates that unfamiliar, non-affective facial expressions can be perceptually categorized. The CP effects observed for non-signers with ASL facial expressions are unlikely to arise from a verbal labeling strategy (particularly in Experiment 2, where the adverbial expressions have no lexical labels), and thus the results could be used to argue for an innate perceptual mechanism for discriminating any type of upright facial expression (Campbell et al., 1999). However, there is an alternative explanation. Linguistic facial expressions may have roots in social facial displays, which hearing non-signers may have recognized despite our efforts to minimize the resemblance of the stimuli to affective facial expressions. Ekman (1979) reports a large number of facial expressions that are routinely produced during everyday conversation but are not associated with emotional categories. Some of the linguistic facial expressions used in ASL may have originated from such social expressions and were subsequently systematized and re-utilized to serve linguistic functions.

Cross-linguistic studies of linguistic facial expressions in sign languages lend some support to this hypothesis. Facial expressions that mark linguistic distinctions are often similar or even identical across signed languages; however, the same facial expression does not necessarily carry the same meaning cross-linguistically (Zeshan, 2004). The use of highly similar facial expressions across signed languages suggests that these facial markers may originate from non-affective social facial displays adopted from the surrounding hearing communities. The abstraction and conventionalization of linguistic facial markers from social facial expressions can occur through repetition, ritualization, and obligatoriness (Janzen & Shaffer, 2002). Moreover, the fact that signed languages use only a small subset of highly similar facial markers out of the wide range of possible facial expressions suggests that these expressions have undergone a highly selective conventionalization process. If a facial expression cannot be easily perceived or produced under varying conditions (e.g., in a rapid succession of facial expressions or in low light), it is less likely to be repeated, conventionalized, and adopted as an obligatory marker. Given the nature of conventionalization, facial expressions selected as linguistic markers must be inherently salient and easy to categorize. Thus, the CP effects observed for hearing non-signers with linguistic facial markers may be due to properties inherent to these conventionalized facial expressions.

As predicted, Deaf signers showed significant CP effects for linguistic facial expressions in both experiments, but they consistently showed no CP effects for affective facial expressions. We hypothesize that Deaf signers' experience with linguistic facial expressions accounts for this pattern of results. Specifically, we propose that viewing linguistic facial expressions first led to different attentional and perceptual strategies that subsequently affected the perception of the affective expression continua. Deaf signers performed similarly to hearing non-signers on the affective continuum when affective facial expressions were presented first; however, signers responded significantly more slowly to the same affective continuum after performing the tasks with linguistic facial expressions (see Figs. 5 and 10).

Effects of presentation order on facial expression processing have been observed in other studies. Corina (1989) conducted a tachistoscopic hemifield study in which affective and linguistic facial expressions were presented to Deaf signers and hearing non-signers. When affective facial expressions were presented first, Deaf signers showed a left visual field (right hemisphere) advantage for both types of expressions; however, when linguistic facial expressions were presented before affective facial expressions, signers showed a right visual field (left hemisphere) advantage for linguistic facial expressions and no hemispheric advantage for affective facial expressions. Order effects were not observed for hearing non-signers. As in the present study, Corina (1989) found that viewing linguistic facial expressions affected signers' perception of affective facial expressions.

Why does performing a discrimination or perceptual task with linguistic facial expressions affect the performance of signers, but not non-signers, on affective facial expressions? We suggest that such order effects stem from the distinct contributions of local and global processes to different types of facial expressions. Linguistic facial expressions differ from affective facial expressions in many respects, but one salient difference is that linguistic facial features are expressed independently of each other. For example, it is possible to combine yes-no or Wh-questions (changes in the eyebrows) with MM or TH (changes in the mouth) to convey eight grammatically distinct meanings that can accompany a single manual sign, such as WRITE: MM alone (“write effortlessly”), Wh-question alone (“what did you write?”), MM + Wh-Q (“what did you write effortlessly?”), TH + yes-no (“did you write carelessly?”), etc. In order to isolate and comprehend the linguistic meaning of an ASL sentence, signers must quickly recognize and integrate various individual facial features.
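The count of eight follows from treating the upper-face (eyebrow) and lower-face (mouth) markers as independent: three brow states (unmarked, yes-no, Wh-question) crossed with three mouth states (unmarked, MM, TH) give 3 × 3 = 9 combinations, minus the one in which neither feature is marked, leaving 8. The short enumeration below makes that arithmetic explicit. The labels are informal glosses rather than a standard transcription notation, and the function name is hypothetical; the sketch says nothing about how natural each combination is in practice.

```python
from itertools import product

# Eyebrow (upper-face) and mouth (lower-face) markers named in the text.
# None stands for "feature not marked"; labels are informal glosses.
BROWS = [None, "yes-no", "Wh-Q"]
MOUTH = [None, "MM", "TH"]

def marked_combinations(sign="WRITE"):
    """List every brow x mouth combination with at least one marker present."""
    combos = []
    for brows, mouth in product(BROWS, MOUTH):
        if brows is None and mouth is None:
            continue  # bare manual sign: no facial morpheme
        markers = [m for m in (mouth, brows) if m is not None]
        combos.append(" + ".join(markers + [sign]))
    return combos

for gloss in marked_combinations():
    print(gloss)
print(len(marked_combinations()))  # 8
```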

In addition, it appears that substantial experience in processing linguistic facial expressions can lead to significant changes in local face processing mechanisms. Previous research has shown that both Deaf and hearing signers perform significantly better than non-signers on a task that requires local facial feature discrimination (McCullough & Emmorey, 1997) and on the Benton Faces Task, which involves discriminating faces under different conditions of lighting and shadow (Bellugi et al., 1990; Bettger et al., 1997). However, signers and non-signers do not differ in gestalt processing of faces, as assessed by the Mooney Closure Test, or in their ability to simply recognize faces after a short delay (McCullough & Emmorey, 1997). Thus, signers appear to exhibit enhanced performance only on tasks that require greater attention to componential facial features, and attention to such local features is required during everyday language processing for signers. Finally, an fMRI study of affective and linguistic facial expression perception found that activation within the fusiform gyrus was significantly lateralized to the left hemisphere for Deaf signers but was bilateral for hearing non-signers, for both affective and linguistic expressions (McCullough, Emmorey, & Sereno, 2005). McCullough et al. (2005) hypothesized that the strong left-lateralized activation observed for Deaf signers was due to their reliance on local featural processing of facial expressions, which preferentially engages the left fusiform gyrus (e.g., Hillger & Koenig, 1991; Rossion, Dricot, Devolder, Bodart, & Crommelinck, 2000). Interestingly, categorical perception effects for color are greater when stimuli are presented to the left hemisphere (i.e., in the right visual field; Drivonikou et al., 2007; Gilbert et al., 2006), which indicates that the presence of linguistic categories affects the neural systems that underlie perceptual discrimination (see also Tan et al., 2008).

How might differences in local facial feature processing and linguistic categorization affect the perception of affective facial expressions? Previous studies have shown that componential (featural) and holistic processing are not wholly independent and can influence each other. Tanaka and Sengco (1997) propose that the internal representation of a face is composed of highly interdependent componential and holistic information, such that a change in one type of face information affects the other. Because Deaf signers must continuously attend to and recognize local facial features for linguistic information, their holistic facial perception may be altered by disproportionate attention to componential facial features. Signers' constant attention to local facial features and their larger number of facial expression categories may lead to a warping of the similarity space for facial expressions, compressing and expanding the representation of facial expressions along various dimensions (see Livingston et al., 1998 for an extended discussion of multi-dimensional warping and similarity space). Importantly, this does not mean that Deaf signers have impaired affective facial expression recognition: they perform similarly to (or even better than) hearing non-signers in affective facial expression recognition and categorization (Goldstein & Feldman, 1996; Goldstein, Sexton, & Feldman, 2000). Moreover, the effect of featural processing appears to be fluid and transitory, as the order effects suggest. When affective facial expressions are presented first, the performance of Deaf signers parallels that of hearing non-signers, both in the Corina (1989) study and in our study.
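The idea of a warped similarity space can be illustrated with a deliberately simple one-dimensional toy. In the sketch below, which is our own illustrative assumption rather than a model proposed by Livingston et al. (1998) or tested in this study, each point on a 0 to 1 continuum is pulled part of the way toward the center of its category. Two pairs separated by the same physical distance then end up closer together when they share a category (compression) and farther apart when they straddle the boundary (expansion). The function names and the parameters `boundary` and `alpha` are hypothetical.

```python
def warp(x, boundary=0.5, alpha=0.4):
    """Toy category-induced warping of a point x on a 0-1 continuum.

    x is moved a fraction `alpha` of the way toward the center of its
    category: [0, boundary) or [boundary, 1]. Both parameters are
    hypothetical and chosen only for illustration.
    """
    center = boundary / 2 if x < boundary else (1 + boundary) / 2
    return x + alpha * (center - x)

def perceived_distance(a, b, **params):
    """Distance between two continuum points after warping."""
    return abs(warp(a, **params) - warp(b, **params))

# Two pairs with the same physical separation (0.1)
within = perceived_distance(0.10, 0.20)  # same category -> compressed
across = perceived_distance(0.45, 0.55)  # straddles boundary -> expanded
print(round(within, 3), round(across, 3))  # 0.06 0.26
```

With `alpha = 0` the mapping is the identity and no warping occurs; larger values increase both the within-category compression and the between-category expansion. Real similarity spaces for faces are multi-dimensional, which this one-dimensional toy does not capture.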

In conclusion, the evidence from two experiments suggests that categorical perception of facial expressions is not limited to universal emotion categories but readily extends to other types of facial expressions, and that exposure to linguistic facial expression categories can significantly alter categorical perception of affective facial expressions.

Acknowledgments

This work was supported by a grant from the National Institutes of Health (R01 HD13249) to Karen Emmorey and San Diego State University. We thank David Corina for the original black and white images used in Experiment 1, and Judy Reilly for help with stimuli development for Experiment 2. We would also like to thank all of the Deaf and hearing individuals who participated in our studies.

Footnotes

By convention, uppercase Deaf is used when the use of sign language and/or membership in the Deaf community is at issue, and lowercase deaf is used to refer to audiological status.

References

- Arnold P, Murray C. Memory for faces and objects by deaf and hearing signers and hearing non-signers. Journal of Psycholinguistic Research. 1998;27(4):481–497. doi: 10.1023/a:1023277220438.

- Baker SA, Idsardi WJ, Golinkoff RM, Petitto LA. The perception of handshapes in American Sign Language. Memory & Cognition. 2005;33(5):887–904. doi: 10.3758/bf03193083.

- Baker-Shenk C. A microanalysis of the non-manual components of questions in American Sign Language. Unpublished Ph.D. dissertation. University of California; Berkeley: 1983.

- Bates E, Thal D, Finlay B, Clancy B. Early language development and its neural correlates. In: Rapin I, Segalowitz S, editors. Handbook of neuropsychology, part II. 2nd ed. Vol. 8. Elsevier Science B.V.; Amsterdam: 2003. pp. 525–592.

- Beale JM, Keil FC. Categorical effects in the perception of faces. Cognition. 1995;57:217–239. doi: 10.1016/0010-0277(95)00669-x.

- Bebko JM, Bell MA, Metcalfe-Haggert A, McKinnon E. Language proficiency and the prediction of spontaneous rehearsal in children who are deaf. Journal of Experimental Child Psychology. 1998;68:51–69. doi: 10.1006/jecp.1997.2405.

- Bellugi U, O'Grady L, Lillo-Martin D, O'Grady M, van Hoek K, Corina D. Enhancement of spatial cognition in deaf children. In: Volterra V, Erting CJ, editors. From gesture to language in hearing and deaf children. Springer-Verlag; New York: 1990. pp. 278–298.

- Bettger J, Emmorey K, McCullough S, Bellugi U. Enhanced facial discrimination: Effects of experience with American Sign Language. Journal of Deaf Studies and Deaf Education. 1997;2(4):223–233. doi: 10.1093/oxfordjournals.deafed.a014328.

- Bornstein MH, Korda NO. Discrimination and matching within and between hues measured by response time: Some implications for categorical perception and levels of information processing. Psychological Research. 1984;46:207–222. doi: 10.1007/BF00308884.

- Brentari D. A prosodic model of sign language phonology. The MIT Press; Cambridge, MA: 1998.

- Calder AJ, Young AW, Perrett DI, Etcoff NL, Rowland D. Categorical perception of morphed facial expressions. Visual Cognition. 1996;3:81–117.

- Campanella S, Quinet P, Bruyer R, Crommelinck M, Guerit J-M. Categorical perception of happiness and fear facial expressions: An ERP study. Journal of Cognitive Neuroscience. 2002;14(2):210–227. doi: 10.1162/089892902317236858.

- Campbell R, Woll B, Benson PJ, Wallace SB. Categorical perception of face actions: Their role in sign language and in communicative facial displays. Quarterly Journal of Experimental Psychology A. 1999;52(1):62–95. doi: 10.1080/713755802.

- Cohen JD, MacWhinney B, Flatt M, Provost J. PsyScope: A new graphic interactive environment for designing psychology experiments. Behavior Research Methods, Instruments, & Computers. 1993;25(2):257–271.

- Corina D. Recognition of affective and noncanonical linguistic facial expressions in hearing and deaf subjects. Brain and Cognition. 1989;9:227–237. doi: 10.1016/0278-2626(89)90032-8.

- Darwin C. The expression of the emotions in man and animals. Appleton and Company; New York: 1872.

- de Gelder B, Teunisse JP, Benson PJ. Categorical perception of facial expressions: Categories and their internal structure. Cognition and Emotion. 1997;11(1):1–23.

- Drivonikou GV, Kay P, Regier T, Ivry RB, Gilbert AL, Franklin A, et al. Further evidence that Whorfian effects are stronger in the right visual field than the left. Proceedings of the National Academy of Sciences. 2007;104(3):1097–1102. doi: 10.1073/pnas.0610132104.

- Eimas PD. Auditory and phonetic coding of cues for speech: Discrimination of the [r-l] distinction by young infants. Perception and Psychophysics. 1975;18:341–347.

- Eimas PD, Siqueland ER, Jusczyk P, Vigorito J. Speech perception in infants. Science. 1971;171:303–306. doi: 10.1126/science.171.3968.303.

- Ekman P. About brows: Affective and conversational signals. In: von Cranach M, Foppa K, Lepenies W, Ploog D, editors. Human ethology. Cambridge University Press; New York: 1979. pp. 169–248.

- Ekman P. Strong evidence for universals in facial expressions: A reply to Russell's mistaken critique. Psychological Bulletin. 1994;115(2):268–287. doi: 10.1037/0033-2909.115.2.268.

- Ekman P, Friesen W. Constants across cultures in the face and emotion. Journal of Personality and Social Psychology. 1971;17(2):124–129. doi: 10.1037/h0030377.

- Ekman P, Friesen W. Facial action coding system. Consulting Psychologists Press; Palo Alto, CA: 1978.

- Ekman P, Friesen WV, O'Sullivan M, Chan A, Diacoyanni-Tarlatzis I, Heider K, et al. Universals and cultural differences in the judgments of facial expressions of emotion. Journal of Personality and Social Psychology. 1987;53(4):712–717. doi: 10.1037//0022-3514.53.4.712.

- Emmorey K, McCullough S, Brentari D. Categorical perception in American Sign Language. Language and Cognitive Processes. 2003;18(1):21–45.

- Etcoff NL, Magee JJ. Categorical perception of facial expressions. Cognition. 1992;44:227–240. doi: 10.1016/0010-0277(92)90002-y.

- Gilbert AL, Regier T, Kay P, Ivry RB. Whorf hypothesis is supported in the right visual field but not the left. Proceedings of the National Academy of Sciences. 2006;103(2):489–494. doi: 10.1073/pnas.0509868103.

- Goldstein NS, Feldman RS. Knowledge of American Sign Language and the ability of hearing individuals to decode facial expressions of emotion. Journal of Nonverbal Behavior. 1996;20:111–122.

- Goldstein NS, Sexton J, Feldman RS. Encoding of facial expressions of emotion and knowledge of American Sign Language. Journal of Applied Social Psychology. 2000;30:67–76.

- Herpa CM, Heining M, Young AW, Browning M, Benson PJ, Phillips ML, et al. Conscious and nonconscious discrimination of facial expressions. Visual Cognition. 2007;15(1):36–74.

- Hillger LA, Koenig O. Separable mechanisms in face processing: Evidence from hemispheric specialization. Journal of Cognitive Neuroscience. 1991;3:42–58. doi: 10.1162/jocn.1991.3.1.42.

- Huttenlocher J, Hedges LV, Vevea JL. Why do categories affect stimulus judgment? Journal of Experimental Psychology: General. 2000;129:220–241. doi: 10.1037//0096-3445.129.2.220.

- Iverson P, Kuhl PK, Akahane-Yamada R, Diesch E, Kettermann A, Siebert C. A perceptual interference account of acquisition difficulties for non-native phonemes. Cognition. 2003;87:B47–B57. doi: 10.1016/s0010-0277(02)00198-1.

- Janzen T, Shaffer B. Gesture as the substrate in the process of ASL grammaticization. In: Meier RP, et al., editors. Modality and structure in signed and spoken languages. Cambridge University Press; New York: 2002. pp. 199–223.

- Kiffel C, Campanella S, Bruyer R. Categorical perception of faces and facial expressions: The age factor. Experimental Aging Research. 2005;31:119–147. doi: 10.1080/03610730590914985.

- Liberman AM, Harris KS, Hoffman HS, Griffith BC. The discrimination of speech sounds within and across phoneme boundaries. Journal of Experimental Psychology. 1957;54:358–368. doi: 10.1037/h0044417.

- Liddell SK. American Sign Language syntax. Mouton; The Hague: 1980.

- Livingston KR, Andrews JK, Harnad S. Categorical perception effects induced by category learning. Journal of Experimental Psychology: Learning, Memory & Cognition. 1998;24(3):732–753. doi: 10.1037//0278-7393.24.3.732.

- Mayberry RI. First-language acquisition after childhood differs from second-language acquisition: The case of American Sign Language. Journal of Speech and Hearing Research. 1993;36(6):1258–1270. doi: 10.1044/jshr.3606.1258.

- McCullough S, Emmorey K. Face processing by deaf ASL signers: Evidence for expertise in distinguishing local features. Journal of Deaf Studies and Deaf Education. 1997;2(4):212–222. doi: 10.1093/oxfordjournals.deafed.a014327.

- McCullough S, Emmorey K, Sereno M. Neural organization for recognition of grammatical and emotional facial expressions in deaf ASL signers and hearing nonsigners. Cognitive Brain Research. 2005;22(2):193–203. doi: 10.1016/j.cogbrainres.2004.08.012.

- Newport EL. Maturational constraints on language learning. Cognitive Science. 1990;14:11–28.

- Pilling M, Wiggett A, Özgen E, Davies IRL. Is color “categorical perception” really perceptual? Memory & Cognition. 2003;31(4):538–551. doi: 10.3758/bf03196095.

- Reilly JS, McIntire M, Bellugi U. The acquisition of conditionals in American Sign Language: Grammaticized facial expressions. Applied Psycholinguistics. 1990a;11(4):369–392.

- Reilly JS, McIntire ML, Bellugi U. Faces: The relationship between language and affect. In: Volterra V, Erting CJ, editors. From gesture to language in hearing and deaf children. Springer-Verlag; Berlin: 1990b. pp. 128–141.

- Roberson D, Damjanovic L, Pilling M. Categorical perception of facial expressions: Evidence for a “category adjustment” model. Memory & Cognition. 2007;35(7):1814–1829. doi: 10.3758/bf03193512.

- Roberson D, Davidoff J. The categorical perception of colors and facial expressions: The effect of verbal interference. Memory & Cognition. 2000;28(6):977–986. doi: 10.3758/bf03209345.

- Roberson D, Pak H, Hanley JR. Categorical perception of colour in the left and right visual field is verbally mediated: Evidence from Korean. Cognition. 2008;107:752–762. doi: 10.1016/j.cognition.2007.09.001.

- Rossion B, Dricot L, Devolder A, Bodart JM, Crommelinck M. Hemispheric asymmetries for whole-based and part-based face processing in the human fusiform gyrus. Journal of Cognitive Neuroscience. 2000;12:793–802. doi: 10.1162/089892900562606.

- Stern D. The first relationship. Harvard University Press; Cambridge, MA: 1977.

- Tan LH, Chan AHD, Kay P, Khong P-L, Yip LKC, Luke K-L. Language affects patterns of brain activation associated with perceptual decision. Proceedings of the National Academy of Sciences. 2008;105(10):4004–4009. doi: 10.1073/pnas.0800055105.

- Tanaka JW, Sengco JA. Features and their configuration in face recognition. Memory & Cognition. 1997;25(5):583–592. doi: 10.3758/bf03211301.

- Wilson M, Emmorey K. Working memory for sign language: A window into the architecture of working memory. Journal of Deaf Studies and Deaf Education. 1997;2(3):123–132. doi: 10.1093/oxfordjournals.deafed.a014318.

- Zeshan U. Head, hand and face - negative construction in sign languages. Linguistic Typology. 2004;8:1–57.