Abstract

The adult brain is endowed with mechanisms subserving enhanced processing of salient emotional and social cues. Stimuli associated with threat represent one such class of cues. Previous research suggests that preferential allocation of attention to social signals of threat (i.e. a preference for fearful over happy facial expressions) emerges during the second half of the first year. The present study was designed to determine the age of onset for infants’ attentional bias for fearful faces. Allocation of attention was studied by measuring event-related potentials (ERPs) and looking times (in a visual paired comparison task) to fearful and happy faces in 5- and 7-month-old infants. In 7-month-olds, the preferential allocation of attention to fearful faces was evident in both ERPs and looking times, i.e. the negative central mid-latency ERP amplitudes were more negative, and the looking times were longer for fearful than happy faces. No such differences were observed in the 5-month-olds. It is suggested that an enhanced sensitivity to facial signals of threat emerges between 5 and 7 months of age, and it may reflect functional development of the neural mechanisms involved in processing of emotionally significant stimuli.

Keywords: facial expression, attention, event-related potentials, looking time, infants

INTRODUCTION

The ability to recognize salient emotional and social cues and prioritize them over other competing stimuli is critical for adaptive behaviour. Indeed, behavioural and neuroimaging studies in adults have provided evidence for enhanced processing of threat-related signals, such as fearful facial expressions (Vuilleumier, 2005; Williams, 2006). The impact of emotional salience on attention and perception is reflected, for example, in preferential allocation of attention to fearful over simultaneously presented neutral faces (Pourtois et al., 2004; Holmes et al., 2005), lower detection threshold for fearful than happy/neutral faces in rapid serial presentation conditions (Milders et al., 2006), and improved visual contrast sensitivity following fearful face cues (Phelps et al., 2006). Neuroimaging and event-related potential (ERP) studies have also provided evidence for enhanced neural responses to fearful faces in the visual cortex (Morris et al., 1998; Batty and Taylor, 2003; Vuilleumier et al., 2004; Williams et al., 2006; Leppänen et al., 2007a).

The developmental origins for the enhanced processing of social signals of threat are not known in detail and emotional face processing in general is considered to follow a protracted developmental course throughout childhood and adolescence (Leppänen and Nelson, 2006). Previous research, however, suggests that a rudimentary capacity to prefer salient emotional cues emerges by the second half of the first year. There is converging evidence that at 7 months of age, infants allocate attention preferentially to fearful over happy expressions. When looking times have been measured to paired presentations of fearful and happy faces (i.e. a visual paired comparison procedure; VPC), 7-month-olds have been shown to spontaneously look longer at fearful than happy expressions (Nelson and Dolgin, 1985; Kotsoni et al., 2001; Leppänen et al., 2007b). In studies investigating attention allocation to emotional faces by recording ERPs, 7-month-olds typically display a larger negativity at frontocentral recording sites 400 ms after stimulus onset for fearful as compared to happy faces (Nelson and de Haan, 1996; de Haan et al., 2004; Leppänen et al., 2007b). This ‘Negative central’ (Nc) component is thought to reflect an obligatory attentional response to salient, meaningful and, in visual memory paradigms, to infrequent stimuli [see de Haan (2007) for review]. The cortical sources of the infant Nc have been localized in the anterior cingulate region (Reynolds and Richards, 2005), corroborating its role in attention regulation (cf. Bush et al., 2000). Finally, our previous study (Peltola et al., 2008) showed that 7-month-old infants disengaged their attention significantly less frequently from centrally presented fearful faces than happy faces or control stimuli in order to shift attention to peripheral targets. These results paralleled those found in adults (e.g. Georgiou et al., 2005).

Although there is growing evidence for 7-month-old infants’ attentional bias for fearful faces, the age of onset for such a bias is not known. The majority of infant ERP studies on emotional face processing has centred on 7-month-old infants (Nelson and de Haan, 1996; de Haan et al., 2004; Grossmann et al., 2007; Leppänen et al., 2007b), and, to our knowledge, there are no published studies investigating younger infants’ ERP responses to fearful emotional expressions [but see Hoehl et al. (2008) for related research]. Studies with human newborns (Farroni et al., 2007) and macaque monkeys (Bauman and Amaral, 2008) indicate that at the early stages of development, infants are not particularly sensitive to fearful faces and other facial signals of threat. There is even some evidence for an early ‘positivity bias’ (Vaish et al., 2008) as earlier studies found longer looking times to happy than angry/frowning and neutral sequentially presented expressions in 5-month-old infants (LaBarbera et al., 1976; Wilcox and Clayton, 1968). Behavioural studies employing a habituation-recovery paradigm to examine infants’ ability to categorize multiple exemplars of an expression to a single class have provided evidence that at the age of 5 months, infants are able to recognize happy expressions (Caron et al., 1982), even when they have been habituated to different intensities of smiles posed by different models (Bornstein and Arterberry, 2003). Interestingly, in an additional looking time assessment with happy and fearful faces, 5-month-olds did not show an attentional preference for either happy or fearful faces (i.e. there were no differences in looking times between these expressions; Bornstein and Arterberry, 2003).

In the present study, we examined with electrophysiological and behavioural methods whether enhanced visual attention to fearful faces is already present at 5 months of age. A standard visual ERP paradigm with happy and fearful faces was first presented to 5- and 7-month-old infants, after which they saw a VPC presentation with two 10-second trials of happy/fearful face pairs. For 7-month-olds, we expected to replicate the previous findings of a larger Nc and longer looking time for fearful than happy faces (Nelson and de Haan, 1996; de Haan et al., 2004; Leppänen et al., 2007b). On the basis of the previous literature (e.g. Bornstein and Arterberry, 2003), we did not expect to see a similar attentional bias in 5-month-old infants.

METHODS

Participants

For the ERPs, the final sample consisted of 18 5-month-old and 20 7-month-old infants. EEG was also recorded from an additional 11 5-month-old and 9 7-month-old infants, who were excluded from the ERP analyses due to fussiness or excessive movement artefacts resulting in <10 good trials. VPC data were analysed from 23 5-month-olds and 26 7-month-olds. VPC data from six infants were discarded due to a side bias (i.e. looking at one stimulus for <5% out of the total looking time for each trial), from two infants due to experimenter error, and from one infant due to fussiness. The parents of the participants were contacted through birth records and local Child Welfare Clinics, and all testing sessions were scheduled within 1 week of the infants’ 5th- or 7th-month birthday. All infants were full term (37–42 weeks), with a birth weight of >2400 g, and free from postnatal visual or neurological abnormalities. Approval for the study was obtained from the research permission committee of the Department of Social Services and Health Care of the city of Tampere.
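The side-bias exclusion rule can be written as a short check. The following is a minimal sketch, not the authors' analysis code: the function name and the data layout (one pair of left/right looking times per VPC trial) are hypothetical, and it assumes the criterion must be met on every trial.

```python
def has_side_bias(trials, threshold=0.05):
    """Return True if, on every trial, one stimulus received less than
    `threshold` of the total looking time (assumed reading of the criterion).

    trials: list of (left_seconds, right_seconds) tuples, one per VPC trial.
    """
    if not trials:
        return False
    for left, right in trials:
        total = left + right
        if total == 0:
            # No looking at either stimulus, so no preference can be computed.
            return False
        if min(left, right) / total >= threshold:
            return False
    return True
```

With this sketch, an infant who looked almost exclusively at one side on both trials would be flagged, whereas an infant who divided looking on at least one trial would be retained.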

Eight of the infants in the ERP sample and 14 of the infants in the VPC sample were tested twice (i.e. at both ages). The potential influence of repeated testing was taken into account in data analyses, as described below.

Stimuli and task procedure

The stimuli were colour images of fearful and happy facial expressions posed by four female models. All stimuli were presented on a white background and cropped to exclude all non-face features. Two of the four stimulus models were taken from our own laboratory stimulus database and the other two were from the MacBrain Face Stimulus Set (Tottenham et al., in press). Prior to data collection, a group of adults (n = 18) rated the stimuli for fearfulness and happiness on a scale from 1 to 7. The ratings confirmed that the fearful (M = 5.9; range = 5.2–6.6) and happy (M = 6.1; range = 5.6–6.5) expressions were considered good and equally intense examples of the respective emotions. Consistent with the methodology used in prior infant studies, each infant was presented with expressions of only one model. Different models were, however, used in the ERP and VPC tasks. The infants who participated in the experiment twice saw the faces of the two remaining models on their second visit. With a looking distance of 60 cm, the stimuli measured 15.4° and 10.8° of vertical and horizontal visual angle, respectively.
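For readers who wish to reproduce the stimulus dimensions, the reported visual angles can be converted to on-screen size with the standard relation size = 2 · d · tan(θ/2). The sketch below is illustrative; the function name is hypothetical, and the physical sizes it yields are derived here rather than reported in the study.

```python
import math

def size_from_visual_angle(angle_deg, distance_cm):
    """Physical extent (cm) that subtends angle_deg at distance_cm,
    assuming the stimulus is centred on the line of sight."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

# Values from the text: 15.4 deg x 10.8 deg at a 60 cm viewing distance.
height_cm = size_from_visual_angle(15.4, 60.0)
width_cm = size_from_visual_angle(10.8, 60.0)
print(f"{height_cm:.1f} cm x {width_cm:.1f} cm")
```

Under these assumptions the faces would have measured roughly 16 cm by 11 cm on the screen.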

Upon the family’s arrival at the laboratory, the experimental procedure was described and signed consent was obtained from the parent. EEG was recorded while the infants were sitting on their parent’s lap in front of a 19-inch LCD monitor surrounded by black panels. Fearful and happy faces were shown repeatedly for 1000 ms in random order. Between the stimuli, a black-and-white 4°×4° checkerboard stimulus was presented at the centre of the screen to attract the infants’ attention. The checkerboard flickered for the first 1000 ms at 4 Hz and then remained stationary. The experimenter monitored the infants’ looking behaviour through a hidden video camera mounted above the monitor and initiated each trial only when the infants were attending to the stationary checkerboard stimulus. The trials on which the infant blinked or turned his/her eyes away from the face during stimulus presentation were marked online as bad by the experimenter. Trials were presented until the infant became too fussy or inattentive to continue, but with a maximum of 150 trials. On average, 72.3 (SD = 25.4; range = 28–149) trials were presented during the EEG recording. Stimulus presentation was controlled by Neuroscan Stim software running on a 3.4 GHz desktop computer.

After the EEG recording, the electrode cap was removed and two 10-s VPC trials with a happy and a fearful face were presented. On one trial, a happy face was on the left, on the other trial, a fearful face was on the left. The faces were presented 12° apart (ear to ear). The left-right positioning of the two expressions was counterbalanced between participants. The infants’ looking behaviour was recorded with the video camera for offline analyses. The VPC trials were presented with E-Prime software (Psychology Software Tools Inc., www.pstnet.com/eprime).

Acquisition and analysis of the electrophysiological data

Continuous EEG was recorded with 30 Ag–AgCl electrodes mounted in an elastic cap (Quik-Cap) and placed according to the 10–20 system. Neuroscan QuikCell liquid electrolyte electrode application technique was used to obtain electrode impedances <10 kΩ. Linked mastoids served as the reference. EOG electrodes were left out because pilot testing indicated that most infants tolerated those electrodes poorly. However, great care was taken to ensure that the final data were free of ocular artefacts by (i) the experimenter monitoring the infants’ eyes continuously during stimulus presentation (and marking trials with eye movements as bad) and (ii) visually inspecting the EEG for all trials, as described below. The data were sampled at 500 Hz, band-pass filtered from 0.1 to 100 Hz and stored on a computer disk for offline analyses by Scan 4.3 software. Offline, the data were lowpass-filtered at 30 Hz, segmented into 1100 ms epochs starting 100 ms before the presentation of each stimulus and baseline-corrected against the mean voltage during the 100-ms pre-stimulus period. Trials marked as bad online by the experimenter were removed, and the epochs were also visually inspected for artefacts. Trials with motion artefacts resulting from head or body movements, high-frequency EMG artefacts or activity exceeding A-D values were discarded. Furthermore, for the electrodes above the eyes (Fp1, Fp2), trials with the amplitude exceeding 100 µV were discarded. Infants with <10 good trials in either of the experimental conditions (fearful and happy faces) were excluded from the analyses. For the infants included in the analyses, there were no significant differences in the number of good fearful and happy trials between age groups (5-month-olds: fearful M = 16.5, happy M = 16.4; 7-month-olds: fearful M = 17.4, happy M = 18.8), F(1, 36) = 1.7, P > 0.2.
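The automated parts of this pipeline (baseline correction, the amplitude criterion at Fp1/Fp2 and the minimum trial count) can be sketched in a few lines of NumPy. This is a hedged illustration, not the Scan 4.3 procedure actually used: the function names and array layout are hypothetical, the 100 µV limit is assumed to apply to absolute amplitude, and the visual inspection steps are not modelled.

```python
import numpy as np

SFREQ = 500               # sampling rate in Hz (from the text)
PRE_MS = 100              # pre-stimulus baseline in ms (from the text)
MIN_GOOD_TRIALS = 10      # minimum good trials per condition (from the text)
FRONTAL_LIMIT_UV = 100.0  # limit at Fp1/Fp2; absolute value is an assumption

def baseline_correct(epochs):
    """Subtract the mean pre-stimulus voltage from each trial and channel.

    epochs: array (n_trials, n_channels, n_samples) in microvolts,
            each epoch starting PRE_MS before stimulus onset.
    """
    n_base = int(PRE_MS * SFREQ / 1000)  # 50 samples at 500 Hz
    baseline = epochs[:, :, :n_base].mean(axis=2, keepdims=True)
    return epochs - baseline

def reject_frontal(epochs, frontal_idx):
    """Drop trials whose absolute amplitude exceeds the limit on frontal channels."""
    peak = np.abs(epochs[:, frontal_idx, :]).max(axis=(1, 2))
    return epochs[peak <= FRONTAL_LIMIT_UV]

def enough_trials(fearful, happy):
    """An infant is retained only if both conditions have enough good trials."""
    return len(fearful) >= MIN_GOOD_TRIALS and len(happy) >= MIN_GOOD_TRIALS
```

In this sketch an 1100 ms epoch corresponds to 550 samples, of which the first 50 form the baseline.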

Attention-sensitive brain responses to the stimuli were examined by analysing the Nc component. There are also other infant ERP components that have been recently associated with the processing of facial expressions, most importantly the posterior N290 and P400 (Leppänen et al., 2007b, in press; Kobiella et al., 2008). However, as the primary focus of our study was on processes related to visual attention, and because the electrode cap used in the present study has a rather sparse coverage of posterior regions where the N290 and P400 components are most prominent, we will only report data on the Nc component. The relation of the Nc and attention is well established and the frontocentral regions where the Nc is most prominent were well covered in the present measurement. To analyse the Nc component, amplitude averages were calculated within a time window of 350–600 ms for electrodes C3 and C4. This time window and these recording sites were chosen on the basis of previous literature (de Haan et al., 2004; Leppänen et al., 2007b) and our own data (i.e. inspection of individual infants’ average ERP plots) showing the most prominent Nc amplitudes on central electrodes within this time window. For both age groups, Nc amplitudes were subjected to a 2 × 2 analysis of variance (ANOVA) with Expression (fearful, happy) and Hemisphere (left, right) as within-subject factors.
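To make the Nc quantification concrete, the sketch below computes the mean amplitude in the 350–600 ms window and the F values of a 2 × 2 within-subject ANOVA. It is an illustration under stated assumptions rather than the authors' software: the function names and array layout are hypothetical, and the ANOVA relies on the fact that, when every within-subject factor has two levels, each effect's F(1, n − 1) equals the squared one-sample t statistic of the corresponding per-subject contrast.

```python
import numpy as np

SFREQ = 500            # Hz (from the text)
EPOCH_START_MS = -100  # epochs start 100 ms before stimulus onset (from the text)

def window_mean(erp, start_ms=350, end_ms=600):
    """Mean amplitude of a 1-D averaged waveform within [start_ms, end_ms)."""
    i0 = int((start_ms - EPOCH_START_MS) * SFREQ / 1000)
    i1 = int((end_ms - EPOCH_START_MS) * SFREQ / 1000)
    return float(np.mean(erp[i0:i1]))

def rm_anova_2x2(data):
    """F values, each with (1, n - 1) df, for a 2 x 2 within-subject design.

    data: array (n_subjects, 2, 2) indexed [subject, expression, hemisphere],
          e.g. mean Nc amplitudes at C3 and C4 for fearful and happy faces.
    """
    def f_from_contrast(scores):
        n = len(scores)
        t = scores.mean() / (scores.std(ddof=1) / np.sqrt(n))
        return float(t ** 2)

    # Per-subject contrast scores for the two main effects and the interaction.
    expr = data[:, 0, :].mean(axis=1) - data[:, 1, :].mean(axis=1)
    hemi = data[:, :, 0].mean(axis=1) - data[:, :, 1].mean(axis=1)
    inter = (data[:, 0, 0] - data[:, 0, 1]) - (data[:, 1, 0] - data[:, 1, 1])
    return {
        "expression": f_from_contrast(expr),
        "hemisphere": f_from_contrast(hemi),
        "interaction": f_from_contrast(inter),
    }
```

A contrast with zero variance across subjects would make the corresponding F undefined, so real data should be checked for this before the sketch is applied.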

Analysis of the behavioural data

The video recordings of the infants’ looking behaviour during VPC trials were coded by an observer blind to the left–right positioning of the fearful and happy faces. Queen's Video Coder (Baron et al., 2001) was used to analyse the total time the infants spent looking at the stimulus on the left and right side for each trial. To ensure the reliability of the coding, another observer coded ∼25% of the recordings (13 infants). Pearson correlations between the two observers’ coding of the participants’ total looking times during individual VPC trials ranged from 0.95 to 1. The analyses were performed on the total looking time for fearful and happy faces, averaged across the two trials.
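The reliability check and the averaging step can be illustrated as follows. This is a minimal sketch with hypothetical function names and data layout; it is not the output format of Queen's Video Coder.

```python
import numpy as np

def interrater_r(coder_a, coder_b):
    """Pearson correlation between two observers' looking times (s)
    for the same set of infants on one VPC trial."""
    return float(np.corrcoef(coder_a, coder_b)[0, 1])

def mean_looking_times(trials):
    """Average looking time per expression across an infant's VPC trials.

    trials: list of dicts with 'fearful' and 'happy' looking times in seconds.
    """
    fearful = np.mean([t["fearful"] for t in trials])
    happy = np.mean([t["happy"] for t in trials])
    return float(fearful), float(happy)
```

Each infant's pair of averaged values would then enter the comparison of fearful and happy looking times.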

RESULTS

Electrophysiological data

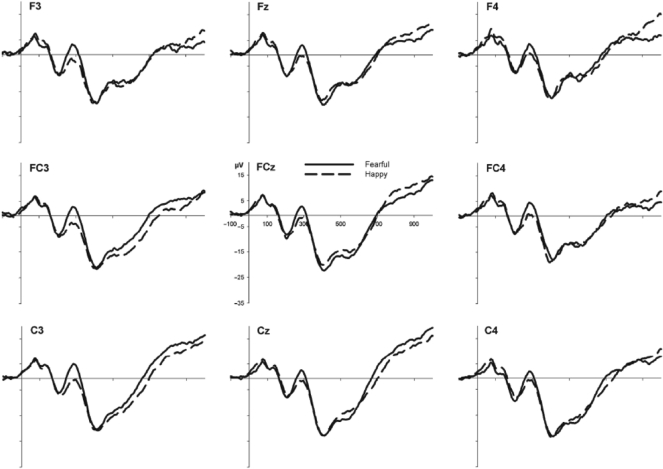

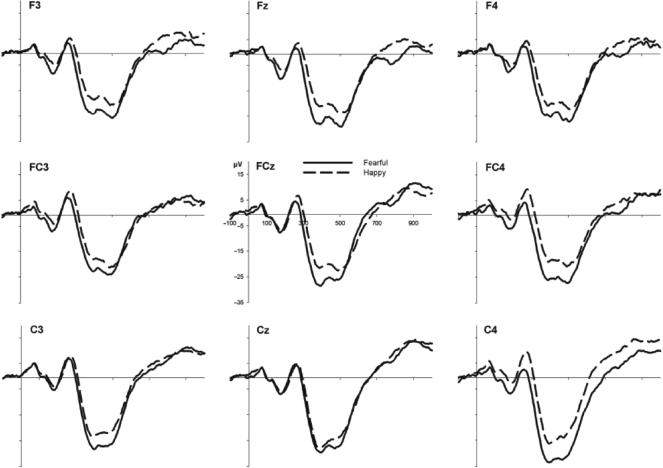

The grand average ERPs measured from frontocentral electrode sites for 5- and 7-month-old infants are shown in Figures 1 and 2, respectively. For the 5-month-olds, an ANOVA showed no main effects or interactions involving Expression or Hemisphere, Fs < 2.2. Thus, the average Nc amplitudes were no greater for fearful (M = −16.3 μV; SD = 11.1) than happy (M = −16.8 μV; SD = 12.7) faces at 5 months of age. For the 7-month-olds’ ERP data, however, an ANOVA resulted in main effects of Hemisphere, F(1, 19) = 5.3, P < 0.05, and Expression, F(1, 19) = 8.6, P < 0.01, but no interaction between them, F ≤ 1. Thus, the mean amplitudes within 350–600 ms after stimulus onset were generally more negative over the right than the left hemisphere. More importantly, the Nc amplitudes were significantly greater for fearful (M = −24.9 μV; SD = 8.0) as compared to happy (M = −18.9 μV; SD = 9.7) faces in 7-month-old infants.

Fig. 1.

Grand average ERP waveforms for fearful and happy faces over the left (F3, FC3, C3), central (Fz, FCz, Cz) and right (F4, FC4, C4) frontocentral region for the 5-month-old infants.

Fig. 2.

Grand average ERP waveforms for fearful and happy faces over the left (F3, FC3, C3), central (Fz, FCz, Cz) and right (F4, FC4, C4) frontocentral region for the 7-month-old infants.

Behavioural data

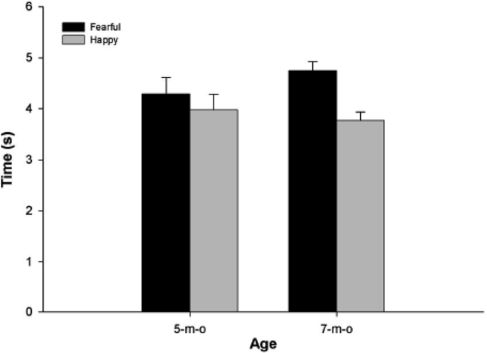

The average looking times for fearful and happy faces in the VPC are presented in Figure 3. As the figure shows, 5-month-olds showed no significant difference in looking times for fearful (M = 4.3 s; SD = 1.6) and happy (M = 4.0 s; SD = 1.5) faces, F(1, 22) = 0.47, P > 0.4. However, 7-month-olds looked significantly longer at fearful (M = 4.7 s; SD = 0.9) than at happy (M = 3.8 s; SD = 0.8) faces, F(1, 25) = 13.1, P < 0.01.

Fig. 3.

Average looking times for fearful and happy faces in 5- and 7-month-old infants in the VPC task.

Control analyses

As there were infants who contributed to the data at both 5 and 7 months of age, additional analyses were warranted to examine any possible differences between infants who participated once and twice. Indeed, for the 7-month-olds’ ERP data, there was a marginal three-way interaction including Expression (2), Hemisphere (2) and Visit (2: once, twice), F(1, 18) = 4.8, P < 0.06. For the 7-month-old infants who participated only once, a two-way ANOVA indicated a marginal Expression × Hemisphere interaction, F(1, 11) = 4.0, P < 0.08. Pairwise comparisons showed a larger Nc for fearful than happy faces in the right hemisphere, P ≤ 0.05, but not in the left hemisphere, P > 0.6. In contrast, in infants participating at both 5 and 7 months of age, a two-way ANOVA showed a significant main effect for Expression due to larger Nc for fearful than happy faces, P < 0.01, but no Expression × Hemisphere interaction, F(1, 7) = 1.1, P > 0.3.

A 2 (Expression) × 2 (Visit) ANOVA with the 7-month-olds’ VPC data did not yield a significant two-way interaction, F(1, 24) = 0.49, P > 0.4, indicating that the looking times were longer for fearful than happy faces irrespective of whether the infants were on their first or second visit at 7 months of age, both Ps ≤ 0.05.

DISCUSSION

We examined with ERP and looking time measures whether enhanced visual attention to fearful faces emerges between 5 and 7 months of age or whether it is already present at 5 months of age. For the 7-month-olds, the results replicated earlier findings of a larger Nc component and longer looking times for fearful than happy faces. The 5-month-olds, however, showed an Nc of a similar magnitude for fearful and happy faces, and they also looked equally long at both expressions. It is important to note that the absence of the effects in 5-month-olds was not due to lower-quality data: the younger infants showed a prominent Nc response, provided an equal number of trials for the ERP analyses with the older infants, and looked, on average, at the faces in the VPC task as long as the 7-month-olds.

Thus, the differential pattern of results in 5- and 7-month-old infants implies that the attentional bias for fearful faces emerges at around 7 months of age. An interesting issue is, of course, whether the stronger allocation of attention to fearful faces in 7-month-olds reflects emotional processing, i.e. whether it is the emotional signal value of a fearful face, which is detected and reacted to with increased attention. Fearful faces are considered as potent stimuli for activating the neural mechanisms responsible for detecting and reacting to threat-related signals and potential dangers in the environment (Whalen, 1998). The amygdala is seen as an integral neural structure in associating emotional significance to environmental stimuli and producing a cascade of responses serving rapid alerting and optimal perceptual functioning when encountering signals of threat (Vuilleumier, 2005; Williams, 2006). Indeed, in adults, fearful facial expression cues have been shown to result in enhanced attentional and perceptual processing (Holmes et al., 2005; Milders et al., 2006; Phelps et al., 2006) as well as increased skin conductance responses (Williams et al., 2005). Furthermore, there is a wealth of neuroimaging and ERP evidence for an enhanced neural activation for fearful as compared to happy and neutral faces in the visual occipitotemporal cortex, a process also considered to result from the modulatory influence of the amygdala (Morris et al., 1998; Vuilleumier et al., 2004; Williams et al., 2006; Vuilleumier and Pourtois, 2007; Leppänen et al., 2007a).

It is currently unclear whether a similar subcortical modulatory mechanism could be functional already in early human development. Current neuroimaging techniques (e.g. fMRI) are not feasible for studying the involvement of the amygdala circuitry in infants’ face processing. Moreover, as ERPs reflect mostly cortical postsynaptic activity, the putative amygdala-mediated modulation of ERP activity has to be considered tentatively. Nevertheless, there is some intriguing indirect evidence suggesting that the second half of the first year may be an important turning point in the development of brain systems that underlie sensitivity to signals of threat conveyed by others’ faces (Leppänen and Nelson, 2009). In macaque monkeys, the connections between various cortical areas and the amygdala are relatively mature shortly after birth (Nelson et al., 2002). Interestingly, infant monkeys at around 2 months of age (roughly equalling 8-month-old human infants) become increasingly sensitive to threat-related stimuli, including others’ facial expressions (Bauman and Amaral, 2008). At the same age, monkeys also start to exhibit fear of unfamiliar conspecifics (Suomi, 1999), in close resemblance to the stranger anxiety displayed by human infants from 6 to 7 months of age (Kagan and Herschkowitz, 2005).

As mentioned in the introduction, the neural generators of the Nc component in infant ERPs have been suggested to be located in prefrontal regions, mainly in the anterior cingulate cortex (ACC; Reynolds and Richards, 2005). ACC has been implicated in various functions [see Bush et al. (2000) for review]. For the present purposes, it is interesting to note that in adults, coactivation of ACC and the amygdala has been observed during the processing of fearful faces (Morris et al., 1998; Vuilleumier et al., 2001). ACC activation in such tasks has been associated with the regulation of attentional, behavioural and emotional responses to threat-related stimuli (Morris et al., 1998; Bush et al., 2000; Elliott et al., 2000). In this light, it is tempting to hypothesize that the larger Nc response to fearful faces in 7-month-old infants would reflect stronger ACC activity resulting, in turn, from an increased response in the amygdala. Naturally, we make this inference about infants’ ERP data with great caution, because the time-course of the development of the amygdala and its functional connectivity with ACC and related cortical attention networks is not known.

In addition to the development of the neural circuitry for processing affective significance, it is important to consider the experience-mediated mechanisms that could also be responsible for the observed changes in infants’ sensitivity to fearful faces. It has been noted that changes in infants’ locomotive abilities (e.g. the onset of crawling at around 7 months) are associated with increased variability in caregivers’ expressive behaviours as well as changes in infants’ monitoring of such cues [see Campos et al. (2000) for review]. These changes may form the basis for referential emotional communication as emotional signals start to gain a more direct function in regulating the infant's behaviour. In parallel, developmental changes in infants’ general information processing abilities may also bring about changes in the efficiency of associating emotional meaning with a broader range of stimuli (Vaish et al., 2008). However, despite such important developmental progression occurring at around the same age period as the effects observed in the present study, it is not known to what extent the attentional bias for fearful faces could be accounted for by experience-mediated mechanisms. Exact data on the number of occasions a typical 7-month-old has observed fearful faces are lacking. However, our own experience as well as anecdotal evidence from parents suggests that such direct experience with fearful faces remains very limited at this age period (cf. Malatesta and Haviland, 1982).

The absence of facial expression effects in 5-month-olds in the present study suggests that the perceptual systems of younger infants do not detect the informational value conveyed by fearful faces. At this age, smiling faces are an emotionally salient stimulus to the infants as a result of repeated and affectively rewarding interactions with a smiling caregiver. Indeed, there is some earlier evidence for a looking time bias to happy expressions in younger infants (Wilcox and Clayton, 1968; LaBarbera et al., 1976, but see Bornstein and Arterberry, 2003). In this vein, we could have even expected attention to be allocated more strongly towards happy faces. However, although happy faces are undoubtedly emotionally salient cues, their impact on the allocation of attentional resources in 5-month-olds might not be expected to be comparable to the impact of fearful faces later in development. Theories of affective significance processing propose that once an individual begins to associate different cues with threat- and reward-related qualities, threat-related emotional cues are given precedence on a short time scale, resulting in rapid alerting and possibly interruption of ongoing activity, while positive cues do not require such immediate action but might instead act as reinforcers for an organism to continue with the ongoing activity (Cacioppo et al., 1999; Williams, 2006; Vaish et al., 2008).

On the other hand, it is interesting to note that a recent study (Hoehl et al., 2008) suggests that even infants as young as 3 months old are sensitive to the emotional signal value of fearful faces. In their study, 3-month-olds’ Nc was larger for objects that had been preceded by an image of a fearful face gazing towards the same object when compared with objects that had been paired with a neutral gaze. A direct comparison between that study and the present results is problematic as Hoehl et al. did not report ERPs time-locked to the faces. Nevertheless, it seems possible that, similarly to adults (Adams and Kleck, 2003), averted gaze enhances the processing of fearful faces already at 3 months of age. However, an alternative perceptual/low-level interpretation of the results of Hoehl et al. (2008) is that the enlarged fearful eyes produced a more robust attentional orienting response towards the object than neutral eyes did. This may have resulted in enhanced visual processing of (and a larger Nc for) the object. In this case, no processing of the emotional signal of the preceding face would be necessarily involved.

Seven-month-old infants’ visual preference for fearful faces has also been interpreted as a response to stimuli that are merely unfamiliar to the infants (i.e. novel; Nelson and de Haan, 1996; Vaish et al., 2008). Indeed, it has been shown that during the first half of their first year, infants encounter negative facial expressions rarely in their environment (Malatesta and Haviland, 1982). However, the present findings cannot be easily interpreted as novelty responses. We did not observe an attentional bias in 5-month-olds, to whom fearful faces are, if anything, at least as unfamiliar as they are for 7-month-olds. Furthermore, our previous study (Peltola et al., 2008) showed that novel grimaces lacking emotional signal value but rated as equally novel as the fearful faces did not have an attention holding effect on 7-month-olds’ visual attention as fearful faces did. One could also argue that the 5-month-olds had a weaker stored representation of a happy face than the older infants. If this was the case, it could obviously attenuate the novelty response toward fearful faces in the younger age group, as novelty would have an impact on the processing of both fearful and happy expressions. Although we had no means to assess this issue directly in the present study, the possibility for such an age effect seems rather unlikely in light of previous research. Namely, it has been shown that at the age of 5 months, infants are already able to generalize from multiple exemplars (identities) of happy faces a category of a happy face, even when smiles with varying intensities are used during habituation (e.g. Bornstein and Arterberry, 2003).

There are also previous studies comparing infants’ responses to novel and familiar stimuli, which indicate that infant attention is sensitive to the meaningfulness and emotional content of stimuli. First, de Haan and Nelson (1997, 1999) studied 6-month-old infants and found a larger Nc for familiar than unfamiliar stimuli (mother's face vs stranger's face and familiar vs unfamiliar toys), whereas no effects of stimulus familiarity on looking times were found. Second, a study investigating 7-month-old infants’ processing of angry expressions, which are obviously also relatively unfamiliar to infants at this age, actually found a larger Nc and longer looking times to happy as compared to angry faces (Grossmann et al., 2007). This finding also suggests that simply the negative emotional valence of fearful faces is not responsible for the attentional modulation. Anger and fear share the same valence and yet they seem to produce opposing effects on 7-month-olds’ attention. However, a replication of this result and further testing with different negative emotions (e.g. sadness) is needed to critically test whether infants’ attentional responses are modulated by a specific (negatively valenced) expression rather than by negatively valenced emotions in general. Indeed, it could also be the case that the 7-month-olds in Grossmann et al. (2007) did not yet recognize the emotional signal value of angry faces, which led to stronger allocation of attention to emotionally more meaningful expressions (i.e. happy faces). Another interesting result of that study was that at 12 months of age, infants showed an adult-like stronger negativity in their ERP responses to angry faces. Thus, in relation to our own data, it could be tentatively suggested that the processing of fearful and angry faces might develop along different developmental trajectories during infancy, with infants becoming reactive to fearful faces at an earlier point in development.

An unexpected finding of the present study was that the number of times an infant participated in the experiment had an influence on the observed effects. Importantly, the main finding of larger Nc and longer looking times for fearful than happy faces was observed in both groups of 7-month-old infants (i.e. in those who participated once and those who participated twice). There was, however, a difference in the laterality of the Nc effect, so that the infants participating only once at 7 months of age showed a larger Nc for fearful than happy faces in the right but not in the left hemisphere recording site, while the infants who participated twice (at both 5 and 7 months of age) showed the differential responses in both hemispheres. The reasons for this unexpected difference are not entirely clear and, given that the interaction was marginal, caution must be exercised in interpreting it. We note, however, that the right-lateralized effect in infants who participated only once can be seen to parallel previous observations of a right hemisphere bias in adults’ and infants’ face processing (de Schonen and Mathivet, 1990; de Haan and Nelson, 1997, 1999; Nelson, 2001). The bilateral effect in those infants who participated twice may reflect a training effect, i.e. observing repeated presentations of fearful and happy faces twice within a 2-month period resulted in a more robust and bilateral attentional response for fearful faces. It is an interesting possibility, however, that such a small amount of practice could produce observable group differences in processing fearful faces.

The present study is limited in that, because we used only static faces presented on the screen, we are not able to draw straightforward conclusions about when in development infants begin to process fearful faces differentially in real-life settings, where infants are most often faced with dynamic and multimodally communicated emotional expressions. Indeed, it has been observed that infants become sensitive to multimodally communicated expressions earlier in development than to visually presented facial expressions alone (Flom and Bahrick, 2007). Studies in adults suggest that the recognition of dynamically presented facial expressions might recruit a different neural circuitry than static faces (e.g. Kilts et al., 2003); however, studies on infants’ brain responses to dynamic expressions are lacking [but see Grossmann et al. (2008)]. Another limitation concerns the possible differences between age groups in their visual scanning patterns for fearful and happy faces, as we did not obtain eye tracking data. Previous data indicate that amygdala activation (Adolphs et al., 2005) and ERP modulation (Schyns et al., 2007) by fearful faces are associated with fixations targeted at the eye region of fearful faces. Thus, in interpreting the present results, it remains possible that the 5-month-olds directed relatively fewer fixations to the eye region while scanning fearful faces, which could result in an attenuated amygdala response. However, although research on infants’ visual scanning patterns of emotional faces is scarce, existing evidence (Hunnius et al., 2007) as well as our own unpublished data suggest that already from 4 months of age infants direct their fixations predominantly to the eye region while looking at different emotional faces. Finally, a replication of the present findings with larger independent samples and a statistically significant interaction between age groups would yield stronger evidence for a developmental change between 5 and 7 months.

In summary, the present study extended previous research on the development of emotional face processing in infancy by showing that the bias to allocate attention more strongly to fearful than happy emotional expressions appears to emerge between 5 and 7 months of age. As the 5-month-olds did not show enhanced attention to fearful faces, which they very rarely encounter in their rearing environment, it seems rather unlikely that the observed effects in 7-month-olds are attributable merely to the novelty of fearful faces. It is possible that the attentional effects reflect functional developmental changes in brain mechanisms (e.g. the amygdala) that participate in evaluating the emotional significance of stimuli and generating enhanced responses toward stimuli signalling potential threat. Also, as reviewed earlier, the effects are observed at around the same age period when infants begin to show other putatively amygdala-mediated emotional responses, such as stranger anxiety. Together, these findings suggest that 7-month-old infants may already have some understanding of the emotional signal value of fearful expressions, although further research is required to determine the level of sophistication of these early abilities.

Acknowledgments

This research was supported by grants from the Finnish Cultural Foundation, the Academy of Finland (projects #1115536 and #1111850) and the Finnish Graduate School of Psychology. Development of the MacBrain Face Stimulus Set was overseen by Nim Tottenham and supported by the John D. and Catherine T. MacArthur Foundation Research Network on Early Experience and Brain Development. Please contact Nim Tottenham at tott0006@tc.umn.edu for more information concerning the stimulus set.

Footnotes

1 In the ERP task, the infants were also shown, along with fearful and happy faces, face-shaped matched visual noise images in which the phase spectra of the model's face were randomized, but the amplitude and the colour spectra were held constant (cf. Halit et al., 2004). The data for these stimuli are not reported here.

REFERENCES

- Adams RB Jr, Kleck RE. Perceived gaze direction and the processing of facial displays of emotion. Psychological Science. 2003;14:644–7. doi: 10.1046/j.0956-7976.2003.psci_1479.x.

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433:68–72. doi: 10.1038/nature03086.

- Baron MJ, Wheatley J, Symons L, Hains CR, Lee K, Muir D. The Queen's Video Coder. Department of Psychology, Queen's University, Canada; 2001. http://psyc.queensu.ca/vidcoder/

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cognitive Brain Research. 2003;17:613–20. doi: 10.1016/s0926-6410(03)00174-5.

- Bauman MD, Amaral DG. Neurodevelopment of social cognition. In: Nelson CA, Luciana M, editors. Handbook of Developmental Cognitive Neuroscience. 2nd ed. Cambridge, MA: MIT Press; 2008. pp. 161–85.

- Bornstein MH, Arterberry ME. Recognition, discrimination and categorization of smiling by 5-month-old infants. Developmental Science. 2003;6:585–99.

- Bush G, Luu P, Posner MI. Cognitive and emotional influences in anterior cingulate cortex. Trends in Cognitive Sciences. 2000;4:215–22. doi: 10.1016/s1364-6613(00)01483-2.

- Cacioppo JT, Gardner WL, Berntson GG. The affect system has parallel and integrative processing components: form follows function. Journal of Personality and Social Psychology. 1999;76:839–55.

- Campos JJ, Anderson DI, Barbu-Roth MA, Hubbard EM, Hertenstein MJ, Witherington D. Travel broadens the mind. Infancy. 2000;1:149–219. doi: 10.1207/S15327078IN0102_1.

- Caron RF, Caron AJ, Myers RS. Abstraction of invariant face expressions in infancy. Child Development. 1982;53:1008–15.

- de Haan M. Visual attention and recognition memory in infancy. In: de Haan M, editor. Infant EEG and event-related potentials. New York: Psychology Press; 2007. pp. 101–43.

- de Haan M, Belsky J, Reid V, Volein A, Johnson MH. Maternal personality and infants’ neural and visual responsivity to facial expressions of emotion. Journal of Child Psychology and Psychiatry. 2004;45:1209–18. doi: 10.1111/j.1469-7610.2004.00320.x.

- de Haan M, Nelson CA. Recognition of the mother's face by six-month-old infants: a neurobehavioral study. Child Development. 1997;68:187–210.

- de Haan M, Nelson CA. Brain activity differentiates face and object processing in 6-month-old infants. Developmental Psychology. 1999;35:1113–21. doi: 10.1037//0012-1649.35.4.1113.

- de Schonen S, Mathivet E. Hemispheric asymmetry in a face discrimination task in infants. Child Development. 1990;61:1192–205.

- Elliott R, Rubinsztein JS, Sahakian BJ, Dolan RJ. Selective attention to emotional stimuli in a verbal go/no-go task: an fMRI study. Neuroreport. 2000;11:1739–44. doi: 10.1097/00001756-200006050-00028.

- Farroni T, Menon E, Rigato S, Johnson MH. The perception of facial expressions in newborns. European Journal of Developmental Psychology. 2007;4:2–13. doi: 10.1080/17405620601046832.

- Flom R, Bahrick LE. The development of infant discrimination of affect in multimodal and unimodal stimulation: the role of intersensory redundancy. Developmental Psychology. 2007;43:238–52. doi: 10.1037/0012-1649.43.1.238.

- Georgiou GA, Bleakley C, Hayward J, et al. Focusing on fear: attentional disengagement from emotional faces. Visual Cognition. 2005;12:145–58. doi: 10.1080/13506280444000076.

- Grossmann T, Johnson MH, Lloyd-Fox S, et al. Early cortical specialization for face-to-face communication in human infants. Proceedings of the Royal Society B. 2008;275:2803–11. doi: 10.1098/rspb.2008.0986.

- Grossmann T, Striano T, Friederici AD. Developmental changes in infants’ processing of happy and angry facial expressions: a neurobehavioral study. Brain and Cognition. 2007;64:30–41. doi: 10.1016/j.bandc.2006.10.002.

- Halit H, Csibra G, Volein A, Johnson MH. Face-sensitive cortical processing in early infancy. Journal of Child Psychology and Psychiatry. 2004;45:1228–34. doi: 10.1111/j.1469-7610.2004.00321.x.

- Hoehl S, Wiese L, Striano T. Young infants’ neural processing of objects is affected by eye gaze direction and emotional expression. PLoS ONE. 2008;3:e2389. doi: 10.1371/journal.pone.0002389.

- Holmes A, Green S, Vuilleumier P. The involvement of distinct visual channels in rapid attention towards fearful facial expressions. Cognition and Emotion. 2005;19:899–922.

- Hunnius S, de Wit TCJ, von Hofsten C. Infants’ visual scanning of faces with happy, angry, fearful, and neutral expression. Poster presented at the biennial meeting of the Society for Research in Child Development; Boston, MA; 2007.

- Kagan J, Herschkowitz N. A young mind in a growing brain. Mahwah, NJ: Lawrence Erlbaum Associates; 2005.

- Kilts CD, Egan G, Gideon DA, Ely TD, Hoffman JM. Dissociable neural pathways are involved in the recognition of emotion in static and dynamic facial expressions. Neuroimage. 2003;18:156–68. doi: 10.1006/nimg.2002.1323.

- Kobiella A, Grossmann T, Striano T, Reid VM. The discrimination of angry and fearful facial expressions in 7-month-old infants: an event-related potential study. Cognition and Emotion. 2008;22:134–46.

- Kotsoni E, de Haan M, Johnson MH. Categorical perception of facial expressions by 7-month-old infants. Perception. 2001;30:1115–25. doi: 10.1068/p3155.

- LaBarbera JD, Izard CE, Vietze P, Parisi SA. Four- and six-month-old infants’ visual responses to joy, anger, and neutral expressions. Child Development. 1976;47:535–8.

- Leppänen JM, Kauppinen P, Peltola MJ, Hietanen JK. Differential electrocortical responses to increasing intensities of fearful and happy emotional expressions. Brain Research. 2007a;1166:103–9. doi: 10.1016/j.brainres.2007.06.060.

- Leppänen JM, Moulson MC, Vogel-Farley VK, Nelson CA. An ERP study of emotional face processing in the adult and infant brain. Child Development. 2007b;78:232–45. doi: 10.1111/j.1467-8624.2007.00994.x.

- Leppänen JM, Nelson CA. The development and neural bases of facial emotion recognition. In: Kail RV, editor. Advances in child development and behavior. Vol. 34. San Diego: Academic Press; 2006. pp. 207–46.

- Leppänen JM, Nelson CA. Tuning the developing brain to social signals of emotions. Nature Reviews Neuroscience. 2009;10:37–47. doi: 10.1038/nrn2554.

- Leppänen JM, Richmond J, Moulson MC, Vogel-Farley VK, Nelson CA. Categorical representation of facial expressions in the infant brain. Infancy. in press. doi: 10.1080/15250000902839393.

- Malatesta CZ, Haviland JM. Learning display rules: the socialization of emotion expression in infancy. Child Development. 1982;53:991–1003.

- Milders M, Sahraie A, Logan S, Donnellon N. Awareness of faces is modulated by their emotional meaning. Emotion. 2006;6:10–17. doi: 10.1037/1528-3542.6.1.10.

- Morris JS, Friston KJ, Buchel C, Frith CD, Young AW, Calder AJ, et al. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121:47–57. doi: 10.1093/brain/121.1.47.

- Nelson CA. The development and neural bases of face recognition. Infant and Child Development. 2001;10:3–18.

- Nelson CA, Bloom FE, Cameron JL, Amaral D, Dahl RE, Pine D. An integrative, multidisciplinary approach to the study of brain-behavior relations in the context of typical and atypical development. Development and Psychopathology. 2002;14:499–520. doi: 10.1017/s0954579402003061.

- Nelson CA, de Haan M. Neural correlates of infants’ visual responsiveness to facial expressions of emotion. Developmental Psychobiology. 1996;29:577–95. doi: 10.1002/(SICI)1098-2302(199611)29:7<577::AID-DEV3>3.0.CO;2-R.

- Nelson CA, Dolgin KG. The generalized discrimination of facial expressions by seven-month-old infants. Child Development. 1985;56:58–61.

- Peltola MJ, Leppänen JM, Palokangas T, Hietanen JK. Fearful faces modulate looking duration and attention disengagement in 7-month-old infants. Developmental Science. 2008;11:60–8. doi: 10.1111/j.1467-7687.2007.00659.x.

- Phelps EA, Ling S, Carrasco M. Emotion facilitates perception and potentiates the perceptual benefits of attention. Psychological Science. 2006;17:292–9. doi: 10.1111/j.1467-9280.2006.01701.x.

- Pourtois G, Grandjean D, Sander D, Vuilleumier P. Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cerebral Cortex. 2004;14:619–33. doi: 10.1093/cercor/bhh023.

- Reynolds GD, Richards JE. Familiarization, attention, and recognition memory in infancy: an event-related potential and cortical source localization study. Developmental Psychology. 2005;41:598–615. doi: 10.1037/0012-1649.41.4.598.

- Schyns PG, Petro LS, Smith ML. Dynamics of visual information integration in the brain for categorizing facial expressions. Current Biology. 2007;17:1580–5. doi: 10.1016/j.cub.2007.08.048.

- Suomi SJ. Attachment in rhesus monkeys. In: Cassidy J, Shaver PR, editors. Handbook of attachment: theory, research, and clinical applications. New York: Guilford Press; 1999. pp. 181–97.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, et al. The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Research. in press. doi: 10.1016/j.psychres.2008.05.006.

- Vaish A, Grossmann T, Woodward A. Not all emotions are created equal: the negativity bias in social-emotional development. Psychological Bulletin. 2008;134:383–403. doi: 10.1037/0033-2909.134.3.383.

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends in Cognitive Sciences. 2005;9:585–94. doi: 10.1016/j.tics.2005.10.011.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–41. doi: 10.1016/s0896-6273(01)00328-2.

- Vuilleumier P, Pourtois G. Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia. 2007;45:174–94. doi: 10.1016/j.neuropsychologia.2006.06.003.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nature Neuroscience. 2004;7:1271–8. doi: 10.1038/nn1341.

- Whalen PJ. Fear, vigilance, and ambiguity: initial neuroimaging studies of the human amygdala. Current Directions in Psychological Science. 1998;7:177–88.

- Wilcox BM, Clayton FL. Infant visual fixation on motion pictures of the human face. Journal of Experimental Child Psychology. 1968;6:22–32. doi: 10.1016/0022-0965(68)90068-4.

- Williams LM. An integrative neuroscience model of “significance” processing. Journal of Integrative Neuroscience. 2006;5:1–47. doi: 10.1142/s0219635206001082.

- Williams LM, Das P, Liddell B, Olivieri G, Peduto A, Brammer MJ, et al. BOLD, sweat and fears: fMRI and skin conductance distinguish facial fear signals. Neuroreport. 2005;16:49–52. doi: 10.1097/00001756-200501190-00012.

- Williams LM, Palmer D, Liddell BJ, Song L, Gordon E. The ‘when’ and ‘where’ of perceiving signals of threat versus non-threat. Neuroimage. 2006;31:458–67. doi: 10.1016/j.neuroimage.2005.12.009.