The ability to isolate a single sound source among concurrent sources and reverberant energy is necessary to understand the auditory world. The precedence effect describes a related experimental finding that when presented with identical sounds from two locations with a short onset asynchrony (on the order of milliseconds), listeners report a single source with a location dominated by the lead sound. Single cell recordings in multiple animal models indicate that there are low-level mechanisms that may contribute to the precedence effect, yet psychophysical studies in humans provide evidence that top-down cognitive processes have a great deal of influence on perception of simulated echoes. In the present study, event-related potentials (ERPs) evoked by click pairs at and around listeners' echo thresholds indicate that perception of the lead and lag sound as individual sources elicits a negativity between 100 and 250 ms, previously termed the object-related negativity (ORN). Even for physically identical stimuli, the ORN is evident when listeners report hearing compared to not hearing a second sound source. These results define a neural mechanism related to the conscious perception of multiple auditory objects.

Unilateral hearing loss, developmental delays in precise auditory processing, and the progressive increases in hearing thresholds associated with aging lead to a common complaint among many individuals: `I can hear the sounds. I just can't understand them.' The inability to isolate individual sound sources among multiple concurrent sounds and reverberant energy likely contributes to this problem. Adults with normal hearing are able to use multiple cues, including pitch, timbre, and timing, to separate concurrent sounds into distinct auditory objects in a process called auditory stream segregation (Bregman, 1990). One such auditory streaming cue is sound source location. That is, sounds that are perceived as originating from distinct locations can be assumed to arise from different events. However, auditory localization is, itself, a complex process built on multiple monaural and binaural calculations that are affected by subcortical and cortical processing, experience with both auditory and visual information, and higher-level cognitive influences. Defining the levels of neural processing at which sounds presented from different locations are treated as distinct objects will provide a greater understanding of why sound source individuation sometimes fails.

One experimental paradigm used to explore auditory localization and stream segregation involves presenting two identical sounds from different locations in the azimuth plane while manipulating the stimulus onset asynchrony (SOA) (Wallach, Newman, & Rosenzweig, 1949). When the two sounds are presented at the same time or with an SOA of less than about 1 ms, summing localization occurs (Blauert, 1997; Litovsky & Shinn-Cunningham, 2001). Under these conditions, a single sound source is reported directly between the two presentation locations for synchronous onsets and progressively closer to the lead location as the SOA increases. As the onset asynchrony between the two sounds is increased beyond 1 ms and up to around 5 ms (depending on the sounds and individual), listeners continue to report a single `fused' sound but localize this sound near the position of the lead sound source and show increased spatial discrimination thresholds for the lag sound. These three perceptual phenomena of fusion, localization dominance, and discrimination suppression together have been termed the `precedence effect' (Blauert, 1997; Litovsky, Colburn, Yost, & Guzman, 1999; Litovsky & Shinn-Cunningham, 2001; Tollin & Yin, 2003). As the onset asynchrony is increased beyond echo threshold, listeners report clearly hearing the lead and lag sound sources as separate events. Although other definitions have been used, an individual's echo threshold is defined here as the shortest delay between the lead and lag sounds at which the listener perceives two sounds with different locations (Blauert, 1997, p. 224). Even at SOAs long enough that listeners consistently report hearing the lag sound as a separate source, some amount of localization dominance and discrimination suppression has been reported (Litovsky et al., 1999; Litovsky & Shinn-Cunningham, 2001).

Some aspects of the precedence effect can be explained in terms of low-level peripheral processing including the responses of hair cells and cross correlation between input to the two ears (Hartung & Trahiotis, 2001). Further, single cell recordings in several animal models show correlates of the precedence effect in the auditory nerve and subcortical regions such that the physiological response to lag sounds is greatly reduced (Fitzpatrick, Kuwada, Kim, Parham, & Batra, 1999; Spitzer, Bala, & Takahashi, 2004; Yin, 1994). Distinct correlates of summing localization, fusion, and localization dominance have been reported in primary and secondary auditory cortices of anesthetized cats (Mickey & Middlebrooks, 2001). In humans, there is some evidence that unilateral damage to the inferior colliculus results in abnormally weak echo suppression when the lead sound is presented contralateral to the lesion (Litovsky, Fligor, & Tramo, 2002). However, correlates of the precedence effect in the structure and function of peripheral and low-level auditory systems cannot fully explain the complex patterns of perception that have been observed.

Several lines of evidence suggest higher-level cortical systems may also be involved in the precedence effect. First, there is some evidence that, with many hours of practice, listeners can learn to discriminate the interaural time difference of lag sounds below their initial echo thresholds with accuracy similar to that of sounds far above their echo thresholds (Saberi & Antonio, 2003; Saberi & Perrott, 1990). To the extent that the precedence effect can be `unlearned,' the increased localization thresholds for lag sounds observed in unpracticed individuals cannot be fully explained by the function of peripheral auditory systems. However, not all studies show evidence of practice effects on localization of lag sounds (Litovsky, Hawley, Fligor, & Zurek, 2000) and highly trained individuals may learn to use nondirectional acoustic cues to discriminate between stimuli.

Second, switching the location of the lead and lag sounds in a train of click pairs produces a breakdown of the precedence effect. At the same SOA, listeners report not hearing the lag sound before the switch and hearing the lag sound for the first few pairs after the switch (Clifton, 1987). Similarly, listening to a series of sounds with identical locations and onset asynchronies produces a buildup of the precedence effect such that listeners report that echoes fade out across the presentation of several pairs regardless of presentation rate (Clifton & Freyman, 1989; Freyman, Clifton, & Litovsky, 1991). The results of these experiments along with other studies exploring the precise conditions that result in both the breakdown and buildup of the precedence effect are consistent with the hypothesis that listeners build complex models of the acoustically reflective surfaces in a room based on experience with sounds in that setting (Blauert, 1997; Clifton, Freyman, Litovsky, & McCall, 1994; Clifton, Freyman, & Meo, 2002; Freyman & Keen, 2006). Additionally, asymmetries arise in the buildup of the precedence effect that are not evident when onset asynchrony varies randomly from trial to trial (Clifton & Freyman, 1989; Grantham, 1996). Specifically, echo thresholds are higher when the lead sound is presented on the right and the lag sound on the left compared to the reverse when click pairs are repeated multiple times (i.e., the conditions that produce buildup of the precedence effect); no such systematic asymmetry is found for pairs presented in isolation (Grantham, 1996). Finally, neural correlates of echo suppression, but not of the buildup of echo suppression, are reported in the inferior colliculus of cats (Litovsky & Yin, 1998). The effects of auditory context on echo thresholds, asymmetries in the buildup of the precedence effect, and a lack of the buildup effect in subcortical regions of cats implicate cortical mechanisms in the precedence effect.

The psychophysical studies described above have been pivotal in determining the conditions under which auditory context modulates the precedence effect. However, the behavioral responses measured at the conclusion of multiple sensory, perceptual, cognitive, and motor processes do not provide information about the specific levels at which responses to lag sounds above and below thresholds differ. In contrast, the temporal resolution of event-related brain potentials (ERPs) can be used to potentially isolate the levels of processing (and cortical organization) at which these differences occur in humans.

Two recent studies report auditory brainstem responses (ABRs) to sounds presented under conditions that could produce the precedence effect. In the first study (Liebenthal & Pratt, 1999), the earliest potential affected by the presence of a preceding sound peaked around 40 ms after onset (Pa). This peak was reduced in amplitude when a sound was presented as a simulated echo (as opposed to in isolation) and the amount of amplitude reduction was associated with onset asynchrony. A second study reported psychophysical, ABR, and ERP measurements (Damaschke, Riedel, & Kollmeier, 2005) and concluded that the earliest electrophysiological evidence of the precedence effect could be observed as the mismatch negativity (MMN) component which peaked around 150 ms after lag sound onset. However, neither of these studies recorded participant responses during or in precisely the same paradigms as electrophysiological measurements. Psychophysical studies of the precedence effect show that the relationship between SOA and percentage of trials on which a listener reports hearing the lag sound varies widely among individuals and is sometimes a very gradual or irregular function within an individual (Clifton, 1987; Clifton & Freyman, 1989; Damaschke et al., 2005; Freyman et al., 1991; Liebenthal & Pratt, 1999; Litovsky, Rakerd, Yin, & Hartmann, 1997). In the first study described above (Liebenthal & Pratt, 1999), the trial structures differed for the discrimination and ABR experiments such that listeners may have experienced a buildup of the precedence effect in the ABR experiment only. In the second study (Damaschke et al., 2005), the use of an oddball design for the MMN measurements may have produced a breakdown of the precedence effect since the deviant sounds that elicit an MMN are necessarily preceded by multiple repetitions of a standard sound. 
Without behavioral and electrophysiological responses recorded on the same trials, it is difficult to make direct connections between perception and the underlying neurosensory processing.

Ideally, ERPs would be used to index differences in processing when listeners report hearing and not hearing the lag sound under experimental conditions similar to those employed in the psychophysical studies that have defined the precedence effect. However, since scalp potentials evoked by sounds from different locations in rapid succession are typically indistinguishable, it is not immediately obvious what, if any, differences might be found in the resulting ERP waveforms. For example, Li, Qi, He, Alain, and Schneider (2005) reported no differences in the ERPs elicited by the onsets of correlated and uncorrelated long duration noise presented from two locations with an SOA well below listeners' echo thresholds even though this manipulation typically results in the perception of a single sound source for correlated noise and two sources for uncorrelated noise. In contrast, they found differences in ERP waveforms to a gap in the lag sound when the lag was uncorrelated noise versus correlated noise. A gap in uncorrelated noise typically leads to the correct perception of a gap in the lag, whereas a gap in correlated noise leads to perception of a gap in the lead sound. These results indicate it is possible to use ERPs to index perception of distinct sound sources, but also point out the importance of being able to timelock ERPs to the actual perception of fusion or sound segregation which may have happened at different times on different trials and for different individuals processing the onsets of correlated and uncorrelated noise.

An alternative ERP index of the precedence effect arises from studies seeking to determine the level of processing at which pitch perception occurs. Pitch perception can be manipulated by `mistuning' one of the harmonics in a complex sound. At levels of mistuning that result in listeners reporting two distinct pitches, sounds elicit a negativity that overlaps with the obligatory N1 and P2 components (Alain, Arnott, & Picton, 2001). Since this difference in amplitude is elicited by stimuli that listeners are more likely to report as two distinct sounds, it has been termed the object-related negativity or ORN (Alain et al., 2001). The ORN is similar in amplitude, distribution, and latency when listeners discriminate between complex sounds with and without the mistuning, when they read unrelated material, and when they complete a visual 1-back task (Alain et al., 2001; Alain, Schuler, & McDonald, 2002; Dyson, Alain, & He, 2005). In contrast, a later positivity that peaks around 400 ms and has a posterior distribution is only observed when listeners actively attend to the auditory stimuli (Alain et al., 2001). The magnetic equivalent of the ORN has been reported in studies employing MEG measures (Alain & McDonald, 2007; Hiraumi et al., 2005).

The ORN has also been reported in studies that induce two-sound perception through the use of dichotic pitch stimuli using both ERP (Hautus & Johnson, 2005; Johnson, Hautus, & Clapp, 2003) and MEG techniques (Chait, Poeppel, & Simon, 2006). Importantly, these later studies indicate that the ORN is not specific to mistuned harmonics, though as with the harmonic stimuli, perception of two objects is based on the perception of distinct pitches. A recent study provides some evidence that sounds that are separated based on location may also elicit an ORN. Specifically, when the amount of mistuning of a harmonic (2%) is not enough to result in a consistent perception of two distinct pitches, presenting the mistuned harmonic from a different location elicits the ORN (McDonald & Alain, 2005). Further, in the participants who were skilled at localizing sounds (N = 5), there was evidence of an ORN elicited when a tuned harmonic was presented from a distinct location.

If the ORN is actually indexing perception of two distinct sound sources, then presenting pairs of clicks with an onset asynchrony longer than an individual's echo threshold should also elicit an ORN. Since observing this component does not require setting up a particular auditory context (as does the MMN), ERP and psychophysical measurements can be made under identical conditions - in fact, at the same time. Furthermore, individual differences in echo thresholds become an asset since they make it possible to distinguish between differences in ERPs that are based on perception and those that are driven by physical differences in the stimuli. Specifically, it becomes possible to compare ERPs elicited by physically identical stimuli that result in distinct perceptions (hearing one sound source or two). Therefore, this component presents an opportunity to test multiple hypotheses concerning the precedence effect. In the current study, EEG was recorded while pairs of clicks with varying onset asynchronies were presented from two loudspeakers and listeners were asked to report whether they heard a sound from the lag location. Based on previous evidence of correlates of the precedence effect at early perceptual stages and modulation of the precedence effect by higher-level processes, we hypothesized that perception of a lag sound as a separate source would be indexed by the ORN.

METHOD

Participants

Twelve adults contributed both psychophysical and ERP data (6 females, M age = 23 years; 9 months, s.d. = 4;2); an additional 12 participants contributed psychophysical data only (8 females, M age = 24;9 s.d. = 3;8). Twelve other adults were initially contacted about participating in the study but were excluded for degenerative hearing loss (N = 1), equipment failure (N = 2), consistently reporting hearing the lag sound regardless of onset asynchrony in a screening task (N = 6), or consistently reporting hearing the lag sound when it was presented on the right and consistently reporting not hearing the lag sound when it was presented on the left in the screening task (N = 3). All participants who contributed any data included in analyses (N = 24) were right-handed, had normal or corrected-to-normal vision, and reported having no neurological problems and taking no psychoactive medications. All participants completed a hearing screening and had pure tone thresholds at or below normal levels for both ears for 1, 2, 4, and 8 kHz tones. Adults provided informed consent before participating in any research activities and were paid $10/hour for their time.

Stimuli

Sounds were 181 μs clicks (positive rectangular pulses of four sample points) saved in 22,050 Hz stereo WAVE files. The lead click, presented in either the left or right channel, was followed by an identical sound in the other channel 1-14 ms (in 1 ms steps) after the onset of the first click for a total of 28 sound files (14 for each lead side). A silent interval of 4.5 ms was added to the beginning of each sound file.
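
The stimulus description above can be illustrated with a minimal sketch, assuming NumPy. The sample rate, four-sample pulse, 4.5 ms silent interval, and 1-14 ms SOAs come from the text; the function name `make_click_pair`, the unit amplitude, the trailing silence, and the rounding of each SOA to the nearest sample are assumptions made only for illustration and are not a description of how the original files were produced.

```python
import numpy as np

FS = 22_050          # sample rate in Hz (from the text)
CLICK_SAMPLES = 4    # rectangular pulse of four sample points (from the text)
LEAD_IN_MS = 4.5     # silent interval at the start of each file (from the text)


def make_click_pair(soa_ms, lead="left", amplitude=1.0, tail_ms=5.0):
    """Return an (n_samples, 2) array; column 0 is the left channel.

    amplitude and tail_ms are hypothetical values, and onsets are rounded
    to the nearest sample (an assumption).
    """
    lead_start = int(round(LEAD_IN_MS * FS / 1000))
    lag_start = lead_start + int(round(soa_ms * FS / 1000))
    n_samples = lag_start + CLICK_SAMPLES + int(round(tail_ms * FS / 1000))
    stereo = np.zeros((n_samples, 2))
    lead_col = 0 if lead == "left" else 1
    # Lead click in one channel, identical lag click in the other channel
    stereo[lead_start:lead_start + CLICK_SAMPLES, lead_col] = amplitude
    stereo[lag_start:lag_start + CLICK_SAMPLES, 1 - lead_col] = amplitude
    return stereo


# Four samples at 22,050 Hz last about 181 microseconds
click_duration_us = CLICK_SAMPLES / FS * 1e6

# 14 SOAs x 2 lead sides = 28 stimuli
stimuli = {(lead, soa): make_click_pair(soa, lead)
           for lead in ("left", "right") for soa in range(1, 15)}
```

Because 1 ms corresponds to 22.05 samples at this rate, any sample-based construction of this kind can only approximate the nominal SOAs to within a fraction of a sample.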

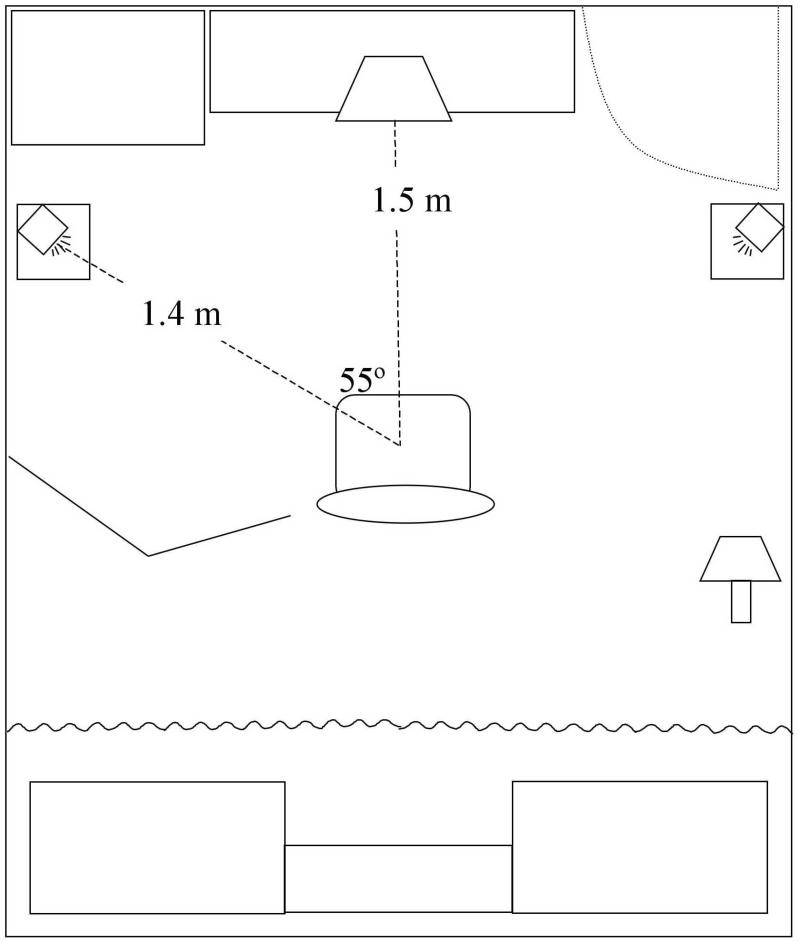

All sounds were presented over M-Audio StudioPro3 loudspeakers with EPrime software running on a PC with a Creative SB Audigy 2 ZS sound card. Each loudspeaker was located 1.4 m from the participants and 55° from midline as shown in Figure 1. Sound level over the two loudspeakers was equated immediately before each participant's arrival by measuring the output level of click trains (10 clicks/s) presented from a single loudspeaker and adjusting the channel-specific volume on the PC to produce a reading of 71 dB SPL (A-weighted). Intensity levels were measured with a free field microphone positioned where the center of a participant's head would be during an experimental session. Sounds were presented in an acoustically shielded and sound-deadened room. The EEG recording equipment precluded conducting the experiment in an anechoic chamber; however, reverberation was minimized by covering as many hard surfaces as possible with acoustically absorbent material (e.g., heavy blankets). The amplitude of actual reflections was assumed to be well below those simulated by the lag sounds. Further, participant responses indicated the conditions were sufficient to elicit the precedence effect and ERP analyses were designed to account for any effects of the specific acoustic environment on echo thresholds.

Figure 1.

Sketch of experiment room. Each loudspeaker was 1.4 m from the participants at an angle of 55° from midline; the loudspeakers were 2.3 m from each other. A computer monitor was located 1.5 m in front of the participants. Behind the participants, a curtain was hung to partially block reflections from metal cabinets and a bookshelf. An electrically shielded lamp was located to the right and a metal arm containing cables to connect scalp electrodes to amplifiers was on the left. A metal filing cabinet, the amplifiers, a table with the computer monitor, and a closed wooden door were in front of the participants.

Procedure

Participants first completed the hearing screening and answered questions to determine if they met the inclusion criteria described above. The first eight of the 33 participants (excludes one for hearing loss and two for equipment failure) who completed any part of testing proceeded directly to the test trials. Since the SOAs employed in testing seven of these initial eight participants failed to fully capture their echo thresholds, subsequent participants completed an additional echo threshold screening task before beginning the test trials.

During the echo threshold screening task, click pairs were presented in two blocks of 105 trials such that the lead sound was always presented from the same loudspeaker. At the beginning of each of these blocks, participants were instructed that they would hear a sound from the left (or right) loudspeaker on every trial. They were asked to press one button on a response box if they also heard a sound coming from the right (or left) and a different button if they did not hear a sound from the lag side. On each trial during left-lead blocks, a click was presented from the left loudspeaker followed by an identical click from the right loudspeaker beginning 2, 4, 6, 8, 10, 12, or 14 ms later. Click pairs at each onset asynchrony were repeated 15 times each in random order. The same procedure was used for click pairs with the lead sound always on the right side and the lag sound always on the left side.

Responses to the screening task were used to select the set of eight SOAs (1 ms intervals) to be used during testing, as shown in Table 1. For the eight participants who were not asked to complete this echo threshold screening, the eight shortest SOAs were employed during testing. For the first seven of the 16 participants who passed the screening, selection of the test SOAs was constrained by using the same eight delays in the left-lead and right-lead blocks. For the remaining nine of the 16 participants who passed the screening, the range of test SOAs was chosen separately for the left-lead and right-lead blocks in an attempt to maximize the number of participants with adequate amounts of ERP data at, below, and above their echo thresholds.

Table 1.

Participants

| Part | Scr Proc | Exclusion | Left-Lead Test SOAs | Right-Lead Test SOAs | Left-Lead ERP Thrsh | Right-Lead ERP Thrsh |
|---|---|---|---|---|---|---|
| 1 | | Hearing loss | | | | |
| 2 | | Equip. failure | | | | |
| 3 | | Equip. failure | | | | |
| 4 | no | | 1-8 | 1-8 | poor fit | poor fit |
| 5 | no | | 1-8 | 1-8 | poor fit | poor fit |
| 6 | no | | 1-8 | 1-8 | poor fit | poor fit |
| 7 | no | | 1-8 | 1-8 | poor fit | poor fit |
| 8 | no | | 1-8 | 1-8 | insuff data | poor fit |
| 9 | no | | 1-8 | 1-8 | insuff data | SOA = 3 |
| 10 | no | | 1-8 | 1-8 | poor EEG | poor fit |
| 11 | no | | 1-8 | 1-8 | poor EEG | poor EEG |
| 12 | yes | Scr Thrsh <1 | | | | |
| 13 | yes | Scr Thrsh <1 | | | | |
| 14 | yes | | 1-8 | 1-8 | insuff data | SOA = 6 |
| 15 | yes | | 1-8 | 1-8 | SOA = 3 | SOA = 6 |
| 16 | yes | | 1-8 | 1-8 | SOA = 6 | poor fit |
| 17 | yes | | 4-11 | 4-11 | poor fit | poor fit |
| 18 | yes | | 4-11 | 4-11 | insuff data | SOA = 6 |
| 19 | yes | | 7-14 | 7-14 | poor EEG | insuff data |
| 20 | yes | | 7-14 | 7-14 | SOA = 10 | insuff data |
| 21 | yes | Scr Thrsh <1 | | | | |
| 22 | yes | Scr Thrsh <1 | | | | |
| 23 | yes | Scr Thrsh <1 | | | | |
| 24 | yes | Scr Thrsh <1 | | | | |
| 25 | yes | Scr Thrsh <1 & >14 | | | | |
| 26 | yes | Scr Thrsh <1 & >14 | | | | |
| 27 | yes | Scr Thrsh <1 & >14 | | | | |
| 28 | yes | | 1-8 | 1-8 | insuff data | SOA = 6 |
| 29 | yes | | 1-8 | 3-10 | SOA = 3 | poor fit |
| 30 | yes | | 2-9 | 2-9 | insuff data | SOA = 6 |
| 31 | yes | | 2-9 | 4-11 | SOA = 6 | SOA = 7 |
| 32 | yes | | 4-11 | 7-14 | poor fit | insuff data |
| 33 | yes | | 6-13 | 3-10 | poor EEG | poor fit |
| 34 | yes | | 6-13 | 7-14 | SOA = 12 | SOA = 10 |
| 35 | yes | | 7-14 | 7-14 | poor EEG | poor EEG |
| 36 | yes | | 7-14 | 7-14 | SOA = 12 | SOA = 13 |

Note: Participants are grouped rather than listed in the order in which data were collected. Part = participant number; Scr Proc = test SOA screening procedure; Scr Thrsh = screening procedure threshold; ERP Thrsh = the SOA considered to be closest to an individual's echo threshold for ERP analysis. All SOAs are given in ms.

Twenty-four participants (including the eight who were not given the echo threshold screening) proceeded to the test-phase of the experiment. The testing instructions were identical to the screening instructions: Listeners were told they would always hear a sound from the lead side and were asked to press different buttons if they did or did not hear a sound from the lag side. Since EEG was being recorded, they were also asked to refrain from blinking and making any other movements (including pressing a button) when a fixation point was shown on the computer monitor. To reinforce these instructions, they were presented on the computer monitor at the beginning of each block. Each trial began with the appearance of a fixation point in the center of the computer monitor. One of the click pairs was played 200 - 700 ms later (rectangular distribution). The fixation point remained on the screen for another 800 ms followed by a reminder of the instructions (e.g., 1 = sound from left, 4 = no sound from left) to prompt a response. All participants completed 20 left-lead blocks and 20 right-lead blocks. They heard 100 repetitions (5 within a single block) of each of 8 SOAs with the lead sound on each side for a total of 1600 trials.

The number of trials on which a participant reported hearing the echo for each sound (lead side with a specific SOA) was recorded. A logistic function allowed to vary by midpoint and slope was fitted to each psychometric function using nonlinear parameter estimation in Matlab. The accuracy of the curve fit was measured with r-squared and the midpoint of the function defined the echo threshold for that individual and lead side.
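
A fit of this kind can be sketched as follows. This is a minimal illustration in Python with SciPy rather than the Matlab routine used in the study; the parameterization of the logistic, the starting values, the function names, and the response counts are all assumptions introduced for the example.

```python
import numpy as np
from scipy.optimize import curve_fit


def logistic(soa, midpoint, slope):
    """Proportion of 'heard the lag sound' reports as a function of SOA (ms)."""
    return 1.0 / (1.0 + np.exp(-slope * (soa - midpoint)))


def fit_echo_threshold(soas_ms, n_heard, n_trials=100):
    """Fit midpoint and slope; return (midpoint, slope, r_squared)."""
    soas_ms = np.asarray(soas_ms, dtype=float)
    p_heard = np.asarray(n_heard, dtype=float) / n_trials
    start = (soas_ms.mean(), 1.0)  # hypothetical starting values
    (midpoint, slope), _ = curve_fit(logistic, soas_ms, p_heard, p0=start)
    # r-squared: 1 minus residual variance over total variance
    residuals = p_heard - logistic(soas_ms, midpoint, slope)
    ss_res = np.sum(residuals ** 2)
    ss_tot = np.sum((p_heard - p_heard.mean()) ** 2)
    return midpoint, slope, 1.0 - ss_res / ss_tot


# Made-up counts (out of 100 trials per SOA), for illustration only
soas = np.arange(1, 9)
heard = [2, 5, 12, 27, 50, 73, 88, 95]
midpoint, slope, r2 = fit_echo_threshold(soas, heard)
```

In this sketch the fitted `midpoint` plays the role of the echo threshold for one participant and lead side, and `r2` is the goodness-of-fit value that would be compared against a fit criterion.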

During test blocks, EEG was recorded with a bandwidth of 0.01 - 80 Hz, referenced to the vertex, using EGI (Electrical Geodesics, Inc., Eugene OR) 128-channel nets. Four sizes were available to achieve a close fit for every participant. Scalp impedances at all electrode sites were maintained under 50 kΩ. After EEG was digitized (250 Hz), epochs from 100 ms before to 600 ms after onset of the lead sound were defined. The 100 ms pre-stimulus interval served as a baseline. Artifact rejection criteria were set for each individual based on observations of blinks, eye movements, and head movements made while participants listened to instructions. Trials on which EEG amplitude exceeded individual limits at any electrode site were eliminated from analyses.

Behavioral responses were used to define the data included in ERP analyses for each participant and lead side separately since echo thresholds differed among individuals and between lead sides within some participants. To index processing related to perception of the lag sound without confounding physical differences in stimuli, it was necessary to select one SOA closest to the midpoint of the fitted function for each individual and lead side such that the participant reported both hearing and not hearing the lag sound on a sufficient number of trials. A minimum of 38 trials allowed for the inclusion of as many participants as possible while maintaining high data quality. For ERP analyses, the SOA that met these two criteria was considered to be at echo threshold. By comparing ERPs elicited by pairs with an SOA 1 ms longer than threshold when listeners reported hearing the lag sound to responses evoked by pairs with an SOA 1 ms shorter than threshold when listeners reported not hearing the lag sound, it was possible both to determine if perceptual effects observed for the near-threshold conditions replicated and to index any additional differences related to SOA.
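
One way to read the selection rule above is sketched below in Python. The sketch assumes that an SOA is eligible when it has at least 38 trials of each response type and that, among eligible SOAs, the one nearest the fitted midpoint is chosen; the function name, the dictionary inputs, and the example counts are hypothetical.

```python
def select_threshold_soa(midpoint_ms, heard_counts, not_heard_counts,
                         min_trials=38):
    """Return the tested SOA nearest the fitted midpoint that has enough
    trials of both response types, or None if no SOA qualifies.

    heard_counts and not_heard_counts map SOA (ms) to usable trial counts.
    """
    eligible = [soa for soa in heard_counts
                if heard_counts[soa] >= min_trials
                and not_heard_counts[soa] >= min_trials]
    if not eligible:
        return None
    return min(eligible, key=lambda soa: abs(soa - midpoint_ms))


# Made-up usable trial counts for one participant and lead side
heard = {4: 27, 5: 45, 6: 73}
not_heard = {4: 73, 5: 55, 6: 27}
threshold_soa = select_threshold_soa(5.2, heard, not_heard)

# Comparison conditions 1 ms either side of the selected SOA
shorter_soa, longer_soa = threshold_soa - 1, threshold_soa + 1
```

A return value of `None` corresponds to the case in which none of the tested SOAs yields enough trials of both response types for that participant and lead side.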

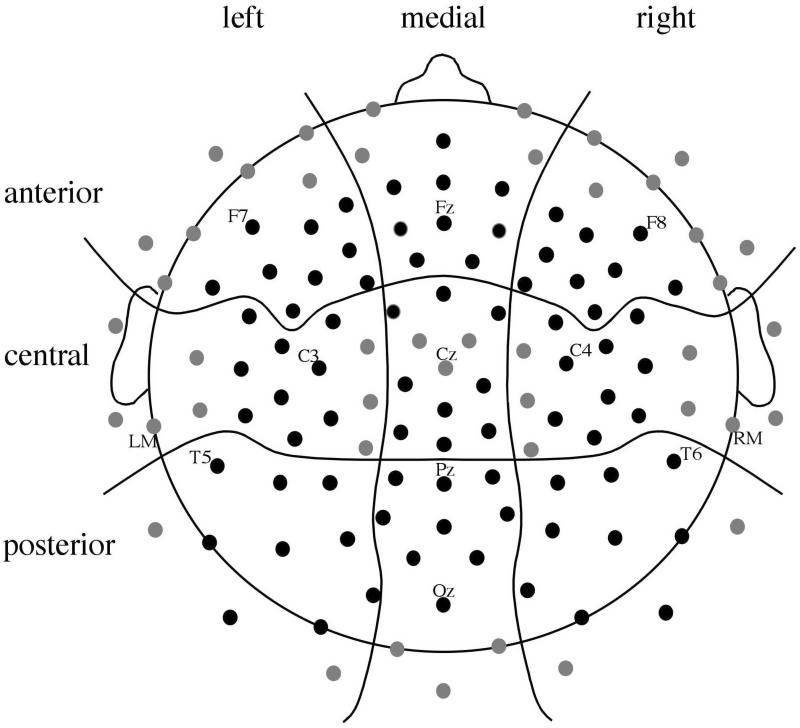

Data for each condition and electrode site for every individual were averaged and then rereferenced to the averaged mastoid measurements. Peak latency and average amplitude measurements were taken on the waveforms in the following time windows after lead sound onset: 20 - 60 ms (P1), 100 - 140 ms (N1), and 170 - 240 ms (P2). Additional average amplitude measurements were made at 100 - 250 and 275 - 475 ms. Measurements were made at 81 electrode sites across the scalp and combined into groups of 9 electrodes in a 3 (Anterior, Central, Posterior or ACP) × 3 (Left, Medial, Right, or LMR) grid (Figure 2). The measurements taken on ERPs elicited by click pairs at echo threshold for trials on which the participant reported hearing the lag sound and trials on which the participant reported not hearing the lag sound were then entered in 2 (Response) × 3 (ACP) × 3 (LMR) repeated-measures ANOVAs (Greenhouse-Geisser adjusted). Data from trials with an SOA 1 ms longer than threshold when the participant reported hearing the lag sound and an SOA 1 ms shorter than threshold when the participant reported not hearing the lag sound were included in ANOVAs of identical design. All significant (p < .05) interactions of Response and electrode position factors were followed by ANOVAs conducted separately for each level of the relevant electrode position factor.
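
The window-based measurements can be illustrated with a short sketch, assuming NumPy. The sampling rate, epoch start, and time windows are taken from the text; the function name, the inclusive window boundaries, the 175-sample epoch length, and the synthetic waveform are assumptions made only for the example.

```python
import numpy as np

FS = 250               # EEG sampling rate in Hz (from the text)
EPOCH_START_MS = -100  # epochs begin 100 ms before lead-sound onset

WINDOWS_MS = {"P1": (20, 60), "N1": (100, 140), "P2": (170, 240)}
POLARITY = {"P1": 1, "N1": -1, "P2": 1}  # search for a maximum or a minimum


def window_measures(erp, component):
    """Return (mean amplitude, peak latency in ms) for one averaged waveform."""
    times = EPOCH_START_MS + np.arange(erp.size) * 1000 / FS
    # Baseline correction: subtract the mean of the pre-stimulus interval
    erp = erp - erp[times < 0].mean()
    lo, hi = WINDOWS_MS[component]
    in_window = (times >= lo) & (times <= hi)  # inclusive bounds (assumption)
    segment = erp[in_window]
    peak_idx = np.argmax(POLARITY[component] * segment)
    return segment.mean(), times[in_window][peak_idx]


# Synthetic waveform: a positive deflection centered on 44 ms
times = EPOCH_START_MS + np.arange(175) * 1000 / FS
synthetic = 1.5 * np.exp(-0.5 * ((times - 44) / 10) ** 2)
mean_amp, latency = window_measures(synthetic, "P1")
```

At 250 Hz the waveform is sampled every 4 ms, so peak latencies measured this way are resolved only to the nearest 4 ms for a single waveform.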

Figure 2.

Approximate location of 128 scalp electrodes. ERP analyses were conducted for data collected at 81 electrodes (shown in black) with electrode position included as two factors in ANOVAs: Left - Medial - Right (LMR) and Anterior - Central - Posterior (ACP). All data were referenced, offline, to the average of the left mastoid (LM) and right mastoid (RM) recordings.

RESULTS

Psychophysical data on echo thresholds, defined by the number of trials on which an individual reported hearing the lag sound, are reported first. Next, ERP waveforms averaged across behavioral response are described, followed by the differences in ERPs elicited by click pairs at echo threshold when participants reported hearing and not hearing the lag sound. Finally, the same comparison is made for click pairs with SOAs 1 ms away from echo threshold.

Behavioral responses

For the 24 participants who completed the test phase of the experiment, the number of trials on which they reported hearing the echo for each lead side and onset asynchrony is shown in Figure 3. Since the same individual frequently showed a different pattern of data for click pairs presented with the lead sound on the left and the lead sound on the right (and for many individuals data from only one lead side could be included in ERP analyses), data from these blocks of trials were treated separately. Of the 48 lead side by participants, 16 of the psychophysical functions (from 11 individuals) did not vary systematically with SOA and could not be accurately fit with a logistic function (r² < .75). For an additional nine lead side by participants (from 9 individuals), none of the SOAs employed during testing was close enough to the midpoint of the logistic function to provide sufficient ERP data (at least 38 trials each on which the lag sound was and was not reported). For seven lead side by participants (from 5 individuals), responses did change systematically with onset asynchrony but the number of trials with artifact-free EEG in at least one condition was too low (< 10) for inclusion in ERP analyses. The average echo threshold for this group was 7.5 ms (s.d. = 3.7) with a range from 3.0 to 12.0 ms. Behavioral data for the 16 lead side by participants that were included in ERP analyses are shown in the bottom panel of Figure 3. Average echo threshold for this group was also 7.5 ms (s.d. = 3.3) with a range from 3.0 to 12.5 ms.

Figure 3.

Number of trials (out of 100) on which participants reported hearing the echo at each tested onset asynchrony for left-lead (open circles) and right-lead (filled squares) blocks separately. The top panel shows responses that did not vary systematically with onset asynchrony. The second panel includes responses for which there was insufficient data close to echo threshold. The third panel shows responses that were excluded from ERP analyses because of excessive noise. The fourth panel shows the behavioral responses that correspond to data included in ERP analyses. N indicates the number of lead side by participants (total of 48) included in each group.

Participants were required to make a response even if they were uncertain whether they had heard the lag sound. These responses may have been influenced by what was heard on the previous trial. To test this hypothesis, behavioral responses to sounds at echo threshold were divided based on the response given on the previous trial. There were no differences in the probability that listeners reported hearing the lag sound for click pairs at echo threshold when they reported hearing and not hearing the lag sound on the previous trial (t < 1.0).
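The previous-trial analysis described above can be sketched as follows. This is an illustration under stated assumptions rather than the analysis code used in the study: the trial representation (a list of `(soa_ms, reported)` pairs in presentation order), the function names, and the example data are hypothetical. For each listener, the proportion of "lag sound heard" responses at echo threshold is computed separately for trials that followed a "heard" and a "not heard" response, and the two sets of proportions are then compared across listeners with a paired t statistic.

```python
import math

def rates_by_previous_response(trials, threshold_soa_ms):
    """Split at-threshold trials by the response given on the preceding trial.

    trials: list of (soa_ms, reported) tuples in presentation order, where
    reported is True when the listener reported hearing the lag sound.
    Returns (rate after a 'heard' trial, rate after a 'not heard' trial).
    """
    following = {True: [], False: []}
    for (_, previous_reported), (soa_ms, reported) in zip(trials, trials[1:]):
        if soa_ms == threshold_soa_ms:
            following[previous_reported].append(reported)

    def rate(responses):
        return sum(responses) / len(responses) if responses else float("nan")

    return rate(following[True]), rate(following[False])

def paired_t(values_a, values_b):
    """Paired t statistic (df = n - 1); assumes the differences are not all equal."""
    diffs = [a - b for a, b in zip(values_a, values_b)]
    n = len(diffs)
    mean_diff = sum(diffs) / n
    var_diff = sum((d - mean_diff) ** 2 for d in diffs) / (n - 1)
    return mean_diff / math.sqrt(var_diff / n)

# Hypothetical trial sequence for one listener with an 8-ms echo threshold.
example_trials = [(8, True), (8, True), (6, False), (8, False),
                  (8, True), (10, True), (8, False)]
after_heard, after_not_heard = rates_by_previous_response(example_trials, 8)

# Hypothetical per-listener rates for the two previous-response categories.
t_value = paired_t([0.50, 0.60, 0.40, 0.55], [0.50, 0.50, 0.50, 0.50])
```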

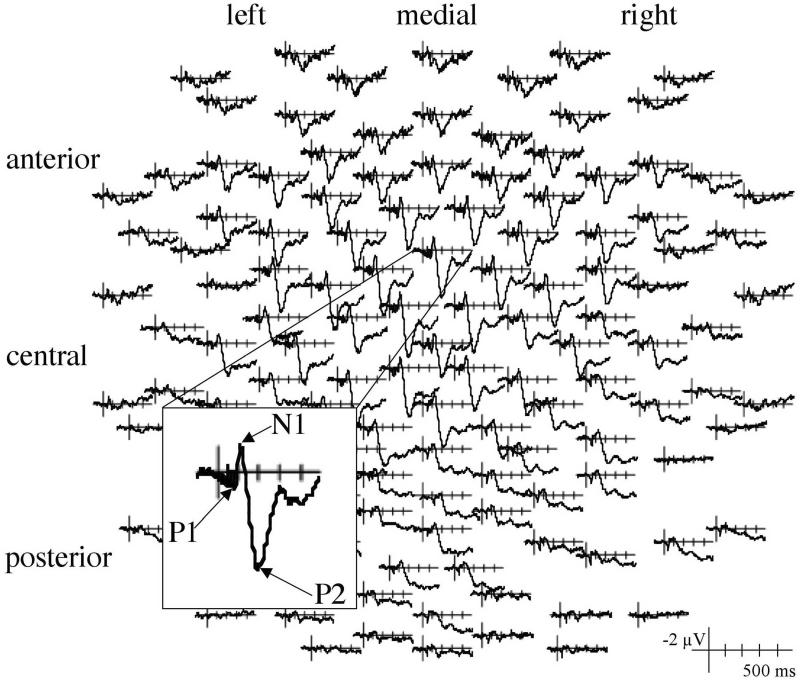

Auditory evoked potentials

As shown in Figure 4, all click pairs elicited a typical positive-negative-positive oscillation. For sounds at echo threshold (defined for each lead side by participant as indicated in Table 1), the first positive component (P1) peaked at 43 ms with an amplitude of 1.5 μV and was largest over anterior and central regions, ACP: F(2,30) = 9.40, p < .01. For pairs with an SOA 1 ms shorter than echo threshold, the P1 peaked at 42 ms and 1.4 μV, whereas for sounds with an SOA 1 ms longer than echo threshold, the P1 peaked at 47 ms and 1.3 μV. The difference in P1 latency for the shorter and longer than threshold categories was significant, F(1,15) = 6.64, p < .05. Because this 5-ms latency difference for sounds that differed by an SOA of only 2 ms was unexpected, the data were further explored to determine whether the relationship between SOA and P1 latency replicated for additional sounds. For the 14 lead side by participants who could also contribute data to conditions that had SOAs 2 ms shorter and 2 ms longer than echo threshold, there was no evidence of P1 latency differences (latency for -2 ms = 43 ms, latency for +2 ms = 44 ms, p's > .30). This lack of replication suggests the P1 elicited by click pairs peaks around 43 ms regardless of SOA. The positive peak was largest over anterior and central regions, F(2,30) = 5.95, p < .05, and medial electrodes, F(2,30) = 4.15, p < .05, for all conditions (interactions: p's > .20).

Figure 4.

Auditory evoked potentials elicited by click pairs at echo threshold averaged across participants, lead side, response, and trials shown for all electrode sites. The response recorded from an anterior midline electrode is shown on a larger scale.

The first negative peak (N1) elicited by click pairs at echo threshold peaked at 120 ms and -1.8 μV and was largest over anterior and central regions, F(2,30) = 14.92, p < .001. For the below threshold condition, the N1 peaked at 116 ms and -1.6 μV; for the above threshold condition, the N1 peaked at 121 ms and -1.7 μV. The difference in N1 latency for the above and below threshold conditions was significant, F(1,15) = 8.41, p < .05; again, this difference was not evident when conditions 2 ms above and 2 ms below threshold were included in a similar analysis (p's > .75). Across conditions, the component was largest at anterior and central electrodes, F(2,30) = 10.70, p < .01.

The second positive peak (P2) elicited by sounds at echo threshold peaked at 207 ms and 4.7 μV. As was found for the P1 and N1, this peak was largest over anterior and central regions, F(2,30) = 28.2, p < .001, and at medial electrodes, F(2,30) = 9.82, p < .001. The P2 peaked at 206 ms for the below threshold condition and at 205 ms for the above threshold condition (p's > .75). The distribution of the component was similar regardless of condition, ACP: F(2,30) = 30.43, p < .001; LMR: F(2,30) = 17.41, p < .001; interaction p's > .75.
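Peak latency and amplitude measures of the kind reported for the P1, N1, and P2 can be illustrated with a minimal sketch. The search windows used here (20-60, 100-140, and 170-240 ms) are borrowed from the mean-amplitude windows reported in this paper and are an assumption as peak-search windows. The function `find_peak` and the synthetic waveform are hypothetical and are not the measurement procedure used in the study.

```python
import math

def find_peak(times_ms, amplitudes_uv, window_ms, polarity):
    """Return (latency_ms, amplitude_uv) of the largest deflection in a window.

    polarity = +1 finds the most positive sample (e.g., P1, P2);
    polarity = -1 finds the most negative sample (e.g., N1).
    """
    start, end = window_ms
    in_window = [(t, a) for t, a in zip(times_ms, amplitudes_uv)
                 if start <= t <= end]
    if not in_window:
        raise ValueError("no samples fall inside the search window")
    return max(in_window, key=lambda sample: polarity * sample[1])

def synthetic_erp(t_ms):
    """Toy waveform built from three Gaussian bumps (illustration only)."""
    def bump(center, width, height):
        return height * math.exp(-((t_ms - center) ** 2) / (2 * width ** 2))
    return bump(43, 8, 1.5) + bump(120, 15, -1.8) + bump(207, 25, 4.7)

times = list(range(0, 400))               # 1-ms sampling, 0-399 ms
wave = [synthetic_erp(t) for t in times]

p1 = find_peak(times, wave, (20, 60), +1)
n1 = find_peak(times, wave, (100, 140), -1)
p2 = find_peak(times, wave, (170, 240), +1)
```

Restricting the search to a window keeps a large later component, such as the P2, from being selected when an earlier, smaller peak is the one of interest.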

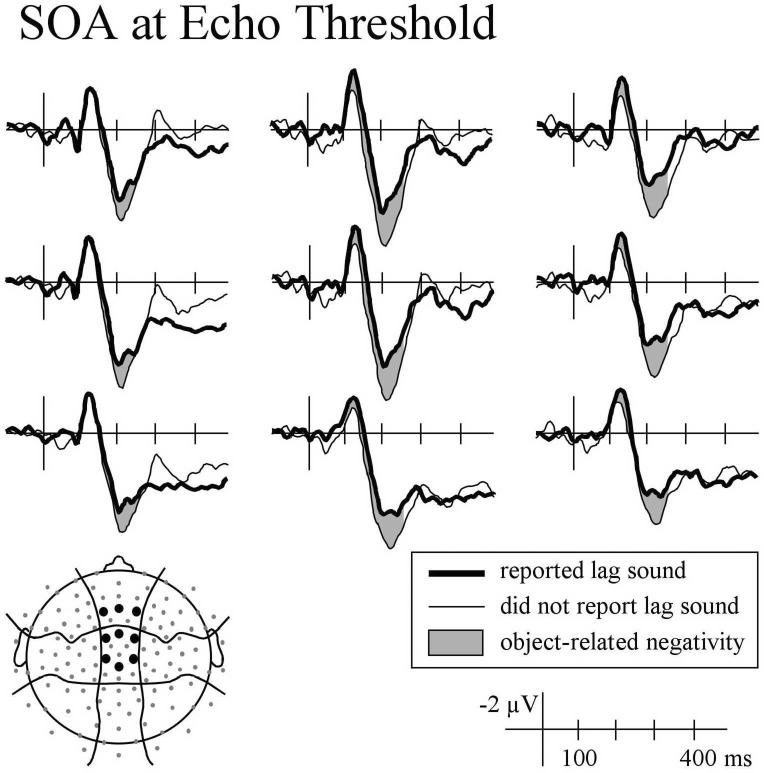

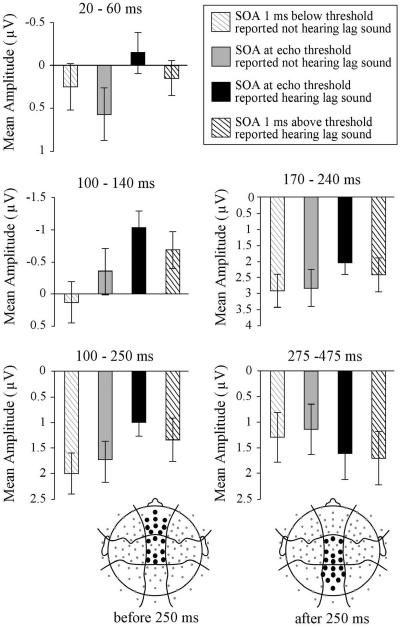

ERP indices of perception

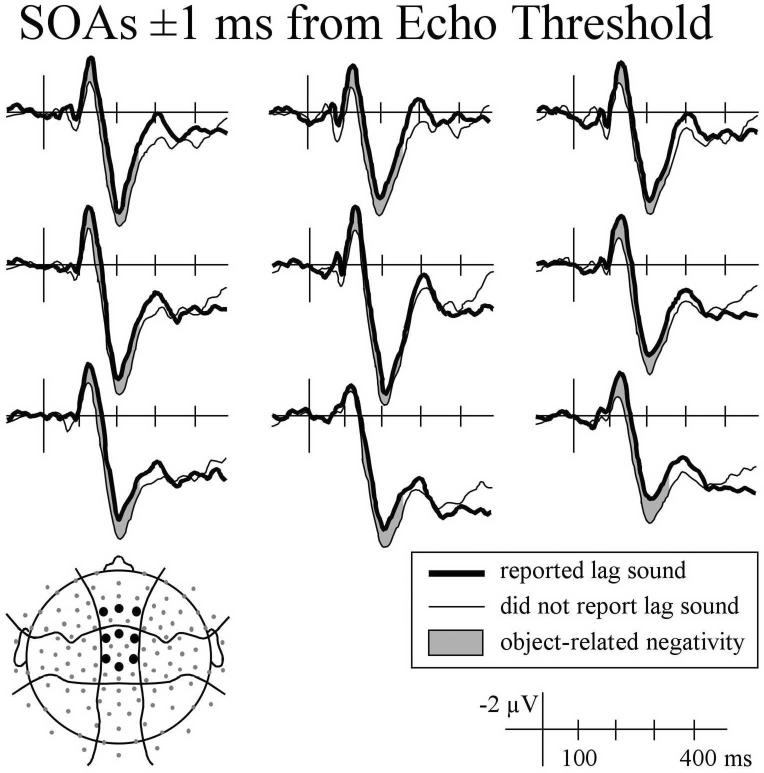

During the P1 time-window (20 - 60 ms), physically identical sounds elicited a larger mean amplitude when listeners reported hearing only one sound compared to trials on which they reported clearly hearing the lag sound, F(1,15) = 5.52, p < .05. This difference was broadly distributed across the scalp and was not replicated for the sounds with SOAs 1 ms shorter and longer than threshold (p's > .30) or SOAs 2 ms shorter and longer than threshold (p's > .30). A more consistent effect, shown in Figure 5, was observed for mean amplitude between 100 and 140 ms (N1). In this time range, click pairs with an SOA at echo threshold elicited a larger negativity when listeners reported hearing the echo compared to not hearing the echo, F(1,15) = 5.38, p < .05. A similar effect, shown in Figure 6, was observed for sounds with lag delays that differed from threshold by 1 ms: Click pairs with SOAs 1 ms more than threshold on trials when listeners reported clearly hearing the echo elicited a larger negativity than click pairs with SOAs 1 ms less than threshold on trials when listeners reported not hearing the echo, F(1,15) = 7.24, p < .05. Similar effects were found during the P2 time range (170 - 240 ms) such that for sounds at echo threshold mean amplitude was less positive when participants reported hearing the echo, F(1,15) = 6.06, p < .05; the same was observed for click pairs above and below threshold, F(1,15) = 4.84, p < .05.

Figure 5.

ERPs recorded at anterior-central and medial electrode sites elicited by physically identical sounds with SOAs at echo threshold when listeners reported hearing the lag sound (thick line) and not hearing the lag sound (thin line).

Figure 6.

ERPs recorded at anterior-central and medial electrode sites elicited by click pairs with onset asynchronies 1 ms longer than echo threshold when listeners reported hearing the lag sound (thick line) and sounds with onset asynchronies 1 ms shorter than echo threshold when listeners reported not hearing the lag sound (thin line).

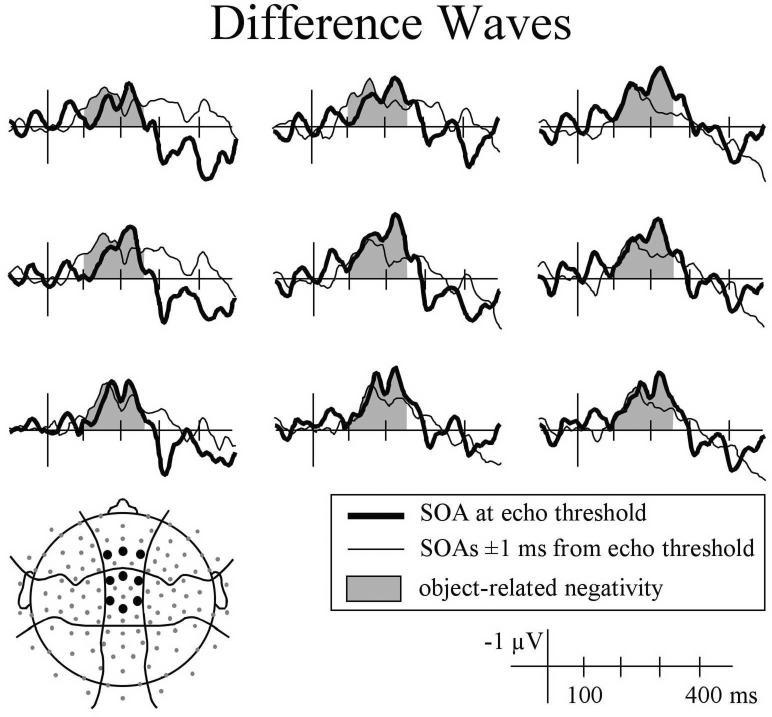

The differences observed in the N1 and P2 time windows were similar to each other (more negative ERPs when listeners reported hearing the echo), and at some electrode sites there appeared to be a single continuous difference (Figure 7) rather than two distinct effects. Therefore, mean amplitude measures that subsumed these times (100 - 250 ms) were taken. Consistent with the individual component analyses, mean amplitude was more negative when listeners reported hearing the lag sound for click pairs with SOAs at echo threshold, F(1,15) = 6.5, p < .05, and for sounds with SOAs 1 ms shorter and longer than threshold, F(1,15) = 4.95, p < .05. Over this longer time-window the distribution of the difference was more clearly evident in condition by electrode position interactions that approached significance. Specifically, for sounds at echo threshold, the difference tended to be larger over anterior-central and medial scalp regions, Response × LMR: F(2,30) = 2.75, p = .080; Response × ACP × LMR: F(4,60) = 2.54, p = .067.
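The mean amplitude measure and the two-condition comparison described above can be sketched as follows. This is a simplified illustration, not the analysis used in the study: it ignores the electrode position factors (ACP, LMR) and relies on the fact that, for a within-subject factor with only two levels, the repeated-measures F equals the squared paired t with (1, n - 1) degrees of freedom. The function names and the example amplitudes are hypothetical.

```python
def mean_amplitude(times_ms, amplitudes_uv, start_ms, end_ms):
    """Average amplitude of all samples between start_ms and end_ms (inclusive)."""
    samples = [a for t, a in zip(times_ms, amplitudes_uv)
               if start_ms <= t <= end_ms]
    if not samples:
        raise ValueError("no samples fall inside the measurement window")
    return sum(samples) / len(samples)

def repeated_measures_f(reported, not_reported):
    """F for a two-level within-subject factor; returns (F, (df1, df2)).

    Each list holds one mean amplitude per lead side by participant, in the
    same order. Assumes the paired differences are not all identical.
    """
    if len(reported) != len(not_reported) or len(reported) < 2:
        raise ValueError("need two equally long lists with at least 2 entries")
    diffs = [r - n for r, n in zip(reported, not_reported)]
    n = len(diffs)
    mean_diff = sum(diffs) / n
    var_diff = sum((d - mean_diff) ** 2 for d in diffs) / (n - 1)
    return n * mean_diff ** 2 / var_diff, (1, n - 1)

# Mean amplitude of a toy waveform within the 100-250 ms window.
window_mean = mean_amplitude([90, 100, 150, 250, 260],
                             [9.0, 1.0, 2.0, 3.0, 9.0], 100, 250)

# Hypothetical mean amplitudes (microvolts) for 16 lead side by participants.
lag_reported = [-2.0 + 0.1 * i for i in range(16)]
lag_not_reported = [value + 0.5 + (0.2 if i % 2 else -0.2)
                    for i, value in enumerate(lag_reported)]
f_value, df = repeated_measures_f(lag_reported, lag_not_reported)
```

With 16 lead side by participants the degrees of freedom are (1, 15), matching the form of the main effects of response reported in this section.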

Figure 7.

Difference (lag sound reported - lag sound not reported) in ERPs elicited by click pairs with onset asynchronies at echo threshold (thick line) and 1 ms away from echo threshold (thin line).

To determine if the frequency of reporting hearing the lag sound affected mean amplitude between 100 and 250 ms, the 16 lead side by participants were divided into three groups: those who reported hearing the lag sound on over 66% of trials (N = 4), those who reported hearing the lag sound on roughly half of the trials (N = 6), and those who reported hearing the lag sound on fewer than 33% of trials (N = 6). No interaction between group and response was observed in visual inspection of the waveforms. This observation was supported by statistical analyses (p's > .75), though the small numbers included in each group meant these comparisons had little power.

Visual inspection of the grand average waveforms and evidence from studies in which listeners were asked to report whether they heard two distinct pitches (Alain et al., 2001; Hautus & Johnson, 2005) suggested that when listeners reported hearing two distinct sounds, the click pairs would elicit a larger positivity later in the waveform (mean amplitude 275 - 475 ms). Although this was numerically true for sounds with an SOA at threshold as well as 1 or 2 ms away from threshold, none of the main effects of condition or condition by electrode position interactions were significant (p's > .10). The mean amplitude measurements taken in each time window before 250 ms at anterior-central and medial electrode sites and after 250 ms at posterior-central and medial electrode sites are summarized in Figure 8.

Figure 8.

Summary of mean amplitude measurements taken 20-60, 100-140, 170-240, and 100-250 ms after lead sound onset at anterior-central and medial electrode sites and 275-475 ms after sound onset at posterior-central and medial electrode sites. Amplitude of ERPs elicited by sounds at echo threshold is shown as solid bars; for sounds 1 ms away from threshold, amplitude is shown as lined bars. Data for trials on which listeners reported hearing the lag sound are shown in black; data for trials on which listeners reported not hearing the lag sound are shown in grey. Error bars indicate standard error of the mean.

DISCUSSION

Shorter latency P1 peaks were observed for click pairs with an onset asynchrony 1 ms less than a listener's echo threshold, and larger amplitude P1s were evident for sounds with an SOA at a listener's threshold when they reported not hearing compared to hearing the lag sound. These early effects failed to replicate across other onset asynchronies and may have been spurious. In contrast, when listeners reported hearing the lag sound for pairs with an SOA at or 1 ms above their echo threshold, evoked potentials had a more negative amplitude measured during the N1 time window (100 - 140 ms), the P2 time window (170 - 240 ms), and across these two periods (100 - 250 ms). These data provide important information about the neurosensory processing involved in the perception (or lack of perception) of simulated echoes. The neural response to physically identical click pairs that listeners later report as two distinct sounds differs from that elicited by stimuli listeners describe as a single sound localized towards the lead loudspeaker. Through comparison with studies that report a similar component, the ORN, under conditions that result in listeners hearing two distinct pitches, it is possible to conclude that these differences reflect a neural mechanism related to conscious perception of multiple sound sources that is not fully dependent on physical differences in the stimuli.

Evidence of an ORN when listeners report hearing the lag sound for click pairs that fall on or very close to their echo threshold can be interpreted in multiple ways. One possibility is that listeners make predictions about whether they will hear the lag sound or not on the next trial, which then affects both early neurosensory processing and their response when the external stimulus provides ambiguous information. However, these predictions would likely be affected by both what was perceived on the previous trial and the overall probability of hearing the lag sound during a session; neither of these factors was observed to affect responses in the current study. That is, listeners were no more likely to report hearing the lag sound at their echo threshold when they reported hearing the lag sound on the previous trial, and the ORN was equally evident in listeners whose echo thresholds fell near the longest onset asynchronies they were presented with (and who therefore reported the lag sound relatively rarely) and in those who reported hearing the lag sound on a large proportion of trials. A more plausible explanation for the observed ERP effects at echo threshold is that even though the physical stimulus was identical, the neural response to those stimuli differed in a way that resulted in a `no echo' or `echo present' interpretation by the listener.

Asking listeners to report if they heard a sound from the side of the lag loudspeaker or not on each trial likely encouraged them to direct spatially selective attention towards the location of the lag loudspeaker for an entire block of trials. If listeners did use selective attention to aid in the detection of lag sounds, previous research would predict that it lowered their detection thresholds and increased the amplitude of auditory evoked potentials or resulted in an added negative processing difference (Hansen & Hillyard, 1980; Hillyard, Hink, Schwent, & Picton, 1973; Näätänen, 1982; Picton & Hillyard, 1974; Schröger & Eimer, 1997; Spence & Driver, 1994). Both effects may have been present in the current data but cannot be measured since participants were always performing the same task. However, the ORN elicited by pitch stimuli has been shown to be unaffected by selective attention (Alain et al., 2001, 2002; Dyson et al., 2005). By comparison, this suggests the ORN reported in the current study would have been equal in amplitude regardless of the location, or even the task, listeners were attending to. In contrast, the later positivity evoked by sounds listeners perceive as two distinct pitches has only been observed when listeners actively attend to the auditory stimuli (Alain et al., 2001). The fact that this positivity in the current study was observed in the grand averages but was not consistent enough across individuals to be statistically significant suggests there may have been differences in the extent to which individuals were attending to the task.

The similarity of the ORN to another commonly reported auditory ERP component, the MMN, has been noted in several other studies (Alain, Reinke, He, Wang, & Lobaugh, 2005; Alain et al., 2002; Hautus & Johnson, 2005). In those studies, the ORN and MMN have been differentiated based on distribution (MMN was more anterior), frequency (MMN was modulated by the frequency of a sound in the recent context), and attention (MMN was modulated by participant attention to other tasks). Similar arguments apply to the current data. Although neither frequency nor attention was manipulated and no direct comparison of the ORN and MMN in the same individuals was attempted, the anterior-central distribution of the negativity between 100 and 250 ms and the lack of an association between the amplitude of this negativity and the percentage of trials on which an individual reported hearing the lag sound suggest that the difference in ERPs in response to sounds perceived as a single source and two concurrent sounds is best described as an ORN.

The primary goal of this study was to understand the levels of processing that are affected by perception of an echo in the precedence effect. However, just as research on pitch perception that has reported a similar ERP component can further elucidate the interpretation of the current results, the data presented here offer additional clarification of what that component is indexing in pitch perception studies. Specifically, the fact that the ORN is observed for concurrent sound source segregation regardless of whether the streams are separated based on pitch or location indicates that the ORN is actually indexing perception of distinct auditory objects. Further, by showing that the ORN is elicited by physically identical stimuli when listeners report hearing two sounds compared to one, the data strengthen the argument that the differences reported in pitch perception studies are not based on physical differences in the stimuli (Alain et al., 2002, 2005; Hautus & Johnson, 2005).

The number of participants who completed some portion of the experiment but were not included in ERP analyses might suggest that the ORN is not a robust index of the precedence effect. However, the reasons for excluding much of the data included additional constraints that are not typical of other studies. Overall, 67% of the psychophysical functions fit a logistic curve quite well. The number of participants whose responses did not vary systematically with SOA was consistent with other studies conducted in more precisely controlled acoustic environments (Blauert, 1997; Clifton, 1987; Clifton et al., 1994; Damaschke et al., 2005; Freyman et al., 1991; Freyman & Keen, 2006; Litovsky et al., 2000; Saberi & Antonio, 2003).

Understanding the neural mechanisms of auditory stream segregation will be important in formulating both environments and interventions that result in better auditory comprehension in complex, noisy situations. The current study contributes to that understanding by showing that perception of two concurrent sound sources is supported by early neurosensory processing that differs from that associated with perception of one sound source. Comparisons with other studies suggest this difference in processing is not dependent on attention and is similar for auditory objects defined by either pitch or location. Further, the results suggest the precedence effect can be mediated by differences in the neural responses to physically identical stimuli.

Acknowledgments

The research reported here was partially funded by NIH R01 DC01625 awarded to R.E.K. and R.L.F. as well as NIH Institutional Training Grant MH16745 that provided postdoctoral training for A.S.J. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIDCD, NIMH, or NIH. We thank Lori Astheimer, William Bush, Molly Chilingerian, Ahren Fitzroy, Jim Morgante, and Leah Novotny for assistance with data collection.

References

- 1. Alain C, Arnott S, Picton T. Bottom-up and top-down influences on auditory scene analysis: Evidence from event-related brain potentials. Journal of Experimental Psychology: Human Perception and Performance. 2001;27:1072–1089. doi: 10.1037//0096-1523.27.5.1072.

- 2. Alain C, McDonald K. Age-related differences in neuromagnetic brain activity underlying concurrent sound perception. The Journal of Neuroscience. 2007;27:1308–1314. doi: 10.1523/JNEUROSCI.5433-06.2007.

- 3. Alain C, Reinke K, He Y, Wang C, Lobaugh N. Hearing two things at once: Neurophysiological indices of speech segregation and identification. Journal of Cognitive Neuroscience. 2005;17:811–818. doi: 10.1162/0898929053747621.

- 4. Alain C, Schuler B, McDonald K. Neural activity associated with distinguishing concurrent auditory objects. Journal of the Acoustical Society of America. 2002;111:990–995. doi: 10.1121/1.1434942.

- 5. Blauert J. Spatial Hearing: The Psychophysics of Human Sound Localization. MIT Press; Cambridge, MA: 1997.

- 6. Bregman A. Auditory Scene Analysis. MIT Press; Cambridge, MA: 1990.

- 7. Chait M, Poeppel D, Simon J. Neural response correlates of detection of monaurally and binaurally created pitches in humans. Cerebral Cortex. 2006;16:835–848. doi: 10.1093/cercor/bhj027.

- 8. Clifton RK. Breakdown of echo suppression in the precedence effect. Journal of the Acoustical Society of America. 1987;82:1834–1835. doi: 10.1121/1.395802.

- 9. Clifton RK, Freyman R. Effect of click rate and delay on breakdown of the precedence effect. Perception & Psychophysics. 1989;46:139–145. doi: 10.3758/bf03204973.

- 10. Clifton RK, Freyman R, Litovsky R, McCall D. Listeners' expectations about echoes can raise or lower echo thresholds. Journal of the Acoustical Society of America. 1994;95:1525–1533. doi: 10.1121/1.408540.

- 11. Clifton RK, Freyman R, Meo J. What the precedence effect tells us about room acoustics. Perception & Psychophysics. 2002;64:180–188. doi: 10.3758/bf03195784.

- 12. Damaschke J, Riedel H, Kollmeier G. Neural correlates of the precedence effect in auditory evoked potentials. Hearing Research. 2005;205:157–171. doi: 10.1016/j.heares.2005.03.014.

- 13. Dyson B, Alain C, He Y. Effects of visual attentional load on low-level auditory scene analysis. Cognitive, Affective, & Behavioral Neuroscience. 2005;5:319–338. doi: 10.3758/cabn.5.3.319.

- 14. Fitzpatrick D, Kuwada S, Kim D, Parham K, Batra R. Responses of neurons to click-pairs as simulated echoes: Auditory nerve to auditory cortex. Journal of the Acoustical Society of America. 1999;106:3460–3472. doi: 10.1121/1.428199.

- 15. Freyman R, Clifton RK, Litovsky R. Dynamic processes in the precedence effect. Journal of the Acoustical Society of America. 1991;90:874–884. doi: 10.1121/1.401955.

- 16. Freyman R, Keen R. Constructing and disrupting listeners' models of auditory space. Journal of the Acoustical Society of America. 2006;120:3957–3965. doi: 10.1121/1.2354020.

- 17. Grantham W. Left-right asymmetry in the buildup of echo suppression in normal-hearing adults. Journal of the Acoustical Society of America. 1996;99:1118–1123. doi: 10.1121/1.414596.

- 18. Hansen J, Hillyard S. Endogenous brain potentials associated with selective auditory attention. Electroencephalography and Clinical Neurophysiology. 1980;49:277–290. doi: 10.1016/0013-4694(80)90222-9.

- 19. Hartung K, Trahiotis C. Peripheral auditory processing and investigations of the 'precedence effect' which utilize successive transient stimuli. Journal of the Acoustical Society of America. 2001;110:1505–1513. doi: 10.1121/1.1390339.

- 20. Hautus M, Johnson B. Object-related brain potentials associated with the perceptual segregation of a dichotically embedded pitch. Journal of the Acoustical Society of America. 2005;117:275–280. doi: 10.1121/1.1828499.

- 21. Hillyard S, Hink R, Schwent V, Picton T. Electrical signs of selective attention in the human brain. Science. 1973;182:177–180. doi: 10.1126/science.182.4108.177.

- 22. Hiraumi H, Nagamine T, Morita T, Naito Y, Fukuyama H, Ito J. Right hemispheric predominance in the segregation of mistuned partials. European Journal of Neuroscience. 2005;22:1821–1824. doi: 10.1111/j.1460-9568.2005.04350.x.

- 23. Johnson B, Hautus M, Clapp W. Neural activity associated with binaural processes for the perceptual segregation of pitch. Clinical Neurophysiology. 2003;114:2245–2250. doi: 10.1016/s1388-2457(03)00247-5.

- 24. Li L, Qi J, He Y, Alain C, Schneider B. Attribute capture in the precedence effect for long-duration noise sounds. Hearing Research. 2005;202:235–247. doi: 10.1016/j.heares.2004.10.007.

- 25. Liebenthal E, Pratt H. Human auditory cortex electrophysiological correlates of the precedence effect: Binaural echo lateralization suppression. Journal of the Acoustical Society of America. 1999;106:291–303.

- 26. Litovsky R, Colburn HS, Yost W, Guzman S. The precedence effect. Journal of the Acoustical Society of America. 1999;106:1633–1654. doi: 10.1121/1.427914.

- 27. Litovsky R, Fligor B, Tramo M. Functional role of the human inferior colliculus in binaural hearing. Hearing Research. 2002;165:177–188. doi: 10.1016/s0378-5955(02)00304-0.

- 28. Litovsky R, Hawley M, Fligor B, Zurek P. Failure to unlearn the precedence effect. Journal of the Acoustical Society of America. 2000;108:2345–2352. doi: 10.1121/1.1312361.

- 29. Litovsky R, Rakerd B, Yin T, Hartmann W. Psychophysical and physiological evidence for a precedence effect in the median sagittal plane. Journal of Neurophysiology. 1997;77:2223–2226. doi: 10.1152/jn.1997.77.4.2223.

- 30. Litovsky R, Shinn-Cunningham B. Investigation of the relationship among three common measures of precedence: Fusion, localization dominance, and discrimination suppression. Journal of the Acoustical Society of America. 2001;109:346–358. doi: 10.1121/1.1328792.

- 31. Litovsky R, Yin T. Physiological studies of the precedence effect in the inferior colliculus of the cat. I. Correlates of psychophysics. Journal of Neurophysiology. 1998;80:1285–1301. doi: 10.1152/jn.1998.80.3.1285.

- 32. McDonald K, Alain C. Contribution of harmonicity and location to auditory object formation in free field: Evidence from event-related brain potentials. Journal of the Acoustical Society of America. 2005;118:1593–1604. doi: 10.1121/1.2000747.

- 33. Mickey B, Middlebrooks J. Responses of auditory cortical neurons to pairs of sounds: Correlates of fusion and localization. Journal of Neurophysiology. 2001;86:1333–1350. doi: 10.1152/jn.2001.86.3.1333.

- 34. Näätänen R. Processing negativity: An evoked-potential reflection of selective attention. Psychological Bulletin. 1982;92:605–640. doi: 10.1037/0033-2909.92.3.605.

- 35. Picton T, Hillyard S. Human auditory evoked potentials. II. Effects of attention. Electroencephalography and Clinical Neurophysiology. 1974;36:191–199. doi: 10.1016/0013-4694(74)90156-4.

- 36. Saberi K, Antonio J. Precedence-effect thresholds for a population of untrained listeners as a function of stimulus intensity and interclick interval. Journal of the Acoustical Society of America. 2003;114:420–429. doi: 10.1121/1.1578079.

- 37. Saberi K, Perrott D. Lateralization thresholds obtained under conditions in which the precedence effect is assumed to operate. Journal of the Acoustical Society of America. 1990;87:1732–1737. doi: 10.1121/1.399422.

- 38. Schröger E, Eimer M. Endogenous covert spatial orienting in audition: “Cost-benefit” analyses of reaction times and event-related potentials. Quarterly Journal of Experimental Psychology. 1997;50A:457–474.

- 39. Spence C, Driver J. Covert spatial orienting in audition: Exogenous and endogenous mechanisms. Journal of Experimental Psychology: Human Perception and Performance. 1994;20:555–574.

- 40. Spitzer M, Bala A, Takahashi T. A neuronal correlate of the precedence effect is associated with spatial selectivity in the barn owl's auditory midbrain. Journal of Neurophysiology. 2004;92:2051–2070. doi: 10.1152/jn.01235.2003.

- 41. Tollin D, Yin T. Psychophysical investigation of an auditory spatial illusion in cats: The precedence effect. Journal of Neurophysiology. 2003;90:2149–2162. doi: 10.1152/jn.00381.2003.

- 42. Wallach H, Newman E, Rosenzweig M. The precedence effect in sound localization. American Journal of Psychology. 1949;62:315–336.

- 43. Yin T. Physiological correlates of the precedence effect and summing localization in the inferior colliculus of the cat. The Journal of Neuroscience. 1994;14:5170–5186. doi: 10.1523/JNEUROSCI.14-09-05170.1994.