Abstract

We describe nonnegative matrix factorisation (NMF) with a Kullback-Leibler (KL) error measure in a statistical framework, with a hierarchical generative model consisting of an observation and a prior component. Omitting the prior leads to the standard KL-NMF algorithms as special cases, where maximum likelihood parameter estimation is carried out via the Expectation-Maximisation (EM) algorithm. Starting from this view, we develop full Bayesian inference via variational Bayes or Monte Carlo. Our construction retains conjugacy and enables us to develop more powerful models while retaining attractive features of standard NMF such as monotonic convergence and easy implementation. We illustrate our approach on model order selection and image reconstruction.

1. Introduction

In machine learning, nonnegative matrix factorisation (NMF) was introduced by Lee and Seung [1] as an alternative to k-means clustering and principal component analysis (PCA) for data analysis and compression (also see [2]). In NMF, given a W × K nonnegative matrix X = {x ν,τ}, where ν = 1 : W, τ = 1 : K, we seek positive matrices T and V such that

| $x_{\nu,\tau} \approx [TV]_{\nu,\tau} = \sum_{i} t_{\nu,i}\, v_{i,\tau}$ | (1) |

where i = 1 : I. We will refer to the W × I matrix T as the template matrix, and I × K matrix V the excitation matrix. The key property of NMF is that T and V are constrained to be positive matrices. This is in contrast with PCA, where there are no positivity constraints or k-means clustering where each column of V is constrained to be a unit vector. Subject to the positivity constraints, we seek a solution to the following minimisation problem:

| $(T, V)^{*} = \arg\min_{T, V > 0} D(X \,\|\, TV)$ | (2) |

Here, the function D is a suitably chosen error function. One particular choice for D, on which we will focus here, is the information (Kullback-Leibler) divergence, which we write as

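| $D(X \,\|\, \Lambda) = -\sum_{\nu,\tau} \left( x_{\nu,\tau} \log \frac{\lambda_{\nu,\tau}}{x_{\nu,\tau}} - \lambda_{\nu,\tau} + x_{\nu,\tau} \right)$ | (3) |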

Using Jensen's inequality [3] and concavity of log x, it can be shown that D(·) is nonnegative and D(X||Λ) = 0 if and only if X = Λ. The objective in (2) could be minimised by any suitable optimisation algorithm. Lee and Seung [1] have proposed a very efficient variational bound minimisation algorithm that has attractive convergence properties and which has been successfully applied in various applications in signal analysis and source separation, for example, [4–6].
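As a minimal sketch of the error measure, the information divergence between a data matrix and a product TV can be evaluated as below. The function names `kl_divergence` and `matmul` are illustrative and not from the paper; matrices are assumed to be lists of rows, and the convention 0 log 0 = 0 is assumed for zero data entries.

```python
import math


def matmul(A, B):
    """Plain matrix product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def kl_divergence(X, Lam):
    """Information divergence D(X || Lam) = sum(x log(x / lam) - x + lam)."""
    total = 0.0
    for x_row, lam_row in zip(X, Lam):
        for x, lam in zip(x_row, lam_row):
            if x > 0:
                total += x * math.log(x / lam) - x + lam
            else:
                # Assumed convention: 0 * log 0 = 0, so only lam remains.
                total += lam
    return total


# Usage: divergence between X and a rank-one product TV.
X = [[1.0, 2.0], [3.0, 0.0]]
T = [[1.0], [2.0]]
V = [[1.0, 3.0]]
d = kl_divergence(X, matmul(T, V))
```

The divergence is zero when both arguments coincide and positive otherwise, which matches the property stated above.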

The interpretation of NMF, like singular value decomposition (SVD), as a low rank matrix approximation is sufficient for the derivation of a useful inference algorithm; yet this view arguably does not provide the complete picture of the assumptions underlying the statistical properties of X. Therefore, we describe NMF from a statistical perspective as a hierarchical model. In our framework, the original nonnegative multiplicative update equations of NMF appear as an expectation-maximisation (EM) algorithm for maximum likelihood estimation of a conditionally Poisson model via data augmentation. Starting from this view, we develop Bayesian extensions that facilitate more powerful modelling and allow more sophisticated inference, such as Bayesian model selection. Inference in the resulting models can be carried out easily using variational Bayes (structured mean field) or Markov chain Monte Carlo (Gibbs sampler) methods. The resulting algorithms outperform existing NMF strategies and open up the way for a full Bayesian treatment for model selection via computation of the marginal likelihoods (the evidence), such as estimating the dimensions of the template matrix or regularising overcomplete representations via automatic relevance determination.

2. The Statistical Perspective

The interpretation of NMF as a low-rank matrix approximation is sufficient for the derivation of an inference algorithm; yet this view arguably does not provide the complete picture. In this section, we describe NMF from a statistical perspective. This view will pave the way for developing extensions that facilitate more realistic and flexible modelling as well as more sophisticated inference, such as Bayesian model selection.

Our first step is the derivation of the information divergence error measure from a maximum likelihood principle. We consider the following hierarchical model:

| (4) |

| (5) |

Here, 𝒫𝒪(s; λ) denotes the Poisson distribution of the random variable s ∈ ℕ 0 with nonnegative intensity parameter λ, where

| $\mathcal{PO}(s; \lambda) = \exp\left( s \log \lambda - \lambda - \log \Gamma(s + 1) \right)$ | (6) |

and Γ(s + 1) = s! is the gamma function. The priors p(T ∣ ·) and p(V ∣ ·) will be specified later. We call the variables S i = {s ν,i,τ} latent sources. We can analytically marginalise out the latent sources S = {S 1 ⋯ S I} to obtain the marginal likelihood

| (7) |

This result follows from the well-known superposition property of Poisson random variables [7], namely, when s i ∼ 𝒫𝒪(s i; λ i) and x = s 1 + s 2 + ⋯ + s I, then the marginal probability is given by p(x) = 𝒫𝒪(x; ∑i λ i). The maximisation of this objective in T and V is equivalent to the minimisation of the information divergence in (3). In the derivation of original NMF in [8], this objective is stated first; the S variables are introduced implicitly later during the optimisation on T and V. In the sequel, we show that this algorithm is actually equivalent to EM, ignoring the priors p(T ∣ ·) and p(V ∣ ·).
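The equivalence between maximising the Poisson likelihood and minimising the information divergence can be checked numerically. The sketch below assumes the marginal model x ν,τ ∼ 𝒫𝒪(x ν,τ; λ ν,τ) with λ = TV and strictly positive integer data; the function names are illustrative. The sum of the log-likelihood and the divergence should not depend on the intensities, so the two objectives differ only by a constant in T and V.

```python
import math


def poisson_loglik(X, Lam):
    """Sum of log PO(x; lam) = x log lam - lam - log Gamma(x + 1) over all entries."""
    return sum(x * math.log(lam) - lam - math.lgamma(x + 1)
               for x_row, lam_row in zip(X, Lam)
               for x, lam in zip(x_row, lam_row))


def kl_divergence(X, Lam):
    """Information divergence for strictly positive entries."""
    return sum(x * math.log(x / lam) - x + lam
               for x_row, lam_row in zip(X, Lam)
               for x, lam in zip(x_row, lam_row))


X = [[3, 1], [2, 5]]
Lam_a = [[2.5, 1.5], [2.0, 4.0]]
Lam_b = [[0.7, 3.0], [6.0, 1.2]]

# log-likelihood + divergence is the same constant for both intensity matrices.
const_a = poisson_loglik(X, Lam_a) + kl_divergence(X, Lam_a)
const_b = poisson_loglik(X, Lam_b) + kl_divergence(X, Lam_b)
```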

2.1. Maximum Likelihood and the EM Algorithm

The log-likelihood of the observed data X can be written as

| (8) |

where q(S) is an instrumental distribution, which is arbitrary provided that the sum on the right exists; q can vanish at a particular S only when p does so. Note that this defines a lower bound to the log-likelihood. It can be shown via functional derivatives and imposing the normalisation condition ∑S q(S) = 1 via Lagrange multipliers that the lower bound is tight for the exact posterior of the latent sources, that is,

| $q(S) = p(S \mid X, T, V)$ | (9) |

Hence the log-likelihood can be maximised iteratively as follows:

| (10) |

Here, 〈f(x)〉p(x) = ∫ p(x)f(x)dx, the expectation of some function f(x) with respect to p(x). In the E step, we compute the posterior distribution of S. This defines a lower bound on the likelihood

| (11) |

For many models in the exponential family, which includes (5), the expectation on the right depends on the sufficient statistics of q(S)(n) and is readily available; in fact calculating q(S) should be literally taken as calculating the sufficient statistics of q(S). The lower bound is readily obtained as a function of these sufficient statistics, and maximisation in the M Step yields a fixed point equation.

2.1.1. The E Step

To derive the posterior of the latent sources, we observe that

| (12) |

For the model in (5), we have

| (13) |

It follows from (5), (12), (13), and (7) that

| (14) |

where p ν,i,τ ≡ t ν,i v i,τ/∑i' t ν,i' v i',τ are the cell probabilities. Here, ℳ denotes a multinomial distribution defined by

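| $\mathcal{M}(\mathbf{s}; x, \mathbf{p}) = \frac{x!}{s_1!\, s_2! \cdots s_I!}\; p_1^{s_1} p_2^{s_2} \cdots p_I^{s_I}\; \delta(x - s_1 - s_2 - \cdots - s_I)$ | (15) |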

where s = {s 1, s 2,…, s I}, p = {p 1, p 2,…, p I}, and p 1 + p 2 + ⋯ + p I = 1. Here, p i, i = 1 ⋯ I are the cell probabilities, and x is the index parameter where s 1 + s 2 + ⋯ + s I = x. The Kronecker delta function is defined by δ(x) = 1 when x = 0, and δ(x) = 0 otherwise. It is a standard result that the marginal mean is

| $\langle s_i \rangle = x\, p_i$ | (16) |

that is, the expected value of each source s i is a fraction of the observation, where the fraction is given by the corresponding cell probability.
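The E step described above can be sketched as follows: each observation x ν,τ is split across the I sources in proportion to the cell probabilities p ν,i,τ. The function name `expected_sources` and the nested-list layout `S[nu][i][tau]` are assumptions made for illustration only.

```python
def expected_sources(X, T, V):
    """Posterior mean <s[nu][i][tau]> = x[nu][tau] * p[nu][i][tau]."""
    W, I, K = len(T), len(V), len(V[0])
    S = [[[0.0] * K for _ in range(I)] for _ in range(W)]
    for nu in range(W):
        for tau in range(K):
            # Unnormalised cell probabilities t[nu][i] * v[i][tau].
            lam = [T[nu][i] * V[i][tau] for i in range(I)]
            total = sum(lam)
            for i in range(I):
                S[nu][i][tau] = X[nu][tau] * lam[i] / total
    return S


X = [[4, 6], [10, 0]]
T = [[1.0, 3.0], [2.0, 2.0]]
V = [[1.0, 1.0], [1.0, 2.0]]
S = expected_sources(X, T, V)
```

Because the cell probabilities sum to one over i, the expected sources sum back to the observation at every (ν, τ).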

2.1.2. The M Step

It is indeed good news that the posterior has an analytic form, since the M step can now be calculated easily as follows:

| (17) |

Fortunately, for maximisation with respect to T and V, the last two difficult terms are merely constant, and we need only to maximise the simpler objective

| (18) |

where we only need the expected value of the sources given by the previous values of the templates and excitations:

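| $\langle s_{\nu,i,\tau} \rangle^{(n)} = x_{\nu,\tau}\, \frac{t^{(n)}_{\nu,i}\, v^{(n)}_{i,\tau}}{\sum_{i'} t^{(n)}_{\nu,i'}\, v^{(n)}_{i',\tau}}$ | (19) |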

Maximisation of the objective Q and substituting 〈s ν,i,τ〉(n) give the following fixed point equations:

| (20) |

Equation (20) is identical to the multiplicative update rules of [8]. However, our derivation via data augmentation obtains the same result as an EM algorithm. It is interesting to note that in the literature, NMF is often described as EM-like; here, we show that it is actually just an EM algorithm. We see that the efficiency of NMF is due to the fact that the W × I × K object 〈S〉 need not be explicitly calculated, as we only need its marginal statistics (sums across τ or ν).
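A minimal sketch of the multiplicative updates, written in the alternating form commonly used for KL-NMF (update T with V fixed, then V with T fixed). It is an illustration under stated assumptions rather than the paper's reference implementation: the data are strictly positive, the initialisation is uniform random, and the names `kl_nmf` and `history` are hypothetical. Only the ratio matrix X ./ (TV) is formed; the W × I × K object 〈S〉 never appears.

```python
import math
import random


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def kl_divergence(X, Lam):
    return sum(x * math.log(x / lam) - x + lam
               for xr, lr in zip(X, Lam) for x, lam in zip(xr, lr))


def kl_nmf(X, I, n_iter=50, seed=0):
    """Alternating multiplicative updates for KL-NMF; X must be strictly positive."""
    rng = random.Random(seed)
    W, K = len(X), len(X[0])
    T = [[rng.uniform(0.5, 1.5) for _ in range(I)] for _ in range(W)]
    V = [[rng.uniform(0.5, 1.5) for _ in range(K)] for _ in range(I)]
    history = []
    for _ in range(n_iter):
        # Template update: t <- t * sum_tau (x / lam) v / sum_tau v.
        Lam = matmul(T, V)
        R = [[X[n][t] / Lam[n][t] for t in range(K)] for n in range(W)]
        v_sums = [sum(V[i]) for i in range(I)]
        T = [[T[n][i] * sum(R[n][t] * V[i][t] for t in range(K)) / v_sums[i]
              for i in range(I)] for n in range(W)]
        # Excitation update: v <- v * sum_nu t (x / lam) / sum_nu t.
        Lam = matmul(T, V)
        R = [[X[n][t] / Lam[n][t] for t in range(K)] for n in range(W)]
        t_sums = [sum(T[n][i] for n in range(W)) for i in range(I)]
        V = [[V[i][t] * sum(T[n][i] * R[n][t] for n in range(W)) / t_sums[i]
              for t in range(K)] for i in range(I)]
        history.append(kl_divergence(X, matmul(T, V)))
    return T, V, history


data_rng = random.Random(1)
X = [[float(data_rng.randint(1, 20)) for _ in range(5)] for _ in range(4)]
T, V, history = kl_nmf(X, I=2, n_iter=40)
```

The recorded divergence values should be nonincreasing across iterations, which is the monotonic convergence property referred to in the text.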

We note that our model is valid when X is integer valued. See [9] for a detailed discussion about consequences of this issue. Here, we assume that for a nonnegative real-valued data matrix X̃, we only consider the integer part, that is, we let X̃ = X + E, where E is a noise matrix with entries uniformly drawn in [0, 1). In practice, this is not an obstacle when the entries of X are large.

The interpretation of NMF as a maximum likelihood method in a Poisson model is mentioned in the original NMF paper [1] and discussed in more detail by [5, 10]. The equivalence of NMF and probabilistic latent semantic analysis is shown in [11]. Kameoka in [5] focuses on the optimisation and gives an equivalent description using auxiliary function maximisation. In contrast, the auxiliary variables can be viewed as model variables (the sources s) that are analytically integrated out [10]. A general framework is described in [12]. Prior structures are placed on conditionally Gaussian NMF models to enforce sparsity in [13]. However, all of these approaches are based on regularisation, that is, they aim at calculating a maximum a posteriori estimate. In contrast, we provide in this article a full Bayesian treatment where the templates and excitations are integrated out.

2.2. Hierarchical Prior Structure

Given the probabilistic interpretation, it is possible to propose various hierarchical prior structures to fit the requirements of an application. Here we will describe a simple choice where we have a conjugate prior as follows:

| (21) |

Here, 𝒢 denotes the density of a gamma random variable x ∈ ℝ+ with shape a ∈ ℝ+ and scale b ∈ ℝ+ defined by

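| $\mathcal{G}(x; a, b) = \exp\left( (a - 1) \log x - \frac{x}{b} - \log \Gamma(a) - a \log b \right)$ | (22) |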

The primary motivation for choosing a Gamma distribution is computational convenience: the Gamma distribution is the conjugate prior to the Poisson intensity. The indexing highlights the most general case where there are individual parameters for each element t ν,i and v i,τ. Typically, we do not allow many free hyperparameters but tie them depending upon the requirements of an application. See Figure 1 for an example. For instance, consider a model where we tie the hyperparameters such that a ν,i t = a t, b ν,i t = b t, a i,τ v = a v, and b i,τ v = b v for i = 1 ⋯ I, ν = 1 ⋯ W, and τ = 1 ⋯ K. This model is simple to interpret, where each component of the templates and the excitations is drawn independently from the Gamma family shown in Figure 2. Qualitatively, the shape parameter a controls the sparsity of the representation. Remember that 𝒢(x; a, b/a) has the mean b and standard deviation b/√a. Hence, for large a, all coefficients will have more or less the same magnitude b, and typical representations will be full. In contrast, for small a, most of the coefficients will be very close to zero, and only very few will be dominating, hence favouring a sparse representation. The scale parameter b is adapted to give the expected magnitude of each component.
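The two properties used above, the moments of 𝒢(x; a, b/a) and conjugacy to the Poisson intensity, can be illustrated with a short sketch. The function names are hypothetical, and the conjugacy example assumes the simplest setting of n independent Poisson counts sharing a single intensity, which is a simplification of the NMF model.

```python
import math
import random


def gamma_mean_std(a, b):
    """Mean and standard deviation of G(x; a, b/a): shape a, scale b/a."""
    scale = b / a
    return a * scale, math.sqrt(a) * scale


def gamma_posterior(a, b, counts):
    """Posterior (shape, scale) of a Poisson intensity with prior G(a, b/a)
    after observing iid Poisson counts with that intensity."""
    shape = a + sum(counts)
    scale = 1.0 / (a / b + len(counts))
    return shape, scale


mean, std = gamma_mean_std(4.0, 2.0)            # mean b = 2, std b / sqrt(a) = 1
post_shape, post_scale = gamma_posterior(2.0, 4.0, [3, 5])

# Empirical check of the mean; random.gammavariate takes (shape, scale).
rng = random.Random(0)
draws = [rng.gammavariate(4.0, 2.0 / 4.0) for _ in range(20000)]
empirical_mean = sum(draws) / len(draws)
```

The posterior stays in the Gamma family: the shape grows with the observed counts and the inverse scale grows with the number of observations.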

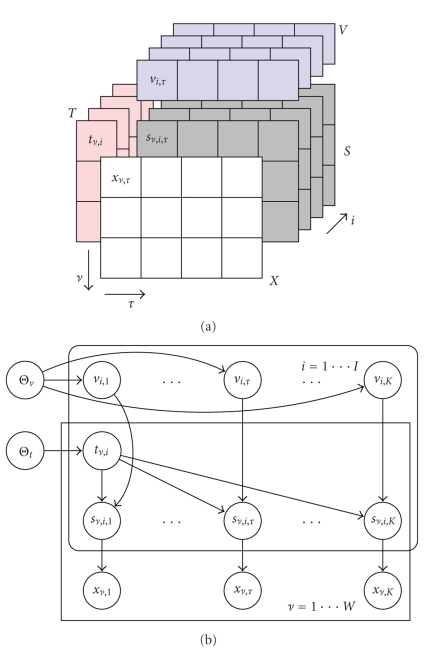

Figure 1.

(a) A schematic description of the NMF model with data augmentation. (b) Graphical model with hyperparameters. Each source element s ν,i,τ is Poisson distributed with intensity t ν,i v i,τ. The observations are given by x ν,τ = ∑i s ν,i,τ. In matrix notation, we write X = ∑ S i. We can analytically integrate out over S. Due to superposition property of Poisson distribution, intensities add up, and we obtain 〈X〉 = TV. Given X, the NMF algorithm is shown to seek the maximum likelihood estimates of the templates T and excitations V. In our Bayesian treatment, we further assume that elements of T and V are Gamma distributed with hyperparameters Θ.

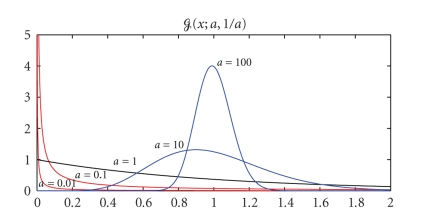

Figure 2.

(Left) The family of densities p(v; a, b = 1) = 𝒢(v; a, b/a) with the same mean 〈v〉 = b = 1. Small values of a (for a < 1) enforce sparser representations, and large values of a ≈ 100 tie all values to be close to a nonzero mean (nonsparse representation).

To model missing data, that is, when some of the x ν,τ are not observed, we define a mask matrix M = {m ν,τ}, the same size as X where m ν,τ = 0, if x ν,τ is missing and 1 otherwise (see Appendix A.4 for details). Using the mask variables, the observation model with missing data can be written as

| (23) |

The hierarchical model in (21) is more powerful than the basic model of (5), in that it allows a lot of freedom for more realistic modelling. First of all, the hyperparameters can be estimated from examples of a certain class of source to capture the invariant features. Another possibility is Bayesian model selection, where we can compare alternative models in terms of their marginal likelihood. This enables one to estimate the model order, for example, the optimum number of templates to represent a source.

3. Full Bayesian Inference

Below, we describe various interesting problems that can be cast as Bayesian inference problems. In signal analysis and feature extraction with NMF, we may wish to calculate the posterior distribution of templates and excitations, given data and hyperparameters Θ ≡ (Θt, Θv). Another important quantity is the marginal likelihood (also known as the evidence), where

| (24) |

The marginal likelihood can be used to estimate the hyperparameters, given examples of a source class

| (25) |

or to compare two given models via Bayes factors

| (26) |

This latter quantity is particularly useful for comparing different classes of models. Unfortunately, the integrations required cannot be computed in closed form. In the sequel, we will describe the Gibbs sampler and variational Bayes as approximate inference strategies.

3.1. Variational Bayes

We sketch here the Variational Bayes (VB) [3, 14] method to bound the marginal log-likelihood as

| (27) |

where q = q(S, T, V) is an instrumental distribution, and H[q] is its entropy. The bound is tight for the exact posterior q(S, T, V) = p(S, T, V ∣ X, Θ) but as this distribution is complex, we assume a factorised form for the instrumental distribution by ignoring some of the couplings present in the exact posterior as follows:

| (28) |

where α ∈ 𝒞 = {{S}, {T}, {V}} denotes the set of disjoint clusters. Hence, we are no longer guaranteed to attain the exact marginal likelihood ℒ X(Θ). Yet, the bound property is preserved, and the strategy of VB is to optimise the bound. Although the best q distribution respecting the factorisation is not available in closed form, it turns out that a local optimum can be attained by the following fixed point iteration:

| (29) |

where q ¬α = q/q α. This iteration monotonically improves the individual factors of the q distribution, that is, ℬ[q (n)] ≤ ℬ[q (n+1)] for n = 1, 2,… given an initialisation q (0). The order is not important for convergence; one could visit blocks in arbitrary order. However, in general, the attained fixed point depends upon the order of the updates as well as the starting point q (0)(·). We choose the following update order in our derivations:

| (30) |

| (31) |

| (32) |

3.2. Variational Update Equations and Sufficient Statistics

The expectations of 〈log p(X, S, T, V ∣ Θ)〉 are functions of the sufficient statistics of q (see the expression in the Appendix A.2). The update equation for the latent sources (30) leads to the following:

| (33) |

These equations are analogous to the multinomial posterior of EM given in (14); only the computation of cell probabilities is different. The excitation and template distributions and their sufficient statistics follow from the properties of the gamma distribution:

| (34) |

3.3. Efficient Implementation

One of the attractive features of NMF is easy and efficient implementation. In this section, we derive the update equations of Section 3.2 in compact matrix notation to illustrate that these attractive properties are retained for the full Bayesian treatment. A subtle but key point in the efficiency of the algorithm is that we can avoid explicitly storing and computing the W × I × K object 〈S〉, as we only need the marginal statistics during optimisation. Consider (33). We can write

| (35) |

Here, the denominator has to be nonzero. In the last line, we have represented the expression in compact notation where we define the following matrices:

| (36) |

The matrices subscripted with t are in ℝ + W×I and with v are in ℝ + I×K. For notational convenience, we define .∗ and ./ as elementwise matrix multiplication and division, respectively, and 1 W as a W × 1 vector of ones. After straightforward substitutions, we obtain the variational nonnegative matrix factorisation algorithm, which can be expressed compactly as in Algorithm 1.

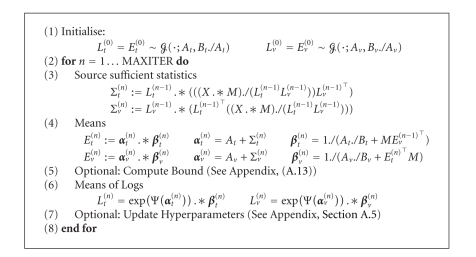

Algorithm 1.

Variational nonnegative matrix factorisation.
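The following is a hedged sketch of a mean-field iteration of this kind, not a transcription of Algorithm 1. It assumes tied hyperparameters, gamma factors q(t) and q(v) parameterised by shape and scale, the update order q(S), q(T), q(V), and an arbitrary uniform initialisation. The names `vb_nmf`, `E_t`, `L_t`, `Sig_t` and the hand-written `digamma` helper are illustrative choices. As in the text, only the sums of 〈S〉 over τ and over ν are formed.

```python
import math
import random


def digamma(x):
    """Digamma function for x > 0 via recurrence plus an asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    series = inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252))
    return result + math.log(x) - 0.5 / x - series


def vb_nmf(X, I, a_t=1.0, b_t=1.0, a_v=1.0, b_v=1.0, n_iter=30, seed=0):
    """Mean-field updates for q(S) q(T) q(V); returns posterior means <T>, <V>."""
    rng = random.Random(seed)
    W, K = len(X), len(X[0])
    # E_* holds <t>, <v>; L_* holds exp(<log t>), exp(<log v>).
    E_t = [[rng.uniform(0.5, 1.5) for _ in range(I)] for _ in range(W)]
    E_v = [[rng.uniform(0.5, 1.5) for _ in range(K)] for _ in range(I)]
    L_t = [row[:] for row in E_t]
    L_v = [row[:] for row in E_v]
    for _ in range(n_iter):
        # q(S): multinomial with cell probabilities proportional to L_t * L_v.
        R = [[X[n][t] / sum(L_t[n][i] * L_v[i][t] for i in range(I))
              for t in range(K)] for n in range(W)]
        # Sums of <s> over tau (Sig_t) and over nu (Sig_v).
        Sig_t = [[L_t[n][i] * sum(R[n][t] * L_v[i][t] for t in range(K))
                  for i in range(I)] for n in range(W)]
        Sig_v = [[L_v[i][t] * sum(L_t[n][i] * R[n][t] for n in range(W))
                  for t in range(K)] for i in range(I)]
        # q(T): gamma with shape alpha and scale beta.
        for n in range(W):
            for i in range(I):
                alpha = a_t + Sig_t[n][i]
                beta = 1.0 / (a_t / b_t + sum(E_v[i]))
                E_t[n][i] = alpha * beta
                L_t[n][i] = math.exp(digamma(alpha)) * beta
        # q(V): same form, using the refreshed <T>.
        for i in range(I):
            col_sum = sum(E_t[n][i] for n in range(W))
            for t in range(K):
                alpha = a_v + Sig_v[i][t]
                beta = 1.0 / (a_v / b_v + col_sum)
                E_v[i][t] = alpha * beta
                L_v[i][t] = math.exp(digamma(alpha)) * beta
    return E_t, E_v


X = [[3, 0, 5], [1, 4, 2], [0, 2, 6]]
E_t, E_v = vb_nmf(X, I=2, n_iter=20)
```

A complete implementation would also evaluate the bound ℬ after each sweep to monitor convergence; that part is omitted here.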

Similarly, an iterative conditional modes (ICM) algorithm can be derived to compute the maximum a posteriori (MAP) solution (see Appendix A.4):

| (37) |

| (38) |

Note that when the shape parameters go to zero, that is, A t, A v → 0, we obtain the maximum likelihood NMF algorithm.

3.4. Markov Chain Monte Carlo, the Gibbs Sampler

Monte Carlo methods [15, 16] are powerful computational techniques to estimate expectations of the form

| $E \equiv \langle f(x) \rangle_{p(x)} \approx \frac{1}{N} \sum_{i=1}^{N} f(x^{(i)})$ | (39) |

where x (i) are independent samples drawn from p(x). Under mild conditions on f, the estimate converges to the true expectation for N → ∞. The difficulty here is obtaining independent samples {x (i)}i=1 ⋯ N from complicated distributions.
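As a small illustration of such an estimate, the sketch below averages f over independent draws. The helper name `monte_carlo_expectation` is hypothetical, and the example target (the second moment of a standard normal, whose true value is 1) is chosen only because the answer is known.

```python
import random


def monte_carlo_expectation(f, sampler, n):
    """Average of f over n independent draws produced by sampler()."""
    return sum(f(sampler()) for _ in range(n)) / n


rng = random.Random(0)
estimate = monte_carlo_expectation(lambda x: x * x,
                                   lambda: rng.gauss(0.0, 1.0),
                                   100000)
```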

The Markov Chain Monte Carlo (MCMC) techniques generate subsequent samples from a Markov chain defined by a transition kernel 𝒯, that is, one generates x (i+1) conditioned on x (i) as follows:

| $x^{(i+1)} \sim \mathcal{T}(x \mid x^{(i)})$ | (40) |

Note that the transition kernel 𝒯 is not needed explicitly in practice; all that is needed is a procedure to sample a new configuration, given the previous one. Perhaps surprisingly, even though subsequent samples are correlated, provided that 𝒯 satisfies certain ergodicity conditions, (39) remains valid, and estimated expectations converge to their true values when the number of samples N goes to infinity [15]. To design a transition kernel 𝒯 such that the desired distribution is the stationary distribution, that is, p(x) = ∫ d x′𝒯(x ∣ x′)p(x′), many alternative strategies can be employed [16]. One particularly convenient and simple procedure is the Gibbs sampler, where one samples each block of variables from its full conditional distribution. For the NMF model, a possible Gibbs sampler is

| (41) |

Note that this procedure implicitly defines a transition kernel 𝒯(· ∣ ·). It can be shown [15] that the stationary distribution of 𝒯 is the exact posterior p(S, T, V ∣ X, Θ). Eventually, the Gibbs sampler converges regardless of the order in which the blocks are visited, provided that each block is visited infinitely often in the limit n → ∞. However, the rate of convergence is very difficult to assess as it depends upon the order of the updates as well as the starting configuration (T (0), V (0), S (0)). It is instructive to contrast (41) above with the variational update of (30)–(32): algorithmically the two approaches are quite similar. The pseudo-code is given in Algorithm 2.

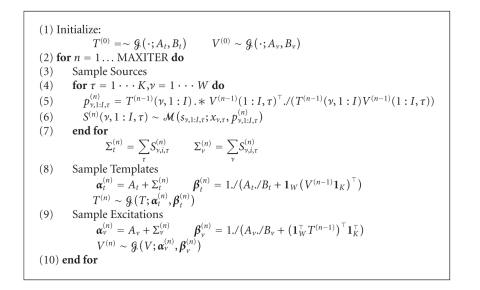

Algorithm 2.

Gibbs sampler for nonnegative matrix factorisation.
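A block Gibbs sweep of this kind can be sketched as follows. This is an illustration under assumptions, not a transcription of Algorithm 2: hyperparameters are tied, the full conditionals are taken to be multinomial for the sources and gamma (shape, scale) for the templates and excitations, and the helper names `sample_multinomial` and `gibbs_nmf` are hypothetical. The multinomial sampler is deliberately naive and only suitable for small counts.

```python
import random


def sample_multinomial(rng, n, probs):
    """Draw counts for n trials over len(probs) cells (naive inversion)."""
    counts = [0] * len(probs)
    for _ in range(n):
        u = rng.random()
        acc = 0.0
        for i, p in enumerate(probs):
            acc += p
            if u < acc:
                counts[i] += 1
                break
        else:
            counts[-1] += 1  # guard against rounding in the cumulative sum
    return counts


def gibbs_nmf(X, I, a_t, b_t, a_v, b_v, n_iter, seed=0):
    """Block Gibbs sampler; returns a list of (T, V, S) samples."""
    rng = random.Random(seed)
    W, K = len(X), len(X[0])
    T = [[rng.gammavariate(a_t, b_t / a_t) for _ in range(I)] for _ in range(W)]
    V = [[rng.gammavariate(a_v, b_v / a_v) for _ in range(K)] for _ in range(I)]
    samples = []
    for _ in range(n_iter):
        # S | T, V, X: multinomial split of each observation.
        S = [[[0] * K for _ in range(I)] for _ in range(W)]
        for n in range(W):
            for t in range(K):
                lam = [T[n][i] * V[i][t] for i in range(I)]
                total = sum(lam)
                counts = sample_multinomial(rng, X[n][t], [l / total for l in lam])
                for i in range(I):
                    S[n][i][t] = counts[i]
        # T | S, V: gamma with shape a + sum_tau s and rate a/b + sum_tau v.
        for n in range(W):
            for i in range(I):
                shape = a_t + sum(S[n][i])
                scale = 1.0 / (a_t / b_t + sum(V[i]))
                T[n][i] = rng.gammavariate(shape, scale)
        # V | S, T: gamma with shape a + sum_nu s and rate a/b + sum_nu t.
        for i in range(I):
            t_sum = sum(T[n][i] for n in range(W))
            for t in range(K):
                shape = a_v + sum(S[n][i][t] for n in range(W))
                scale = 1.0 / (a_v / b_v + t_sum)
                V[i][t] = rng.gammavariate(shape, scale)
        samples.append(([r[:] for r in T], [r[:] for r in V], S))
    return samples


X = [[3, 0, 5], [1, 4, 2]]
samples = gibbs_nmf(X, I=2, a_t=2.0, b_t=1.0, a_v=2.0, b_v=3.0, n_iter=50)
```

Compared with the variational sketch style of update, the only structural change is that expectations are replaced by random draws from the same distribution families.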

3.4.1. Marginal Likelihood Estimation with Chib's Method

The marginal likelihood can be estimated from the samples generated by the Gibbs sampler using a method proposed by Chib [17]. Suppose we have run the block Gibbs sampler until convergence and have N samples as follows:

| (42) |

The marginal likelihood is (omitting hyperparameters Θ)

| $p(X) = \frac{p(V, T, S, X)}{p(V, T, S \mid X)}$ | (43) |

This equation holds for all points (V, T, S). We choose a point in the configuration space; provided that the distribution is unimodal, a good candidate is a configuration near the mode, which we denote by (Ṽ, T̃, S̃). The numerator in (43) is easy to evaluate. The denominator is
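The identity behind Chib's method, marginal likelihood equals joint divided by posterior at any point, can be checked on a toy model where everything is available in closed form. The sketch below is not the NMF model: it assumes a single Poisson count x with a gamma prior (shape a, scale b) on its intensity, for which the posterior is gamma and the marginal is negative binomial. All function names are illustrative.

```python
import math


def log_poisson(x, lam):
    return x * math.log(lam) - lam - math.lgamma(x + 1)


def log_gamma_pdf(x, shape, scale):
    return ((shape - 1) * math.log(x) - x / scale
            - math.lgamma(shape) - shape * math.log(scale))


def log_marginal_via_identity(x, a, b, lam):
    """log p(x) = log p(x | lam) + log p(lam) - log p(lam | x), for any lam > 0."""
    post_shape = a + x
    post_scale = 1.0 / (1.0 / b + 1.0)
    return (log_poisson(x, lam) + log_gamma_pdf(lam, a, b)
            - log_gamma_pdf(lam, post_shape, post_scale))


def log_marginal_closed_form(x, a, b):
    """Negative binomial marginal of the gamma-Poisson pair."""
    return (math.lgamma(a + x) - math.lgamma(a) - math.lgamma(x + 1)
            - a * math.log(1.0 + b) + x * (math.log(b) - math.log(1.0 + b)))


x, a, b = 4, 2.0, 1.5
at_half = log_marginal_via_identity(x, a, b, lam=0.5)
at_three = log_marginal_via_identity(x, a, b, lam=3.0)
exact = log_marginal_closed_form(x, a, b)
```

In the NMF setting the posterior ordinate is not available exactly, which is why it is estimated from Gibbs samples; choosing a high-probability point keeps that estimate well behaved.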

| $p(V, T, S \mid X) = p(V \mid T, S, X)\; p(T \mid S, X)\; p(S \mid X)$ | (44) |

The first term is full conditional, so it is available for the Gibbs sampler. The third term is

| (45) |

The second term is trickier

| (46) |

The first term here is full conditional. However, the original Gibbs run gives us only samples from p(V ∣ X), not from p(V ∣ S̃, X), where S̃ is the source configuration at the chosen point. The idea is to run the Gibbs sampler for M further iterations where we sample from p(V, T ∣ S̃, X), that is, with S clamped at S̃. The resulting estimate is

| (47) |

Chib's method estimates the marginal likelihood as follows:

| (48) |

4. Simulations

Our goal is to illustrate our approach in a model selection context. We first illustrate that the variational approximation to the marginal likelihood is close to the one obtained from the Gibbs sampler via Chib's method. Then, we compare the quality of solutions we obtain via Variational NMF and compare them to the original NMF on a prediction task. Finally, we focus on reconstruction quality in the overcomplete case where the standard NMF is subject to overfitting.

Model Order Determination —

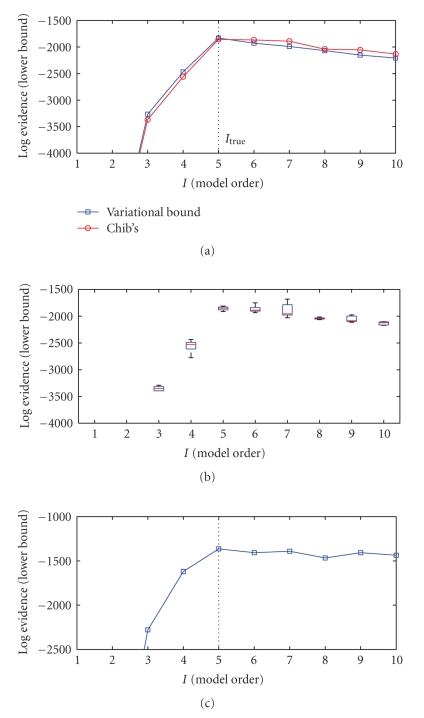

To test our approach, we generate synthetic data from the hierarchical model in (21) with W = 16, K = 10, and the number of sources I true = 5. The inference task is to find the correct number of sources, given X. The hyperparameters of the true model are set to a ν,i t = a t = 10, b ν,i t = b t = 1, a i,τ v = a v = 1, and b i,τ v = b v = 100. In the first experiment, the hyperparameters are assumed to be known, and in the second, they are jointly estimated from data using hyperparameter adaptation. We evaluate the marginal likelihood for models with the number of templates I = 1 ⋯ 10, with the Gibbs sampler using Chib's method and the variational lower bound ℬ via variational Bayes. We run the Gibbs sampler for MAXITER = 10 000 steps following a burn-in period of 5000 steps; then we clamp the sources S and continue the simulation for a further 10 000 steps to estimate quantities required by Chib's method. We run the variational algorithm until convergence of the bound or 10 000 iterations, whichever occurs first. In Figure 3(a), we show a comparison of the variational estimate with the average of 5 independent runs obtained via Chib's method. We observe that both methods give consistent results. In Figure 4, we show the lower bound as a function of model order I, where for each I, the bound is optimised independently by jointly optimising hyperparameters a t, b t, a v, and b v using the equations derived in the appendix. We observe that the correct model order can be inferred even when the hyperparameters are unknown a priori. This is potentially useful for estimation of model order from real data.

Figure 3.

Model selection results. (a) Comparison of model selection by variational bound (squares) and marginal likelihood estimated by Chib's (circles) method. The hyperparameters are assumed to be known. (b) Box-plot of marginal likelihood estimated by Chib's method using 5000, 10 000, and 10 000 iterations for burn-in, free, and clamped sampling. The boxes show the lower quartile, median, and upper quartile values. (c) Model selection by variational bound when hyperparameters are unknown and jointly estimated.

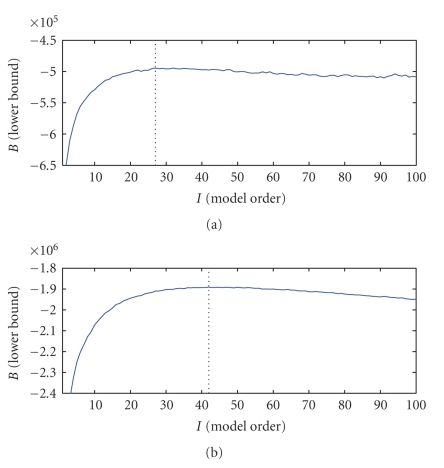

Figure 4.

Model selection using variational bound with adapted hyperparameters on face data 16 × 16 with I* = 27 (a) and 32 × 32 with I* = 42 (b).

As real data, we use a version of the Olivetti face image database (K = 400 images of 64 × 64 pixels available at http://www.cs.toronto.edu/~roweis/data/olivettifaces.mat). We further downsampled to 16 × 16 or 32 × 32 pixels, hence our data matrix X is 16² × 400 or 32² × 400. We use a model with tied hyperparameters as a ν,i t = a t, b ν,i t = b t, a i,τ v = a v, and b i,τ v = b v, where all hyperparameters are jointly estimated. In Figure 4, bottom, we show results of model order determination for this dataset with joint hyperparameter adaptation. Here, we run the variational algorithm for each model order I = 1 ⋯ 100 independently and evaluate the lower bound after optimising the hyperparameters. The Gibbs sampler was not found to be practical for this dataset and is omitted here. The lower bound behaves as is expected from marginal likelihood, reflecting the tradeoff between too many and too few templates. Higher resolution implies more templates, consistent with our intuition that detail requires more templates for accurate representation.

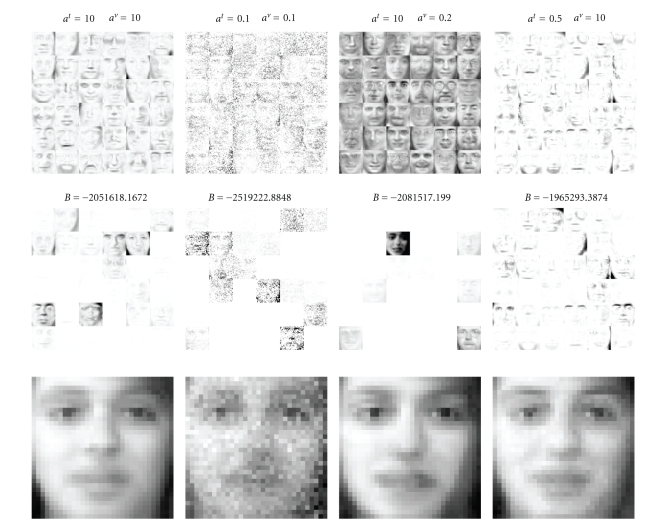

We also investigate the nature of the representations (see Figure 5). Here, for each independent run, we fix the values of shape parameters to (a t, a v) = [(10, 10), (0.1, 0.1), (10, 0.2), (10, 0.5)] and only estimate b t and b v. This corresponds to enforcing sparse or nonsparse t and v. Each column shows I = 36 templates estimated from the dataset conditioned on hyperparameters. The middle image is the same template image above weighted with the excitations corresponding to the reconstruction (the expected value of the predictive distribution) below. Here, we clearly see the effect of the hyperparameters. In the first condition (a t, a v) = (10, 10), the prior does not enforce sparsity on the templates and excitations. Hence, for the representation of a given image, there are many active templates. In the second condition, we try to force both matrices to be sparse with (a t, a v) = (0.1, 0.1). Here, the result is not satisfactory as isolated components of the templates are zeroed, giving a representation that looks like one contaminated by “salt-and-pepper” noise. The third condition ((a t, a v) = (10, 0.2)) forces only the excitations to be sparse. Here, we observe that the templates correspond to some average face images. Qualitatively, each image is reconstructed using a superposition of a few of these templates. In the final representation, we enforce sparsity in the templates but not in the excitations. Here, our estimate finds templates that correspond to parts of individual face images (eyebrows, lips, etc.). This solution, intuitively corresponding to a parsimonious representation, is also the best in terms of the marginal likelihood. With proper initialisation, our variational procedure is able to find such solutions.

Figure 5.

Templates, excitations for a particular example, and the reconstructions obtained for different hyperparameter settings. B is the lower bound for the whole dataset.

Prediction —

We now compare variational Bayesian NMF with the maximum likelihood NMF on a missing data prediction task.

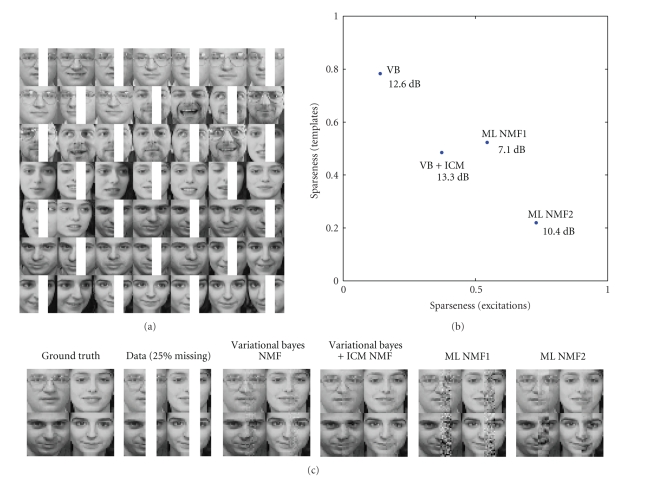

To illustrate the self regularisation effect, we set up an experiment in which we select a subset of the face data consisting of 50 images. From half of the images, we remove the same patch (Figure 6) and predict the missing pixels. This is a rather small dataset for this task, as we have only 10 images for each of the 5 different persons, and half of these images have missing data at the same spot. We measure the quality of the prediction in terms of signal-to-noise ratio (SNR). The missing values are reconstructed using the mean of the predictive distribution X pred ≡ 〈X〉𝒫𝒪(X;T*V*) = T*V*, where T* and V* are point estimates of the template and excitation matrix. We compare our variational algorithm with the classical NMF. For each algorithm, we test two different versions. The variational algorithms differ in how we estimate T* and V*. In the first variational algorithm, we use a crude estimate of T* and V* as the mean of the approximating q distribution. In the second condition, after convergence of hyperparameters via VB, we reinitialise T and V randomly and switch to an ICM algorithm (see (38)). This strategy finds a local mode (T*, V*) of the exact posterior distribution. In NMF, we test two initialisation strategies: in the first condition, we initialise the templates randomly; in the second, we set them equal to the images in the dataset with random perturbations.

Figure 6.

Results of a typical run. (a) Example images from the dataset. (b) Comparison of the reconstruction accuracy of different methods in terms of SNR (in dB), organised according to the sparseness of the solution. (c) (from left to right). The ground truth, data with missing pixels. The reconstructions of VB, VB + ICM, and ML-NMF with two initialisation strategies (1 = random, 2 = to image).

In Figure 6, we show the reconstruction results for a typical run, for a model with I = 100 templates. Note that this is an overcomplete model, with twice as many templates as there are images. To characterise the nature of the estimated template and excitation matrices, we use the sparseness criterion [18] of an m × n matrix X, defined as $\mathrm{Sparseness}(X) = \left( \sqrt{mn} - \sum_{i,j} |X_{i,j}| \big/ \sqrt{\sum_{i,j} X_{i,j}^2} \right) / \left( \sqrt{mn} - 1 \right)$. This measure is 1 when the matrix X has only a single nonzero entry and 0 when all entries are equal. We see that the variational algorithms are superior in this case in terms of SNR as well as the visual quality of the reconstruction. This is perhaps not surprising: with maximum likelihood estimation, if the model order is not carefully chosen, generalisation performance is poor, since the “noise” in the observed data is fitted but the prediction quality drops on new data. An interesting observation is that highly sparse solutions (either in templates or excitations) do not give the best result; the solution that balances both seems to be the best in this setting. This example illustrates that sparseness in itself may not necessarily be a good criterion to optimise; for model selection, the marginal likelihood should be used as the natural quantity.
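A sparseness measure with the two stated properties can be sketched as below. The formula used, (√(mn) − ‖X‖₁/‖X‖₂) / (√(mn) − 1) over all mn entries, is an assumption based on the commonly used ratio of L1 to L2 norms; the function name `sparseness` is illustrative, and the matrix is assumed to have more than one entry and not to be all zeros.

```python
import math


def sparseness(X):
    """Sparseness of a matrix (list of rows) from the ratio of its L1 and L2 norms."""
    entries = [abs(x) for row in X for x in row]
    n = len(entries)
    l1 = sum(entries)
    l2 = math.sqrt(sum(e * e for e in entries))
    return (math.sqrt(n) - l1 / l2) / (math.sqrt(n) - 1.0)


single = sparseness([[0.0, 0.0], [3.0, 0.0]])   # one nonzero entry
flat = sparseness([[2.0, 2.0], [2.0, 2.0]])     # all entries equal
mixed = sparseness([[5.0, 0.1], [0.1, 0.1]])    # one dominant entry
```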

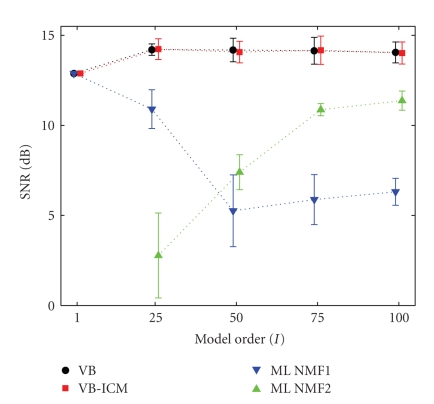

On the same face dataset, we compare the prediction error in terms of the SNR for varying model order I. Our goal is to compare the prediction performance of the full Bayesian approach with the ML-NMF for a range of conditions (under-complete, complete, and overcomplete). The results shown in Figure 7 are averages of several runs with hyperparameter adaptation and different hyperparameter tying. In the simulations, the shape parameters are tied always as a i,τ v = a v (and a ν,i t = a t). The scale parameters are untied or tied as (b τ v, b ν t) (across sources) or b i v, b i t (different for each source) and jointly optimised. Regardless of the hyperparameter tying structure, the results were quite similar. The best SNR values are attained with untied scale parameters for both excitations and templates.

Figure 7.

Average SNR results for model orders I = 1, 25, 50, 75, 100 covering undercomplete, complete, and overcomplete cases. Comparison of VB, VB + ICM, and ML-NMF with two initialisation strategies (1 = random, 2 = to image).

We observe that, due to the implicit self-regularisation in the Bayesian approach, the prediction performance is not very sensitive to the model order and is immune to overfitting. In contrast, the ML-NMF with random initialisation is prone to overfitting, and prediction performance drops with increasing model order. Interestingly, when we initialise the ML-NMF algorithm to true data points with small perturbations, the prediction performance in terms of SNR improves. Note that this strategy would not be possible for data where the pixels were truly missing. However, visual inspection shows that the interpolation can still be “patchy” (see Figure 6).

We observe that hyperparameter adaptation is crucial for obtaining good prediction performance. In our simulations, results for VB without hyperparameter adaptation were occasionally poorer than the ML estimates. Good initialisation of the shape hyperparameters also seems to be important. We obtain best results when initialising the shape hyperparameters asymmetrically, for example, a v < 1 and a t > 10 (see 3rd and 4th panels from left in Figure 5). When the shape hyperparameters are initialised to small a v, a t ≪ 1, the EM seems to get stuck in local minima more often. Consequently, the prediction results are poorer. We have also carried out tests with more undercomplete representations, where the model order is low (I < 10). For these simulations, while the marginal likelihood was in favour of the VB solutions, we have not observed statistically significant differences between VB and ML in terms of SNR. The SNR improvement of VB over ML was on average about 0.1 dB only.

5. Discussion and Conclusions

In this paper, we have investigated KL-NMF from a statistical perspective. We have shown that the KL minimisation formulation of the original algorithm can be derived from a probabilistic model where the observations are a superposition of I independent Poisson-distributed latent sources. Here, the template and excitation matrices turn out to be latent intensity parameters. The interpretation of NMF as a maximum likelihood method in a Poisson model is mentioned in the original NMF paper [1] and discussed in more detail in [5, 10]. Of these, [5] focuses on the optimisation and gives an equivalent description using auxiliary function maximisation. In contrast, [10] illustrates that the auxiliary variables can be viewed as model variables (the sources s) that are analytically integrated out. The relationship between the KL divergence and the Poisson distribution is not just a lucky coincidence: there exists a duality between divergence functions and exponential family distributions. If a cost function is a Bregman divergence, there exists a regular exponential family for which minimising the cost corresponds to maximum likelihood parameter estimation [19]; also see [12] for a discussion in the context of matrix factorisation models.
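The link between the KL divergence and the Poisson likelihood can be made explicit for a single entry. The following is a standard identity written in our notation; it assumes the usual form of the information divergence, D(x‖λ) = x log(x/λ) − x + λ, and uses the fact that a sum of independent Poisson sources with intensities t_{ν,i} v_{i,τ} is Poisson with intensity λ = ∑_i t_{ν,i} v_{i,τ}:

```latex
\begin{align*}
\log \mathcal{PO}(x;\lambda)
  &= x\log\lambda - \lambda - \log\Gamma(x+1) \\
  &= -\Bigl(x\log\frac{x}{\lambda} - x + \lambda\Bigr) + x\log x - x - \log\Gamma(x+1) \\
  &= -D(x\,\|\,\lambda) + \mathrm{const}(x).
\end{align*}
```

Since the terms collected in const(x) do not depend on λ, maximising the Poisson log-likelihood over Λ = TV is equivalent to minimising D(X‖Λ).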

The novel observation in the current article is the exact characterisation of the approximating distribution q(S) or the full conditionals p(S ∣ T, V, X) as a product of multinomial distributions, leading to a richer approximating distribution than a naive mean field or single-site Gibbs sampler (which would freeze due to the deterministic p(X ∣ S)). This conjugate form leads to significant simplifications in full Bayesian integration. Apart from the conditionally Gaussian case, NMF with the KL objective seems to be unique in this respect. For several other distance metrics D(·||·), we find that full Bayesian inference is not as practical, because p(S ∣ T, V, X) is not of a standard form.
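To illustrate the multinomial form of the full conditional, the following minimal sketch draws the sources for a single entry x_{ν,τ}, with cell probabilities proportional to t_{ν,i} v_{i,τ}. The function name `sample_sources` and its arguments are hypothetical and are not taken from the reference implementation; the sampler uses x independent categorical draws, which is simple but not one of the efficient methods compared in [21].

```python
import random


def sample_sources(x, t_row, v_col, rng):
    """Split the observed count x among I latent sources.

    Draws s ~ Multinomial(x; p) with p_i proportional to t_row[i] * v_col[i].
    All names here are illustrative assumptions, not the paper's code.
    """
    weights = [t * v for t, v in zip(t_row, v_col)]
    total = sum(weights)
    probs = [w / total for w in weights]
    counts = [0] * len(probs)
    for _ in range(x):
        u = rng.random()
        acc = 0.0
        chosen = len(probs) - 1  # fallback guards against rounding in the running sum
        for i, p in enumerate(probs):
            acc += p
            if u < acc:
                chosen = i
                break
        counts[chosen] += 1
    return counts


rng = random.Random(0)
# One entry with x = 20 and I = 3 sources; the intensities are made-up numbers.
s = sample_sources(20, [0.5, 2.0, 1.0], [1.0, 1.0, 3.0], rng)
print(s, sum(s))
```

By construction the sampled sources always sum to x, which is why a single-site Gibbs sampler that updates one s_{ν,i,τ} at a time cannot move.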

We have also shown that the standard KL-NMF algorithm with multiplicative update rules is in fact an EM algorithm with data augmentation. Building on this observation, we have developed a hierarchical model with conjugate Gamma priors. We have developed a variational Bayes algorithm and a Gibbs sampler for inference in this hierarchical model. We have also developed methods for estimating the marginal likelihood for model selection. This is an additional feature that is lacking in existing NMF approaches with regularisation, where only MAP estimates are obtained, such as [13, 18, 20].

Our simulations suggest that the variational bound is a reasonable approximation to the marginal likelihood and can guide model selection for NMF. The computational requirements are comparable to those of ML-NMF. A potentially time-consuming step in the implementation of the variational algorithm is the evaluation of the Ψ function, but this step can also be replaced by a simple piecewise polynomial approximation since exp(Ψ(x)) ≈ x − 0.5 for x > 5.
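The approximation quoted above follows from the standard asymptotic expansion of the digamma function. The short derivation below is our own sketch and indicates the size of the error:

```latex
\begin{align*}
\Psi(x) &= \log x - \frac{1}{2x} - \frac{1}{12x^{2}} + O(x^{-4}), \\
\exp(\Psi(x)) &= x\,\exp\!\Bigl(-\frac{1}{2x} - \frac{1}{12x^{2}} + O(x^{-4})\Bigr)
  = x - \frac{1}{2} + \frac{1}{24x} + O(x^{-2}).
\end{align*}
```

For x > 5, the leading correction 1/(24x) is below 0.01, so the error of x − 0.5 is small relative to the value itself.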

We have also compared the variational inference with a Gibbs sampler. In our simulations, we observe that both algorithms give qualitatively very similar results, both for inference of templates and excitations and for model order selection. We find the variational approach somewhat more practical as it can be expressed as simple matrix operations, where both the fixed point equations and the bound can be compactly and efficiently implemented using matrix computation software. In contrast, our Gibbs sampler is computationally more demanding, and the calculation of the marginal likelihood is somewhat more tricky. With our implementation of both algorithms, the variational method is faster by a factor of around 13. Reference implementations of both algorithms in Matlab are available from the following URL: http://www.cmpe.boun.edu.tr/~cemgil/bnmf/.

In terms of computational requirements, the variational procedure has several advantages. First, we circumvent sampling from multinomial variables, which is the main computational bottleneck with the Gibbs sampler. Whilst efficient algorithms have been developed for multinomial sampling [21], the procedure is time consuming when the number of latent sources I is large. In contrast, the variational method estimates the expected sufficient statistics directly by elementary matrix operations. Another advantage is hyperparameter estimation. In principle, it is possible to maximise the marginal likelihood via a Monte Carlo EM procedure [22, 23]; yet this potentially requires many more iterations. In contrast, the evaluation of the derivatives of the lower bound is straightforward and can be implemented without much additional computational cost.

The efficiency of the Gibbs sampler could be improved by working out the distribution of the sufficient statistics of the sources directly (namely, the quantities ∑_τ s_{ν,i,τ} or ∑_ν s_{ν,i,τ}) to circumvent multinomial sampling. Unfortunately, the sum of binomial random variables with different cell probability parameters does not have a distribution of a simple form, but various approximations are possible [24].

Inference based on VB is easy to implement, but the fixed point iteration is ultimately just a gradient-based lower bound optimisation procedure, and second-order Newton methods can provide more efficient alternatives. For NMF models, there exist many conditional independence relations; hence the Hessian matrix has a special block structure [12]. It would certainly be interesting to develop efficient inference methods that make use of this block structure. However, as our primary goal was a practical full Bayesian treatment, we have not investigated this path yet. Another approach in this direction is to use alternative deterministic integration techniques such as expectation propagation (EP) [25]. Those techniques work directly on an approximation of the true marginal likelihood rather than a bound. A related approach known as expectation consistent (EC) inference has been used with success in related source separation problems [26].

From a modelling perspective, our hierarchical model provides some attractive properties. It is easy to incorporate prior knowledge about individual latent sources via hyperparameters, and one can easily capture variability in the templates and excitations that is potentially useful for developing robust techniques. The prior structure here is qualitatively similar to an entropic prior [20, 27], and we find qualitatively similar representations to the ones found by NMF reported earlier by [1, 18]. However, none of the above-mentioned methods provide an estimate of the marginal likelihood, which is useful for model selection. Our generative model formulation can be extended in various ways to suit the specific needs of particular applications. For example, one can enforce more structured prior models such as chains or fields [10]. As a second possibility, the Poisson observation model can be replaced with other models such as clipped Gaussian, Gamma, or Gaussian models, which lead to alternative source separation algorithms. For example, the case of Gaussian sources where the excitations and templates correspond to the variances is discussed in [28].

Our main contribution here is the development of a principled and practical way to estimate both the optimal sparsity criterion and the model order, in terms of the marginal likelihood. By maximising the bound on the marginal likelihood, we have a method where all the hyperparameters can be estimated from data, and the appropriate sparseness criterion is found automatically. We believe that our approach provides a practical improvement to the highly popular KL-NMF algorithm without incurring much additional computational cost.

Acknowledgments

The author would like to thank Nick Whiteley, Tuomas Virtanen, and Paul Peeling for fruitful discussions and for their comments on earlier drafts of this paper. This research is funded by the Engineering and Physical Sciences Research Council (EPSRC) under the grant EP/D03261X/1 and by the Turkish Science, Technology Research Council grant TUBITAK 107E021 and Boğaziçi University Research Fund BAP 09A105P. The research was carried out while the author was with the Signal Processing and Comms. Lab, Department of Engineering, University of Cambridge, UK and the Department of Computer Engineering, Boğaziçi University, Turkey.

Appendix

A. Standard Distributions in Exponential Form, Their Sufficient Statistics and Entropies

- Gamma

| (A.1) |

Here, Ψ denotes the digamma function defined as Ψ(a) ≡ d log Γ(a)/da.

- Poisson

| (A.2) |

- Multinomial

| (A.3) |

Here, s = {s_1, s_2, …, s_I}, p = {p_1, p_2, …, p_I}, and p_1 + p_2 + ⋯ + p_I = 1. Here, p_i, i = 1 ⋯ I, are the cell probabilities, and x is the index parameter where s_1 + s_2 + ⋯ + s_I = x. The entropy is given as follows:

| (A.4) |

A closed form expression for the entropy is not known due to 〈logΓ(s + 1)〉 terms but asymptotic expansions exist [29, 30]. Computationally efficient sampling from a multinomial distribution is not trivial; see [21] for a comparison of various methods and detailed discussion of tradeoffs.

A.1. Summary of the Generative Model

We have the following. Indices:

i = 1 ⋯ I, source index;

ν = 1 ⋯ W, row (frequency bin) index;

τ = 1 ⋯ K, column (time frame) index;

t ν,i: template variable at νth row of the ith source

| (A.5) |

v i,τ: excitation variable of the ith source at τth column

| (A.6) |

s ν,i,τ: source variable of ith source at νth row (frequency bin) and τth column (time frame)

| (A.7) |

x ν,τ: observation at νth row (frequency bin) and τth column (time frame)

| (A.8) |

A.2. Expression of the Full Joint Distribution

Here, ϕ ≡ p(X, S, T, V ∣ Θ) = p(X ∣ S)p(S ∣ T, V)p(T ∣ Θt)p(V ∣ Θv),

| (A.9) |

A.3. The Variational Bound

The variational bound in (27) can be written as

| (A.10) |

where the energy term is given by the expectation of the expression in Appendix A.2, and H[q] denotes the entropy of the variational approximation distribution q where the individual entropies are defined in Appendix A:

| (A.11) |

One potential problem is that this expression requires the entropy of a multinomial distribution, for which there is no known simple expression. This is due to terms of the form 〈logΓ(s + 1)〉, for which only asymptotic expansions are known. Fortunately, the difficult terms in the energy term are cancelled by the corresponding terms in the entropy term, and one obtains the following expression that only depends on known sufficient statistics:

| (A.12) |

After some careful manipulations, the following expression is obtained, where log L here denotes the elementwise logarithm of the matrix L:

| (A.13) |

A.4. Handling Missing Data and MAP Estimation

When there is missing data, that is, when some of the x_{ν,τ} are not observed, computation is still straightforward in our framework and can be accomplished by a simple modification to the original algorithm. We first define a mask matrix M = {m_{ν,τ}}, of the same size as X, where

| (A.14) |

Using the mask variables, the observation model with missing data can be written as follows:

| (A.15) |

The prior is not affected. Hence, we merely replace the first two lines of the expression for the full joint distribution (given in Appendix A.2) as follows:

| (A.16) |

Consequently, it is easy to see that

| (A.17) |

By a derivation analogous to the one detailed in Section 3.3, we see that the excitation update equations in Algorithm 1, line 4, can be written using matrix notation as follows:

| (A.18) |

The update rules for the templates are similar. Note that when there is no missing data, we have M = 1_W 1_K^⊤ (the W × K matrix of all ones), which gives the original algorithm. The bound in (A.13) can also be easily modified for handling missing data: we merely replace X ← M .∗ X and, in the first term, E_t E_v ← M .∗ (E_t E_v).

We conclude this subsection by noting that the standard NMF update equations, given in (20), can also be rewritten to handle missing data as follows:

| (A.19) |

Here, the denominator has to be nonzero. Similarly, an iterative conditional modes (ICM) algorithm can be derived to compute the maximum a posteriori (MAP) solution as follows:

| (A.20) |

Note that when the shape parameters go to zero, that is, A_t, A_v → 0, we obtain the maximum likelihood NMF algorithm.
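The missing-data form of the standard multiplicative updates can be sketched as follows. This is a minimal illustration in plain Python, assuming the commonly used masked form T ← T .∗ ((M .∗ X ./ (TV)) V^⊤) ./ (M V^⊤) and V ← V .∗ (T^⊤ (M .∗ X ./ (TV))) ./ (T^⊤ M), which we take to correspond to (A.19). The helper names (`masked_nmf`, `masked_kl`, `ratio`) and the toy data are hypothetical.

```python
import math
import random


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def ratio(X, M, L):
    """Elementwise M .* X ./ L; masked entries contribute zero."""
    return [[m * x / lam for x, m, lam in zip(xr, mr, lr)]
            for xr, mr, lr in zip(X, M, L)]


def masked_kl(X, M, L):
    """Information divergence summed over the observed entries only."""
    d = 0.0
    for xr, mr, lr in zip(X, M, L):
        for x, m, lam in zip(xr, mr, lr):
            if m:
                d += lam - x + (x * math.log(x / lam) if x > 0 else 0.0)
    return d


def masked_nmf(X, M, I, n_iter=50, seed=0):
    rng = random.Random(seed)
    W, K = len(X), len(X[0])
    T = [[rng.uniform(0.5, 1.5) for _ in range(I)] for _ in range(W)]
    V = [[rng.uniform(0.5, 1.5) for _ in range(K)] for _ in range(I)]
    history = []
    for _ in range(n_iter):
        # Template update; the denominator M V' must be nonzero.
        R = ratio(X, M, matmul(T, V))
        num, den = matmul(R, transpose(V)), matmul(M, transpose(V))
        T = [[t * n / d for t, n, d in zip(tr, nr, dr)]
             for tr, nr, dr in zip(T, num, den)]
        # Excitation update; the denominator T' M must be nonzero.
        R = ratio(X, M, matmul(T, V))
        num, den = matmul(transpose(T), R), matmul(transpose(T), M)
        V = [[v * n / d for v, n, d in zip(vr, nr, dr)]
             for vr, nr, dr in zip(V, num, den)]
        history.append(masked_kl(X, M, matmul(T, V)))
    return T, V, history


# Toy 4 x 5 data; entries with mask 0 are treated as missing and ignored.
X = [[4, 2, 7, 1, 3], [8, 4, 0, 2, 6], [2, 1, 3, 0, 1], [6, 3, 9, 2, 5]]
M = [[1, 1, 1, 1, 1], [1, 1, 0, 1, 1], [1, 1, 1, 0, 1], [1, 1, 1, 1, 1]]
T, V, history = masked_nmf(X, M, I=2)
print(history[0], history[-1])
```

In this sketch, every row and column of M contains at least one observed entry, so the denominators stay strictly positive; an implementation for general masks would need to guard against empty rows or columns.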

A.5. Hyperparameter Optimisation

The hyperparameters Θ = (Θt, Θv) can be estimated by maximising the bound in (A.13). Below, we will derive the results for the excitations; the results for templates are similar. The solution for shape parameters involves finding the zero of a function f(a) − c, where

| (A.21) |

The solution can be found by Newton's method by iteration of the following fixed point equation:

| (A.22) |

It is well known that Newton iterations can diverge if started away from the root. Occasionally, we observe that a can become negative. If this is the case, we set Δ(n) ← Δ(n)/2 and try again. The digamma function Ψ and its derivative Ψ′ are available in numeric computation libraries (e.g., in Matlab as psi(x) and psi(1, x), resp.).
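The safeguarded Newton iteration described above can be sketched as follows. Since the exact expressions are not reproduced here, the form f(a) = log a − Ψ(a) + 1 used below is an assumption (it is what one obtains for a Gamma density with shape a and scale b/a), and the function names are hypothetical. The digamma and trigamma functions are computed with a recurrence and the standard asymptotic series so that the example needs no external library.

```python
import math


def digamma(x):
    """Psi(x) for x > 0: shift x upwards, then use the asymptotic series."""
    acc = 0.0
    while x < 6.0:
        acc -= 1.0 / x
        x += 1.0
    y = 1.0 / (x * x)
    series = y * (1.0 / 12 - y * (1.0 / 120 - y * (1.0 / 252 - y * (1.0 / 240))))
    return acc + math.log(x) - 0.5 / x - series


def trigamma(x):
    """Psi'(x) for x > 0, by the same shift-and-expand strategy."""
    acc = 0.0
    while x < 6.0:
        acc += 1.0 / (x * x)
        x += 1.0
    y = 1.0 / (x * x)
    series = (y / x) * (1.0 / 6 - y * (1.0 / 30 - y * (1.0 / 42 - y * (1.0 / 30))))
    return acc + 1.0 / x + 0.5 * y + series


def f(a):
    # Assumed form of the function in (A.21); not taken verbatim from the text.
    return math.log(a) - digamma(a) + 1.0


def f_prime(a):
    return 1.0 / a - trigamma(a)


def solve_shape(c, a0, n_iter=50, tol=1e-10):
    """Find a > 0 with f(a) = c by Newton's method with step halving."""
    a = a0
    for _ in range(n_iter):
        delta = (f(a) - c) / f_prime(a)
        # If the full step would make a non-positive, halve it and try again.
        while a - delta <= 0.0:
            delta /= 2.0
        a -= delta
        if abs(delta) < tol * a:
            break
    return a


# Illustrative check: choose c so that the root is known to be a = 2.5.
c = f(2.5)
a_from_left = solve_shape(c, a0=1.0)
a_from_right = solve_shape(c, a0=10.0)  # the first full step would overshoot below zero
print(a_from_left, a_from_right)
```

Starting to the right of the root, the first Newton step is far too long and is halved until a stays positive; afterwards the iterates approach the root from the left.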

The derivation of the hyperparameter update equations is straightforward:

| (A.23) |

Tying parameters across τ as a^v_i = a^v_{i,τ} and b^v_i = b^v_{i,τ} yields

| (A.24) |

Tying parameters across τ and i as a^v = a^v_{i,τ} and b^v = b^v_{i,τ} yields

| (A.25) |

The derivation of the template parameters is exactly analogous. We can express the update equations once again in compact matrix notation as follows:

| (A.26) |

Here, the root-finding operation in (A.26) is assumed to be a matrix-valued function that solves C_{i,j} = f(A_{i,j}) for each element of A, starting from the initial matrix A_0. If C is a scalar or vector, it is repeated over the missing index to implement parameter tying. For example, if C is an I × 1 vector and A_0 is I × K, we assume C_i = c_{i,τ} for all τ = 1 ⋯ K, and the output is the same size as A_0. This is only a notational convenience; an actual implementation can be achieved more efficiently. Again, the implementation for the template parameters is exactly analogous; merely replace the subscripts above as v ← t, (i, τ) ← (ν, i), and (I, K) ← (W, I).

References

- 1. Lee DD, Seung HS. Learning the parts of objects with nonnegative matrix factorization. Nature. 1999;401:788–791. doi: 10.1038/44565.

- 2. Paatero P, Tapper U. Positive matrix factorization: a non-negative factor model with optimal utilization of error estimates of data values. Environmetrics. 1994;5(2):111–126.

- 3. Bishop CM. Pattern Recognition and Machine Learning. New York, NY, USA: Springer; 2006.

- 4. Virtanen T. Sound source separation in monaural music signals. Ph.D. thesis. Tampere, Finland: Tampere University of Technology; November 2006.

- 5. Kameoka H. Statistical approach to multipitch analysis. Ph.D. thesis. Tokyo, Japan: University of Tokyo; 2007.

- 6. Cichocki A, Mørup M, Smaragdis P, Wang W, Zdunek R. Advances in nonnegative matrix and tensor factorization. Computational Intelligence and Neuroscience. 2008;2008:3 pages. doi: 10.1155/2008/852187. Article ID 852187.

- 7. Kingman JFC. Poisson Processes. Oxford, UK: Oxford Science; 1993.

- 8. Lee DD, Seung HS. Algorithms for non-negative matrix factorization. In: Proceedings of the Conference on Advances in Neural Information Processing Systems (NIPS '00), vol. 13; October-November 2000; Denver, Colo, USA. pp. 556–562.

- 9. Le Roux J, Kameoka H, Ono N, Sagayama S. On the relation between divergence-based minimization and maximum-likelihood estimation for the i-divergence. Personal Communication, 2008.

- 10. Virtanen T, Cemgil AT, Godsill S. Bayesian extensions to non-negative matrix factorisation for audio signal modelling. In: Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP '08); March-April 2008; Las Vegas, Nev, USA. pp. 1825–1828.

- 11. Gaussier E, Goutte C. Relation between PLSA and NMF and implication. In: Proceedings of the 28th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval; August 2005; Salvador, Brazil. pp. 601–602.

- 12. Singh AP, Gordon GJ. A unified view of matrix factorization models. In: Proceedings of the European Conference on Machine Learning and Knowledge Discovery in Databases (ECML PKDD '08), vol. 5212; September 2008; Antwerp, Belgium. Springer; pp. 358–373.

- 13. Schmidt MN, Laurberg H. Nonnegative matrix factorization with Gaussian process priors. Computational Intelligence and Neuroscience. 2008;2008:10 pages. doi: 10.1155/2008/361705. Article ID 361705.

- 14. Ghahramani Z, Beal M. Propagation algorithms for variational Bayesian learning. In: Advances in Neural Information Processing Systems, vol. 13. Cambridge, Mass, USA: MIT Press; 2000. pp. 507–513.

- 15. Gilks WR, Richardson S, Spiegelhalter DJ, editors. Markov Chain Monte Carlo in Practice. London, UK: CRC Press; 1996.

- 16. Liu JS. Monte Carlo Strategies in Scientific Computing. New York, NY, USA: Springer; 2004.

- 17. Chib S. Marginal likelihood from the Gibbs output. Journal of the American Statistical Association. 1995;90(432):1313–1321.

- 18. Hoyer PO. Non-negative matrix factorization with sparseness constraints. The Journal of Machine Learning Research. 2004;5:1457–1469.

- 19. Banerjee A, Merugu S, Dhillon IS, Ghosh J. Clustering with Bregman divergences. Journal of Machine Learning Research. 2005;6:1705–1749.

- 20. Shashanka MV, Raj B, Smaragdis P. Sparse overcomplete latent variable decomposition of counts data. In: Proceedings of the Conference on Advances in Neural Information Processing Systems (NIPS '07); December 2007; Vancouver, Canada.

- 21. Davis CS. The computer generation of multinomial random variates. Computational Statistics and Data Analysis. 1993;16(2):205–217.

- 22. Tanner MA. Tools for Statistical Inference: Methods for the Exploration of Posterior Distributions and Likelihood Functions. 3rd edition. New York, NY, USA: Springer; 1996.

- 23. Quintana FA, Liu JS, del Pino GE. Monte Carlo EM with importance reweighting and its applications in random effects models. Computational Statistics and Data Analysis. 1999;29(4):429–444.

- 24. Butler K, Stephens M. The distribution of a sum of binomial random variables. Tech. Rep. 467, prepared for the Office of Naval Research. Stanford, Calif, USA: Stanford University; April 1993.

- 25. Minka T. Expectation propagation for approximate Bayesian inference. Ph.D. thesis. Cambridge, Mass, USA: MIT Media Lab; 2001.

- 26. Winther O, Petersen KB. Bayesian independent component analysis: variational methods and non-negative decompositions. Digital Signal Processing. 2007;17(5):858–872.

- 27. Buntine W. Variational extensions to EM and multinomial PCA. In: Proceedings of the 13th European Conference on Machine Learning (ECML '02), vol. 2430; August 2002; Helsinki, Finland. Springer; pp. 23–34. Lecture Notes in Computer Science.

- 28. Cemgil AT, Peeling P, Dikmen O, Godsill S. Prior structures for time-frequency energy distributions. In: Proceedings of IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA '07); October 2007; New Paltz, NY, USA. pp. 151–154.

- 29. Flajolet P. Singularity analysis and asymptotics of Bernoulli sums. Theoretical Computer Science. 1999;215(1-2):371–381.

- 30. Jacquet P, Szpankowski W. Entropy computations via analytic depoissonization. IEEE Transactions on Information Theory. 1999;45(4):1072–1081.