Abstract

Studies of written and spoken language suggest that nonidentical brain networks support semantic and syntactic processing. Event-related brain potential (ERP) studies of spoken and written languages show that semantic anomalies elicit a posterior bilateral N400, whereas syntactic anomalies elicit a left anterior negativity, followed by a broadly distributed late positivity. The present study assessed whether these ERP indicators index the activity of language systems specific for the processing of aural-oral language or if they index neural systems underlying any natural language, including sign language. The syntax of a signed language is mediated through space. Thus the question arises of whether the comprehension of a signed language requires neural systems specific for this kind of code. Deaf native users of American Sign Language (ASL) were presented signed sentences that were either correct or that contained either a semantic or a syntactic error (1 of 2 types of verb agreement errors). ASL sentences were presented at the natural rate of signing, while the electroencephalogram was recorded. As predicted on the basis of earlier studies, an N400 was elicited by semantic violations. In addition, signed syntactic violations elicited an early frontal negativity and a later posterior positivity. Crucially, the distribution of the anterior negativity varied as a function of the type of syntactic violation, suggesting a unique involvement of spatial processing in signed syntax. Together, these findings suggest that biological constraints and experience shape the development of neural systems important for language.

Keywords: electrophysiology, sign language, syntax

Signed languages, such as American Sign Language (ASL), are fully developed, natural languages containing all of the linguistic components of spoken languages, but they are conveyed and perceived in a completely different form than those used for aural-oral languages. (Aural-oral is used here to refer to both written and spoken language forms.) Thus, investigations of signed language processing provide a unique opportunity for determining the neural substrates of natural human language irrespective of language form. The present study used event-related brain potentials (ERPs) to examine semantic and syntactic processing of ASL sentences in deaf native signers of ASL and compared these findings to those from previous studies of aural-oral language.

Lesion and neuroimaging studies suggest that remarkably similar neural systems underlie signed and spoken language comprehension and production. The studies illustrate the importance of a left frontotemporal network for language processing irrespective of the modality through which language is perceived. In particular, neuroimaging studies of sentence processing using written (e.g., refs. 1 and 2), spoken (e.g., refs. 3–5), and audiovisual (6, 7) stimuli have shown reliable left hemisphere-dominant activation in regions such as the inferior frontal gyrus and the posterior superior temporal cortex in hearing users of spoken language. [Recent studies have shown that sentence processing relies on a widely distributed brain network extending beyond perisylvian areas. For example, behavioral deficits in sentence comprehension can also follow damage to a number of left hemisphere areas, including the anterior superior temporal gyrus, posterior middle temporal gyrus, and middle frontal cortex (8). In addition, neuroimaging studies of sentence processing often show activation in regions within and beyond the perisylvian cortex (e.g., ref. 9).] Similarly, studies of signed sentence processing in deaf and hearing native signers have shown significant activation of the inferior frontal and superior temporal cortices of the left hemisphere (7, 10–13). These neuroimaging studies of signers are largely consistent with neuropsychological evidence from deaf patients who show evidence of frank linguistic deficits following left, but not right, hemisphere damage (14–16).

More controversial is the role of the right hemisphere in linguistic processing of signed languages. Though neuroimaging studies (e.g., refs. 10–12, 17, and 18) have shown that signed language processing also recruits regions within the right hemisphere, studies of right hemisphere-damaged signers often report intact core-linguistic abilities (16). However, Poizner et al. (16) reported 2 subjects with right hemisphere damage who were shown to have impaired performance on a syntax comprehension measure that required processing of spatialized components of ASL grammar. The factors important in the right hemisphere involvement in signed language processing have not been systematically investigated because previous studies examining the neural organization of signed sentences did not separately assess different types of linguistic processing. The present study examines the brain processes associated with semantics and syntax in a signed language.

One hypothesis is that the role of the right hemisphere in signed language processing is specifically to enable the use of space in conveying signed language grammar. In particular, whereas aural-oral language utilizes word order and/or inflectional morphology such as case markers to distinguish grammatical referents (e.g., subjects and objects), ASL can use spatial loci, the direction of motion, and hand orientation to indicate these grammatical roles (19), processes that typically engage the right hemisphere in nonlinguistic processing (e.g., ref. 20).

It is well established that semantic and syntactic processes rely on nonidentical neural systems. ERP evidence from studies of aural-oral languages shows that violations to semantic/pragmatic expectancy produce a negative potential peaking around 400 msec after the violation onset (N400) that is largest over centroparietal areas (21). Though virtually every word elicits this ERP component, words rich in meaning, so-called open-class words (e.g., nouns, verbs, and adjectives), elicit a larger N400 than closed-class words, which convey the relationship between objects and events (e.g., conjunctions, auxiliaries, and articles) (22). Furthermore, the amplitude of the N400 decreases linearly across word position in semantically congruent sentences (23), and it increases as the cloze probability of a word in sentence context decreases (24), suggesting that weak semantic context makes it difficult to constrain the meaning of incoming words. These findings suggest that the N400 is not a simple incongruity response, but indexes ongoing semantic processing (25). In contrast, syntactic violations typically elicit an anterior negativity largest over the left hemisphere (left anterior negativity, or LAN) (26) between 300 and 500 msec, although sometimes it begins as early as 100 msec (early LAN, or ELAN) (27). The (E)LAN is followed by a broadly distributed posterior positivity (P600 or syntactic positive shift [SPS]) (28). The (E)LAN and P600 components are elicited by a variety of syntactic violations, including anomalous phrase structure (29), subjacency (26), and gender agreement (30). The (E)LAN is believed to index the disruption of early syntactic processes, and Friederici (27) proposed that the (E)LAN and LAN may be decomposed into 2 functionally distinct components: the ELAN indexing early, automatic syntactic parsing, and the LAN reflecting morphosyntactic processing.
Other researchers have shown that the LAN may index parsing-related working memory processes (e.g., refs. 31 and 32). Magnetoencephalographic (MEG) studies suggest that the (E)LAN may be generated by left-dominant perisylvian regions; in particular, the pars triangularis (Brodmann's area [BA] 45) of the inferior frontal gyrus (33, 34) and the anterior superior temporal cortex (BA 22) (33). The P600 may reflect syntactic reanalyses and integration (35) and can be influenced by semantic content (36). Thus, in contrast to the LAN, this later positivity may not be a purely syntactic effect.

Previous ERP studies of sign language processing report findings consistent with studies of aural-oral language processing. Deaf and hearing native signers of ASL display an N400 both to open (vs. closed)-class signs that is largest at posterior medial sites and to semantic violations in ASL sentences (37). In a direct comparison of semantic processing in written, spoken, and signed languages, Kutas et al. (38) argued that semantic integration, as measured by the N400, is equivalent across modalities. In addition, Neville et al. (37) found that signs conveying primarily syntactic functions (i.e., closed-class signs) elicited a bilateral anterior negativity in deaf native signers.

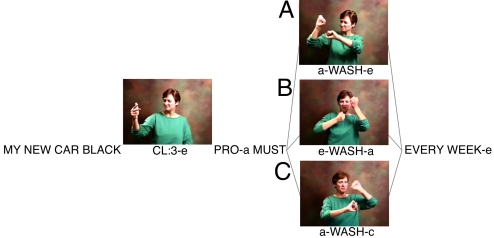

The present study investigates the electrophysiological responses to syntactic incongruities in signed language, in addition to semantic anomalies. Moreover, unlike previous ERP studies of sign processing (37, 38), the present study employs sentences presented at the natural rate of signing. Deaf native signers of American Sign Language viewed ASL sentences. Some sentences were well formed and semantically coherent, whereas others contained a semantic violation, or 1 of 2 different forms of syntactic (verb agreement) violations: a “reversed” verb agreement violation and an “unspecified” verb agreement violation (see supporting information (SI) Appendix for stimulus glosses and English translations). In signed languages such as ASL, agreement between the verb and object (indicating the subject and object of the verb) can be achieved using the space in front of the signer. First, referents are established at particular locations in this “signing space” (e.g., by signing CAR and then “placing” the car to the right of the signer), and subsequently the verb is articulated with a movement from the location of the subject to the location of the object (e.g., “I wash the car” would involve movement of the verb WASH from the signer's body toward the location on the right where the car had been established). As in spoken languages, in signed languages, verb agreement is considered a syntactic phenomenon (but see ref. 39); however, the spatial encoding of grammatical relations is a unique feature of signed languages (19, 40). Reversed verb agreement violations were formed by reversing the direction of the verb such that the verb moved toward the subject instead of the object. Unspecified verb agreement violations were formed by directing the verb toward a location in space that had not been defined previously as the subject or object (see Fig. 1).

Fig. 1.

Images from videos illustrating a verb agreement sentence set. Left insert displays the classifier for vehicle (CL:3); right insert displays the direction of the ASL sign WASH; notations in lowercase index the location of the referents in space (a, signer; c, left of the signer; e, right of the signer). In the correct sentence (A), the sign WASH moves from the subject (the signer) toward the object (the car, placed to the right of the signer); in the reversed verb agreement violation (B), WASH moves from the object CAR toward the subject (the signer); in the unspecified verb agreement violation (C), WASH moves from the subject toward the left of the signer, where no referent has been established.

If the neural systems mediating language are independent of the form of a language, ERPs to ASL sentences were expected to be similar to those reported in previous studies of written and spoken language. Specifically, we predicted that semantic violations would elicit an N400 that was largest over centroparietal brain areas, and syntactic violations would elicit an early negativity anteriorly followed by a broadly distributed P600 in deaf native signers. In addition, based on the findings suggesting a greater role for the right hemisphere in processing ASL as compared with aural-oral language (10) and the hypothesis that this difference may be due to the manner in which ASL mediates syntactic functions conveyed in space, we predicted that the index of early, syntactic processing, the anterior negativity, would be less left-lateralized as compared with previous studies of aural-oral language.

Results

Behavior.

Participants correctly judged 80% of the semantically appropriate sentences and 91% of semantically anomalous sentences; for the syntactic sentences, they correctly judged 88% of canonical verb agreement sentences, and 88% and 87% of sentences containing reversed and unspecified verb agreement violations, respectively.

Event-Related Brain Potentials.

Repeated-measures analyses of variance (ANOVAs) were conducted separately for the semantic and syntactic sentence types, with 4 within-participants factors: condition (C, 2 levels: anomalous, canonical), hemisphere (H, 2 levels: left, right), anterior-posterior (A/P, 3 levels: frontal, frontotemporal, temporal for the anterior negativities; 6 levels: frontal, frontotemporal, temporal, central, parietal, occipital for all other effects), and lateral-medial (L/M, 2 levels: lateral, medial). The dependent measure was mean amplitude at each electrode site. The time windows for the ERP effects were defined as the window between the first and last of 3 consecutive significant ANOVAs on short latency bins (see Methods for details). These analyses resulted in the following time windows poststimulus onset: 300–875 msec for the N400; 140–200 msec and 200–360 msec for the anterior negativity for the reversed and unspecified verb agreement violations, respectively; and 475–1,200 msec and 425–1,200 msec for the P600 for the reversed and unspecified verb agreement violations, respectively.
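The window-definition rule described above can be illustrated with a minimal sketch. It follows one possible reading of the rule: keep every run of at least 3 consecutive significant latency bins, then report the span from the start of the first kept bin to the end of the last. The function name `significant_window`, the 25-msec bin width, the 0.05 threshold, and the P values are all hypothetical choices made for illustration and are not taken from the study.

```python
from typing import List, Optional, Tuple


def significant_window(
    bin_starts_ms: List[int],
    bin_width_ms: int,
    p_values: List[float],
    alpha: float = 0.05,   # assumed significance threshold
    min_run: int = 3,      # "3 consecutive significant ANOVAs"
) -> Optional[Tuple[int, int]]:
    """Return (onset, offset) in msec spanning the first through last bin
    that belongs to a run of at least `min_run` consecutive significant bins.
    Returns None if no such run exists."""
    significant = [p < alpha for p in p_values]
    kept = [False] * len(significant)
    run_start = None
    # A trailing False acts as a sentinel that closes a run ending on the last bin.
    for i, is_sig in enumerate(significant + [False]):
        if is_sig and run_start is None:
            run_start = i
        elif not is_sig and run_start is not None:
            if i - run_start >= min_run:
                for j in range(run_start, i):
                    kept[j] = True
            run_start = None
    indices = [i for i, flag in enumerate(kept) if flag]
    if not indices:
        return None
    return bin_starts_ms[indices[0]], bin_starts_ms[indices[-1]] + bin_width_ms


# Hypothetical P values for 25-msec bins starting at 100 msec.
starts = [100, 125, 150, 175, 200, 225, 250, 275]
pvals = [0.40, 0.03, 0.20, 0.01, 0.02, 0.04, 0.30, 0.04]
print(significant_window(starts, 25, pvals))  # (175, 250)
```

Isolated significant bins (here at 125 and 275 msec) are ignored under this reading, which is the point of requiring consecutive bins: it guards against single spurious tests defining the edge of a window.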

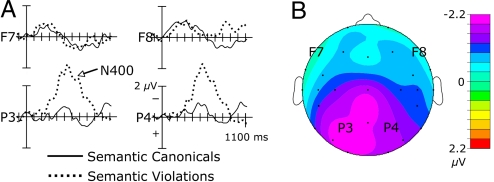

Semantically anomalous sentences elicited an N400 (300–875 msec) relative to control sentences [C F(1, 14) = 11.12, P = 0.005] (see Fig. 2). This response was largest over posterior [C × A/P F(5, 70) = 5.85, P = 0.014] and medial [C × L/M F(1, 14) = 4.73, P = 0.047] sites. Analyses over the 2 medial rows revealed a main effect of condition [C F(1, 14) = 11.51, P = 0.004] and a condition by anterior/posterior interaction [C × A/P F(5, 70) = 4.43, P = 0.025] confirming that, over medial sites, the N400 was largest over posterior sites [C F(1, 14) = 18.48, P = 0.001]. A main effect of condition was observed over the posterior 3 rows [C F(1, 14) = 20.65, P < 0.001, all interactions P > 0.05], suggesting that the N400 was broadly distributed over posterior regions. In addition, semantic violations elicited a late negativity (800–1,200 msec) over right lateral frontal and frontotemporal sites [C F(1, 14) = 4.894, P = 0.044].

Fig. 2.

Grand average and voltage map for semantic violation vs. canonical sentences. (A) Grand mean event-related potentials for the semantically anomalous and canonical sentence conditions are displayed. F7 and F8 are frontal, lateral electrode sites of the left and right hemisphere, respectively; P3 and P4 correspond to parietal sites of the left and right hemisphere, respectively. (B) Voltage map plotted for 300–875 msec.

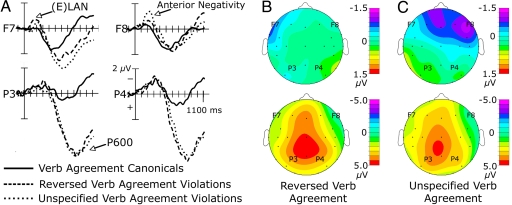

Reversed verb agreement violations elicited an early anterior negativity (140–200 msec) [C × H × L/M F(1, 14) = 6.76, P = 0.021] that was largest over the lateral sites of the left hemisphere [C F(1, 14) = 4.95, P = 0.043; left medial, right medial, and right lateral P values >0.05]. In addition, this violation elicited a widely distributed P600 (475–1,200 msec) [C F(1, 14) = 60.82, P < 0.001]. This response was largest over posterior medial sites [C × A/P × L/M F(5, 70) = 11.4, P = 0.001], particularly at parietal sites [C × A/P F(1, 14) = 7.63, P < 0.015]. Similarly, at anterior rows, the P600 was larger over medial than lateral sites [C × L/M F(1, 14) = 28.25, P < 0.001], especially over frontal sites [C × A/P × L/M F(2, 28) = 24.91, P < 0.001]. At anterior rows, a condition × hemisphere × lateral/medial interaction [C × H × L/M F(1, 14) = 7.52, P = 0.016] revealed that the P600 component was larger over the left hemisphere (see Fig. 3 A and B).

Fig. 3.

Grand average and voltage maps for verb agreement canonical vs. reversed and unspecified verb agreement violations. (A) Grand mean event-related potentials for the verb agreement violations (reversed and unspecified) along with the canonical sentences are shown. All other conventions are as in Fig. 2A. (B) Voltage maps plotted for the anterior negativity (140–200 msec) (Top) and P600 (475–1,200 msec) (Bottom) for the reversed verb agreement condition. (C) Voltage maps plotted for the anterior negativity (200–360 msec) (Top) and P600 (425–1,200 msec) (Bottom) for the unspecified verb agreement condition.

Unspecified verb agreement violations, relative to canonical sentences, elicited an anterior negativity (200–360 msec) followed by a P600 (425–1,200 msec). The anterior negativity was largest over frontal and frontotemporal sites [C × A/P F(2, 28) = 10.99, P = 0.001]; an interaction [C × H × A/P × L/M F(2, 28) = 3.52, P = 0.045] showed that the anterior negativity was largest over the right lateral frontal site [C F(1, 14) = 7.67, P = 0.015]. As with the reversed verb agreement violations, sentences containing unspecified verb agreement anomalies elicited a broadly distributed P600 [C F(1, 14) = 30.23, P < 0.001] that was larger over the left than the right hemisphere [C × H F(1, 14) = 21.55, P < 0.001] and over posterior sites [C × A/P F(5, 70) = 4.32, P = 0.036]. At posterior sites, a condition × anterior/posterior × lateral/medial interaction revealed that the P600 component was largest over medial central and parietal sites [C F(1, 14) = 31.91, P < 0.001] (see Fig. 3 A and C).
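As a reading aid for the F statistics reported in this section, the sketch below computes the degrees of freedom expected for a fully within-participants effect with 15 participants. It assumes complete data and uncorrected degrees of freedom; the helper name `rm_anova_df` is hypothetical and is not part of the original analysis.

```python
from math import prod
from typing import List, Tuple


def rm_anova_df(n_participants: int, factor_levels: List[int]) -> Tuple[int, int]:
    """Degrees of freedom for a within-participants main effect or interaction.

    numerator   = product of (levels - 1) over the factors in the effect
    denominator = numerator * (n_participants - 1)
    Assumes complete data and no sphericity correction of the df."""
    numerator = prod(levels - 1 for levels in factor_levels)
    return numerator, numerator * (n_participants - 1)


N = 15  # participants in the present study

print(rm_anova_df(N, [2]))           # condition main effect          -> (1, 14)
print(rm_anova_df(N, [2, 6]))        # C x A/P over all 6 rows        -> (5, 70)
print(rm_anova_df(N, [2, 3]))        # C x A/P over 3 anterior rows   -> (2, 28)
print(rm_anova_df(N, [2, 2, 3, 2]))  # C x H x A/P x L/M, anterior    -> (2, 28)
```

Because condition, hemisphere, and lateral-medial each have 2 levels, only the number of anterior-posterior rows entering an analysis changes the degrees of freedom.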

Discussion

Consistent with a wealth of research on aural-oral language and earlier studies of ASL, the present study showed that semantic processing in ASL elicited an ERP response different in timing and distribution than the pattern produced by processing ASL grammar. Specifically, signed semantic violations elicited an N400 that was largest over central and posterior sites, whereas syntactic violations elicited an anterior negativity followed by a widely distributed P600. These findings are consistent with the idea that, within written, spoken, and signed language processing, semantic and syntactic processes are mediated by nonidentical brain systems.

Comparing the findings from studies of written, spoken, and signed sentence processing can help separate the neural responses related to core language processes from others that may depend upon a particular modality. Moreover, because sign language shares features with written language (e.g., both are visual) and spoken language (e.g., both are learned naturally from the parents, beginning at birth), studies examining the neural processing of sign language can provide insight into the nature of the differences between written and spoken language.

Semantic Processing.

The N400 effect for ASL semantic processing was largest over posterior sites, and the lack of a condition by hemisphere interaction over posterior sites indicates that this effect was bilaterally distributed. This distribution is consistent with previous studies of auditory (e.g., ref. 41) and written (e.g., ref. 26) sentence processing. Thus, the similarity in the distribution of the N400 for signed semantic processing, found in the present study, and spoken and written semantic processing, found in previous studies, may reflect some aspect of semantic processing, irrespective of language modality. That is, the brain systems supporting the associations between arbitrary symbols (written words and signs received visually or spoken words received aurally) and their semantic representations, as well as the higher-level semantic relations between sentence constituents, may be language universal.

In contrast, the timing of the onset of the N400 in the current study is similar to that reported in previous studies of semantic processing using written stimuli. Studies of natural speech report an earlier-onset negativity in response to semantic violations than that typically found for reading (41). The earlier onset of the effect found for spoken compared with written semantic violations may index 2 distinct components: an incongruity between the sounds of the expected and actual speech stream (i.e., the phonological mismatch negativity, or PMN) followed by an N400 (42). However, in the present study, there was no evidence of a PMN, suggesting that this component may be specific to the auditory modality.

Several possible explanations for this difference may be raised. There are clear timing differences in the execution of sign versus speech. A monosyllabic ASL sign is approximately twice the duration of a monosyllabic English word (43, 44). In addition, in connected discourse, the transition times between 2 sequential words in a sentence and 2 sequential signs will differ as a result of the greater spatial displacement that occurs in sign articulation compared with speech articulation. The time course of lexical access for signs in a sentential context is largely unexplored. In addition, though phonetic coarticulatory cues have been observed to affect spoken language comprehension (45, 46), the role of coarticulation in aiding sign recognition has not been established.

These factors may underlie the observation that the onset of the N400 elicited by signed semantic anomalies was later than that typically reported for spoken language. Instead, the onset time for the N400 was similar to that typically reported in studies of written language; both begin ≈300 msec poststimulus onset (23).

The N400 elicited by signed semantic anomalies is also similar to that reported for nonsign gestures that accompany speech. In nonsigners, viewing manual gestures that suggest the less-preferred meaning of spoken homonyms given the sentence context elicits an N400 (47), as do manual gestures that are semantically incongruous with word meaning (48) or sentence context (49). Together, these findings suggest that the brain systems supporting semantic processing are remarkably similar despite differences in modality and stimulus form.

Syntactic Processing.

Both verb agreement violations elicited an anterior negativity followed by a broadly distributed P600. The distribution of the P600 effects for processing ASL syntactic violations is similar to that reported in studies of written and spoken language processing. Reversed and unspecified signed verb agreement violations both elicited a P600 that was larger over medial and centroparietal sites, suggesting that the underlying cognitive operations that give rise to late syntactic processes, as with those that give rise to semantic processes, may be relatively independent of the modality through which the language is perceived.

The reversed verb agreement violations could have been interpreted as violations to semantic knowledge. To illustrate, the ASL sentence “*CAT a-SEE-c MOUSE CL:1 SCURRY-c CAT c-CHASE-a LUCKY PRO-c ESCAPE-c” translates into the semantically/pragmatically and syntactically anomalous expression “The cat sees the mouse scurry off. *The cat [mouse chases the cat], luckily, the mouse escaped.” In this example, chase is a directional verb; that is, the sign and its corresponding movement simultaneously convey the lexical item and index the subject and object of the verb. In the present study, the context may highlight the incongruity with semantic/pragmatic expectancy; however, the ERP effects are time locked to the presentation of the verb, whose syntactic agreement features render the sentence anomalous. In addition, gender and number agreement violations used in previous studies of aural-oral language processing could also be interpreted as semantically anomalous (36). However, the fact that a LAN and P600 were elicited by these violations suggests that participants interpreted them as syntactic anomalies. Other studies have reported a combination of the P600 and N400 in response to processing syntactic anomalies that rely on semantic constraints and depend upon the processing strategy adopted by the perceiver (50). In the present study, all participants displayed a P600 and not an N400. The detection of a syntactic anomaly, as indexed by a LAN, may block subsequent semantic processing (51). However, the repetition of the verb agreement sentences (see Methods) may have suppressed an N400, as previous studies have found an attenuated N400 for the second as compared with the first presentation for both written (e.g., ref. 52) and spoken (e.g., ref. 53) language processing. Thus the question of whether signed verb agreement violations can elicit both P600 and N400 components remains open.
Nevertheless, the fact that signed reversed verb agreement violations elicited a LAN and P600 indicates that participants were processing these sentences as syntactic anomalies, and it illustrates the usefulness of the ERP technique in showing how participants are actually processing linguistic stimuli.

Though the findings from the present study show remarkable similarities between written/spoken and signed languages for semantic and late syntactic processes, one intriguing difference is the distribution of the early syntactic effect. Though reversed verb agreement violations elicited an early left anterior negativity, unspecified verb agreement violations elicited an anterior negativity that was larger over the right hemisphere. We do not claim that a right anterior negativity is unique to signed unspecified verb agreement violations. For example, an early right anterior negativity has been observed in studies of violations to musical structure (for a review, see ref. 54). However, the unspecified verb agreement violations likely place different demands on the system involved in processing spatial syntax than reversed verb agreement violations, as the unspecified violations refer to a location at which no referent had previously been located. Thus, the viewer is forced to either posit a new referent whose identity is unknown (and will perhaps be introduced at a later time in the discourse) or infer that the intended referent is one that was previously placed at a different spatial location. Either way, different processing is required compared with the reversed violations. Though we are unable to infer the sources of these ERP effects based on the available data, the results clearly implicate distinguishable neural subsystems involved in the processing of “spatial syntax” in ASL depending on the processing demands, and suggest a more complex organization for the neural basis of syntax than a unitary “grammatical processing” system. 
It remains to be determined whether the processing of spatial syntax in signed languages relies on systems not used for syntactic processing in aural-oral languages (as might be inferred from findings discussed previously of greater right hemisphere activation in some fMRI studies of ASL) or whether the distinction observed in the present data might also be found in spoken languages if comparable violations could be constructed.

In summary, the invariance of the pattern of results across aural-oral and signed languages demonstrates the existence of strong biological constraints in the organization of brain systems involved in lexical-semantic processing as indexed by the N400. The presence of an early left anterior negativity and P600 for the reversed verb agreement violations further supports previous work suggesting that the syntax of signed languages shares many of its neural underpinnings with spoken languages. At the same time, the right-lateralized negativity elicited by unspecified verb agreement violations in ASL suggests that the agreement systems of signed languages may impose certain unique processing demands that recruit additional brain regions not typically implicated in the processing of spoken language syntax. This difference points to the critical role of experience in shaping the organization of language systems of the brain.

Methods

Participants.

The 15 participants (10 female, average age = 30 years, range 23–47 years) were congenitally and profoundly deaf adults; they had no known neurological impairments, nor were they taking psychotropic medications. All were right-handed according to self-report and the Edinburgh Handedness Inventory (55). In accordance with the University of Oregon research guidelines they gave written consent; they received a monetary fee for their participation. Participants learned ASL from birth from their deaf parents. Though they learned written English in school, all used ASL as their primary language and rated it as the language they felt most comfortable using.

Stimuli.

The 245 experimental sentences were produced by a female deaf native signer of ASL, videotaped, digitized, and presented at the rate of natural sign. One hundred and twenty-five of the sentences were well-formed, semantically coherent sentences, and the remaining 120 contained an error. Thirty-one sentences contained a semantic error. Normative counts of psycholinguistic parameters, such as frequency, do not exist for ASL; however, where possible, the psycholinguistic features pertaining to the items' referent were taken into account. Target items in the semantically appropriate and anomalous conditions were matched on imageability and familiarity (P values >0.1). In addition, semantically anomalous signs were chosen from the same linguistic category as semantically appropriate signs. A native signer judged the semantically anomalous signs as inappropriate given the sentential context.

Thirty sentences contained a reversed verb agreement error; that is, the verb moved from the object to the subject, instead of the opposite direction. Twenty-six sentences contained an unspecified verb agreement error in which the subject and object were set up in space, but instead of directing the verb from the subject toward the object, the signer directed the verb to a location in space that had not been defined. To ensure that the lateralized distributions of the evoked potentials elicited by the verb agreement violations were not due to spatial working memory demands possibly involved in the maintenance of the location of the referent or orienting attention to its location, the direction of motion was reasonably well balanced across conditions. In particular, the ratios of sentences moving leftward:rightward across the verb agreement target items were 45:55 for canonical verb agreement, 53:47 for the reversed verb agreement violations, and 50:50 for the unspecified verb agreement violations. Twenty-eight sentences contained a subcategorization error (i.e., the intransitive verb was followed by a direct object); the results for this condition are not discussed here. Each sentence containing a violation had a corresponding sentence that was semantically and syntactically correct. One set of 31 canonical sentences served as matched controls for both groups of sentences containing verb agreement violations; they were presented twice to equate “good”/“bad” responses. Nine additional sentences were repeated: 4 canonical sentences (2 semantic, 2 subcategorization) and 5 anomalous sentences (2 semantic, 2 reversed verb agreement, and 1 subcategorization) to reduce the likelihood that previously viewed sentences would influence participants' acceptability judgments (i.e., participants concluding that a sentence was “good” because they had already seen the “bad” version).
In addition, 16 filler sentences were included to reduce predictability of the sentence structure. To maintain participants' attention to the stimuli, the sentences varied in length, and in the anomalous conditions the error occurred at various (but never sentence-final) positions across sentences. Two ASL linguists determined the stimulus onsets by identifying, for each target sign, the frame where the hand-shape information of the critical sign was clearly visible, excluding transitional movements leading to the onset of the sign.
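The stimulus counts given above can be reconciled with a short tally. This is one reading of the text, not a statement of the authors' bookkeeping: it assumes that the semantic and subcategorization conditions each had as many canonical controls as violations (31 and 28), that the 31 canonical verb agreement sentences count twice because they were presented twice, and that the 16 fillers are not part of the 245 experimental sentences.

```python
# Violation sentences per condition, as stated in the text.
violations = {
    "semantic": 31,
    "reversed_verb_agreement": 30,
    "unspecified_verb_agreement": 26,
    "subcategorization": 28,
}

# Canonical controls (ASSUMPTION: one control per semantic and
# subcategorization violation; verb agreement controls shown twice).
canonical = {
    "semantic": 31,
    "verb_agreement": 31 * 2,
    "subcategorization": 28,
}

# Additional repeated sentences, as stated in the text.
repeated_canonical = 4   # 2 semantic + 2 subcategorization
repeated_anomalous = 5   # 2 semantic + 2 reversed + 1 subcategorization

total_anomalous = sum(violations.values()) + repeated_anomalous
total_canonical = sum(canonical.values()) + repeated_canonical

print(total_anomalous)                    # 120
print(total_canonical)                    # 125
print(total_anomalous + total_canonical)  # 245
```

Under these assumptions the tally reproduces the reported 125 well-formed sentences, 120 sentences containing an error, and 245 experimental sentences in total.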

Procedure.

Before the ERP recording, each participant viewed videotaped instructions and sample sentences produced by a deaf native signer. Participants were told that in the experiment, they would view a signer producing ASL sentences; some would be “good” ASL and others would be “bad” ASL. Because many deaf individuals are accustomed to communicating with people who use other forms of sign that do not rely on ASL syntax (e.g., Signed English) as well as those with varying fluencies of ASL, participants were instructed to judge the sentences critically and only accept well-formed, semantically coherent ASL as “good” sentences.

Participants were given a set of 16 practice sentences (6 were “good”; 10 contained errors, including 2 semantic, 3 reversed verb agreement, 3 unspecified verb agreement, and 2 subcategorization) to become familiar with the stimuli and experimental procedures.

Data were collected over 2 sessions, each on a different day (mean days apart = 4.4). Stimuli were presented in a different pseudorandom order for each participant, and each participant viewed the same stimuli in a different order in each of the 2 sessions to obtain an adequate signal-to-noise ratio. At each experimental session, participants sat comfortably in a dimly lit, sound-attenuated, electrically shielded booth in a mobile ERP laboratory. Stimuli were displayed on a CRT monitor located 57 inches from the participant's face; thus, signs subtended a visual angle of 5° vertically and 7° horizontally. The sequence of events was as follows. First, 3 asterisks were displayed slightly above the center of the screen so the participants' eyes would be positioned at the location of the signer's face; the participant pressed a button with either hand to immediately elicit the first frame of the sentence, which was held briefly (500–800 ms) to allow the participant to fixate on the signer's face, then the sentence began. Following the sentence, the final frame was displayed for 1,000 ms before a question mark appeared on the screen and the participant responded (“good” or “bad”) via a button press. To reduce motion artifact, participants were instructed not to respond until the question mark appeared on the screen. Accuracy was emphasized over speed; thus, participants proceeded through the sentences and responded at their own pace. To guard against response bias, participants responded “good” with one hand for one session and with the other hand for the second session; the order of response hand was randomized across participants.
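The relation between viewing distance and visual angle used above can be illustrated with a short calculation. The sketch below assumes a flat display viewed head-on and the standard formula θ = 2·arctan(s / 2d); the on-screen sizes it prints are only what the reported angles and distance would imply under that assumption and are not values reported in the study. The function names are hypothetical.

```python
import math


def visual_angle_deg(size, distance):
    """Full visual angle (degrees) subtended by an extent `size`
    viewed head-on from `distance` (both in the same unit)."""
    return math.degrees(2 * math.atan(size / (2 * distance)))


def size_for_angle(angle_deg, distance):
    """Inverse relation: the extent that subtends `angle_deg` at `distance`."""
    return 2 * distance * math.tan(math.radians(angle_deg) / 2)


VIEWING_DISTANCE_IN = 57.0  # viewing distance stated in the text (inches)

# Sizes implied by the reported 5 deg (vertical) and 7 deg (horizontal) angles.
# These are derived for illustration only; they are not reported in the paper.
height_in = size_for_angle(5.0, VIEWING_DISTANCE_IN)
width_in = size_for_angle(7.0, VIEWING_DISTANCE_IN)

print(f"implied height: {height_in:.2f} in, implied width: {width_in:.2f} in")
```

At this distance 1 inch on the screen corresponds to roughly 1° of visual angle, which is why the implied sizes are close to 5 and 7 inches.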

ERP Recording and Analyses.

Electrical activity was recorded from the scalp using 29 tin electrodes sewn into an elastic cap (Electro-Cap) according to an extended International 10–20 system montage (FZ, FP1/FP2, F7/F8, F3/F4, FT7/FT8, FC5/FC6, CZ, T3/T4, C5/C6, CT5/CT6, C3/C4, PZ, T5/T6, P3/P4, TO1/TO2, O1/O2). To monitor eye movement and blinks, additional electrodes were placed on the outer canthus of each eye and below the right eye. Impedances were less than 5 kΩ for eye electrodes and less than 2 kΩ for scalp and mastoid electrodes. Data from all scalp and vertical eye electrodes were referenced online to an electrode placed over the right mastoid; the data were reaveraged offline to the average of the left and right mastoids, and the 2 horizontal eye electrodes were referenced to each other (for electrode montage, see Fig. S1).
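The offline change of reference can be written out explicitly. With the right mastoid (RM) as the online reference, each recorded channel is V_ch − V_RM; re-expressing it relative to the mean of the two mastoids gives (V_ch − V_RM) − (V_LM − V_RM)/2, i.e., the recorded channel minus half of the recorded left-mastoid signal. The sketch below assumes that the left mastoid was recorded as an active channel against the right mastoid; the function name and the sample values are hypothetical.

```python
def rereference_to_average_mastoids(channels, left_mastoid):
    """Re-reference right-mastoid-referenced data to the average of both mastoids.

    channels:     dict mapping electrode name -> list of samples (microvolts),
                  each recorded relative to the right mastoid.
    left_mastoid: list of samples of the left mastoid, also recorded
                  relative to the right mastoid (assumption).
    """
    return {
        name: [v - 0.5 * m for v, m in zip(samples, left_mastoid)]
        for name, samples in channels.items()
    }


# Made-up numbers, for illustration only.
recorded = {"CZ": [2.0, 4.0], "PZ": [1.0, 1.0]}
left_mastoid = [2.0, -2.0]

rereferenced = rereference_to_average_mastoids(recorded, left_mastoid)
print(rereferenced)
```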

The EEG was amplified (−3 dB cutoff, 0.01–100 Hz bandpass) using Grass Model 12 Neurodata Acquisition System amplifiers and digitized online (250-Hz sampling rate). Offline, trials with eye movement, blinks, or muscle movements were identified using artifact rejection parameters tailored to each participant. Only artifact-free trials were kept for further analyses, resulting in the retention of at least 80% of trials across participants (82% semantically correct, 81% semantically anomalous, 83% canonical verb agreement, 84% reversed verb agreement, and 80% unspecified verb agreement). The EEG for each participant was averaged for each condition before applying a 60-Hz bandpass digital filter to remove artifacts due to electrical noise and normalized relative to a calibration pulse (200 msec, 10 μV) that was recorded on the same day as each participant's data. For each sentence condition, trials were averaged together over an epoch of 1,200 msec with a 200-msec prestimulus baseline. Only trials that participants responded to correctly were included.
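The epoching and averaging steps can be sketched as follows. This is a minimal illustration, not the authors' processing pipeline: it assumes that the 1,200-msec epoch is poststimulus and that the 200-msec baseline precedes it, that baseline correction subtracts the mean prestimulus amplitude, and that trials have already been screened for artifacts and response accuracy. All function names and the toy signal are hypothetical.

```python
from statistics import fmean

SAMPLING_RATE_HZ = 250  # from the text
BASELINE_MS = 200       # prestimulus baseline, from the text
POST_MS = 1200          # assumed to be the poststimulus part of the epoch


def ms_to_samples(ms, rate_hz=SAMPLING_RATE_HZ):
    """Convert a duration in msec to a number of samples."""
    return round(ms * rate_hz / 1000)


def extract_epoch(signal, onset_index, pre, post):
    """Cut `pre` samples before and `post` samples after `onset_index`,
    then subtract the mean of the prestimulus samples (baseline correction)."""
    start, stop = onset_index - pre, onset_index + post
    if start < 0 or stop > len(signal):
        raise ValueError("epoch extends beyond the recording")
    epoch = signal[start:stop]
    baseline = fmean(epoch[:pre])
    return [v - baseline for v in epoch]


def average_epochs(epochs):
    """Point-by-point average across equally long epochs (the ERP)."""
    return [fmean(column) for column in zip(*epochs)]


# Toy single-channel signal with two sign onsets (made-up values).
signal = [1.0, 1.0, 3.0, 5.0, 1.0, 1.0, 4.0, 6.0]
epochs = [extract_epoch(signal, onset, pre=2, post=2) for onset in (2, 6)]
erp = average_epochs(epochs)
print(ms_to_samples(BASELINE_MS), ms_to_samples(POST_MS), erp)
```

At a 250-Hz sampling rate each sample spans 4 msec, so under these assumptions an epoch holds 50 baseline samples and 300 poststimulus samples.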

As stated previously, repeated-measures ANOVAs were conducted with 4 within-participants factors: sentence type condition (C, 2 levels: anomalous, canonical), hemisphere (H, 2 levels: left, right), anterior-posterior (A/P, 6 levels: frontal [F7/8, F3/4], frontotemporal [FT7/8, FC5/6], temporal [T3/4, C5/6], central [CT5/6, C3/4], parietal [T5/6, P3/4], occipital [TO1/2, O1/2]), and lateral-medial (L/M, 2 levels: lateral [F7/8, FT7/8, T3/4, CT5/6, T5/6, TO1/2], medial [F3/4, FC5/6, C5/6, C3/4, P3/4, O1/2]). Mean amplitude at each electrode site was the dependent measure. To correct for possible inhomogeneity of variances, Greenhouse-Geisser (56) corrected P values are reported for statistics involving factors with more than 2 levels. The time windows for the ERP components were first assessed by visual inspection of the waveforms from each participant and of the grand average of all 15 participants. The precise latency range for each ERP component was determined by performing repeated-measures ANOVAs on adjacent 25-msec epochs over the sites showing the largest mean amplitude (P3/P4 and O1/O2 for the N400; all sites for the broadly distributed P600). The onset and offset for each component were defined as the first and last of 3 consecutive significant (i.e., P < 0.05) ANOVAs, respectively. As stated previously, these analyses revealed that the N400 occurred 300–875 msec poststimulus onset. The P600 started at 475 msec for reversed verb agreement violations and 425 msec for unspecified verb agreement violations. This component continued to be significant throughout the remainder of the poststimulus epoch (1,200 msec).
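The electrode factors of the ANOVA design described above (hemisphere, anterior-posterior, lateral-medial) can be laid out as a lookup table, which makes it easy to check that every cell of the 2 × 6 × 2 design holds exactly one electrode. The electrode groupings are taken from the text; the assignment of odd-numbered sites to the left hemisphere and even-numbered sites to the right follows the usual 10–20 convention, and the variable and function names are hypothetical.

```python
from itertools import product

# (left site, right site) for each anterior-posterior row and laterality,
# as listed in the text.
AP_ROWS = {
    "frontal":        {"lateral": ("F7", "F8"),   "medial": ("F3", "F4")},
    "frontotemporal": {"lateral": ("FT7", "FT8"), "medial": ("FC5", "FC6")},
    "temporal":       {"lateral": ("T3", "T4"),   "medial": ("C5", "C6")},
    "central":        {"lateral": ("CT5", "CT6"), "medial": ("C3", "C4")},
    "parietal":       {"lateral": ("T5", "T6"),   "medial": ("P3", "P4")},
    "occipital":      {"lateral": ("TO1", "TO2"), "medial": ("O1", "O2")},
}


def build_cells(rows=AP_ROWS):
    """Map each (hemisphere, A/P level, L/M level) cell to its electrode."""
    cells = {}
    for ap_level, by_laterality in rows.items():
        for lm_level, (left_site, right_site) in by_laterality.items():
            cells[("left", ap_level, lm_level)] = left_site
            cells[("right", ap_level, lm_level)] = right_site
    return cells


cells = build_cells()

# Every combination of factor levels should appear exactly once.
expected = set(product(("left", "right"), AP_ROWS, ("lateral", "medial")))
assert set(cells) == expected

print(len(cells), cells[("left", "parietal", "medial")])
```

Under this layout, 24 of the 29 scalp sites enter the analysis; the midline sites (FZ, CZ, PZ) and FP1/FP2 do not appear in the listed factor levels.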
The anterior negativity has a relatively early latency and a focal distribution. To determine the onset and offset of this effect, 20-msec bins advanced in 10-msec steps were measured across the anterior 3 rows, revealing that the effect occurred 140–200 msec after the onset of reversed verb agreement violations and 200–360 msec after the onset of unspecified verb agreement violations.
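The latency criterion can be sketched in code. The example below takes one reading of the rule: an effect begins at the start of the first window belonging to a run of at least 3 consecutive significant tests and ends at the end of the last such window. It also treats the bins for the anterior negativity as 20-msec windows starting every 10 msec, which is an interpretation of the description. The P values are made up for illustration, and all function names are hypothetical; the ANOVAs themselves are not computed here.

```python
def make_windows(start_ms, stop_ms, width_ms, step_ms=None):
    """List of (start, end) windows; adjacent by default, overlapping
    when `step_ms` is smaller than `width_ms`."""
    step = width_ms if step_ms is None else step_ms
    return [(t, t + width_ms) for t in range(start_ms, stop_ms - width_ms + 1, step)]


def significant_runs(p_values, alpha=0.05, min_run=3):
    """Index ranges (first, last) of runs of >= `min_run` consecutive p < alpha."""
    runs, run_start = [], None
    for i, p in enumerate(p_values):
        if p < alpha:
            if run_start is None:
                run_start = i
        else:
            if run_start is not None and i - run_start >= min_run:
                runs.append((run_start, i - 1))
            run_start = None
    if run_start is not None and len(p_values) - run_start >= min_run:
        runs.append((run_start, len(p_values) - 1))
    return runs


def effect_latency(windows, p_values, alpha=0.05, min_run=3):
    """(onset_ms, offset_ms) spanned by the qualifying runs, or None."""
    runs = significant_runs(p_values, alpha, min_run)
    if not runs:
        return None
    return windows[runs[0][0]][0], windows[runs[-1][1]][1]


# Adjacent 25-msec windows with hypothetical P values, one per window.
windows = make_windows(0, 200, 25)
p_values = [0.40, 0.03, 0.20, 0.01, 0.02, 0.04, 0.30, 0.01]
print(effect_latency(windows, p_values))  # (75, 150)

# Overlapping 20-msec bins in 10-msec steps.
print(make_windows(100, 160, 20, step_ms=10))
```

Isolated significant windows (the second and last entries in the example) do not count toward onset or offset, because they are not part of a run of 3.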

Acknowledgments.

The authors thank D. Waligura, L. White, T. Mitchell, D. Coch, W. Skendzel, D. Paulsen, J. Currin, and B. Ewan. We also thank Mike Posner for comments on an earlier version of this paper. The project described was supported by National Institutes of Health National Institute on Deafness and Other Communication Disorders Grants R01 DC00128 (to H.N.) and R01 DC003099 (to D.C.). This article's contents are solely the responsibility of the authors and do not necessarily represent the official views of the National Institutes of Health. B.R. is supported by the German Research Foundation (DFG, Ro 1226/1-1, 1-2). A.J.N. is supported by the Canada Research Chairs program. Requests for the sentence video can be made directly to the authors.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/cgi/content/full/0809609106/DCSupplemental.

References

- 1. Bavelier D, et al. Sentence reading: A functional MRI study at 4 Tesla. J Cogn Neurosci. 1997;9:664–686. doi: 10.1162/jocn.1997.9.5.664.

- 2. Embick D, Marantz A, Miyashita Y, O'Neil W, Sakai KL. A syntactic specialization for Broca's area. Proc Natl Acad Sci USA. 2000;97:6150–6154. doi: 10.1073/pnas.100098897.

- 3. Davis MH, Johnsrude IS. Hierarchical processing in spoken language comprehension. J Neurosci. 2003;23(8):3423–3431. doi: 10.1523/JNEUROSCI.23-08-03423.2003.

- 4. Mazoyer B, et al. The cortical representation of speech. J Cogn Neurosci. 1993;5(4):467–479. doi: 10.1162/jocn.1993.5.4.467.

- 5. Roeder B, Stock O, Neville H, Bien S, Roesler F. Brain activation modulated by the comprehension of normal and pseudo-word sentences of different processing demands: A functional magnetic resonance imaging study. Neuroimage. 2002;15(4):1003–1014. doi: 10.1006/nimg.2001.1026.

- 6. Capek CM, et al. The cortical organization of audio-visual sentence comprehension: An fMRI study at 4 Tesla. Brain Res Cogn Brain Res. 2004;20(2):111–119. doi: 10.1016/j.cogbrainres.2003.10.014.

- 7. MacSweeney M, et al. Neural systems underlying British Sign Language and audio-visual English processing in native users. Brain. 2002;125(7):1583–1593. doi: 10.1093/brain/awf153.

- 8. Dronkers NF, Wilkins DP, Van Valin RD, Jr, Redfern BB, Jaeger JJ. Lesion analysis of the brain areas involved in language comprehension. Cognition. 2004;92(1–2):145–177. doi: 10.1016/j.cognition.2003.11.002.

- 9. Van Petten C, Luka BJ. Neural localization of semantic context effects in electromagnetic and hemodynamic studies. Brain Lang. 2006;97(3):279–293. doi: 10.1016/j.bandl.2005.11.003.

- 10. Neville HJ, et al. Cerebral organization for language in deaf and hearing subjects: Biological constraints and effects of experience. Proc Natl Acad Sci USA. 1998;95(3):922–929. doi: 10.1073/pnas.95.3.922.

- 11. Newman AJ, Bavelier D, Corina D, Jezzard P, Neville HJ. A critical period for right hemisphere recruitment in American Sign Language processing. Nat Neurosci. 2002;5(1):76–80. doi: 10.1038/nn775.

- 12. Söderfeldt B, et al. Signed and spoken language perception studied by positron emission tomography. Neurology. 1997;49(1):82–87. doi: 10.1212/wnl.49.1.82.

- 13. MacSweeney M, Capek CM, Campbell R, Woll B. The signing brain: The neurobiology of sign language. Trends Cogn Sci. 2008;12(11):432–440. doi: 10.1016/j.tics.2008.07.010.

- 14. Corina DP. Aphasia in users of signed languages. In: Coppens P, Lebrun Y, Basso A, editors. Aphasia in Atypical Populations. Hillsdale, NJ: Erlbaum; 1998. pp. 261–309.

- 15. Hickok G, Bellugi U, Klima ES. The neurobiology of sign language and its implications for the neural basis of language. Nature. 1996;381(6584):699–702. doi: 10.1038/381699a0.

- 16. Poizner H, Klima ES, Bellugi U. What the Hands Reveal About the Brain. Cambridge, MA: MIT Press; 1987.

- 17. Capek CM, et al. Hand and mouth: Cortical correlates of lexical processing in British Sign Language and speechreading English. J Cogn Neurosci. 2008;20(7):1220–1234. doi: 10.1162/jocn.2008.20084.

- 18. Petitto LA, et al. Speech-like cerebral activity in profoundly deaf people while processing signed languages: Implications for the neural basis of all human language. Proc Natl Acad Sci USA. 2000;97(25):13961–13966. doi: 10.1073/pnas.97.25.13961.

- 19. Padden CA. Interaction of Morphology and Syntax in American Sign Language. New York: Garland; 1988.

- 20. Hellige JB. Hemispheric Asymmetry: What's Right and What's Left. Cambridge, MA: Harvard Univ Press; 1993.

- 21. Kutas M, Hillyard SA. Reading senseless sentences: Brain potentials reflect semantic incongruity. Science. 1980;207(4427):203–205. doi: 10.1126/science.7350657.

- 22. Neville HJ, Mills DL, Lawson DS. Fractionating language: Different neural subsystems with different sensitive periods. Cereb Cortex. 1992;2(3):244–258. doi: 10.1093/cercor/2.3.244.

- 23. Van Petten C. A comparison of lexical and sentence-level context effects in event-related potentials. Lang Cogn Process. 1993;8(4):485–531.

- 24. Kutas M, Hillyard SA. Brain potentials during reading reflect word expectancy and semantic association. Nature. 1984;307(5947):161–163. doi: 10.1038/307161a0.

- 25. van Berkum JJA, Hagoort P, Brown CM. Semantic integration in sentences and discourse: Evidence from the N400. J Cogn Neurosci. 1999;11(6):657–671. doi: 10.1162/089892999563724.

- 26. Neville HJ, Nicol JL, Barss A, Forster KI, Garrett MF. Syntactically based sentence processing classes: Evidence from event-related brain potentials. J Cogn Neurosci. 1991;3(2):151–165. doi: 10.1162/jocn.1991.3.2.151.

- 27. Friederici AD. Towards a neural basis of auditory sentence processing. Trends Cogn Sci. 2002;6(2):78–84. doi: 10.1016/s1364-6613(00)01839-8.

- 28. Hagoort P, Brown C, Groothusen J. The syntactic positive shift (SPS) as an ERP measure of syntactic processing. Lang Cogn Process. 1993;8(4):439–483.

- 29. Friederici AD, Hahne A, Mecklinger A. Temporal structure of syntactic parsing: Early and late event-related brain potential effects. J Exp Psychol Learn Mem Cogn. 1996;22(5):1219–1248. doi: 10.1037//0278-7393.22.5.1219.

- 30. Deutsch A, Bentin S. Syntactic and semantic factors in processing gender agreement in Hebrew: Evidence from ERPs and eye movements. J Mem Lang. 2001;45(2):200–224.

- 31. Kluender R, Kutas M. Bridging the gap: Evidence from ERPs on the processing of unbounded dependencies. J Cogn Neurosci. 1993;5(2):196–214. doi: 10.1162/jocn.1993.5.2.196.

- 32. Roesler F, Pechmann T, Streb J, Roeder B, Hennighausen E. Parsing of sentences in a language with varying word order: Word-by-word variations of processing demands are revealed by event-related brain potentials. J Mem Lang. 1998;38(2):150–176.

- 33. Friederici AD, Wang Y, Herrmann CS, Maess B, Oertel U. Localization of early syntactic processes in frontal and temporal cortical areas: A magnetoencephalographic study. Hum Brain Mapp. 2000;11(1):1–11. doi: 10.1002/1097-0193(200009)11:1<1::AID-HBM10>3.0.CO;2-B.

- 34. Gross J, et al. Magnetic field tomography analysis of continuous speech. Brain Topogr. 1998;10(4):273–281. doi: 10.1023/a:1022223007231.

- 35. Osterhout L, Holcomb PJ. Event-related brain potentials elicited by syntactic anomaly. J Mem Lang. 1992;31(6):785–806.

- 36. Osterhout L, Bersick M. Brain potentials reflect violations of gender stereotypes. Mem Cognit. 1997;25(3):273–285. doi: 10.3758/bf03211283.

- 37. Neville HJ, et al. Neural systems mediating American sign language: Effects of sensory experience and age of acquisition. Brain Lang. 1997;57(3):285–308. doi: 10.1006/brln.1997.1739.

- 38. Kutas M, Neville HJ, Holcomb PJ. A preliminary comparison of the N400 response to semantic anomalies during reading, listening and signing. Electroencephalogr Clin Neurophysiol Suppl. 1987;39:325–330.

- 39. Liddell SK. Indicating verbs and pronouns: Pointing away from agreement. In: Emmorey KD, Lane HL, editors. The Signs of Language Revisited: An Anthology to Honor Ursula Bellugi and Edward Klima. Mahwah, NJ: Erlbaum; 2000. pp. 303–320.

- 40. Klima E, Bellugi U. The Signs of Language. Cambridge, MA: Harvard Univ Press; 1979.

- 41. Holcomb PJ, Coffey SA, Neville HJ. Visual and auditory sentence processing: A developmental analysis using event-related brain potentials. Dev Neuropsychol. 1992;8(2–3):203–241.

- 42. Connolly JF, Phillips NA. Event-related potential components reflect phonological and semantic processing of the terminal word of spoken sentences. J Cogn Neurosci. 1994;6(3):256–266. doi: 10.1162/jocn.1994.6.3.256.

- 43. Emmorey K, Corina D. Hemispheric specialization for ASL signs and English words: Differences between imageable and abstract forms. Neuropsychologia. 1993;31(7):645–653. doi: 10.1016/0028-3932(93)90136-n.

- 44. Corina DP, Knapp HP. Lexical retrieval in American Sign Language production. In: Goldstein LM, Whalen DH, Best CT, editors. Papers in Laboratory Phonology 8: Varieties of Phonological Competence. Berlin: Mouton de Gruyter; 2006. pp. 213–239.

- 45. Repp BH, Mann VA. Perceptual assessment of fricative-stop coarticulation. J Acoust Soc Am. 1981;69(4):1154–1163. doi: 10.1121/1.385695.

- 46. Repp BH, Mann VA. Fricative-stop coarticulation: Acoustic and perceptual evidence. J Acoust Soc Am. 1982;71(6):1562–1567. doi: 10.1121/1.387810.

- 47. Holle H, Gunter TC. The role of iconic gestures in speech disambiguation: ERP evidence. J Cogn Neurosci. 2007;19(7):1175–1192. doi: 10.1162/jocn.2007.19.7.1175.

- 48. Kelly SD, Kravitz C, Hopkins M. Neural correlates of bimodal speech and gesture comprehension. Brain Lang. 2004;89(1):253–260. doi: 10.1016/S0093-934X(03)00335-3.

- 49. Ozyurek A, Willems RM, Kita S, Hagoort P. On-line integration of semantic information from speech and gesture: Insights from event-related brain potentials. J Cogn Neurosci. 2007;19(4):605–616. doi: 10.1162/jocn.2007.19.4.605.

- 50. Osterhout L. On the brain response to syntactic anomalies: Manipulations of word position and word class reveal individual differences. Brain Lang. 1997;59(3):494–522. doi: 10.1006/brln.1997.1793.

- 51. Hahne A, Friederici AD. Differential task effects on semantic and syntactic processes as revealed by ERPs. Brain Res Cogn Brain Res. 2002;13(3):339–356. doi: 10.1016/s0926-6410(01)00127-6.

- 52. Besson M, Kutas M, Van Petten C. An event-related potential (ERP) analysis of semantic congruity and repetition effects in sentences. J Cogn Neurosci. 1992;4(2):132–149. doi: 10.1162/jocn.1992.4.2.132.

- 53. Rugg MD, Doyle MC, Wells T. Word and nonword repetition within- and across-modality: An event-related potential study. J Cogn Neurosci. 1995;7(2):209–227. doi: 10.1162/jocn.1995.7.2.209.

- 54. Koelsch S, Friederici AD. Toward the neural basis of processing structure in music. Comparative results of different neurophysiological investigation methods. Ann N Y Acad Sci. 2003;999:15–28. doi: 10.1196/annals.1284.002.

- 55. Oldfield RC. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia. 1971;9(1):97–113. doi: 10.1016/0028-3932(71)90067-4.

- 56. Greenhouse SW, Geisser S. On methods in the analysis of profile data. Psychometrika. 1959:95–112.
