Abstract

Context

The process of knowledge translation (KT) in health research depends on the activities of a wide range of actors, including health professionals, researchers, the public, policymakers, and research funders. Little is known, however, about health research funding agencies' support and promotion of KT. Our team asked thirty-three agencies from Australia, Canada, France, the Netherlands, Scandinavia, the United Kingdom, and the United States about their role in promoting the results of the research they fund.

Methods

Semistructured interviews were conducted with a sample of key informants from applied health funding agencies identified by the investigators. The interviews were supplemented with information from the agencies' websites. The final coding was derived from an iterative thematic analysis.

Findings

There was a lack of clarity across agencies as to what is meant by KT and how it is operationalized. Agencies also varied in their degree of engagement in this process. The agencies' abilities to create a pull for research findings; to engage in linkage and exchange between agencies, researchers, and decision makers; and to push results to various audiences differed as well. Finally, the evaluation of the effectiveness of KT strategies remains a methodological challenge.

Conclusions

Funding agencies need to think about both their conceptual framework and their operational definition of KT, so that it is clear what is and what is not considered to be KT, and adjust their funding opportunities and activities accordingly. While we have cataloged the range of knowledge translation activities conducted across these agencies, little is known about their effectiveness, and so a greater emphasis on evaluation is needed. It would appear that “best practice” for funding agencies is an elusive concept that depends on the particular agency's size, context, mandate, financial considerations, and governance structure.

Keywords: Knowledge translation, health policy, implementation, qualitative research

Health and health care research has the potential to improve people's health, the delivery of health care, and patients' outcomes. Despite well-documented cases in which publication alone was sufficient to move research into practice very quickly (Beral, Bull, and Reeves 2005; Hersh, Stefanick, and Stafford 2004), the incorporation of research findings into health policy and routine clinical practice is often unpredictable and can be slow and haphazard (AHRQ 2001), thereby diminishing the return to society from investments in research. Effective and efficient means, therefore, are required to realize the benefits of such investments, and as a result, health-funding agencies are increasingly interested in the process of knowledge translation.

Knowledge translation is a term that is used frequently and rather loosely and has been defined in different ways. A recent Google search (“definition knowledge translation”), restricted to Canadian web pages, yielded 1,350,000 hits. Many websites cite the definition developed by the Canadian Institutes of Health Research (CIHR, the organization that funded this study): “the exchange, synthesis and ethically-sound application of knowledge—within a complex system of interactions between researchers and users—to accelerate the capture of the benefits of research for Canadians through improved health, more effective services and products, and a strengthened health care system” (see http://www.cihr-irsc.gc.ca/e/29418.html, accessed September 20, 2007). In CIHR's view, knowledge translation is a broad concept encompassing all the steps between the creation of new knowledge and its application in the real world. Although other terms are used, including knowledge transfer, dissemination, research use, and implementation research (Graham et al. 2006), we shall use the term knowledge translation (KT) in this article because it was introduced by the CIHR and because we used it throughout our research protocol.

Lomas (1993) offered a useful categorization of knowledge translation activities that groups them into three conceptually distinct types: diffusion, dissemination, and implementation. He defined diffusion as those efforts that are passive and unplanned; in this category of knowledge translation activities, the onus is on the potential adopter to seek out the information. Dissemination is an active process of spreading the message by tailoring the evidence and the message to a particular target audience. Although these strategies raise awareness and may influence attitudes, they may or may not change the behavior of the target audience. Implementation is an even more active process that involves systematic efforts to encourage adoption of the evidence by identifying and overcoming barriers. An alternative way of thinking about knowledge translation is based on the degree of engagement with the potential audience. In this conceptualization, activities are considered to be “push,” concentrating on diffusion and efforts to disseminate to a broad audience; “pull,” focused on the needs of users, thereby creating an appetite for research results (Lavis, McLeod, and Gildiner 2003); or “linkage and exchange,” building and maintaining relationships in order to exchange knowledge and ideas (Canadian Health Services Research Foundation 1999; Lomas 2000).

The process of KT in health research depends on the activities of a wide range of actors, including health professionals, researchers, the public, policymakers, and research funders (Grimshaw, Ward, and Eccles 2001). KT often requires a range of interventions of varying complexity and resource intensiveness, targeting different levels of health care systems as well as different audiences (Lomas 1997). There has been, however, relatively little empirical research on the actual or potential knowledge translation roles, responsibilities, and activities of the different actors.

A review of the effectiveness of guideline dissemination and implementation strategies directed at health professionals (Grimshaw et al. 2004) found median effect sizes ranging from 8.1 to 14.1 percent for individual strategies targeting behavior change in practitioners (e.g., audit and feedback, or academic detailing). The strategies identified were of variable effectiveness and were used in many different settings, making it impossible to predict which would work best in a given context. Furthermore, despite the growing body of evidence (Heap and Parikh 2005) regarding how new ideas disseminate through an industry, the dissemination of knowledge produced by health researchers (Ramlogan et al. 2005) has received less attention. A recent survey of applied health researchers conducted by Graham and his colleagues (Graham, Grimshaw, et al. 2005) indicated that researchers were most successful and confident when disseminating the results of their research to their academic colleagues. Most were less successful, however, in disseminating these results to other target audiences, even when they felt that their results were of considerable importance to both the public and decision makers. When considering the public's role in the KT process, it seems evident that the media can and do play an important role in influencing the public in matters of health and health care (see, e.g., Grilli, Ramsay, and Minozzi 1998; Petrella et al. 2005), but little is known about how to harness and control this potential KT vehicle. Policymakers are also important actors in the KT realm. A systematic review of health policymakers' perceptions of their use of evidence in making policy decisions (Innvaer et al. 2002) suggested that decision makers might make more use of research results if there were more linkage and exchange between the research and policy worlds and if researchers answered the kinds of questions that policymakers asked, in a time frame useful to them.

What Do We Know about Health Research Funders' Efforts to Promote Knowledge Translation?

Research funders could promote knowledge translation in a number of ways, as they are in a position to influence researchers' knowledge translation activities. Funders could emphasize the importance of knowledge translation as an integral part of the research process and require that it be addressed in the grant proposal, and they might require researchers to work with end users (e.g., policymakers, clinicians) as partners in writing the grant proposal and in conducting and eventually implementing the research (Graham, Tetroe, et al. 2005).

Research funders might also promote KT directly by developing their own knowledge translation strategy, disseminating information about funded and completed research, involving end users in prioritizing research topics (i.e., commissioned research), and funding implementation research (i.e., the scientific study of methods to promote the use of research findings in practice).

Our research team found that although different funders had different policies and mechanisms for promoting research translation, these had not previously been documented or explored systematically, nor had they been categorized according to the degree of involvement with the end user (the push, pull, and linkage and exchange categories). Therefore, the overall objective of our project was to determine the knowledge translation policy, expectations, and activities of health research funding agencies both in Canada and internationally. Specifically, we were interested in the following questions: What are funding agencies' expectations of researchers? What do funding agencies perceive as their role in promoting the results of the research they fund? How do funding agencies promote the use of the research they fund? What are the agencies' capacities to support knowledge translation?

Methods

Study Design

We undertook a qualitative study using semistructured interviews with a sample of key informants from applied health funding agencies that we had identified. The interviews were supplemented with and informed by information from the agencies' websites.

Sampling Frame

We identified a “judgment sample” of health research funding agencies in Canada, Australia, France, the Netherlands, Sweden, Denmark, Norway, the United Kingdom, and the United States. A judgment sample is a sample of purposefully selected cases based on particular criteria; in this case, we were seeking cases that would provide interesting contrasts (Kuzel 1992). A judgment sample is also drawn at the discretion of someone familiar with the characteristics of the population of interest, which in our case was our study team. Accordingly, we selected a purposeful sample of agencies from our home countries that satisfied two broad conditions: (1) the sample included both agencies that were national in scope and disease-specific voluntary health organizations (VHOs), and (2) the agencies represented a continuum of, or contrast in, KT engagement. In each agency we interviewed up to three key informants, identified by members of the agency, who were responsible for overall strategic direction, applied research programs, and knowledge translation.

Semistructured Interviews

We chose the semistructured interview as our primary method of collecting data, as this approach allowed the participants to respond freely, to illustrate concepts, and to present individual perspectives that the interviewer could probe further (Morse and Field 1995). This format also increased the likelihood that busy participants could cover the topics of interest efficiently. The interview framework was constructed by the Ottawa-based team to explore issues regarding knowledge translation at several levels of organization: the country level, the funding agency level, and the researcher level (Ferlie and Shortell 2001).

We searched the website of each of the funding agencies for information relating to any of the questions from the interview framework. This information was pasted into the interview framework before contacting anyone from the agency and thus became a means of briefing the interviewer about the agency before the interviews. We also downloaded documents, publications, and grant application forms when available, to supplement the data gathered from the respondents.

The interviews were conducted face-to-face or by telephone, following the interview framework, although the interviewer was free to pursue issues that arose during the interview that were not addressed by the interview guide and, similarly, to omit questions that had already been covered by either the web content or another interviewee from the same agency. The interviews were taped, with the participants' permission, and each participant signed a consent form. The study received ethics approval from the Ottawa Hospital's Research Ethics Board.

Members from the study's coordinating center in Ottawa conducted the interviews for agencies in Canada, the United States, and the Netherlands. The UK, French, Australian, and Scandinavian investigators oversaw the conduct of the interviews in their home countries, and the Ottawa team trained the interviewers from the other countries. The interviews were conducted in French in France and Québec, in Danish in Denmark, and in English in all the other countries. The interviews took place in 2003 and 2004.

Transcription, Analysis, Coding, and Synthesis of the Data

The audiotapes were transcribed verbatim and combined with the information collected from the agency websites by cutting and pasting the relevant quotations into the interview framework. At this point, the interviews conducted in French and Danish were translated into English, and each translation was verified by two native speakers. One document was created for each agency that combined the comments from all individuals interviewed for that agency. We resolved any discrepant information provided by different respondents by checking the agency's website or contacting one of the respondents. The accuracy of the transcription of the audiotapes was verified by the interviewer before it was incorporated into the agency interview framework. This method was consistent with those described by Marshall and Rossman (1989) and Crabtree and Miller (1992). To monitor the progress of the interviews and permit a follow-up of issues emerging from the data, the interviews, transcription, and analysis took place concurrently when possible, given the geographic and language issues.

The Ottawa study coordinating center then did an iterative thematic analysis, which resulted in the identification of eight key themes, or categories. As the categories emerged, we defined their properties, developed a codebook, and further refined them into subcategories. We drew up a detailed coding sheet for each agency, using the eight categories and their subcategories. For this iteration of the qualitative analysis, the team pasted direct interview and Internet “quotes” into the coding sheets, so as to eliminate redundant information. Two team members did this independently for each agency and resolved any differences through consensus. These code sheets were sent back to one of the agency interviewees for verification. The Ottawa center coded the interviews from Canada, the United States, the Netherlands, and France. The interviewers in Australia, the United Kingdom, and Scandinavia coded the data from their own countries after training from the Ottawa center. Their coding was checked by the Ottawa center team, and discrepancies were resolved through consensus.

The next step in the analysis was to create a summary table for each agency that quantified as much of the information as possible. In these tables, we classified each of the KT activities required of researchers or undertaken by the funding agency as either “present” or “not present.” Although this step effectively eliminated much of the idiosyncratic detail of the agencies' innovative activities, it was necessary in order to synthesize this extraordinarily rich data set. Our agency summary sheets had two sections: a short narrative followed by the summary tables. The narrative stated the agency's total budget for funding research/people; how long the agency had been in existence; the kind of funding it provided; how it obtained funding; its primary target audience(s); its role with respect to KT (including mandate, focus, and degree of responsibility for KT); its evaluation of KT; and its background in KT.

The summary tables were divided into two sections: the agency's requirements for the researchers and the agency's initiatives. Each activity was entered into a row, and the level of activity was coded as “push,” “pull,” or “linkage and exchange.” Some agencies had activities on all three levels owing to the different kinds of funding initiatives. To quantify and synthesize these summary sheets, we decided to give an agency credit for only the most intense level of activity if more than one level was coded as present.
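The crediting rule above can be sketched as follows. This is a minimal illustration, not the authors' actual coding procedure: it assumes the three levels are ordered by degree of engagement with end users (push < pull < linkage and exchange), and the names `KTLevel` and `credited_level` are hypothetical.

```python
from enum import IntEnum
from typing import Iterable, Optional


class KTLevel(IntEnum):
    """Hypothetical ordinal coding of KT activity levels.

    Assumed ordering by intensity of engagement with end users:
    push < pull < linkage and exchange.
    """
    PUSH = 1
    PULL = 2
    LINKAGE_AND_EXCHANGE = 3


def credited_level(coded_levels: Iterable[KTLevel]) -> Optional[KTLevel]:
    """Return the single level credited for one activity row.

    If several levels were coded as present (e.g., because different funding
    programs used the activity differently), only the most intense one counts.
    Returns None when no level was coded.
    """
    return max(coded_levels, default=None)


# Hypothetical row: one program pushes results, another builds linkage and exchange.
row = {KTLevel.PUSH, KTLevel.LINKAGE_AND_EXCHANGE}
print(credited_level(row).name)  # LINKAGE_AND_EXCHANGE
```

Because the rule keeps only the maximum, an agency with one linkage-and-exchange program and many push programs is coded the same way as an agency that uses linkage and exchange everywhere.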

The final step was synthesizing the summary sheets and creating tables that summarized the percentage of agencies engaging in each of the identified categories. We recorded a given agency as engaging in a certain activity or imposing a particular requirement even if this pertained to only one funding program that was used only once. Because of the agencies' constantly changing innovative funding initiatives, it was difficult, if not impossible, to obtain a reasonable “denominator” for their use of KT strategies. Therefore the resulting data do not reflect how widespread the particular activity was across the different funding programs within an agency.
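The tabulation described above amounts to reducing each agency to a yes/no flag per activity and dividing by the total number of agencies. The sketch below illustrates this under stated assumptions: the data structure (agency name mapped to the set of funding programs in which an activity was observed) and the function name `percent_of_agencies` are hypothetical, and the example data are invented to reproduce the 28-of-33 (85%) figure that appears in tables 1 and 2.

```python
from typing import Mapping, Set

N_AGENCIES = 33  # total number of agencies in the study


def percent_of_agencies(programs_by_agency: Mapping[str, Set[str]],
                        n_total: int = N_AGENCIES) -> int:
    """Percentage of agencies engaging in one activity, rounded to a whole number.

    programs_by_agency maps each agency to the set of its funding programs in
    which the activity was observed (hypothetical structure). An agency counts
    as engaging if the set is non-empty, even if only one program used the
    activity once, so the result says nothing about how widespread the
    activity is within an agency. The denominator is all agencies studied.
    """
    engaged = sum(1 for programs in programs_by_agency.values() if programs)
    # 100 * k / 33 is never exactly x.5, so Python's round-half-to-even
    # behaves like ordinary rounding for this study's denominator.
    return round(100 * engaged / n_total)


# Invented data: 28 of 33 agencies used the activity in at least one program.
example = {f"agency_{i:02d}": ({"program_A"} if i < 28 else set())
           for i in range(N_AGENCIES)}
print(percent_of_agencies(example))  # 85
```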

Results

All the agencies that we approached agreed to participate in the study, for a total of thirty-three agencies (see box 1). Of these, two funded only biomedical research; seven funded only applied research; and twenty-four funded both. Six of the agencies funded only strategic research calls; four had only open funding calls; and twenty-three offered both kinds of funding.

Box 1.

The eight categories identified by the iterative thematic analysis described earlier were as follows: role, background, researcher requirements, application process, dissemination activities, agency initiatives, evaluation, and audience. The “Role” category captures abstract, general principles adopted by the agency, including its mandate, policies, future plans, and general philosophies. “Background” refers to information about funding: how the agency was funded and how its overall budget was allocated. “Researcher requirements” are such obligations as submitting a final report or acknowledging the funding agency in publications. “Application process” refers to the requirements for researchers to submit a KT plan or define their KT audience as part of the application. “Dissemination activities” are efforts by the agency to disseminate results from a body of research larger than a single study, for instance, at a top-down level, such as by creating a pull for information, or at a more bottom-up level, such as by training middle managers to understand research. “Agency initiatives” covers strategies like creating special calls for proposals in the science of KT, funding large teams, and holding workshops with researchers and policymakers. The category of “Evaluation” pertains to the evaluation of an agency's specific strategies as well as its views of how well it was meeting its KT mandate. Finally, “Audience” refers to the audiences targeted by the agency for its KT endeavors. Within each of these categories, the Ottawa-based team formed subcategories of activities through iterative analysis.

Each row in an agency summary table represents an activity, and the columns indicate whether the activity was coded as “push,” “pull,” or “linkage and exchange” (for examples, see box 2).

Box 2.

References to Knowledge Translation in Agency Mandates and Mission Statements

Despite the recent interest in this area, the concept of knowledge translation appeared to be relatively new to the agencies in our study, although twenty-three of the agencies explicitly or implicitly referred to KT in their mission/mandate. Some of the mandates were specific in describing the KT component; for example, one clause in the UK Medical Research Council's mission statement proposes to “produce skilled researchers and to advance and disseminate knowledge and technology to improve the quality of life and economic competitiveness in the UK.”

Other agencies were more implicit; for example, the Norwegian Research Council's mandate notes that “the council is the central advisor for the authorities in relation to researching political questions and functions as a forum and builder of networks in Norwegian research.”

Our data suggest that at this relatively early stage of trying to engage in KT, neither an explicit nor an implicit mention of KT in an agency's mandate was predictably associated with the range or sophistication of the KT activities in which that agency engaged.

Definitions of Knowledge Translation

For the thirty-three agencies we studied, we identified twenty-nine terms used for KT in the transcripts, many of which were not defined (see box 3). Furthermore, the operational definitions of KT varied between the agencies.

Box 3.

Balance of Responsibilities and Roles

The majority of agency representatives considered KT to be a responsibility shared by the agency and the researchers. In some cases, the implied responsibility for these activities extended beyond the researcher and the agency to the research institutions and policy organizations surrounding them. As one British agency representative pointed out,

You become a researcher because you are a good researcher, not because you are a journalist, not because you are a good communicator. I think very often the researchers may not be the best people to work in that area. We need to provide help for them in the way of PR-type people who would be able to think through a proper communications strategy for a piece. … I don't think researchers are very good at getting their message across to the target audiences because they are really writing for their colleagues, for other researchers, and for people with a special interest in the area.

One Canadian agency acknowledged that “everybody has a bit of responsibility.” This agency noted that some researchers were very good at knowledge translation and that others needed help in linking with knowledge users, targeting their message, and applying their research results. Another Canadian agency had a broader view of whose responsibility it was to translate knowledge:

It is not realistic to expect researchers to take on the responsibility for knowledge transfer. I think their job is to do the best research they possibly can, write it in an understandable format, make sure that it gets published and that people are aware of it … there should be people in their own institution who can take it from where the researchers leave off and help with promoting it. … The biggest responsibility for knowledge transfer is out there in the policy organizations and the government organizations, the regional health authorities … because no one research study can answer an organization's issues. You need to know what many people are thinking on the topic.

ZonMw (NL) has taken the lead in helping its researchers not only disseminate but also implement their research findings. Over a project's life span, the agency tries to accumulate sufficient knowledge about it to decide whether to target it for additional dissemination and implementation activities. One or two years into the research project, the agency's staff work with researchers to provide training, advice, and support in this area and to bring researchers together with relevant stakeholders. Annual reports to the agency must address both implementation and research findings. ZonMw also assesses the most promising projects for increased implementation assistance: in the final year of project funding, promising studies undergo an implementation risk analysis, a detailed assessment of which key findings can be implemented. Those studies deemed to be “pearls” (Ravensbergen and Lomas 2005) qualify for special attention and resources in a full implementation plan. Not surprisingly, the health research funding “industry” holds different opinions as to what should be transferred to whom, when, and by whom.

Evaluation of Knowledge Translation Activities

At the time we conducted this study, eleven agencies had some kind of framework or consistent plan for measuring the impact of some, if not all, of their KT activities. Nearly every agency indicated that it was planning to develop or adapt a KT evaluation framework, but many were struggling to select appropriate indicators.

The summary tables (which attempt to quantify the KT activities) are divided into two broad categories: the agency's requirements for the researcher and those agency initiatives that were broader than an individual funded study. While many of the concepts developed to characterize the information synthesized from this very large data set are self-explanatory, others have been used in a very specific way. We provide illustrative examples of the latter concepts where appropriate, using as many of the agencies as possible and reporting those that we judged to be particularly innovative.

Agency's Requirements of Researchers

The agency's requirements of researchers were divided into three sections: requirements at the time of application, requirements at the end of the study, and allowable expenses for KT activities. The most common activities/strategies reported (tables 1, 2, and 3) are writing a final report for the agency, publishing findings, stating the bottom line or relevance of the proposed work, acknowledging the funder in any publications, providing a knowledge translation plan, providing a lay summary of the proposal, partnering with stakeholders, and attending agency workshops. The most common allowable KT-related expense was for researchers to host workshops.

TABLE 1.

Requirements of Researchers When Applying to Agency (N = 33)

| Agency's Requirements for Researchers | Number of Agencies | Percentage of Agencies | Expected (%) | Required (%) | Required with Help from Agency (%) |
|---|---|---|---|---|---|
| State bottom line/relevance | 28 | 85% | 6 | 79 | 0 |
| KT plan | 24 | 73% | 3 | 55 | 15 |
| Lay summary of proposal | 22 | 67% | 3 | 55 | 9 |
| Partnership with stakeholders | 20 | 61% | 33 | 18 | 9 |
| Define KT target audience | 15 | 45% | 12 | 30 | 3 |
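In tables 1 and 2, the three level columns appear to partition each row's total, since they sum to the overall percentage (for example, 6 + 79 + 0 = 85 for “State bottom line/relevance”). In a few rows the sum is one point short, e.g., 33 + 18 + 9 = 60 against 61% for “Partnership with stakeholders.” The sketch below suggests this is consistent with rounding each column independently: back-calculating agency counts from the rounded percentages (an inference, since the tables do not report these counts) gives 11 + 6 + 3 = 20 agencies, which is 61% of 33. The helper names `pct` and `count_from_pct` are hypothetical.

```python
N_AGENCIES = 33


def pct(count: int, n: int = N_AGENCIES) -> int:
    """Rounded percentage of n agencies (never a .5 tie when n = 33)."""
    return round(100 * count / n)


def count_from_pct(p: int, n: int = N_AGENCIES) -> int:
    """Back-calculate the agency count whose rounded percentage equals p."""
    matches = [c for c in range(n + 1) if pct(c, n) == p]
    if len(matches) != 1:
        raise ValueError(f"{p}% does not correspond to a unique count out of {n}")
    return matches[0]


# Table 1, "Partnership with stakeholders": 20 agencies (61%) in total.
breakdown_pct = {"expected": 33, "required": 18, "required_with_help": 9}
counts = {level: count_from_pct(p) for level, p in breakdown_pct.items()}

print(counts)                       # {'expected': 11, 'required': 6, 'required_with_help': 3}
print(sum(counts.values()))         # 20, matching the table's total count
print(pct(sum(counts.values())))    # 61, matching the table's total percentage
print(sum(breakdown_pct.values()))  # 60: rounding each part separately loses a point
```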

TABLE 2.

Requirements of Researchers at End of Grant (N = 33)

| End of Grant Requirements of Researchers | Number of Agencies | Percentage of Agencies | Expected (%) | Required (%) | Required with Help from Agency (%) |
|---|---|---|---|---|---|
| Publish findings | 28 | 85% | 42 | 37 | 6 |
| Final report | 29 | 88% | 6 | 82 | 0 |
| Researchers acknowledge funder | 25 | 76% | 21 | 55 | 0 |
| Attend agency workshops | 20 | 61% | 33 | 15 | 12 |
| Lay summary of results | 19 | 58% | 6 | 30 | 21 |
| Report on communications activities | 15 | 45% | 18 | 24 | 3 |
| Report for decision makers | 8 | 24% | 9 | 9 | 6 |

TABLE 3.

Allowable Expenses for KT Activities (N = 33)

| KT Allowable Expenses | Number of Agencies Allowing This KT Expenditure | Percentage of Agencies |
|---|---|---|
| Workshops | 21 | 64% |
| Publication | 18 | 55% |
| Dissemination | 17 | 52% |
| Translation (from one language to another) | 11 | 33% |
| Web development | 10 | 30% |

Broader Agency Initiatives

Agency initiatives that were broader than an individual funded study were divided into four sections: tools or techniques, services, linkage, and funding. Ten activities or strategies were reported by more than half the agencies (tables 4, 5, 6, and 7): writing audience-tailored publications; funding targeted workshops; using the media; consulting stakeholders in setting the research agenda; creating audience-tailored web pages; helping researchers write clearly and communicate with the media; creating linkage and exchange opportunities with various parties (such as decision makers, the public, and health managers); posting lay summaries on their websites; conducting health technology assessments, policy, or research syntheses; and creating or funding web-based or real-time networks.

TABLE 4.

Tools and Techniques Used by Agencies (N = 33)

| Agencies' KT Tools and Techniques | Number of Agencies Using These Tools | Percentage of Agencies |
|---|---|---|
| Audience-tailored publications | 32 | 97% |
| Use of media | 27 | 82% |
| Audience-tailored web pages | 25 | 76% |
| Lay summaries on website | 22 | 67% |
| Development of tools | 9 | 27% |
| Use of drama | 8 | 24% |

TABLE 5.

Services Provided by Agencies (N = 33)

| Services Provided by Agencies | Number of Agencies Providing These Services | Percentage of Agencies |
|---|---|---|
| Translation: help researchers with writing, communication with media, etc. | 25 | 76% |
| Health technology assessment/policy/research synthesis | 20 | 61% |
| Funding/assistance with commercialization possibilities | 10 | 30% |
| Funding/organization of lectures | 9 | 27% |

TABLE 6.

Linkage Activities Used by Agencies (N = 33)

| Linkage Activities Used by Agencies | Number of Agencies Using These Linking Activities | Percentage of Agencies |
|---|---|---|
| Consult with stakeholders to set research agenda | 27 | 82% |
| Linkage and exchange | 25 | 76% |
| Create/fund web-based or real-time networks | 18 | 55% |
| Set up programs for decision makers | 7 | 21% |
| Metalinkage | 6 | 18% |
| Organize video conferences | 2 | 6% |

TABLE 7.

Types of KT Funding Made Available by Agencies (N = 33)

| Types of KT Funding Made Available by Agencies | Number of Agencies Providing These Types of KT Funding | Percentage of Agencies |
|---|---|---|
| Fund targeted workshops | 29 | 88% |
| Fund conferences | 16 | 48% |
| Produce/fund journals (special issues) | 14 | 42% |
| Fund KT RFAs (requests for applications) | 12 | 36% |
| Other funding opportunities | 12 | 36% |
| Fund teams of investigators | 11 | 33% |
| Fund KT centers | 8 | 24% |
| Fund research chairs | 6 | 18% |
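The statement that ten activities or strategies were reported by more than half the agencies can be checked against tables 4 through 7. The sketch below copies the agency counts from those tables and keeps activities whose count is strictly above 33 / 2, i.e., at least 17 agencies; the function name `reported_by_majority` is hypothetical.

```python
from typing import Dict, List

N_AGENCIES = 33

# Agency counts copied from tables 4 (tools), 5 (services), 6 (linkage), and 7 (funding).
broader_initiatives: Dict[str, int] = {
    # Table 4
    "Audience-tailored publications": 32,
    "Use of media": 27,
    "Audience-tailored web pages": 25,
    "Lay summaries on website": 22,
    "Development of tools": 9,
    "Use of drama": 8,
    # Table 5
    "Translation: help researchers with writing, communication with media, etc.": 25,
    "Health technology assessment/policy/research synthesis": 20,
    "Funding/assistance with commercialization possibilities": 10,
    "Funding/organization of lectures": 9,
    # Table 6
    "Consult with stakeholders to set research agenda": 27,
    "Linkage and exchange": 25,
    "Create/fund web-based or real-time networks": 18,
    "Set up programs for decision makers": 7,
    "Metalinkage": 6,
    "Organize video conferences": 2,
    # Table 7
    "Fund targeted workshops": 29,
    "Fund conferences": 16,
    "Produce/fund journals (special issues)": 14,
    "Fund KT RFAs (requests for applications)": 12,
    "Other funding opportunities": 12,
    "Fund teams of investigators": 11,
    "Fund KT centers": 8,
    "Fund research chairs": 6,
}


def reported_by_majority(counts: Dict[str, int], n: int = N_AGENCIES) -> List[str]:
    """Activities used by more than half of n agencies, most common first."""
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    return [activity for activity, count in ranked if count > n / 2]


majority = reported_by_majority(broader_initiatives)
print(len(majority))  # 10
```

“Fund conferences” (16 agencies, 48%) is the closest activity that falls just below the cutoff.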

Audience-tailored publications, used by nearly all the agencies, differ from other publications in that they are directed at and edited to suit particular audiences. The NCIC (Canada), for example, disseminates newsletters and final reports for a lay audience to its stakeholders (push); the CVZ (NL) publishes and distributes a magazine called Orgcijfers to its stakeholders, who are insurers, politicians, and interest groups who have requested the information included in the publication (pull); and the NHS HTA Programme (UK) produces themed updates that are distributed to selected individuals in the NHS via the NHS Centre for Reviews and Dissemination Single Contact Point (linkage and exchange).

Although only nine of the agencies used or developed tools, this category captured a variety of innovative approaches. The NHS SDO (UK), for example, created an electronic tool called Developing Change Management Skills: A Resource for Health Care Professionals and Managers (http://www.sdo.lshtm.ac.uk/pdf/changemanagement_developingskills.pdf); the AHFMR and CHSRF (Canada) developed a framework (http://www.ahfmr.ab.ca/search/forms/workshop_summary_021128.pdf) and tool (http://www.chsrf.ca/other_documents/working_e.php) for assessing an organization's research capacity; and the SSHRC (Canada) developed a web-based tool to facilitate ongoing interactions within and across research teams and potentially with research stakeholders.

Eight agencies used drama as a knowledge translation strategy. INSERM (France), for example, uses films—images of cells, hearts, and so forth—for television, cinema festivals, and schools; and the RWJF (U.S.) has a televised health series.

More than three-quarters of the agencies tried to help researchers with their writing and press conferences and to put information into a format suitable for a variety of audiences. The VA (U.S.), for example, works closely with researchers to analyze data and develop performance measures; the CVZ (NL) develops guidelines from its funded research; and INSERM (France) has a network responsible for communications and information and relationships with patients' associations, hospitals, events, conferences, and the like. The CHSRF (Canada) has held a number of workshops in which it has successfully trained decision makers and researchers in how to communicate.

Nearly two-thirds of the agencies engaged in some sort of synthesis activity. Both the CHSRF (Canada), which sees policy synthesis as an important part of its role, and the CDC (U.S.) conduct policy and research syntheses as well as health technology assessments. Dissemination of these syntheses is a key task for both agencies. The AHFMR (Canada) has a health technology assessment unit whose assessments are commissioned by decision makers, and an important task of the AHRQ (U.S.) is synthesizing research by bringing together and contextualizing the studies that it funds as well as relevant research from other sources. The VA (U.S.) provides a different form of research synthesis by developing and posting clinical practice guidelines on its website.

Linkage and Exchange

More than three-quarters of the agencies engaged in linkage and exchange activities, in which they try to build relationships in order to exchange knowledge and ideas. The AFM (France), for example, sets up regular opportunities for patients' groups to meet with scientists to discuss their work. INSERM (France), in a program called Rendez-vous santé de l'INSERM, brought together 450 scientists to travel around France to disseminate knowledge to the public.

Fifty-five percent of the agencies have either created networks themselves or provided funding for others to create web-based networks, communities of practice, listservs, and the like. For example, in partnership with the U.S. Preventive Services Task Force, the AHRQ developed the “Put Prevention into Practice” (PPIP) initiative, which encompasses a set of tools and resources for health care systems, staff, and patients. The initiative enables health care providers to determine which services their patients should receive and also provides guidance for establishing administrative systems to facilitate the delivery of preventive care.

Funding agencies may already be embedded in decision-making structures. The Policy Research Programme (UK) directly commissions research for and on behalf of Department of Health policymakers. The program does not have anything specifically referred to as “knowledge translation” activities, but it does have liaison officers whose specific job is to work with the department policy groups for which they have responsibility. The liaison officers help the groups articulate their needs as research questions and commission the research. At the end of projects, findings are fed back to the policy groups, which have the ultimate responsibility for their “translation.”

We use the term metalinkage to describe what occurs when members of an agency-created network or community go on to create their own network or community for further linkage and exchange. For example, the AHRQ, together with the VA, offers a six-month training course for state officials who have been assigned to local hospitals. The graduates of this training course return to their home institutions and teach others, thereby creating “meta-networks.” The CHSRF and AHFMR have programs for decision makers, described later, that enable the participants to share their knowledge and expertise with a larger group of people at their home organizations.

Programs for decision makers are designed to familiarize research users with the research process and culture. The Health Foundation (UK), for example, has set up awareness-raising activities involving the chief medical officer, health care providers, and key individuals from government health departments. In Canada, the AHFMR has its SEARCH (Swift Efficient Application of Research in Community Health) program, which offers senior executives, managers, and organizational decision makers an opportunity to develop practice-based research and evidence-based decision making. The CHSRF has recently created its EXTRA (Executive Training for Research Application) program, which targets health service professionals in senior management positions, with the primary goal of giving health system managers across Canada the skills to better use research in their daily work.

The most common KT funding mechanism, used by twenty-nine of the agencies, was financing targeted workshops to bring specific and varied audiences together. Less common were funding conferences, special issues of journals, and strategic calls for research on the science of knowledge translation, as well as establishing special funding opportunities to create teams of investigators. Only a handful of agencies provided funds for establishing or maintaining special KT centers or for endowing academic research chairs focusing on knowledge translation.

We explored the data to determine whether they showed any patterns in more intensive KT activities (e.g., pull and linkage and exchange) by country, size of the agency budget, source of funding, and whether or not the agencies' mandate/mission implicitly or explicitly mentioned KT. We found no patterns and so decided to restrict our data synthesis to the type of KT activity expressed as a percentage of all thirty-three agencies.
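Because every percentage is expressed relative to all thirty-three agencies, the figures in the funding-mechanism table can be checked with simple arithmetic: each count is divided by 33 and multiplied by 100. The Python sketch below illustrates that calculation under one assumption of ours, namely that percentages were rounded to the nearest whole number; this rounding rule is not stated in the study, although it reproduces every percentage in the table. The names `TOTAL_AGENCIES`, `FUNDING_MECHANISM_COUNTS`, and `percent_of_agencies` are hypothetical and used only for illustration.

```python
# Hypothetical sketch: recompute the funding-mechanism percentages as a share
# of all 33 agencies. Counts are copied from the table; rounding to the
# nearest whole percent is an assumption, not a documented study rule.
TOTAL_AGENCIES = 33

FUNDING_MECHANISM_COUNTS = {
    "Fund targeted workshops": 29,
    "Fund conferences": 16,
    "Produce/fund journals (special issues)": 14,
    "Fund KT RFAs (requests for applications)": 12,
    "Other funding opportunities": 12,
    "Fund teams of investigators": 11,
    "Fund KT centers": 8,
    "Fund research chairs": 6,
}


def percent_of_agencies(count: int, total: int = TOTAL_AGENCIES) -> int:
    """Express a count as a whole-number percentage of all agencies studied."""
    if not 0 <= count <= total:
        raise ValueError(f"count {count} is outside the range 0..{total}")
    # The denominator is always the full sample, never a subset of agencies.
    return round(100 * count / total)


if __name__ == "__main__":
    for mechanism, count in FUNDING_MECHANISM_COUNTS.items():
        print(f"{mechanism}: {count} ({percent_of_agencies(count)}%)")
```

Using a fixed denominator keeps the categories comparable with one another, which fits the decision described above to report each type of activity against the whole sample rather than by country, budget, or funding source.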

How Did the Funding Agencies Interpret Our Results? Summary of the Workshop Held with Canadian and U.S. Agency Representatives

As soon as the set of summary sheets was complete, verified, and compiled into tables 1 through 7, our project team contacted representatives from the funding agencies in Scandinavia, Canada, the United States, France, and the Netherlands to invite them to a workshop to be held in Ottawa (the UK and Australian investigators had planned separate workshops but were unable to follow through because of the costs and difficulties of bringing the agencies' representatives together). The purpose of the workshop was to bring together our study's investigators and the agencies' representatives to discuss our preliminary findings, to provide an opportunity to consider the data we had collected, and to share our experiences and ideas of best practice. Although the European representatives were unable to attend, two-thirds of the Canadian and U.S. agencies did attend the meeting.

The participants acknowledged the difficulty of capturing the “flavor” of our study's diverse set of agencies. But they also acknowledged that the benefit of this exercise was to examine and discuss the various mechanisms that could facilitate knowledge translation. The participants welcomed the opportunity to talk about the issues raised at the workshop, as funding agencies have few forums in which to meet and “share war stories.”

Some unresolved issues that permeated the workshop discussion were (1) which part of the KT agenda a funding agency should address by itself and which through outside agencies; (2) the understanding that trying to find out what works in this area depends on what “K” you are trying to “T” to whom; (3) the fact that different agencies may see their role differently, depending on their mandate/mission or background in KT; and (4) the realization that the amount of dedicated funding for KT in many agencies might be described as “decimal dust” (Kerner, Rimer, and Emmons 2005).

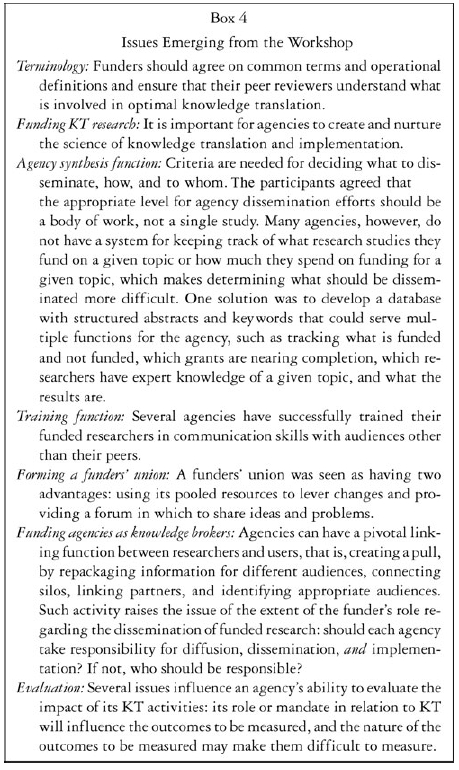

Other issues that consistently recurred during the day-long discussion were the variability in terminology around KT concepts and constructs, the importance of continuing to fund the science of knowledge translation, the agencies' synthesis function, the funding agencies' training function, the importance of forming a funders' “union” to provide a forum for discussions of this sort, the role of funding agencies as knowledge brokers, and the difficulty of evaluating the effectiveness of KT strategies and activities at the funding agency level (see box 4).

Box 4.

One of the workshop's goals was to try to reach a consensus on what funders could do to promote and support researchers' KT activities and also on what funders should do to promote and support KT activities themselves. The discussion produced a list of potential agency activities to facilitate and encourage KT, with the agencies acknowledging that they need more than a list of activities. That is, they need a systematic approach to KT that cuts across all their programs (e.g., What are the agency's goals? What is the range of its programs? What are the desired outcomes? How will they be met?). While the workshop did not provide clear answers or solutions to these issues, it did provide a forum for raising important questions for funding agencies to consider in the future, either individually or as a group.

Discussion

To our knowledge, ours is the first study of the knowledge translation activities of a sample of health research funding agencies in developed countries. A novel aspect of our work was conducting a “member check” (i.e., we asked the participants whether they thought our interpretation of the data was valid) of our findings with participating agencies and inviting them to attend a workshop to interpret the results and discuss both best practices and common issues of concern. In doing so, we were able to confirm the common issues and concerns revealed by the document and interview analysis. Three issues were identified as particularly vexing: reaching a shared understanding of the terminology, roles, and responsibility for knowledge translation; the degree of an agency's involvement in “pull” and “linkage” activities; and the evaluation of the effectiveness of KT policies and activities.

Regardless of the specific terminology they have adopted, research funders perceive themselves as having generally increasing but variable roles in knowledge translation. Their responsibility for these roles is seen as shared with the research community. Moreover, in order to realize their monetary and social investments and expectations, a number of agencies have recognized the need to take more active roles across all stages of knowledge translation, from commissioning research to supporting the implementation of key findings.

While the majority of agencies placed certain requirements on researchers at the beginning of the funding process, they were less likely to require researchers to actively promote the transfer of their findings beyond traditional means, such as submitting final reports and acknowledging funders. The reasons include the following: many researchers and funders have important but limited priorities (i.e., ensuring the production of high-quality and relevant research); some funders recognize that researchers often lack the skills and resources to take a leading role in knowledge translation; and some funders acknowledge the importance of contextualizing the results of a single research study within a broader evidence base. A related issue is the amount of time that knowledge translation requires of both the researcher and the research funder. Although this specific question was not included in the interview schedule, it was raised by some funders and by researchers in a related study (Graham, Grimshaw, et al. 2005). Program (longer-term) funding, rather than project (shorter-term) funding, gives researchers more time both to foresee the need for, and to engage in, appropriate and adequate dissemination activities. In general, research funders use a number of strategies to “push” their findings, most commonly tailoring research products to target audiences and synthesizing them for those audiences and, less frequently, developing tools to promote their transfer.

The majority of agencies are engaged in some sort of linkage activities to bring stakeholders and researchers together to share and develop agendas and subsequent research questions. Fewer have extended this function to metalinkage, in which they would establish and link active communities or networks to enhance the exchange of ideas. Some agencies actively encourage “pull,” that is, create a demand for research findings by those responsible for health policy and the implementation of research evidence. Only a small number have taken steps to fund capacity building and programs dedicated to the research and development of the science of knowledge translation.

When brought together to review these findings, the agencies identified a number of common issues and opportunities for mutual learning regarding knowledge translation, with the most problematic being evaluating the effectiveness and impact of this key process.

The Study's Strengths and Limitations

The goal of qualitative research is to present an accurate picture of reality as it is experienced or perceived by the participants. The validity of qualitative research may be threatened by two sources: description and interpretation (Maxwell 1996). We minimized threats to valid description by taping the interviews and transcribing them verbatim, and we reduced threats to interpretation by checking the representativeness of the data as a whole and of the coding categories. The investigators did the coding together and negotiated a consensus. The investigators deliberately tried to discount or disprove conclusions about the data and proposed alternative explanations for discrepant data. Each of the agencies' summary documents was verified by an agency representative, and the study investigators verified the interpretation and representativeness of the agencies from their country. We had some difficulty verifying the coding sheets we created for each agency owing to the changes in practice that occurred between the time the interviews were conducted and the time the final code sheets were developed and available for review (up to twelve months in some cases). The workshop with the North American funders, however, provided another opportunity for member checking (Lincoln and Guba 1985). Qualitative studies are not designed to generalize their findings beyond the study population. However, the use of multiple case studies, the diversity and number of funding agencies, and the range of countries involved increased the likelihood that our findings are transferable. Nonetheless, because we purposefully selected the agencies we studied, readers should exercise caution in transferring the findings within or across countries.

Because of the way in which we derived our data (through semistructured interviews), the activities recorded in the summary sheet of a particular agency may underrepresent the full extent of its operations. However, we are confident that the data capture the full range of types of activity undertaken by the agencies we investigated.

Implications for Policy and Research

The variation in approaches to knowledge translation can be explained by a number of factors. First, the lack of clarity as to what is meant by knowledge translation, how it is operationalized, and how engaged the agency decides to be in this process can account for considerable variation between agencies. Second, agencies are unlikely to be aware of potentially effective strategies used by others, or they may not have the resources or skills to put them into practice. We found that compared with traditional means of “pushing” (or disseminating) research findings, there was relatively less use of “linkage” and “pull” strategies. Studies such as ours give funding agencies an opportunity to review their current inventories of knowledge translation activities. Third, different funding agencies operate within a broad range of contexts, each of which requires or facilitates specific types and/or combinations of approaches. For example, mechanisms or structures that directly link researchers to policymakers (as in the UK Department of Health's Policy Research Programme) may be an effective approach for policy-related research but are unlikely to be transferable to all types of health services and clinical research. Fourth, to date, very little empirical evidence supports the use of specific knowledge translation strategies, meaning that an agency's judgment and available resources are likely to determine the selection of strategy. Strategies that actively anticipate implementation, such as that used by ZonMw, and use some form of diagnostic analysis to identify key determinants of change are intuitively attractive but are, as yet, both uncommon and unproven.

The problem posed by this limited evidence base is compounded both by the potential difficulty of agreeing on and measuring the outcomes for an evaluation and by the methodological issues in designing rigorous studies to test the effectiveness and efficiency of knowledge translation strategies. Evidence-informed progress in this field will require closer collaboration between funding agencies. It also will require more substantial investments in research programs to study the science of KT and to develop methodologies for evaluating the programs. In this way, the evidence base can be developed to enable the timely and consistent translation of relevant research findings into practice and policy.

This limited evidence base should not, however, be used as a reason to curtail or limit financial and institutional commitments to KT. Many examples of good practice have not been rigorously evaluated—wearing a parachute, for example—but make good common sense. Collaboration and communication between agencies can broaden the scope of individual agencies' activities and increase the likelihood that they will be evaluated.

It is worth noting that two-thirds of the agencies fund syntheses of some kind: HTA, policy, or research syntheses. This is a key component of KT, as acknowledged by the workshop participants and many of the agency representatives in their interviews. The strength of the evidence for a particular research finding needs to be confirmed before investing effort in disseminating and applying its results. KT requires the judicious and ethically sound translation of knowledge, and both the funding agencies and the researchers clearly need to jointly assume responsibility for this.

Conclusions

Knowledge translation is an area that funding agencies recognize as very important because of the gap between research, practice, and policy and because of the pressures on the agencies to be accountable to their funding sources. Funding agencies are now rapidly changing their approaches to and means of increasing the use and dissemination of the findings of the research they fund. For the same reasons, namely the perceived pressure for greater accountability and the need to close the gap between research and practice/policy, our respondents viewed our study as highly relevant.

The thirty-three agencies reported a wide range of knowledge translation activities, with a large variation in creating a pull for research findings; engaging in linkage and exchange between agencies, researchers, and decision makers; and pushing the results to various audiences. However, no single activity was practiced by all the agencies.

Overall, the agencies appeared to take a more systematic approach to the expectations they had of researchers than to initiatives taken by the agency. While we have essentially cataloged the range of knowledge translation activities conducted across these agencies, we know little about their effectiveness. Evaluating these activities is challenging, and agencies either are not ready to embark on a formal evaluation or are doing so on a very preliminary and/or exploratory basis. A greater emphasis on evaluation will be needed to discover which KT strategies are effective for both agencies and researchers. Agencies may need to review their KT policies periodically to ensure consistency and clarity and to maximize their effectiveness. The agencies may also benefit from opportunities to examine what other agencies are doing in this important area.

Although all these conclusions from our work are important, the largest looming barrier to advancing the knowledge translation agenda is the lack of conceptual clarity regarding what is meant by knowledge translation and what a commonly accepted framework might look like. As box 3 demonstrates (almost frighteningly), twenty-nine different terms are used. It is therefore not surprising to watch the eyes of health researchers dart around in confusion when one of these terms is marshaled as a reason for them to do even more with their limited time and research grant funds. Funding agencies need to think simultaneously about their conceptual framework and their operational definition of KT, so that what is and what is not considered to be KT is clear, and to adjust their funding opportunities and activities accordingly.

Funding agencies, through strategic funding opportunities and meetings of key stakeholders, are in a position to map out the KT terrain (to establish what is known and what needs to be known in KT research and practice) and to try to close the gap. Collaboration and sharing between agencies about KT policies and practices can only lead to a win-win situation. Our research team tried to start the ball rolling by mapping the range of KT activities and bringing together some of the key stakeholders on the topic. Using these data as a baseline, funding agencies can assess how, and to what extent, their KT activities have changed since we conducted the study and how they should change.

The agencies we surveyed exhibited considerable variation in their investment in KT and engagement in KT activities. No one agency stood out as being exemplary in the nature and extent of its KT efforts. When we began this investigation, we did not have an archetypal/perfect agency in mind, nor do we now that the data are in. In this case, it would appear that “best practice” is an elusive concept that depends on the size, context, mandate, financial considerations, and governance structure of the particular agency.

Acknowledgments

This study was funded through a CIHR Request for Applications on Strategies for Knowledge Translation in Health (FRN 52687). The authors would also like to thank Louise Zitzelsberger, Rémy Chatila, and Jean Slutsky for their help in completing the study. Finally, the authors would like to thank the participants for agreeing to be interviewed, for validating the agency summary sheets, and for contributing to the workshop. Ian Graham held a CIHR New Investigator's Award at the time the study was conducted, and he and Jacqueline Tetroe subsequently joined the CIHR after the manuscript was first reviewed. Martin Eccles is the William Leech Professor of Primary Care Research at the University of Newcastle upon Tyne; France Légaré is a New Clinical Scientist supported by the Fonds de recherche en santé du Québec; and Jeremy Grimshaw holds a Canada Research Chair in Health Knowledge Transfer and Uptake.

References

- Agency for Healthcare Research and Quality (AHRQ). Translating Research into Practice (TRIP)-II. Washington, D.C.: 2001.

- Beral V, Bull D, Reeves G. Endometrial Cancer and Hormone-Replacement Therapy in the Million Women Study. The Lancet. 2005;365:1543–50. doi: 10.1016/S0140-6736(05)66455-0.

- Canadian Health Services Research Foundation. Issues in Linkage and Exchange between Researchers and Decision Makers. 1999. Available at http://www.chsrf.ca/knowledge_transfer/pdf/linkage_e.pdf (accessed December 14, 2007).

- Crabtree B, Miller W. Doing Qualitative Research. Newbury Park, Calif.: Sage; 1992.

- Ferlie EB, Shortell SM. Improving the Quality of Health Care in the United Kingdom and the United States: A Framework for Change. The Milbank Quarterly. 2001;79:281–315. doi: 10.1111/1468-0009.00206.

- Graham ID, Grimshaw JM, Tetroe JM, Robinson NJ. KT Challenges for Researchers: How Are Canadian Health Researchers Promoting the Uptake of Their Findings. Ottawa: Ottawa Health Research Institute; 2005.

- Graham ID, Logan J, Harrison MB, Straus S, Tetroe JM, Caswell W, Robinson N. Lost in Knowledge Translation: Time for a Map? Journal of Continuing Education in the Health Professions. 2006;26:13–24. doi: 10.1002/chp.47.

- Graham ID, Tetroe JM, Grimshaw JM, Robinson NJT, Hebert P. If You Build It—They Will Come: Knowledge Translation Activities of Canadian Applied Health Researchers. Paper delivered at the Canadian Health Services Research Foundation Annual Workshop; 2005.

- Grilli R, Ramsay C, Minozzi S. Mass Media Interventions: Effects on Health Services Utilisation. Cochrane Database of Systematic Reviews. 1998;Issue 3. Article Number: CD000389. doi: 10.1002/14651858.CD000389.

- Grimshaw JM, Thomas RE, MacLennan G, Fraser C, Ramsay C, Vale L, Whitty P, Eccles MP, Matowe L, Shirran L, Wensing M, Dijkstra R, Donaldson C. Effectiveness and Efficiency of Guideline Dissemination and Implementation Strategies. Health Technology Assessment. 2004;8. doi: 10.3310/hta8060.

- Grimshaw JM, Ward J, Eccles M. Getting Research into Practice. In: Pencheon D, Gray JAM, Guest C, Melzer D, editors. The Oxford Handbook of Public Health Practice. Oxford: Oxford University Press; 2001. pp. 480–86.

- Heap SP, Parikh A. The Diffusion of Ideas in the Academy: A Quantitative Illustration from Economics. Research Policy. 2005;34:1619–32.

- Hersh AL, Stefanick ML, Stafford RS. National Use of Postmenopausal Hormone Therapy: Annual Trends and Response to Recent Evidence. Journal of the American Medical Association. 2004;291:47–53. doi: 10.1001/jama.291.1.47.

- Innvaer S, Vist G, Trommald M, Oxman A. Health Policy-Makers' Perceptions of Their Use of Evidence: A Systematic Review. Journal of Health Services Research and Policy. 2002;7:239–44. doi: 10.1258/135581902320432778.

- Kerner J, Rimer B, Emmons K. Dissemination Research and Research Dissemination: How Can We Close the Gap? Health Psychology. 2005;24:443–46. doi: 10.1037/0278-6133.24.5.443.

- Kuzel A. Sampling in Qualitative Inquiry. In: Crabtree B, Miller W, editors. Doing Qualitative Research. London: Sage; 1992. pp. 31–44.

- Lavis JN, McLeod CB, Gildiner A. Measuring the Impact of Health Research. Journal of Health Services Research and Policy. 2003;8:165–70. doi: 10.1258/135581903322029520.

- Lincoln YS, Guba EG. Naturalistic Inquiry. Newbury Park, Calif.: Sage; 1985.

- Lomas J. Diffusion, Dissemination and Implementation: Who Should Do What? Annals of the New York Academy of Sciences. 1993;703:226–35. doi: 10.1111/j.1749-6632.1993.tb26351.x.

- Lomas J. Improving Research Dissemination and Uptake in the Health Sector: Beyond the Sound of One Hand Clapping. Policy Commentary C97-1. Hamilton, Ont.: Centre for Health Economics and Policy Analysis, McMaster University; 1997. pp. 1–45.

- Lomas J. Using “Linkage and Exchange” to Move Research into Policy at a Canadian Foundation. Health Affairs. 2000;19:236–40. doi: 10.1377/hlthaff.19.3.236.

- Marshall C, Rossman G. Designing Qualitative Research. Newbury Park, Calif.: Sage; 1989.

- Maxwell J. Qualitative Research Design: An Interactive Approach. Thousand Oaks, Calif.: Sage; 1996.

- Morse J, Field P. Qualitative Research Methods for Health Professionals. 2nd ed. Thousand Oaks, Calif.: Sage; 1995.

- Petrella RJ, Speechley M, Kleinstiver PW, Ruddy T. Impact of a Social Marketing Media Campaign on Public Awareness of Hypertension. American Journal of Hypertension. 2005;18:270–75. doi: 10.1016/j.amjhyper.2004.09.012.

- Ramlogan R, Mina A, Tampubolon G, Metcalfe JS. Networks of Knowledge: The Distributed Nature of Medical Innovation. Discussion Paper 74. Swindon, England: ESRC Centre for Research on Innovation and Competition; 2005.

- Ravensbergen J, Lomas J. Creating a Culture of Research Implementation: ZonMw in the Netherlands. Global Forum Update on Research for Health. WHO Report; 2005. pp. 64–66.