Abstract

Objectives

To describe the development and preliminary outcomes of the System of Universal Clinical Competency Evaluation in the Sunshine State (SUCCESS) for preceptors to assess students' clinical performance in advanced pharmacy practice experiences (APPEs).

Design

An Internet-based APPE assessment tool was developed by faculty members from colleges of pharmacy in Florida and implemented.

Assessment

Numeric scores and grades derived from the SUCCESS algorithm were similar to preceptors' comparison grades. The average SUCCESS grade point average was 0.02 grade points higher than the average of the preceptors' comparison grades.

Conclusions

The SUCCESS program met its goals, including establishing a common set of forms, standardized assessment criteria, an objective document that is accessible on the Internet, and standardized grading, and reducing pressure on preceptors from students concerning their grades.

Keywords: assessment, APPE, competency, Internet

INTRODUCTION

Schools of medicine, nursing, and pharmacy assess student performance not only with didactic assessments, such as paper-and-pencil tests, but also with performance appraisals completed during and after experiential courses. However, developing performance appraisals for health professions students is difficult and, in some cases, schools have failed to assess students' skill performance in experiential courses.1-6 A study of clinical assessment methods used in US medical schools found that students' grades were based on faculty ratings in 50%-70% of schools and on standardized patient test measurements or objective structured clinical examinations (OSCEs) in only about 25% of schools. Moreover, performance appraisals that use patient care simulations do not always document students' abilities accurately, and they can fall short because they do not present students with the same challenges they would encounter in actual patient care. Unsatisfactory student performance during clinical practice experiences may remain undetected or unreported because of lack of documentation, uncertainty about what specifically to document, the preceptor's anticipation of an uncomfortable personal appeal by the student, lack of remediation options,2 or lack of comprehensive or effective measures for assessing clinical competency.1

Similar concerns about assessment of student performance were expressed by the directors of experiential education at 3 Florida colleges of pharmacy. Each of the 3 colleges used a different tool for assessing students' clinical competencies, which was burdensome and confusing for the preceptors. In response, some preceptors developed and used their own forms instead of the colleges' forms. Preceptors participating in more than 1 college's experiential program requested that a standardized performance measure be developed for use in grading all pharmacy students in the state. A number of circumstances made development of a single assessment tool a realistic goal; the most significant was that the 3 colleges drew preceptors from the same pool of Florida pharmacists. In response to the preceptors' request, faculty from the state's 3 colleges of pharmacy collaborated to develop a standardized assessment tool: the System of Universal Clinical Competency Evaluation in the Sunshine State (SUCCESS).

METHODS

In 2000 the University of Florida, Nova Southeastern University, and Florida A&M University faculty members agreed to develop a standardized clinical competency performance measure. Six faculty members from the 3 colleges of pharmacy in Florida at the time attended an American Association of Colleges of Pharmacy (AACP) Curriculum Institute on assessment. The group attended the Institute for the purpose of creating a performance-based assessment tool for clinical competence standards. (Two faculty members from Palm Beach Atlantic joined the working group after that College was established in 2001.)

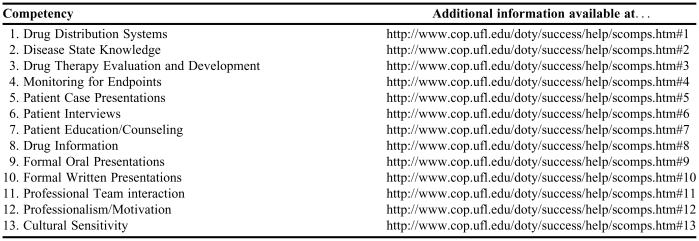

The evaluation tools used by each college were reviewed to identify similarities in existing competency statements. Fortunately, the framework for all 3 tools was based on the Center for Advancement of Pharmaceutical Education (CAPE) competencies.7 CAPE outcome statements were adapted to reflect a subset of the skills needed by a newly licensed practicing pharmacist. Similar statements were compared and a consensus was reached on 13 broad categories of clinical competencies (Table 1), and then these statements were refined over the next 3 years.

Table 1.

Competency Areas Evaluated in the System of Universal Clinical Competency Evaluation in the Sunshine State (SUCCESS) Assessment Tool

The “Help” page for the SUCCESS program is located at: http://www.cop.ufl.edu/doty/success/help/

Each member of the development team wrote statements that described skills needed to execute a competency. Weekly conference calls were conducted for more than a year. Consensus was reached regarding a baseline definition of achievement and each faculty member produced definitions for each statement of competency. The net result was a set of behavior-based rubrics with definitions for performance aligned with the specific competencies to help preceptors assess and assign a value to their students' performance: deficient, competent, or excellent. In general, a competency rating of “excellent” indicated that a student performed the requisite skills independently on a regular basis at a level above the average newly licensed pharmacist. A rating of “competent” indicated that a student was generally able to perform the skills at a level similar to a newly licensed pharmacist, but occasionally needed some assistance from the faculty member in irregular or difficult situations. Finally, a rating of “deficient” indicated a student was unable to consistently perform the required tasks unassisted at the level required of a newly licensed pharmacist or had made serious errors when attempting to complete the tasks. Once similar competency statements were combined, the original 200 items were reduced to the current 96 competency sub-statements within the 13 global competency statements. The following is an example of a competency sub-statement rubric from the “Drug Therapy Evaluation and Development” competency.

Design and evaluate treatment regimens for optimal outcomes using pharmacokinetic data and drug formulation data.

Excellent = Independently designs and evaluates most if not all treatment regimens for optimal outcomes using pharmacokinetic data and drug formulation data.

Competent = Designs and evaluates the most critical treatment regimens for optimal outcomes using pharmacokinetic data and drug formulation data. Requires preceptor's assistance for a more detailed evaluation.

Deficient = Even with preceptor's guidance, the student is not able to design or evaluate regimens for optimal outcomes using pharmacokinetic data and drug formulation data. Preceptor intervention required to prevent errors.

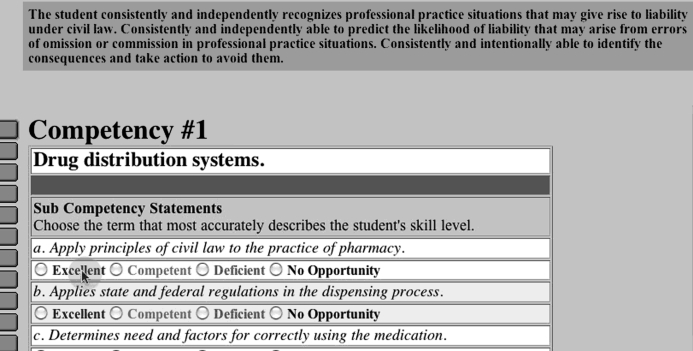

A weight was assigned to individual competency sub-statements and “excellent,” “competent,” and “deficient” performance ratings were defined (Figure 1). Each competency sub-statement was assigned a weight so that the sum of the weights of the competency sub-statements within each of the 13 competency areas was 1. In addition, each statement was reviewed to determine whether it was “critical” to successful completion of the competency. A skill was defined as critical if a student's deficiency might endanger a patient or harm the preceptor's relationships within the practice. If a student's performance was evaluated as deficient in one of these critical competencies, he or she would receive the “deficient” score for all the competency sub-statements within that global competency area. The rationale for this decision was that if a student was rated as deficient in a critical skill, it would not matter whether he or she excelled at the other competency sub-statements; the outcome could still be harmful or damaging. These events should be rare, and this strategy was designed to immediately get the attention of the student, preceptor, and college.
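The sub-statement weighting and the critical-skill rule described above can be sketched as follows. This is a minimal illustration, not the SUCCESS implementation: the percentage values in `RATING_VALUE`, the `SubStatement` class, and the `area_score` function are hypothetical, and the actual rating percentages are blinded and not reported here.

```python
from dataclasses import dataclass

# Hypothetical percentages for each rating; the real SUCCESS values are blinded.
RATING_VALUE = {"excellent": 100.0, "competent": 85.0, "deficient": 50.0}


@dataclass
class SubStatement:
    weight: float   # share of the competency area; weights in an area sum to 1
    critical: bool  # True if a deficiency could endanger a patient or the practice
    rating: str     # "excellent", "competent", or "deficient"


def area_score(items):
    """Return the weighted percentage score for one competency area."""
    total_weight = sum(item.weight for item in items)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError("sub-statement weights must sum to 1 within an area")
    # Critical rule: a deficient rating on any critical sub-statement makes
    # every sub-statement in the area count as deficient.
    if any(item.critical and item.rating == "deficient" for item in items):
        return RATING_VALUE["deficient"]
    return sum(item.weight * RATING_VALUE[item.rating] for item in items)


# Two equally weighted sub-statements, one of them critical.
passing = [SubStatement(0.5, True, "excellent"), SubStatement(0.5, False, "competent")]
failing = [SubStatement(0.5, True, "deficient"), SubStatement(0.5, False, "excellent")]
print(area_score(passing), area_score(failing))
```

In the second case the excellent rating on the non-critical sub-statement has no effect, which mirrors the stated rationale that excelling elsewhere does not offset a deficiency in a critical skill.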

Figure 1.

Example of SUCCESS competency and competency sub-statements with accompanying rubric. Features of the SUCCESS program shown in this figure include the layout of the Internet screen, the number and title of the current competency (Competency #1), the frame showing the competency sub-statements, titles of the competency sub-statements (eg, apply the principles of civil law to the practice of pharmacy), the 3 categories of performance, and “excellent” performance definition contained in the rubric for competency sub-statement “a” of Competency #1. The rubric definitions are revealed by passing the mouse pointer over the sub-competency performance categories.

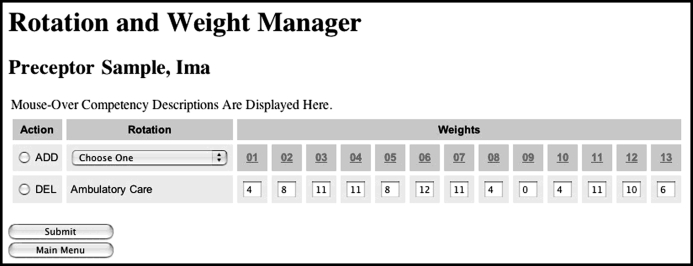

Next, a system was devised for calculating SUCCESS grades. Each of the 3 ratings (excellent, competent, and deficient) was assigned a standard percentage. The preceptor rates each student's performance on a particular competency sub-statement as excellent, competent, or deficient, choosing the definition that best reflects his or her observation and assessment of the student's performance. The grading process also requires preceptor- and rotation-specific weights. Each advanced practice experience is unique, even within the same specialty or general area (eg, psychiatry, community pharmacy), so a mechanism to reflect that distinctiveness was built into the system as the final step of the scoring algorithm. Individual faculty preceptors were asked to assign weights to the overall competencies to reflect the actual practice activities of their individual advanced practice course (Figure 2). For example, if a competency was not required, the preceptor assigned it a weight of “zero” so the student would not be penalized for not completing it. Once the competency sub-statements had been evaluated for all 13 competency areas and the preceptor had assigned the weights for the specific APPE, a standardized weighting algorithm was applied and the numeric score was calculated. (Note: readers who intend to implement a similar assessment tool may contact Dr. Randell Doty [Doty@cop.ufl.edu] at the University of Florida for additional information about the algorithm and its calculation.) Once the grades were entered into SUCCESS, faculty and staff members at the individual colleges could access information according to their individually assigned “rights” via a secure web report or downloadable file.

An alpha test of the assessment tool was conducted. Select faculty members and preceptors used it for 1 semester and provided feedback. Faculty at all 4 colleges and approximately 20 preceptors suggested changes to the assessment tool. Numerous recommendations concerning the drug information competency were incorporated.

Figure 2.

Preceptor web page for individual rotation competency weighting. This figure illustrates the Rotation Weight Manager feature of the SUCCESS program. This feature allows individual preceptors to have the SUCCESS program calculate the numeric score based on the activities that the preceptor thinks (1) most frequently occur and (2) are most important in his or her advanced practice experience rotation. In this example, 11% of the grade is based on the student's performance on Competency #3 (ie, Drug Therapy Evaluation and Development) and 0% is based on the student's performance on Competency #9 (ie, Formal Oral Presentations). The linear combination of these weights is used together with the preceptor's assessments/evaluations for each competency sub-statement to derive a numeric score.
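The rotation-specific weighting can be illustrated with a short sketch. It assumes that each competency area already has a percentage score and that the preceptor's weights are normalized by their total; the function name `rotation_score` and the sample numbers are hypothetical, and the published report does not give the exact form of the SUCCESS calculation.

```python
def rotation_score(area_scores, rotation_weights):
    """Combine competency-area scores with preceptor-assigned rotation weights.

    area_scores: dict mapping competency number to a percentage score.
    rotation_weights: dict mapping competency number to the preceptor's weight;
        a weight of zero means the competency is not assessed in this rotation.
    """
    total = sum(rotation_weights.values())
    if total <= 0:
        raise ValueError("at least one competency must carry weight")
    # Zero-weight competencies are skipped, so a student is not penalized
    # for a competency that the rotation does not require.
    weighted = sum(
        weight * area_scores[competency]
        for competency, weight in rotation_weights.items()
        if weight > 0
    )
    # Dividing by the total keeps the result on the percentage scale even if
    # the weights were entered as percentages rather than fractions.
    return weighted / total


# Hypothetical rotation that weights Competency 3 and Competency 1 and
# assigns zero weight to Competency 9 (Formal Oral Presentations).
scores = {1: 80.0, 3: 90.0}
weights = {1: 0.75, 3: 0.25, 9: 0.0}
print(rotation_score(scores, weights))
```

Because Competency 9 carries zero weight, no score needs to be supplied for it, and its absence does not lower the result.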

Three important features were built into the design of the system to reflect important concerns of the colleges and their preceptor faculty members. First, preceptors expressed concerns about students' relative abilities on individual competencies over time in the advanced practice experiences. In response, a feature was built into the SUCCESS scoring algorithm to take into account where the student was in his or her APPE sequence (eg, first APPE versus last APPE). In effect, preceptors wanted inexperienced students to be “given a break” during their first advanced practice courses, and they had consciously or unconsciously considered this factor when assigning grades in the past. However, assessments are intended to be standardized and to reflect students' actual performance in comparison with the ability-based outcome performance levels expected of entry-level practitioners as defined in the competency statements. Consequently, the development team wanted preceptors to evaluate students based on the performance definitions without taking into account the student's length of time in the advanced practice experience sequence. To that end, we developed an algorithm that modified the final numeric score based on the number of advanced practice experiences the student had completed. This strategy explicitly recognized that a student could be rated as deficient in a skill (even a critical one) but should still pass the course if it was completed early in the student's series of advanced pharmacy practice experiences. Some pharmacy practice skills are so complex that it would be unreasonable to expect a student to be proficient in an important skill after only 1 or 2 practice opportunities. Students and preceptors were informed that a rating of deficient during earlier rotations would not necessarily result in a failing mark. We encouraged the preceptors and students to view a deficient rating as an opportunity for improvement so that students could reach their goal of entry-level competency during future advanced practice experiences.

Because the SUCCESS software was not integrated with the individual colleges' advanced practice scheduling software, we were unable to designate the APPE (eg, first, third, last) for which a score was given. Instead, adjustments had to be applied after the calculated numeric scores were received at each student's college. The system was designed to allow adjustment of students' grades for the first 4 APPEs; for the fifth APPE and beyond, the SUCCESS-generated numeric scores were used without adjustment. Only numeric scores rather than grades were delivered to individual colleges because each college had its own grading scale.
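A college-side adjustment of this kind might look like the sketch below. The report states only that scores for the first 4 APPEs are adjusted and later ones are not; the additive form of the adjustment, the point values in `ADJUSTMENT_BY_POSITION`, and the cap at 100 are all assumptions made for illustration.

```python
# Hypothetical adjustments, in percentage points, keyed by the position of the
# APPE in the student's sequence. Only the cutoff after the fourth APPE comes
# from the report; the values themselves are invented for this sketch.
ADJUSTMENT_BY_POSITION = {1: 8.0, 2: 6.0, 3: 4.0, 4: 2.0}


def adjusted_score(raw_score, appe_position):
    """Apply the experience adjustment to a SUCCESS-generated numeric score."""
    if appe_position < 1:
        raise ValueError("APPE position starts at 1")
    # The fifth APPE and beyond receive no adjustment.
    bonus = ADJUSTMENT_BY_POSITION.get(appe_position, 0.0)
    # Assumed cap so that an adjusted score never exceeds 100%.
    return min(100.0, raw_score + bonus)


for position in (1, 4, 5):
    print(position, adjusted_score(70.0, position))
```

Keeping the adjustment outside the preceptor's rating step matches the intent described above: preceptors rate against the fixed rubric definitions, and the student's experience level is accounted for afterward.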

The second feature, added in response to feedback obtained from the alpha testing, was the blinding of the preceptor to the student's final SUCCESS-generated numeric score. The principal reasons for blinding the preceptors were that (1) SUCCESS was to be shared by preceptors of multiple colleges, and the security and confidentiality of each college's scoring needed to be maintained; (2) it was sometimes difficult for preceptors to be objective when assessing a student, especially if pressured by the student for a specific score; and (3) without blinding, preceptors might have been tempted to change their entries to support a grade they wanted or were pressured to give.

Finally, the addition of the preceptor blinding and experience adjustment features required a quality assurance step to ensure that the SUCCESS tool was producing the intended grades. This step was deemed necessary because of the complex nature of the scoring algorithm, which is based on (1) the preceptor's rotation-specific weighting, (2) the preceptor's assessment of the student's performance, (3) the blinded grade values of “excellent,” “competent,” and “deficient,” and (4) the blinded internal weighting of the competency sub-statements.

A beta test of the SUCCESS program was conducted by the University of Florida (UF) in the spring of 2005 and during the 2005-2006 academic year before the system was implemented in the other participating colleges (Palm Beach Atlantic and Nova Southeastern Universities). The quality assurance step was implemented by comparing a preceptor-generated grade with the SUCCESS-generated grade. At the end of the student evaluation, preceptors were asked to assign numeric values and letter grades based on how they would have graded students using their previous tool. The preceptors' numeric scores and letter grades were compared to the SUCCESS-generated scores and grades.

The SUCCESS software and evaluation results were stored on the UF network. Legally binding confidentiality agreements were established with Nova Southeastern and Palm Beach Atlantic Universities. An online tutorial was developed for students and preceptors. A frequently asked questions (FAQ) utility was also created. Additional help with specific issues was available via telephone or e-mail. The protocol for the beta-test and quality assurance of this educational assessment tool was reviewed and approved by the University of Florida Institutional Review Board.

ASSESSMENT

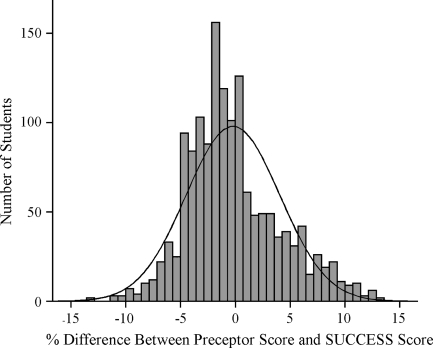

The numeric results presented herein are the outcomes of the UF beta test. Over 1400 evaluations of individual UF student performance were entered into the SUCCESS program during the first-year trial. Using the SUCCESS tool, approximately 10% fewer students earned an A letter grade. Most of the students not receiving an A were assigned a B+ by the SUCCESS algorithm. Similarly, fewer students received a B grade and more students were assigned a C+ grade. More importantly, the same students who failed the advanced practice experience according to the preceptors' reports also failed based on calculations using the SUCCESS algorithm. Overall, grades derived from the SUCCESS algorithm were similar to preceptors' comparison grades. The average SUCCESS grade was higher than the average preceptor-assigned grade by only 0.02 grade points, which was not a significant difference. When SUCCESS-generated percentage scores were compared with preceptor-assigned scores, 58.9% (n=866) of the SUCCESS scores were higher (range: 0 to 13.4), 38.2% (n=561) were lower (range: 0 to -13.5), and 2.9% (n=43) were the same (Figure 3).
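The quality assurance comparison amounts to tallying, for each evaluation, whether the SUCCESS-generated score was higher than, lower than, or the same as the preceptor-assigned score. The sketch below shows one way to do this; the function names `compare_scores` and `shares` are hypothetical. Applied to the counts reported above, it also confirms that the 3 groups total 1470 evaluations and reproduce the reported percentages.

```python
from collections import Counter


def compare_scores(pairs):
    """Tally paired (success_score, preceptor_score) comparisons."""
    counts = Counter()
    for success_score, preceptor_score in pairs:
        difference = success_score - preceptor_score
        if difference > 0:
            counts["higher"] += 1
        elif difference < 0:
            counts["lower"] += 1
        else:
            counts["same"] += 1
    return counts


def shares(counts):
    """Convert tallies to percentages of all comparisons, rounded to 1 decimal."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Counts reported for the UF beta test.
reported = Counter(higher=866, lower=561, same=43)
print(sum(reported.values()), shares(reported))
```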

Figure 3.

Difference in number of points between preceptor-assigned grades and grades assigned by the SUCCESS algorithm. A positive score indicates that the preceptor's score was higher than the SUCCESS algorithm-generated score. A negative score indicates that the SUCCESS algorithm-generated score was higher.

The UF College of Pharmacy started its distance education program in fall 2002. The SUCCESS program was designated to be a key component of the program's overall curriculum assessment plan. Early on, student academic performance and educational environment issues associated with delivery of the didactic portion of the pharmacy curriculum at a distance were assessed.8,9 However, UF faculty members were also interested in the practice experience outcomes of students assigned to the Gainesville campus compared with outcomes of students assigned to the newly established Jacksonville, Orlando, and St. Petersburg/Seminole campuses. The average percentage score for the practice experiences was highest among students assigned to the Gainesville campus (93.0%). The average percentage scores of students assigned to the St. Petersburg campus were the lowest (90.5%), with the Jacksonville (92.4%) and Orlando (92.6%) campuses' scores in between. This compares favorably to the students' grade point average over the 3 years of didactic work. In each case the average student score for the advanced pharmacy practice experiences was in excess of 90%. Equally important, among the 200 UF students taking the North American Pharmacist Licensure Examination (NAPLEX) for the first time in 2006, the passing rate was 90% or higher for all 4 campuses and the mean scaled scores ranged from 105.2 to 115.6. We were unable to correlate individual graduates' NAPLEX scores with the SUCCESS numeric scores and, unfortunately, will be unable to do so until the National Association of Boards of Pharmacy (NABP) changes its policy to make individual scores available for assessment and accreditation purposes. However, the SUCCESS scores seem to have “face” validity as a performance assessment tool using the NAPLEX as the gold standard.

DISCUSSION

Anecdotal reports from preceptors indicate that the SUCCESS assessment tool has been widely accepted by faculty members and preceptors at 3 of Florida's colleges of pharmacy. Some delays have occurred in achieving 100% participation because of individual preceptors' perceptions or misperceptions of barriers. Some preceptors hesitate to use SUCCESS because they have difficulty accessing web sites from their workplace computers. Others were concerned that a scoring algorithm based on only 3 ratings (excellent, competent, and deficient) cannot adequately distinguish good performers from poor performers. Anecdotal reports lead us to believe that this hesitation most likely stems from the misperception that the 3 performance ratings represent a Likert performance assessment scale. However, the SUCCESS scoring algorithm does not represent a Likert scale. The 3 ratings are neither weighted equally in value nor assumed to be equidistant. These value-laden terms should never be used individually to define the student's performance; unlabeled letters of the alphabet could be used equally well. The designations of excellent, competent, and deficient performance are classification categories intended to represent students' performance based on the rubric definitions, not on the connotations of the adjectives. Our preliminary data suggest that the SUCCESS tool effectively distinguishes good performers from poor performers. Even though there are only 3 performance-assessment ratings, there are 96 competency sub-statements, providing 288 performance levels (96 × 3). The variability designed into the SUCCESS algorithm is sufficient to allow for adequate differentiation in students' performance because each competency is also weighted by the preceptor to reflect his or her advanced practice experience.

Some preceptors felt that being blinded to their students' final scores conflicted with their academic rights to assign numeric or letter grades. An analogous situation in a didactic course would be to blind the grader to students' names on papers while grading them. Unfortunately, that kind of blinding as a safeguard against bias is impossible in an experiential setting where the preceptor and student must work closely together. Anecdotal evidence suggests that, after becoming accustomed to SUCCESS, most preceptors prefer being blinded to the students' final grade because they no longer sense pressure from students to award higher grades than the students deserve.

As is the case in most new or unfamiliar circumstances, some preceptors initially perceived the impact on their time as a barrier to fully using SUCCESS. Since then, however, preceptors have anecdotally reported that their time commitment decreased once they became comfortable with SUCCESS. Because SUCCESS is available on the Internet 24/7, it provides a flexible and convenient method for submitting student evaluations that may not have been possible before the system was developed.

Some of the preceptors have adapted the tool for use in completing their midpoint evaluations. When the final grade is due, the preceptor needs to address only those competency ratings that have changed since the student's midpoint evaluation. In other cases, preceptors have required their students to take a test at the end of a course as a part of the APPE evaluation. SUCCESS has neither a dedicated location in the system or on the screen for noting an examination score nor the ability to incorporate the score into the final numeric score. Initially some faculty members and preceptors resisted using SUCCESS because a “test of knowledge” score was not explicitly included as part of the assessment tool. This issue was addressed by explaining to the preceptors that several of the competency sub-statements ask them to explicitly evaluate the adequacy of students' knowledge base and its application to clinical situations. Preceptors are encouraged to continue using their tests and to incorporate the students' performance into their ratings on knowledge-based competencies, especially since examination scores provide another objective measure for them to use in deciding whether the student is performing at an excellent, competent, or deficient level. Even so, some preceptors still want a mechanism for reporting examination scores. The SUCCESS development group has opted not to incorporate this feature because it would open the door to purposefully defeating the weighted SUCCESS scoring algorithm.

Finally, the goal of a single statewide assessment tool has not been fully realized because of administrative reasons. One college involved in the initial development of SUCCESS is working toward a resolution of administrative issues and plans to participate. In addition, the newest college of pharmacy in the state has already committed to using the SUCCESS program.

The SUCCESS working group has several goals for future improvements, including (1) development of competencies for non-direct patient care activities (eg, during administrative rotations), (2) mapping of the competencies back to the individual colleges' curricula, (3) creation of an individualized “prescription for SUCCESS” for students based on preceptors' assessments, and (4) development of an introductory practice experience component.

The current 13 competencies address some non-direct patient care skills (eg, competencies 9, 10, 12, and 13). Focused feedback will be elicited from faculty members and preceptors who provide non-direct patient care advanced practice experiences to determine whether additional competencies are necessary. Once the needs assessment is complete, additional competency statements and skill definitions will be developed to enhance assessment of students' performance in these non-direct patient care skills.

It will be important for each college to map the SUCCESS competencies to specific courses in its curriculum. Curriculum and assessment committees will be able to use the outcome data to make curricular recommendations based on students' performance on the clinical competencies. These data will provide the colleges' curriculum committees with evidence for deciding whether to include more or less didactic coursework, whether to place advanced skills-building courses earlier or later in the curriculum, and whether the current curriculum is keeping up with professional development. SUCCESS is on the verge of providing the UF College of Pharmacy assessment committee with evidence regarding whether core information and skills are being successfully learned.

One of the first program enhancements in the future is likely to be a module for preparation of an educational “prescription for SUCCESS.” Individual students will receive automated feedback from their previous preceptor based on their performance. Additionally, the student's next preceptor will be sent an automated message specifically asking him or her to prepare additional experiences for the student to practice and be assessed. The prescription for SUCCESS will specify skills for which the student will need extra time, oversight, and feedback. Reports will be generated and forwarded to individual students in the form of percentiles and/or graphic illustrations so that they will be able to compare their performance with that of their peers in similar APPEs.

Other program enhancements also are being developed for individual faculty members to compare the performance of students within the same college (as reported here) and among other participating colleges for benchmarking purposes.

While we are pleased with our progress to this point, more work needs to be done to formally assess the psychometric properties of the competency statements and develop standardized training programs for preceptors to ensure that student assessments and evaluations are valid and comparable statewide, nationally, and internationally.

CONCLUSION

The implementation of an online performance measurement tool designed to be used by multiple colleges across a state is meeting with acceptance and success. One hundred percent of the preceptors at the University of Florida and Palm Beach Atlantic use SUCCESS, and more than 90% of the Nova Southeastern preceptors do so as well. The SUCCESS program has met its goals, including creating a common set of forms, standardized assessment criteria, and an objective document that is accessible on the Internet, as well as standardizing grading and reducing students' pressure on preceptors for higher grades. We will continue to assess the utility of SUCCESS by comparing the scores of other Florida colleges using methods similar to those used at UF. In the future we would be amenable to expanding use of the SUCCESS tool to other colleges, nationally and internationally, for student advanced practice experience assessment and benchmarking purposes.

ACKNOWLEDGEMENTS

We gratefully acknowledge the support of the College of Pharmacy, University of Florida, and Dean William H. Riffee. We are deeply grateful to Ned Phillips who helped with all of the programming. We are also grateful to Warren Richards (formerly of Palm Beach Atlantic University) and Doug Covey (UF - Working Professional PharmD Program), who attended the AACP Institute where the prototype was developed and/or assisted in the development over the past 2 years.

REFERENCES

- 1. Dolan G. Assessing student nurse clinical competency: will we ever get it right? J Clin Nurs. 2003;12:132–41. doi: 10.1046/j.1365-2702.2003.00665.x.

- 2. Dudek NL, Marks MB, Regehr G. Failure to fail: the perspectives of clinical supervisors. Acad Med. 2005;80:S84–7. doi: 10.1097/00001888-200510001-00023.

- 3. Hemmer PA, Hawkins R, Jackson JL, Pangaro LN. Assessing how well three evaluation methods detect deficiencies in medical students' professionalism in two settings of an internal medicine clerkship. Acad Med. 2000;75:167–73. doi: 10.1097/00001888-200002000-00016.

- 4. Kassebaum DG, Eaglen RH. Shortcomings in the evaluation of students' clinical skills and behaviors in medical school. Acad Med. 1999;74:842–9. doi: 10.1097/00001888-199907000-00020.

- 5. Mahara MS. A perspective on clinical evaluation in nursing education. J Adv Nurs. 1998;28:1348. doi: 10.1046/j.1365-2648.1998.00837.x.

- 6. McGaughey J. Standardizing the assessment of clinical competence: an overview of intensive care course design. Nurs Crit Care. 2004;9:238–46. doi: 10.1111/j.1362-1017.2004.00082.x.

- 7. American Association of Colleges of Pharmacy. Center for the Advancement of Pharmaceutical Education: Educational Outcomes-2004. http://www.aacp.org/Docs/MainNavigation/Resources/6075_CAPE2004.pdf. Accessed: May 30, 2007.

- 8. Ried LD, McKenzie M. A preliminary report on the academic performance of pharmacy students in a distance education program. Am J Pharm Educ. 2004;68(3):Article 65.

- 9. Ried LD, Motycka C, Mobley C, Meldrum M. Comparing burnout of student pharmacists at the founding campus with student pharmacists at a distance. Am J Pharm Educ. 2006;70(5):Article 114. doi: 10.5688/aj7005114.