Abstract

Objectives

To assess bachelor of pharmacy students' overall perception and acceptance of an objective structured clinical examination (OSCE), a new method of clinical competence assessment in the undergraduate pharmacy curriculum at our Faculty, and to explore its strengths and weaknesses through student feedback.

Methods

A cross-sectional survey was conducted using a validated 49-item questionnaire, administered immediately after all students completed the examination. The questionnaire comprised questions evaluating the content and structure of the examination, perceptions of OSCE validity and reliability, and rating of the OSCE in relation to other assessment methods. Open-ended follow-up questions were included to generate qualitative data.

Results

Over 80% of the students found the OSCE helpful in highlighting areas of weakness in their clinical competencies. Seventy-eight percent agreed that it was comprehensive and 66% believed it was fair. About 46% felt that the 15 minutes allocated per station was inadequate. Most importantly, about half of the students raised concerns that personality, ethnicity, and/or gender, as well as interpatient and interassessor variability, were potential sources of bias that could affect their scores. However, an overwhelming proportion of the students (90%) agreed that the OSCE provided a useful and practical learning experience.

Conclusions

Students' perceptions and acceptance of the new method of assessment were positive. The survey also highlighted strengths and weaknesses in the development and implementation of an OSCE in the International Islamic University Malaysia's pharmacy curriculum that can guide future refinement.

Keywords: clinical competence, objective structured clinical examination (OSCE), bachelor of pharmacy degree, assessment

INTRODUCTION

Pharmacy practice has undergone a paradigm shift: its maturation into a patient-centered practice has necessitated a radical transformation of pharmaceutical education and curricula. The assessment of clinical competence is fundamental to ensuring that graduate pharmacists are able to exercise their duties in patient care.1 Novel performance-based assessment techniques are becoming increasingly popular and accepted in the health professions in response to recommendations to improve the validity and fairness of examinations. The quest for improved assessment techniques has gained greater urgency as undergraduate curricula place more emphasis on competency-based and problem-based instruction and assessment.2 The Objective Structured Clinical Examination (OSCE), one form of performance-based assessment, has become the gold standard worldwide for evaluating the clinical competency of medical and pharmacy undergraduate students.1,3-5 Indeed, the OSCE has been rated as the most reliable and valid tool for assessing clinical competency.6-8

The Kulliyyah (ie, the Faculty) of Pharmacy at the International Islamic University Malaysia (IIUM) took a pioneering role in the 2005-2006 academic session by applying and evaluating the effectiveness of this assessment instrument among fourth-year students in the final examination in clinical pharmacy. This method was introduced to our undergraduate program to improve the quality of our evaluation techniques, to keep pace with an ever-changing curriculum, and to keep abreast of current instructional technologies in pharmaceutical education. A 7-station OSCE (5 active and 2 rest stations) was designed and implemented to cover areas pertinent to contemporary pharmacy practice and to the Clinical Pharmacy III course objectives and learning outcomes.

Although a well-constructed and well-implemented OSCE is a valid and reliable method of evaluating clinical competence,4,7-9 the literature also shows that it is not without limitations.10 Furthermore, our hybrid OSCE had some distinctive elements that needed to be studied. Thus, with the aim of developing a more robust, feasible, reliable, and valid examination in the future, we conducted a survey to assess students' perception and acceptance of the OSCE and to explore the strengths and weaknesses of the newly introduced tool. Here we describe and discuss the outcomes of the assessment.

DESIGN

The Faculty of Pharmacy at the IIUM successfully developed, validated, and applied an OSCE in the Clinical Pharmacy III course during the 2005-2006 academic session. The competencies tested in the OSCE included patient counseling and communication on the long-term administration of anticoagulants, insulin delivery devices, inhaler devices, and smoking cessation; identification and resolution of drug-related problems (DRPs) using an evidence-based approach in a patient with cardiovascular diseases; and literature evaluation/drug information provision to other healthcare professionals. A summary of the objectives and contents of each station is provided in Appendix 1.

The mechanics of designing and implementing the OSCE included: development of a blueprint that served as a guideline; development and face validation of the 7 stations in accordance with the blueprint; design of performance criteria/assessment instruments in the form of structured marking schemes for individual stations; feasibility testing and rehearsals at the OSCE stations; and administration of the final examination. The blueprint for designing and running the OSCE was developed by a team of our clinical faculty members and clearly spelled out the proposed workstations and their development. The contents of the 5 active stations were determined by carefully defining specific practice competencies that covered areas pertinent to contemporary pharmacy practice and were in line with the Clinical Pharmacy III course objectives and learning outcomes. Course instructors verified the authenticity of the cases outlined in the blueprint based on their teaching and clinical experience, and the scenarios were then refined for final station development. Structured marking schemes and instructions to candidates and simulated patients/actors were developed based on the tasks assigned at individual stations. The contents of each station and its assessment tools were then face-validated through review and consensus by the departmental Chair (a clinical pharmacist and associate professor), the course coordinator (a clinical pharmacist and lecturer), and one external reviewer (a physician and professor of clinical pharmacology). All 3 had extensive experience with OSCEs as run in pharmacy and medical colleges. All participants (real and simulated patients and physician actors) were trained beforehand to ensure the consistency of their responses. Feasibility tests were done to ensure that conducting the OSCE was logistically possible.

The 42 students who registered for Clinical Pharmacy III during the 2005-2006 academic session and were eligible to take the examination were randomly assigned to 1 of 2 groups of 21 students. The examination was run in 2 concurrent sessions, 1 for each group, at 2 venues: the Pharmacy Practice Department (examination venue A) and the Clinical Skills Laboratory (examination venue B). Each session comprised 7 stations (including 2 rest stations). Students were given 15 minutes at each station and were assessed by faculty members using the structured, standardized marking scheme. Because this was a pilot study, standard setting procedures such as the borderline or Angoff methods, or their modifications, were not used for validation and grading. Moreover, because the OSCE component counted for 20% of the total grade in the Clinical Pharmacy III course, a holistic approach was used to grade students' overall summative performance in the course. Since the OSCE was a pioneering experience at our Faculty, we conducted a cross-sectional survey among the examinees to identify areas that might need improvement before implementing similar examinations in the future.
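The text does not describe how candidates moved between stations. A common OSCE arrangement, assumed here and not confirmed by the source, is a round-robin circuit with one candidate per station, every candidate advancing to the next station when each 15-minute slot ends. The Python sketch below generates such a schedule; the function name `rotation_schedule` and the back-to-back running of successive circuits are illustrative assumptions.

```python
def rotation_schedule(n_students, n_stations=7, minutes_per_station=15):
    """Round-robin OSCE schedule (hypothetical; not the authors' documented procedure).

    Assumes one candidate per station: candidates are grouped into circuits of at
    most n_stations, and each circuit starts when the previous one has finished.
    Returns a list of (circuit, start_minute, student, station) tuples, 1-based.
    """
    schedule = []
    circuit_minutes = n_stations * minutes_per_station
    for first in range(0, n_students, n_stations):
        circuit = first // n_stations
        members = range(first, min(first + n_stations, n_students))
        for slot in range(n_stations):
            start = circuit * circuit_minutes + slot * minutes_per_station
            for offset, student in enumerate(members):
                # Each candidate starts at a different station and advances by one per slot.
                station = (offset + slot) % n_stations + 1
                schedule.append((circuit + 1, start, student + 1, station))
    return schedule


if __name__ == "__main__":
    plan = rotation_schedule(21)  # one group of 21 students, 7 stations, 15 min each
    n_circuits = max(row[0] for row in plan)
    end_minute = max(row[1] for row in plan) + 15
    print(f"{n_circuits} circuits, last slot ends at minute {end_minute}")
```

Under these assumptions, a group of 21 students needs 3 circuits of 7, or 315 minutes of station time per venue, and each student visits every station, including the 2 rest stations, exactly once. A different rotation or duplicated stations would change these figures.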

A 49-item questionnaire with various domains, modified from the instrument used by Pierre et al,4 was designed, validated, and administered immediately after all students completed the examination. Face validation of the questionnaire was done by experienced faculty members and educators, and a consensus was established. The questionnaire covered demographic data of the respondents and included questions evaluating the nature, organization, content, and structure of the examination; the quality of performance testing; perceptions of OSCE validity and reliability; and rating of the OSCE in relation to other assessment methods. A 5-point Likert-type scale indicating degree of agreement was used for most dimensions of the questionnaire, whereas a 3-point scale was used for rating the OSCE in relation to other assessment formats. Open-ended follow-up questions were included to generate qualitative data. (The survey instrument is available from the authors on request.) Students were asked to complete the questionnaire voluntarily immediately after finishing the OSCE, before leaving the examination venue. They were assured that the information they provided would remain confidential, that their identity would not be disclosed, and that they would not be penalized if they chose not to participate.

Quantitative data were analyzed descriptively using SPSS, version 11.5 (SPSS Inc., Chicago, IL). Qualitative data were analyzed manually by thematic content analysis: several themes were identified for each open-ended question, and students' responses were categorized into them by consensus among the investigators.
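The descriptive analysis of the Likert-type items amounts to a frequency table per item. The paper used SPSS; as a hedged illustration only, the Python sketch below computes the percentage of respondents in each category of one 5-point item and the collapsed agree (4 + 5) and disagree (1 + 2) proportions. The coding 1 = strongly disagree to 5 = strongly agree, the function name `likert_summary`, and the example responses are assumptions, not study data.

```python
from collections import Counter

# Assumed coding of the 5-point agreement scale.
LABELS = {1: "strongly disagree", 2: "disagree", 3: "neutral", 4: "agree", 5: "strongly agree"}


def likert_summary(responses):
    """Percentage per category for one item, ignoring missing answers (None)."""
    answered = [r for r in responses if r is not None]
    if not answered:
        raise ValueError("no answered responses")
    if any(r not in LABELS for r in answered):
        raise ValueError("responses must be coded 1-5")
    n = len(answered)
    counts = Counter(answered)  # missing categories count as 0
    summary = {LABELS[k]: round(100 * counts[k] / n, 1) for k in LABELS}
    # Collapse from counts, not from rounded percentages, to avoid rounding drift.
    summary["agree (4+5)"] = round(100 * (counts[4] + counts[5]) / n, 1)
    summary["disagree (1+2)"] = round(100 * (counts[1] + counts[2]) / n, 1)
    return summary, n


# Hypothetical answers from 9 respondents, one of whom left the item blank.
example = [5, 5, 4, 4, 4, 3, 2, 1, None]
print(likert_summary(example))
```

Collapsing from counts matters with small cohorts: adding already-rounded category percentages can be off by a point or so from the percentage computed on the combined count.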

ASSESSMENT

Of 42 students, 41 returned the questionnaire (response rate 98%). The majority of the respondents were female (63%; 26/41). All the students were single, and their mean age was 23.7 years. The racial distribution of the students was Malay, 95%; Chinese, 2%; and other, 2%. Fifty-one percent (21/41) of the respondents were examined in examination venue B, and the rest were examined in examination venue A.
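The percentages in this paragraph follow directly from the reported counts. The short Python check below reproduces them; the ethnicity counts (39, 1, and 1 of 41) are back-calculated from the reported percentages and are an assumption, as is the venue A count of 20 (41 respondents minus the 21 in venue B).

```python
def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, to one decimal place."""
    return round(100 * part / whole, 1)


reported = {
    "response rate (41 of 42)": pct(41, 42),
    "female (26 of 41)": pct(26, 41),
    "venue B (21 of 41)": pct(21, 41),
    "venue A (20 of 41)": pct(41 - 21, 41),  # assumed: remaining respondents
}
# Assumed back-calculation: 95%, 2%, and 2% of 41 respondents are about 39, 1, and 1.
ethnicity = {"Malay": pct(39, 41), "Chinese": pct(1, 41), "other": pct(1, 41)}

for label, value in {**reported, **ethnicity}.items():
    print(f"{label}: {value}%")

# Whole-number rounding of the ethnicity shares sums to 99, not 100.
print(sum(round(v) for v in ethnicity.values()))
```

The last line explains why the reported racial distribution (95% + 2% + 2%) sums to 99% rather than 100%: each share of 2.4% rounds down to 2%.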

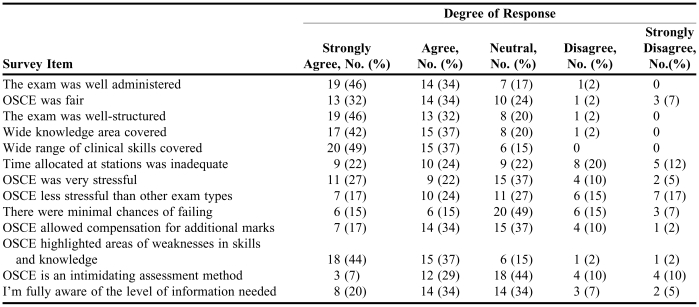

Over 80% of the students found the OSCE helpful in identifying areas of weakness in their clinical competencies. Seventy-eight percent agreed that the OSCE was comprehensive, and 66% believed that it was fair. About 46% of the respondents strongly agreed and 34% agreed that the examination was well administered. However, 12% indicated that they were not fully aware of the level of information needed to accomplish the examination tasks (5% strongly disagreed and 7% disagreed that they were fully aware of the information level needed). This suggests that these students felt they had not been told what the OSCE would cover.

Interestingly, none of the students disagreed that a wide range of clinical skills and competencies was covered in the examination, and few (2%) felt that the OSCE did not cover a wide area of knowledge in clinical pharmacy practice. Twenty percent of the students believed that the OSCE was an intimidating method of assessment, yet 29% agreed that the chance of failing the examination was minimal. In addition, 51% of the students were optimistic that their performance on the OSCE would improve their overall final grade for the course, especially if they had not performed as well on other course examinations that used other assessment methods.

Thirty-two percent of examinees found the OSCE more stressful than other methods of assessment, yet only 10% thought it was unfair. Opinions on the adequacy of the time allocated at each station diverged widely; about 46% felt that the 15 minutes allocated per station was inadequate. More detailed information on the OSCE evaluation is presented in Table 1.

Table 1.

Bachelor of Pharmacy Students' Perception of an Objective Structured Clinical Examination

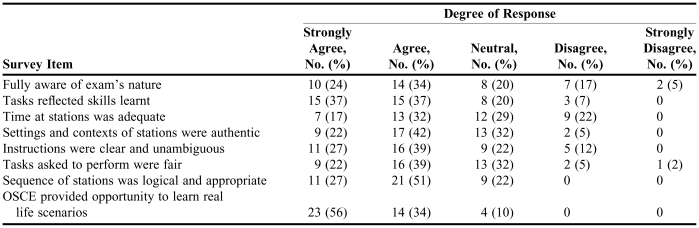

An overwhelming proportion of the students believed that the tasks required in the OSCE were consistent with the skills and knowledge learned in didactic courses and during experiential training. Similarly, the majority of students saw the OSCE as an unprecedented opportunity to experience real-life scenarios (56% strongly agreed and 34% agreed).

Furthermore, the majority of students (59%) said they were fully aware of the nature of the OSCE prior to the examination, but about 22% disagreed. The majority also agreed that the instructions provided during the OSCE were clear and unambiguous. Other pertinent information on the quality of performance testing is presented in Table 2.

Table 2.

Bachelor of Pharmacy Students' Assessment of the Quality of Their Performance on an Objective Structured Clinical Examination

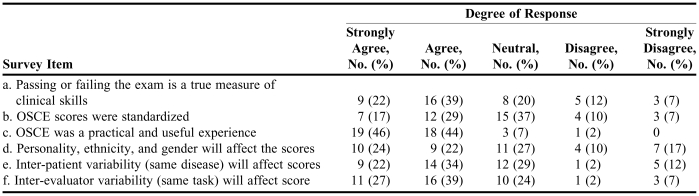

Most students viewed this method of assessment as a practical and useful experience (Table 3). However, about half of the students raised concerns that personality, ethnicity, and/or gender, as well as interpatient and interassessor variability, were potential sources of bias that may have affected their scores. In spite of this, many students (61%) agreed that their performance on the examination was a true reflection of their clinical skills.

Table 3.

Bachelor of Pharmacy Students' Perception of the Validity and Reliability of an Objective Structured Clinical Examination

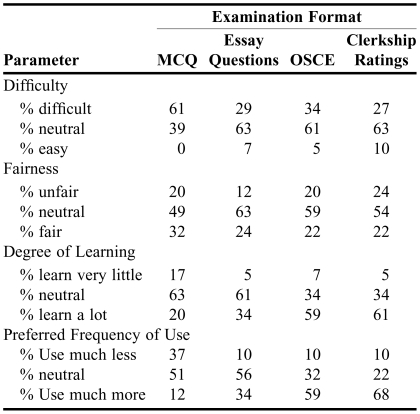

Students were also asked to rate various assessment instruments in terms of difficulty, fairness, degree of learning, and how frequently they preferred each instrument to be used for assessing competencies. The results are summarized in Table 4. Generally, students did not see multiple-choice questions (MCQs) as an easy method of assessment, and 61% believed that this type of question was difficult; the majority were neutral about the difficulty or ease of the other formats. MCQs were rated as the fairest assessment method (31.7% of respondents), whereas responses on the fairness of the other evaluation tools were largely neutral. Furthermore, students indicated that they learned the most from OSCEs and clerkships and, consistent with this, rated these 2 methods as the most preferred in terms of frequency of use.

Table 4.

Bachelor of Pharmacy Students' Rating of OSCE in Relation to Other Assessment Methods Used in Clinical Pharmacy and Therapeutics

MCQ = Multiple Choice Question; OSCE = Objective Structured Clinical Examination

Three open-ended questions asked about the strengths and weaknesses of the OSCE and recommendations for its improvement. Many responses were vague or uninterpretable; those that could be interpreted were summarized and categorized by theme.

Regarding the strengths of the OSCE, 23 students commented that it exposed them to real-life scenarios and was a true reflection of skills learned in the curriculum. Other themes that emerged from students' written comments included measurement and application of knowledge and skills (10 comments); highlighting of areas of weakness in skills and knowledge (5 comments); enhancement of communication skills (6 comments); and the best learning experience they had ever had (9 comments).

Interevaluator and interpatient variability were both identified as major drawbacks of the OSCE (6 and 5 comments, respectively). Students indicated that the OSCE caused considerable nervousness because of their inexperience (3 comments), and 9 students commented that it was an anxiety- and stress-inducing type of examination. Moreover, 6 students indicated that the time allocated to perform tasks at certain stations, such as the DRP identification station, was grossly inadequate, and 4 others felt that the time was generally insufficient. Students also highlighted unequal exposure to certain competencies (such as Novopen and inhaler counseling skills) during their clerkships (5 comments). Less frequently mentioned themes included ambiguous instructions at the stations; inadequate exposure to counseling on the use of asthma and insulin devices; ineffective and inadequate ushering and signage; noise from supporting staff members; introduction of the assessment method too late in the curriculum; and use of simulated rather than real patients.

Students recommended that future OSCEs have broader coverage of competencies (such as therapeutic drug monitoring) and be introduced into the pharmacy curriculum at an earlier stage (6 comments each). Another suggestion for improvement was increasing the time allocated at workstations (3 students were of the view that different tasks needed different times). Other suggestions included familiarizing students with the OSCE rating procedure or the examiners' expectations prior to the examination (2 comments); placing more emphasis on experiential training rather than theoretical teaching (2 comments); keeping the same evaluator and patient at a station until all students had passed through (2 comments); ensuring the clarity of instructions; and instructing supporting staff members to maintain silence during the examination.

There were no statistically significant differences in students' scores at any corresponding station between the 2 venues. Likewise, there was no significant difference in the overall total examination score between students examined in venue B (Clinical Skills Laboratory) and those examined in venue A (Pharmacy Practice Department) (P = 0.130). The mean total scores for students examined at these 2 venues were 17.2 and 16.4 (out of a maximum of 20), respectively.
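The paper reports P = 0.130 for the venue comparison but does not name the test. For two independent groups of total scores, one common choice is an independent-samples t test; the Python sketch below computes Welch's version (which does not assume equal variances) from raw scores. Both the choice of test and the score lists are illustrative assumptions, not the study's data or the authors' SPSS procedure.

```python
import math
import statistics


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two independent samples."""
    va = statistics.variance(a) / len(a)  # squared standard error of the mean of a
    vb = statistics.variance(b) / len(b)  # squared standard error of the mean of b
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical total scores out of 20; NOT the study's data.
venue_b = [17, 18, 16, 18]
venue_a = [16, 17, 15, 17]
t, df = welch_t(venue_b, venue_a)
print(f"t = {t:.3f}, df = {df:.1f}")  # t = 1.477, df = 6.0
```

Converting t and df into a two-sided P value requires the t distribution; with SciPy installed, `scipy.stats.ttest_ind(a, b, equal_var=False)` returns the statistic and P value directly.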

DISCUSSION

The majority of students saw the OSCE as an unprecedented opportunity to encounter real-life scenarios. The finding that an overwhelming proportion of the students (90%) agreed that the OSCE provided a useful and practical learning experience is consistent with similar studies reported elsewhere.1,4 The examination gave students a feedback mechanism for gauging their strengths and weaknesses in clinical skills. However, a large proportion of students felt that personality, ethnicity, and gender affected their performance/scores in the examination. Similarly, the majority of students identified variability between venues (same disease condition and tasks, but a different patient or actor) and variability between examiners as sources of bias in scores (56% and 66%, respectively). Interrater and interpatient variability may indeed be sources of bias, as documented in previous studies.11-14

In a study by Austin et al, students expressed considerable concern that variability between cases and patient-actors was large enough to adversely affect their academic standing, and they considered it problematic from an evaluation perspective.12 On the other hand, the use of different graders for the same or even different tasks in OSCEs is acceptable from a learning standpoint, because interrater reliabilities have been widely reported to be high.10,12,15

Although we did not conduct any interrater reliability testing, the similarity in scores between the 2 venues suggests that the perceived variability or unfairness in grading was not reflected in the actual marks awarded by corresponding graders/stations. To make future OSCEs more transparent, valid, and reliable, they should not be conducted in 2 separate locations unless standardized participants are used as graders. In addition, the validity of the contention by a large percentage of students that ethnicity and gender were true sources of bias is questionable. Other indicators of validity and reliability used in the survey largely favor the examination. For instance, one surrogate marker of validity and reliability is that the majority of students agreed that their performance in the examination was a true reflection of their clinical skills and that the examination was fair.

Our school of pharmacy and many others in Malaysia assess students' abilities in clinical pharmacy and therapeutics using multiple-choice questions, long and short essay questions, and clerkship ratings, among other methods. Clinical faculty members often see a disparity between high achievers in the classroom and high achievers in the clinical setting.16 For us, the OSCE was a novel method of assessment, and the perceptions of those tested will be used to improve assessment methods and to review the curriculum. There are few reports in the literature on students' perceptions of various evaluation methods in therapeutics and clinical pharmacy. In this survey, examinees' views of various assessment instruments were assessed in terms of difficulty, fairness, degree of learning, and preferred frequency of use. This comparison may provide an international perspective on how a cohort of pharmacy students perceived the OSCE in relation to other methods of evaluating clinical competency. The majority of the students rated MCQs as a difficult assessment method but were neutral about the difficulty or ease of other methods. On the other hand, MCQs were rated as the fairest of all assessment methods. This contrasts with other studies, which found that the OSCE was fairer than any other assessment format to which students were exposed.4,17 Students' views on difficulty and fairness may not be consistent with published findings, and these findings should not be interpreted in isolation. This study illustrates the point: although students rated MCQs as the fairest method, an overwhelming proportion rated the OSCE and clerkships as imparting the highest degree of learning and consistently rated these 2 as the most preferred in terms of frequency of use. Determining the impact of these indicators (difficulty, fairness, degree of learning, and preferences) on students' performance in each type of assessment method, however, was beyond the scope of this study.

Gardner and colleagues compared traditional testing methods and standardized patient (SP) examinations for therapeutics and found a moderate positive correlation between performance on the SP and the traditional examinations.16 In another survey, all examinees believed that, compared with traditional methods of evaluation, the OSCE was a much better indicator of how they would perform in the real world, lending content validity to the P-OSCE.18 Therefore, the high proportion of students who favored the OSCE when asked how frequently they felt an instrument should be used clearly reflects examinees' acceptance of the OSCE.

Despite our efforts to give 2 briefing sessions with clear demonstrations of what an OSCE was and how it worked, a notable proportion of students (22%) did not feel they had been adequately prepared for the examination. This may have been due to the students' unfamiliarity with this method of assessment. Further, from a thematic standpoint, many students felt that the OSCE was an extremely anxiety-producing examination and should be introduced earlier in the curriculum. Allen et al described this phenomenon and how students' fear and nervousness change during the examination.19 In this context, we recommend that future OSCEs be introduced into the pharmacy curriculum at an earlier stage and have broader coverage of competencies, such as therapeutic drug monitoring and pharmacokinetic dosing adjustment. Earlier exposure to this assessment method would help build students' confidence in providing pharmaceutical care. Students also indicated that the time allocated to perform tasks at certain stations was inadequate or generally insufficient and suggested increasing it (some students thought different tasks needed different time limits). In practice, it was difficult to allocate different time limits at different stations during the OSCE. Moreover, consultations during the development process led to our decision to allocate 15 minutes per station, because similar tasks have been allotted equal or shorter time limits elsewhere.2,4,6,10,20

Standard setting procedures such as the Angoff and borderline methods were not used in the examination because ours was a pilot study. In the future, we hope to use such methods for grading performance and testing validity. The holistic approach to validation and grading has been widely used for other assessment methods, but not for OSCEs.

CONCLUSION

The OSCE was a useful assessment method that was well accepted by students. Interrater and interpatient variability were its major weaknesses. This survey was a vital tool that highlighted the strengths and weaknesses in the development and implementation of an OSCE in a pharmacy curriculum in Malaysia.

ACKNOWLEDGEMENTS

We are deeply indebted to all patients/actors, staff members, and lecturers involved in the OSCE. We also thank all students who participated in this survey.

Appendix 1. Objectives and Description of OSCE Stations

Station 1: Patient Counseling on Oral Anticoagulant Therapy

Description: A patient receiving long-term warfarin therapy

Objective/Task: Assessment of the student's skills in counseling a patient on anticoagulant therapy.

Patients: Real patients on warfarin therapy attending the Anticoagulation Clinic at HTAA.

Other Requirements: Samples of warfarin tablets, printed educational materials in lay terms for patients.

Station 2: Counseling and Education on Inhaler Devices and Smoking Cessation

Description: A patient with COPD/asthma who smokes and uses a beclomethasone metered-dose inhaler (MDI)

Objective/task: Evaluation of the student's ability to educate the patient on the rationale of treatment and the proper use of inhaler devices, and to counsel the patient on smoking cessation.

Patients: Real patients with the related conditions attending the Respiratory Clinic at HTAA.

Other Requirements: Six (6) samples of beclomethasone (Becotide®) inhalers containing placebo (inert gas).

Station 3: Resting Station

Description: Students would be given time to rest before moving to the next station. The case summary of the patient in Station 4 (DRP Identification/Resolution Station) would also be provided here so that examinees could familiarize themselves with the case and plan how to approach it.

Requirements: Laminated patient's case notes (pharmacist patient database).

Station 4: Drug-Related Problems Identification and Resolution

Description: A patient with CV disease(s) and comorbidities

Objective/task: Assessment of the student's ability to identify drug-related problems (DRPs) using the standard DRP taxonomy and give evidence-based recommendations for a pharmacist's care plan to resolve and/or prevent the DRPs.

Patients: Real patients to be recruited, mostly with multiple cardiovascular diseases (HTN, CHF, AMI, AF) and other comorbidities (DM, hyperlipidemia, gouty/rheumatoid arthritis, PUD, etc).

Other Requirements: Patient's charts/case notes, Drug Information Handbook.

Station 5: Counseling on the Use of Insulin Delivery Devices

Description: A diabetic patient newly prescribed insulin via the Novopen-3®

Objective/task: Assessment of the student's competence in counseling on insulin administration technique using the Novopen®.

Patients: “Standardized patients” (actors) to be recruited and specially trained to act as patients with diabetes.

Other Requirements: Four (4) samples of Novopen-3® and four (4) actors to be trained on how to act, and what information to provide to students when they ask.

Station 6: Resting Station

Description: Students would be given 15 minutes to rest at this station before embarking on the next station.

Station 7: Drug Information Inquiry from a Health Professional

Description: A physician/endocrinologist reports that he has heard of a new inhaled insulin preparation approved for use in both type 1 and type 2 diabetes mellitus. He is considering using this product in his 14-year-old patient, who is nonadherent to his usual insulin injections. He requests information on the safety, efficacy, suitability, and availability of this product in Malaysia.

Objective: To test the student's ability to select and evaluate appropriate literature and respond to drug information inquiry in a timely manner.

Actors: Two (2) actors portraying physicians/endocrinologists would be stationed near Station 7 and would telephone the station each time the students rotate. They are to be trained on the exact information they should request regarding the use of a newly approved medication.

Other Requirements: Literature resources: tertiary and primary literature on Exubera® (insulin human [rDNA origin]) Inhalation Powder, the most current MIMS Malaysia, and the Drug Information Handbook.

REFERENCES

- 1. Rutter PM. The introduction of observed structured clinical examinations (OSCEs) to the M.Pharm. degree pathway. Pharm Educ. 2002;1:173–80.

- 2. Fielding DW, Page GG, Rogers WT, O'Byrne CC, Schulzer M, Moody KG, Dyer S. Application of objective structured clinical examinations in an assessment of pharmacists' continuing competency. Am J Pharm Educ. 1997;61:117–25.

- 3. Corbo M, Patel JP, Abdel Tawab R, Davies JG. Evaluating clinical skills of undergraduate pharmacy students using objective structured clinical examinations (OSCEs). Pharm Educ. 2006;6:53–8.

- 4. Pierre RB, Wierenga A, Barton M, Branday JM, Christie CD. Student evaluation of an OSCE in paediatrics at the University of the West Indies, Jamaica. BMC Med Educ. 2004 Oct. Available at: http://www.biomedcentral.com/1472-6920/4/22. Accessed February 13, 2006.

- 5. Carraccio C, Englander R. The objective structured clinical examination, a step in the direction of competency-based evaluation. Arch Pediatr Adolesc Med. 2000;154:736–41. doi: 10.1001/archpedi.154.7.736.

- 6. Austin Z, O'Byrne CC, Pugsley J, Munoz LQ. Development and validation processes for an objective structured clinical examination (OSCE) for entry-to-practice certification in pharmacy: the Canadian experience. Am J Pharm Educ. 2003;67(3):Article 76.

- 7. Woodburn J, Sutcliffe N. The reliability, validity and evaluation of the objective structured clinical examination in podiatry. Assess Eval Higher Educ. 1996;21:131–47.

- 8. Carpenter JL. Cost analysis of OSCEs (review). Acad Med. 1995;70:828–33.

- 9. Jain SS, De Lisa JA, Nadler S, et al. One program's experience of OSCE vs. written board certification results: a pilot study. Am J Phys Med Rehab. 2000;79:462–7. doi: 10.1097/00002060-200009000-00012.

- 10. Stowe CD, Gardner SF. Real-time standardized participant grading of an objective structured clinical examination. Am J Pharm Educ. 2005;69(3):272–6.

- 11. McLaughlin K, Gregor L, Jones A, Coderre S. Can standardized patients replace physicians as OSCE examiners? BMC Med Educ. February 2006. Available at: http://www.biomedcentral.com/1472-6920/6/12. Accessed March 12, 2007.

- 12. Austin Z, Gregory P, Tabak D. Simulated patients vs. standardized patients in objective structured clinical examinations. Am J Pharm Educ. 2006;70(5):Article 119. doi: 10.5688/aj7005119.

- 13. Quero Munoz L, O'Byrne C, Pugsley J, Austin Z. Reliability, validity and generalizability of an objective structured clinical examination (OSCE) for assessment of entry-to-practice in pharmacy. Pharm Educ. 2005;5:33–43.

- 14. Monaghan MS, Vanderbush RE, McKay AB. Evaluation of clinical skills in pharmaceutical education: past, present and future. Am J Pharm Educ. 1995;59:354–8.

- 15. LaMantia J, Rennie W, Risucci DA, et al. Interobserver variability among faculty in evaluations of residents' clinical skills. Acad Emerg Med. 1999;6:38–44. doi: 10.1111/j.1553-2712.1999.tb00092.x.

- 16. Gardner SF, Stowe CD, Hopkins DD. Comparison of traditional testing methods and standardized patient examinations for therapeutics. Am J Pharm Educ. 2001;65:236–40.

- 17. Duffield KE, Spencer JA. A survey of medical students' views about the purposes and fairness of assessment. Med Educ. 2002;36:879–86. doi: 10.1046/j.1365-2923.2002.01291.x.

- 18. Monaghan MS, Turner PD, Vanderbush RE, Grady AR. Traditional student, nontraditional student, and pharmacy practitioner attitudes toward the use of standardized patients in the assessment of clinical skills. Am J Pharm Educ. 2000;64:27–32.

- 19. Allen R, Heard J, Savidge M, Bittengle J, Cantrell M, Huffmaster T. Surveying students' attitudes during the OSCE. Adv Health Sci Educ. 1998;3:197–206. doi: 10.1023/A:1009796201104.

- 20. Cerveny JD, Knapp R, DelSignore M, Carson DS. Experience with objective structured clinical examinations as a participant evaluation instrument in disease management certificate programs. Am J Pharm Educ. 1999;63:377–81.