Abstract

Objective

Patients are commonly presented with complex documents that they have difficulty understanding. The objective of this study was to design and evaluate an animated computer agent to explain research consent forms to potential research participants.

Methods

Subjects were invited to participate in a simulated consent process for a study involving a genetic repository. In a randomized trial, explanation of the research consent form by the computer agent was compared with explanation by a human and with a self-study condition. Responses were compared according to level of health literacy.

Results

Participants were most satisfied with the consent process and most likely to sign the consent form when it was explained by the computer agent, regardless of health literacy level. Participants with adequate health literacy demonstrated the highest level of comprehension with the computer agent-based explanation compared to the other two conditions. However, participants with limited health literacy showed poor comprehension in all three conditions. Participants with limited health literacy gave several reasons for preferring the computer agent-based explanation over a human-based one, such as the absence of time pressure, the ability to re-ask questions, and lack of bias.

Conclusion

Animated computer agents can perform as well as or better than humans in the administration of informed consent.

Practice Implications

Animated computer agents represent a viable method for explaining health documents to patients.

1. Introduction

Face-to-face encounters with a health provider, in conjunction with written instructions, remain one of the best methods for communicating health information to patients in general, and especially to those with low health literacy [1–4]. Face-to-face consultation is effective because providers can use verbal and nonverbal behaviors, such as head nods, hand gestures, eye gaze cues and facial displays, to communicate factual information to patients, as well as to communicate empathy [5] and immediacy [6] that elicit patient trust. Face-to-face conversation also allows providers to make their communication more explicitly interactive by asking patients to do, write, say, or show something that demonstrates their agreement and understanding [7]. Finally, face-to-face interaction allows providers to dynamically assess a patient’s level of understanding based on the patient’s verbal and nonverbal behavior and to repeat or elaborate information as necessary [8].

However, several pervasive problems limit clinicians’ capacity to communicate effectively. Providers can spend only a limited amount of time with each patient [9], and time pressures can leave patients feeling too intimidated to ask questions. Another problem is that of “fidelity”: providers do not always perform in accordance with recommended guidelines, resulting in significant variation in the delivery of health information.

Given the efficacy of face-to-face consultation, a promising approach for conveying health information to patients with limited health literacy is the use of animated computer agents that simulate face-to-face conversation with a provider [10]. The benefits of using such conversational agents include:

Use of verbal and nonverbal conversational behaviors that signal understanding, mark significance, and convey information redundantly across channels (e.g., hand gestures such as pointing, facial displays of emotion, and eye gaze), to maximize comprehension.

Use of the verbal and nonverbal behaviors that providers use to establish trust and rapport with their patients, in order to increase satisfaction and adherence to treatment regimens [11].

Adaptation of their messages to the particular needs of patients and to the immediate context of the conversation.

Provision of health information in a consistent manner and in a low-pressure environment in which patients are free to take as much time as they need to understand it thoroughly. This is particularly important because health providers frequently fail to elicit patients’ questions, and patients with limited health literacy are even less likely than others to ask questions [12].

According to the 2004 National Assessment of Adult Literacy, fully 36% of American adults have limited health literacy skills, with even higher prevalence among patients with chronic diseases, older adults, minorities, and those with lower levels of education [13, 14]. Seminal reports on health literacy include a sharp critique of current norms for overly complex health care documents such as informed consent forms [15, 16]. Indeed, a significant and growing body of research has drawn attention to the ethical and health impact of overly complex documents in health care [17, 18]. Computer agents may offer a particularly effective way to address this problem: agents can explain health documents to patients using the communication techniques recommended for patients with limited health literacy, and can do so in a context unconstrained by time pressure.

Informed consent agreements for participation in medical research are particularly difficult for individuals with limited health literacy to understand, since they typically encode many subtle and counter-intuitive legal and medical concepts. They are often written at a reading level far beyond the capacity of most subjects [19, 20], and researchers may not have the resources to ensure that participants understand all the terms of the consent agreement. Indeed, many potential research subjects sign consent forms that they do not understand [21–23].

Consequently, we modified an existing computer agent framework designed for health counseling [10, 11] to explain health documents such as research informed consent forms. In this paper we describe the development of this agent, and then present a preliminary evaluation in a three-arm randomized trial in which the agent explains an informed consent document for participation in a genetic repository.

2. Methods

2.1 Preliminary Studies: Part 1. Health Document Explanation by Human Experts

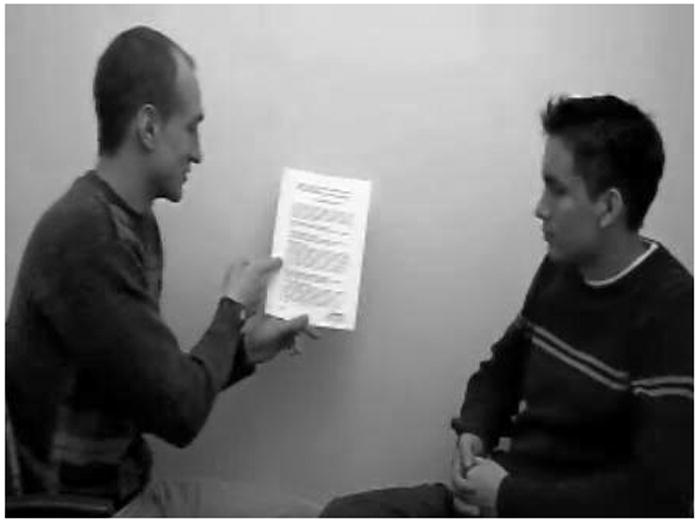

We conducted two empirical studies to characterize how human experts explain health documents to their clients in face-to-face interactions [24]. The first study was conducted with four different experts explaining two different health documents to research confederates. The second study was conducted with one expert explaining health documents to three laypersons with different levels of health literacy. Our primary focus was a micro-analysis of the nonverbal behavior exhibited by the expert in order to inform the development of a computational model of document explanation. We found that one kind of nonverbal behavior was nearly ubiquitous: the use of pointing gestures towards the document by the expert (Figure 1). Of the 1,994 expert utterances analyzed, 26% were accompanied by a hand gesture, and 90% of these involved pointing at the document.

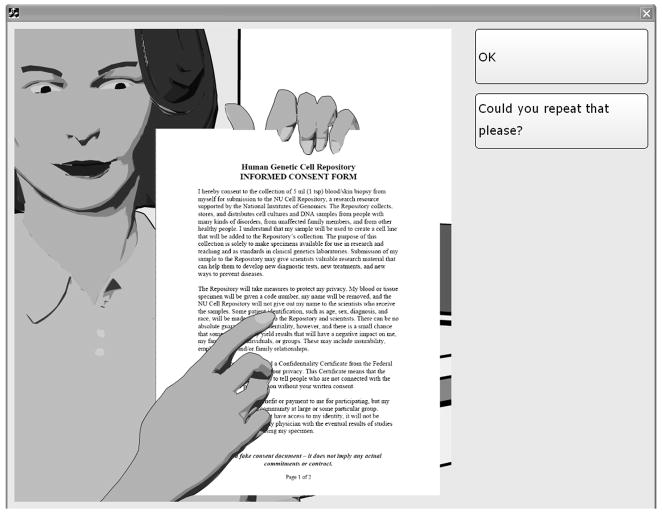

Figure 1. Explanation of consent by experts

We derived a predictive model of the occurrence and form of the referential hand gestures and other nonverbal behavior used by the experts during their explanations. We found that the first mention of a part of a document was more likely to be accompanied by a pointing gesture than later mentions (43% vs. 19%), and that the kind of document object referred to (page vs. section vs. word or image) predicted the kind of hand gesture used (e.g., a flat hand to refer to a page vs. a pointing finger to refer to a word). We also found that the expert in the second study omitted a significant amount of detail and used more scaffolding (description of document structure) when describing a health document to listeners with low health literacy than to listeners with adequate health literacy.
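The two regularities above can be written down as a small lookup model. The sketch below is a hypothetical illustration, not the authors' fitted model: it treats the reported pointing rates (43% for first mentions, 19% for later mentions) as sampling probabilities and maps pages to a flat hand and words or images to a pointing finger, following the reported examples. The hand shape for sections, and all identifiers, are assumptions made for this sketch.

```python
import random
from dataclasses import dataclass
from typing import Optional

# Pointing rates reported for the human experts (first vs. later mentions).
P_POINT_FIRST_MENTION = 0.43
P_POINT_LATER_MENTION = 0.19

# Hand shape by kind of document object. Page -> flat hand and word/image ->
# pointing finger follow the reported examples; the "section" entry is an
# assumption made only for this sketch.
HAND_SHAPE = {
    "page": "flat_hand",
    "section": "flat_hand",  # assumption, not reported
    "word": "pointing_finger",
    "image": "pointing_finger",
}


@dataclass
class GestureDecision:
    point: bool
    hand_shape: Optional[str]  # None when no gesture is produced


def pointing_probability(first_mention: bool) -> float:
    """Probability of pointing when a part of the document is mentioned."""
    return P_POINT_FIRST_MENTION if first_mention else P_POINT_LATER_MENTION


def decide_gesture(obj_kind: str, first_mention: bool,
                   rng: random.Random) -> GestureDecision:
    """Sample whether to point and, if so, choose the hand shape."""
    if rng.random() < pointing_probability(first_mention):
        return GestureDecision(point=True, hand_shape=HAND_SHAPE[obj_kind])
    return GestureDecision(point=False, hand_shape=None)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed for a reproducible illustration
    first = [decide_gesture("word", True, rng) for _ in range(10_000)]
    later = [decide_gesture("word", False, rng) for _ in range(10_000)]
    print(sum(d.point for d in first) / len(first))  # close to 0.43
    print(sum(d.point for d in later) / len(later))  # close to 0.19
```

Sampling reproduces the variability of the experts' behavior; a deterministic rule such as "always point on first mention" would be a simpler alternative with different trade-offs.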

2.2 Preliminary Studies: Part 2. Adapting a Computer Agent for Health Document Explanation

An existing computer agent framework designed for health counseling [10, 11] was modified to explain health documents. The framework features an animated computer agent whose nonverbal behavior is synchronized with a text-to-speech engine (Figure 2). Patients contribute to the conversation by selecting, on a touch screen, an utterance from a multiple-choice menu of options that is updated at each turn of the conversation. Dialogues are scripted in a custom scripting language based on hierarchical transition networks. Agent utterances can be dynamically tailored based on information about the patient, information from previous conversations, and the unfolding discourse context [10]. The animated agent can use a range of nonverbal behaviors, including hand gestures, body posture shifts, gazing at and away from the patient, raising and lowering eyebrows, head nods, different facial expressions, and variable proximity.
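To make the interaction model more concrete, here is a minimal sketch of a menu-driven, hierarchical transition network. It is a hypothetical illustration, not the authors' scripting language: each state has an agent utterance and a menu of user options, each option names the next state, and a state may call a sub-network and resume in the caller once the sub-network ends. All class names, state names, and utterances are invented.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class State:
    agent_says: str
    # Menu of (user option, target state); a target of None ends this network.
    options: List[Tuple[str, Optional[str]]] = field(default_factory=list)
    # If set, run this sub-network after speaking, then resume at the given state.
    call: Optional[Tuple[str, str]] = None


Network = Dict[str, State]  # every network starts at the state named "start"


def run(networks: Dict[str, Network], top: str, choices: List[int]) -> List[str]:
    """Run the dialogue using menu selections from `choices`; return the agent's utterances."""
    transcript: List[str] = []
    picks = iter(choices)
    return_stack: List[Tuple[str, str]] = []  # (network, state) to resume after a call
    net, name = top, "start"
    while True:
        state = networks[net][name]
        transcript.append(state.agent_says)
        if state.call is not None:
            sub, resume_at = state.call
            return_stack.append((net, resume_at))
            net, name = sub, "start"
            continue
        _label, target = state.options[next(picks)]  # the user's menu selection
        if target is not None:
            name = target
        elif return_stack:
            net, name = return_stack.pop()  # sub-network finished: return to caller
        else:
            return transcript  # top-level network finished


# Invented example: a top-level network that calls a one-state sub-network.
NETWORKS: Dict[str, Network] = {
    "consent": {
        "start": State("I'm going to explain this consent form to you.", [("OK", "purpose")]),
        "purpose": State("Let's start with the purpose of the study.",
                         call=("purpose_section", "wrap_up")),
        "wrap_up": State("That's the end of the form.", [("OK", None)]),
    },
    "purpose_section": {
        "start": State("This study stores samples in a genetic repository.",
                       [("OK", None), ("Could you repeat that please?", "start")]),
    },
}

if __name__ == "__main__":
    # OK, then ask for a repeat inside the sub-network, then OK, OK.
    for line in run(NETWORKS, "consent", [0, 1, 0, 0]):
        print(line)
```

Keeping each section in its own sub-network lets a script reuse or reorder sections without rewriting the transitions inside them.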

Figure 2. Computer Agent Interface

The framework was extended for document explanation in several ways. A set of animation system commands was added to allow the character to display document pages (Figure 2), with page changes accompanied by a page-turning sound. A set of document pointing gestures was added so that the agent could be commanded to point anywhere in the document with either a pointing hand or an open hand. While the document is displayed, the agent can continue to use its full range of head and facial behavior, with gaze-aways modified so that the agent looks at the document when not looking at the patient (in our studies of human experts, the expert gazed at the document 65% of the time and at the patient 30% of the time). We also extended our text-to-embodied-speech translation system (“BEAT” [25]) to automatically generate document pointing gestures from the verbal content of the document explanation script, based on the models from our earlier studies [24].

We conducted a preliminary study of the document explanation agent in a three-arm randomized trial with 18 participants aged 19–33, in which each participant experienced two of the three conditions. We compared agent-based health document explanation with explanation by human experts and a self-study condition. While there were no significant effects of study condition on comprehension of the documents (measured by post-intervention knowledge tests), the participants who interacted with both the agent and human were significantly more satisfied with the agent (paired t(5)=2.7, p<.05) and with the overall experience (paired t(5)=2.9, p<.05), compared to the human [24].

2.3 Part 3. Evaluation of Computer Agent for Explanation of Research Informed Consent

While the preliminary study suggested that agents are promising for health document explanation, it lacked ecological validity because the participants were primarily college students with a fairly high level of health literacy. Thus, the primary purpose of this third research activity was to repeat the pilot evaluation in a population in which limited health literacy is represented.

We conducted an evaluation study to test the efficacy of our agent-based document explanation system, compared with a standard of care control (explanation by a human) and a non-intervention control (self-study of the document in question) for individuals with adequate and inadequate health literacy. The study was a 3-arm (COMPUTER AGENT vs. HUMAN vs. SELF) between-subjects randomized experimental design.

An interaction script was created to present an informed consent form for participation in a genetic repository, based on the preliminary work described above. The consent document was taken, with minor revisions, from an existing National Institute of General Medical Sciences template for genetic repository research that has been used in multiple NIH-funded projects [26]. A study involving a genetic repository was chosen because we wanted little overlap between the simulated consent experience and the actual consent document used for participating in the current study, and we wanted material that would be largely unfamiliar to participants, to decrease the influence of prior knowledge. In the script, participants could simply advance linearly through the explanation (by selecting “OK”), ask for any utterance to be repeated (“Could you repeat that please?”), request that major sections of the explanation be repeated, or request that the entire explanation be repeated. Any number of repeats could be requested. Although the scripting language can encode rephrasings for repeated utterances, in the current study the agent repeated the exact same utterance whenever a repeat was requested for any part of the script. The agent was deployed on a mobile cart with a touch screen attached via an articulated arm.
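The four navigation options described above (advance, repeat the last utterance, repeat a section, repeat the whole explanation) amount to a cursor over a sectioned script. A minimal sketch, assuming the script is a list of sections, each a list of utterances; the class and method names are hypothetical. As in the study, a repeat replays the exact same text rather than a rephrasing.

```python
from typing import List, Optional

Script = List[List[str]]  # sections, each a list of agent utterances


class ConsentExplainer:
    """Cursor over a sectioned script that supports the repeat requests described above."""

    def __init__(self, script: Script) -> None:
        self.script = script
        self.section = 0
        self.index = 0  # next utterance to speak within the current section
        self.last: Optional[str] = None  # most recent utterance spoken

    def ok(self) -> Optional[str]:
        """Advance to the next utterance; return None once the script is finished."""
        while (self.section < len(self.script)
               and self.index >= len(self.script[self.section])):
            self.section += 1
            self.index = 0
        if self.section >= len(self.script):
            return None
        self.last = self.script[self.section][self.index]
        self.index += 1
        return self.last

    def repeat_utterance(self) -> Optional[str]:
        """Replay the last utterance word for word (no rephrasing)."""
        return self.last

    def repeat_section(self) -> Optional[str]:
        """Restart the current section (the last one, if the script has ended)."""
        self.section = min(self.section, len(self.script) - 1)
        self.index = 0
        return self.ok()

    def repeat_all(self) -> Optional[str]:
        """Restart the entire explanation from the beginning."""
        self.section, self.index = 0, 0
        return self.ok()


if __name__ == "__main__":
    e = ConsentExplainer([["A1", "A2"], ["B1"]])
    print(e.ok(), e.ok(), e.repeat_utterance(), e.repeat_section())
```

Advancing lazily (only when `ok` is called) keeps "the current section" pointing at the section the participant just heard, so a section repeat requested right after its last utterance replays that section rather than the next one.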

2.3.1 Measures

In addition to basic demographics, we assessed health literacy using the 66-word version of the Rapid Estimate of Adult Literacy in Medicine (REALM) [27]. For our analyses, we defined limited health literacy as a reading level of 8th grade or below and adequate health literacy as 9th grade or above, as prior authors have done [28–31]. We also created a knowledge test for the consent document, based on the Brief Informed Consent Evaluation Protocol (BICEP) [22]. This test was administered in an “open book” fashion, with the participant able to refer to a paper copy of the consent form during the test. We augmented the BICEP with scale measures of likelihood to sign the consent document, overall satisfaction with the consent process, and perceived pressure to sign the consent document. In the COMPUTER AGENT and HUMAN conditions, we also counted the number of questions or requests for clarification made by participants during the explanation of the consent document.
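The literacy split can be expressed as a small scoring function. This sketch assumes the standard published REALM raw-score bands (0–18: 3rd grade or below; 19–44: 4th–6th; 45–60: 7th–8th; 61–66: high school), so "8th grade or below" corresponds to a raw score of 60 or less; the function names are hypothetical. Applied to the grade-band counts reported in Section 2.3.2, it reproduces the 45% share of limited literacy reported in the Results.

```python
from typing import Dict

# Assumed standard REALM (66-word) raw-score bands and grade-level estimates.
REALM_BANDS = [
    (0, 18, "3rd grade or below"),
    (19, 44, "4th-6th grade"),
    (45, 60, "7th-8th grade"),
    (61, 66, "high school"),
]


def realm_grade_band(raw_score: int) -> str:
    """Map a REALM raw score (number of words pronounced correctly) to a grade band."""
    for low, high, band in REALM_BANDS:
        if low <= raw_score <= high:
            return band
    raise ValueError("REALM raw score must be between 0 and 66")


def literacy_category(raw_score: int) -> str:
    """Limited = 8th-grade level or below (raw score <= 60); adequate otherwise."""
    return "limited" if raw_score <= 60 else "adequate"


def limited_share(band_counts: Dict[str, int]) -> float:
    """Fraction of participants whose grade band is below high school."""
    total = sum(band_counts.values())
    limited = sum(n for band, n in band_counts.items() if band != "high school")
    return limited / total


if __name__ == "__main__":
    # Counts from Section 2.3.2 (the high-school count is 29 - 13).
    counts = {"3rd grade or below": 3, "4th-6th grade": 4,
              "7th-8th grade": 6, "high school": 16}
    print(f"{limited_share(counts):.0%} limited health literacy")
```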

2.3.2 Participants

Twenty-nine subjects participated in the study. They were recruited via fliers posted around the Northeastern University neighborhood and in a nearby apartment complex whose residents were mostly older minority adults, and were compensated for their time. Participants had to be 18 years of age or older and able to speak English. Participants were 66% female and aged 28–91 (mean 60.2). On the REALM, three were categorized as reading at the 3rd-grade level or below, four at the 4th–6th-grade level, six at the 7th–8th-grade level, and the remaining 16 at the high school level.

2.3.3 Procedure

The study took place either in a common room of the apartment complex or in the Human-Computer Interaction laboratory at Northeastern University. After arriving, people who consented to participate filled out a demographic questionnaire and then completed the REALM health literacy assessment.

Following this, they were exposed to one of three treatments in which a consent document was explained to them by either the COMPUTER AGENT or a HUMAN, or were given time to read the document on their own (SELF). For the COMPUTER AGENT condition, participants were given a brief training session on how to interact with the computer agent. The experimenter then gave the participant a paper copy of the consent document to follow along with the computer agent’s explanation, and left the room. At the end of the interaction, the computer agent informed the participant that they could take as much time as they needed to review the document before signaling to the experimenter that they were ready to continue. For the HUMAN condition, a second research assistant explained the document to the study participant. Two different female instructors played this second role, and both had significant experience administering informed consent for research studies. The instructor was blind to the computer agent interaction script content and evaluation instruments, and was simply asked to explain the document in question to the participant. For the SELF condition, participants were handed the document and told to take as much time as they needed to read and understand it, and were then left alone in the observation room until they signaled they were ready to continue.

The research assistant then verbally administered the knowledge test in “open book” format, with the participant being able to reference their paper copy during the test. The process measures were then verbally administered and a semi-structured interview was conducted to ask participants about their impressions of the study.

3. Results

Of the 29 participants, 13 (45%) had inadequate health literacy. We conducted full-factorial ANOVAs for all measures, with study CONDITION (COMPUTER AGENT, HUMAN, SELF) and health LITERACY (ADEQUATE, INADEQUATE) as independent factors, and least significant difference (LSD) post-hoc tests where applicable. Table 1 shows descriptive statistics for the outcome measures.
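For reference, the degrees of freedom of these F tests follow directly from the 3 × 2 between-subjects design with N = 29, assuming every participant contributed a score to the measure being tested (a worked check, not an additional analysis):

$$
\begin{aligned}
df_{\text{CONDITION}} &= 3 - 1 = 2, \qquad df_{\text{LITERACY}} = 2 - 1 = 1, \qquad df_{\text{CONDITION} \times \text{LITERACY}} = (3-1)(2-1) = 2,\\
df_{\text{error}} &= N - 3 \times 2 = 29 - 6 = 23.
\end{aligned}
$$

Tests of CONDITION and of the CONDITION × LITERACY interaction on such measures are therefore reported as F(2,23).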

Table 1. Study Results: mean (SD)

| Measure | Agent (N=9) | Human (N=9) | Self (N=11) | CONDITION main effect (p) | Adequate (N=16) | Inadequate (N=13) | LITERACY main effect (p) | CONDITION × LITERACY interaction (p) |
|---|---|---|---|---|---|---|---|---|
| Comprehension (% correct) | 42.20 (20.33) | 39.44 (12.86) | 25.91 (11.36) | 0.006 | 41.88 (17.78) | 26.92 (9.69) | 0.001 | 0.042 |
| Satisfaction (1–7) | 6.56 (1.01) | 3.89 (2.47) | 5.09 (1.70) | 0.018 | 5.50 (1.90) | 4.77 (2.24) | 0.280 | 0.682 |
| Likelihood to Sign (1–7) | 6.21 (1.30) | 2.78 (2.39) | 3.91 (2.43) | 0.011 | 4.06 (2.52) | 4.54 (2.54) | 0.524 | 0.588 |
| Pressure to Sign (1–7) | 2.11 (2.09) | 2.00 (2.00) | 1.55 (0.93) | 0.719 | 1.75 (1.34) | 2.00 (2.04) | 0.666 | 0.822 |
| Questions asked | 1.12 (2.10) | 1.22 (2.64) | n/a | 0.967 | 0.89 (2.67) | 1.50 (2.00) | 0.559 | 0.207 |

n/a: questions were counted only in the COMPUTER AGENT and HUMAN conditions.

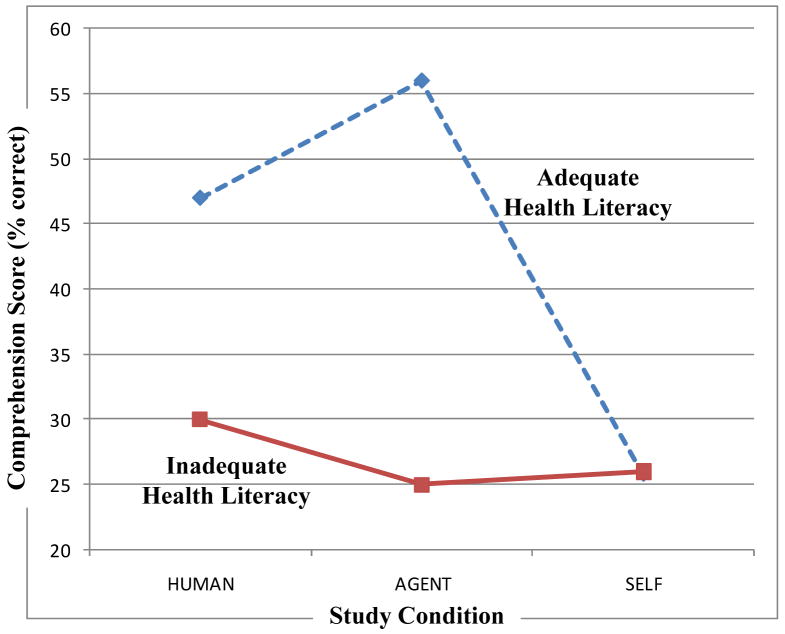

There was a significant interaction between CONDITION and LITERACY on knowledge test comprehension scores, F(2,23)=4.41, p<.05 (Figure 3). Post-hoc tests indicated that, for participants with adequate health literacy, explanations by HUMAN and COMPUTER AGENT resulted in significantly greater comprehension compared to SELF study (with no significant difference between HUMAN and COMPUTER AGENT). However, for participants with inadequate health literacy, there were no significant differences on comprehension between study conditions, and they scored significantly lower as a group compared to participants with adequate health literacy.

Figure 3. Comprehension of Informed Consent

There was a main effect of study CONDITION on satisfaction with the consent process, F(2,23)=4.78, p<.05, with participants significantly more satisfied with explanations by the COMPUTER AGENT than by the HUMAN (participants were also more satisfied with the COMPUTER AGENT than with SELF study, although this post-hoc comparison only approached significance, p=.09).

There was also a main effect of study CONDITION on self-reported likelihood to sign the consent document, F(2,23)=5.46, p<.05, with participants significantly more likely to sign the consent form following explanation by the COMPUTER AGENT than following either explanation by the HUMAN or SELF study.

There were no significant differences between groups on perceived PRESSURE to sign the consent form, F(2,23)=0.20, p=0.72.

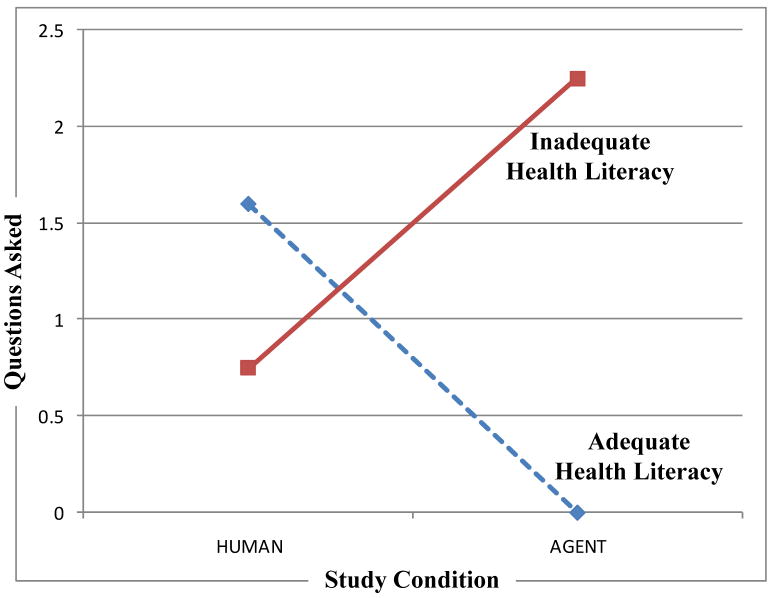

Finally, it appeared that participants with limited health literacy asked more questions of the computer agent compared to the human, while those with adequate health literacy asked more questions of the human, although this interaction was not significant, F(1,13)=1.76, p=0.21 (Figure 4).

Figure 4. Questions Asked of the Computer Agent or Human

3.1 Qualitative Results

Participant responses to semi-structured interview questions were transcribed from the videotape and common themes were identified [32].

When asked about their impressions of the computer agent, the most frequently mentioned theme (7 participants) was that the computer agent was clear, direct and easy to understand. One participant explicitly said that this clarity was due to the computer agent’s ability to point at the virtual document, with the participant following along:

“She was very direct and very clear when she was explaining it, she was explaining it very nice and slow. And she was pointing to the areas that needed to be focused on. When she was explaining it, she was breaking it down on the paper. Where you couldn’t get lost if you were concentrating on what she was saying. Because it was right there in front of you [points at computer] and it’s like right here [points on paper document], and it’s just she was explaining the whole thing. And I was very comfortable with it because as I was reading it, I understood what she was saying and what I was seeing in front of me.” (49 year old female, adequate literacy)

The second most common impression of the computer agent (4 mentions) was that participants felt they could take as much time as they needed, and did not feel embarrassed asking the computer agent to repeat itself:

“Elizabeth [the name of the agent] was very, uh, patient, and if she says something to you that you don’t understand, she will repeat it again if you push the button. And she would take her time.” (68 year old female, limited literacy).

“For me, you know, when it’s on the computer I can do it five times over if I want to. I can just hit repeat, wait I didn’t understand it, I can just repeat it again. You know, but I wouldn’t do that with you [a human] because if I didn’t understand it I might ask you one time to repeat it, and if I still didn’t understand it I wouldn’t ask you to repeat it. Because I wouldn’t want to seem stupid.” (47 year old female, adequate literacy).

Two participants said that they liked the computer agent because she was polite and did not talk down to them:

“She was really polite, she was really polite. That I liked. Besides the fact, more important than anything else, she looked at me and she talked to me. You know, she was talking to me as a person, as opposed to, um, looking down on me and saying ‘did you understand me!’ you know? And that made me feel really good.” (50 year old female, adequate literacy)

Other positive comments included that the computer agent was “informative” and “correct” (2 mentions), that the computer agent was “honest” (1 mention), and that the respondents liked using the touch screen instead of a mouse (1 mention).

There were two negative comments about the computer agent. One participant mentioned that the computer agent seemed “impersonal”, and another felt the computer agent was too “robotic”.

When asked whether they would prefer that health documents be explained to them by a person or a computer agent, 3 of the 9 participants who interacted with the agent said they would prefer the agent, 1 said they would prefer a human, and 1 said that either would be equally acceptable (the others did not respond). For example:

“I think she did the same as talking to an ordinary person in the hospital. Uh, except she would give you a little more information than they do. Because sometimes they only tell you a little bit, you know what I’m saying, and she explain the whole thing.” (68 year old female, limited literacy).

4. Discussion and Conclusion

4.1 Discussion

The computer agent did as well as or better than the human on all measures, with participants (regardless of literacy level) reporting higher levels of satisfaction with the consent process and greater likelihood to sign the consent document when it was explained by the computer agent, compared to either explanation by a human or self-study. In addition, among participants with adequate health literacy, explanation by the computer agent produced the highest mean comprehension scores (though not significantly higher than explanation by a human); participants with limited literacy scored poorly on comprehension in all treatment conditions.

The tendency for participants with inadequate health literacy to ask more questions of the agent may be due to their being comfortable asking a computer repeated questions without feeling “stupid” (as one participant put it). However, an alternate hypothesis is that they asked more questions of the agent because they had a more difficult time understanding it.

The low comprehension scores of participants with inadequate health literacy indicate that much work remains to make the computer agent effective for this population. One pedagogical method espoused for patients with limited health literacy is “teach back”, in which the patient is asked to teach what they have learned back to the health educator [7]. Although this is difficult to implement in an unconstrained way within our system, it is at least possible to add comprehension checks at key points in the agent-patient conversation and to have the computer agent provide additional information or review if the patient appears to be having problems.
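One way such comprehension checks could work is sketched below. This is a hypothetical design, not something evaluated in this study: after a section, the agent asks a multiple-choice question; a wrong answer triggers a review of that topic, up to an assumed maximum number of reviews, after which the topic is flagged for follow-up by a human. All names and the example questions are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Check:
    topic: str
    question: str
    options: List[str]
    correct: int  # index of the correct option


def run_check(check: Check, answer: Callable[[Check], int],
              review: Callable[[str], None], max_reviews: int = 2) -> Tuple[bool, int]:
    """Ask one question, reviewing the topic after each wrong answer.

    Returns (understood, number of reviews given)."""
    reviews = 0
    while True:
        if answer(check) == check.correct:
            return True, reviews
        if reviews == max_reviews:
            return False, reviews  # give up; leave the topic for a human
        review(check.topic)
        reviews += 1


def check_all(checks: List[Check], answer: Callable[[Check], int],
              review: Callable[[str], None], max_reviews: int = 2) -> List[str]:
    """Run every check and return the topics still unresolved, for human follow-up."""
    unresolved = []
    for c in checks:
        understood, _ = run_check(c, answer, review, max_reviews)
        if not understood:
            unresolved.append(c.topic)
    return unresolved


# Invented example questions.
CHECKS = [
    Check("voluntary", "Do you have to join this study?", ["Yes", "No"], 1),
    Check("sample storage", "Where will your DNA sample be kept?",
          ["At your doctor's office", "In a research repository"], 1),
]

if __name__ == "__main__":
    scripted = iter([0, 1, 0, 0, 0])  # simulated patient selections
    print(check_all(CHECKS, lambda c: next(scripted), lambda t: print("Reviewing", t)))
```

Capping the number of reviews keeps a confused patient from looping indefinitely, and returning the unresolved topics gives the human staff a concrete list to follow up on.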

4.1.1 Limitations

Limitations of our study include the limited generalizability of our findings, especially given the very small convenience sample. The research assistants who explained the consent forms to participants may not be representative of most researchers who perform this function. There are also ecological validity issues with our study settings, although we would expect that in a rushed clinical environment the agent would outperform a typical research assistant by an even wider margin than we observed.

4.2 Conclusion

Our future work is focused on several extensions to the system and on more extensive evaluation. We plan to add audio prompts to the user interface so that patients who are unable to read the text of their conversational responses can still use the system. We are also developing a framework that will allow health document templates to be instantiated and explained, so that, for example, consent form “boilerplates” can be instantiated with the details of a research study, and the computer agent can explain the resulting document to a patient without further scripting or programming. We have also developed the capability for the agent to keep track of specific issues and questions that it could not resolve for the patient, and to output these at the end of the session for follow-up by a human research assistant or clinician. We also plan to explore integrating the conversational agent with other multimedia content, such as video clips, to further explain complex topics such as randomization, or numerical concepts such as rates, which can be hard to convey verbally. Finally, we plan to replicate the evaluation study in a clinic or hospital environment, where we would expect the advantages of the computer agent-based approach to be even greater given the time pressures that most human providers are under.
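In its simplest form, the planned template framework could fill study-specific details into both a consent boilerplate and its explanation script. A minimal sketch using Python's standard `string.Template`; the template text, placeholder names, and study details are all invented for illustration, and the actual framework may work quite differently.

```python
from string import Template
from typing import Dict, Tuple

# Hypothetical consent boilerplate; $-placeholders hold study-specific details.
CONSENT_TEMPLATE = Template(
    "You are invited to take part in $study_title. "
    "The study is led by $investigator at $institution."
)

# The explanation script is parameterized the same way, so one script can
# explain every consent form instantiated from the boilerplate.
EXPLANATION_TEMPLATE = Template(
    "This first paragraph gives the name of the study, $study_title, "
    "and tells you that $investigator is in charge of it."
)


def instantiate(details: Dict[str, str]) -> Tuple[str, str]:
    """Return (consent text, explanation script); raise KeyError if a detail is missing."""
    return (CONSENT_TEMPLATE.substitute(details),
            EXPLANATION_TEMPLATE.substitute(details))


if __name__ == "__main__":
    form, script = instantiate({
        "study_title": "the Example Genetic Repository Study",
        "investigator": "Dr. A. Example",
        "institution": "Example University",
    })
    print(form)
    print(script)
```

Using `substitute` rather than `safe_substitute` makes a missing study detail fail loudly instead of leaving a raw placeholder in the document a patient sees.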

4.3 Practice Implications

This work suggests that animated computer agents can perform as well as people in explaining health documents to patients. For the administration of informed consent in particular, it is possible to construct computer agents that produce at least as much understanding of the consent form, satisfaction with the process, and study participation as administration of informed consent by human research assistants. Time and cost savings for research studies or medical procedures requiring informed consent could be significant when large numbers of patients are involved. The use of this technology may also lead to more ethical treatment of patients through more controlled administration of informed consent and automated comprehension tests.

Acknowledgments

Role of Funding

This work was supported by a grant from the NIH National Heart, Lung, and Blood Institute (HL081307-01). The sponsors had no involvement in the study design; in the collection, analysis, and interpretation of data; in the writing of the report; or in the decision to submit the paper for publication.

Thanks to Lindsey Hollister and Maggie McElduff for their assistance in conducting the study.

Footnotes

Conflict of interest

The authors have no conflicts of interest that could influence this work.

We confirm all patient/personal identifiers have been removed or disguised so the patient/person(s) described are not identifiable and cannot be identified through the details of the story.


Contributor Information

Timothy W. Bickmore, Northeastern University College of Computer and Information Science, Boston, MA, USA

Laura M. Pfeifer, Northeastern University College of Computer and Information Science, Boston, MA, USA

Michael K. Paasche-Orlow, Boston University School of Medicine, Boston, MA, USA

References

- 1. Clinite J, Kabat H. Improving patient compliance. J Am Pharm Assoc. 1976;16:74–6.

- 2. Madden E. Evaluation of outpatient pharmacy patient counseling. J Am Pharm Assoc. 1973;13:437–43.

- 3. Morris L, Halperin J. Effects of Written Drug Information on Patient Knowledge and Compliance: A Literature Review. Am J Public Health. 1979;69:47–52. doi: 10.2105/ajph.69.1.47.

- 4. Qualls C, Harris J, Rogers W. Cognitive-Linguistic Aging: Considerations for Home Health Care Environments. In: Rogers W, Fisk A, editors. Human Factors Interventions for the Health Care of Older Adults. Mahwah, NJ: Lawrence Erlbaum; 2002. pp. 47–67.

- 5. Frankel R. Emotion and the Physician-Patient Relationship. Motivation and Emotion. 1995;19:163–73.

- 6. Richmond V, McCroskey J. Immediacy. In: Nonverbal Behavior in Interpersonal Relations. Boston: Allyn & Bacon; 1995. pp. 195–217.

- 7. Doak C, Doak L, Root J. Teaching Patients with Low Literacy Skills. 2nd ed. Philadelphia, PA: JB Lippincott; 1996.

- 8. Clark HH, Brennan SE. Grounding in Communication. In: Resnick LB, Levine JM, Teasley SD, editors. Perspectives on Socially Shared Cognition. Washington: American Psychological Association; 1991. pp. 127–49.

- 9. Davidoff F. Time. Ann Intern Med. 1997;127:483–5. doi: 10.7326/0003-4819-127-6-199709150-00011.

- 10. Bickmore T, Gruber A, Picard R. Establishing the computer-patient working alliance in automated health behavior change interventions. Patient Educ Couns. 2005;59:21–30. doi: 10.1016/j.pec.2004.09.008.

- 11. Bickmore T, Picard R. Establishing and Maintaining Long-Term Human-Computer Relationships. ACM Transactions on Computer-Human Interaction. 2005;12:293–327.

- 12. Katz MG, Jacobson TA, Veledar E, Kripalani S. Patient Literacy and Question-asking Behavior During the Medical Encounter: A Mixed-methods Analysis. J Gen Intern Med. 2007. doi: 10.1007/s11606-007-0184-6.

- 13. Paasche-Orlow MK, Parker RM, Gazmararian JA, Nielsen-Bohlman LT, Rudd RR. The prevalence of limited health literacy. J Gen Intern Med. 2005;20:175–84. doi: 10.1111/j.1525-1497.2005.40245.x.

- 14. National Assessment of Adult Literacy. 2004. Accessed at http://nces.ed.gov/naal/.

- 15. Nielsen-Bohlman L, Panzer AM, Hamlin B, Kindig DA, editors. Institute of Medicine, Committee on Health Literacy, Board on Neuroscience and Behavioral Health. Health Literacy: A Prescription to End Confusion. Washington, DC: National Academies Press; 2004.

- 16. Ad Hoc Committee on Health Literacy for the Council on Scientific Affairs, American Medical Association. Health literacy: report of the Council on Scientific Affairs. JAMA. 1999;281:552–7.

- 17. Paasche-Orlow M, Greene SM, Wagner EH. How health care systems can begin to address the challenge of limited literacy. J Gen Intern Med. 2006;21:884–7. doi: 10.1111/j.1525-1497.2006.00544.x.

- 18. Volandes A, Paasche-Orlow M. Health literacy, health inequality and a just healthcare system. Am J Bioeth. 2007;7:5–10. doi: 10.1080/15265160701638520.

- 19. Ogloff JRP, Otto RK. Are Research Participants Truly Informed? Readability of Informed Consent Forms Used in Research. Ethics & Behavior. 1991;1:239. doi: 10.1207/s15327019eb0104_2.

- 20. Paasche-Orlow MK, Taylor HA, Brancati FL. Readability Standards for Informed-Consent Forms as Compared with Actual Readability. N Engl J Med. 2003;348:721–6. doi: 10.1056/NEJMsa021212.

- 21. Wogalter MS, Howe JE, Sifuentes AH, Luginbuhl J. On the adequacy of legal documents: factors that influence informed consent. Ergonomics. 1999;42:593–613.

- 22. Sugarman J, Lavori PW, Boeger M, et al. Evaluating the quality of informed consent. Clinical Trials. 2005;2:34. doi: 10.1191/1740774505cn066oa.

- 23. Sudore R, Landefeld C, Williams B, Barnes D, Lindquist K, Schillinger D. Use of a modified informed consent process among vulnerable patients: a descriptive study. J Gen Intern Med. 2006;21:867–73. doi: 10.1111/j.1525-1497.2006.00535.x.

- 24. Bickmore T, Pfeifer L, Yin L. The Role of Gesture in Document Explanation by Embodied Conversational Agents. International Journal of Semantic Computing. 2008;2:47–70.

- 25. Cassell J, Vilhjálmsson H, Bickmore T. BEAT: The Behavior Expression Animation Toolkit. In: Proceedings of SIGGRAPH ’01. Los Angeles, CA; 2001. pp. 477–86.

- 26. Informed Consent form template for genetic repository research. Accessed at http://ccr.coriell.org/Sections/Support/NIGMS/Model.aspx?PgId=216.

- 27. Davis TC, Long SW, Jackson RH, et al. Rapid estimate of adult literacy in medicine: a shortened screening instrument. Fam Med. 1993;25:391–5.

- 28. Lindau S, Basu A, Leitsch S. Health literacy as a predictor of follow-up after an abnormal Pap smear: a prospective study. J Gen Intern Med. 2006;21:829–34. doi: 10.1111/j.1525-1497.2006.00534.x.

- 29. Mancuso C, Rincon M. Impact of health literacy on longitudinal asthma outcomes. J Gen Intern Med. 2006;21:813–7. doi: 10.1111/j.1525-1497.2006.00528.x.

- 30. Sudore R, Yaffe K, Satterfield S, et al. Limited literacy and mortality in the elderly: The health, aging, and body composition study. J Gen Intern Med. 2006;21:806–12. doi: 10.1111/j.1525-1497.2006.00539.x.

- 31. Lincoln A, Paasche-Orlow M, Cheng D, et al. Impact of health literacy on depressive symptoms and mental health-related quality of life among adults with addiction. J Gen Intern Med. 2006;21:818–22. doi: 10.1111/j.1525-1497.2006.00533.x.

- 32. Taylor SJ, Bogdan R. Introduction to Qualitative Research Methods. John Wiley & Sons; 1998.