Abstract

The quality of healthcare is important to American consumers, and discussions of quality will be a driving force in improving the delivery of healthcare in America. Funding agencies are proposing a variety of quality initiatives, such as Centers of Excellence, Pay-for-Participation and Pay-for-Performance, to overhaul the nation’s healthcare delivery system. However, it is quite uncertain whether these initiatives will succeed in curbing the unchecked growth in US healthcare spending, and physicians are understandably concerned about further intrusion into the practice of medicine. This article outlines the genesis of the quality movement and discusses its effect on the surgical community.

Keywords: quality, health care, pay-for-performance, hand surgery, outcomes

Healthcare will be a decisive issue in the 2008 US presidential election. Over the coming months, the candidates’ visions for future healthcare policy will be discussed, debated and dissected. In a December 2007 poll by the Kaiser Family Foundation, healthcare ranked second on a list of voters’ most important issues.1 Indeed, 21% of Americans named healthcare as the single most important issue in their choice for president in this election.1 In contrast, in 2004, healthcare ranked only fourth among decisive issues, with just 14% of those polled considering it the most important.2 Healthcare has remained a major concern because restricted access to affordable care has eroded the standard of living that many Americans expect.

Any discussion of healthcare is likely to touch on a trio of topics: cost, access and quality. These topics weigh on the minds of healthcare consumers as well. A September 2007 CBS News poll found that 66% of registered voters were dissatisfied with the quality of healthcare in the US.3 Another recent Kaiser Family Foundation poll found that 80% of respondents were worried that the quality of the healthcare services they receive would worsen.4 Furthermore, 81% of Americans reported that they were dissatisfied with the cost of healthcare in the US, up from 62% in 2004.2, 3 (Figure 1)

Figure 1.

Percent of Americans dissatisfied with US healthcare costs and US healthcare quality

Adapted from CBS News and the Kaiser Family Foundation1, 3, 4

There is much to be dissatisfied about. US healthcare spending is among the highest in the world, averaging $7,026 per person (a total of $2.1 trillion) in 2006, and it is growing at more than 6.7% per year.5–7 Despite continually increasing expenditures, the US has not enjoyed the quality that should accompany this enormous investment.6 (Figure 2) The stakes are high for special interest groups seeking to protect their “turf” in this battle over healthcare allocations. These groups, which include the government, insurance companies, health maintenance organizations, consumer groups (such as the American Association of Retired Persons), employees of corporations and ordinary consumers, have interests that conflict with those of hospitals and physician organizations as each tries to extract as much as possible from a fixed pie of healthcare expenditure.

Figure 2.

Health attainment (disability-adjusted life expectancy) and healthcare spending (% of GDP)

Adapted from World Health Organization6

The current system of US medical care is based on the free-market economic model, in which supply and demand create a mutually beneficial market for both buyers and sellers. Princeton health economist Dr. Uwe Reinhardt supports this free-market model. He believes that competition in medicine is healthy and can give consumers the ability to choose among providers for the highest quality of care.8 However, he also recognizes that medicine is a unique field that is influenced by government regulation, consumer norms and market prices.8 In addition to not holding prices in check, the existing US provider reimbursement system pays little attention to quality, basing payment instead on the volume and intensity of services provided.9, 10 As the US moves toward a single payor system like those adopted by many industrialized nations, quality metrics will be instituted to improve the efficiency of service delivery by focusing on preventive care measures and minimizing costly complications.11

Evolution of the US Health Care System

Prior to the 1970s, private and public payors reimbursed physicians and hospitals their customary fees. Charges were submitted to insurance carriers, which typically paid the full amount. However, as the cost of healthcare continued to rise, Medicare and private insurers adopted Diagnosis-Related Groups (DRGs), which introduced the concept of fixed case-rate payment. Rather than paying hospitals for each test and procedure individually, payors paid hospitals a specific rate based on the patient’s diagnosis.10 The DRGs introduced a capitation-like concept that shifted the financial burden to the hospitals. The aim of the DRG system is to encourage hospitals to discharge patients earlier and to curb the ordering of expensive, often optional, tests. Because hospitals are paid a set amount, they retain more of their reimbursement if they can practice medicine more cost-effectively. The DRG model had a great effect in reducing hospital length of stay. For example, patients undergoing elective surgical procedures were once often admitted to the hospital a few days before surgery for preoperative evaluation. With pressure placed on hospitals to discharge patients earlier, minimally invasive procedures, such as laparoscopic operations, have become much more popular. Additionally, preoperative tests and evaluations are now performed in an outpatient setting. As a consequence, many surgical procedures have shifted from inpatient to outpatient facilities.

The Resource-Based Relative Value Scale (RBRVS), introduced in the late 1980s, was another attempt to decrease healthcare costs by limiting physician payment according to a measure of the intensity of their services.12 Although the RBRVS aimed to curb physician payment, it could induce physicians to provide more services to make up for the decrease in payments. Finally, the proliferation of health maintenance organizations (HMOs) temporarily decreased healthcare expenditures by decreasing healthcare coverage and capitating physician payments. This shifted financial responsibility onto physicians, while the executives of certain HMOs reaped large profits at the expense of the American people.13 The HMOs’ “take it or leave it” fee schedules hampered physicians’ ability to receive fair payment for their services because of anti-trust limitations on physicians’ contract negotiations.14 These failed initiatives to curb healthcare costs have led physicians and the general public to view the quality movement with great skepticism. Some have questioned the movement’s true intentions, casting it as yet another intrusion into physician autonomy and a veiled attempt to cut physician income.

The Institute of Medicine (IOM), a branch of the National Academy of Sciences, provides scientifically informed, unbiased analysis and advice to policy-makers, medical professionals and the general public.15 It has issued two important publications highlighting the quality problems in American medicine. In the first, “To Err Is Human,” the IOM estimated that as many as 98,000 deaths per year in the US are caused by medical error.16 In the second, “Crossing the Quality Chasm: A New Health System for the 21st Century,” the IOM made recommendations for improving the American healthcare system.17 In this report, the IOM emphasizes that the gap between the current state of American healthcare and what is needed has widened. This chasm is caused by changing healthcare needs, new and increased use of technology, and the growth of the elderly population, many of whom have chronic conditions.17 To begin remedying this situation, the IOM recommended (1) applying evidence to healthcare delivery, (2) using information technology more appropriately, (3) better training the healthcare workforce, and (4) aligning payment with quality improvement.17 Clearly there is much room for improvement in the quality of American healthcare.

Definition of healthcare quality

Before any discussion of healthcare quality can begin, one must define exactly what quality is. Although the definition has evolved over the years, the IOM’s definition is the most widely used.18 Published in 1990, the IOM’s definition states that quality is “the degree to which health services for individuals and populations increase the likelihood of desired health outcomes and are consistent with current professional knowledge.”17 But this straightforward definition masks the difficulty of actually measuring healthcare quality.

The measurement of healthcare quality is fraught with potholes and paradoxes. First, there are numerous stakeholders (providers, patients, healthcare organizations, insurers, purchasers of healthcare), each with its own interests to protect, which often conflict with those of other stakeholders. Second, it is virtually impossible to create a completely error-free measure that is applicable to every situation.19 Finally, the demands placed on quality measures are often unrealistic. Avedis Donabedian, MD, a pioneer in the field of healthcare quality measurement, observed that those who are not familiar with quality assessment “demand measures that are easy, precise, and complete – as if a sack of potatoes being weighed.”20

Measurement of healthcare quality

Quality can be assessed in one of three domains: Structure, Process and Outcome. Structural assessments are based on the attributes of the setting in which care is received. These attributes may be of the physician (such as specialty or years in practice), the hospital (staffing characteristics, facilities) or the healthcare system as a whole (financial resources, staff organization).20 Process denotes the components of the encounter between patient and healthcare provider, in other words, the actions taken by both patient and provider.20 For example, process measures may include whether compression stockings are placed for long operations or whether a preoperative time-out is instituted to avoid wrong-site surgery. Outcome assessments are, quite simply, the status of the patient following care.20 Outcomes may include health-related quality of life or morbidity and mortality rates.

Outcome assessment was one of the first measures of healthcare quality. In 1916, Ernest Amory Codman, the father of the modern outcomes movement, suggested methods to monitor what he termed the “end results” of care.21 But for certain procedures or conditions, outcomes can be quite elusive. Outcomes after carpal tunnel release, for example, cannot be measured accurately with physical tests such as grip strength or range of motion. Assessment of these outcomes must instead rely on patient-rated instruments that capture patients’ perception of their symptom and functional improvement. For other procedures, such as silicone arthroplasty, certain complications such as joint loosening may take many years to develop, making it difficult for surgeons and therapists to determine the procedure’s effectiveness unless patients are followed for many years after surgery.

These difficulties have led health analysts to turn toward structure and process measures as proxies for quality of care. Many structure and process measures can be assessed relatively easily and precisely. Information on institutional procedure volume or the practice of administering pre-operative antibiotics is readily available or can be abstracted from patient charts with little difficulty. Because of their straightforward nature, these are generally thought to be adequate quality measures.19 There are drawbacks, however. Outcomes do not always suffer despite poor performance on structure and process measures. Most patients do not develop wound infections, even when they do not receive prophylactic antibiotics. That these patients escape poor outcomes by luck alone does not mean that they received high-quality care. One must be careful not to confuse a lack of negative outcomes with good quality of care.

Measuring surgical quality

Quality can be assessed both explicitly and implicitly. Explicit quality measures are developed in advance of the assessment, in contrast to implicit quality assessment that is based on the subjective evaluation of the assessor.20 The best of the explicit measures are evidence-based, validated and have been found to be reliable.19 When a priori assessment measures have not been developed, implicit measures may be used. This type of assessment is based on personal experience and/or expert judgment.20 For example, a physician or therapist may be asked to review a patient’s care to determine if the care is “good enough.”19

For certain medical specialties and medical conditions, process and outcome measures have been well defined. Quality of emergency department care for acute myocardial infarction can be measured by examining the administration of β-blockers and aspirin or by the information derived from an electrocardiogram.22 For cardiac conditions such as this, outcomes can be measured via mortality. Acute myocardial infarction care shows the ideal relationship between process measures and outcome measures because there is strong evidence that the measured processes have a direct impact on the outcome (death) in question.23 Few hand surgery or therapy procedures have such well-defined and proven measures, as mortality is usually not a dominant outcome. This makes the quality of hand surgery and therapy much more difficult to assess.

Thinking back to the three domains of quality measurement, it is difficult to say whether any one is better than the others for measuring surgical quality. Structural measures, such as hospital or surgeon procedure volume, are easy to obtain, but the value of volume for evaluating quality across a range of procedures has been debated.24–27 A hospital or surgeon that performs a large number of a given procedure may be shown to have lower mortality and morbidity for it. But the good report card given to high-volume hospitals or surgeons may reflect other variables, such as case mix or intangible factors, that cannot be attributed entirely to the hospitals and surgeons. Additionally, structural measures generally cannot be easily manipulated, for example, to test the effect of volume on outcomes in a clinical trial.28 This makes the relationship between structure and outcomes difficult to evaluate.

Process measures, such as prophylactic antibiotic administration for patients having total joint arthroplasty, are already being used in some surgical quality initiatives.29, 30 Because most process measures are performed by the surgeon or therapist directly, or under his or her direct supervision, they are considered “fairer” quality measures than structural measures, which are often outside surgeons’ or therapists’ control.28 But process measures are very procedure-specific and may even be patient-specific. This means that a large number of process measures will need to be developed and validated to cover the range of surgical procedures. Finally, process measures may relate to pre-operative care rather than to the operation itself or post-operative care.31

In surgery, outcomes draw the most attention because the “end result” is what matters to the surgical patient. But measuring surgical outcomes can be very difficult. Thus far, many initiatives that measure surgical quality have used morbidity and mortality as outcome indicators.32 These are attractive measures because morbidity and mortality are easily defined and can be obtained from existing administrative data. But mortality is relevant only for certain high-risk procedures. Unplanned reoperation has been suggested as a possible outcome measure;33 like morbidity and mortality, it is easy to define and can be obtained from preexisting data. A 2007 study in the Netherlands found that 70% of reoperations were due to errors in surgical technique, making reoperation an attractive quality indicator.33 Unfortunately, from a quality measurement standpoint, reoperation happens relatively infrequently, making it less than ideal for low-volume procedures.33

The use of outcome measures is further complicated by the need for careful case-mix adjustment.31 Such adjustment avoids “punishing” hospitals and surgeons who take on more complex cases that naturally may have poorer outcomes. But case-mix adjustment is not an exact science. There are multiple methodologies for case-mix adjustment, and these different methods can produce differing results.34 Case-mix adjustment is further complicated by insufficient evidence about which prognostic factors actually govern different outcomes or treatment needs.
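One common way to perform case-mix adjustment is indirect standardization: each provider’s observed number of deaths is compared with the number expected from a risk model, giving an observed-to-expected (O/E) ratio. The minimal Python sketch below assumes that per-patient predicted risks are already available; the function name `observed_expected`, the two hospitals and all risk values are hypothetical illustrations, not data from any program discussed here. A different risk model would yield different expected counts, which is one reason different adjustment methods can disagree.

```python
from math import fsum

# Each patient record: (died within the outcome window, model-predicted risk of death).
# The predicted risks are hypothetical; in practice they would come from a validated risk model.
Patient = tuple[bool, float]


def observed_expected(patients: list[Patient]) -> tuple[int, float, float]:
    """Return (observed deaths, expected deaths, O/E ratio) for one provider."""
    observed = sum(1 for died, _ in patients if died)
    expected = fsum(risk for _, risk in patients)  # expected deaths = sum of predicted risks
    return observed, expected, observed / expected


# Hypothetical hospital A: complex case mix, 8% predicted risk per patient, 8 deaths in 100.
hospital_a = [(True, 0.08)] * 8 + [(False, 0.08)] * 92
# Hypothetical hospital B: low-risk case mix, 2% predicted risk per patient, 4 deaths in 100.
hospital_b = [(True, 0.02)] * 4 + [(False, 0.02)] * 96

for name, pts in [("A", hospital_a), ("B", hospital_b)]:
    obs, exp, ratio = observed_expected(pts)
    print(f"Hospital {name}: crude mortality {obs / len(pts):.0%}, O/E ratio {ratio:.2f}")
```

Here hospital A has the higher crude mortality (8% versus 4%), yet its O/E ratio is 1.00 against 2.00 for hospital B, because A’s patients were sicker to begin with. An O/E ratio above 1 means more deaths than the risk model predicted.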

Despite these difficulties, surgical quality initiatives do exist. One of the largest is the Veterans Affairs (VA) National Surgical Quality Improvement Program (NSQIP). Established in 1994, NSQIP supplies almost constant feedback, in the form of rankings and outcomes, to VA medical centers on major operations in 9 surgical specialties.35 This feedback allows individual hospitals to monitor their own quality and see how they compare to other VA medical centers. The initiative was originally designed to assess surgical outcomes. But the act of measuring and examining the provided feedback induced behavioral changes that resulted in a 27% decrease in 30-day mortality in the first 5 years of the program.35 In 1998, NSQIP began its Private Sector Initiative, providing feedback on general and vascular surgery to participating academic medical centers and community hospitals.36

Accurate measurement of surgical quality has many potential benefits. The first and most obvious is the improvement of surgical care. Patients stand to benefit from more than just better quality of care, however. As healthcare in general becomes more consumer-focused, patients will want to make more of their own healthcare decisions, and surgery is an excellent field in which to do so. Surgical procedures are often planned in advance, giving patients sufficient time to compare different surgeons and hospitals. Furthermore, because surgery is often a one-time event, after which patients usually return to their previous physicians for ongoing management, patients are willing to travel farther for surgery than they would for medical treatment.32

Measuring quality in hand surgery

Applying quality measures developed for other surgical fields to hand surgery is problematic. As mentioned above, the standard measure for most surgical quality initiatives is mortality. But mortality in hand surgery is less than 1%,37 so it would not be a useful outcome indicator. Measuring process and structure is no different for hand surgery than for any other surgical specialty. Process measures that indicate the quality of pre-operative care are useful, but they do not measure the quality of the hand surgery itself, that is, how well the operation is performed and the surgeon’s level of expertise. Structural measures such as volume are of limited use because many other factors determine referral patterns. Structural factors such as surgeon subspecialty training or therapist certification have the potential to be predictors of good surgical outcomes.28 In a highly specialized field like hand surgery, such training may be an important quality indicator, given the rigor of the training path.

Hand surgery and therapy are unique in that injuries and disabilities of the hand can have a substantial impact on patients’ quality of life. In lieu of functional outcome measures, such as grip strength or range of motion, patient-rated outcomes have been intensively studied in this specialty.38 Patient-rated questionnaires, such as the Michigan Hand Outcomes Questionnaire (MHQ)39, 40 or the Disabilities of the Arm, Shoulder and Hand (DASH) questionnaire,41 allow patients to report their own level of functional disability.

Applications of quality data

Report Cards

The VA’s experience with NSQIP has repeatedly shown that simply providing feedback can promote significant improvements in outcome indicators, in this case 30-day mortality rates.42 More often, however, quality data are used to compare entities. The Healthcare Effectiveness Data and Information Set (HEDIS) collects data on 71 measures across 8 domains to compare health plans and hospitals.43 Mostly process and structure measures are used, with patient satisfaction the only outcome measure. These measures have also been criticized for imprecise data and poor translation to actual quality of practice.44, 19 Despite these shortcomings, many organizations use such data to produce report cards detailing hospital performance on certain surgical procedures. These report cards allow consumers to choose where to have their surgery based on the hospitals’ previous performance.

By far the most commonly ranked procedure is coronary artery bypass graft surgery (CABG). As report cards go, this is an ideal procedure: it is performed frequently at many hospitals and lends itself to the use of mortality as an outcome measure.45 New York was the first state to begin publicly reporting hospital CABG data, in 1990, and currently seven other states regularly publish these data.45, 46 These data are almost exclusively derived from hospital discharge reports and are generally limited to procedure volume and mortality.46 Private companies have joined the report card game as well. Companies such as Health Grades, Inc. currently provide for-fee report cards on hospitals by condition or procedure. These companies rely on Medicare data and report on a wide range of diagnoses, although their coverage of surgical procedures is somewhat lacking. Rather than reporting actual data, they use a star system (1, 3 or 5 stars, with 5 being the best), which may be easier for consumers to understand but can compromise accuracy. Krumholz et al. compared Health Grades’ ratings with more traditional quality measures, also derived from Medicare data.47 They found that although the ratings could distinguish between aggregate performance groups, they were of no use when comparing individual hospitals.47 Finally, the popular press has begun ranking hospitals as well. US News and World Report publishes an annual issue devoted to listing America’s best hospitals in 17 specialties, using Medicare data and physician surveys. Report cards and national rankings are not solely an American phenomenon: Canada, Great Britain and Australia all have extensive systems for reporting healthcare performance.48–50

Although these report cards and popular press rankings are aimed at consumers, and thus are not as rigorous as traditional quality improvement initiatives, they are nonetheless important. As American medicine becomes more consumer-driven, patients will take more responsibility for both choosing and paying for their healthcare. Report cards and rankings provide the transparency that consumers demand. But there is some indication that patients either are unaware of these reports or do not use them.51 Additionally, there are questions regarding their accuracy.47 Given the push toward greater transparency in medical care, the prominence of hospital report cards can only grow.

Even more controversial are physician report cards. Several states publish CABG performance data on individual cardiac surgeons, sometimes including mortality rates. Understandably, this makes many surgeons uncomfortable, and these report cards can have unintended consequences. A 1996 survey of cardiovascular surgeons in Pennsylvania found that most surgeons had become less willing to perform CABG on severely ill patients since the state began its individual surgeon reporting system.52 Perhaps most troubling are consumer ratings of physicians. Zagat, best known for its restaurant guides, has joined forces with an insurance company to provide an interface that allows patients to rate their physicians on trust, availability, communication and office environment.53 Although Zagat officials freely admit that the ratings are not scientific and reflect patient experience rather than quality of care,53 there is a risk that readers of the reviews may not take this into consideration.

No surgeon or hospital wants to provide poor-quality care, and some believe that public reporting of quality measures may stimulate improvement among low-performing hospitals. Healthcare payors have developed ways to further reward quality. Currently, most programs reward good performance with monetary bonuses, but there are plans to penalize poorly performing centers by withholding a percentage of reimbursements as well.29

Centers of Excellence

One of the most basic uses of quality data is the centers of excellence model. In these programs, hospitals that have the best results for a particular procedure are identified and patients are steered toward these centers. Payors do this by limiting payment to only certain high-performing hospitals or by paying for a greater percentage of care at these centers as compared to lower scoring hospitals.31 Centers of excellence programs can be based on pre-existing data, such as surgical volume and mortality, making them relatively easy and inexpensive to set up.31 But, as previously mentioned, these data might not be the best predictors of outcomes.26, 27 Access is another problem with the centers of excellence model. Hospitals that are identified as high-quality are not evenly distributed across the country. Payors may have little power to steer patients toward these hospitals if they are not nearby.54

Pay-for-Participation

The centers of excellence model does not provide direct monetary incentives to high-performing hospitals but instead rewards them with more patients. One of the newest incentive schemes, pay-for-participation, does provide monetary incentives, but on a different basis: incentives are given for participation in quality measurement, regardless of actual quality.31 This is consistent with the philosophy of continuous quality improvement. Pay-for-participation programs create procedure-specific patient outcomes registries. These registries provide regular feedback to hospitals and individual surgeons and allow for collaboration between hospitals. Participants meet regularly to discuss performance and brainstorm ways to improve quality. Improvement is judged collectively, not on individual hospital or surgeon performance. This is the same structure the VA uses for NSQIP, which has been very effective in improving performance on surgical outcome measures.35

One of the hallmarks of pay-for-participation programs is the lack of public reporting.31 Because the information is designed to be used by hospitals and surgeons, the indicators measured are very specific and may not translate well to public report cards. This makes pay-for-participation programs popular among surgeons but also reduces public support for them.31 The major drawback is that pay-for-participation programs are expensive and complicated to set up. Infrastructure for data collection must be in place, every site needs at least one data abstractor, and high-level officials need to meet regularly. However, the programs’ acceptance by surgeons and their ability to improve quality may outweigh these initial costs. The appeal of this model is that quality can be improved through collective effort, rather than through a tier system in which only the top-performing centers are rewarded while low-performing centers continue to languish at the bottom of the quality spectrum.

Pay-for-Performance

Pay-for-performance takes cues from both the centers of excellence model and pay-for-participation. Simply put, hospitals that score high on selected quality measures are rewarded with a monetary bonus, and low-scoring centers may likewise be penalized a portion of their reimbursement.31 When process measures are used as a proxy for quality, pay-for-performance programs have been quite successful in improving adherence to the measured processes.31 But as mentioned above, these processes may not reflect actual surgical care. Despite the potential monetary gain (or threat of monetary loss), pay-for-performance initiatives offer very little external motivation. The incentives are often quite low, possibly too low to entice participation, especially for low-performing centers, where substantial change and large infrastructure investments may be necessary to earn these meager rewards.55

The first major nationwide pay-for-performance initiative was instituted by the Centers for Medicare and Medicaid Services (CMS) and piloted in 2003. The program uses a classic pay-for-performance model. Core performance measures were developed for acute myocardial infarction, coronary artery bypass graft, heart failure, community-acquired pneumonia, and hip and knee replacement.29 Quality in these areas was based almost exclusively on process measures. Hospitals report their compliance with the performance measures, and CMS then ranks the hospitals on this performance.29 These rankings will be published on the internet and used to distribute incentives and assess penalties. Hospitals in the top 10% receive a 2% bonus on their Medicare payments, hospitals in the next 10% receive a 1% bonus, and those that round out the top 50% receive recognition for their quality but no monetary reward. Finally, hospitals that have not met minimum performance levels will be penalized as much as 2% of their Medicare reimbursements.29
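The tiered reward structure described for the CMS program can be expressed as a simple ranking rule. The Python sketch below is a simplified model of the tiers as described in this article, not CMS’s actual specification: the `Hospital` class, the composite `score`, the hospital names and the `minimum_score` threshold are all hypothetical, ties are ignored, and every hospital below the minimum is assigned the maximum 2% penalty.

```python
from dataclasses import dataclass


@dataclass
class Hospital:
    name: str
    score: float  # hypothetical composite compliance score (0-100) across core process measures


def assign_tiers(hospitals: list[Hospital], minimum_score: float) -> dict[str, tuple[str, float]]:
    """Map each hospital to (tier, Medicare payment adjustment as a fraction).

    Tiers follow the description in the text: top 10% -> +2%, next 10% -> +1%,
    rest of the top 50% -> recognition only, below the minimum -> -2% (the maximum penalty).
    `minimum_score` is a hypothetical threshold; ties are not handled specially.
    """
    ranked = sorted(hospitals, key=lambda h: h.score, reverse=True)
    n = len(ranked)
    tiers = {}
    for rank, h in enumerate(ranked, start=1):
        # Integer comparisons avoid floating-point edge cases at the 10%/20%/50% cut-offs.
        if h.score < minimum_score:
            tiers[h.name] = ("below minimum", -0.02)
        elif rank * 10 <= n:
            tiers[h.name] = ("top 10%", 0.02)
        elif rank * 10 <= 2 * n:
            tiers[h.name] = ("next 10%", 0.01)
        elif rank * 2 <= n:
            tiers[h.name] = ("top 50%", 0.0)
        else:
            tiers[h.name] = ("no recognition", 0.0)
    return tiers


# Ten hypothetical hospitals scoring 100, 95, ..., 55, with a hypothetical minimum of 60.
hospitals = [Hospital(f"H{i}", 100 - 5 * i) for i in range(10)]
for name, (tier, adj) in assign_tiers(hospitals, minimum_score=60).items():
    print(f"{name}: {tier}, adjustment {adj:+.0%}")
```

In this example H0 falls in the top 10%, H1 in the next 10%, H2 through H4 round out the top 50%, and H9 is penalized. For a hypothetical hospital receiving $1,000,000 in Medicare payments, a 2% bonus or penalty amounts to $20,000.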

As expected, there have been complaints that these measures do not accurately indicate quality of care.56 In addition, participating institutions will need to hire staff to monitor these metrics, often at a cost greater than the potential incentives. These complaints are not unique to the CMS program and could be made of any pay-for-performance initiative, yet new programs continue to launch. Consumers continue to demand transparency, payors continue to push for lower costs and everyone continues to clamor for higher quality. Stuck in the middle, according to Dr. Tom Russell, Executive Director of the American College of Surgeons (ACS), are surgeons, who feel besieged by these new demands.57 But the quality initiative, as embodied in the pay-for-performance system developed by CMS, is here to stay.

Hybrid programs

In 2000, 160 private- and public-sector employers who purchase healthcare for 34 million people formed the Leapfrog Group.54 The group’s goals are to reduce preventable medical mistakes, encourage public reporting of quality and outcomes data, reward doctors and hospitals for quality, safety and affordability, and help consumers make smart healthcare decisions.58 A multidisciplinary team identified “leaps” that, when implemented, would result in higher healthcare value: lower costs coupled with higher quality.54 The four “leaps” are Computerized Physician Order Entry, Evidence-Based Hospital Referral, ICU Physician Staffing and the Leapfrog Safe Practice Score. The main activity of the Leapfrog Group is an online, voluntary hospital survey. Recently, the group introduced a hospital rewards program, based on quality and safety, that provides incentives for both participation and excellence.

The Leapfrog Group is an innovative initiative with the potential to have a great impact on the practice of medicine. The group has a large membership, clear goals and national recognition. The number of compliant hospitals has doubled for some “leaps,” and several members have succeeded in using the “leaps” to make consumers more value-conscious when choosing a healthcare provider.54 But the impact has not been as large as it could be. Too few hospitals have opted to participate in the survey, and some purchaser-members have been reluctant to use the survey’s results to guide their healthcare purchasing decisions (for example, by limiting employees’ choices to high-scoring hospitals).54 The Leapfrog Group continues to expand its program, maintaining its innovative position by helping large payors leverage their buying power to demand changes in payment, decreased costs, the adoption of quality measures and an overall increase in healthcare value.

Final thoughts on quality data applications

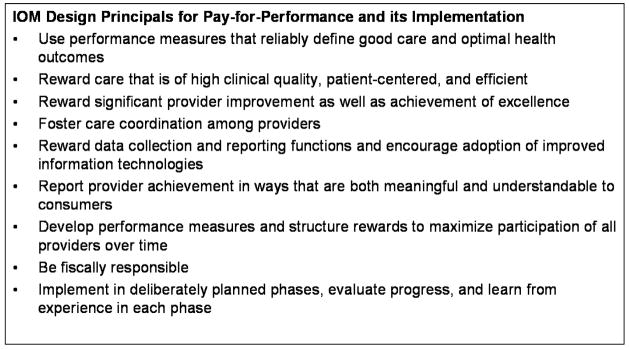

Many quality workgroups are working to steer the national dialogue on quality-of-care initiatives. One of these groups, the IOM, has issued design principles for pay-for-performance programs, including using reliable measures, fostering coordination of care and rewarding data collection.59 (Figure 3) Many see pay-for-performance programs as the wave of the future, although there is little evidence that any one program measures quality better than another.31

Figure 3.

IOM Design Principles for Pay-for-Performance and Its Implementation59

Which model is best often depends on the situation. Measuring the quality of risky, uncommon procedures, such as pancreatic resection and esophagectomy, is best done using the centers of excellence model.31 Pay-for-performance initiatives are best for improving compliance with underused processes of care that are linked to surgical outcomes by high-level evidence, such as venous thromboembolism prophylaxis for high-risk patients.31 But they are not without flaws. Rosenthal et al. examined three preventive care process measures in two pay-for-performance initiatives.55 They found that practices with the lowest compliance at baseline showed the most improvement.55 But these were not the practices that reaped the most rewards. Practices with the highest baseline compliance received over 75% of the incentives, although they showed little to no improvement.55 Despite improving to a much greater degree, the low-baseline practices could not reach the payment threshold and were not rewarded for their efforts. This could discourage participation and quality improvement among the centers that need it most. Additionally, centers with low baseline compliance often have fewer resources.60 Pay-for-performance initiatives that penalize centers already struggling with a lack of resources, many of which care for underserved populations, could further perpetuate health disparities.
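The mismatch between improvement and reward described above follows directly from paying against a fixed threshold. The sketch below is a hypothetical illustration of that mechanism, not a model of the programs Rosenthal et al. studied: the threshold (`THRESHOLD`), bonus amount (`BONUS`), the `Practice` class and both practices' compliance rates are all made-up values chosen only to show the effect.

```python
"""Illustrative sketch: a fixed payment threshold can reward baseline rank
rather than improvement.

All numbers are hypothetical and are not data from Rosenthal et al.
"""
from dataclasses import dataclass

THRESHOLD = 0.80  # hypothetical compliance rate required for payment
BONUS = 1000.0    # hypothetical bonus per qualifying practice


@dataclass
class Practice:
    name: str
    baseline: float  # compliance rate before the program
    followup: float  # compliance rate after the program

    @property
    def improvement(self) -> float:
        """Change in compliance rate from baseline to follow-up."""
        return self.followup - self.baseline


def threshold_payment(p: Practice) -> float:
    """Pay the bonus only if follow-up compliance reaches the threshold."""
    return BONUS if p.followup >= THRESHOLD else 0.0


if __name__ == "__main__":
    practices = [
        Practice("high-baseline", baseline=0.85, followup=0.86),
        Practice("low-baseline", baseline=0.50, followup=0.70),
    ]
    for p in practices:
        print(f"{p.name}: improvement {p.improvement:+.2f}, "
              f"payment {threshold_payment(p):.0f}")
```

Under these assumptions, the low-baseline practice improves by about 20 percentage points and receives nothing. The high-baseline practice improves by about 1 point and receives the full bonus, the same pattern Rosenthal et al. describe.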

Pay-for-participation initiatives avoid this problem by rewarding all participating providers equally, which offers the best potential for overall improvement in surgical quality.31 This encourages all providers to take part in quality measurement initiatives, regardless of baseline performance. Critics may worry that rewarding participation alone will not improve performance, but the NSQIP experience suggests that simply measuring performance can lead to better results.28, 35 There are additional benefits as well. Because these programs do not need to rank large groups of providers or make broad generalizations, they can identify procedure-specific processes that are related to important surgical outcomes, rather than being limited to high-volume or high-risk procedures.31 This makes pay-for-participation useful for all surgical specialties. As previously mentioned, initiating a pay-for-participation program involves substantial monetary and time-commitment barriers, but the investment can pay off by reducing negative outcomes across all procedures, which can raise the overall value of surgical care.

Implications for hand surgery and therapy

The future of healthcare quality measurement is far from certain. Surgical societies, such as the ACS, the American Academy of Orthopaedic Surgeons and the American Society of Plastic Surgeons, are all participating in the quality improvement movement in some way.10, 57, 61 Hand surgeons have both a duty and an interest in participating in these societies’ quality improvement initiatives. Developing accurate quality metrics specific to hand surgery is of the utmost importance, as is ensuring that those who participate in these quality initiatives are ultimately rewarded. Hand surgeons and therapists cannot stand by and hope that the right measures and programs appear. We need to work for change and take the future of hand surgery quality into our own hands.

Acknowledgments

Supported in part by a grant from the National Institute of Arthritis and Musculoskeletal and Skin Diseases (R01 AR047328) and a Midcareer Investigator Award in Patient-Oriented Research (K24 AR053120) (to Dr. Kevin C. Chung).

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Kaiser Health Tracking Poll: Election 2008. Menlo Park, CA: The Henry J. Kaiser Family Foundation; December 2007.

- 2. Blendon RJ, Altman DE, Benson JM, Brodie M. Health care in the 2004 presidential election. N Engl J Med. 2004 Sep 23;351(13):1314–1322. doi: 10.1056/NEJMsa042360.

- 3. Health Care and the Democratic Presidential Campaign. CBS News; September 17, 2007.

- 4. Kaiser Family Foundation poll. Storrs, CT: Roper Center for Public Opinion Research; 2007.

- 5. National Health Expenditure Accounts: 2006 Highlights. [Accessed March 3, 2008]. http://www.cms.hhs.gov/NationalHealthExpendData/downloads/highlights.pdf.

- 6. The World Health Report 2000. Health Systems: Improving Performance. [Accessed March 3, 2008]. http://www.who.int/whr/2000/en/whr00_en.pdf.

- 7. Catlin A, Cowan C, Hartman M, Heffler S. National health spending in 2006: a year of change for prescription drugs. Health Aff (Millwood). 2008 Jan–Feb;27(1):14–29. doi: 10.1377/hlthaff.27.1.14.

- 8. Reinhardt UE. The Swiss health system: regulated competition without managed care. JAMA. 2004 Sep 8;292(10):1227–1231. doi: 10.1001/jama.292.10.1227.

- 9. Bozic KJ, Smith AR, Mauerhan DR. Pay-for-performance in orthopedics: implications for clinical practice. J Arthroplasty. 2007 Sep;22(6 Suppl 2):8–12. doi: 10.1016/j.arth.2007.04.015.

- 10. Pierce RG, Bozic KJ, Bradford DS. Pay for performance in orthopaedic surgery. Clin Orthop Relat Res. 2007 Apr;457:87–95. doi: 10.1097/BLO.0b013e3180399418.

- 11. Chung KC, Rohrich RJ. Measuring surgical quality: is it attainable? Plast Reconstr Surg. In press. doi: 10.1097/PRS.0b013e3181958ee2.

- 12. Hsiao WC, Braun P, Dunn D, Becker ER, DeNicola M, Ketcham TR. Results and policy implications of the resource-based relative-value study. N Engl J Med. 1988 Sep 29;319(13):881–888. doi: 10.1056/NEJM198809293191330.

- 13. Sherrid P. Mismanaged care? US News & World Report. 1997;123:57–62.

- 14. Ghori AK, Chung KC. Market concentration in the healthcare insurance industry has adverse repercussions on patients and physicians. Plast Reconstr Surg. 2008;121(6):435e–440e. doi: 10.1097/PRS.0b013e318170816a.

- 15. Institute of Medicine. About - Institute of Medicine. [Accessed April 24, 2008]. http://www.iom.edu/CMS/AboutIOM.aspx.

- 16. To Err is Human: Building a Safer Health System. Washington, DC: Institute of Medicine; 2000.

- 17. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: Institute of Medicine; 2001.

- 18. Blumenthal D. Part 1: Quality of care--what is it? N Engl J Med. 1996 Sep 19;335(12):891–894. doi: 10.1056/NEJM199609193351213.

- 19. Brook RH, McGlynn EA, Cleary PD. Quality of health care. Part 2: measuring quality of care. N Engl J Med. 1996 Sep 26;335(13):966–970. doi: 10.1056/NEJM199609263351311.

- 20. Donabedian A. The quality of care. How can it be assessed? JAMA. 1988 Sep 23–30;260(12):1743–1748. doi: 10.1001/jama.260.12.1743.

- 21. Donabedian A. Twenty years of research on the quality of medical care: 1964–1984. Eval Health Prof. 1985 Sep;8(3):243–265. doi: 10.1177/016327878500800301.

- 22. Glickman SW, Ou FS, DeLong ER, et al. Pay for performance, quality of care, and outcomes in acute myocardial infarction. JAMA. 2007 Jun 6;297(21):2373–2380. doi: 10.1001/jama.297.21.2373.

- 23. Lambert-Huber DA, Ellerbeck EF, Wallace RG, Radford MJ, Kresowik TF, Allison JA. Quality of care indicators for patients with acute myocardial infarction: pilot validation of the indicators. Clin Performance Q Health Care. 1994;2:219–222.

- 24. Birkmeyer JD, Siewers AE, Finlayson EVA, et al. Hospital volume and surgical mortality in the United States. N Engl J Med. 2002;346:1128–1137. doi: 10.1056/NEJMsa012337.

- 25. Birkmeyer JD, Stukel TA, Siewers AE, Goodney PP, Wennberg DE, Lucas FL. Surgeon volume and operative mortality in the United States. N Engl J Med. 2003;349:2117–2127. doi: 10.1056/NEJMsa035205.

- 26. Daley J. Invited commentary: quality of care and the volume-outcome relationship--what’s next for surgery? Surgery. 2002 Jan;131(1):16–18. doi: 10.1067/msy.2002.120237.

- 27. Sheikh K. Reliability of provider volume and outcome associations for healthcare policy. Med Care. 2003 Oct;41(10):1111–1117. doi: 10.1097/01.MLR.0000088085.61714.AE.

- 28. Birkmeyer JD, Dimick JB, Birkmeyer NJ. Measuring the quality of surgical care: structure, process, or outcomes? J Am Coll Surg. 2004 Apr;198(4):626–632. doi: 10.1016/j.jamcollsurg.2003.11.017.

- 29. Darr K. The Centers for Medicare and Medicaid Services proposal to pay for performance. Hosp Top. 2003 Spring;81(2):30–32. doi: 10.1080/00185860309598019.

- 30. Bhattacharyya T, Hooper DC. Antibiotic dosing before primary hip and knee replacement as a pay-for-performance measure. J Bone Joint Surg Am. 2007 Feb;89(2):287–291. doi: 10.2106/JBJS.F.00136.

- 31. Birkmeyer NJ, Birkmeyer JD. Strategies for improving surgical quality--should payers reward excellence or effort? N Engl J Med. 2006 Feb 23;354(8):864–870. doi: 10.1056/NEJMsb053364.

- 32. Broder MS, Payne-Simon L, Brook RH. Measures of surgical quality: what will patients know by 2005? J Eval Clin Pract. 2005 Jun;11(3):209–217. doi: 10.1111/j.1365-2753.2005.00518.x.

- 33. Kroon HM, Breslau PJ, Lardenoye JW. Can the incidence of unplanned reoperations be used as an indicator of quality of care in surgery? Am J Med Qual. 2007 May–Jun;22(3):198–202. doi: 10.1177/1062860607300652.

- 34. Glance LG, Dick A, Osler TM, Li Y, Mukamel DB. Impact of changing the statistical methodology on hospital and surgeon ranking: the case of the New York State cardiac surgery report card. Med Care. 2006 Apr;44(4):311–319. doi: 10.1097/01.mlr.0000204106.64619.2a.

- 35. Khuri SF, Daley J, Henderson WG. The comparative assessment and improvement of quality of surgical care in the Department of Veterans Affairs. Arch Surg. 2002 Jan;137(1):20–27. doi: 10.1001/archsurg.137.1.20.

- 36. Khuri SF. Safety, quality, and the National Surgical Quality Improvement Program. Am Surg. 2006 Nov;72(11):994–998.

- 37. Bhattacharyya T, Iorio R, Healy WL. Rate of and risk factors for acute inpatient mortality after orthopaedic surgery. J Bone Joint Surg Am. 2002 Apr;84-A(4):562–572. doi: 10.2106/00004623-200204000-00009.

- 38. Chung KC, Burns PB, Davis Sears E. Outcomes research in hand surgery: where have we been and where should we go? J Hand Surg. 2006 Oct;31A(8):1373–1379. doi: 10.1016/j.jhsa.2006.06.012.

- 39. Chung KC, Watt AJ, Kotsis SV, Margaliot Z, Haase SC, Kim HM. Treatment of unstable distal radial fractures with the volar locking plating system. J Bone Joint Surg. 2006 Dec;88A(12):2687–2694. doi: 10.2106/JBJS.E.01298.

- 40. Chung KC, Kotsis SV, Kim HM. A prospective outcomes study of Swanson metacarpophalangeal joint arthroplasty for the rheumatoid hand. J Hand Surg. 2004 Jul;29A(4):646–653. doi: 10.1016/j.jhsa.2004.03.004.

- 41. Beaton DE, Katz JN, Fossel AH, Wright JG, Tarasuk V, Bombardier C. Measuring the whole or the parts? Validity, reliability, and responsiveness of the Disabilities of the Arm, Shoulder and Hand outcome measure in different regions of the upper extremity. J Hand Ther. 2001 Apr–Jun;14(2):128–146.

- 42. Khuri SF. The NSQIP: a new frontier in surgery. Surgery. 2005 Nov;138(5):837–843. doi: 10.1016/j.surg.2005.08.016.

- 43. National Committee for Quality Assurance. What is HEDIS? 2008. [Accessed March 18, 2008]. http://www.ncqa.org/tabid/187/Default.aspx.

- 44. Pawlson LG, Scholle SH, Powers A. Comparison of administrative-only versus administrative plus chart review data for reporting HEDIS hybrid measures. Am J Manag Care. 2007 Oct;13(10):553–558.

- 45. Steinbrook R. Public report cards--cardiac surgery and beyond. N Engl J Med. 2006 Nov 2;355(18):1847–1849. doi: 10.1056/NEJMp068222.

- 46. Hospitals: How safe? [Accessed April 8, 2008]. http://www.consumerreports.org/cro/health-fitness/health-care/hospitals-how-safe-103/hospital-report-cards/

- 47. Krumholz HM, Rathore SS, Chen J, Wang Y, Radford MJ. Evaluation of a consumer-oriented internet health care report card: the risk of quality ratings based on mortality data. JAMA. 2002 Mar 13;287(10):1277–1287. doi: 10.1001/jama.287.10.1277.

- 48. Australian Medical Association. AMA Public Hospital Report Card 2007. 2007.

- 49. Shojania KG, Forster AJ. Hospital mortality: when failure is not a good measure of success. Can Med Assoc J. 2008;179(2):153–157. doi: 10.1503/cmaj.080010.

- 50. Zamvar V. Reporting systems for cardiac surgery. BMJ. 2004 Aug 21;329(7463):413–414. doi: 10.1136/bmj.329.7463.413.

- 51. Schneider EC, Epstein AM. Use of public performance reports: a survey of patients undergoing cardiac surgery. JAMA. 1998 May 27;279(20):1638–1642. doi: 10.1001/jama.279.20.1638.

- 52. Schneider EC, Epstein AM. Influence of cardiac-surgery performance reports on referral practices and access to care. A survey of cardiovascular specialists. N Engl J Med. 1996 Jul 25;335(4):251–256. doi: 10.1056/NEJM199607253350406.

- 53. Gupta S. Rating your doctor. Time. New York: 2008;171:62.

- 54. Galvin RS, Delbanco S, Milstein A, Belden G. Has the Leapfrog Group had an impact on the health care market? Health Aff (Millwood). 2005 Jan–Feb;24(1):228–233. doi: 10.1377/hlthaff.24.1.228.

- 55. Rosenthal MB, Frank RG, Li Z, Epstein AM. Early experience with pay-for-performance: from concept to practice. JAMA. 2005 Oct 12;294(14):1788–1793. doi: 10.1001/jama.294.14.1788.

- 56. Werner RM, Bradlow ET. Relationship between Medicare’s hospital compare performance measures and mortality rates. JAMA. 2006 Dec 13;296(22):2694–2702. doi: 10.1001/jama.296.22.2694.

- 57. Russell TR. The future of surgical reimbursement: quality care, pay for performance, and outcome measures. Am J Surg. 2006 Mar;191(3):301–304. doi: 10.1016/j.amjsurg.2005.10.025.

- 58. The Leapfrog Group for Patient Safety. [Accessed April 25, 2008]. http://www.leapfroggroup.org/

- 59. Institute of Medicine. Rewarding Provider Performance: Aligning Incentives in Medicare. Report Brief. Institute of Medicine; 2006.

- 60. Rosenthal MB, Dudley RA. Pay-for-performance: will the latest payment trend improve care? JAMA. 2007 Feb 21;297(7):740–744. doi: 10.1001/jama.297.7.740.

- 61. Hunter JG. Appropriate prophylactic antibiotic use in plastic surgery: the time has come. Plast Reconstr Surg. 2007 Nov;120(6):1732–1734. doi: 10.1097/01.prs.0000280567.18162.12.