SUMMARY

Humans and other animals change their behavior in response to unexpected outcomes. The orbitofrontal cortex (OFC) is implicated in such adaptive responding, based on evidence from reversal tasks. Yet these tasks confound using information about expected outcomes with learning when those expectations are violated. OFC is critical for the former function; here we show it is also critical for the latter. In a Pavlovian over-expectation task, inactivation of OFC prevented learning driven by unexpected outcomes, even when performance was assessed later. We propose this reflects a critical contribution of outcome signaling by OFC to encoding of reward prediction errors elsewhere. In accord with this proposal, we report that signaling of reward predictions by OFC neurons was related to signaling of prediction errors by dopamine neurons in ventral tegmental area (VTA). Furthermore, bilateral inactivation of VTA or contralateral inactivation of VTA and OFC disrupted learning driven by unexpected outcomes.

Keywords: orbitofrontal, dopamine, over-expectation, expectancies, prediction errors, reinforcement learning

INTRODUCTION

Humans and other animals change their behavior when things don’t go as expected. The orbitofrontal cortex (OFC) has long been implicated in such adaptive responding. This is typically demonstrated experimentally using reversal learning tasks, in which subjects must learn to switch their responses when the associations between cues and outcomes are reversed. Damage to OFC reliably impairs rapid reversal learning (Chudasama and Robbins, 2003; Hornak et al., 2004; Izquierdo et al., 2004; Jones and Mishkin, 1972; Schoenbaum et al., 2003). Yet these reversal tasks confound the acquisition of new cue-outcome associations with their use, since subjects must simultaneously learn from outcomes that contradict their expectations and modify their behavior based on this new information. OFC supports the latter function by signaling currently expected outcomes to help guide behavior (Burke et al., 2008; Gallagher et al., 1999; Izquierdo et al., 2004; McDannald et al., 2005; Ostlund and Balleine, 2007).

Here we use a Pavlovian over-expectation task (Lattal and Nakajima, 1998; Rescorla, 1970) to test whether OFC also supports the former function – facilitating new learning in the face of unexpected outcomes. In this task, rats are first trained that several cues independently predict reward. Subsequently, two cues are presented together, in compound, followed by the same reward. When responding to the individual cues is assessed again later, rats exhibit reduced responding to the cues that were compounded. This reduced responding is thought to result from the violation of summed expectations for reward during compound training. In other words, during compound training the rat expects to receive two rewards, one for each cue, but obtains only one. The resulting discrepancy between actual and expected outcomes – the negative prediction error – changes the strength of the underlying associative representations, leading to reduced responding when the cues are presented alone.
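A minimal worked version of this argument, assuming the Rescorla–Wagner learning rule as a formal statement of the summed-expectation idea (the symbols are introduced here for illustration and are not taken from this study): each cue X present on a trial changes its associative strength V_X in proportion to the difference between the asymptote λ supported by the reward and the summed strength of all cues present, scaled by learning-rate parameters α and β.

$$\Delta V_X = \alpha\beta\left(\lambda - \sum_{i \,\in\, \text{cues present}} V_i\right)$$

$$\text{After conditioning: } V_{A1} \approx V_{V1} \approx \lambda$$

$$\text{Compound trial: } \delta = \lambda - (V_{A1} + V_{V1}) \approx \lambda - 2\lambda = -\lambda, \qquad \Delta V_{A1} = \alpha\beta\,\delta \approx -\alpha\beta\lambda < 0$$

Under these assumptions the reward itself is unchanged, yet the negative error on compound trials lowers V_A1, which corresponds to reduced responding when A1 is later presented alone.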

Here we report that reversible inactivation of OFC during compound training prevents this later reduction in responding to the individual cues. This result cannot be explained as a simple deficit in using associative information to guide behavior, and instead indicates that signals from OFC are required for learning. We propose that this function reflects the contribution of outcome signaling by OFC neurons to encoding of reward prediction errors by other brain areas. In support of this hypothesis, we present single-unit recording data showing that when signaling of reward predictions in OFC is high, signaling of reward prediction errors by dopaminergic neurons in ventral tegmental area (VTA) is low. Furthermore, we also show that VTA, like OFC, is necessary for learning during over-expectation. These data implicate the OFC as a critical part of a circuit driving learning when outcomes are not as expected.

RESULTS

To test the hypothesis that the OFC plays a critical role in facilitating learning in the face of unexpected outcomes, we employed a Pavlovian over-expectation task consisting of three phases: conditioning, compound training, and a probe test. In the conditioning phase, three different cues are independently associated with food reward. Subsequently, in compound training, two of these cues are presented simultaneously as a compound in order to induce a heightened expectation of reward. This heightened expectation is violated when only the normal amount of reward is delivered, inducing a prediction error which then modifies the strength of the underlying associative representations. As a result, rats trained in this manner exhibit reduced responding to the cues previously presented in compound when those cues are presented alone in a probe test administered after compound training. This decline in responding is evident from the start of the probe test, indicating that it is the result of learning that occurred during compound training. By dissociating learning in the face of unexpected outcomes, in compound training, from the use of the new information, in probe testing, this task provides an excellent vehicle with which to test the hypothesis that OFC has a specific role in learning when expected and actual outcomes differ. If this is true, then inactivation of OFC during compound training should prevent the spontaneous decline in responding to the compounded cue in the probe test. On the other hand, if OFC is only required for using information about expected outcomes to guide behavior, then inactivation of OFC during compound training should have no effect on behavior in the subsequent probe test.
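The three phases can be sketched as a toy Rescorla–Wagner simulation. This is an illustration of the learning-theory account, not the authors’ analysis: the parameter values (ALPHA_BETA = 0.2, LAMBDA = 1.0), the trial counts, the fixed trial order, and the function names are all assumptions chosen only to show the qualitative pattern.

```python
# Toy Rescorla-Wagner model of the over-expectation design (illustrative only).
# Assumed, not taken from the study: LAMBDA, ALPHA_BETA, trial counts, ordering.

LAMBDA = 1.0      # assumed asymptote supported by one three-pellet reward
ALPHA_BETA = 0.2  # assumed product of cue and outcome learning rates


def rw_trial(V, cues, reward):
    """Update every cue present on one trial; return the prediction error."""
    prediction = sum(V[c] for c in cues)  # summed expectation of present cues
    delta = reward - prediction           # prediction error
    for c in cues:
        V[c] += ALPHA_BETA * delta
    return delta


def simulate(n_cond=100, n_compound=40):
    """Return associative strengths before and after compound training and the
    error on the first compound trial. The probe test is modeled as a simple
    read-out of V with no further updating."""
    V = {"A1": 0.0, "A2": 0.0, "A3": 0.0, "V1": 0.0}

    # Phase 1: conditioning; each cue is trained alone.
    for _ in range(n_cond):
        rw_trial(V, ["A1"], LAMBDA)
        rw_trial(V, ["A2"], LAMBDA)
        rw_trial(V, ["A3"], 0.0)  # CS-: never rewarded
        rw_trial(V, ["V1"], LAMBDA)
    before = dict(V)

    # Phase 2: compound training; A1 + V1 together, followed by the same reward.
    first_delta = None
    for _ in range(n_compound):
        d = rw_trial(V, ["A1", "V1"], LAMBDA)
        if first_delta is None:
            first_delta = d
        rw_trial(V, ["V1"], LAMBDA)  # V1 is still presented alone
        rw_trial(V, ["A2"], LAMBDA)
        rw_trial(V, ["A3"], 0.0)

    # Phase 3: probe; associative strengths are simply read out.
    return before, dict(V), first_delta


if __name__ == "__main__":
    before, after, first_delta = simulate()
    print(f"first compound-trial error: {first_delta:+.2f}")
    for cue in ("A1", "A2", "A3"):
        print(f"{cue}: {before[cue]:.2f} -> {after[cue]:.2f}")
```

Under these assumptions V_A1 falls while V_A2 is essentially unchanged. Because V1 keeps being reinforced alone, V_A1 continues to fall with additional compound trials, so the size of the simulated decline depends heavily on the assumed trial counts.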

Thirty-five rats were trained in a modified version of the over-expectation task, illustrated by the experimental timeline in Figure 1. Prior to training, 19 rats underwent surgery to implant cannulae bilaterally in OFC. This included 14 rats that later received muscimol and baclofen infusions to inactivate OFC (OFCi group), and 5 rats that later received saline infusions to serve as controls. Muscimol and baclofen were given at a concentration sufficient to cause reversal learning impairments similar to those caused by neurotoxic lesions of OFC; these impairments were reversible, as demonstrated by subsequent normal reversal performance (see Supplemental Results). Cannula placements are illustrated in Figure 1. The remaining 16 rats served as non-surgical controls. The saline and non-surgical controls exhibited no differences in any of the subsequent measures of conditioning, and thus they were combined into a single control group.

Figure 1. Effect of OFC inactivation on changes in behavior after over-expectation.

Shown is the experimental timeline linking conditioning, compound conditioning, and probe phases to data from each phase. Top and bottom panels show the control and OFCi groups, respectively. In the timeline and panels, V1 is a visual cue (a cue light), A1, A2, A3 are auditory cues (tone, white noise, clicker, counterbalanced), and O1, O2 are different flavored sucrose pellets (banana and grape, counterbalanced). Positions of cannulae within OFC in saline control (gray dots) and OFCi (black dots) rats are shown beneath the timeline. A. Percentage of responding to the food cup during cue presentation across 10 days of conditioning. Gray, black, and white squares indicate A1, A2 and A3 cues, respectively. B. Percentage of responding to the food cup during cue presentation across four days of compound training. Gray, black, and white squares indicate A1/V1, A2 and A3 cues, respectively. Gray and black bars in the insets indicate average normalized % responding to A1/V1 and A2, respectively. C. Percentage of responding to the food cup during cue presentation in the probe test. The line graph shows responding across the eight trials and the bar graph shows average responding across these eight trials. Gray, black, and white colors indicate A1, A2 and A3 cues, respectively (*, significant difference at p<0.05; **, significant difference at p<0.01 or better).

After surgery and a two-week recovery period, the rats underwent 10 days of conditioning, during which cues were paired with flavored sucrose pellets (banana and grape, designated as O1 and O2, counterbalanced). We have shown elsewhere that these flavored pellets are equally preferred but discriminable by both controls and OFC-lesioned rats (Burke et al., 2008). Three unique auditory cues (tone, white noise and clicker, designated A1, A2, and A3, counterbalanced) were the primary cues of interest. A1 served as the “over-expected cue” and was associated with three pellets of O1. A2 served as a control cue and was associated with three pellets of O2. A3 was associated with no reward and thus served as a CS-. Rats were also trained to associate a visual cue (cue light, V1) with three pellets of O1. V1 was to be paired with A1 in the compound phase to induce over-expectation; a non-auditory cue was therefore used in order to discourage the formation of compound representations. Rats in the control and OFCi groups showed similar responding to V1 in all phases (see Supplemental Results for statistical analysis of V1 responding).

Acquisition of conditioned responding to the critical auditory cues is shown in Figure 1A. Both controls and OFCi rats developed elevated responding to A1 and A2, compared to A3, across the 10 sessions. Rats in both groups learned to respond to these two cues equally, and responding did not increase across the final 4 sessions. In accord with this impression, ANOVA (group X cue X session) revealed significant main effects of cue and session (cue: F(2,66)=56.5, p<0.000001; session: F(9,297)=5.26, p=0.000001), and a significant interaction between cue and session (F(18,594)=9.88, p<0.000001); however, there was neither a main effect of group nor any interaction involving group (F’s < 1.36, p’s > 0.14). A direct comparison of responding to A1 and A2 revealed no statistical effects of either cue or group (F’s < 1.60, p’s > 0.21), indicating that rats learned to respond to these two cues equally. Furthermore, there were no effects of session in the final four days of training (F’s < 2.29, p’s > 0.08), indicating that responding was at ceiling.
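The degrees of freedom reported for these ANOVAs follow directly from the design: with 35 rats in two groups, each within-subject effect is tested against an error term with (35 − 2) × df_effect degrees of freedom. The hypothetical helper below is a sketch that assumes uncorrected (sphericity-unadjusted) degrees of freedom; it reproduces F(2,66), F(9,297) and F(18,594) for conditioning, and the same arithmetic gives F(3,99) and F(6,198) for the four compound sessions and F(7,231) and F(14,462) for the eight probe trials.

```python
from itertools import combinations


def mixed_anova_df(n_subjects, n_groups, within_levels):
    """Uncorrected degrees of freedom for a mixed-design ANOVA.

    n_subjects:    total number of subjects
    n_groups:      levels of the single between-subjects factor (group)
    within_levels: dict of within-subjects factor name -> number of levels

    Returns {effect: (df_effect, df_error)}. Each within-subjects effect, and
    its interaction with group, is tested against the subject-by-effect error
    term, which has (n_subjects - n_groups) * df_effect degrees of freedom.
    """
    df_subjects = n_subjects - n_groups  # subjects nested within groups
    df_group = n_groups - 1
    result = {"group": (df_group, df_subjects)}
    names = list(within_levels)
    for k in range(1, len(names) + 1):
        for combo in combinations(names, k):
            df_effect = 1
            for name in combo:
                df_effect *= within_levels[name] - 1
            label = " x ".join(combo)
            df_error = df_subjects * df_effect
            result[label] = (df_effect, df_error)
            result["group x " + label] = (df_group * df_effect, df_error)
    return result


# Conditioning: 35 rats, 2 groups, 3 cues x 10 sessions.
conditioning = mixed_anova_df(35, 2, {"cue": 3, "session": 10})
print(conditioning["cue"], conditioning["session"], conditioning["cue x session"])
# (2, 66) (9, 297) (18, 594)
```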

After initial conditioning, the rats underwent four days of compound training. These sessions were the same as preceding sessions, except that V1 was presented simultaneously with A1. V1 also continued to be presented separately to maintain its associative strength and thereby maximize the effect of over-expectation on A1. A2 and A3 continued to be presented as before. Immediately prior to each session, rats in the OFCi group received bilateral infusions of muscimol and baclofen in order to inactivate OFC; cannulated rats in the control group received saline infusions.

Responding during compound training is shown in Figure 1B. Both controls and OFCi rats maintained elevated responding to A1/V1 and A2, compared to A3, across the four sessions. ANOVA (group X cue X session) revealed significant main effects of cue and session (cue: F(2,66)=101.4, p<0.000001; session: F(3,99)=3.33, p=0.02) and a significant interaction between cue and session (F(6,198)=2.90, p=0.009). While there were no effects of group on the raw response rates (F’s < 3.6, p’s > 0.06), a comparison of responding to each cue across the last 4 days of initial conditioning versus the 4 days of compound training revealed a significant increase in responding to A1 when it was presented in compound with V1 in controls (F(1,20)=5.42, p=0.017) but not in OFCi rats (F(3,39)=1.56, p=0.23). There was no change in responding to A2 in either group (F’s < 2.68, p’s > 0.11). This effect was also evident in response rates normalized to the last day of training, which showed a significant increase in responding to the A1/V1 compound in controls (F(1,20)=5.47, p=0.029) but not in OFCi (F(1,13)=0.60, p=0.45) rats (Figure 1B, insets). Thus OFC inactivation prevented summation of responding to the compound cue. This effect is consistent with the previously demonstrated role of OFC in signaling outcome expectancies to guide behavior. The question, then, is whether the loss of this function would also prevent the learning normally induced by compound training.

To answer this question, rats received a probe test the day after the last compound conditioning session, in which A1, A2 and A3 were presented alone without reinforcement. Conditioned responding is shown in Figure 1C. Both control and OFCi rats showed elevated responding to A1 and A2, compared to A3, and this responding extinguished across the session. Accordingly, an ANOVA (group X cue X trial) revealed significant main effects of cue and trial (cue: F(2,66)=39.7, p<0.000001; trial: F(7,231)=14.8, p<0.000001) and a significant interaction between cue and trial (F(14,462)=2.34, p=0.003). Importantly, there were no significant interactions involving group and trial (F’s < 1.37, p’s > 0.21), indicating that prior inactivation of OFC had no effect on extinction learning. Because extinction learning would be impaired by damage to OFC (Izquierdo and Murray, 2005), this result indicates that prior inactivation caused no lasting impairment of OFC function.

In addition to these effects, which were similar between groups, there was a significant interaction between group and cue (F(2,66)=3.23, p=0.04). A step-down ANOVA comparing responding to A1 and A2 revealed that controls showed less responding to the over-expected A1 cue than to the A2 control cue (F(1,20)=14.1, p=0.001), whereas OFCi rats responded similarly to the A1 and A2 cues throughout the probe session (F(1,13)=0.008, p=0.92).

Importantly, the difference in responding to A1 and A2 in controls was evident on the first trial of the probe test and persisted throughout extinction (Figure 1C). Thus the decline in responding was not due to extinction or other manipulations in the probe test. The difference in responding was entirely due to a significant decline in responding to the A1 cue; there was no significant change in responding to A2. By contrast, OFCi rats responded similarly to the A1 and A2 cues throughout the probe session, and responding on the first trial of the probe test was statistically indistinguishable from responding during compound training. In other words, inactivation of OFC during compound training prevented the spontaneous decrease in conditioned responding to A1 during the probe test, even though the OFCi rats did not receive any inactivating agents at the time of probe testing. Consistent with this impression, a statistical comparison of responding on the first trial of the probe test and the last day of compound conditioning revealed a significant decline in responding to A1 in controls (F(1, 19)=13.4, p=0.002) but not in OFCi rats (F(1, 13)=0.97, p=0.34), and no change in responding to A2 in either group (F’s < 1.18, p’s > 0.29). Neurotoxic lesions of OFC produced exactly the same effect in a separate experiment (see Supplemental Results).

This pattern of results suggests a novel account of the role of OFC in promoting adaptive or flexible behavior in the face of unexpected outcomes. The involvement of the OFC in flexible behavior is typically demonstrated experimentally using reversal learning tasks, in which associations between cues and outcomes are switched. Damage to the OFC leaves animals unable to change established response patterns in this setting (Chudasama and Robbins, 2003; Hornak et al., 2004; Izquierdo et al., 2004; Jones and Mishkin, 1972; Schoenbaum et al., 2003). Yet reversal tasks confound the acquisition and the use of new associative information. Previously, researchers have hypothesized that OFC-dependent reversal deficits occur because OFC is necessary for using associative information acquired by other brain regions, such as amygdala, either to inhibit inappropriate responses or to drive correct ones. However, these hypotheses are inconsistent with recent data showing that animals with damage to OFC are readily able to inhibit prepotent responses in some settings (Chudasama et al., 2007), and with observations that recoding of associative information in OFC after reversal is both inversely related to reversal performance and weaker than that observed in other brain regions (Patton et al., 2006; Saddoris et al., 2005; Stalnaker et al., 2006). Moreover, neither account can easily explain the current results, since both predict that reversible inactivation during the compound training phase should have no effect on subsequent performance in the probe test. Instead, our results suggest that OFC is necessary for learning from differences between actual and expected outcomes, as occurs on compound trials when the summed expected outcome fails to materialize.

Differences between expected and actual outcomes are thought to generate signals, termed prediction errors, that drive associative learning (Sutton and Barto, 1998). Interestingly, a number of brain imaging studies have reported that neural activity in OFC, as reflected in BOLD signal, is correlated with errors in reward prediction. For example, BOLD signal in regions within OFC increases abruptly when expectations for reward are not met (Nobre et al., 1999), and this signal conforms to the predictions of formal learning theory in a blocking paradigm (Tobler et al., 2006). Thus OFC might contribute to learning during over-expectation if it directly signaled reward prediction errors.

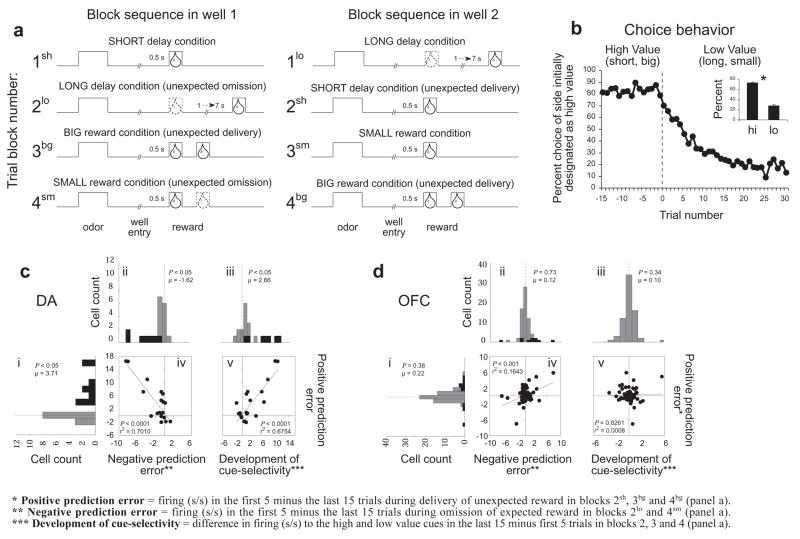

To test this hypothesis, we examined single-unit spiking activity in OFC neurons in a simple choice task that we have previously used to characterize signaling of prediction errors in rat dopamine neurons (Roesch et al., 2007). The choice task and associated behavior are illustrated in Figure 2. Rats were required to respond at one of two adjacent wells after sampling one of three different odor cues at a central port. One odor signaled a sucrose reward in the right well (forced-choice), a second odor signaled a sucrose reward in the left well (forced-choice), and a third odor signaled reward at either well (free-choice). During recording, rats learned to bias their choice behavior in response to manipulations of the size of or time to reward in one or the other well (Figures 2A and B). In theory, these manipulations should induce prediction errors, particularly at the start of new blocks when the rats must learn that their prior expectations are no longer valid. Specifically, we would expect positive prediction errors at the start of blocks 2sh, 3bg, 4bg (Figure 2A), when an unexpected reward is instituted, and negative prediction errors at the start of blocks 2lo and 4sm (Figure 2A), when an expected reward is omitted. In accord with this proposal, spiking activity in dopamine neurons in VTA, thought to signal positive and negative prediction errors (Bayer and Glimcher, 2005; Montague et al., 1996; Pan et al., 2005; Waelti et al., 2001), was higher at the start of blocks 2sh, 3bg, 4bg (reproduced in Figure 2Ci for comparison with OFC data), lower at the start of blocks 2lo and 4sm (reproduced in Figure 2Cii), and transferred to the predictive cues after learning in these blocks (reproduced in Figure 2Ciii and Cv).
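Why errors should be concentrated at the start of blocks can be illustrated with a simple delta-rule sketch. This is a hypothetical model, not the analysis used in this study: it represents only the reward-size blocks (the delay blocks would require a model of reward timing), gives each well its own learned value, and uses an assumed learning rate and trial count. At a switch, the well that becomes big yields a large positive error and the well that becomes small a large negative error, and both shrink across trials as the new values are learned.

```python
# Toy delta-rule model of reward-size block switches (illustrative only).
# Assumptions: learning rate ALPHA, rewards in boli, each well's value is
# learned separately, and every well is visited once per trial step.

ALPHA = 0.3  # assumed learning rate


def run_blocks(blocks, trials_per_block=20):
    """blocks: list of dicts mapping well name -> reward size (boli).

    Returns (block_index, well, trial_in_block, delta) tuples, where
    delta = reward - learned value of that well before the update.
    """
    V = {well: 0.0 for well in blocks[0]}
    log = []
    for b, rewards in enumerate(blocks):
        for t in range(trials_per_block):
            for well, r in rewards.items():
                delta = r - V[well]
                V[well] += ALPHA * delta
                log.append((b, well, t, delta))
    return log


def errors(log, block, well):
    """Prediction errors for one well across the trials of one block."""
    return [d for b, w, _, d in log if b == block and w == well]


# Hypothetical size blocks: left big / right small, then switched.
log = run_blocks([{"left": 2, "right": 1}, {"left": 1, "right": 2}])
bg = errors(log, 1, "right")  # newly big well: positive errors
sm = errors(log, 1, "left")   # newly small well: negative errors
print([round(d, 2) for d in bg[:5]], round(bg[-1], 3))
print([round(d, 2) for d in sm[:5]], round(sm[-1], 3))
```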

Figure 2. Neural activity in response to errors in reward prediction in OFC versus VTA dopamine neurons.

A. Line deflections indicate the time course of stimuli (odors and rewards) presented to the animal on each trial. Dashed lines show when reward is omitted and solid lines show when reward is delivered. At the start of each recording session one well was randomly designated as short (a 0.5 s delay before reward) and the other as long (a 1–7 s delay before reward) (block 1). In the second block of trials these contingencies were switched (block 2). In blocks 3–4, we held the delay constant while manipulating the number of rewards delivered. Expected rewards were thus omitted on long delay trials at the start of block 2 (2lo) and on small reward trials at the start of blocks 3 and 4 (3sm and 4sm), and rewards were delivered unexpectedly on short delay trials at the start of block 2 (2sh) and on big reward trials at the start of blocks 3–4 (3bg and 4bg). B. Line graphs show choice behavior before and after the switch from a high-valued outcome (averaged across short and big) to a low-valued outcome (averaged across long and small); inset bar graphs show average percent choice for high- versus low-value outcomes. After 5 trials, rats had switched their preference to the more valued side, choosing the preferred reward (i.e. short, big) more than 50% of the time. By the last 15 trials of a block, rats were choosing the more valued well more than 75% of the time. Notably, the change in choice behavior within a given block (first 5 minus last 15 trials) did not differ significantly (two-factor ANOVA) across recording group (OFC vs dopamine; P = 0.1435) or value manipulation (delay vs size; P = 0.2311). C and D. Changes in spiking activity during reward delivery and cue sampling in response to errors in reward prediction in reward-responsive VTA dopamine neurons (n = 20) versus reward-responsive OFC neurons (n = 69).
Histograms plot the difference in the average firing rate of each neuron in the first five versus the last fifteen trials during the 500 ms after delivery of an unexpected reward (i) or omission of an expected reward (ii), or during the cue sampling period as value selectivity developed (iii). Black bars represent neurons in which the difference in firing was statistically significant (t-test; p < 0.05). P-values in the distribution histograms indicate the results of a Wilcoxon test. Boxed scatter plots illustrate neuron-by-neuron correlations between signaling of positive prediction errors and negative prediction errors (iv) or between signaling of positive prediction errors and the development of cue-selective responses (v).

Spiking activity in OFC neurons recorded in this same choice task did not exhibit any of these characteristics. Analysis of data from before learning, not included in previously published work (Roesch et al., 2006), showed that reward-related activity in OFC was unaffected by delivery of an unexpected reward (Figure 2Di) or by omission of an expected reward (Figure 2Dii). Nor was there any relationship between the development of cue selectivity and encoding of positive prediction errors in these OFC neurons (Figure 2Diii and Dv). The same was true when responses of all OFC neurons were considered, without pre-selecting for those that were reward responsive (see Supplemental Results). Although a small minority of OFC neurons fired more to unexpected rewards, none of them showed a suppression of activity to reward omission, as would be expected if they were signaling prediction errors. Indeed, to the slight extent that neurons fired more to unexpected rewards, they also tended to fire more to reward omission rather than less, as indicated by a weak but significant positive correlation between the two responses (Figure 2Div). This relationship is the opposite of that observed in dopamine neurons (Figure 2Civ).
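The neuron-by-neuron analysis in Figure 2 rests on difference scores (mean firing in the first five minus the last fifteen trials of a block) and on correlations between scores from different epochs. A minimal sketch of both steps follows; the helper names and the example scores are hypothetical, and the Wilcoxon test used for the population distributions is not reproduced here. In this framing, a dopamine-like population, whose bursts to unexpected reward are paired with dips to omission, gives a negative correlation, whereas the OFC neurons described above gave a weak positive one.

```python
from statistics import mean


def difference_score(rates, n_early=5, n_late=15):
    """Mean rate in the first n_early trials minus the last n_late trials.

    rates: per-trial firing rates (spikes/s) of one neuron in one epoch,
    in trial order within a block.
    """
    if len(rates) < n_early + n_late:
        raise ValueError("block too short for the early and late windows")
    return mean(rates[:n_early]) - mean(rates[-n_late:])


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical per-neuron difference scores (spikes/s), for illustration only:
# unexpected-reward epoch (pos) and reward-omission epoch (neg).
pos = [4.0, 2.5, 1.0, 3.0]
neg = [-3.5, -2.0, -0.5, -2.5]  # dopamine-like: larger bursts, deeper dips
print(round(pearson_r(pos, neg), 3))
```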

If spiking activity in OFC does not reflect prediction errors, then why is OFC necessary for learning driven by these errors during over-expectation and, perhaps, reversal learning? One possibility is that signals from OFC might contribute to the normal calculation of prediction errors by neurons in areas like VTA. Recent studies suggest that OFC is critical for signaling information about expected outcomes, in real time, to guide behavior (Izquierdo et al., 2004; Ostlund and Balleine, 2007; Pickens et al., 2003). For example, rats and monkeys with damage to OFC are unable to modify cue-evoked responding spontaneously to reflect changes in the value of the predicted outcome (Izquierdo et al., 2004; Pickens et al., 2003). These effects have been linked to evidence from single unit recording and imaging studies that cue-evoked neural activity in the OFC signals the outcomes that are expected and their unique motivational value (Arana et al., 2003; Feierstein et al., 2006; Gottfried et al., 2003; O’Doherty et al., 2002a; Padoa-Schioppa and Assad, 2006; Schoenbaum et al., 1998; Tremblay and Schultz, 1999). The same information might be used by VTA dopamine neurons to calculate prediction errors when actual outcomes do not match expectations.

To test this hypothesis, we again examined single-unit spiking activity in OFC and VTA dopamine neurons in our simple choice task. However, this time we compared activity on delay trials and small reward trials before and after delivery of reward. Rewards on these two trial types had the same relative value. This was evident in the rats’ choice behavior, which changed similarly across blocks in which the small or delayed reward was implemented (first 5 minus last 15 trials, ANOVA, n.s.). Also, within the last 15 trials, rats selected the delayed reward on 23% of choice trials and the small reward on 16% of choice trials, rates that were not significantly different (ANOVA, n.s.).

However, these reward types differed in their predictability. Delivery of the small reward was highly predictable, always occurring at 500 ms, whereas delivery of the delayed reward was titrated between 1 and 7 seconds based on the rats’ behavior and was therefore always somewhat unpredictable. Even after learning, the rats could not predict the delayed reward with great precision. This is reflected in the rats’ licking behavior, which increased rapidly prior to the small-predictable reward and showed no change prior to delivery of the delayed-unpredictable reward (Figure 3A–C; right). Instead, the rats’ licking increased around 500 ms after well entry on delayed trials (Figure 3A–C; left; white bar); this time corresponded to delivery of the immediate reward in the preceding trial block (Figure 2A).
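Figure 3C summarizes anticipatory licking as the slope of the rise in licking over the 500 ms before reward. One minimal way to compute such a slope, assuming lick rates have already been binned (the 100 ms bins, example rates, and helper names below are hypothetical, not the study’s parameters), is an ordinary least-squares fit over the bins preceding the event. A clearly positive slope indicates that the rat anticipated reward at that moment, as on small-predictable trials, whereas a flat profile indicates that it did not, as before delayed-unpredictable rewards.

```python
from statistics import mean


def lick_slope(times_s, lick_rates):
    """Ordinary least-squares slope of lick rate (licks/s) against time (s)."""
    if len(times_s) != len(lick_rates) or len(times_s) < 2:
        raise ValueError("need matching time and rate samples (at least 2)")
    mt, mr = mean(times_s), mean(lick_rates)
    num = sum((t - mt) * (r - mr) for t, r in zip(times_s, lick_rates))
    den = sum((t - mt) ** 2 for t in times_s)
    return num / den


def pre_event_slope(bin_centers_s, lick_rates, window_s=0.5):
    """Slope over the bins within window_s seconds before an event at t = 0."""
    kept = [(t, r) for t, r in zip(bin_centers_s, lick_rates) if -window_s <= t < 0]
    times, rates = zip(*kept) if kept else ((), ())
    return lick_slope(list(times), list(rates))


# Hypothetical 100 ms bins around reward delivery (t = 0 s).
centers = [-0.45, -0.35, -0.25, -0.15, -0.05, 0.05]
anticipatory = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]  # licking rises before reward
flat = [2.0, 2.0, 2.0, 2.0, 2.0, 6.0]          # no anticipatory rise
print(pre_event_slope(centers, anticipatory), pre_event_slope(centers, flat))
```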

Figure 3. Rats time reward delivery on small reward but not delayed reward trials.

A. Line deflections indicate the time course of well entry and reward omission and delivery on delay and small reward trials (odor sampling precedes well entry but is not shown; see Figure 2A). Dashed lines show when reward is omitted (long-delay trials) and solid lines show when reward is delivered (delay and small reward trials). B. Licking aligned on omission (left) and delivery of reward (right) on delay (black) and small (gray) reward trials. Licking increased significantly before delivery of reward on small trials and before omission of reward on delay trials; licking did not change significantly prior to reward delivery on delay trials. C. Bars illustrating the slope of the rise in licking behavior during the 500 ms preceding reward omission and delivery on delay and small reward trials (*P < 0.0001; t-test). Error bars indicate SEMs.

If outcome expectancies signaled by OFC before reward contribute to calculation of prediction errors in VTA after reward, then activity in OFC should be higher for a predictable than an unpredictable reward, and this difference should be present before reward delivery, whereas activity in VTA dopamine neurons should be higher for an unpredictable than a predictable reward, and this difference should be present only after reward delivery. In accord with these predictions, spiking activity in OFC neurons was significantly higher before delivery of a small-predictable than a delayed-unpredictable reward (Figure 4B, E), whereas spiking activity in VTA dopamine neurons was significantly higher after delivery of a delayed-unpredictable than a small-predictable reward (Figure 5B, F). Thus when activity in OFC before reward is low, error signaling by the dopamine neurons after reward delivery is high, and vice versa.
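The predicted inverse relationship can be illustrated with a toy calculation in which the expectation at each moment is taken to be the hazard of reward delivery, the probability that reward arrives now given that it has not yet arrived. This is an assumption for illustration only: the 0.5 s bins, the uniform spread of delays over 1–7 s (the actual delays were titrated to behavior, not drawn at random), and the helper names are hypothetical. A reward that always arrives at 0.5 s is fully expected at that moment and yields no error, whereas a reward spread over 1–7 s is only partly expected at any given moment and yields a large error on average.

```python
# Toy illustration: momentary expectation modeled as the discrete hazard of
# reward delivery. Assumptions (not from the study): 14 bins of 0.5 s covering
# 0.5-7.0 s, and the titrated 1-7 s delay treated as uniform over bins 1-13.


def hazard(p):
    """h[k] = P(reward in bin k | no reward in earlier bins)."""
    h, survival = [], 1.0
    for pk in p:
        h.append(pk / survival if survival > 1e-12 else 0.0)
        survival -= pk
    return h


def error_at_delivery(p, k, reward=1.0):
    """Toy positive prediction error when the reward arrives in bin k."""
    return reward - reward * hazard(p)[k]


predictable = [1.0] + [0.0] * 13   # always delivered in the 0.5 s bin
delayed = [0.0] + [1.0 / 13] * 13  # spread evenly over the 1.0-7.0 s bins

print(error_at_delivery(predictable, 0))  # fully expected: no error
errs = [error_at_delivery(delayed, k) for k in range(1, 14)]
print(round(sum(errs) / len(errs), 3))    # partly expected: large mean error
```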

Figure 4. Encoding of expectancies and not prediction errors in OFC.

A. Line deflections indicate the time course of well entry and reward omission and delivery on delay and small reward trials, as in Figure 3A. B. Average firing of reward-responsive OFC neurons (n = 69) aligned on omission (left) and delivery of reward (right) on delay (black) and small (gray) reward trials. Firing in the OFC neurons increased before delivery of the small-predictable reward and also before omission of the expected reward early in delay trials; firing did not increase before delivery of the delayed-unpredictable reward. C–F. Histograms show the distribution of difference scores between firing in the epochs labeled under panel B. Epochs analyzed include [1] 500 ms before reward omission on delayed trials, [2] 500 ms during reward omission on delayed trials, [3] 500 ms before reward delivery on delayed trials, [4] 500 ms after reward delivery on delayed trials, and [5]–[6] 500 ms before and after reward delivery on small reward trials, respectively. Black bars represent neurons in which the difference in firing was statistically significant (t-test; p < 0.05). P-values in the distribution histograms indicate the results of a Wilcoxon test for each comparison.

Figure 5. Encoding of prediction errors and not expectancies in VTA.

A–F. Conventions as in Figure 4, except that data are from reward-responsive VTA dopamine neurons (n = 20). Firing in the dopamine neurons declined on reward omission and increased on reward delivery, and the increase was greater for delivery of the delayed-unpredictable reward. Black bars represent neurons in which the difference in firing was statistically significant (t-test; p < 0.05). P-values in the distribution histograms indicate the results of a Wilcoxon test for each comparison.

A similar relationship was also evident when the expected reward was omitted just after well entry on delayed-unpredictable trials. In OFC, activity was higher before omission than before actual delivery of the delayed reward (Figure 4B, C), reflecting the difference in the rats’ expectations for reward (Figure 3B–C), whereas activity in the VTA dopamine neurons was modestly suppressed upon omission (Figure 5B, D). These patterns are consistent with the proposal that VTA dopamine neurons may use information from OFC to calculate prediction errors.

Of course, if OFC acts to promote learning through signaling of prediction errors by VTA, then VTA should be critical to learning during over-expectation. Specifically, suppression of firing by dopamine neurons in VTA, which is thought to signal negative prediction errors, should be necessary in the compound training phase for diminished responding to the over-expected cue to occur in the subsequent probe test. To test this prediction, we inactivated VTA with GABA agonists during compound training in the over-expectation task described earlier. Infusion of GABA agonists should prevent phasic changes in neural activity, particularly the suppression of firing thought to signal negative prediction errors. If phasic suppression of firing in VTA on compound trials drives learning, then inactivated rats should continue to respond at high levels to the over-expected cue during later probe testing.
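The logic of this prediction can be sketched with a Rescorla–Wagner-style update on compound trials, under the explicit assumption that the learning signal is the dopaminergic prediction error and that inactivation removes its negative (suppression) component. The function and parameter names are hypothetical, only compound trials are modeled, and the sketch does not capture the loss of response summation. With negative errors available, V_A1 falls; with them removed, V_A1 stays at its pre-compound level, corresponding to continued high responding to the over-expected cue in the probe test.

```python
# Toy model (illustrative only): effect of removing negative prediction errors
# during compound training. Assumptions: both cues start at the asymptote
# (1.0), ALPHA_BETA = 0.2, and only A1+V1 compound trials are modeled.

ALPHA_BETA = 0.2  # assumed learning-rate product


def compound_training(v_a1, v_v1, n_trials, negative_errors=True, reward=1.0):
    """Return V_A1 after n_trials A1+V1 compound trials.

    negative_errors=False is a hypothetical stand-in for blocking the phasic
    suppression of dopamine firing (e.g., by VTA inactivation): negative
    errors are set to zero, so they cannot weaken the cues.
    """
    for _ in range(n_trials):
        delta = reward - (v_a1 + v_v1)  # summed expectation exceeds reward
        if delta < 0 and not negative_errors:
            delta = 0.0
        v_a1 += ALPHA_BETA * delta
        v_v1 += ALPHA_BETA * delta
    return v_a1


intact = compound_training(1.0, 1.0, 20)
blocked = compound_training(1.0, 1.0, 20, negative_errors=False)
print(round(intact, 2), blocked)
```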

All procedures were identical to those used in the first experiment, except that rats received cannulae bilaterally in VTA. This included 11 rats in which VTA was later inactivated via infusions of muscimol and baclofen (Murschall and Hauber, 2006) (VTAi group) and 6 saline controls. An additional group of 12 rats was implanted with cannulae in OFC and VTA in opposite hemispheres to allow disconnection of OFC and VTA via contralateral inactivation during compound training. All rats developed elevated responding to A1 and A2, compared to A3, across the 10 conditioning sessions (Figure 6A; see Supplemental Results for V1). ANOVA (group X cue X session) revealed significant main effects of cue and session (for VTAi expt: cue: F(2,30)=40.4, p<0.000001; session: F(9,135)=7.88, p<0.000001; for OFC-VTAi expt: cue: F(2,32)=57.2, p<0.000001; session: F(9,144)=8.78, p<0.000001), and a significant interaction between cue and session (VTAi: F(18,270)=9.26, p<0.000001; OFC-VTAi: F(18,288)=11.7, p<0.000001); however, there was neither a main effect of group nor any interaction involving group (F’s < 0.95, p’s > 0.45). A direct comparison of responding to A1 and A2 revealed no statistical effects of either cue or group (F’s < 1.46, p’s > 0.24), indicating that rats learned to respond to these two cues equally. Furthermore, there were no effects of session in the final four days of training (F’s < 1.33, p’s > 0.27), indicating that responding was at ceiling.

Figure 6. Effect of VTA inactivation on changes in behavior after over-expectation.

Conventions as in Figure 1, except that data are from saline controls and the VTAi or OFC-VTAi groups. A. Percentage of responding at the food cup during cue presentation across the 10 days of conditioning. B. Percentage of responding at the food cup during cue presentation across the four days of compound training. C. Percentage of responding at the food cup during cue presentation in the probe test. The line graph shows responding across the eight trials, and the bar graph shows average responding over these eight trials.

Elevated responding to A1/V1 and A2, compared to A3, was maintained across the four sessions of compound training (Figure 6B). ANOVA (group X cue X session) revealed significant main effects of cue (for VTAi expt: F(2,32)=102.2, p<0.000001; for OFC-VTAi expt: F(2,30)=107.1, p<0.000001). While there were no effects of group on the raw response rates (F’s < 4.05, p’s > 0.06), a comparison of responding to each cue across the last 4 days of initial conditioning versus the 4 days of compound training revealed a significant increase in responding to A1 when it was presented in compound with V1 in controls (F(1,5)=7.50, p=0.04) but not in VTAi (F(1,11)=1.59, p=0.23) or OFC-VTAi rats (F(1,10)=0.26, p=0.61). There was no change in responding to A2 in any group (F’s < 1.29, p’s > 0.3). Additionally, when responding to the cues was normalized to response rates on the final day of conditioning, there was a significant increase in responding to the A1/V1 compound in controls (F(1,5)=13.8, p=0.013) but not in VTAi (F(1,11)=0.05, p=0.82) or OFC-VTAi rats (F(1,10)=0.08, p=0.77). Thus bilateral inactivation of VTA or disconnection of OFC from VTA during compound training prevented summation of responding to the compound cue.

Furthermore, as predicted by our hypothesis, bilateral inactivation of VTA or disconnection of OFC from VTA during compound training also prevented the normal reduction in responding to the over-expected cue in the subsequent probe test (Figure 6C). ANOVA (group X cue X trial) revealed significant main effects of cue and trial (for VTAi expt: cue: F(2,30)=34.8, p<0.000001; trial: F(7,105)=5.70, p=0.00013; for OFC-VTAi expt: cue: F(2,32)=34.0, p<0.000001; trial: F(7,112)=4.68, p=0.00012). Importantly, there were no interactions involving group and trial (F’s < 1.13, p’s > 0.33), indicating that prior inactivation of VTA, or of OFC and VTA, had no effect on extinction learning. In addition to these effects, there was a significant interaction between group and cue (VTAi: F(2,30)=3.49, p=0.04; OFC-VTAi: F(2,32)=3.38, p=0.04). A step-down ANOVA comparing responding to A1 and A2 revealed that controls showed less responding to the over-expected A1 cue than to the A2 cue (F(1,5)=36.0, p=0.001), whereas VTAi (F(1,10)=0.21, p=0.65) and OFC-VTAi rats (F(1,11)=0.06, p=0.82) responded at statistically indistinguishable levels to the A1 and A2 cues throughout the probe session. A comparison of responding on the first trial of the probe test versus the last day of compound conditioning revealed a significant decline in responding to A1 in controls (F(1, 5)=9.50, p=0.02) but not in VTAi (F(1, 10)=3.39, p=0.1) or OFC-VTAi rats (F(1, 11)=1.08, p=0.32), and no change in responding to A2 in any group (VTAi: F’s < 0.91, p’s > 0.38; OFC-VTAi: F’s < 4.6, p’s > 0.06).

DISCUSSION

Here we show a novel role for OFC in supporting learning driven by errors in reward predictions. Specifically, OFC was necessary for learning when actual outcomes were worse than expected in a simple Pavlovian over-expectation task. This result cannot be explained by current proposals that OFC is critical for the use of outcome-related information in guiding behavior, since OFC was online in the probe test, when the use of that information was assessed. Instead, it requires some involvement of OFC in recognizing and learning from reward prediction errors. According to classical learning theory (Rescorla and Wagner, 1972), learning from unexpected outcomes is driven by prediction errors, which are calculated from the difference between the value of the outcome predicted by cues in the environment (V) and the value of the outcome that is actually received (λ). When several cues are presented together, as in the compound phase of the over-expectation task, V is the sum of the predictions made by each cue. The influence of this error term is captured in the equation δ = c(λ − V), where c is a coefficient that reflects processes, such as attention, that can influence the rate of learning.
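The over-expectation effect follows directly from this equation once V is taken as the summed prediction of all cues present. The Python sketch below simulates the design described in Experimental Procedures. Its simplifying assumptions are ours, not the authors': a single coefficient c = 0.2, equal value (λ = 1) for the two pellet flavors, λ = 0 for A3, and interleaved rather than blocked trials. A1 and V1 each come to predict the full reward, so their compound predicts roughly twice what is delivered. δ is therefore negative on compound trials, and A1 loses strength even though it is still paired with the same three pellets.

```python
from typing import Dict, Iterable, Optional, Tuple

Trial = Tuple[Tuple[str, ...], float]  # (cues present on the trial, outcome value lambda)


def rescorla_wagner(trials: Iterable[Trial], c: float = 0.2,
                    strengths: Optional[Dict[str, float]] = None) -> Dict[str, float]:
    """Apply delta = c * (lambda - V) trial by trial, where V sums over all cues present."""
    V = dict(strengths or {})  # copy so the caller's dictionary is not modified
    for cues, lam in trials:
        prediction = sum(V.get(cue, 0.0) for cue in cues)  # summed prediction of the cues present
        delta = c * (lam - prediction)                       # scaled prediction error
        for cue in cues:                                     # every present cue shares the same error
            V[cue] = V.get(cue, 0.0) + delta
    return V


# Phase 1: 10 sessions x 8 trials per cue; A1, V1 and A2 rewarded (lambda = 1), A3 not.
conditioning = [((cue,), lam) for _ in range(80)
                for cue, lam in (("A1", 1.0), ("V1", 1.0), ("A2", 1.0), ("A3", 0.0))]
# Phase 2: 42 trials per cue (12 + 12 + 12 + 6); the A1+V1 compound earns the same reward as either cue alone.
compound = [(cues, lam) for _ in range(42)
            for cues, lam in ((("A1", "V1"), 1.0), (("V1",), 1.0), (("A2",), 1.0), (("A3",), 0.0))]

V = rescorla_wagner(conditioning)
V = rescorla_wagner(compound, strengths=V)
print({cue: round(v, 2) for cue, v in sorted(V.items())})
```

In this simulation A1 ends well below A2, which parallels the reduced probe-test responding to A1 in control rats. A3 stays at zero because its error term is always zero.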

Taking this simple equation as our starting point, we can examine the potential contributions of OFC to the calculation of prediction errors that would explain the learning deficit shown here. The first possibility is that OFC could signal prediction errors themselves (λ − V). This would be consistent with brain imaging studies reporting that neural activity in OFC, as reflected in the BOLD signal, is correlated with errors in reward prediction (Nobre et al., 1999; Tobler et al., 2006). Here, however, we found little evidence that OFC neurons, either as a group or individually, signaled errors in reward prediction in our choice task. Although a small number of neurons fired more to unexpected than to expected rewards, an equal number fired less to unexpected rewards, and none of these neurons showed complementary changes in firing to reward omission. Because a prediction-error signal must change in opposite directions to unexpected reward delivery and unexpected reward omission, these neurons do not, by definition, signal prediction errors. Moreover, although it would be possible to cobble together a heterogeneous population of OFC neurons to encode the error term, this population would constitute a small, hand-selected minority of the neurons in OFC. Thus we do not believe OFC is directly signaling prediction errors in any meaningful way.
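The criterion used in this paragraph can be written as a simple rule. A prediction-error cell must be excited by unexpected reward and suppressed by unexpected reward omission, so a change in only one direction is not enough. The Python sketch below applies that rule to mean firing rates. The function name, its arguments and the `min_diff` margin are hypothetical. A real analysis would test these differences statistically across trials rather than compare means.

```python
def is_prediction_error_cell(expected_reward: float, unexpected_reward: float,
                             omission: float, baseline: float,
                             min_diff: float = 0.0) -> bool:
    """Apply a bidirectional prediction-error criterion to mean firing rates (spikes/s).

    expected_reward / unexpected_reward: firing after predicted vs. unpredicted reward.
    omission: firing when an expected reward is withheld; baseline: pre-trial firing.
    """
    positive_error = unexpected_reward - expected_reward > min_diff  # excited by a better-than-expected outcome
    negative_error = baseline - omission > min_diff                  # suppressed by a worse-than-expected outcome
    return positive_error and negative_error


print(is_prediction_error_cell(5.0, 8.0, omission=2.0, baseline=4.0))  # True: both directions
print(is_prediction_error_cell(5.0, 8.0, omission=4.5, baseline=4.0))  # False: no suppression to omission
```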

A second possibility is that OFC could affect the coefficient governing the rate of learning (c), perhaps by modulating attention or surprise in response to changes in reward. Again, this could explain how inactivation of OFC could prevent learning from unexpected rewards. However, there is little or no evidence that OFC is important for attentional processes. OFC is not critical for tasks, like set-shifting (Brown and McAlonan, 2003; Dias et al., 1996), in which attentional function is isolated. It is, however, critical for learning in which changes in attention and the learning rate coefficient, c, are not theoretically required. Examples of the latter include the spontaneous changes in conditioned responding after reinforcer devaluation. These changes are not affected by damage to the central nucleus of the amygdala (Hatfield et al., 1996), an area clearly implicated in incrementing attention (Holland and Gallagher, 1993), but are impaired by damage to OFC (Gallagher et al., 1999; Izquierdo et al., 2004; Pickens et al., 2005; Pickens et al., 2003). Thus the role of OFC in this setting does not seem to reflect a general modulation of attention or learning rate.

Instead, we would suggest that inactivation of OFC prevents learning in this setting because it is critical for signaling information about the value of the predicted reward (V). This information is critical to the calculation of prediction errors. This proposal is consistent with much existing evidence that OFC signals information about expected outcomes (i.e. outcome predictions) (Gottfried et al., 2003; O’Doherty et al., 2002b; Padoa-Schioppa and Assad, 2006; Schoenbaum et al., 1998; Tremblay and Schultz, 1999), and it is also consistent with the relationship between reward-related activity in OFC and VTA dopamine neurons demonstrated in the current study.

According to this model, outcome expectancies or predictions signaled by OFC would be important both for influencing ongoing behavior (Burke et al., 2008; Gallagher et al., 1999; Izquierdo et al., 2004; McDannald et al., 2005; Ostlund and Balleine, 2007) and for updating underlying associative information when the outcomes of that behavior become apparent. The latter function may explain the long-standing involvement of OFC in reversal learning tasks and other settings in which it is necessary to learn from one’s mistakes (Chudasama and Robbins, 2003; Hornak et al., 2004; Izquierdo et al., 2004; Jones and Mishkin, 1972). Indeed, failure to reverse encoding in OFC is associated with faster reversal learning (Stalnaker et al., 2007), and the reversal impairment caused by OFC damage is mediated by persistent miscoding of the old associative information in basolateral amygdala (Saddoris et al., 2005; Stalnaker et al., 2007). These results are inconsistent with existing ideas that OFC supports rapid changes in behavior because it rapidly stores new information. Instead, they suggest that OFC supports reversal learning because it signals the old information. Such signals could then contribute to the generation of prediction error signals, thereby updating associative representations (initially) in other brain areas. Of course, learning in the compound phase of the over-expectation task requires only negative prediction errors. Thus, although single-unit data here and elsewhere are consistent with a general involvement in the calculation of prediction errors, OFC may not play a critical role in settings that require positive prediction errors (Wheeler and Fellows, 2008). This would explain why initial learning, which is driven by positive prediction errors, is generally unaffected by damage to OFC.

We also provide evidence that the contribution of OFC to error signaling may be mediated through dopamine neurons, which have been implicated in signaling prediction errors (Bayer and Glimcher, 2005; Montague et al., 1996; Pan et al., 2005; Waelti et al., 2001). Signaling of reward prediction errors by dopamine neurons in VTA was clearly related to signaling of reward predictions by single units in OFC. In addition, learning driven by prediction errors in the over-expectation task was prevented by bilateral inactivation of VTA during compound training, and also by unilateral inactivation of VTA combined with inactivation of OFC in the opposite hemisphere. The effect of VTA inactivation is consistent with the somewhat controversial proposal that phasic suppression of firing in dopamine neurons, which is often quite modest, is sufficient to drive learning in response to negative prediction errors. It is also worth noting that although inactivation of VTA during compound training did not affect baseline responding, it did abolish the increase in normalized responding to the compound cues that was observed in controls in both experiments (insets, Figures 1B and 4B). This effect is consistent with reports that VTA is necessary for expression of the general activating or motivational effects of Pavlovian cues, and with proposals that tonic dopamine mediates motivational tone (Niv et al., 2007).

Further work is necessary to determine how information from OFC might reach VTA. Signals from OFC could reach VTA either directly via relatively sparse projections or indirectly through a variety of intermediate areas. One particularly attractive candidate is the ventral striatum, which receives heavy input from OFC and projects strongly onto dopaminergic areas proposed to calculate prediction errors (Haber et al., 2000). Ventral striatum has been proposed to function as a critic by contributing to error signaling via these pathways (Hare et al., 2008; O’Doherty et al., 2004). Input from OFC concerning the value of outcomes predicted by cues may support that function. Alternatively, OFC may contribute to signaling of prediction errors in one of the many other areas reported to be involved in this function. According to this proposal, OFC would function as a critic in its own right, signaling the value of current states based on the likelihood of future outcomes.
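The idea of a critic that signals the value of current states can be made concrete with the temporal-difference (TD) learning rule described by Sutton and Barto (1998), in which the error is δ = r + γV(s′) − V(s). The text does not commit to TD learning, so this Python sketch is a textbook stand-in, not the authors' model. The number of time steps, the learning rate `alpha` and the discount `gamma` are arbitrary assumptions. After training, the error has moved from the time of reward to cue onset. Withholding the expected reward then produces a negative error at the time the reward was due, the kind of negative error proposed here to drive learning in the compound phase.

```python
from typing import List


def run_trial(V: List[float], reward: float, alpha: float = 0.1,
              gamma: float = 1.0) -> List[float]:
    """Run one trial of a tabular TD(0) critic over a cue -> delay -> outcome chain.

    V[t] is the value of the t-th time step after cue onset and is updated in place.
    Returns the TD error at cue onset followed by the error at each time step.
    """
    last = len(V) - 1
    errors = [gamma * V[0]]  # cue onset; the pre-cue state is assumed to have value 0
    for t in range(len(V)):
        r = reward if t == last else 0.0             # the outcome arrives at the final step
        next_value = V[t + 1] if t < last else 0.0   # the trial ends after the outcome
        delta = r + gamma * next_value - V[t]
        V[t] += alpha * delta
        errors.append(delta)
    return errors


V = [0.0] * 5                       # hypothetical: 5 time steps from cue onset to outcome
for _ in range(200):                # acquisition: the cue is always followed by reward
    run_trial(V, reward=1.0)
rewarded = run_trial(V, reward=1.0)
omitted = run_trial(V, reward=0.0)  # the expected reward is withheld
print([round(d, 2) for d in rewarded])  # large error at cue onset, near zero afterwards
print([round(d, 2) for d in omitted])   # negative error at the time of the missing reward
```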

EXPERIMENTAL PROCEDURES

Subjects

Experiments used male Long-Evans rats (Charles River), tested at the University of Maryland School of Medicine in accordance with University and NIH guidelines.

Over-Expectation

Cannulae location

Cannulae (23 G; Plastics One Inc., Roanoke, VA) were implanted under one of three configurations so that inactivating agents or saline vehicle could be infused before some testing sessions. In 19 rats they were implanted bilaterally in OFC (3.0 mm anterior to bregma, 3.2 mm lateral, and 5.0 mm ventral, vertical). In 17 rats they were implanted bilaterally in VTA (5.4 mm posterior to bregma, 0.9 mm lateral, and 7.3 mm ventral, angled 15 degrees toward the midline from vertical). In 12 rats they were implanted unilaterally in OFC and VTA in opposite hemispheres. Actual infusions were made at 3.0 mm anterior, 3.2 mm lateral, and 6.0 mm ventral in OFC, and at 5.4 mm posterior, 0.6 mm lateral, and 8.2 mm ventral in VTA. Sixteen rats served as non-surgical controls; data from these rats were not statistically different from those of the saline controls in either experiment.
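The infusion coordinates follow from the guide coordinates and the 1.0-mm injector extension given under OFC/VTA Inactivation. The Python sketch below is a back-of-envelope check. It assumes that the guide coordinates refer to the guide tip and that the injector extends along the guide's axis. For the VTA guide, angled 15 degrees toward the midline, the extra millimeter then splits into a medial shift of 1.0 × sin 15° and a depth increase of 1.0 × cos 15°. The function name is hypothetical.

```python
import math
from typing import Tuple


def injector_tip(lateral_mm: float, ventral_mm: float, extension_mm: float,
                 angle_deg: float) -> Tuple[float, float]:
    """Return (lateral, ventral) coordinates of an injector tip that protrudes
    `extension_mm` beyond a guide tilted `angle_deg` from vertical toward the midline."""
    theta = math.radians(angle_deg)
    lateral = lateral_mm - extension_mm * math.sin(theta)  # tilt toward midline reduces laterality
    ventral = ventral_mm + extension_mm * math.cos(theta)  # remaining length adds depth
    return lateral, ventral


print(injector_tip(3.2, 5.0, 1.0, 0.0))   # OFC, vertical guide: (3.2, 6.0)
lat, dv = injector_tip(0.9, 7.3, 1.0, 15.0)
print(f"VTA: {lat:.2f} mm lateral, {dv:.2f} mm ventral")
```

For VTA this gives about 0.64 mm lateral and 8.27 mm ventral. These values are close to the stated 0.6 mm and 8.2 mm; the text does not say how the reported values were derived or rounded.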

Apparatus

Training was done in 16 standard behavioral chambers from Coulbourn Instruments (Allentown, PA), each enclosed in a sound-resistant shell. A food cup was recessed in the center of one end wall, and entries into it were monitored by a photobeam. A food dispenser containing 45-mg sucrose pellets (plain, banana-flavored, or grape-flavored; Bio-Serv, Frenchtown, NJ) allowed delivery of pellets into the food cup. White noise or a tone, each measuring approximately 76 dB, was delivered via a wall speaker. Also mounted on that wall were a clicker (2 Hz) and a 6-W bulb that could be illuminated to provide a light stimulus during the otherwise dark session.

Pavlovian over-expectation training

Rats were shaped to retrieve food pellets and then underwent 10 conditioning sessions. In each session, the rats received eight 30-sec presentations of each of three different auditory stimuli (A1, A2, and A3) and one visual stimulus (V1), in a blocked design in which the order of cue blocks was counterbalanced. For all conditioning, V1 consisted of a cue light, and A1, A2, and A3 consisted of a tone, clicker, or white noise (counterbalanced). Two differently flavored sucrose pellets (banana and grape, designated O1 and O2, counterbalanced) were used as rewards. V1 and A1 terminated with delivery of three pellets of O1, and A2 terminated with delivery of three pellets of O2. A3 was paired with no food. After completion of the 10 days of simple conditioning, rats received four consecutive days of compound conditioning. In this phase, A1 and V1 were presented together as a 30-sec compound cue terminating with three pellets of O1, and V1, A2, and A3 continued to be presented as in simple conditioning. Cues were again presented in a blocked design, with order counterbalanced. For each cue, there were 12 trials on each of the first three days of compound conditioning and six trials on the last day. One day after the last compound conditioning session, rats received a probe test session consisting of eight non-reinforced presentations of each of the A1, A2, and A3 stimuli, with the order mixed and counterbalanced.
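The text states that the order of cue blocks was counterbalanced but does not say how. One common scheme for four blocks, offered here only as an assumption, is a balanced Latin square (Williams design). Across the set of orders, each block appears once in every serial position and immediately follows every other block exactly once. The Python sketch below builds such a set of orders. The function name `williams_orders` is hypothetical, and whether orders were assigned per rat or per session is left open.

```python
from typing import List, Sequence


def williams_orders(blocks: Sequence[str]) -> List[List[str]]:
    """Return a balanced Latin square of block orders (Williams design).

    This simple construction requires an even number of blocks.
    """
    n = len(blocks)
    if n % 2:
        raise ValueError("an even number of blocks is required")
    # First row visits positions 0, 1, n-1, 2, n-2, ...; later rows shift it cyclically.
    first = [0] + [(j + 1) // 2 if j % 2 else n - j // 2 for j in range(1, n)]
    return [[blocks[(i + k) % n] for k in first] for i in range(n)]


for order in williams_orders(["A1", "V1", "A2", "A3"]):     # conditioning phase
    print(" -> ".join(order))
for order in williams_orders(["A1+V1", "V1", "A2", "A3"]):  # compound phase
    print(" -> ".join(order))
```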

OFC/VTA Inactivation

On each compound conditioning day, cannulated rats received infusions of inactivating agents (bilateral OFC: n = 14; bilateral VTA: n = 11; contralateral OFC + VTA: n = 12) or the same volume of saline (OFC: n = 5; VTA: n = 6) immediately before the cue block in which A1 and V1 were presented in compound. To make infusions, dummy cannulae were removed and 30 G injector cannulae extending 1.0 mm beyond the end of the guide cannulae were inserted. Each injector cannula was connected via PE20 tubing (Thermo Fisher Scientific, Inc., Waltham, MA) to a Hamilton syringe (Hamilton, Reno, NV) placed in an infusion pump (Orion M361, Thermo Fisher Scientific, Inc., Waltham, MA). Each inactivating infusion contained 103 ng muscimol and 32 ng baclofen in OFC, or 0.91 ng muscimol and 17 ng baclofen in VTA (Sigma, St. Louis, MO). These doses were chosen based on pilot work using reversal learning (see Figure S2) and published data (Murschall and Hauber, 2006). Drugs were dissolved in 150 nl saline and infused at a flow rate of 250 nl/min. At the end of each infusion, the injector cannulae were left in place for another two to three minutes to allow the drugs to diffuse away from the injector tips. Approximately 10 min after removal of the injector cannulae, rats underwent compound conditioning.
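The Python sketch below relates the doses to molar amounts and concentrations. It uses the molar masses of the free compounds: muscimol (C4H6N2O2), about 114.10 g/mol, and baclofen (C10H12ClNO2), about 213.66 g/mol. The salt forms actually used are not stated, so the nanomole figures are approximate. The volume and flow rate are taken from the text; the function names are hypothetical.

```python
# Molar masses (g/mol) of the free compounds; assumed, since the salt forms used are not stated.
MOLAR_MASS = {"muscimol": 114.10, "baclofen": 213.66}
VOLUME_NL, RATE_NL_PER_MIN = 150.0, 250.0  # per infusion, from the text


def nmol(ng: float, drug: str) -> float:
    """Convert a mass in ng to an amount in nmol (ng divided by g/mol gives nmol)."""
    return ng / MOLAR_MASS[drug]


def concentration_ug_per_ul(ng: float, volume_nl: float) -> float:
    """ng per nl is numerically equal to ug per ul."""
    return ng / volume_nl


print(f"infusion time: {60 * VOLUME_NL / RATE_NL_PER_MIN:.0f} s")  # 36 s
for site, doses in {"OFC": {"muscimol": 103.0, "baclofen": 32.0},
                    "VTA": {"muscimol": 0.91, "baclofen": 17.0}}.items():
    for drug, ng in doses.items():
        print(f"{site} {drug}: {ng} ng = {nmol(ng, drug):.3f} nmol, "
              f"{concentration_ug_per_ul(ng, VOLUME_NL):.3f} ug/ul")
```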

Response measures

The primary measure of conditioning to cues was the percentage of time that each rat spent with its head in the food cup during the 30-sec CS presentation, as indicated by disruption of the photocell beam. We also measured the percentage of time that each rat showed rearing behavior during the 30-sec CS period. To correct for time spent rearing, the percentage of responding during the 30-sec CS was calculated as follows:

$$\%\ \text{responding} = 100 \times \frac{\%\ \text{time in food cup}}{100 - \%\ \text{time rearing}}$$

Normalized responding to cues A1/V1 and A2 in each compound training session was calculated as:

$$\text{normalized}\ \%\ \text{responding} = 100 \times \frac{\%\ \text{responding to the cue in the compound training session}}{\%\ \text{responding to the corresponding auditory cue on the last day of conditioning}}$$
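The two response measures can be computed as in the minimal Python sketch below. The text does not say whether percentages were computed per CS presentation or per session before these corrections were applied. The functions therefore take already-aggregated percentages; the function names and example values are hypothetical.

```python
def corrected_responding(pct_food_cup: float, pct_rearing: float) -> float:
    """Food-cup responding as a percentage of the CS time not spent rearing."""
    if not 0.0 <= pct_rearing < 100.0:
        raise ValueError("rearing must occupy less than 100% of the CS period")
    return 100.0 * pct_food_cup / (100.0 - pct_rearing)


def normalized_responding(pct_compound_session: float, pct_last_conditioning_day: float) -> float:
    """Responding in a compound-training session relative to the corresponding
    auditory cue on the last day of conditioning (100 = no change)."""
    return 100.0 * pct_compound_session / pct_last_conditioning_day


# A rat with its head in the food cup for 40% of the CS and rearing for 20% of it:
print(corrected_responding(40.0, 20.0))   # 50.0
# 60% responding to A1/V1 in a compound session vs 50% to A1 on the last conditioning day:
print(normalized_responding(60.0, 50.0))  # 120.0
```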

OFC and VTA Recording

Electrode location

Drivable bundles of ten 25-µm-diameter FeNiCr recording electrodes (Stablohm 675, California Fine Wire, Grover Beach, CA) were surgically implanted under stereotaxic guidance in the left hemisphere, dorsal to either OFC (Roesch et al., 2006) (n = 4; 3.0 mm anterior to bregma, 2.0 mm lateral, and 4.0 mm ventral to the brain surface) or VTA (Roesch et al., 2007) (n = 5; 5.2 mm posterior to bregma, 0.7 mm lateral, and 7.0 mm ventral to the brain surface). Wires were plated to an impedance of ~300 kΩ.

Behavioral task

Recording was conducted in aluminum chambers approximately 18″ on each side, with sloping walls narrowing to an area of 12″ × 12″ at the bottom. A central odor port was located above two adjacent fluid wells on a panel in the right wall of each chamber. Two lights were located above the panel. The odor port was connected to an air-flow dilution olfactometer to allow the rapid delivery of olfactory cues. Task control was implemented via computer. Port entries and licking were monitored by photobeams. Trials were signaled by illumination of the panel lights inside the box. When these lights were on, a nosepoke into the odor port resulted in delivery of the odor cue to a small hemicylinder located behind this opening. One of three different odors was delivered to the port on each trial. At odor offset, the rat had 3 seconds to make a response at one of the two fluid wells located below the port. One odor instructed the rat to go to the left, a second odor instructed the rat to go to the right, and a third odor indicated that the rat could obtain reward at either well. Odors were presented in a pseudorandom sequence such that the free-choice odor was presented on 7/20 trials and the left/right odors were presented in equal numbers (±1 over 250 trials). During recording, we manipulated either the size of the reward delivered at a given side or the length of the delay preceding reward delivery across blocks of trials, as illustrated in Figure 2A. At least 60 trials per block were collected for each neuron.
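The trial-sequence constraint can be illustrated with the Python sketch below. It builds one session in which free-choice odors occupy 7 of every 20 trials (rounded down) and the forced left and right odors differ in number by at most one, then shuffles the list. This is a stand-in resting on assumptions. The text does not describe any further constraints, for example on run lengths or on balance within each block of reward manipulations. The function name and seed are hypothetical.

```python
import random
from collections import Counter
from typing import List, Optional


def odor_sequence(n_trials: int, free_per_20: int = 7,
                  seed: Optional[int] = None) -> List[str]:
    """Return a shuffled list of 'free', 'left' and 'right' odor trials for one session."""
    n_free = n_trials * free_per_20 // 20   # free-choice trials, rounded down
    n_forced = n_trials - n_free
    n_left = n_forced // 2                  # left and right differ by at most one
    trials = ["free"] * n_free + ["left"] * n_left + ["right"] * (n_forced - n_left)
    random.Random(seed).shuffle(trials)     # seeded generator for a reproducible order
    return trials


session = odor_sequence(250, seed=1)
print(Counter(session))
```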

Single-unit recording

Procedures have been described previously (Roesch et al., 2007; Roesch et al., 2006). Wires were screened for activity daily. If no activity was detected, the rat was removed and the electrode assembly was advanced 40 or 80 µm. Otherwise, active wires were selected for recording, a session was conducted, and the electrode was advanced at the end of the session. Neural activity was recorded using two identical Plexon Multichannel Acquisition Processor systems (Dallas, TX), interfaced with the odor discrimination training chambers described above. After amplification and filtering, waveforms (>2.5:1 signal-to-noise) were extracted from active channels and recorded to disk by an associated workstation, together with event timestamps from the behavior computer. Units were sorted with a template-matching algorithm in Offline Sorter software (Plexon Inc., Dallas, TX). Sorted files were processed in NeuroExplorer to extract unit timestamps and relevant event markers and were analyzed in Matlab (MathWorks, Natick, MA).

Supplementary Material

Acknowledgments

This work was supported by grants from NIDA to GS, KB, and MR, from the HFSP to YT, and from NIMH to GS.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Arana FS, Parkinson JA, Hinton E, Holland AJ, Owen AM, Roberts AC. Dissociable contributions of the human amygdala and orbitofrontal cortex to incentive motivation and goal selection. Journal of Neuroscience. 2003;23:9632–9638. doi: 10.1523/JNEUROSCI.23-29-09632.2003.

- Bayer HM, Glimcher PW. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47:129–141. doi: 10.1016/j.neuron.2005.05.020.

- Brown VJ, McAlonan K. Orbital prefrontal cortex mediates reversal learning and not attentional set shifting in the rat. Behavioural Brain Research. 2003;146:97–103. doi: 10.1016/j.bbr.2003.09.019.

- Burke KA, Franz TM, Miller DN, Schoenbaum G. The role of orbitofrontal cortex in the pursuit of happiness and more specific rewards. Nature. 2008;454:340–344. doi: 10.1038/nature06993.

- Chudasama Y, Kralik JD, Murray EA. Rhesus monkeys with orbital prefrontal cortex lesions can learn to inhibit prepotent responses in the reversed reward contingency task. Cerebral Cortex. 2007;17:1154–1159. doi: 10.1093/cercor/bhl025.

- Chudasama Y, Robbins TW. Dissociable contributions of the orbitofrontal and infralimbic cortex to pavlovian autoshaping and discrimination reversal learning: further evidence for the functional heterogeneity of the rodent frontal cortex. Journal of Neuroscience. 2003;23:8771–8780. doi: 10.1523/JNEUROSCI.23-25-08771.2003.

- Dias R, Robbins TW, Roberts AC. Dissociation in prefrontal cortex of affective and attentional shifts. Nature. 1996;380:69–72. doi: 10.1038/380069a0.

- Feierstein CE, Quirk MC, Uchida N, Sosulski DL, Mainen ZF. Representation of spatial goals in rat orbitofrontal cortex. Neuron. 2006;51:495–507. doi: 10.1016/j.neuron.2006.06.032.

- Gallagher M, McMahan RW, Schoenbaum G. Orbitofrontal cortex and representation of incentive value in associative learning. Journal of Neuroscience. 1999;19:6610–6614. doi: 10.1523/JNEUROSCI.19-15-06610.1999.

- Gottfried JA, O’Doherty J, Dolan RJ. Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science. 2003;301:1104–1107. doi: 10.1126/science.1087919.

- Haber SN, Fudge JL, McFarland NR. Striatonigrostriatal pathways in primates form an ascending spiral from the shell to the dorsolateral striatum. Journal of Neuroscience. 2000;20:2369–2382. doi: 10.1523/JNEUROSCI.20-06-02369.2000.

- Hare TA, O’Doherty J, Camerer CF, Schultz W, Rangel A. Dissociating the role of the orbitofrontal cortex and the striatum in the computation of goal values and prediction errors. Journal of Neuroscience. 2008;28:5623–5630. doi: 10.1523/JNEUROSCI.1309-08.2008.

- Hatfield T, Han JS, Conley M, Gallagher M, Holland P. Neurotoxic lesions of basolateral, but not central, amygdala interfere with Pavlovian second-order conditioning and reinforcer devaluation effects. Journal of Neuroscience. 1996;16:5256–5265. doi: 10.1523/JNEUROSCI.16-16-05256.1996.

- Holland PC, Gallagher M. Amygdala central nucleus lesions disrupt increments, but not decrements, in conditioned stimulus processing. Behavioral Neuroscience. 1993;107:246–253. doi: 10.1037//0735-7044.107.2.246.

- Hornak J, O’Doherty J, Bramham J, Rolls ET, Morris RG, Bullock PR, Polkey CE. Reward-related reversal learning after surgical excisions in orbito-frontal or dorsolateral prefrontal cortex in humans. Journal of Cognitive Neuroscience. 2004;16:463–478. doi: 10.1162/089892904322926791.

- Izquierdo AD, Murray EA. Opposing effects of amygdala and orbital prefrontal cortex lesions on the extinction of instrumental responding in macaque monkeys. European Journal of Neuroscience. 2005;22:2341–2346. doi: 10.1111/j.1460-9568.2005.04434.x.

- Izquierdo AD, Suda RK, Murray EA. Bilateral orbital prefrontal cortex lesions in rhesus monkeys disrupt choices guided by both reward value and reward contingency. Journal of Neuroscience. 2004;24:7540–7548. doi: 10.1523/JNEUROSCI.1921-04.2004.

- Jones B, Mishkin M. Limbic lesions and the problem of stimulus-reinforcement associations. Experimental Neurology. 1972;36:362–377. doi: 10.1016/0014-4886(72)90030-1.

- Lattal KM, Nakajima S. Overexpectation in appetitive Pavlovian and instrumental conditioning. Animal Learning and Behavior. 1998;26:351–360.

- McDannald MA, Saddoris MP, Gallagher M, Holland PC. Lesions of orbitofrontal cortex impair rats’ differential outcome expectancy learning but not conditioned stimulus-potentiated feeding. Journal of Neuroscience. 2005;25:4626–4632. doi: 10.1523/JNEUROSCI.5301-04.2005.

- Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive hebbian learning. Journal of Neuroscience. 1996;16:1936–1947. doi: 10.1523/JNEUROSCI.16-05-01936.1996.

- Murschall A, Hauber W. Inactivation of the ventral tegmental area abolished the general excitatory influence of Pavlovian cues on instrumental performance. Learning and Memory. 2006;13:123–126. doi: 10.1101/lm.127106.

- Niv Y, Daw ND, Joel D, Dayan P. Tonic dopamine: opportunity costs and the control of response vigor. Psychopharmacology. 2007;191:507–520. doi: 10.1007/s00213-006-0502-4.

- Nobre AC, Coull JT, Frith CD, Mesulam MM. Orbitofrontal cortex is activated during breaches of expectation in tasks of visual attention. Nature Neuroscience. 1999;2:11–12. doi: 10.1038/4513.

- O’Doherty J, Dayan P, Schultz J, Deichmann R, Friston KJ, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- O’Doherty J, Deichmann R, Critchley HD, Dolan RJ. Neural responses during anticipation of a primary taste reward. Neuron. 2002a;33:815–826. doi: 10.1016/s0896-6273(02)00603-7.

- O’Doherty JP, Deichmann R, Critchley HD, Dolan RJ. Neural responses during anticipation of a primary taste reward. Neuron. 2002b;33:815–826. doi: 10.1016/s0896-6273(02)00603-7.

- Ostlund SB, Balleine BW. Orbitofrontal cortex mediates outcome encoding in Pavlovian but not instrumental learning. Journal of Neuroscience. 2007;27:4819–4825. doi: 10.1523/JNEUROSCI.5443-06.2007.

- Padoa-Schioppa C, Assad JA. Neurons in orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- Pan WX, Schmidt R, Wickens JR, Hyland BI. Dopamine cells respond to predicted events during classical conditioning: evidence for eligibility traces in the reward-learning network. Journal of Neuroscience. 2005;25:6235–6242. doi: 10.1523/JNEUROSCI.1478-05.2005.

- Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–870. doi: 10.1038/nature04490.

- Pickens CL, Saddoris MP, Gallagher M, Holland PC. Orbitofrontal lesions impair use of cue-outcome associations in a devaluation task. Behavioral Neuroscience. 2005;119:317–322. doi: 10.1037/0735-7044.119.1.317.

- Pickens CL, Setlow B, Saddoris MP, Gallagher M, Holland PC, Schoenbaum G. Different roles for orbitofrontal cortex and basolateral amygdala in a reinforcer devaluation task. Journal of Neuroscience. 2003;23:11078–11084. doi: 10.1523/JNEUROSCI.23-35-11078.2003.

- Rescorla RA. Reduction in the effectiveness of reinforcement after prior excitatory conditioning. Learning and Motivation. 1970;1:372–381.

- Rescorla RA, Wagner AR. A theory of Pavlovian conditioning: variations in the effectiveness of reinforcement and nonreinforcement. In: Black AH, Prokasy WF, editors. Classical Conditioning II: Current Research and Theory. New York: Appleton-Century-Crofts; 1972. pp. 64–99.

- Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nature Neuroscience. 2007;10:1615–1624. doi: 10.1038/nn2013.

- Roesch MR, Taylor AR, Schoenbaum G. Encoding of time-discounted rewards in orbitofrontal cortex is independent of value representation. Neuron. 2006;51:509–520. doi: 10.1016/j.neuron.2006.06.027.

- Saddoris MP, Gallagher M, Schoenbaum G. Rapid associative encoding in basolateral amygdala depends on connections with orbitofrontal cortex. Neuron. 2005;46:321–331. doi: 10.1016/j.neuron.2005.02.018.

- Schoenbaum G, Chiba AA, Gallagher M. Orbitofrontal cortex and basolateral amygdala encode expected outcomes during learning. Nature Neuroscience. 1998;1:155–159. doi: 10.1038/407.

- Schoenbaum G, Setlow B, Nugent SL, Saddoris MP, Gallagher M. Lesions of orbitofrontal cortex and basolateral amygdala complex disrupt acquisition of odor-guided discriminations and reversals. Learning and Memory. 2003;10:129–140. doi: 10.1101/lm.55203.

- Stalnaker TA, Franz TM, Singh T, Schoenbaum G. Basolateral amygdala lesions abolish orbitofrontal-dependent reversal impairments. Neuron. 2007;54:51–58. doi: 10.1016/j.neuron.2007.02.014.

- Stalnaker TA, Roesch MR, Franz TM, Burke KA, Schoenbaum G. Abnormal associative encoding in orbitofrontal neurons in cocaine-experienced rats during decision-making. European Journal of Neuroscience. 2006;24:2643–2653. doi: 10.1111/j.1460-9568.2006.05128.x.

- Sutton RS, Barto AG. Reinforcement Learning: An Introduction. Cambridge MA: MIT Press; 1998.

- Tobler PN, O’Doherty J, Dolan RJ, Schultz W. Human neural learning depends on reward prediction errors in the blocking paradigm. Journal of Neurophysiology. 2006;95:301–310. doi: 10.1152/jn.00762.2005.

- Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- Waelti P, Dickinson A, Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature. 2001;412:43–48. doi: 10.1038/35083500.

- Wheeler EZ, Fellows LK. The human ventromedial frontal lobe is critical for learning from negative feedback. Brain. 2008;131:1323–1331. doi: 10.1093/brain/awn041.
