Abstract

Manual gestures occur on a continuum from co-speech gesticulations to conventionalized emblems to language signs. Our goal in the present study was to understand the neural bases of the processing of gestures along such a continuum. We studied four types of gestures, varying along linguistic and semantic dimensions: linguistic and meaningful American Sign Language (ASL), non-meaningful pseudo-ASL, meaningful emblematic, and nonlinguistic, non-meaningful made-up gestures. Prelingually deaf native signers of ASL participated in the fMRI study and performed two tasks while viewing videos of the gestures: a visuo-spatial (identity) discrimination task and a category discrimination task. We found that the categorization task activated the right ventral middle and inferior frontal gyri, among other regions, to a greater extent than the visuo-spatial discrimination task, supporting the idea of semantic-level processing of the gestures. The reverse contrast resulted in enhanced activity in the bilateral intraparietal sulcus, supporting the idea of featural-level processing of the gestures (analogous to phonological-level processing of speech sounds). Regardless of task, brain activation patterns for the nonlinguistic, non-meaningful gestures differed most from those for the ASL gestures. The activation patterns for the emblems were most similar to those for the ASL gestures, and those for the pseudo-ASL gestures were most similar to those for the nonlinguistic, non-meaningful gestures. The fMRI results provide partial support for the conceptualization of different gestures as belonging to a continuum, and the variance in the fMRI results was best explained by differences in the processing of gestures along the semantic dimension.

Keywords: American Sign Language, gestures, Deaf, visual processing, categorization, linguistic, brain, fMRI

1. Introduction

Manual gestures can take many forms, from those accompanying speech to the constituents of a full-fledged language such as American Sign Language (ASL) (McNeill, 1992). In Kendon’s continuum of gestures (Kendon, 1988; so termed by McNeill, 1992), speech-accompanying gesticulations morph into language-like gestures, which are transformed over time into pantomimes, then emblems, and finally the signs of a full-fledged language. As we move along this continuum from gesticulation to sign languages, the presence of speech declines, the presence of language properties increases, and idiosyncratic gestures are transformed into socially regulated signs. McNeill (2005) has described four versions of this continuum, varying in the relationship of the gestures to speech, linguistic properties, conventions, and semiotic properties. In the present study, we investigated the neural bases of different hand gestures that varied along a linguistic-semantic continuum by using functional magnetic resonance imaging (fMRI) of Deaf participants who were native signers of ASL.

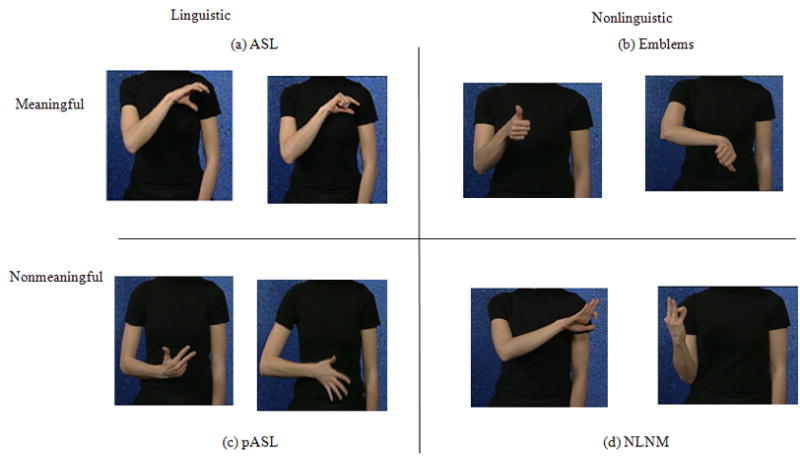

The first goal of the study was to gain insight into the neural bases of gestural processing in Deaf participants, specifically, whether they order the gestures used in the study along a continuum. Four types of gestures were used: linguistically meaningful ASL signs, pseudo-linguistic and meaningful gestures such as emblems, pseudo-linguistic and non-meaningful gestures, and nonlinguistic, non-meaningful gestures. The ASL signs were whole-word gestures (tagged as ASL for the rest of the article); the emblematic gestures were ‘thumbs-up’/‘thumbs-down’ gestures (EMB); the pseudo-ASL gestures (pASL) were made-up, ASL-like gestures that, like pseudo-words, could be part of the language but had no meaning; and the non-meaningful, nonlinguistic gestures (NLNM) were made-up gestures that could not be viewed as part of the ASL repertoire¹. The gestures therefore formed a possible continuum, NLNM → pASL → EMB → ASL, with linguistic and semantic features increasing from the NLNM end to the ASL end. The positions of NLNM and ASL are fixed at the two ends of this continuum; the positions of pASL and EMB are not. These two could be interchanged depending on whether the semantic dimension is the primary focus (EMB is meaningful and hence most like ASL) or the linguistic dimension is being tested (we assume that the linguistic properties of pASL, not EMB, are closer to those of ASL), leading to two different continua. In defining the “linguistic” nature of gestures, we follow the reasoning of MacSweeney et al. (2004) and Petitto et al. (2000): signed and spoken languages exhibit similar levels of linguistic organization, and single gestures may be defined as linguistic if they follow the phonological rules of a language and are used contrastively.
MacSweeney et al. (2004) studied the neural bases of a manual-brachial signaling code used by racecourse bookies and defined this code as nonlinguistic because it does not have an “internal contrastive structure based on featural parameters”. By this definition, the pASL gestures, being modified ASL signs, are considered linguistic, although they are not meaningful. Defining the linguistic nature of emblems is more complicated. McNeill (1992) has argued that these gestures have some linguistic properties. Emblems are isolated, stand-alone gestures that do not naturally occur as part of a sentence, and they are understood by both signers and non-signers within a cultural group. Although there is some ambiguity, for the purposes of the study we categorize them as nonlinguistic. The types of gestures used in the study are shown in Figure 1. The gestures varied along the linguistic dimension, with ASL and pASL having greater linguistic properties than EMB and NLNM.

Figure 1.

Pictures of the four types of gestures used as stimuli in the study: (a) ASL (American Sign Language), (b) Emblems, (c) pseudo-ASL or ASL-like non-meaningful gestures (pASL), and (d) non-meaningful and non-linguistic gestures (NLNM). The gestures vary along the linguistic and semantic dimensions.

The four gesture types used in our study also differed along the semantic dimension: two of the gesture types were meaningful (ASL, EMB), whereas the other two (pASL, NLNM) were non-meaningful. The ASL gestures used in the study were whole-word gestures (e.g., ‘cop’, ‘guilty’). The emblems used in the study were variants of the ‘thumbs-up’ and ‘thumbs-down’ gestures. Emblems are stand-alone hand gestures that convey semantic information and are known to members of a particular culture; in this respect they are analogous to spoken words. However, they differ from spoken words and from the signs of a manual language because they do not occur in syntactic sequences (Venus and Canter, 1987) and do not form a fully contrastive system (McNeill, 2005). Emblematic gestures have been characterized as socially regulated signs that are one step removed from a full-fledged sign language (Kendon, 1988; McNeill, 1992). They are understood equally well by hearing and Deaf persons belonging to a specific culture. Recently, several researchers have begun to investigate how emblems are processed, mostly in hearing populations. Molnar-Szakacs et al. (2007) found that specific cultural and biological factors modulated the neural response (measured as corticospinal excitability) of their participants during the processing of culture-specific emblems. Gunter and Bach (2004) conducted an ERP study with hearing participants to dissociate the processing of meaningful emblems from that of non-meaningful hand gestures. They found that the ERPs elicited by the meaningful gestures were similar to those elicited by abstract words.

Because the stimuli varied along both the linguistic and the semantic dimensions, it is possible that, rather than ordering these gestures systematically along a continuum, the Deaf participants viewed them as belonging to two broad categories, for instance, linguistic and nonlinguistic or meaningful and non-meaningful. The fMRI results of the study help to clarify the differences in the processing of the four types of gestures. These differences were tested in three ways: (1) along the semantic dimension, by grouping EMB and ASL gestures at one end and pASL and NLNM gestures at the other; (2) along the linguistic dimension, by grouping ASL and pASL gestures at one end and EMB and NLNM gestures at the other; and (3) by testing for a gradual ordering of the stimuli along a continuum, which would support one of the Kendon-McNeill continua. Note that the Kendon-McNeill continua include pantomime-like gestures, which were not incorporated in the present study because pantomimes tend to be longer in duration than the standardized ASL gestures and emblems used here.

A second goal of the study was to investigate the neural bases of gestural processing in the context of two tasks: a category discrimination task and an identity discrimination task. We hypothesized that the two tasks involve different levels of processing: the identity discrimination task engages neural sources and mechanisms involved in phonological-level processing, whereas the category discrimination task engages processing at the level of the whole “word”, that is, at the semantic level. Such differences in processing should translate into differences in brain activation patterns. Categorization lies at the transition between sensory and higher-level cognitive processing. In an earlier study (Husain et al., 2006) using auditory stimuli that varied along the dimensions of speech/nonspeech and the speed of acoustic transients, we found a set of core regions that were activated to a greater extent by all stimuli during the category discrimination task than during the auditory discrimination task: the bilateral middle and inferior frontal gyri, the dorsomedial frontal gyrus, and the inferior parietal lobule. In that study, auditory discrimination occurred at the level of acoustic features such as transients, and category discrimination occurred at the level of phonological processing. There were lateralization differences in these regions, but these differences covaried more with the acoustic features (fast or slow transients) than with the speech/nonspeech nature of the stimuli. In addition, reaction times for the categorization task were longer than those for the discrimination task for all stimulus types. Our second goal was therefore to determine whether the core regions identified in the auditory study are also activated during the processing of manual gestures and, if so, which level of processing (phonological or semantic) engages them.

2. Results

Participants were imaged using fMRI and their behavioral responses recorded using button presses. While watching videos of manual gestures, participants performed one of two tasks: IDN or identity discrimination task (are the two gestures identical?) and CAT or category discrimination task (do the two gestures belong to the same category?).

2.1 Behavioral results

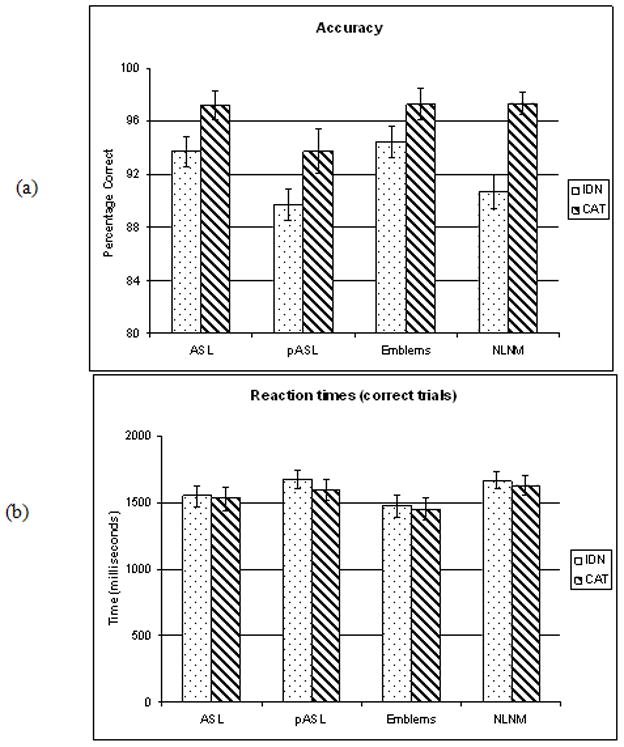

The behavioral results are shown in Figure 2. Reaction time and accuracy were analyzed for 13 Deaf participants (the behavioral responses of one Deaf participant were not recorded because of technical problems, and another participant did not complete the study). We performed a two-way ANOVA (task × stimulus type) on both accuracy and reaction times. The analysis of correct responses showed main effects of task (F[1,96] = 24.25, p < .0001) and stimulus type (F[3,96] = 4.89, p < .01). Comparisons of the two tasks within each stimulus type (two-tailed paired t-tests, p < .05) showed that the CAT task tended to be performed more accurately than the IDN task; this difference was significant for ASL and NLNM and approached significance for pASL. The analysis of reaction times for correct trials showed a marginally significant main effect of stimulus type (F[3,96] = 2.49, p = .06). All participants showed the same trend: reaction times for the CAT task were shorter than for the IDN task for all stimulus types, but this difference was not significant for any stimulus type.
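The degrees of freedom reported above can be checked with simple arithmetic. The sketch below assumes (the text does not state the error model) that the ANOVA treated each participant × task × stimulus cell as an independent observation: 13 participants in each of 2 × 4 cells give 104 observations, so the error term has 104 − 8 = 96 degrees of freedom. The helper `two_way_anova_df` is hypothetical.

```python
def two_way_anova_df(n_per_cell: int, levels_a: int, levels_b: int) -> dict:
    """Degrees of freedom for a balanced two-way between-subjects ANOVA.

    Hypothetical helper: assumes every cell holds n_per_cell independent
    observations, i.e. no repeated-measures (subject) error term.
    """
    n_cells = levels_a * levels_b
    n_total = n_per_cell * n_cells
    df_a = levels_a - 1              # main effect of factor A (task)
    df_b = levels_b - 1              # main effect of factor B (stimulus type)
    df_ab = df_a * df_b              # A x B interaction
    df_error = n_total - n_cells     # N minus the number of cell means
    return {"A": df_a, "B": df_b, "AxB": df_ab, "error": df_error, "total": n_total - 1}


# Task (CAT, IDN) x stimulus type (ASL, pASL, EMB, NLNM), 13 participants per cell
df = two_way_anova_df(n_per_cell=13, levels_a=2, levels_b=4)
print(f"task: F[{df['A']},{df['error']}]  stimulus: F[{df['B']},{df['error']}]")
```

Under this assumption the printed degrees of freedom match F[1,96] and F[3,96]. A repeated-measures model with a subject term would instead test the task effect against the task × subject interaction, with (2 − 1)(13 − 1) = 12 error degrees of freedom.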

Figure 2.

Behavioral results: (a) accuracy and (b) reaction times of correct trials.

2.2 fMRI results

The results reported here are for data from 12 deaf participants. Fifteen participants were enrolled in the study; however, one participant did not complete the study, and data from two were excluded from the analysis because of excessive head motion (defined as ≥ 4 mm in translation and ≥ 3 degrees in rotation). In the results that follow, the fMRI data are reported as MNI (Montreal Neurological Institute) coordinates, together with the gyral/sulcal landmarks and Brodmann areas in the vicinity of the suprathreshold voxels for each contrast. The MNI coordinates were converted to the Talairach and Tournoux system (Talairach and Tournoux, 1988) before the corresponding Brodmann areas were determined.
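The text does not specify which MNI-to-Talairach transform was used. One widely used option is Matthew Brett's piecewise-linear mni2tal approximation, which applies one affine matrix to points at or above the AC-PC plane (z ≥ 0) and a slightly different one below it. The sketch below assumes that transform, with its commonly quoted coefficients; `mni_to_talairach` is a hypothetical name, and the result only approximates Talairach space.

```python
from typing import Tuple

Coord = Tuple[float, float, float]

# Assumed: Brett's mni2tal coefficients (the source does not name its transform).
# Each row maps an MNI (x, y, z) point, in mm, to one Talairach coordinate.
_UPPER = ((0.9900, 0.0, 0.0),
          (0.0, 0.9688, 0.0460),
          (0.0, -0.0485, 0.9189))   # applied when z >= 0
_LOWER = ((0.9900, 0.0, 0.0),
          (0.0, 0.9688, 0.0420),
          (0.0, -0.0485, 0.8390))   # applied when z < 0


def mni_to_talairach(mni: Coord) -> Coord:
    """Convert one MNI coordinate (mm) to approximate Talairach coordinates."""
    matrix = _UPPER if mni[2] >= 0 else _LOWER
    x, y, z = (sum(m * c for m, c in zip(row, mni)) for row in matrix)
    return (x, y, z)


# Example: a peak at MNI (34, 18, -26), below the AC-PC plane
print(tuple(round(v, 1) for v in mni_to_talairach((34, 18, -26))))
```

For that example the converted point is approximately (33.7, 16.3, -22.7); the Brodmann label would then be read from the Talairach atlas at that location.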

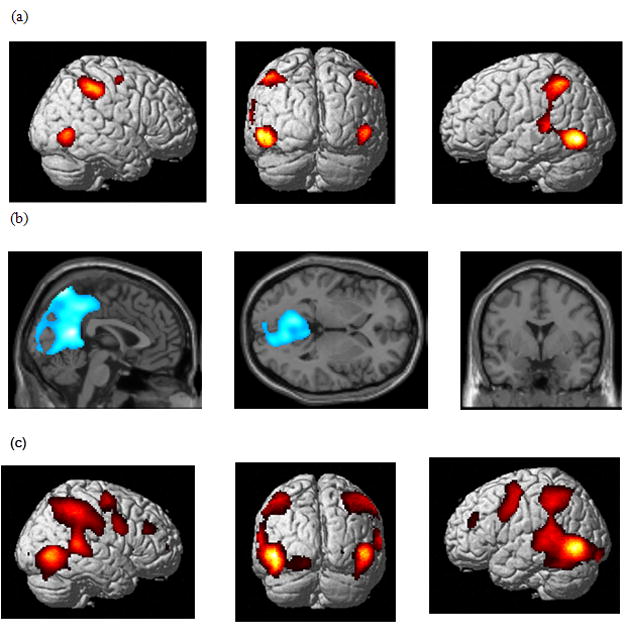

Figure 3 depicts the results of a one-sample t-test on the IDN+CAT contrast images for all stimulus types (one image per participant) from the first-level fixed-effects analysis. The positive contrast (IDN+CAT>Rest) revealed a diverse set of regions that were activated to a greater extent during the tasks than during the Rest condition: occipital (left inferior occipital gyrus, BA 19), temporal (left inferior, BA 19, and superior, BA 22, temporal gyri, and right middle temporal gyrus, BA 37), parietal (bilateral inferior parietal lobule, BA 40, and left supramarginal gyrus, BA 40), and frontal (right middle and superior frontal gyri, BA 6). The negative contrast (Rest>IDN+CAT) revealed an extensive cluster in the posterior cortex extending from the cerebellum to the posterior cingulate, possibly representing part of the default-mode network (Fox and Raichle, 2007).
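At the second level, the one-sample t-test asks, voxel by voxel, whether the mean of the participants' contrast values differs from zero: t = mean / (s / √n), with n − 1 degrees of freedom. SPM performs this test within its general linear model; the sketch below reproduces only the per-voxel arithmetic, for a hypothetical layout in which each participant's IDN+CAT image is a flat list of voxel values (no masking, smoothing, or thresholding).

```python
import math
from statistics import mean, stdev
from typing import List, Sequence


def one_sample_t(values: Sequence[float]) -> float:
    """t statistic for H0: the mean of `values` is zero (df = len(values) - 1)."""
    n = len(values)
    return mean(values) / (stdev(values) / math.sqrt(n))


def voxelwise_t(contrast_images: List[Sequence[float]]) -> List[float]:
    """One t value per voxel across participants.

    contrast_images: one flattened contrast image per participant, all with
    the same number of voxels (hypothetical data layout).
    """
    n_voxels = len(contrast_images[0])
    return [one_sample_t([img[v] for img in contrast_images]) for v in range(n_voxels)]


# Three hypothetical participants, two voxels each
print(voxelwise_t([[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]))
```

Each t map would then be thresholded, here at p < 0.001 voxel-wise with a cluster-level family-wise error correction, which this sketch does not attempt.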

Figure 3.

Statistical parametric maps for both tasks compared to rest using a one-sample t-test: (a) positive comparison and (b) negative comparison in 12 participants and using ANOVA analysis: (c) the basic network over all task and stimulus conditions. The results are rendered on a template brain (p<0.001 uncorrected voxel-wise and p<0.05 family-wise error corrected cluster-wise).

2.2.1 Effect of task and stimulus types

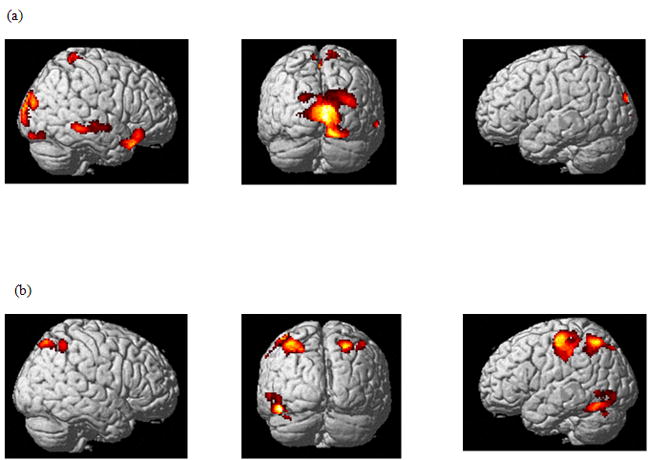

We performed a two-way ANOVA at the second level using contrast images from the individual-level fixed-effects analyses of the two tasks for the four stimulus types (eight contrast images per participant). We used the “flexible factorial” design of SPM5, with stimulus and task as dependent factors with unequal variance and subject as an independent factor with unequal variance. The basic network over all task and stimulus conditions is depicted in Figure 3c; it is similar to the network in Figure 3a, except for a greater extent of activation in the frontal and temporal cortices. We found no significant interaction between the task and stimulus factors. We examined the CAT>IDN and IDN>CAT contrasts regardless of stimulus type (Figure 4 and Table 1). The CAT>IDN comparison revealed greater activation in the right superior temporal cortex and right inferior and middle frontal gyri, the left cuneus, the cerebellum, and the bilateral paracentral lobule, lingual gyrus, and posterior cingulate gyrus. The activation of the cluster in the middle frontal gyrus fell just short of the corrected significance threshold (p = 0.1, corrected); we report it because the cluster was still considerable in size (262 voxels). The IDN>CAT contrast revealed greater activation in the bilateral intraparietal sulcus, the left precentral, fusiform, and inferior occipital gyri, and the right precuneus.
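A main-effect contrast in the 2 × 4 (task × stimulus) design weights the cells of one task positively and those of the other negatively, averaging over stimulus types. The sketch below builds such cell-level weights for CAT>IDN and IDN>CAT under a hypothetical task-major column order. In the actual SPM flexible factorial design matrix the column order is set by SPM and the subject columns would receive zero weight, so this illustrates the logic rather than reproducing the SPM5 setup.

```python
from itertools import product
from typing import List, Tuple

TASKS = ("CAT", "IDN")
STIMULI = ("ASL", "pASL", "EMB", "NLNM")
# Hypothetical column order: CAT-ASL, CAT-pASL, CAT-EMB, CAT-NLNM, IDN-ASL, ...
CELLS: List[Tuple[str, str]] = list(product(TASKS, STIMULI))


def task_contrast(positive: str, negative: str) -> List[float]:
    """Weights for `positive` > `negative`, averaged over the stimulus types."""
    weights = []
    for task, _stimulus in CELLS:
        if task == positive:
            weights.append(1.0 / len(STIMULI))
        elif task == negative:
            weights.append(-1.0 / len(STIMULI))
        else:
            weights.append(0.0)
    return weights


cat_gt_idn = task_contrast("CAT", "IDN")
idn_gt_cat = task_contrast("IDN", "CAT")
for (task, stimulus), w in zip(CELLS, cat_gt_idn):
    print(f"{task}-{stimulus}: {w:+.2f}")
```

The weights sum to zero, and the reverse contrast is simply the negated vector, which is why CAT>IDN and IDN>CAT are reported as two one-sided tests of the same effect.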

Figure 4.

Statistical parametric maps showing the effect of task from the ANOVA analysis. Results from (a) CAT>IDN contrast and (b) IDN>CAT contrast in 12 participants (p<0.001 uncorrected voxel-wise and p<0.1 family-wise error corrected cluster-wise). The results are rendered on a template brain.

Table 1.

Effect of the task factor from the ANOVA analysis. Local maxima from (a) CAT>IDN contrast and (b) IDN>CAT contrast. All reported maxima are p<0.05 FWE corrected for multiple comparisons at the cluster level, except for the cluster marked with an asterisk, which was at p=0.1 FWE corrected.

| Contrast | MNI x | MNI y | MNI z | Z score | Cluster size (voxels) | Gyrus (Brodmann area) |
|---|---|---|---|---|---|---|
| IDN > CAT | −36 | −58 | 56 | 4.79 | 2128 | Intraparietal sulcus (BA 7, 40), precentral gyrus (BA 4) |
| | −34 | −52 | 42 | 4.44 | | |
| | −36 | −22 | 62 | 4.28 | | |
| | 24 | −74 | 58 | 4.24 | 559 | Intraparietal sulcus (BA 7, 40), precuneus (BA 7) |
| | 24 | −60 | 44 | 3.94 | | |
| | 40 | −52 | 54 | 3.71 | | |
| | −44 | −56 | −18 | 4.14 | 451 | Fusiform gyrus (BA 37, 19), inferior occipital gyrus (BA 18) |
| | −48 | −72 | −18 | 4.02 | | |
| | −48 | −80 | −4 | 3.94 | | |
| CAT > IDN | −22 | −78 | 2 | 5.58 | 4181 | Cuneus (BA 17), lingual gyrus (BA 18, 19) |
| | 12 | −80 | 0 | 5.11 | | |
| | −14 | −62 | −8 | 5.06 | | |
| | 10 | −46 | 58 | 4.56 | 1644 | Paracentral lobule (BA 7), cingulate gyrus (BA 24, 31) |
| | −4 | −20 | 40 | 3.99 | | |
| | 0 | −34 | 62 | 3.7 | | |
| | 34 | 18 | −26 | 4.53 | 1650 | Inferior frontal gyrus (BA 47), superior temporal gyrus (BA 22, 38) |
| | 48 | 30 | −16 | 4.42 | | |
| | 46 | −20 | −6 | 4.35 | | |
| | 48 | 6 | 48 | 3.79 | 262* | Middle frontal gyrus (BA 8, 6) |
| | 42 | 4 | 58 | 3.41 | | |

Next, we characterized the effect of stimulus type by collapsing over the two tasks. We first tested the influence of the linguistic dimension by contrasting ASL and pASL against the less linguistic stimuli (EMB and NLNM). Neither the LINGUISTIC>NONLINGUISTIC nor the NONLINGUISTIC>LINGUISTIC contrast yielded any voxels that survived the statistical threshold. A possible reason is that the emblematic gestures are partially linguistic rather than completely nonlinguistic. To examine the influence of the semantic dimension, we contrasted the meaningful stimuli (ASL and EMB) against the non-meaningful stimuli (pASL and NLNM). The MEANINGFUL>NONMEANINGFUL comparison (see Figure 5 and Table 2) yielded significantly greater activation in the bilateral middle and superior temporal cortex. In the left hemisphere, this activation borders the putative Wernicke’s area. The NONMEANINGFUL>MEANINGFUL contrast did not yield any voxels that survived the statistical threshold.
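Collapsed over tasks, the two groupings tested above can be written as weights over the four stimulus types, with each side averaged so that the weights sum to zero. In the minimal sketch below, the names are hypothetical, and in the full design the same stimulus weights would be split across the CAT and IDN cells. Because the four stimulus types cross two linguistic levels with two semantic levels, the LINGUISTIC and MEANINGFUL contrasts turn out to be orthogonal, so they test non-overlapping comparisons; the reverse contrasts are the negated weights.

```python
from typing import Dict, FrozenSet

STIMULI = ("ASL", "pASL", "EMB", "NLNM")

# Groupings from the text: EMB is treated as nonlinguistic, pASL as linguistic.
LINGUISTIC: FrozenSet[str] = frozenset({"ASL", "pASL"})
MEANINGFUL: FrozenSet[str] = frozenset({"ASL", "EMB"})


def grouping_contrast(group: FrozenSet[str]) -> Dict[str, float]:
    """Weights for `group` > the remaining stimulus types; weights sum to zero."""
    n_in = len(group)
    n_out = len(STIMULI) - n_in
    return {s: (1.0 / n_in if s in group else -1.0 / n_out) for s in STIMULI}


def dot(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Inner product of two contrasts defined over the same stimulus types."""
    return sum(a[s] * b[s] for s in STIMULI)


linguistic = grouping_contrast(LINGUISTIC)   # LINGUISTIC > NONLINGUISTIC
semantic = grouping_contrast(MEANINGFUL)     # MEANINGFUL > NONMEANINGFUL
print(semantic)                   # {'ASL': 0.5, 'pASL': -0.5, 'EMB': 0.5, 'NLNM': -0.5}
print(dot(linguistic, semantic))  # 0.0
```

Orthogonality means a null result for one grouping says nothing, by itself, about the other, which is consistent with the linguistic contrast failing while the semantic contrast succeeded.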

Figure 5.

Statistical parametric maps depicting the effect of meaning on processing of gestures. Results from the contrast ASL+EMB (meaningful) > pASL+NLNM (non-meaningful), (p<0.001 uncorrected voxel-wise and p<0.05 family-wise error corrected cluster-wise).

Table 2.

Local maxima from the contrasts highlighting the semantic and linguistic aspects of the stimuli. All reported maxima are p<0.05 FWE corrected for multiple comparisons at the cluster level.

| Contrast | MNI x | MNI y | MNI z | Z score | Cluster size (voxels) | Gyrus (Brodmann area) |
|---|---|---|---|---|---|---|
| Meaningful (ASL, EMB) > Non-meaningful (pASL, NLNM) | −56 | −66 | 18 | 4.15 | 595 | Middle and superior temporal gyrus (BA 39) |
| | −44 | −56 | 22 | 4.06 | | |
| | 48 | −66 | 22 | 3.55 | 632 | Middle and superior temporal gyrus (BA 39, 22) |
| | 40 | −66 | 16 | 3.47 | | |
| | 54 | −56 | 18 | 3.36 | | |
| Linguistic + meaningful (ASL) > Nonlinguistic + non-meaningful (NLNM) | −34 | 56 | 2 | 4.85 | 318 | Middle and superior frontal gyrus (BA 10) |
| | −14 | 62 | 18 | 3.61 | | |
| | 40 | −82 | 26 | 4.56 | 971 | Superior occipital gyrus (BA 19), inferior parietal lobule (BA 40), middle temporal gyrus (BA 39) |
| | 56 | −56 | 46 | 4.1 | | |
| | 52 | −68 | 24 | 3.55 | | |
| | −58 | −64 | 16 | 4.96 | 416 | Superior temporal gyrus (BA 39, 22) |
| | −46 | −56 | 20 | 4.68 | | |
| | −2 | −70 | −28 | 3.86 | 489 | Cerebellum |
| | 16 | −66 | −36 | 3.76 | | |
| | 6 | −62 | −16 | 3.18 | | |
| | 38 | −28 | 66 | 4.09 | 443 | Precentral (BA 4) and postcentral gyrus (BA 2) |
| | 44 | −28 | 52 | 4 | | |
| | 40 | −26 | 40 | 3.82 | | |
| | 48 | −32 | −18 | 3.69 | 566 | Fusiform gyrus (BA 20) |
| | 42 | −38 | −20 | 3.66 | | |
| | 40 | −14 | −32 | 3.56 | | |

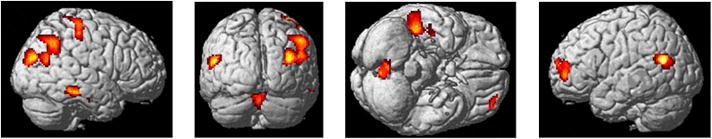

We found no significant differences between the processing of ASL and EMB stimuli. However, there were many differences between the processing of ASL and NLNM gestures. The ASL>NLNM contrast (see Figure 6 and Table 2) yielded greater activation in the left middle and superior frontal gyri and superior temporal gyrus; the right precentral and postcentral gyri (BA 4, 2), fusiform gyrus, superior occipital gyrus, inferior parietal lobule, and middle temporal gyrus; and the bilateral cerebellum. There were no suprathreshold voxels in the reverse contrast, NLNM>ASL.

Figure 6.

Statistical parametric map representing the differences between NLNM and ASL stimuli for the contrast ASL>NLNM (p<0.001 uncorrected voxel-wise and p<0.05 family-wise error corrected cluster-wise).

2.2.2 Stimulus continua

We examined systematic variations within the stimulus set using the following series of contrasts: (1) ASL>pASL, pASL>EMB, and EMB>NLNM (testing the linguistic continuum) and (2) ASL>EMB, EMB>pASL, and pASL>NLNM (testing the semantic continuum). Our a priori expectation was that the areas activated by the individual paired contrasts would be a subset of the areas activated in the ASL>NLNM contrast described above. This expectation held only for the semantic series (2), and with an important caveat. For series (1), only the ASL>pASL (a large cluster near the cuneus, MNI [26, −74, 20]) and ASL>NLNM (see Figure 6) contrasts yielded suprathreshold voxels. The pASL>EMB contrast did not yield suprathreshold voxels; in fact, the reverse contrast, EMB>pASL, did (similar to the MEANINGFUL>NONMEANINGFUL contrast). For series (2), the EMB>pASL contrast (again similar to the MEANINGFUL>NONMEANINGFUL comparison) yielded suprathreshold voxels; however, neither pASL>NLNM (or its reverse, NLNM>pASL) nor ASL>EMB (or its reverse, EMB>ASL) yielded any suprathreshold voxels, suggesting that the gestures within each of these two pairs were processed similarly by the participants. Combining these results with those of the test of the semantic dimension (MEANINGFUL>NONMEANINGFUL) above, the likeliest ordering of the stimuli is (ASL≈EMB) > (pASL≈NLNM) or, more generally, ASL≥EMB>pASL≥NLNM.
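The reasoning above, which reads each adjacent paired contrast as ">", "<" or "≈" and chains the results, can be sketched as a small helper. Here "≈" only records that neither direction produced suprathreshold voxels (an absence of a detected difference, not demonstrated equivalence). The encoding of the results is our hypothetical summary of the text; for EMB vs NLNM we assume no suprathreshold voxels in either direction, since none are reported.

```python
from typing import List, Tuple

# (left, outcome, right): ">" left > right significant, "<" right > left
# significant, "≈" no suprathreshold voxels in either direction.
Step = Tuple[str, str, str]


def describe_chain(steps: List[Step]) -> str:
    """Collapse adjacent paired contrasts into an ordering string.

    Returns the chain if it never runs against the hypothesised order;
    otherwise reports the first reversal found.
    """
    for left, outcome, right in steps:
        if outcome == "<":
            return f"not monotone: {right} > {left}"
    parts = [steps[0][0]]
    for _left, outcome, right in steps:
        parts.extend([outcome, right])
    return " ".join(parts)


semantic_series = [("ASL", "≈", "EMB"), ("EMB", ">", "pASL"), ("pASL", "≈", "NLNM")]
linguistic_series = [("ASL", ">", "pASL"), ("pASL", "<", "EMB"), ("EMB", "≈", "NLNM")]
print(describe_chain(semantic_series))    # ASL ≈ EMB > pASL ≈ NLNM
print(describe_chain(linguistic_series))  # not monotone: EMB > pASL
```

The semantic series yields a consistent chain, while the linguistic series breaks at pASL vs EMB, mirroring the conclusion drawn in the text.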

3. Discussion

In the present fMRI study, we investigated gestural processing in Deaf participants using two types of tasks and four types of manual gestures. Participants performed discrimination tasks at either a phonological level (IDN, identity discrimination task) or a semantic level (CAT, category discrimination task). Each stimulus had one of two linguistic features and one of two semantic features, for a total of four stimulus types. There were two main findings. First, in Deaf participants, the brain activation patterns for the stimuli tended to align with the Kendon-McNeill semantic continuum of gesture types, with the important caveat that participants did not differentiate between ASL and emblematic gestures or between the two types of made-up gestures (pASL, NLNM). Second, we found commonalities with our previous work on auditory categorical processing for both the categorization and the identity discrimination tasks. The gestural categorization task activated the middle and inferior frontal gyri to a greater extent than the visuo-spatial identity discrimination task; together with our previous auditory study, this finding supports a role for these structures in categorical processing. The phonological-level (IDN) task, compared to the CAT task, produced a widespread activation pattern that included the intraparietal sulcus, which again echoes phonological processing in auditory studies. We discuss these findings in Section 3.1.

When we separated the gestures by their linguistic or semantic features, we did not find any statistically significant differences between the brain activation patterns for ASL and emblematic gestures. Our results echo McNeill and others (McNeill, 1992; McNeill, 2005), who have argued for some linguistic properties of emblems, and suggest that the participants treated emblems like ASL gestures. This is similar to the results of Emmorey et al. (2004), who found that the iconicity of the gesture did not alter the neural bases of action and tool naming; that is, iconic and non-iconic ASL gestures were treated in the same manner. This is not surprising if one considers how a language adopts onomatopoeic words or loan words from other languages: with usage, these distinctive words (distinctive because of their onomatopoeia or their foreignness) are treated no differently from native words (Jackendoff, 2002).

We found dissimilarity in the processing of ASL and pseudo-ASL gestures, particularly in the cuneus region. The dissimilarity between the ASL and pseudo-linguistic gestures that we observed may extend not only to the perception of these gestures but also to their production. Several researchers (Corina et al., 1992; Emmorey et al., 2004; Marshall and Fink, 2001) have established that different neural systems subserve sign language production and non-sign language pantomimic expression.

Linguistic and nonlinguistic manual gestures have been studied previously by MacSweeney et al. (2004), who investigated differences in the processing of British Sign Language (BSL) and a signaling code known as Tic Tac. Tic Tac, used at racecourses, lacks the phonological structure of BSL but resembles it in its visual and articulatory properties. Three groups of participants took part, none of whom had any knowledge of Tic Tac: deaf native signers, hearing native signers, and hearing nonsigners. Whereas our participants viewed single gestures, participants in the MacSweeney et al. study viewed 3-s videos of BSL sentences and of made-up Tic Tac sentences and identified anomalous sentences by pressing a button. The baseline condition consisted of monitoring a visual display of a signer at rest for a target. A widespread frontal-posterior network was activated in all groups by both types of stimuli compared to the baseline condition. However, the Deaf participants showed greater activation than the hearing participants, mostly in auditory processing regions of the superior temporal cortex, perhaps because auditory cortices become involved in visual processing as a consequence of neural plasticity in the absence of hearing. Signers showed greater activation for BSL than for Tic Tac in the left posterior perisylvian cortex, pointing to the importance of this region in language processing. It is possible that practitioners of Tic Tac (who were not included in that study) would show a more language-like response to this manual code and treat Tic Tac gestures like emblems. For the Deaf and hearing signers in the MacSweeney study, Tic Tac was the equivalent of NLNM in our study because it had neither meaning nor linguistic features.
Although several brain regions showed increased activation in the ASL>NLNM contrast in our study, we did not see increased activation in the posterior perisylvian cortex for ASL compared to NLNM gestures, possibly because of the isolated nature of the stimuli, which were not part of sentence-like structures.

Our fMRI study finds some support for Kendon’s and McNeill’s conceptualization of gestures. Considering the entirety of our fMRI results, and Figure 6 specifically, ASL and NLNM stimuli are at the two ends of the continuum of gestures considered in this study. We tested the two possible placements of the gestures between the ASL and NLNM endpoints indirectly, with the LINGUISTIC>NONLINGUISTIC and MEANINGFUL>NONMEANINGFUL contrasts, and directly, with a series of paired contrasts. From both the indirect and the direct estimates, the best representation of the continuum with respect to how the brain grouped the stimuli was NLNM ≤ pASL < EMB ≤ ASL. The emblematic gestures are most like the ASL gestures, and the pseudo-ASL gestures are most like the NLNM gestures. The semantic nature of the gestures likely accounts for this division. McNeill and others (McNeill, 1992; McNeill, 2005) have argued for some linguistic properties of emblems. Therefore, although in this analysis the emblems were initially considered to be nonlinguistic, meaningful gestures, the participants processed them like linguistic gestures; indeed, their brain activation patterns did not distinguish emblems from ASL gestures. It should be noted that although similar brain regions are activated for ASL and EMB gestures, their functional connectivity patterns may differ. Our results cannot distinguish between these possibilities, and further studies and analyses are needed.

3.1 Comparison with auditory categorization study

Comparing the categorical discrimination of manual gestures with that of sounds in our previous auditory study (Husain et al., 2006) reveals two differences. First, the average reaction time for the categorical discrimination of gestures was shorter, although not significantly, than that for the identity discrimination of the same gestures. We saw the opposite pattern in the study of sounds: the categorical discrimination task took longer (again, not significantly) than the identity discrimination task. Second, the categorization of gestures constituted processing at the semantic level, whereas the categorization of sounds occurred at the phonological level, i.e., neither the speech nor the nonspeech sounds had meanings attached to them. Nevertheless, we wanted to know whether the three core regions, the middle and inferior frontal gyri, the intraparietal sulcus, and the dorsomedial frontal gyrus, would also be activated to a greater extent in the categorical discrimination task than in the identity discrimination task. Figure 4 shows that the bilateral intraparietal sulcus was activated to a greater extent in the identity discrimination task than in the category discrimination task, and that this contrast showed no greater activation in the dorsomedial frontal gyrus or the middle and inferior frontal gyri. There were additional activations in the fusiform and occipital gyri. In the reverse contrast (category discrimination greater than identity discrimination), the right middle and inferior frontal gyri, the right paracentral lobule just posterior to the dorsomedial frontal gyrus, and the posterior cingulate gyrus were activated to a greater extent.

Combining the results from the visual and auditory studies it is evident that the middle and inferior frontal gyri are activated for the categorization task regardless of the modality and the level of processing. However, the particular locus of activation within the frontal cortex differs between the two studies. In the previous auditory study, the CAT>IDN contrast activated dorsal IFG (BA 44, 45) and in the current study, the CAT>IDN activated ventral IFG (BA 47). The IFG has long been associated with both phonological and semantic processing (for reviews see (Bookheimer, 2002; Vigneau et al., 2006). In recent years, there have been attempts to parcellate the IFG based on its functioning. According to (Poldrack et al., 1999) a dorsal-ventral distinction within the IFG translates to a phonological-semantic distinction in the functioning of IFG (see also (Bokde et al., 2001). Another recent fMRI study of sentence processing (Uchiyama et al., 2008), localizes verbal working memory within the dorsal inferior frontal gyrus (BA 44/45) and semantic processing within ventral inferior frontal gyrus (BA 47), with syntactic processing in BA 45 linking the two processes. These studies (Bokde et al., 2001; Poldrack et al., 1999; Uchiyama et al., 2008) as well as our present study suggest that the dorsal-ventral functional segregation of the inferior frontal gyrus may have less to do with the modality of the stimuli and more with the level of processing. However, the IFG, much like the superior temporal sulcus (Hein and Knight, 2008) may participate in multiple computations depending on the properties of the network that is engaged by a specific task, and therefore resist an easy partitioning along functional lines. The intraparietal sulcus was activated by the phonological level of processing regardless of stimulus modality. 
Involvement of the dorsomedial frontal gyrus appears to be specific to the auditory stimuli, whereas its neighbor, the cingulate gyrus, was activated in the visual study. It is possible that both the cingulate gyrus and the dorsomedial frontal gyrus are involved in task mediation and switching and in other aspects of a short-term memory (delayed-match-to-sample) task (Rushworth et al., 2004; Walton et al., 2007), rather than directly in categorization.

3.2 Similarities between gestural and lexical processing

In our study, we found greater activation in the left mid-fusiform gyrus for the IDN>CAT contrast (collapsed over all stimuli; Table 1) and in the right mid-fusiform gyrus for the ASL>NLNM contrast (collapsed over both tasks; Table 2). The left mid-fusiform gyrus has been proposed as a visual word form area dedicated to the prelexical processing of letter strings (Cohen et al., 2000; Cohen et al., 2002; Dehaene et al., 2002). Although this concept has been controversial (see Price and Devlin, 2003), Waters et al. (2007) recently extended the concept of the visual word form area to the left and right mid-fusiform gyri for manual gestures. They used fMRI to investigate the role of the mid-fusiform gyrus in the processing of fingerspelling, signed language, text and pictures in deaf native signers and in hearing participants. They found greater activation in the left and right mid-fusiform gyri for fingerspelling than for sign language in deaf but not in hearing participants. However, both deaf and hearing participants showed greater activation in the bilateral inferior temporal and mid-fusiform gyri for pictures than for text. The authors therefore argue for a broader conceptualization of the visual word form area that includes the mapping of both static and dynamic, linguistically meaningful (or potentially meaningful) visual stimuli from perception to higher-order processing. Our data further support this broader role. In the ANOVA, the left mid-fusiform gyrus was activated to a greater extent for the identity task than for the categorization task. In the identity task, subjects had to recognize small differences in the final orientation of the gestures, whereas in the categorization task they had to ignore these same small differences and categorize the gestures based on their invariant properties.
It is therefore not surprising that the left mid-fusiform gyrus was activated to a greater extent for the identity task than for the categorization task, because this comparison can be likened to the comparison between fingerspelling and sign language processing. Interestingly, the right mid-fusiform gyrus was activated to a greater extent for the ASL gestures than for the nonlinguistic, non-meaningful (NLNM) gestures, but not in the contrast of ASL with emblematic gestures. This suggests that the right mid-fusiform gyrus may be more engaged by linguistic manual gestures than by nonlinguistic stimuli, whereas the left mid-fusiform gyrus may be involved in the perceptual processing of all manual gestures, consistent with the work of Waters et al. (2007). Our results thus extend the role of the mid-fusiform gyrus to the processing of dynamic visual stimuli and assign different aspects of this role to the left (all manual gestures) and right (specific to linguistic processing) mid-fusiform gyri.

4. Conclusions

Pre-lingually deaf, native signers of ASL performed two tasks while viewing videos of gestures: a visuo-spatial (identity) discrimination task and a category discrimination task. The gestures were of four types, varying along linguistic and semantic dimensions. The fMRI results support the conceptualization of the four gesture types used in the study as varying along the semantic dimension but not along the linguistic dimension. Additionally, the processing of nonlinguistic, non-meaningful gestures differed most from that of ASL gestures, and there were no processing differences between emblems and ASL gestures. These results provide partial support for Kendon's and McNeill's continuum of gestures (cf. Kendon, 1988; McNeill, 2005) that ranges from nonlinguistic non-meaningful gestures to pseudo-ASL gestures to emblems and finally to ASL gestures. Combining these results with those of our previous auditory study, which also employed identity and category discrimination tasks, we conclude that the middle and inferior frontal gyri are preferentially activated during amodal categorization: these regions were activated to a greater extent in the categorization task than in the identity discrimination task regardless of the modality of the stimuli. The left mid-fusiform gyrus (visual word form area) was activated by all gestural stimuli to a greater extent when subjects performed the identity discrimination task than when they performed the categorization task, whereas the right mid-fusiform gyrus was activated to a greater extent when processing the ASL gestures than when processing nonlinguistic, meaningless gestures. Our results extend the role of the mid-fusiform gyrus beyond lexical processing to the processing of dynamic visual stimuli that have some language component (viewing meaningful or non-meaningful gestures rather than reading words or nonwords), and assign different aspects of this role to the left and right mid-fusiform gyri.
In addition, this study advances our knowledge of the neural bases of gestural processing and underscores its critical role in language.

5. Experimental Procedure

Fifteen profoundly deaf volunteers (6 women, 9 men; 27 ± 5 years of age, mean ± standard deviation), who became deaf before the age of three and who learned ASL as their first language, participated in the study. One deaf participant was excluded because of discomfort in the scanner. Participants underwent a brief medical history and physical screening before scanning, and individuals with any condition that might have compromised their vision in the scanner were excluded from the study. All subjects had normal vision or wore corrective contact lenses in the scanner. They gave their informed consent to the study, which was approved by the NIDCD-NINDS IRB (protocol NIH 92-DC-0178), and were suitably compensated for their participation.

5.1 Stimuli

There were four types of hand gestures that differed from each other primarily along two dimensions: linguistic status (ASL-like to non-ASL) and meaningfulness (meaningful to non-meaningful) (see Figure 1). The four stimulus types were: (1) ASL; (2) pASL, ASL-like gestures that follow the rules of ASL but are not currently part of the ASL vocabulary, similar to pseudowords; (3) EMB, or emblems, which have meaning and some linguistic characteristics and are understood by deaf participants (and by hearing persons familiar with North American culture), but are not formally part of the ASL vocabulary; and (4) NLNM, nonlinguistic, non-meaningful gestures that do not follow the rules of ASL. Each stimulus type was divided into two classes, or categories. For the ASL gestures, the two categories were the 'cop' and 'guilty' signs; for the pASL gestures, they were the ASL signs for the numbers '3' and '5' moving up and down the torso; for the emblems, they were the "thumbs-up" and "thumbs-down" gestures; and for the NLNM gestures, they were complicated hand gestures that could not easily be part of the ASL repertoire (see Footnote 1). The first NLNM category consisted of the thumb and first finger pinched together, with the other three fingers held tightly together, and a right-to-left motion across the torso. The second NLNM category consisted of the thumb, first and last fingers pinched together, with the other two fingers extended, and a right-to-left motion across the torso. NLNM gestures are analogous to non-native spoken words that do not follow the phonotactic rules of a given spoken language. There were five exemplars within each category, varying in orientation, specifically in the final position of the gesture with respect to the torso. Thus, the five exemplars of the 'cop' category were all identifiable as 'cop' but differed in the orientation of the final hand position relative to the shoulder.
Similarly, the exemplars of the other 7 classes differed in orientation from other members of their category.
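The factorial structure of the stimulus set (four types × two categories × five orientation exemplars) can be made explicit with a small Python sketch. The container and field names are hypothetical, and so are the NLNM labels, because the text describes the two NLNM categories without naming them.

```python
from dataclasses import dataclass
from itertools import product

# Two categories per stimulus type, as listed in Section 5.1. The text does
# not name the NLNM categories; "NLNM-1"/"NLNM-2" are hypothetical labels.
CATEGORIES = {
    "ASL": ("cop", "guilty"),
    "pASL": ("3", "5"),
    "EMB": ("thumbs-up", "thumbs-down"),
    "NLNM": ("NLNM-1", "NLNM-2"),
}
N_EXEMPLARS = 5  # per category; exemplars differ only in final orientation


@dataclass(frozen=True)
class Stimulus:
    stim_type: str  # ASL, pASL, EMB or NLNM
    category: str   # one of the two categories of that type
    exemplar: int   # 0-4, index of the final-orientation variant


def build_stimulus_set():
    """Enumerate every video in the 4 types x 2 categories x 5 exemplars design."""
    return [
        Stimulus(stim_type, category, exemplar)
        for stim_type, categories in CATEGORIES.items()
        for category, exemplar in product(categories, range(N_EXEMPLARS))
    ]


stimuli = build_stimulus_set()
classes = {(s.stim_type, s.category) for s in stimuli}
print(len(stimuli), len(classes))  # 40 8
```

Freezing the dataclass makes stimuli hashable and comparable by value, which is convenient when trial pairs are later checked for identity or shared category.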

5.2 Tasks

Subjects performed a delayed-match-to-sample (DMS) task in which they saw videos of two gestures separated by an interval and decided whether the two gestures were the same or different. In separate blocks, the decision was based on one of two criteria: (1) Are the two gestures exactly the same visually? (2) Do the gestures belong to the same category? The first task is referred to as Identity Discrimination (IDN) and the second as Category Discrimination (CAT). Each trial lasted 6.9 s: two stimuli of 1500 ms each, an inter-stimulus interval of 1900 ms, and a 2000 ms period during which subjects could respond. The response period was fixed regardless of how quickly the subject responded, and trials with response times longer than 2 s were recorded as incorrect. Subjects saw the same set of stimuli in both tasks.
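The trial timing and the two decision criteria can be summarized in a short Python sketch. It assumes that response times are measured from the start of the 2000 ms response window and that a missing response counts as incorrect; the text does not state either detail, and the function names are hypothetical.

```python
from dataclasses import dataclass

# Trial timing from Section 5.2, in milliseconds.
STIM_MS = 1500      # each of the two gesture videos
ISI_MS = 1900       # interval between the two videos
RESPONSE_MS = 2000  # fixed response window
TRIAL_MS = STIM_MS + ISI_MS + STIM_MS + RESPONSE_MS  # 6900 ms = 6.9 s


@dataclass(frozen=True)
class Stimulus:
    stim_type: str
    category: str
    exemplar: int


def is_match(a, b, task):
    """Ground-truth 'same' answer for a pair under the IDN or CAT criterion."""
    if task == "IDN":
        return a == b  # visually identical: same exemplar of the same category
    if task == "CAT":
        return (a.stim_type, a.category) == (b.stim_type, b.category)
    raise ValueError(f"unknown task: {task}")


def score_trial(a, b, task, said_same, rt_ms):
    """Correct only if the answer is right and arrives within the window.

    Assumption: rt_ms is measured from the start of the response window;
    None means no response and is scored as incorrect.
    """
    if rt_ms is None or rt_ms > RESPONSE_MS:
        return False
    return said_same == is_match(a, b, task)


cop0 = Stimulus("ASL", "cop", 0)
cop3 = Stimulus("ASL", "cop", 3)
print(TRIAL_MS)                                                  # 6900
print(is_match(cop0, cop3, "IDN"), is_match(cop0, cop3, "CAT"))  # False True
print(score_trial(cop0, cop3, "CAT", True, 2400))                # False (too slow)
```

The same pair of videos thus has different correct answers under the two tasks, which is what allows an identical stimulus set to be used for both IDN and CAT blocks.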

5.3 Training

Participants were trained outside the magnet before being scanned. Training generally lasted about half an hour (about 7 min for each stimulus type). During each mini-training session for a particular stimulus type, subjects passively viewed the exemplars from the two categories and then performed tasks discriminating between pairs of gestures based on either criterion (1) or (2). The categories were identified only as 'A' and 'B' for uniformity, because not all stimulus types had easily identifiable categories. Although there were separate training sessions for each stimulus type, the instructions and training were identical in each case. Subjects were given feedback on the tasks and repeated a given training session until their performance exceeded a threshold of 85% (62 correct out of 72 trials) for that stimulus type. During scanning, before each run, subjects were familiarized with the type of gestures they would discriminate in that run by passively viewing examples of the two categories of that stimulus type.
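The 85% criterion translates into a minimum count of correct trials per 72-trial session. A minimal sketch, assuming a session counts as passed when the proportion correct reaches or exceeds the threshold (with 72 trials the two readings coincide, because 85% of 72 is 61.2, so at least 62 correct trials are needed either way). The helper names are hypothetical.

```python
def min_correct(n_trials, threshold_pct):
    """Smallest number of correct trials whose proportion reaches threshold_pct.

    Integer arithmetic computes ceil(n_trials * threshold_pct / 100) exactly,
    avoiding floating-point rounding at the boundary.
    """
    return -((-n_trials * threshold_pct) // 100)


def passed_training(n_correct, n_trials=72, threshold_pct=85):
    """Hypothetical pass/fail rule for one mini-training session."""
    return n_correct >= min_correct(n_trials, threshold_pct)


print(min_correct(72, 85))                # 62
print(62 / 72 >= 0.85, 61 / 72 >= 0.85)   # True False
```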

5.4 fMRI scanning

Subjects were scanned with echo planar imaging (EPI) in a block-design paradigm on a 3 Tesla Signa scanner (General Electric, Waukesha, WI). Stimuli were presented using Presentation software (Neurobehavioral Systems, Albany, CA) on a PC laptop computer. There were four separate functional EPI runs, one for each stimulus type. Each run consisted of 9 rest blocks and 8 test blocks. Each test block contained 8 DMS trials of the same type, either IDN or CAT. The instruction specifying which criterion to use for the DMS trials in a given block was presented visually 10 s before the beginning of the test block (during the preceding rest block). The first rest block (fixation on a '+' on the screen) lasted 38 s, subsequent rest blocks lasted 30 s, and the last rest block lasted 32 s. Each test block lasted 55.2 s. The total duration of each run was 12 min 10 s (365 volumes at a TR of 2 s). The first four volumes and the last volume were discarded, leaving 360 volumes for analysis. Sagittal localizer and anatomical scans were acquired before the T2*-weighted functional EPI scans. The functional scans consisted of 26 interleaved axial slices (acquired inferior to superior), 5 mm thick, with 3.75 × 3.75 mm in-plane resolution (TE = 40 ms, matrix size 64 × 64, FOV = 240 mm, flip angle = 90°).
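The acquisition and block parameters can be cross-checked with simple arithmetic. The Python sketch below is a hypothetical helper, not the authors' code: it recomputes the run length from the TR, the number of retained volumes and the in-plane voxel size, and lays out rest/test block onsets from the stated block durations. It assumes the blocks follow one another without gaps from the start of the first rest block, assumes no inter-slice gap when computing coverage, does not model how the block sequence is aligned with volume acquisition (which the text does not specify), and does not encode whether a given test block was IDN or CAT.

```python
# Acquisition and paradigm parameters from Section 5.4 (times in ms).
TR_MS = 2000
N_ACQUIRED = 365
N_DROP_START, N_DROP_END = 4, 1

TRIAL_MS = 6900
TRIALS_PER_BLOCK = 8
TEST_BLOCK_MS = TRIALS_PER_BLOCK * TRIAL_MS  # 55200 ms = 55.2 s
FIRST_REST_MS, REST_MS, LAST_REST_MS = 38000, 30000, 32000
N_TEST_BLOCKS = 8

FOV_MM, MATRIX = 240, 64
N_SLICES, SLICE_MM = 26, 5


def block_schedule():
    """(label, onset_ms, duration_ms) for each block, in presentation order.

    Onsets are measured from the start of the first rest block; blocks are
    assumed to follow one another with no gaps.
    """
    schedule, t = [], 0
    for i in range(N_TEST_BLOCKS):
        rest = FIRST_REST_MS if i == 0 else REST_MS
        schedule.append(("rest", t, rest))
        t += rest
        schedule.append(("test", t, TEST_BLOCK_MS))
        t += TEST_BLOCK_MS
    schedule.append(("rest", t, LAST_REST_MS))
    return schedule


run_s = N_ACQUIRED * TR_MS // 1000
print(divmod(run_s, 60))                       # (12, 10): 12 min 10 s
print(N_ACQUIRED - N_DROP_START - N_DROP_END)  # 360 volumes analysed
print(FOV_MM / MATRIX)                         # 3.75 mm in-plane
print(N_SLICES * SLICE_MM)                     # 130 mm, assuming no slice gap
test_onsets_tr = [on / TR_MS for label, on, _ in block_schedule() if label == "test"]
print(test_onsets_tr[:2])                      # [19.0, 61.6]
```

Keeping times in integer milliseconds avoids accumulating floating-point error when block onsets are summed.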

5.5 fMRI Analysis

The fMRI data were analyzed using the statistical parametric mapping (SPM) software (SPM2, Wellcome Dept. of Cognitive Neurology, London, http://www.fil.ion.ucl.ac.uk/spm). The image volumes were corrected for slice-timing differences, realigned, normalized into standard stereotactic space (using the MNI EPI template provided with SPM2) and smoothed with a Gaussian kernel (10 mm full width at half maximum). On inspection, we found that five of our 13 subjects had movement artifacts in at least one of the four EPI runs. To control for these artifacts, we used a robust weighted least squares method (Diedrichsen and Shadmehr, 2005), which uses a restricted maximum likelihood approach to obtain unbiased estimates of image variance from the unsmoothed data and applies the resulting weights to the smoothed data, yielding optimal model estimates from noisy data. The data were rescaled for variations in global signal intensity and high-pass filtered to remove low-frequency drift. Using a general linear model (Friston et al., 1995), a mixed-effects analysis was conducted at two levels. First, a fixed-effects analysis was performed for each subject (p<0.001, uncorrected), and contrast images were created for the different task conditions. Next, the first-level contrast images were entered into a second-level analysis performed with SPM5 rather than SPM2, because the later version offers greater flexibility for performing ANOVAs. The second-level analyses included one-sample t tests, two-sample t tests and a two-way ANOVA. Unless otherwise noted, we used a statistical threshold of p<0.05, corrected for multiple comparisons using the family-wise error rate at either the voxel or the cluster level.
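The inverse-variance weighting idea behind the robust weighted least squares approach can be illustrated with a small NumPy sketch. This is a swapped-in simplification, not the method used in the study: it estimates one variance per scan from residuals pooled over voxels by iterative reweighting, rather than by the restricted maximum likelihood procedure of Diedrichsen and Shadmehr (2005), and the function names, iteration count and synthetic data are assumptions for illustration only.

```python
import numpy as np


def wls(X, Y, w):
    """Weighted least squares for all voxels at once.

    X: scans x regressors, Y: scans x voxels, w: one weight per scan.
    Scaling each row by sqrt(w) turns WLS into ordinary least squares.
    """
    sw = np.sqrt(w)[:, None]
    beta, *_ = np.linalg.lstsq(X * sw, Y * sw, rcond=None)
    return beta


def image_weighted_glm(X, Y_smooth, Y_raw=None, n_iter=3, eps=1e-12):
    """Simplified image-wise reweighting (not the ReML estimator itself).

    Per-scan variances are estimated from residuals of the (ideally
    unsmoothed) data Y_raw, then used as inverse-variance weights when
    fitting the smoothed data Y_smooth.
    """
    Y_raw = Y_smooth if Y_raw is None else Y_raw
    n_scans = X.shape[0]
    w = np.ones(n_scans)
    for _ in range(n_iter):
        resid = Y_raw - X @ wls(X, Y_raw, w)
        scan_var = np.mean(resid ** 2, axis=1) + eps  # pooled over voxels
        w = 1.0 / scan_var
        w *= n_scans / w.sum()                        # rescale to mean 1
    return wls(X, Y_smooth, w), w


# Synthetic example: a block regressor plus one artifact-laden scan.
rng = np.random.default_rng(0)
n_scans, n_vox = 120, 50
boxcar = ((np.arange(n_scans) // 10) % 2).astype(float)  # hypothetical design
X = np.column_stack([boxcar, np.ones(n_scans)])
Y = 1.0 * boxcar[:, None] + 5.0 + rng.normal(0.0, 1.0, (n_scans, n_vox))
Y[60] += rng.normal(0.0, 20.0, n_vox)
beta, w = image_weighted_glm(X, Y)
print(w[60] < 0.1 * np.median(w))  # the corrupted scan is strongly down-weighted
```

Because the weights are shared across voxels, a single noisy volume (for example, one affected by head motion) is down-weighted everywhere at once, which is the property that makes image-wise weighting attractive for movement artifacts.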

Acknowledgments

The research was supported by the NIH-NIDCD Intramural Research Program. The authors wish to thank Nathan Pajor, Jaymi Della and Madhav Nandipati for their help in performing the analysis of the behavioral data. David Quinto-Pozos and Christopher Grindrod also have our gratitude for carefully reading and commenting on preliminary versions of this article.

Footnotes

1. Just as certain sound sequences cannot be part of English (but can occur in another language), we devised manual gestures that do not occur in ASL and that, as judged by ASL users and experts on ASL, have a low probability of occurring in ASL. They could, however, be viewed as belonging to a 'foreign' sign language.

References

- Bokde AL, Tagamets MA, Friedman RB, Horwitz B. Functional interactions of the inferior frontal cortex during the processing of words and word-like stimuli. Neuron. 2001;30:609–17. doi: 10.1016/s0896-6273(01)00288-4.

- Bookheimer S. Functional MRI of language: new approaches to understanding the cortical organization of semantic processing. Annu Rev Neurosci. 2002;25:151–88. doi: 10.1146/annurev.neuro.25.112701.142946.

- Cohen L, Dehaene S, Naccache L, Lehericy S, Dehaene-Lambertz G, Henaff MA, Michel F. The visual word form area: spatial and temporal characterization of an initial stage of reading in normal subjects and posterior split-brain patients. Brain. 2000;123:291–307. doi: 10.1093/brain/123.2.291.

- Cohen L, Lehericy S, Chochon F, Lemer C, Rivaud S, Dehaene S. Language-specific tuning of visual cortex? Functional properties of the Visual Word Form Area. Brain. 2002;125:1054–69. doi: 10.1093/brain/awf094.

- Corina DP, Poizner H, Bellugi U, Feinberg T, Dowd D, O'Grady-Batch L. Dissociation between linguistic and nonlinguistic gestural systems: a case for compositionality. Brain Lang. 1992;43:414–47. doi: 10.1016/0093-934x(92)90110-z.

- Dehaene S, Le Clec HG, Poline JB, Le Bihan D, Cohen L. The visual word form area: a prelexical representation of visual words in the fusiform gyrus. Neuroreport. 2002;13:321–5. doi: 10.1097/00001756-200203040-00015.

- Diedrichsen J, Shadmehr R. Detecting and adjusting for artifacts in fMRI time series data. Neuroimage. 2005;27:624–34. doi: 10.1016/j.neuroimage.2005.04.039.

- Emmorey K, Grabowski T, McCullough S, Damasio H, Ponto L, Hichwa R, Bellugi U. Motor-iconicity of sign language does not alter the neural systems underlying tool and action naming. Brain Lang. 2004;89:27–37. doi: 10.1016/S0093-934X(03)00309-2.

- Fox MD, Raichle ME. Spontaneous fluctuations in brain activity observed with functional magnetic resonance imaging. Nat Rev Neurosci. 2007;8:700–11. doi: 10.1038/nrn2201.

- Friston KJ, Holmes AP, Worsley KJ, Poline JB, Frith CD, Frackowiak RSJ. Statistical parametric maps in functional imaging: A general linear approach. Hum Brain Mapp. 1995;2:189–210.

- Gunter TC, Bach P. Communicating hands: ERPs elicited by meaningful symbolic hand postures. Neurosci Lett. 2004;372:52–6. doi: 10.1016/j.neulet.2004.09.011.

- Hein G, Knight RT. Superior temporal sulcus--It's my area: or is it? J Cogn Neurosci. 2008;20:2125–36. doi: 10.1162/jocn.2008.20148.

- Husain FT, Fromm SJ, Pursley RH, Hosey LA, Braun AR, Horwitz B. Neural bases of categorization of simple speech and nonspeech sounds. Hum Brain Mapp. 2006;27:636–651. doi: 10.1002/hbm.20207.

- Jackendoff R. Foundations of language: Brain, meaning, grammar, evolution. Oxford University Press; Oxford, UK: 2002. An evolutionary perspective on the architecture.

- Kendon A. How gestures can become like words. In: Poyatos F, editor. Cross-cultural perspectives in nonverbal communication. Hogrefe; Toronto: 1988. pp. 131–141.

- MacSweeney M, Campbell R, Woll B, Giampietro V, David AS, McGuire PK, Calvert GA, Brammer MJ. Dissociating linguistic and nonlinguistic gestural communication in the brain. Neuroimage. 2004;22:1605–18. doi: 10.1016/j.neuroimage.2004.03.015.

- Marshall JC, Fink GR. Spatial cognition: where we were and where we are. Neuroimage. 2001;14:S2–7. doi: 10.1006/nimg.2001.0834.

- McNeill D. Hand and Mind. The University of Chicago Press; Chicago, IL: 1992.

- McNeill D. Gesture and Thought. The University of Chicago Press; Chicago: 2005.

- Molnar-Szakacs I, Wu AD, Robles FJ, Iacoboni M. Do you see what I mean? Corticospinal excitability during observation of culture-specific gestures. PLoS ONE. 2007;2:e626. doi: 10.1371/journal.pone.0000626.

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, Evans AC. Speech-like cerebral activity in profoundly deaf people processing signed languages: implications for the neural basis of human language. Proc Natl Acad Sci U S A. 2000;97:13961–6. doi: 10.1073/pnas.97.25.13961.

- Poldrack RA, Wagner AD, Prull MW, Desmond JE, Glover GH, Gabrieli JD. Functional specialization for semantic and phonological processing in the left inferior prefrontal cortex. Neuroimage. 1999;10:15–35. doi: 10.1006/nimg.1999.0441.

- Price CJ, Devlin JT. The myth of the visual word form area. Neuroimage. 2003;19:473–81. doi: 10.1016/s1053-8119(03)00084-3.

- Rushworth MF, Walton ME, Kennerley SW, Bannerman DM. Action sets and decisions in the medial frontal cortex. Trends Cogn Sci. 2004;8:410–7. doi: 10.1016/j.tics.2004.07.009.

- Talairach J, Tournoux P. A Co-planar Stereotaxic Atlas of a Human Brain. Thieme; Stuttgart: 1988.

- Uchiyama Y, Toyoda H, Honda M, Yoshida H, Kochiyama T, Ebe K, Sadato N. Functional segregation of the inferior frontal gyrus for syntactic processes: A functional magnetic-resonance imaging study. Neurosci Res. 2008;61:309–318. doi: 10.1016/j.neures.2008.03.013.

- Venus CA, Canter GJ. The effect of redundant cues on comprehension of spoken messages by aphasic adults. J Commun Disord. 1987;20:477–91. doi: 10.1016/0021-9924(87)90035-9.

- Vigneau M, Beaucousin V, Herve PY, Duffau H, Crivello F, Houde O, Mazoyer B, Tzourio-Mazoyer N. Meta-analyzing left hemisphere language areas: phonology, semantics, and sentence processing. Neuroimage. 2006;30:1414–32. doi: 10.1016/j.neuroimage.2005.11.002.

- Walton ME, Croxson PL, Behrens TE, Kennerley SW, Rushworth MF. Adaptive decision making and value in the anterior cingulate cortex. Neuroimage. 2007;36(Suppl 2):T142–54. doi: 10.1016/j.neuroimage.2007.03.029.

- Waters D, Campbell R, Capek CM, Woll B, David AS, McGuire PK, Brammer MJ, MacSweeney M. Fingerspelling, signed language, text and picture processing in deaf native signers: the role of the mid-fusiform gyrus. Neuroimage. 2007;35:1287–302. doi: 10.1016/j.neuroimage.2007.01.025.