Abstract

An extension of the Wiener-Volterra theory to a Poisson-distributed impulse train input was used to characterize the temporal response properties of neurons in primary auditory cortex (AI) of the ketamine-anesthetized cat. Both first- and second-order “Poisson-Wiener” (PW) models were tested on their predictions of temporal modulation transfer functions (tMTFs), which were derived from extracellular spike responses to periodic click trains with click repetition rates of 2–64 Hz. Second-order (i.e., nonlinear) PW fits to the measured tMTFs could be described as very good in a majority of cases (e.g., predictability ≥80%) and were almost always superior to first-order (i.e., linear) fits. In all sampled neurons, second-order PW kernels showed strong compressive nonlinearities (i.e., a depression of the impulse response) but never expansive nonlinearities (i.e., a facilitation of the impulse response). In neurons with low-pass tMTFs, the depression decayed exponentially with the interstimulus lag, whereas in neurons with band-pass tMTFs, the depression was typically double-peaked, and the second peak occurred at a lag that correlated with the neuron's best modulation frequency. It appears that modulation-tuning in AI arises in part from an interplay of two nonlinear processes with distinct time courses.

INTRODUCTION

Neurons in primary auditory cortex (AI) of anesthetized animals typically respond only to the onsets of sounds of which the rates of AM exceed a few tens of hertz (or which are unmodulated), although this is not always the case in the awake condition (e.g., Lu et al. 2001; Recanzone 2000; Wang et al. 2005). This strongly nonlinear behavior, potentiated under anesthesia, leads to an innate preference for slowly modulated sounds, such as speech and other conspecific communication calls. It has recently been proposed that modulation-tuning across neural populations is optimized for discriminating between and within classes of natural sounds, including conspecific calls (Woolley et al. 2005). While the synchronized response of most AI neurons drops off with increasing AM rate in simple low-pass fashion, some neurons exhibit band-pass tendencies. The cellular mechanisms underlying modulation-tuning in AI, however, remain unclear.

The degree of neuronal synchronization to stimulus temporal fluctuations is typically quantified by computing the temporal modulation transfer function (tMTF) from responses to periodic or AM sounds (e.g., Eggermont 1994, 2002; Lu and Wang 2000). The tMTFs of auditory neurons have also been derived indirectly, via system identification methods (for a review, see e.g., Wu et al. 2006). Most of these latter efforts, however, relied on the assumption of linearity: the tMTF was derived, essentially, by Fourier transformation of the neuronal impulse response (e.g., Depireux et al. 2001; Theunissen et al. 2001; Woolley et al. 2005). While linear models have provided and continue to provide much insight into the nature of central auditory processing, it is a fact that AI neurons often exhibit profound nonlinearities (e.g., Bar-Yosef et al. 2002). Thus it is not surprising that linear models are highly context-dependent (i.e., depend on the test-stimulus used in their estimation) and perform poorly when used to predict responses to complex stimuli (e.g., Christianson et al. 2008; Machens et al. 2004; Sahani and Linden 2003). The addition of an ad hoc, static nonlinear transformation to the linear model is enjoying a recent resurgence (e.g., Atencio et al. 2008; Brenner et al. 2000; Nagel and Doupe 2006) and does improve predictions (Sharpee et al. 2008) but still leaves the temporal nonlinearities uncharacterized. The more comprehensive ad hoc model of Ahrens et al. (2008), which explicitly includes temporal nonlinearities, arguably represents the present state-of-the-art in the modeling of the AI neuron response.

A rigorous and general nonparametric theory of system identification, capable, in principle, of characterizing the input-output relation of any (time-invariant) nonlinear system with memory, was pioneered long ago by Vito Volterra and Norbert Wiener (Volterra 1930; Wiener 1958; for an accessible introduction to the theory, see Marmarelis and Marmarelis 1978). In essence, the theory combines the Taylor series expansion with the convolution operator to capture the influence of a system's memory, or of stimulus interactions, on the impulse response. Thus the second-order Wiener-Volterra “kernel” effectively corrects the impulse response for interactions with single preceding impulses (as a function of the interval between the 2 impulses), the third-order kernel corrects for interactions with pairs of preceding impulses (as a function of the 2 intervals between the 3 impulses), and so on. One practical limitation of the theory, especially in its application to neural systems, is that the amount of data required to estimate kernels at a given signal-to-noise ratio (SNR) grows exponentially with the kernel order. Above second order, the recording times to acquire sufficient numbers of spikes are very long, and the condition of response stationarity becomes very difficult to meet. Nevertheless providing that second-order interactions are strong, the improvement of second-order over linear predictions can be substantial (e.g., Juusola et al. 1995; Marmarelis and Naka 1972).

Here we applied what has been termed the “Poisson-Wiener” (PW) model (Marmarelis 2004) to characterize the temporal response properties of AI neurons in the ketamine-anesthetized cat. The PW model represents a reformulation of the Gaussian white-noise-based Wiener theory around a Poisson-distributed impulse train test-input (Epping and Eggermont 1986; Krausz 1975), which evokes a much more robust response in AI. Both first- and second-order PW models were tested on their predictions of neuronal tMTFs, which were derived from responses to periodic click trains at rates of 2–64 Hz. We found that second-order fits were substantially better than linear fits in almost all cases and could be described as very good (predictability ≥80%) in a majority of cases.

METHODS

Animal preparation, sound stimulus presentation, and spike recording

Animal use was approved (BI 2001-021) and reviewed on a yearly basis by the Life and Environmental Sciences Animal Care Committee of the University of Calgary, according to the guidelines set by the Canadian Council of Animal Care. Cats were deeply anesthetized with 25 mg/kg ketamine hydrochloride and 20 mg/kg sodium pentobarbital injected intramuscularly. Lidocaine (20 mg/ml) was injected subcutaneously prior to the dissection of tissue and craniotomy over the right temporal lobe. The dura was resected to expose AI, roughly bounded by the anterior and posterior ectosylvian sulci. A mixture of 0.2 ml acepromazine (0.25 mg/ml) and 0.8 ml atropine methyl nitrate (25 mg/ml) was periodically administered subcutaneously at 0.25 ml/kg body wt to reduce secretions into the respiratory tract. Small doses of ketamine were administered as required, typically every hour, to maintain a state of areflexive anesthesia (average across cats: 11.8 mg·kg−1·h−1; range: 8.8–16.7 mg·kg−1·h−1). All cats had normal hearing, as assessed using auditory brain stem response thresholds (Noreña and Eggermont 2005).

Acoustic stimuli were presented in an anechoic room from a speaker system (Fostex RM765 in combination with a Realistic super-tweeter) that produced an approximately flat spectrum (±5 dB ≤40 kHz, measured at the cat's head). The speaker was placed ∼30° from the midline in the contralateral field, ∼50 cm from the cat's left ear. Stimuli were generated in MATLAB and transferred to a sound-delivery system (Tucker Davis Technologies RP2.1).

The cat was secured with one screw cemented on the head without any other restraint. Two arrays of eight electrodes (Frederic Haer) each with impedances between 1 and 2 MΩ were used for spike acquisition. The depth of recording was between 700 and 1,200 μm, and thus the electrodes were likely in deep layer III or layer IV. The recorded potentials were amplified 10,000 times (Tucker Davis Technologies RA16PA), filtered between 0.3 and 3 kHz, and digitized by a multi-channel data-acquisition system (Tucker Davis Technologies RX5). Spikes were identified on-line using a single trigger set well outside of the noise floor and sorted off-line using an automated procedure based on principal component analysis and K-means clustering (Eggermont 1990). Three of the best-sorted waveforms on an electrode, each potentially representing a single unit, were combined for statistical purposes to form a multiple single-unit (MSU) spike train. We have previously shown that single-unit and MSU spike trains give nearly identical tMTFs (Eggermont 1998).

Poisson-Wiener series

The output of a nonlinear “black box” system as a function of time, y(t), can be approximated by a series of orthogonal functionals (i.e., functions of a function), Gi, of the input to the system, x(t), and its PW kernels, pi (after Epping and Eggermont 1986; Krausz 1975)

|

(1) |

where

|

(2) |

The first three functionals are

|

(3) |

|

(4) |

|

(5) |

where λ is the mean impulse rate of the Poisson-distributed test-input. Following Lee and Schetzen (1965), the PW kernels were estimated by cross-correlation of the system's spike output with the Poisson-distributed impulse train input

|

(6) |

|

(7) |

|

(8) |

where E denotes the expected (mean) value of the cross-correlation across time. The kernels may be qualitatively interpreted as follows. The zeroth-order kernel is equal to the average system activity (i.e., firing rate), whether spontaneous or stimulus-evoked. The first-order kernel represents the average response that is time-locked to an individual impulse, or in other words, the mean-rate-corrected peristimulus time-histogram (PSTH). The second-order kernel, in this “predictive form,” represents the average facilitation or depression of the response to an impulse, when that impulse is preceded by another impulse by θ ms, regardless of intervening impulses. A second-order PW series gives an exact prediction of a system's response to any two-impulse experiment, or, if the system nonlinearity is at most quadratic, an exact prediction of the response to any experiment (within assumptions of stationarity, etc.).

First- and second-order PW kernels were computed by discretizing Eqs. 7 and 8, respectively, using a time bin of 1 ms. First-order kernels were subsequently smoothed with a 5-bin uniform filter, and second-order kernels with a 21 × 21-bin uniform filter. Stimuli for kernel estimation were 15-min-long Poisson-distributed trains of acoustic clicks (abbreviated as “Poisson-clicks”) and also (for comparative purposes) Poisson-distributed trains of tone pips (abbreviated as “Poisson-pips;” different frequency pips were treated as identical “impulses”). All individual clicks and pips were presented at a peak level of 65 dB per SPL. Clicks were initially generated at the mean rate of 8/s, which was subsequently reduced to ∼6.7/s (λ in Eqs. 3–8) by imposing a minimum dead time of 20 ms between successive clicks to increase the evoked neural firing rate. Pips were randomly and independently drawn from a pool of 81 frequencies that spanned five octaves (0.3–10 or 0.6–20, or 1.2–40 kHz, depending on responses to a previous search stimulus) in equal logarithmic steps. Each frequency was presented at an average rate of one per 4 s so the average aggregate pip presentation rate was 20/s. The time course of each pip was shaped by a gamma-function

|

(9) |

The gamma-envelope is above half-maximum power (i.e., is within 6 dB of the peak level) over ∼3–17 ms post pip-onset and decays by ∼64 dB at 50 ms, at which point the pip was truncated.

Frequency tuning curves

MSU frequency tuning was assessed on the basis of responses to a pseudorandom sequence of tone pips, differing in frequency and intensity, which were presented one at a time at the rate of 4/s. The same five octaves were spanned by 27 frequencies in equal logarithmic steps. Each frequency was presented at eight peak intensity levels, from −5 to 65 dB SPL, in 10-dB steps. Every frequency-intensity combination was repeated 10 times in the random sequence.

Spectrotemporal receptive fields

Spectrotemporal receptive fields (STRFs) were computed from responses to the 15-min-long Poisson-pips stimulus. Our procedure for computing STRFs was first detailed in Valentine and Eggermont (2004) and updated in Eggermont (2006). Briefly, each spike registered a count in every preceding stimulus frequency-time bin (≤250 ms) that marked a pip onset; thus excitatory frequency-latency combinations produced above-average counts, while inhibitory combinations produced below-average counts. Counts were then converted to firing rates in units of spike/s/pip, and the STRF was smoothed with a 5 × 5-bin uniform filter. The best frequency (BF) was the one that evoked the maximum instantaneous firing rate, i.e., the maximum entry in the STRF matrix.

First- and second-order PW predictions of measured tMTFs

First- and second-order PW models were used to predict neuronal tMTFs, which were also derived directly from responses to periodic click trains with click repetition rates ranging logarithmically from 2 to 64 Hz (4 rates per octave for a total of 21 rates). Each train began with a click at time 0 and was 1 s in duration (so that the 2-Hz train consisted of 3 clicks), and was followed by 2 s of silence. Each train was repeated 10 times in a random sequence (total ensemble duration was 10.5 min). Peak click intensity was 65 dB pe SPL, the same as that used in kernel estimation. The tMTF was defined as the average number of synchronized spikes evoked per click in the steady state, as a function of the click repetition rate. We found that steady state was always reached within 300 ms of a train's onset; thus all earlier clicks and spikes were excluded from the calculation. Synchronized firing was computed by Fourier transformation of the averaged period histograms, which were always constructed using 16 bins per period, so that the relative timing accuracy of the spikes was constant across click repetition rate (Eggermont 2002). The significance of the synchronized response was assessed using the Rayleigh test of uniformity on its vector strength, a phase-locking metric (Fisher 1993).

A first-order predicted response to a given periodic click train was obtained by summing the zeroth- and first-order functionals (Eqs. 3–4). Once computations reached steady state, the predicted period histogram could be taken as the predicted response to any single click in a train, and the synchronized firing rate was computed using the same procedure outlined in the preceding text for measured period histograms. Note that an equivalent first-order prediction of the tMTF can be computed simply by taking the Fourier transformation of the first-order kernel, underscoring the elegance of the convolution theorem. Likewise, a second-order predicted response was obtained by summing the zeroth-, first-, and second-order functionals (Eqs. 3–5) and proceeding as in the preceding text. Note that in the second-order case, transforming to the frequency domain would do little to simplify the computation (see, e.g., Bendat 1998).

For both first- and second-order models, the percent predictability of the measured tMTF at a given click repetition rate was defined as 100 minus the percent error

|

(10) |

The percent predictability will be reported at just the best click repetition rate or modulation frequency (BMF) of the measured tMTF, as well as averaged across those modulation frequencies at which the measured synchronized response was significant at P < 0.05.

Case inclusion criteria

From all available MSU recordings, a smaller sample was chosen for analysis based solely on the criteria of response strength and stationarity. Stationarity was assessed using the Mann-Kendall test (Kendall 1975; Mann 1945), which detects monotonic trends in the interspike interval over time; recordings with a trend significant at P < 0.05 were excluded as nonstationary. Minimum firing rate requirements were as follows. In response to both the Poisson-clicks and -pips stimuli, the peak firing rate of the (smoothed) first-order kernel had to be >4 SD above the baseline rate, which was obtained by averaging spike activity over the first 10-ms post stimulus-onset (shorter than the minimum response transit time to AI). For periodic click trains, the synchronized response at the BMF had to be more than one spike per click, and its vector strength significant at P < 0.05.

RESULTS

Proof of concept: first- and second-order Poisson-Wiener predictions of the responses of simulated nonlinear neurons

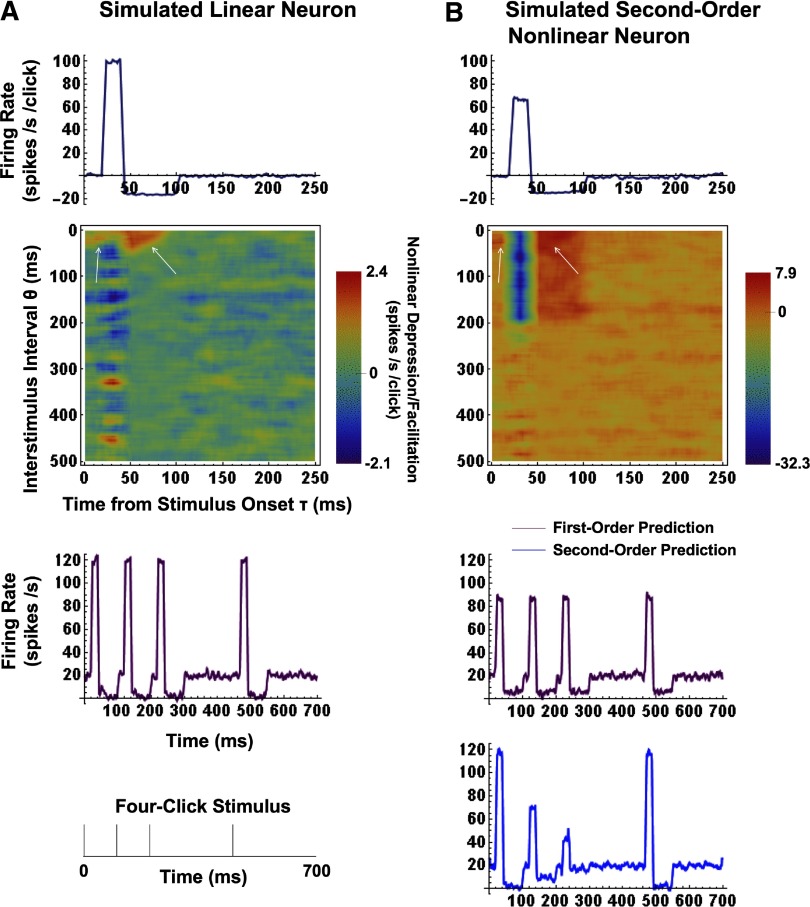

The firing properties of simulated AI neurons were specified as follows. The probability of a spike in each time bin was initially set to give a mean Poisson-distributed spontaneous rate of 20 spike/s. In a simulated linear neuron, a stimulus click produced an average excitation (above the spontaneous rate) of 100 spike/s over post-onset latencies of 20–40 ms, followed by an average suppression (below the spontaneous rate) of 20 spike/s over 40–100 ms. In a simulated second-order nonlinear neuron, the above impulse response was modulated by a simple constant function of the interval to every preceding impulse within the 200-ms memory of the system. Note that all of the preceding values are arbitrary but representative of extracellular MSU recordings in AI. In the example presented as Fig. 1, the second-order constant was set to ½; (values less than unity define a compressive nonlinearity and those greater than unity an expansive nonlinearity). Thus the simulated nonlinear neuron's average response to a click was ½ that of a linear neuron's if the click was preceded by one other within the 200-ms memory, ¼ that of a linear neuron's if the click was preceded by two others within memory, etc. The average Poisson-distributed click stimulation rate (λ in Eqs. 3–8) was four per second, and each simulation was run over 30 min (1.8 × 106 bins).

FIG. 1.

First- and 2nd-order Poisson-Wiener (PW) kernels and response predictions for a simulated linear and a 2nd-order compressively nonlinear neuron. A: 1st- and 2nd-order PW kernels (top) for a simulated linear neuron (see the text for simulation specifications). In the linear case, the 1st-order kernel is equivalent to the impulse response. The 2nd-order kernel illustrates the nonlinear depression and facilitation (by the blue and red ends of the color spectrum, respectively) of the impulse response due to interactions between pairs of impulses separated by time interval θ. Even the heavily smoothed (21 × 21-bin uniform filter) 2nd-order kernel is noisy, but has a mean value of 0 over any substantial vertical or horizontal strip for any linear neuron. Several artifacts due to the discrete computation appear in all 2nd-order kernels and are illustrated by white arrows. The 1st-order predicted response (purple curve) to a 4-click stimulus (bottom panel) is a very close match to simulation specifications. B: 1st- and 2nd-order PW kernels (top) for a simulated 2nd-order compressively nonlinear neuron (see the text for simulation specifications). In this case, the 1st-order kernel is smaller than the impulse response and so the 1st-order model (purple curve) underpredicts the response to single clicks (i.e., those with no preceding clicks in memory) but overpredicts the response to multiple clicks. The 2nd-order kernel characterizes and corrects for the compressive nonlinearity, so that the 2nd-order prediction (blue curve) is a very close match to simulation specifications.

Figure 1A, top, shows the first-order kernel of the simulated linear neuron specified in the preceding text; in this linear case, the first-order kernel is equivalent to the mean spike rate-corrected impulse response. The next panel shows the neuron's second-order kernel, smoothed by a 21 × 21-bin uniform filter. The second-order kernel represents the average facilitation (by the red end of the color spectrum) or depression (by the blue end) of the impulse response at a latency of τ ms (horizontal axis), when the impulse is preceded by another impulse by θ ms (vertical axis), regardless of any intervening impulses. Even with 30 min of simulation, there is considerable noise in the second-order kernel estimate, which is related to the level of spike activity, but any substantial horizontal or vertical strip has a mean value of approximately zero (as required for a linear system). Note, however, that several artifacts (shown by white arrows) arise in our computation of the second-order kernel. These artifacts are a consequence of the discretization of Eq. 8 and become smaller at shorter bin widths. At our 1-ms resolution, the resulting second-order prediction errors were found to be <3%. The third panel of Fig. 1A shows the first-order predicted response to a four-click stimulus, drawn below; the prediction is, of course, a close match to the linear simulation specifications.

Figure 1B, top, shows the computed first-order kernel of a simulated second-order nonlinear neuron. Note that both excitation and suppression are smaller than in the linear case, due to the compressive nonlinearity of factor ½. The neuron's second-order kernel (next panel) shows an average depression of ∼30 spike·s−1·click−1 during the 20-ms duration of excitation and extending over the 200-ms memory, and an average facilitation of ∼6 spike·s−1·click−1 during the 60-ms duration of suppression (in this case, “facilitation” is better understood as “reduction of inhibition”). Note that 30:6 is also the ratio of excitation to suppression (100:20); note also that adding 30 spike·s−1·click−1 to the excitatory part of the nonlinear neuron's first-order kernel, and subtracting 6 spike·s−1·click−1 from the suppressive part gives back the impulse response of the linear neuron. The first-order predicted response of the nonlinear neuron to the four-click stimulus is shown in the third panel of Fig. 1B. Note that the first-order model underpredicts the responses to the first and fourth “linear” clicks, which are not affected by preceding clicks in memory, and overpredicts the responses to the second and third “nonlinear” clicks, which are affected by one and two preceding clicks, respectively. In contrast, the second-order prediction (bottom) is again a close match to the nonlinear simulation specifications, which require average excitatory responses of 70 [i.e., 20 + 1/2(100)] and 45 [i.e., 20 + (½)2(100)] spike/s for the second and third clicks, respectively.

We carried out additional simulations to determine whether the expected second-order predictions would also be obtained for variations of several key, nonarbitrary parameters, namely the strength of the nonlinearity and the average Poisson-click stimulation rate. We found that both parameters could only be increased within limits. For increased nonlinearity strength, this was a consequence of the increase in statistical error in the second-order kernel estimate due to the decrease in the number of spikes. For increased stimulation rate, it was a consequence of the increase in systematic error due to the increased deviation of the test-input distribution from the ideal Poisson (resulting from the 1-ms “dead time” imposed by discretization). As was the case for the first-order prediction of the response of a compressively nonlinear neuron, the systematic error due to the dead time was an underprediction of responses to “linear” clicks and an overprediction of responses to “nonlinear” clicks. It should further be noted that for systems of higher than second-order, a second-order Poisson-Wiener prediction will not be independent of the Poisson-click rate. This is a separate issue from that of the dead time and is a consequence of the decreased number of long inter-click intervals (as the click rate increases) with which the system is probed. This is not a problem for second-order systems where again, interactions occur only between pairs of clicks, regardless of any clicks in between.

First- and second-order PW kernels and predictions

EXAMPLES FROM THE EXPERIMENTAL DATA.

Extracellular recordings were made from AI in seven ketamine-anesthetized cats. The results reported here are based on 58 sets of MSU recordings, which are presumed to come from large pyramidal neurons in layers III/IV of AI. Cases were selected from a larger pool based on the stationarity and the strength of responses to the Poisson-distributed and periodic click train stimuli (see methods).

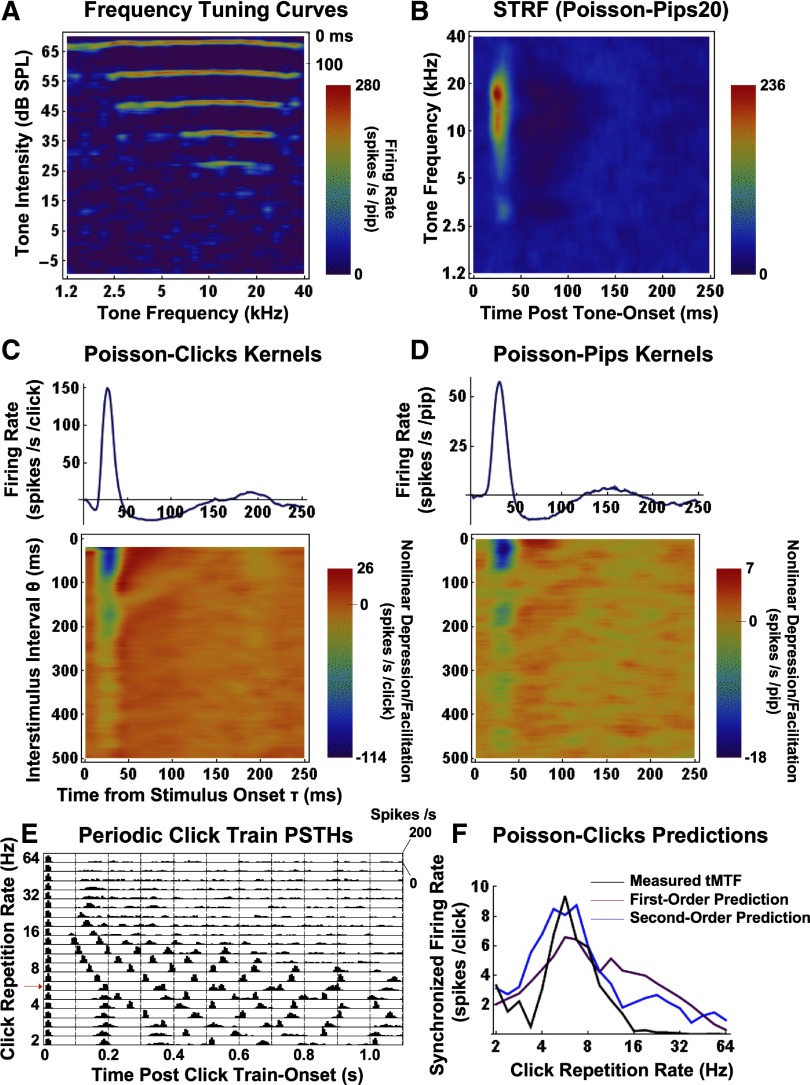

Figure 2 presents results from an example MSU recording (352-2-3). Figure 2A shows its FTC. The average response to a given tone pip frequency and intensity is plotted as a smoothed raster plot over latencies of 0- ms (time scale is in the top-right corner). High firing rates are represented by the red end of the color spectrum and low firing rates by the blue end. The pure tone response threshold lies between 15 and 25 dB SPL, and both the spectral bandwidth and the firing rate at the best frequency (BF) increase monotonically with sound level. The MSU's STRF is shown in Fig. 2B. The unit's BF at 65 dB SPL is ∼17 kHz.

FIG. 2.

An illustration of the analytical procedures using data from an example multispike unit (MSU). A: tone frequency tuning curves as a function of SPL, plotted as smoothed raster plots over latencies of 0 to 100 ms (time scale shown in the top-right corner). B: Spectrotemporal receptive field (STRF) computed from responses to the Poisson-pips stimulus (see text). C and D: 1st- and 2nd-order kernels computed from responses to the Poisson-clicks (C) and Poisson-pips (D) test inputs. Note that the 2nd-order Poisson-clicks kernels are empty for interstimulus lags (θ) of <20 ms due to the minimum dead time imposed between successive clicks. E: raw poststimulus time histographic (PSTH) responses to periodic click trains with click repetition rates of 2–64 Hz. PSTHs represent the averages of 10 trials and were computed in 10-ms bins. F: measured tMTF (black curve), and 1st- (purple) and 2nd-order (blue) PW predictions.

The first-order kernel for the same example MSU, derived from responses to the Poisson-clicks test-stimulus, is given in the top panel of Fig. 2C. As was the case for the vast majority of our recordings, the first-order kernel is characterized by a brief, strong excitation, which is followed by a longer, hard suppression (little or no spiking). In this example, there is also a weak rebound that peaks at ∼190 ms. For comparison, kernels were also obtained from responses to the Poisson-pips test-stimulus (see methods), and the Poisson-pips first-order kernel is shown in Fig. 2D, top. Note the qualitative similarity between the click- and pip-derived first-order kernels, albeit that the rebound activity peaks earlier with the pips (∼150 ms). Note also that the Poisson-pips first-order kernel is computationally equivalent to the mean-rate-corrected time marginal of the STRF (for a proof, see Rieke et al. 1997), as can be appreciated by comparing the excitation-suppression-rebound time course of the STRF to that of the first-order kernel.

Second-order kernels derived from responses to the Poisson-clicks and Poisson-pips test-inputs are presented in Fig. 2, C and D, bottom, respectively. Note, again, their qualitative similarity, except that the Poisson-clicks kernel is empty for θ ≤20 ms due to the dead time imposed between successive clicks (see methods). The characteristic feature of these and in fact of all of our sampled second-order kernels was a marked (nonlinear) depression of the (linear) excitatory response. The depression was generally strongest at the shortest interstimulus intervals (again, represented by the blue end of the color spectrum) but could persist up to the longest intervals investigated (500 ms). This depression during periods of excitation was always coupled to a (nonlinear) facilitation (red colors) during periods of (linear) suppression. Put more concisely, all second-order kernels exhibited strong compressive nonlinearities, which acted to reduce the magnitude of both the excitatory and suppressive components of the first-order response. Expansive nonlinearities (i.e., the facilitation of excitation) were not observed. Our finding of only compressive nonlinearities in AI of anesthetized cats is consistent with the results of intracellular studies on large pyramidal neurons in various regions of rat sensory-motor cortex (Thomson 1997; Thomson et al. 1993; Wehr and Zador 2005). In contrast, more diverse nonlinear dynamics, including expansive nonlinearities, were observed in the auditory midbrain of the grassfrog (Epping and Eggermont 1986) and in nonpyramidal neurons of cerebral cortex (e.g., Zucker and Regehr 2002).

To evaluate the predictive power of first- and second-order PW models, we computed model responses to sets of periodic click trains with repetition rates of 2–64 Hz and compared them to measured responses to the same stimuli (see methods). We mention parenthetically that kernels estimated with the Poisson-pips test input, while useful in many respects, cannot be used to generate quantitative predictions because different-frequency pips do not, of course, constitute equally effective impulse stimuli. Figure 2E shows, again for the same example MSU, the raw PSTH responses to the periodic click train stimuli, while the tMTF derived from these data are illustrated with the black curve in Fig. 2F. The click repetition rate that elicits the most synchronized spikes/click, also referred to as the unit's best modulation frequency (BMF) is 5.7 Hz (indicated by the red arrow in Fig. 2E). Note that at low click repetition rates, this sharply tuned band-pass unit exhibits strong periodic ringing at a frequency that closely matches the unit's BMF. In Fig. 2F, first- and second-order PW predictions of the tMTF are illustrated with purple and blue curves, respectively. We compared measured and predicted BMFs, as well as modulation frequency bandwidths at half-peak value. We also calculated the percent predictability of the tMTF, both at the measured BMF and averaged across those modulation frequencies at which the synchronized response was significant (see methods). In this unit, measured and first- and second-order predicted BMFs were all 5.7 Hz, and bandwidths were 1.0, 3.0, and 1.5 octaves, respectively. First-order predictability of the tMTF at the measured BMF was 70% and averaged first-order predictability was 78%. For the second-order model, these prediction values improved to 86 and 84%, respectively.

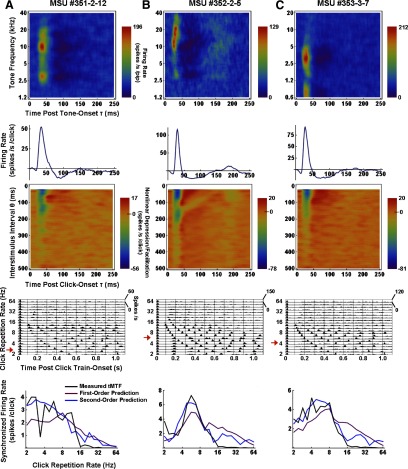

Figure 3 shows results obtained from three additional MSUs (A–C); STRFs are in the top row, first- and second-order PW kernels in the second and third rows, PSTH responses to periodic click trains in the fourth row, and measured and predicted tMTFs in the bottom row. Notice again that the (click-derived) first-order kernel closely describes the time course of the (pip-derived) STRF around the BF. Measured tMTFs were classified by eye as low-pass in 62% (36/58) of MSUs (e.g., Fig. 3, A and C) and as band-pass in the remaining 38% (22/58; e.g., Figs. 2 and 3B). Almost all band-pass units showed strong periodic rebound activity in PSTH responses at low click repetition rates (and in the 1st-order kernel), at frequencies that corresponded with the units’ BMFs. In each example unit, whether low- or band-pass, the second-order PW fit to the measured tMTF represents a substantial improvement over the first-order fit. One notices, however, that even the second-order models overpredict the tMTFs above ∼16 Hz, implying that higher than second-order nonlinearities are present and influence measured responses particularly at high stimulation rates. Parenthetically, we note that one-sixth (6/36) of low-pass tMTFs showed a pronounced notch at click repetition rates of ∼3.4 Hz (e.g., Fig. 3A), which could not be predicted with the PW models. The lack of spikes sometimes observed at this particular click rate is paralleled by the destructive interference seen in click-evoked local field potentials (LFPs). Thus the negative, spike-generating phase of a given LFP is cancelled by the positive-going phase of the previous LFP, seemingly reducing the generation of spikes (Eggermont and Smith 1995).

FIG. 3.

Results from 3 additional example MSUs (A–C). STRFs (top), 1st- and 2nd-order PW kernels (2nd and 3rd rows), PSTH responses to periodic click trains (4th row), and 1st- and 2nd-order PW predictions of measured temporal modulation transfer functions (tMTFs, bottom row).

AVERAGED RESULTS FOR LOW-PASS AND BAND-PASS MSUs.

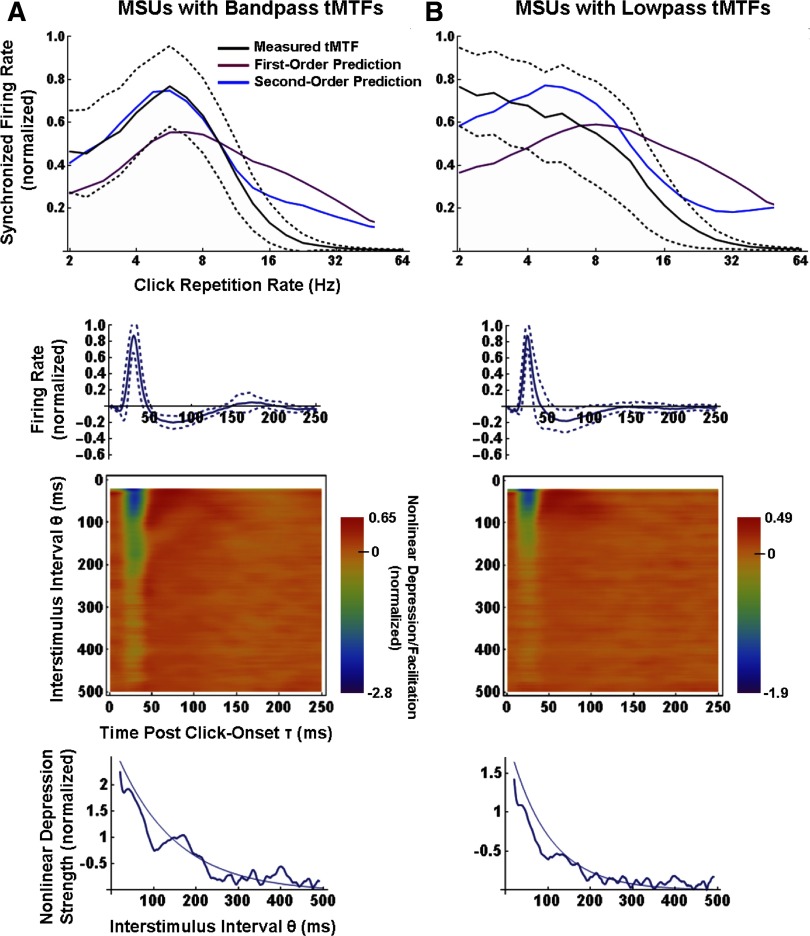

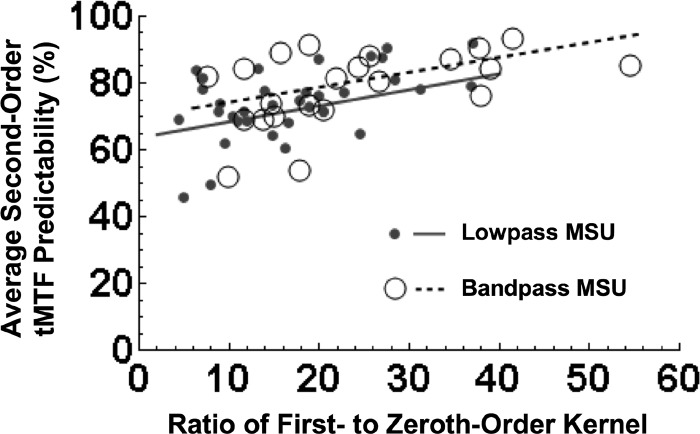

Figure 4, top row, shows averaged tMTFs from the 22 band-pass (A) and 30 low-pass (B) MSUs (the 6 “low-pass with notch” MSUs were excluded, on this plot only); measured, and first- and second-order predicted tMTFs are color-coded as before. Prior to averaging, each case was normalized on the maximum synchronized firing rate of its measured and predicted data. The dashed black curves show ±1 SD about the mean measured tMTF. For the band-pass units, the average second-order prediction happened to be nearly exact up to modulation frequencies of ∼14 Hz. For the low-pass units, the improvement over the first-order model was still substantial, though the average second-order prediction retained some of the band-pass tendencies of the first-order prediction, and was poorer than the second-order fit to band-pass tMTFs. The poorer fit is at least in part a consequence of the lower response SNRs, i.e., a lower ratio of stimulated to spontaneous activity, observed in our sample of low-pass compared with band-pass units (for reasons unknown). The mean peak excitation of the first-order kernels of low-pass units was 58 ± 18 (SD) spike·s−1·click−1, and for band-pass units it was 78 ± 20 spike·s−1·click−1 (P < 0.01; Student's t-test). Figure 5 plots average second-order tMTF predictability versus response SNR, for the sample of low-pass (•) and band-pass MSUs (○). SNR was taken as the ratio of the peak of first-order kernel excitation to the value of the zeroth-order kernel (i.e., the overall mean firing rate). Significant correlations between predictability and response SNR were observed for both low-pass (solid line; r2 = 0.30; P < 10-3) and band-pass units (dashed line; r2 = 0.24; P = 0.02). Mean (± SD) SNRs were 18 ± 9.0 for low-pass units and 25 ± 11 for band-pass units (P = 0.05; Student's t-test).

FIG. 4.

Averaged measured and predicted tMTFs (top), averaged 1st- and 2nd-order PW kernels (2nd and 3rd rows), and averaged time courses of the strength of depression (bottom row) for the sample of 22 band-pass (A) and 36 low-pass (B) MSUs. For each plot, individual cases were normalized on their response maxima before averaging. - - - around the mean measured tMTFs and 1st-order kernels show ± SD. In the bottom row, the strength of the 2nd-order depression at each interstimulus lag (θ domain) was averaged over latencies (τ domain) of 20–40 ms. For the band-pass but not for the low-pass units, the depression exhibits a significant secondary peak at ∼175 ms.

FIG. 5.

Scatter plot of the SNR of the 1st-order response (taken as the ratio of peak to mean excitation) and the 2nd-order predictability of the tMTF. •, low-pass units; ○, band-pass units. Significant correlations between response SNR and predictability were observed for both low-pass (—; r2 = 0.30; P < 10-3) and band-pass units (- - -; r2 = 0.24; P = 0.02).

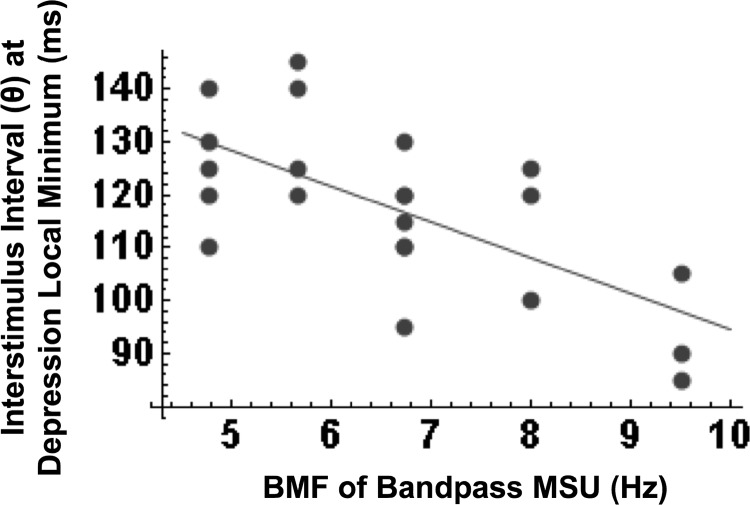

A careful case-by-case inspection indicated that neither the general features of first- and second-order kernels, nor tMTF predictability, varied systematically with MSU frequency tuning. Both did, however, vary with the type of measured tMTF. As already mentioned, first-order kernels of band-pass units typically showed some postsuppression rebound activity, whereas those of low-pass units did not. This is apparent from the averaged first-order kernels, shown in the second row of Fig. 4 (note that individual cases were normalized on their maximum excitation before averaging), although the rebound is small as a result of the smearing caused by averaging (rebounds occurred at different latencies in different units). Also notable is that the decay of second-order depression with increasing interstimulus lag was typically nonmonotonic for band-pass MSUs (e.g., Figs. 2C and 3B), but rarely so for low-pass MSUs (e.g., Figs. 3, A and C). This is also apparent from the averaged second-order kernels, shown in the third row of Fig. 4 (individual cases were normalized on their maximum facilitation before averaging). The bottom row of Fig. 4 illustrates the strength of second-order depression as a function of the interstimulus lag; at each lag (θ domain), the depression was averaged over latencies (τ domain) of 20–40 ms. The time course of the depression in low-pass units is reasonably well-fit by an exponential decay (thin curve) with a mean time constant of 93 ± 18 (SD) ms. The band-pass units are more roughly fit by an exponential decay with a time constant of 138 ± 22 ms, but there appears a significant secondary peak in the depression at an average lag of ∼175 ms (P < 0.01; Student's t-test between the local minimum in depression at ∼100 ms and the secondary peak at ∼175 ms). No significant secondary peak was observed in the low-pass group. Note that if the exponential fit is performed to only the first 100 ms of the data (i.e., up to the inflection point in the band-pass group), the mean time constants are a shorter 64 ± 15 ms for low-pass MSUs and 103 ± 21 ms for band-pass MSUs. Interestingly, as shown in Fig. 6, there was a significant correlation between the BMF of a band-pass unit and the lag to the depression local minimum (r2 = 0.45; P < 0.001). Finally, in both the low- and band-pass groups, the depression strength remained above zero over the entire 500-ms period but did so significantly for ∼200 ms for low-pass MSUs and ∼250 ms for band-pass MSUs.

FIG. 6.

Scatter plot of the BMF and the interstimulus lag at which the 2nd-order kernel of a band-pass MSU shows a local minimum in the strength of depression. Data from 20 of the 22 MSUs that exhibited a clear local minimum is included. The correlation between the BMF and the time lag at the local minimum in depression is highly significant (r2 = 0.45; P < 0.001; regression line drawn on plot).

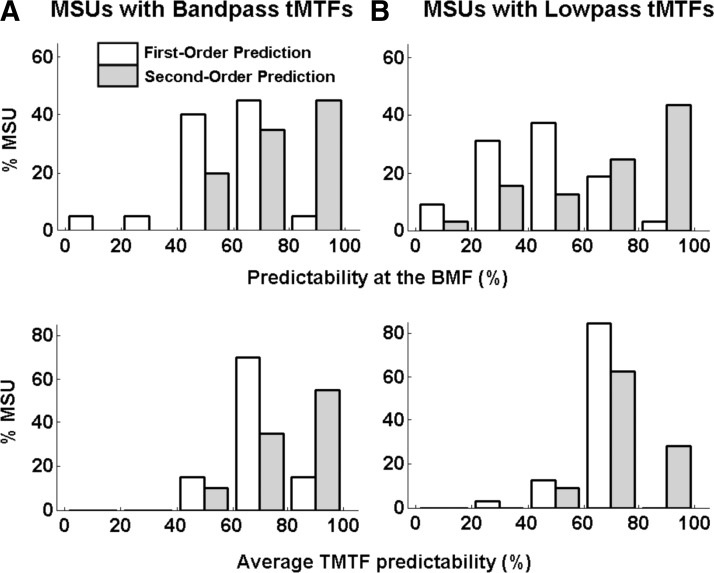

The statistics (mean ± SD) of several parameters of measured and first- and second-order-predicted tMTFs, for the 22 band-pass and 36 low-pass MSUs, are presented in Table 1. These data further underscore the superior predictive power of the nonlinear compared with linear models. The average measured BMFs in the band- and low-pass groups were 6.6 ± 1.4 and 3.1 ± 1.5 Hz, respectively. First-order models overpredicted the BMF of both groups, more so for low-pass units. On average, second-order predictions were not different from measured BMF values for band-pass units but remained significantly higher (P < 10-4) than measured values for low-pass units. (All pairwise comparisons were carried out using ANOVA and the post hoc Tukey test.) Identical trends were obtained for predictions of tMTF bandwidths (see Table 1). The average predictability of the amplitude of the synchronized response at the measured BMF improved dramatically from first- to second-order models: from 58 to 76% for band-pass units and from 46 to 70% for low-pass units. Note that the improvement was actually greater in the low-pass group although the second-order prediction was better in the band-pass group. Likewise, the average predictability of the entire tMTF improved from 69 to 78% (1st- to 2nd-order) for band-pass units and from 68 to 75% for low-pass units. The distributions of tMTF predictions from first- and second-order models are presented in histogram form in Fig. 7. Nonlinear predictions could be described as very good (≥80%) in almost half of our sample, but linear predictions only rarely so.

TABLE 1.

Parameters of measured and first- and second-oral predicted tMTFs

| Measured tMTF | Poisson-clicks Prediction |

||

|---|---|---|---|

| First Order | Second Order | ||

| BMF (Hz) | |||

| BP | 6.6 ± 1.4 | 7.6 ± 1.2 | 6.5 ± 1.2 |

| LP | 3.1 ± 1.5 | 7.8 ± 2.9 | 5.4 ± 1.8 |

| Temporal modulation bandwidth, octave | |||

| BP | 1.7 ± 0.5 | 2.9 ± 1.0 | 1.9 ± 0.4 |

| LP | 1.9 ± 0.8 | 3.5 ± 0.8 | 2.5 ± 0.6 |

| Predictability at the BMF, % | |||

| BP | 58 ± 17 | 76 ± 14 | |

| LP | 46 ± 20 | 70 ± 25 | |

| Average tMTF predictability, % | |||

| BP | 69 ± 10 | 78 ± 11 | |

| LP | 68 ± 8.9 | 75 ± 10 | |

Averaged values (±SD) for band-pass (BP) and low-pass (LP) multispike units of the measured and predicted best modulation frequencies (BMFs, first row) and temporal modulation transfer function (tMTF) bandwidths (second row), and of percent predict-abilities of the tMTF, computed at the just the BMF (third row) and across all significant modulation frequencies (fourth row).

FIG. 7.

Histograms of the distributions of 1st- and 2nd-order predictions of measured tMTFs (□ and  , respectively) for band-pass (A) and low-pass (B) MSUs.

, respectively) for band-pass (A) and low-pass (B) MSUs.

DISCUSSION

We have demonstrated for the first time that an extension of the Wiener-Volterra theory to a Poisson-distributed impulse train input and spike output can be usefully applied to characterize the nonlinear response dynamics of neurons in AI. There have been quite a few previous attempts to characterize the more peripheral stations of the auditory pathway using the second-order Wiener-Volterra approach. These include studies of the auditory nerve (Johnson 1980; Lewis et al. 2002; Recio-Spinoso et al. 2005; Temchin et al. 2005; van Dijk et al. 1994; 1997a,b; Yamada and Lewis 1999; Young and Calhoun 2005), cochlear nucleus (Wickesberg et al. 1984; Recio-Spinoso and van Dijk 2006), midbrain (Epping and Eggermont 1986), and thalamus (Yeshurun et al. 1989). Of the above-cited studies, only those by Temchin et al. (2005) and Young and Calhoun (2005) investigated and reported significantly improved response predictions with second-order compared with first-order models. In the more distant past, one of the chief obstacles to a wider application of the Wiener-Volterra approach to sensory systems analysis had been its high computational demand.

Here we reported that second-order Poisson-Wiener (PW) models carry significantly more predictive power than do linear models in AI of the anesthetized cat. An even stronger finding is that second-order PW models provide very good fits to measured tMTFs in a majority of AI neurons, suggesting that the nonlinearities are predominantly of second order. In our extracellular recordings, which presumably originated predominantly from large layer III and IV pyramidal neurons, the observed nonlinearities were always compressive and generally decreased in strength over a memory of several hundreds of milliseconds.

A well-known compressive nonlinear phenomenon with a memory of several hundreds of milliseconds in the cerebral cortex, is often termed “forward suppression” (i.e., Brosch and Schreiner 1997; Calford and Semple 1995; Eggermont 1999). There are several candidate mechanisms for forward suppression; chief among them are a GABA-mediated feed-forward inhibition (Wehr and Zador 2005) and a Ca2+-mediated short-term depression of synaptic transmission (Zucker and Regehr 2002). Wehr and Zador (2005) that demonstrated, in rat AI, that GABAergic inhibition could contribute to forward suppression over the first 50 to 100 ms or so, but that suppression could persist for ≤500 ms or more (as was confirmed here). Whether GABAergic inhibition is accompanied in AI by the type of thalamocortical synaptic depression that occurs in the visual (Freeman et al. 2002) and somatosensory systems (Chung et al. 2002) remains to be shown.

The first-order kernels of the majority of our band-pass units exhibited a periodic ringing whose frequency corresponded with the BMF of the unit. In addition, second-order kernels showed distinct secondary peak in depression strength at interstimulus lags that also correlated with the BMF, i.e., the unit preferred modulation rates that coincided with a minimum in second-order depression. The mechanism responsible for this secondary, longer-latency nonlinear effect, which clearly contributes to the establishment of modulation tuning in AI, is not entirely clear. One possibility is autonomous periodic bursting in the spindle frequency range that is induced by ketamine anesthesia (Eggermont 1992; Kenmochi and Eggermont 1997). However, when we computed the power spectra of cortical local field potentials (LFPs; recorded from the 2- to 40-Hz signal on our electrodes) in the absence of sound and compared them with tMTFs measured on the same electrodes, we did not find a correlation between peaks in the LFP spectrum and peaks in the tMTF. In addition, on several occasions, we measured low-pass and band-pass tMTFs simultaneously on adjacent electrodes.

Using a phenomenological synaptic model with two time constants, one for the buildup of and another for the recovery from nonlinear depression, Eggermont (1999) obtained good fits to low-pass tMTFs measured in AI of the anesthetized cat. Good fits to band-pass tMTFs, however, necessitated the introduction of a third time constant for nonlinear facilitation, which is not supported by recordings from AI pyramidal neurons. The data presented here suggest that the model be amended to include a two-process depression. Other recent parametric models of short-term plasticity in neocortex also incorporate multiple depressive-facilitative mechanisms with distinct time constants (e.g., Markram et al. 1998; Song et al. 2009).

Given the substantial improvement in nonlinear over linear fits to both band- and low-pass tMTFs, it should be interesting to apply the Poisson-Wiener approach to the prediction of neural responses to stimuli outside the class used in kernel estimation, e.g., to conspecific vocalizations. A major problem with linear models is that they perform poorly in predicting responses to stimuli with different statistical properties than the characterizing stimulus class even if their predictions to stimuli drawn from characterizing class are reasonably good (e.g., Kowalski et al. 1996; Sen et al. 2001; Woolley et al. 2006). The extent to which this limitation also applies to the second-order PW model remains to be addressed in future work.

GRANTS

This work was supported by the Alberta Heritage Foundation for Medical Research, the National Sciences and Engineering Research Council of Canada, and the Campbell McLaurin Chair for Hearing Deficiencies.

Acknowledgments

A. Noreña and B. Gourévich assisted with the data collection.

REFERENCES

- Ahrens et al. 2008.Ahrens MB, Linden JF, Sahani M. Nonlinearities and contextual influences in auditory cortical responses modeled with multilinear spectrotemporal methods. J Neurosci 28: 1929–1942, 2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Atencio et al. 2008.Atencio CA, Sharpee TO, Schreiner CE. Cooperative nonlinearities in auditory cortical neurons. Neuron 58: 956–966, 2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bar-Yosef et al. 2002.Bar-Yosef O, Rotman Y, Nelken I. Responses of neurons in cat primary auditory cortex to bird chirps: effects of temporal and spectral context. J Neurosci 22: 8619–8632, 2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bendat 1998.Bendat JS Nonlinear Systems Techniques and Applications. New York: Wiley, 1998.

- Brenner et al. 2000.Brenner N, Bialek W, de Ruyter van Steveninck R. Adaptive rescaling maximizes information transmission. Neuron 26: 695–702, 2000. [DOI] [PubMed] [Google Scholar]

- Brosch and Schreiner 1997.Brosch M, Schreiner CE. Time course of forward masking tuning curves in cat primary auditory cortex. J Neurophysiol 77: 923–943, 1997. [DOI] [PubMed] [Google Scholar]

- Calford and Semple 1995.Calford MB, Semple MN. Monaural inhibition in cat auditory cortex. J Neurophysiol 73: 1876–1891, 1995. [DOI] [PubMed] [Google Scholar]

- Christianson et al. 2008.Christianson GB, Sahani M, Linden JF. The consequences of response nonlinearities for interpretation of spectrotemporal receptive fields. J Neurosci 28: 446–455, 2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chung et al. 2002.Chung S, Li X, Nelson SB. Short-term depression at thalamocortical synapses contributes to rapid adaptation of cortical sensory responses in vivo. Neuron 34: 437–446, 2002. [DOI] [PubMed] [Google Scholar]

- Depireux et al. 2001.Depireux DA, Simon JZ, Klein DJ, Shamma SA. Spectrotemporal response field characterization with dynamic ripples in ferret primary auditory cortex. J Neurophysiol 85: 1220–1234, 2001. [DOI] [PubMed] [Google Scholar]

- Eggermont 1990.Eggermont JJ The Correlative Brain; Theory and Experiment in Neural Interaction. Berlin: Springer Verlag, 1990.

- Eggermont 1992.Eggermont JJ Stimulus induced and spontaneous rhythmic firing of single units in cat primary auditory cortex. Hear Res 61: 1–11, 1992. [DOI] [PubMed] [Google Scholar]

- Eggermont 1994.Eggermont JJ Temporal modulation transfer functions for AM and FM stimuli in cat auditory cortex. Effects of carrier type, modulating waveform and intensity. Hear Res 74: 51–66, 1994. [DOI] [PubMed] [Google Scholar]

- Eggermont 1998.Eggermont JJ Representation of spectral and temporal sound features in three cortical fields of the cat. Similarities outweigh differences. J Neurophysiol 80: 2743–2764, 1998. [DOI] [PubMed] [Google Scholar]

- Eggermont 1999.Eggermont JJ The magnitude and phase of temporal modulation transfer functions in cat auditory cortex. J Neurosci 19: 2780–2788, 1999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eggermont 2002.Eggermont JJ Temporal modulation transfer functions in cat primary auditory cortex: separating stimulus effects from neural mechanisms. J Neurophysiol 87: 305–321, 2002. [DOI] [PubMed] [Google Scholar]

- Eggermont 2006.Eggermont JJ Properties of correlated neural activity clusters in cat auditory cortex resemble those of neural assemblies. J Neurophysiol 96: 746–764, 2006. [DOI] [PubMed] [Google Scholar]

- Eggermont and Smith 1995.Eggermont JJ, Smith GM. Synchrony between single-unit activity and local field potentials in relation to periodicity coding in primary auditory cortex. J Neurophysiol 73: 227–245, 1995. [DOI] [PubMed] [Google Scholar]

- Epping and Eggermont 1986.Epping WJ, Eggermont JJ. Sensitivity of neurons in the auditory midbrain of the grassfrog to temporal characteristics of sound. I. Stimulation with acoustic clicks. Hear Res 24: 37–54, 1986. [DOI] [PubMed] [Google Scholar]

- Fisher 1993.Fisher NI Statistical Analysis of Circular Data. Cambridge, UK: Cambridge Univ. Press, 1993.

- Freeman et al. 2002.Freeman TC, Durand S, Kiper DC, and Carandini M. Suppression without inhibition in visual cortex. Neuron 35: 759–771. [DOI] [PubMed]

- Johnson 1980.Johnson DH Applicability of white-noise nonlinear system analysis to the peripheral auditory system. J Acoust Soc Am 68: 876–884, 1980. [DOI] [PubMed] [Google Scholar]

- Juusola et al. 1995.Juusola M, Weckström M, Uusitalo RO, Korenberg MJ, French AS. Nonlinear models of the first synapse in the light-adapted fly retina. J Neurophysiol 74: 2538–2547, 1995. [DOI] [PubMed] [Google Scholar]

- Kendall 1975.Kendall MG Rank Correlation Methods. London: Griffin, 1975.

- Kenmochi and Eggermont 1997.Kenmochi M, Eggermont JJ. Autonomous cortical rhythms affect temporal modulation transfer functions. Neuroreport 8: 1589–1593, 1997. [DOI] [PubMed] [Google Scholar]

- Kowalski et al. 1996.Kowalski N, Depireux DA, Shamma SA. Analysis of dynamic spectra in ferret primary auditory cortex. II. Prediction of unit responses to arbitrary dynamic spectra. J Neurophysiol 76: 3524–3534, 1996. [DOI] [PubMed] [Google Scholar]

- Krausz 1975.Krausz HI Identification of nonlinear systems using random impulse train inputs. Biol Cybern 19: 217–230, 1975. [Google Scholar]

- Lee and Schetzen 1965.Lee YW, Schetzen M. Measurement of the Wiener kernels of a nonlinear system by cross-correlation. Int J Control 2: 237–254, 1965. [Google Scholar]

- Lewis et al. 2002.Lewis ER, Henry KR, Yamada WM. Tuning and timing in the gerbil ear: Wiener-kernel analysis. Hear Res 174: 206–221, 2002. [DOI] [PubMed] [Google Scholar]

- Lu et al. 2001.Lu T, Liang L, Wang X. Temporal and rate representations of time-varying signals in the auditory cortex of awake primates. Nat Neurosci 4: 1131–1138, 2001. [DOI] [PubMed] [Google Scholar]

- Lu and Wang 2000.Lu T, Wang X. Temporal discharge patterns evoked by rapid sequences of wide- and narrowband clicks in the primary auditory cortex of cat. J Neurophysiol 84: 236–246, 2000. [DOI] [PubMed] [Google Scholar]

- Machens et al. 2004.Machens CK, Wehr MS, Zador AM. Linearity of cortical receptive fields measured with natural sounds. J Neurosci 24: 1089–1100, 2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mann 1945.Mann HB Non-parametric test against trend. Econometrika 13: 245–259, 1945. [Google Scholar]

- Markram et al. 1998.Markram H, Wang Y, Tsodyks M. Differential signaling via the same axon of neocortical pyramidal neurons. Proc Natl Acad Sci USA 95: 5323–5328, 1998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marmarelis and Marmarelis 1978.Marmarelis PZ, Marmarelis VZ. Analysis of Physiological Systems: The White-Noise Approach. New York: Plenum, 1978.

- Marmarelis and Naka 1972.Marmarelis PZ, Naka K-I. White-noise analysis of a neuron chain: an application of the Wiener theory. Science 175: 1276–1278, 1972. [DOI] [PubMed] [Google Scholar]

- Marmarelis 2004.Marmarelis VZ Nonlinear Dynamic Modeling of Physiological Systems. Hoboken: Wiley, 2004.

- Nagel and Doupe 2006.Nagel KI, Doupe AJ. Organizing principles of spectro-temporal encoding in the avian primary auditory area field L. Neuron 58: 938–55, 2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Noreña and Eggermont 2005.Noreña AJ, Eggermont JJ. Enriched acoustic environment after noise trauma reduces hearing loss and prevents cortical map reorganization. J Neurosci 25: 699–705, 2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Recanzone 2000.Recanzone GH Response profiles of auditory cortical neurons to tones and noise in behaving macaque monkeys. Hear Res 150: 104–118, 2000. [DOI] [PubMed] [Google Scholar]

- Recio-Spinoso et al. 2005.Recio-Spinoso A, Temchin AN, van Dijk P, Fan YH, Ruggero MA. Wiener-kernel analysis of responses to noise of chinchilla auditory-nerve fibers. J Neurophysiol 93: 3615–3634, 2005. [DOI] [PubMed] [Google Scholar]

- Recio-Spinoso and van Dijk 2006.Recio-Spinoso A, van Dijk P. Analysis of responses to noise in the ventral cochlear nucleus using Wiener kernels. Hear Res 216: 7–18, 2006. [DOI] [PubMed] [Google Scholar]

- Rieke et al. 1997.Rieke F, Warland D, de Ruyter van Steveninck R, Bialek W. Spikes: Exploring the Neural Code. Cambridge, MA: MIT Univ. Press, 1997.

- Sahani and Linden 2003.Sahani M and Linden JF. How linear are auditory cortical responses? In: Advances in Neural Information Processing Systems (15th ed.), edited by Becker S, Thrun S, Obermayer K. Cambridge, MA: MIT Univ. Press, 2003, p. 125–132.

- Sen et al. 2001.Sen K, Theunissen FE, Doupe AJ. Feature analysis of natural sounds in the songbird auditory forebrain. J Neurophysiol 86: 1445–1458, 2001. [DOI] [PubMed] [Google Scholar]

- Sharpee et al. 2008.Sharpee TO, Miller KD, Stryker MP. On the importance of static nonlinearity in estimating spatiotemporal neural filters with natural stimuli. J Neurophysiol 99: 2496–2509, 2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Song et al. 2009.Song D, Wang Z, Marmarelis VZ, Berger TW. Parametric and non-parametric model-ing of short-term synaptic plasticity. II. Experimental study. J Comput Neurosci 26: 21–37, 2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Temchin et al. 2005.Temchin AN, Recio-Spinoso A, van Dijk P, Ruggero MA. Wiener kernels of chinchilla auditory-nerve fibers: verification using responses to tones, clicks, and noise and comparison with basilar-membrane vibrations. J Neurophysiol 93: 3635–3648, 2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Theunissen et al. 2001.Theunissen FE, David SV, Singh NC, Hsu A, Vinje WE, Gallant JL. Estimating spatio-temporal receptive fields of auditory and visual neurons from their responses to natural stimuli. Network 12: 289–316, 2001. [PubMed] [Google Scholar]

- Thomson 1997.Thomson AM Activity-dependent properties of synaptic transmission at two classes of connections made by rat neocortical pyramidal axons in vitro. J Physiol 502: 131–147, 1997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Thomson et al. 1993.Thomson AM, Deuchars J, West DC. Large, deep layer pyramid-pyramid single axon EPSPs in slices of rat motor cortex display paired pulse and frequency-dependent depression, mediated presynaptically and self-facilitation, mediated postsynaptically. J Neurophysiol 70: 2354–2369, 1993. [DOI] [PubMed] [Google Scholar]

- Valentine and Eggermont 2004.Valentine PA, Eggermont JJ. Stimulus dependence of spectro-temporal receptive fields in cat primary auditory cortex. Hear Res 196: 119–133, 2004. [DOI] [PubMed] [Google Scholar]

- van Dijk et al. 1994.van Dijk P, Wit HP, Segenhout JM, Tubis A. Wiener kernel analysis of inner ear function in the American bullfrog. J Acoust Soc Am 95: 904–919, 1994. [DOI] [PubMed] [Google Scholar]

- van Dijk et al. 1997a.van Dijk P, Wit HP, Segenhout JM. Dissecting the frog inner ear with Gaussian noise. I. Application of high-order Wiener-kernel analysis. Hear Res 114: 229–242, 1997a. [DOI] [PubMed] [Google Scholar]

- van Dijk et al. 1997b.van Dijk P, Wit HP, Segenhout JM. Dissecting the frog inner ear with Gaussian noise. II. Temperature dependence of inner ear function. Hear Res 114: 243–251, 1997b. [DOI] [PubMed] [Google Scholar]

- Volterra 1930.Volterra V Theory of Functionals and of Integral and Integro-Differential Equations. Glasgow, UK: Blackie and Son, 1930.

- Wang et al. 2005.Wang X, Lu T, Snider RK, Liang L. Sustained firing in auditory cortex evoked by preferred stimuli. Nature 435: 341–346, 2005. [DOI] [PubMed] [Google Scholar]

- Wehr and Zador 2005.Wehr M, Zador AM. Synaptic mechanisms of forward suppression in rat auditory cortex. Neuron 47: 437–445, 2005. [DOI] [PubMed] [Google Scholar]

- Wickesberg et al. 1984.Wickesberg RE, Dickson JW, Gibson MM, Geisler CD. Wiener kernel analysis of responses from anteroventral cochlear nucleus neurons. Hear Res 14: 155–174, 1984. [DOI] [PubMed] [Google Scholar]

- Wiener 1958.Wiener N Nonlinear Problems in Random Theory. New York: Wiley, 1958.

- Woolley et al. 2005.Woolley SM, Fremouw TE, Hsu A, Theunissen FE. Tuning for spectro-temporal modulations as a mechanism for auditory discrimination of natural sounds. Nat Neurosci 8: 1371–1379, 2005. [DOI] [PubMed] [Google Scholar]

- Woolley et al. 2006.Woolley SM, Gill PR, Theunissen FE. Stimulus-dependent auditory tuning results in synchronous population coding of vocalizations in the songbird midbrain. J Neurosci 26: 2499–2512, 2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wu et al. 2006.Wu MC, David SV, Gallant JL. Complete functional characterization of sensory neurons by system identification. Annu Rev Neurosci 29: 477–505, 2006. [DOI] [PubMed] [Google Scholar]

- Yamada and Lewis 1999.Yamada WM, Lewis ER. Predicting the temporal responses of non-phase-locking bullfrog auditory units to complex acoustic waveforms. Hear Res 130: 155–170, 1999. [DOI] [PubMed] [Google Scholar]

- Yeshurun et al. 1989.Yeshurun Y, Wollberg Z, Dyn N. Prediction of linear and non-linear responses of MGB neurons by system identification methods. Bull Math Biol 51: 337–346, 1989. [DOI] [PubMed] [Google Scholar]

- Young and Calhoun 2005.Young ED, Calhoun BM. Nonlinear modeling of auditory-nerve rate responses to wideband stimuli. J Neurophysiol 94: 4441–4454, 2005. [DOI] [PubMed] [Google Scholar]

- Zucker and Regehr 2002.Zucker RS, Regehr WG. Short-term synaptic plasticity. Annu Rev Physiol 64: 355–405, 2002. [DOI] [PubMed] [Google Scholar]