Abstract

The brain adapts to asynchronous audiovisual signals by reducing the subjective temporal lag between them. However, it is currently unclear which sensory signal (visual or auditory) shifts toward the other. According to the idea that the auditory system codes temporal information more precisely than the visual system, one should expect to find some temporal shift of vision toward audition (as in the temporal ventriloquism effect) as a result of adaptation to asynchronous audiovisual signals. Given that visual information gives a more exact estimate of the time of occurrence of distal events than auditory information (due to the fact that the time of arrival of visual information regarding an external event is always closer to the time at which this event occurred), the opposite result could also be expected. Here, we demonstrate that participants' speeded reaction times (RTs) to auditory (but, critically, not visual) stimuli are altered following adaptation to asynchronous audiovisual stimuli. After receiving “baseline” exposure to synchrony, participants were exposed either to auditory-lagging asynchrony (VA group) or to auditory-leading asynchrony (AV group). The results revealed that RTs to sounds became progressively faster (in the VA group) or slower (in the AV group) as participants' exposure to asynchrony increased, thus providing empirical evidence that speeded responses to sounds are influenced by exposure to audiovisual asynchrony.

Keywords: audition, perception, vision, time, recalibration

Recent studies have shown that the brain can adjust the processing of asynchronous sensory signals to help preserve the subjective impression of simultaneity. Prolonged exposure to asynchronous stimuli (such as “simple” pairs of beeps and flashes or “complex” audiovisual speech) often induces temporal “aftereffects” in the perception of subsequently presented stimuli (1–8)*. The mere presence of these temporal “aftereffects” suggests that the mechanisms integrating information from our senses are quite flexible in terms of reducing temporal disparities and hence, optimizing the perception of the events around us. Crucially, Fujisaki and colleagues (1) found (indirect) evidence of temporal aftereffects even in perceptual illusions that depend for their occurrence on the temporal relation between signals, suggesting that these effects are genuinely perceptual.

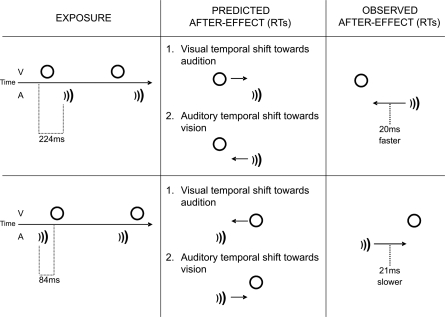

It has been suggested that these temporal recalibration effects might be based on a readjustment of the speed at which different sensory signals are transmitted neurally (e.g., 1, 8). However, previous research has not been able to clarify whether or not this readjustment implies a real change in the speed at which humans respond to each of the recalibrated stimuli. Audition has traditionally been seen as a sensory modality that dominates over the others (e.g., vision) in the temporal domain, thus resulting in illusory effects such as the so-called temporal ventriloquism effect (e.g., 9). Following from this idea, the mechanism underlying audiovisual temporal adaptation could reasonably be expected to imply a shift in the processing of the visual signal toward the (perhaps more accurately coded) auditory signal (see “Predicted aftereffects” in Fig. 1). It is, however, worth highlighting the fact that the most likely cause of audiovisual asynchrony in our environment is the physical delay between auditory and visual signals induced by the fact that light travels much faster than sound (nearly 300,000,000 m/s versus 340 m/s in the air, respectively; see ref. 10). When taken together with differences in the neural transduction of sensory signals at the sensory epithelia, it has been estimated that sounds will reach the brain before visual signals for events that occur up to 10 m away from us, but that visual signals will lead whenever an event occurs at a greater distance (10, 11). Given that only auditory arrival time is affected by a change in the distance of an event from an observer, visual information actually provides a far more precise estimate of when an event occurred. Recent findings suggest that audiovisual temporal discrepancies introduced by distance are compensated for in the processes of temporal adaptation (12). 
Keeping this fact in mind, it makes sense for the brain to “pull” auditory signals into temporal alignment with the corresponding visual events and not vice versa (see “Predicted aftereffects” in Fig. 1). The question of which signal (auditory, visual, or both) is modulated during the adaptation to asynchronous audiovisual signals is then by no means a trivial one.
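
The arithmetic behind this argument can be sketched as follows. The two propagation speeds and the 10-m crossover are taken from the text; the function names are hypothetical, and treating the visual transduction disadvantage as a constant equal to the physical sound delay at 10 m is a simplifying assumption made only for illustration.

```python
SPEED_OF_SOUND_M_S = 340.0            # value quoted in the text (air)
SPEED_OF_LIGHT_M_S = 300_000_000.0    # approximate value quoted in the text


def physical_audio_lag_ms(distance_m: float) -> float:
    """Extra travel time (ms) that sound needs, relative to light, over distance_m."""
    sound_ms = distance_m / SPEED_OF_SOUND_M_S * 1000.0
    light_ms = distance_m / SPEED_OF_LIGHT_M_S * 1000.0
    return sound_ms - light_ms


def neural_arrival_lag_ms(distance_m: float, crossover_m: float = 10.0) -> float:
    """Auditory minus visual arrival time at the brain (ms).

    Assumption (not from the source): vision carries a fixed transduction
    disadvantage equal to the physical audio lag at the crossover distance.
    Negative values mean that the sound arrives first.
    """
    return physical_audio_lag_ms(distance_m) - physical_audio_lag_ms(crossover_m)


if __name__ == "__main__":
    for d in (1, 10, 34, 100):
        print(f"{d:>4} m: physical lag {physical_audio_lag_ms(d):7.1f} ms, "
              f"arrival lag {neural_arrival_lag_ms(d):7.1f} ms")
```

Under these assumptions the visual travel time is negligible at every listed distance, so only the auditory arrival time varies appreciably with distance.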

Fig. 1.

Predicted and observed aftereffects. The predicted and observed results are presented for the cases in which the visual signal leads (upper row) and lags (lower row) the auditory signal. According to Prediction 1 (see the second column) and following the observation that visual stimuli can be attracted toward auditory stimuli in the temporal domain, specific modulations in visual RTs could be expected as a result of temporal recalibration. By contrast, RTs to auditory stimuli (see Prediction 2 in the second column) could be modulated by exposure to audiovisual asynchrony as a consequence of the fact that auditory (but not visual) arrival time is modulated by the distance of an event from the observer. The different predictions highlighted in this panel are, however, not mutually exclusive. As the third column shows, we observed specific modulations of RTs only in participants' responses to auditory stimuli.

In the present study, simple reaction times (RTs) to individually-presented auditory and visual stimuli were used to investigate whether the temporal readjustment observed during (and after) the exposure to asynchronous sensory signals influences the speed at which the brain can respond to unimodal stimuli. Interestingly, RTs were used, in a control experiment in a recent study by Harrar and Harris (3), to investigate the possible influence of attention to asynchronous stimulus pairs on the typical shifts observed in psychophysical tasks such as simultaneity judgments (SJs) (e.g. ref. 2). Regarding the use of RTs and psychophysical measures to study the temporal aspects of human perception, it is still not clear whether the 2 approaches tackle the same brain processes or not (13). It has recently been suggested that, although obvious differences arise between temporal order judgments (TOJs) and RTs in response distributions and associated variance, both can be modeled as arising from the same system or mechanism while engaging distinct levels of a decision process (14). This evidence supports the use of RTs in directly assessing the mechanisms of temporal recalibration between sensory signals.

In the present study, RTs after exposure to asynchrony [either audition lagging (VA) or audition leading (AV)] were compared with RTs after exposure to synchronous audiovisual stimulation (see Fig. 1). According to the “temporal shift” hypothesis, which claims that the speed of signal processing is modulated to preserve the perception of simultaneity, one should expect to find very specific modulations of participants' RTs depending on the direction of the exposure to asynchrony (e.g., faster RTs to sound, but slower RTs to lights, would be seen following exposure to VA asynchrony). Surprisingly, the results reported here suggest that only auditory RTs are modulated, during the exposure to audiovisual asynchrony, in the direction predicted by the “temporal shift” hypothesis.

Results

To analyze whether adaptation to a specific audiovisual asynchrony influences participants' RTs to subsequent unimodal (visual or auditory) stimuli, participants' average unimodal (speeded) detection RTs (falling in the 100–450 ms range) after a 5-min exposure to either synchrony (baseline) or asynchrony were calculated. An analysis of variance (ANOVA), including 2 within-participants factors (“sensory modality”: visual versus auditory; and “type of exposure”: synchrony versus asynchrony), and 1 between-participants factor (VA versus AV group), revealed a significant 3-way interaction [F(1, 17) = 5.7, P = 0.029]. This result suggests that the exposure to audiovisual asynchrony (VA or AV), but not to audiovisual synchrony, differentially affected participants' responses to the auditory and visual stimuli. Further analysis showed that the 2 groups (VA and AV) differed in terms of the asynchrony effect (i.e., the result of subtracting the RTs in the synchrony condition from those in the asynchrony condition), only in their speeded responses to sounds [t(17) = −4.2, P = 0.0006], indicating that exposure to audiovisual asynchrony affected the speed with which participants responded to sounds, but not to lights [t(17) = −1.6, P = 0.136].
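
The asynchrony effect and the between-group comparison described above can be sketched as follows. This is a minimal illustration and not the authors' analysis code: the function names and the effect values are hypothetical, the 100–450 ms window is assumed to be inclusive, and a pooled-variance Student's t is assumed because its degrees of freedom (9 + 10 − 2 = 17) match those reported.

```python
from math import sqrt
from statistics import mean, variance

RT_MIN_MS, RT_MAX_MS = 100, 450  # RT window from the text (assumed inclusive)


def trimmed_mean_rt(rts_ms):
    """Mean RT after discarding responses outside the 100-450 ms window."""
    kept = [rt for rt in rts_ms if RT_MIN_MS <= rt <= RT_MAX_MS]
    return mean(kept)


def asynchrony_effect(sync_rts_ms, async_rts_ms):
    """Asynchrony-condition mean RT minus synchrony-condition mean RT."""
    return trimmed_mean_rt(async_rts_ms) - trimmed_mean_rt(sync_rts_ms)


def pooled_t(group_a, group_b):
    """Independent-samples Student's t with pooled variance; returns (t, df)."""
    na, nb = len(group_a), len(group_b)
    df = na + nb - 2
    pooled_var = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / df
    t = (mean(group_a) - mean(group_b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t, df


if __name__ == "__main__":
    # Hypothetical per-participant auditory asynchrony effects (ms), NOT real data.
    va_effects = [-20, -12, -25, -8, -15, -30, -5, -18, -10]   # 9 participants
    av_effects = [10, 5, 18, -2, 12, 20, 8, 15, 3, 9]          # 10 participants
    t, df = pooled_t(va_effects, av_effects)
    print(f"t({df}) = {t:.2f}")
```

A negative t in this arrangement corresponds to the VA group showing a more negative asynchrony effect (faster responses after asynchrony) than the AV group.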

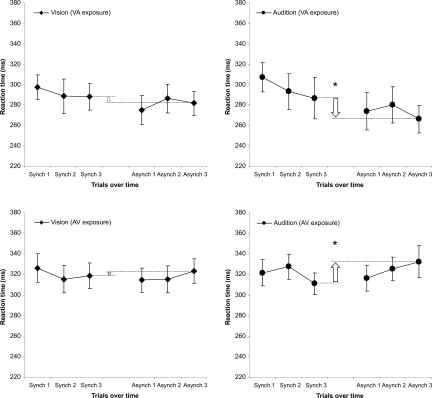

Following the methodology used in previous studies (e.g., 1), “re-exposure” stimuli (that were all synchronous or asynchronous, depending on the block: synchrony or asynchrony, respectively) were alternated with the RT trials in the test phase (i.e., after each synchrony and asynchrony exposure phase; see Materials and Methods section for details). This design allowed us to determine how the participants' RTs changed over time as their exposure to a particular asynchrony increased. According to the Vincentization procedure (15, 16), each participant's RTs in the experiment were grouped into 6 “temporal bins” (or “periods”) of approximately 18 trials each (synch1, synch2, synch3, asynch1, asynch2, and asynch3) and averaged for the VA and AV groups separately (see Fig. 2). As for the previous analyses, all RTs falling outside the 100–450 ms range were excluded from these analyses. An ANOVA including the factors of modality (visual versus auditory), time period (from synch1 bin to asynch3 bin) and group (VA versus AV) revealed a significant main effect of time period [F(5, 85) = 3.6, P = 0.006], and significant interactions between time period and group [F(5, 85) = 3.1, P = 0.01], and between modality, time period, and group [F(5, 85) = 2.6, P = 0.03]. Additional analyses revealed that, while an effect of time period [F(5, 85) = 3.1, P = 0.01], and an interaction between time period and experimental group [F(5, 85) = 4.6, P = 0.001], were found when responses to auditory stimuli were considered, no such effects were observed on visual responses. This shows that participants' responses to auditory, but not to visual, stimuli became faster as a result of exposure to VA asynchrony and slower as a result of exposure to AV asynchrony.
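
The temporal binning described above can be illustrated with a short sketch. It assumes that the 56 RT trials per modality in each block are kept in presentation order, split into 3 consecutive bins of near-equal size (19, 19, and 18 trials), and that out-of-range RTs are dropped within each bin before averaging. The function names and the synthetic RT sequence are hypothetical, and the exact binning rule used in the study is not specified in the text.

```python
from statistics import mean


def temporal_bins(rts_in_order, n_bins=3):
    """Split trials (in presentation order) into n_bins consecutive chunks."""
    base, extra = divmod(len(rts_in_order), n_bins)
    bins, start = [], 0
    for i in range(n_bins):
        size = base + (1 if i < extra else 0)  # earlier bins absorb the remainder
        bins.append(rts_in_order[start:start + size])
        start += size
    return bins


def bin_means(rts_in_order, n_bins=3, lo_ms=100, hi_ms=450):
    """Mean RT per temporal bin after excluding RTs outside [lo_ms, hi_ms]."""
    means = []
    for chunk in temporal_bins(rts_in_order, n_bins):
        kept = [rt for rt in chunk if lo_ms <= rt <= hi_ms]
        means.append(mean(kept) if kept else None)
    return means


if __name__ == "__main__":
    # Synthetic auditory RTs (ms) that drift downward across one block; NOT real data.
    block_rts = [300 - 0.5 * i for i in range(56)]
    print([len(b) for b in temporal_bins(block_rts)])
    print(bin_means(block_rts))
```

Applying `bin_means` to the synchrony block and then to the asynchrony block of one participant and modality yields the six values (synch1 to asynch3) that enter the group averages.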

Fig. 2.

Responses over time. RTs are represented (on the y axis) for each sensory modality (visual and auditory) and experimental group (VA and AV) separately in different graphs. The participants' responses over the course of the experiment were grouped into 6 different temporal bins (3 taken following exposure to synchrony, in block 1, and the remaining 3 taken in block 2, following adaptation to audiovisual, VA, or AV, asynchrony). RTs to auditory stimuli tended to be faster as the exposure (and re-exposure) to an auditory-lagging asynchrony increased and slower as the exposure (and re-exposure) to an auditory-leading asynchrony increased. No such pattern of results was observed for the visual RTs. The arrows show the difference between the last 18 trials in the synchrony (block 1) and asynchrony (block 2) for all of the different conditions. This difference was significant when considering only the RTs to sounds. The error bars indicate the standard error of the mean.

RTs from the last bin (i.e., the last 36 trials; 18 visual and 18 auditory) in both the synchrony and the asynchrony blocks were taken from each modality and group to compare the “final” effect of exposure to synchrony with the “final” effect of exposure to asynchrony (see the arrows in Fig. 2). An ANOVA, including the factors of sensory modality (visual versus auditory), exposure (synchrony versus asynchrony), and group (AV versus VA), revealed a significant 3-way interaction [F(1, 17) = 5.1, P = 0.04], and a 2-way interaction between exposure and group [F(1, 17) = 7.1, P = 0.02], again indicating that exposure to audiovisual asynchrony modulated auditory RTs differently in the AV and VA groups. More detailed analyses confirmed this result. While an interaction between exposure and group was found when considering auditory RTs [F(1, 17) = 8.9, P = 0.008], no trace of any such effect was observed when visual RTs were analyzed [F(1, 17) = 1.3, P = 0.276]. Note that the effect reported fell in the range of the temporal shift effects previously found using other methods (e.g., 1, 8; see Fig. 2). Finally, it should be noted that, when considering only data from the 3 temporal bins in the synchrony block, there was a near-significant trend for RTs to decrease in the AV group [F(2, 18) = 2.8, P = 0.089]. This result reinforces the notion that the responses in the AV group (the one showing, in the asynchrony condition, progressively larger RTs) were being modulated by exposure to asynchrony.

The overall numerical difference between the 2 groups was not significant for RTs to sounds in synchrony [t(17) = 1.4, P = 0.17], nor was it significant for RTs to visual stimuli in synchrony [t(17) = 1.7, P = 0.10]. This, together with the fact that the effects of exposure were found exclusively in responses to sounds, indicates that the effects of exposure to asynchrony reported here were not due simply to a general (and unexpected) difference between groups.

Discussion

The most parsimonious explanation for these results is that during exposure to asynchronous audiovisual stimulation, the speed at which the auditory signal is processed changes to enhance the subjective impression of audiovisual synchrony (see “Observed aftereffect” in Fig. 1). According to our data, this change is sufficient to modify the time at which participants are able to respond to sounds. Recent research using electrophysiological recordings has demonstrated, in line with the results reported here, that some specific event-related potentials (ERPs) involved in the detection of a sound (namely the N1 and P2 components) can be observed earlier depending on the visual information that precedes them (17, 18). Thus, the RT results reported here, when combined with the data from other studies, such as those involving TOJs or SJs (1–8, 14), strongly suggest the existence of a mechanism that can re-adjust the temporal processing of incoming sensory signals that are related (e.g., by means of causality, as in the case of seeing the preparatory lip movement producing /b/ and subsequently hearing the related sound; see 18) or tend to occur close in time (as in our experiment). Importantly, similar temporal effects have also been reported during the perception of our own actions and their consequences (19, 20), perhaps indicating that the human brain tends to bind (in time) the asynchronous events that are, for whatever reason, related (e.g., causality, see 21, or temporal proximity). Speculatively, it could be that a “prior”–specifying that vision provides more reliable information regarding the absolute time of occurrence of a distant event–is applied to all possible cases of audiovisual asynchrony (perhaps even including the case in which audition leads vision), thus inducing a certain modulation of auditory processing.

The absence of any effect in visual RTs following exposure to temporally misaligned audiovisual signals does not necessarily imply that the processing of visual stimuli is never altered. It may, for example, be that the effects of temporal adaptation to asynchrony influence the processing of auditory and visual signals in different ways, and/or perhaps at different stages of neural processing (e.g., early versus late). An alternative explanation for the absence of any effect on visual RTs is that the auditory processing could have been a more suitable candidate for a temporal shift because of its lower reliability (due to the presence of background white noise in the testing chamber) with respect to the visual processing. This hypothesis opens up the possibility of studying, in the future, how the reliability of the stimuli may (or may not) influence the mechanisms underlying temporal recalibration.

We must, however, bear in mind the fact that vision often provides a more precise estimate of when a distant audiovisual event took place (see Introduction). This would also explain why it is precisely the processing of sound that appears to be shifted in time. It is worth highlighting that the effects found are likely to reflect the consequence of the exposure to a very specific asynchrony (e.g., responses to sounds being faster after the exposure to VA), and not only the mere exposure to asynchronous stimuli in general or some other uncontrolled factor (e.g., the effects of practice or fatigue), as could well have happened in the only previous study using RTs in a temporal recalibration paradigm (3)†. RTs, in our experiment, increased or decreased as the exposure to an AV or VA asynchrony, respectively, increased. Whether or not performance will be optimal as a result of this temporal readjustment will depend on each task/situation. Changing the speed at which sounds are processed would lead to a more accurate percept of when a distant multisensory event occurred (i.e., closer to its real time of occurrence), but it would also make simple detection suboptimal under certain conditions (e.g., when the detection of sounds is slowed down as a result of exposure to AV asynchrony; see Fig. 2).

There might also be other ways of changing the speed of information flow during the perception of asynchronous signals. Temporal recalibration occurs at a perceptual level (e.g., see 1, 17, 18), and therefore, it is suggested that adaptation influences unimodal (i.e., auditory) processing at a putatively early stage. However, it could be that the exposure to a specific asynchrony also induces a shift in, for example, the criterion used to decide how much sensory evidence is required to “detect” a sound. Consistent with this latter hypothesis, adaptation to, for example, VA asynchrony would allow the system to accept less evidence in order for a sound to be detected, thus moving the criterion for the detection of a sound (but not the neural transmission speed) to an earlier point in time. Further research will be needed to elucidate whether adaptation to audiovisual asynchrony implies a genuine change in the speed at which the sensory signal is transmitted through the early stages of information processing (in a similar way as in, for example, 18) and/or a more “abstract” mechanism, such as a change in the criterion for detection. However, what should be clear from the results reported here is that temporal recalibration induces temporal shifts that are unimodal in nature and can be measured in a task as simple as speeded detection.
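
The distinction drawn above between a change in transmission speed and a change in detection criterion can be made concrete with a toy model. The sketch below is purely illustrative and is not a model proposed in the text: it assumes a deterministic linear accumulator in which RT is the sum of a transmission delay, the time needed for evidence to reach a criterion, and a motor component, and all parameter values are hypothetical.

```python
def detection_rt_ms(transmission_ms, criterion, rate_per_ms, motor_ms=0.0):
    """Toy RT: arrival delay + time for evidence to reach criterion + motor time."""
    return transmission_ms + criterion / rate_per_ms + motor_ms


# Hypothetical baseline parameters (arbitrary units for evidence).
baseline = detection_rt_ms(transmission_ms=50, criterion=100, rate_per_ms=1.0, motor_ms=100)

# Account 1: adaptation to VA asynchrony shortens the transmission delay by 20 ms.
faster_transmission = detection_rt_ms(transmission_ms=30, criterion=100, rate_per_ms=1.0, motor_ms=100)

# Account 2: transmission is unchanged, but less evidence is required for detection.
lower_criterion = detection_rt_ms(transmission_ms=50, criterion=80, rate_per_ms=1.0, motor_ms=100)

if __name__ == "__main__":
    print(baseline, faster_transmission, lower_criterion)
```

In this toy model the two accounts produce the same change in mean RT, which is why simple detection latencies alone cannot separate them.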

There is an apparent contradiction between our results and the literature on temporal ventriloquism, where the time at which an observer perceives a visual stimulus seems to shift toward the instant at which a sound appears (e.g., 9, 23–25). One possible explanation for this discrepancy may be that temporal ventriloquism does not really induce any temporal shift in the processing of the visual signal. The fact that temporal ventriloquism modulates the amplitude of visual evoked potentials such as P1 and N1, but not their latency, can be seen as providing evidence against the “visual temporal shift” hypothesis (26). Another possibility is that the brain utilizes different strategies to segregate, in time, 2 visual stimuli (as in the majority of studies investigating temporal ventriloquism; e.g., 9, 25) and to bind 2 asynchronous stimuli from different modalities (e.g., vision and audition), as surely happens in the present study. Further research will be needed to gain a fuller understanding of the mechanisms underlying temporal ventriloquism (and adaptation). Both effects illustrate the flexibility of the brain in terms of updating and combining multisensory information. The results reported here clearly demonstrate that changes in simple detection latencies to sound (perhaps reflecting one of the most basic human responses to a stimulus from the outside world) are influenced by a prior exposure to audiovisual asynchrony.

Materials and Methods

Participants.

Nineteen naïve participants (14 female, mean age of 24 years) with normal hearing and normal or corrected-to-normal vision took part in this study. They received a 5-pound (UK Sterling) gift voucher in return for taking part in the experiment, which was conducted in accordance with the Declaration of Helsinki.

Stimuli.

In block 1 (synchronous), the visual stimulus (a 5°-wide green ring) was presented (on a Philips 107-E monitor, 85 Hz) together with a 1,000-Hz beep (delivered at 75.5 dB(A) via 2 loudspeakers, 1 located on either side of the screen) for 24 ms every 1,000 ms. In block 2 (asynchronous) and for the VA group of participants, there was a 224-ms gap between the onset of the visual stimulus and the onset of the auditory stimulus. For the AV group, the onset of the auditory stimulus appeared 84 ms before the onset of the visual stimulus. Asymmetrical asynchronies were chosen on the basis of previous research showing that people tolerate more asynchrony when the visual signal arrives before the auditory signal (26, 27)‡. The same parameters were used for the 8 re-exposure pairs of audiovisual stimuli presented between the sets of 4 RT trials. White noise was presented continuously in the background at 62.5 dB(A) throughout the experimental session.

Procedure.

The experiment consisted of 2 blocks (synchronous and asynchronous)§. In block 1 (synchronous), participants were exposed to brief visual and auditory stimuli (both lasting 24 ms) appearing in synchrony for 5 min. Next, and following the instruction “press,” groups of 4 unimodal visual or auditory stimuli (that were identical to those used in the exposure phase) were presented pseudorandomly with an intertrial interval of 1500–3500 ms. The participants, sitting 50 cm from the screen, had to press the spacebar as rapidly as possible whenever they perceived either stimulus. Eight “re-exposure” pairs of audiovisual stimuli appeared, following the instruction “do not press” between every 4 RT trials until a total of 112 RT trials (56 visual and 56 auditory) had been presented. In block 2 (asynchronous), a brief silent gap was introduced between the visual and auditory stimuli during the initial exposure (and the re-exposure stimuli). The 2 experimental blocks followed exactly the same structure. However, half of the participants (VA group; 9 participants) were exposed to audiovisual asynchrony in the second block where the auditory stimulus was always delayed by 224 ms with respect to the visual stimulus, while the other participants (AV group; 10 participants) were exposed to asynchronous stimuli where the auditory stimulus led by 84 ms (see Fig. 2 for further details). To ensure that participants were attending to the stimuli, they had to detect occasional oddball stimuli (25% of the total) that were different from the “standard” (i.e., a thinner ring together with a 1,300-Hz beep). Detection performance on this task was near perfect. To both familiarize the participants with the task and reduce as much as possible the effects of practice in the RTs, a practice block preceded the main experimental blocks. 
This block consisted of 60 speeded detection trials (30 auditory and 30 visual) whose onset times were jittered, resulting in stimulus presentation at unpredictable times.
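
The structure of one test phase can be sketched as follows. The trial counts, group size, number of re-exposure pairs, asynchronies, and intertrial range come from the text; the function name, the uniform sampling of the intertrial interval, the placement of re-exposure sets only between groups of RT trials, and the omission of oddball stimuli are assumptions made for illustration.

```python
import random

# Auditory onset minus visual onset (ms); positive means the sound lags.
SOA_MS = {"sync": 0, "VA": 224, "AV": -84}


def build_test_phase(condition, n_rt_trials=112, group_size=4, n_reexposure=8, seed=0):
    """Return an ordered list of events for one test phase.

    Events are ("reexposure", soa_ms) or ("rt_trial", modality, iti_ms).
    """
    rng = random.Random(seed)
    modalities = ["visual", "auditory"] * (n_rt_trials // 2)  # 56 of each
    rng.shuffle(modalities)                                   # pseudorandom order
    events = []
    for start in range(0, n_rt_trials, group_size):
        if start > 0:
            # "Do not press": top-up pairs between successive groups of RT trials.
            events += [("reexposure", SOA_MS[condition])] * n_reexposure
        for modality in modalities[start:start + group_size]:
            iti_ms = round(rng.uniform(1500, 3500))  # assumed uniform distribution
            events.append(("rt_trial", modality, iti_ms))
    return events


if __name__ == "__main__":
    phase = build_test_phase("VA")
    for event in phase[:14]:
        print(event)
```

With these settings each test phase contains 28 groups of 4 RT trials separated by 27 sets of 8 re-exposure pairs.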

Acknowledgments.

We thank Dr. Daniel Linares for his help in the earlier stages of the study presented here. J.N. was supported by a Beatriu de Pinós program (Departament d'Innovació, Universitats i Empresa, Catalonia, Spain), and a Ramón y Cajal program (Ministerio de Ciencia e Innovación, Spain). This research was partially supported by Spanish Government Grant RYC-2008-03672 (to J.N.). These experiments were conducted at the Crossmodal Research Laboratory, Oxford University.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

*An “aftereffect” is taken here to refer to a perceptually useful readjustment of the processing of incoming stimuli that can be observed after exposure to the stimuli that gave rise to that readjustment.

†Harrar and Harris's study (3) represents an interesting attempt to see the effects of perceiving audiovisual asynchrony on the detection of visual or auditory stimuli. However, while the methodology used by these authors was designed to investigate any possible effects of attention, it did not allow them to see specific modulations of the RTs to visual and auditory stimuli due to temporal recalibration. In contrast with this previous study, there were 2 different kinds of exposure in our experiment: exposure to synchrony and exposure to asynchrony (and not just to asynchrony, see ref. 3). We also used 2 different kinds of asynchrony (VA and AV) instead of just 1. Moreover, fewer RT trials (4 against 12) were presented between re-exposure top-ups (see Methods), thus reducing the possible impact of practice/fatigue or temporal readjustment to baseline. This helps us to be confident that our results were due to the prior exposure to asynchrony, and not to any other possible factor.

‡There is extensive evidence (e.g., see refs. 29 and 30) suggesting that humans tolerate more asynchrony when vision leads than when vision lags. This is probably due to the fact that in real situations involving audiovisual asynchronies (note that we are not talking about small differences in neural transduction), visual signals tend to appear before auditory signals (e.g., as when a football player kicks a ball 100 m from the perceiver).

§As it is still not clear how long the temporal adaptation “aftereffect” lasts, we decided to use a blocked experimental design in which the synchrony condition (where no adaptation was expected to occur) always preceded the asynchrony condition. In this way, we reduced the chances of obtaining any undesirable carry-over effects.

References

- 1. Fujisaki W, Shimojo S, Kashino M, Nishida S. Recalibration of audiovisual simultaneity. Nat Neurosci. 2004;7:773–778. doi: 10.1038/nn1268.

- 2. Hanson JVM, Heron J, Whitaker D. Recalibration of perceived time across sensory modalities. Exp Brain Res. 2008;185:347–352. doi: 10.1007/s00221-008-1282-3.

- 3. Harrar V, Harris LR. The effect of exposure to asynchronous audio, visual, and tactile stimulus combinations on the perception of simultaneity. Exp Brain Res. 2008;186:517–524. doi: 10.1007/s00221-007-1253-0.

- 4. Navarra J, Soto-Faraco S, Spence C. Adaptation to audiotactile asynchrony. Neurosci Lett. 2007;413:72–76. doi: 10.1016/j.neulet.2006.11.027.

- 5. Navarra J, et al. Exposure to asynchronous audiovisual speech extends the temporal window for audiovisual integration. Cogn Brain Res. 2005;25:499–507. doi: 10.1016/j.cogbrainres.2005.07.009.

- 6. Vatakis A, Navarra J, Soto-Faraco S, Spence C. Temporal recalibration during asynchronous audiovisual speech perception. Exp Brain Res. 2007;181:173–181. doi: 10.1007/s00221-007-0918-z.

- 7. Vatakis A, Navarra J, Soto-Faraco S, Spence C. Audiovisual temporal adaptation of speech: Temporal order versus simultaneity judgments. Exp Brain Res. 2008;185:521–529. doi: 10.1007/s00221-007-1168-9.

- 8. Vroomen J, Keetels M, de Gelder B, Bertelson P. Recalibration of temporal order perception by exposure to audio-visual asynchrony. Cogn Brain Res. 2004;22:32–35. doi: 10.1016/j.cogbrainres.2004.07.003.

- 9. Morein-Zamir S, Soto-Faraco S, Kingstone A. Auditory capture of vision: Examining temporal ventriloquism. Cogn Brain Res. 2003;17:154–163. doi: 10.1016/s0926-6410(03)00089-2.

- 10. Spence C, Squire SB. Multisensory integration: Maintaining the perception of synchrony. Curr Biol. 2003;13:R519–R521. doi: 10.1016/s0960-9822(03)00445-7.

- 11. Pöppel E. A hierarchical model of temporal perception. Trends in Cogn Sci. 1997;1:56–61. doi: 10.1016/S1364-6613(97)01008-5.

- 12. Heron J, Whitaker D, McGraw PV, Horoshenkov KV. Adaptation minimizes distance-related audiovisual delays. J Vision. 2007;7:1–8. doi: 10.1167/7.13.5.

- 13. Jaśkowski P. Simple reaction time and perception of temporal order: Dissociations and hypotheses. Perc Motor Skills. 1996;82:707–730. doi: 10.2466/pms.1996.82.3.707.

- 14. Cardoso-Leite P, Gorea A, Mamassian P. Temporal order judgment and simple reaction times: Evidence for a common processing system. J Vision. 2007;7:1–14. doi: 10.1167/7.6.11.

- 15. Craighero L, Fadiga L, Umiltà C, Rizzolatti G. Action for perception: A motor-visual attentional effect. J Exp Psychol Hum Percept Perform. 1999;25:1673–1692. doi: 10.1037//0096-1523.25.6.1673.

- 16. Ratcliff R. Group reaction time distributions and an analysis of distribution statistics. Psych Bull. 1979;86:446–461.

- 17. Stekelenburg JJ, Vroomen J. Neural correlates of multisensory integration of ecologically valid audiovisual events. J Cogn Neurosci. 2007;19:1964–1973. doi: 10.1162/jocn.2007.19.12.1964.

- 18. van Wassenhove V, Grant KW, Poeppel D. Visual speech speeds up the neural processing of auditory speech. Proc Natl Acad Sci USA. 2005;102:1181–1186. doi: 10.1073/pnas.0408949102.

- 19. Stetson C, Cui X, Montague PR, Eagleman D-M. Motor-sensory recalibration leads to an illusory reversal of action and sensation. Neuron. 2006;51:651–659. doi: 10.1016/j.neuron.2006.08.006.

- 20. Engbert K, Wohlschläger A, Thomas R, Haggard P. Agency, subjective time, and other minds. J Exp Psychol Hum Percept Perform. 2007;33:1261–1268. doi: 10.1037/0096-1523.33.6.1261.

- 21. Winter R, Harrar V, Gozdzik M, Harris LR. The relative timing of active and passive touch. Brain Res. 2008;1242:54–58. doi: 10.1016/j.brainres.2008.06.090.

- 22. Fendrich R, Corballis PM. The temporal cross-capture of audition and vision. Percept Psychophys. 2001;63:719–725. doi: 10.3758/bf03194432.

- 23. Vroomen J, de Gelder B. Temporal ventriloquism: Sound modulates the flash-lag effect. J Exp Psychol Hum Percept Perform. 2004;30:513–518. doi: 10.1037/0096-1523.30.3.513.

- 24. Bertelson P, Aschersleben G. Temporal ventriloquism: Crossmodal interaction on the time dimension. 1. Evidence from auditory-visual temporal order judgment. Int J Psychophysiol. 2003;50:147–155. doi: 10.1016/s0167-8760(03)00130-2.

- 25. Vroomen J, Keetels M. Sounds change four-dot masking. Acta Psychologica. 2009;130:58–63. doi: 10.1016/j.actpsy.2008.10.001.

- 26. Stekelenburg JJ, Vroomen J. An event-related potential investigation of the time-course of temporal ventriloquism. NeuroReport. 2005;16:641–644. doi: 10.1097/00001756-200504250-00025.

- 27. Lewkowicz DJ. Perception of auditory-visual temporal synchrony in human infants. J Exp Psychol Hum Percept Perform. 1996;22:1094–1106. doi: 10.1037//0096-1523.22.5.1094.

- 28. McGrath M, Summerfield Q. Intermodal timing relations and audio-visual speech recognition by normal-hearing adults. J Acoust Soc Am. 1985;77:678–685. doi: 10.1121/1.392336.

- 29. Dixon NF, Spitz L. The detection of auditory visual desynchrony. Perception. 1980;9:719–721. doi: 10.1068/p090719.

- 30. van Wassenhove V, Grant KW, Poeppel D. Temporal window of integration in auditory-visual speech perception. Neuropsychologia. 2007;45:598–607. doi: 10.1016/j.neuropsychologia.2006.01.001.