Abstract

Three adolescents with traumatic brain injury performed a physical therapy task in the absence of programmed consequences or duration requirements. Next, the experimenter gave the participants the options of a smaller immediate reinforcer with no response requirement or a larger delayed reinforcer with a response requirement. Self-control training exposed participants to a procedure during which they chose between a smaller immediate reinforcer and a progressively increasing delayed reinforcer whose values varied and were determined by a die roll. The participants chose whether they or the experimenter rolled the die. All participants initially demonstrated low baseline durations of the physical therapy task, chose the smaller immediate reinforcer during the choice baseline, and changed their preference to the larger delayed reinforcer during self-control training.

Keywords: brain injury, choice, delayed reinforcement, impulsivity, self-control

An increasing number of published reports have demonstrated the assessment or teaching of more optimal choice making among persons with disabilities (e.g., Dixon & Falcomata, 2004; Falcomata & Dixon, 2004; Neef & Lutz, 2001; Neef et al., 2005). Such choice making, often termed self-control, is defined as selecting a larger or more advantageous reinforcer that is delivered after a delay over a smaller reinforcer that is available immediately. The techniques used in these clinical interventions to promote more advantageous responding were developed initially in the nonhuman laboratory. For example, Grosch and Neuringer (1981) demonstrated that pigeons more frequently responded to the discriminative stimulus associated with a smaller more immediate reinforcer when given the choice between smaller more immediate and larger delayed reinforcement. However, after the introduction of a disc at the back of the experimental chamber that had no relation to the delivery of reinforcement, the pigeons shifted response allocation to the larger delayed reinforcer as the delay to reinforcement increased. Further, during the delays to reinforcement, the pigeons pecked at this newly introduced disc. The intervening activity of noncontingent disc pecks appeared to enhance tolerance to delayed reinforcement delivery.

Dixon, Horner, and Guercio (2003) extended these findings to participants with traumatic brain injury. Dixon et al. initially presented the participant with discriminative stimuli that were paired with a smaller more immediate and a larger delayed reinforcer (DVD viewing). Following a series of forced- and free-choice trials in this concurrent-schedule arrangement, the participant demonstrated a preference for smaller more immediate reinforcement. However, during a series of training trials that included a concurrent activity (the experimenter instructed the participant to hold his hand open) during the increasing delays to larger reinforcement, the participant altered this preference and selected larger delayed reinforcement more often.

Ferster (1953) observed that pigeons chose the response option associated with a smaller more immediate reinforcer over a larger delayed reinforcer; he then removed the delay associated with the larger reinforcer and gradually reintroduced it over successive trials. Fading the duration of the delay associated with the larger reinforcer appeared to alter preference for the smaller reinforcer. Mazur and Logue (1978) used a similar procedure, except that the delays were initially equal for the smaller and larger reinforcers and decreasing delays were introduced with the smaller reinforcer. Pigeons exposed to these contingencies selected the delayed reinforcer more often than did pigeons in a control group in which choices were always between smaller immediate and larger delayed reinforcement.

Variations of this delay-fading technique have been used with children with autism (Dixon & Cummings, 2001), children with attention deficit hyperactivity disorder (ADHD; Binder, Dixon, & Ghezzi, 2000; Neef, Bicard, & Endo, 2001; Schweitzer & Sulzer-Azaroff, 1988), children with mental retardation (Ragotzy, Blakely, & Poling, 1988), and persons with mental illness (Dixon & Holcoumb, 2000). In the studies by Binder et al., Dixon and Cummings, and Dixon and Holcoumb, the authors combined the delay-fading procedure of Ferster (1953) with the concurrent activity of Grosch and Neuringer (1981). During the delay to reinforcement, the participant could engage in a clinically relevant task. In summary, it appears that altering response allocations to concurrently available reinforcers can be accomplished by providing a concurrent activity during the delay, by fading the delay associated with the larger reinforcer, or with a combination of the two techniques.

One additional manipulation that may be used to promote self-control is to capitalize on humans' preference for greater control over choice-making opportunities. For example, Fisher, Thompson, Piazza, Crosland, and Gotjen (1997) gave 3 children the option of choosing a reinforcer or having the experimenter select the reinforcer according to a schedule yoked to the child's choices. All children preferred the choice condition. However, once contingencies were altered such that choice led only to less preferred reinforcers while the highly preferred options were still delivered by the experimenter, all children tended to select the option for the experimenter to deliver the better consequences.

The purpose of the present study was to replicate and extend research on the effects of interventions that involve self-control and choice on task performance. Specifically, we examined the effects of participant choice on the completion of task requirements by adolescents with acquired brain injury. First, we assessed the extent to which participants selected smaller more immediate reinforcement over larger more delayed reinforcement in a concurrent-operants procedure. Next, we presented the participants with choices between small immediate and larger delayed reinforcement delivered contingent on engagement in a concurrent activity at higher than baseline rates. Finally, we added a choice that had no contingent relation to the reinforcement, consisting of a die roll to determine delay value.

METHOD

Participants

Trent was an 18-year-old young man who had sustained a traumatic brain injury as a result of being struck by a car while riding his tricycle approximately 15 years prior to the onset of the study. According to his mother's report, he lost consciousness after reaching the hospital. There was no information available on the length of time he remained unconscious or on the site in the brain damaged by his injury. He took Prozac (30 mg), Elavil (150 mg), and Abilify (15 mg) once daily throughout the study. In the 3 months prior to the start of the study, Trent punched out a window in the residence, engaged in two instances of physical aggression, and on separate occasions attempted to harm two community members.

Jack was an 18-year-old young man who had sustained a traumatic brain injury as a result of a 9-m fall from a roof that had occurred approximately 15 years prior to the onset of the study. There was no information regarding the site in the brain damaged by the injury. He suffered an additional anoxic injury less than 1 year later as a result of being accidentally hanged by his sister while they were playing a game. It was reported that he was blue when found; however, the time of loss of airflow to the brain was unknown. He also had a diagnosis of ADHD. He took Concerta (54 mg) once daily throughout the study. He had a history of impulsive behavior, and staff reported that he was extremely resistant to in-the-moment feedback during challenging situations. In the 3 months prior to the study, he engaged in repeated verbal outbursts, punched out a window in the residence, and ran away from the facility.

Tori was a 16-year-old girl who had sustained a traumatic brain injury as a result of being struck by a motor vehicle approximately 9 years before the start of the study. Tori's family reported that she remained comatose for an unknown length of time following the accident. She was not on any medication during the study. She had a history of impulsive behavior, which included bringing a weapon to school. In the 3 months prior to the study, she repeatedly engaged in stealing and violent verbal outbursts.

All 3 participants could speak in full sentences and could understand verbal and written directions given by caregivers.

Stimulus Preference Assessment

Prior to the onset of the study, the experimenter interviewed the participants and full-time direct-care staff members who frequently worked with them to generate a list of 5 to 10 reinforcing edible items. Identified items were used in a stimulus preference assessment without replacement (DeLeon & Iwata, 1996). The items determined to be most preferred were candy for Trent and soda for Jack and Tori. The possibility of reinforcer satiation was addressed by presenting participants with very small quantities and making these items available only during sessions with the experimenter.

Materials and Setting

All sessions were conducted in either the conference room or the dining area of the facility in which the participants resided. Stimuli included 100 pennies, 100 nickels, 100 dimes, one large bin, three smaller bins, a six-sided die, and six index cards (6.4 cm by 7.6 cm). Each small bin was labeled with a dollar amount corresponding to a coin. Two of the index cards contained a colored symbol (dark gray circle and light gray circle). The remaining four cards each contained a written name to correspond with the names of the 3 participants and the name of the primary experimenter.

Experimental Design

A multiple baseline design across participants was combined with reversals (ABCAB) within participants. First, a natural baseline was conducted to evaluate the duration of responding by the participant in the absence of programmed reinforcement (A). Next, a choice baseline was conducted to serve as a control condition by which to evaluate changes in response preference following treatment (B). Once the participant demonstrated a clear preference for one response option during three of four consecutive sessions, a self-control condition was implemented (C). The natural baseline condition (A) was then reintroduced and was followed by the choice baseline (B) to determine whether participants switched their preferences after having experienced self-control training.

Procedure

After discussion with an occupational therapist at the facility, it was concluded that each participant could benefit from additional fine-motor training in the form of sorting coins into bins. Experimenters conducted two to five trials per session, with a minimum of 90 min between sessions. Each trial consisted of the presentation of condition-specific instructions (detailed below), the various discriminative stimuli associated with the choice options, associated delays (if necessary), and delivery of the programmed reinforcer. Each trial was followed by an intertrial interval (ITI), defined as the time between consumption of the reinforcer and the beginning of the next trial. When the experimenter delivered the large delayed reinforcer, the ITI was 5 s. When the experimenter delivered the small immediate reinforcer, the ITI was 155 s, calculated by adding 150 s (the approximate time needed to consume the larger reinforcer) to the 5 s. Experimenters delivered reinforcers on a 1∶3 magnitude ratio. For example, if the smaller reinforcer consisted of one piece of candy, the larger reinforcer consisted of three pieces. Figure 1 displays response options and resulting consequences that occurred in each condition.
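The ITI and magnitude rules described above can be sketched in a few lines of Python. This is an illustration only and not software used in the study; the function names, constant names, and choice labels are assumptions introduced here, and the numeric values are those stated in the text.

```python
# Illustrative sketch only; all names are hypothetical.
BASE_ITI_S = 5            # ITI after the large delayed reinforcer
CONSUMPTION_TIME_S = 150  # approximate time to consume the larger reinforcer
MAGNITUDE_RATIO = 3       # 1:3 ratio of smaller to larger reinforcer


def intertrial_interval(choice: str) -> int:
    """Return the ITI in seconds for the reinforcer delivered on a trial."""
    if choice == "large_delayed":
        return BASE_ITI_S
    if choice == "small_immediate":
        # The consumption time for the larger reinforcer is added to the base ITI.
        return BASE_ITI_S + CONSUMPTION_TIME_S
    raise ValueError(f"unknown choice: {choice!r}")


def large_reinforcer_amount(small_amount: int) -> int:
    """Return the size of the larger reinforcer for a given smaller one."""
    return small_amount * MAGNITUDE_RATIO
```

For example, `intertrial_interval("small_immediate")` evaluates to 155 and `large_reinforcer_amount(1)` evaluates to 3, matching the values given in the paragraph above.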

Figure 1. A graphical presentation of the methods and procedure of the present study.

Natural baseline

At the start of the session, the experimenter placed a bin containing nickels, dimes, and pennies in front of the participant, along with three smaller bins, each labeled to correspond with one of the coins in the larger bin. After informing the participant that the coins needed to be sorted into the smaller bins, the experimenter modeled taking coins from the larger bin and placing them one at a time into the proper smaller bins. The experimenter then instructed the participant to engage in the task by saying, “Sort these coins for as long as you can, let me know when you're finished by saying ‘I'm done.’” The experimenter did not give any other instructions or prompts. Trials ended when one of the following two conditions was met: (a) The participant did not initiate the task within 30 s of the instruction to sort the coins, or (b) the participant had stopped engaging in the task for 5 s. No consequences were delivered for any behavior.

Choice baseline

A two-choice concurrent schedule was in effect. Sessions began with the experimenter showing a small and large portion of the reinforcer to the participant. The experimenter placed the large bin, containing the coin mixture, and the three smaller bins, labeled to correspond with a particular coin from the larger bin, in front of the participant. The experimenter held up two index cards, one containing a dark gray circle and the other containing a light gray circle (both circles were the same size and placed in the same position on their respective index cards). The experimenter randomly assigned cards to each set of contingencies during each session. The experimenter gave the participant the following instructions:

If you would like to receive the small amount of [reinforcer] for doing nothing, point to the dark circle. If you would like to sort the coins for Y amount of time [10 times the mean level of baseline responding] to receive the large amount of [reinforcer], point to the light circle.

The criterion for determining the amount of sorting time required to obtain the larger reinforcer was consistent with that used in previous self-control studies (Dixon & Falcomata, 2004; Falcomata & Dixon, 2004) and was 1,220 s for Trent, 2,820 s for Jack, and 460 s for Tori. If the participant selected the stimulus paired with the larger reinforcer, the experimenter said “please begin.” Trials for both reinforcement conditions ended under the same conditions described for the natural baseline. No reinforcement was delivered unless the participant engaged in the target behavior for the specified amount of time. If the participant selected the smaller reinforcer, it was delivered immediately. The experimenter initiated the next trial after the prescribed ITI.
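The criterion arithmetic can be checked with a short Python sketch. The baseline means are the natural-baseline values reported in the Results (122 s, 282 s, and 46 s); the variable and function names are hypothetical and serve only to illustrate the calculation.

```python
# Illustrative check of the choice-baseline criterion; names are hypothetical.
BASELINE_MEAN_S = {"Trent": 122, "Jack": 282, "Tori": 46}  # natural-baseline means (s)
CRITERION_MULTIPLIER = 10  # criterion = 10 times the mean level of baseline responding


def choice_baseline_criterion(baseline_mean_s: int) -> int:
    """Return the sorting duration (s) required to earn the larger reinforcer."""
    return baseline_mean_s * CRITERION_MULTIPLIER


criteria = {name: choice_baseline_criterion(mean) for name, mean in BASELINE_MEAN_S.items()}
print(criteria)  # {'Trent': 1220, 'Jack': 2820, 'Tori': 460}
```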

Self-control training

A three-choice fixed-duration progressive-duration progressive-duration schedule of reinforcement was in effect. Sessions began with the experimenter displaying one small portion and one large portion of the reinforcing item within view of the participant. The experimenter placed the large and three smaller bins of coins in front of the participant. The experimenter gave the participant the following instructions:

If you would like to receive the small amount of [reinforcer] for doing nothing, point to the dark circle. If you would like to receive the larger amount of [reinforcer], you have to make another choice. Roll the die and sort the coins for the number of seconds that appear on the die, or let me roll the die and sort the coins for the number of seconds that appear on the die. Again, if you want the larger [reinforcer] you have to pick between me rolling the die and you rolling the die to see how long you will sort the coins. If you want to roll the die, then pick the card that says [participant's name], and if you want me to roll the die, pick the card that says “Pam.”

The concurrent three-option choice procedure remained in effect throughout the duration of the self-control training condition. However, when the participant selected the larger reinforcer option (either rolling the die him- or herself or the experimenter rolling the die) on two of three successive choices, the duration required to engage in the sorting task was increased by an amount equal to the participant's mean baseline responding. The final criterion for the duration required to engage in the sorting task to access reinforcement was equal to the duration of sorting required to earn the larger reinforcer in the choice condition. This criterion was the same as that used in previous studies (Dixon et al., 2003; Falcomata & Dixon, 2004). The instruction was then modified and the following instruction was delivered to the participant:

Roll the die and sort the coins for the amount of seconds that appears on the die [plus the mean of baseline responding], or let me roll the die and sort the coins for the amount of time that appears on the die [plus the mean of baseline responding].

Two of three successive choices were required to increase the delay associated with that specific choice option. In the event that a participant had chosen the small immediate reinforcer on eight of nine consecutive trials, this option would have been removed and reintroduced following demonstration of a preference for one of the larger reinforcement options.

As repeated choices were made for either die-rolling option (self-roll or experimenter roll), the number produced by the die roll was multiplied by successively larger integers, with the multiplier increasing following a mean of four trials, to increase the amount of delay and coin sorting that was required to gain access to the larger reinforcer. For example, after Trent completed four to five trials in which he chose to roll the die himself, emitted the target response requirement, and consumed the larger reinforcer, subsequent die rolls for the self-roll option were multiplied by two, then three, and so on. This multiplication increased the amount of coin sorting that he had to complete when he rolled the die. However, the multiplier was implemented across experimenter and participant die-roll options independently. As a result, if a participant chose one option far more often than the other, it became more advantageous to select the less frequently chosen option, because its multiplier would be considerably lower. For example, if the participant selected the self-roll option for multiple consecutive trials, then the multiplier for the self-roll option increased while the multiplier for the experimenter-roll option remained the same. In this case, it would be beneficial for the participant to switch to the experimenter-roll option at that point.
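The independent multipliers described above can be illustrated with a minimal Python sketch. It is not the procedure as implemented by the experimenters: the class and method names are hypothetical, the multiplier is assumed to rise after exactly four completed trials (the text reports a mean of four), and the additive baseline-mean increment described earlier is omitted for brevity.

```python
import random
from dataclasses import dataclass, field


def roll_die() -> int:
    """Simulate one roll of a six-sided die."""
    return random.randint(1, 6)


@dataclass
class DieRollSchedule:
    # Assumption: fixed step of 4 trials; the source reports "a mean of four trials".
    trials_per_step: int = 4
    multipliers: dict = field(
        default_factory=lambda: {"self_roll": 1, "experimenter_roll": 1}
    )
    completed: dict = field(
        default_factory=lambda: {"self_roll": 0, "experimenter_roll": 0}
    )

    def required_duration(self, option: str, die_value: int) -> int:
        """Sorting time (s) required: die value times that option's multiplier."""
        return die_value * self.multipliers[option]

    def record_completed_trial(self, option: str) -> None:
        """Count a completed trial and raise only the chosen option's multiplier."""
        self.completed[option] += 1
        if self.completed[option] % self.trials_per_step == 0:
            self.multipliers[option] += 1


schedule = DieRollSchedule()
for _ in range(4):
    schedule.record_completed_trial("self_roll")
# The same die value now costs twice as much sorting under self-roll.
print(schedule.required_duration("self_roll", 6))          # 12
print(schedule.required_duration("experimenter_roll", 6))  # 6
```

Because each option keeps its own counter, repeated selection of one option raises only that option's requirement, which is why switching to the less frequently chosen option becomes advantageous.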

Trials ended when one of the conditions for termination occurred, as previously described. The self-control training condition continued until the participant demonstrated a preference for the larger reinforcer (regardless of self-roll or experimenter roll) that was of equal delay length to that used in the choice baseline condition.

Interobserver Agreement

A second observer independently collected data on choice selection and duration of engagement in the coin-sorting task during at least 30% of sessions. Interobserver agreement for choice selection was calculated by dividing agreements by agreements plus disagreements and converting the ratio to a percentage. Agreement was 100% for all 3 participants. Agreement for duration of engagement in the coin-sorting task was calculated by dividing the smaller duration observed by the larger duration observed and converting the ratio to a percentage. Agreement was 99.9% or higher for all 3 participants.
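Both agreement formulas can be written as short Python functions. This is a sketch of the calculations as described in the paragraph above; the function names and the example data are hypothetical and are not the study's records.

```python
# Illustrative sketch of the two agreement calculations; names are hypothetical.

def choice_agreement(primary: list, secondary: list) -> float:
    """Agreements / (agreements + disagreements), expressed as a percentage."""
    if not primary or len(primary) != len(secondary):
        raise ValueError("observer records must be non-empty and of equal length")
    agreements = sum(a == b for a, b in zip(primary, secondary))
    return 100.0 * agreements / len(primary)


def duration_agreement(duration_a_s: float, duration_b_s: float) -> float:
    """Smaller observed duration / larger observed duration, as a percentage."""
    larger = max(duration_a_s, duration_b_s)
    if larger == 0:
        return 100.0  # both observers recorded no engagement
    return 100.0 * min(duration_a_s, duration_b_s) / larger


# Made-up example records for two observers on three trials.
observer_1 = ["self_roll", "small_immediate", "self_roll"]
observer_2 = ["self_roll", "small_immediate", "self_roll"]
print(choice_agreement(observer_1, observer_2))  # 100.0
```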

RESULTS

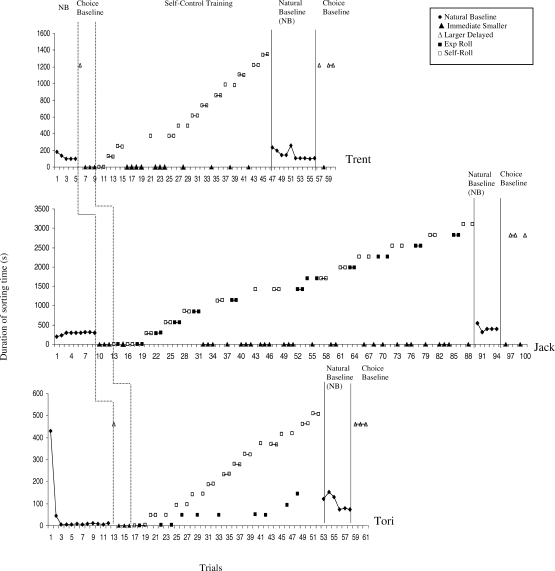

During all sessions, the participants immediately began to engage in the task once the experimenter gave the instructions. The participants engaged in the target behavior for the required amount of time during all experimental sessions, with the exception of one trial conducted with Jack at the onset of the choice baseline. Figure 2 shows performance during the natural baseline, choice baseline, and self-control training conditions.

Figure 2. Data obtained during baseline, choice baseline, and self-control training for each of the 3 participants.

During the natural baseline condition, levels of engagement in the coin-sorting task were low for all participants (Ms = 122 s, 282 s, and 46 s for Trent, Jack, and Tori, respectively). These means were then multiplied by 10 to determine the amount of sorting required for each participant to obtain the larger reinforcer in the choice baseline condition. During the choice baseline condition, participants demonstrated a preference for the smaller immediate reinforcer by repeatedly selecting this response option either exclusively (Jack) or more often (Trent and Tori).

During the self-control training condition, all 3 participants selected the self-roll option more often than the experimenter roll option. This preference was shown throughout the study when the values of the two response options associated with the larger reinforcer were equal. Interestingly, Trent's and Tori's preferences for the self-roll option persisted even when the response requirement associated with that option exceeded that of the experimenter-roll option.

Trent's responding during the self-control training condition indicated a pronounced preference for choice. After 36 trials, he demonstrated criterion-level performance on the sorting task (1,342 s), having chosen the self-roll option on 69.5% of trials and the small immediate reinforcer option on 30.5% of trials. At one point, he almost met the predetermined criterion for eliminating the small immediately available reinforcer from the choice array. However, after choosing the small immediately available reinforcer on seven of eight trials, he then began to choose the self-roll option consistently and continued to do so from that point on, even when the response requirement exceeded that of the larger delayed reinforcer. Tori chose the self-roll option on 72.5% of trials. During the remainder of the trials, she selected the experimenter-roll option. After 36 trials, she had chosen the self-roll option on 72.2% of trials and the experimenter-roll option on 27.8% of trials; she demonstrated criterion-level performance on the sorting task (506 s) after 53 trials.

Jack spent the longest amount of time of all 3 participants in the self-control training condition. He chose the self-roll option when the response requirement associated with it was equal to that of the experimenter-roll option and chose the experimenter-roll option when the requirement increased for the self-roll option. On Trials 54 through 56, he chose the experimenter-roll option on two of three consecutive trials despite the fact that the self-roll option had an equivalent response requirement. After 77 trials, he demonstrated criterion-level performance on the sorting task (3,102 s). He chose the self-roll option on 33.8% of the trials, the experimenter-roll option on 29.9% of the trials, and the small immediate reinforcement option on 36.4% of the trials.

When the natural baseline condition was reimplemented, the mean duration of responding of all 3 participants decreased (150 s for Trent, 410 s for Jack, and 105 s for Tori). Nevertheless, mean engagement times were higher than those originally observed in the first natural baseline condition. After return to the choice baseline contingencies, all 3 participants reversed their selections to the option that required them to engage in the task for a duration equivalent to 10 times their natural baseline responding. Trent and Jack chose the larger delayed reinforcement option on three of four trials, and Tori chose the larger delayed reinforcement option on three consecutive trials.

DISCUSSION

The present study examined the preference between a small immediate reinforcer and larger delayed reinforcer in persons with brain injury. The participants spent little time engaged in the task in the absence of programmed reinforcers. When they were then asked to select between a small amount of a preferred item for no engagement in the task or a large amount of that same preferred item for a substantially larger duration of engagement than seen in baseline, all participants chose the impulsive response option and selected the smaller reinforcer. Following completion of self-control training this preference altered, and all participants chose the larger delayed reinforcer option. Further, this study assessed participants' preference to roll the die themselves (or have the experimenter roll the die) to determine the length of task engagement.

The results replicate and extend research on self-control in several ways. First, the results support previous research showing that gradual fading of delay can alter preferences of persons with disabilities (e.g., Neef et al., 2001; Ragotzy et al., 1988). The current procedure involved fading time to reinforcement delivery along with the concurrent work requirement. These elements have been manipulated individually in prior studies (Dixon et al., 2003; Dixon & Falcomata, 2004), and similar results have been obtained.

Second, this study suggests the potential of choice to enhance the development of self-control. Future studies might further examine the utility of incorporating such choice opportunities into therapeutic tasks presented to individuals who seek clinical services. Altering various dimensions of available tasks (e.g., low effort or higher effort; preferred or nonpreferred) and accompanying reinforcers for persons with disabilities may be tied directly to client programming or educational goals. Choice components could be added to traditional interventions to enhance the acceptability of such interventions to the client. Future studies could examine the effects of choice on measures other than duration, such as number of tasks completed, therapeutic goals attempted in a given session, or items completed on an academic worksheet.

The present study differed from prior research by Dixon and colleagues (Binder et al., 2000; Dixon & Cummings, 2001; Dixon & Holcoumb, 2000) by using a variable rather than progressive delay to larger reinforcer delivery. The roll of the die resulted in various amounts of task engagement required. It may be useful for future studies to directly compare the effects of variable and incremental delay-fading procedures.

The present findings also raise some interesting conceptual issues that are worthy of further exploration. Through repeated pairings with a larger reinforcer, the task of sorting coins may have acquired some of the functional properties of the reinforcer itself. Such conceptualizations have been previously noted by Dixon et al. (2003), Stromer, McComas, and Rehfeldt (2000), Reeve, Reeve, Townsend, and Poulson (2007), and Vollmer, Borrero, Lalli, and Daniel (1999). Further, choice may have reinforcing properties that, when coupled with the larger reinforcer, resulted in a compound reinforcer that was of greater value than the larger reinforcer alone. In a sense, the choice was a reinforcer as well. Perhaps choice was a greater source of reinforcement for Trent and Tori than it was for Jack, thereby accounting for Jack's different preferences when there were unequal response requirements for the larger reinforcer choice options. He readily relinquished the opportunity to choose the outcome of the die roll when the response requirement attached to this choice increased. However, throughout the phase, he demonstrated a preference for the larger delayed reinforcer rather than the smaller immediately available one. Motivating operations may have affected his responding, and subsequent studies should attempt to control for such variables. Larger reinforcement comes at the cost of time in a nonpreferred activity, and smaller reinforcers are associated with loss of opportunity for larger reinforcement.

Our results are similar to those of Fisher et al. (1997), who showed that individuals with developmental disabilities preferred a choice condition, in which the participant could choose the reinforcer, over a no-choice condition, in which the experimenter-delivered reinforcers were identical to those selected by the participants in the choice condition (the choices were yoked in choice and no-choice conditions). The yoked no-choice condition controlled for the effects of preference (i.e., the possibility that the effects of choice were a result of the participant selecting the most preferred reinforcer rather than the effects of choice itself) that were an inherent component of the choice condition. In the present investigation, participants had access to identical reinforcement under both die-roll options, yet 2 of the 3 participants continued to choose the self-roll option even when its response requirement exceeded that of the experimenter-roll option.

It is possible that participants preferred rolling the die because that activity itself was reinforcing or was preferable to waiting for the experimenter to roll. Subsequent research should examine this possibility by controlling for activity engagement during delays. Whatever the mechanism, it appears that choice is an effective means of promoting increased task engagement. To the extent that it is preferred by participants, it might also result in greater compliance with clinical procedures and educational practices. Future studies should further explore the clinical applicability of enhancing choice-making opportunities.

REFERENCES

- Binder L.M, Dixon M.R, Ghezzi P.M. A procedure to teach self-control to children with attention deficit hyperactivity disorder. Journal of Applied Behavior Analysis. 2000;33:233–237. doi: 10.1901/jaba.2000.33-233.

- DeLeon I.G, Iwata B.A. Evaluation of a multiple-stimulus presentation format for assessing reinforcer preferences. Journal of Applied Behavior Analysis. 1996;29:519–532. doi: 10.1901/jaba.1996.29-519.

- Dixon M.R, Cummings A. Self-control in children with autism: Response allocation during delays to reinforcement. Journal of Applied Behavior Analysis. 2001;34:491–495. doi: 10.1901/jaba.2001.34-491.

- Dixon M.R, Falcomata T.S. Preference for progressive delays and concurrent physical therapy exercise in an adult with acquired brain injury. Journal of Applied Behavior Analysis. 2004;37:101–105. doi: 10.1901/jaba.2004.37-101.

- Dixon M.R, Holcoumb S. Teaching self-control to small groups of dually diagnosed adults. Journal of Applied Behavior Analysis. 2000;33:611–614. doi: 10.1901/jaba.2000.33-611.

- Dixon M.R, Horner M.J, Guercio J. Self-control and the preference for delayed reinforcement: An example in brain injury. Journal of Applied Behavior Analysis. 2003;36:371–374. doi: 10.1901/jaba.2003.36-371.

- Falcomata T.S, Dixon M.R. Enhancing physical therapy exercises in persons with acquired brain injury through a self-control training procedure. European Journal of Behavior Analysis. 2004;5:5–17.

- Ferster C.B. Sustained behavior under delayed reinforcement. Journal of Experimental Psychology. 1953;45(4):218–224. doi: 10.1037/h0062158.

- Fisher W.W, Thompson R.H, Piazza C.C, Crosland K, Gotjen D. On the relative reinforcing effects of choice and differential consequences. Journal of Applied Behavior Analysis. 1997;30:423–438. doi: 10.1901/jaba.1997.30-423.

- Grosch J, Neuringer A. Self-control in pigeons under the Mischel paradigm. Journal of the Experimental Analysis of Behavior. 1981;35:3–21. doi: 10.1901/jeab.1981.35-3.

- Mazur J.E, Logue A.W. Choice in a “self-control” paradigm: Effects of a fading procedure. Journal of the Experimental Analysis of Behavior. 1978;30:11–17. doi: 10.1901/jeab.1978.30-11.

- Neef N.A, Bicard D.F, Endo S. Assessment of impulsivity and the development of self-control in children with attention deficit hyperactivity disorder. Journal of Applied Behavior Analysis. 2001;34:397–408. doi: 10.1901/jaba.2001.34-397.

- Neef N.A, Lutz M.N. A brief computer-based assessment of reinforcer dimensions affecting choice. Journal of Applied Behavior Analysis. 2001;34:57–60. doi: 10.1901/jaba.2001.34-57.

- Neef N.A, Marckel J, Ferreri S.J, Bicard D.F, Endo S, Aman M.G, et al. Behavioral assessment of impulsivity: A comparison of children with and without attention deficit hyperactivity disorder. Journal of Applied Behavior Analysis. 2005;38:23–37. doi: 10.1901/jaba.2005.146-02.

- Ragotzy S.P, Blakely E, Poling A. Self-control in mentally retarded adolescents: Choice as a function of amount and delay of reinforcement. Journal of the Experimental Analysis of Behavior. 1988;49:191–199. doi: 10.1901/jeab.1988.49-191.

- Reeve S.A, Reeve K.F, Townsend D.B, Poulson C.L. Establishing a generalized repertoire of helping behavior in children with autism. Journal of Applied Behavior Analysis. 2007;40:123–136. doi: 10.1901/jaba.2007.11-05.

- Schweitzer J.B, Sulzer-Azaroff B. Self-control: Teaching tolerance for delay in impulsive children. Journal of the Experimental Analysis of Behavior. 1988;50:173–186. doi: 10.1901/jeab.1988.50-173.

- Stromer R, McComas J.J, Rehfeldt R.A. Designing interventions that include delayed reinforcement: Implications of recent laboratory research. Journal of Applied Behavior Analysis. 2000;33:359–371. doi: 10.1901/jaba.2000.33-359.

- Vollmer T.R, Borrero J.C, Lalli J.S, Daniel D. Evaluating self-control and impulsivity in children with severe behavior disorders. Journal of Applied Behavior Analysis. 1999;32:451–466. doi: 10.1901/jaba.1999.32-451.