Abstract

The recommendation to reserve the most potent reinforcers for unprompted responses during acquisition programming has little published empirical support for its purported benefits (e.g., rapid acquisition, decreased errors, and decreased prompt dependence). The purpose of the current investigation was to compare the delivery of high-quality reinforcers exclusively following unprompted responses (differential reinforcement) with the delivery of high-quality reinforcers following both prompted and unprompted responses (nondifferential reinforcement) on the skill acquisition of 2 children with autism. Results indicated that both were effective teaching procedures, although the differential reinforcement procedure was more reliable in producing skill acquisition. These preliminary findings suggest that the differential reinforcement of unprompted responses may be the most appropriate default approach to teaching children with autism.

Keywords: autism, differential reinforcement, skill acquisition

A common recommendation for behavioral acquisition programs is to reserve high-quality reinforcers for instances of unprompted responding following the initial transfer of stimulus control (Anderson, Taras, & O'Malley-Cannon, 1996; Lovaas, Freitas, Nelson, & Whalen, 1967; Sundberg & Partington, 1998). This technique has been recommended as a useful tool for promoting rapid acquisition and for decreasing the prompt dependence that can result from imprecise prompt fading (MacDuff, Krantz, & McClannahan, 2001). To date, however, only two studies have evaluated the effects of this procedure.

Olenick and Pear (1980) evaluated this differential reinforcement procedure with 3 children with severe mental retardation who were taught to tact pictures over a progressive sequence of prompt and probe trials. Unprompted correct responses were reinforced on a continuous reinforcement (CRF) schedule, and prompted responses were reinforced on a fixed-ratio (FR) 6 or 8 schedule. Schedules of reinforcement for each trial type were manipulated across phases, and the authors demonstrated that combining the rich schedule for unprompted responses with the lean schedule for prompted responses was more effective for all participants than the other schedule arrangements.

Touchette and Howard (1984) compared errors, trials to criterion, and transfer of stimulus control across three contingencies of reinforcement for prompted and unprompted responses in visual discrimination tasks with 3 children with severe mental retardation. Systematic prompt-delay procedures were implemented in conjunction with the following schedules of reinforcement for unprompted and prompted responses, respectively: CRF CRF, CRF FR 3, and FR 3 CRF. Errors were uniformly low across participants and teaching conditions. Two of the 3 participants averaged substantially fewer trials to criterion in the condition that favored unprompted responses (CRF FR 3) than in the conditions in which reinforcement for prompted and unprompted responses was uniform or favored prompted responses. Similarly, the initial transfer of stimulus control occurred at the shortest prompt delays in the condition that produced the greatest relative density of reinforcement for unprompted responses.

Even though findings from these two investigations demonstrate the efficacy of two variations of the differential reinforcement procedure, an evaluation that incorporates procedures more representative of contemporary clinical practice is warranted. Thus, the purpose of the present study was to extend research on the differential reinforcement of unprompted responses with children with autism by using a consistent trial format and manipulating quality rather than frequency of reinforcement.

METHOD

Participants and Setting

Two boys who had been diagnosed with autism participated in the study. Eric was 5 years old and attended a general education classroom with the assistance of a full-time paraprofessional aide. He communicated vocally using two- and three-word phrases. His adaptive behavior composite score on the Vineland Adaptive Behavior Scales (VABS; Sparrow, Balla, & Cicchetti, 1984) was 77, placing him at the 6th percentile among his typically developing peers. Steve was 3 years old and attended a full-time preschool for children with autism. He communicated using gestures and a few one-word vocal requests. Steve's adaptive behavior composite score on the VABS was 94, placing him at the 34th percentile among his typically developing peers. Both participants exhibited generalized repertoires for motor and vocal imitation.
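The percentile ranks above can be approximated from the composite standard scores if one assumes, as is conventional for Vineland composites, normally distributed norms with a mean of 100 and a standard deviation of 15. The sketch below is only that normal approximation; the function name is hypothetical, and published norm tables rather than this formula determine the percentiles that are actually reported.

```python
from math import erf, sqrt


def percentile_from_standard_score(score: float, mean: float = 100.0, sd: float = 15.0) -> float:
    """Approximate percentile rank of a standard score, assuming normally distributed norms."""
    z = (score - mean) / sd
    # Standard normal CDF written with the error function: Phi(z) = (1 + erf(z / sqrt(2))) / 2
    return 100.0 * 0.5 * (1.0 + erf(z / sqrt(2.0)))


for name, composite in [("Eric", 77), ("Steve", 94)]:
    pct = percentile_from_standard_score(composite)
    print(f"{name}: composite {composite} -> approximately percentile {pct:.1f}")
```

Rounded to whole numbers, the two approximations give the 6th and 34th percentiles, in line with the values reported above.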

Dependent Variables and Interobserver Agreement

Targets for each participant were selected from two program areas, picture sequencing and tacts. Performance data were summarized as the percentage of trials in which the participant responded correctly and independently during each block of 10 trials. Unprompted responses were defined as those that occurred within 3 s of the onset of the trial (i.e., the therapist's instruction and the presentation of relevant stimuli for tacting or sequencing). The mastery criterion for all targets was correct unprompted responses on at least 80% of trials in two consecutive sessions, with the first trial of each of those sessions correct and unprompted. Interobserver agreement was assessed for at least 28% of each participant's reinforcer-evaluation and treatment-comparison sessions. During the reinforcer evaluation, mean total agreement was 95% for Eric and 100% for Steve. During the treatment comparison, mean point-by-point agreement was 98% for both participants.
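The session score, the mastery criterion, and the two agreement indices above can be made concrete with a short Python sketch. The article does not give formulas for total or point-by-point agreement, so the conventional definitions from applied behavior analysis are assumed here: total agreement as the smaller session count divided by the larger, and point-by-point agreement as agreements divided by agreements plus disagreements. The data representation and all function names are hypothetical.

```python
from typing import List, Sequence

# Hypothetical representation: one session is a list of trials, each True when the
# participant made a correct, unprompted response (within 3 s of trial onset).
Session = Sequence[bool]


def percent_independent(session: Session) -> float:
    """Percentage of trials with a correct, unprompted response."""
    return 100.0 * sum(session) / len(session)


def mastery_met(sessions: Sequence[Session], threshold: float = 80.0) -> bool:
    """Check the criterion after the most recent session: the last two consecutive
    sessions each reach the threshold and open with a correct, unprompted trial."""
    if len(sessions) < 2:
        return False
    return all(percent_independent(s) >= threshold and s[0] for s in sessions[-2:])


def total_agreement(count_a: int, count_b: int) -> float:
    """Total (count) agreement: smaller session count divided by the larger, as a percentage."""
    if count_a == count_b == 0:
        return 100.0  # both observers recorded no responses
    return 100.0 * min(count_a, count_b) / max(count_a, count_b)


def point_by_point_agreement(obs_a: Session, obs_b: Session) -> float:
    """Trial-by-trial agreement: agreements / (agreements + disagreements), as a percentage."""
    if len(obs_a) != len(obs_b):
        raise ValueError("both observers must score the same trials")
    agreements = sum(a == b for a, b in zip(obs_a, obs_b))
    return 100.0 * agreements / len(obs_a)


history: List[List[bool]] = [
    [True] * 6 + [False] * 4,   # 60%
    [True] * 8 + [False] * 2,   # 80%, first trial correct and unprompted
    [True] * 9 + [False],       # 90%, first trial correct and unprompted
]
print(mastery_met(history[:2]), mastery_met(history))
```

With this hypothetical history, the criterion is not met after the second session (60% and 80%) but is met after the third (80% and 90%), because both of the last two sessions reach 80% and begin with a correct, unprompted trial.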

Procedure

Preference assessment

Brief multiple-stimulus without replacement (MSWO) preference assessments (Carr, Nicolson, & Higbee, 2000) were conducted to identify foods that might function as reinforcers for Eric's and Steve's responses during training. The three highest ranked foods were used as programmed consequences throughout the reinforcer evaluations and treatment comparisons.
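A brief MSWO assessment produces a ranking from a few administrations of the same array. The exact scoring rule used in this study is not described, so the sketch below assumes one common convention: an item's selection percentage is the number of times it was chosen divided by the number of trials on which it was available. It also assumes every item in the array was eventually chosen in each administration. The food names and selection orders are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def rank_mswo(administrations: Sequence[Sequence[str]]) -> List[str]:
    """Rank items by selection percentage across MSWO administrations.

    Assumes each administration lists every item in the order it was selected, so an
    item was available on each trial up to and including the one on which it was chosen.
    """
    selected: Dict[str, int] = defaultdict(int)
    available: Dict[str, int] = defaultdict(int)
    for order in administrations:
        for position, item in enumerate(order):
            selected[item] += 1
            available[item] += position + 1  # available on trials 1..position+1 of this array
    # Selection percentage = times selected / times available; higher means more preferred.
    return sorted(selected, key=lambda item: selected[item] / available[item], reverse=True)


sessions = [
    ["chips", "candy", "crackers", "raisins", "pretzels"],
    ["candy", "chips", "raisins", "crackers", "pretzels"],
    ["chips", "crackers", "candy", "pretzels", "raisins"],
]
top_three = rank_mswo(sessions)[:3]
print(top_three)
```

Under this convention, the three highest ranked items would be the ones carried forward as programmed consequences.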

Reinforcer evaluation

A reversal design was used to determine the relative reinforcing effectiveness of praise versus praise plus food as consequences for Eric. Specifically, the frequency of an arbitrary response (disc pressing) during 2-min sessions was measured under each of these reinforcement conditions and under a no-consequence baseline. Steve completed a progressive-ratio (PR) 2 reinforcer evaluation (Roane, Lerman, & Vorndran, 2001) in which the arbitrary response was placing foam tiles in a plastic container. Break points (Glover, Roane, Kadey, & Grow, 2008), defined as the highest schedule value completed before responding ceased for 5 min, were compared across alternating praise and praise-plus-food conditions.
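A break point under a progressive-ratio schedule can be computed from a record of response times. The sketch below assumes that "PR 2" means the ratio requirement grows by 2 after each completed ratio and that it starts at 1 (requirements of 1, 3, 5, 7, ...); the article does not state the starting value, so both parameters are assumptions. The 5-min pause criterion comes from the text; the function name and example data are hypothetical.

```python
from typing import Sequence


def pr_break_point(response_times: Sequence[float], start: int = 1, step: int = 2,
                   pause_limit: float = 300.0) -> int:
    """Highest ratio requirement completed before responding ceased for pause_limit seconds.

    response_times: session-relative response times in seconds, in ascending order.
    Returns 0 when no ratio is completed. start/step are assumed values for a PR 2 schedule.
    """
    requirement = start
    count = 0
    last_time = 0.0  # the pause clock runs from session onset until the first response
    break_point = 0
    for t in response_times:
        if t - last_time >= pause_limit:
            break  # 5 min without a response: the session ends
        last_time = t
        count += 1
        if count == requirement:
            break_point = requirement  # ratio completed; a reinforcer would be delivered here
            requirement += step        # next ratio is larger by the step size
            count = 0
    return break_point


times = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 411]
print(pr_break_point(times))
```

In the example, ratios of 1, 3, and 5 are completed, two responses are made toward the next requirement of 7, and a 400-s pause then ends the session, so the break point is 5.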

Treatment comparison

An alternating treatments design was used to evaluate the effects of differential reinforcement of unprompted responding on acquisition for both participants. In Steve's case, the design was modified after the first evaluation to control for possible multiple-treatment interference, and a reversal design was used thereafter. Multiple pairs of targets from a single program area (i.e., tacts or picture sequences) were trained with each participant: Eric was taught to arrange sets of three pictures in chronological sequence, and Steve was taught to tact a variety of actions and emotions in pictures. Each training trial began with presentation of the relevant discriminative stimulus (instruction and visual stimuli), followed by a 3-s delay; if no response occurred within that interval, the experimenter provided a hand-over-hand prompt (sequencing) or a full vocal model (tacts). In the rare case that Steve did not respond to the vocal model the first time, the prompt was repeated every 3 s until he emitted the imitative response. Errors (e.g., placing the sequencing cards in the wrong order, emitting a nontarget vocal response) were immediately followed by a prompt. Prompted responses, defined as responses that followed the experimenter's prompt, were initially followed by the highest quality consequence identified by the reinforcer evaluations (praise plus a highly preferred food item) in both the differential and nondifferential reinforcement conditions. Throughout training on targets in the nondifferential reinforcement condition, praise and high-quality food reinforcers were delivered contingent on both prompted and unprompted responses. In the differential reinforcement condition, once the first unprompted response had occurred, praise and high-quality food reinforcers were delivered only for subsequent unprompted responses, and the lower quality consequence identified by the reinforcer evaluations (praise alone) was delivered for subsequent prompted responses. Finally, training on a target was terminated if an ascending trend had not been established within 10 training sessions; any such unlearned target was later taught with the alternative procedure.
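The consequence rules for the two conditions can be summarized as a small decision procedure. The sketch below assumes that the "first unprompted response" is tracked separately for each target and that every trial ends in a correct response, either unprompted or prompted, because errors were always followed by a prompt. All names are hypothetical.

```python
from enum import Enum
from typing import List, Sequence


class Condition(Enum):
    DIFFERENTIAL = "differential"
    NONDIFFERENTIAL = "nondifferential"


class Consequence(Enum):
    PRAISE_PLUS_FOOD = "praise plus food"  # higher quality consequence
    PRAISE_ONLY = "praise alone"           # lower quality consequence


def consequences_for_target(condition: Condition, unprompted: Sequence[bool]) -> List[Consequence]:
    """Consequence delivered on each trial of one target.

    unprompted[i] is True if trial i ended in a correct unprompted response and False if
    it ended in a correct prompted response (errors were always followed by a prompt).
    """
    first_unprompted_seen = False
    delivered: List[Consequence] = []
    for was_unprompted in unprompted:
        if condition is Condition.NONDIFFERENTIAL or was_unprompted or not first_unprompted_seen:
            # High quality for every response in the nondifferential condition, for any
            # unprompted response, and for prompted responses before the first unprompted one.
            delivered.append(Consequence.PRAISE_PLUS_FOOD)
        else:
            # Differential condition after the first unprompted response: prompted
            # responses earn only the lower quality consequence.
            delivered.append(Consequence.PRAISE_ONLY)
        if was_unprompted:
            first_unprompted_seen = True
    return delivered


trials = [False, False, True, False, True]
for condition in Condition:
    print(condition.value, [c.value for c in consequences_for_target(condition, trials)])
```

For the example sequence (prompted, prompted, unprompted, prompted, unprompted), the differential condition delivers praise plus food on every trial except the fourth, which earns praise alone; the nondifferential condition delivers praise plus food on all five trials.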

Procedural Integrity

Two measures of procedural integrity were collected to ensure that teaching procedures were implemented as described in the experimental protocol. First, trained data collectors assessed procedural integrity during at least 45% of treatment sessions for each participant. Required therapist behaviors on each trial included presenting the appropriate discriminative stimulus, waiting 3 s, prompting the response (as necessary), delivering the programmed consequences, and providing a 20-s intertrial interval. Trials on which any of these steps was omitted or implemented inaccurately were scored as incorrect. A procedural integrity score was calculated for each session (block of trials) by dividing the number of correctly implemented trials by the total number of trials and converting this ratio to a percentage. The mean procedural integrity score was 99.6% for Eric (range, 90% to 100%) and 98% for Steve (range, 80% to 100%). The second measure of procedural integrity was provided by naive observers who viewed three sessions from each condition over the course of training with each participant. The observers then completed a nine-item, closed-ended postobservation questionnaire to detect any unplanned differences in reinforcer or prompt quality between conditions. Results indicated that, apart from the manipulation of the independent variable, teaching procedures were implemented uniformly across conditions.
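The session-level integrity score described above reduces to a simple ratio. In the sketch below, the step labels are hypothetical stand-ins for the five required therapist behaviors, and the summary assumes that the reported means and ranges were computed across per-session scores.

```python
from statistics import mean
from typing import Dict, List, Sequence, Tuple

# Hypothetical labels for the five required therapist behaviors.
STEPS = ("sd_presented", "waited_3s", "prompted_as_needed", "consequence_delivered", "iti_20s")

Trial = Dict[str, bool]


def trial_correct(trial: Trial) -> bool:
    """A trial counts as correct only if every required step was implemented accurately;
    a step missing from the record is treated as omitted."""
    return all(trial.get(step, False) for step in STEPS)


def session_integrity(trials: Sequence[Trial]) -> float:
    """Correctly implemented trials divided by total trials, as a percentage."""
    return 100.0 * sum(trial_correct(t) for t in trials) / len(trials)


def summarize(sessions: Sequence[Sequence[Trial]]) -> Tuple[float, float, float]:
    """Mean, minimum, and maximum of the per-session integrity scores."""
    scores = [session_integrity(s) for s in sessions]
    return mean(scores), min(scores), max(scores)


ok: Trial = {step: True for step in STEPS}
short_iti: Trial = {**ok, "iti_20s": False}
observed: List[List[Trial]] = [[ok] * 9 + [short_iti], [ok] * 10]
print(summarize(observed))
```

For these two example sessions, scored at 90% and 100%, `summarize` returns a mean of 95.0 with a range of 90.0 to 100.0.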

RESULTS AND DISCUSSION

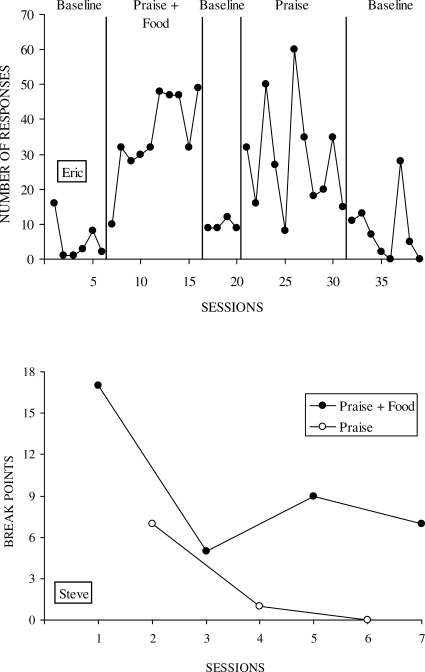

Results from the reinforcer evaluations are displayed in Figure 1. During Eric's evaluation, responding showed a stable, ascending trend only under the praise-plus-food condition. In Steve's evaluation, break points under the praise-alone condition decreased across repeated exposures to that condition and, overall, remained consistently lower (range, 0 to 7) than those produced in the praise-plus-food condition (range, 5 to 17). These results indicate that praise plus food functioned as a higher quality, more effective reinforcer than praise alone for both participants.

Figure 1.

Results from Eric's single-operant reinforcer evaluation (top) and Steve's progressive-ratio-schedule reinforcer evaluation (bottom).

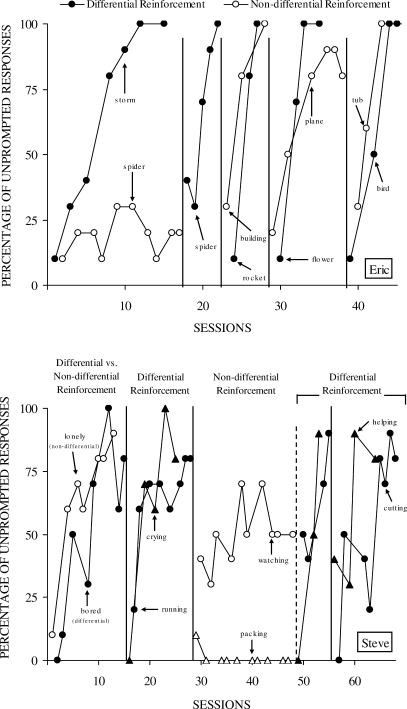

Results from the treatment comparisons appear in Figure 2. Eric acquired eight picture sequences across four phases of differential and nondifferential reinforcement. During the first comparison, a clear separation emerged between the two data paths, with acquisition occurring only under the differential reinforcement condition. During the second phase, differential reinforcement was applied to the sequence not learned in Phase 1 (spider), and acquisition occurred in five additional training sessions. Eric mastered the remaining three pairs of picture sequences rapidly under both conditions (M = 3.8 sessions to mastery; range, 3 to 5 sessions). Although his performance on the final six sequencing tasks shows that both differential and nondifferential reinforcement can lead to acquisition, the first two phases of the evaluation indicate that differential reinforcement was initially more effective.

Figure 2.

Acquisition data across targets taught using differential and nondifferential reinforcement contingencies for Eric (top) and Steve (bottom). Note that one target for Eric (spider) and two targets for Steve (watching, packing) were exposed to both nondifferential and differential reinforcement conditions.

Steve acquired eight tacts of actions and emotions over the course of the investigation. The differential and nondifferential reinforcement conditions were equally effective during the first evaluation; thus, a reversal design was implemented to determine whether multiple-treatment interference might have been responsible for the similar patterns. Pairs of targets were subsequently taught using one procedure per phase, and only the differential reinforcement procedure produced acquisition. The two targets (watching, packing) that were not acquired under nondifferential reinforcement were then mastered in three and four sessions of differential reinforcement. These results suggest that, whenever responding differed between the two conditions, differential reinforcement of unprompted responses was again the more effective teaching method. Although results for both participants are consistent with those of previous investigations (i.e., Olenick & Pear, 1980; Touchette & Howard, 1984), several observations and considerations merit closer examination.

Following mastery of the two initial picture sequences, Eric began to acquire novel picture sequences in uniformly few sessions under both training conditions. This finding suggests that the advantages of differential reinforcement of unprompted responses might be restricted to circumstances in which the response being taught is not yet part of a generalized response class, that is, before enhanced stimulus control has given the learner a repertoire for learning novel responses of that type. That is, after Eric had a history of training on sequencing tasks, therapist instructions and teaching materials may have been more likely to evoke approximations of the target behavior (e.g., attending to stimuli and card placement). It is also possible that the targets presented later in the investigation were less difficult than those presented at the outset.

Another possible account of the learning that occurred under both differential and nondifferential reinforcement involves the more immediate reinforcement that followed all unprompted correct responses. Because errors were immediately followed by response prompts, the delay to reinforcement after an error was longer than the delay after an unprompted correct response. This difference may have constituted an embedded differential contingency in both conditions, in which unprompted correct responses produced more immediate reinforcement than unprompted errors. One limitation of this analysis is that all unprompted errors in the nondifferential reinforcement condition were followed by prompted responses before a reinforcer was delivered. The embedded-contingency account is therefore plausible only insofar as reinforcers that followed posterror prompted responses also affected the initial error.

At least three mechanisms might underlie the effects of differential reinforcement of unprompted responses in this investigation. First, the nondifferential reinforcement procedure may have failed to produce acquisition in some cases because errors were adventitiously reinforced when high-quality consequences were delivered contingent on the subsequent prompted response. Under the nondifferential reinforcement condition, the prompting procedure ensured that errors were followed within seconds by delivery of the high-quality reinforcer. The differential reinforcement condition, therefore, may have achieved its effects by disrupting the relation between errors and delivery of high-quality reinforcement.

Others have proposed that negative reinforcement contingencies can play a significant role in the efficacy of various teaching procedures (Iwata, 1987), and potentially aversive conditions associated with the teaching procedures may have influenced the present findings. Specifically, if the prompts delivered contingent on errors or on failures to respond within the 3-s interval were sufficiently aversive, errors and prompted responses may have decreased as a result of this punishment contingency. Further, unprompted responding may have been negatively reinforced through avoidance of those prompts.

A third variable that may have contributed to the relative efficacy of the differential reinforcement procedure is a potential difference in response effort between prompted and unprompted responses. Previously mastered responses under the stimulus control of prompts (compliance with physical guidance or vocal imitation, in this study) may be fundamentally less effortful than novel or newly acquired responses. If so, the less effortful prompted responses would remain at greatest strength (i.e., prompt dependence would persist) as long as the opportunity to engage in a prompted response is available and the reinforcers programmed for more and less effortful responses are of equal quality.

In conclusion, the nondifferential reinforcement procedure was, predictably, less likely to produce acquisition of motor and vocal responses for 2 children with autism. Although findings from this investigation suggest that both differential and nondifferential reinforcement procedures can result in mastery, the occasional failure of the nondifferential procedure to do so suggests that differential reinforcement may be the preferable default method when teaching children with autism.

Acknowledgments

This article is based on a thesis submitted by the first author, under the supervision of the second author, in partial fulfillment of the requirements for the master of arts degree in psychology. Amanda Karsten is now affiliated with Western New England College, and James Carr is now affiliated with Auburn University. We thank committee members Linda LeBlanc and Dick Malott for their helpful comments on the thesis. We also thank Rachel Clark, Katie Mahoney, Joe Keller, Richika Fisher, Corey Boulas, Tom Dailey, Erin Jeltema, and Danielle Williams for their assistance with data collection.

REFERENCES

- Anderson S.R, Taras M, O'Malley-Cannon B. Teaching new skills to young children with autism. In: Maurice C, Green G, Luce S, editors. Behavioral intervention for young children with autism: A manual for parents and professionals. Austin, TX: Pro-Ed; 1996. pp. 181–193.

- Carr J.E, Nicolson A.C, Higbee T.S. Evaluation of a brief multiple-stimulus preference assessment in a naturalistic context. Journal of Applied Behavior Analysis. 2000;33:353–357. doi: 10.1901/jaba.2000.33-353.

- Glover A.C, Roane H.S, Kadey H.J, Grow L.L. Preference for reinforcers under progressive- and fixed-ratio schedules: A comparison of single and concurrent arrangements. Journal of Applied Behavior Analysis. 2008;41:163–176. doi: 10.1901/jaba.2008.41-163.

- Iwata B.A. Negative reinforcement in applied behavior analysis: An emerging technology. Journal of Applied Behavior Analysis. 1987;20:361–378. doi: 10.1901/jaba.1987.20-361.

- Lovaas O.I, Freitas L, Nelson K, Whalen C. The establishment of imitation and its use for the development of complex behavior in schizophrenic children. Behaviour Research and Therapy. 1967;4:171–181. doi: 10.1016/0005-7967(67)90032-0.

- MacDuff G.S, Krantz P.J, McClannahan L.E. Prompts and prompt-fading strategies for people with autism. In: Maurice C, Green G, Foxx R.M, editors. Making a difference: Behavioral intervention for autism. Austin, TX: Pro-Ed; 2001. pp. 37–50.

- Olenick D.L, Pear J.J. The differential reinforcement of correct responses to probes and prompts in picture-name training with severely retarded children. Journal of Applied Behavior Analysis. 1980;13:77–89. doi: 10.1901/jaba.1980.13-77.

- Roane H.S, Lerman D.C, Vorndran C.M. Assessing reinforcers under progressive schedule requirements. Journal of Applied Behavior Analysis. 2001;34:145–167. doi: 10.1901/jaba.2001.34-145.

- Sparrow S, Balla D, Cicchetti D. The Vineland Adaptive Behavior Scales: Interview edition, survey form manual. Circle Pines, MN: American Guidance Service; 1984.

- Sundberg M.L, Partington J.W. Teaching language to children with autism or other developmental disabilities. Pleasant Hill, CA: Behavior Analysts, Inc; 1998.

- Touchette P.E, Howard J.S. Errorless learning: Reinforcement contingencies and stimulus control transfer in delayed prompting. Journal of Applied Behavior Analysis. 1984;17:175–188. doi: 10.1901/jaba.1984.17-175.