Abstract

Broca’s area is crucial for speech production. Several recent studies suggest it has an additional role in visual speech perception. This conclusion remains tenuous, as previous studies employed tasks requiring active processing of visual speech movements, which may have elicited conscious sub-vocalizations. To study whether Broca’s area is modulated during passive viewing of speech movements, we conducted an fMRI experiment in which participants detected rare visual targets that were briefly superimposed on two task-irrelevant conditions: passive viewing of silent speech versus non-speech (gurning) facial movements. This comparison revealed Broca’s area to be more active when observing speech. These findings provide further support for a role of Broca’s area in speech perception and have clear implications for the rehabilitation of aphasia.

Keywords: motor speech, gurning, speech perception, Broca’s area

Introduction

It is well known that a region of the left inferior frontal gyrus known as “Broca’s area” is crucial for speech production, with patients who have injury to this region showing profound deficits in fluent speech production. Recent brain activation studies have suggested that the left inferior frontal gyrus may actually be activated by a wide range of tasks including digit sequence learning [1,2], imagery of motion [3], and subvocal rehearsal [4], with a meta-analysis suggesting that phonological perception activates a more dorsal region than covert and overt speech production [5]. Of particular interest, several studies have suggested that this region is active not only during speech production, but also during speech perception [6–11]. Indeed, the role of this region in speech perception is of theoretical and clinical importance. For example, Liberman et al.’s influential motor theory of speech perception suggests that listeners rely on reconstructing the motoric programs required to produce a given speech sound [6]. Under such a model, one would expect the speech production system to be intrinsically linked to the speech perception system. Such notions have gained recent support from findings of ‘mirror neurons’ in the primate homologue of this region, which fire both when observing an action and when executing the same action [7].

One popular paradigm for examining whether the frontal language areas are specifically engaged by observing speech movements is to contrast, in neuroimaging studies, videos of oral speech movements with videos that show non-speech oral movements (gurning). Gurning stimuli involve buccofacial movements, such as raising the upper lip to reveal the front teeth, sticking out the tongue, or licking the side of the mouth. Several studies have observed enhanced left frontal lobe activation when observing silent speech videos relative to gurning, providing evidence that this region plays a role in visual speech perception. However, all previous studies contain a potential confound: each included a task in which the cortical speech production mechanism may have been either implicitly or explicitly engaged. Therefore, it is possible that these studies identified Broca’s area involvement because the participants were covertly producing the speech motor movements in order to complete the task. This can be seen by considering the tasks of each of the four previous studies. First, in a study by Campbell et al., participants were asked to silently rehearse the speech stimuli, while conducting a counting task for the gurning stimuli [8]. Second, Hall and colleagues used visual speech stimuli composed of sentences such as “The four yellow leaves are falling”; in this example the participant was expected to press the fourth button on a keypad, whereas for gurning stimuli participants pressed buttons in a numerical sequence [9]. Third, the sparse imaging design of Bonilha et al. required participants to explicitly replicate the observed speech and gurning movements [10]. Finally, in the design of Fridriksson et al., participants observed two successive silent videos (either two gurns or two syllables) and were required to determine whether the two videos showed identical or different speech movements [11].

Comparing oral speech to gurning provides an elegant control condition. First, the gurning stimuli have many of the same spatial and movement properties as speech. In addition, these studies appear to suggest that the adult human frontal lobe is not a generalized mirror neuron system that responds equally to any face movement; rather, it shows enhanced activity to speech movements relative to non-speech movements, extending studies which compare speech videos to other forms of baseline [12,13]. On the other hand, if the previous studies all encouraged speech motor programming to accomplish the tasks, it becomes difficult to determine whether the frontal activation observed in these studies truly reflects enhanced activation during speech perception, or merely activation for speech production.

The purpose of the current study was to determine whether activity in Broca’s area is elicited during passive viewing of visual speech movements in situations where the task does not benefit from covertly mimicking the observed mouth movements. To accomplish this, we developed a paradigm in which the silent speech or gurning videos were irrelevant to the participant’s task. Such a design should not encourage participants to subvocally mimic the speech movements and should therefore reveal whether frontal activation is obligatorily engaged when observing speech motor movements. Specifically, we asked participants to watch silent speech and gurning videos, with the task of detecting infrequent visual targets that were briefly superimposed in front of the videos. Our prediction was that the cortical speech network would be specifically activated by speech movements, even though they were not relevant to the task.

Methods

Sixteen female graduate students (age range = 19–31; mean = 22.38; SD = 2.76) from the University of South Carolina volunteered to be in this study. All had normal vision or vision corrected to normal using contact lenses. All reported writing with the right hand. Note that if some individuals in our sample had mixed or reversed dominance, this would only add to the variability in brain activation and reduce the magnitude of any observed effects. The Institutional Review Board at the University of South Carolina gave approval for this study, and all participants provided written informed consent.

The experiment involved completion of a visual attention task during fMRI scanning. The task displayed 200 silent 1.5s videos [13] – half showing oral speech movements and half showing gurning. A block paradigm was used with three conditions: speech, gurning, and rest, with each block lasting 14s. The resting block displayed a static central black fixation cross on a gray background. During speech and gurning blocks, five videos were shown sequentially, with an inter-trial interval of 2.8s (the 1.3s interval between videos displayed the same static image as the rest block). Blocks were presented in a pseudorandomized order such that within every six-block cycle there were always two rest blocks, two gurning blocks and two speech blocks. Participants were instructed to press a button using their left index finger whenever they saw a visual target, which was a small black cross superimposed on a small gray background. This target was randomly presented 20 times throughout the session, appearing between 200 and 800ms after a video began, and visible for approximately 50ms. The spatial location of the target was randomly jittered to occur approximately in the region of the lips of the speaker shown in the background video. It was equally likely to occur during speech or gurning videos, but was never presented during the rest periods.
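The block structure described above can be sketched in a few lines of Python. This is a minimal illustration and not the custom presentation software used in the study: the function names, the random seed, and the total of 60 blocks (inferred from 200 videos at five per block plus two rest blocks in every six-block cycle) are assumptions, and onsets are expressed relative to the start of the first block.

```python
import random

BLOCK_S = 14.0         # block duration in seconds (from the text)
VIDEOS_PER_BLOCK = 5   # videos per speech or gurning block (from the text)
ITI_S = 2.8            # onset-to-onset interval between videos (from the text)


def make_block_order(n_cycles=10, seed=0):
    """Return a block order with two rest, two gurning and two speech
    blocks in every six-block cycle (n_cycles and seed are assumptions)."""
    rng = random.Random(seed)
    order = []
    for _ in range(n_cycles):
        cycle = ["rest", "rest", "gurning", "gurning", "speech", "speech"]
        rng.shuffle(cycle)
        order.extend(cycle)
    return order


def video_onsets(order):
    """Return (onset_s, condition) for every video, with time zero at the
    start of the first block. Rest blocks contain no videos."""
    onsets = []
    for i, condition in enumerate(order):
        if condition == "rest":
            continue
        block_start = i * BLOCK_S
        for v in range(VIDEOS_PER_BLOCK):
            onsets.append((block_start + v * ITI_S, condition))
    return onsets


order = make_block_order()
onsets = video_onsets(order)
```

Under these assumptions the schedule contains 60 blocks and 200 videos, 100 per video condition, and five videos at 2.8 s spacing fill each 14 s block exactly.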

Task stimuli were presented using custom written software running on a Windows XP computer. The videos were displayed using a 1024×768 pixel resolution DLP projector with a long throw lens that was located outside the scanner’s Faraday cage. The image was directed through a wave-guide and reflected off of a front-silvered mirror, which in turn reflected the images onto a back-projection screen that could be observed from another mirror mounted on the scanner’s head coil.

FMRI data were collected on a Siemens 3T Trio scanner with a 12-channel RF head coil. During the first six minutes in the scanner the participant performed a practice version of the task (identical to the experiment previously described except that targets appeared on half the trials and the background fixation stimulus was displayed on a blue background) while an anatomical scan was acquired. Next, participants performed the task during 853.6s of continuous fMRI acquisition. During this time, a total of 388 T2*-weighted fMRI volumes were collected using an EPI pulse sequence with the following parameters: TR = 2.2 s, TE = 30 ms, flip angle = 90°, 64×64 matrix, 192×192mm FOV, 36 ascending 3mm thick slices with 20% slice gap resulting in voxels with an effective distance of 3×3×3.6mm between voxel centers, no parallel imaging acceleration. The scanner did not reconstruct the first two volumes (due to T1 effects); therefore, the first block of videos commenced precisely 10s after the acquisition of the first reconstructed slice.
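The acquisition figures quoted above are mutually consistent, which can be checked with simple arithmetic. The sketch below only restates numbers from the text; the total of 60 blocks is an inference (200 videos at five per block, plus two rest blocks per six-block cycle) rather than a value given by the authors.

```python
TR_S = 2.2            # repetition time in seconds
N_VOLUMES = 388       # reconstructed volumes
FOV_MM = 192.0        # in-plane field of view
MATRIX = 64           # in-plane matrix size
SLICE_MM = 3.0        # slice thickness
GAP_FRACTION = 0.20   # slice gap as a fraction of slice thickness

scan_duration_s = N_VOLUMES * TR_S                # about 853.6 s
in_plane_mm = FOV_MM / MATRIX                     # 3.0 mm
slice_spacing_mm = SLICE_MM * (1 + GAP_FRACTION)  # about 3.6 mm

# Assumed session layout: 60 blocks of 14 s starting 10 s into the run.
N_BLOCKS = 60         # inferred, not stated in the text
BLOCK_S = 14.0
FIRST_BLOCK_ONSET_S = 10.0
task_end_s = FIRST_BLOCK_ONSET_S + N_BLOCKS * BLOCK_S  # 850.0 s
slack_s = scan_duration_s - task_end_s                 # time left after the last block
```

With these values the final block would end roughly 3.6 s before the last volume is acquired.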

FMRI data processing was carried out on each participant’s data using the FMRI Expert Analysis Tool Version 5.98 [14]. Data preprocessing included motion correction, brain extraction, spatial smoothing using an 8mm FWHM Gaussian kernel, grand-mean intensity normalization of the entire 4D dataset by a single multiplicative factor, and highpass temporal filtering (Gaussian-weighted least-squares straight line fitting, with sigma=30.0s). Individual statistical analysis included local autocorrelation correction. The statistical model included the convolved functions of speech and gurning as well as regressors that modeled head movement parameters. Each individual’s fMRI data were normalized to the MNI 152 template image using the linear routines of FLIRT. Finally, a mixed-effects analysis was computed for the entire group, with the subsequent voxelwise statistical maps thresholded at Z > 2.3, followed by a cluster significance threshold of p = 0.05 adjusted for multiple comparisons [15].
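To make the phrase “convolved functions of speech and gurning” concrete, the following sketch builds one block regressor by convolving a boxcar with a haemodynamic response and sampling it once per TR. It is an illustration only and not the FEAT implementation: the text does not state which convolution kernel was used, so the gamma-shaped response (mean lag 6 s, standard deviation 3 s), the 0.1 s integration step, and the function names are all assumptions.

```python
import math

TR_S = 2.2  # repetition time from the acquisition description


def gamma_hrf(t, mean_lag=6.0, sd=3.0):
    """Gamma probability density used as an assumed haemodynamic response."""
    if t <= 0:
        return 0.0
    shape = (mean_lag / sd) ** 2
    scale = sd ** 2 / mean_lag
    return (t ** (shape - 1) * math.exp(-t / scale)
            / (math.gamma(shape) * scale ** shape))


def block_regressor(block_onsets_s, block_dur_s, n_volumes,
                    dt=0.1, kernel_s=30.0):
    """Convolve a boxcar with the assumed HRF on a fine time grid and
    return one value per volume."""
    n_fine = int(round(n_volumes * TR_S / dt))
    boxcar = [0.0] * n_fine
    for onset in block_onsets_s:
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + block_dur_s) / dt)))
        for i in range(start, stop):
            boxcar[i] = 1.0
    # Discretized kernel; each weight approximates the HRF area in one step.
    kernel = [gamma_hrf(k * dt) * dt for k in range(int(round(kernel_s / dt)))]
    regressor = []
    for v in range(n_volumes):
        i = int(round(v * TR_S / dt))
        acc = 0.0
        for k, weight in enumerate(kernel):
            if i - k < 0:
                break
            acc += boxcar[i - k] * weight
        regressor.append(acc)
    return regressor


# Example: a single 14 s block starting 10 s into the run.
example = block_regressor([10.0], 14.0, 388)
```

In a full model one such regressor would be built from the onsets of all speech blocks and another from all gurning blocks, alongside the head movement regressors.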

In addition to the voxelwise analysis, we also conducted a region of interest analysis. Regions of interest (ROIs) included brain areas typically associated with speech processing, approximating the locations of BA 22 (posterior superior temporal lobe, Wernicke’s Area), BA 40 (inferior parietal lobe) and BA 44 (inferior frontal gyrus, posterior portion of Broca’s Area). For each ROI, the mean Z-score of the top 10% of voxels, as assessed by each task’s Z-scores, was computed.
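The ROI summary measure can be illustrated with a short function. This is a sketch under stated assumptions: the function name is hypothetical, the Z-scores are taken to be a plain list of per-voxel values for one ROI and one condition, and rounding the voxel count to the nearest integer (with a minimum of one voxel) is a choice made here, not one reported in the text.

```python
def top_fraction_mean(z_scores, fraction=0.10):
    """Mean of the highest `fraction` of Z-scores within one ROI."""
    if not z_scores:
        raise ValueError("ROI contains no voxels")
    # Assumed rounding rule: nearest integer, at least one voxel.
    n_top = max(1, round(len(z_scores) * fraction))
    top = sorted(z_scores, reverse=True)[:n_top]
    return sum(top) / n_top


# Illustrative values only, not data from the study.
demo_roi = list(range(1, 21))            # 20 made-up "Z-scores"
demo_mean = top_fraction_mean(demo_roi)  # mean of the top two values
```

Applying the function separately to the speech-versus-rest and gurning-versus-rest maps would give one value per participant, ROI and condition for the subsequent analysis.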

Results

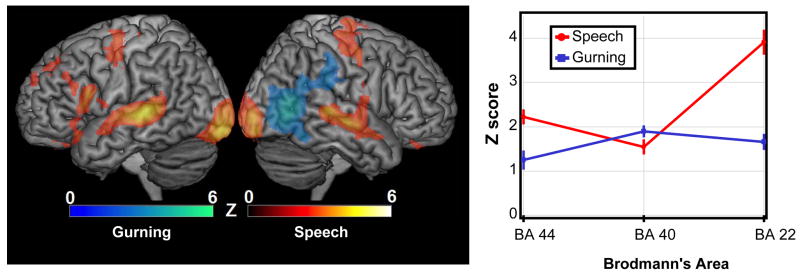

Whole brain voxelwise analyses were performed comparing activation during speech versus gurning. These comparisons are illustrated in the left panel of Figure 1. As shown in Table 1, passive viewing of speech relative to gurning produced significantly greater activation, with local maxima in regions corresponding to BA 44, BA 18 (lingual gyrus), and BA 21 (middle temporal gyrus) in the left hemisphere, and BA 21 and BA 6 (middle frontal) in the right hemisphere. A local maximum for gurning greater than speech was recorded in the right hemisphere BA 37 (middle temporal gyrus).

Figure 1.

The left panel shows rendered images of the left and right hemispheres, respectively. Regions in the red-orange color scale were significantly more active during speech videos than gurning videos, while regions in the blue-cyan color scale show more activation during gurning than speech videos (with the color corresponding to the observed Z-score, as shown in the legend). These statistical maps were initially thresholded at Z >2.3, followed by a cluster-level threshold of p <0.05, corrected for multiple comparisons. The right panel shows the mean Z-score for the top 10% of voxels in three distinct Brodmann Areas. The red lines show activation for the contrast of speech videos versus rest, while the blue lines show the activation for the comparison of gurning versus rest. Note that BA44 and BA22 were substantially more active during speech videos than gurning videos. The error bars show normalized standard error, as suggested by Masson and Loftus, 2003.

Table 1.

List of voxel clusters that survived statistical thresholding corrected for multiple comparisons (p <0.05). The table lists the cluster size (voxels), as well as peak characteristics including Z-score, X, Y and Z coordinates (mm) in MNI space, Automatic Anatomical Labeling location (Tzourio-Mazoyer, 2002) and approximate Brodmann Area.

| Voxels | Z-score | X (mm) | Y (mm) | Z (mm) | Anatomy | BA |
|---|---|---|---|---|---|---|
| Speech > Gurning | | | | | | |
| 7801 | 4.06 | −50 | 14 | 18 | Left Frontal Inferior Operculum | 44 |
| 4633 | 5.04 | −24 | −94 | −12 | Left Lingual | 18 |
| 3185 | 5.3 | −58 | −32 | 0 | Left Middle Temporal | 21 |
| 2518 | 4.86 | 58 | −32 | 4 | Right Middle Temporal | 21 |
| 1545 | 3.69 | 56 | −8 | 54 | Right Middle Frontal | 6 |
| Gurning > Speech | | | | | | |
| 4041 | 4.93 | 44 | −60 | 12 | Right Middle Temporal | 37 |

The region of interest data were subjected to a two by three repeated measures ANOVA, with two levels of movement (speech vs. gurning) and three levels of an ROI factor (BA 22, BA 40, BA 44). This test revealed a main effect of region, F(2,30) = 20.6, p<0.000002, and of movement, F(1,15) = 11.9, p<0.003562, as well as an interaction between these two factors, F(2,30) = 37.1, p<0.000001 (Figure 1, right panel). Planned repeated-measures t-tests contrasting the speech versus gurning activations found significant differences in BA 22, t(15)=5.85, p<0.0001, as well as BA 44, t(15)=3.10, p<0.0073, but not BA 40. As predicted, speech stimuli elicited a larger response than gurning stimuli in both BA 22 and BA 44.
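For readers less familiar with the planned comparisons, a repeated-measures (paired) t-test reduces to a one-sample test on the per-participant differences. The sketch below shows that computation; the function name and the input values are purely illustrative and are not data from this study. With 16 participants the test has 15 degrees of freedom, matching the t(15) values reported above.

```python
import math
import statistics


def paired_t(speech, gurning):
    """Return (t, degrees of freedom) for a paired t-test on two
    equal-length lists of per-participant ROI values."""
    if len(speech) != len(gurning):
        raise ValueError("both conditions need one value per participant")
    diffs = [s - g for s, g in zip(speech, gurning)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    se_diff = statistics.stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return mean_diff / se_diff, n - 1


# Made-up values for four hypothetical participants.
t_value, dof = paired_t([2.0, 4.0, 6.0, 8.0], [1.0, 2.0, 3.0, 4.0])
```

The resulting t value is compared against the t distribution with the returned degrees of freedom to obtain the p-value.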

Discussion

Compared to gurning, passive viewing of speech resulted in greater brain activation across multiple cortical regions in the left and right hemispheres. Central to the current investigation, this included the posterior portion of Broca’s area, BA 44. Furthermore, the region of interest analysis showed that, in comparison to gurning, passive visual perception of speech motor movements was associated with greater activation in Broca’s area (BA 44) and Wernicke’s area (BA 22). Both conditions elicited similar activation in BA 40 of the left parietal lobe.

Results from the ANOVA are consistent with previous studies with regard to the role of Broca’s and Wernicke’s areas, but not the inferior parietal lobe, in visual perception of speech motor movements [8–11]. However, the crucial finding from this study is that visual speech perception related activity in Broca’s area is elicited even when the task does not mandate explicit processing of the speech stimuli. Thus, Broca’s area appears to be important for both speech production and perception.

Essentially, the overt task used in this study is an odd-ball or vigilance paradigm, and thus is likely to have recruited activity of the ventral prefrontal region. However, because the likelihood of a target stimulus was kept equivalent across experimental conditions (e.g. oddball targets occurred both during speech and gurning videos), these non-speech-specific attentional demands were essentially cancelled out in the subtraction analyses.

A caveat to interpreting findings from all fMRI studies is that results identify brain regions activated by the task, but not necessarily crucial to performance. To elucidate whether a given region is crucial for task performance, complementary evidence from patient studies can be considered. To date, only one study has examined visual speech perception in brain-damaged patients by comparing task performance across patients with aphasia only, patients with aphasia and apraxia of speech, and normal controls [16]. Here, results indicated similar levels of impairment for perception of both speech and non-speech motor movements. Moreover, impaired task performance was strongly predicted by the severity level of apraxia of speech, a condition usually associated with Broca’s area damage [17]. When weighing what that study implies about the role of Broca’s area in visual speech perception, two potential confounds should be considered. First, that study used an “active” task that required comparison between successive movements; thus the role of working memory and sub-vocal speech production cannot be ignored. Second, lesion data for the patients were not available, and therefore specific cortical involvement cannot be determined. A lesion-symptom mapping study in patients with left hemisphere damage could potentially help resolve this discrepancy. Alternatively, rapid repetitive transcranial magnetic stimulation (TMS) could be employed to produce temporary, regionally circumscribed cortical disruptions in healthy persons. For example, a recent study by Rorden and colleagues [18] used TMS to briefly disrupt Broca’s area while participants determined if a pair of successive videos showed the same or different oral movements. In keeping with the current results, TMS impaired performance for videos that showed oral speech movements but not gurning movements.

Results from the current study indicate that visual perception of speech motor movements may be important when evaluating the severity of non-fluent aphasia. The degree of acute impairment following stroke or other injury may have important prognostic value. Performance on such tasks may also provide useful quantitative measures with which to track changes associated with intervention. Interestingly, a recent study by Fridriksson et al. [19] found that naming abilities in patients with chronic aphasia improved significantly following a visual-auditory speech training paradigm, but not after training that only included auditory speech. This suggests that rehabilitation efforts for non-fluent aphasia should include improvement of visual perception of speech movements, perhaps through training on tasks or guided exposure.

Acknowledgments

This work was supported by grants to Julius Fridriksson from the NIDCD (DC005915 & DC008355) and a grant to Chris Rorden from the NINDS (NS054266).

References

- 1. Müller RA, Kleinhans N, Pierce K, Kemmotsu N, Courchesne E. Functional MRI of motor sequence acquisition: effects of learning stage and performance. Cognitive Brain Research. 2002;14:277–293. doi: 10.1016/s0926-6410(02)00131-3.

- 2. Schubotz RI, von Cramon DY. Interval and ordinal properties of sequences are associated with distinct premotor areas. Cerebral Cortex. 2001;11:210–222. doi: 10.1093/cercor/11.3.210.

- 3. Binkofski F, Amunts K, Stephan KM, Posse S, Schormann T, Freund HJ, Zilles K, Seitz RJ. Broca’s region subserves imagery of motion: a combined cytoarchitectonic and fMRI study. Human Brain Mapping. 2000;11:273–285. doi: 10.1002/1097-0193(200012)11:4<273::AID-HBM40>3.0.CO;2-0.

- 4. Paulesu E, Frith CD, Frackowiak RS. The neural correlates of the verbal component of working memory. Nature. 1993;362:342–345. doi: 10.1038/362342a0.

- 5. Meyer M, Jancke L. Involvement of left and right frontal operculum in speech and nonspeech perception and production. In: Grodzinsky Y, Amunts K, editors. Broca’s region. New York: Oxford University Press; 2006. pp. 218–241.

- 6. Liberman AM, Mattingly IG, Turvey MT. Language codes and memory codes. In: Melton AW, Martin E, editors. Coding Processes in Human Memory. Washington: V. H. Winston and Sons; 1972. pp. 307–334.

- 7. Skipper JI, Goldin-Meadow S, Nusbaum HC, Small SL. Speech-associated gestures, Broca’s area, and the human mirror system. Brain and Language. 2007;101:260–277. doi: 10.1016/j.bandl.2007.02.008.

- 8. Campbell R, MacSweeney M, Surguladze S, Calvert G, McGuire P, Suckling J, Brammer MJ, David AS. Cortical substrates for the perception of face actions: an fMRI study of the specificity of activation for seen speech and for meaningless lower-face acts (gurning). Cognitive Brain Research. 2001;12:233–243. doi: 10.1016/s0926-6410(01)00054-4.

- 9. Hall DA, Fussell C, Summerfield AQ. Reading fluent speech from talking faces: typical brain networks and individual differences. Journal of Cognitive Neuroscience. 2005;17:939–953. doi: 10.1162/0898929054021175.

- 10. Bonilha L, Moser D, Rorden C, Baylis GC, Fridriksson J. Speech apraxia without oral apraxia: can normal brain function explain the physiopathology? Neuroreport. 2006;17:1027–1031. doi: 10.1097/01.wnr.0000223388.28834.50.

- 11. Fridriksson J, Moss J, Davis B, Baylis GC, Bonilha L, Rorden C. Motor speech perception modulates the cortical language areas. NeuroImage. 2008;41:605–613. doi: 10.1016/j.neuroimage.2008.02.046.

- 12. Calvert GA, Campbell R, Brammer MJ. Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Current Biology. 2000;10:649–657. doi: 10.1016/s0960-9822(00)00513-3.

- 13. Fridriksson J, Moser D, Ryalls J, Bonilha L, Rorden C, Baylis G. Modulation of Frontal Lobe Speech Areas Associated with the Production and Perception of Speech Movements. Journal of Speech, Language, and Hearing Research. doi: 10.1044/1092-4388(2008/06-0197).

- 14. Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TEJ, Johansen-Berg H, et al. Advances in functional and structural MR image analysis and implementation as FSL. NeuroImage. 2004;23:208–219. doi: 10.1016/j.neuroimage.2004.07.051.

- 15. Worsley KJ, Evans AC, Marrett S, Neelin P. A three dimensional statistical analysis for CBF activation studies in human brain. Journal of Cerebral Blood Flow and Metabolism. 1992;12:900–918. doi: 10.1038/jcbfm.1992.127.

- 16. Schmid G, Ziegler W. Audio-visual matching of speech and nonspeech oral gestures in patients with aphasia and apraxia of speech. Neuropsychologia. 2006;44:546–555. doi: 10.1016/j.neuropsychologia.2005.07.002.

- 17. Hillis AE, Work M, Barker PB, Jacobs MA, Breese EL, Maurer K. Re-examining the brain regions crucial for orchestrating speech articulation. Brain. 2004;127(7):1479–1487. doi: 10.1093/brain/awh172.

- 18. Rorden C, Davis B, George MS, Borckardt J, Fridriksson J. Broca’s area is crucial for visual discrimination of speech but not non-speech oral movements. Brain Stimulation. doi: 10.1901/jaba.2008.1-383. (in press)

- 19. Fridriksson J, Baker MJ, Whiteside J, Eoute D, Moser D, Vesselinov R, Rorden C. Treating visual speech perception to improve speech production in non-fluent aphasia. Stroke. doi: 10.1161/STROKEAHA.108.532499. (in press)