Abstract

Previous studies have shown that the number of objects we can actively hold in visual working memory is smaller for more complex objects. However, complex objects are not just more complex but are often more similar to other complex objects used as test probes. To separate effects of complexity from effects of similarity, we measured visual memory following a 1s delay for complex and simple objects at several levels of memory-to-test similarity. When memory load was 1 object, memory accuracy for a face (a complex attribute) was similar to a line orientation (a simple attribute) when the face changed in steps of 10% along a morphing continuum and the line changed in steps of 5° in orientation. Performance declined with increasing memory load and increasing memory-to-test similarity. Remarkably, when memory load was 3 or 4 objects, face memory was better than orientation memory at similar changed steps. These results held when comparing memory for line orientations with that for inverted faces. We conclude that complex objects do not always exhaust visual memory more quickly than simple objects do.

Keywords: visual working memory, change detection, visual short-term memory

Introduction

Whether it is crossing a busy street, playing team sports, or driving, many daily activities require the buffering of visual information for a short period of time after its disappearance (Baddeley, 1986; Hollingworth, Richard, & Luck, 2008; Logie, 1995). The amount of information actively held in short-term visual memory is severely limited. This limitation is often demonstrated by showing observers an array of visual objects, taking them away, and probing memory with a changed test display after a short delay (Baddeley, 1986; Hollingworth et al., 2008; Logie, 1995; Pashler, 1988; Phillips, 1974). Using this procedure, most visual short-term memory studies have put the upper capacity limit at three or four objects (Alvarez & Cavanagh, 2004; Awh, Barton, & Vogel, 2007; Irwin & Andrews, 1996; Luck & Vogel, 1997).

Extensive research has been devoted to characterizing factors that influence the capacity of short-term visual memory. This research shows that much like verbal short-term memory, visual short-term memory is limited by the number of “chunks,” where each chunk is a single visual object (Luck & Vogel, 1997). However, researchers continue to debate whether the number of available chunks is fixed for all kinds of visual stimuli, or whether it varies depending on the complexity of visual features (Alvarez & Cavanagh, 2004; Awh et al., 2007; Eng, Chen, & Jiang, 2005; Makovski & Jiang, in press; Zhang & Luck, 2008). Much progress has been made to address this question, but no clear consensus has been reached.

In order to test the relationship between memory capacity and object complexity, we must first clarify how “complexity” is measured. Various definitions have been proposed, including “figure goodness” (indexed by a set of rotation and reflection transformations) (Garner, 1962; Garner & Sutliff, 1974), “perimetric complexity” (indexed by the ratio between the square of perimeter and “ink” area of a shape) (Attneave & Arnoult, 1956; Pelli, Burns, Farell, & Moore-Page, 2006), and “informational load” (indexed by visual search slope) (Alvarez & Cavanagh, 2004; Eng et al., 2005). The index used most often in studies of visual short-term memory is informational load, measured by the slope relating visual search speed to the number of search objects. Complex objects take longer to process, leading to a steeper search slope in comparison to simple objects. The index of informational load corresponds well with figure goodness (Makovski & Jiang, in press), which corresponds well with perimetric complexity (Attneave, 1957; Garner & Sutliff, 1974).
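As a small illustration of the perimetric complexity index described above, the sketch below computes the ratio of squared perimeter to ink area for two idealized shapes. The function name and the example shapes are illustrative assumptions, not part of the cited work, and some authors apply an additional normalizing constant that is omitted here.

```python
import math


def perimetric_complexity(perimeter: float, ink_area: float) -> float:
    """Ratio of squared perimeter to ink area (dimensionless)."""
    if ink_area <= 0:
        raise ValueError("ink_area must be positive")
    return perimeter ** 2 / ink_area


# A filled disk gives the smallest possible value, 4 * pi, whatever its radius.
radius = 3.0
disk = perimetric_complexity(2 * math.pi * radius, math.pi * radius ** 2)

# A filled square of any side length gives 16.
side = 2.0
square = perimetric_complexity(4 * side, side ** 2)

print(f"disk: {disk:.3f}, square: {square:.3f}")
```

Because the index is a ratio of squared length to area, it does not depend on the size of the shape, only on its form.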

Relying on informational load, Alvarez and Cavanagh (2004) proposed that the number of available “slots” in short-term visual memory is inversely related to the informational load carried by the objects. Complex objects such as random polygons, cubes with sides of variable shading, and unfamiliar faces carry heavier informational load and fill up visual working memory more quickly than simple objects such as color patches and line orientations (Alvarez & Cavanagh, 2004; Eng et al., 2005). These findings support the flexible-slot hypothesis, according to which visual working memory has fewer slots for objects with heavier informational load.

Advocates for a fixed-slot hypothesis pointed out that visual working memory may contain a fixed number of slots, but multiple slots are needed to hold a complex object (Luck & Zhang, 2004). Furthermore, a better ability to detect changes in simple objects does not necessarily mean that simpler objects are better retained in short-term visual memory. Instead, because simple objects are often highly distinguishable from other simple objects used as memory probes, the change from a simple object to another simple object produces a larger change signal than that from a complex object to another. Because larger changes are easier to detect (Zhou, Kahana, & Sekuler, 2004), the complexity effect observed previously may reflect a similarity effect. To find out whether complexity influences memory representation, experimenters must equate the size of change for simple and complex objects.

Attempts to dissociate similarity and complexity have been made previously. Awh et al. (2007) found that change detection was easy when a memorized cube changed into a Chinese character or vice versa, but the task became more difficult when the change was within category. These researchers suggested that memory for complex objects can be as accurate as memory for simple objects provided that a large change is used to probe memory. However, when the change is made between categories, detection performance may be supported not by memory of the exact shape (a complex attribute), but by memory of salient features that distinguish the two categories (a simple attribute). To ensure that memory for both simple and complex objects is being investigated, a different approach must be taken. In this study, we introduce such an approach, in which only within-category changes are made but the size of the change from memory to test is comparable between simple and complex objects. This design allows us to address complexity effects in a short-term memory task.

To examine whether visual memory in a short-term task is exhausted more quickly by more complex objects, we parametrically manipulated the similarity between a memory object and a test probe for two types of objects: line orientations and human faces. The parametric manipulation of memory-to-test similarity allowed us to choose psychologically equivalent changes in the face task and the line orientation task at a memory load of 1. For example, at load 1, performance may be comparable when faces change by M% along a face morph and lines change by N degrees in orientation. We then tested whether these changed steps still produced comparable levels of performance for faces and line orientations at higher memory loads.

Experiment 1: Faces and line orientations

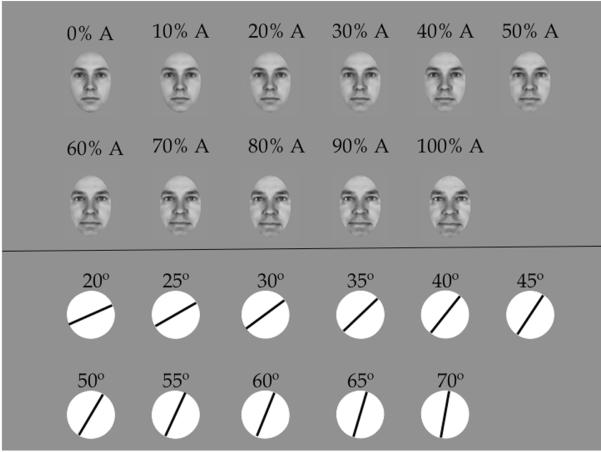

We tested two types of stimuli that differed significantly in subjective complexity: face identity and line orientation. Figure 1 shows the full set of stimuli used in this experiment.

Figure 1.

Eleven faces and line orientations used in Experiment 1.

At the 2007 Psychonomic Society meeting, an audience of about 100 psychologists was shown one line and one face (Figure 1) among other objects, presented one at a time. All but two psychologists rated the face as more complex than the line. The psychologists’ intuition was verified in laboratory testing, where 14 participants (19 to 30 years old) rated the subjective complexity of several objects, including a face and a line shown in Figure 1. On a 7-point scale with 1 being very simple and 7 being very complex, the average rating was 5.6 (S.E. = 0.4) for the face and 2.1 (S.E. = 0.2) for the line. Every rater gave a higher complexity rating to the face than to the line, p < .001. The subjective rating results fit well with the neurophysiological processing hierarchy, where line orientations are processed early by V1 neurons whereas face identities are coded later by cells in the inferior temporal cortex (Grill-Spector & Malach, 2004).
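The text does not say which test produced the reported p value for the ratings. As one possible check, and purely as an assumption on our part, an exact two-sided sign test on "14 of 14 raters rated the face higher" can be sketched as follows; the function name is hypothetical.

```python
from math import comb


def sign_test_two_sided(n_positive: int, n_total: int) -> float:
    """Exact two-sided sign test p value under H0: P(positive) = 0.5."""
    # Use the more extreme of the two counts so the test is symmetric.
    k = max(n_positive, n_total - n_positive)
    upper_tail = sum(comb(n_total, i) for i in range(k, n_total + 1)) / 2 ** n_total
    return min(1.0, 2 * upper_tail)


# All 14 raters gave the face a higher rating than the line (no ties).
p_value = sign_test_two_sided(14, 14)
print(f"p = {p_value:.6f}")
```

Under this assumed test the p value falls below .001, in line with the inequality reported above.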

A second reason to test face identity and line orientation is that unlike shaded cubes or Chinese characters that can only be sampled from a finite set, faces and line orientations can be changed parametrically. This property enables us to sample various levels of memory-to-test similarity, providing a psychometric function of memory performance at different levels of memory-to-test similarity (Zhou et al., 2004). We then measure performance at progressively higher memory load. If short-term visual memory is loaded up more quickly by more complex objects, then increasing memory load should impair performance more in the face task than in the line task.

Method

Participants

Five observers (27 to 32 years old), including two authors (YVJ and WMS) and three naïve subjects, were tested individually in a room with normal interior lighting. They sat approximately 57 cm from a 19” monitor. Experiments were programmed with Psychophysics toolbox (Brainard, 1997; Pelli, 1997) implemented in MATLAB.

Stimuli

Two types of stimuli were used in this experiment: upright faces and line orientations (Figure 1).

(1) faces

Eleven faces were selected from the Max Planck face database (Troje & Bulthoff, 1996), (http://faces.kyb.tuebingen.mpg.de/). All faces were converted to grayscale and preprocessed to have equal overall luminance and contrast. Each face subtended 4.88° × 6.05°. At one extreme was a face - face A - that contained 100% of an individual’s face. At the other extreme was an average face with 0% of face A (Blanz & Vetter, 2003). The 11 faces were morphs of face A onto the average face in steps of 10% morph. Thus, the faces contained 100%, 90%, 80%, ..., to 0% of face A (Figure 1 left). The middle 9 faces were used as the memory stimuli. The changed test face could differ from the memory face by 10%, 20%, 30%, 40%, or 50% toward either face A or the average face.

(2) lines

We placed a black line against a white disk to form the line stimulus (line length = 4.88°, line width = 0.16°). The line was placed against a disk to reduce interaction among multiple lines that tend to form emergent configural contours (Alvarez & Cavanagh, 2008; Delvenne & Bruyer, 2006; Jiang, Chun, & Olson, 2004). The 11 lines ranged from 20° to 70° in increments of 5° (Figure 1 right). The middle 9 orientations were used as the memory stimuli. The changed test line could differ from the memory line by 5°, 10°, 15°, 20°, or 25° clockwise or counterclockwise.

Task

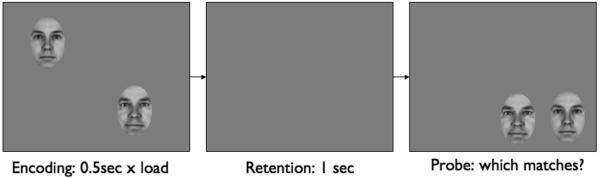

Figure 2 illustrates the basic trial sequence. On each trial, one to four objects from a given category (lines or faces) were presented on the memory display against a gray background. Objects on the memory display were sampled without replacement from the middle 9 examples equally often. Memory objects were presented equidistantly from one another with each object’s center being 4.68° away from fixation. To ensure adequate encoding, the duration of the memory display was proportional to memory load: 500 ms for load 1, 1000 ms for load 2, 1500 ms for load 3, and 2000 ms for load 4. The presentation duration was within the typical range of encoding duration used in short-term visual memory studies (Chen, Eng, & Jiang, 2006; Eng et al., 2005; Makovski, Shim, & Jiang, 2006) and was the same for line orientations and faces. Participants were allowed to move their eyes. Following the presentation of the memory display, a blank retention interval (with a gray screen) lasted 1000 ms, after which two horizontally arrayed test probes were presented. The test probes were centered on one of the memory objects. Subjects were told to compare the memory object at the probed location with the two test probes. One test probe was identical to the memory object at that location and the other was changed by one of five steps. Each step in the line orientation task was 5° and each step in the face task was 10% morph. The direction of the change was balanced overall, but if the memory object was toward an extreme, the changed item was typically less extreme. For example, if the memory object was a 90% face A and the change was one step, then the changed probe could be either the 80% face A or the 100% face A, equally often. But if the change was two steps, then the changed item could only be the 70% face [footnote 1]. The same rule of stimulus sampling applied to line orientations.
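The sampling rule for the changed probe can be sketched as follows. This is a minimal illustration rather than the experiment's actual MATLAB code: the index convention (index i standing for the face containing i × 10% of face A, or for the i-th line orientation) and all function names are assumptions made for the example.

```python
import random

N_STIMULI = 11                   # 11 faces (or lines), indexed 0..10 (assumed convention)
MEMORY_INDICES = range(1, 10)    # the middle 9 items serve as memory stimuli
CHANGE_STEPS = range(1, 6)       # the changed probe differs by 1 to 5 steps


def changed_probe_options(memory_index: int, step: int) -> list[int]:
    """Indices the changed probe may take, `step` positions from the memory item."""
    candidates = (memory_index - step, memory_index + step)
    # Keep only candidates that exist in the stimulus set.
    return [i for i in candidates if 0 <= i < N_STIMULI]


def sample_changed_probe(memory_index: int, step: int, rng=random) -> int:
    """Pick one permissible changed probe with equal probability."""
    return rng.choice(changed_probe_options(memory_index, step))


# The 90% face (index 9) changed by one step: 80% or 100%.
print(changed_probe_options(9, 1))
# The same face changed by two steps: only the 70% face is available.
print(changed_probe_options(9, 2))
```

With 11 stimuli and a maximum change of 5 steps, every memory item has at least one permissible changed probe at every step size.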

Figure 2.

Trial sequence used in Experiment 1. Observers remembered 1-4 objects from a single category (lines or faces) and reported whether the left or right test stimulus matched the memory object previously centered at the probe location. One of the test stimuli was the same as the memory object at that location; the other was changed in one of five steps.

Participants pressed the left or right key to indicate whether the left or right test probe matched their memory at the probed location. A beep was presented after each incorrect response. To minimize verbal recoding, the task was carried out with concurrent articulatory suppression (Baddeley, 1986). Participants repeated a three-letter word, pre-specified before each block, as rapidly as they could, throughout each trial.

Design

We employed a full factorial design of two stimulus types (faces or line orientations), four memory loads (1, 2, 3, or 4), and five sizes of change from memory to testing (1 to 5 steps). Trials from all conditions were randomly intermixed in a given testing session of 360 trials (divided into 8 blocks). One author and one naïve subject completed 6 sessions; the other participants completed 4 sessions.
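The factorial design above can be sketched as a randomized trial list. The text gives the factor levels, the session length, and the number of blocks; the equal number of repetitions per condition and the equal block lengths in this sketch are assumptions inferred from those numbers, and the names are illustrative.

```python
import itertools
import random

STIMULUS_TYPES = ("face", "line")
MEMORY_LOADS = (1, 2, 3, 4)
CHANGE_STEPS = (1, 2, 3, 4, 5)
TRIALS_PER_SESSION = 360
N_BLOCKS = 8


def build_session(seed=None):
    """Return one session as a list of blocks of (type, load, step) trials."""
    conditions = list(itertools.product(STIMULUS_TYPES, MEMORY_LOADS, CHANGE_STEPS))
    # Assumption: every condition occurs equally often within a session.
    repetitions, remainder = divmod(TRIALS_PER_SESSION, len(conditions))
    if remainder:
        raise ValueError("session length is not a multiple of the condition count")
    trials = conditions * repetitions
    random.Random(seed).shuffle(trials)          # intermix all conditions
    block_length = TRIALS_PER_SESSION // N_BLOCKS
    return [trials[i:i + block_length] for i in range(0, len(trials), block_length)]


blocks = build_session(seed=0)
print(len(blocks), "blocks of", len(blocks[0]), "trials")
```

Under these assumptions the 40 conditions each occur 9 times per session, and each block holds 45 trials.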

Results

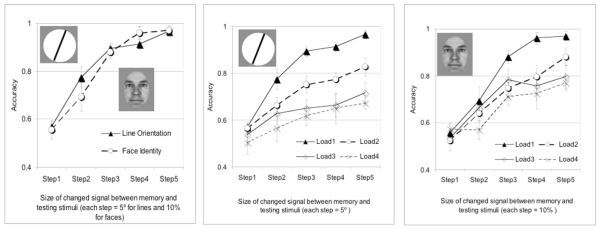

Figure 3 (left) shows memory accuracy for 1 face or 1 line at five changed steps. Accuracy was lower when the similarity between the memory item and the changed probe was greater, F(4, 16) = 41.20, p < .001. This result confirmed previous findings (Awh et al., 2007; Zhou et al., 2004). Face task accuracy was similar to line task accuracy, F < 1. The interaction between stimulus type (faces vs. line orientations) and memory-to-test similarity (steps 1 to 5) was not significant with Greenhouse-Geisser correction for sphericity, F(1.7, 6.8) = 3.59, p > .09. The borderline effect was driven by numerically better performance in the line task than in the face task at step 2, but similar performance in the two tasks at other steps. Individual t-tests failed to show significant differences between line and face memory at any of the steps tested, ps > .15. Thus, the five changed steps (in units of 5° for line orientation and 10% for face morph) produced similar levels of accuracy in the face memory and line memory tasks, except that the line performance was numerically better than face performance at step 2.

Figure 3.

Results from Experiment 1. Left: accuracy for 1 line or 1 face at five changed steps. Middle: accuracy for 1-4 lines. Right: accuracy for 1-4 faces. Error bars show ±1 standard error of the mean across subjects.

Figure 3 (middle and right) shows performance at various memory loads for lines and faces. For both types of stimuli, the psychometric curve shifted downward and rightward as memory load increased, F(3, 12) = 7.52, p < .004 for faces and F(3, 12) = 50.72, p < .001 for lines. In order for performance to reach the same level, a greater change was needed as memory load increased. The shift occurred numerically for all memory loads, resulting in a significant linear trend of load, F(1, 4) = 10.80, p < .03 for faces and F(1, 4) = 211.15, p < .001 for lines. There was a significant reduction in performance from load 1 to load 2 for lines, F(1, 4) = 253.99, p < .001, and a marginal reduction from load 1 to load 2 for faces, F(1, 4) = 5.71, p < .08.

Was the memory load effect greater for more complex stimuli? The answer derived from our data was “no.” Increasing memory load led to a greater decline in the line orientation performance than the face performance. Face performance was superior to line performance at load 3, F(1, 4) = 41.12, p < .003, and load 4, F(1, 4) = 7.57, p < .05. Driven by a smaller load effect in the face task, the interaction between stimulus type (faces or line orientations) and memory load was significant, F(3, 12) = 4.81, p < .02. The advantage of faces over lines was eliminated at the smallest change step, possibly due to a floor effect, F(4, 16) = 7.66, p < .001, for the interaction between stimulus type and size of change.

Discussion

Experiment 1 reveals two noteworthy findings. First, in both the line and face memory tasks, overall accuracy declined as memory load increased. At higher memory load, the test probe must be more different from the memory object for performance to reach a certain threshold, say, 75% (Palmer, 1990). The reduction in memory accuracy is significant even when load increased from 1 to 2 objects, both of which are within the typically assumed memory capacity (Cowan, 2001). These results indicate that memory precision can still improve within the conventional memory capacity of about 3 or 4. This improvement may be attributed to the redundant representations of a single memory object in multiple available slots (Zhang & Luck, 2008). Second, the effect of memory load on performance was not greater in the face task than the line task; the opposite was found. There was no evidence that more complex objects load up visual memory more quickly in a short-term visual memory task. The idea that short-term visual memory capacity is always inversely related to object complexity is unsubstantiated.
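To make the threshold idea concrete, the sketch below estimates the change size needed to reach 75% accuracy by linear interpolation between tested steps. The accuracy values are hypothetical and are not data from Experiment 1, and linear interpolation is an assumed simplification rather than the analysis used in this study.

```python
def threshold_step(steps, accuracy, criterion=0.75):
    """Interpolate the change size at which accuracy first reaches `criterion`.

    Returns None if accuracy never reaches the criterion in the tested range.
    """
    points = list(zip(steps, accuracy))
    if points[0][1] >= criterion:
        return float(points[0][0])
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y0 < criterion <= y1:
            return x0 + (criterion - y0) * (x1 - x0) / (y1 - y0)
    return None


steps = [1, 2, 3, 4, 5]
# Hypothetical proportions correct, for illustration only.
low_load_accuracy = [0.70, 0.80, 0.90, 0.95, 0.97]
high_load_accuracy = [0.55, 0.65, 0.70, 0.80, 0.85]

low_threshold = threshold_step(steps, low_load_accuracy)
high_threshold = threshold_step(steps, high_load_accuracy)
print(low_threshold, high_threshold)
```

A rightward shift of the psychometric curve at higher load shows up here as a larger threshold: in the hypothetical example, more steps of change are needed to reach 75% at the higher load.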

Experiment 2: Line orientations and inverted faces

This experiment aims to replicate Experiment 1 and to address a criticism often raised about it. Many researchers have argued that the perception of upright faces relies on a special “face module” (Duchaine & Nakayama, 2005; Moscovitch, Winocur, & Behrmann, 1997). Perhaps visual memory for faces is unique because it is enhanced by the specialized face system (Curby & Gauthier, 2007). To evaluate this possibility, we tested participants in a second experiment where line orientations were compared with inverted faces. Studies on both normal adults (Young, Hellawell, & Hay, 1987) and brain damaged patients with object agnosia or prosopagnosia (Farah, Wilson, Drain, & Tanaka, 1995; Moscovitch et al., 1997) have shown that inverted faces are handled not by the specialized face system, but by the general object processing system used for nonface objects. If the greater memory load effect on line memory than face memory is restricted to memory for upright faces, then it should not generalize to memory for inverted faces.

Method

We tested participants on visual working memory tasks using the same line and face stimuli as Experiment 1, except that the faces were turned upside-down. Four of the five participants from Experiment 1 completed this experiment. Apart from the inversion of faces, all aspects of the experiment were the same as those of Experiment 1.

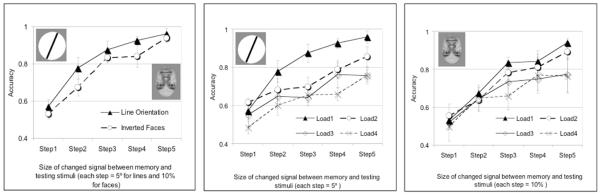

Results

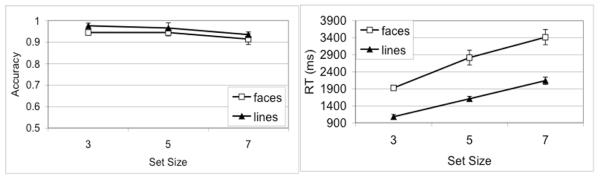

Similar to Experiment 1, increasing memory load was more detrimental to line memory than face memory, even though the faces were inverted (Figure 4). This difference was reflected by a significant interaction between stimulus type (lines or inverted faces) and memory load, F(3, 9) = 4.76, p < .03. At a memory load of 1, line performance was significantly better than inverted-face performance, F(1, 3) = 11.73, p < .04. This difference was eliminated at higher memory loads, Fs < 1. Indeed, at load 4, performance was numerically better in the inverted-face task than the line task.

Figure 4.

Results from Experiment 2. Left: memory accuracy for 1 line was better than for 1 inverted face. Middle: memory accuracy for 1-4 lines. Right: memory accuracy for 1-4 inverted faces. Error bars show ±1 standard error of the mean across subjects.

Besides their different sensitivity to increased memory load, face and line memory share some similar features. Memory accuracy declined when load increased, F(3, 9) = 21.17, p < .001, and when the memory-to-test similarity increased, F(4, 12) = 33.39, p < .001. The sensitivity to increased memory-to-test similarity was comparable for face and line tasks, F < 1 in the interaction between memory-to-test similarity and stimulus type, and F < 1 in the three-way interaction between load, stimulus type, and memory-to-test similarity.

Discussion

Experiment 2 replicated results from Experiment 1. Again, memory accuracy declined with increasing load for both line orientations and inverted faces. This decline was less dramatic for inverted faces than line orientations, even though the former was more complex.

One notable difference between Experiments 1 and 2 was that although performance was comparable for upright faces and line orientations at load 1, it was better for line orientations than inverted faces. This difference reveals a face inversion effect, where perception and memory of upright faces are superior to those of inverted faces (Farah, Tanaka, & Drain, 1995; Yin, 1969).

Experiment 3: Informational load

A skeptical reader might dismiss the preceding results by arguing that perhaps faces are not more complex than line orientations. After all, subjective rating is just that: subjective. Here we provide an objective index of complexity by measuring the informational load of faces and line orientations. Informational load was first introduced by Alvarez and Cavanagh (2004), who measured object complexity by obtaining the slope of the linear function relating search RT to display set size in a visual search task (Wolfe, 1998). This measure is appealing as an alternative to subjective rating. However, search slope as a measure of informational load is also controversial because it confounds an object’s intrinsic complexity with its relative similarity to other objects (Duncan & Humphreys, 1989). Nonetheless, informational load has been an influential concept in studies of visual working memory, so we measure it in Experiment 3 to provide converging evidence for the idea that faces are more complex than lines.
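The search slope can be sketched as an ordinary least-squares fit of mean RT against set size. The RT values below are hypothetical and chosen only to make the arithmetic easy to follow; the function name is likewise an assumption for illustration.

```python
def search_slope(set_sizes, mean_rts_ms):
    """Least-squares slope (ms per item) of mean RT regressed on set size."""
    n = len(set_sizes)
    mean_x = sum(set_sizes) / n
    mean_y = sum(mean_rts_ms) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(set_sizes, mean_rts_ms))
    sxx = sum((x - mean_x) ** 2 for x in set_sizes)
    return sxy / sxx


set_sizes = [3, 6, 9]
# Hypothetical mean RTs in ms, NOT values measured in this study.
hypothetical_rts = [900.0, 1500.0, 2100.0]

slope = search_slope(set_sizes, hypothetical_rts)
print(f"{slope:.1f} ms/item")
```

A steeper slope means each additional item on the display adds more search time, which is what the informational load account takes as a sign of greater complexity.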

We tested two versions of the visual search experiment that differed in the homogeneity of the distracter stimuli, which is known to affect search efficiency (Moraglia, 1989; Wolfe, Stewart, Friedman-Hill, & O’Connell, 1992). In Experiment 3a, participants searched for a target among homogeneous distracters that differed from the target by 3 or 5 steps. In Experiment 3b, participants searched for a target among heterogeneous distracters that differed from the target by ±3 and ±5 steps. We intended to find converging evidence from Experiments 3a and 3b for the idea that faces carry heavier informational load than lines.

Experiment 3a

Methods

Participants

The same individuals who took part in Experiment 1 also completed this experiment.

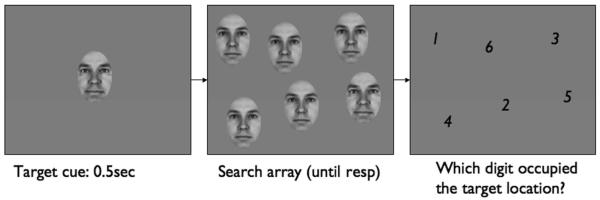

Design and procedure

Each participant completed a visual search task, where they searched for an odd item among 2, 5, or 8 identical distracters (Figure 5). The target was present on every trial. The distracters were selected from the same category (faces or lines) as the target but differed from the target by 3 or 5 steps (see Experiment 1’s use of “steps”). All items were presented in randomly selected locations sampled from a 4×4 imaginary grid (16.88°×16.88°). Items were slightly jittered with respect to the center of a cell to reduce co-linearity. Following Alvarez and Cavanagh (2004), the search display was preceded by the presentation of a target stimulus for 500 ms. Participants searched for the cued target, which was also the odd (unique) item on the search display, and pressed the spacebar upon detection. RT was defined as the interval between search display onset and the spacebar response. The display was then replaced by an array of digits ranging from 1 to 9. Participants typed in the digit occupying the target’s location; this response yielded an accuracy measure. Each participant completed 432 trials divided randomly and evenly into two stimulus types (faces or lines), three set sizes (3, 6, or 9 total items), and two target-to-distracter similarity levels (3 or 5 steps of difference between the target and distracters).

Figure 5.

Trial sequence used in Experiment 3a. Observers searched for the cued target. Upon target detection they pressed the spacebar and typed in the digit behind the target item. Search set size was 3, 6, or 9.

Results

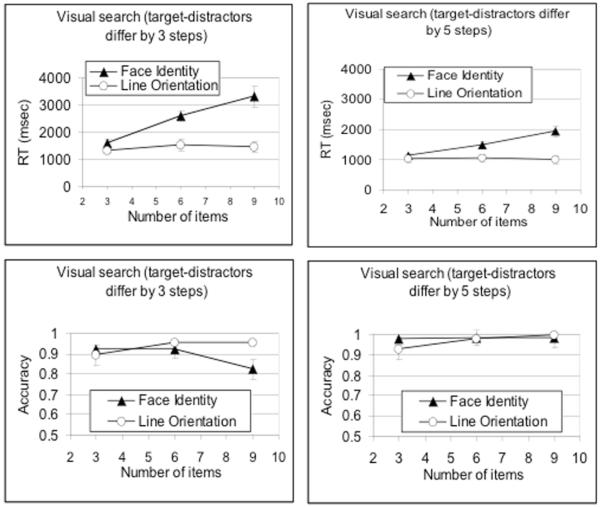

Figure 6 (top) shows visual search RT on correct trials. The search slope on face trials was 285 ms/item when the target and distracter faces differed by 30% morph, and 134 ms/item when they differed by 50% morph. The search slope on line trials was 25 ms/item when the target and distracter lines differed by 15°, and -5 ms/item when they differed by 25°. The interaction between set size and stimulus type was significant, F(2, 8) = 25.94, p < .001, confirming that search slopes were steeper for faces than lines. Error rates mirrored RT data (Figure 6 bottom). The search results suggest that faces carry heavier informational load than lines.

Figure 6.

Visual search RT (top) and accuracy (bottom) results from Experiment 3a. The distracters were homogeneous. Error bars show ±1 standard error of the mean across subjects.

Experiment 3b

Method

Participants

Four participants completed Experiment 3b. They were previously tested in other experiments reported here.

Design

This experiment was similar to Experiment 3a except for the following changes. The critical difference is that distracters on the search display were heterogeneous. Specifically, on each trial participants were presented with a cue object at fixation and 3, 5, or 7 search items placed equidistantly on an imaginary circle (radius = 7.5°). One of the search objects was the same as the cue object at fixation and the others were distracters. The distracters were selected randomly with replacement from items that were ±3 and ±5 steps away from the target. The target was defined by the central cue (rather than by a precued object) because it was no longer an odd item. The presence of the central cue also ensured that search errors did not result from a failure to remember the identity of the cue. Participants pressed the spacebar upon target detection and typed in the digit occupying the target’s location. Each participant completed 432 trials, divided randomly and evenly into two stimulus types (lines or faces), three set sizes (3, 5, or 7), and nine possible targets.

Results

Figure 7 shows results from Experiment 3b. Search accuracy was above 90% and was not significantly affected by task, F(1, 3) = 2.92, p > .15, set size, F(2, 6) = 2.60, p > .15, or their interaction, F < 1. Mean RT on correct trials was significantly longer in the face task in comparison to the line task, F(1, 3) = 55.68, p < .005. In both tasks RT was longer when there were more items on the display, F(2, 6) = 54.80, p < .001. However, a significant interaction between task and set size showed that search slope was steeper in the face task, F(2, 6) = 6.88, p < .028. The slope was 265 ms/item in the line task and 374 ms/item in the face task.

Figure 7.

Visual search accuracy (left) and RT (right) from Experiment 3b. The distracters were heterogeneous. Error bars show ±1 standard error of the mean across subjects.

Discussion

As a control experiment, Experiment 3 demonstrated that faces carry heavier informational load than line orientations. Search slopes were steeper in the face search task than the line orientation search task, both when the distracters were homogeneous and when they were heterogeneous. By this measure, lines carry lower informational load than faces and can be considered a simpler type of stimulus. We recognize, however, that search slope is a controversial concept as a measure of object complexity (Awh et al., 2007; Duncan & Humphreys, 1989). In fact, the measured informational load for a given type of stimulus changed significantly depending on the nature of distracters. Search slopes were steeper when the distracters were heterogeneous rather than homogeneous (Moraglia, 1989; Wolfe et al., 1992). Thus, search slope (or “informational load”) cannot be taken as a straightforward index of an object’s “intrinsic” complexity. Nonetheless, our results indicate that search efficiency can be dissociated from working memory load. A stimulus that produces more efficient search (e.g., the line orientations) may fill up working memory more quickly than another stimulus (e.g., the faces).

General Discussion

This study presents one of the first comprehensive datasets on visual memory for two types of stimuli differing significantly in complexity. By systematically varying the similarity between a memory object and a test probe, we measured performance in a short-term memory task for line orientations and faces at different memory loads. We observed the following results.

First, increasing memory load impaired memory for line orientations more than memory for faces. At a low memory load of 1, face memory was equal to or worse than line orientation memory when faces changed in steps of 10% in face morph and lines changed in steps of 5° in orientation. At a high memory load of 3 or 4, face memory was better than line orientation memory at these steps. These results were unexpected because faces are intuitively more complex, an intuition supported by the visual search results. Our study provides evidence against the idea that complex objects always fill up active visual memory more quickly than simple objects do. Similar results were obtained when comparing memory for inverted faces and line orientations.

Why did line orientations load up visual memory more quickly than faces in our study, while the opposite may be expected from previous findings? Previous visual short-term memory studies that employed large memory-to-probe changes have shown that memory capacity for faces is between 1 and 2 (Curby & Gauthier, 2007; Eng et al., 2005), and for line orientations is just under 4 (Jiang et al., 2004; Luck & Vogel, 1997). There are several reasons why our results are different. First, previous studies have not controlled for the memory-to-probe similarity between lines and faces. These studies typically used lines that differed by at least 45° from memory to testing, a difference that may be psychologically greater than that produced by changing one face to another. Second, by using large changes, previous studies may have introduced global configural changes that specifically aided memory for line orientations (Alvarez & Cavanagh, 2008; Delvenne & Bruyer, 2006; Jiang et al., 2004; Luck & Vogel, 1997). The kind of configural memory could be of limited use when the orientation change is small, as in our study. When the similarity between memory and test stimuli was controlled for, face memory was more robust at high load perhaps because multidimensional stimuli (e.g., faces) do not interact with each other as much as single dimensional stimuli (e.g., lines) do (Rouder, 2001).

The second finding from our results is that in both line and face memory tasks, performance was worse when the test probe was more similar to the memory object. This finding shows that visual memory has limited resolution even when the memory delay is short (Palmer, 1990; Zhang & Luck, 2008; Zhou et al., 2004). In addition, the greater the memory load, the poorer is the memory precision averaged across all memory objects. That is, a larger change must be made from the memory object to the test probe for change detection to reach the same threshold performance. The reduction in averaged memory precision was seen even when the load increased from 1 to 2, both of which were within the conventionally accepted memory capacity.

What accounts for the reduction in memory precision averaged across all items? At least two hypotheses have been proposed. According to the “flexible resolution” view (Wilken & Ma, 2004), the greater the memory load, the greater the number of items retained in memory, but each item is retained with poorer resolution. According to the “fixed-slot, fixed-resolution” view, a fixed number of objects (e.g., 3 out of N items) are held in visual working memory, each with a fixed resolution. When the memory load exceeds the number of available slots, some items are not retained in memory, so the resolution for those items is effectively zero. The measured memory precision is then the average across items retained with (fixed) high resolution and items not retained at all (Zhang & Luck, 2008). Recent results combining psychophysical data with modeling have supported the latter view (Rouder et al., 2008; Zhang & Luck, 2008). By measuring the kinds of errors people commit in a color working memory task, Zhang and Luck (2008) showed that the errors were well modeled as a mixture of high-precision memory for 3 colors and random guesses for the others. When the color memory load was 1, the precision of the memory response was better than that at load 3. These data were also modeled by assuming that the 3 available slots were each used to hold the single color, producing redundant gain (Rouder et al., 2008; Zhang & Luck, 2008).
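The contrast between the two accounts can be written compactly. The block below is only a sketch in the spirit of the mixture formulation described above, not a reproduction of any fitted model. The symbols $N$ (memory load), $K$ (number of slots), $g$ (guess rate), $f$ (the memory error density with spread $\sigma$), $u$ (a uniform guessing density), and $\sigma_0$ (the single-slot spread) are notation assumed here for illustration.

```latex
% Error density for a probed item at memory load N:
% a mixture of memory-based responses and random guesses
p(e \mid N) = \bigl(1 - g(N)\bigr)\, f\bigl(e;\ \sigma(N)\bigr) + g(N)\, u(e)

% Fixed-slot, fixed-resolution view: guessing grows once load exceeds K,
% while the spread of stored items stays constant
g(N) = \max\bigl(0,\ 1 - K/N\bigr), \qquad \sigma(N) = \sigma_0 \quad \text{for } N \ge K

% Redundant gain: when N < K, spare slots may hold duplicate copies,
% so the effective spread can fall below the single-slot value
\sigma(N) < \sigma_0 \quad \text{for } N < K

% Flexible resolution view: every item is stored, but spread grows with load
g(N) = 0, \qquad \sigma(N) \ \text{increasing in } N
```

Under these assumptions, the two views differ in which quantity changes with load beyond $K$: the guess rate $g$ (fixed-slot) or the spread $\sigma$ (flexible resolution).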

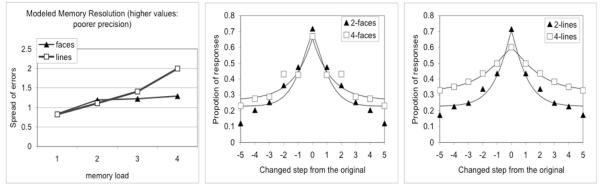

In spite of a few caveats (see Appendix), our experimental paradigm allowed crude modeling of the data. Specifically, we modeled the error distribution (the proportion of trials on which subjects reported a changed test stimulus as the same as the memory stimulus) at different memory-to-test similarity levels using a generalized error distribution [footnote 2]. The spread of this distribution became broader as memory load increased from 1 to 4 in the line orientation task, with no clear evidence for an asymptote (see Appendix). Although this result may be taken as evidence for the “flexible resolution” view (which predicts a widening of the error distribution at high memory load), it is also consistent with the “fixed-resolution” view as long as one assumes that the lines received redundant representations in 4 or more available slots (Zhang & Luck, 2008). In the face memory task, the error distribution became broader from 1 to 2 faces and did not change from 2 to 4 faces. These results may also be taken as evidence for the “fixed-resolution” hypothesis as long as one assumes that a single face received redundant representations in 2 (but not more) slots. Future studies that vary memory load over a wider range are needed to evaluate these assumptions (Zhang & Luck, 2008).

Although we have only tested visual memory for faces and line orientations, the approach taken here can be used to test other types of stimuli, such as color, luminance, and complex shapes that continuously deform. One could, for instance, match memory performance on colors and complex shapes at load 1 [footnote 3], and probe performance for colors and shapes at greater memory loads. This work is necessary in order to test the generality of the conclusions reached here. A promising approach is to create a new set of stimuli where the simple and complex objects are products of the same morphing algorithm. This approach is needed because faces and line orientations differ in so many dimensions and a direct comparison can only be made crudely.

How much of what is measured in a short-term delayed memory task reflects processes specific to visual short-term memory, as opposed to visual long-term memory? [footnote 4] Unfortunately, visual short-term memory is not the only system that can contribute to performance in a short-term delayed task. Visual long-term memory will always contribute to an ongoing task (Makovski & Jiang, 2008), even when the task involves perception rather than memory (Chun & Jiang, 1998) and when the memory delay is short (Buttle & Raymond, 2003). Contamination of a short-term task by visual long-term memory is an issue in all existing short-term memory studies. In our study, we tried to minimize the differential impact of long-term memory on faces and line orientations by using unfamiliar faces (Buttle & Raymond, 2003) and inverted faces (Jackson & Raymond, 2008). Given that familiarity effects in face working memory tasks were restricted to highly familiar faces (e.g., faces of celebrities or friends) and were eliminated by face inversion (Eng, Chen, & Jiang, 2006; Jackson & Raymond, 2008), we are confident that our results are not products of long-term familiarity effects. Nonetheless, future studies should further address how familiarity (and long-term memory) modulates working memory, and whether any modulation is due to an interaction between familiarity and subjective complexity.

To summarize, our study on visual memory for faces and line orientations in a short-term task presents a solid approach to studying the precision of memory representation. We found no evidence that more complex objects, such as faces, exhaust visual working memory more quickly than do simpler objects, such as line orientations. Our study underscores the importance of varying memory-to-probe similarity when assessing visual working memory.

Acknowledgments

The authors contributed equally to this study, which was funded in part by NSF 0733764, NIH 071788, and ARO 46926-LS. We thank Arash Afraz for help with the face stimuli, MiYoung Kwon for help with modeling, and Steve Luck, George Alvarez, Jeremy Wolfe, Kalanit Grill-Spector, James Pomerantz, Khena Swallow, Leah Watson, and Galit Yovel for comments and suggestions.

Appendix

In a recent study, Zhang and Luck (2008) used computational modeling to distinguish between the “flexible resolution” account and the “fixed-slot, fixed-resolution” account. The logic rests on the error distribution: the proportion of trials on which a test stimulus is reported as being the same as the memory stimulus increases as the memory-to-test similarity increases. If increasing memory load makes memory less precise, then the spread of the error distribution should become broader. Conversely, if some items are held with high precision while others are not stored at all, then the error distribution should not become broader, but should be shifted upward by a constant amount corresponding to subjects’ random guesses for items left out of memory. In other words, the error distribution should maintain its degree of spread, but its tail should asymptote at a value greater than zero.

Using this logic, we fitted a generalized error distribution (also called the exponential power distribution) to our data averaged across all subjects, separately for the face and line tasks (Experiment 1) and separately for each memory load. The probability density function used to model our data is the one given on Wikipedia (http://en.wikipedia.org/wiki/Exponential_power_distribution), except that we added a third parameter corresponding to the upward shift. The R-square of the fit was 0.83, 0.88, 0.97, and 0.89 for loads 1, 2, 3, and 4 of the face task, and 0.90, 0.96, 0.98, and 0.99 for loads 1, 2, 3, and 4 of the line task.
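The fitting step described above can be illustrated with a minimal sketch. It is not the code used in the study: it assumes that the location parameter is fixed at 0, that the upward shift enters as an additive constant, and that a coarse grid search stands in for whatever optimizer was actually used. The function names (`shifted_ged`, `fit_shifted_ged`, `r_squared`), the parameter grids, and the synthetic data are all hypothetical.

```python
import math


def shifted_ged(x, alpha, beta, shift):
    """Exponential power density centered at 0, plus a constant upward shift.

    alpha is the spread (scale), beta the shape, shift the assumed guessing floor.
    """
    norm = beta / (2.0 * alpha * math.gamma(1.0 / beta))
    return norm * math.exp(-((abs(x) / alpha) ** beta)) + shift


def r_squared(observed, predicted):
    """Coefficient of determination between observed and predicted values."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def fit_shifted_ged(xs, ys, alphas, betas, shifts):
    """Grid search minimizing squared error; returns (alpha, beta, shift, r2)."""
    best = None
    for a in alphas:
        for b in betas:
            for c in shifts:
                pred = [shifted_ged(x, a, b, c) for x in xs]
                sse = sum((y - p) ** 2 for y, p in zip(ys, pred))
                if best is None or sse < best[0]:
                    best = (sse, a, b, c)
    _, a, b, c = best
    pred = [shifted_ged(x, a, b, c) for x in xs]
    return a, b, c, r_squared(ys, pred)


# Synthetic, noise-free data generated from known parameters (not the study's data).
steps = [-5, -4, -3, -2, -1, 0, 1, 2, 3, 4, 5]
true_alpha, true_beta, true_shift = 2.0, 1.5, 0.05
data = [shifted_ged(x, true_alpha, true_beta, true_shift) for x in steps]

alpha_grid = [0.5 + 0.25 * i for i in range(15)]  # 0.5 to 4.0
beta_grid = [0.5 + 0.25 * i for i in range(11)]   # 0.5 to 3.0
shift_grid = [0.01 * i for i in range(11)]        # 0.00 to 0.10

alpha_hat, beta_hat, shift_hat, r2 = fit_shifted_ged(
    steps, data, alpha_grid, beta_grid, shift_grid
)
print(alpha_hat, beta_hat, shift_hat, round(r2, 3))
```

In this parameterization, a larger fitted `alpha` at higher load would correspond to a broader error distribution, whereas a larger fitted `shift` with unchanged `alpha` would correspond to more guessing at constant precision.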

Figure 8-left plots the modeled spread of the error distribution at different memory loads, where higher values on the Y-axis indicate poorer precision (errors spread more widely). Figure 8-middle and Figure 8-right plot the model fits and the underlying data at memory loads 2 and 4 for faces and lines, respectively. The model was fitted to the data averaged across all subjects. The modeling results are broadly, though perhaps not uniquely, consistent with the “fixed-slot, fixed-resolution” view. Specifically, assuming that the number of fixed slots available for lines is greater than 4, the increased precision at lower loads may be explained by the redundant gain due to duplicated representations in 4 or more slots. Further, assuming that the number of fixed slots available for faces is 2 or fewer, the constant precision across loads may be explained by the fixed-resolution model.

Figure 8.

Left: modeled memory precision (the spread parameter) for lines and faces at different memory loads. Higher numbers on the Y-axis mean worse precision. Errors spread more widely when line load increased from 1 to 4, but the spread was relatively constant for faces. Middle: model fit for faces at loads 2 and 4. Right: model fit for lines at loads 2 and 4.

There are several caveats in this modeling attempt that may weaken the conclusions one can reach. First, the fit for individual subjects was worse (R-square ranging from 0.62 to 0.98) than the fit for the averaged data. Second, we used a two-alternative forced-choice task rather than a free choice among all 11 possible stimuli, so the 0-step change (the choice that matched the memory object) was over-represented in the data. This may have contributed to an envelope that is “peakier” than a Gaussian function in the error distribution. Finally, the 11 possible stimuli did not cover the entire stimulus space (e.g., we only sampled 20° to 70° in orientation space and only sampled from the average face to face A). Some of the stimuli (e.g., the 100% A) could only be tested with a probe that was less extreme than the original. These factors may affect the underlying error distribution, reducing confidence in the fit.

Footnotes

1. A bias to choose the more extreme probe can yield above-chance performance for a memory object toward the extreme, whereas the same bias will yield below-chance performance for a memory object toward the middle. In other words, a consistent bias (e.g., of always choosing the more extreme probe) will not produce above-chance performance overall.

2. We thank George Alvarez for this suggestion.

3. Load 1 is used as an anchor because it is the simplest case, uncomplicated by multiple comparisons. Note that a precise performance match between any two types of stimuli at load 1 is unnecessary. When one type of stimulus produces a psychometric curve that is shifted rightward compared with that of another type, researchers simply need to shift the second curve to match the first and apply the same shift to all memory loads.

4. We thank Steve Luck for raising this important question.

References

- Alvarez GA, Cavanagh P. The capacity of visual short-term memory is set both by visual information load and by number of objects. Psychological Science. 2004;15(2):106–111. doi: 10.1111/j.0963-7214.2004.01502006.x.

- Alvarez GA, Cavanagh P. Visual short-term memory operates more efficiently on boundary features than on surface features. Perception & Psychophysics. 2008;70(2):346–364. doi: 10.3758/pp.70.2.346.

- Attneave F. Physical determinants of the judged complexity of shapes. Journal of Experimental Psychology. 1957;53:221–227. doi: 10.1037/h0043921.

- Attneave F, Arnoult MD. The quantitative study of shape and pattern perception. Psychological Bulletin. 1956;53(6):452–471. doi: 10.1037/h0044049.

- Awh E, Barton B, Vogel EK. Visual working memory represents a fixed number of items regardless of complexity. Psychological Science. 2007;18(7):622–628. doi: 10.1111/j.1467-9280.2007.01949.x.

- Baddeley AD. Working Memory. Oxford University Press; 1986.

- Blanz V, Vetter T. Face recognition based on fitting a 3D morphable model. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2003;25(9):1063–1074.

- Brainard DH. The psychophysics toolbox. Spatial Vision. 1997;10(4):433–436.

- Buttle H, Raymond JE. High familiarity enhances visual change detection for face stimuli. Perception & Psychophysics. 2003;65(8):1296–1306. doi: 10.3758/bf03194853.

- Chen DY, Eng HY, Jiang YH. Visual working memory for trained and novel polygons. Visual Cognition. 2006;14(1):37–54.

- Chun MM, Jiang YH. Contextual cueing: Implicit learning and memory of visual context guides spatial attention. Cognitive Psychology. 1998;36(1):28–71. doi: 10.1006/cogp.1998.0681.

- Cowan N. The magical number 4 in short-term memory: A reconsideration of mental storage capacity (vol 23, pg 87, 2001) Behavioral and Brain Sciences. 2001;24(3) doi: 10.1017/s0140525x01003922.

- Curby KM, Gauthier I. A visual short-term memory advantage for faces. Psychonomic Bulletin & Review. 2007;14(4):620–628. doi: 10.3758/bf03196811.

- Delvenne JF, Bruyer R. A configural effect in visual short-term memory for features from different parts of an object. Quarterly Journal of Experimental Psychology. 2006;59(9):1567–1580. doi: 10.1080/17470210500256763.

- Duchaine B, Nakayama K. Dissociations of face and object recognition in developmental prosopagnosia. Journal of Cognitive Neuroscience. 2005;17(2):249–261. doi: 10.1162/0898929053124857.

- Duncan J, Humphreys GW. Visual-Search and Stimulus Similarity. Psychological Review. 1989;96(3):433–458. doi: 10.1037/0033-295x.96.3.433.

- Eng HY, Chen D, Jiang YV. Effects of familiarity on visual working memory of upright and inverted faces. Journal of Vision. 2006;6(6):359.

- Eng HY, Chen DY, Jiang YH. Visual working memory for simple and complex visual stimuli. Psychonomic Bulletin & Review. 2005;12(6):1127–1133. doi: 10.3758/bf03206454.

- Farah MJ, Tanaka JW, Drain HM. What Causes the Face Inversion Effect. Journal of Experimental Psychology-Human Perception and Performance. 1995;21(3):628–634. doi: 10.1037//0096-1523.21.3.628.

- Farah MJ, Wilson KD, Drain HM, Tanaka JR. The Inverted Face Inversion Effect in Prosopagnosia - Evidence for Mandatory, Face-Specific Perceptual Mechanisms. Vision Research. 1995;35(14):2089–2093. doi: 10.1016/0042-6989(94)00273-o.

- Garner WR. Uncertainty and structure as psychological concepts. Wiley; New York: 1962.

- Garner WR, Sutliff D. Effect of Goodness on Encoding Time in Visual-Pattern Discrimination. Perception & Psychophysics. 1974;16(3):426–430.

- Grill-Spector K, Malach R. The human visual cortex. Annual Review of Neuroscience. 2004;27:649–677. doi: 10.1146/annurev.neuro.27.070203.144220.

- Hollingworth A, Richard AM, Luck SJ. Understanding the function of visual short-term memory: Transsaccadic memory, object correspondence, and gaze correction. Journal of Experimental Psychology-General. 2008;137(1):163–181. doi: 10.1037/0096-3445.137.1.163.

- Irwin DE, Andrews RV. Integration and accumulation of information across saccadic eye movements. In: Inui T, McClelland JL, editors. Attention and Performance, XVI: Information integration in perception and communication. MIT Press; Cambridge, MA: 1996. pp. 125–155.

- Jackson MC, Raymond JE. Familiarity enhances visual working memory for faces. Journal of Experimental Psychology: Human Perception & Performance. 2008;34(3):556–568. doi: 10.1037/0096-1523.34.3.556.

- Jiang YH, Chun MM, Olson IR. Perceptual grouping in change detection. Perception & Psychophysics. 2004;66(3):446–453. doi: 10.3758/bf03194892.

- Logie RH. Visual-spatial working memory. Psychology Press; New York: 1995.

- Luck SJ, Vogel EK. The capacity of visual working memory for features and conjunctions. Nature. 1997;390(6657):279–281. doi: 10.1038/36846.

- Luck SJ, Zhang W. Fixed resolution, slot-like representations in visual working memory. Journal of Vision. 2004;4(8):149.

- Makovski T, Jiang YV. Proactive interference from items previously stored in visual working memory. Memory & Cognition. 2008;36(1):43–52. doi: 10.3758/mc.36.1.43.

- Makovski T, Jiang YV. Indirect assessment of visual working memory for simple and complex objects. Memory & Cognition. doi: 10.3758/MC.36.6.1132. in press.

- Makovski T, Shim WM, Jiang YHV. Interference from filled delays on visual change detection. Journal of Vision. 2006;6(12):1459–1470. doi: 10.1167/6.12.11.

- Moraglia G. Display Organization and the Detection of Horizontal Line Segments. Perception & Psychophysics. 1989;45(3):265–272. doi: 10.3758/bf03210706.

- Moscovitch M, Winocur G, Behrmann M. What is special about face recognition? Nineteen experiments on a person with visual object agnosia and dyslexia but normal face recognition. Journal of Cognitive Neuroscience. 1997;9(5):555–604. doi: 10.1162/jocn.1997.9.5.555.

- Palmer J. Attentional Limits on the Perception and Memory of Visual Information. Journal of Experimental Psychology-Human Perception and Performance. 1990;16(2):332–350. doi: 10.1037//0096-1523.16.2.332.

- Pashler H. Familiarity and Visual Change Detection. Perception & Psychophysics. 1988;44(4):369–378. doi: 10.3758/bf03210419.

- Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision. 1997;10(4):437–442.

- Pelli DG, Burns CW, Farell B, Moore-Page DC. Feature detection and letter identification. Vision Research. 2006;46(28):4646–4674. doi: 10.1016/j.visres.2006.04.023.

- Phillips WA. Distinction between Sensory Storage and Short-Term Visual Memory. Perception & Psychophysics. 1974;16(2):283–290.

- Rouder JN. Absolute identification with simple and complex stimuli. Psychological Science. 2001;12(4):318–322. doi: 10.1111/1467-9280.00358.

- Rouder JN, Morey RD, Cowan N, Zwilling CE, Morey CC, Pratte MS. An assessment of fixed-capacity models of visual working memory. Proceedings of the National Academy of Sciences of the United States of America. 2008;105(16):5975–5979. doi: 10.1073/pnas.0711295105.

- Troje NF, Bülthoff HH. Face recognition under varying poses: The role of texture and shape. Vision Research. 1996;36(12):1761–1771. doi: 10.1016/0042-6989(95)00230-8.

- Wilken P, Ma WJ. A detection theory account of change detection. Journal of Vision. 2004;4(12):1120–1135. doi: 10.1167/4.12.11.

- Wolfe JM. What can 1 million trials tell us about visual search? Psychological Science. 1998;9(1):33–39.

- Wolfe JM, Stewart MI, Friedman-Hill SR, O'Connell KM. The Role of Categorization in Visual-Search for Orientation. Journal of Experimental Psychology-Human Perception and Performance. 1992;18(1):34–49. doi: 10.1037//0096-1523.18.1.34.

- Yin RK. Looking at upside-down faces. Journal of Experimental Psychology. 1969;81(1):141–145.

- Young AW, Hellawell D, Hay DC. Configurational Information in Face Perception. Perception. 1987;16(6):747–759. doi: 10.1068/p160747.

- Zhang WW, Luck SJ. Discrete fixed-resolution representations in visual working memory. Nature. 2008;453(7192):233–235. doi: 10.1038/nature06860.

- Zhou F, Kahana MJ, Sekuler R. Short-term episodic memory for visual textures - A roving probe gathers some memory. Psychological Science. 2004;15(2):112–118. doi: 10.1111/j.0963-7214.2004.01502007.x.