Abstract

This study examined whether dysphoria influences the identification of non-ambiguous and ambiguous facial expressions of emotion. Dysphoric and non-dysphoric college students viewed a series of human faces expressing sadness, happiness, anger, and fear that were morphed with each other to varying degrees. Dysphoric and non-dysphoric individuals identified prototypical emotional expressions similarly. However, when viewing ambiguous faces, dysphoric individuals were more likely than non-dysphoric individuals to identify sadness in faces that mixed sadness with happiness. A similar but less robust pattern was observed for facial expressions that combined fear and happiness. No group differences in emotion identification were observed for faces that combined sadness and anger or fear and anger. Dysphoria appears to enhance the identification of negative emotion in others when positive emotion is also present. This tendency may contribute to some of the interpersonal difficulties often experienced by dysphoric individuals.

Keywords: interpretation bias, depression, cognitive models, information processing

Cognitive models of depression (e.g., Beck, 1976; Teasdale, 1988) posit that information processing biases for positive and negative stimuli play a critical role in the disorder. These biases are hypothesized to be pervasive, influencing memory, attention, and the interpretation of environmental stimuli. Depressed individuals are therefore expected to selectively attend to negative stimuli, filter out positive stimuli, and interpret neutral information as more negative or less positive than is actually the case. These biases, in turn, are thought to maintain the disorder.

There is now fairly consistent evidence that depression is characterized by these cognitive biases. For instance, depressed individuals display greater difficulty disengaging their attention from negative words (e.g., Koster et al., 2005), recall a greater number of negative sentences and words (e.g., Direnfeld & Roberts, 2006; Mogg et al., 2006; Wenzlaff et al., 2002), unscramble fewer positive sentences (e.g., Wenzlaff & Bates, 1998), and negatively interpret ambiguous homophones (Mogg et al., 2006; Wenzlaff & Eisenberg, 2001) compared to non-depressed individuals. Similarly, depressed individuals are less likely to recall positive information and show less interconnectedness among positive self-referent information (Dozois, 2002; Dozois & Dobson, 2001). This evidence is consistent with the idea that depressed and non-depressed individuals differ in how they process emotional stimuli.

More recently, researchers have begun to examine how depressed individuals process interpersonal stimuli. Depressed people often report experiencing poorer social support from friends and family members (Beach & O’Leary, 1993). Further, they display a variety of interpersonal deficits (e.g., Joiner, 2000), such as poorer social skills as rated by social partners and neutral observers (Segrin, 1990), excessive reassurance seeking (Prinstein et al., 2005), and more negative verbal and non-verbal communication (Hale et al., 1997) than non-depressed people. Gotlib and Hammen (1992) have speculated that information processing biases may contribute to some of the interpersonal difficulties often experienced by depressed people.

Although a variety of stimuli could be used to examine information processing biases for interpersonal content, emotional facial expressions are the most commonly used. There are a number of reasons to use facial stimuli. Facial expressions play a particularly important role in social communication, as they can convey a wide range of information between social partners (Mayer et al., 1990). The ability to accurately decode, interpret, and respond to emotional facial expressions has been considered essential for effective social functioning (Montagne et al., 2005). Indeed, the ability to correctly identify emotional facial expressions has been associated with greater empathy and prosocial behavior (Marsh et al., 2007). It may be that depressed individuals experience interpersonal difficulties in part because of biases in how they interpret the emotion their social partner is experiencing.

Research exploring this idea has been somewhat inconsistent. In some studies, depressed individuals’ ability to identify emotional facial expressions appears to be impaired. For instance, Gur et al. (1992) reported that depressed individuals were more likely to misinterpret happy faces as neutral and neutral faces as sad. Similarly, Persad and Polivy (1993) reported that depressed individuals had a generalized deficit in recognizing facial expressions that depicted a variety of emotions (e.g., sad, happy, fear, anger, surprise, indifference). In contrast, other studies have found no deficits in face processing among depressed individuals (Archer et al., 1992; Frewen & Dozois, 2005).

Given this inconsistency across findings, it may be that depressed individuals are able to correctly recognize prototypical emotional expressions but have more difficulty when facial expressions are ambiguous. In line with this possibility, Bouhuys and colleagues (Bouhuys et al., 1999; Geerts & Bouhuys, 1998) presented three ambiguous and nine non-ambiguous schematic facial expressions to depressed and control participants. Participants were instructed to rate how strongly each schematic depicted positive and/or negative emotion. Depressed participants rated the ambiguous schematic pictures as significantly more negative than controls did. Further, perception of negative emotion in these stimuli predicted the persistence of depression measured 13 and 24 weeks later. Similar results using the same stimuli were reported by Hale (1998). Finally, using the same stimuli, Raes, Hermans, and Williams (2006) found that negative perception of ambiguous faces was associated with a ruminative thinking style, a cognitive style often associated with depression (Nolen-Hoeksema, 2000).

The evidence to this point suggests that depressed people are more likely than non-depressed individuals to identify negative emotion in ambiguous emotional expressions. However, the vast majority of this research has relied on simple schematic drawings of faces. Far less research has used arguably more ecologically valid and complex stimuli, such as human facial expressions. In one such study, Joormann and Gotlib (2006) used an innovative task designed to measure identification of ambiguous emotional expressions. Participants viewed a movie in which a face changed in small increments from a neutral to an emotional expression and were instructed to stop the movie when they could identify an emotion. Interestingly, depressed and non-depressed individuals were equally accurate in identifying anger, sadness, and happiness. However, depressed individuals took longer than non-depressed participants to identify happiness. Further, depressed individuals took less time to identify sad than angry facial expressions, and the depressed and non-depressed groups did not differ in their identification of anger. This suggests that depressed individuals more readily perceive sadness and take longer to identify positive emotion in others. This pattern of findings is also consistent with Beck’s (1976) content specificity hypothesis, which holds that biases among depressed people should be strongest for stimuli consistent with themes of sadness and loss.

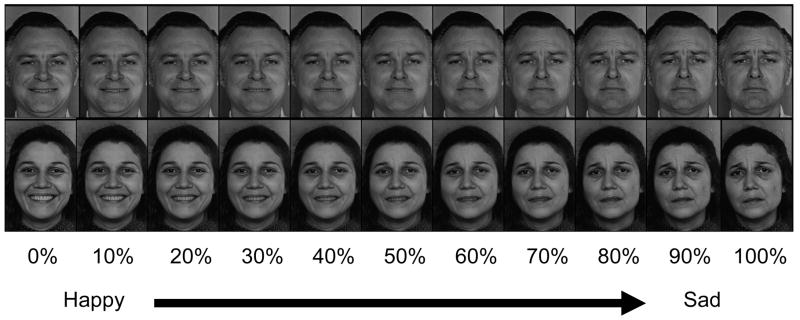

The current study was designed to build upon this previous work by examining whether individuals reporting high levels of depressive symptoms interpret emotionally ambiguous faces differently than individuals reporting low levels of depressive symptoms. Specifically, emotional faces depicting happiness, sadness, anger, and fear were paired and then morphed together in 10% increments (cf. Pollak & Kistler, 2002). This produced prototypical emotional expressions at each end of each continuum, with increasingly ambiguous stimuli toward the middle (see Figure 1). Participants were shown each image several times in a randomized order and identified the emotion expressed in each image. This task allowed us to examine whether dysphoric individuals display information processing biases for prototypical emotional expressions as well as for more ambiguous emotional expressions in human faces. Consistent with Beck’s (1976) content specificity hypothesis and with Joormann and Gotlib (2006), we expected group differences in the identification of happiness and sadness.

Figure 1.

Examples of interpersonal stimuli morphed along the happy-sad continuum. The percentages indicate percent of the sad prototype. Thus, 0% = 0% sad and 100% happy, 50% = 50% sad and 50% happy, and 100% = 100% sad and 0% happy. Adapted from Ekman & Friesen, 1976.

Method

Participants

Participants were recruited from a large southwestern university and completed the study as part of a research requirement for an introductory psychology course. Consistent with recommendations by Kendall et al. (1987), mass pre-testing at the beginning of the semester was used to initially identify individuals with elevated and low symptoms of depression. From this larger pool, 138 undergraduate students who scored above 10 or below 4 on the short form of the Beck Depression Inventory (Beck, Rial, & Rickels, 1974) were invited to participate. To determine whether symptom levels were maintained, participants completed the Beck Depression Inventory-II (BDI-II; Beck, Steer, & Brown, 1996) immediately before completing study procedures. Participants who were not dysphoric at pre-testing and whose BDI-II score was 9 or less at the time of testing were classified as non-dysphoric (ND; n = 55). Participants who were dysphoric at pre-testing and scored 16 or above at the time of testing were classified as currently dysphoric (D; n = 52). Previous research has shown that a BDI-II cut score of 16 yields the best trade-off between sensitivity and false-positive rate for predicting a mood disorder in a college student sample (Sprinkle et al., 2002). We used a score of 9 or less for the non-dysphoric group to ensure separation between the dysphoria groups. Participants who did not maintain their dysphoria status from pre-testing to the time of the experiment (n = 31) were excluded from this study. Average BDI-II scores were in the moderate range for the dysphoric group and in the minimal range for the non-dysphoric group (see Table 1). The sample was 59% female, and the average age was 21.62 years (SD = 6.08).
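The two-stage group assignment described above can be summarized as a small decision rule. This is a sketch: the function and argument names are hypothetical, and it encodes only the cut-offs stated in the text (short-form BDI above 10 or below 4 at pre-test; BDI-II of 16 or above, or 9 or less, at testing). Anyone whose status changed between the two assessments is excluded.

```python
from typing import Optional


def classify(pretest_short_bdi: int, bdi_ii: int) -> Optional[str]:
    """Return 'D' (dysphoric), 'ND' (non-dysphoric), or None (not included).

    Hypothetical helper: pre-test uses the short-form BDI, the second
    assessment uses the BDI-II given immediately before the task.
    """
    if pretest_short_bdi > 10:      # invited as dysphoric at pre-test
        return "D" if bdi_ii >= 16 else None
    if pretest_short_bdi < 4:       # invited as non-dysphoric at pre-test
        return "ND" if bdi_ii <= 9 else None
    return None                     # scores of 4-10 at pre-test were not invited


if __name__ == "__main__":
    for scores in [(12, 25), (12, 12), (2, 4), (2, 11), (7, 20)]:
        print(scores, classify(*scores))
```

Keeping the two cut-offs asymmetric (16 and 9) leaves a gap of BDI-II scores that belongs to neither group, which is what the text means by ensuring separation between the groups.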

Table 1.

Descriptive statistics for study participants

| Group | n | Age, M (SD) | Gender | BDI-II, M (SD) |
|---|---|---|---|---|
| Dysphoric (D) | 55 | 20.84 (4.45) | 58% Female | 25.50 (7.13) |
| Non-Dysphoric (ND) | 52 | 22.58 (7.61) | 60% Female | 4.85 (2.57) |

Note: No statistically significant differences between depression groups were observed for gender or age.

Emotion Identification Task

Stimuli were eight faces from the Pictures of Facial Affect photo set (Ekman & Friesen, 1976). A male and a female actor each portrayed the four emotions (fear, anger, happiness, and sadness). Emotional faces for each actor were paired as follows (fear-anger, happy-fear, happy-sad, sad-anger) and then morphed together in 10% increments (cf. Pollak & Kistler, 2002). This created four continua for each actor, each of which contained the two prototype faces and nine morphed faces containing increasing proportions of the second emotion (see Figure 1). For example, the happy-sad continuum consisted of the prototypical happy face (100% happy and 0% sad), a face expressing 90% happy and 10% sad, a face expressing 80% happy and 20% sad, and so on, ending with the prototypical sad face (0% happy and 100% sad). For clarity, we refer to each morphed face by the percentage of the second emotion listed in the continuum. Thus, the first morphed face in the happy-sad continuum (90% happy and 10% sad) is referred to as morph increment 10 for that continuum.
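The labelling convention above can be sketched as follows. This is a minimal illustration, not the procedure used to create the stimuli: it assumes a plain linear cross-dissolve of pixel values, whereas morphing software typically also warps facial geometry between landmark points. Function names are hypothetical.

```python
from typing import Dict, List, Union


def continuum_weights(first: str, second: str) -> List[Dict[str, Union[int, float]]]:
    """Return the 11 faces of one continuum as emotion-weight mappings.

    Morph increment k (0, 10, ..., 100) is the percent of the *second*
    emotion, so increment 10 on happy-sad is 90% happy and 10% sad.
    """
    return [{"increment": k, first: (100 - k) / 100, second: k / 100}
            for k in range(0, 101, 10)]


def cross_dissolve(img_a: List[float], img_b: List[float], k: int) -> List[float]:
    """Blend two equal-length greyscale images at morph increment k.

    Simplification: only pixel intensities are mixed; no geometric warping.
    """
    w = k / 100
    return [(1 - w) * a + w * b for a, b in zip(img_a, img_b)]


if __name__ == "__main__":
    faces = continuum_weights("happy", "sad")
    print(len(faces), faces[1])
    print(cross_dissolve([0.0, 100.0], [100.0, 0.0], 30))
```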

Participants viewed each image one at a time and were prompted to identify the emotion expressed by each face. Each trial began with a central fixation cross presented for 250 ms, followed by a blank black screen for 250 ms. The face stimulus (12 cm × 18 cm) was then presented along with two emotion labels, namely the emotions expressed by the two prototype faces for that morph continuum. For example, for any face in the happy-sad continuum, the two labels were “happy” and “sad.” Participants selected the emotion that best described the facial expression by pressing the corresponding response box button, and the next trial began once they responded. Images were presented in a new, fully randomized order for each participant, randomized both within and between continua. Images in the 0% to 30% and 70% to 100% ranges were presented twice for each actor, whereas images in the 40% to 60% range, which were expected to be more ambiguous, were presented four times each. This resulted in a total of 224 trials (2 actors × 4 continua × [8 images × 2 + 3 images × 4]). The task took approximately 20 minutes.
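The trial structure above can be sketched as follows, which also confirms the 224-trial total. The actor and continuum labels are hypothetical, and Python's `random.Random.shuffle` stands in for whatever randomization E-Prime performed; only the repetition counts come from the text.

```python
import random
from typing import List, Optional, Tuple

ACTORS = ["male", "female"]
CONTINUA = [("fear", "anger"), ("happy", "fear"), ("happy", "sad"), ("sad", "anger")]

# (actor, first emotion, second emotion, morph increment)
Trial = Tuple[str, str, str, int]


def build_trials(seed: Optional[int] = None) -> List[Trial]:
    """Build one participant's trial list in a fresh random order."""
    trials: List[Trial] = []
    for actor in ACTORS:
        for first, second in CONTINUA:
            for k in range(0, 101, 10):
                reps = 4 if 40 <= k <= 60 else 2  # ambiguous middle shown more often
                trials.extend([(actor, first, second, k)] * reps)
    random.Random(seed).shuffle(trials)  # randomized within and between continua
    return trials


if __name__ == "__main__":
    print(len(build_trials(seed=1)))  # 2 x 4 x (8*2 + 3*4) = 224
```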

Procedure

All procedures were approved by local institutional review boards. After a brief review of study procedures, participants completed informed consent, a demographic form, and the BDI-II. Participants were then escorted to a soundproofed testing room. The emotion identification task was controlled by E-Prime software running on a PC and presented on a 20-inch LCD monitor. All instructions were presented on the computer monitor.

Data Analysis

Our primary analysis examined whether dysphoria groups differentially identified emotion in the morphed faces across the four emotion continua. To do so, we computed the probability of identifying the second emotion at each of the 11 points along each continuum (the two prototypes and nine morphs; e.g., the probability of identifying sadness at morph increment 40 within the happy-sad continuum). For each individual and each continuum, we fit a binary logistic regression in which morph increment (0–100) predicted whether the second emotion was identified (yes, no); the fitted model yielded a probability, ranging from 0 to 1, at each morph increment. We then used a mixed-plot ANOVA to examine whether dysphoria groups differed in the probability of identifying each emotion along the four continua.
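The per-participant probability estimation can be sketched as a two-parameter logistic regression fit to one participant's trials on one continuum (the actor factor in the ANOVA suggests fits may also have been separate for each actor; the text does not say). This is not the authors' code. We assume Newton-Raphson with a backtracking line search, a centred predictor ((k − 50)/100), and a small ridge penalty that we added because a participant whose choices switch cleanly from one emotion to the other produces perfectly separated data, for which the unpenalized maximum-likelihood estimate does not exist. Function names are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def _sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def _objective(b0: float, b1: float, x: List[float], y: List[int], ridge: float) -> float:
    """Ridge-penalized Bernoulli log-likelihood of (b0, b1)."""
    total = 0.0
    for xi, yi in zip(x, y):
        z = b0 + b1 * xi
        # log(1 + e^z), arranged so math.exp never overflows
        softplus = z + math.log1p(math.exp(-z)) if z > 0 else math.log1p(math.exp(z))
        total += yi * z - softplus
    return total - 0.5 * ridge * (b0 * b0 + b1 * b1)


def fit_logistic(x: List[float], y: List[int], ridge: float = 1e-3,
                 iters: int = 200) -> Tuple[float, float]:
    """Newton-Raphson with backtracking for P(y = 1) = sigmoid(b0 + b1 * x)."""
    b0 = b1 = 0.0
    for _ in range(iters):
        g0, g1 = -ridge * b0, -ridge * b1      # gradient, including penalty
        h00, h01, h11 = ridge, 0.0, ridge       # negative Hessian, including penalty
        for xi, yi in zip(x, y):
            p = _sigmoid(b0 + b1 * xi)
            w = p * (1.0 - p)
            g0 += yi - p
            g1 += (yi - p) * xi
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        s0 = (h11 * g0 - h01 * g1) / det        # Newton direction = H^-1 g
        s1 = (h00 * g1 - h01 * g0) / det
        current, t = _objective(b0, b1, x, y, ridge), 1.0
        while _objective(b0 + t * s0, b1 + t * s1, x, y, ridge) < current and t > 1e-8:
            t /= 2                              # halve the step until it improves
        b0, b1 = b0 + t * s0, b1 + t * s1
        if abs(t * s0) + abs(t * s1) < 1e-10:
            break
    return b0, b1


def fitted_probabilities(increments: List[int], chose_second: List[int]) -> Dict[int, float]:
    """Fitted P(second emotion chosen) at morph increments 0, 10, ..., 100."""
    x = [(k - 50) / 100 for k in increments]   # centred, rescaled predictor
    b0, b1 = fit_logistic(x, chose_second)
    return {k: _sigmoid(b0 + b1 * (k - 50) / 100) for k in range(0, 101, 10)}


if __name__ == "__main__":
    # Hypothetical responses for one participant (1 = chose the second emotion).
    increments = [0, 0, 30, 30, 50, 50, 50, 50, 70, 70, 100, 100]
    responses = [0, 0, 0, 1, 0, 1, 1, 1, 1, 1, 1, 1]
    for k, p in fitted_probabilities(increments, responses).items():
        print(k, round(p, 3))
```

With the ridge penalty, perfectly separated responses still yield a finite, steep curve rather than a failed fit; a standard statistics package's logistic regression would serve the same purpose.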

Results

Emotion Discrimination

A 2 (stimulus actor: male, female) × 4 (stimulus continuum: happy – sad, happy – fear, fear – anger, sad – anger) × 11 (morph increment: 0–100) × 2 (dysphoria group: dysphoric, non-dysphoric) mixed-plot analysis of variance examined whether the dysphoria groups differed in their responses to the emotional stimuli. Results indicated significant effects for stimulus continuum, F(3, 312) = 31.43, p < .001, η² = .23; stimulus continuum × dysphoria group, F(3, 312) = 7.81, p < .001, η² = .07; morph increment, F(10, 1040) = 4704.21, p < .001, η² = .97; morph increment × dysphoria group, F(10, 1040) = 2.64, p = .003, η² = .03; stimulus continuum × actor, F(3, 312) = 183.32, p < .001, η² = .65; stimulus continuum × actor × dysphoria group, F(3, 312) = 2.69, p = .05, η² = .03; stimulus continuum × morph increment, F(30, 3120) = 23.83, p < .001, η² = .19; stimulus continuum × morph increment × dysphoria group, F(30, 3120) = 3.87, p < .001, η² = .04; actor × morph increment, F(10, 1040) = 26.69, p < .001, η² = .20; actor × morph increment × dysphoria group, F(10, 1040) = 5.60, p < .001, η² = .05; and stimulus continuum × actor × morph increment, F(30, 3120) = 59.92, p < .001, η² = .37. The four-way interaction was not significant.
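As a design-based check on the omnibus statistics above, the sketch below lists the uncorrected numerator and error degrees of freedom for each within-subject effect in a mixed design with one two-level between-subjects factor. For a within-subject effect, the numerator df is the product of (levels − 1) across its factors, and the error df is that value times (N − number of groups); interactions with dysphoria group share the same pair. Assumptions: the factor labels are ours, no sphericity correction is applied, and N = 106 is only the value implied by the reported F(3, 312) (312 / 3 + 2), not a figure stated in the text.

```python
from itertools import combinations
from math import prod
from typing import Dict, Tuple

# Within-subject factors and their number of levels (labels are ours).
WITHIN = {"actor": 2, "continuum": 4, "morph": 11}
N_GROUPS = 2  # dysphoric vs non-dysphoric (between-subjects)


def within_effect_dfs(n_subjects: int) -> Dict[Tuple[str, ...], Tuple[int, int]]:
    """Return {effect: (numerator df, error df)} for every within-subject effect."""
    dfs: Dict[Tuple[str, ...], Tuple[int, int]] = {}
    names = list(WITHIN)
    for r in range(1, len(names) + 1):
        for effect in combinations(names, r):
            df_effect = prod(WITHIN[f] - 1 for f in effect)
            # Error term: effect x subjects-within-groups.
            dfs[effect] = (df_effect, df_effect * (n_subjects - N_GROUPS))
    return dfs


if __name__ == "__main__":
    for effect, (df1, df2) in within_effect_dfs(106).items():
        print(" x ".join(effect), f"F({df1}, {df2})")
```

Running it with N = 107 reproduces the F(10, 1050) error term reported for the follow-up 11 × 2 analyses.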

Follow-up analyses focused on the significant stimulus continuum × morph increment × dysphoria group interaction, as an examination of this interaction provides a critical test of our hypotheses and the higher order four-way interaction was not significant. We examined the form of this three-way interaction by investigating the relationship between morph increment and dysphoria group within each stimulus continuum.

Happy – Sad Continuum

Results from an 11 (morph increment: 0–100) × 2 (dysphoria group: dysphoric, non-dysphoric) mixed-plot analysis of variance indicated a significant main effect of morph increment, F(10, 1050) = 1177.92, p < .001, η² = .91, and a significant morph increment × dysphoria group interaction, F(10, 1050) = 6.34, p < .001, η² = .06. We followed up this interaction by testing group differences at each morph increment. Given the large number of follow-up tests, we used Sidak’s (1967) adjustment to set the significance level. To maintain an overall alpha of .05 across 11 post-hoc tests with a correlation of .30 among our dependent variables, we set the significance level at p ≤ .009.
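The per-test threshold described above can be reproduced under one assumption. Plain Sidak correction gives a per-test alpha of 1 − (1 − α)^(1/m). The text does not state how the correlation of .30 entered the calculation; we assume the commonly used correlation-adjusted form that replaces m with m^(1 − r). Under that assumption, 11 tests with r = .30 give about .0095, consistent with the reported threshold of .009, whereas plain Sidak would give about .0047. The function name is hypothetical.

```python
def sidak_alpha(alpha: float, m: int, r: float = 0.0) -> float:
    """Per-test alpha that keeps the familywise error rate at `alpha`.

    r = 0 gives the plain Sidak (1967) correction for m independent tests.
    r > 0 uses the assumed correlation-adjusted form, in which the effective
    number of tests shrinks to m ** (1 - r); r = 1 collapses to a single test.
    """
    effective_m = m ** (1.0 - r)
    return 1.0 - (1.0 - alpha) ** (1.0 / effective_m)


if __name__ == "__main__":
    print(round(sidak_alpha(0.05, 11), 4))        # plain Sidak, about 0.0047
    print(round(sidak_alpha(0.05, 11, 0.30), 4))  # correlation-adjusted, about 0.0095
```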

No significant differences were observed at the emotion prototypes for happy (morph increment 0: F(1, 105) = 1.28, p = .26) or sad (morph increment 100: F(1, 105) = 2.20, p = .14). However, significant differences were observed at morph increments 30, F(1, 105) = 10.29, p = .002; 50, F(1, 105) = 9.16, p = .003; 60, F(1, 105) = 15.49, p < .001; and 70, F(1, 105) = 8.39, p = .005. No significant differences were observed at the other morph increments (see Table 2). Dysphoric individuals were more likely than non-dysphoric individuals to identify sadness in these four ambiguous faces.

Table 2.

Means (standard deviations) for the probability of identifying the second emotion along each morphed continuum. Column headings are morph increments (percent of the second emotion). Bold font indicates significant group differences at that morph increment (Sidak-adjusted, p ≤ .009). D = Dysphoric, ND = Non-Dysphoric

| Continuum, group | 0 | 10 | 20 | 30 | 40 | 50 | 60 | 70 | 80 | 90 | 100 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Happy → Sad, D | .01 (.02) | .02 (.06) | .05 (.11) | **.14 (.19)** | .34 (.29) | **.67 (.27)** | **.89 (.15)** | **.97 (.05)** | .99 (.02) | 1.0 (.01) | 1.0 (.00) |
| Happy → Sad, ND | .00 (.00) | .01 (.02) | .02 (.03) | **.05 (.07)** | .24 (.22) | **.49 (.31)** | **.71 (.28)** | **.89 (.17)** | .97 (.06) | .98 (.04) | .99 (.02) |
| Happy → Fear, D | .06 (.02) | .01 (.04) | .03 (.06) | **.09 (.12)** | **.41 (.28)** | .70 (.25) | .89 (.15) | .97 (.05) | .99 (.01) | .99 (.01) | .99 (.01) |
| Happy → Fear, ND | .00 (.01) | .01 (.02) | .02 (.04) | **.04 (.07)** | **.28 (.21)** | .64 (.22) | .82 (.19) | .94 (.11) | .97 (.05) | .99 (.02) | 1.0 (.01) |
| Sad → Anger, D | .01 (.01) | .01 (.02) | .02 (.04) | .05 (.07) | .17 (.15) | .48 (.24) | .77 (.21) | .93 (.10) | .97 (.05) | .99 (.02) | .99 (.01) |
| Sad → Anger, ND | .00 (.01) | .01 (.02) | .02 (.04) | .05 (.09) | .17 (.20) | .55 (.28) | .84 (.18) | .95 (.08) | .98 (.05) | .99 (.02) | .99 (.02) |
| Fear → Anger, D | .02 (.08) | .03 (.08) | .05 (.09) | .08 (.10) | .17 (.13) | .37 (.18) | .60 (.16) | .76 (.14) | .87 (.12) | .92 (.09) | .94 (.07) |
| Fear → Anger, ND | .02 (.05) | .04 (.08) | .06 (.11) | .09 (.14) | .17 (.19) | .39 (.23) | .64 (.20) | .81 (.18) | .89 (.14) | .93 (.12) | .96 (.08) |

Happy – Fear Continuum

Results from an 11 (morph increment: 0–100) × 2 (dysphoria group: dysphoric, non-dysphoric) mixed-plot analysis of variance indicated a significant main effect of morph increment, F(10, 1050) = 1738.86, p < .001, η² = .94, and a significant morph increment × dysphoria group interaction, F(10, 1050) = 3.59, p < .001, η² = .03. As before, we followed up this interaction by testing group differences at each morph increment, using p ≤ .009 to determine significance. No significant differences were observed at the emotion prototypes for happy (morph increment 0: F(1, 105) = 1.03, p = .31) or fear (morph increment 100: F(1, 105) = 0.16, p = .70). Significant group differences were observed at morph increments 30, F(1, 105) = 7.53, p = .007, and 40, F(1, 105) = 7.06, p = .009. No significant group differences were observed at the other morph increments. Dysphoric individuals were more likely than non-dysphoric individuals to identify fear in these two ambiguous faces (see Table 2).

Sad – Anger Continuum

Results from an 11 (morph increment: 0–100) × 2 (dysphoria group: dysphoric, non-dysphoric) mixed-plot analysis of variance indicated a significant main effect of morph increment, F(10, 1050) = 1841.66, p < .001, η² = .95, and a non-significant morph increment × dysphoria group interaction, F(10, 1050) = 1.58, p = .11, η² = .02. This non-significant interaction suggests that the dysphoria groups did not perceive the morphed faces on this continuum differently (see Table 2).

Fear – Anger Continuum

Results from an 11 (morph increment: 0–100) × 2 (dysphoria group: dysphoric, non-dysphoric) mixed-plot analysis of variance indicated a significant main effect of morph increment, F(10, 1050) = 1282.74, p < .001, η² = .94, and a non-significant morph increment × dysphoria group interaction, F(10, 1050) = 0.54, p = .86, η² = .00. This non-significant interaction suggests that the dysphoria groups did not perceive the morphed faces on this continuum differently (see Table 2).¹

Discussion

We examined whether dysphoric individuals, relative to non-dysphoric individuals, displayed systematic biases in how they interpreted interpersonal stimuli depicting prototypical and ambiguous emotional facial expressions. No group differences were observed for the prototypical emotional expressions. Thus, dysphoria does not appear to affect the identification of non-ambiguous expressions of emotion. However, group differences were observed for several stimuli that combined positive and negative emotional expressions. More specifically, dysphoric individuals were more likely to identify sadness in four particularly ambiguous faces that mixed sadness with happiness. A similar pattern was observed for mixtures of fear and happiness, although this effect was less robust, as group differences emerged for only two ambiguous faces along the continuum. In contrast, no group differences were observed when sadness was morphed with anger or when fear was morphed with anger.

The current study may provide insight into the interpersonal deficits that are often observed in depressed adults. Gotlib and Hammen (1992) suggested that cognitive biases may contribute to some of the interpersonal problems experienced by depressed individuals. It is possible that a tendency to perceive negative emotion in others affects a depressed person’s ability to interact effectively with social partners. For instance, given a tendency to interpret facial expressions that mix negative and positive emotion negatively, a social partner may have to be considerably more animated when expressing positive emotion to a dysphoric individual. This may become tiresome for the social partner over time and contribute to the erosion of the relationship. Further, dysphoric individuals may perceive their social interactions as more negative, thereby potentially maintaining their depression. Future research that directly links biased processing of emotional facial expressions with interpersonal difficulties will be an important next step for this area of research.

The perception of emotion in stimuli constructed to be emotionally ambiguous has several qualities that we believe make it a useful tool for assessing social information processing. First, this task does not rely on reaction time to infer biased processing. Although we have used reaction time tasks to measure information processing (e.g., Beevers & Carver, 2003; Beevers et al., 2007), some investigators have suggested that the slow and variable motor responses observed in some psychiatric conditions may confound manual reaction time data (Mathews et al., 1996); a task scored on response choice rather than response speed avoids this problem. Second, this task requires that individuals identify prototypical emotional expressions as well as ambiguous expressions. This allows us to determine whether individuals have difficulty with the identification of emotion in general or whether biases become more prominent as stimulus ambiguity increases. Third, this task does not rely on a single response per emotion condition but instead requires individuals to repeatedly make judgments about stimuli at each level of the morphed continuum. Repeated assessments typically produce more reliable estimates than single assessments.

In addition to these strengths, the task as designed in the current study has limitations. For instance, it is not possible to determine whether dysphoric individuals report greater sadness and fear when these are mixed with happiness because they attend to the negative emotion or because they fail to attend to the positive emotion. Consider a facial expression created to express 30% sadness and 70% happiness. If the dysphoric group is more likely to identify this face as sad, as they were in the current study, is this because they preferentially attend to the sad emotion or because they fail to attend to the happy emotion? Further, if dysphoric participants over-identify negative emotion in general, continua that combine two negative emotions would not be expected to show a group difference, because both emotions would be over-identified. Consistent with this idea, we did not find any dysphoria group differences for continua that involved two negative emotions. One possible solution is to morph emotional faces with neutral faces. Determining whether dysphoric individuals identify negative emotion earlier and/or positive emotion later along such continua than non-dysphoric individuals could clarify the nature of these interpretive biases (cf. Joormann & Gotlib, 2007).

Another issue regarding this task is how to define biased emotion identification. We defined bias relative to the responses of the non-dysphoric group; that is, we assumed the non-dysphoric group provided normative responses on this task and that deviations from these responses reflected a bias. Other similar studies have likewise defined bias by comparing the responses of dysphoric and non-dysphoric groups (Joormann & Gotlib, 2007). We did consider a putatively more objective definition of bias: the agreement between (a) the probability of identifying an emotion and (b) its corresponding morph increment. For instance, identifying an emotion 50% of the time in expressions that contain 50% of that emotion could be considered unbiased, whereas identifying an emotion 60% of the time in expressions that contain 30% of that emotion could be interpreted as biased. However, this approach assumes that the morph increment reflects the true ambiguity of the facial expression and that ambiguity is equivalent across a continuum. This may not be the case, as features of some emotions (e.g., a smile) may be more prominent than others, making that emotion easier to identify at certain points along the continuum.
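The putatively objective index described above can be sketched as a signed difference between the probability of identifying an emotion and that emotion's share of the morph. The function name and the signed-difference form are our assumptions; the text describes the idea but gives no formula. Applied to the non-dysphoric happy-sad means in Table 2, the index is already −.25 at increment 30, which is consistent with the concern that morph increments may not map directly onto perceived ambiguity.

```python
from typing import Dict, List

# Happy -> Sad means for the non-dysphoric group, copied from Table 2.
HAPPY_SAD_ND = [.00, .01, .02, .05, .24, .49, .71, .89, .97, .98, .99]


def objective_bias(probs: List[float]) -> Dict[int, float]:
    """Signed bias at increments 0, 10, ..., 100 (hypothetical index).

    0 means the emotion was reported exactly as often as its share of the
    morph; positive values mean it was reported more often than its share.
    """
    return {k: round(p - k / 100, 2) for k, p in zip(range(0, 101, 10), probs)}


if __name__ == "__main__":
    print(objective_bias(HAPPY_SAD_ND))
```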

Several limitations of the current study should also be noted. We studied a convenience sample of young adults recruited from a university setting, which limits the generalizability of our findings to older and less well-educated depressed populations. We did not conduct clinical interviews to diagnose depression, so it is unclear whether these results will generalize to clinically depressed individuals; a person experiencing high levels of depressive symptoms may perceive emotion differently than a person suffering from clinical depression. Additional research examining social information processing in a clinically diagnosed sample is clearly warranted. Further, some participants may have met criteria for comorbid disorders or may have been experiencing high levels of anxiety, and these unmeasured factors could have influenced the findings. Although our sample size was moderate, a larger study would provide more statistical power to detect smaller group differences. Finally, our images were morphed from the Ekman collection (Ekman & Friesen, 1976), which has been used extensively. It is important for future research to use facial expressions from other well-validated collections, such as the NimStim collection (for more information, see www.macbrain.org), to ensure that these cognitive biases generalize beyond the Ekman collection.

In conclusion, these findings advance understanding of the cognitive processes associated with dysphoria. Dysphoric individuals have a stronger tendency than non-dysphoric individuals to identify sadness and fear when these emotions are mixed with happiness, and this bias appears more robust for sadness than for fear. In contrast, dysphoria does not appear to disrupt the identification of prototypical, non-ambiguous emotional expressions. Future work should explore the interpersonal consequences of this cognitive bias, as this may be a pathway by which a dysphoric episode is maintained. Continued research aimed at identifying the cognitive, social, and behavioral processes that serve to maintain depression may ultimately inform treatment development and help reduce the significant distress that is often associated with an episode of depression.

Footnotes

¹ We examined reaction time data to determine whether participants had longer latencies to identify the ambiguous morphed faces than the prototypical emotion expressions. In short, facial expressions in the middle of the continuum took significantly longer to identify than the prototype emotions, suggesting that those images were indeed more ambiguous.

References

- Archer J, Hay DC, Young AW. Face processing in psychiatric conditions. British Journal of Clinical Psychology. 1992;31:45–61. doi: 10.1111/j.2044-8260.1992.tb00967.x. [DOI] [PubMed] [Google Scholar]

- Beach SR, O’Leary KD. Dysphoria and marital discord: Are dysphoric individuals at risk for marital maladjustment? Journal of Marital & Family Therapy. 1993;19:355. doi: 10.1111/j.1752-0606.1993.tb00998.x. [DOI] [PubMed] [Google Scholar]

- Beck AT. Cognitive therapy and the emotional disorders. Oxford, England: International Universities Press; 1976. [Google Scholar]

- Beck AT, Rial WY, Rickels K. Short form of Depression Inventory: Cross-validation. Psychological Reports. 1974;34:1184–1186. [PubMed] [Google Scholar]

- Beck AT, Steer R, Brown GK. Beck Depression Inventory (BDI-II) San Antonio (TX): Psychological Corporation; 1996. [Google Scholar]

- Beevers CG, Carver CS. Attentional bias and mood persistence as prospective predictors of dysphoria. Cognitive Therapy and Research. 2003;27:619–637. [Google Scholar]

- Beevers CG, Gibb BE, McGeary JE, Miller IW. Serotonin transporter genetic variation and biased attention for emotional word stimuli among psychiatric inpatients. Journal of Abnormal Psychology. 2007;116:208. doi: 10.1037/0021-843X.116.1.208. [DOI] [PubMed] [Google Scholar]

- Beevers CG, Meyer B. Lack of positive experiences and positive expectancies mediate the relationship between bas responsiveness and depression. Cognition and Emotion. 2002;16:549–564. [Google Scholar]

- Bouhuys AL, Geerts E, Gordijn MCM. Depressed patients’ perceptions of facial emotions in depressed and remitted states are associated with relapse: A longitudinal study. Journal of Nervous and Mental Disease. 1999;187:595–602. doi: 10.1097/00005053-199910000-00002. [DOI] [PubMed] [Google Scholar]

- Direnfeld DM, Roberts JE. Mood congruent memory in dysphoria: The roles of state affect and cognitive style. Behavior Research and Therapy. 2006;44:1275–1285. doi: 10.1016/j.brat.2005.03.014. [DOI] [PubMed] [Google Scholar]

- Dozois DJA. Cognitive organization of self-schematic content in nondysphoric, mildly dysphoric, and moderately-severely dysphoric individuals. Cognitive Therapy and Research. 2002;26:417–429.

- Dozois DJA, Dobson KS. Information processing and cognitive organization in unipolar depression: Specificity and comorbidity issues. Journal of Abnormal Psychology. 2001;110:236–246. doi: 10.1037//0021-843x.110.2.236.

- Ekman P, Friesen WV. Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Frewen PA, Dozois DJA. Recognition and interpretation of facial expressions in dysphoric women. Journal of Psychopathology and Behavioral Assessment. 2005;27:305–315.

- Geerts E, Bouhuys N. Multi-level prediction of short-term outcome of depression: Non-verbal interpersonal process, cognitions, and personality traits. Psychiatry Research. 1998;79:59–72. doi: 10.1016/s0165-1781(98)00021-3.

- Gotlib IH, Hammen CL. Psychological aspects of depression: Toward a cognitive-interpersonal integration. Oxford, England: John Wiley and Sons; 1992.

- Gur RC, Erwin RJ, Gur RE, Zwil AS, Heimberg C, Kraemer HC. Facial emotion discrimination: II. Behavioral findings in depression. Psychiatry Research. 1992;42:241–251. doi: 10.1016/0165-1781(92)90116-k.

- Hale WW, III. Judgment of facial expressions and depression persistence. Psychiatry Research. 1998;80:265–274. doi: 10.1016/s0165-1781(98)00070-5.

- Hale WW, III, Jansen JHC, Bouhuys AL, Jenner JA. Non-verbal behavioral interactions of depressed patients with partners and strangers: The role of behavioral social support and involvement in depression persistence. Journal of Affective Disorders. 1997;44:111. doi: 10.1016/s0165-0327(97)01448-1.

- Joiner TE, Jr. Depression’s vicious scree: Self-propagating and erosive processes in depression chronicity. Clinical Psychology: Science and Practice. 2000;7:203–218.

- Joormann J, Gotlib IH. Is this happiness I see? Biases in the identification of emotional facial expressions in depression and social phobia. Journal of Abnormal Psychology. 2006;115:705. doi: 10.1037/0021-843X.115.4.705.

- Kendall PC, Hollon SD, Beck AT, Hammen CL, Ingram RE. Issues and recommendations regarding use of the Beck Depression Inventory. Cognitive Therapy and Research. 1987;11:289–299.

- Koster EHW, De Raedt R, Goeleven E, Franck E, Crombez G. Mood-congruent attentional bias in dysphoria: Maintained attention to and impaired disengagement from negative information. Emotion. 2005;5:446–455. doi: 10.1037/1528-3542.5.4.446.

- Marsh AA, Kozak MN, Ambady N. Accurate identification of fear facial expressions predicts prosocial behavior. Emotion. 2007;7:239. doi: 10.1037/1528-3542.7.2.239.

- Mathews A, Ridgeway V, Williamson DA. Evidence for attention to threatening stimuli in depression. Behaviour Research and Therapy. 1996;34:695. doi: 10.1016/0005-7967(96)00046-0.

- Mayer JD, DiPaolo M, Salovey P. Perceiving affective content in ambiguous visual stimuli: A component of emotional intelligence. Journal of Personality Assessment. 1990;54:772. doi: 10.1080/00223891.1990.9674037.

- Mogg K, Bradbury KE, Bradley BP. Interpretation of ambiguous information in clinical depression. Behaviour Research and Therapy. 2006;44:1411–1419. doi: 10.1016/j.brat.2005.10.008.

- Montagne B, Kessels RPC, Frigerio E, de Haan EHF, Perrett DI. Sex differences in the perception of affective facial expressions: Do men really lack emotional sensitivity? Cognitive Processing. 2005;6:136. doi: 10.1007/s10339-005-0050-6.

- Nolen-Hoeksema S. The role of rumination in depressive disorders and mixed anxiety/depressive symptoms. Journal of Abnormal Psychology. 2000;109:504–511.

- Persad SM, Polivy J. Differences between depressed and nondepressed individuals in the recognition of and response to facial emotional cues. Journal of Abnormal Psychology. 1993;102:358–368. doi: 10.1037//0021-843x.102.3.358.

- Pollak SD, Kistler D. Early experience is associated with the development of categorical representations for facial expressions of emotion. Proceedings of the National Academy of Sciences. 2002;99:9072–9076. doi: 10.1073/pnas.142165999.

- Prinstein MJ, Borelli JL, Cheah CSL, Simon VA, Aikins JW. Adolescent girls’ interpersonal vulnerability to depressive symptoms: A longitudinal examination of reassurance-seeking and peer relationships. Journal of Abnormal Psychology. 2005;114:676. doi: 10.1037/0021-843X.114.4.676.

- Raes F, Hermans D, Williams JMG. Negative bias in the perception of others’ facial emotional expressions in major depression. Journal of Nervous and Mental Disease. 2006;194:796–799. doi: 10.1097/01.nmd.0000240187.80270.bb.

- Segrin C. A meta-analytic review of social skill deficits in depression. Communication Monographs. 1990;57:292.

- Sidak Z. Rectangular confidence regions for the means of multivariate normal distributions. Journal of the American Statistical Association. 1967;62:626–633.

- Sprinkle SD, Lurie D, Insko SL, Atkinson G, Jones GL, Logan AR. Criterion validity, severity cut scores, and test-retest reliability of the Beck Depression Inventory-II in a university counseling center sample. Journal of Counseling Psychology. 2002;49:381–385.

- Teasdale JD. Cognitive vulnerability to persistent depression. Cognition and Emotion. 1988;2:247–274.

- Wenzlaff RM, Bates DE. Unmasking a cognitive vulnerability to depression: How lapses in mental control reveal depressive thinking. Journal of Personality and Social Psychology. 1998;75:1559–1571. doi: 10.1037//0022-3514.75.6.1559.

- Wenzlaff RM, Eisenberg AR. Mental control after dysphoria: Evidence of a suppressed, depressive bias. Behavior Therapy. 2001;32:27–45.

- Wenzlaff RM, Meier J, Salas DM. Thought suppression and memory biases during and after depressive episodes. Cognition and Emotion. 2002;16:403–422.