Abstract

Background

Performing music requires fast auditory and motor processing. Regarding professional musicians, recent brain imaging studies have demonstrated that auditory stimulation produces a co-activation of motor areas, whereas silent tapping of musical phrases evokes a co-activation in auditory regions. Whether this is obtained via a specific cerebral relay station is unclear. Furthermore, the time course of plasticity has not yet been addressed.

Results

Changes in cortical activation patterns (DC-EEG potentials) induced by short (20 minute) and long term (5 week) piano learning were investigated during auditory and motoric tasks. Two beginner groups were trained. The 'map' group was allowed to learn the standard piano key-to-pitch map. For the 'no-map' group, random assignment of keys to tones prevented such a map. Auditory-sensorimotor EEG co-activity occurred within only 20 minutes. The effect was enhanced after 5-week training, contributing elements of both perception and action to the mental representation of the instrument. The 'map' group demonstrated significant additional activity of right anterior regions.

Conclusion

We conclude that musical training triggers instant plasticity in the cortex, and that right-hemispheric anterior areas provide an audio-motor interface for the mental representation of the keyboard.

Background

The mastering of a musical instrument requires some of the most sophisticated skills, including fast auditory as well as motor processing. The performance targets of the highly trained movement patterns are successions of acoustic events. Therefore, any self-monitoring during musical performance has to rely on quick feedforward or feedback models that link the audible targets to the respective motor programs. Years of practice may establish a neuronal correlate of this connection, which has recently been shown by brain imaging studies for both directions, auditory-to-motor, and motor-to-auditory.

• For auditory-to-motor processing: Professional musicians often report that pure listening to a well-trained piece of music can involuntarily trigger the respective finger movements. With a magnetoencephalography (MEG) experiment, Haueisen & Knösche [1] could demonstrate that pianists, when listening to well-trained piano music, exhibit involuntary motor activity involving the contralateral primary motor cortex (M1).

• For motor-to-auditory processing as a possible feedforward projection, Scheler et al. [2] collected functional magnetic resonance (fMRI) brain scans of eight violinists with German orchestras and eight amateurs as they silently tapped out the first 16 bars of Mozart's violin concerto in G major. The expert performers had significant activity in primary auditory regions, which was missing in the amateurs.

• Both directions of co-activation have been investigated in eight pianists and eight non-pianists in a previous cross-sectional study using DC-EEG [3]. In two physically different tasks involving only hearing or only motor movements respectively, the non-pianists exhibited distinct cortical activation patterns, whereas the pianists showed very similar patterns (based on correlation and vector similarity measures).
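To illustrate what a pattern comparison "based on correlation and vector similarity measures" could look like, the following minimal sketch compares two topographic patterns, one per probe task. The choice of Pearson correlation and cosine similarity is an assumption, as are the function names and the example values; the cited study [3] may have used different measures.

```python
import math

def pearson(a, b):
    """Pearson correlation between two equally long electrode vectors."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def cosine(a, b):
    """Cosine of the angle between two electrode vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical DC values (in microvolts) at four electrodes, not measured data.
auditory_pattern = [-1.0, -2.0, -3.0, -4.0]
motor_pattern = [-2.0, -4.0, -6.0, -8.0]

print(pearson(auditory_pattern, motor_pattern))
print(cosine(auditory_pattern, motor_pattern))
```

Both measures ignore the overall amplitude of a pattern, so two tasks that activate the same regions with different strength still count as similar.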

All of these studies have dealt with cross-sectional comparisons of expert musicians to non-experts. An issue that cannot be addressed by this approach is to what extent the demonstrated co-activation processes result from the professionals' many years of practice, or whether the time course establishing the phenomenon reveals a faster learning pace, even in the initial stages of practice. The crucial aspect of the present approach is that, in addition to comparing experts to novices, the effects of practice on cortical activity are monitored in a group of beginners over a period of several weeks.

Using transcranial magnetic stimulation (TMS), Pascual-Leone et al. [4] demonstrated that plastic changes in the cerebral cortex during the acquisition of new fine motor skills occur within only five days. Subjects who learned a one-handed, five-finger exercise through daily 2-hour piano practice sessions enlarged their cortical motor areas targeting the long finger flexor and extensor muscles, and decreased their activation threshold. Furthermore, the study provided evidence that the changes were specifically limited to the cortical representation of the hand used in the exercise, and that the changes took place regardless of whether the training had been performed physically or only mentally. Moreover, Classen et al. [5] showed that in motor skill acquisition the first effects of short-term plasticity appear not only within days, but even within minutes.

Whereas short-term practice seems to enlarge the respective cortical motor areas, sustained long-term continuation of the training can reduce the size of these areas. Jäncke and co-workers [6] showed that during a bimanual tapping task, the primary and secondary motor areas (M1, SMA, pre-SMA, and CMA) were activated to a much lesser degree in professional pianists than in non-musicians. The results suggest that the pianists' long-lasting, extensive hand skill training leads to greater efficiency, which is reflected in a smaller number of active neurons needed to perform given finger movements.

The present investigation deals not only with the plasticity of motor representations but also with the issue of auditory-sensorimotor integration in piano practice. Auditory feedback is essential for this kind of motor skill acquisition. The use of a computer-controlled digital piano allows us to access and to isolate the independent target features (parameters pitch, onset time, and loudness). Special attention is directed to an experimental dissociation of key presses and the associated acoustic events. The probe tasks of the paradigm involved only auditory or only motoric aspects of the original complex audio-motor task during piano practice.

We utilized DC-EEG to clarify the temporal dynamics of plasticity arising from this highly specialized sensorimotor training. DC-EEG reflects activation of the cerebral cortex caused by any afferent inflow. The neurophysiological basis of this lowest frequency range of the EEG is excitatory postsynaptic potentials in the dendrites of layers I-II [7]. DC-EEG has been shown to reflect cognitive and motor processing [8,9]. Its spatial accuracy has been evaluated by fMRI co-registration (spatial differences EEG-MRI < 12 mm [9]).

For the measurement of event-related DC-EEG (direct voltage electroencephalography), the auditory and motoric features of piano performance were dissociated (labeled as auditory and motoric probe tasks): The probe tasks during EEG required either passive listening or silent finger movement, and were therefore either purely auditory or purely motoric.

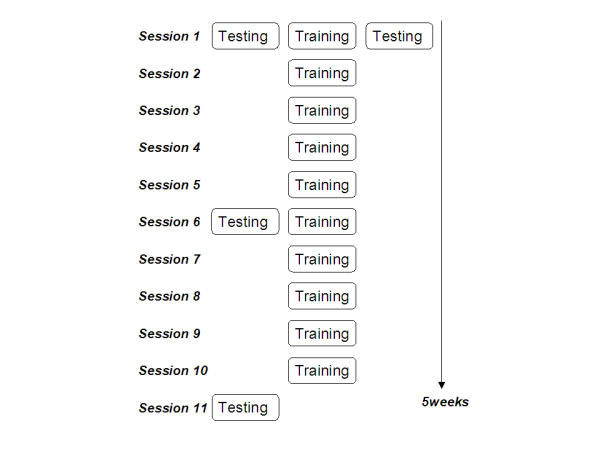

In contrast, the training paradigm aimed at sensorimotor binding by providing a regular piano situation with instant auditory feedback for each keystroke. The beginners were trained to re-play acoustically presented melodies with their right hand as precisely as possible. The total training period for each subject consisted of 10 single 20-minute-sessions, two sessions a week, over a period of five to six weeks.

The EEG probe task paradigm was carried out before the first practice session, right after the first practice session, after three weeks, and finally after completion of the training, in order to evaluate the changes in the EEG that result from the training paradigm.

Two groups of non-musicians were trained in the training paradigm and tested in the EEG probe task paradigm. Group 1 ('map' group) worked with a digital piano featuring a conventional key-to-pitch and force-to-loudness assignment.

Group 2 ('no-map' group) ran the same paradigm and the same five-week training program but the five relevant notes (pitches) were randomly reassigned to the five relevant keys, or fingers, respectively, after each single training trial.

Additionally, nine professional pianists were tested in the EEG probe task paradigm.

Results

EEG data were recorded immediately before and after the 1st, after 5, and after 10 sessions of practice, in order to trace the changes induced by single session practice as well as by the prolonged training.

The subjects' event-related slow EEG-potentials were recorded at 30 electrode sites.

To rule out that a putative cortical motor DC activation accompanying the auditory probe tasks generated an actual efferent (i.e., supra-threshold) outflow of motor commands, an EMG of the finger muscles of the right hand was recorded simultaneously with the EEG while auditory stimuli were delivered.

The task-related DC shifts were baseline-corrected and checked for consistency. A minimum of 45 trials without artifacts was required from each subject for averaging purposes.
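The baseline correction and averaging step can be sketched as follows. The 45-trial minimum comes from the text; taking the baseline as the mean of the first samples of each trial is an assumption, and the function names are hypothetical.

```python
MIN_TRIALS = 45  # minimum number of artifact-free trials, as stated in the text

def baseline_correct(trial, n_baseline):
    """Subtract the mean of the first n_baseline samples from every sample.

    Assumption: the baseline is the pre-stimulus part of the trial; the
    text does not specify how the baseline interval was chosen.
    """
    baseline = sum(trial[:n_baseline]) / n_baseline
    return [value - baseline for value in trial]

def average_trials(trials, n_baseline, min_trials=MIN_TRIALS):
    """Average baseline-corrected, artifact-free trials sample by sample."""
    if len(trials) < min_trials:
        raise ValueError(
            f"need at least {min_trials} artifact-free trials, got {len(trials)}"
        )
    corrected = [baseline_correct(trial, n_baseline) for trial in trials]
    n = len(corrected)
    return [sum(samples) / n for samples in zip(*corrected)]

# Hypothetical example: 45 identical trials of four samples each.
example_trials = [[1.0, 1.0, 0.0, -1.0] for _ in range(45)]
print(average_trials(example_trials, n_baseline=2))
```

Rejecting a subject with too few trials, rather than averaging whatever is available, keeps the signal-to-noise ratio of the averages comparable across subjects.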

Mean values during the stimulus and the task period were subjected to statistical analysis by repeated measure analysis of variance (ANOVA).
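As a rough illustration of the statistic involved, the sketch below computes the F ratio of a one-way repeated-measures ANOVA (subjects by conditions). The one-factor design, the function name, and the example numbers are assumptions; the text does not specify the factors of the analysis, and no P value is computed here.

```python
def rm_anova_f(data):
    """F ratio for a one-way repeated-measures ANOVA.

    data[s][c] is the mean DC value of subject s in condition c
    (for example, measurement sessions). Returns (F, df_cond, df_error).
    """
    n = len(data)          # number of subjects
    k = len(data[0])       # number of conditions
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[c] for row in data) / n for c in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    # Residual variability after removing condition and subject effects.
    ss_error = ss_total - ss_cond - ss_subj

    df_cond = k - 1
    df_error = (n - 1) * (k - 1)
    f_ratio = (ss_cond / df_cond) / (ss_error / df_error)
    return f_ratio, df_cond, df_error

# Hypothetical values for three subjects and three conditions.
example = [[1.0, 2.0, 4.0], [2.0, 3.0, 4.0], [3.0, 4.0, 7.0]]
print(rm_anova_f(example))
```

Removing the between-subject sum of squares from the error term is what makes the design sensitive despite high interindividual variance.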

Longitudinal differences were obtained individually for each subject, for the measurements right after the 1st session, before the 6th, and at the beginning of the 11th session. The data were then averaged across all subjects to create a Grand Average. For longitudinal differences and group differences, a significance criterion of P < 0.05 was applied. Behavioral data were obtained from the same error measures that served as the level adaptation criterion during the interactive training (see Methods section for details). Values were normalized, session-averaged, and group grand-averaged.

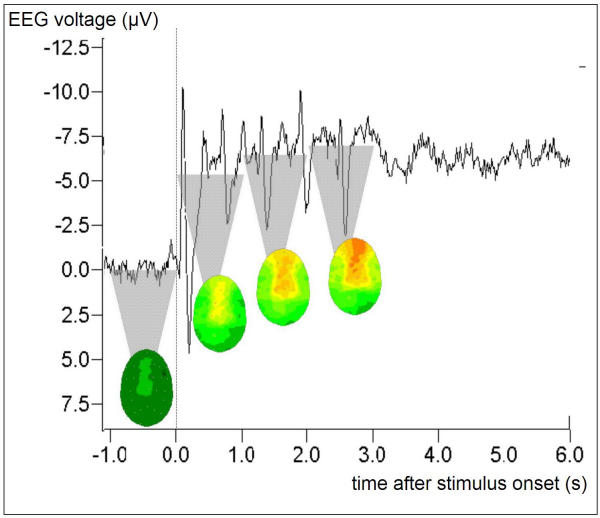

Fig. 1 depicts the Grand Average ('map' group, session 1, pre-training) of task-related EEG during the auditory probe task in a single electrode position (FC3) to exemplify the evaluation procedure. The course of the obtained average potential commenced with an evoked potential (N100) at the beginning of the stimulus period with its maximum amplitude over Cz reflecting an orienting response to the initial note, followed by a positive potential (P200). The following sustained negative DC-potential shift during the 3-second task period plateaued after about 1 second. However, a fast N100-P200 response to each single note of the auditory pattern (every 600 ms) was superimposed onto the DC shift.

Figure 1.

Task-related potential at electrode position FC3 during the presentation of the auditory probe task. Stimulus onset t = 0, stimulus end t = 3000 ms. Grand Average including nine subjects ('map' group) with > 45 single presentations each. The averaging process preserves the interindividually invariant signal components, such as the negative DC plateau and the superimposed ERP peaks (bimodal combination at a latency of 100 ms/200 ms + n * 600 ms) that are evoked by the single tones the stimulus is composed of. The tops of the shaded triangles indicate the DC level that is obtained by time-averaging the signal over 1-second time windows; the resulting 30-electrode topographic interpolations are attached to the tips of the respective triangles. Statistical analysis and cortical imaging in the results section are based on the time window [1000 ms, 3000 ms] after stimulus onset.
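The time-averaging over windows described in the caption can be sketched as below. The sampling rate, the function name, and the example signal are hypothetical, and the signal is assumed to start at stimulus onset (t = 0).

```python
def window_mean(signal, sfreq_hz, t_start_ms, t_end_ms):
    """Mean of the samples in the half-open window [t_start_ms, t_end_ms).

    Assumption: signal[0] corresponds to stimulus onset (t = 0).
    """
    first = int(round(t_start_ms * sfreq_hz / 1000))
    last = int(round(t_end_ms * sfreq_hz / 1000))
    segment = signal[first:last]
    return sum(segment) / len(segment)

# Hypothetical averaged potential sampled at 10 Hz for 3 seconds:
# zero during the first second, then a plateau at -5 microvolts.
example_signal = [0.0] * 10 + [-5.0] * 20

# Analysis window used for statistics and mapping (1000 ms to 3000 ms).
print(window_mean(example_signal, 10, 1000, 3000))

# The three 1-second windows marked by the triangles in the figure.
print([window_mean(example_signal, 10, t, t + 1000) for t in (0, 1000, 2000)])
```

Averaging over a window of at least one stimulus period (600 ms) also smooths out the superimposed N100-P200 responses, so the result mainly reflects the sustained DC level.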

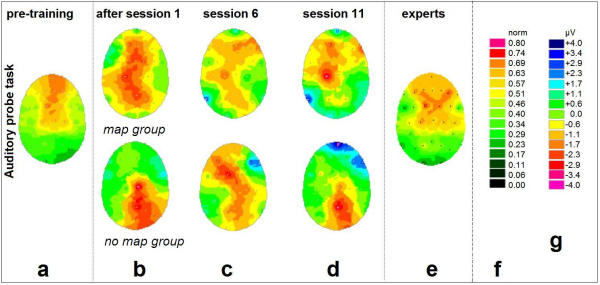

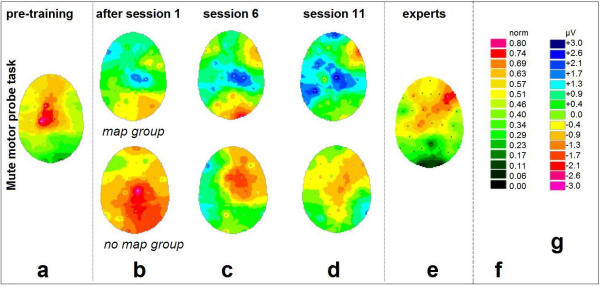

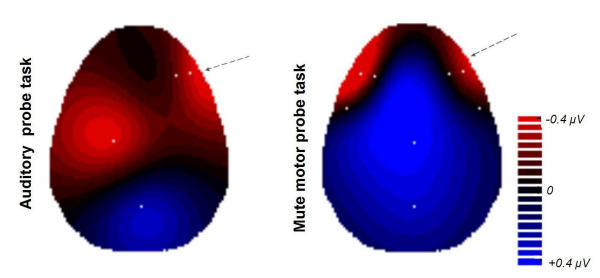

The results of the topographic mapping of the sustained DC values in the time window 1000 ms – 3000 ms after stimulus / movement onset are summarized in Fig. 2 (auditory probe task) and Fig. 3 (motor probe task). The displayed activation patterns are 'electrical top views' onto the unfolded surface of the head (nose pointing upward, the lateral borders covering subtemporal electrodes level with the ears).

Figure 2.

Changes of cortical DC-potentials induced by training: Auditory probe task. The displayed activation patterns are 'electrical top views' onto the unfolded surface of the head. Electrode positions are indicated by white dots, the color values result from interpolation including the four nearest electrodes for each pixel. (a) Initial DC-EEG in the 17 inexperienced subjects prior to first practice. Normalized data. (b) Activation changes (additional negative potential compared to the baseline (a)) after the first 20-minute practice. (c) Activation changes after 20.5 ± 7.9 days of practice (5 sessions). (d) Activation changes after 38.7 ± 11.6 days of practice (10 sessions). (e) group of 9 professional pianists (accumulated practice time = 19.4 ± 6.7 years) while performing the identical experimental paradigm. Normalized data. In (b), (c), and (d): Upper panel: Beginner group 1 ('map' group, n = 9), lower panel: Beginner group 2 ('no-map' group, n = 8). Subtractive data based on individual potential differences. (f) Color scale for normalized panels (a) and (e); (g) Color scale for subtractive panels (b) through (d).
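A minimal sketch of a four-nearest-electrodes interpolation for a single map pixel is given below. Inverse-distance weighting is an assumption, since the text only states that the four nearest electrodes are included; the electrode coordinates and values are hypothetical.

```python
import math

def interpolate_pixel(pixel, electrodes, k=4):
    """Interpolate a map value at pixel from the k nearest electrodes.

    electrodes is a list of ((x, y), value) pairs on the unfolded head
    surface. Assumption: weights are inversely proportional to distance.
    """
    by_distance = sorted(
        (math.dist(pixel, position), value) for position, value in electrodes
    )
    nearest = by_distance[:k]
    if nearest[0][0] == 0.0:
        # The pixel coincides with an electrode: use the measured value.
        return nearest[0][1]
    weights = [1.0 / distance for distance, _ in nearest]
    weighted_sum = sum(w * value for w, (_, value) in zip(weights, nearest))
    return weighted_sum / sum(weights)

# Hypothetical layout: four electrodes on a unit square plus one far away.
example_electrodes = [
    ((0.0, 0.0), -1.0),
    ((1.0, 0.0), -2.0),
    ((0.0, 1.0), -3.0),
    ((1.0, 1.0), -4.0),
    ((10.0, 10.0), 100.0),
]
print(interpolate_pixel((0.5, 0.5), example_electrodes))
```

Because only the four nearest sites contribute, a distant electrode such as the fifth one in the example has no influence on the pixel value.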

Figure 3.

Changes of cortical DC-potentials induced by training: Mute motor probe task. For the general legend, cf. Fig. 2.

EEG data were recorded immediately before and after the 1st session, and before the 6th and 11th sessions, in order to trace the changes induced by single-session practice as well as by the prolonged training. For that purpose, the data obtained before the 1st session provided a subject's individual 'initial state baseline' (Figs. 2a, 3a, both beginner groups). Subtractive values relative to this baseline are depicted for the measurements right after the 1st session (Figs. 2b, 3b), before the 6th (Figs. 2c, 3c), and before the 11th session (Figs. 2d, 3d). Figs. 2e and 3e show the task-related DC-activity of the professional pianists, to be compared to Figs. 2a, 3a.
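The subtractive values can be sketched as individual differences from each subject's own baseline, averaged across the group afterwards. The function name and the example numbers are hypothetical.

```python
def subtractive_map(baseline, later):
    """Group-average change relative to the individual baselines.

    baseline[s][e] and later[s][e] hold the DC value of subject s at
    electrode e. The difference is taken per subject first and only
    then averaged, so each subject serves as their own reference.
    """
    n_subjects = len(baseline)
    differences = [
        [after - before for before, after in zip(base_row, later_row)]
        for base_row, later_row in zip(baseline, later)
    ]
    return [sum(column) / n_subjects for column in zip(*differences)]

# Hypothetical values for two subjects and two electrodes (microvolts).
pre_training = [[-1.0, -2.0], [-3.0, -2.0]]
post_training = [[-2.0, -2.0], [-5.0, -1.0]]
print(subtractive_map(pre_training, post_training))
```

A negative result marks additional negativity, that is, additional activation compared with the initial state.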

DC-EEG: Auditory probe task

Figs. 2a,2b,2c,2d depict the 5-week changes of cortical activation for the passive auditory paradigm. The largest amplitudes with respect to increase as well as decrease were detected as follows: For the pre-training condition (Fig. 2a), the passive auditory musical processing led to activation mainly in frontal and central areas. This was true for both beginner groups.

Initial alterations in cortical activity occurred after only 20 minutes of practice (Fig. 2b). For the 'map' group, immediately following the very first session at the piano, passive listening led to a widespread additional activation in the vicinity of the central sulcus (as estimated by comparison with Fig. 3a), lateralized to the left (only the right hand had been trained). The subsequent measurements (Figs. 2c,2d) were collected before the 6th and 11th training sessions, i.e., the probe tasks were conducted immediately before (instead of after) the attentionally demanding practice session. The interval to the preceding active training was 3.9 ± 2.9 days (mean ± SD). Thus, the effects seen in these data can be expected to be stable phenomena. After 5 sessions (20.5 ± 7.9 days of practice, Fig. 2c), the additional left-lateralized coactivation gradually focused onto the left primary sensorimotor cortex.

After 10 sessions (38.7 ± 11.6 days of practice, Fig. 2d), a clear co-activation of the sensorimotor cortex responsible for the right hand, accompanied by activity in right fronto-temporal electrodes, was observed. In neither condition did the EMG reveal any muscular activity in the digits of the right hand.

The initial parietal activity was reduced. Moreover, the frontal activity showed a salient region in the right hemisphere.

The 'no-map' group displayed non-homogeneous results with a tendency to additional activity in parietal regions that did not change profoundly over the weeks of training. However, interindividual variance was high throughout the data (group standard deviations on the order of ±1 μV at single electrode sites for potential averages with a range of only 5 μV). Whilst the above results remain on a merely descriptive level, we found highly significant changes for the electrode positions C3 (left central) and F10 (right fronto-temporal) in the 'map' group only.

The EEG activation pattern of the professional pianists (Fig. 2e) exhibits activity over frontal, bilateral temporal and central areas. The pattern differs significantly (P < 0.05) from that of the beginners (Fig. 2a).

DC-EEG: Motor probe task

Fig. 3 shows the simultaneous development of DC activity for the silent movement paradigm. In this condition, the largest amplitudes with respect to increases and decreases were detected as follows: For the pre-training condition (Fig. 3a), simple motor execution led to DC potentials in the left primary motor area (right hand). Again, this was true for both beginner groups.

After the first 20-minute practice, the subjects of the 'map' group (Fig. 3b, above) displayed a decrease of task-related activity at the ipsilateral sensorimotor cortex. After 5 sessions (20.5 ± 7.9 days of practice, Fig. 3c), an additional coactivation in the bilateral frontolateral and temporal cortices was observed for the motor task. After 10 sessions (38.7 ± 11.6 days of practice, Fig. 3d), activity in right fronto-temporal electrodes was observed. As in the auditory task, the parietal lobe activity increased only in the first half of the training (until week 3), and had decreased by the end of the five-week period (Figs. 3b,3c,3d). For the movement task the right hand had to be moved anyway (Fig. 3a): this might explain why no significant changes were detected in the contralateral sensorimotor cortex. The most prominent effect is noticeable on the ipsilateral side (Figs. 3b,3c,3d): the ipsilateral sensorimotor cortex (≅ unused left hand) seemed to be inhibited during the very first session and remained in this state throughout the course of the study. Again, in addition to these effects, the development of a somewhat prominent region of right anterior activation was observed. For these two regions, the changes at the corresponding electrode sites (C4 over ipsilateral central area, F10 over right fronto-temporal area) were significant (P < 0.05).

The 'no-map' group displayed highly non-homogeneous data (Figs. 3b,3c,3d, below); no consistent pattern changes were observed during the training.

As in the auditory probe task, interindividual variance was high in every group. Except for the 'map' group's electrode positions as stressed above, no significant effect could be detected.

The EEG activation pattern of the professional pianists (Fig. 3e) exhibits activity over frontal, bilateral temporal and central areas. The pattern differs significantly (P < 0.05) from that of the beginners (Fig. 3a). Interestingly, comparison between the auditory and motor task types within the expert group reveals no significant pattern differences at all, despite the different physical and sensory features of the two task types.

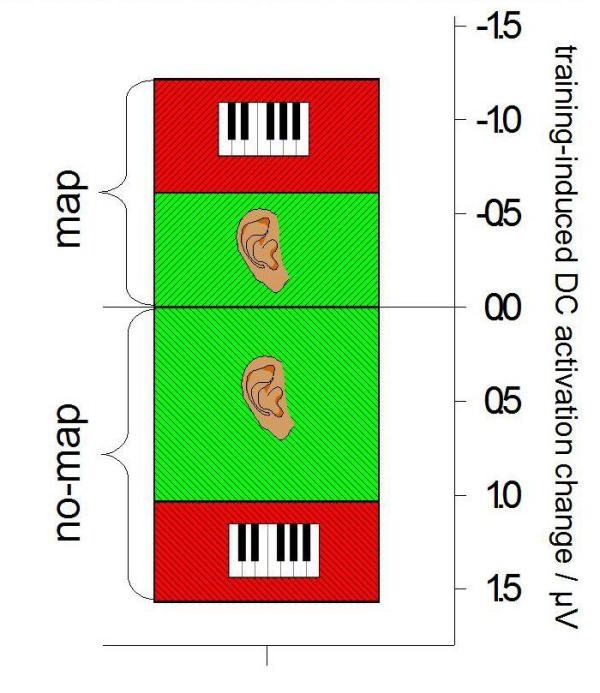

Group Differences

A comparison of the two beginner groups established for this study might help clarify the functional relevance of the right anterior DC-activity that strikingly appears in the auditory as well as in the mute motor task.

The 'no-map' group undertook the same 5-week training with one modification: The assignment of the five relevant notes (pitches) to the five relevant keys, or fingers, respectively, was 'shuffled' after each single training trial, so that this group was not given any chance to figure out any coupling between fingers and notes except the temporal coincidence of keystroke and sound. In other words: those subjects were not given any opportunity to establish an internal 'map' between motor events and auditory pitch targets.

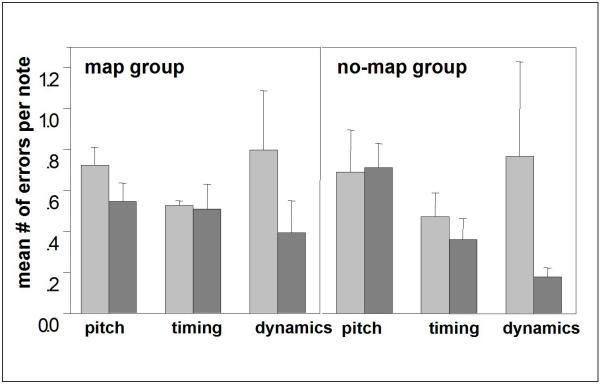

Performance

The 'map' group practiced under conditions of standard piano characteristics; the 'no-map' group practiced with a piano that persistently had its key-to-pitch assignment shuffled. However, the members of the 'no-map' group still had to practice proper timing and fine adjustment of finger forces for the reproduction of rhythm and loudness. In fact, as the behavioral data indicate, the subjects of the 'no-map' group learned to perform these aspects of playing an instrument much better than the 'map' group (Fig. 4): In the first session, both groups made 0.72 ± 0.09 pitch errors (wrong key) per note on average, 0.53 ± 0.02 timing errors (key press > 1/16 note too early/late), and 0.80 ± 0.29 dynamics errors. In the 11th session, the 'map' group scored 0.54 ± 0.09 pitch errors per note, 0.51 ± 0.12 timing errors and 0.39 ± 0.16 dynamics errors, i.e., major improvements were achieved in the pitch domain. The 'no-map' group finally scored 0.71 ± 0.12 pitch errors, 0.36 ± 0.10 timing errors and 0.18 ± 0.05 dynamics errors per note, i.e., they did not improve in mapping keys to pitches; instead, they improved their 'feeling' for timing and dynamics much more than the 'map' group.
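The changes in mean error incidence per note follow directly from the figures quoted above, as this small worked example shows. Only the group means from the text are used; the variable and function names are hypothetical, and the standard deviations are left out.

```python
# Mean error incidences per note, taken from the text.
FIRST_SESSION = {"pitch": 0.72, "timing": 0.53, "dynamics": 0.80}
LAST_SESSION = {
    "map": {"pitch": 0.54, "timing": 0.51, "dynamics": 0.39},
    "no-map": {"pitch": 0.71, "timing": 0.36, "dynamics": 0.18},
}

def error_change(group):
    """Change from the first to the 11th session (negative = fewer errors)."""
    return {
        kind: round(LAST_SESSION[group][kind] - FIRST_SESSION[kind], 2)
        for kind in FIRST_SESSION
    }

for group in LAST_SESSION:
    print(group, error_change(group))
```

In these figures the 'map' group is the only one whose pitch errors drop appreciably (by 0.18 per note, against 0.01 in the 'no-map' group), while the 'no-map' group shows the larger drops in timing errors (0.17 against 0.02) and dynamics errors (0.62 against 0.41).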

Figure 4.

Average error incidences per note of a given melody, divided into pitch errors (note order only), timing errors and dynamics errors. The values of the 'map' group (n = 9) are displayed on the left, those of the 'no-map' group (n = 8) on the right. In each case, the left bar (light gray) dates from the first training session and the adjacent right one (dark gray) from the last session (session 11).

EEG group differences

Fig. 5 illustrates the topographic differences between the two beginner groups employed for the training, after completion of the five weeks of practice. The interpolation map is based on subtractive data of the patterns in Fig. 2d and Fig. 3d, upper panel minus lower panel ('map' group data minus 'no-map' group data, respectively).

Figure 5.

Changes of cortical activity induced by 10 sessions of training. Shown are all inter-group differences between the 'map' group and the 'no-map' group for an electrode selection (white dots) where the two groups differed significantly (P < 0.05). The electrode positions C3 (auditory probe task) and F10 (both task types) differed highly significantly (P < 0.01) between the two groups. Topographic mapping results from two-dimensional 4-neighbors interpolation. RED: Areas where the training-induced changes are more pronounced in the 'map' group than in the 'no-map' group, with respect to cortical activation (i.e., more negative potentials). BLUE: Areas unaffected or inhibited due to the practice, in the 'map' group compared to the 'no-map' group.

In the auditory probe task the main group differences emerged at two regions:

- Left central region differences at electrode sites C3, Cz, FC3, C7 (focus of highly significant difference is C3; P < 0.01). This is located on the left hemisphere, over the sensorimotor cortex for the upper right extremities.

- Right fronto-temporal region differences at electrode sites F8, F10, FT8, FT10, T8 (focus of highly significant difference is F10; P < 0.01). This is over the right fronto-temporal area at an inferior plane (supraorbital-frontal and temporopolar).

These significant activation differences between the groups possibly highlight brain areas that the 'map' group had learned to make use of whilst the 'no-map' group had not. The right fronto-temporal selection of electrode sites (F8, F10, FT8, FT10, T8) that differed between the groups (although at some sites not significantly) was chosen to visualize the voltage group differences in the inset, Fig. 6. The difference is essentially due to two opposing effects, which become even more pronounced when only the highly significant electrode F10 is considered. First, in the 'map' group, the activity of the area concerned had increased (ΔV = -3.95 μV). Second, in the 'no-map' group the activity while processing either kind of task was not merely unchanged but had even decreased compared to the initial state prior to the study (ΔV = +3.37 μV). The difference was highly significant in both conditions (P < 0.01 for both auditory and motor probe task).
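The group contrast at F10 can be worked out from the two ΔV values quoted above. This sketch follows the 'map' minus 'no-map' convention of Fig. 5; the function name is hypothetical, and treating the two quoted values as directly comparable changes in the same condition is an assumption.

```python
# Training-induced potential changes at F10 in microvolts, from the text.
# More negative values mean more cortical activation.
MAP_CHANGE = {"F10": -3.95}
NO_MAP_CHANGE = {"F10": 3.37}

def group_difference(map_change, no_map_change):
    """Per-electrode difference: 'map' group minus 'no-map' group."""
    return {
        site: round(map_change[site] - no_map_change[site], 2)
        for site in map_change
    }

print(group_difference(MAP_CHANGE, NO_MAP_CHANGE))
```

The resulting contrast of -7.32 μV is larger than either change alone because the two groups moved in opposite directions.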

Figure 6.

Changes of cortical activity induced by 10 sessions of training. Activation changes for a right fronto-temporal selection of electrode sites (F8, F10, FT8, FT10, and T8). Tiled box plot. Green bars relate to the passive auditory task, red bars to the silent movement condition. Error bars not displayed; the differences within one condition are highly significant (P < 0.01).

Discussion

Recent studies have demonstrated anterior frontal and temporal areas, predominantly of the right cerebral hemisphere, to be of major importance for real and imagined perception of melodic and harmonic pitch sequences [10-15]. Whereas various other methods (PET [10,11,13], fMRI [2,16], MEG [1,12], rTMS [14]) have provided information about the localization of the different neuronal populations involved in musical perceptual and imagery skills, we utilized DC-EEG to clarify the temporal dynamics of plasticity arising out of this kind of highly specialized sensorimotor training. The authors were aware that questions of localization may be better addressed with other imaging techniques, and thus our principal aim was to design an experiment that allows investigations of functional anatomy with respect to function rather than to anatomy. With respect to cortical activation, the method combines high replicability and excellent temporal resolution. The crucial advantages of this method are non-invasiveness, the possibility of follow-up studies, and – in contrast to fMRI – the lack of accompanying noise, a factor which facilitates measurements in the auditory modality.

A major benefit of the paradigm applied here is a clear separation of parameters. The learned skill involves neither the retrieval of familiar or learned melodies from long-term memory nor the practice of fixed complex motor programs ('sequence learning'). Each training task is randomly generated, though conforming to a set of 'musical' rules, and subjects implicitly practice only how to 'translate' a given auditory target sequence into a corresponding motor program. The sensitive auditory monitoring during the following motor execution (and comparison with the auditory target image in working memory), which is essential in learning to play an instrument, is likely to promote a strong link between the internal representation of 'musical ear' and 'musical hand'.

Therefore, a thorough discussion of the group differences can give important hints towards an interpretation of the overall results.

Whilst a clear coactivation of the sensorimotor hand area (while listening) had developed in Group 1, such an effect was absent in Group 2. Although Group 2 had experienced extensively (several thousand times over a period of five weeks) that every time they operated a key they would produce an audible tone, no common representation of ear and hand was established. It seems that a meaningful organization of perceptual and motion events (stimulus-response consistency) promotes the development of sensorimotor coactivation.

A highly significant group difference appears at the right anterior electrode site F10. The significance is essentially due to an increase in the 'map' group and a decrease in the 'no-map' group. In the latter case, practice reduces the degree to which a cortical area engages in a certain task. The subjects of the 'no-map' group appear to actually unlearn the use of a specific brain area.

This finding has important implications for narrowing down the possible functional role of the right anterior activity. The mastering of a musical instrument is at least a triple task and requires operation of the intended key (string, etc.) with the appropriate force (air pressure, etc.) at the right moment. Yet, Group 2 of this study acquired high timing accuracy for the reproduction of fast complex rhythmic sequences (consisting of up to 12 notes of different lengths within 3 seconds duration) and a considerable implicit feeling for the piano keyboard (i.e., for the dosage of fine finger forces) without putting anterior right networks into action at all. For this reason, we suggest that the actual function of this network is mainly to process the sequential order of pitch patterns, and that the time- and force-dependent features of music making – at least in motor learning – may be processed elsewhere [17].

Despite this, the anterior right region is active in both perceptual and motoric tasks. There is a great deal of evidence, to be discussed in the following, showing that components of right anterior networks are of major importance for perceptual processing, memory and imagery of pitch sequences in musically untrained subjects and, in expert musicians, for imagined musical performance.

As to the question of whether this right anterior region performs audiomotor integration in the first place, or whether its original purpose is perceptual, or rather motoric, we cannot provide an unambiguous answer due to the lack of spatial resolution of the EEG: if the activity originates from (a) the temporal pole, then its function is probably of higher (secondary or tertiary) auditory nature; if it originates from (b) the dorsal frontal lobe, then it is probably motoric; if it originates from (c) ventrolateral or supraorbital parts of the prefrontal cortex, which is most likely according to our data, then it is potentially a map.

Ad (a)

The temporal lobe, and especially the Superior Temporal Gyrus (STG) has on several occasions been found to be involved in perceptual processing [10,11,18-20]. The very same region obviously engages in musical imagery, such as the mental scanning of melodies, or memory retrieval of musical material [10,13,14,18,19,21], which is in keeping with the hypothesis that mental images are produced by the same mechanisms that are responsible for the perception of stimuli within the same modality [22-27].

Ad (b)

However, the possibility that the anterior right region is mainly motoric can also be supported in the present literature. If the activity proves to be frontal, then it would be elucidating to have a look at the left hemisphere again. The Broca area lies on the left side of the cortex, within the frontal lobe compartment of the region homologous to the region in question. One may speculate as to whether or not the parallels are coincidental: the phenomenon of movement (speech production) prepared by sequentially concatenating melodic elements (phonological entities), and the reverse sensorimotor involvement in melody (speech) perception are examples of such parallels. The 'motor theory' of speech perception [28] assumes that interpretation of the acoustic structure of speech is possible in terms of a motor program, suggesting a functional neuroanatomy which does not only hold the classical Wernicke area responsible for the comprehension of speech [29,30]. Could, then, a putative 'right Broca' be an abstract sort of motor area as well, designed to comprehend melodies? Pihan et al. [31] have demonstrated the importance of this module for the correct perception of prosody, i.e. the affective melody of verbal phrases. Maess et al. [32] demonstrated that processing music-syntactic incongruities produces early right-anterior negativity (ERAN) in MEG- as well as in EEG-signals. The ERAN is localized in Broca's area and its right-hemispheric homologue. These areas are involved in syntactic analysis during auditory language comprehension. Generally, structures of the left hemisphere of the cortex are often conceived of as high-resolution analyzers in the time domain, whereas precise frequency analysis is usually ascribed to structures of the right hemisphere [33].

Ad (c)

However, as there are equally worthwhile arguments for reasoning that the anterior right activity in our auditory and motor tasks exhibits both auditory and motor facets of a genuine ear-to-hand (key-to-pitch, respectively) interface map, the authors favor the interpretation of the region at hand as being an audio-motor integrator: The frontal lobe has been shown to engage in a broad variety of multimodal and sensorimotor interface functions in studies covering species from bat to primate [e.g. 34, 35], and the anterior right region unveiled in this study might just contribute another one – highly specific to music performance.

Since this region, the temporal as well as the prefrontal part [10,15], has been demonstrated to be involved in the perception of music with respect to pitch features – such as melodic or harmonic structures, this effect could provide an explanation why skilled pianists often state that silent practice creates an apparent auditory impression (or illusion) of what they are 'playing', and – vice versa – that listening devotedly to a colleague's recital is experienced as playing along rather than just passively perceiving. One concern that has not yet been thoroughly addressed is the strong quality of automatization emerging from the results. The coupling of auditory and motoric processing demonstrated in this study reveals itself to be so strong that the experimental stimulation of one of those two partial representations alone produces an automatic [12,36,37] co-activation of the other. The occurrence of this characteristic co-activity does not depend upon the degree of attention to a task. The circuits in question seem to be active even when the subject is unaware.

Conclusions

Many studies so far have recruited either non-musicians or professional musicians as subjects, which provides no evidence as to whether functional specialization is brought about only by lifetime practice (which has been suggested to possibly even alter macrostructural anatomy [40-42]). Therefore, rather than comparing two groups of subjects, the present study monitors one group of participants during the plastic cortical reshaping process induced by audiomotor practice at the piano. Despite the non-homogeneous data due to interindividual variance in a complex and possibly largely strategy-dependent task, the investigation yields a number of significant major results, which can be summarized as follows:

- Piano practice leads to an increase in the task-related DC-EEG for simple probe tasks. The activation increases appear in the left central and right anterior regions if the training is performed with the right hand. It should be stressed that both regions show an increase of DC-EEG regardless of whether the design of the probe task is purely auditory or purely motoric.

- The observed effect of auditory-sensorimotor coactivation emerges in the first few minutes of training and is firmly established within a few weeks.

- The right anterior activation is linked to the establishment of a note-to-key map and is absent in the control condition.

The question of how musicians experience this inseparable bimodal representation of instrumental skills remains open, and it reveals parallels to what is known about mental imagery of music. The representations of temporally extended physical stimuli (melodies) must themselves extend over time, a rule which applies to auditory [38] as well as to visual stimuli [39]. Our subjects faithfully report similar sensations of mentally 'scanning' the auditory image along with the fingerings.

In conclusion, the anterior right region that appears active in both kinds of probe tasks seems to have properties of a supramodal neural network suitable for translating sound into motion, i.e., it may provide musicians with an interface map. Contrary to a common notion amongst music educators, the establishment of a close ear-to-hand link is not the goal that eventually crowns decades of practice; rather, it commences within the first weeks of practice and may form the basis for any instrumental skills that can be accomplished in a pianist's career.

Methods

Two different sensorimotor paradigms were established for the study: one for training and one for testing.

- In the actual measurement of the event-related DC-EEG (see below), the auditory and motoric features of piano playing were dissociated (probe task paradigm), whereas the training procedure aimed at sensorimotor binding by providing a regular piano situation with instant auditory feedback for each keystroke (training paradigm).

Before the first practice session, right after the first practice session, after three weeks and after completion of the training, the EEG probe task paradigm was carried out in order to evaluate changes that are introduced to the EEG as a result of the training paradigm. Fig. 7 outlines the arrangement of testing and training sessions.

Figure 7.

Five-week schedule for the non-musicians. Schematic diagram of the training/testing sessions for the piano practice study. Chronological order of the sessions is top to bottom; order of sub-sessions within one day is left to right.

Subjects

Two groups of musically inexperienced subjects (17 right-handed students [43] with no formal instrumental training whatsoever; 9 male, 8 female; mean age 26.2 ± 5.3 years) were trained in the training paradigm and tested in the EEG probe task paradigm (see below).

Additionally, nine right-handed professional pianists (5 male, 4 female, mean age 25.0 ± 6.2 years; with an accumulated lifetime practice experience of 19.4 ± 6.7 years of daily practice) were tested in the EEG probe task paradigm (see below). They, of course, did not have to undergo the training procedure.

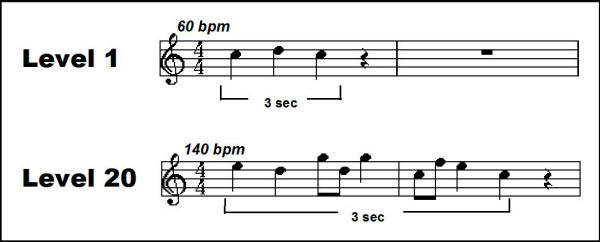

The training paradigm

The beginners were trained over a period of five weeks (10 sessions) to re-play acoustically presented melodies with their right hand as precisely as possible with respect to note order, rhythmic timing and loudness of the piano keystrokes. Using an interactive computer system, the subjects listened to 3-second piano melodies in the note range c'-g' (Table 1) and, after a 4-second pause, tried to re-play the melodies with the five digits of their right hand as accurately as possible. The system was adaptive: an online performance analysis based on MIDI data of the re-played melodies determined the tempo and complexity of subsequently presented targets (Fig. 8). Three categories of weighted errors contributed to the rating criterion: pitch errors (the wrong key was pressed), timing errors (a key was pressed at the wrong time; deviations larger than 1/16 were regarded as metric errors, smaller deviations as rhythmic errors), and dynamic errors (MIDI velocity differing from the target loudness by more than 10%). The practice session was terminated by the software when a subject's performance curve reached exponential saturation, i.e. when no further improvement was achieved in that session, which was the case after about 20 minutes. The subjects' only feedback on whether a trial was solved successfully was an implicit one: they heard themselves playing. No visual or verbal cues (such as tone names, score notation or even their own hands visible on the piano keys) were permitted, in order to ensure that the practiced skill involved only features of auditory-to-sensorimotor integration in the domains of perception and self-monitored motor execution.
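The three error categories can be illustrated with a minimal Python sketch. It is not the authors' training software: the `Keystroke` record, the function names, and the `timing_tolerance_s` parameter are hypothetical, "1/16" is read here as the duration of a sixteenth note at the current tempo, and the weights applied to each category are left out because the text does not state them.

```python
from dataclasses import dataclass


@dataclass
class Keystroke:
    pitch: int       # MIDI note number
    onset_s: float   # onset time in seconds, relative to melody start
    velocity: int    # MIDI velocity (1-127)


def sixteenth_duration_s(bpm: float) -> float:
    # One beat (crotchet) lasts 60/bpm seconds; a sixteenth is a quarter of that.
    return 60.0 / bpm / 4.0


def classify_errors(target: Keystroke, played: Keystroke, bpm: float,
                    timing_tolerance_s: float = 0.05) -> list:
    """Return the error categories for one re-played keystroke.

    timing_tolerance_s is a hypothetical threshold below which a timing
    deviation is not counted at all; the paper does not specify one.
    """
    errors = []
    if played.pitch != target.pitch:
        errors.append("pitch")
    deviation = abs(played.onset_s - target.onset_s)
    if deviation > sixteenth_duration_s(bpm):
        errors.append("metric")      # deviation larger than 1/16
    elif deviation > timing_tolerance_s:
        errors.append("rhythmic")    # deviation smaller than 1/16
    if abs(played.velocity - target.velocity) > 0.10 * target.velocity:
        errors.append("dynamic")     # velocity off by more than 10%
    return errors


target = Keystroke(pitch=60, onset_s=1.0, velocity=80)
print(classify_errors(target, Keystroke(62, 1.5, 100), bpm=60))
print(classify_errors(target, Keystroke(60, 1.1, 84), bpm=60))
```

At 60 bpm a sixteenth lasts 0.25 s, so the first keystroke (0.5 s late, wrong key, velocity off by 25%) collects a pitch, a metric and a dynamic error, while the second (0.1 s late, velocity off by 5%) collects only a rhythmic error.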

Table 1.

Summary of the general rules for melodic stimulus synthesis by the interactive training software. Each melody was presented acoustically to the subject, who had to remember it for 4 seconds and then immediately replay it on a piano keyboard. The 'level' l (left column) is an arbitrary measure of the level of complexity the subject is able to reproduce properly and therefore a measure of actual skill, making it possible to automatically adjust the task difficulty during practice. The table specifies how the parameters pitch range, number of beats (crotchets), number of additional quavers and tempo (bpm) depend on the given level.

| Level l | Range | Number of beats (1/4 notes) | Number of additional 1/8 notes | Tempo (bpm) |
| --- | --- | --- | --- | --- |
| 1 | C-D | 3 | - | 60 |
| 2 | C-E | 3 | - | 60 |
| 3 | C-F | 3 | - | 60 |
| 4 | C-G | 3 | - | 60 |
| 5 | C-G | 4 | - | 80 |
| 6 | C-G | 4 | 1 | 80 |
| 7 | C-G | 4 | 1 | 80 |
| 8 | C-G | 5 | 1 | 100 |
| 9 | C-G | 5 | 1 | 100 |
| 10 | C-G | 5 | 1 | 100 |
| 15 | C-G | 6 | 2 | 120 |
| 20 | C-G | 7 | 3 | 140 |

Figure 8.

Two examples of the auditory targets generated by the training software, with musical notation. bpm: beats per minute – tempo is adjusted in order to constrain the duration of each stimulus to 3 sec. Level l = 1: Pitch range = 2 (C and D), beats = 3, additional quavers = 0, bpm = 60; Level l = 20: Pitch range = 5 (C through G, white keys), beats = 7, additional quavers = 2, bpm = 140.
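The statement that tempo is adjusted to keep every stimulus at 3 seconds can be checked against the rows of Table 1: a melody of b beats at tempo t lasts b × 60 / t seconds. The short Python sketch below does this arithmetic. The tuple layout and function names are illustrative, and the note count assumes that each additional quaver subdivides a beat rather than lengthening the melody, which the text does not state explicitly.

```python
# (level, beats, additional_quavers, bpm), copied from the rows of Table 1.
TABLE_1 = [
    (1, 3, 0, 60), (2, 3, 0, 60), (3, 3, 0, 60), (4, 3, 0, 60),
    (5, 4, 0, 80), (6, 4, 1, 80), (7, 4, 1, 80),
    (8, 5, 1, 100), (9, 5, 1, 100), (10, 5, 1, 100),
    (15, 6, 2, 120), (20, 7, 3, 140),
]


def stimulus_duration_s(beats: int, bpm: float) -> float:
    # Each beat lasts 60/bpm seconds.
    return beats * 60.0 / bpm


def note_count(beats: int, additional_quavers: int) -> int:
    # Assumption: every additional quaver splits one crotchet into two notes,
    # adding one note without changing the total duration.
    return beats + additional_quavers


for level, beats, quavers, bpm in TABLE_1:
    duration = stimulus_duration_s(beats, bpm)
    print(f"level {level:2d}: {note_count(beats, quavers)} notes, {duration:.1f} s")
```

Every listed level comes out at 3.0 s, because the tempo in the table is always 20 bpm per beat.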

The total training period for each subject consisted of 10 single 20-minute-sessions, two sessions a week (Fig. 7).

Stimulus generation

The level of complexity of the real-time synthesized auditory piano patterns is determined by the parameters note range, number of notes to be concatenated, tempo, and rhythm. An increasing level of difficulty is accompanied by increasing values for these parameters (Table 1). Additionally, the generating algorithm follows pitch transition probabilities customary in classical European music. Fig. 8 gives two examples of typical stimuli.

Stimuli were D/A converted by a PC sound card (TerraTec™ Maestro) and delivered to the subject with an active loudspeaker (Klein & Hummel) at a distance of 90 cm in front of the subject (approximately acoustic free field conditions). The melodies were presented at an average sound pressure level of 73 dB(A). Inter-stimulus silence in the lab was measured at 39.8 dB(A).

Two experimental groups of beginners

The beginners' pool was subdivided into two groups: Both groups had to perform the training procedure as described above.

Group 1 ('map' group, 9 subjects, 5 male, 4 female) worked with a digital piano featuring a conventional key-to-pitch and force-to-loudness assignment.

Group 2 ('no-map' group, 8 subjects, 4 male, 4 female) ran the same paradigm and the same five-week training program but the five relevant notes (pitches) were randomly reassigned to the five relevant keys, or fingers, respectively, after each single training trial.
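The difference between the two conditions can be sketched in a few lines of Python. This is only an illustration of the idea, not the software used in the study: the MIDI note numbers for c'-g' (60, 62, 64, 65, 67) and the function names are assumptions, and the text does not say whether a shuffle that happens to reproduce the conventional assignment was excluded.

```python
import random

# The five white keys c'-g', written as MIDI note numbers (an assumption;
# the paper names the keys but not their encoding).
KEYS = [60, 62, 64, 65, 67]


def conventional_map() -> dict:
    """'Map' group: every key sounds its own pitch."""
    return {key: key for key in KEYS}


def shuffled_map(rng: random.Random) -> dict:
    """'No-map' group: the five pitches are randomly reassigned to the keys."""
    pitches = KEYS[:]
    rng.shuffle(pitches)
    return dict(zip(KEYS, pitches))


rng = random.Random(0)  # seeded only to make the sketch reproducible
for trial in range(3):
    # A fresh assignment is drawn after each single training trial.
    print(trial, shuffled_map(rng))
```

Because the assignment changes after every trial, a 'no-map' subject still hears a tone for each keystroke but cannot build a stable key-to-pitch association.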

DC-EEG Measurement

Auditory Probe Task and Motor Probe Task

During the measurement of the event-related DC-EEG (direct voltage electroencephalography), the auditory and motoric features of piano performance were dissociated: The probe tasks during the EEG required either passive listening or silent finger movement, and were therefore either purely auditory or purely motoric.

After obtaining written consent, the volunteers were placed in an optically and acoustically insulated chamber in front of a sight-shaded piano keyboard. Only a fixation dot and some instructional icons/prompts were presented on a black screen. The subjects' event-related slow DC-EEG-potentials were measured either while

- passively listening to 3-second monophonic piano sequences (Auditory Probe Task, 60 recordings) or while

- arbitrarily pressing keys on a soundless piano keyboard (Motor Probe Task, 60 recordings).

The participants were instructed to do either kind of task without any demands being specified. For the mute motor task, the five digits of the right hand were placed on the five white keys c'-g', corresponding to the ambitus of the melodies in the auditory tasks. The total duration of the movement was constrained to 3 seconds by computer instructions. The trigger for the epoching of event-related DC-potentials was given at onset of the first note of the melody in the auditory task, and on the first keystroke in the mute motor task.

The stimuli were real-time synthesized by the same algorithm as in the adaptive training, the level of difficulty being held constant (l = 10) at any time.

By not linking the test events of either kind of task to any performance demands, the paradigm aimed at recruitment of neuronal networks that – after the training – would continue as automatic rather than intentional.

Data acquisition

The subjects' event-related slow EEG potentials were recorded from the scalp by non-polarizable Ag/AgCl electrodes with an electrode impedance of less than 1 kΩ at 30 electrode sites, with linked mastoid electrodes as a reference. The electrodes were mounted on an EasyCap™ and distributed across the whole scalp according to a modified 10–20 system [44] including additional subtemporal electrodes. Electrode sites FT9, FPZ, FT10, F7, F3, FZ, F4, F8, FT7, FC3, FC4, FT8, T7, C3, CZ, C4, T8, P7, P9, P10, P8, TP7, P3, PZ, P4, TP8, O1, O2, F9, and F10 were included.

Stabilization of the electrode potential and reduction of the skin potential were achieved using the method for high quality DC-recordings described by Bauer et al. [45]. DC potentials were amplified by a 32-channel SynAmps™ and recorded by means of NeuroScan™ (sampling rate 400 s⁻¹, low pass filter = 40 Hz, 24 dB/octave, high pass filter = DC).

Vertical and horizontal eye movements (VEOG and HEOG, respectively) were recorded simultaneously for artifact control. Only trials without eye movements, artifacts from Galvanic skin reflex (sweat) or DC-drifts were accepted for averaging. To rule out the possibility that a putative cortical motor DC activation accompanying the auditory probe tasks generated an actual efferent, i.e. supra-threshold, outflow of motor commands, a surface electromyogram (EMG) of the superficial finger flexors and extensors of the right hand was recorded simultaneously with the EEG while auditory stimuli were delivered.

Data analysis / Statistics

Task-related DC shifts typically build up within 500–1000 ms after task onset and then level to a plateau that is sustained until (and beyond) the end of the stimulus/task [7]. Baseline correction was obtained by equating the mean of the preperiod with zero. Hence, the temporal average of the scalp potential in the time window [1000 ms to 3000 ms after task onset] served as the DC plateau value and was fed to further analysis and the presented imaging, respectively.
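The baseline correction and plateau extraction described above amount to two averages per epoch and electrode. The following Python sketch shows one way to compute them. The 400 samples per second come from the recording settings given in this paper; the function name, the list-based epoch layout and the 1-second pre-period in the example are assumptions made for illustration.

```python
from statistics import fmean

SAMPLING_RATE_HZ = 400  # sampling rate stated in the data acquisition settings


def dc_plateau(epoch: list, pre_samples: int) -> float:
    """Baseline-correct one single-electrode epoch and return its DC plateau.

    epoch holds the samples of one epoch: pre_samples samples before task
    onset, followed by at least 3 s of samples after task onset.
    """
    # Baseline correction: set the mean of the pre-period to zero.
    baseline = fmean(epoch[:pre_samples])
    corrected = [value - baseline for value in epoch]
    # Plateau: temporal average over 1000 ms to 3000 ms after task onset.
    start = pre_samples + int(1.0 * SAMPLING_RATE_HZ)
    stop = pre_samples + int(3.0 * SAMPLING_RATE_HZ)
    return fmean(corrected[start:stop])


# Synthetic epoch (made-up values, not data): 1 s pre-period at 2.0,
# 1 s build-up held at 2.0, then a plateau at -8.0 for 2 s.
pre = [2.0] * 400
post = [2.0] * 400 + [-8.0] * 800
print(dc_plateau(pre + post, pre_samples=400))
```

For the synthetic epoch the baseline is 2.0, so the corrected plateau value is -10.0.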

Electrode sites at which the DC increase/decrease was not consistently related to task execution, i.e. not significantly (p ≥ 0.01) related to the triggering events of sound onset/movement onset throughout all artifact-free epochs, were rejected (for the respective session and subject only). This procedure serves as a DC-artifact criterion. A minimum of 45 trials without artifacts was required from each subject for averaging purposes.

EEG data were recorded immediately before and after the 1st, before the 6th, and as a separate 11th session, in order to trace the changes induced by single session practice as well as by the prolonged training. For that purpose, the data obtained before the 1st session provided a subject's individual 'initial state baseline'. In addition, this initial measurement gave coarse information about individual anatomical properties, e.g., activation foci of motor cortical activity.

Mean values during the stimulus and the task period were subjected to statistical analysis by repeated-measures analysis of variance (ANOVA) incorporating one group factor ('no-map' vs. 'map' group) and four within-subjects factors: period (auditory stimulus period vs. motor task period), recording (before or after practice within one session), phase of training (1, 5, or 10 sessions of practice), and electrode position (30 positions according to the 10–20 system).

Longitudinal differences were obtained individually for each subject, each compared to the 'initial state baseline': subtractive values were calculated for the measurements right after the 1st session, from the 6th, and from the 11th session. Average data for all subjects were used to create a Grand Average. For longitudinal differences and group differences, a criterion of p < 0.05 (resulting from ANOVA) was applied. For the between-group comparison to the professional group (since within-subject plasticity changes were not available from the professionals), differences in distribution rather than amplitude were demonstrated by ANOVA based on normalized data [46]. Pattern comparisons were based on Pearson's correlation coefficient (applied for between-group differences). Behavioral data were obtained from the same error measures that served as the level adaptation criterion during the interactive training (see above). The error parameters (pitches, keystroke times, and keystroke force/loudness) in each trial were normalized to show the number of errors per keystroke rather than per task, then averaged per session and per group. Standard deviations were calculated.
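Two of the steps in this paragraph, the subtraction of the 'initial state baseline' and the pattern comparison by Pearson's correlation coefficient, can be sketched in plain Python. The function names and the dictionary layout (electrode site mapped to DC plateau value) are assumptions, and the numbers in the example are made up for illustration and are not data from the study.

```python
from math import sqrt


def difference_map(session: dict, baseline: dict) -> dict:
    """Subtract the initial-state baseline from a later session, per electrode."""
    return {site: session[site] - baseline[site] for site in baseline}


def pearson_r(x: list, y: list) -> float:
    """Pearson's correlation coefficient between two equally long value lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Made-up plateau values for three electrode sites.
baseline = {"C3": -1.0, "F4": -1.5, "PZ": -0.5}
after_training = {"C3": -5.0, "F4": -2.0, "PZ": -0.5}
diff = difference_map(after_training, baseline)
print(diff)

# Comparing two scalp patterns: the sites must be read in the same order.
sites = sorted(baseline)
print(pearson_r([baseline[s] for s in sites], [after_training[s] for s in sites]))
```

Because Pearson's r is insensitive to overall amplitude, it compares the shape of two scalp distributions rather than their strength, which fits the stated aim of showing differences in distribution rather than amplitude.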

Authors' contributions

MB designed the paradigm, carried out the experiments, performed the statistical analysis and drafted the manuscript. EA participated in the design and coordination of the study, in the acquisition of subjects, and in discussion and general conclusions. Both authors read and approved the final manuscript.

Acknowledgments

We are grateful to Dietrich Parlitz for co-designing the paradigm, to Anke Pirling for assistance in data analysis, to Michael Grossbach for inspiring discussions on the issue, to Josephine Vains for proof-reading, and to all of the volunteers for spending their precious time at our piano (particularly to the ones in the 'cheated' group). The investigators were supported by the German Research Foundation (DFG) as participants of the priority programme SPP1001 'sensorimotor integration'.

Contributor Information

Marc Bangert, Email: mbangert@bidmc.harvard.edu.

Eckart O Altenmüller, Email: altenmueller@hmt-hannover.de.

References

- Haueisen J, Knösche TR. Involuntary motor activity in pianists evoked by music perception. J Cogn Neurosci. 2001;13:786–92. doi: 10.1162/08989290152541449.

- Scheler G, Lotze M, Braitenberg V, Erb M, Braun C, Birbaumer N. Musician's brain: balance of sensorimotor economy and frontal creativity [abstract]. Soc Neurosci Abstr. 2001;27:76.14.

- Bangert M, Parlitz D, Altenmüller E. Cortical audio-motor corepresentation in piano learning and expert piano performance [abstract]. Soc Neurosci Abstr. 1998;24:658.

- Pascual-Leone A, Dang N, Cohen LG, Brasil-Neto JP, Cammarota A, Hallett M. Modulation of muscle responses evoked by transcranial magnetic stimulation during the acquisition of new fine motor skills. J Neurophysiol. 1995;74:1037–1045. doi: 10.1152/jn.1995.74.3.1037.

- Classen J, Liepert J, Wise SP, Hallett M, Cohen LG. Rapid plasticity of human cortical movement representation induced by practice. J Neurophysiol. 1998;79:1117–1123. doi: 10.1152/jn.1998.79.2.1117.

- Jäncke L, Shah NJ, Peters M. Cortical activations in primary and secondary motor areas for complex bimanual movements in professional pianists. Brain Res Cogn Brain Res. 2000;10:177–83. doi: 10.1016/s0926-6410(00)00028-8.

- Speckmann EJ, Elger CE. Introduction to the neurophysiological basis of the EEG and DC potentials. In: Niedermeyer E, Lopes da Silva F, editors. Electroencephalography: Basic principles, clinical applications, and related fields. 4th ed. Williams & Wilkins, Baltimore; 1998. pp. 15–27.

- Altenmüller EO, Gerloff C. Psychophysiology and the EEG. In: Niedermeyer E, Lopes da Silva F, editors. Electroencephalography: Basic principles, clinical applications, and related fields. 4th ed. Williams & Wilkins, Baltimore; 1998. pp. 637–655.

- Gerloff C, Grodd W, Altenmüller E, Kolb R, Naegele T, Klose U, Voigt K, Dichgans J. Coregistration of EEG and fMRI in a Simple Motor Task. Human Brain Mapping. 1996;4:199–209. doi: 10.1002/(SICI)1097-0193(1996)4:3<199::AID-HBM4>3.0.CO;2-Z.

- Zatorre RJ, Evans AC, Meyer E. Neural mechanisms underlying melodic perception and memory for pitch. J Neurosci. 1994;14:1908–1919. doi: 10.1523/JNEUROSCI.14-04-01908.1994.

- Griffiths TD, Büchel C, Frackowiak RS, Patterson RD. Analysis of temporal structure in sound by the human brain. Nature Neuroscience. 1998;1:422–427. doi: 10.1038/1637.

- Tervaniemi M, Kujala A, Alho K, Virtanen J, Ilmoniemi RJ, Näätänen R. Functional specialization of the human auditory cortex in processing phonetic and musical sounds: A magnetoencephalographic (MEG) study. Neuroimage. 1999;9:330–336. doi: 10.1006/nimg.1999.0405.

- Halpern AR, Zatorre RJ. When that tune runs through your head: A PET investigation of auditory imagery for familiar melodies. Cerebral Cortex. 1999;9:697–704. doi: 10.1093/cercor/9.7.697.

- Halpern AR. Cerebral Substrates of Musical Imagery. In: Zatorre RJ, Peretz I, editors. The Biological Foundations of Music. Ann N Y Acad Sci. Vol. 930. 2001. pp. 179–192.

- Kölsch S, Gunter TC, Friederici AD. Brain Indices of Music Processing: 'Non-musicians' are musical. J Cogn Neurosci. 2000;12:520–41. doi: 10.1162/089892900562183.

- Langheim FJ, Callicott JH, Mattay VS, Bertolino A, Frank JA, Weinberger DR. Cortical systems activated during imagined musical performance [abstract]. Soc Neurosci Abstr. 1998;24:434.

- Penhune VB, Zatorre RJ, Evans AC. Cerebellar contributions to motor timing: A PET study of auditory and visual rhythm. J Cogn Neurosci. 1998;10:752–765. doi: 10.1162/089892998563149.

- Zatorre RJ, Halpern AR. Effect of unilateral temporal-lobe excision on perception and imagery of songs. Neuropsychologia. 1993;31:221–232. doi: 10.1016/0028-3932(93)90086-f.

- Zatorre RJ, Halpern AR, Perry DW, Meyer E, Evans AC. Hearing in the mind's ear: A PET investigation of musical imagery and perception. J Cogn Neurosci. 1996;8:29–46. doi: 10.1162/jocn.1996.8.1.29.

- Samson S, Zatorre RJ. Melodic and harmonic discrimination following unilateral cerebral excision. Brain Cogn. 1988;7:348–360. doi: 10.1016/0278-2626(88)90008-5.

- Zatorre RJ, Samson S. Role of the right temporal neocortex in retention of pitch in auditory short-term memory. Brain. 1991;114:2403–2417. doi: 10.1093/brain/114.6.2403.

- Farah MJ. Psychophysical evidence for a shared representational medium for mental images and percepts. J Exp Psychol Gen. 1985;114:91–103. doi: 10.1037//0096-3445.114.1.91.

- Farah MJ. Is visual imagery really visual? Overlooked evidence from neuropsychology. Psychol Rev. 1988;95:307–317. doi: 10.1037/0033-295x.95.3.307.

- Finke RA. Theories relating mental imagery to perception. Psychol Bull. 1985;98:236–259.

- Finke RA, Shepard RN. Visual functions of mental imagery. In: Boff KR, Kaufman L, Thomas JP, editors. Handbook of perception and human performance, 2: Cognitive processes and performance. Wiley, New York; 1986. pp. 37.1–37.55.

- Kosslyn SM. Image and mind. Harvard University Press: Cambridge MA; 1980.

- Kosslyn SM. Seeing and imagining in the cerebral hemispheres: A computational approach. Psychol Rev. 1987;94:148–175.

- Liberman A. Speech: A special code (Learning, development, and conceptual change series). MIT Press; 1995.

- Aboitiz F, Garcia R. The anatomy of language revisited. Biol Res. 1997;30:171–183.

- Price CJ, Wise RJ, Warburton EA, Moore CJ, Howard D, Patterson K, Frackowiak RS, Friston KJ. Hearing and saying. The functional neuro-anatomy of auditory word processing. Brain. 1996;119:919–931. doi: 10.1093/brain/119.3.919.

- Pihan H, Altenmüller E, Hertrich I, Ackermann H. Cortical activation patterns of affective speech processing depend on concurrent demands on the subvocal rehearsal system. Brain. 2000;123:2338–2349. doi: 10.1093/brain/123.11.2338.

- Maess B, Koelsch S, Gunter TC, Friederici AD. Musical syntax is processed in Broca's area: an MEG study. Nat Neurosci. 2001;4:540–5. doi: 10.1038/87502.

- Zatorre RJ, Belin P. Spectral and temporal processing in human auditory cortex. Cereb Cortex. 2001;11:946–53. doi: 10.1093/cercor/11.10.946.

- Eiermann A, Esser KH. The hunt for the cortical audio-motor interface in bats [abstract]. Assoc Res Otolaryngol Abs. 1999;22:188.

- Rizzolatti G, Arbib MA. Language within our grasp. TINS. 1998;21:188–194. doi: 10.1016/s0166-2236(98)01260-0.

- Tervaniemi M, Ilvonen T, Karma K, Alho K, Näätänen R. The musical brain: brain waves reveal the neurophysiological basis of musicality in human subjects. Neurosci Lett. 1997;226:1–4. doi: 10.1016/s0304-3940(97)00217-6.

- Kölsch S, Schröger E, Tervaniemi M. Superior pre-attentive auditory processing in musicians. Neuroreport. 1999;10:1309–1313. doi: 10.1097/00001756-199904260-00029.

- Halpern AR. Mental scanning in auditory imagery for songs. J Exp Psychol Learn Mem Cogn. 1988;14:434–43. doi: 10.1037//0278-7393.14.3.434.

- Shepard RN, Metzler J. Mental rotation of three-dimensional objects. Science. 1971;171:701–703. doi: 10.1126/science.171.3972.701.

- Schlaug G, Jäncke L, Huang Y, Staiger JF, Steinmetz H. Increased corpus callosum size in musicians. Neuropsychologia. 1995;33:1047–1055. doi: 10.1016/0028-3932(95)00045-5.

- Pantev C, Oostenveld R, Engelien A, Ross B, Roberts LE, Hoke M. Increased auditory cortical representation in musicians. Nature. 1998;392:811–814. doi: 10.1038/33918.

- Elbert T, Pantev C, Wienbruch C, Rockstroh B, Taub E. Increased cortical representation of the fingers of the left hand in string players. Science. 1995;270:305–307. doi: 10.1126/science.270.5234.305.

- Oldfield RC. The Assessment and Analysis of Handedness: The Edinburgh Inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Jasper HH. The ten-twenty electrode system of the international federation. Electroencephalogr Clin Neurophysiol. 1958;10:371–375.

- Bauer H, Korunka C, Leodolter M. Technical requirements for high-quality scalp DC recordings. Electroenc Clin Neurophysiol. 1989;72:545–547. doi: 10.1016/0013-4694(89)90232-0.

- McCarthy G, Wood CC. Scalp distributions of event-related potentials: an ambiguity associated with analysis of variance models. Electroencephalogr Clin Neurophysiol. 1985;62:203–8. doi: 10.1016/0168-5597(85)90015-2.