Abstract

Multiple sources of information from the face guide attention during social interaction. The present study modified the Posner cueing paradigm to investigate how dynamic changes in emotional expression and eye gaze in faces affect the neural processing of subsequent target stimuli. Event-related potentials (ERPs) were recorded while participants viewed centrally presented face displays in which gaze direction (left, direct, right) and facial expression (fearful, neutral) covaried in a fully crossed design. Gaze direction was not predictive of peripheral target location. ERP analysis revealed several sequential effects, including: (1) an early enhancement of target processing following fearful faces (P1); (2) an interaction between expression and gaze (N1), with enhanced target processing following fearful faces with rightward gaze; and (3) an interaction between gaze and target location (P3), with enhanced processing for invalidly cued left visual field targets. Behaviorally, participants responded faster to targets following fearful faces and targets presented in the right visual field, in concordance with the P1 and N1 effects, respectively. The findings indicate that two nonverbal social cues—facial expression and gaze direction—modulate attentional orienting across different temporal stages of processing. Results have implications for understanding the mental chronometry of shared attention and social referencing.

Keywords: Facial affect, Positive psychology, Evoked potentials, Shared attention

INTRODUCTION

During the course of social interaction it is important to be aware of the attentional focus and internal state of the person with whom you are engaged. Attentional focus is most readily inferred by noticing the direction of one’s gaze, although other cues such as head orientation, body posture, and pointing are also used in social communication. Where someone is looking is typically interpreted as a direct indication of what he or she is paying attention to, which in turn can affect one’s own focus of attention. The common focus of attention across individuals in dyadic interactions is termed shared or joint attention (Corkum & Moore, 1994).

In addition to using social cues from the face and body to identify another person’s spatial distribution of attention in the environment, emotional expression is read during social communication to interpret feeling states of others and to predict their potential actions toward oneself and other objects in the environment. For instance, if there is a change in a partner’s expression from bored to happy, one may determine that something in the social context is pleasing to the partner, which may foster additional social engagement and prolong the interaction. When changes in emotional expression are combined with gaze shifts, the social cues of the partner provide additional information that directs one’s actions toward or away from other stimuli in the environment. The role of gaze shifts is particularly important for some emotions, such as fear, where the meaning of the emotion is ambiguous until the source of the emotional change is discerned (i.e., to identify where the threat is located; Whalen et al., 2001). A related concept in developmental psychology is social referencing, in which a toddler will use emotional expression and attention-directing cues displayed by a parent to determine whether or not to approach or withdraw from a novel toy or stranger (Klinnert, Campos, Sorce, Emde, & Svejda, 1983). Thus, the combination of multiple social signals from a partner permits inferences regarding the salience of events in the environment and dyadic context that can be powerful determinants of attention and action during social communication.

Facial expression and gaze direction are such fundamentally important forms of nonverbal social communication that the ability to interpret these two types of information develops quite early in ontogeny. Infants can discriminate some emotional facial expressions soon after birth (Farroni, Menon, Rigato, & Johnson, 2007; Field, Woodson, Greenberg, & Cohen, 1982; Nelson & Horowitz, 1983). Field and colleagues (1982) have shown that infants can discriminate happy, sad, and surprised expressions within the first two days after birth. Farroni and colleagues (2007) suggest that newborns demonstrate a preference for happy compared to fearful faces due to the experience they gain during the first few days of life. Additionally, newborns are faster to saccade to a gazed-at peripheral target when the eye movement is visible (Farroni, Massaccesi, Pividori, & Johnson, 2004).

Emotional facial expressions provide information about the state of mind of the actor (Ekman & Oster, 1979) as well as changes in one’s level of safety and threat. Negative emotional expressions reflexively attract attention when paired with a neutral or positive expression and presented laterally in the visual field (Holmes, Vuilleumier, & Eimer, 2003; Mogg & Bradley, 1999; Mogg et al., 2000), as well as when presented at a random location in the visual field within a background of neutral or positive emotional expressions during visual search (Eastwood, Smilek, & Merikle, 2001; Fox et al., 2000; Lundqvist & Ohman, 2005; Ohman, Flykt, & Esteves, 2001; Ohman, Lundqvist, & Esteves, 2001; Tipples, Atkinson, & Young, 2002; Tipples, Young, Quinlan, Broks, & Ellis, 2002). Pourtois, Grandjean, Sander, and Vuilleumier (2004) have demonstrated that a rapid shift of attention to the location of a fearful face facilitates target processing, indexed by the amplitude of the P1 component. Adams and Kleck (2003, 2005) have demonstrated that expressions associated with a withdrawal motivation (e.g., fear) are more easily detected when the face is presented with averted gaze, while expressions associated with approach motivation (e.g., happiness and anger) are more easily detected when the face is presented with direct gaze.

Additional behavioral evidence of gaze and emotional expression interactions has been reported using the Garner selective attention paradigm. Both Ganel, Goshen-Gottstein, and Goodale (2005) and Graham and LaBar (2007) reported integrated processing of gaze and expression by showing that participants were not able to attend to one dimension without interference from the other. However, there were differences in how gaze and expression were processed, as inversion interfered more with expression judgments than with gaze judgments (Ganel et al., 2005), and the interaction effects were modulated by the relative discriminability of gaze and expression (Graham & LaBar, 2007). These studies suggest at least some integration of gaze and expression information during face processing. It is therefore reasonable to ask whether reflexive orienting to gaze direction is modulated by facial expression.

Reflexive orienting to gaze direction is indexed by a reaction time advantage for targets that appear at the gazed-at location compared to other locations, even when participants are told that the gaze is not predictive of target location (e.g., Friesen & Kingstone, 1998). This phenomenon has been demonstrated in both humans (Deaner & Platt, 2003; Driver et al., 1999; Friesen & Kingstone, 1998, 2003a; b; Friesen, Moore, & Kingstone, 2005; Friesen, Ristic, & Kingstone, 2004; Hietanen, 1999; Langton & Bruce, 1999; Ristic, Friesen, & Kingstone, 2002; Ristic & Kingstone, 2005) and nonhuman primates in certain behavioral contexts (Deaner & Platt, 2003; Emery, Lorincz, Perrett, Oram, & Baker, 1997; but see Itakura, 1996; Tomonaga, 2007; Tomasello, Hare, Lehmann, & Call, 2007). These results suggest that gaze is an evolutionarily important form of communication in higher primates, and gaze-following provides the viewer with advantageous information about his or her surroundings that would not otherwise be available. Gaze shifts can signal the location of both positively valent stimuli, such as a potential source of food, and negatively valent stimuli, such as where a predator is lurking. To differentiate between these two distinct signals the viewer must be able to combine gaze information with information gleaned from the social partner’s facial expression.

Few behavioral studies have directly investigated the relationship between emotional expression and gaze during target detection or identification tasks, and those that have been conducted provide mixed results (Hietanen & Leppanen, 2003; Hori et al., 2005; Mathews, Fox, Yiend, & Calder, 2003; Pecchinenda, Pes, Ferlazzo, & Zoccolotti, 2008; Putman, Hermans, & van Honk, 2006; Tipples, 2006). Our behavioral studies (Graham, Friesen, Fichtenholtz, & LaBar, in press) have shown that facial expression and gaze direction produce discrete effects at short stimulus onset asynchronies (SOAs), wherein targets were responded to more quickly when the face was emotionally expressive regardless of the match between gaze direction and target location. However, some studies have shown that facial expression and gaze direction–target location congruency can interact, leading to better orienting to a gazed-at location in the presence of a fearful face (Putman et al., 2006; Tipples, 2006) or a happy face (Hori et al., 2005; Putman et al., 2006), in participants high in trait anxiety (Holmes, Richards, & Green, 2006; Mathews et al., 2003), when the participants have an explicitly evaluative task (Pecchinenda et al., 2008), or when they have more time to process the cues (Graham et al., in press). Still other reports have shown no effect of facial expression (fearful, angry, disgust, or happy) or no interactions between gaze direction and facial expression on attentional orienting (Bayliss, Frischen, Fenske, & Tipper, 2007; Hietanen & Leppanen, 2003).

In sum, the behavioral studies investigating gaze–expression interactions on attentional orienting are inconclusive. In addition to differences in experimental design across studies, one possibility is that emotional expression and gaze influences do not converge onto the same stage of target processing, which may increase the variability in behavioral effects. By varying the task (target detection, location, or discrimination) and timing (cue–target SOA, target duration), previous studies may be engaging different neural mechanisms. Event-related potential (ERP) studies may be useful in this regard by revealing the relative timing of the effects of multiple social cues on target processing. Additionally, the neural mechanisms underlying specific target ERP components are becoming well understood (see Luck, 2005). Previous ERP studies using laterally presented visual targets have shown a consistent pattern of ERP components that can be modulated by attention: an early positive deflection (P1, at occipital electrode sites) at 70–100 ms post target onset, a negative deflection (N1, at parietal/occipital electrode sites) at approximately 150–200 ms post target onset, and a late positive deflection (P3, at central/parietal/midline electrode sites) at approximately 300–500 ms post target onset (for review see Luck, Woodman, & Vogel, 2000). ERP studies of gaze-directed attentional orienting have analyzed the P1, N1, and P3 components, demonstrating enhanced P1 amplitude in response to gazed-at targets (valid) compared to targets presented across the screen from the gazed at location (invalid), and greater P3 amplitude in response to invalid compared to valid targets (Schuller & Rossion, 2001, 2004, 2005). No effects were seen on the N1 component. However, these studies did not manipulate the expression on the face. 
One previous study has investigated the role of expression and gaze on ERPs (Fichtenholtz, Hopfinger, Graham, Detwiler, & LaBar, 2007), but did not include a neutral expression control condition. In this study, the P1 component was modulated by the expression depicted by the facial cue; the N1 was modulated by expression on leftward gaze trials; and the P3 component was enhanced in response to invalid targets.

In the current study, we modified the gaze-cuing paradigm (Friesen & Kingstone, 1998) by incorporating dynamic gaze shifts (as seen in Schuller & Rossion, 2001, 2005) with dynamic expression changes to produce a complex attention-directing face cue that had the appearance of reacting to a lateralized target just prior to its presence in the participant’s visual field. Given the previous behavioral findings, we expected to see independent effects of facial emotion and gaze direction in the reaction time (RT) data. Our previous research (Fichtenholtz et al., 2007; Graham et al., in press) suggests that behavioral effects of emotion are generally consistent across specific expressions (e.g., fear, happiness, disgust). To simplify the current design and to accommodate the signal-averaging requirements of ERP studies, one expression—fear—was chosen. The presentation of the fearful expression should create a situation where the participants are in a heightened state of vigilance and are more attentive to all succeeding stimuli that may be presented, regardless of location. In this situation, consistent with our previous findings (Fichtenholtz et al., 2007), early enhanced processing of target stimuli following a fearful face should not be limited to those targets presented at the gazed-at location (P1 component). Accordingly, we predicted that gaze-directed attentional effects would occur later during target processing when contextual information is integrated (P3 component). In addition, the facial expression of the cue should interact with gaze direction on the N1 component, which indexes target discrimination processes (Fichtenholtz et al., 2007). Due to the dynamic nature of the facial cues, it is difficult to differentiate the responses to the simultaneous gaze-shift and expression changes. 
The present analyses were instead focused on ERPs time-locked to target presentation, in order to measure the effects of gaze and expression on the neural processing of subsequent stimuli as indices of attentional modulation, as done in most previous studies of voluntary and involuntary spatial attention (Heinze et al., 1994; Mangun & Hillyard, 1991).

METHODS

Participants

Twenty-one healthy right-handed young adults provided written consent to volunteer in this experiment. All participants were Duke University students and either received course credit for their participation or were compensated at a rate of $10 per hour. Participants were screened for a history of neurological and psychiatric disorders, substance abuse, and current psychotropic medications. One participant was excluded due to a psychiatric history. Additionally, data from four participants were excluded because of excessive ERP artifacts (i.e., more than 20% of trials had to be rejected). Analyses were conducted on the remaining 16 participants (M=20.31 years, SD=1.58; 8 males). The Duke University Medical Center Institutional Review Board approved the protocol for ethical treatment of human participants.

Task parameters

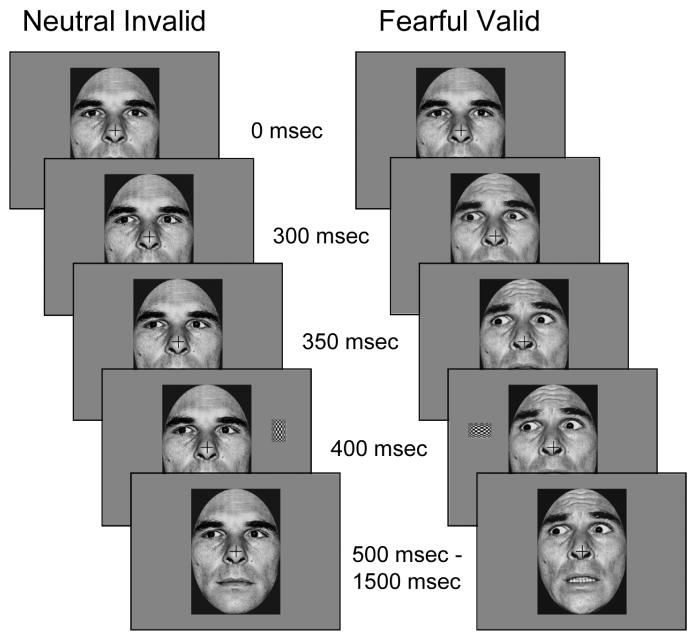

Participants performed the task in 14 runs, each lasting approximately 4.2 min. Each run contained 72 trials consisting of four exemplars of 18 different trial types. Each trial consisted of a dynamic face cue stimulus and a target rectangle. The face cue was presented in three successive frames using apparent motion to create the illusion of a shift in eye gaze and a simultaneous change in emotional expression (Figure 1). The nomenclature for the direction of gaze is based on the participants’ frame of reference, so on a leftward gaze-shift trial the pupils on the face move to the left of the participant’s center. In the first frame, a neutral face gazing forward was presented for 300 ms. The second (50 ms) and third (50 ms) frames differed by condition. In the neutral expression conditions, the second frame consisted of a left, direct, or right eye gaze, and the third frame was identical to the second frame. During the fearful expression conditions, the second frame consisted of the presentation of a 55% fearful expression (i.e., morphed face consisting of 55% fearful expression, 45% neutral expression) and a left, direct, or right eye gaze, and the third frame presented a 100% fearful expression with the identical gaze as in frame two. Thus, the eye gaze shift terminated 50 ms prior to the change in emotional expression although both started at the same time. This frame sequence was used to approximate the relative timing of gaze and expression shifts in response to the onset of a threatening peripheral stimulus in the environment.

Figure 1.

This novel variation on the gaze direction cueing task uses both dynamic expression and eye gaze shifts. The expression change is a two-stage process that lasts for 100 ms (as seen in the right column) and the gaze shift is a single step that begins at the same time as the expression change. Total trial duration was 1500 ms with a random intertrial interval between 1500 and 2000 ms.

Immediately following the cue stimulus, the attentional target was presented for 100 ms. The third frame of the cue stimulus remained on the screen for the remainder of the trial (1100 ms duration). Each trial was followed by an intertrial interval that varied randomly between 1500 and 2000 ms. The attentional target was a rectangular checkerboard presented either horizontally or vertically in the periphery (2.1° above fixation, 7.4° left or right of fixation, 4.25° left or right of the face) of the upper left or right visual field (Figure 1). Attentional targets were presented with equal frequency with horizontal and vertical orientations, in the left and right visual fields, and at the gazed-at (valid) or not-gazed-at (invalid) location. One-third of the trials consisted of the cue sequence being presented with no target (catch trials). The catch trials were included in order to provide an electrophysiological baseline which could be subtracted from the target trials in order to isolate the attention-related ERP response to the targets (Busse & Woldorff, 2003). This baseline was available for each type of target trial.
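The design described above can be tallied in a short sketch. The Python below is illustrative only and is not the authors' presentation script: the names `build_run`, `validity`, and `FRAME_MS` are hypothetical, target orientation is ignored, and the 1100 ms for the third frame is assumed to be counted from target onset, which is the reading under which the frames sum to the stated 1500 ms trial duration.

```python
from itertools import product

EXPRESSIONS = ("fearful", "neutral")
GAZES = ("left", "direct", "right")
TARGETS = ("LVF", "RVF", None)  # None marks a catch trial (cue only)
EXEMPLARS_PER_TYPE = 4

# Frame durations in ms taken from the text; the key names are hypothetical.
FRAME_MS = {
    "frame1_neutral_direct": 300,
    "frame2_gaze_shift": 50,
    "frame3_before_target": 50,
    "frame3_from_target_onset": 1100,  # assumption: counted from target onset
}


def build_run():
    """Return the unshuffled trial list for one run as (expression, gaze, target)."""
    trial_types = list(product(EXPRESSIONS, GAZES, TARGETS))
    return [t for t in trial_types for _ in range(EXEMPLARS_PER_TYPE)]


def validity(gaze, target):
    """Label a trial by the relation between gaze direction and target side."""
    if target is None:
        return "catch"
    if gaze == "direct":
        return "direct"
    gazed_side = "LVF" if gaze == "left" else "RVF"
    return "valid" if gazed_side == target else "invalid"


run = build_run()
n_catch = sum(1 for _, _, target in run if target is None)
print(len(run), n_catch, sum(FRAME_MS.values()))
```

Crossing 2 expressions, 3 gaze directions, and 3 target states gives the 18 trial types; 4 exemplars of each gives the 72 trials per run, of which one-third (24) are catch trials.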

Throughout the entire run participants were asked to fixate on a centrally presented cross. The participants’ task was to identify the orientation of the long axis of the rectangular target using two buttons on a game controller. Responses were made with the index finger of each hand. Response mapping was balanced across subjects to mitigate motor-related hemispheric asymmetries in the ERP data.

Stimuli

In order to simplify the stimulus presentation and reduce the possibility of ERP effects due to featural differences in cue stimuli, one male actor (PE) was selected from the Ekman and Friesen (1978) pictures of facial affect to act as the centrally presented cuing stimulus. The original photos posed fearful and neutral facial expressions with direct gaze. Adobe Photoshop (Adobe Systems Incorporated, San Jose, CA) was used to manipulate gaze direction so that the irises were averted between 0.37° and 0.4° from the centrally positioned irises in the faces with direct gaze. Thus, six digitized grayscale photographs were used, which constituted the factorial combination of facial expression (fearful, neutral) and gaze direction (direct, left, and right). In order to create realistic changes in emotional expression, fearful facial expressions of intermediate intensity were created using the morphing methods outlined by LaBar and colleagues (2003) using MorphMan 2000 software (STOIK, Moscow, Russia). Three morphs depicting 55% fearful/45% neutral expression were created with left, right, and direct-looking gaze and were used to create a more naturalistic apparent motion effect. The grayscale facial cuing stimuli were presented at fixation and subtended approximately 6.3° of horizontal and 8.9° of vertical visual angle.

Target stimuli consisted of two rectangles subtending 1.1°×1.6° of visual angle. The rectangles were filled with a black and white checkerboard pattern and were presented with the long axis either horizontal or vertical, depending on the trial.

ERP recordings

The electroencephalogram (EEG) was recorded from 64 electrodes in a custom elastic cap (Electro-Cap International, Inc., Eaton, OH) and referenced to the right mastoid during recording. Electrode impedances were maintained below 2 kΩ for the mastoids, below 10 kΩ for the facial electrodes, and below 5 kΩ for all the remaining electrodes. Horizontal eye movements were monitored by two electrodes at the outer canthi of the eyes, and vertical eye movements and eye blinks were detected by two electrodes placed below the orbital ridge of each eye. The 64 electrodes were recorded with a bandpass filter of 0.01–100 Hz and a gain of 1000, and the raw signal was continuously digitized at a sampling rate of 500 Hz. Recordings took place in an electrically shielded, sound-attenuated chamber.

Because we recorded from 64 electrode sites, the nomenclature used to describe ERP results is based on the International 10–20 system (Jasper, 1958) but with additional information that reflects the increased spatial coverage. Electrodes are identified by 10–20 positions, modified with letters or symbols denoting the following: a=slightly anterior placement relative to the original 10–20 position, s=superior placement, i=inferior placement, and ′=placement within 1 cm of 10–20 position.

ERP data reduction

Artifact rejection was performed offline by discarding epochs of the EEG that revealed eye movements, eye blinks, excessive muscle-related potentials, or drifts. For the 16 participants included in the final analysis, 12.04% of trials were excluded due to artifacts. The analysis was focused on examining how ERPs elicited by targets varied as a function of cue emotion, gaze direction, and target location. Thus, averages were calculated for 12 bins formed by crossing two facial expressions (fearful or neutral) with three gaze directions (left, right, or direct) and two target locations (left or right visual field). Each average was calculated for an epoch extending from 250 ms before to 1000 ms after target onset and digitally low-pass filtered at 60 Hz. The epoch from 250 ms before the target to target onset was used for baseline correction. By randomizing the different trial types, the impact of response overlap from adjacent stimuli in the sequence was minimized. The responses from the cue-only trials were subtracted from the cue-target trials for each trial type separately to isolate the target-related responses (e.g., Fear/Left gaze/Left target trials minus Fear/Left gaze/No target trials). Importantly, this procedure removes any lingering cue-related activity that might have occurred during the target epoch being analyzed without disrupting the influence exerted by the cue onto the attentional responses elicited by the target itself (Busse & Woldorff, 2003; Woldorff et al., 2004). While using a static SOA will produce an enhanced warning potential in response to the cue, these effects will be consistent across all conditions and removed by the subtraction. After subtracting the cue-only trials, all channels were re-referenced to the algebraic average of the two mastoid electrodes. The ERP averages for individual participants were then combined into group averages across all participants.
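The baseline correction, cue-only subtraction, and re-referencing steps can be sketched as follows. This is a minimal illustration using plain Python lists, not the software actually used for the analysis; the function names and the channel labels passed to `rereference` are hypothetical, and artifact rejection and filtering are assumed to have been done already.

```python
from statistics import fmean

SFREQ_HZ = 500          # sampling rate given in the text
EPOCH_START_MS = -250   # epoch start relative to target onset


def ms_to_sample(t_ms):
    """Index of the sample at latency t_ms within the epoch."""
    return round((t_ms - EPOCH_START_MS) * SFREQ_HZ / 1000)


def baseline_correct(wave):
    """Subtract the mean of the pre-target interval (-250 to 0 ms)."""
    base = fmean(wave[:ms_to_sample(0)])
    return [v - base for v in wave]


def isolate_target(cue_target, cue_only):
    """Cue-plus-target average minus the matching cue-only average."""
    a = baseline_correct(cue_target)
    b = baseline_correct(cue_only)
    return [x - y for x, y in zip(a, b)]


def rereference(channels, left_mastoid, right_mastoid):
    """Re-reference every channel to the average of the two mastoids.

    channels maps a channel label to its list of samples.
    """
    ref = [(l + r) / 2
           for l, r in zip(channels[left_mastoid], channels[right_mastoid])]
    return {name: [v - m for v, m in zip(wave, ref)]
            for name, wave in channels.items()}
```

Because the recording reference was the right mastoid, that channel is flat in the raw data, so subtracting the average of the two mastoids amounts to subtracting half of the left mastoid signal from every channel.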

ERP data analysis

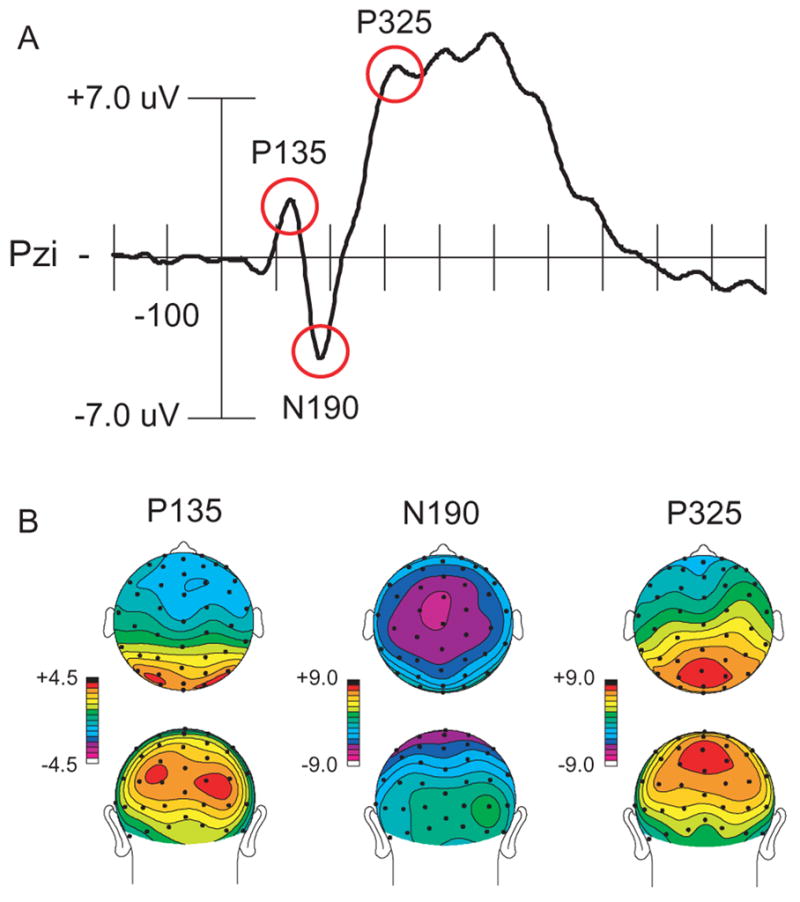

Group-averaged ERPs elicited by left visual field (LVF) and right visual field (RVF) targets (collapsed across cue type) were plotted. These plots revealed the three components that were predicted: (1) a positive deflection (P1 component, P135), at occipital electrode sites, peaking at approximately 135 ms; (2) a negative deflection (N1 component, N190), at central electrode sites, peaking at approximately 190 ms; and (3) a positive deflection (P3 component, P325), at centroparietal midline electrode sites, peaking at approximately 325 ms. In order to capture the peak amplitude of each deflection seen in the group-averaged waveforms (see Figure 2), three latency intervals were selected for analyses: 120–150 ms, 175–205 ms, and 310–340 ms. Additionally, electrode sites were selected based on previous studies of gaze-directed orienting (Schuller & Rossion, 2001, 2004, 2005) and to capture the spatial extent of each component at its peak. Mean amplitude during each latency window, at each electrode site, was entered into further analyses.
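The mean-amplitude measure over the three latency windows can be sketched as below. This is an illustrative sketch under stated assumptions, not the authors' analysis code: the names `window_mean` and `component_amplitudes` are hypothetical, the waveform is one channel sampled at 500 Hz from 250 ms before target onset, and window edges that fall between samples are handled by keeping only samples inside the window.

```python
from math import ceil, floor
from statistics import fmean

SFREQ_HZ = 500
EPOCH_START_MS = -250
WINDOWS_MS = {"P135": (120, 150), "N190": (175, 205), "P325": (310, 340)}


def window_mean(wave, start_ms, end_ms):
    """Mean amplitude of the samples whose latency lies in [start_ms, end_ms]."""
    first = ceil((start_ms - EPOCH_START_MS) * SFREQ_HZ / 1000)
    last = floor((end_ms - EPOCH_START_MS) * SFREQ_HZ / 1000)
    return fmean(wave[first:last + 1])


def component_amplitudes(wave):
    """Mean amplitude in each of the three analysis windows."""
    return {name: window_mean(wave, *win) for name, win in WINDOWS_MS.items()}
```

Each window is 30 ms wide and centered on the peak latency seen in the group average (135, 190, and 325 ms), so at 500 Hz each mean is taken over roughly 15 to 16 samples.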

Figure 2.

Event-related response across all target types (with cue-related activity removed by subtracting cue-only trials from cue-plus-target trials). (A) Waveform from a representative electrode showing the components of interest. (B) Scalp distributions showing the topographic distribution at the peak of each component.

A third prominent positive deflection was also seen at centroparietal midline electrode sites, peaking at approximately 495 ms. This component showed a main effect of eye gaze, being larger for rightward gaze shifts. Because this component was not hypothesized a priori, it will not be discussed further.

The ANOVA for the contralateral ERPs evoked by the target stimuli during the time range of the P135 component (120–150 ms) included the following factors: expression (fearful, neutral); gaze direction (left, right); target location (LVF, RVF); and electrode locations (lateral or medial scalp locations: P3i, P4i versus O1′, O2′). The ANOVA for the midline ERPs evoked by the target stimuli during the time range of the N190 component (175–205 ms) included the following factors: expression (fearful, neutral); gaze direction (left, right); target location (LVF, RVF); and electrode locations (anterior to posterior scalp locations: Cza, Cz, Pzs). The ANOVA for the midline ERPs evoked by the target stimuli during the time range of the P325 component (310–340 ms) included the same factors as the ANOVA for the N190 component, but used a slightly more posterior set of electrodes (Pzs, Pzi, Ozs). Follow-up comparisons of ERP effects were conducted using additional ANOVAs or paired-samples t-tests. Greenhouse-Geisser p values are reported for ANOVAs on ERP data involving more than one degree of freedom to correct for possible failures to meet sphericity assumptions.

Behavioral data analysis

Mean RT and accuracy for the target discrimination task were computed for each condition for each participant. Trials with incorrect answers and trials with RTs shorter than 100 ms or longer than 1000 ms (duration of the face on the screen after target presentation) were excluded from the analysis. Emotional expression (fearful, neutral) × gaze direction (left, right) × target location (LVF, RVF) within-subjects ANOVAs were conducted for both RT and accuracy. Given that RT effects of gaze-directed validity are predicted by previous experiments (e.g., Friesen & Kingstone, 1998), planned comparisons were conducted to compare the effects of gaze direction for each target location (LVF targets: Leftward Gaze/Valid vs. Rightward Gaze/Invalid; RVF targets: Rightward Gaze/Valid vs. Leftward Gaze/Invalid).

Although direct gaze trials were included in the experimental design so that participants could not anticipate a gaze change, this trial type was excluded from the analyses because of stimulus differences that naturally occur between the neutral-direct condition and all other conditions. Specifically, the neutral-direct gaze condition differs from all other conditions in that there is no movement in the face. All other conditions have movement in either the eyes or the expression, thereby presenting the participant with a motion cue signaling when the target may appear. The neutral-direct condition provides no such cue; therefore, participants may be surprised when the targets are presented, similar to an invalidly cued target, which potentially confounds the interpretation of results from this condition.
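The trial exclusion rules in this section can be sketched as a small filter that feeds the per-condition means. The sketch is illustrative and assumes a hypothetical trial record with the keys `expression`, `gaze`, `target`, `rt_ms`, and `correct`; it is not the authors' analysis script.

```python
from collections import defaultdict
from statistics import fmean

RT_MIN_MS, RT_MAX_MS = 100, 1000  # RT limits given in the text


def condition_means(trials):
    """Mean RT per (expression, gaze, target) cell for one participant.

    Drops direct-gaze trials, incorrect responses, and RTs outside the limits.
    """
    by_cond = defaultdict(list)
    for t in trials:
        if t["gaze"] == "direct" or not t["correct"]:
            continue
        if not (RT_MIN_MS <= t["rt_ms"] <= RT_MAX_MS):
            continue
        by_cond[(t["expression"], t["gaze"], t["target"])].append(t["rt_ms"])
    return {cond: fmean(rts) for cond, rts in by_cond.items()}
```

The resulting cell means, one set per participant, are the values that would enter the 2 × 2 × 2 within-subjects ANOVA.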

RESULTS

Behavior

Accuracy

A three-way repeated-measures ANOVA of accuracy data revealed no significant main effects or interactions. Overall, accuracy was high (M= 93.8% correct).

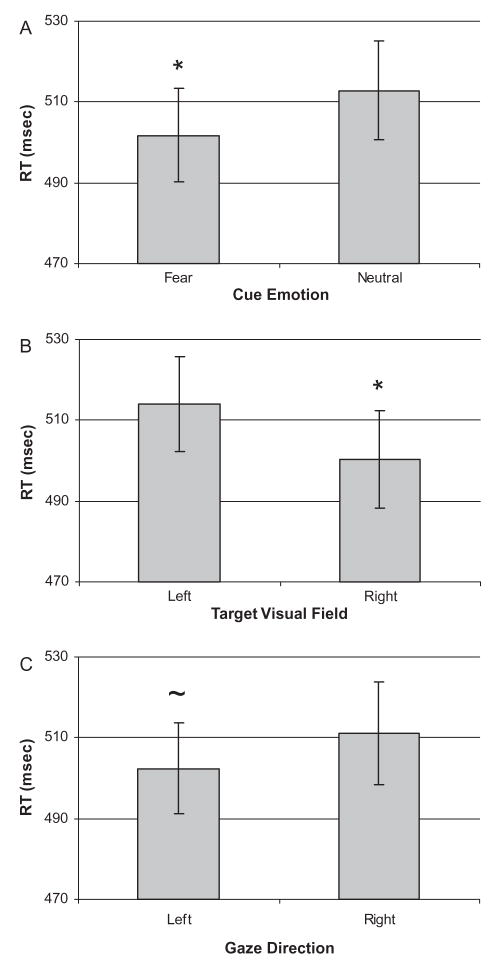

RT

Results from a three-way repeated-measures ANOVA of RT data revealed significant main effects of cue facial expression, F(1, 15)=12.84, p<.01, and target location, F(1, 15)=16.17, p<.01, as well as a trend towards an effect of gaze direction, F(1, 15)=3.85, p=.07. The effect of cue facial expression was due to participants responding faster following the presentation of a fearful facial expression compared to a neutral expression (Figure 3A). Participants also responded faster to targets presented in the RVF compared to targets presented in the LVF (Figure 3B). Lastly, participants had marginally shorter RTs to targets preceded by a leftward gaze shift than by a rightward gaze shift (Figure 3C). No interactions were significant. Mean RT data are presented in Table 1.

Figure 3.

Mean reaction time (RT) in ms for each main effect. (A) RT to targets preceded by fearful and neutral expressions. (B) RT to targets presented in the left and right visual fields. (C) RT to targets preceded by leftward and rightward gaze shifts (p=.069). Error bars represent the standard error of the mean. * p<.05, ~ p<.1.

TABLE 1.

Reaction times for each trial type

| Expression | Gaze direction | Target location | Mean RT (ms) | SD (ms) |
|---|---|---|---|---|
| Fear | Left | Left | 488.87 | 47.73 |
| Fear | Left | Right | 514.10 | 53.44 |
| Fear | Right | Left | 494.69 | 57.23 |
| Fear | Right | Right | 518.19 | 57.71 |
| Neutral | Left | Left | 500.28 | 53.92 |
| Neutral | Left | Right | 526.62 | 54.18 |
| Neutral | Right | Left | 508.81 | 59.01 |
| Neutral | Right | Right | 508.31 | 58.44 |

Planned comparisons

As predicted, comparisons of gaze direction within each visual field revealed a significant RT advantage for LVF targets preceded by a leftward gaze (M=510.94±10.82 ms) compared to a rightward gaze (M=518.01±12.14 ms), t(15)=−2.13, p<.05. No effect of gaze direction was seen for RVF targets.
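A paired-samples comparison of this kind can be sketched in a few lines. The function below is a generic illustration, not the authors' code: `paired_t` is a hypothetical name, and its inputs are assumed to be one mean RT per participant for the valid and invalid conditions, in the same participant order.

```python
from math import sqrt
from statistics import fmean, stdev


def paired_t(valid_rts, invalid_rts):
    """Paired-samples t statistic and degrees of freedom.

    The difference is valid minus invalid, so a negative t means
    faster responses on validly cued trials.
    """
    diffs = [v - i for v, i in zip(valid_rts, invalid_rts)]
    n = len(diffs)
    t = fmean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1
```

With 16 participants the test has 15 degrees of freedom, matching the t(15) reported above.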

Event-related potentials

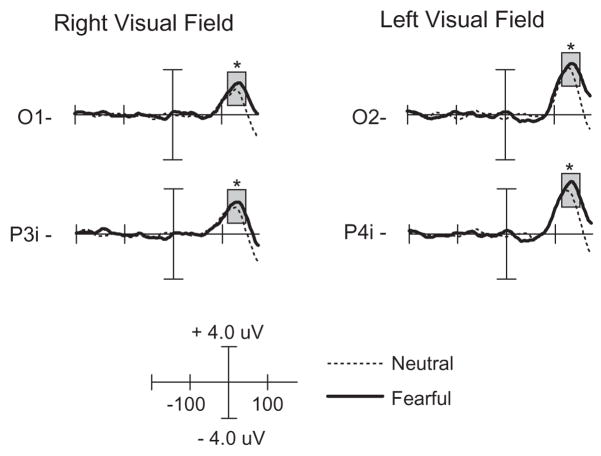

P135 component

The four-way ANOVA over the latency range 120–150 ms at contralateral parieto-occipital electrode sites revealed a main effect of expression, F(1, 15)= 4.78, p<.04, with greater P135 amplitude following fearful faces (as seen in Figure 4). No other main effects or interactions were significant.

Figure 4.

ERP waveforms of the P135 component comparing targets preceded by fearful and neutral expressions. The left column is the response to right visual field targets from left (contralateral) occipital electrode sites. The right column is the response to left visual field targets from right (contralateral) occipital electrode sites. Responses are time-locked to the onset of the target. The time window of the analysis is highlighted. * p<.05.

N190 component

The four-way ANOVA from 175 to 205 ms revealed a significant main effect of gaze direction over central electrode sites, F(1, 15)=6.78, p<.02, with greater N190 amplitude following a rightward gaze, and a significant expression × gaze direction interaction, F(1, 15)=8.44, p<.01. Follow-up analyses by gaze direction revealed a significant effect of facial expression following a leftward gaze, F(1, 15)=6.95, p<.02, with greater amplitude following a neutral expression, and a trend in the opposite direction following a rightward gaze, F(1, 15)=3.77, p=.07 (as seen in Figure 5). This pattern of response reveals that the N190 shows a differential sensitivity to facial expression with each gaze direction. Relative to a neutral face, a fearful face reduced target responses following a leftward gaze and tended to enhance them following a rightward gaze.

Figure 5.

ERP waveforms of the N190 component comparing targets preceded by fearful and neutral expressions. The left column is the response to targets preceded by a leftward gaze, while the right column is the response to targets preceded by a rightward gaze. Responses are time-locked to the onset of the target. The time window of the analysis is highlighted. *=p<.05, ~=p<.1.

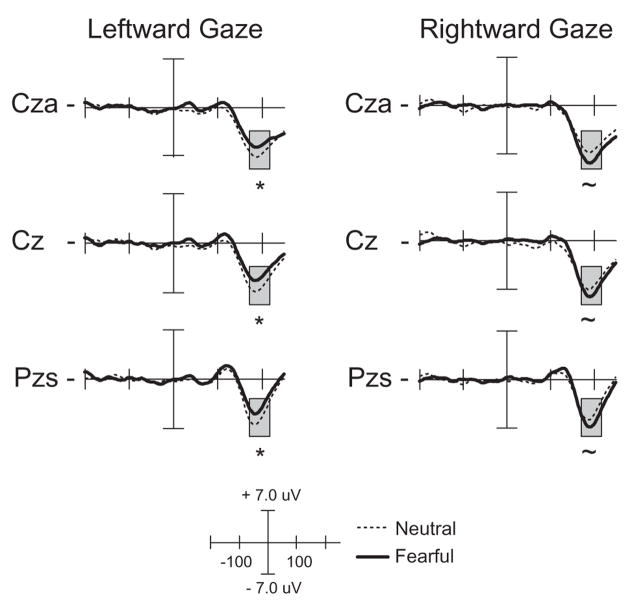
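The follow-up tests above each compare two expression levels within participants. For any two-level within-participant effect, the F statistic has 1 and n − 1 degrees of freedom and equals the square of the paired t statistic computed on the per-participant differences. The sketch below shows that relationship. The function name `paired_f` and all amplitude values are hypothetical; sixteen values per condition are used only so that the degrees of freedom come out as (1, 15).

```python
from math import sqrt
from statistics import mean, stdev


def paired_f(cond_a, cond_b):
    """Return (F, df1, df2) for a two-level within-participant contrast.

    With two levels, the repeated-measures F equals the squared paired t.
    """
    if len(cond_a) != len(cond_b):
        raise ValueError("both conditions need one value per participant")
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))  # paired t statistic
    return t * t, 1, n - 1


# Hypothetical per-participant amplitudes in microvolts (not study data).
fearful_uv = [2.1, 1.8, 2.5, 3.0, 1.2, 2.2, 2.8, 1.9,
              2.4, 2.0, 3.1, 1.5, 2.6, 2.3, 1.7, 2.9]
neutral_uv = [1.9, 1.7, 2.1, 2.8, 1.3, 2.0, 2.5, 1.8,
              2.0, 2.1, 2.7, 1.4, 2.2, 2.2, 1.6, 2.5]

f_value, df1, df2 = paired_f(fearful_uv, neutral_uv)
print(f"F({df1}, {df2}) = {f_value:.2f}")
```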

P325 component

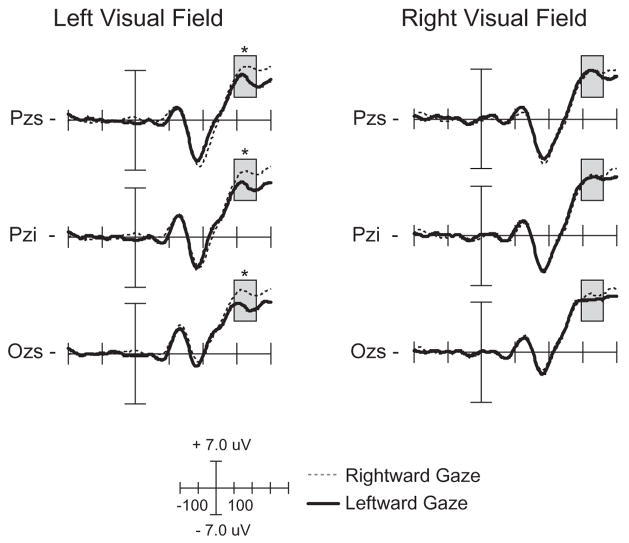

The four-way ANOVA from 310 to 340 ms revealed a significant main effect of gaze direction over centroparietal electrode sites, F(1, 15)=5.00, p<.04, with leftward gaze trials demonstrating smaller P325 amplitude than rightward gaze trials. A significant gaze direction × target location interaction was also seen, F(1, 15)=10.97, p<.01. Follow-up analyses by target location revealed a significant effect of gaze direction for LVF targets, F(1, 15)=17.21, p<.01, with targets preceded by a rightward gaze (invalid cue) eliciting a larger P325 than targets preceded by a leftward gaze (valid cue) (see Figure 6). No effect of gaze direction was found for RVF targets. This pattern of effects demonstrates that the P325 component is modulated by the validity of a gaze-shift when targets are presented in the LVF, and is consistent with the behavioral RT effect seen in the planned comparisons. Combined with the behavioral results, this ERP finding suggests that in the presence of an emotional cue, there is an attentional bias towards the LVF. No main effect of facial expression was seen.

Figure 6.

ERP waveforms of the P325 component comparing targets preceded by leftward and rightward gaze shifts. The left column is the response to left visual field targets and right column is the response to right visual field targets. Responses are time-locked to the onset of the target. The time window of the analysis is highlighted. *=p<.05.
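The gaze direction × target location interaction can be read as a cue validity effect: a leftward gaze is a valid cue for an LVF target and an invalid cue for an RVF target, and the reverse holds for a rightward gaze. The sketch below makes that mapping explicit. The function name `cue_validity` and the label `"direct"` for straight-ahead gaze trials are hypothetical conventions, not taken from the study.

```python
def cue_validity(gaze, target_field):
    """Classify a trial from gaze direction and target visual field.

    gaze: "left", "direct", or "right"; target_field: "LVF" or "RVF".
    Returns "valid", "invalid", or "direct" (no lateral cue).
    """
    if gaze not in {"left", "direct", "right"}:
        raise ValueError(f"unknown gaze direction: {gaze!r}")
    if target_field not in {"LVF", "RVF"}:
        raise ValueError(f"unknown target field: {target_field!r}")
    if gaze == "direct":
        return "direct"
    gazed_field = "LVF" if gaze == "left" else "RVF"
    return "valid" if gazed_field == target_field else "invalid"


# The two LVF conditions contrasted in the P325 follow-up analysis.
print(cue_validity("left", "LVF"))   # leftward gaze, LVF target
print(cue_validity("right", "LVF"))  # rightward gaze, LVF target
```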

DISCUSSION

The current study used ERPs to differentiate the effects of two different social cues signaled by the face—emotional expression and gaze direction—on attentional orienting. Because these facial signals play significant roles in shared attention and social referencing, understanding their behavioral and neural effects has relevance in the emerging field of social cognitive neuroscience. A novel variant of the gaze-directed attentional orienting paradigm (Friesen & Kingstone, 1998) was developed to create dynamic and realistic facial cue displays that appeared to change suddenly in gaze direction and emotional expression in advance of a target appearing in the participant’s periphery. Previous ERP studies have investigated the effects of gaze direction on attentional orienting (Schuller & Rossion, 2001, 2004, 2005) but did not manipulate facial expression, or manipulated expression without a comparison to a neutral face (Fichtenholtz et al., 2007). Given that these two facial signals typically occur in conjunction and are processed interactively in dyadic interactions, the current study moves a step closer to examining mechanisms of shared attentional orienting in real-world social contexts. The use of ERPs permitted an analysis of the relative timing of the effects of expression and gaze direction on neural responses to attentional targets, showing a temporal progression from expression effects to expression × gaze interactions to gaze effects. This analysis reveals new insights into the mental chronometry of socio-emotional influences on visuospatial attention.

The ERP waveforms in the current study were modulated across a series of components consistently implicated in attentional cueing paradigms (P1, N1, P3; Luck, Woodman, & Vogel, 2000). In the current study, P135 amplitude was greatest over contralateral parieto-occipital sites in response to targets following fearful faces. N190 amplitude showed a facial expression × gaze direction interaction over central sites, with greater amplitude for targets following a neutral face than a fearful face when presented with a leftward gaze and a trend towards the opposite effect on rightward gaze trials. P325 amplitude was increased over midline centroparietal sites for LVF targets that were cued by a rightward gaze (invalid cue) compared to LVF targets following a leftward gaze (valid cue), thus reflecting higher-order effects of gaze direction. Behavioral results appear to corroborate the ERP findings, with main effects of emotional expression (fear faster than neutral), visual field (RVF faster than LVF), and a trend towards a main effect of gaze (left faster than direct/right). Although the expression × gaze interaction on RT was not significant, planned comparisons showed a validity effect for LVF targets (leftward gaze faster than rightward gaze). These findings will be discussed in detail below.

The main effect of facial expression on both enhancing the P135 component amplitude and facilitating RT suggests that the dynamic change in fear intensity induced a state of vigilance that increased early processing of all targets regardless of location. The P1 is thought to represent the initial processing of a visual stimulus in extrastriate visual cortex and has been shown to increase in amplitude in response to additional resources at an attended location (Heinze et al., 1994; Mangun, Hopfinger, Kussmaul, Fletcher, & Heinze, 1997). This early effect suggests that facial expression may be a more relevant signal than eye gaze for establishing attentional sets during social exchanges. In contrast to the present report, previous ERP studies (Schuller & Rossion, 2001, 2004, 2005) showed early gaze effects on attentional orienting in the P1 range. However, these studies did not investigate concurrent changes of gaze and facial expression. Emotional modulation of the target-elicited P1 is consistent with our previous study comparing fearful and happy expressions (Fichtenholtz et al., 2007). When these two facial signals change synchronously, emotional expression appears to take attentional precedence. One may interpret the sudden emergence of a fearful facial expression in a partner as signaling that a new threat has recently entered the environment. If so, the increase in alertness may allow more attentional resources to become available. Consequently, the spotlight of attention can initially widen to include all potential spatial locations in order to detect the threat before using a partner’s gaze information to help focus on the target once a threat level has been established.

The facial expression × gaze direction interaction on the N190 component demonstrates a temporal shift from the initial influence of expression on target processing to the later combined influence of both social cues. In the absence of an expression manipulation, previous studies (Schuller & Rossion, 2001, 2004, 2005) have demonstrated significant gaze validity effects on this component. In the present study, however, the N190 interaction implicates differential processing of facial expression dependent upon gaze direction, which has been broadly suggested by prior behavioral and functional imaging research (Adams & Kleck, 2003, 2005) and is consistent with our prior finding of decreased processing of targets following a fearful face with a leftward gaze (Fichtenholtz et al., 2007). Although no effect of gaze direction validity was seen on N190 amplitude, these results suggest that the presence of a fearful facial stimulus recruits additional attentional resources from the right hemisphere (compared to a neutral face). When the right hemisphere attentional mechanisms are engaged by a leftward cue, greater attentional resources are available to engage in processing the target if the preceding facial cue was neutral. We note that the spatial distribution of the N190 component seen in the current data (central/parietal focus over the midline scalp; Figure 2b) is different from the lateral-occipital distribution of the N1 component seen during many studies of attentional orienting (Luck et al., 2000). This distribution is more consistent with the anterior N1, which is usually seen earlier (100–150 ms) after target onset (Luck, 2005), and which is also thought to index target discrimination processes. In the current study, the amplitude of the N190 may reflect the amount of attentional resources available to engage in the target discrimination task while the processing of the facial cue continues.

The presence of a gaze validity effect on RT and the P325 component for LVF targets validates the assumption that the perceived shift in eye gaze modulates the participants’ attentional focus. Previous investigations of target processing in response to gaze shifts (Schuller & Rossion, 2001, 2005) have shown a similar effect, suggesting that although validly and invalidly gazed-at targets occur in equal proportions, the invalidly gazed-at targets are unexpected. Additionally, previous studies of attentional orienting to peripheral targets (e.g., Hugdahl & Nordby, 1994) have demonstrated a similar effect. Even though the cued location is not predictive, the direction of the cue sets up an expectation that the target will appear there. The enhancement of the P325 component indexes the violation of that expectation, consistent with the interpretation of the P3 as reflecting contextual updating mechanisms (Mangun & Hillyard, 1991).

The hemispheric asymmetry of this effect is consistent with an LVF bias for target detection in the presence of arousing stimuli (Robinson & Compton, 2006). The potentially emotional, dynamic stimuli used in the current study may be more arousing in nature (LaBar, Crupain, Voyvodic, & McCarthy, 2003) than the static or gaze shift stimuli used in previous studies (Schuller & Rossion, 2001, 2005). This ERP modulation, coupled with the RT advantage for validly gazed-at targets presented in the LVF, demonstrates that gaze direction influences target processing, although this effect occurs later than the modulation of processing due to emotional expression.

The independent effects of facial emotion and gaze-directed validity on RT are consistent with findings that emotion and gaze do not interact functionally to drive attentional orienting at short SOAs (Bayliss et al., 2007; Graham et al., in press; Hietanen & Leppanen, 2003). Nevertheless, others (Hori et al., 2005; Tipples, 2006) have shown behavioral interactions between facial expression and gaze-directed validity. The current study employed sequential dynamic gaze and expression changes, whereas Tipples (2006) used concurrent and coterminous gaze and expression changes, and Hori and colleagues (2005) used static stimuli. Overall, the extant literature provides evidence for partially separable systems involved in the processing of facial emotions and gaze direction (Eimer & Holmes, 2002), but these variables may interact to influence attentional orienting under some circumstances. The finding of separate effects for gaze direction and facial expression at the 100 ms SOA used in this study is consistent with previous evidence that gaze and facial expression processing initially occur in parallel streams (Pourtois et al., 2004) and do not interact until approximately 300 ms (Fichtenholtz et al., 2007; Klucharev & Sams, 2004). Future studies using a longer SOA (~500 ms; similar to Schuller & Rossion, 2001, 2004, 2005) would be helpful in determining whether the effects seen here are due to a lack of time to integrate the two signals.

When directly comparing the effects of two nonverbal social cues on the processing of peripherally presented targets, the current results show that facial expression has the dominant effect early in the processing stream (P135, N190), whereas the gaze shift affects later processing (P325). The presence of a concomitant expression change may mask the potential early effect of gaze-directed validity seen in other studies that did not incorporate emotional expressions (Schuller & Rossion, 2001, 2004, 2005). The current study also suggests the potential utility of ERPs as markers for the development of shared attention and social referencing, especially as ERPs are relatively easy to obtain in children compared to other neuroimaging measures. Future work should examine emotions other than fear in comparison to neutral to determine the extent to which the pattern of mental chronometry observed here is specific to this emotion and its role in attentional vigilance. Additionally, further controls need to be included in order to ensure that the effects of emotion seen in the current study are in fact due to the emotional content of the stimuli, and not the specific apparent motion features created by the stimulus procedure (see Graham et al., in press). Whereas prior research has examined gaze and expression interactions during face processing (e.g., Adams & Kleck, 2003, 2005; Graham & LaBar, 2007), the present study examines the downstream consequences of these interactions on allocation of visuospatial attention. Further studies are warranted that incorporate additional face exemplars to test the generalizability of the results.

In conclusion, our findings suggest that although multiple dynamic signals are present on the face during the course of social interaction, their effects on attention are spatiotemporally dissociable. In this investigation, facial expression took precedence over gaze direction with respect to its effects on target processing, suggesting that evaluating another individual’s emotional state may be initially more important during shared attention compared to where someone is looking. Because insights into mental chronometry have relevance for distinguishing and understanding affective disorders (Davidson, 1998), it would be interesting to determine how the ERP results in the present study vary in individuals with social cognitive deficits, such as autism. The present study provides new evidence for the effects of gaze direction and emotional expression on multiple neural processes, helping to characterize the mechanisms of shared attention and social referencing.

Acknowledgments

This research was supported by a Ruth L. Kirschstein Individual NRSA Fellowship (F31MH074293) to HMF, a National Institutes of Health grant (R01 MH66034) to JBH, and a National Science Foundation CAREER award (023123) and a National Institutes of Health grant (R01 DA14094) to KSL.

Contributor Information

Harlan M. Fichtenholtz, Yale University, New Haven, CT, USA

Joseph B. Hopfinger, University of North Carolina at Chapel Hill, Chapel Hill, NC, USA

Reiko Graham, Texas State University at San Marcos, San Marcos, TX, USA

Jacqueline M. Detwiler, Duke University, Durham, NC, USA

Kevin S. LaBar, Duke University, Durham, NC, USA

References

- Adams RB, Kleck RE. Perceived gaze direction and the processing of facial displays of emotion. Psychological Science. 2003;14:644–647. doi: 10.1046/j.0956-7976.2003.psci_1479.x.

- Adams RB, Kleck RE. Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion. 2005;5:3–11. doi: 10.1037/1528-3542.5.1.3.

- Bayliss AP, Frischen A, Fenske MJ, Tipper SP. Affective evaluations of objects are influenced by observed gaze direction and emotional expression. Cognition. 2007;104:644–653. doi: 10.1016/j.cognition.2006.07.012.

- Busse L, Woldorff MG. The ERP omitted stimulus response to “no-stim” events and its implications for fast-rate event-related fMRI designs. NeuroImage. 2003;18:856–864. doi: 10.1016/s1053-8119(03)00012-0.

- Corkum V, Moore C. Development of joint visual attention in infants. In: Moore C, Dunham P, editors. Joint attention: Its origin and role in development. Hillsdale, NJ: Lawrence Erlbaum Associates; 1994. pp. 61–85.

- Davidson RJ. Affective style and affective disorders: Perspectives from affective neuroscience. Cognition & Emotion. 1998;12:307–330.

- Deaner RO, Platt ML. Reflexive social attention in monkeys and humans. Current Biology. 2003;13:1609–1613. doi: 10.1016/j.cub.2003.08.025.

- Driver J, Davis G, Ricciardelli P, Kidd P, Maxwell E, Baron-Cohen S. Gaze perception triggers reflexive visuospatial orienting. Visual Cognition. 1999;6:509–540.

- Eastwood JD, Smilek D, Merikle PM. Differential attentional guidance by unattended faces expressing positive and negative emotion. Perception & Psychophysics. 2001;63:1004–1013. doi: 10.3758/bf03194519.

- Eimer M, Holmes A. An ERP study on the time course of emotional face processing. NeuroReport. 2002;13:427–431. doi: 10.1097/00001756-200203250-00013.

- Ekman P, Friesen WV. The facial action coding system. Palo Alto, CA: Consulting Psychologists Press; 1978.

- Ekman P, Oster H. Facial expressions of emotion. Annual Review of Psychology. 1979;30:527–554.

- Emery NJ, Lorincz EN, Perrett DI, Oram MW, Baker CI. Gaze following and joint attention in rhesus monkeys (Macaca mulatta). Journal of Comparative Psychology. 1997;111:286–293. doi: 10.1037/0735-7036.111.3.286.

- Farroni T, Massaccesi S, Pividori D, Johnson MH. Gaze following in newborns. Infancy. 2004;5:39–60.

- Farroni T, Menon E, Rigato S, Johnson MH. The perception of facial expressions in newborns. European Journal of Developmental Psychology. 2007;4:2–13. doi: 10.1080/17405620601046832.

- Fichtenholtz HM, Hopfinger JB, Graham R, Detwiler JM, LaBar KS. Facial expressions and emotional targets produce separable ERP effects in a gaze directed attention study. Social Cognitive and Affective Neuroscience. 2007;2:323–333. doi: 10.1093/scan/nsm026.

- Field TM, Woodson RW, Greenberg R, Cohen C. Discrimination and imitation of facial expressions by neonates. Science. 1982;218:179–181. doi: 10.1126/science.7123230.

- Fox E, Lester V, Russo R, Bowles RJ, Pichler A, Dutton K. Facial expressions of emotion: Are angry faces detected more efficiently? Cognition & Emotion. 2000;14:61–92. doi: 10.1080/026999300378996.

- Friesen CK, Kingstone A. The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychonomic Bulletin & Review. 1998;5:490–495.

- Friesen CK, Kingstone A. Covert and overt orienting to gaze direction cues and the effects of fixation offset. NeuroReport. 2003a;14:489–493. doi: 10.1097/00001756-200303030-00039.

- Friesen CK, Kingstone A. Abrupt onsets and gaze direction cues trigger independent reflexive attentional effects. Cognition. 2003b;87:B1–B10. doi: 10.1016/s0010-0277(02)00181-6.

- Friesen CK, Moore C, Kingstone A. Does gaze direction really trigger a reflexive shift of spatial attention? Brain and Cognition. 2005;57:66–69. doi: 10.1016/j.bandc.2004.08.025.

- Friesen CK, Ristic J, Kingstone A. Attentional effects of counterproductive gaze and arrow cues. Journal of Experimental Psychology: Human Perception and Performance. 2004;30:319–329. doi: 10.1037/0096-1523.30.2.319.

- Ganel T, Goshen-Gottstein Y, Goodale MA. Interactions between the processing of gaze direction and facial expression. Vision Research. 2005;45:1191–1200. doi: 10.1016/j.visres.2004.06.025.

- Graham R, Friesen CK, Fichtenholtz HM, LaBar KS. Modulation of reflexive orienting to gaze direction by facial expressions. Visual Cognition (in press).

- Graham R, LaBar KS. The Garner selective attention paradigm reveals dependencies between emotional expression and gaze direction in face perception. Emotion. 2007;7:296–313. doi: 10.1037/1528-3542.7.2.296.

- Heinze HJ, Mangun GR, Burchert W, Hinrichs H, Scholz M, Munte TF, et al. Combined spatial and temporal imaging of brain activity during selective attention in humans. Nature. 1994;372:543–546. doi: 10.1038/372543a0.

- Hietanen JK. Does your gaze direction and head orientation shift my visual attention? NeuroReport. 1999;10:3443–3447. doi: 10.1097/00001756-199911080-00033.

- Hietanen JK, Leppanen JM. Does facial expression affect attention orienting by gaze direction cues? Journal of Experimental Psychology: Human Perception and Performance. 2003;29:1228–1243. doi: 10.1037/0096-1523.29.6.1228.

- Holmes A, Richards A, Green S. Anxiety and sensitivity to eye gaze in emotional faces. Brain and Cognition. 2006;60:282–294. doi: 10.1016/j.bandc.2005.05.002.

- Holmes A, Vuilleumier P, Eimer M. The processing of emotional facial expression is gated by spatial attention: Evidence from event-related brain potentials. Cognitive Brain Research. 2003;16:174–184. doi: 10.1016/s0926-6410(02)00268-9.

- Hori E, Tazumi T, Umeno K, Kamachi M, Kobayashi T, Ono T, et al. Effects of facial expression on shared attention mechanisms. Physiology and Behavior. 2005;84:397–405. doi: 10.1016/j.physbeh.2005.01.002.

- Hugdahl K, Nordby H. Electrophysiological correlates to cued attentional shifts in the visual and auditory modalities. Behavioral and Neural Biology. 1994;62:21–32. doi: 10.1016/s0163-1047(05)80055-x.

- Itakura S. An exploratory study of gaze monitoring in nonhuman primates. Japanese Psychological Research. 1996;38:174–180.

- Jasper HH. The ten-twenty electrode system of the International Federation. Electroencephalography and Clinical Neurophysiology. 1958;20:371–375.

- Johnson MH, Vecera SP. Cortical parcellation and the development of face processing. In: de Boysson-Bardies B, de Schonen S, Jusczyk PW, McNeilage P, Morton J, editors. Developmental neurocognition: Speech and face processing in the first year of life. New York: Kluwer Academic/Plenum Publishers; 1993. pp. 135–148.

- Klinnert MD, Campos JJ, Sorce JF, Emde RN, Svejda M. The development of social referencing in infancy. In: Plutchik R, Kellerman H, editors. Emotion: Theory, research, and experience: Vol. 2. Emotion in early development. New York: Academic Press; 1983. pp. 57–86.

- Klucharev V, Sams M. Interaction of gaze direction and facial expressions processing: ERP study. NeuroReport. 2004;15:621–626. doi: 10.1097/00001756-200403220-00010.

- LaBar KS, Crupain MJ, Voyvodic JB, McCarthy G. Dynamic perception of facial affect and identity in the human brain. Cerebral Cortex. 2003;13:1023–1033. doi: 10.1093/cercor/13.10.1023.

- Langton SRH, Bruce V. Reflexive visual orienting in response to the social attention of others. Visual Cognition. 1999;6:541–567.

- Luck SJ. An introduction to the event-related potential technique. Cambridge, MA: MIT Press; 2005.

- Luck SJ, Woodman GF, Vogel EK. Event-related potential studies of attention. Trends in Cognitive Sciences. 2000;4:432–440. doi: 10.1016/s1364-6613(00)01545-x.

- Lundqvist D, Ohman A. Emotion regulates attention: The relation between facial configurations, facial emotion, and visual attention. Visual Cognition. 2005;12:51–84.

- Mangun GR, Hillyard SA. Modulations of sensory-evoked brain potentials indicate changes in perceptual processing during visual-spatial priming. Journal of Experimental Psychology: Human Perception and Performance. 1991;17:1057–1074. doi: 10.1037//0096-1523.17.4.1057.

- Mangun GR, Hopfinger JB, Kussmaul CL, Fletcher E, Heinze HJ. Covariations in ERP and PET measures of spatial selective attention in human extrastriate visual cortex. Human Brain Mapping. 1997;5:273–279. doi: 10.1002/(SICI)1097-0193(1997)5:4<273::AID-HBM12>3.0.CO;2-F.

- Mathews A, Fox E, Yiend J, Calder A. The face of fear: Effects of eye gaze and emotion on visual attention. Visual Cognition. 2003;10:823–835. doi: 10.1080/13506280344000095.

- Mogg K, Bradley BP. Some methodological issues in assessing attentional biases for threatening faces in anxiety: A replication study using a modified version of the probe detection task. Behaviour Research and Therapy. 1999;37:595–604. doi: 10.1016/s0005-7967(98)00158-2.

- Mogg K, McNamara J, Powys M, Rawlinson H, Seiffer A, Bradley BP. Selective attention to threat: A test of two cognitive models. Cognition and Emotion. 2000;14:375–399.

- Nelson CA, Horowitz FD. The perception of facial expressions and stimulus motion by 2- and 5-month-old infants using holographic stimuli. Child Development. 1983;54:868–877. doi: 10.1111/j.1467-8624.1983.tb00508.x.

- Ohman A, Flykt A, Esteves F. Emotion drives attention: Detecting the snake in the grass. Journal of Experimental Psychology: General. 2001;130:466–478. doi: 10.1037//0096-3445.130.3.466.

- Ohman A, Lundqvist D, Esteves F. The face in the crowd revisited: A threat advantage with schematic stimuli. Journal of Personality & Social Psychology. 2001;80:381–396. doi: 10.1037/0022-3514.80.3.381.

- Pecchinenda A, Pes M, Ferlazzo F, Zoccolotti P. The combined effect of gaze direction and facial expression on cueing spatial attention. Emotion. 2008;8:628–634. doi: 10.1037/a0013437.

- Pourtois G, Grandjean D, Sander D, Vuilleumier P. Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cerebral Cortex. 2004;14:619–633. doi: 10.1093/cercor/bhh023.

- Pourtois G, Sander D, Andres M, Grandjean D, Reveret L, Oliver E, et al. Dissociable roles of the human somatosensory and superior temporal cortices for processing social face signals. European Journal of Neuroscience. 2004;20:3507–3515. doi: 10.1111/j.1460-9568.2004.03794.x.

- Putman P, Hermans E, van Honk J. Anxiety meets fear in perception of dynamic expressive gaze. Emotion. 2006;6:94–102. doi: 10.1037/1528-3542.6.1.94.

- Ristic J, Friesen CK, Kingstone A. Are eyes special? It depends on how you look at it. Psychonomic Bulletin & Review. 2002;9:507–513. doi: 10.3758/bf03196306.

- Ristic J, Kingstone A. Taking control of reflexive attention. Cognition. 2005;94:B55–B65. doi: 10.1016/j.cognition.2004.04.005.

- Robinson MD, Compton RJ. The automaticity of affective reactions: Stimulus valence, arousal, and lateral spatial attention. Social Cognition. 2006;24:469–495.

- Schuller AM, Rossion B. Spatial attention triggered by eye gaze increases and speeds up early visual activity. NeuroReport. 2001;12:1–7. doi: 10.1097/00001756-200108080-00019.

- Schuller AM, Rossion B. Perception of static eye gaze direction facilitates subsequent early visual processing. Clinical Neurophysiology. 2004;115:1161–1168. doi: 10.1016/j.clinph.2003.12.022.

- Schuller AM, Rossion B. Spatial attention triggered by eye gaze enhances and speeds up visual processing in upper and lower fields beyond early striate visual processing. Clinical Neurophysiology. 2005;116:2565–2576. doi: 10.1016/j.clinph.2005.07.021.

- Tipples J. Fear and fearfulness potentiate automatic orienting to eye gaze. Cognition and Emotion. 2006;20:309–320.

- Tipples J, Atkinson AP, Young AW. The eyebrow frown: A salient social signal. Emotion. 2002;2:288–296. doi: 10.1037/1528-3542.2.3.288.

- Tipples J, Young AW, Quinlan P, Broks P, Ellis AW. Searching for threat. Quarterly Journal of Experimental Psychology A. 2002;55:1007–1026. doi: 10.1080/02724980143000659.

- Tomasello M, Hare B, Lehmann H, Call J. Reliance on head versus eyes in the gaze following of great apes and human infants: The cooperative eye hypothesis. Journal of Human Evolution. 2007;52:314–320. doi: 10.1016/j.jhevol.2006.10.001.

- Tomonaga M. Is chimpanzee (Pan troglodytes) spatial attention reflexively triggered by the gaze cue? Journal of Comparative Psychology. 2007;121:156–170. doi: 10.1037/0735-7036.121.2.156.

- Whalen PJ, Shin LM, McInerney SC, Fischer H, Wright CI, Rauch SL. A functional MRI study of human amygdala responses to facial expressions of fear versus anger. Emotion. 2001;1:70–83. doi: 10.1037/1528-3542.1.1.70.

- Woldorff MG, Hazlett CJ, Fichtenholtz HM, Weissman DH, Dale AM, Song AW. Functional parcellation of attentional control regions of the brain. Journal of Cognitive Neuroscience. 2004;16:149–165. doi: 10.1162/089892904322755638.