Abstract

This research examined the developmental course of infants’ ability to perceive affect in bimodal (audiovisual) and unimodal (auditory and visual) displays of a woman speaking. According to the intersensory redundancy hypothesis (L. E. Bahrick, R. Lickliter, & R. Flom, 2004), detection of amodal properties is facilitated in multimodal stimulation and attenuated in unimodal stimulation. Later in development, however, attention becomes more flexible, and amodal properties can be perceived in both multimodal and unimodal stimulation. The authors tested these predictions by assessing 3-, 4-, 5-, and 7-month-olds’ discrimination of affect. Results demonstrated that in bimodal stimulation, discrimination of affect emerged by 4 months and remained stable across age. In unimodal stimulation, however, detection of affect emerged gradually, with sensitivity to auditory stimulation emerging at 5 months and sensitivity to visual stimulation at 7 months. Furthermore, temporal synchrony between faces and voices was necessary for younger infants’ discrimination of affect. Across development, infants first perceive affect in multimodal stimulation through detecting amodal properties, and later their perception of affect is extended to unimodal auditory and visual stimulation. Implications for social development, including joint attention and social referencing, are considered.

Keywords: infant perception, intersensory redundancy, intersensory perception, multimodal perception, emotion perception

Young infants and adults perceive a world of unitary and cohesive objects and events even though they encounter a continuously changing array of visual, auditory, olfactory, gustatory, and tactile stimulation. The information available to the sensory systems that specifies properties of objects and events is of two kinds: amodal and modality specific. Amodal information (e.g., texture, rhythm, tempo, and intensity) is not specific to a single sense modality; it can be conveyed redundantly across multiple sense modalities (J. J. Gibson, 1966, 1979). In contrast, modality-specific information (e.g., color, visual pattern, and pitch) is specific to a single sense modality and cannot be conveyed redundantly across modalities. Most events, such as a bouncing ball, provide both modality-specific and amodal information: the ball provides color and pattern information that can be specified only visually, as well as amodal information, such as the tempo and rhythm of the bounces, that can be specified redundantly in acoustic and visual stimulation.

Researchers have demonstrated that one of the earliest and perhaps most important perceptual competencies is infants’ sensitivity to redundantly specified amodal temporal relations (see Lewkowicz, 2000a; Lewkowicz & Lickliter, 1994; Lickliter & Bahrick, 2000; Walker-Andrews, 1997, for reviews). For example, infants perceive the synchrony, rhythm, and tempo common to visible and audible stimulation from events such as an object striking a surface (e.g., Bahrick, 1988, 1992; Bahrick, Flom, & Lickliter, 2002; Bahrick & Lickliter, 2000). Infants also detect the synchrony and affect uniting audible and visible speech (e.g., Lewkowicz, 1996a, 2000b; Walker-Andrews, 1997). Temporal synchrony between auditory and visual stimulation, such as the impacts of a bouncing ball, can specify that the two sources of stimulation “go together” and constitute a unitary event (e.g., Bahrick, 1983, 1988; Bahrick & Lickliter, 2002; Bahrick & Pickens, 1994; Lewkowicz, 1992, 1996b, 2000b; Spelke, Born, & Chu, 1983). Infants’ early sensitivity to amodal information is important because it promotes further differentiation of the properties of an event in order of increasing specificity (Bahrick, 2001; Bahrick & Lickliter, 2002) and reduces the likelihood that nonsynchronous sources of stimulation (e.g., sounds of voices from an adjoining room) will be associated with the event. Thus, detection of amodal information promotes veridical perceptual development. However, little is known about what guides infants’ attention toward amodal information in the first place.

One explanation for the early perceptual sensitivity to amodal information is that intersensory redundancy is highly salient to young infants and selectively recruits their attention (Bahrick & Lickliter, 2000, 2002; Bahrick, Lickliter, & Flom, 2004; Lickliter & Bahrick, 2000). We recently proposed an intersensory redundancy hypothesis, which states that information presented concurrently and synchronously in two sense modalities recruits attention and facilitates perceptual learning of amodal information to a greater extent than when the same information is presented in only one sense modality. Thus, detection of amodal information is initially fostered in multimodal stimulation where intersensory redundancy is available and is later extended to unimodal stimulation. Support for these predictions has been generated from research with nonsocial events. Bahrick and Lickliter (2000) found that 5-month-old infants could detect a change in the rhythm of a toy hammer’s tapping in bimodal (audiovisual) stimulation but not in unimodal auditory or visual stimulation. Furthermore, temporal synchrony was necessary for detecting the change in rhythm, as asynchronous presentations were not effective. However, after a few months of additional experience, infants’ sensitivity to changes in rhythm extended to unimodal visual stimulation (Bahrick & Lickliter, 2004). Similarly, infants’ sensitivity to changes in the tempo of the tapping hammer was observed first in bimodal stimulation at 3 months of age; later, at 5 months of age, sensitivity was extended to unimodal visual stimulation (Bahrick et al., 2002; Bahrick & Lickliter, 2004).

Converging evidence for the importance of intersensory redundancy in promoting attention, perception, learning, and memory for amodal properties in early development has also come from comparative studies of quail embryos and chicks. For example, quail embryos learned the rhythm of a maternal call four times faster, and remembered it four times longer, when it was played in synchrony with a flashing light than when it was presented unimodally or asynchronously (Lickliter, Bahrick, & Honeycutt, 2002, 2004). Physiological studies with quail have further demonstrated that synchronous audiovisual stimulation keeps heart rate within a normal range and thereby promotes learning of the maternal call, whereas asynchronous audiovisual stimulation significantly elevates heart rate to a level that interferes with learning of the call (Reynolds & Lickliter, 2003). Finally, neurophysiological studies have demonstrated that redundant audiovisual stimulation elicits greater neural responsiveness than either auditory or visual stimulation alone, or than the sum of the two (Stein & Meredith, 1993).

From studies of human and animal infants, it appears that the attentional facilitation of intersensory redundancy to amodal properties is most pronounced in early development (Bahrick & Lickliter, 2000, 2004; Bahrick et al., 2004; Lickliter et al., 2002, 2004). Over the course of development, however, perceptual processing becomes increasingly flexible such that both amodal and modality-specific properties can be perceived in multimodal as well as unimodal contexts (Bahrick & Lickliter, 2004; Bahrick et al., 2004).

In the present study, we extended predictions of the intersensory redundancy hypothesis to the domain of social events, specifically infants’ discrimination of affect in audiovisual speech. We predicted that if intersensory redundancy initially promotes detection of amodal properties, then affect (specified by common amodal rhythm, tempo, duration, and intensity patterns) should initially be discriminated in bimodal stimulation and later extended to unimodal stimulation. Furthermore, initial detection of affect should be observed only in bimodal synchronous stimulation and not in bimodal asynchronous stimulation.

We chose to investigate the perception of dynamic affective expressions in infants between 3 and 7 months of age for several reasons. A considerable literature now exists regarding infants’ discrimination of affective expressions and reveals that between 4 and 7 months of age infants are able to discriminate dynamic, bimodally specified, affective expressions such as happy, sad, and angry (e.g., A. J. Caron, Caron, & MacLean, 1988; Walker-Andrews & Lennon, 1985). Research also has demonstrated that by 5 months of age infants are able to discriminate changes in affect on the basis of vocal expressions alone (Walker-Andrews & Grolnick, 1983; Walker-Andrews & Lennon, 1991), and by 7 months of age infants are able to discriminate static facial expressions on the basis of affect (R. F. Caron, Caron, & Myers, 1985; Kestenbaum & Nelson, 1990; Ludemann & Nelson, 1988; see Walker-Andrews, 1997, for a review). However, virtually no research has examined infants’ sensitivity to affect in both bimodal and unimodal contexts, nor has any study tested predictions regarding the developmental progression of infants’ discrimination of affect. Nevertheless, Walker-Andrews (1997) suggested from her review of the literature that perception of affect first emerges within the context of multimodal stimulation and is later extended to unimodal contexts. To test these hypotheses empirically, we examined the development of infants’ discrimination of affect in bimodal and unimodal contexts in a single research design. Consistent with predictions of the intersensory redundancy hypothesis and the conclusions of Walker-Andrews’ (1997) review, we expected infants’ discrimination of affect to emerge in the context of intersensory redundancy and later to extend to nonredundant, unimodal stimulation.

In the first experiment, we habituated infants between 3 and 7 months of age to dynamic, affective, facial expressions and examined their ability to discriminate a change in affect when presented bimodally. In the second and third experiments, we examined the ability of infants between 4 and 7 months of age to discriminate changes in dynamic affective expressions in unimodal auditory stimulation from the voices and unimodal visual stimulation from the faces. Finally, in the fourth and fifth experiments, we explored the basis of intersensory facilitation by examining 4- and 5-month-old infants’ ability to discriminate changes in asynchronous and serially presented auditory–visual stimulation.

Experiment 1: The Development of Sensitivity to Affect in Bimodal Stimulation

Method

Participants

Eighteen infants at each of four ages (3, 4, 5, and 7 months) participated. The mean age of the 3-month-olds (9 girls and 9 boys) was 101 days (SD = 1.1). The mean age of the 4-month-olds (10 girls and 8 boys) was 122 days (SD = 2.02). The mean age of the 5-month-olds (7 girls and 11 boys) was 154 days (SD = 3.6), and the mean age of the 7-month-olds (9 girls and 9 boys) was 225 days (SD = 5.4). The data of 27 additional infants (five 3-month-olds, nine 4-month-olds, six 5-month-olds, and seven 7-month-olds) were excluded from the final analyses. Seventeen infants (four 3-month-olds, four 4-month-olds, five 5-month-olds, and four 7-month-olds) were excluded because of excessive fussiness. Four infants (one 3-month-old, two 4-month-olds, and one 5-month-old) were excluded for equipment failure. Four infants (two 4-month-olds and two 7-month-olds) were excluded for experimenter error. One 4-month-old was excluded for failure to habituate within 20 trials, and one 7-month-old was excluded for falling asleep during habituation. Parents of the participants were initially contacted by telephone and received a certificate of appreciation following participation. Ninety-six percent of the participants were White non-Hispanic, 2% were Hispanic, and 2% were Asian. All infants were healthy, normal, full-term infants weighing at least 5 lb at birth, with 5-min Apgar scores of 7 or higher. No information on socioeconomic status was available for the individual participants.

Stimulus events

Dynamic color video films of three adult women conveying three affective expressions (happy, angry, and sad) served as the stimuli. Each woman recited the following script in infant-directed speech: “Oh, hi baby, look at you! You’re such a beautiful baby! Your mommy and daddy must be so happy to have a beautiful baby like you!” The actresses were trained with a tape provided by Montague and Walker-Andrews (1999), which has served both as a training tape and as stimulus material in previous experiments (Kahana-Kalman & Walker-Andrews, 2001; Montague & Walker-Andrews, 2001, 2002). The tape depicts an actress who is familiar with the Facial Action Coding System (Ekman & Friesen, 1978) and has previous experience presenting dynamic happy, sad, and angry audiovisual affective expressions. Seven actresses each studied the training tape and reproduced each affective expression (i.e., happy, sad, and angry) as closely as possible. A group of 12 undergraduate observers then rated each of the seven actresses on her ability to convey each affective expression, and the three actresses with the highest overall ratings were selected for the final stimulus events. Each final film showed the actress’s face and shoulders against a uniform blue background. In addition to the primary stimulus events, a control stimulus was used: a green and white plastic toy turtle whose front legs spun and produced a whirring sound.

Apparatus

The stimulus events were videotaped with a Panasonic (WV 3170) color video camera and a Sony (EMC 150T) remote microphone. The events were edited with a Panasonic (VHS NV-A500) edit controller. The edited stimulus events were presented using three Panasonic video decks (VTR AG-1850). The video decks were connected to a Kramer matrix switcher (VS-6YC) that allowed us to switch between the habituation, test, and control displays without the extra time or noise that would have resulted from changing videocassettes. All stimulus events were viewed on a 19-in. (48-cm) color video monitor (Sony KV-20M10), and all soundtracks were presented from a speaker placed on top of the monitor. The sound level, measured at the infant seat (50 cm from the monitor) with a DSM 110 sound-level meter, was 65 dB.

The monitor was surrounded by a three-panel wood frame covered in black cloth that prevented the infant from seeing the observers. The observers, who were unaware of the hypotheses of the experiment and unable to view the visual events, monitored infants’ visual fixations by depressing a button while the infant fixated on the event and releasing it when the infant looked away. The observers were also blind to the auditory information presented to the infant: they wore headphones that played music loud enough to mask the auditory stimuli. The button box was connected to a computer programmed to record visual fixations online and to signal, through a small headphone, to a second experimenter who controlled the presentation of the video displays. The primary observer’s judgments controlled the presentation of the stimuli.

Procedure

Infants participated in an infant-controlled habituation procedure (Horowitz, Paden, Bhana, & Self, 1972) and were habituated to one of the three actresses conveying one of the three affective expressions in bimodal synchronous audiovisual speech. Following habituation, infants received two no-change posthabituation trials and then two test trials depicting the same actress displaying a different affective expression in bimodal synchronous audiovisual speech. Discrimination of affect was assessed by visual recovery to the two test trials that presented a change in affective expression, relative to the no-change posthabituation trials. Infants were randomly assigned to one of the three actresses and one of the three affective expressions during habituation. At each age, one third of the infants (n = 6) were habituated to each of the three affective expressions (happy, sad, and angry). Within each habituation condition, one third of the infants were habituated to each of the three faces (Helen, Mariana, and Monica). Thus, at each age, two infants were habituated to Helen’s face while she posed a happy expression, two saw Helen pose an angry expression, and two saw Helen pose a sad expression; the same pattern was followed for the other two faces. Following habituation to a happy expression, half of the infants within this subgroup (n = 3) received a sad expression during the test trials and half (n = 3) received an angry expression. A similar pattern was used for the other two affective expressions.
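The counts in this counterbalancing scheme can be checked with a short script. The text fixes how many infants at each age saw each expression and each actress during habituation, and how test expressions were split within each habituation expression, but it does not state how test expression was crossed with actress. The sketch below assumes one arrangement consistent with those counts: within each actress × habituation-expression cell of two infants, one infant is tested with each of the two remaining expressions. The arrangement and the function names are hypothetical, and the random assignment of individual infants to rows is not modeled.

```python
from collections import Counter
from itertools import product

EXPRESSIONS = ("happy", "sad", "angry")
ACTRESSES = ("Helen", "Mariana", "Monica")

def counterbalance_one_age():
    """Return 18 (infant_id, actress, habituation_expr, test_expr) rows for one age.

    Hypothetical arrangement: each actress x habituation-expression cell holds two
    infants, one tested with each of the two expressions not seen in habituation.
    """
    rows = []
    for actress, hab_expr in product(ACTRESSES, EXPRESSIONS):
        for test_expr in (e for e in EXPRESSIONS if e != hab_expr):
            rows.append((len(rows) + 1, actress, hab_expr, test_expr))
    return rows

def cell_counts(rows):
    """Tally infants per habituation expression and per (habituation, test) pairing."""
    by_hab = Counter(hab for _, _, hab, _ in rows)
    by_hab_test = Counter((hab, test) for _, _, hab, test in rows)
    return by_hab, by_hab_test

if __name__ == "__main__":
    rows = counterbalance_one_age()
    by_hab, by_hab_test = cell_counts(rows)
    print(len(rows), dict(by_hab))   # 18 infants, 6 per habituation expression
    print(dict(by_hab_test))         # 3 infants per (habituation, test) pairing
```

Under this arrangement each actress × expression cell holds two infants and each habituation expression splits 3/3 across the two test expressions, matching the counts given above.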

Each habituation sequence consisted of at least six infant-controlled habituation trials. Each trial began when the infant looked toward the video display and ended when the infant looked away for more than 1.5 s. The maximum trial length was 60 s, and the maximum number of trials was 20. We defined the habituation criterion as a 50% decline in looking on two consecutive trials relative to the infant’s average looking time on the first two trials (i.e., the baseline trials). After the habituation criterion was met, two no-change posthabituation trials were presented. These two additional trials provided a more conservative habituation criterion by reducing the possibility of chance habituation and by taking into account spontaneous regression toward the mean (see Bertenthal, Haith, & Campos, 1983, for discussion of regression effects in habituation designs); they also served as the basis for assessing visual recovery. Following the two no-change posthabituation trials, infants received two test trials in which the affective expression was changed. For example, an infant habituated to Helen displaying an angry expression received two test trials of Helen displaying either a sad or a happy expression. The control stimulus event (i.e., the toy turtle) was presented as a warm-up trial before the habituation sequence and again after the test trials to assess infants’ overall level of fatigue.
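The habituation rules can be expressed as a small function. This is a hypothetical sketch, not the software used in the study. It assumes per-trial looking times have already been scored (each trial already ended by the 1.5-s look-away rule), caps each trial at 60 s, considers at most 20 trials, and reads "a 50% decline in looking on two consecutive trials" as the mean of two consecutive post-baseline trials falling to half the baseline mean or less; the text does not say whether each trial or the pair mean must meet the threshold.

```python
def habituation_trial(looking, min_trials=6, max_trials=20, max_len=60.0, criterion=0.5):
    """Return the 1-based trial on which the habituation criterion is met, or None.

    looking: looking times (s) on successive habituation trials.
    Assumption: the criterion is met when the mean of two consecutive trials
    after the two baseline trials is <= criterion * baseline mean, and it cannot
    be met before `min_trials` trials have been presented.
    """
    capped = [min(t, max_len) for t in looking[:max_trials]]  # 60-s cap, 20-trial limit
    if len(capped) < 2:
        return None
    baseline = (capped[0] + capped[1]) / 2  # mean of the first two (baseline) trials
    # `last` is the 1-based number of the second trial in each consecutive pair;
    # starting at 4 keeps the baseline trials out of the pair.
    for last in range(max(4, min_trials), len(capped) + 1):
        pair_mean = (capped[last - 2] + capped[last - 1]) / 2
        if pair_mean <= criterion * baseline:
            return last
    return None

if __name__ == "__main__":
    # Hypothetical looking times (s): trials 3-4 dip early, but the criterion
    # cannot be met before trial 6, so habituation is reached on trial 8.
    print(habituation_trial([40, 36, 10, 8, 30, 30, 9, 9]))
```

A return value of None corresponds to an infant who did not reach the criterion within 20 trials, the situation that led to exclusion in the Participants section.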

Before including an infant’s data in the analyses, we examined the level of fatigue to ensure that the infant was capable of showing visual recovery to an entirely different event. We assessed fatigue by comparing each infant’s looking on the first and the final control trials (i.e., the toy turtle). On the final control trial, infants were required to look for at least 20% as long as they had looked on the first control trial. No infants at any age were excluded for failure to meet this criterion.
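The fatigue criterion reduces to a single comparison. A minimal sketch follows; the function name and the example looking times are hypothetical.

```python
def passes_fatigue_check(first_control_s, final_control_s, min_ratio=0.20):
    """Return True if final control-trial looking is >= 20% of first control-trial looking."""
    if first_control_s <= 0:
        raise ValueError("looking on the first control trial must be positive")
    return final_control_s >= min_ratio * first_control_s

if __name__ == "__main__":
    # Hypothetical (first, final) control-trial looking times in seconds.
    for first, final in [(42.0, 18.5), (30.0, 4.0)]:
        print(first, final, passes_fatigue_check(first, final))
```

In the second example the infant looked for 4.0 s on the final control trial, less than 20% of 30.0 s (6.0 s), and would therefore fail the check.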

A second observer recorded the infant’s visual fixations along with the primary observer, and these observations were used in the computation of interobserver reliability. The second observer was present for 25 of the 72 infants (35%) included in the final analyses. Interobserver reliability was calculated by a Pearson product–moment correlation and averaged r = .96 (SD = .03). The interobserver reliabilities for the 3-month-olds (n = 6), 4-month-olds (n = 7), 5-month-olds (n = 6), and 7-month-olds (n = 6) were r = .97 (SD = .04), r = .98 (SD = .06), r = .95 (SD = .02), and r = .94 (SD = .05), respectively.
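Because reliability is reported as an average r with an SD, it was presumably computed per infant and then averaged; the text does not say so explicitly, so that is an assumption here. The sketch below computes a Pearson correlation between the two observers' trial-by-trial looking times for each infant and then summarizes across infants. The function names and the example data are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def reliability_summary(infants):
    """Mean and SD of per-infant r (needs at least two infants for the SD).

    infants: list of (primary, secondary) pairs, each a list of trial-by-trial
    looking times (s) recorded by the two observers for one infant.
    """
    rs = [pearson_r(primary, secondary) for primary, secondary in infants]
    return mean(rs), stdev(rs)

if __name__ == "__main__":
    # Hypothetical looking times (s) for two infants; not data from this study.
    infants = [
        ([35.2, 30.1, 18.4, 12.0, 9.5, 6.1], [34.8, 30.9, 17.9, 12.6, 9.1, 6.4]),
        ([28.0, 22.5, 15.3, 10.2, 8.8, 7.0], [27.1, 23.0, 14.8, 11.0, 8.5, 7.4]),
    ]
    r_mean, r_sd = reliability_summary(infants)
    print(f"mean r = {r_mean:.2f}, SD = {r_sd:.2f}")
```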

Results and Discussion

The primary dependent variable was infants’ looking time as a function of trial type: baseline, posthabituation, and test. Infants’ looking time for each trial type and age is presented in Table 1 along with visual recovery (the difference in looking between the posthabituation and test trials).

Table 1.

Means and Standard Deviations for Visual Fixation in Seconds for Baseline, Posthabituation, Test Trials, and Visual Recovery as a Function of Age for Infants Receiving Bimodal (Auditory–Visual) Information Specifying Affect

| Trial type | 3-month-olds M | 3-month-olds SD | 4-month-olds M | 4-month-olds SD | 5-month-olds M | 5-month-olds SD | 7-month-olds M | 7-month-olds SD |
|---|---|---|---|---|---|---|---|---|
| Baseline | 37.5 | 15.9 | 33.8 | 15.5 | 29.1 | 16.1 | 22.5 | 15.4 |
| Posthabituation | 7.6 | 4.4 | 4.9 | 1.8 | 6.7 | 3.9 | 4.4 | 2.7 |
| Test | 6.5 | 3.6 | 11.3 | 4.7 | 12.8 | 12.0 | 10.6 | 10.1 |
| Average (across all trial types) | 17.2 | 17.3 | 16.7 | 15.5 | 16.2 | 15.0 | 12.5 | 12.9 |
| Visual recovery (test–posthabituation) | −1.1 | 4.5 | 6.4** | 5.0 | 6.1* | 10.8 | 6.2** | 9.9 |

Note. Baseline is the mean visual fixation during the first two habituation trials and reflects initial interest. Posthabituation is the mean visual fixation to two no-change trials just after the habituation criterion was met and reflects final interest in the habituated events. Test is the mean visual fixation during the two change test trials, and visual recovery is the difference between visual fixation during the test trials and visual fixation during the posthabituation trials. Average is the mean looking across the three trial types (baseline, posthabituation, and test).

*p < .05. **p < .01.
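The two derived rows of Table 1 follow directly from the trial-type means: visual recovery is the test mean minus the posthabituation mean, and the Average row is the mean of the three trial-type means. The short check below uses only the tabled means. It cannot reproduce the SDs of the derived rows, which require the individual infants' scores, and the function name is hypothetical.

```python
# Trial-type means (s) from Table 1: (baseline, posthabituation, test)
TABLE_1_MEANS = {
    "3-month-olds": (37.5, 7.6, 6.5),
    "4-month-olds": (33.8, 4.9, 11.3),
    "5-month-olds": (29.1, 6.7, 12.8),
    "7-month-olds": (22.5, 4.4, 10.6),
}

def derived_rows(means):
    """Return {age: (visual_recovery, average)} rounded to one decimal place.

    visual_recovery = test - posthabituation
    average = mean of the baseline, posthabituation, and test means
    """
    out = {}
    for age, (baseline, post, test) in means.items():
        out[age] = (round(test - post, 1), round((baseline + post + test) / 3, 1))
    return out

if __name__ == "__main__":
    for age, (recovery, average) in derived_rows(TABLE_1_MEANS).items():
        print(f"{age}: recovery = {recovery:+.1f} s, average = {average:.1f} s")
```

Each recomputed value matches the corresponding Visual recovery and Average entry in Table 1 (e.g., −1.1 s and 17.2 s for the 3-month-olds), and the same arithmetic applies to Table 2.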

A repeated measures analysis of variance (ANOVA) was performed with age (3, 4, 5, and 7 months) as the between-subjects factor and trial type (baseline, posthabituation, and test) as the repeated measure to compare differences in looking across ages and trials. All reported p values are two-tailed. Results revealed a significant effect of trials, F(2, 136) = 145.7, p < .001, effect size = .68; a significant Trials × Age interaction, F(6, 136) = 3.90, p < .01; and a nonsignificant effect of age, F(3, 68) = 1.7, p > .1. Scheffé’s post hoc comparisons exploring the main effect of trials revealed that, across all ages, looking on the baseline trials differed from looking on the posthabituation trials (p < .001), indicating that infants habituated to the affective expressions. More important, planned comparisons following up the Age × Trials interaction revealed significant visual recovery (mean looking on the test trials vs. mean looking on the posthabituation trials) at every age except 3 months. The difference between posthabituation and test trial looking was significant for the 4-month-olds, t(17) = 5.3, p < .01; the 5-month-olds, t(17) = 2.6, p < .05; and the 7-month-olds, t(17) = 9.7, p < .01; but not for the 3-month-olds, t(17) = 1.1, p > .1. In addition, a one-way ANOVA comparing infants’ visual recovery across ages was significant, F(3, 68) = 3.77, p = .014. Follow-up comparisons revealed that the 3-month-olds’ visual recovery reliably differed from that of the 4-month-olds, t(34) = 4.75, p < .001; the 5-month-olds, t(34) = 2.63, p = .013; and the 7-month-olds, t(34) = 2.88, p = .007. The visual recovery of the 4-, 5-, and 7-month-olds did not reliably differ (all ps > .1).
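The two kinds of t tests in this paragraph can be approximated from summary statistics. The sketch below is not the authors' analysis code: it computes a one-sample t on difference scores (test minus posthabituation) and a pooled-variance independent-samples t for comparing recovery between two ages, using the rounded visual recovery means and SDs from Table 1. Because the inputs are rounded and the exact procedures are not described, recomputed values are approximations and need not match every reported statistic; p values would additionally require the t distribution, which is omitted here.

```python
from math import sqrt

def paired_t(mean_diff, sd_diff, n):
    """One-sample t on difference scores (e.g., test minus posthabituation).

    Returns (t, df) with df = n - 1.
    """
    return mean_diff / (sd_diff / sqrt(n)), n - 1

def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t (group 2 minus group 1) with pooled variance.

    Returns (t, df) with df = n1 + n2 - 2.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m2 - m1) / se, df

if __name__ == "__main__":
    # Visual recovery row of Table 1 (seconds): mean, SD, n
    t4, df4 = paired_t(6.4, 5.0, 18)
    t34, df34 = pooled_t(-1.1, 4.5, 18, 6.4, 5.0, 18)
    print(f"4-month-olds: t({df4}) = {t4:.2f}")
    print(f"3 vs. 4 months: t({df34}) = {t34:.2f}")
```

For example, the 4-month-olds' recovery (M = 6.4, SD = 5.0, n = 18) gives t(17) ≈ 5.43, close to the reported 5.3, and comparing the 3- and 4-month-olds gives t(34) ≈ 4.73, close to the reported 4.75.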

We also examined infants’ visual recovery to the final control trial (i.e., the toy turtle). This analysis was particularly relevant for the 3-month-olds because it could rule out the possibility that their nonsignificant visual recovery to a change in affect was attributable to fatigue. Infants at each age, including the 3-month-olds, showed a significant increase in looking from the posthabituation trials to the final control trial, t(17) = 7.7, p < .01; t(17) = 6.3, p < .01; t(17) = 5.7, p < .01; and t(17) = 7.8, p < .01, for the 3-, 4-, 5-, and 7-month-olds, respectively. Thus, it seems unlikely that the 3-month-olds’ nonsignificant discrimination of affect was a result of fatigue.

We also examined (using a two-tailed nonparametric binomial test) whether the results of each age group were representative of individual infants’ responses or were carried by a few infants with large positive visual recovery scores. Among the 3-month-olds, only 9 of 18 had positive visual recovery scores (range = −10.5 to 6.9 s, p = .95). By contrast, all 18 of the 4-month-olds had positive visual recovery scores (range = 0.5 to 12.8 s, p < .01). Fourteen of the 18 5-month-olds had positive visual recovery scores (range = −7.4 to 39.8 s, p < .05), and 17 of the 18 7-month-olds had positive visual recovery scores (range = −5.8 to 41.3 s, p < .01). Thus, data at the individual level converged with the group analyses and demonstrated clear evidence of discriminating bimodal audiovisual affect at 4, 5, and 7 months of age but not at 3 months of age.
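The individual-level analysis is a sign test: under the null hypothesis, each infant is equally likely to show positive or negative recovery, so the number of positive scores out of 18 follows a binomial distribution with p = .5. A minimal sketch of an exact two-tailed test follows. It doubles the more extreme tail, which is one common convention; the text does not say which two-tailed procedure was used, so values from this sketch need not reproduce every reported p value. The function name is hypothetical.

```python
from math import comb

def sign_test_two_tailed(k, n):
    """Exact two-tailed binomial (sign) test of k positive scores out of n, p = .5.

    Doubles the probability of the more extreme tail and caps the result at 1.
    """
    if not 0 <= k <= n:
        raise ValueError("k must be between 0 and n")
    tail_start = max(k, n - k)  # count in the more extreme direction
    upper_tail = sum(comb(n, i) for i in range(tail_start, n + 1)) / 2 ** n
    return min(1.0, 2 * upper_tail)

if __name__ == "__main__":
    # Counts of positive visual recovery scores out of 18 infants per age (Experiment 1)
    for age, k in [(3, 9), (4, 18), (5, 14), (7, 17)]:
        print(f"{age}-month-olds: {k}/18, p = {sign_test_two_tailed(k, 18):.4f}")
```

With these counts the sketch gives p < .01 for 18 of 18 and 17 of 18 and p ≈ .031 for 14 of 18, in line with the reported thresholds; for 9 of 18 this convention yields p = 1.0, compared with the reported .95.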

Three one-way ANOVAs were conducted to determine whether infants of different ages differed in their initial interest level (baseline), total looking time (number of seconds to reach habituation), or number of trials to reach habituation. Results revealed no significant effects (all ps > .1). In addition, we examined whether there were any effects of affective expression or actress on infants’ visual recovery. The results of this 3 × 3 ANOVA revealed that visual recovery during test trials to the happy, sad, or angry affective expression (M = 5.0 s, SD = 9.4; M = 5.3 s, SD = 9.6; and M = 2.8 s, SD = 6.4, respectively) did not significantly differ, F(2, 63) = 0.559, p = .575. The effect of actress, F(2, 63) = 0.105, p = .901, and the interaction of affective expression and actress, F(4, 63) = 0.422, p = .792, also did not reach significance. Researchers examining infants’ discrimination of affect often find greater responsiveness to positive than to negative expressions (see Walker-Andrews, 1997, for a discussion of this point); as the above analysis indicates, however, we observed no such differences. Together, these results demonstrate that infants between 4 and 7 months of age are able to discriminate changes in dynamic affective facial expressions when they are presented bimodally, as in natural audiovisual speech.

Experiment 2: The Development of Sensitivity to Affect in Unimodal Auditory Stimulation

According to the intersensory redundancy hypothesis, early in development or when first encountering a new event, intersensory redundancy facilitates perception of amodal information. However, over the course of development or experience, infants rely less on intersensory redundancy to perceive amodal information (Bahrick & Lickliter, 2004; Bahrick et al., 2004). Thus, if detection of affect emerges in a multimodal context and is later extended to unimodal contexts, we would expect detection of a change in affect in unimodal stimulation (with no redundancy) to emerge after 4 months of age, the age at which detection in multimodal stimulation is first evident. In Experiment 2, therefore, we asked whether 4-, 5-, and 7-month-olds are capable of discriminating changes in affect when presented with unimodal auditory stimulation consisting of the voices used in Experiment 1. Three-month-olds were not included in Experiment 2 because they did not show evidence of discriminating bimodally presented expressions of affect in Experiment 1.

Experiment 2 was identical to Experiment 1 with the following exceptions. The soundtracks used in Experiment 1 served as the auditory stimuli and were paired with a static image of the face of the same actress in a neutral affective expression. Presentation of the soundtrack was contingent on infants’ fixation to the static face and, thus, allowed us to assess attention to the acoustic information. The pairing of an auditory stimulus with a static, nonchanging visual display has been used previously in our own research, as well as in the research of others, to assess infants’ discrimination of auditory stimuli (see Bahrick et al., 2002; Horowitz, 1974; Walker-Andrews & Grolnick, 1983; Walker-Andrews & Lennon, 1991). From habituation to test trials, we changed only the acoustic information for affect while the static face remained constant. This presentation is called unimodal auditory because affect was conveyed only by unimodal acoustic information, and the static face provided no redundancy or visual information specifying affect. All counterbalancing was conducted as in Experiment 1.

Method

Participants

Fifty-four infants of 4, 5, and 7 months of age (n = 18 at each age) participated. The mean age of the 4-month-olds (11 girls and 7 boys) was 122 days (SD = 2.4). The mean age of the 5-month-olds (9 girls and 9 boys) was 153 days (SD = 3.0). The mean age of the 7-month-olds (8 girls and 10 boys) was 228 days (SD = 4.1). The data of 21 additional infants (nine 4-month-olds, four 5-month-olds, and eight 7-month-olds) were excluded from the final analyses. Eleven infants (two 4-month-olds, four 5-month-olds, and five 7-month-olds) were excluded because of excessive fussiness. Six infants (four 4-month-olds and two 7-month-olds) were excluded for experimenter error. Two 4-month-olds were excluded for failure to reach habituation within 20 trials, one 4-month-old was excluded for fatigue, and one 7-month-old was excluded for falling asleep during habituation. Ninety-eight percent of the participants were White non-Hispanic, and 2% were Hispanic. All infants were healthy, normal, full-term infants weighing at least 5 lb at birth, with 5-min Apgar scores of 7 or higher.

Apparatus and procedure

The apparatus and procedures were identical to Experiment 1 with the exception that unimodal auditory events were presented. A second observer was present for 31 of the 54 infants (57%) in order to examine interobserver reliability. Interobserver reliability in Experiment 2 was calculated by a Pearson product–moment correlation across all participants (r = .95; SD = .03). The interobserver reliabilities for the 4-month-olds (n = 10), 5-month-olds (n = 12), and 7-month-olds (n = 9) were r = .94 (SD = .09), r = .96 (SD = .03), and r = .97 (SD = .07), respectively.

Results and Discussion

Infants’ looking time for each trial type (baseline, posthabituation, and test) and visual recovery (the difference in looking between the posthabituation and test trials) for each age are presented in Table 2.

Table 2.

Means and Standard Deviations for Visual Fixation in Seconds for Baseline, Posthabituation, Test Trials, and Visual Recovery as a Function of Age for Infants Receiving Unimodal (Auditory) Information Specifying Affect

| Trial type | 4-month-olds M | 4-month-olds SD | 5-month-olds M | 5-month-olds SD | 7-month-olds M | 7-month-olds SD |
|---|---|---|---|---|---|---|
| Baseline | 32.2 | 18.6 | 30.6 | 17.3 | 18.1 | 12.9 |
| Posthabituation | 4.8 | 2.8 | 5.81 | 3.1 | 3.8 | 1.9 |
| Test | 5.2 | 2.9 | 9.11 | 5.1 | 6.5 | 3.8 |
| Average (across all trial types) | 14.1 | 16.8 | 15.2 | 15.2 | 9.4 | 9.8 |
| Visual recovery (test–posthabituation) | 0.4 | 2.9 | 3.3* | 4.7 | 2.7** | 2.6 |

Note. Baseline is the mean visual fixation during the first two habituation trials and reflects initial interest. Posthabituation is the mean visual fixation to two no-change trials just after the habituation criterion was met and reflects final interest in the habituated events. Test is the mean visual fixation during the two change test trials, and visual recovery is the difference between visual fixation during the test trials and visual fixation during the posthabituation trials. Average is the mean looking across the three trial types (baseline, posthabituation, and test).

*p < .05. **p < .01.

A repeated measures ANOVA was conducted with age (4, 5, and 7 months) as the between-subjects factor and trial type (baseline, posthabituation, and test) as the repeated measure. The results revealed a significant effect of trials, F(2, 102) = 92.82, p < .001; a significant Age × Trials interaction, F(4, 102) = 3.8, p < .01; and, in contrast to Experiment 1, a significant effect of age, F(2, 51) = 4.24, p = .02. Scheffé’s post hoc comparisons exploring the main effect of trials (baseline, posthabituation, and test) indicated that the overall mean looking for each type of trial differed significantly from that of each other trial type (all ps < .01), with the greatest looking during baseline and the least during posthabituation. Thus, combined across the three ages, infants showed evidence of habituation (baseline vs. posthabituation trials) and of visual recovery (posthabituation vs. test) to the acoustic change in affect. Post hoc comparisons exploring the main effect of age revealed that the overall looking of the 7-month-olds was significantly less than that of the 4-month-olds (p < .01) and the 5-month-olds (p < .05), whereas the overall looking of the 4- and 5-month-olds did not differ from one another (p > .1). As can be seen in Table 2, the 7-month-olds showed lower mean looking across all trial types (M = 9.4, SD = 9.8) than either the 4- or the 5-month-olds (M = 14.1, SD = 16.8; and M = 15.2, SD = 15.2, respectively).

The main effect of trial type was qualified by the Age × Trial Type interaction, which we evaluated with Scheffé’s post hoc comparisons. First, and most important to our hypotheses, 5- and 7-month-olds showed a significant visual recovery from the posthabituation trials to the test trials, t(17) = 2.51, p <.05, and t(17) = 4.29, p <.01, respectively. However, the mean recovery for the 4-month-olds, t(17) =.43, p >.1, was not significant. In addition, the recovery of the 4-month-olds reliably differed from the recovery of the 5- and 7-month-olds, t(34) = 2.64, p =.019, and t(34) = 2.42, p =.021, respectively, whereas the mean recoveries of the 5- and 7-month-olds did not differ from each other (p >.1). Thus, in Experiment 2, the 5- and 7-month-olds, but not the 4-month-olds, showed significant evidence of discriminating a change in affect when they received unimodal auditory information. These results stand in contrast to those of Experiment 1, in which infants at all three ages (4, 5, and 7 months) reliably discriminated a change in affect under conditions of redundant bimodal presentation.
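
The t values reported above test two different things: whether one age group's recovery differs from zero (df = 18 − 1 = 17) and whether two age groups' recoveries differ from each other (df = 18 + 18 − 2 = 34). A minimal sketch of the ordinary Student t statistics with those degrees of freedom follows. It does not apply the Scheffé adjustment described in the text, and p values would additionally require the t distribution (for example, SciPy's `scipy.stats.ttest_1samp` and `scipy.stats.ttest_ind`); the function names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev, variance


def recovery_t(recoveries):
    """One-sample t of visual recovery scores against 0.

    Equivalent to a paired t-test of test vs. posthabituation looking.
    Returns (t, df); df = n - 1, e.g. 17 for 18 infants.
    """
    n = len(recoveries)
    t = mean(recoveries) / (stdev(recoveries) / sqrt(n))
    return t, n - 1


def between_groups_t(group_a, group_b):
    """Student's pooled-variance t comparing two independent groups' recoveries.

    Returns (t, df); df = n_a + n_b - 2, e.g. 34 for two groups of 18.
    """
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    pooled = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    t = (mean(group_a) - mean(group_b)) / sqrt(pooled * (1 / n_a + 1 / n_b))
    return t, df


# Hypothetical recovery scores (seconds), not data from this study.
print(recovery_t([1.2, -0.4, 3.1, 2.2, 0.8]))
print(between_groups_t([1.2, -0.4, 3.1, 2.2, 0.8], [0.1, -1.0, 0.6, 0.3, -0.2]))
```

Testing recovery scores against zero is used here because it is algebraically identical to a paired comparison of each infant's test and posthabituation looking.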

Second, the Age × Trial Type interaction also indicated that the 4- and 5-month-olds’ initial looking (i.e., during baseline) was greater than the baseline looking of the 7-month-olds, F(2, 52) = 3.95, p =.026, ; that is, 7-month-olds looked less during the first two trials of the habituation sequence than the 4- and 5-month-olds did. Thus, the significant effect of age appears to reflect the 4- and 5-month-olds’ longer initial baseline looking. Because the amount of time infants spend attending to the stimulus during habituation is an important basis for discrimination, we conducted a one-way ANOVA, which indicated that the 4-, 5-, and 7-month-olds did not differ in the total number of seconds required to reach habituation (p >.10). Infants also did not differ in the total number of trials needed to reach habituation (p >.10). Thus, although the 7-month-olds’ baseline looking was less than that of the 4- and 5-month-olds, infants did not differ across ages in overall processing time during the habituation procedure, F(2, 51) = 0.41, p >.1, . The greater initial looking time of younger infants is consistent with prior findings that younger infants look longer at the same stimuli than older infants do (e.g., Fagan, 1974; Hale, 1990; Rose, 1983; Rose, Feldman, & Jankowski, 2002).

We again conducted a 3 × 3 ANOVA to examine whether infants’ visual recoveries at each age (4, 5, and 7 months) differed as a function of the affective expression and actress used during habituation. Infants’ mean looking during the test trials to the happy, sad, and angry expressions (M = 1.9 s, SD = 2.5; M = 2.3 s, SD = 5.5; and M = 2.4 s, SD = 2.7, respectively) did not differ significantly, F(2, 45) = 0.117, p =.575, . The effect of actress, F(2, 45) = 0.884, p =.420, , and the interaction of affective expression and actress, F(4, 45) = 0.705, p =.6, , also failed to reach significance.

Finally, we examined the number of infants at each age who exhibited positive versus negative recovery scores in order to assess whether the overall mean at each age was influenced by statistical outliers. At 4 months of age, 12 of 18 infants showed positive visual recovery scores, a result that did not differ from chance (R = −6.0–5.6, p >.1). However, at 5 months of age, 14 infants showed positive visual recovery scores (p <.01), and at 7 months of age, 15 infants did so (p <.01). Thus, the individual-participant analyses converge with the group analyses and demonstrate that 5- and 7-month-olds, but not 4-month-olds, detected a change in affect in unimodal auditory stimulation.

The results of Experiment 2 contrast with those of Experiment 1. In bimodal, audiovisual stimulation (Experiment 1), infants discriminated a change in affect developmentally earlier (4-month-olds) than in unimodal auditory stimulation (5-month-olds). The results also revealed that, across all three ages, infants did not differ in terms of the total number of seconds required to reach habituation, even though 4- and 5-month-olds spent more time looking during the first two habituation trials. Finally, the results of Experiment 2 are also congruent with the affect discrimination literature in which it has been documented that between 5 and 7 months of age infants are capable of discriminating vocal expressions of affect (Walker-Andrews & Grolnick, 1983; Walker-Andrews & Lennon, 1991).

Experiment 3: The Development of Sensitivity to Affect in Unimodal Visual Stimulation

The purpose of Experiment 3 was to assess infants’ discrimination of affect on the basis of unimodal visual information. As in Experiment 2, we predicted that if the detection of affect initially emerges in bimodal stimulation and is later extended to unimodal stimulation, then sensitivity to affect in unimodal visual stimulation should emerge after the age of 4 months.

However, there was also reason to hypothesize that the development of infants’ sensitivity to unimodal visual and unimodal auditory presentations of the same information might differ. For example, it has been demonstrated that the senses emerge in an invariant sequence (tactile, vestibular, chemical, auditory, visual); therefore, auditory perception may be more differentiated than visual perception in early infancy (see Gottlieb, 1971; Lewkowicz, 2000a; Turkewitz & Kenny, 1982; Turkewitz & Mellon, 1989).

In this experiment, we asked whether 4-, 5-, and 7-month-olds were capable of discriminating changes in affect when presented with unimodal visual information. Experiment 3 was identical in all respects to Experiment 1 except that infants saw the Experiment 1 videotapes without sound, so affect was specified only by the dynamic, silently speaking faces.

Method

Participants

Fifty-four infants at 4, 5, and 7 months of age (n = 18 at each age) participated. The mean age of the 4-month-olds (9 girls and 9 boys) was 122 days (SD = 2.0). The mean age of the 5-month-olds (8 girls and 10 boys) was 152 days (SD = 4.0). The mean age of the 7-month-olds (10 girls and 8 boys) was 230 days (SD = 2.9). The data of nine additional infants (one 4-month-old, three 5-month-olds, and five 7-month-olds) were excluded from the final analyses: five (one 4-month-old, two 5-month-olds, and two 7-month-olds) because of experimenter error and four (one 5-month-old and three 7-month-olds) because of excessive fussiness. Ninety-four percent of the participants were White non-Hispanic, and 6% were Asian.

Stimulus events and apparatus

Infants were presented with the visual portions of the events used in Experiment 1. The visual portion (i.e., unimodal visual) depicted the faces speaking silently. All other stimuli, apparatus, and procedures were the same as those used in Experiment 1. A second observer was present for 26 of the 54 infants (48%) in order to examine interobserver reliability. Interobserver reliability was r =.98 (SD =.05) across all participants. The interobserver reliabilities for the 4-month-olds (n = 8), 5-month-olds (n = 9), and 7-month-olds (n = 9) were r =.97 (SD =.04), r =.96 (SD =.11), and r =.98 (SD =.10), respectively.
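
The reliability coefficients above are reported as a mean r with an SD, which suggests one correlation per infant averaged across infants. Assuming that procedure (it is not described in this section), with r taken as the Pearson correlation between the two observers' trial-by-trial looking times, the summary could be computed as in the following sketch; the function names and input layout are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of looking times."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def reliability_summary(pairs):
    """Mean and SD of per-infant r across infants.

    pairs -- one (primary_observer, second_observer) pair of trial-by-trial
             looking-time lists per infant (assumed layout; needs >= 2 infants).
    """
    rs = [pearson_r(primary, second) for primary, second in pairs]
    return mean(rs), stdev(rs)


# Hypothetical coding of two infants by two observers (seconds per trial).
example = [
    ([35.0, 20.5, 9.0, 6.2], [34.1, 21.0, 9.4, 6.0]),
    ([41.2, 30.0, 12.3, 8.8], [40.0, 29.1, 13.0, 8.1]),
]
print(reliability_summary(example))
```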

Results and Discussion

Infants’ looking time for each trial type and age is presented in Table 3. A repeated measures ANOVA was conducted with age (4, 5, and 7 months) as the between-subjects factor and trial type (baseline, posthabituation, and test) as the repeated measure. The results revealed a significant effect of trials, F(2, 102) = 124.5, p <.001, ; a significant Age × Trials interaction, F(4, 102) = 14.1, p <.01, ; and a significant effect of age, F(2, 51) = 8.8, p <.01, . Scheffé’s post hoc comparisons exploring the main effect of trials (baseline, posthabituation, and test) showed that mean looking on the baseline trials exceeded mean looking on the posthabituation trials (p <.01), indicating that infants habituated. The overall mean looking on the posthabituation trials, however, did not reliably differ from the mean looking on the test trials (p >.05). Follow-up comparisons also explored the main effect of age and revealed that the overall looking of the 4-month-olds differed from that of the 5-month-olds (p =.032) and from that of the 7-month-olds (p <.01), but the overall looking of the 5- and 7-month-olds did not differ from one another (p >.05). As can be seen in Table 3, the 4-month-olds showed a longer mean looking time across all trial types than either the 5- or 7-month-olds.

Table 3.

Means and Standard Deviations for Visual Fixation in Seconds for Baseline, Posthabituation, Test Trials, and Visual Recovery as a Function of Age for Infants Receiving Unimodal (Visual) Information Specifying Affect

| Trial type | 4-month-olds M | SD | 5-month-olds M | SD | 7-month-olds M | SD |
|---|---|---|---|---|---|---|
| Baseline | 35.5 | 14.1 | 24.6 | 13.5 | 13.5 | 9.8 |
| Posthabituation | 5.7 | 3.1 | 4.9 | 1.9 | 3.7 | 2.4 |
| Test | 5.9 | 5.0 | 5.9 | 3.9 | 7.5 | 5.9 |
| Average (across all trial types) | 15.7 | 16.6 | 11.8 | 12.2 | 8.3 | 7.8 |
| Visual recovery (test–posthabituation) | 0.2 | 5.4 | 0.97 | 4.1 | 3.8** | 4.3 |

Note. Baseline is the mean visual fixation during the first two habituation trials and reflects initial interest. Posthabituation is the mean visual fixation to two no-change trials just after the habituation criterion was met and reflects final interest in the habituated events. Test is the mean visual fixation during the two change test trials, and visual recovery is the difference between visual fixation during the test trials and visual fixation during the posthabituation trials. Average is the average looking across all habituation trial types.

** p <.01.

To test our main hypothesis, we evaluated the Age × Trial Type interaction with planned comparisons. Results revealed that only the 7-month-olds showed a significant visual recovery (increase in looking from the posthabituation trials to the test trials) to a change in affect, t(17) = 3.9, p <.01. In contrast, the mean visual recovery for the 4- and 5-month-olds did not reach significance, t(17) =.45, p >.1, and t(17) = 1.0, p >.1, respectively, and did not differ from each other (p >.1). However, the visual recovery of the 4- and 5-month-olds reliably differed from that of the 7-month-olds, t(34) = 2.35, p =.025, and t(34) = 2.12, p =.030, respectively. Thus, in this experiment, only the 7-month-olds showed significant evidence of discriminating a unimodal visual change in affect, and the visual recovery of the 7-month-olds was significantly greater than that of the 4- and 5-month-olds.

We again examined the number of infants at each age who exhibited either positive or negative visual recoveries using a two-tailed binomial test in order to assess whether the overall mean at each age was influenced by statistical outliers. At 4 months of age, 7 of 18 infants showed positive visual recovery scores, a frequency that did not differ from chance (R = −6.8–16.2, p >.1). At 5 months of age, 9 infants showed positive visual recovery scores (R = −5.7–10.5, p =.96), also not different from chance. However, at 7 months of age, 16 infants showed positive visual recovery scores, a result that reliably differed from chance (R = −1.8–13.4, p <.001). These findings from individual subject analyses converge with those of the group analyses and demonstrate detection of affect in unimodal visual stimulation at 7 but not at 4 or 5 months of age.
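
The individual-participant analysis amounts to a sign test: count the infants with positive recovery and compare that count with a binomial distribution with p = .5. A sketch of an exact two-tailed version follows. The helper names are hypothetical, zero recoveries are dropped by assumption, and because the exact computation used for the reported values is not described here, results from this sketch need not reproduce the reported p values. SciPy's `scipy.stats.binomtest` offers an equivalent library routine.

```python
from math import comb


def binomial_two_tailed(k, n, p=0.5):
    """Exact two-tailed binomial test.

    Sums the probabilities of every outcome (0..n successes) that is no more
    likely under H0 than the observed count k.
    """
    probs = [comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    cutoff = probs[k] * (1 + 1e-9)  # small tolerance for floating-point ties
    return min(1.0, sum(q for q in probs if q <= cutoff))


def sign_test(recoveries):
    """Count positive recoveries and test the count against p = .5.

    Zero recoveries carry no sign and are dropped (an assumption here).
    Returns (number positive, number nonzero, two-tailed p).
    """
    nonzero = [r for r in recoveries if r != 0]
    positive = sum(1 for r in nonzero if r > 0)
    return positive, len(nonzero), binomial_two_tailed(positive, len(nonzero))


# Example: 7 of 18 infants with positive recovery scores.
print(binomial_two_tailed(7, 18))
```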

A 3 × 3 ANOVA was conducted to examine whether infants’ visual recoveries at each age (4, 5, and 7 months) differed as a function of the affective expression and actress used during habituation. Infants’ mean looking to happy, sad, and angry affective expressions (M = 2.2 s, SD = 4.6; M =.87 s, SD = 5.6; and M = 1.9 s, SD = 4.3, respectively) did not significantly differ, F(2, 45) = 0.357, p =.701, . The effect of actress, F(2, 45) = 0.749, p =.479, , and the interaction of affective expression and actress, F(4, 45) = 0.929, p =.456, , also failed to reach significance.

Comparison Across Experiments 1, 2, and 3

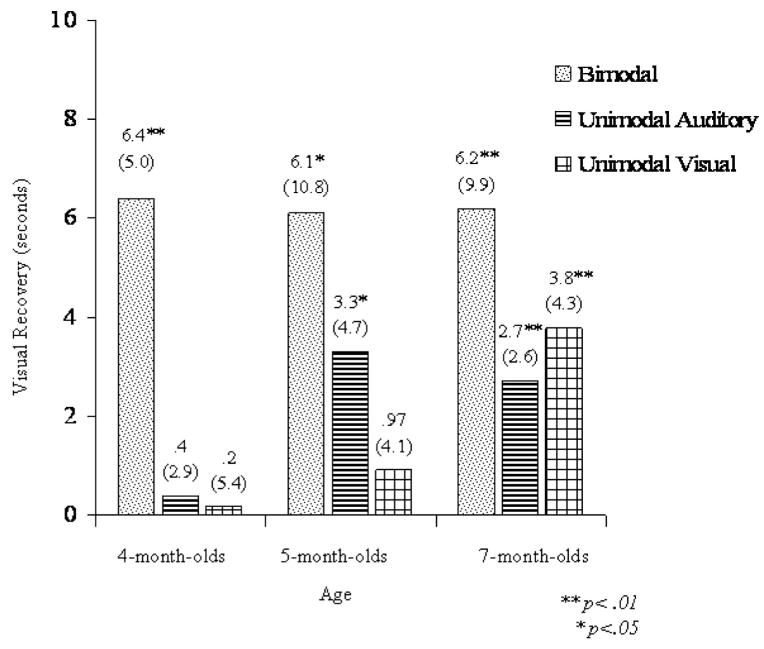

Further analyses were conducted to compare infants’ detection of affect in bimodal (Experiment 1) versus unimodal (Experiments 2 and 3) stimulation. Figure 1 displays infants’ visual recovery to a change in affect as a function of modality (bimodal, unimodal auditory, and unimodal visual) and age (4, 5, and 7 months). Although Scheffé’s post hoc comparisons of trial type (posthabituation vs. test) tested our main hypotheses by revealing whether infants detected a change in affect at each age in Experiments 1–3, comparisons across experiments provide additional tests of our hypotheses.

Figure 1.

Mean visual fixation (and standard deviations) as a function of condition (bimodal, unimodal auditory, unimodal visual) at 4, 5, and 7 months of age during the habituation phase. Visual recovery is the difference between infants’ visual fixation during the test trials and visual fixation during the posthabituation trials. * p <.05. ** p <.01.

If detection of affect emerges first through detection of intersensory redundancy and is later extended to unimodal stimulation, then the youngest infants (4-month-olds) should show greater visual recovery to a change in affect in bimodal stimulation (Experiment 1) than in unimodal auditory (Experiment 2) or unimodal visual stimulation (Experiment 3), and the oldest infants (7-month-olds) should show little difference in visual recovery across modalities. To test this hypothesis, we conducted a one-way ANOVA at each age comparing visual recovery as a function of modality (bimodal, unimodal auditory, and unimodal visual). Each ANOVA was followed by a priori planned comparisons of visual recovery to auditory, visual, and bimodal audiovisual changes. At 4 months, results indicated a significant effect of modality, F(2, 51) = 10.75, p <.001, . Planned comparisons supported our predictions and indicated that the visual recovery of the 4-month-olds in the bimodal condition significantly differed from that in the unimodal auditory, t(34) = 4.4, p <.01, and unimodal visual conditions, t(34) = 3.59, p <.01. In contrast, visual recovery in the unimodal auditory and visual conditions did not differ (p >.1).

At 5 months, results of the ANOVA also indicated a significant effect of modality, F(2, 51) = 3.19, p =.03, . Planned comparisons indicated that visual recovery in the bimodal condition significantly differed from that in the unimodal visual condition, t(34) = 2.27, p <.05, but not from that in the unimodal auditory condition, t(34) = 3.59, p >.1. Visual recovery in the unimodal auditory and visual conditions did not reliably differ (p >.1). These analyses, along with our earlier analyses, demonstrate that 5-month-olds are able to discriminate a change in affect in bimodal or unimodal auditory stimulation, whereas they show no reliable discrimination in unimodal visual stimulation. In contrast, at 7 months of age, results of the ANOVA indicated a nonsignificant effect of modality on visual recovery, F(2, 51) = 1.42, p >.1, . Thus, although 7-month-olds showed discrimination of affect under bimodal, unimodal auditory, and unimodal visual conditions, discrimination was not superior in any one condition.

These analyses, taken together with the Scheffé tests of Experiments 1–3, reveal that detection of affect, specified by amodal temporal and intensity shifts, emerges first in bimodal redundant stimulation by 4 months and is later extended to unimodal auditory and then to unimodal visual stimulation. In bimodal stimulation (Experiment 1), infants detected a change in affect by 4 months of age, and sensitivity remained stable across age. In contrast, in unimodal stimulation, infants showed a gradual improvement across age in detection of affect changes. Infants did not detect the change in acoustic stimulation until 5 months of age (Experiment 2), and only by 7 months of age did they detect the change in visual stimulation (Experiment 3). These findings are consistent with predictions of the intersensory redundancy hypothesis that early in development redundant bimodal stimulation fosters perception of amodal properties, and with increasing age, attention becomes more flexible and infants are able to perceive amodal properties under conditions of both unimodal and bimodal stimulation (Bahrick & Lickliter, 2000; Bahrick et al., 2004).

Experiment 4: Infants’ Sensitivity to Affect in Asynchronous Audiovisual Stimulation

Intersensory redundancy is defined as the spatially and temporally coordinated presentation of the same amodal information across two or more senses (Bahrick & Lickliter, 2000, 2004; Bahrick et al., 2004, 2006). Thus, temporal synchrony between the auditory and visual stimulation is entailed in intersensory redundancy and is hypothesized to be necessary for the early developmental benefit of bimodal stimulation in detecting amodal properties. In other words, temporal synchrony makes redundantly specified amodal stimulus properties stand out as perceptual “foreground,” while other properties recede into the “background.” Alternatively, the benefit of bimodal stimulation could result from other factors, such as stimulation of two sense modalities (visual and auditory) or a greater amount of overall stimulation (visual plus auditory). Experiment 4 was, thus, conducted to assess whether temporal synchrony between facial and vocal information for affect was necessary for infants’ initial detection of affect changes. In this experiment, we presented 4- and 5-month-old infants with bimodal information specifying affect; however, the auditory and visual information was temporally misaligned. If bimodal specification alone is responsible for younger infants’ discrimination of affect, then infants should demonstrate reliable discrimination of affect even in the asynchronous condition. However, if temporal synchrony between visually and acoustically specified affect is necessary for promoting attention to affect, then reliable discrimination should be evident only when the auditory and visual information are presented in temporal alignment.

We chose 4- and 5-month-olds for this experiment because 4 months was the youngest age at which infants demonstrated bimodal discrimination of affect and 5 months was the youngest age at which infants demonstrated unimodal discrimination of affect. We predicted that if temporal synchrony is critical for recruiting younger infants’ attention toward the redundantly specified amodal property of affect, then the 4-month-olds should show evidence of discrimination when the auditory–visual information is presented synchronously but not when it is presented asynchronously. In contrast, given that 5-month-olds reliably discriminated affect when it was presented unimodally (acoustically), 5-month-olds may discriminate the expressions of affect when presented asynchronously because, by this age, intersensory redundancy may not be necessary and discrimination could be based on acoustic information alone.

Method

Participants

Thirty-six infants at 4 and 5 months of age (n = 18 at each age) participated. The mean age of the 4-month-olds (9 girls and 9 boys) was 121 days (SD = 3.0). The mean age of the 5-month-olds (10 girls and 8 boys) was 152 days (SD = 2.0). Five infants (three 4-month-olds and two 5-month-olds) were excluded because of fussiness. Three additional 5-month-olds were excluded for equipment failure, and one additional 5-month-old was excluded for experimenter error. Infants were recruited in a manner identical to previous experiments. Ninety-seven percent of the participants were White non-Hispanic, and 1% was Hispanic.

Stimulus events and apparatus

Infants were presented with the same stimuli used in Experiment 1 with the exception that the auditory information was delayed by 2 s with respect to the visual information. In all other respects, the stimuli, apparatus, and procedures were the same as those used in Experiment 1. A second observer was present for 13 of the 36 infants (36%) in order to examine interobserver reliability. Interobserver reliability was r =.95 (SD =.08) across all participants. The interobserver reliability for the 4-month-olds (n = 6) was r =.96 (SD =.04); for the 5-month-olds (n = 7), it was r =.95 (SD =.12).

Results and Discussion

Infants’ looking times for each trial type, age, and condition in this experiment, as well as in Experiments 1 and 5, are presented in Table 4.

Table 4.

Means and Standard Deviations for Visual Fixation in Seconds for Baseline, Posthabituation, Test Trials, and Visual Recovery as a Function of Age and Type of Bimodal Specification (Synchronous, Asynchronous, and Sequential)

| Trial type | Exp. 1 synchronous, 4-month-olds M | SD | Exp. 1 synchronous, 5-month-olds M | SD | Exp. 4 asynchronous, 4-month-olds M | SD | Exp. 4 asynchronous, 5-month-olds M | SD | Exp. 5 sequential, 4-month-olds M | SD |
|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | 33.8 | 15.5 | 29.1 | 16.1 | 40.8 | 15.2 | 33.9 | 16.1 | 37.5 | 20.5 |
| Posthabituation | 4.9 | 1.8 | 6.7 | 3.9 | 6.6 | 5.0 | 5.4 | 2.8 | 9.2 | 6.2 |
| Test | 11.3 | 4.7 | 12.8 | 12.0 | 8.2 | 5.1 | 11.3 | 12.0 | 9.7 | 4.5 |
| Average (across all trial types) | 16.7 | 15.5 | 16.2 | 15.0 | 18.5 | 14.6 | 16.9 | 13.2 | 18.8 | 16.3 |
| Visual recovery (test–posthabituation) | 6.4** | 5.0 | 6.1* | 10.8 | 1.6 | 5.1 | 5.9* | 9.6 | 0.51 | 4.7 |

Note. Baseline is the mean visual fixation during the first two habituation trials and reflects initial interest. Posthabituation is the mean visual fixation to two no-change trials just after the habituation criterion was met and reflects final interest in the habituated events. Test is the mean visual fixation during the two change test trials, and visual recovery is the difference between visual fixation during the test trials and visual fixation during the posthabituation trials. Average is the average looking across all habituation trial types.

* p <.05.

** p <.01.

A repeated measures ANOVA was conducted with age (4 and 5 months) as the between-subjects factor and trial type (baseline, posthabituation, and test) as the repeated measure. The results revealed a significant effect of trials, F(2, 68) = 155.2, p <.001, ; a significant Age × Trials interaction, F(2, 67) = 3.73, p <.05, ; and a nonsignificant effect of age, F(1, 34) = 0.490, p >.10, . Post hoc comparisons exploring the main effect of trials (baseline, posthabituation, and test) indicated that the overall mean looking for the baseline trials exceeded the mean looking during the posthabituation trials, t(34) = 17.2, p <.01, and the overall mean looking on the posthabituation trials also differed from the mean looking on the test trials, t(34) = 5.27, p <.01.

The significant Age × Trials interaction addressed our primary question of whether temporal synchrony is necessary for younger infants’ discrimination of affect. Follow-up comparisons indicated that the 5-month-olds in the asynchronous bimodal condition showed a significant visual recovery to the change in affect; that is, their increase in looking from the posthabituation trials to the test trials was significant, t(17) = 2.6, p =.017. The visual recovery of the 4-month-olds, however, did not reach significance in the asynchronous condition, t(17) = 1.3, p =.20. Thus, consistent with our predictions, 5-month-olds, but not 4-month-olds, discriminated a change in affective expression when face–voice synchrony was disrupted by a 2-s delay of the soundtrack.

We also compared the visual recovery of the 4- and 5-month-olds across the synchronous (Experiment 1) and asynchronous (Experiment 4) conditions using a priori planned comparisons. These studies used identical procedures and varied only in temporal synchrony. Five-month-olds showed a nonsignificant difference in visual recovery between the asynchronous and synchronous conditions, t(34) =.054, p =.957. Four-month-olds, however, showed significantly greater visual recovery in the synchronous than in the asynchronous condition, t(34) = 2.8, p <.01.

The results of this experiment demonstrate that 4-month-olds’ ability to discriminate changes in affect under bimodal, but not unimodal, conditions is not a result of presenting “more” information (bimodal) compared with “less” information (unimodal). Rather, these results demonstrate that the superior detection of affect in the bimodal condition is a result of intersensory redundancy and entails the temporal alignment of the auditory and visual stimulation. Similar findings of facilitation in synchronous but not asynchronous bimodal stimulation have been demonstrated in prior studies with human infants’ detection of rhythm (Bahrick & Lickliter, 2000) and quail embryos’ detection of the temporal features of a maternal call (Lickliter et al., 2002, 2004).

These findings also indicate that when infants are first learning to detect affective information (i.e., when the task is difficult), synchrony is important for facilitating learning. However, once infants are relatively proficient perceivers of affect in a particular context, synchrony is no longer necessary. Consistent with this view, Kahana-Kalman and Walker-Andrews (2001) found that 3.5-month-olds showed intermodal matching for synchronous and asynchronous happy and sad facial and vocal expressions when the expressions were presented by their own mothers but not for the expressions of an unfamiliar woman.

Experiment 5: 4-Month-Olds’ Sensitivity to Sequential Auditory and Visual Stimulation

Although Experiment 4 demonstrated that synchronous auditory and visual stimulation facilitates detection of affect at 4 months of age and that asynchronous bimodal information was not sufficient, it remains possible that synchrony per se was not necessary. Rather, detection of affect may be facilitated by the opportunity to experience information in two sense modalities. Thus, in Experiment 5, 4-month-old infants were presented with sequential auditory and visual stimulation; that is, habituation trials alternated between auditory and visual information for affect. In this way, infants would receive bimodal information without the potential distraction of face–voice asynchrony. If infants fail to detect an affect change following successive auditory and visual stimulation, we can be more confident that synchrony, rather than stimulation in two sense modalities, is necessary for facilitating early detection of affect, consistent with the predictions of the intersensory redundancy hypothesis (Bahrick & Lickliter, 2000; Bahrick et al., 2004). In this experiment, we asked whether sequential auditory and visual stimulation is sufficient for promoting discrimination of affect at 4 months of age or whether synchronous auditory and visual stimulation is necessary. Because 5-month-olds were able to discriminate affect changes when presented with bimodal audiovisual, unimodal auditory, and asynchronous bimodal stimulation, they were not included in this experiment.

Method

Participants

Eighteen 4-month-olds (7 girls and 11 boys) whose mean age was 122.2 days (SD = 2.5) participated. Seven additional 4-month-olds participated but were excluded from the analyses, five for excessive fussiness and two for failure to habituate within 20 trials. Infants were recruited in a manner identical to previous experiments. All of the participants were White non-Hispanic.

Stimulus events, apparatus, and procedure

Infants received the same stimuli used in Experiments 2 and 3. However, in this experiment, the habituation trials alternated between auditory and visual stimulation. Thus, infants received a silently speaking (i.e., moving) face on one trial and a voice with a static neutral face on the next trial. Both auditory and visual stimulation specified the same affective expression during habituation. Half the infants received a unimodal auditory trial as their first trial, and the other half received a unimodal visual trial as their first habituation trial. The remainder of the habituation and test trials alternated between unimodal auditory and unimodal visual information. The auditory–visual ordering was preserved across the habituation and test sequence such that if the last habituation trial was an auditory trial, then the first posthabituation trial was a visual trial. We preserved this alternation of auditory and visual trials to ensure that infants could not discriminate a change in the order of trials between habituation and test and that the only change from habituation to test was in the affective expression. During the test trials, each infant received two trials that depicted a change in affect, one unimodal visual and one unimodal auditory. All other stimuli, apparatus, and procedures were the same as those used in Experiment 1. A second observer was present for 8 of the 18 infants (44%) in order to examine interobserver reliability. Interobserver reliability was r =.93 (SD =.02).
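
The counterbalancing rule described above, in which modality alternates without interruption from the habituation trials through the posthabituation and test trials, can be expressed as a small generator of trial labels. This is an illustrative sketch with a hypothetical function name; in the actual procedure the number of habituation trials depended on when each infant met the habituation criterion.

```python
def modality_sequence(first, n_habituation, n_post=2, n_test=2):
    """Label each trial with its phase and modality so that auditory and visual
    trials alternate without a break from habituation through test.

    'visual' = silently speaking face; 'auditory' = voice with a static neutral face.
    """
    switch = {"auditory": "visual", "visual": "auditory"}
    if first not in switch:
        raise ValueError("first must be 'auditory' or 'visual'")
    phases = (["habituation"] * n_habituation
              + ["posthabituation"] * n_post
              + ["test"] * n_test)
    trials, modality = [], first
    for phase in phases:
        trials.append((phase, modality))
        modality = switch[modality]  # alternate on every trial, across phases
    return trials


# Half the infants start with an auditory trial, half with a visual one.
for phase, modality in modality_sequence("auditory", 5):
    print(phase, modality)
```

Because the two test trials are consecutive, this rule always yields one unimodal auditory and one unimodal visual test trial, as the procedure requires.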

Results and Discussion

Infants’ looking behavior as a function of trial type for Experiment 5 is presented in Table 4. The question addressed in Experiment 5 was whether 4-month-olds could discriminate a change in affect in sequential auditory and visual stimulation. We compared 4-month-olds’ looking as a function of trial type (baseline, posthabituation, and test) in a one-way ANOVA. The results indicated a significant effect of trial type, F(2, 53) = 29.5, p <.001, . Follow-up comparisons revealed that infants’ posthabituation looking was significantly less than their baseline looking, t(18) = 5.6, p <.01, indicating that infants habituated. However, infants’ visual recovery (the increase in looking from the posthabituation to the test trials) did not differ significantly from zero, t(17) =.456, p >.10. Thus, infants showed no evidence of detecting a change in affect in sequential auditory and visual stimulation.

We also compared 4-month-olds’ visual recovery across conditions of bimodal synchronous (Experiment 1), bimodal asynchronous (Experiment 4), and bimodal sequential stimulation (Experiment 5) using a one-way ANOVA. Results revealed a significant effect of condition, F(2, 51) = 7.16, p <.01, . Follow-up post hoc comparisons revealed the mean visual recovery for infants in the bimodal sequential condition did not differ from that of the bimodal asynchronous condition of Experiment 4, t(34) =.66, p >.10; however, both the asynchronous and sequential conditions differed from the bimodal synchronous condition of Experiment 1, t(34) = 2.8, p <.01, and t(34) = 3.6, p <.01, respectively. Finally, infants’ initial looking (baseline trials), F(2, 51) = 0.734, p >.1, , and total number of seconds to reach habituation, F(2, 51) = 1.03, p >.1, , did not significantly differ as a function of condition (bimodal synchronous, bimodal asynchronous, bimodal sequential).

The results of Experiments 1, 4, and 5 provide converging evidence that 4-month-olds’ discrimination of affect in Experiment 1 was not a result of stimulating two sense modalities per se or of presenting more information in the bimodal than unimodal conditions. Rather, these findings are consistent with predictions of the intersensory redundancy hypothesis and suggest that 4-month-olds’ discrimination of affect is facilitated by redundant, audiovisual stimulation presented in temporal synchrony. Neither sequential nor asynchronous bimodal auditory and visual stimulation is sufficient for promoting detection of affect at 4 months. Rather, synchronous bimodal stimulation is necessary.

General Discussion

In the present studies, we examined the development of infants’ ability to discriminate happy, sad, and angry affective expressions portrayed by an adult actress in bimodal audiovisual, unimodal auditory, and unimodal visual stimulation. Following habituation to video displays of a woman speaking in a happy, sad, or angry manner, infants received test trials depicting a change in affect. Results of Experiments 1–3 demonstrated that by 4 months of age infants discriminated changes in dynamic affective expressions in bimodal audiovisual stimulation but not in unimodal auditory or unimodal visual stimulation. In contrast, 5- and 7-month-olds discriminated affective expressions in bimodal as well as unimodal stimulation: At 5 months, affect was discriminated in unimodal auditory displays, and at 7 months, in both unimodal auditory and unimodal visual displays. Analyses of the number of individual participants showing positive visual recovery to the affective changes also converged with results of the group data. Thus, sensitivity to bimodal audiovisual information for affect was observed at 4 months and remained stable across age, sensitivity to unimodal auditory information emerged at 5 months, and sensitivity to unimodal visual information emerged at 7 months of age.

We also compared performance across age to assess the nature and significance of developmental change. Significant differences across age in detection of affect were observed in each condition. In bimodal stimulation, visual recovery to the changes in affect was significantly greater at 4, 5, and 7 months than at 3 months. In unimodal auditory stimulation, visual recovery to affective changes was significantly greater at 5 and 7 months than at 4 months, and in unimodal visual stimulation, visual recovery was significantly greater at 7 months than at 4 and 5 months. Thus, infants showed developmental improvements in detection of affect, first under conditions of bimodal stimulation, then vocal stimulation, and finally visual stimulation.

This developmental pattern is consistent with conclusions drawn by Walker-Andrews (1997) from her review of the literature across a variety of studies of infant perception of affect that used a range of methods, stimuli, and conditions. She concluded that development follows a course of differentiation in which infants first learn to perceive the affective expressions of others as part of a unified multimodal event, and over time infants perceive affect in the voice and face alone. The critical information, she suggested, is contained in the dynamic flow, including invariant patterns of change in the face, body, and voice. By 3 to 5 months, recognition of affect emerges in bimodal stimulation, first in familiar and then in unfamiliar contexts and persons (see Kahana-Kalman & Walker-Andrews, 2001; Walker-Andrews, 1997). Specifically, Kahana-Kalman and Walker-Andrews (2001) found that 3.5-month-olds showed intermodal matching for asynchronous happy and sad facial–vocal expressions when those expressions were presented by their own mothers but not for the expressions of an unfamiliar woman. Later, by 5 months, differentiation of affect in voices emerges (A. J. Caron et al., 1988; Walker-Andrews & Lennon, 1991). By approximately 7 months of age, infants can perceive affect in faces and can successfully match faces and voices on the basis of affect (Soken & Pick, 1992; Walker, 1982; Walker-Andrews, 1986), suggesting recognition of affect rather than discrimination based solely on featural differences.

One surprising result in this set of experiments was the lack of evidence for infant preferences for positive over negative emotional expressions. In contrast to prior studies (see Nelson, Morse, & Leavitt, 1979; Serrano, Iglesias, & Loeches, 1992; Walker-Andrews & Grolnick, 1983, for examples), there was no evidence of greater visual recovery to a positive than to a negative emotional expression. Differences in sample size are also an unlikely explanation for this discrepancy, as our results showed no tendency for looking to happy expressions to exceed looking to angry or sad expressions.

The pattern of developmental findings observed in our studies is consistent with predictions generated by the intersensory redundancy hypothesis (Bahrick & Lickliter, 2000, 2004; Bahrick et al., 2004). Amodal information, such as that specifying affect (temporal patterning and intensity contours of audiovisual speech), is detected first in a multimodal context and is later extended to unimodal contexts. In multimodal expressions of affect, synchronous auditory and visual stimulation from faces and voices highlights amodal information for affect and brings this information into the foreground. Later in development, detection of affect is generalized to unimodal auditory and then unimodal visual stimulation. These findings suggest that intersensory redundancy initially promotes infants’ discrimination of information for affect by fostering attentional selectivity to redundantly specified amodal properties of stimulation.

These findings thus provide further evidence that intersensory redundancy promotes infants’ detection and perceptual processing of amodal information during early development. The multimodal facilitation found in the present study has previously been observed in human infants for nonsocial events. For example, young infants detected the rhythm and tempo of an object hitting a surface in bimodal but not in unimodal stimulation (Bahrick et al., 2002; Bahrick & Lickliter, 2000). Avian embryos and chicks show intersensory facilitation for the temporal patterning of a maternal call, demonstrating superior learning and memory for the call when it is synchronized with a flashing light (Lickliter et al., 2002, 2004).

Furthermore, the present developmental findings, in which detection of amodal properties is extended from multimodal to unimodal stimulation over a period of a few months, are also consistent with developmental predictions of the intersensory redundancy hypothesis and with findings on infant perception of nonsocial events (Bahrick & Lickliter, 2004; Lewkowicz, 2004). Although 3- and 5-month-old infants detect changes in tempo and rhythm (respectively) only in bimodal, audiovisual stimulation, older infants of 5 and 8 months detect these changes in tempo and rhythm (respectively) in audiovisual, visual, and auditory stimulation (Bahrick & Lickliter, 2004). Similarly, 4-month-olds detected the temporal order of an event sequence on the basis of audiovisual information but not on the basis of auditory or visual information alone. By the age of 8 months, however, infants detected the temporal order on the basis of audiovisual, auditory, or visual information (Lewkowicz, 2004). Taken together, these findings suggest that intersensory redundancy promotes initial detection of amodal properties of stimulation such as affect, tempo, rhythm, and temporal order in the multimodal stimulation from both social and nonsocial events. After a few months of perceptual experience, perceptual differentiation progresses, attention becomes more flexible, and detection of these properties of events is extended to unimodal stimulation. It is not yet known whether detection of amodal event properties in unimodal stimulation becomes as proficient as in bimodal stimulation or whether detection in bimodal stimulation retains an advantage throughout the lifespan. Studies are currently in progress addressing this question.

Experiments 4 and 5 explored the nature of the intersensory redundancy responsible for facilitating infants’ detection of affect in bimodal stimulation. In Experiment 4, we examined whether temporal synchrony between the audible and visible stimulation was necessary for intersensory facilitation. Alternatively, it was possible that intersensory facilitation resulted from a greater amount of stimulation (audible plus visible) in bimodal than in unimodal auditory or unimodal visual stimulation alone. Infants of 4 and 5 months were habituated to asynchronous versions of the bimodal stimulation and thus received the same type and amount of stimulation as in the synchronous displays. Results of the asynchronous condition revealed no evidence of infant detection of affect at 4 months, and these results differed significantly from those of the 4-month-olds who received synchronous stimulation. By the age of 5 months, however, after unimodal auditory detection of affect had emerged, detection of affect in asynchronous displays was also present. Together, these findings indicate that synchrony is necessary for promoting initial detection of affect at 4 months of age and that the intersensory facilitation found in Experiment 1 for detection of affect in bimodal stimulation at 4 months was not due to infants receiving a greater amount of stimulation than those who received unimodal presentations.

Experiment 5 explored whether stimulation in two different senses without redundancy (i.e., without synchronous alignment of the audible and visible streams) was sufficient for promoting intersensory facilitation. The audible and visible stimulation from the prior studies was presented sequentially, in alternating habituation trials, thus providing the same amount and type of stimulation as in the bimodal condition, without synchrony and without the potential distraction of asynchronous faces and voices. Again, 4-month-olds showed no evidence of detecting a change in affect, and their performance differed significantly from that of infants in the synchronous condition. Thus, stimulation in two sense modalities presented successively is not sufficient for supporting detection of affect at 4 months. On the basis of infant performance in these control studies and its difference from performance in the synchronous exposure condition, we conclude that temporal synchrony between faces and voices plays a critical role in the early detection of affect. The perception of affect is initially promoted in naturalistic conditions of bimodal redundant stimulation in which faces and voices are temporally aligned.

The results of the present study and others supporting predictions of the intersensory redundancy hypothesis (see Bahrick & Lickliter, 2002; Bahrick et al., 2004) provide insight into the nature of infant learning within the context of social interactions. Learning about information that can be specified in multiple sense modalities (involving dimensions such as time, space, or intensity) will likely be advantaged and emerge earlier in development when it is available in multimodal stimulation rather than in unimodal stimulation. Research in the area of joint attention, a potential precursor for social referencing and language development (Dunham, Dunham, & Curwin, 1993; Morales, Mundy, & Rojas, 1998; Tomasello & Farrar, 1986), provides some support for this generalization. For example, infants’ ability to follow gaze toward a target, even if it is outside their immediate visual field, is facilitated when the shift in eye gaze is coordinated with gesture and vocal behavior as compared with eye gaze alone (e.g., Corkum & Moore, 1998; Deák, Flom, & Pick, 2000; Flom, Deák, Pick, & Phill, 2004; Flom & Pick, 2007; Moore, Angelopoulos, & Bennett, 1997). Just as redundant intersensory stimulation promotes infants’ learning and discrimination of amodal information for affect, redundant gesture, gaze, and vocal behavior also appear to promote infants’ ability to look where another is looking.

Similar patterns should be observed for the development of social referencing, in which infants show the ability to use others’ affective cues to guide their own exploratory behaviors toward novel objects or events (E. J. Gibson, 1988; Mumme, Fernald, & Herrera, 1996; Phillips, Wellman, & Spelke, 2002; Sorce, Emde, Campos, & Klinnert, 1985). Social referencing involves perceiving and coordinating a number of components, including perceiving the adult’s affective expression, perceiving the novel object or event, relating the affect to the object or event, and regulating one’s exploratory behavior on the basis of the perceived affect–object relation. Our findings suggest that the salience of the affect and of the novel object or event should be enhanced when each is dynamic and multimodally specified. This should provide a firmer basis for detecting the affect–object relation and, in turn, for regulating exploratory behavior. Consistent with this view, research suggests that social referencing emerges in somewhat younger infants when the affect, the object, or both are depicted multimodally than when unimodal objects or emotional expressions are presented. For example, infants showed evidence of social referencing as early as 10 months of age when a dynamic, audiovisual object, such as a toy robot, was presented along with multimodal affective expressions (e.g., Hornik, Risenhoover, & Gunnar, 1987; Walden & Ogan, 1988). In contrast, 12-month-olds, but not 10-month-olds, showed social referencing when the novel object was unimodal visual and static (Mumme & Fernald, 2003).