Abstract

Vital physiological behaviors exhibited daily by bacteria, plants, and animals are governed by endogenous oscillators called circadian clocks. The most salient feature of the circadian clock is its ability to change its internal time (phase) to match that of the external environment. The circadian clock, like many oscillators in nature, is regulated at the cellular level by a complex network of interacting components. As a complementary approach to traditional biological investigation, we utilize mathematical models and systems theoretic tools to elucidate these mechanisms. The models are systems of ordinary differential equations exhibiting stable limit cycle behavior. To study the robustness of circadian phase behavior, we use sensitivity analysis. As the standard set of sensitivity tools is not suitable for the study of phase behavior, we introduce a novel tool, the parametric impulse phase response curve (pIPRC).

I. Introduction

Biological entities, such as living cells, are dynamical systems comprised of a complex network of interacting components [1]. Highly evolved control mechanisms produce robust physiological behaviors, e.g. the time-keeping ability of circadian clocks. Systems biologists reverse-engineer such systems by identifying the components and studying the dynamics of their interactions [2]. Mathematical models, often formulated as ordinary differential equations (ODEs), are used to formalize the system kinetics. Model simulation and analysis, coupled with experimentation, elucidate biological design principles. Our interest lies in model analysis, for which system-theoretic tools are natural candidates [1], [3].

The principle of robustness provides a clear connection between biological and systems theory [4]. A key property of biological systems is robust performance in the presence of environmental variation - a specific functionality is maintained despite the presence of specific perturbations [5]. Both biological and engineered systems will be robust to expected disturbances, but fragile to rare, or unexpected disturbances. Robustness such as that displayed by biological systems is accompanied by increased complexity [6], thus eliminating the use of intuition as an effective means of elucidating design principles. The systems must be subjected to formal systems analysis tools.

In the case of ODE models, robustness is generally studied in the context of parametric uncertainty via properties such as robust stability [7] and response to perturbation. Although there is no standard quantitative measure of robustness [5], [8], a common thread in the literature relates robustness to parametric sensitivity. Parameters whose perturbation causes undesirable changes (either qualitative or quantitative) in some output identify processes with fragility [9]. The sensitivity of a system to its parameters may be determined using global [10] or local [11] methods. Our interest is in the latter, utilizing formal sensitivity analysis to quantify the local robustness properties of a system.

Sensitivity analysis has been used to study the response of a state variable (such as a component concentration) to a stimulus or perturbation to a parameter (such as a rate constant). High sensitivity (i.e. large changes in state values) to parametric perturbation indicates fragility, whereas low sensitivity indicates robustness [11]. In works such as [11] and [12], the regulatory processes have been rank-ordered from those with the least sensitivity to those with the highest sensitivity. In all cases, it was found that the rank-ordering was dependent on network structure, rather than on a particular choice of parameters. This strongly suggests that studying the sensitivity properties of a model does, in fact, aid in the analysis of the robustness properties of the biological system itself.

Oscillators form a class of biological systems that requires special attention. Many rhythmic processes such as the cell division cycle, the periodic firing of pacemaker neurons, and the daily sleep/wake cycle are regulated at the cellular level. Complex regulatory networks form the mechanisms for robust endogenous oscillations. For oscillators, maintenance under perturbation of oscillation properties such as amplitude or period may be expected. In the case of the circadian clock - the pacemaker governing daily rhythms - the maintained behavior is significantly more sophisticated. Organisms across the kingdoms possess the highly conserved circadian clock. This clock was designed not to maintain perfect 24 hour rhythms, but rather to maintain approximately 24-hour rhythms and to coordinate its internal time (phase) with the environment (external time). A circadian clock responds robustly to corrective signals such as light. This enables entrainment, by which the clock anticipates the arrival of dawn, and light arrives at the appropriate internal time to correct its mismatch with the environment. To study this phenomenon in vivo, phase response curves (PRCs) have been collected [13], describing a clock's phase-dependent response to short-lived stimuli such as pulses of light.

The goal of the present work is to provide a semi-analytical means to study the robustness properties of the phase behavior of a mathematical model of an oscillator. Standard sensitivity measures fail to capture the phase sensitivity [14] (and, therefore, the robustness properties of phase), motivating us to develop phase-based sensitivity measures. We expand the set of sensitivity measures to include the parametric impulse phase response curve (pIPRC), which characterizes the phase behavior of any limit cycle system and its response to stimuli.

To illustrate the derivation and demonstrate the utility of the pIPRC, we analyze a model of the circadian clock in an isolated cell of the mammalian master clock. In Section III we develop a theoretical basis for the study, and in Section IV, we demonstrate the relationship between theory and numerical experiment. In our study, we find that even though the pIPRC is a local measure, it is a powerful predictor for large perturbations.

Although the present work studies the response of a single oscillator to a stimulus, future work will utilize pIPRCs to study a collection of coupled oscillators that communicate via intercellular signals (which ultimately manifest as parametric modulation). Synchronization (as the result of such signaling) has recently presented itself as an important area of circadian research. Evidence is mounting that the circadian clock in a mouse is composed of a collection of sloppy cellular oscillators that use intercellular signaling to synchronize and create a coherent oscillation [15]. Without using parametric-based signaling, the study of weakly connected neural oscillators has led to a well-developed theory of interactions [16], [17]. The pIPRC provides an entrée into this related, but not otherwise directly applicable, theory.

II. Mathematical Model of Circadian Oscillator

A. Limit Cycle System

The analysis methods presented in this work apply to any oscillatory system that can be represented as a system of ODEs exhibiting limit cycle behavior.

Consider a set of autonomous nonlinear ordinary differential equations

$$\dot{x}(t) = f(x(t), p) \qquad (1)$$

where $x \in \mathbb{R}^n$ is the vector of states and $p \in \mathbb{R}^m$ is the vector of (constant) parameters. Assume there is a hyperbolic, stable attracting limit cycle γ. Let xγ(t,p) be the solution to (1) on the limit cycle. It is τ-periodic, meaning xγ(t,p) = xγ(t + τ,p). In the interest of succinct notation, in the remainder of this paper we will be writing x(t,p) as a function of the independent variable, t, only.
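As a minimal sketch of the setting in (1), the following Python fragment integrates a small limit cycle system and checks the two properties assumed above: attraction to γ and τ-periodicity on γ. The two-state oscillator, the function names, and the parameter value are illustrative assumptions; this is not the clock model of Section II-B.

```python
import math

def f(x, p):
    # Hypothetical 2-state oscillator (NOT the clock model). In polar form:
    # r' = r(1 - r^2), theta' = p, so the limit cycle is the unit circle
    # and the period is tau = 2*pi/p.
    x1, x2 = x
    r2 = x1 * x1 + x2 * x2
    return (x1 * (1.0 - r2) - p * x2, x2 * (1.0 - r2) + p * x1)

def rk4_step(x, p, h):
    # One classical fourth-order Runge-Kutta step for dx/dt = f(x, p).
    k1 = f(x, p)
    k2 = f(tuple(a + 0.5 * h * b for a, b in zip(x, k1)), p)
    k3 = f(tuple(a + 0.5 * h * b for a, b in zip(x, k2)), p)
    k4 = f(tuple(a + h * b for a, b in zip(x, k3)), p)
    return tuple(a + h / 6.0 * (b + 2.0 * c + 2.0 * d + e)
                 for a, b, c, d, e in zip(x, k1, k2, k3, k4))

def simulate(x0, p, t_end, h=0.01):
    # Integrate from x0 over [0, t_end] using a step size close to h.
    n = max(1, int(round(t_end / h)))
    step = t_end / n
    x = tuple(x0)
    for _ in range(n):
        x = rk4_step(x, p, step)
    return x

tau = 23.3                     # period in hours, chosen to match the text
p = 2.0 * math.pi / tau        # assumed parameter value giving that period
off_cycle = simulate((0.2, 0.0), p, 5 * tau)  # initial condition off the cycle
on_cycle = simulate((1.0, 0.0), p, tau)       # initial condition on the cycle

print("radius after transient:", math.hypot(*off_cycle))
print("position after one period:", on_cycle)
```

The first trajectory starts inside the cycle and is expected to settle onto it; the second starts on the cycle and is expected to return to its starting position after one period.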

B. Circadian Clock Model

The molecular mechanism of the circadian clock in a mouse resides in the cells of the suprachiasmatic nucleus of the hypothalamus in the brain. The clockworks in each cell are comprised of genes, mRNA, and proteins, forming a complex network of transcriptional feedback loops [18]. As in engineered systems, interlocking negative and positive feedback loops allow for stable oscillations.

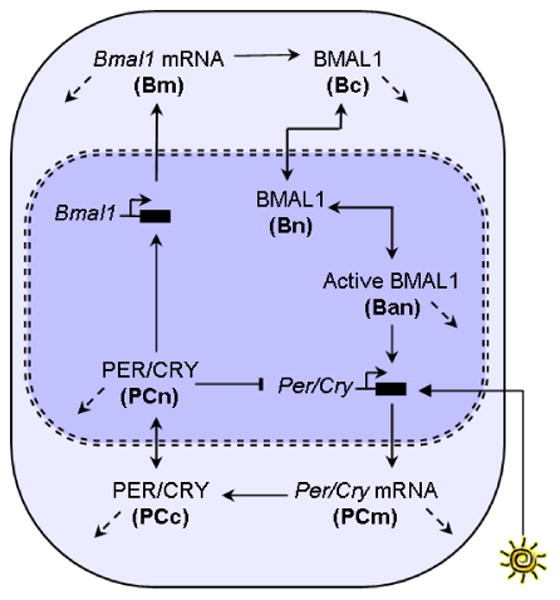

We use the single cell model presented in [19]. Genes with redundant roles - the cryptochrome and period genes Cry1, Cry2, Per1, and Per2 - are combined into a single entity (Per/Cry). Per/Cry mRNA and protein are involved in an auto-regulatory negative feedback loop. An additional gene, Bmal1, codes for the BMAL1 protein, which promotes transcription of Per/Cry. PER/CRY protein, in turn, promotes Bmal1 transcription, forming the positive feedback loop (Figure 1). To study the effects of light, we utilize the light input gate added in [20]. The response to light is similar to the experimental phase response recorded for nocturnal animals [21]. The light input is modeled using parameter L. The gate allows for light input to up-regulate Per/Cry transcription in a phase-dependent manner - it prevents light from entering the system when the nuclear PER/CRY (PCn) is in its trough, which is in the middle of the daytime.

Fig. 1.

Circadian clock model network. The diagram is adapted from Fig 1 in (Geier et al, 2005). The bold letters denote the states. The solid arrows indicate positive influence. For example, greater concentration (up regulation) of Bmal1 mRNA causes greater concentration of cytoplasmic BMAL1. The flat-headed arrows indicate negative influence. For example, nuclear PER/CRY inhibits the transcription of Per/Cry mRNA. The dashed arrows indicate degradation. There is a negative feedback loop formed by the mRNA and protein versions of Per/Cry. Interlocked with it is a positive feedback loop involving Bmal1 mRNA, BMAL1 protein, and Per/Cry. Light enters the system by modulating the transcription rate of Per/Cry mRNA (PCm). In this study, we use the gating function associated with nocturnal animals.

The model is given by

where Per/Cry mRNA transcription is down-regulated by nuclear PER/CRY and up-regulated by active nuclear BMAL1 (Ban) according to

and Bmal1 mRNA transcription is up-regulated by nuclear PER/CRY according to

The parameter values are set as in [20], resulting in a period of oscillation τ of 23.3 hours.

For the purposes of the current investigation, we consider constant darkness (L = 0) to be the nominal configuration for the system. We study the endogenous oscillations, which have a period τ = 23.3 hours. In Figure 2, the oscillations are plotted as a function of time t. In the remaining figures, we plot the results using “circadian time” (CT). It is common practice to scale data (particularly phase response curves) to show a period of 24 hours [13]:

$$\mathrm{CT} = \frac{24}{\tau}\, t$$

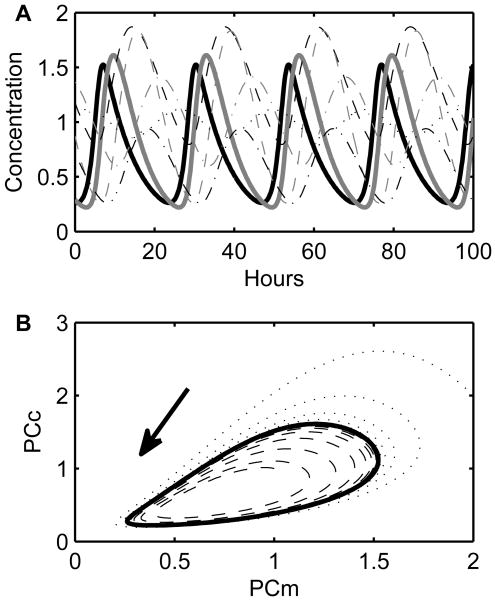

Fig. 2.

Circadian clock model dynamics. (A) A simulation in constant darkness is shown as a function of time. Per/Cry mRNA (PCm) and cytoplasmic PER/CRY (PCc) are shown with the thick lines. (B) The same simulation is shown in phase space (solid line). The dotted and dashed lines represent additional simulations with initial conditions off the periodic orbit (limit cycle). They evolve to the limit cycle, demonstrating that the system has a stable attracting limit cycle.

In addition, we generate all figures such that time 0 corresponds to the internal time associated with dawn. The definitions of internal time (phase) and dawn will be discussed in more detail in Section III-B; briefly, dawn is equivalent to the position on the nominal limit cycle that is passed 7 hours before Per/Cry mRNA peaks. Because there is no light in the nominal system, there is no “daytime” or “nighttime”, and we refer to subjective day (0 to 12 circadian hours after dawn) and subjective night (12 to 24 circadian hours after dawn).
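The scaling to circadian time and the subjective day/night convention can be sketched in a few lines of Python. The function names are hypothetical, time is assumed to be measured from dawn, and assigning exactly 12 circadian hours to subjective night is an arbitrary choice made here for illustration.

```python
TAU = 23.3  # free-running period in hours, as stated in the text

def to_circadian_time(t_hours, tau=TAU):
    # Scale elapsed time so that one period spans 24 circadian hours.
    return t_hours * 24.0 / tau

def subjective_label(ct):
    # Label a circadian time measured from dawn (CT 0).
    # Assumption: CT in [0, 12) is subjective day, [12, 24) is subjective night.
    return "subjective day" if (ct % 24.0) < 12.0 else "subjective night"

one_period_ct = to_circadian_time(TAU)
print(one_period_ct, subjective_label(7.0), subjective_label(13.0))
```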

III. Phase Sensitivity Measures

The phase sensitivity measures presented in this section provide a unified framework within which an oscillatory system such as the circadian clock can be studied.

A. Classical Sensitivity Analysis

Classical sensitivity analysis considers the effect at time t on a state value xi of perturbing (at time 0) a parameter pj. The matrix $S(t) = (S_{ij}(t)) \in \mathbb{R}^{n \times m}$ of sensitivity coefficients

$$S_{ij}(t) = \frac{\partial x_i(t)}{\partial p_j}$$

is computed by solving the ODE system

$$\frac{dS(t)}{dt} = J(x(t))\, S(t) + \frac{\partial f(x(t), p)}{\partial p}$$

where $J(x(t)) \in \mathbb{R}^{n \times n}$ is the Jacobian,

$$J_{ij}(x(t)) = \frac{\partial f_i(x(t), p)}{\partial x_j}$$

[22].

The sensitivity coefficients capture the dynamics of the response to parametric perturbation. The information contained in S involves the overall sensitivities. For oscillatory systems, this means that S contains information about the change in amplitude, change in limit cycle shape, change in period, and change in phase behavior. Discriminating between the sensitivity of each feature is an important endeavor. Contributions in the form of limit cycle shape, amplitude, period, and phase sensitivities have been made in [23], [24], [25], [26], [27], and [14]. Here, we build on the work in [24] and [27] where the authors capitalize on dynamical systems theory.
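To make the computation of S concrete, the sketch below integrates the states and the sensitivity coefficients together for a hypothetical two-state oscillator whose parameter p sets the angular speed. The oscillator and all names are assumptions chosen because the sensitivities on its limit cycle are known in closed form, S(t) = (−t sin(pt), t cos(pt)); it is not the clock model.

```python
import math

def augmented_rhs(z, p):
    # z = (x1, x2, S1, S2): states and their sensitivities to p.
    # Toy oscillator (assumption): r' = r(1 - r^2), theta' = p.
    x1, x2, s1, s2 = z
    r2 = x1 * x1 + x2 * x2
    f1 = x1 * (1.0 - r2) - p * x2
    f2 = x2 * (1.0 - r2) + p * x1
    # Jacobian entries J_ij = df_i/dx_j.
    j11 = 1.0 - 3.0 * x1 * x1 - x2 * x2
    j12 = -2.0 * x1 * x2 - p
    j21 = -2.0 * x1 * x2 + p
    j22 = 1.0 - x1 * x1 - 3.0 * x2 * x2
    # dS/dt = J S + df/dp, with df/dp = (-x2, x1).
    ds1 = j11 * s1 + j12 * s2 - x2
    ds2 = j21 * s1 + j22 * s2 + x1
    return (f1, f2, ds1, ds2)

def rk4(rhs, z, p, t_end, h=0.001):
    # Classical fourth-order Runge-Kutta integration over [0, t_end].
    n = max(1, int(round(t_end / h)))
    step = t_end / n
    for _ in range(n):
        k1 = rhs(z, p)
        k2 = rhs(tuple(a + 0.5 * step * b for a, b in zip(z, k1)), p)
        k3 = rhs(tuple(a + 0.5 * step * b for a, b in zip(z, k2)), p)
        k4 = rhs(tuple(a + step * b for a, b in zip(z, k3)), p)
        z = tuple(a + step / 6.0 * (b + 2.0 * c + 2.0 * d + e)
                  for a, b, c, d, e in zip(z, k1, k2, k3, k4))
    return z

p, t_end = 1.0, 5.0
# Start on the limit cycle with S(0) = 0 (initial state independent of p).
x1, x2, s1, s2 = rk4(augmented_rhs, (1.0, 0.0, 0.0, 0.0), p, t_end)
exact = (-t_end * math.sin(p * t_end), t_end * math.cos(p * t_end))
print("numerical S:", (s1, s2))
print("closed form:", exact)
```

In this toy system the magnitude of S grows linearly with t even though the orbit itself is unchanged in shape, which illustrates why S mixes period and phase effects with amplitude and shape effects.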

We consider the sensitivity of the system on the limit cycle. In later derivations, we will use the sensitivity coefficient

To assess the time-keeping ability of a limit cycle clock, we examine the sensitivity of its phase to parametric and state perturbations. In the circadian pacemaker, the phase identifies the time of day of the internal clock. Before we can venture further, a precise definition of phase is required.

B. Phase

To display and analyze experimental data, the phase of a biological oscillator is determined by associating a specific phase to a specific event, such as the electrical spike of a firing neuron, or the peak of concentration of Per mRNA in the circadian clock. To study such a system as a limit cycle, we use a similar approach. Each position xγ along the limit cycle γ is associated with a phase φ. The mapping is constructed so that progression along γ (in the absence of perturbation) produces a constant increase in φ [28]. Thus, the standard definition for φ is expressed in terms of its evolution through time, via a differential equation [16], [28], [29]. For an unperturbed system, the phase on the limit cycle is the solution to the initial value problem,

$$\frac{d\phi(x^\gamma(t), t)}{dt} = 1, \qquad \phi(x^\gamma_0, 0) = 0 \qquad (2)$$

where $x^\gamma_0$ is a reference position on the limit cycle γ [16], [28]. For a more in-depth discussion of φ defined in the presence of perturbations, see [16].

As written, the solution to (2) will grow unbounded with time. In this sense, it is a function of both time and position. For example,

$$\phi(x^\gamma(t + k\tau),\, t + k\tau) = \phi(x^\gamma(t),\, t) + k\tau$$

where $k \in \mathbb{Z}$. It is, however, common practice to bound phase such that φ ∈ [0,τ). When the bounds are applied, phase and position have a one-to-one relationship and the phase is a function of position only, i.e. φ(xγ(t,p)). For the derivation of the sensitivity measures in the following sections, we leave phase unbounded.

Remark 1: We note that some other researchers choose the righthand side of (2) to be a constant other than 1. This simply leads to a scaled φ. If bounded, its image is in another interval, such as [0,2π).

Consider a neighborhood U containing γ within its basin of attraction. To define phase within U, we use the concept of isochrons [30].

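Definition 1 (Isochron): Let xγ(0) be a point on the periodic orbit. The isochron associated with xγ(0) is the set of all initial conditions η(0) such that

$$\lim_{t \to \infty} \lVert x(t) - x^\gamma(t) \rVert = 0,$$

where x(t) is the solution to (1) with x(0) = η(0).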

An isochron is a “level set” [16] or “same-time locus” [30]; all points on a single isochron will approach the same position on the limit cycle, and therefore all share the same phase. In an n-dimensional system, an isochron will be an (n − 1)-dimensional hyper-surface [30], [31]. Therefore, it is helpful to use small systems to visualize isochrons. Figure 3 shows two isochrons in a 2-state circadian clock model [32]. They intersect the limit cycle at the peak of the first state, and at the position encountered 7 hours later. For any solution x(t) ∈ U to (1), x(t) is on some isochron η(t). The phase of x(t) is defined by the phase of the position on the limit cycle sharing the same isochron; that is, if x1 = η(t) ∩γ, then φ(x(t),t) ≡ φ(x1,t). Clearly, if φ is bounded by the period, then φ is a function of position only and φ(x(t)) ≡ φ(x1). Because all solutions on the same isochron share the same phase (modulo the period), we can rewrite (2) in terms of x ∈ U

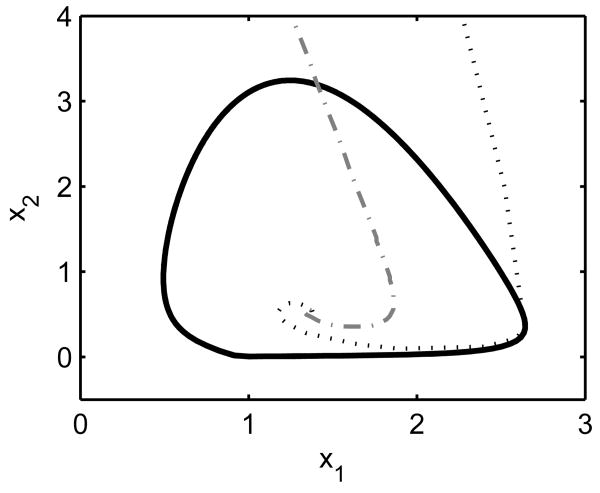

Fig. 3.

Limit cycle with isochrons. The limit cycle is for a 2-state system in which a solution will move around the limit cycle in a counter-clockwise direction. The isochron represented by the dotted line intersects the limit cycle at the peak of state x1. The isochron represented by the dashed-dotted line intersects the limit cycle at a position encountered 7 hours later, i.e. if the first isochron is η(t1), then this isochron is η(t1 + 7). Any initial conditions chosen along the same isochron will approach the same position on the limit cycle as time evolves.

$$\frac{d\phi(x(t), t)}{dt} = 1, \qquad x(t) \in U \qquad (3)$$

For the circadian clock model under investigation, phase is defined such that φ is 7 at the peak of Per/Cry mRNA's oscillation [33]. Dawn is defined such that φ is 0, and is associated with the position on the limit cycle encountered 7 hours earlier.
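The isochron definition suggests a direct numerical recipe for the phase of a point in U: integrate forward for a whole number of periods and read off the position reached on the limit cycle. The sketch below applies this to a hypothetical two-state oscillator whose isochrons are known to be rays from the origin, so two initial conditions on the same ray should receive the same phase. The oscillator, the choice of φ = 0 at the point (1, 0), and all function names are assumptions for illustration.

```python
import math

def f(x, p):
    # Toy oscillator (assumption): r' = r(1 - r^2), theta' = p.
    x1, x2 = x
    r2 = x1 * x1 + x2 * x2
    return (x1 * (1.0 - r2) - p * x2, x2 * (1.0 - r2) + p * x1)

def simulate(x0, p, t_end, h=0.01):
    # Classical RK4 integration of dx/dt = f(x, p) over [0, t_end].
    n = max(1, int(round(t_end / h)))
    step = t_end / n
    x = tuple(x0)
    for _ in range(n):
        k1 = f(x, p)
        k2 = f(tuple(a + 0.5 * step * b for a, b in zip(x, k1)), p)
        k3 = f(tuple(a + 0.5 * step * b for a, b in zip(x, k2)), p)
        k4 = f(tuple(a + step * b for a, b in zip(x, k3)), p)
        x = tuple(a + step / 6.0 * (b + 2.0 * c + 2.0 * d + e)
                  for a, b, c, d, e in zip(x, k1, k2, k3, k4))
    return x

def asymptotic_phase(x0, p, n_periods=5):
    # After a whole number of periods a point on the cycle returns to itself,
    # so the end point identifies the cycle position sharing x0's isochron.
    tau = 2.0 * math.pi / p
    x = simulate(x0, p, n_periods * tau)
    # Bounded phase in [0, tau), with phase 0 assigned to the point (1, 0).
    return (math.atan2(x[1], x[0]) / p) % tau

p = 2.0 * math.pi / 24.0
angle = 1.0  # radians; both points below lie on the same ray
inner = (0.3 * math.cos(angle), 0.3 * math.sin(angle))
outer = (1.7 * math.cos(angle), 1.7 * math.sin(angle))
phase_inner = asymptotic_phase(inner, p)
phase_outer = asymptotic_phase(outer, p)
print(phase_inner, phase_outer)
```

For a model such as the clock network, the isochrons are not available in closed form, and a forward-integration recipe of this kind would have to be combined with a phase marker such as the peak of a chosen state.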

C. Phase Sensitivity to State Perturbations

If the nominal system is disrupted, then its phase evolution will depart from the nominal. We begin by considering a perturbation to a state value at time t; the perturbed solution may jump off the isochron η(t), incurring a phase shift [16]. As time progresses, the solution will approach the limit cycle, but the phase shift will cause it to be at a different position along the limit cycle than it would have been if it had remained unperturbed. To measure the phase shift caused by an infinitesimal perturbation, we use the state impulse phase response curve (sIPRC). It is a vector equation sIPRC = (sIPRCk), where

$$\mathrm{sIPRC}_k(t) = \frac{\partial \phi}{\partial x_k}(t).$$

The sIPRC appears in the literature associated with weakly connected neural networks, where it is most commonly referred to as z. There are multiple derivations of the curve's formula. Summarizing the derivation in [24], [27], we begin with the adjoint Green's function

where J is the system Jacobian evaluated on the limit cycle. It is integrated backwards from final time t = T to time t = 0 from initial conditions

The solution to the adjoint Green's function converges to a unique, stable limit cycle (periodic with the same period as (1)) ξ as T → ∞ [24], [29]. It represents the sensitivity of the final state values to perturbations in previous state values. We express this interpretation in the form of partial derivatives, with the perturbation as the denominator and the observed effect in the numerator:

The key insight presented in [24] is that the phase shift, which in their terminology is ∂tˆ, depends upon earlier state values. This dependence is determined by any one row of the adjoint variable [16], [24], according to

| (4) |

Because when T ≫ t, we know that is τ-periodic in the limit as T → ∞. Therefore, is truly a function of position and should be written as such. Intuitively, this is clear because the phase response is dependent upon the position along the system limit cycle γ at which the perturbation occurs. Thus, the equation is more precisely expressed as

To write the sIPRC in our notation, we use (2), i.e.

The formula for the sIPRC to the kth state [24] is therefore

This formula is best interpreted by an examination of flow through the isochrons. Suppose that at time t (when the state value is on isochron η(t)), an instantaneous infinitesimal change is made to xγ(t). The solution to the perturbed system (xγ(t) + ∂x) will advance to isochron η(t̃). If t̃ ≠ t, then the system will have incurred a phase shift (t̃ > t is an advance and t̃ < t is a delay). If the system is not subject to any further perturbation, this phase shift (t̃ – t) will be locked in because dφ/dt = 1 in the neighborhood U. It can then be “measured” in the limit as the state trajectory reaches the limit cycle [16]. Recall that predicts the change in state value at final time T. In the limit as ∂xγ(T) → 0, fi(xγ(T)) will accurately describe the speed of the trajectory as it travels from to . Dividing the distance dxi(T) by the speed fi(xγ(T)) produces the travel time, our phase shift.

Remark 2: In practice, the limit (T → ∞) can be approximated. Typically, it takes fewer than three periods for the adjoint solution to converge to its limit cycle.

Remark 3: In [24] a negative sign is introduced to (4). We leave it out in order to maintain consistency with the circadian literature. Phase response curves are plotted such that phase advances are positive [13], and a positive value in the sIPRC (as we have presented it) predicts a phase advance.

Remark 4: The computation can be handled efficiently. Because only one row of the adjoint solution is required, we solve a vector, rather than matrix equation. This solution can be computed using a standard solver, but effort will be reduced if a package such as the DASPKAdjoint is used [34], [35].

Figure 4 shows the results of a numerical experiment in which a small perturbation is made to the state representing Per/Cry mRNA (PCm) in the clock model. We use the fact that

$$\Delta\phi \approx \frac{\partial \phi}{\partial x_k}\, \Delta x_k$$

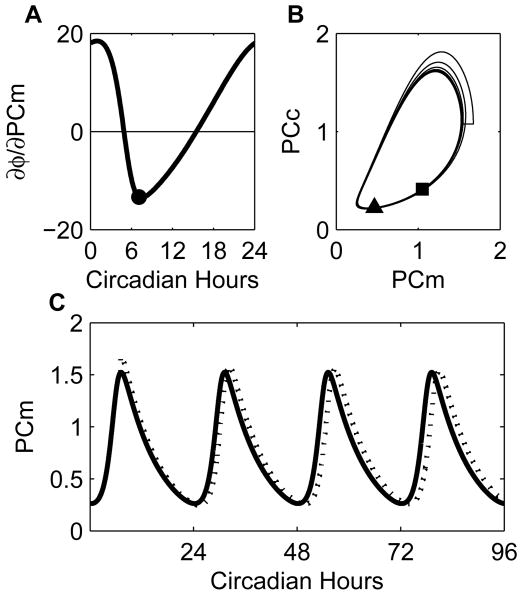

Fig. 4.

System under a state perturbation. (A) The state IPRC for the state representing Per/Cry mRNA (PCm) shows early morning advances and midday delays. To illustrate its utility we perform a numerical experiment using the prediction of the phase shift due to a perturbation in PCm at CT7.1 (circle). Since ∂φ/∂PCm = −13.351 at CT7.1, we predict that a positive perturbation of 0.15 in PCm at CT7.1 will cause a phase shift Δφ ≈ −13.351 · 0.15 = −2.0027, which means there will be a 2-circadian hour delay. (B) In phase space, we show the concentrations of PCm and cytoplasmic PER/CRY (PCc) to show the limit cycle behavior of the numerical experiment. There is a clear deviation from the limit cycle when the perturbation is introduced. The square marks the position of the unperturbed system at CT293 while the triangle marks the position of the perturbed system at the same time. Since the flow about the limit cycle is counter-clockwise, this indicates the perturbed system is lagging. (C) Here we show the same data, but as a function of time. The concentration of PCm in the perturbed system (dotted line) peaks after that in the unperturbed system (solid line) and we observe a 1.9-circadian hour delay.

to illustrate the predictive power of the sIPRC. For ΔPCm = 0.15 (which is about 7% of the amplitude of PCm's oscillation) administered at φ = 7, the prediction is a delay of 2 circadian hours, while the observed delay is 1.89 circadian hours. For a highly non-linear system, a prediction error of about 0.1 circadian hours is small. When we consider parametric perturbations, we find that the state values themselves are not changed much. This is, in part, due to the fact that the model under consideration is generally robust to parametric perturbation (the classical sensitivity coefficients are small) (data not shown). The consequence is that we can consider large parametric perturbations and find that predictions are very accurate.
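A numerical experiment of the same kind can be sketched on a toy system. The fragment below uses a hypothetical two-state oscillator with a 24-hour period, for which the phase gradient is known analytically, predicts the phase shift from the first-order relation Δφ ≈ sIPRCk · Δxk, and compares it with the shift observed in simulation. The oscillator, the perturbation size, and the function names are assumptions; the numbers it produces are unrelated to those reported for the clock model.

```python
import math

def f(x, p):
    # Toy oscillator (assumption): r' = r(1 - r^2), theta' = p.
    x1, x2 = x
    r2 = x1 * x1 + x2 * x2
    return (x1 * (1.0 - r2) - p * x2, x2 * (1.0 - r2) + p * x1)

def simulate(x0, p, t_end, h=0.01):
    # Classical RK4 integration of dx/dt = f(x, p) over [0, t_end].
    n = max(1, int(round(t_end / h)))
    step = t_end / n
    x = tuple(x0)
    for _ in range(n):
        k1 = f(x, p)
        k2 = f(tuple(a + 0.5 * step * b for a, b in zip(x, k1)), p)
        k3 = f(tuple(a + 0.5 * step * b for a, b in zip(x, k2)), p)
        k4 = f(tuple(a + step * b for a, b in zip(x, k3)), p)
        x = tuple(a + step / 6.0 * (b + 2.0 * c + 2.0 * d + e)
                  for a, b, c, d, e in zip(x, k1, k2, k3, k4))
    return x

def wrapped_diff(a, b, tau):
    # Difference a - b wrapped into [-tau/2, tau/2).
    return (a - b + tau / 2.0) % tau - tau / 2.0

tau = 24.0
p = 2.0 * math.pi / tau
x_on = (0.0, 1.0)   # point on the limit cycle at which the state is perturbed
delta = 0.15        # perturbation added to the first state

# For this toy, phi = atan2(x2, x1) / p, so d(phi)/d(x1) = -x2 / (p * r^2).
sIPRC_1 = -x_on[1] / (p * (x_on[0] ** 2 + x_on[1] ** 2))
predicted = sIPRC_1 * delta

# Observed shift: let both trajectories relax for three periods, then compare.
end_nominal = simulate(x_on, p, 3 * tau)
end_perturbed = simulate((x_on[0] + delta, x_on[1]), p, 3 * tau)
observed = wrapped_diff(math.atan2(end_perturbed[1], end_perturbed[0]) / p,
                        math.atan2(end_nominal[1], end_nominal[0]) / p, tau)

print("predicted shift (h):", predicted)
print("observed shift (h):", observed)
```

Negative values indicate delays, following the sign convention of Remark 3.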

D. Phase Sensitivity to Parametric Perturbations

Predicting the phase change incurred by a parametric perturbation involves a complicating factor - the isochrons are altered in the perturbed vector field. In this section, we continue to use isochrons in the nominal system as the reference for phase behavior. For this to be a legitimate approach, we must therefore consider the state of the system after a parametric perturbation has ceased and the system has returned to the nominal flow. We begin by predicting the response to a sustained perturbation, move to a short, but finite duration perturbation, and finish by considering an impulse perturbation.

1) Cumulative Phase Sensitivity

Applying the chain rule to the phase variable produces

$$\frac{d\phi}{dp_j}(t) = \sum_{k=1}^{n} \frac{\partial \phi}{\partial x_k}(t)\, \frac{\partial x_k}{\partial p_j}(t).$$

The phase sensitivity is measured in reference to the isochron η(t) of the nominal system. To interpret the formula, we examine the terms from right to left. The term $\partial x_k / \partial p_j$ is the classical sensitivity coefficient, which describes the effect upon state xk at time t due to a constant parametric perturbation to parameter pj initiated at time 0. The term $\partial \phi / \partial x_k$ is simply the sIPRCk, which describes the effect that a state perturbation has upon the phase. If the parametric perturbation is lifted at time t, then the vector field will return to its nominal configuration, and the formula will predict the incurred phase shift. Therefore, the phase sensitivity at time t predicts the phase shift incurred by a parametric perturbation sustained until time t. As time progresses, the phase changes accumulate, so we refer to the curve as the cumulative phase sensitivity.

The behavior is best explained in terms of isochrons. Consider a system with initial conditions $x^\gamma_0$ on the limit cycle. Under the nominal flow, it will be on isochron η(t1) at time t1. If a parameter is perturbed (beginning at time 0), then the trajectory will deviate from the nominal trajectory and the system will be on an isochron η(tˆ1) (with respect to the nominal system). The perturbed system is then t1 − tˆ1 time units phase-shifted from the nominal system. If the perturbation is released at that moment (time t1), then the flow will return to nominal and the phase shift will be locked in (because dφ/dt = 1 in U). For a graphical illustration, see Figure 2 in [24] or Figure 3 in [27].

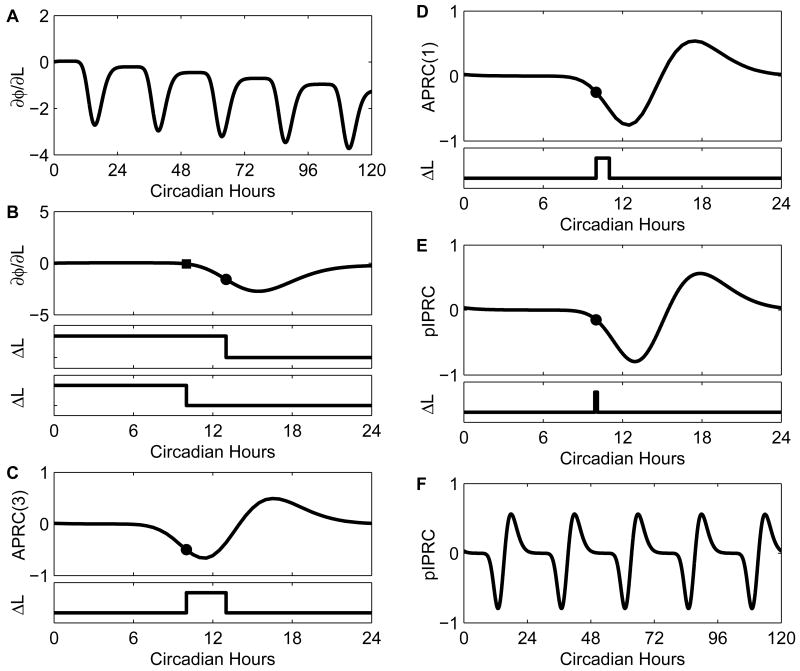

Figures 5A and B show the cumulative phase sensitivity to the parameter associated with light (L). Within each period, light has varying effects, with maximal delays in the early subjective evening (see Figure 5B). The overall effect during a single period is to slow down the system (dφ/dL(τ) < dφ/dL(0)). Over multiple periods, the effect accumulates, resulting in larger delays, as shown by the negative trend in Figure 5A.

Fig. 5.

Relationship between parametric phase sensitivity, analytical phase response curves (APRCs), and parametric impulse PRCs (pIPRCs). (A) The parametric phase sensitivity to infinitesimal perturbations in light (L) grows with time. Each point on the phase sensitivity curve can be used to predict the delay or advance that will be incurred due to the release of a sustained perturbation in the parameter L. The overall negative slope indicates that, in general, the longer L remains perturbed, the more delayed the system will be. (B) We show the parametric phase sensitivity over the first period. The values at CT10 (filled square) and CT13 (filled circle) predict the accumulated phase shifts between CT0 and CT10, and between CT0 and CT13, respectively. The corresponding perturbation shapes are illustrated in the lower panels. We observe that the phase changes accumulated between CT10 and CT13 can be predicted by subtracting dφ/dL(13) − dφ/dL(10). (C) The analytical PRC, APRC(t,3), predicts the shift due to a 3-circadian hour pulse. To compute APRC(t,3), we subtract dφ(t + 3)/dL − dφ(t)/dL and divide by 3. The lower panel shows the trace of the L perturbation (the signal) to which the APRC at CT10 predicts the response. (D) APRC(1) is the analytical PRC to a 1-circadian hour pulse. The pulse starting at CT10 is highlighted. As the pulses get shorter in duration, the APRC approaches the slope of the phase sensitivity. (E) The IPRC is the slope of the phase sensitivity and it predicts the phase shift in response to an impulse perturbation - a pulse that is infinitesimal in both duration and magnitude. The prediction associated with an impulse at CT10 is highlighted and the pulse shape is shown in the lower panel. (F) The parametric IPRC is periodic.

2) Phase Sensitivity and Square Pulse Perturbations

For oscillating systems in general, and the circadian clock in particular, a more useful measure is the response not to a sustained, or step, perturbation, but to a pulsatile perturbation. There is a rich history of biological experimentation in which animal circadian clocks are studied by subjecting the animals (otherwise kept in constant darkness) to square pulses of light [13]. To supply an analytical curve predicting the response to a square pulse perturbation, Gunawan and Doyle made the observation that the cumulative phase sensitivity curve, evaluated at the pulse onset and offset, provides the necessary information [27]. By subtracting the phase shift accumulated before the pulse onset, we compute the shifts accumulated during the pulse.

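Definition 2 (APRC): The analytical phase response curve (APRC) is a function of the time of the pulse onset t and pulse duration d:

$$\mathrm{APRC}_j(t, d) = \frac{1}{d}\left[\frac{d\phi}{dp_j}(t + d) - \frac{d\phi}{dp_j}(t)\right].$$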

Figures 5C and 5D illustrate the APRC to light (APRCL) for pulse durations of 3 and 1 circadian hours, respectively. The shape corresponds very closely to that of experimental data, showing the so-called “deadzone” during the subjective day, with delays early in the subjective evening and advances in late subjective evening [36].
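The construction of the APRC from the cumulative phase sensitivity (subtract the values at pulse offset and onset, then divide by the duration, as described in the caption of Figure 5C) can be sketched as follows. The cumulative curve used here is a hypothetical closed-form stand-in, not the curve computed from the clock model, and the function names are illustrative.

```python
import math

TAU = 24.0
P = 2.0 * math.pi / TAU

def cumulative_phase_sensitivity(t):
    # HYPOTHETICAL stand-in for d(phi)/d(p_j) evaluated at time t.
    # It is not derived from the clock model.
    return (math.cos(P * t) - 1.0) / P ** 2

def aprc(t, d, cumulative=cumulative_phase_sensitivity):
    # Phase shift accumulated during a pulse with onset t and duration d,
    # divided by the duration.
    return (cumulative(t + d) - cumulative(t)) / d

def slope(t, cumulative=cumulative_phase_sensitivity, eps=1e-6):
    # Central-difference estimate of the slope of the cumulative curve.
    return (cumulative(t + eps) - cumulative(t - eps)) / (2.0 * eps)

for d in (3.0, 1.0, 0.01):
    print("d =", d, "APRC(10, d) =", aprc(10.0, d), "slope at 10 =", slope(10.0))
```

As the pulse duration shrinks, the printed APRC values move toward the slope of the cumulative curve at the pulse onset.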

3) Phase Sensitivity and Impulse Perturbations

When d approaches 0, the APRC approaches the slope of the phase sensitivity:

$$\lim_{d \to 0} \mathrm{APRC}_j(t, d) = \frac{d}{dt}\frac{d\phi}{dp_j}(t).$$

This means the slope of the phase sensitivity will predict the behavior change caused by an impulse perturbation to a parameter.

Definition 3 (pIPRC): The parametric impulse phase response curve (pIPRC) is

$$\mathrm{pIPRC}_j(t) = \frac{d}{dt}\frac{d\phi}{dp_j}(t). \qquad (5)$$

Proposition 1 (Computation of the pIPRC): The pIPRC can be computed by taking the full time derivative of the phase sensitivity, resulting in an expression involving terms that are relatively easy to compute:

$$\mathrm{pIPRC}_j(t) = \sum_{k=1}^{n} \mathrm{sIPRC}_k(t)\, \frac{\partial f_k}{\partial p_j}(t).$$

Because each term on the righthand side is a function of position, the pIPRC is a function of position, i.e. $\mathrm{pIPRC}_j(x^\gamma(t))$.

For the proof, see the appendix.
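Proposition 1 can be checked numerically on a small example. The sketch below uses a hypothetical two-state oscillator with an additive input L on the first state equation (so that ∂f/∂L = (1, 0)), integrates the classical sensitivities to L, forms the cumulative phase sensitivity by the chain rule, and compares its slope with the sum of sIPRCk · ∂fk/∂L. The oscillator, the analytic phase gradient used for the sIPRC, and all names are assumptions; this is not the light input of the clock model.

```python
import math

def augmented_rhs(z, p):
    # z = (x1, x2, S1, S2): toy oscillator states and sensitivities to an
    # additive input L on the x1 equation, evaluated at the nominal L = 0.
    x1, x2, s1, s2 = z
    r2 = x1 * x1 + x2 * x2
    f1 = x1 * (1.0 - r2) - p * x2
    f2 = x2 * (1.0 - r2) + p * x1
    j11 = 1.0 - 3.0 * x1 * x1 - x2 * x2
    j12 = -2.0 * x1 * x2 - p
    j21 = -2.0 * x1 * x2 + p
    j22 = 1.0 - x1 * x1 - 3.0 * x2 * x2
    ds1 = j11 * s1 + j12 * s2 + 1.0   # df1/dL = 1
    ds2 = j21 * s1 + j22 * s2         # df2/dL = 0
    return (f1, f2, ds1, ds2)

def rk4_step(z, p, h):
    k1 = augmented_rhs(z, p)
    k2 = augmented_rhs(tuple(a + 0.5 * h * b for a, b in zip(z, k1)), p)
    k3 = augmented_rhs(tuple(a + 0.5 * h * b for a, b in zip(z, k2)), p)
    k4 = augmented_rhs(tuple(a + h * b for a, b in zip(z, k3)), p)
    return tuple(a + h / 6.0 * (b + 2.0 * c + 2.0 * d + e)
                 for a, b, c, d, e in zip(z, k1, k2, k3, k4))

def sIPRC(x, p):
    # Phase gradient for this toy (isochrons are rays): phi = atan2(x2, x1)/p.
    r2 = x[0] * x[0] + x[1] * x[1]
    return (-x[1] / (p * r2), x[0] / (p * r2))

tau = 24.0
p = 2.0 * math.pi / tau
h = 0.01
z = (1.0, 0.0, 0.0, 0.0)   # start on the limit cycle with S(0) = 0
cumulative, piprc = [], []
for _ in range(int(round(tau / h)) + 1):
    z1, z2 = sIPRC(z[:2], p)
    cumulative.append(z1 * z[2] + z2 * z[3])  # chain rule: sum_k sIPRC_k * S_kL
    piprc.append(z1 * 1.0 + z2 * 0.0)         # Proposition 1 with df/dL = (1, 0)
    z = rk4_step(z, p, h)

i = 1000                                       # sample index for t = 10
slope = (cumulative[i + 1] - cumulative[i - 1]) / (2.0 * h)
print("slope of cumulative sensitivity:", slope)
print("pIPRC from Proposition 1:", piprc[i])
```

The design choice here is to evaluate both sides along the same numerically integrated orbit, so that any disagreement would point to the formula rather than to the integration.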

The pIPRC is τ-periodic when evaluated on the limit cycle. We noted earlier that the sIPRC is τ-periodic. Clearly, $\partial f / \partial p_j$ is periodic, because xγ(t) is periodic (see Figure 5F). It follows that, like the sIPRC, the pIPRC is a function of position and can therefore be written as a function of phase. We will do so in future sections.

In addition to periodicity, there is a striking similarity between the pIPRC and the sIPRC. They both predict the phase response to an impulse perturbation, under the assumption that the system was in its nominal configuration immediately preceding and immediately following the impulse. This will be important in Section III-F, when we present the phase evolution equation under parametric perturbation. It reformulates the phase evolution equation commonly used to track the phase behavior of a system under state velocity perturbation [16], [17], [28], [29], [37].

The pIPRC to light (pIPRCL) is shown in Figure 5E and F. The curve in this case is unique in that it serves to characterize the system itself - not simply the response to a specific signal. A signal can be viewed as a modulation over time of a parameter, and the pIPRC can be used to predict the response to that signal. Figure 5E illustrates that, regardless of the shape and strength of the light signal, if it arrives during the subjective day the clock does not phase shift. A signal arriving in early subjective evening will cause a phase delay, and a signal arriving in late subjective evening will cause a phase advance. Later, we will use the pIPRC to predict the response to arbitrary signals.

Remark 5: A related curve, the infinitesimal response curve (IRC) to the period is developed in [38], [39]. As part of a wider ranging set of response curves, the period IRC is the same function as the pIPRC, but its derivation is distinctly different. One notable difference is that its computation does not rely directly on the sIPRC.

E. Period Sensitivity

A sustained parametric perturbation changes the freerunning period τ. The period sensitivity predicts the change in τ and is expressed analytically by the APRC for an infinitesimal perturbation of duration τ; the timing effects accumulated over one period produce a change in the period [24].

Proposition 2 (Period Sensitivity): The period sensitivity is given by

where the APRC can be evaluated at any time t1 (as long as the system is not in transient) [24], [27].

The period sensitivity can be used to predict the period in a perturbed system. The negative sign is introduced to make the formula for prediction intuitive:

The relationship between phase response curves and the effects of light upon the period has long been of interest to circadian researchers [13], [21]. For that reason, we explore the relationship between the period sensitivity and the pIPRC. Recall that

Because pIPRCj is the derivative of the cumulative phase sensitivity, we know

Thus, there is a direct relationship between the period sensitivity and the area under the pIPRC,

$$\frac{\partial \tau}{\partial p_j} = -\int_{0}^{\tau} \mathrm{pIPRC}_j(t)\,dt.$$
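The relationship between the period sensitivity and the area under the pIPRC can be illustrated numerically. The following is a minimal sketch, assuming that positive phase shifts denote advances (so a net positive area shortens the period) and using a hypothetical pIPRC, `toy_piprc`, that is not taken from the model in this paper. The function names are illustrative only.

```python
import math

def period_sensitivity(piprc, tau, n=2000):
    """Return minus the area under piprc over one period (trapezoid rule)."""
    h = tau / n
    area = 0.5 * (piprc(0.0) + piprc(tau))
    for k in range(1, n):
        area += piprc(k * h)
    return -area * h

def predicted_period(tau, dtau_dp, delta_p):
    """First-order prediction of the period after a sustained change delta_p."""
    return tau + dtau_dp * delta_p

TAU = 24.0  # nominal period in hours (assumed value)

def toy_piprc(t):
    """Hypothetical pIPRC: a sinusoid with a small positive offset (net advance)."""
    return 0.05 + 0.4 * math.sin(2.0 * math.pi * t / TAU)

if __name__ == "__main__":
    sens = period_sensitivity(toy_piprc, TAU)
    print("period sensitivity:", sens)
    print("predicted period for delta_p = 0.1:", predicted_period(TAU, sens, 0.1))
```

Only the offset of the toy curve contributes to the area; the sinusoidal part integrates to zero over one period, so advances and delays of equal size leave the period unchanged to first order.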

F. Phase Evolution Under Parametric Perturbation

A powerful technique, pioneered by Malkin [29] and Winfree [37], and developed further by Kuramoto [28] and others [16], [17], [29], is that of phase reduction. Instead of simulating an entire system, the system is reduced to a single phase variable. The phase model is an ordinary differential equation, which we refer to as the phase evolution equation. In the absence of a signal, it is simply (3).

There are many variants of the phase evolution equation, some of which are designed to follow a single oscillator, with others designed to follow the behavior of multiple, weakly coupled oscillators. The signal term also differs in its independent variable. We choose one that includes variables for both external and internal time, t and φ, respectively. Equation 2.6 in [16], written in our notation becomes

$$\frac{d\phi}{dt} = 1 + \mathrm{sIPRC}(\phi) \cdot G(\phi, t) \tag{6}$$

where G is the vector of “stimulus effects”. The natural initial condition is φ(0) = 0. Under the assumptions that the limit cycle is normally hyperbolic, and that |G| is bounded by a number small enough for the linear approximation to be sufficiently accurate, this equation is valid in the neighborhood U of γ.

In the context of the models examined in [16], it is the sIPRC (referred to as z in [16]) that characterizes the response to a stimulus represented by G. However, we can separate G into two terms such that

where gj is a time-varying perturbation to the jth parameter. Now, we can rewrite (6) as

The result is a phase evolution equation involving the pIPRC and a signal that modulates a parameter. That signal can be a function of phase or external time. In the present work, we use it as a function of external time only, and define the phase evolution equation as

$$\frac{d\phi}{dt} = 1 + \mathrm{pIPRC}_j(\phi)\, g_j(t) \tag{7}$$

where φ(0) = 0. It is now apparent that the pIPRC serves to characterize the timing behavior of the system apart from the signal. It is also apparent that the pIPRC can be interpreted as a velocity response curve. Since p and t are independent, (5) can be rewritten as

Our approach will be valid in the same neighborhood with the same assumptions listed above (from [16]) with one major caveat - a perturbation in parameters alters the vector field. The pIPRC is computed assuming the vector field is about to return to the nominal configuration. If the perturbation continues or changes, then the pIPRC's prediction may be incorrect. However, we have observed that the results are qualitatively correct, and often quantitatively correct (Figure 7). We benefit from the tendency for isochrons to remain nearly fixed despite changes to the vector field.

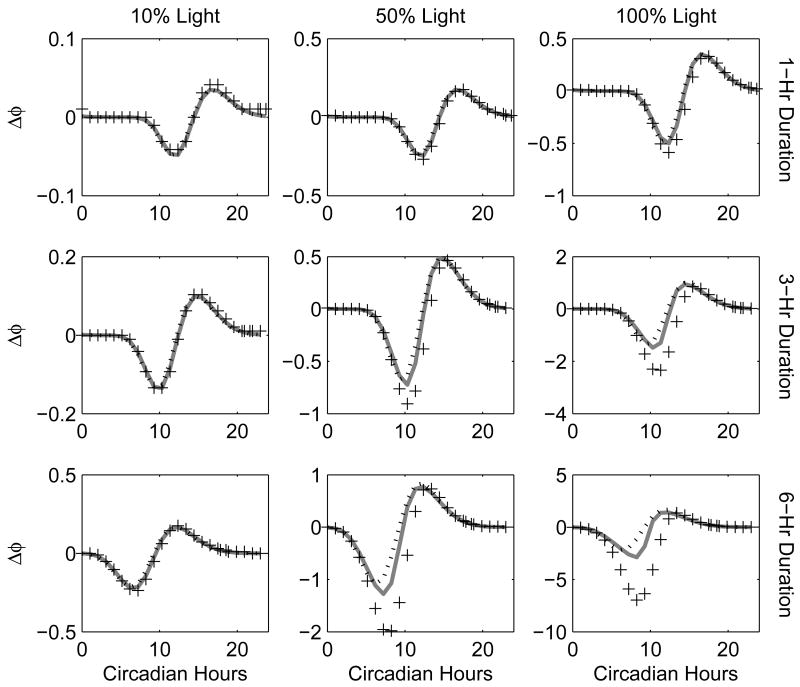

Fig. 7.

Numerical experimental PRCs. Each plot contains the phase shifts for the numerical experimental PRC (pluses), pIPRCL-predicted PRC (solid gray line), and APRCL-predicted PRC (dotted black line). The signal is sinusoidal, and its active part lasts 1 hour in the first row, 3 hours in the second row, and 6 hours in the third row. The signal maximum is 10%, 50%, and 100% of full light in the first, second, and third columns, respectively. Notice the different scales on the y-axes.

IV. Phase Response Curves

The curves presented in the previous section can be used to predict the outcome of numerical experiments, which in turn are used to mimic in vivo experiments. Such experiments have been used to collect phase response curves - a phase response curve (PRC) describes the response of the system to a particular signal. The traditional method of computation is simple numerical experimentation; send the signal, then observe the result. In this section, we illustrate the relationship between the APRC, pIPRC, and numerical experimental PRC by considering the model's response to light. We outline various methods for predicting the response first to a square pulse signal, then to an arbitrary signal.

We begin by discretizing time over one period, tdisc = {t1,t2,…,ta} and we compute the PRC at the phase associated with each timestep, Φ = {φ(xγ(t1)),φ(xγ(t2)),…φ(xγ(ta))}. The signal must be expressed as a modulation of a parameter, i.e. gj(t) = Δpj(t).

A. Square Pulse Response Curves

Standard practice for in vivo biological experiments is to subject an animal to a square pulse of light. A natural in silico approach is to subject the model to the same square pulse. To compute the analytical predictions for such a numerical experiment, we use the APRC and pIPRC.

1) Direct Method

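The direct method is straightforward. The signal is simply a square pulse of magnitude mag beginning at time t1 and lasting for duration d, i.e.

$$g_j(t) = \begin{cases} mag, & t_1 \le t \le t_1 + d \\ 0, & \text{otherwise} \end{cases} \tag{8}$$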

All simulations will end at time T, which must be chosen to ensure a perturbed system will resettle to the nominal limit cycle. The algorithm we suggest is:

1. Simulate the system in constant dark to create a nominal (reference) limit cycle.
2. Choose a phase marker for the system, such as the peak of state xi. Record the time tb when that marker occurs in the last period of simulation (T − τ, T).
3. Choose the time of pulse onset as a marker for the signal, gonset.
4. For each t ∈ tdisc and its associated φ ∈ Φ,
   a. Run a simulation, sending the signal such that gonset occurs at time t.
   b. Measure the time tc of the system marker in the last period of simulation (T − τ, T).
   c. Compute the phase shift Δ(φ) = tb − tc.
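The steps above can be sketched in code. The following is a minimal illustration, not the model used in this paper: it assumes a hypothetical two-state limit-cycle oscillator (`rhs`) with a 24-hour period, in which the parameter `p` (nominally zero) stands in for light, and it uses the peak of the first state as the phase marker. All names, the fixed-step RK4 integrator, and the step size are assumptions made for the example.

```python
import math

TAU = 24.0                      # period of the toy oscillator (hours)
OMEGA = 2.0 * math.pi / TAU

def rhs(x, y, p):
    """Toy planar limit-cycle oscillator; p is the perturbed parameter."""
    r2 = x * x + y * y
    return x * (1.0 - r2) - OMEGA * y + p, y * (1.0 - r2) + OMEGA * x

def marker_time(t_on=0.0, d=0.0, mag=0.0, T=5.5 * TAU, dt=0.02):
    """Simulate to time T with a square pulse; return the time of the last peak of x."""
    x, y = 1.0, 0.0             # start on the limit cycle, at the peak of x
    last_peak = None
    for i in range(int(round(T / dt))):
        t = i * dt
        # Square pulse, held constant over the step (pulse edges lie on the grid).
        p = mag if t_on <= t + 0.5 * dt < t_on + d else 0.0
        k1x, k1y = rhs(x, y, p)
        k2x, k2y = rhs(x + 0.5 * dt * k1x, y + 0.5 * dt * k1y, p)
        k3x, k3y = rhs(x + 0.5 * dt * k2x, y + 0.5 * dt * k2y, p)
        k4x, k4y = rhs(x + dt * k3x, y + dt * k3y, p)
        xn = x + dt * (k1x + 2.0 * k2x + 2.0 * k3x + k4x) / 6.0
        yn = y + dt * (k1y + 2.0 * k2y + 2.0 * k3y + k4y) / 6.0
        # On the limit cycle, x peaks where y crosses zero going upward;
        # interpolate linearly to locate the crossing within the step.
        if y < 0.0 <= yn and xn > 0.0:
            last_peak = t + dt * (-y) / (yn - y)
        x, y = xn, yn
    return last_peak

def direct_prc(onsets, d, mag):
    """Phase shift (t_b - t_c) for a square pulse starting at each onset time."""
    t_b = marker_time()                          # nominal (no pulse) marker time
    shifts = []
    for t_on in onsets:
        t_c = marker_time(t_on, d, mag)          # perturbed marker time
        delta = t_b - t_c
        shifts.append((delta + 0.5 * TAU) % TAU - 0.5 * TAU)  # wrap to one period
    return shifts

if __name__ == "__main__":
    for t_on, shift in zip([5.5, 11.5, 17.5], direct_prc([5.5, 11.5, 17.5], 1.0, 0.01)):
        print("onset", t_on, "phase shift", shift)
```

Each point of the curve costs one full simulation of the system, which is why this approach becomes expensive as the model grows.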

2) Predictive Method

The APRC is designed for precisely this purpose - to predict the response to square pulses. It does so under the assumption that the linear approximation holds for finite Δpj.

For each t ∈ tdisc and associated φ ∈ Φ,

$$\Delta\phi(\phi) \approx \mathrm{APRC}_j(t, d)\,\Delta p_j.$$

The pIPRC can be used in the same manner as the APRC, but is, theoretically, less reliable - the pIPRC is valid in the limit not only as Δpj → 0, but also as Δt → 0. We recommend the APRC for estimating PRCs, but include the pIPRC formula because it is instructive for the purposes of interpretation.

For each t ∈ tdisc and associated φ ∈ Φ,

$$\Delta\phi(\phi) \approx \mathrm{pIPRC}_j(t)\,\Delta p_j\,d.$$

If the signal is of magnitude and duration 1 (Δpj = 1, d = 1), then the pIPRC itself is an estimate for the PRC. This relationship eases interpretation - rather than attempting to envision a curve that is a rate of change in two variables, we have a concrete meaning. Additionally, for relatively short (circa 1 circadian hour) pulses, the numerical results are nearly identical to those of the APRC. We note also that the pIPRC is most accurate when the middle of the pulse time is used for the prediction. In Section IV-B.2 we describe a better pIPRC method, which uses (7).
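The two predictive estimates can be compared in a short sketch. It assumes a hypothetical sinusoidal pIPRC (`toy_piprc`) and takes the APRC of a pulse to be the integral of that pIPRC over the pulse (`toy_aprc`); both curves, and the function names, are illustrative assumptions rather than quantities computed from the model in this paper. The pIPRC estimate is evaluated at the middle of the pulse, as suggested above.

```python
import math

TAU = 24.0                      # assumed period (hours)
OMEGA = 2.0 * math.pi / TAU

def toy_piprc(t):
    """Hypothetical pIPRC evaluated on the limit cycle."""
    return -math.sin(OMEGA * t) / OMEGA

def toy_aprc(t, d):
    """Hypothetical APRC: integral of toy_piprc from t to t + d (closed form)."""
    return (math.cos(OMEGA * (t + d)) - math.cos(OMEGA * t)) / OMEGA ** 2

def prc_from_aprc(aprc, t, d, delta_p):
    """Square-pulse estimate: APRC(t, d) times the pulse magnitude."""
    return aprc(t, d) * delta_p

def prc_from_piprc(piprc, t, d, delta_p):
    """Square-pulse estimate: pIPRC at mid-pulse times magnitude times duration."""
    return piprc(t + 0.5 * d) * delta_p * d

if __name__ == "__main__":
    for d in (1.0, 3.0, 6.0):
        a = prc_from_aprc(toy_aprc, 6.0 - 0.5 * d, d, 0.1)
        b = prc_from_piprc(toy_piprc, 6.0 - 0.5 * d, d, 0.1)
        print("duration", d, "APRC estimate", a, "pIPRC estimate", b)
```

For this toy curve the two estimates nearly coincide for a one-hour pulse and drift apart as the pulse lengthens, because the pIPRC estimate treats the curve as constant over the pulse.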

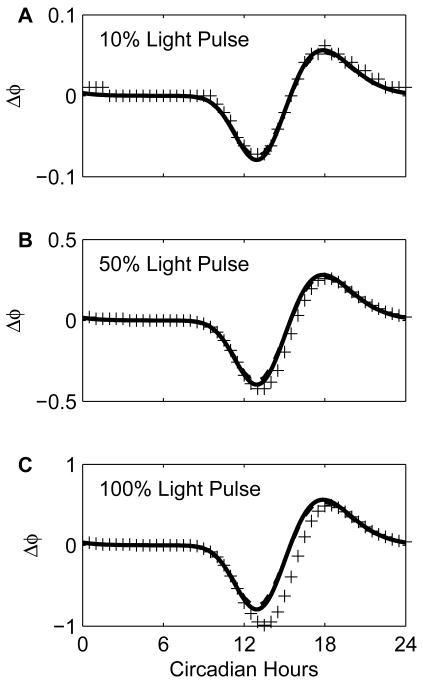

Figure 6 contains the numerical experimental PRC, APRCL-predicted PRC, and pIPRCL-predicted PRC for a pulse lasting 1 circadian hour with strengths ΔL = 0.1,0.5,1.0. The figure illustrates that for a relatively short pulse of light the predictions are qualitatively correct, and quantitatively good. For 1-circadian hour pulses of 10%, 50%, and 100% light, the predictions are within 0.01, 0.25, and 0.5 circadian hours of the numerical experimental PRC, respectively. This figure illustrates the utility of the various methods. For large perturbations, the numerical experimental method is the most accurate because all nonlinearity is accounted for. However, for small perturbations, the numerical experimental method loses accuracy. Computation of the phase shift is limited both by the step size of the simulation and by the simulation accuracy. Because long simulations are required, a gradual drift is observed, and measurement of the time-difference between peaks in the nominal and perturbed systems is less reliable. The APRC method does rely on numerical solution, but is not as susceptible to either of these problems.

Fig. 6.

Numerical experimental PRCs. We plot the phase shifts for the numerical experimental PRC (pluses), pIPRCL-predicted PRC (solid line), and APRCL-predicted PRC (dashed line). Each phase shift is plotted at the time of the center of the light pulse. (A) Numerical experimental PRC for 1-circadian-hour pulses of light at 10% strength. For each plus in the numerical experiment, a simulation was performed in which the light parameter L was 0.1 during the light pulse and 0 otherwise. After one week was simulated, we compared the time of the peak of PCm in the perturbed system to that of the peak of PCm in the nominal system. The time-step resolution and, therefore, the accuracy of this computation is, at best, 0.01 hour. (B) Numerical experimental PRC for pulses of light at 50% strength. (C) Numerical experimental PRC for pulses of light at 100% strength (L = 1).

B. Arbitrary Signal Response Curves

Square pulse signals alone are insufficient to study the circadian clock's timing behavior. For example, the light-signaling pathway is now understood to be composed of gates [36] which modulate the signal shape. As the models become more mechanistic, we must study the response to signals displaying properties such as attenuation and continuous (rather than discrete) transitions. Additionally, as modelers turn to coupled networks of oscillators, the intercellular signaling mechanisms produce curved signals [40], [41].

In this section, we present three methods for computing the PRC to an arbitrarily shaped signal (parametric perturbation). The first method, the direct method, captures all nonlinearity in the system but is the most expensive to compute. The second method (the pIPRC method), which uses the phase evolution equation (7), is less accurate but also less expensive computationally. The third method, which uses the APRC, is the least accurate and the least expensive. We recommend the direct method for large perturbations and the pIPRC method for smaller perturbations. The APRC method is included to complete the set of approaches and to illustrate the dynamical nature of phase behavior. Numerically, it is reasonably accurate, but there is seldom any reason to choose it over the pIPRC method.

For all methods, we choose a marker for the signal, gonset(t) such that the signal first becomes active (non-zero) at time t.

1) Direct Method

Use the same method presented in Section IV-A.1.

This is the most accurate method for larger signals, because it captures all non-linear behavior. It is also the most expensive - every point in the PRC requires the simulation of an n-dimensional system of ODEs over several periods. As models increase in size, the direct method becomes much more expensive.

2) pIPRC Method

For each ts ∈ tdisc and associated φs ∈ Φ, simulate the phase evolution equation

$$\frac{d\phi}{dt} = 1 + \mathrm{pIPRC}_j(\phi)\, g_j(t)$$

from initial condition φ(ts) = ts. We begin the simulation not from time t, but from the time of the onset of the signal, and end the simulation at time te when the signal becomes inactive (is identically zero). The phase shift is Δφ(φs) ≈ φ(te) − te.

This method captures the phase shifts caused by the signal as it arrives, but relies on the linear approximation of the trajectory with respect to parameter pj. The pIPRC was derived with the assumption that it would be evaluated on the limit cycle. When the system is under perturbation, it is not necessarily on the limit cycle. The phase evolution equation is valid under the assumption that the phase determines the pIPRC even when not on the limit cycle. We have found that this method is most useful for small magnitude signals, but will provide qualitatively good results for larger signals. Computationally, each point requires the simulation of a one-dimensional ODE system for the duration of the signal. This cost is independent of the size of the original model.
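The pIPRC method can be sketched as the integration of a single scalar ODE. The example below is a minimal illustration under stated assumptions: the phase evolution equation is taken as dφ/dt = 1 + pIPRC(φ) g(t), the pIPRC is a hypothetical sinusoid (`toy_piprc`), the signal is a half-sine of the assumed form mag·sin(π(t − t_on)/d), and a fixed-step RK4 scheme is used. None of these choices are prescribed by the text.

```python
import math

TAU = 24.0                      # assumed period (hours)
OMEGA = 2.0 * math.pi / TAU

def toy_piprc(phi):
    """Hypothetical tau-periodic pIPRC written as a function of phase."""
    return -math.sin(OMEGA * phi) / OMEGA

def half_sine(t, t_on, d, mag):
    """Assumed half-sinusoidal signal, active on [t_on, t_on + d]."""
    if t_on <= t <= t_on + d:
        return mag * math.sin(math.pi * (t - t_on) / d)
    return 0.0

def piprc_phase_shift(piprc, signal, t_s, t_e, n_steps=1000):
    """Integrate dphi/dt = 1 + piprc(phi) * signal(t) from phi(t_s) = t_s.

    Returns the predicted phase shift phi(t_e) - t_e.
    """
    h = (t_e - t_s) / n_steps

    def f(t, phi):
        return 1.0 + piprc(phi) * signal(t)

    t, phi = t_s, t_s
    for _ in range(n_steps):
        k1 = f(t, phi)
        k2 = f(t + 0.5 * h, phi + 0.5 * h * k1)
        k3 = f(t + 0.5 * h, phi + 0.5 * h * k2)
        k4 = f(t + h, phi + h * k3)
        phi += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
        t += h
    return phi - t_e

if __name__ == "__main__":
    d, mag = 3.0, 0.01
    for t_s in (4.5, 10.5, 16.5):
        sig = lambda t, t_s=t_s: half_sine(t, t_s, d, mag)
        print("onset", t_s, "shift", piprc_phase_shift(toy_piprc, sig, t_s, t_s + d))
```

Because the pIPRC is evaluated at the evolving phase φ rather than at external time t, the prediction accounts for shifts that accumulate while the signal is still arriving.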

3) APRC Method

The basis of this method is to discretize the signal into finite square pulses, and then sum up the phase shifts computed using the APRC for each square pulse. The algorithm is as follows:

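1. Compute APRCj for pulses lasting only one timestep Δt, APRCj(t, Δt).
2. Discretize the signal using the same timestep.
3. For each ts ∈ tdisc and associated φs ∈ Φ, sum the effects over the duration of the signal:

$$\Delta\phi(\phi_s) \approx \sum_{k=0}^{N_{sig}-1} \mathrm{APRC}_j(t_s + k\Delta t, \Delta t)\, g_j(t_s + k\Delta t),$$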

where Nsig is the number of timesteps required to cover the active signal.

This method does not capture the phase shifts caused by the signal as it arrives; it makes the assumption that gj(t) will arrive when the internal time is also t. In other words, we compute the response to each pulse as if it is the first to arrive (i.e., each pulse is independent of the others). It also relies on the linear approximation of the trajectory with respect to parameter pj. Computationally, this method is remarkably fast; each point requires only addition and multiplication operations (no simulation aside from the computation of the APRC itself) and the cost is independent of the size of the original model.
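The summation at the heart of the APRC method can be sketched as follows. This is a minimal illustration, assuming a hypothetical one-timestep APRC (`toy_aprc`, taken here as the closed-form integral of a sinusoidal pIPRC) and an assumed half-sine signal; the names and the left-endpoint sampling of the signal are choices made for the example.

```python
import math

TAU = 24.0                      # assumed period (hours)
OMEGA = 2.0 * math.pi / TAU

def toy_aprc(t, dt):
    """Hypothetical APRC for a unit pulse starting at t and lasting dt."""
    return (math.cos(OMEGA * (t + dt)) - math.cos(OMEGA * t)) / OMEGA ** 2

def half_sine(t, t_on, d, mag):
    """Assumed half-sinusoidal signal, active on [t_on, t_on + d]."""
    if t_on <= t <= t_on + d:
        return mag * math.sin(math.pi * (t - t_on) / d)
    return 0.0

def aprc_method_shift(aprc, signal, t_s, duration, dt):
    """Sum the APRC-predicted shifts of the square pulses that approximate the signal."""
    n_sig = int(round(duration / dt))   # number of timesteps covering the active signal
    total = 0.0
    for k in range(n_sig):
        t_k = t_s + k * dt
        # Each pulse is treated as if it were the first to arrive (external time = internal time).
        total += aprc(t_k, dt) * signal(t_k)
    return total

if __name__ == "__main__":
    d, mag, dt = 3.0, 0.01, 0.01
    for t_s in (4.5, 10.5, 16.5):
        sig = lambda t, t_s=t_s: half_sine(t, t_s, d, mag)
        print("onset", t_s, "shift", aprc_method_shift(toy_aprc, sig, t_s, d, dt))
```

No differential equation is solved inside the loop, so once the APRC values are available the cost per point is a few hundred multiplications and additions.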

Figure 7 contains the numerical experimental PRC, pIPRCL-predicted PRC, and APRCL-predicted PRC to a half-sinusoidal light signal (identical to that in [42]). The signal is generated such that, if t1 is the time of signal onset, then

PRCs are collected for durations d = 1, 3, and 6 hours and magnitudes mag = 0.1, 0.5, and 1 (i.e. 10%, 50%, and 100% of full light). The plots are organized so that the magnitude is increased from left to right and the duration is increased from top to bottom. In general, we observe that smaller magnitude and smaller durations allow the predictors to be more accurate (the upper lefthand plot shows the best agreement while the lower right shows the worst). The two predicted curves are similar, although the pIPRCL is slightly more accurate (this is most apparent for pulses of 6-hour duration). Both predictors under-estimate the delays. However, the pIPRC method correctly captures the phase of the trough of the PRC. This is a direct consequence of the phase evolution equation. Dynamical tracking of the phase behavior is an important aspect of accurate prediction.

V. Conclusion

We have presented the derivation and interpretation of a novel sensitivity measure, the pIPRC, which both characterizes the phase behavior of an oscillator and provides the means for computing the response to an arbitrary signal (in the form of parametric perturbation). The pIPRC and other isochron-based sensitivity measures presented herein comprise a uniform framework within which the various timing properties are related. The relationship between the period sensitivity and the pIPRC is of particular interest. Circadian researchers have postulated a direct correlation between the area under the phase response curve and the effects of constant light on the period [21]. This work confirms the mathematical relationship.

The PRCs and infinitesimal PRCs presented in this work provide quantifiable measures of robustness for oscillators acting as pacemakers. In these systems, robust performance involves proper maintenance of phase behavior. In the case of the circadian clock, this means that the PRC to light must have not only the proper shape, but also the correct magnitude. The model and light gate considered here match observed biological data. In previous work [12], we invalidated a model of the circadian clock in the plant Arabidopsis thaliana, because the pIPRC had neither the proper shape nor the proper magnitude. In that case, the model's pIPRC predicted an ultra-sensitivity to light, which was verified via simulation - the system was reset to dawn whenever light arrived.

Acknowledgments

The authors would like to thank Neda Bagheri, Henry Mirsky, and Scott Taylor for helpful comments.

This work was supported by the Institute for Collaborative Biotechnologies through grant #DAAD19-03-D-0004 from the U.S. Army Research Office, IGERT NSF grant #DGE02-21715, NSF/NIGMS grant #GM078993, Army Research Office grant #W911NF-07-1-0279, and NIH grant #EB007511.

Biographies

Stephanie R. Taylor received a B.S. in mathematics and computer science from Gordon College (Wenham, Massachusetts) in 1998. From 1998 to 2003, she worked as a software engineer at JEOL, USA (Peabody, MA), where she developed and implemented software associated with a nuclear magnetic resonance spectrometer. She is currently pursuing a Ph.D. in computer science at the University of California Santa Barbara. As an NSF IGERT (Integrative Graduate Education and Research Traineeship) fellow in the Computational Science and Engineering program, her research involves the mathematical modeling and analysis of biological oscillators.

Dr. Rudiyanto Gunawan received the B.S. degree in chemical engineering and mathematics from the University of Wisconsin, Madison, in 1998, and the M.S. and Ph.D. degrees in chemical engineering from the University of Illinois, Urbana-Champaign, in 2000 and 2003, respectively. In July 2006, after a 3-year postdoctoral fellowship at the University of California, Santa Barbara, he joined the Department of Chemical and Biomolecular Engineering in National University of Singapore, where he is currently an Assistant Professor.

Dr. Linda R. Petzold received the B.S. degree in mathematics/computer science from the University of Illinois at Urbana-Champaign (UIUC) in 1974, and the Ph.D. degree in computer science from UIUC in 1978.

After receiving her Ph.D., she was a member of the Applied Mathematics Group at Sandia National Laboratories in Livermore, California until 1985, when she moved to Lawrence Livermore National Laboratory, where she was Group Leader of the Numerical Mathematics Group. In 1991 she moved to the University of Minnesota as a Professor in the Department of Computer Science. In 1997, she joined the faculty of the University of California Santa Barbara, where she is currently Professor in the Departments of Mechanical Engineering and Computer Science.

Prof. Petzold is a member of the US National Academy of Engineering, and a Fellow of the AAAS. She was awarded the Wilkinson Prize for Numerical Software in 1991, the Dahlquist Prize in 1999, and the AWM/SIAM Sonia Kovalevski Prize in 2003.

Dr. Francis J. Doyle III holds the Suzanne and Duncan Mellichamp Endowed Chair in Process Control at the University of California, Santa Barbara in the Department of Chemical Engineering, in 2002. He received his B.S.E. from Princeton (1985), C.P.G.S. from Cambridge (1986), and Ph.D. from Caltech (1991), all in Chemical Engineering. After graduate school, he was a Visiting Scientist at DuPont in the Strategic Process Technology Group, and held appointments at Purdue University; the University of Delaware; and the Universität Stuttgart. His research interests are in the areas of systems biology and nonlinear process control, with applications ranging from gene regulatory networks to complex particulate process systems. He is the recipient of several research awards including: National Science Foundation National Young Investigator; Office of Naval Research Young Investigator; Alexander von Humboldt Research Fellowship (2001-2002); and AIChE CAST Division Computing in Chemical Engineering Award (2005). He is the author of 3 books, and over 150 journal articles.

Appendix: Derivation of the pIPRC

Here, we derive the formula for computation of the pIPRC

Recall that

where the limit can be ignored in practice. With that in mind, and for the sake of conciseness, we will leave out the limit in the derivation. To simplify notation, .

Using summation notation,

Applying the chain rule, we find

| (9) |

The adjoint equation can be expressed in summation notation as

| (10) |

The classical sensitivity coefficient is Skj = ∂xk/∂pj, and the sensitivity differential equation is

| (11) |

Substituting (10) and (11) into (9), we have

Further expansion of the terms shows

Careful observation reveals that the first and second terms cancel. The h and k labels simply need to be reversed in one of the terms. Reversing the labels in the second term,
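The chain of steps described in this appendix can be written out as a worked sketch. It is a reconstruction from the surrounding text, not the authors' typeset equations, and it assumes the following notation: $\mathrm{sIPRC}_k = \partial\phi/\partial x_k$, $S_{kj} = \partial x_k/\partial p_j$, the adjoint equation $\frac{d}{dt}\mathrm{sIPRC}_k = -\sum_h \frac{\partial f_h}{\partial x_k}\mathrm{sIPRC}_h$, and the sensitivity equation $\frac{d}{dt}S_{kj} = \sum_h \frac{\partial f_k}{\partial x_h}S_{hj} + \frac{\partial f_k}{\partial p_j}$.

```latex
\begin{align*}
\mathrm{pIPRC}_j
 &= \frac{d}{dt}\sum_{k}\mathrm{sIPRC}_k\,S_{kj}
  = \sum_{k}\left(\frac{d\,\mathrm{sIPRC}_k}{dt}\,S_{kj}
      + \mathrm{sIPRC}_k\,\frac{dS_{kj}}{dt}\right) \\
 &= -\sum_{k}\sum_{h}\frac{\partial f_h}{\partial x_k}\,\mathrm{sIPRC}_h\,S_{kj}
    + \sum_{k}\sum_{h}\mathrm{sIPRC}_k\,\frac{\partial f_k}{\partial x_h}\,S_{hj}
    + \sum_{k}\mathrm{sIPRC}_k\,\frac{\partial f_k}{\partial p_j} \\
 &= -\sum_{k}\sum_{h}\frac{\partial f_h}{\partial x_k}\,\mathrm{sIPRC}_h\,S_{kj}
    + \sum_{h}\sum_{k}\mathrm{sIPRC}_h\,\frac{\partial f_h}{\partial x_k}\,S_{kj}
    + \sum_{k}\mathrm{sIPRC}_k\,\frac{\partial f_k}{\partial p_j} \\
 &= \sum_{k}\mathrm{sIPRC}_k\,\frac{\partial f_k}{\partial p_j}.
\end{align*}
```

In the third line the labels h and k have been exchanged in the second double sum, which makes it identical to the first double sum with the opposite sign, so only the term involving $\partial f_k/\partial p_j$ survives.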

Contributor Information

Stephanie R. Taylor, Department of Computer Science, University of California Santa Barbara, Santa Barbara, CA, 93106-5110; Phone: 805-893-8151; Fax: 805-893-4731; E-mail: staylor@cs.ucsb.edu

Rudiyanto Gunawan, Department of Chemical and Biomolecular Engineering, National University of Singapore, Singapore, 117576; Phone: +65-6516-6617; Fax: +65-6779-1936; Email: chegr@nus.edu.sg.

Linda R. Petzold, Department of Computer Science, University of California Santa Barbara, Santa Barbara, CA, 93106-5070; Phone: 805-893-5362; Fax: 805-893-5435; E-mail: petzold@engineering.ucsb.edu

Francis J. Doyle, III, Department of Chemical Engineering, University of California Santa Barbara, Santa Barbara, CA, 93106-5080; Phone: 805-893-8133; Fax: 805-893-4731; E-mail: doyle@engineering.ucsb.edu.

References

- 1. Sontag ED. Some new directions in control theory inspired by systems biology. IET Syst Biol. 2004 Jun;1(1):9–18. doi: 10.1049/sb:20045006.

- 2. Kitano H. Systems biology: a brief overview. Science. 2002;295:1662–1664. doi: 10.1126/science.1069492.

- 3. Doyle FJ III, Stelling J. Systems interface biology. J R Soc Interface. 2006 Oct;3(10):603–616. doi: 10.1098/rsif.2006.0143.

- 4. Csete M, Doyle J. Reverse engineering of biological complexity. Science. 2002;295:1664–1669. doi: 10.1126/science.1069981.

- 5. Stelling J, Sauer U, Szallasi Z, Doyle FJ III, Doyle J. Robustness of cellular functions. Cell. 2004 Sep;118(6):675–85. doi: 10.1016/j.cell.2004.09.008.

- 6. Carlson JM, Doyle J. Highly optimized tolerance: robustness and design in complex systems. Phys Rev Lett. 2000 Mar;84(11):2529–2532. doi: 10.1103/PhysRevLett.84.2529.

- 7. El-Samad H, Prajna S, Papachristodoulou A, Doyle J, Khammash M. Advanced methods and algorithms for biological networks analysis. Proc IEEE. 2006 Apr;94(4):832–853. Available: http://dx.doi.org/10.1109/JPROC.2006.871776

- 8. Kitano H. Biological robustness. Nat Rev Genet. 2004 Nov;5(11):826–37. doi: 10.1038/nrg1471.

- 9. Morohashi M, Winn AE, Borisuk MT, Bolouri H, Doyle J, Kitano H. Robustness as a measure of plausibility in models of biochemical networks. J Theor Biol. 2002 May;216(1):19–30. doi: 10.1006/jtbi.2002.2537.

- 10. Kim J, Bates DG, Postlethwaite I, Ma L, Iglesias PA. Robustness analysis of biochemical network models. IET Syst Biol. 2006 May;153(3):96–104. doi: 10.1049/ip-syb:20050024.

- 11. Stelling J, Gilles ED, Doyle FJ III. Robustness properties of circadian clock architectures. Proc Natl Acad Sci USA. 2004 Sep;101(36):13210–5. doi: 10.1073/pnas.0401463101.

- 12. Zeilinger MN, Farré EM, Taylor SR, Kay SA, Doyle FJ III. A novel computational model of the circadian clock in Arabidopsis that incorporates PRR7 and PRR9. Mol Syst Biol. 2006;2:58. doi: 10.1038/msb4100101.

- 13. Johnson CH. Forty years of PRCs–what have we learned? Chronobiol Int. 1999 Nov;16(6):711–43. doi: 10.3109/07420529909016940.

- 14. Bagheri N, Stelling J, Doyle FJ III. Quantitative performance metrics for robustness in circadian rhythms. Bioinformatics. 2007 Feb;23(3):358–364. doi: 10.1093/bioinformatics/btl627.

- 15. Herzog ED, Aton SJ, Numano R, Sakaki Y, Tei H. Temporal precision in the mammalian circadian system: a reliable clock from less reliable neurons. J Biol Rhythm. 2004 Feb;19(1):35–46. doi: 10.1177/0748730403260776.

- 16. Brown E, Moehlis J, Holmes P. On the phase reduction and response dynamics of neural oscillator populations. Neural Comput. 2004 Apr;16(4):673–715. doi: 10.1162/089976604322860668.

- 17. Strogatz SH. From Kuramoto to Crawford: exploring the onset of synchronization in populations of coupled oscillators. Physica D. 2000;143:1–20. Available: http://dx.doi.org/10.1016/S0167-2789(00)00094-4

- 18. Reppert SM, Weaver DR. Coordination of circadian timing in mammals. Nature. 2002 Aug;418(6901):935–941. doi: 10.1038/nature00965.

- 19. Becker-Weimann S, Wolf J, Herzel H, Kramer A. Modeling feedback loops of the mammalian circadian oscillator. Biophys J. 2004 Nov;87(5):3023–3034. doi: 10.1529/biophysj.104.040824.

- 20. Geier F, Becker-Weimann S, Kramer A, Herzel H. Entrainment in a model of the mammalian circadian oscillator. J Biol Rhythm. 2005 Feb;20(1):83–93. doi: 10.1177/0748730404269309.

- 21. Daan S, Pittendrigh CS. A functional analysis of circadian pacemakers in nocturnal rodents. II. The variability of phase response curves. J Comp Physiol. 1976 Oct;106(3):253–266.

- 22. Varma A, Morbidelli M, Wu H. Parametric Sensitivity in Chemical Systems. New York, NY: Oxford University Press; 1999.

- 23. Tomovic R, Vukobratovic M. General Sensitivity Theory. New York: American Elsevier Pub. Co.; 1972.

- 24. Kramer M, Rabitz H, Calo J. Sensitivity analysis of oscillatory systems. Appl Math Model. 1984;8:328–340.

- 25. Ingalls BP. Autonomously oscillating biochemical systems: parametric sensitivity of extrema and period. IET Syst Biol. 2004 Jun;1(1):62–70. doi: 10.1049/sb:20045005.

- 26. Zak DE, Stelling J, Doyle FJ III. Sensitivity analysis of oscillatory (bio)chemical systems. Comput Chem Eng. 2005;29(3):663–673. Available: http://dx.doi.org/10.1016/j.compchemeng.2004.08.021

- 27. Gunawan R, Doyle FJ III. Isochron-based phase response analysis of circadian rhythms. Biophys J. 2006 Jun;91:2131–2141. doi: 10.1529/biophysj.105.078006.

- 28. Kuramoto Y. Chemical Oscillations, Waves, and Turbulence. Berlin: Springer-Verlag; 1984.

- 29. Hoppensteadt F, Izhikevich E. Weakly Connected Neural Networks. Applied Mathematical Sciences, vol. 126. New York: Springer-Verlag; 1997.

- 30. Winfree AT. The Geometry of Biological Time. New York: Springer; 2001.

- 31. Guckenheimer J. Isochrons and phaseless sets. J Math Biol. 1975 Sep;1(3):259–273. doi: 10.1007/BF01273747.

- 32. Tyson JJ, Hong CI, Thron CD, Novak B. A simple model of circadian rhythms based on dimerization and proteolysis of PER and TIM. Biophys J. 1999 Nov;77(5):2411–7. doi: 10.1016/S0006-3495(99)77078-5.

- 33. Reppert SM, Weaver DR. Molecular analysis of mammalian circadian rhythms. Annu Rev Physiol. 2001;63:647–676. doi: 10.1146/annurev.physiol.63.1.647.

- 34. Cao Y, Li S, Petzold L, Serban R. Adjoint sensitivity analysis for differential-algebraic equations: the adjoint DAE system and its numerical solution. SIAM J Sci Comput. 2003;24(3):1076–1089. Available: http://dx.doi.org/10.1137/S1064827501380630

- 35. DAE software website, http://www.engineering.ucsb.edu/~cse

- 36. Comas M, Beersma DGM, Spoelstra K, Daan S. Phase and period responses of the circadian system of mice (Mus musculus) to light stimuli of different duration. J Biol Rhythm. 2006 Oct;21(5):362–372. doi: 10.1177/0748730406292446.

- 37. Winfree AT. Biological rhythms and the behavior of populations of coupled oscillators. J Theor Biol. 1967 Jul;16(1):15–42. doi: 10.1016/0022-5193(67)90051-3.

- 38. Rand DA, Shulgin BV, Salazar D, Millar AJ. Design principles underlying circadian clocks. J R Soc Interface. 2004 Nov;1(1):119–130. doi: 10.1098/rsif.2004.0014.

- 39. Rand DA, Shulgin BV, Salazar JD, Millar AJ. Uncovering the design principles of circadian clocks: mathematical analysis of flexibility and evolutionary goals. J Theor Biol. 2006 Feb;238(3):616–635. doi: 10.1016/j.jtbi.2005.06.026.

- 40. Ueda HR, Hirose K, Iino M. Intercellular coupling mechanism for synchronized and noise-resistant circadian oscillators. J Theor Biol. 2002 Jun;216(4):501–512. doi: 10.1006/jtbi.2002.3000.

- 41. To TL, Henson MA, Herzog ED, Doyle FJ III. A molecular model for intercellular synchronization in the mammalian circadian clock. Biophys J. 2007 Mar;92:3792–3803. doi: 10.1529/biophysj.106.094086.

- 42. Kurosawa G, Goldbeter A. Amplitude of circadian oscillations entrained by 24-h light-dark cycles. J Theor Biol. 2006 Sep;242(2):478–488. doi: 10.1016/j.jtbi.2006.03.016.