Abstract

What differentiates the living from the nonliving? What is life? These are perennial questions that have occupied minds since the beginning of cultures. The search for a clear demarcation between animate and inanimate is a reflection of the human tendency to create borders, not only physical but also conceptual. It is obvious that what we call a living creature, whether a bacterium or a larger organism, has properties distinct from those of what is normally called nonliving. However, when one searches beyond dichotomies and takes a global, more abstract perspective on natural laws, a clear partition of matter into animate and inanimate becomes fuzzy. Based on concepts from a variety of fields of research, the emerging notion is that common principles of biological and nonbiological organization indicate that natural phenomena arise and evolve from a central theme captured by the process of information exchange. Thus, a relatively simple universal logic that rules the evolution of natural phenomena can be unveiled from the apparent complexity of the natural world.

Keywords: Complexity, Information, Fluctuations, Synchronization, Rhythms, Brain, Evolution

Introduction

In these days of overabundant empirical data and observations, and of exhaustive analysis of elementary biological processes that are most commonly investigated under experimental conditions designed to isolate the phenomenon under scrutiny in (supposedly) controlled laboratory settings, a search for unifying general principles of biological organization is critically needed. In the biological sciences, the comprehensive understanding of high-level laws lags considerably behind the understanding of elementary processes. Some have noted that biology would benefit from the physicist’s approach to understanding natural phenomena [1], seeking laws based on abstraction from that overabundance of data. Rather than a description, it is an understanding that is sought; of course, a description is a good first approach to understanding, but it is not quite the same [2], and the choice of the level of description will dictate the nature of the understanding. A useful start in the search for simplification is to focus on the distributed interactions that underlie the system’s collective behavior. When examined from this perspective, apparently diverse phenomena become conceptually closer. The advice of theoretical biologists Robert Rosen and Nicolas Rashevsky is worth considering, and this is the perspective taken in the present narrative. Rosen’s “relational biology” (and similarly Rashevsky’s line of reasoning) focuses on the organization that all systems have, an organization that is independent of the material particles that constitute the system and that is based on the nature of the interactions among the system’s particles [3, 4]. In the present work, the primary focus is on an abstract view of the interactions among a system’s constituents, in an attempt to extract some fundamental essence of natural (organic and inorganic) organization.

Different processes and mechanisms seem to regulate the development and organization of living and nonliving systems. Definitions of life have been continuously advanced from many levels of description, almost always with the apparent intention of disclosing clear-cut differences between the organic and the inorganic, dating back to the early attempts at expressing the unity of the organic world [4]. Dichotomizing seems to be one of the most common human intellectual exercises, and it often hinders the formulation of comprehensive global views. Words of wisdom have advised transcending dichotomies [5, 6], and a fine place to start is to take a deep look into the differentiation between the animate and the inanimate. Much of what follows has been noted by others; hence, this narrative is, in practice, a compilation of pieces of knowledge. In the end, the notion that emerges is that common general principles rule the evolution and organization of the animate and the inanimate and that the emergence of life is a very probable, basically inevitable, event.

A perspective on commonalities between the living and the nonliving

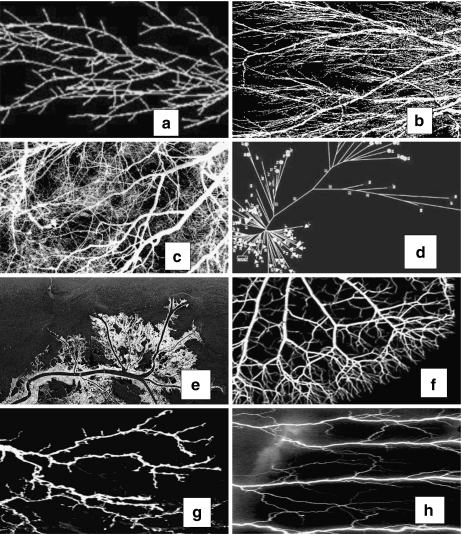

Those who think in terms of an unambiguous and definite separation between the animate and the inanimate are invited to consider the shapes shown in Fig. 1 and to note the striking common (structural) aspects of the natural phenomena displayed there. Shown are a river network; the branches of a tree; a dendritic tree of a brain cell; lightning during thunderstorms; a bronchiolar network in the lungs; an arterial network supplying blood; an axonal ramification of a brain cell; and, finally, one structure that is, to some extent, artificial (man-made): the phylogenetic tree representing the evolution of two genes (this can be considered a “conceptual” pattern, yet it is very similar in structure to the others). Try to distinguish them without looking at the figure legend; the task is not easy. This is just a tiny sample, for these tree-like patterns are ubiquitous in nature: ice crystals, mountain ranges, roots, snowflakes, metal salts in silicate solutions... [7, 8]. A natural question to ask is whether these common shapes arose as a coincidence or whether there is some principle that guides the development of these patterns of matter. Perhaps the consideration of common aspects of these natural and very distinct phenomena can serve as a starting point in a line of reasoning.

Fig. 1.

Tree-like structures in natural phenomena. a Dendrites of a neuron; b tree branches; c lung bronchioles; d phylogenetic tree of two genes (from Yohn et al. 2005, PLoS Biol. (4): e111); e river network; f blood capillaries; g axon of a neuron; h lightning

These systems share the characteristic of being open dissipative systems that are, normally, far from equilibrium. This combination of features, principally dissipation (nonlinearity), nonequilibrium, and a source of noise (fluctuations), constitutes a recipe for interesting things (patterns) to occur [9]. This was recognized early on by scientists like A. Turing [10], in whose intellectual legacy a most basic principle of organization is found: complex patterns emerge due to the competing tendencies of diffusion and reaction (a couple of reactants diffusing at different rates), or, in similar words, patterns result from molecular noise (diffusion) combined with an exchange of energy or matter (the chemical reaction), that is, information. Diffusion and stochastic driving are common characteristics among the natural patterns shown above. For instance, river networks and the closely associated topography of mountain ranges (the former is really a representation of the latter) result from diffusive erosion processes and stochastic driving: fluctuations in rock heterogeneity, rainfall, and other factors contribute to the tree-like structure [11]. The crucial importance of fluctuations was observed in computer simulations of river network evolution, where, without fluctuations in an important parameter of the model (the resistance to movement of the sediment transport), the tree-like structure did not form [8]. Randomness in the underlying environment, thus, was found to be a sufficient condition for the appearance of tree-like networks driven by flow gradients (dissipation). Similarly, randomness and a flow gradient, in this case the dissipation of electric energy, generate the tree-like pattern of lightning during storms (Fig. 1h).
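The reaction–diffusion idea described above is often written, for two interacting substances, as a pair of coupled equations. The following is a generic textbook form rather than a formula quoted from [10]; the symbols $u$, $v$ (concentrations), $D_u$, $D_v$ (diffusion coefficients), and $f$, $g$ (reaction terms) are notation assumed here for illustration:

```latex
\begin{aligned}
\frac{\partial u}{\partial t} &= D_u \nabla^2 u + f(u, v), \\
\frac{\partial v}{\partial t} &= D_v \nabla^2 v + g(u, v), \qquad D_u \neq D_v .
\end{aligned}
```

In this notation, the Laplacian terms stand for diffusion, $f$ and $g$ stand for the exchange of matter or energy through reaction, and the unequal diffusion coefficients express the two reactants diffusing at different rates.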

These general features of diffusion–reaction and stochastic driving help explain living patterns as well. As an example, diffusion/dissipation and stochastic driving result in the branching of the dendrites and axons of neurons. Ramón y Cajal already observed, in the early twentieth century, that axons tend to grow straight in the absence of environmental interference [12]. Chemical reactions occurring inside and outside the cell, resulting in axonal growth and guidance by chemotaxis, determine where the axon terminal happens to make contact with another cell and establishes a synaptic contact [13], and thus these processes generate the intricate structure of axonal and dendritic trees and the neural circuitries found in nervous systems. In general, this combination of diffusion/dissipation of energy and fluctuations gives rise to pattern formation, contributing to the creation of common shapes of the living and nonliving. Reaction–diffusion models are used to describe pattern formation in natural phenomena, from the inorganic to the organic [9, 14]. A reaction–diffusion model has also proved useful for studying the emergence of patterns when an ordered system interacts, under certain conditions, with similar but disordered systems [15]; the result demonstrates that, under some circumstances, interactions lead to the replication of ordered patterns. To close this discussion, perhaps the following experiment taken from granular physics exemplifies these matters in a very clear and striking fashion. The experiment can be considered as the story of a “miracle,” because what superficially seems an extremely improbable phenomenon develops in a short time. Schlichting and Nordmeier [16] discussed the fate of the distribution of small steel beads, initially uniformly distributed in two chambers separated by a barrier with a small opening, when the container is vibrated with a shaker. 
In a few minutes, owing to the inelastic collisions between beads (the source of dissipation) driven by the vibration (the source of noisy energy), one chamber starts to become more populated while the other continuously loses beads. This initial small difference in the number of beads in each chamber becomes amplified because in the chamber with fewer beads their energy is not dissipated as fast as in the chamber with more beads (where there are more interactions favoring energy dissipation and thus slowing the beads). In the end, one chamber will be mostly full and the other almost empty, thus resulting in what seems to be a very improbable distribution coming from the uniformly distributed situation at the start. The apparent result is that an ordered state has arisen from a disordered one, hence decreasing the total entropy, which would violate the second law of thermodynamics. However, this is only apparent at the macroscopic scale because detailed analysis of the heat involved in the collisions, the exchanges of energy, and the noisy energy source of the shaker, among other factors, reveals that the total entropy increases substantially. Thus, as mentioned above, as a result of diffusion/dissipation and a noisy input, a pattern originates that seems implausible at first glance but proves very probable once the detailed microscopic analysis is performed (a nice account of these phenomena can be found in [17]). It is conceivable that this argument applies to the emergence of life, which has also been thought of by many as an improbable event.
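The amplification of a small imbalance between the two chambers can be illustrated with a minimal numerical sketch. It is not the analysis of [16]: the flux function `flux`, its form n² exp(−b n²), the dissipation parameter `b`, and all numerical values are assumptions chosen for illustration. The only ingredient taken from the text is that beads escape more slowly from a crowded chamber, because collisions there dissipate more energy.

```python
import math


def flux(n, b):
    """Assumed escape rate from a chamber holding a fraction n of the beads.

    The factor exp(-b * n * n) mimics stronger dissipation (slower beads)
    in a more crowded chamber; b is a hypothetical dissipation strength.
    """
    return n * n * math.exp(-b * n * n)


def simulate_chambers(b, n1_start=0.51, dt=0.01, steps=100_000):
    """Euler-integrate the bead fraction n1 in chamber 1 (chamber 2 holds 1 - n1)."""
    n1 = n1_start
    for _ in range(steps):
        n2 = 1.0 - n1
        # Chamber 1 gains what chamber 2 loses, and loses its own outflow.
        n1 += dt * (flux(n2, b) - flux(n1, b))
    return n1


if __name__ == "__main__":
    print("strong dissipation:", simulate_chambers(b=8.0))
    print("weak dissipation:  ", simulate_chambers(b=2.0))
```

Under these assumptions, a 51/49 split grows until nearly all beads sit in one chamber when dissipation is strong, whereas the same split relaxes back to an even distribution when dissipation is weak.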

Competition, cooperation, and fluctuations as foundations of pattern formation

But how about the “conceptual” tree shown in Fig. 1d representing the evolutionary development of the two genes (or any other evolutionary tree, for that matter)? Can this be interpreted in the conceptual formalism of reaction–diffusion plus fluctuations? Indeed, Darwin’s theory of evolution can easily be cast into these terms. The selection of variation through reproduction [18] can be rephrased in terms of competition (selection), cooperation (reproduction), and fluctuations (variation): competition and cooperation among fluctuating sources of information lead to the selection (or transient stabilization) of patterns; in ecosystems, these are evolutionary patterns, but also, in principle, they could be the structural ones discussed previously, or even patterns of activity. For example, in neurophysiology, researchers have for many years recognized the fundamental importance of competition and cooperation between cellular networks in nervous systems (brains in particular [19, 20]), manifested in the match between inhibitory and excitatory connections shaping sensory receptive fields in the neocortex [21], targeting sensory input to the neocortex via the thalamus (center-surround inhibition), determining the gain in neural circuitries from mammals to invertebrates [22], and, in general, shaping information processing in the nervous system [23]. Continuing with the nervous system as an illustration of these phenomena, cooperation is another crucial element in brain information processing because cell-to-cell synaptic contacts are unreliable (synapses tend to fail, some cells will die, etc.). Therefore, the transmission of information (in the form of action potentials in neurons) will normally not be stable unless there exists a correlated (or synchronized) firing in a large number of neurons, which is needed to guarantee transfer of spikes from network to network. 
There is very extensive work on these synchronization mechanisms in nervous systems (to cite a few representative examples, see [24–28]). Thus, at some level, cooperativity in the form of synchronization of cellular activities guarantees reliable processing of information. But these are not new concepts: cooperation as a fundamental principle of biological systems in general was recognized long ago [29]. Equally important, the crucial role of fluctuations in the competition among neural networks should not be underestimated [30].

These close relatives, competition and cooperation, are ubiquitously found in natural phenomena and represent a fundamental principle of organization. As a small sample of instances, consider the competition and cooperation between biochemical and mechanical processes in phyllotaxis (the arrangement of leaves on a plant’s surface) [31]; or the competition between external forces (gravity, wind) and randomness in variables like angles between parent–child branches in the formation of botanical tree structures [32]; or the “chemical gardens”, tree-like-patterned structures that grow when soluble metal salts are placed in solutions containing silicates, structures that are shaped by the competition between internal pressure and membrane formation [33]; or in snowflake patterns, determined by the competition between the diffusion of water molecules and the properties of the solid–gas interface like surface tension. Hence, from phylogenetic diversity to formation of crystals, competition and cooperation lead to the selection of fluctuating patterns. The typical Langevin equation composed of a deterministic and a stochastic term [11], so widely used to describe natural phenomena, can be considered as an expression of the roles of these fundamental processes: competition/cooperation (the deterministic part of the equation, the “reaction”) and fluctuations (the noisy, stochastic term).
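In its simplest one-variable form, such a Langevin equation can be sketched as follows. The symbols ($x$ for the state variable, $f$ for the deterministic term, $\xi$ for the noise, and $D$ for the noise strength) are assumptions chosen for illustration, and Gaussian white noise is only one common choice for the stochastic term:

```latex
\frac{dx}{dt} = f(x) + \xi(t), \qquad
\langle \xi(t) \rangle = 0, \qquad
\langle \xi(t)\,\xi(t') \rangle = 2D\,\delta(t - t') .
```

In this reading, $f(x)$ carries the competition/cooperation (the “reaction”), while $\xi(t)$ carries the fluctuations.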

In essence, these processes depend on the interactions among the system’s constituents. Using the mathematical framework of evolutionary game theory, macroscopic spatial patterns of cooperative phenomena arising from microscopic interactions have been studied [34]. Hence, in the end, interactions are the source of pattern formation. Furthermore, interactions represent, basically, exchange of information: energy/matter redistribution.

Functional interactions: information exchange as energy/matter redistribution

The natural phenomena considered above could be conceptualized in terms of the essence of the processes of competition, cooperation, and fluctuations, which consists in interactions or the exchange of matter/energy. From chemical reactions to the formation of ecosystems, the exchange of matter or energy is the essence of the processes which may or may not occur depending upon the possibility of trading matter/energy among the system’s constituents. For instance, carbon and oxygen atoms can exchange energy in terms of electrons and form a covalent bond because their respective atomic structures allow for this exchange to take place. But carbon and argon do not have complementary electronic structures and thus cannot exchange energy—there is no interaction. The information exchanged is of course relative to what the units can “interpret”: an atom can “interpret” electronic arrangements and thus form chemical bonds with other atoms; a nerve cell can interpret the arrival of synaptic potentials and thus transfer information to another cell. The nature of the interpreter is accidental. Very restricted patterns (if any at all) can emerge from noninteracting units.

Functional interactions have been considered by some authors from an abstract theoretical perspective. A field theoretical approach to functional interactions between sources and sinks has been developed in terms of a physiological interpretation [35, 36] involving local effects through a diffusion term in the field equation governing the activity of neural tissue, while nonlocal effects are described by interaction terms. Based on this, G. Chauvet designed a theory of biological functional organization in terms of functional interactions, proposing that biological systems evolve to maximize their potential of organization of biological function, while at the same time obeying the principle of the growth of thermodynamic entropy due to their physical structures [37]. Hence, functional organization, described in terms of interactions between the constituent units of the system, is superposed on the structural physical organization.

Information transfer and the flow of energy

The essence of interactions is to exchange matter/energy, that is, information. There are several definitions of information, all equally acceptable depending upon the study that is undertaken. For the present purpose, there is no need to precisely define information, but perhaps it is most useful to look at it from an abstract perspective and consider information transfer, or exchange, as energy processing (exchange) resulting from any type of interaction, basically, the flow of energy. Because living systems are called “more complex” than nonliving, complexity could be an important aspect in characterizing the organic versus the inorganic. Several definitions of complexity have been advanced. Most interestingly, information has been regarded as the simplest unifying concept that covers the various views of complexity [38]. If the focus is on information, then, can biological information be quantified? Attempts to describe the information content in living systems go back to the mid-1950s, H. Quastler being an early exponent of these efforts [39]. Around the same time, H. Morowitz proposed a representation of the information content of an organism as the number of states of the system [40], somewhat similar to Rashevsky’s suggestion of information content as determined by that of its constituent molecules [41]. There are several information measures. Reference [42] provides an account on the relation between complexity and different information measures and claims to propose one variant (effective complexity) that is independent of the observer because, in general, information is a relative concept [38], in that it has meaning only when related to the cognitive structure of the receiver [43]. Looking at information from the aforementioned abstract perspective as the flow of energy, a link with a crucial concept proposed by H. Morowitz is established: he postulated, in the 1960s, that the flow of energy through a system acts to organize the system [44, 45]. 
Indeed, biological information is energy flow [44, 46]: metabolic pathways offer channels for energy/matter to flow, and cell–cell communication via molecular messengers provides further conduits for that flow. Not only in biological but also in inorganic systems, channels exist through which energy flows, just like the lightning in Fig. 1. Morowitz’s postulate is thus related to the ideas presented in previous sections on reaction/dissipation.

Maximization of information transfer

The basic proposal derived from all the previous considerations is that, in the natural world, there seems to be a tendency to increase information exchange. At an abstract (and intuitive) level of description, the tendency to maximize information exchange is equivalent to the tendency to maximize the number of interactions: the more interactions, the better the exchange of matter/energy. Both the organic and inorganic tend to a maximum of information exchange, maximizing the exchange of energy/matter, maximizing the number of interactions. But the organic (living) can achieve this maximization more efficiently than the inorganic: organic chemistry and the consequent development of a metabolism afford a better redistribution of energy/matter (information) than that based on inorganic mechanisms alone. Other investigators are pursuing the conditions of maximum information transfer, which is not the maximum information capacity with which Shannon’s formalism is concerned, and propose that maximizing information transfer will maximize the diversity of organized complexity (www.ucalgary.ca/ibi/files/ibi/The%20Open%20UniverseAbs5.pdf).

Is this equivalent to the maximization of entropy? If the exchange of information (energy/matter) increases the number of realizations of energy/matter distribution, perhaps an increase in entropy, at some level, can be expected. However, it is always difficult to relate the equilibrium (thermodynamical) entropy to open dissipative systems. Other means to define entropy in these conditions have been proposed [47]. Competition and cooperation result in complexity and the proposal has been advanced that, perhaps, complexity should substitute entropy and free energy as the fundamental property of open systems [48]. Let us say, for the time being, that the tendency towards maximization of information exchange is a fundamental characteristic of natural phenomena and the rest are details as to how this information exchange is achieved and maximized. Could this be the reason why cyclic behavior and synchronization phenomena are so prominent in nature?

Maximizing information exchange: a possible reason for the ubiquity of synchronization phenomena and cyclic behavior

Rhythms and synchronization phenomena pervade all aspects of the animate and inanimate [49]. It is even tempting to argue that, to a large extent, all natural phenomena arise from a central theme: the cycle. The study and consideration of cycles have occupied minds since the beginning of time, and in modern science these concepts were given more solid foundations in the works of J.C. Maxwell (on “governors”), C. Bernard (on feedback), and W.B. Cannon (on homeostasis), just to cite a few who contributed to the understanding of cyclic behavior.

The fundamental nature of cyclic phenomena is derived from another theorem by H. Morowitz, who provided what can be regarded as a most fundamental principle of nature: in steady state, the flow of energy from a source to a sink leads to at least one cycle in the system [50]. In this way, he was trying to address the apparent paradox that occurs when considering the second law of thermodynamics (tendency towards disorder) and organic evolution (increasing organization). Natural systems are normally far from equilibrium and subjected to flows of matter and energy, and it is these fluxes that constitute the basis of (cyclic) organization. Hence, from this theorem, whenever there is exchange of information (from source to sink), there will be a cycle. If the dissipation (the sink) and the source of energy (the source) are nonlinear, then a stable sustained oscillation of constant amplitude will emerge (see Fig. 2.8 in [49]). Nonlinearities plus Morowitz’s theorem give us rhythms. A cycle is, in essence, information exchange through the multiple interactions that constitute it. The phenomena of cyclic behavior and the related phenomenon of synchronization can be seen as two aspects of maximal information exchange because synchronization between oscillators offers temporal windows for the optimal transfer of information. A classical example is how the oscillations of neuronal activity and their synchronization favor information exchange between neuronal populations in nervous systems, discussed in [24]. Whether the system’s units are neurons or fireflies, synchrony optimizes the exchange of information in living systems. Similarly, in the nonliving, synchronization of cyclic behavior maximizes redistribution of energy.

If oscillating units (whatever the nature of their cyclic behavior may be) have the opportunity to interact and they are able to exchange information, then their mutual synchronization will almost surely occur. Being able to exchange information is understood here as being able to develop a “functional” interaction; for example, two neurons with similar intrinsic firing frequencies will communicate better than two neurons with very dissimilar frequencies, and if the oscillating units cannot exchange information at all (as between a firefly and a person clapping), then there will be little chance of synchronization. From this perspective, to ask whether two oscillators will synchronize is like asking whether two molecules will form a chemical bond: if their structure allows for an exchange of information (electrons), the molecules will bond; if the oscillators share some characteristics (frequencies of oscillations, etc.) and they can exchange information, they will synchronize. From this view, synchronization phenomena are so abundant in nature because many units are intrinsic oscillators (or at least are able to oscillate in one manner or another) and they interact, allowing for optimal information transfer.
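The dependence of synchronization on both similarity of frequencies and the ability to interact can be illustrated with two coupled phase oscillators. This is a minimal sketch using a Kuramoto-type sine coupling, which is a modeling choice made here and not a model taken from the text; the function name `phase_locked`, the coupling parameter, and all numerical values are hypothetical.

```python
import math


def phase_locked(omega1, omega2, coupling, dt=0.001, steps=200_000):
    """Return True if two sine-coupled phase oscillators end up phase-locked.

    omega1, omega2: intrinsic frequencies; coupling: interaction strength.
    Locking is judged by whether the phase difference stops drifting
    during the second half of the simulation.
    """
    theta1, theta2 = 0.0, 1.0
    half = steps // 2
    diff_at_half = 0.0
    for step in range(steps):
        # Each oscillator is pulled toward the phase of the other.
        d1 = omega1 + 0.5 * coupling * math.sin(theta2 - theta1)
        d2 = omega2 + 0.5 * coupling * math.sin(theta1 - theta2)
        theta1 += dt * d1
        theta2 += dt * d2
        if step == half:
            diff_at_half = theta2 - theta1
    drift = abs((theta2 - theta1) - diff_at_half)
    return drift < 1e-3


if __name__ == "__main__":
    print("similar frequencies:   ", phase_locked(1.0, 1.2, coupling=1.0))
    print("dissimilar frequencies:", phase_locked(1.0, 3.0, coupling=1.0))
    print("no interaction:        ", phase_locked(1.0, 1.2, coupling=0.0))
```

In this sketch the phase difference obeys dφ/dt = Δω − K sin φ, so the pair locks only when the frequency mismatch Δω does not exceed the coupling K; with no coupling at all, even similar oscillators drift apart.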

In sum, it can be conceived that synchronization phenomena are manifestations of the maximization of information exchange, resulting in a widespread redistribution of energy/matter. Furthermore, the nature of the interactions that create cycles represents the aforementioned processes of cooperation/competition, reaction–diffusion, and fluctuations: take for instance the origin of the fluctuating oscillations in calcium concentrations in astrocytes where calcium-induced calcium release (cooperation) is coupled to inositol trisphosphate production (reaction–dissipation) [51].

On extremal principles

Is the maximization of information transfer another optimizing principle? Optimizing principles have had a long history in science and engineering. The maximization or minimization of a cost function represents an approach, a standard procedure, to describe the evolution of natural phenomena. Early efforts in the search for fundamental principles, encompassing optimization as a guide to find representations of systems, arrived at the proposal that entropy is a most primitive concept and that its maximization provides the most unbiased representation of a system [52]. The structures of Fig. 1 have all been described in terms of optimizing principles, for example, invoking the minimization of total volume, therefore achieving the shortest topology connecting terminals in dendritic and axonic arborizations of neurons as well as river networks [53]. This study was done using fluid dynamics concepts, particularly local optimization laws that express the least-energy state of connected cords sharing common junctions (so-called triangle of forces law in vector mechanics); thus, at some level, neuronal arborization morphogenesis behaves like fluid dynamics. Further examples are the idea of maximizing photosynthesis (light interception) as a reason for the structure of trees and plants [32], the maximization of water uptake by roots to explain their fractal structure [54], the minimization of dissipated energy in numerical simulations of models describing landscape evolution [55], and the maximization of metabolic diversity increasing the stability of ecosystems [56]. In general, many optimizing principles revolve around the notion of maximizing the surface areas so that more optimal exchange of energy/matter (information) can take place. 
These considerations are complementary to those aforementioned in Section 2, about these patterns resulting from gradients and fluctuations, because motion down a gradient involves exchange of information in numerous ways, which leads to increasing complexity [57], or at least to a larger number of rearrangements of interactions.

Other authors have articulated, in one way or another, the idea of the maximization of information (exchange) as a fundamental characteristic of the organic world and the consequent maximal redistribution of energy/matter. Some exponents are E. Smith [46], who wrote that “many invariant properties of life are linked directly to increments of information”; H. Haken [58] with his principle of maximum information; M.-W. Ho [59], declaring that open systems able to store energy will evolve to maximize energy storage; T. Shinbrot and F.J. Muzzio [17], who concluded that “the physically realized states that nature chooses tend to correspond to the ones that maximize the number of possible particle rearrangements”; and finally, H. Morowitz and E. Smith, claiming that “the continuous generation of sources of free energy by abiotic processes may have forced life into existence as a means to alleviate the buildup of free energy...” [45].

Does this all mean that the evolution and development of natural phenomena, and life in particular, are governed by extremal principles? Can optimizing principles be considered as cause of processes or as a convenient method of describing a system? Some have discussed whether properties believed to reside in natural phenomena determine the workings of nature or whether these strictly belong to the observer. This is not the place to engage in a discussion of this matter, but perhaps, as Heinz von Foerster concluded while pondering on properties like order/disorder, these concepts may reflect more our abilities (or inabilities) to characterize the system than being inherent in it [60].

Concluding remarks

Science strives to build on solid foundations where statements are supposed to be either true or false. Conceivably, these dichotomies “tend” to fade away when thinking in terms of tendencies [6]. Most of what we observe and measure in natural phenomena are tendencies rather than definite and unambiguous processes. This is apparent when some thought is devoted to any particular observation or measurement: for instance, a neuronal action potential may be one of the few definite phenomena, but beyond this only a tendency of the neuron to fire with one or another firing pattern, in different conditions, can be described since the patterns are always variable. The main idea presented in this narrative is that there is a tendency to maximize information exchange, that is, a tendency to more widespread energy distribution. If there is a channel for communication that allows the transfer of matter/energy, then there will be an interaction and possibly synchronization phenomena, whatever optimizes the transfer of information. Just as a set of gas molecules will occupy the maximal volume available to them, energy/matter will redistribute in the widest manner, for no particular reason other than that this is the most probable outcome. The rest of the different natural phenomena and their mechanisms are but details as to how this optimal information exchange is achieved. It is tempting to envisage living organisms as a result of the widespread distribution of energy. In this view, one organism is but an extension of other organisms, for their exchange of information dictates that some part of one is assimilated into the other.

There is now a call to focus on how the flow of information is managed in living systems [61], and the need for a theory linking information and energy has been put forward [46]. Then, perhaps the time has come to pay close attention to more abstract perspectives based on the handling of information, from which a global collective view of natural phenomena can be formulated. There may be, then, substantial significance derived from the study of interactions from an abstract perspective, for, after all, dynamical bifurcations in natural phenomena are prominent because crises (critical bifurcations) result from the progressive buildup of interactions: a representation of cooperative phenomena via interactions. The broad framework of self-organization (that has been advocated as a fundamental principle of pattern formation [53]) is intimately related to these critical bifurcations resulting from interactions.

Biological processes, in general, support a widespread redistribution of energy/matter (information): biological rates are limited by the rate of energy and matter distribution, which requires minimizing the energy and time needed to distribute resources [62]. Organic chemistry and the consequent development of a metabolism afford a better redistribution of information than inorganic mechanisms alone. In the words of Morowitz and Smith [45]: “metabolism formed in this way... may be a unique or at least optimal channel for accessing these particular sources of energy”. Not that there is anything singular about carbon-based chemistry: the fact that it arose on this planet is due to the particular conditions present in the solar system and on the Earth. The prediction is that any other chemistry will develop to its maximal realization on any planet that supports it. In this view, and contrary to the opinion that the development of life is improbable, the emergence of what is called life (starting with a metabolism, which is after all the foundation of life on this planet) is a manifestation of a very probable phenomenon, or state, derived from the maximization of the redistribution of information (matter/energy). Living processes contribute more efficiently to the maximization of information transfer, that is, to a more widespread distribution of matter/energy. Other authors have also proposed the notion of life as an inevitable occurrence [45].

Much emphasis has been placed on information. Can it be concluded that information, in a general sense, is better suited than other notions to describe the features of living systems? Some would argue that information (at least in Shannon’s sense) is better suited than entropy to describe nonequilibrium systems and that it plays the same role there as entropy does in equilibrium systems. Others dispute this idea: H. Haken, for instance, is reluctant to consider the maximization of entropy or information as a cause of processes [58]. He poses the question, asked by others as well, of whether there exists any potential function that drives living systems. Haken’s perspective emphasizes the fundamental tendency in natural phenomena toward the emergence of coherence, of macroscopic order. Along the lines of the arguments on synchronization in previous paragraphs, the emergence of coordination is a very probable result of the tendency to maximize information exchange. There could be value in the concept of information distance [63], since information content can be quantified in different manners [39–41].
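
For reference, the Shannon quantities alluded to above have standard definitions. The symbols X, Y, and p are notational assumptions that do not appear in the original text, and the text does not commit to any one of these measures.

```latex
H(X) = -\sum_{x} p(x)\,\log p(x), \qquad
I(X;Y) = \sum_{x,y} p(x,y)\,\log\frac{p(x,y)}{p(x)\,p(y)}
       = H(X) + H(Y) - H(X,Y).
```

Here H(X) is the Shannon entropy of a variable X, and the mutual information I(X;Y) is one common way to quantify how much two interacting elements share: it vanishes when X and Y are statistically independent and grows as their states become coordinated.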

Patterns emerge from the exchange of information and the presence of fluctuations, as in the reaction–diffusion paradigm. At the same time, as mentioned above, Morowitz’s theorem links the exchange of information (energy from a source to a sink) to cyclic behavior that fluctuates over time, and the optimization of information transfer manifests itself as synchronization phenomena. The fascination with patterns in natural phenomena dates back to antiquity, and unifying principles underlying pattern formation have been sought for centuries. Information transfer is an organizing “force” [15, 64]. The eloquent words of E.J. Ambrose have a point: “The matter in life has no permanence; only the pattern according to which it is arranged or organized has permanence. Life is basically a pattern of organized activity” [65]. His last brief statement can be further simplified, using the ideas discussed in this work, to “life is organized information exchange” or, in even simpler words, “everything consists in information exchange.”

Acknowledgements

The author is grateful to Professor Christopher Cherniak for reviewing the manuscript and providing advice.

References

- 1. Kaneko, K.: Life: An Introduction to Complex Systems Biology. Springer, Berlin (2006)

- 2. Laurent, G.: What does ‘understanding’ mean? Nat. Neurosci. 3, 1211 (2000). doi:10.1038/81495

- 3. Rosen, R.: Life Itself: A Comprehensive Inquiry into the Nature, Origin, and Fabrication of Life. Columbia University Press, New York (1991)

- 4. Rashevsky, N.: A contribution to the search of general mathematical principles in biology. Bull. Math. Biophys. 20, 71–92 (1958). doi:10.1007/BF02476561

- 5. Kelso, J.A.S., Engstrøm, D.A.: The Complementary Nature. MIT Press, Cambridge (2006)

- 6. Kelso, J.A.S., Tognoli, E.: Toward a complementary neuroscience: metastable coordination dynamics of the brain. In: Perlovsky, L.I., Kozma, R. (eds.) Neurodynamics of Cognition and Consciousness. Springer, Berlin (2007)

- 7. Cherniak, C.: Local optimization of neuron arbors. Biol. Cybern. 66, 503–510 (1992). doi:10.1007/BF00204115

- 8. Paik, K., Kumar, P.: Emergence of self-similar tree network organization. Complexity 13, 30–37 (2008). doi:10.1002/cplx.20214

- 9. Nicolis, G., Prigogine, I.: Self-organization in Non-equilibrium Systems. Wiley, New York (1977)

- 10. Turing, A.M.: The chemical basis of morphogenesis. Philos. Trans. R. Soc. Lond. B 237, 37–72 (1952). doi:10.1098/rstb.1952.0012

- 11. Sornette, D., Zhang, Y.-C.: Non-linear Langevin models of geomorphic erosion processes. Geophys. J. Int. 113, 382–386 (1993). doi:10.1111/j.1365-246X.1993.tb00894.x

- 12. Ramon y Cajal, S.: Degeneracion y Regeneracion del Sistema Nervioso. Moya, Madrid (1913–1914)

- 13. Purves, D., Lichtman, J.: Principles of Neural Development. Sinauer, Sunderland (1985)

- 14. Cross, M.C., Hohenberg, P.C.: Pattern formation outside of equilibrium. Rev. Mod. Phys. 65, 851–1112 (1993). doi:10.1103/RevModPhys.65.851

- 15. Velarde, M.G., Nekorkin, V.I., Kazantsev, V.B., Ross, J.: The emergence of form by replication. Proc. Natl. Acad. Sci. U. S. A. 94, 5024–5027 (1997). doi:10.1073/pnas.94.10.5024

- 16. Schlichting, H.J., Nordmeier, V.: Strukturen im Sand – Kollektives Verhalten und Selbstorganisation bei Granulaten. Math. Naturwissenschaften 49, 323–332 (1996)

- 17. Shinbrot, T., Muzzio, F.J.: Noise to order. Nature 410, 251–258 (2001). doi:10.1038/35065689

- 18. Darwin, C.: On the Origin of Species by Means of Natural Selection. John Murray, London (1859)

- 19. Amari, S., Arbib, M.A.: Competition and cooperation in neural nets. In: Metzler, J. (ed.) Systems Neuroscience. Academic, New York (1977)

- 20. Baron, R.J.: The Cerebral Computer. Lawrence Erlbaum, New Jersey (1987)

- 21. Okun, M., Lampl, I.: Instantaneous correlation of excitation and inhibition during ongoing and sensory-evoked activities. Nat. Neurosci. 11, 535–537 (2008). doi:10.1038/nn.2105

- 22. Olsen, S.R., Wilson, R.I.: Lateral presynaptic inhibition mediates gain control in an olfactory circuit. Nature 452, 956–960 (2008). doi:10.1038/nature06864

- 23. Rabinovich, M., Volkovskii, A., Lecanda, P., Huerta, R., Abarbanel, H.D.I., Laurent, G.: Dynamical encoding by networks of competing neuron groups: winnerless competition. Phys. Rev. Lett. 87, 068102 (2001). doi:10.1103/PhysRevLett.87.068102

- 24. Fries, P.: A mechanism for cognitive dynamics: neuronal communication through neuronal coherence. Trends Cogn. Sci. 9, 474–480 (2005). doi:10.1016/j.tics.2005.08.011

- 25. Castelo-Branco, M., Neuenschwander, S., Singer, W.: Synchronization of visual responses between the cortex, lateral geniculate nucleus, and retina in the anesthetized cat. J. Neurosci. 18, 6395–6410 (1998)

- 26. Harris, K.D.: Neural signatures of cell assembly organization. Nat. Rev. Neurosci. 6, 399–407 (2005). doi:10.1038/nrn1669

- 27. Perez Velazquez, J.L.: Brain, behaviour and mathematics: are we using the right approaches? Physica D 212, 161–182 (2005). doi:10.1016/j.physd.2005.10.005

- 28. Perez Velazquez, J.L., Wennberg, R. (eds.): Coordinated Activity in the Brain: Measurements and Relevance to Brain Function and Behaviour. Springer, New York (2009)

- 29. Maynard Smith, J., Szathmary, E.: The Major Transitions in Evolution. Freeman, Oxford (1995)

- 30. Wilson, M.T., Steyn-Ross, M.L., Steyn-Ross, D.A., Sleigh, J.W.: Going beyond a mean-field model for the learning cortex: second-order statistics. J. Biol. Phys. 33, 213–246 (2007). doi:10.1007/s10867-008-9056-5

- 31. Newell, A.C., Shipman, P.D., Sun, Z.: Phyllotaxis: cooperation and competition between mechanical and biochemical processes. J. Theor. Biol. 251, 421–439 (2008). doi:10.1016/j.jtbi.2007.11.036

- 32. Plucinski, M., Plucinski, S., Rodriguez Iturbe, I.: Consequences of the fractal architecture of trees on their structural measures. J. Theor. Biol. 251, 82–92 (2008). doi:10.1016/j.jtbi.2007.10.042

- 33. Pantaleone, J., Toth, A., Horvath, D., Rother McMahan, J., Smith, R., Butki, D., Braden, J., Mathews, E., Geri, H., Maselko, J.: Oscillations of a chemical garden. Phys. Rev. E 77, 046207 (2008). doi:10.1103/PhysRevE.77.046207

- 34. Langer, P., Nowak, M.A., Hauert, C.: Spatial invasion of cooperation. J. Theor. Biol. 250, 634–641 (2008). doi:10.1016/j.jtbi.2007.11.002

- 35. Chauvet, G.A.: Non-locality in biological systems results from hierarchy. J. Math. Biol. 31, 475–486 (1993)

- 36. Chauvet, G.A.: An n-level field theory of biological networks. J. Math. Biol. 31, 771–795 (1993). doi:10.1007/BF00168045

- 37. Chauvet, G.A.: Hierarchical functional organization of formal biological systems: a dynamical approach. II. The concept of non-symmetry leads to a criterion of evolution deduced from an optimum principle of the (O-FBS) sub-system. Philos. Trans. R. Soc. Lond. B 339, 445–461 (1993). doi:10.1098/rstb.1993.0041

- 38. Standish, R.K.: Concept and definition of complexity. arXiv:0805.0685v1 [nlin.AO] (2008). http://arxiv.org/abs/0805.0685

- 39. Quastler, H.: Information Theory in Biology. University of Illinois Press, Urbana (1953)

- 40. Morowitz, H.J.: Some order-disorder considerations in living systems. Bull. Math. Biophys. 17, 81–86 (1955). doi:10.1007/BF02477985

- 41. Rashevsky, N.: Life, information theory, and topology. Bull. Math. Biophys. 17, 229–235 (1955). doi:10.1007/BF02477860

- 42. Gell-Mann, M., Lloyd, S.: Information measures, effective complexity, and total information. Complexity 2, 44–52 (1996). doi:10.1002/(SICI)1099-0526(199609/10)2:1<44::AID-CPLX10>3.0.CO;2-X

- 43. von Foerster, H.: Notes on an epistemology for living things. In: Understanding Understanding. Essays on Cybernetics and Cognition. Springer, New York (2003)

- 44. Morowitz, H.J.: Energy Flow in Biology. Academic, New York (1968)

- 45. Morowitz, H.J., Smith, E.: Energy flow and the organization of life. Complexity 13, 51–59 (2007). doi:10.1002/cplx.20191

- 46. Smith, E.: Thermodynamics of natural selection I: energy flow and the limits on organization. J. Theor. Biol. 252, 185–197 (2008). doi:10.1016/j.jtbi.2008.02.010

- 47. Smith, E.: Thermodynamic dual structure of linearly dissipative driven systems. Phys. Rev. E 72, 036130 (2005). doi:10.1103/PhysRevE.72.036130

- 48. Ben-Jacob, E., Levine, H.: The artistry of nature. Nature 409, 985–986 (2001). doi:10.1038/35059178

- 49. Pikovsky, A., Rosenblum, M., Kurths, J.: Synchronization: A Universal Concept in Nonlinear Sciences. Cambridge University Press, Cambridge (2001)

- 50. Morowitz, H.J.: Physical background of cycles in biological systems. J. Theor. Biol. 13, 60–62 (1966). doi:10.1016/0022-5193(66)90007-5

- 51. Lavrentovich, M., Hemkin, S.: A mathematical model of spontaneous calcium(II) oscillations in astrocytes. J. Theor. Biol. 251, 553–560 (2008). doi:10.1016/j.jtbi.2007.12.011

- 52. Jaynes, E.T.: Information theory and statistical mechanics. Phys. Rev. 106, 620–630 (1957). doi:10.1103/PhysRev.106.620

- 53. Cherniak, C., Changizi, M., Kang, D.W.: Large-scale optimization of neuron arbors. Phys. Rev. E 59, 6001–6009 (1999). doi:10.1103/PhysRevE.59.6001

- 54. Biondini, M.: Allometric scaling laws for water uptake by plant roots. J. Theor. Biol. 251, 35–59 (2008). doi:10.1016/j.jtbi.2007.11.018

- 55. Banavar, J.R., Colaiori, F., Flammini, A., Maritan, A., Rinaldo, A.: Scaling, optimality, and landscape evolution. J. Stat. Phys. 104, 1–48 (2001). doi:10.1023/A:1010397325029

- 56. Lopez Villalta, J.S.: A metabolic view of the diversity–stability relationship. J. Theor. Biol. 252, 39–42 (2008). doi:10.1016/j.jtbi.2008.01.015

- 57. Schneider, E.D., Sagan, D.: Into the Cool: Energy Flow, Thermodynamics, and Life. University of Chicago Press, Chicago (2005)

- 58. Haken, H.: Information and Self-organization. Springer, Berlin (1998, 2006)

- 59. Ho, M.-W.: Bioenergetics and the coherence of organisms. Neural Network World 5, 733–750 (1995). www.i-sis.org.uk

- 60. von Foerster, H.: Disorder/Order: discovery or invention? In: Understanding Understanding. Essays on Cybernetics and Cognition. Springer, New York (2003)

- 61. Nurse, P.: Life, logic and information. Nature 454, 424–426 (2008). doi:10.1038/454424a

- 62. West, G.B., Brown, J.H., Enquist, B.J.: A general model for the origin of allometric scaling laws in biology. Science 276, 122–126 (1997). doi:10.1126/science.276.5309.122

- 63. Bennett, C.H., Gacs, P., Li, M., Vitanyi, P.M.B., Zurek, W.H.: Information distance. IEEE Trans. Inf. Theory 44, 1407–1423 (1998). doi:10.1109/18.681318

- 64. Nekorkin, V.I., Kazantsev, V.B., Rabinovich, M.I., Velarde, M.G.: Controlled disordered patterns and information transfer between coupled neural lattices with oscillatory states. Phys. Rev. E 57, 3344 (1998). doi:10.1103/PhysRevE.57.3344

- 65. Ambrose, E.J.: The Nature and Origin of the Biological World. Ellis Horwood, Chichester (1982)