Abstract

The issue of whether speech is supported by the same neural substrates as non-speech vocal-tract gestures has been contentious. In this fMRI study we tested whether producing non-speech vocal tract gestures in humans shares the same functional neuroanatomy as producing non-sense speech syllables. Production of non-speech vocal tract gestures, devoid of phonological content but similar to speech in that they had familiar acoustic and somatosensory targets, was compared to the production of speech syllables without meaning. Brain activation related to overt production was captured with BOLD fMRI using a sparse sampling design for both conditions. Speech and non-speech were compared using voxel-wise whole brain analyses, and ROI analyses focused on frontal and temporoparietal structures previously reported to support speech production. Results showed substantial activation overlap between speech and non-speech in these regions. Although non-speech gesture production showed greater extent and amplitude of activation in the regions examined, both speech and non-speech showed comparable left laterality in activation for both target perception and production. These findings suggest a more general role for the previously proposed “auditory dorsal stream” in the left hemisphere: supporting the production of vocal tract gestures that are not limited to speech processing.

Keywords: sensory-motor interaction, auditory dorsal stream, functional magnetic resonance imaging (fMRI)

Human speech involves precise, well-coordinated laryngeal and orofacial movements, likely dependent on neural networks encompassing frontal motor and temporoparietal auditory regions (Hickok and Poeppel, 2004). A common auditory dorsal pathway involving motor responses constrained by auditory experience has been proposed (Warren et al., 2005) that links the auditory processing of speech sounds with motor gestures, enabling accurate sound production (Hickok and Poeppel, 2007). Such auditory-motor interactions may support speech development in children, when speech motor gestures are tuned to, or guided by auditory speech targets (Hickok and Poeppel, 2004). The structures involved in the auditory dorsal stream, which is lateralized to the left hemisphere, may not be specialized for human speech but likely support other types of learned volitional vocal productions with auditory targets (Bottjer et al., 2000; Metzner, 1996; Pa and Hickok, 2008; Smotherman, 2007; Zarate and Zatorre, 2005).

Many studies have indicated that cerebral activation for speech perception can be distinguished from that for non-speech perception, particularly in the superior temporal regions (Benson et al., 2001; Binder et al., 2000; Liebenthal et al., 2005; Scott et al., 2000; Whalen et al., 2006). In some cases, the speech stimuli contained lexical-semantic information involving higher level language processing, greater in the left hemisphere. On the other hand, the non-speech stimuli often did not involve vocal tract gestures and were either non-vocal simple tones, non-producible synthetic sounds or sounds from nature (Benson et al., 2006; Benson et al., 2001; Binder et al., 2000) rather than non-speech vocal tract gestures such as sigh, click, and cry. In those instances, differences in brain activation found for speech and non-speech sound processing could have arisen because the non-speech stimuli did not contain oral motor or vocal targets and were therefore less likely to engage motor production circuits such as those involved in speech. One study did use vocally produced non-speech sounds and found that speech sounds activated most parts of the temporal lobe on both sides of the brain, while the right superior temporal lobe was activated to a greater degree by non-speech vocal sounds (i.e., sighs, laughs, cries) (Belin et al., 2002). In another study, however, when subjects performed sequence manipulation tasks with speech involving phoneme processing and non-speech involving oral sounds such as humming, comparable activation in the left posterior inferior frontal and superior temporal regions was found for both speech and non-speech (Gelfand and Bookheimer, 2003). Perhaps if non-speech vocal tract gestures involve segment sequencing, resulting in auditory and somatosensory feedback as is the case in speech, they will activate regions comparable to those involved in speech processing.

Clinical lesion and intraoperative studies, as well as functional imaging studies have provided a wealth of data on neural structures supporting speech motor production. Apraxia of speech (AOS), characterized by difficulty in speech motor planning particularly for complex syllables, has been reported to result following damage to the anterior insula in the language-dominant hemisphere (Dronkers, 1996) as well as left-sided infarctions affecting blood supply to the middle cerebral artery, such as the posterior inferior frontal gyrus (Hillis et al., 2004). Speech execution in terms of rate, intonation, articulation, voice volume, quality, and nasality can be adversely affected in various dysarthrias, which can result from injuries to the basal ganglia (Schulz et al., 1999), thalamus (Ackermann et al., 1993; Canter and van Lancker, 1985), cerebellum (Kent et al., 1979), or cerebral cortex (Ozsancak et al., 2000; Ziegler et al., 1993).

Electrical stimulation of the exposed motor strip representation of face/mouth on either hemisphere controls vocalization (Penfield and Roberts, 1959), and stimulation of left inferior dorsolateral frontal structures can lead to speech arrest and inability to repeat articulatory gestures (Ojemann, 1994). Neuroimaging studies of normal speech motor control (Bohland and Guenther, 2006; Riecker et al., 2008; Soros et al., 2006; Wise et al., 1999), using a variety of speech tasks, have roughly converged on a “minimal network for overt speech production”, including the “mesiofrontal areas, intrasylvian cortex, pre- and post-central gyrus, extending rostrally into posterior parts of the left inferior frontal convolution, basal ganglia, cerebellum, and thalamus” (Riecker et al., 2008).

One study found an opposite pattern of lateralization in the sensorimotor cortex during speech production and production of tunes (articulation constant; i.e., “la” while singing the melody), with the former eliciting predominantly left-sided activity and the latter eliciting activity predominantly on the right (Wildgruber et al., 1996). Similarly, in a follow-up study by the same group, opposite laterality effects were found when comparing speech and non-speech (singing) in the insula, motor cortex, and the cerebellum (Riecker et al., 2000). From these studies, however, it is still not clear whether singing or other non-speech gestures would be supported bilaterally or with right hemisphere dominance, different from left-lateralized speech production. This is because non-speech tasks such as singing melodies with a constant vowel or consonant-vowel syllable were not comparable to speech in the amount of sequencing required or the variety of vocal tract and oral gestures required for production.

In this study we sought to test whether volitional production of non-speech vocal tract gestures would be supported by comparable functional neuroanatomy as speech production. Non-speech production involved volitional vocal tract gestures such as whistle, cry, sigh, and cough, which have previously learned auditory targets, and require sensory-motor integration for accurate production as in the case of speech. We hypothesized that speech and non-speech would involve the same regions of activation when compared on whole brain analyses and in brain regions involved in speech. Second, we hypothesized no differences in laterality of activity. Third, we hypothesized that although non-speech targets would activate regions involved in the production of speech sounds, activation levels in these regions would be greater, as volitional production of non-speech oral-motor gesture sequences may be more novel and involve greater effort in producing the oral-motor gestures compared to speech.

Methods

Participants

The participants were 34 healthy adults (17 females) aged 18–57 (mean = 37 years), right-handed on the Edinburgh handedness inventory (Oldfield, 1971), native English speakers, who scored within 1 standard deviation of the age-adjusted mean on speech, language, and cognitive testing. All subjects were free of communication, neurological or medical disorders, passed audiometric screening, and had normal structural MRI scans when examined by a radiologist. All subjects signed an informed consent form approved by the Institutional Review Board of the National Institute of Neurological Disorders and Stroke. All were paid for their participation.

Procedure

Each trial of the experiment started with the presentation of pairs of either speech or non-speech targets, which required repetition (overt production) of the target after a delay period (Figure 1). All the stimuli were previously recorded, using the same female speaker. Five different target pairs were randomly presented for the speech and non-speech conditions. The speech targets were pairs of meaningless consonant-vowel-consonant syllables /bem/-/dauk/, /hik/-/lΛd/, /saip/-/kuf/, /lok/-/chim/, and /raig/-/sot/, devoid of lexicality but following the rules of English phonology. Because our intention was to contrast brain activation for speech and non-speech vocal tract gestures, it was important to control for lexical/semantic differences. Therefore, we only used speech targets that did not have lexical/semantic reference.

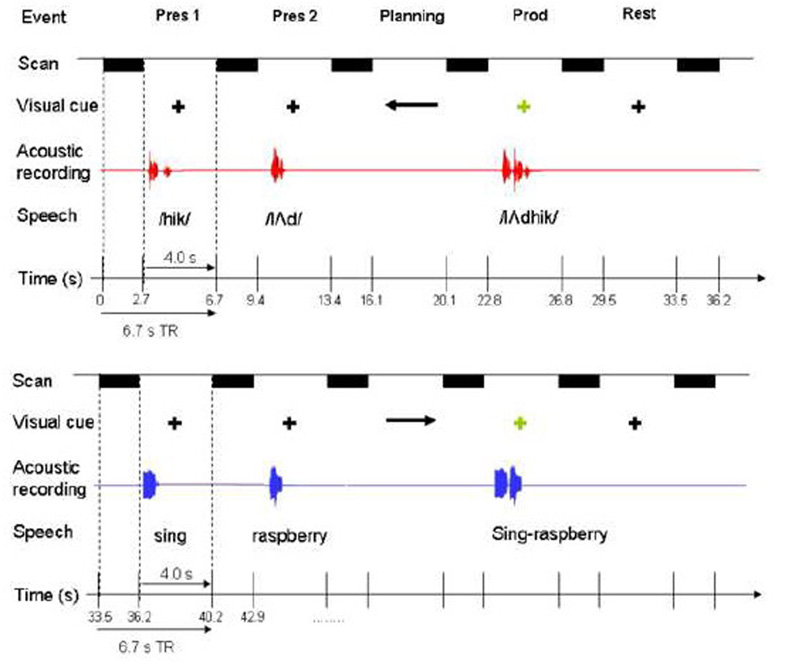

Figure 1.

Experiment outline. Here one speech trial (upper panel) and one non-speech trial (lower panel) are illustrated. Speech and non-speech trials were randomly presented. Each trial consisted of two target presentations (pres 1, pres 2), planning, production (prod), and rest, each presented/performed during a 4 second silent period, which was followed by 2.7 seconds of scanning. Note that only the first of the two responses associated with target presentation (scan following “pres 1”) was used for perception analysis. See text for more detail on the experiment paradigm.

We developed our non-speech gestures to include vocal tract gestures that involved sound targets but were devoid of phonological content. In addition we developed sequences of these gestures so that we had pairs of targeted vocal tract gestures parallel to the nonsense speech syllables. The non-speech targets were pairs of sounds of orofacial and vocal tract gestures: cough-sigh, sing (“/a/” on a tone)-raspberry, kiss-snort, laugh-tongue click, whistle-cry. All non-speech targets could be easily reproduced by each subject, yet involved complex oral motor sequencing without the phonemic processing typical of speech. The non-speech and speech stimuli were similar in duration (mean speech = 820 ms (SD = 136), mean non-speech = 916 ms (SD = 142)) and root-mean-square power (mean speech = .15 (SD = .04), mean non-speech = .12 (SD = .07)), with no statistically significant difference (p > .05). The speech and non-speech targets did differ, however, in acoustic and motor complexity; speech included more transients and smaller articulatory gestures, whereas non-speech involved a greater variety of motor gestures, including more forceful glottal attack (cry, cough), lip closures (kiss), bilabial bursts (raspberries), and tongue thrusts (tongue click), which possibly required more effort than articulating nonsense syllable sequences.

The target presentation phase was followed by a planning phase, when the subjects were visually cued that their upcoming production of the two stimuli should either be in the same order (right arrow), or in the reversed order (left arrow) from the presented pair. Subjects were instructed beforehand not to make any oral movements during this time period. This design separated motor planning from motor production, as the onset of production was signaled by a fixation cross replacing the arrow from the planning phase. The cross served as the “go” signal for subjects to produce the previously planned speech or non-speech response (Figure 1).

Auditory and visual stimuli were delivered using Eprime software (version 1.2, Psychology Software Tools, Inc.) running on a PC, which synchronized each trial with functional image acquisition. Sound was delivered binaurally through MRI-compatible headphones (Silent Scan™ Audio Systems, Avotec Inc., Stuart, FL). The auditory stimuli were set at a comfortable volume level for each subject before the experiment and remained constant throughout the experimental runs. Subjects’ productions were monitored and recorded using an MR-compatible microphone attached to the headphones (Silent Scan™ Audio Systems, Avotec Inc., Stuart, FL).

All subjects underwent a training session on the day of the experiment to familiarize them with the stimuli and tasks. Subjects were able to produce both speech and non-speech stimuli without difficulty. Ten speech and ten non-speech trials were randomly presented in each run, and a total of three runs were completed for each subject, resulting in 60 target presentation responses (only the first of the two presentations in each trial was taken for analysis) and 60 production responses, each set containing 30 speech and 30 non-speech stimuli.

Image Acquisition

All images were obtained from a 3.0 Tesla GE Signa scanner equipped with a standard head coil. Subjects’ head movements were minimized using padding and cushioning of the head inside the head coil. A gradient echo-planar pulse sequence was used for functional image acquisition (TE=30 ms, TR=6.7 s, FOV=240 mm, 6 mm slice thickness, 23 contiguous sagittal slices). With an event-related, sparse sampling design (Birn et al., 1999; Eden et al., 1999; Hall et al., 1999), the presentation of auditory stimuli and the planning and production phases took place while the scanner was transiently silent, with scanning beginning 4 seconds later. Sparse sampling minimized scanner noise and movement-related susceptibility artifacts. In this experiment, the scans were collected over 2.7 seconds within a TR of 6.7 seconds, leaving a 4 second silent period for auditory stimulus delivery and overt production (Figure 1). High-order shimming before echo-planar image acquisition optimized the homogeneity of the magnetic field across the brain and minimized distortions. A high-resolution T1-weighted anatomical image was also acquired for registration with the functional data, using a 3D inversion recovery prepared spoiled gradient-recalled sequence (3D IR-Prep SPGR; TI=450 ms, TE=3.0 ms, flip angle=12 degrees, bandwidth=31.25 kHz, FOV=240 mm, matrix 256×256, 128 contiguous axial slices).
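The timing of the sparse sampling scheme can be laid out as a short sketch. The 4 s silent window, the 2.7 s acquisition, and the list of phases come from the text and the Figure 1 caption; the position of the rest phase within a trial and the function name `trial_timeline` are assumptions made for illustration only.

```python
SILENT_S = 4.0             # silent window for stimulus delivery or response (from the text)
SCAN_S = 2.7               # volume acquisition time (from the text)
TR_S = SILENT_S + SCAN_S   # 6.7 s per phase

# Phase order follows the Figure 1 caption; placing "rest" last is an assumption.
PHASES = ["pres 1", "pres 2", "planning", "production", "rest"]


def trial_timeline(trial_start_s=0.0):
    """Return (phase, silent_onset, scan_onset, scan_end) in seconds for one trial."""
    rows = []
    for i, phase in enumerate(PHASES):
        silent_onset = trial_start_s + i * TR_S
        scan_onset = silent_onset + SILENT_S
        rows.append((phase, silent_onset, scan_onset, scan_onset + SCAN_S))
    return rows


for phase, silent_onset, scan_onset, scan_end in trial_timeline():
    print(f"{phase:<10} silent from {silent_onset:5.1f} s | scan {scan_onset:5.1f}-{scan_end:5.1f} s")
```

Under these assumptions one trial spans five TRs, so each scan samples the response to the event that occurred in the silent window immediately before it.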

Data Processing

Image preprocessing and all subsequent data analyses were carried out using Analysis of Functional Neuroimages (AFNI) software (Cox, 1996). The first four volumes were excluded from analysis to allow for initial stabilization of the fMRI signal. To correct for small head movements, each volume from the three functional runs was registered to the volume collected closest to the high-resolution anatomical scan using heptic polynomial interpolation. The signal in each voxel was converted to percent signal change by dividing the hemodynamic response amplitude at each time point by the mean amplitude of all the time points for that voxel from the same run, and multiplying by 100. The functional images from each run were then concatenated into one 3D+time file, and subsequently spatially smoothed using a 6 mm full-width half-maximum Gaussian filter.
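The normalization step described above amounts to a per-voxel, per-run scaling. The sketch below shows that arithmetic for a single voxel in plain Python; it is not the AFNI command used in the study, and the function name and sample values are hypothetical.

```python
def percent_of_run_mean(timeseries):
    """Scale one voxel's time series from one run so that its mean equals 100."""
    run_mean = sum(timeseries) / len(timeseries)
    if run_mean == 0:
        raise ValueError("voxel has zero mean signal; mask it out before scaling")
    return [100.0 * value / run_mean for value in timeseries]


raw = [980.0, 1000.0, 1020.0, 1000.0]   # made-up scanner units for one voxel
scaled = percent_of_run_mean(raw)
print(scaled)   # values fluctuate around 100; 102 means 2% above the run mean
```

After this scaling, regression coefficients can be read directly as percent signal change relative to the run mean.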

The use of sparse sampling that captured only a narrow window near the peak of the hemodynamic response (HDR) ensured that task specific responses were sampled with minimal hemodynamic overlap. A rest period of 6.7 seconds with scanning preceded the first target presentation to further reduce any possible effects of motor planning and execution on the target presentation response. In addition, only data from the first of the two target presentation trials were used for target perception analysis so as to include primarily perception and not planning in the scanning during target perception.

The HDR for speech and non-speech planning responses, when modeled as a gamma variate function from visual cue onset, would have had negligible influence on the acquisition of the following production HDR, because data acquisition for production would have occurred at approximately 10 seconds into the HDR of planning, at which time the amplitude of the planning HDR was modeled to have been at 5% of the peak response. The visual cue for production was presented at the tail end of the planning HDR, and production occurred at an average of 500 ms after the cue, with average duration of 820 ms in speech and 916 ms for non-speech. One production HDR could be expected to return to baseline by approximately 12–13 seconds following visual cue to produce. There was no task following production, so the production HDR is likely to have had little if any influence on the following perception HDR.
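The size of the residual planning response at the time of the production scan can be checked with a small calculation. The text does not give the gamma variate parameters, so the shape and scale values below (b = 8.6, c = 0.547, a commonly used parameterization of the form t^b · exp(−t/c)) are an assumption; with them, the response about 10 seconds after cue onset comes out at a few percent of its peak, in line with the roughly 5% stated above.

```python
import math

# ASSUMED parameters: not given in the text, chosen as a commonly used default.
B = 8.6      # shape
C = 0.547    # scale, in seconds


def gamma_variate(t):
    """Unnormalized gamma variate response t**B * exp(-t / C) for t in seconds."""
    return (t ** B) * math.exp(-t / C) if t > 0 else 0.0


peak_time = B * C   # the function peaks where its derivative is zero, at t = B * C


def fraction_of_peak(t):
    """Response amplitude at time t as a fraction of the peak amplitude."""
    return gamma_variate(t) / gamma_variate(peak_time)


print(f"peak at {peak_time:.1f} s")
print(f"fraction of peak at 10 s: {fraction_of_peak(10.0):.3f}")
```

Different parameter choices shift the exact fraction, so this is a plausibility check rather than a reproduction of the authors' model.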

During presentation of the auditory target, subjects not only perceived the stimulus but may have also engaged in non-vocal silent rehearsal and short-term memory encoding. Therefore this was not solely a perception task. The subjects also had to wait for the arrow onset approximately 4.7 seconds later to begin planning their production, as the arrow direction informed them of whether their upcoming production would have the same or a reversed order. The delay period also likely involved some short term memory encoding prior to production.

The amplitude coefficients for target perception (speech and non-speech) and production (speech and non-speech) for each subject were estimated using multiple linear regression. This created statistical parametric maps of t statistics for each of the linear coefficients. Statistical images were thresholded at t > 3.1, p < .01 (corrected). Correction for multiple comparisons was achieved using Monte Carlo simulations (program AlphaSim, part of AFNI), for which we selected a voxel-wise false-positive p threshold of 0.001 and a minimum cluster size of nine contiguous voxels (760 mm³) to give a corrected p value of 0.01. Each individual’s statistical map was transformed into standardized space (the MNI 27 T1-weighted MRI of a single subject) by using a 12 parameter affine registration.
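The stated cluster volume can be related to the acquisition parameters with a short calculation. The field of view, slice thickness, and nine-voxel minimum come from the text; the 64 × 64 in-plane matrix is an assumption, since the functional matrix size is not reported, but it reproduces the quoted volume.

```python
FOV_MM = 240.0            # functional field of view (from the text)
SLICE_MM = 6.0            # slice thickness (from the text)
MATRIX = 64               # ASSUMPTION: in-plane matrix size is not stated in the text
MIN_CLUSTER_VOXELS = 9    # minimum cluster size (from the text)

in_plane_mm = FOV_MM / MATRIX                # edge length of one voxel in-plane
voxel_mm3 = in_plane_mm ** 2 * SLICE_MM      # volume of one voxel
cluster_mm3 = MIN_CLUSTER_VOXELS * voxel_mm3

print(f"voxel: {in_plane_mm} x {in_plane_mm} x {SLICE_MM} mm = {voxel_mm3} mm^3")
print(f"minimum cluster: {cluster_mm3} mm^3")
```

Rounded to the nearest 10 mm³, the result matches the 760 mm³ reported for the cluster threshold.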

Analyses

Comparisons of speech versus non-speech during target presentation and production stages

For group analyses, the t statistical maps of each condition were derived and entered into a mixed effects ANOVA, where task stage (target perception, production) and mode (speech versus non-speech) were fixed factors and subjects was a random factor. Contrasts between conditions of interest used pair-wise t-tests, resulting in statistical maps for each contrast. To identify overlapping and distinct regions of activation for speech and non-speech, in both the target presentation and production stages of the task, conjunction analyses (Friston et al., 1999; Nichols et al., 2005) were conducted based on the individual thresholded t statistical maps (p < .01, corrected).
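The logic of a conjunction over two thresholded maps can be illustrated with a minimal sketch: a voxel counts as overlapping only if it exceeds the threshold in both conditions. The function name, the flat list representation of a map, and the sample t values are hypothetical; the actual analysis was run on whole-brain AFNI maps.

```python
def conjunction_labels(speech_t, nonspeech_t, threshold):
    """Label each voxel by which thresholded map(s) it survives in."""
    labels = []
    for s, n in zip(speech_t, nonspeech_t):
        speech_on = s > threshold
        nonspeech_on = n > threshold
        if speech_on and nonspeech_on:
            labels.append("both")
        elif speech_on:
            labels.append("speech only")
        elif nonspeech_on:
            labels.append("non-speech only")
        else:
            labels.append("neither")
    return labels


# Made-up t values for four voxels, thresholded at an illustrative t > 6.
speech = [7.2, 6.5, 2.0, 1.1]
nonspeech = [8.0, 3.0, 6.9, 0.4]
print(conjunction_labels(speech, nonspeech, threshold=6.0))
```

Note that a "speech only" label here means the voxel failed the threshold for non-speech, which is weaker evidence than a direct speech versus non-speech contrast.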

ROI analyses

We compared speech versus non-speech activation in regions encompassing those reported to be part of the speech production network (Bohland and Guenther, 2006; Guenther et al., 2006) (IFG (BA 44, BA 45), precentral motor (BA 4), STG, SMG). We additionally included those regions found to support speech motor processing in previous studies involving similar bisyllabic non-sense speech production (Riecker et al., 2008) (SMA, sensorimotor (OP4), insula, putamen). These ROIs were cytoarchitectonically defined, using atlas maps in standard space in the inferior frontal (BA 44, BA 45) (Amunts et al., 2004), sensorimotor (OP4, BA 4, supplementary motor area (SMA), preSMA) (Eickhoff et al., 2006; Zilles et al., 1995), and inferior parietal regions (supramarginal gyrus (SMG), angular gyrus) (Caspers et al., 2006), using maximum probability maps and macrolabel maps (Eickhoff et al., 2005) implemented in AFNI (Cox, 1996). These maps were not yet available for the posterior superior temporal gyrus (pSTG), insula, and putamen, so the Talairach Daemon database (Lancaster et al., 2000) was used to define their regional boundaries. In addition, because the pSTG region including the planum temporale (PT) has high inter-subject variability, we manually edited the boundaries of the pSTG so that it extended from the posterior border of the first Heschl’s gyrus (HG) (Heschl’s sulcus) anteriorly to the posterior ascending/descending rami posteriorly.

The ROIs were used as masks to extract two measures from each individual’s standardized functional maps: the mean percent BOLD signal change values (relative to baseline rest) and mean percent volume of activation of voxels (thresholded at t > 3.3, p < .01, corrected). For each measure, a 4-way repeated measures ANOVA was used to examine the factors ROI (BA44, BA45, OP4, insula, putamen, BA4, SMA, pSTG, angular gyrus, SMG), side (left, right), stage of task (target perception versus production), and mode (speech, non-speech) at p = .05. If the contrast for speech versus non-speech or left versus right or their interactions was significant at p < 0.05, then post hoc tests of speech versus non-speech, left versus right, or their interactions were conducted across ROIs at p = 0.0045 to correct for multiple comparisons.
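The two ROI measures can be sketched for a single region as follows. Whether the mean signal change was taken over all ROI voxels or only suprathreshold ones is not stated, so averaging over all ROI voxels is an assumption, as are the function name and the sample values. The last lines show that a Bonferroni-style division of 0.05 by 11 tests gives the post hoc threshold quoted above; reading 11 as the number of ROI levels is an inference from the reported degrees of freedom, not something the text states.

```python
def roi_measures(psc, tvals, roi_mask, t_threshold):
    """Return (mean percent signal change, percent of ROI voxels above threshold)."""
    in_roi = [(p, t) for p, t, inside in zip(psc, tvals, roi_mask) if inside]
    if not in_roi:
        raise ValueError("ROI mask selects no voxels")
    # ASSUMPTION: the mean is taken over every voxel in the ROI.
    mean_psc = sum(p for p, _ in in_roi) / len(in_roi)
    n_active = sum(1 for _, t in in_roi if t > t_threshold)
    pct_volume = 100.0 * n_active / len(in_roi)
    return mean_psc, pct_volume


# Made-up values for five voxels; the last voxel lies outside the ROI.
psc = [0.5, 1.0, 1.5, 2.0, 0.1]
tvals = [2.0, 3.5, 4.0, 5.0, 9.0]
mask = [1, 1, 1, 1, 0]
mean_psc, pct_volume = roi_measures(psc, tvals, mask, t_threshold=3.3)
print(mean_psc, pct_volume)

# ASSUMPTION: 11 comparisons, inferred from the reported F(10, 330) for the ROI factor.
alpha_per_test = 0.05 / 11
print(round(alpha_per_test, 4))
```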

Right-left comparisons

To assess functional laterality in brain activation for each task in each condition, a lateralization analysis (Husain et al., 2006) was performed to compare homologous left-right activation differences. Functional data from each subject were flipped along the y axis, and these maps of each condition were entered, along with the original data, into a mixed effects ANOVA. Contrasts between the original and flipped functional data used one-way directional pair-wise t-tests, resulting in new sets of statistical maps that showed regions significantly more active for the left over the right hemisphere within each condition.
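The flip-and-compare idea can be illustrated on a toy one-dimensional example, where each subject contributes a row of activation values ordered from the leftmost to the rightmost position. Mirroring each row and running a paired t-test at every position compares each location with its homologue on the other side. This is a sketch of the principle only, with hypothetical names and made-up data; it does not reproduce the mixed effects ANOVA used in the study.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired t statistic for two equal-length samples."""
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("zero variance in paired differences")
    return mean(diffs) / (sd / math.sqrt(len(diffs)))


def laterality_t(subject_rows):
    """subject_rows[s][i] is subject s's activation at position i (index 0 = leftmost)."""
    flipped = [row[::-1] for row in subject_rows]   # mirror left and right
    n_positions = len(subject_rows[0])
    return [
        paired_t([row[i] for row in subject_rows], [row[i] for row in flipped])
        for i in range(n_positions)
    ]


# Made-up data: four subjects, four positions, with stronger values on the left.
rows = [
    [2.0, 1.0, 0.5, 0.4],
    [2.2, 1.1, 0.7, 0.3],
    [1.9, 0.9, 0.3, 0.5],
    [2.1, 1.2, 0.6, 0.2],
]
t_values = laterality_t(rows)
print([round(t, 2) for t in t_values])
```

Positive values at left-side positions indicate greater activation than at the mirrored right-side position; the statistics are antisymmetric by construction, which is why a one-directional test on one hemisphere is sufficient.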

Results

Similarities between speech and non-speech during target presentation and production

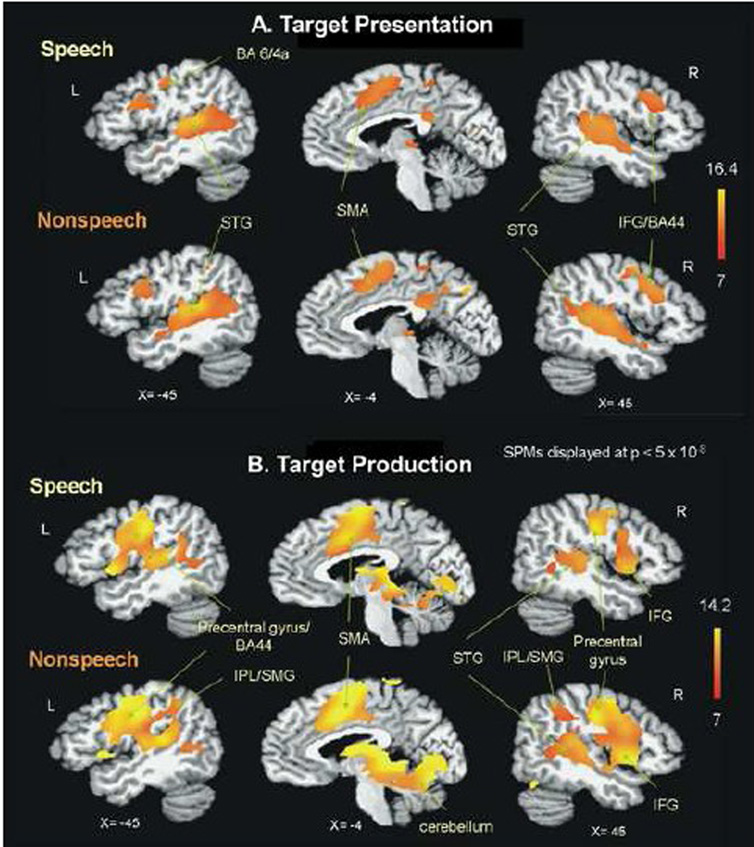

Group analyses of the target presentation and production stages of the task showed similar BOLD responses during speech and non-speech (Figure 2). Group main effects maps for the target presentation stage showed activation for both speech and non-speech in the auditory regions of STG/MTG and the sylvian parietal temporal (Spt) region bilaterally, as well as in regions associated with speech production, including the inferior frontal gyrus, precentral gyri, SMA, precuneus, lentiform nucleus/putamen, and thalamus (Figure 2A, Table 1).

Figure 2.

Group main effects for task (A: target presentation, B: production) during speech and non-speech conditions. Speech and non-speech conditions resulted in comparable regions of activation, with differences primarily in the extent of activation. Non-speech conditions showed greater extent of activation than speech. The t statistical parametric maps were thresholded at p=.01 (corrected for multiple comparisons). BA: Brodmann area, IFG: inferior frontal gyrus, IPL: inferior parietal lobule, SMA: supplementary motor area, SMG: supramarginal gyrus, STG: superior temporal gyrus.

Table 1.

Regions activated for speech and non-speech target presentation. t-scores of activation peaks for each anatomical region were thresholded at t > 3.6, p < .01 corrected. Results are reported for clusters exceeding 760 mm³.

| Region | Approx BA | x | y | z | Peak t |
|---|---|---|---|---|---|
| Speech Target Presentation | | | | | |
| Left IFG | 44 | −42 | 11 | 25 | 11.62 |
| Right IFG | 45 | 45 | 14 | 26 | 12.32 |
| Right paracentral lobule | 4 | 4 | −32 | 51 | 8.36 |
| Left precentral gyrus | 6 | −40 | −12 | 42 | 9.41 |
| Right precentral gyrus | 6 | 42 | −5 | 46 | 6.85 |
| Left SMA | 6 | −1 | −2 | 53 | 13.57 |
| Left cingulate gyrus | 32 | −3 | 21 | 34 | 6.75 |
| Right cingulate gyrus | 24 | 12 | 10 | 35 | 6.5 |
| Left STG | 22 | −49 | −29 | 3 | 14.98 |
| Right STG | 22 | 59 | −26 | 4 | 17.98 |
| Left caudate | N/A | −11 | 8 | 8 | 7.56 |
| Right caudate | N/A | 14 | 11 | 7 | 6.73 |
| Left putamen | N/A | −25 | 3 | 8 | 6.94 |
| Right putamen | N/A | 21 | 12 | 7 | 7.33 |
| Left thalamus | N/A | −2 | −26 | 7 | 6.65 |
| Right thalamus | N/A | 9 | −22 | 3 | 9.24 |
| Right posterior cingulate | 23 | 1 | −36 | 24 | 9.04 |
| Non-Speech Target Presentation | | | | | |
| Left IFG | 44 | −40 | 5 | 25 | 9.43 |
| Right IFG | 44 | 44 | 12 | 25 | 10.33 |
| Right paracentral lobule | 4 | 7 | −36 | 55 | 10.06 |
| Left precentral gyrus | 4a/6 | −40 | −9 | 47 | 7.9 |
| Right precentral gyrus | 6 | 51 | −8 | 47 | 7.86 |
| Left SMA | 6 | 0 | −2 | 53 | 13.6 |
| Left STG | 22 | −45 | −29 | 3 | 15.12 |
| Right STG | 42 | 66 | −21 | 10 | 8.91 |
| Right MTG | 22 | 53 | −37 | 3 | 13.36 |
| Left IPL/SMG | 40 | −46 | −46 | 47 | 6.54 |
| Right IPL/SMG | 40 | 52 | −34 | 53 | 5.87 |
| Left caudate | N/A | −12 | 2 | 13 | 7.04 |
| Right caudate | N/A | 15 | 9 | 6 | 6.68 |
| Left putamen | N/A | −22 | 15 | 8 | 10.62 |
| Right putamen | N/A | 26 | 18 | 4 | 8.35 |
| Left thalamus | N/A | −13 | −30 | 14 | 7.38 |
| Right thalamus | N/A | 14 | −24 | −2 | 8.27 |
| Right posterior cingulate | 17 | 1 | −57 | 12 | 5.93 |
| Right precuneus | 31 | 12 | −47 | 34 | 9.56 |

Initially, we compared the BOLD response from the “repeat” trials with the “reverse” trials, and found no significant differences (p > 0.05). Therefore the BOLD responses from both response types were combined for the production analysis. During the production stage, both speech and non-speech activated the bilateral pre- and postcentral gyri, middle and inferior frontal gyri, MTG, SMA, insula, cingulate cortex, SMG, lentiform nucleus, putamen, thalamus, and cerebellum, and the auditory regions of STG and Spt (Figure 2B, Table 2). The extent of STG and Spt activation was greater on the right during the non-speech production condition (Figure 2B).

Table 2.

Regions activated for speech and non-speech production. t-scores of activation peaks for each anatomical region were thresholded at t > 3.6, p < .01 corrected. Results are reported for clusters exceeding 760 mm³.

| Region | Approx BA | x | y | z | Peak t |
|---|---|---|---|---|---|
| Speech Production | | | | | |
| Left IFG | 44 | −46 | 4 | 25 | 7.43 |
| Right IFG | 44 | 52 | 10 | 17 | 6.79 |
| Left cingulate gyrus | 24 | −2 | 8 | 42 | 11.25 |
| Right cingulate gyrus | 24 | 5 | 11 | 40 | 12.17 |
| Left insula | 13 | −46 | −18 | 19 | 15.87 |
| Right insula | 44 | 48 | 6 | 3 | 13.19 |
| Left precentral gyrus | 4 | −55 | −15 | 38 | 13.49 |
| Right precentral gyrus | 4 | 48 | −11 | 33 | 12.45 |
| Left postcentral gyrus | 43 | −57 | −10 | 23 | 12.49 |
| Right postcentral gyrus | 3b | 56 | −14 | 29 | 9.67 |
| Left SMA | 6 | 0 | −3 | 44 | 15.78 |
| Left STG | 41 | −38 | −29 | 9 | 10.8 |
| Right STG | 22 | 60 | −10 | 8 | 9.18 |
| Left SMG | 40 | −34 | −50 | 36 | 8.87 |
| Right SMG | 40 | 44 | −47 | 42 | 6.67 |
| Left caudate | N/A | −6 | −1 | 10 | 7.39 |
| Right caudate | N/A | 13 | −2 | 15 | 8.71 |
| Left putamen | N/A | −15 | 6 | 8 | 7.63 |
| Right putamen | N/A | 20 | 8 | 2 | 8.12 |
| Left thalamus | N/A | −12 | −22 | 1 | 13.42 |
| Right thalamus | N/A | 13 | −11 | −1 | 11.57 |
| Left cerebellum (VI) | N/A | −28 | −65 | −18 | 9.54 |
| Right cerebellum (VI) | N/A | 22 | −68 | −10 | 7.63 |
| Non-Speech Production | | | | | |
| Left IFG | 44 | −54 | 4 | 29 | 9.6 |
| Right IFG | 44 | 54 | 10 | 27 | 9.48 |
| Left MFG | 9 | −32 | 39 | 31 | 7.23 |
| Right MFG | 9 | 36 | 41 | 27 | 8.34 |
| Left cingulate gyrus | 24 | −4 | −5 | 51 | 13.08 |
| Right cingulate gyrus | 32 | 1 | 11 | 45 | 16.47 |
| Left insula | N/A | −35 | 1 | 8 | 11.1 |
| Right insula | 13 | 49 | 0 | 1 | 10.16 |
| Left precentral gyrus | 4p | −54 | −8 | 33 | 10.05 |
| Right precentral gyrus | 44 | 51 | 5 | 8 | 15.86 |
| Left postcentral gyrus | 43 | −53 | −18 | 20 | 16.17 |
| Right postcentral gyrus | 3b | 51 | −19 | 37 | 10.27 |
| Left SMA | 6 | −3 | −2 | 42 | 14.34 |
| Right SMA | 6 | 6 | 0 | 47 | 19.16 |
| Left STG | 41 | −51 | −20 | 6 | 9.93 |
| Right STG | 42 | 62 | −26 | 17 | 10.23 |
| Left IPL/SMG | 40 | −39 | −48 | 40 | 10.61 |
| Right IPL/SMG | 40 | 58 | −45 | 33 | 9.48 |
| Left caudate | N/A | −13 | −1 | 15 | 9.29 |
| Right caudate | N/A | 12 | 0 | 12 | 9.6 |
| Left putamen | N/A | −20 | 0 | 7 | 7.85 |
| Right putamen | N/A | 22 | 2 | 7 | 5.88 |
| Left thalamus | N/A | −17 | −13 | 1 | 11.04 |
| Right thalamus | N/A | 13 | −23 | −2 | 13.54 |
| Left cerebellum (VI) | N/A | −30 | −65 | −18 | 11.71 |
| Right cerebellum (VI) | N/A | 29 | −75 | −15 | 9.49 |

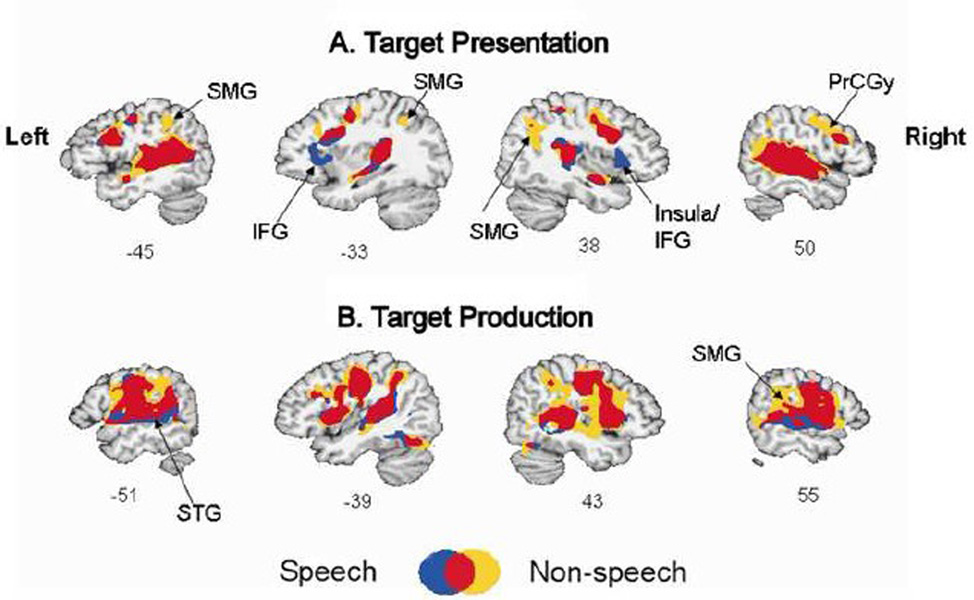

A formal conjunction analysis showed substantial overlap between speech and non-speech conditions during both target presentation and production (Figure 3). During target presentation, two regions were more active for speech compared to non-speech: the left inferior frontal gyrus (IFG) and the right insula/IFG. On the other hand, other regions were more active for non-speech than for speech: the right and left supramarginal gyrus (SMG) and the right precentral gyrus (PrCGy) (Figure 3A). During production, similar differences were noted: a region in the right SMG was more active during non-speech compared to speech (Figure 3B). Some portions of the bilateral STG regions near the STS appeared to be active for speech and not for non-speech.

Figure 3.

Group conjunction maps showing overlapping regions activated for both speech and non-speech conditions (red), regions more specific to speech (blue), and non-speech (yellow). For display purposes, here each condition was thresholded at t > 6 (p < 8.1 × 10⁻⁷). IFG: inferior frontal gyrus, PrCGy: precentral gyrus, SMG: supramarginal gyrus, STG: superior temporal gyrus.

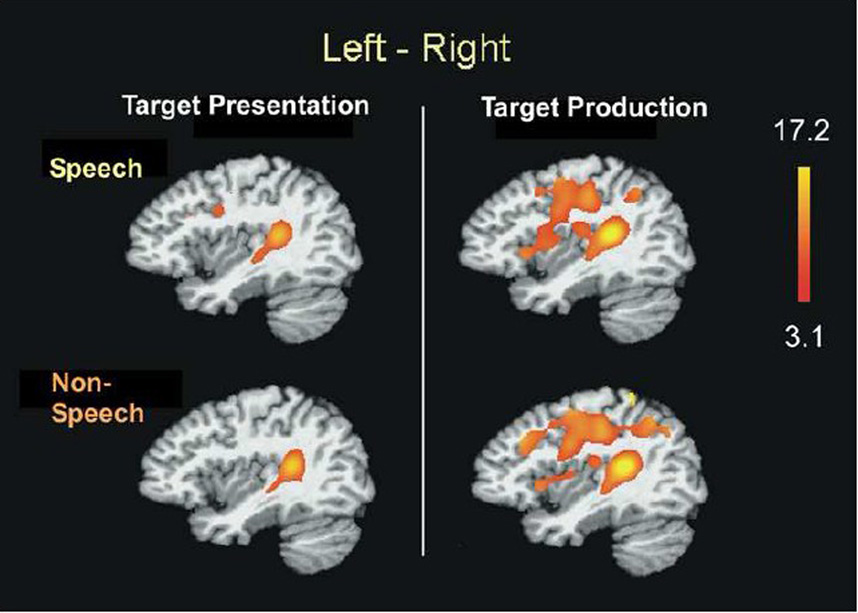

Similar left laterality for speech and non-speech target presentation and production

Laterality analyses indicated that for both speech and non-speech, brain activation was significantly greater on the left during both target presentation and production tasks (Figure 4). Both the pSTG and Spt regions were more active on the left during the perception and production of speech and non-speech targets.

Figure 4.

Laterality analysis. Brain regions more active on the left hemisphere than the right hemisphere (p=.01, corrected) during target presentation and production. Speech and non-speech conditions activated comparable regions encompassing auditory dorsal stream structures with more left lateralization. The posterior superior temporal regions were consistently co-activated with left-bias for both target presentation and production stages, similar for speech and non-speech conditions.

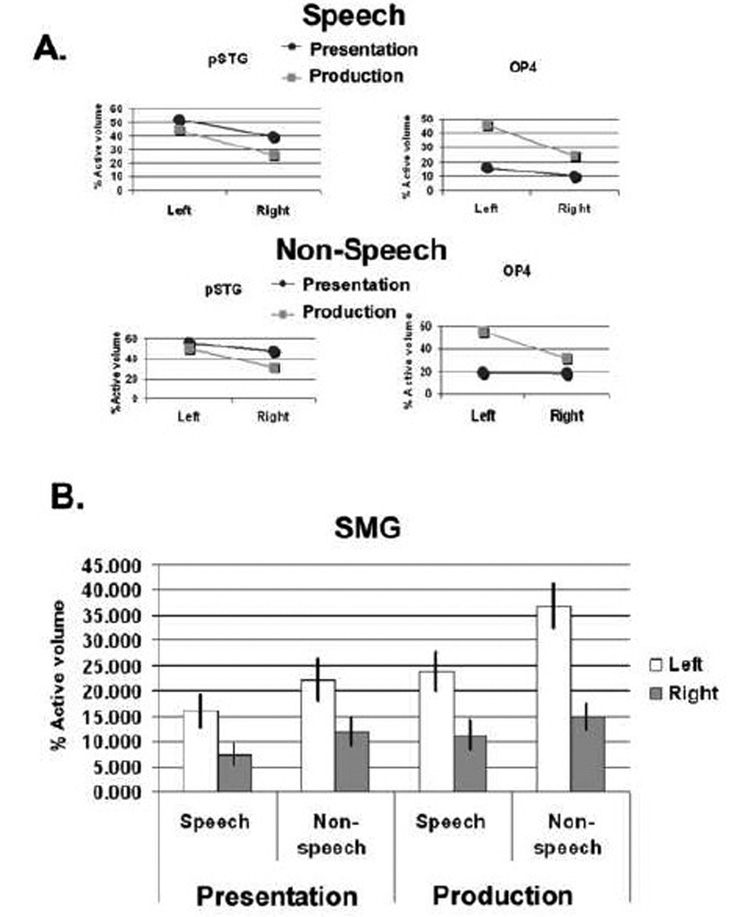

ROI analyses also supported greater volume of activation and greater percent BOLD signal change on the left over the right in both speech and non-speech. Repeated measures ANOVA revealed a significant ROI × side (left, right) interaction when examining percent volume of activation (F(10,330) = 10.24, p < .0005) and percent signal change (F(10,330) = 4.52, p < .0005). There was also a significant ROI × mode (speech, non-speech) × side interaction for percent volume (F(10,330) = 3.78, p < .0005) but not for percent signal change (F(10,330) = 1.70, p = 0.079). The volume of activation was greater on the left than on the right during production in OP4 (F(1,33) = 32.16, p < .0005), pSTG (F(1,33) = 11.51, p = .002) and SMG (F(1,33) = 12.51, p = .001) (Figure 5). In SMG, left-sided percent volume for non-speech was greater than for speech during production (F(1,33) = 11.74, p = .002) (Figure 5B), and approached significance in perception (F(1,33) = 8.38, p = .007), indicating that left laterality was greater during non-speech than speech in this region.

Figure 5.

A. Mean volume of activation on the left and right hemispheres for target presentation and production stages on Speech and Non-speech shown for posterior superior temporal gyrus (pSTG) and OP4. In these ROIs, task (perception vs. production) × side (left vs. right) interactions were significant at p=.01. B. Mean volume of activation on the left and right hemispheres for target presentation and production stages of task in the supramarginal gyrus (SMG). Here non-speech exhibited greater activation than speech during production (significant mode (speech vs. non-speech) × task (perception vs. production) × side (left vs. right) interaction at p=.01). Error bars depict standard error of the mean.

Comparisons between speech and non-speech in the extent of activation

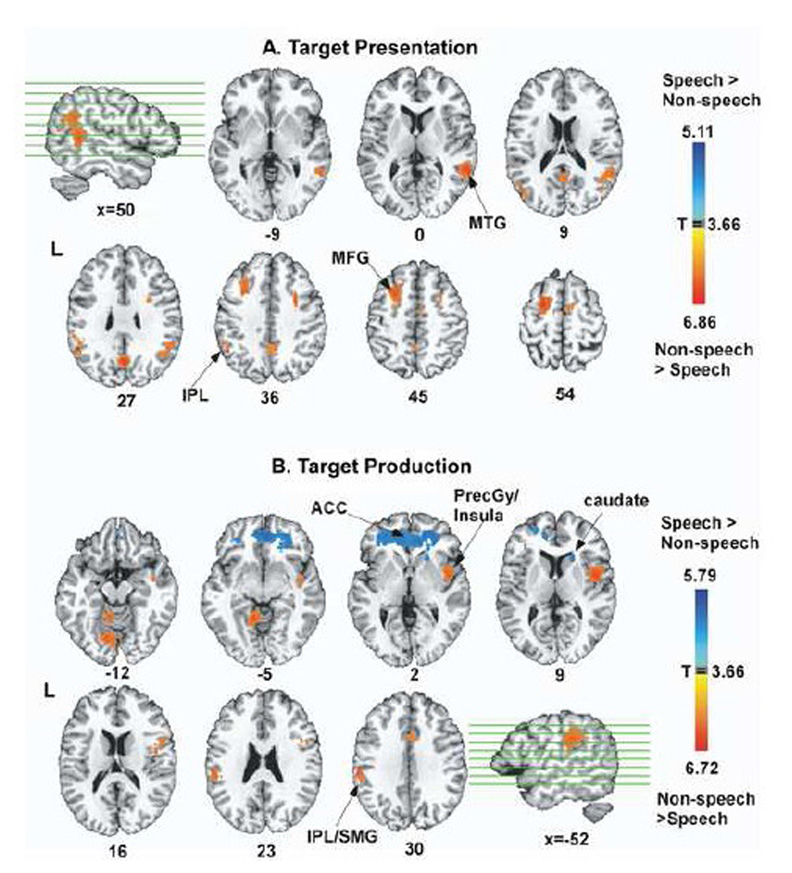

To compare the extent of activation between conditions, we contrasted speech and non-speech in a whole-brain analysis. During target presentation, non-speech showed greater activation than speech in the left inferior parietal region near SMG, right STG/MTG, the right middle frontal gyrus, right caudate, precuneus, and posterior cingulate gyrus (Figure 6A, table 1). No regions were significantly more active during speech target presentation than during non-speech target presentation.

Figure 6.

Group contrasts between speech and non-speech conditions for target presentation and production. Regions colored red-yellow show areas more active during non-speech compared to speech, and regions colored blue-light blue show areas more active during speech compared to non-speech. All statistical maps were thresholded at p=.01 (corrected). ACC: anterior cingulate cortex, PrCGy: precentral gyrus, SMG: supramarginal gyrus, STG: superior temporal gyrus

During production, non-speech was more active than speech in the bilateral precentral gyri/insula, inferior frontal gyri, bilateral inferior parietal lobule/SMG, thalamus, SMA, and the cerebellum (Figure 6B, red-yellow, table 2). Only the anterior cingulate cortex (ACC) and bilateral caudate were significantly more active during speech than during non-speech production (Figure 6B, blue-light blue, table 3). This difference could not be attributed to differences in reaction time (RT) between speech and non-speech production onsets. We measured RT offline for both speech and non-speech production onsets in a random sample of 19 subjects, based on digital recordings acquired during the whole experiment. The mean RT for speech production was 587 ms (SD: 187 ms) and the mean RT for non-speech was 564 ms (SD: 242 ms). A repeated measures ANOVA with speech and non-speech RTs as repeated factors showed that the two RT measures did not differ significantly in the subjects examined (F1,29= 0.166, p= 0.687).
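The logic of the RT comparison can be sketched as follows. With only two within-subject conditions, a repeated measures F statistic equals the square of the paired t statistic, with 1 numerator degree of freedom and n − 1 error degrees of freedom. The function name and the reaction times below are hypothetical and serve only to show the computation; they are not the recorded data.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_f(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Return (F, error df) for a two-level within-subject comparison.

    With two levels, the repeated measures F equals the squared paired t,
    with 1 numerator df and n - 1 error df.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need paired observations from at least two subjects")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    if se == 0:
        raise ValueError("differences have zero variance")
    t = mean(diffs) / se
    return t * t, n - 1


# Toy reaction times in ms for five subjects (illustrative, not study data).
speech_rt = [590.0, 610.0, 550.0, 600.0, 580.0]
nonspeech_rt = [570.0, 620.0, 540.0, 590.0, 600.0]
f_value, df_error = paired_f(speech_rt, nonspeech_rt)
print(f"F(1,{df_error}) = {f_value:.3f}")
```

A small F value relative to its critical value, as in this toy case, indicates that the mean difference between conditions is small compared with the variability of the within-subject differences.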

Table 3.

Brain activation contrasts between speech and non-speech tasks

| Region | Approximate BA | x | y | z | t |
|---|---|---|---|---|---|
| Speech Target Presentation > Non-Speech Target Presentation | | | | | |
| No regions found significant | | | | | |
| Non-Speech Target Presentation > Speech Target Presentation | | | | | |
| Left Mid. Frontal gyrus | 8 | −29 | 17 | 41 | 6.86 |
| Right Mid. Temporal gyrus | 21 | 59 | −47 | 8 | 6.49 |
| Left Inf. Parietal lobule | 40 | −46 | −49 | 39 | 6.01 |
| Right Precuneus | 17/18/31 | 1 | −62 | 26 | 5.82 |
| Speech Production > Non-Speech Production | | | | | |
| Right Anterior cingulate gyrus | 32 | 4 | 40 | −7 | 5.79 |
| Right caudate | N/A | 19 | 20 | 6 | 4.48 |
| Non-Speech Production > Speech Production | | | | | |
| Left precentral gyrus/IFG | 44 | −55 | 2 | 12 | 4.53 |
| Right Precentral g./Insula | 44 | 45 | −1 | 9 | 5.7 |
| Cing g./SMA | 6 | 0 | 1 | 46 | 6.72 |
| Left Inf. Parietal lobule/SMG | 40 | −56 | −25 | 26 | 5.74 |
| Right SMG | 40 | 47 | −42 | 34 | 4.1 |
| Left Cerebellar culmen | N/A | −9 | −46 | −8 | 5.67 |

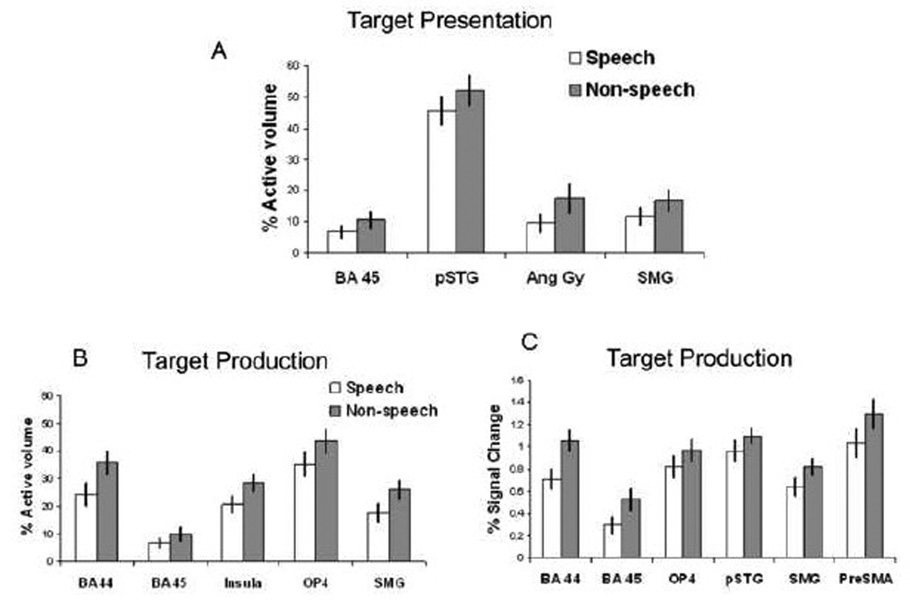

ROI analyses also supported greater volume of activation and greater percent BOLD signal change during non-speech than during speech, for both target presentation and production, in many of the ROIs examined. Repeated measures ANOVA revealed a significant ROI × mode (speech, non-speech) effect when examining percent volume of activation (F10,330= 4.09, p<.0005) and percent signal change (F10,330= 2.57, p=.005). There were also significant ROI × task (target presentation stage versus production) × mode effects for percent volume (F10,330= 5.67, p<.0005) and percent signal change (F10,330= 3.47, p<.0005).

When speech and non-speech were further compared within ROIs, non-speech target presentation was associated with significantly greater percent volume of activation than speech target presentation in the SMG (F1,33= 11.47, p=.002). This difference approached significance in BA 45 (F1,33= 9.07, p=.005), the angular gyrus (F1,33= 9.24, p=.005), and pSTG (F1,33= 7.85, p=.008) (Figure 7A). No speech versus non-speech differences survived when measured using percent signal change.

Figure 7.

Mean volume of activation across ROIs for speech versus nonspeech conditions for target presentation and production. The differences were all significant at p=.01. Error bars depict standard error of the mean.

During production, non-speech resulted in significantly greater percent volume of activation than speech in BA 44 (F1,33= 24.99, p<.0005), OP4 (F1,33= 10.73, p=.002), SMG (F1,33= 11.46, p=.002), and the insula (F1,33= 17.24, p<.0005), while BA 45 (F1,33= 9.11, p=.005) approached significance (Figure 7B). Non-speech production also had significantly greater percent signal changes than speech production in BA 44 (F1,33= 14.946, p=.001), BA 45 (F1,33=10.73, p=.002), OP4 (F1,33= 9.78, p=.004), SMG (F1,33= 10.11, p=.003), and preSMA (F1,33= 19.33, p<.0005). This difference approached significance in the pSTG (F1,33= 8.73, p=.006) (Figure 7C).

Discussion

In this study we tested the idea of common neural substrates for target perception/encoding and production of speech and non-speech vocal tract gestures. The non-speech gestures used in this study, like speech, were easily producible in a consistent manner and had auditory and somatosensory targets linked to motor execution. This differentiates our non-speech gestures from those in other studies that have used either non-vocal (no phonation) oral gestures such as tongue movements (Salmelin and Sams, 2002) or non-vocal sounds such as tones (Benson et al., 2001; Binder et al., 2000). The perception and production of such non-vocal non-speech may have been less likely to engage the same neural substrates as those involved in speech, not because they were non-speech but because they did not involve vocal tract gestures to the same degree as the gestures used here. Further, in this study, both the speech and non-speech gestures required sequencing, and neither condition involved simple isolated gestures. The main difference between the non-speech gestures and the speech used here was that the non-speech gestures did not involve phonological processing. Despite this difference, regional functional activations for speech and non-speech target perception/encoding and production were similar, encompassing the bilateral IFG, STG, a superior temporal-parietal region (Spt), SMG, premotor regions, insula, subcortical areas (caudate, putamen, thalamus) and the cerebellum. Performance of both speech and non-speech tasks was associated with greater activation in the left hemisphere compared to the right, for both target perception/encoding and production.

Both our speech and non-speech tasks required motor productions that were linked to auditory and somatosensory targets, involved sensory-motor mapping, and were produced in a volitional manner but without communicative intent. However, they may have differed in complexity and familiarity; that is, the variety of gestures used for speech articulation was smaller than that of the oral non-speech gestures, such as whistle or tongue click. Further, neither set of gestures had semantic meaning, and it is unlikely that the nonsense speech syllables activated lexical representations (Binder et al., 2003; Vitevitch et al., 1999). However, we cannot rule out that semantic representations may have been triggered by our non-speech gestures, such as cry or laugh. Despite these potential differences, the regions activated for both speech and non-speech were remarkably similar, underscoring a strong common involvement of the same sensory-motor integration system. This system appears to support a larger domain of vocal tract gestures requiring sensory-motor mapping, and is not specialized to just the speech domain. These results are in agreement with recent studies by Hickok and colleagues, who have suggested that the auditory dorsal stream, and the posterior temporal-parietal region in particular, supports sensorimotor integration for not only speech but also non-speech (Hickok et al., 2003; Hickok and Poeppel, 2004, 2007; Pa and Hickok, 2008). They are also in agreement with studies that have examined perceptual discrimination of speech and non-speech sounds sharing similar temporal/acoustic characteristics and found that they activated overlapping regions (Joanisse and Gati, 2003; Zaehle et al., 2008).

Similar to what has been reported by other groups (Pulvermuller et al., 2006; Wilson et al., 2004), we also found motor area activation not only during production but also during target perception/encoding for both speech and non-speech gestures. Target presentation likely involved perception as well as sub-vocal rehearsal of the oral-motor gestures for both speech and non-speech vocal tract gestures, together with short-term memory encoding for the upcoming production stage. The regions that were active during target presentation were similar for vocal tract gestures and speech sounds, involving the ventral premotor, inferior frontal and motor regions in addition to the expected temporal auditory activations.

During the motor execution of both speech and non-speech vocal tract gestures, there was co-activation of motor, somatosensory, and auditory regions. Both speech and non-speech gestures were associated with activity in the IFG, ventral premotor areas, SMA, STG, insula, SMG, cerebellum, and the basal ganglia, regions found to be active in other speech motor studies (Riecker et al., 2008).

Neither our speech nor our non-speech gestures had lexical/semantic meaning associated with them, and both involved volitional acts involving vocal tract gestures. Here the focus was on imitating an auditory target rather than on self-generation of a gesture to communicate affective or other information. The co-activation found in the premotor/frontal and inferior parietal regions during perception as well as production of these gestures seems to parallel the behavior of mirror neurons, which are reported to be active during both action execution and action perception (Ferrari et al., 2003; Gallese et al., 1996; Rizzolatti et al., 1996; Rizzolatti and Sinigaglia, 2007). Of particular relevance to speech, the audiovisual mirror neurons found in the monkey F5, a Broca’s area homologue, have been reported to discharge not just to the execution and observation of a specific action but also when this action can only be heard (Kohler et al., 2002). This area has been suggested to be a part of a mirror neuron system in humans, involved in the action production and action observation system. It has been proposed that this region, because of its capacity for supporting imitation, could have played a role in the evolution of speech (Rizzolatti and Arbib, 1998).

In the context of the putative mirror neuron system in humans, the neural pattern generated in the premotor areas during action recognition is similar to that generated to support production of that action (Kohler et al., 2002). In the present data, premotor regions were likewise active for perception and production regardless of speech or non-speech. This may be because the non-speech gestures, just like speech, involved actions that could be recognized from sound as well as produced. Empirical findings of speech-related motor activation during speech perception are well documented (Fadiga et al., 2002; Pulvermuller et al., 2006; Watkins et al., 2003; Wilson et al., 2004), and may reflect the involvement of regions suggested to have mirror neuron properties in humans for speech (posterior frontal/premotor) (Iacoboni and Mazziotta, 2007). This seems to fit well with recent speech production models that propose that speech acquisition and production depend on imitative learning of speech through integrating action perception and production (Guenther, 2006; Hickok and Poeppel, 2007).

Laterality of activity during perception/presentation and production of targets was comparable for speech and non-speech, especially in the posterior temporal region pSTG and a sensorimotor region OP4. This suggests that vocal tract gestures with acoustic and somatosensory targets employ comparable neural substrates in the left dorsal stream regardless of whether they are speech or non-speech. The temporoparietal region in the present study that showed left laterality included the Spt region, argued to link sensory systems (whether auditory, somatosensory, or visual) with the motor effector, in this case the “vocal tract action system” (Pa and Hickok, 2008). Dhanjal et al. (2008) also showed that the Spt region was activated for speech as well as for non-speech tongue and jaw movements that result in somatosensory feedback. This suggests that the Spt may not only be an auditory-motor integration area, but also a multisensory integration area for vocal tract gestures. Our finding of co-activation of this region during perception and production, for both speech and non-speech gestures, is in line with predictions that can be made from these previous studies; both sets of stimuli involved linking an auditory/somatosensory target presentation with vocal tract gestures.

Involvement of similar functional neuroanatomy for non-speech vocal tract gestures as for speech in humans may relate to previous findings suggesting that similar neural substrates underlie non-human primate calls, which also involve laryngeal and pharyngeal movement and sequencing. Monkeys have an architectonically comparable region to area 44 that controls orofacial muscle movement (Petrides et al., 2005), with cortico-cortical connections between the left temporal-parietal and frontal areas (Croxson et al., 2005; Petrides and Pandya, 2002). Similar leftward asymmetries affect the planum temporale in monkeys (Gannon et al., 1998) and perisylvian homologues are activated in response to species-specific calls (Poremba et al., 2004).

The only difference between speech and non-speech processing was in the extent and amplitude of activation in regions within the shared neural network. Similarly, it has been shown that kinematically similar non-speech mouth movements elicit a higher level of activity in the motor cortex than speech movements (Saarinen et al., 2006) and are associated with spatially less focal activity (Salmelin and Sams, 2002) within the motor cortex. Because a greater extent and amplitude of response was seen even for kinematically similar non-speech gestures (Saarinen et al., 2006), the greater activation observed for our non-speech targets compared to speech may not be completely explained by the fact that non-speech required a greater variety of vocal tract/oral-motor gestures than were used for speech targets.

Enhanced activation might be expected in auditory-motor regions during executions that are less familiar and less frequently produced, reflecting the need for active recruitment of regions to establish auditory-motor mapping. Enhanced activities in the premotor area, STG, PT, and cerebellum have been reported for non-native vowel contrasts (Callan et al., 2006). Similarly, non-native phonemes are associated with greater signal changes in speech regions, and increased signal changes occur in response to greater difficulties in production in the STG, insula, and Spt (Wilson and Iacoboni, 2006). We found heightened activation throughout the sensorimotor network for non-speech vocal tract gestures compared to speech, as would be expected for tasks if there was a less established feedforward system for motor output. Non-speech vocal tract gestures may have less well established auditory targets compared to speech.

In the present results, SMG was also more highly activated during non-speech compared to speech tasks, particularly on the left side. The left SMG may be an important region for integrating sounds with their articulator position information (Callan et al., 2006). In a computational model of speech (DIVA; Directions into Velocities of Articulators) (Guenther, 2006), SMG is proposed as a “somatosensory error map”, where the somatosensory target for a sound and the actual somatosensory state are compared. This may parallel what is proposed to occur in the posterior STG in this model, where expected and actual auditory consequences of a sound production are compared. Like the motor-auditory link, the motor-somatosensory link may be weaker for non-speech productions due to infrequent volitional production of these non-speech sounds. Hence, heightened activation in the SMG may reflect a heightened need for somatosensory-motor integration to achieve the correct auditory-somatosensory target for non-speech production. For well-established skills such as speech, active somatosensory monitoring may not be required to the same degree as during less familiar sequences such as non-speech sequences. In fact, Dhanjal et al. (2008) showed that an area in the parietal operculum, SII (somatosensory association cortex), is less active during speech than during non-speech jaw and tongue movements, although both sets of tasks resulted in somatosensory feedback. This may reflect a greater reliance on conscious monitoring of the somatosensory feedback during non-speech tasks.

During target presentation, no areas were found more active during speech than non-speech, although with a more relaxed threshold we did see greater activation bilaterally in the STS regions during speech compared to non-speech. During production, the anterior cingulate cortex (ACC) and caudate nucleus were the only regions that were more active during speech than non-speech production. The ACC reportedly is involved in execution of appropriate verbal responses and suppression of inappropriate responses (Buckner et al., 1996; Paus et al., 1993). The caudate and basal ganglia have connections to the frontal cortical regions, and have been implicated as important when movement sequences need to be selected and initiated without external cues (Georgiou et al., 1994; Rogers et al., 1998). Perhaps the increased activation in the ACC and caudate during speech reflects the need for more precise movement and execution for speech.

There are several caveats to this study. Because target presentation was the first stage in the motor production task, subjects had to perceive the target and likely were involved in encoding and short-term rehearsal. This may explain the extensive neural overlap that occurred in brain regions active during the target presentation and production of speech and non-speech vocal tract gestures. This study did not use variable interstimulus intervals (ISIs), which could have allowed sampling of longer windows of hemodynamic responses. With variable ISIs, more extensive comparisons between speech and non-speech conditions over time might have been possible. Also, due to the low resolution of our functional scans, we may not have been able to capture small regions of activation that could have differentiated between speech and non-speech responses. Using multichannel MRI receivers and whole-brain surface coil arrays, one study showed that fine regions of modality-specific responses could be captured with fMRI only with high resolution and an increased signal-to-noise ratio (Beauchamp et al., 2004). In the future, such advanced fMRI methods may allow for better elucidation of cortical regions that primarily process speech, non-speech, or both types of inputs.

In conclusion, we have shown overlapping sensory-motor responses during the target presentation and production of both speech and non-speech vocal tract gestures. We provide new data that support the notion that the neural substrates involved in sensory-to-motor transformation in the left hemisphere are not specific to speech. Rather, these may have evolved for vocal communication in non-human primates and were subsequently adapted to support speech development in humans.

Acknowledgments

This research was supported by the Intramural Research Program in the National Institute of Neurological Disorders and Stroke, NIH. The authors wish to thank Richard Reynolds and Gang Chen for assistance during data analyses and Sandra Martin for conducting speech and language testing.


REFERENCES

- Ackermann H, Ziegler W, Petersen D. Dysarthria in bilateral thalamic infarction. A case study. J Neurol. 1993;240:357–362. doi: 10.1007/BF00839967.

- Amunts K, Weiss PH, Mohlberg H, Pieperhoff P, Eickhoff S, Gurd JM, Marshall JC, Shah NJ, Fink GR, Zilles K. Analysis of neural mechanisms underlying verbal fluency in cytoarchitectonically defined stereotaxic space--the roles of Brodmann areas 44 and 45. Neuroimage. 2004;22:42–56. doi: 10.1016/j.neuroimage.2003.12.031.

- Beauchamp MS, Argall BD, Bodurka J, Duyn JH, Martin A. Unraveling multisensory integration: patchy organization within human STS multisensory cortex. Nature Neuroscience. 2004;7:1190–1192. doi: 10.1038/nn1333.

- Belin P, Zatorre RJ, Ahad P. Human temporal-lobe response to vocal sounds. Cognitive Brain Research. 2002;13:17–26. doi: 10.1016/s0926-6410(01)00084-2.

- Benson RR, Richardson M, Whalen DH, Lai S. Phonetic processing areas revealed by sinewave speech and acoustically similar non-speech. Neuroimage. 2006;31:342–353. doi: 10.1016/j.neuroimage.2005.11.029.

- Benson RR, Whalen DH, Richardson M, Swainson B, Clark VP, Lai S, Liberman AM. Parametrically dissociating speech and nonspeech perception in the brain using fMRI. Brain and Language. 2001;78:364–396. doi: 10.1006/brln.2001.2484.

- Binder J, Frost JA, Hammeke TA, Bellgowan PSF, Springer JA, Kaufman JN, Possing ET. Human temporal lobe activation by speech and nonspeech sounds. Cerebral Cortex. 2000;10:512–528. doi: 10.1093/cercor/10.5.512.

- Binder JR, McKiernan KA, Parsons ME, Westbury CF, Possing ET, Kaufman JN, Buchanan L. Neural correlates of lexical access during visual word recognition. Journal of Cognitive Neuroscience. 2003;15:372–393. doi: 10.1162/089892903321593108.

- Birn RM, Bandettini PA, Cox RW, Shaker R. Event-related fMRI of tasks involving brief motion. Human Brain Mapping. 1999;7:106–114. doi: 10.1002/(SICI)1097-0193(1999)7:2<106::AID-HBM4>3.0.CO;2-O.

- Bohland JW, Guenther FH. An fMRI investigation of syllable sequence production. Neuroimage. 2006;32:821–841. doi: 10.1016/j.neuroimage.2006.04.173.

- Bottjer SW, Brady JD, Cribbs B. Connections of a motor cortical region in zebra finches: Relation to pathways for vocal learning. Journal of Comparative Neurology. 2000;420:244–260.

- Buckner RL, Raichle ME, Miezin FM, Petersen SE. Functional anatomic studies of memory retrieval for auditory words and visual pictures. Journal of Neuroscience. 1996;16:6219–6235. doi: 10.1523/JNEUROSCI.16-19-06219.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Callan AM, Callan DE, Tajima K, Akahane-Yamada R. Neural processes involved with perception of non-native durational contrasts. NeuroReport. 2006;17:1353–1357. doi: 10.1097/01.wnr.0000224774.66904.29. [DOI] [PubMed] [Google Scholar]

- Canter GJ, van Lancker DR. Disturbances of the temporal organization of speech following bilateral thalamic surgery in a patient with Parkinson's disease. J Commun Disord. 1985;18:329–349. doi: 10.1016/0021-9924(85)90024-3. [DOI] [PubMed] [Google Scholar]

- Caspers S, Geyer S, Schleicher A, Mohlberg H, Amunts K, Zilles K. The human inferior parietal cortex: cytoarchitectonic parcellation and interindividual variability. Neuroimage. 2006;33:430–448. doi: 10.1016/j.neuroimage.2006.06.054. [DOI] [PubMed] [Google Scholar]

- Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014. [DOI] [PubMed] [Google Scholar]

- Croxson PL, Johansen-Berg H, Behrens TEJ, Robson MD, Pinsk MA, Gross CG, Richter W, Richter MC, Kastner S, Rushworth MFS. Quantitative investigation of connections of the prefrontal cortex in the human and macaque using probabilistic diffusion tractography. Journal of Neuroscience. 2005;25:8854–8866. doi: 10.1523/JNEUROSCI.1311-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dhanjal NS, Handunnetthi L, Patel MC, Wise RJ. Perceptual systems controlling speech production. J Neurosci. 2008;28:9969–9975. doi: 10.1523/JNEUROSCI.2607-08.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dronkers NF. A new brain region for coordinating speech articulation. Nature. 1996;384:159–161. doi: 10.1038/384159a0. [DOI] [PubMed] [Google Scholar]

- Eden GF, Joseph JE, Brown HE, Brown CP, Zeffiro TA. Utilizing hemodynamic delay and dispersion to detect fMRI signal change without auditory interference: The behavior interleaved gradients technique. Magnetic Resonance in Medicine. 1999;41:13–20. doi: 10.1002/(sici)1522-2594(199901)41:1<13::aid-mrm4>3.0.co;2-t. [DOI] [PubMed] [Google Scholar]

- Eickhoff SB, Grefkes C, Zilles K, Fink GR. The Somatotopic Organization of Cytoarchitectonic Areas on the Human Parietal Operculum. Cereb Cortex. 2006 doi: 10.1093/cercor/bhl090. [DOI] [PubMed] [Google Scholar]

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage. 2005;25:1325–1335. doi: 10.1016/j.neuroimage.2004.12.034. [DOI] [PubMed] [Google Scholar]

- Fadiga L, Craighero L, Buccino G, Rizzolatti G. Speech listening specifically modulates the excitability of tongue muscles: A TMS study. European Journal of Neuroscience. 2002;15:399–402. doi: 10.1046/j.0953-816x.2001.01874.x. [DOI] [PubMed] [Google Scholar]

- Ferrari PF, Gallese V, Rizzolatti G, Fogassi L. Mirror neurons responding to the observation of ingestive and communicative mouth actions in the monkey ventral premotor cortex. Eur J Neurosci. 2003;17:1703–1714. doi: 10.1046/j.1460-9568.2003.02601.x. [DOI] [PubMed] [Google Scholar]

- Friston KJ, Holmes AP, Price CJ, Buchel C, Worsley KJ. Multisubject fMRI studies and conjunction analyses. Neuroimage. 1999;10:385–396. doi: 10.1006/nimg.1999.0484. [DOI] [PubMed] [Google Scholar]

- Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119(Pt 2):593–609. doi: 10.1093/brain/119.2.593. [DOI] [PubMed] [Google Scholar]

- Gannon PJ, Holloway RL, Broadfield DC, Braun AR. Asymmetry of chimpanzee planum temporale: Humanlike pattern of Wernicke's brain language area homolog. Science. 1998;279:220–222. doi: 10.1126/science.279.5348.220. [DOI] [PubMed] [Google Scholar]

- Gelfand JR, Bookheimer SY. Dissociating neural mechanisms of temporal sequencing and processing phonemes. Neuron. 2003;38:831–842. doi: 10.1016/s0896-6273(03)00285-x. [DOI] [PubMed] [Google Scholar]

- Georgiou N, Bradshaw JL, Iansek R, Phillips JG, Mattingley JB, Bradshaw JA. Reduction in External Cues and Movement Sequencing in Parkinsons-Disease. Journal of Neurology Neurosurgery and Psychiatry. 1994;57:368–370. doi: 10.1136/jnnp.57.3.368. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guenther FH. Cortical interactions underlying the production of speech sounds. J Commun Disord. 2006;39:350–365. doi: 10.1016/j.jcomdis.2006.06.013. [DOI] [PubMed] [Google Scholar]

- Guenther FH, Ghosh SS, Tourville JA. Neural modeling and imaging of the cortical interactions underlying syllable production. Brain and Language. 2006;96:280–301. doi: 10.1016/j.bandl.2005.06.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hall DA, Haggard MP, Akeroyd MA, Palmer AR, Summerfield AQ, Elliott MR, Gurney EM, Bowtell RW. 'Sparse' temporal sampling in auditory fMRI. Human Brain Mapping. 1999;7:213–223. doi: 10.1002/(SICI)1097-0193(1999)7:3<213::AID-HBM5>3.0.CO;2-N. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hickok G, Buchsbaum B, Humphries C, Muftuler T. Auditory-motor interaction revealed by fMRI: Speech, music, and working memory in area Spt. Journal of Cognitive Neuroscience. 2003;15:673–682. doi: 10.1162/089892903322307393. [DOI] [PubMed] [Google Scholar]

- Hickok G, Poeppel D. Dorsal and ventral streams: A framework for understanding aspects of the functional anatomy of language. Cognition. 2004;92:67–99. doi: 10.1016/j.cognition.2003.10.011. [DOI] [PubMed] [Google Scholar]

- Hickok G, Poeppel D. The cortical organization of speech processing. Nat Rev Neurosci. 2007;8:393–402. doi: 10.1038/nrn2113. [DOI] [PubMed] [Google Scholar]

- Hillis AE, Work M, Barker PB, Jacobs MA, Breese EL, Maurer K. Re-examining the brain regions crucial for orchestrating speech articulation. Brain. 2004;127:1479–1487. doi: 10.1093/brain/awh172. [DOI] [PubMed] [Google Scholar]

- Husain FT, Fromm SJ, Pursley RH, Hosey LA, Braun AR, Horwitz B. Neural bases of categorization of simple speech and nonspeech sounds. Human Brain Mapping. 2006;27:636–651. doi: 10.1002/hbm.20207. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Iacoboni M, Mazziotta JC. Mirror neuron system: basic findings and clinical applications. Ann Neurol. 2007;62:213–218. doi: 10.1002/ana.21198. [DOI] [PubMed] [Google Scholar]

- Joanisse MF, Gati JS. Overlapping neural regions for processing rapid temporal cues in speech and nonspeech signals. Neuroimage. 2003;19:64–79. doi: 10.1016/s1053-8119(03)00046-6. [DOI] [PubMed] [Google Scholar]

- Kent RD, Netsell R, Abbs JH. Acoustic characteristics of dysarthria associated with cerebellar disease. J Speech Hear Res. 1979;22:627–648. doi: 10.1044/jshr.2203.627. [DOI] [PubMed] [Google Scholar]

- Kohler E, Keysers C, Umilta MA, Fogassi L, Gallese V, Rizzolatti G. Hearing sounds, understanding actions: action representation in mirror neurons. Science. 2002;297:846–848. doi: 10.1126/science.1070311. [DOI] [PubMed] [Google Scholar]

- Lancaster JL, Woldorff MG, Parsons LM, Liotti M, Freitas CS, Rainey L, Kochunov PV, Nickerson D, Mikiten SA, Fox PT. Automated Talairach atlas labels for functional brain mapping. Hum Brain Mapp. 2000;10:120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liebenthal E, Binder JR, Spitzer SM, Possing ET, Medler DA. Neural substrates of phonemic perception. Cerebral Cortex. 2005;15:1621–1631. doi: 10.1093/cercor/bhi040. [DOI] [PubMed] [Google Scholar]

- Metzner W. Anatomical basis for audio-vocal integration in echolocating horseshoe bats. Journal of Comparative Neurology. 1996;368:252–269. doi: 10.1002/(SICI)1096-9861(19960429)368:2<252::AID-CNE6>3.0.CO;2-2.

- Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25:653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- Ojemann GA. Cortical stimulation and recording in language. San Diego, CA: Academic Press; 1994.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Ozsancak C, Auzou P, Hannequin D. Dysarthria and orofacial apraxia in corticobasal degeneration. Mov Disord. 2000;15:905–910. doi: 10.1002/1531-8257(200009)15:5<905::aid-mds1022>3.0.co;2-d.

- Pa J, Hickok G. A parietal-temporal sensory-motor integration area for the human vocal tract: Evidence from an fMRI study of skilled musicians. Neuropsychologia. 2008;46:362–368. doi: 10.1016/j.neuropsychologia.2007.06.024.

- Paus T, Petrides M, Evans AC, Meyer E. Role of the Human Anterior Cingulate Cortex in the Control of Oculomotor, Manual, and Speech Responses - a Positron Emission Tomography Study. Journal of Neurophysiology. 1993;70:453–469. doi: 10.1152/jn.1993.70.2.453.

- Penfield W, Roberts L, editors. Speech and brain mechanisms. Princeton: Princeton University Press; 1959.

- Petrides M, Cadoret G, Mackey S. Orofacial somatomotor responses in the macaque monkey homologue of Broca's area. Nature. 2005;435:1235–1238. doi: 10.1038/nature03628.

- Petrides M, Pandya DN. Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. European Journal of Neuroscience. 2002;16:291–310. doi: 10.1046/j.1460-9568.2001.02090.x.

- Poremba A, Malloy M, Saunders RC, Carson RE, Herscovitch P, Mishkin M. Species-specific calls evoke asymmetric activity in the monkey's temporal poles. Nature. 2004;427:448–451. doi: 10.1038/nature02268.

- Pulvermuller F, Huss M, Kherif F, Martin FMDP, Hauk O, Shtyrov Y. Motor cortex maps articulatory features of speech sounds. Proceedings of the National Academy of Sciences of the United States of America. 2006;103:7865–7870. doi: 10.1073/pnas.0509989103.

- Riecker A, Ackermann H, Wildgruber D, Dogil G, Grodd W. Opposite hemispheric lateralization effects during speaking and singing at motor cortex, insula and cerebellum. NeuroReport. 2000;11:1997–2000. doi: 10.1097/00001756-200006260-00038.

- Riecker A, Brendel B, Ziegler W, Erb M, Ackermann H. The influence of syllable onset complexity and syllable frequency on speech motor control. Brain Lang. 2008;107:102–113. doi: 10.1016/j.bandl.2008.01.008.

- Rizzolatti G, Arbib MA. Language within our grasp. Trends in Neurosciences. 1998;21:188–194. doi: 10.1016/s0166-2236(98)01260-0.

- Rizzolatti G, Fadiga L, Gallese V, Fogassi L. Premotor cortex and the recognition of motor actions. Brain Res Cogn Brain Res. 1996;3:131–141. doi: 10.1016/0926-6410(95)00038-0.

- Rizzolatti G, Sinigaglia C. Mirror neurons and motor intentionality. Funct Neurol. 2007;22:205–210.

- Rogers RD, Sahakian BJ, Hodges JR, Polkey CE, Kennard C, Robbins TW. Dissociating executive mechanisms of task control following frontal lobe damage and Parkinson's disease. Brain. 1998;121:815–842. doi: 10.1093/brain/121.5.815.

- Saarinen T, Laaksonen H, Parviainen T, Salmelin R. Motor cortex dynamics in visuomotor production of speech and non-speech mouth movements. Cereb Cortex. 2006;16:212–222. doi: 10.1093/cercor/bhi099.

- Salmelin R, Sams M. Motor cortex involvement during verbal versus non-verbal lip and tongue movements. Hum Brain Mapp. 2002;16:81–91. doi: 10.1002/hbm.10031.

- Schulz GM, Peterson T, Sapienza CM, Greer M, Friedman W. Voice and speech characteristics of persons with Parkinson's disease pre- and post-pallidotomy surgery: preliminary findings. J Speech Lang Hear Res. 1999;42:1176–1194. doi: 10.1044/jslhr.4205.1176.

- Scott SK, Blank CC, Rosen S, Wise RJS. Identification of a pathway for intelligible speech in the left temporal lobe. Brain. 2000;123:2400–2406. doi: 10.1093/brain/123.12.2400.

- Smotherman MS. Sensory feedback control of mammalian vocalizations. Behavioural Brain Research. 2007;182:315–326. doi: 10.1016/j.bbr.2007.03.008.

- Soros P, Sokoloff LG, Bose A, McIntosh AR, Graham SJ, Stuss DT. Clustered functional MRI of overt speech production. Neuroimage. 2006;32:376–387. doi: 10.1016/j.neuroimage.2006.02.046.

- Vitevitch MS, Luce PA, Pisoni DB, Auer ET. Phonotactics, neighborhood activation, and lexical access for spoken words. Brain and Language. 1999;68:306–311. doi: 10.1006/brln.1999.2116.

- Warren JE, Wise RJS, Warren JD. Sounds do-able: Auditory-motor transformations and the posterior temporal plane. Trends in Neurosciences. 2005;28:636–643. doi: 10.1016/j.tins.2005.09.010.

- Watkins KE, Strafella AP, Paus T. Seeing and hearing speech excites the motor system involved in speech production. Neuropsychologia. 2003;41:989–994. doi: 10.1016/s0028-3932(02)00316-0.

- Whalen DH, Benson RR, Richardson M, Swainson B, Clark VP, Lai S, Mencl WE, Fulbright RK, Constable RT, Liberman AM. Differentiation of speech and nonspeech processing within primary auditory cortex. Journal of the Acoustical Society of America. 2006;119:575–581. doi: 10.1121/1.2139627.

- Wildgruber D, Ackermann H, Klose U, Kardatzki B, Grodd W. Functional lateralization of speech production at primary motor cortex: a fMRI study. NeuroReport. 1996;7:2791–2795. doi: 10.1097/00001756-199611040-00077.

- Wilson SM, Iacoboni M. Neural responses to non-native phonemes varying in producibility: Evidence for the sensorimotor nature of speech perception. Neuroimage. 2006;33:316–325. doi: 10.1016/j.neuroimage.2006.05.032.

- Wilson SM, Saygin AP, Sereno MI, Iacoboni M. Listening to speech activates motor areas involved in speech production. Nature Neuroscience. 2004;7:701–702. doi: 10.1038/nn1263.

- Wise RJS, Greene J, Büchel C, Scott SK. Brain regions involved in articulation. Lancet. 1999;353:1057–1061. doi: 10.1016/s0140-6736(98)07491-1.

- Zaehle T, Geiser E, Alter K, Jancke L, Meyer M. Segmental processing in the human auditory dorsal stream. Brain Res. 2008;1220:179–190. doi: 10.1016/j.brainres.2007.11.013.

- Zarate JM, Zatorre RJ. Neural substrates governing audiovocal integration for vocal pitch regulation in singing. Neurosciences and Music II: From Perception to Performance. 2005;1060:404–408. doi: 10.1196/annals.1360.058.

- Ziegler W, Hartmann E, Hoole P. Syllabic timing in dysarthria. J Speech Hear Res. 1993;36:683–693. doi: 10.1044/jshr.3604.683.

- Zilles K, Schlaug G, Matelli M, Luppino G, Schleicher A, Qu M, Dabringhaus A, Seitz R, Roland PE. Mapping of human and macaque sensorimotor areas by integrating architectonic, transmitter receptor, MRI and PET data. J Anat. 1995;187(Pt 3):515–537.