Abstract

The tendency to perceive faces in random patterns exhibiting configural properties of faces is an example of pareidolia. Perception of ‘real’ faces has been associated with a cortical response signal arising about 170 ms after stimulus onset, but what happens when non-face objects are perceived as faces? Using magnetoencephalography (MEG), we found that objects incidentally perceived as faces evoked an early (165 ms) activation in the ventral fusiform cortex, at a time and location similar to those evoked by faces, whereas common objects did not evoke such activation. An earlier peak at 130 ms was seen only for images of real faces. Our findings suggest that face perception evoked by face-like objects is a relatively early process, and not a late cognitive re-interpretation.

Keywords: Face perception, subcortical route, object perception, magnetoencephalography

Introduction

“An old-time hominid would be liable to pay dearly, had s/he failed to recognize a pair of glowing dots in the bush at dark as the eyes of a predator, mistaking it for two fireflies” David Navon

We tend to see faces in objects whose constituent parts resemble those of a face. This is an example of pareidolia, the perception of an ambiguous and random stimulus as significant, such as seeing faces in landscapes, in clouds, or even in grilled toast [1]. Why and how does this happen? Is it because our brains are hard-wired to detect the presence of a face as quickly as possible, or is it a later cognitive construction or interpretation?

Face perception is an automatic, rapid and subconscious process, already present in human newborns, who preferentially orient towards simple schematic face-like patterns (for review see [2,3]). The neural substrate for face processing consists of a distributed network of cortical and subcortical regions. The cortical areas include the inferior occipital gyrus, the fusiform gyrus, the superior temporal sulcus, and the inferior frontal gyrus, while the subcortical network comprises the superior colliculus, the pulvinar nucleus of the thalamus, and the amygdala [4-6]. In adults, the subcortical route supports residual face-processing abilities in blindsight patients [7], but its role in the intact brain is not clear.

Magnetoencephalographic [8-10] and electroencephalographic studies have demonstrated that face perception is accompanied by a signal arising around 170 ms after stimulus onset, the N170 (for review, see [11]), and this peak of activity has been associated with face identification [12]. This face-specific component is correlated with activation of the Fusiform Face Area (FFA) and the Superior Temporal Sulcus (STS) [13]. However, the face-specificity of this component has been challenged by a recent study examining the effect of interstimulus perceptual variance (ISPV) ([14], but see [15]). In addition to this signal at 170 ms, MEG studies have reported an earlier face-specific activation, peaking between 100 and 120 ms after stimulus onset, which has been associated with face categorization processes [10,16-18].

We used MEG to measure brain responses to photographs of faces, objects, and objects that can be interpreted as faces, to determine whether face perception evoked by these objects is a late cognitive process or is initiated in the earliest stages of face processing.

Materials and Methods

Nine participants (mean age 27y; 3 males) with normal or corrected-to-normal vision volunteered to take part in the study. All procedures were approved by the Massachusetts General Hospital Institutional Review Board, and informed written consent was obtained from each participant.

MEG Experiment

Stimuli consisted of neutral faces from the NimStim Emotional Face Stimuli database (http://www.macbrain.org/faces/index.htm#faces), and of objects and face-like objects obtained from the Francois & Jean Robert FACES book (Figure 1). The control objects were chosen to have a global shape similar to that of the face-like object stimuli (Figure 2C).

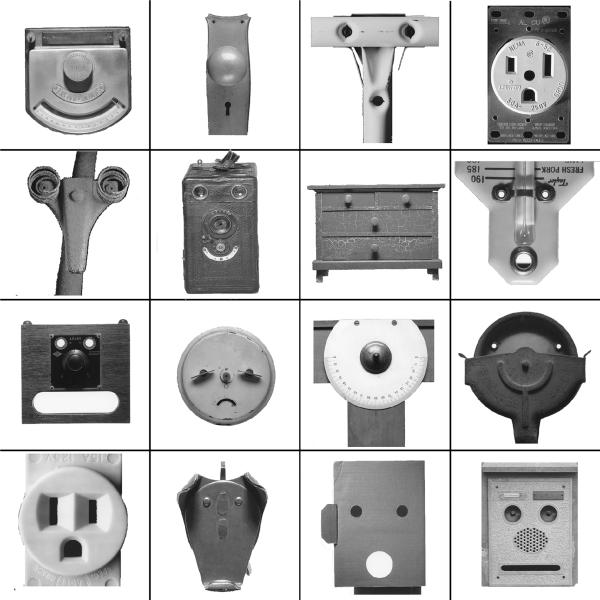

Figure 1.

Examples of stimuli from the Francois & Jean Robert FACES book (with permission of the authors).

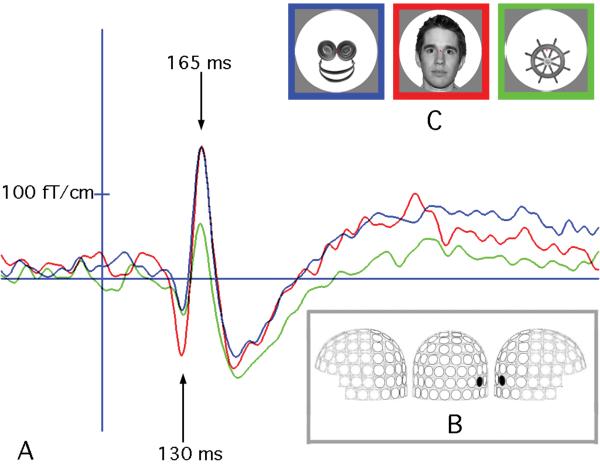

Figure 2.

MEG responses to images of real faces, face-like objects and control objects. (A) Time course of the MEG signal in one participant, recorded at a right lateral gradiometer sensor (B), showing a response specific to real faces peaking at 130 ms, followed by a similar response to faces and face-like objects at 165 ms. Panel C shows an example of the stimuli used in our experiment and the color code for panel A.

The stimuli of the three categories were controlled for low-level parameters. The spatial frequency content of the images in the three conditions was compared by computing the mean power spectral density of each image. Two-sample t-tests between condition pairs showed that the face, object, and face-like object conditions did not differ significantly in spatial frequency content.
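As a minimal sketch of such a spectral comparison, the Python code below computes each image's power spectral density, averages it within rings of spatial frequency, and computes a pooled-variance two-sample t statistic per frequency band. The text does not say how the spectra were summarized, so the radial binning, the number of bins, and the helper names `radial_power_spectrum` and `pooled_t` are hypothetical.

```python
import numpy as np

def radial_power_spectrum(image, n_bins=8):
    """Hypothetical helper: power spectral density of a grayscale image,
    averaged within n_bins rings of spatial frequency (0 to 0.5 cycles/pixel)."""
    img = np.asarray(image, dtype=float)
    img = img - img.mean()                        # drop the DC (mean luminance) term
    power = np.abs(np.fft.fft2(img)) ** 2 / img.size
    fy = np.fft.fftfreq(img.shape[0])[:, None]    # vertical frequencies
    fx = np.fft.fftfreq(img.shape[1])[None, :]    # horizontal frequencies
    radius = np.sqrt(fy ** 2 + fx ** 2)
    keep = radius <= 0.5                          # ignore corners beyond the Nyquist radius
    bins = np.minimum((radius / 0.5 * n_bins).astype(int), n_bins - 1)
    sums = np.bincount(bins[keep], weights=power[keep], minlength=n_bins)
    counts = np.bincount(bins[keep], minlength=n_bins)
    return sums / np.maximum(counts, 1)

def pooled_t(a, b):
    """Student's two-sample t statistic (pooled variance), one value per column."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    na, nb = a.shape[0], b.shape[0]
    pooled_var = ((na - 1) * a.var(axis=0, ddof=1)
                  + (nb - 1) * b.var(axis=0, ddof=1)) / (na + nb - 2)
    return (a.mean(axis=0) - b.mean(axis=0)) / np.sqrt(pooled_var * (1 / na + 1 / nb))

# Toy usage with random images standing in for two stimulus categories
rng = np.random.default_rng(0)
faces = np.array([radial_power_spectrum(rng.random((64, 64))) for _ in range(40)])
objects = np.array([radial_power_spectrum(rng.random((64, 64))) for _ in range(42)])
t_per_band = pooled_t(faces, objects)             # one t value per frequency ring
```

Averaging within frequency bands, rather than over the whole spectrum, is a deliberate choice here: by Parseval's theorem the whole-spectrum mean of a mean-removed image reduces to its pixel variance, which does not describe how power is distributed across spatial frequencies. P-values can be obtained from `scipy.stats.ttest_ind` with its default `equal_var=True`, which uses the same pooled statistic.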

A total of 123 distinct upright black-and-white stimuli (40 faces, 41 face-like objects and 42 objects) were projected with an LP350 Digital Light Processor projector (InFocus, Wilsonville, OR) onto a back-projection screen placed 1.5 m in front of the subject. Stimuli were presented for 700 ms, followed by a blank interval of 1600 to 2400 ms during which only a fixation cross was present. To control for retinotopic differences, each stimulus was contained within a circle 480 pixels in diameter and had a fixation cross at its center (Figure 2C). Upright stimuli were presented in random order, 120 times per condition, for a total of 360 trials. In addition, faces were presented upside down 93 times, randomly interspersed. The subject's task was to respond every time they saw an inverted face. Only upright trials were analyzed for this study.
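The trial structure above can be sketched as a randomized schedule. This Python sketch assumes that the blank interval is drawn uniformly between 1600 and 2400 ms and that all 453 trials (3 × 120 upright plus 93 inverted faces) are shuffled together in one sequence; the text states neither, and `build_schedule` is a hypothetical helper, not the presentation software actually used.

```python
import random

STIM_MS = 700.0                    # stimulus duration stated in the text
ISI_RANGE_MS = (1600.0, 2400.0)    # blank interval with fixation cross only
CATEGORIES = ("face", "face-like object", "object")

def build_schedule(n_per_condition=120, n_inverted_faces=93, seed=None):
    """Hypothetical helper: shuffled trial list with onset times in ms.

    Counts follow the text; the uniform ISI draw and the single global
    shuffle are assumptions, not the authors' documented procedure.
    """
    rng = random.Random(seed)
    trials = [(cat, "upright") for cat in CATEGORIES for _ in range(n_per_condition)]
    trials += [("face", "inverted")] * n_inverted_faces   # catch trials for the task
    rng.shuffle(trials)

    schedule, onset = [], 0.0
    for category, orientation in trials:
        schedule.append({
            "onset_ms": onset,
            "category": category,
            "orientation": orientation,
            "is_target": orientation == "inverted",   # subject responds to these
        })
        onset += STIM_MS + rng.uniform(*ISI_RANGE_MS)  # stimulus + jittered blank
    return schedule

# Usage: reproducible schedule; only upright trials would enter the analysis
schedule = build_schedule(seed=1)
upright = [t for t in schedule if t["orientation"] == "upright"]
```

Keeping the inverted-face targets in the same shuffled stream means the subject cannot predict when a response is required, while the upright trials used for analysis carry no motor response.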

MEG data were acquired with a 306-channel Elekta-Neuromag VectorView system. Eye movements and blinks were monitored with vertical and horizontal electro-oculograms (EOG). Two subjects could not be analyzed because of technical (motion-related) difficulties. MEG signals were averaged across trials for each condition, time-locked to stimulus onset, and the evoked responses were low-pass filtered at 40 Hz. T1-weighted structural MR images of the participants were co-registered with their MEG data. The current distribution was estimated at each cortical location using the minimum-norm estimate (MNE) method [19]. Group movies were created by morphing individual source estimates onto an average brain of all the subjects. The FFA was functionally defined for each subject as the area within the fusiform gyrus activated around 170 ms in a separate MEG data set acquired while presenting neutral and emotional faces. Time courses were extracted from this FFA region of interest (ROI) for each condition. Non-parametric one-way repeated-measures ANOVAs were computed over 20 ms windows around the peak of activation.
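For readers unfamiliar with the minimum-norm estimate [19], its linear inverse operator can be written as W = R Gᵀ (G R Gᵀ + λ²C)⁻¹, so that the source estimate is ĵ = W b for a sensor measurement b. Here G is the gain (lead-field) matrix from the forward model, C the noise covariance, R the source covariance and λ² a regularization parameter. The NumPy sketch below implements only this textbook form on toy random matrices; the depth weighting, orientation constraints, noise-covariance estimate and λ² used in this study are not reported here, and `mne_inverse_operator` is a hypothetical name.

```python
import numpy as np

def mne_inverse_operator(gain, noise_cov, source_cov, lambda2):
    """Hypothetical helper: W = R G^T (G R G^T + lambda2 * C)^-1.

    gain:       G, shape (n_sensors, n_sources)
    noise_cov:  C, shape (n_sensors, n_sensors), symmetric positive definite
    source_cov: R, shape (n_sources, n_sources), symmetric
    lambda2:    regularization parameter (lambda squared)
    """
    G = np.asarray(gain, dtype=float)
    C = np.asarray(noise_cov, dtype=float)
    R = np.asarray(source_cov, dtype=float)
    A = G @ R @ G.T + lambda2 * C           # (n_sensors, n_sensors), symmetric
    # Since A and R are symmetric, W^T = A^-1 G R; solve() avoids an explicit inverse.
    return np.linalg.solve(A, G @ R).T      # (n_sources, n_sensors)

# Toy usage: 20 "sensors", 200 "sources", one simulated active source.
rng = np.random.default_rng(0)
G = rng.standard_normal((20, 200))
j_true = np.zeros(200)
j_true[42] = 1.0
b = G @ j_true + 0.01 * rng.standard_normal(20)
W = mne_inverse_operator(G, np.eye(20), np.eye(200), lambda2=0.1)
j_hat = W @ b                                # estimated current at every source
```

Because there are far more sources than sensors, many current distributions fit the data equally well; the minimum-norm choice picks the one with the smallest (R-weighted) norm, and λ² trades data fit against noise amplification. With identity covariances and λ² approaching zero, W approaches the Moore-Penrose pseudoinverse of G.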

Behavioral experiment

To ensure that our face-like stimuli were indeed perceived as faces, we performed a behavioral experiment in eight participants (mean age 30y; 4 males). Objects and face-like objects were presented twice in random order, upright and inverted, and participants pressed one button when the stimulus looked like a face and another when it did not.

Results

Behavioral experiment

Subjects classified 96.3 ± 4.4% (mean ± SD) of upright face-like objects in the category “looks like a face”.
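The summary above is a mean ± SD across participants of per-participant percentages. A minimal standard-library sketch, assuming each participant's responses to upright face-like objects are stored as booleans and that SD denotes the sample standard deviation (the text does not specify); `face_response_rate` and `summarize_rates` are hypothetical names, and the usage data are made up.

```python
from statistics import mean, stdev

def face_response_rate(responses):
    """Percentage of trials on which 'looks like a face' was chosen (True)."""
    return 100.0 * sum(responses) / len(responses)

def summarize_rates(per_participant):
    """Mean and sample SD (n - 1 denominator) of per-participant percentages."""
    rates = [face_response_rate(r) for r in per_participant]
    return mean(rates), stdev(rates)

# Toy usage with two made-up participants (not the study's data)
m, sd = summarize_rates([[True, True, True, False], [True, True, True, True]])
```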

MEG experiment

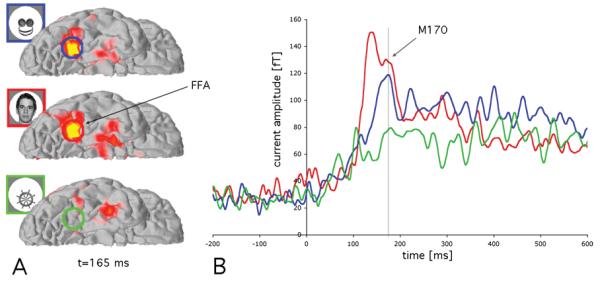

Source analyses of the MEG data indicated that the FFA had an early response to faces peaking at about 130 ms, which may have been evoked by the global ovoid shape of faces and may reflect face detection processes [20]. After that, the FFA responded similarly to faces and face-like objects at 165 ms (p > 0.05), but differently to objects (p < 0.001), as shown in Figures 2 and 3. This suggests that seeing faces in object stimuli with some face cues is mediated by relatively early components of the visual system, rather than resulting from a late, post-recognition reinterpretation of these objects as faces. By contrast, another face-processing area, the superior temporal sulcus (STS), did not differentiate between objects and face-like objects (p > 0.05), but did differentiate between faces and face-like objects (p < 0.01), and between faces and objects (p < 0.001).

Figure 3.

MEG source estimates averaged across all participants. Panel A shows a ventral view of the right hemisphere with the average MEG signal at 165 ms, showing FFA activation for faces and face-like objects, but not for objects. Panel B shows the average time course across all participants in a region of interest (ROI) located in the FFA. In addition to the early response to faces (in red, at about 130 ms), both faces and face-like objects activate the FFA at around 165 ms, a signal usually referred to as the M170.
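The FFA comparisons reported above rest on the non-parametric one-way repeated-measures ANOVAs over 20 ms windows described in Materials and Methods. The text does not name the test; the Friedman test is a standard non-parametric repeated-measures analogue of one-way ANOVA, and the standard-library sketch below assumes it. It averages an ROI time course within a 20 ms window and computes the Friedman statistic Q across the three conditions (faces, face-like objects, objects). With k = 3 conditions, Q is referred to a chi-square distribution with 2 degrees of freedom, whose survival function is exactly exp(-Q/2); this is an approximation that is rough for small samples such as the seven analyzed participants, and the sketch applies no tie correction. All helper names are hypothetical.

```python
import math

def window_mean(timecourse, times_ms, center_ms, width_ms=20.0):
    """Mean ROI amplitude within a window of width_ms centered on center_ms."""
    half = width_ms / 2.0
    values = [v for v, t in zip(timecourse, times_ms)
              if center_ms - half <= t <= center_ms + half]
    return sum(values) / len(values)

def average_ranks(row):
    """1-based ranks within one subject; tied values share their mean rank."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks

def friedman_3(data):
    """Friedman test for k = 3 repeated-measures conditions (no tie correction).

    data: one row per subject, e.g. [faces, face_like, objects] window means.
    Returns (Q, p) with p from the chi-square(2) approximation.
    """
    n, k = len(data), 3
    if any(len(row) != k for row in data):
        raise ValueError("each row must hold exactly 3 condition values")
    rank_sums = [0.0] * k
    for row in data:
        for c, r in enumerate(average_ranks(row)):
            rank_sums[c] += r
    q = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    return q, math.exp(-q / 2.0)   # chi-square survival function for df = 2
```

In use, each subject's row would hold `window_mean` of the FFA time course around the peak (for example 165 ms) for each condition. For tie-free data, `scipy.stats.friedmanchisquare` returns the same statistic.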

Discussion

Our findings demonstrate that non-face objects can be perceived as faces and can activate a region of the ventral fusiform gyrus typically associated with face processing, the fusiform face area (FFA) (Figure 3). While the earliest (∼130 ms) activation in the FFA occurred only for real faces, at ∼165 ms the FFA was activated similarly by all stimuli perceived as faces, whether they were real faces or non-face objects with some face-like configural cues (Figures 2 and 3). The earlier difference between faces and face-like objects may reflect a very early differentiation based on global face shape, which is processed before the face-like configuration of the inner elements of the stimuli.

Recent studies have challenged the face-specificity of the N170 [14], and several studies have reported face-specific activation earlier than 170 ms [10,16-18]. Consistent with the data presented here, in their ERP investigation Thierry et al. [14] observed a greater P1 for faces than for cars and no difference between faces and cars in the N170 range. However, full-frontal views of cars may also be perceived as face-like, which could explain why they produced the same pattern of activity as our face-like objects: an activation similar to that evoked by faces at the N170 latency, and a differential early (P1) activation. Notably, although our present study controlled for retinotopy and spatial frequency, we did not manipulate ISPV, yet found differential activation for face-like objects and control objects.

The relatively early activation of the FFA at 165 ms by the face-like objects suggests that the perception of these objects as faces is not a post-recognition cognitive re-interpretation process; rather, the face cues in the face-like objects are perceived early in the recognition process. This process may be supported by the subcortical network shown to process behaviorally relevant unseen visual events [3,21], and we are planning further experiments that will specifically address this question.

Why do we sometimes mistakenly interpret a non-face object as a face? Importantly, although the face-like objects we employed shared certain internal configural cues with faces (elements representing two eyes and a mouth/nose), they were not schematic faces, and most of them lacked the global ovoid shape of a real face (Figure 1). Moreover, whereas non-face objects have been shown to activate the FFA after extensive training (e.g., “Greebles” [22]), our face-like objects readily evoked an early (∼165 ms) activation, similar to that evoked by faces, without any training. This suggests that the FFA can be activated by crude face-like configural cues embedded in otherwise typical everyday objects.

Dot patterns consisting of two horizontally arrayed elements in the upper part of the stimulus (the “eyes”) and one element vertically centered in the lower part of the stimulus have been shown to elicit preferential looking in infants, as do real faces [3]; a similar configuration of cues was also embedded in our face-like objects (Figure 1).

Conclusion

The similar responses in the FFA at 165 ms to images of both face-like objects and real faces suggest that our visual system has a propensity to rapidly interpret stimuli as faces based on minimal cues. This may reflect an innate faculty to detect faces, and may rely on activation of the subcortical route. In addition, face-like objects may provide a new way to study face perception in disorders characterized by difficulties in social and communication skills, such as autism. There has been a long debate about the integrity of the FFA in autism, and abnormal N170 responses have been reported in autism [23,24]. However, face-perception studies in autistic individuals are often difficult because they tend to avoid looking at faces [25]. Our findings thus may provide a new way of investigating the integrity of the face-processing route in autism without the confound of the social factors inherent in real faces.

Acknowledgments

This work was supported by NIH NINDS R01NS044824 and Swiss National Science Foundation PP00B-110741.

We thank Jasmine Boshyan for assistance with stimuli preparation, as well as Matti Hämäläinen and Ksenija Marinkovic for fruitful discussions.

References

- [1] BBC News. Virgin Mary’s toast fetches $28,000. 2004 Nov 23.

- [2] Palermo R, Rhodes G. Are you always on my mind? A review of how face perception and attention interact. Neuropsychologia. 2007;45:75–92. doi: 10.1016/j.neuropsychologia.2006.04.025.

- [3] Johnson M. Subcortical face processing. Nat Rev Neurosci. 2005;6:2–9. doi: 10.1038/nrn1766.

- [4] Ishai A. Let’s face it: It’s a cortical network. Neuroimage. 2008;40:415–419. doi: 10.1016/j.neuroimage.2007.10.040.

- [5] Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4:223–233. doi: 10.1016/s1364-6613(00)01482-0.

- [6] Vuilleumier P, Armony JL, Driver J, Dolan RJ. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat Neurosci. 2003;6:624–631. doi: 10.1038/nn1057.

- [7] Morris JS, DeGelder B, Weiskrantz L, Dolan RJ. Differential extrageniculostriate and amygdala responses to presentation of emotional faces in a cortically blind field. Brain. 2001;124:1241–1252. doi: 10.1093/brain/124.6.1241.

- [8] Xu Y, Liu J, Kanwisher N. The M170 is selective for faces, not for expertise. Neuropsychologia. 2005;43:588–597. doi: 10.1016/j.neuropsychologia.2004.07.016.

- [9] Watanabe S, Miki K, Kakigi R. Mechanisms of face perception in humans: a magneto- and electro-encephalographic study. Neuropathology. 2005;25:8–20. doi: 10.1111/j.1440-1789.2004.00603.x.

- [10] Halgren E, Raij T, Marinkovic K, Jousmaki V, Hari R. Cognitive response profile of the human fusiform face area as determined by MEG. Cereb Cortex. 2000;10:69–81. doi: 10.1093/cercor/10.1.69.

- [11] Jeffreys A. Evoked potential studies of face and object processing. Visual Cognition. 1996;3:1–38.

- [12] Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. J Cognitive Neurosci. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551.

- [13] Sadeh B, Zhdanov A, Podlipsky I, Hendler T, Yovel G. The validity of the face-selective ERP N170 component during simultaneous recording with functional MRI. Neuroimage. 2008;42:778–786. doi: 10.1016/j.neuroimage.2008.04.168.

- [14] Thierry G, Martin CD, Downing P, Pegna AJ. Controlling for interstimulus perceptual variance abolishes N170 face selectivity. Nat Neurosci. 2007;10:505–511. doi: 10.1038/nn1864.

- [15] Rossion B, Jacques C. Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. Neuroimage. 2008;39:1959–1979. doi: 10.1016/j.neuroimage.2007.10.011.

- [16] Liu J, Harris A, Kanwisher N. Stages of processing in face perception: an MEG study. Nat Neurosci. 2002;5:910–916. doi: 10.1038/nn909.

- [17] Meeren HKM, Hadjikhani N, Ahlfors SP, Hamalainen MS, de Gelder B. Early category-specific cortical activation revealed by stimulus inversion. PLOS One. 2008;3:e3503, 3501–3511. doi: 10.1371/journal.pone.0003503.

- [18] Linkenkaer-Hansen K, Palva JM, Sams M, Hietanen JK, Aronen HJ, Ilmoniemi RJ. Face-selective processing in human extrastriate cortex around 120 ms after stimulus onset revealed by magneto- and electroencephalography. Neurosci Lett. 1998;253:147–150. doi: 10.1016/s0304-3940(98)00586-2.

- [19] Hamalainen MS, Ilmoniemi RJ. Interpreting magnetic fields of the brain: minimum norm estimates. Med Biol Eng Comput. 1994;32:35–42. doi: 10.1007/BF02512476.

- [20] Tsuchiya N, Adolphs R. Emotion and consciousness. Trends Cogn Sci. 2007. doi: 10.1016/j.tics.2007.01.005.

- [21] Morris JS, Ohman A, Dolan RJ. A subcortical pathway to the right amygdala mediating “unseen” fear. Proc Natl Acad Sci U S A. 1999;96:1680–1685. doi: 10.1073/pnas.96.4.1680.

- [22] Gauthier I, Tarr MJ, Anderson AW, Skudlarski P, Gore JC. Activation of the middle fusiform ‘face area’ increases with expertise in recognizing novel objects. Nat Neurosci. 1999;2:568–573. doi: 10.1038/9224.

- [23] McPartland J, Dawson G, Webb SJ, Panagiotides H, Carver LJ. Event-related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. J Child Psychol Psychiatry. 2004;45:1235–1245. doi: 10.1111/j.1469-7610.2004.00318.x.

- [24] Bailey AJ, Braeutigam S, Jousmaki V, Swithenby SJ. Abnormal activation of face processing systems at early and intermediate latency in individuals with autism spectrum disorder: a magnetoencephalographic study. Eur J Neurosci. 2005;21:2575–2585. doi: 10.1111/j.1460-9568.2005.04061.x.

- [25] Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch Gen Psychiatry. 2002;59:809–816. doi: 10.1001/archpsyc.59.9.809.