Abstract

Although self-rated health is proposed for use in public health monitoring, previous reports on US levels and trends in self-rated health have shown ambiguous results. This study presents a comprehensive comparative analysis of responses to a common self-rated health question in 4 national surveys from 1971 to 2007: the National Health and Nutrition Examination Survey, Behavioral Risk Factor Surveillance System, National Health Interview Survey, and Current Population Survey. In addition to variation in the levels of self-rated health across surveys, striking discrepancies in time trends were observed. Whereas data from the Behavioral Risk Factor Surveillance System demonstrate that Americans were increasingly likely to report “fair” or “poor” health over the last decade, those from the Current Population Survey indicate the opposite trend. Subgroup analyses revealed that the greatest inconsistencies were among young respondents, Hispanics, and those without a high school education. Trends in “fair” or “poor” ratings were more inconsistent than trends in “excellent” ratings. The observed discrepancies elude simple explanations but suggest that self-rated health may be unsuitable for monitoring changes in population health over time. Analyses of socioeconomic disparities that use self-rated health may be particularly vulnerable to comparability problems, as inconsistencies are most pronounced among the lowest education group. More work is urgently needed on robust and comparable approaches to tracking population health.

Keywords: health status, health surveys, public health, questionnaires

Measures of health status are widely used in clinical trials and studies on quality of care (1, 2). There is also increasing interest in using health status measures to track changes in population health and health service needs and to monitor progress toward broad goals for the health of communities and nations (3–5). Interest in tracking population health extends to comparisons across countries and measurement of disparities within countries (6–10). Further, population health measures capturing nonfatal outcomes are essential to understanding how well public health and medical care systems are performing (11, 12).

Global measures of self-rated health, based on responses to a single survey question, have been proposed as reliable and valid measures of population health (13–15) and recommended for use in health monitoring by the US Centers for Disease Control and Prevention, the World Health Organization, and the European Commission (4, 13, 16). The most commonly used survey item asks people to characterize their health as “excellent, very good, good, fair, or poor.” The resulting categorical responses are often dichotomized as “fair” or “poor” versus all other categories (7, 17–19). A recent Institute of Medicine report included the percentage of adults reporting “fair” or “poor” health among the set of 8 indicators recommended for tracking the progress of health in the United States (20).

At the individual level, self-rated health based on a single item has been found to be a strong predictor of health-care utilization, functional ability, and subsequent mortality, even after controlling for other measured indicators of health status and socioeconomic variables (21–26). Based on the strength of these associations, self-rated health has been used extensively in policy analyses as an overall measure of health outcomes (27–30). Despite its appeal as a simple measure with consistent predictive power in cohort studies, however, existing evidence on trends in self-rated health in the United States—where time series are available from multiple survey programs—points to inconsistent population-level patterns across data sources and studies. Zack et al. (31) analyzed self-rated health responses from the Behavioral Risk Factor Surveillance System and found worsening trends from 1993 to 2001. Analyses of trends in the National Health Interview Survey (32), on the other hand, indicate that self-rated health has remained relatively stable. Such discrepant findings based on responses to the same item in different nationally representative surveys raise questions about the validity of inferences about population health based on self-rated health.

To further understand the potential use of self-rated health for population-level monitoring, we present a comprehensive analysis of levels and trends in self-rated health responses in 4 separate nationally representative US surveys. In particular, we focus on characterizing discrepancies between surveys, comparing discrepancies in self-rated health with those in other types of questions, analyzing differences in specific subgroups, and considering possible explanations for inconsistencies across surveys.

MATERIALS AND METHODS

Data sources

We compared responses to a common survey item on self-rated health in 4 national US health surveys from 1971 to 2007: the National Health and Nutrition Examination Survey (NHANES), Behavioral Risk Factor Surveillance System (BRFSS), National Health Interview Survey (NHIS), and Current Population Survey (CPS). Table 1 provides a summary of the key characteristics of each survey.

Table 1.

Summary of Key Survey Characteristics, United States, 1971–2007

| Survey | Mode of Data Collection | Years of Analysis | Sample Sizes, no.<sup>a,b</sup> | Response Rates, %<sup>a,b,c</sup> |
| --- | --- | --- | --- | --- |
| BRFSS | Telephone interview | 1993–2007 | 99,119–430,912 (years 1993 and 2007) | 53–77 (years 2001 and 2002) |
| CPS | Telephone and/or in-person interview, depending on state | 1996–2007 | 90,363–143,774 (years 1996 and 2002) | 83–86 (years 2005 and 1998, 2000) |
| NHANES | In-person interview and examination | 1971–1975, 1976–1980, 1988–1994, 1999–2000, 2001–2002, 2003–2004, 2005–2006 | 4,874–18,813 (years 1999–2000 and 1988–1994) | 79–91 (years 2003–2004 and 1976–1980) |
| NHIS | In-person interview | 1982–2007 | 42,625–88,344 (years 1986 and 1992) | 86–97 (years 1999, 2005 and 1982) |

Abbreviations: BRFSS, Behavioral Risk Factor Surveillance System; CPS, Current Population Survey; NHANES, National Health and Nutrition Examination Survey; NHIS, National Health Interview Survey.

<sup>a</sup> Low and high reported across all years of analysis.

<sup>b</sup> The dates in years are provided, respectively, with the first year(s) as the date(s) for the smallest sample size or response rate and the second year(s) the date(s) for the largest.

<sup>c</sup> BRFSS: median cooperation rate across states, defined as the ratio of respondents interviewed to eligible units in which a respondent was selected and actually contacted; CPS: response rate for the March supplement; NHANES: response rate for the interview component; NHIS: overall family response rate for 1997 and onward and overall response rate for the pre-1997 years.

NHANES comprises a series of cross-sectional surveys of the civilian, noninstitutionalized population aged 2 months or older (33). NHANES includes an in-person interview and a subsequent examination component, with both physical and laboratory measurements. The first 3 rounds were conducted at irregular intervals between 1971 and 1994. Beginning in 1999, NHANES became a continuous survey with data released every 2 years.

BRFSS is an annual cross-sectional telephone survey started in 1984 (34). Currently, the survey is conducted by health departments in all 50 states and the District of Columbia by using a random-digit dialing method to obtain a state-representative sample of the civilian, noninstitutionalized population aged 18 years or more. The state samples can be combined to form a nationally representative sample.

NHIS is an annual cross-sectional household interview survey of the civilian, noninstitutionalized population, implemented since 1957 (35). The survey instrument is updated approximately every decade, with the last significant revision occurring in 1997. The current survey consists of a core questionnaire and supplementary material that may change each year.

CPS is a monthly nationally representative survey regarding the US labor force, including the noninstitutionalized population aged 16 years or more (36). The survey is conducted through both personal and telephone interviews, independently in each state. In the survey design, members of a household are interviewed for 4 months, left out of the sample the next 8 months, and interviewed again for the following 4 months. We restrict our analysis to the March supplement, which includes self-rated health.

Although NHIS and CPS elicit information on all household members from a single household respondent, we included only self-reports in our analyses. An anomaly in the 1998 CPS data set—the household respondent indicator is blank for 92% of the sample—makes it impossible to distinguish self-reports from proxy responses, so we have excluded these data from our analysis. Self-reports and proxy responses in CPS show minimal differences in levels and trends in all other years (Web Figure 1). (This is the first of 3 supplementary figures; each is referred to as “Web figure” in the text and is posted on the Journal’s website, http://aje.oxfordjournals.org/.)

Health measures

In each survey, analyses were based on responses to the question, “Would you say your health in general is excellent, very good, good, fair, or poor?” (The wording was rearranged slightly in BRFSS, as, “Would you say that in general your health is….”) Respondents who answered “don't know/not sure” or refused to answer were excluded from the analysis (these respondents constituted less than 1% of the overall survey samples in every year and every survey).

Initial analyses were based on dichotomizing self-rated health responses as “fair” or “poor” versus all other categories, following common practice (7, 17–19). In further analyses, we compared this approach with a range of alternatives.
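As an illustration of the dichotomization described above, the following is a minimal sketch, not the authors' code (the analyses were run in Stata). It computes a weighted proportion of “fair” or “poor” responses after dropping “don't know/not sure” answers and refusals. The function name, the response labels, and the example data are assumptions made for this sketch.

```python
# Hypothetical labels for the 5 valid response categories.
VALID = {"excellent", "very good", "good", "fair", "poor"}
FAIR_POOR = {"fair", "poor"}


def fair_poor_proportion(responses, weights):
    """Weighted share of valid respondents answering 'fair' or 'poor'."""
    numerator = 0.0
    denominator = 0.0
    for answer, weight in zip(responses, weights):
        if answer not in VALID:
            # Exclude "don't know/not sure" and refusals from the analysis.
            continue
        denominator += weight
        if answer in FAIR_POOR:
            numerator += weight
    return numerator / denominator


# Made-up responses and sample weights, for illustration only.
responses = ["excellent", "fair", "good", "refused", "poor", "very good"]
weights = [1.0, 2.0, 1.0, 5.0, 1.0, 1.0]
print(fair_poor_proportion(responses, weights))
```

In this toy example the refusal carries a large weight but does not affect the estimate, because excluded respondents are removed from both the numerator and the denominator.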

For comparison, we also examined responses to other questions common to the different surveys, including self-reported diabetes and body mass index computed from self-reported weight and height.

Age-standardized measures were computed on the basis of the 2000 US population by 5-year age intervals from 20 years to 70 years or older.
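The text does not name the standardization method; assuming direct standardization, the computation can be sketched as a weighted average of age-specific proportions, with weights taken from the standard population. The sketch below uses 3 coarse age groups and made-up standard weights purely for illustration; the actual analysis used 5-year intervals and the 2000 US population, whose values are not reproduced here.

```python
def age_standardize(age_specific_rates, standard_weights):
    """Directly standardized rate: standard-population-weighted mean of
    age-specific rates. Both arguments are dicts keyed by age group."""
    total = sum(standard_weights.values())
    return sum(
        age_specific_rates[group] * standard_weights[group]
        for group in standard_weights
    ) / total


# Hypothetical age-specific proportions reporting fair/poor health.
rates = {"20-49": 0.08, "50-64": 0.18, "65+": 0.28}
# Hypothetical standard population shares (NOT the 2000 US standard).
standard = {"20-49": 0.6, "50-64": 0.2, "65+": 0.2}

print(age_standardize(rates, standard))
```

Because every survey and year is weighted to the same standard age distribution, differences in the resulting figures cannot be attributed to differences in the age structure of the samples.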

Statistical methods

Sample weights were applied in each data set to account for unequal probabilities of selection, nonresponse, and noncoverage. The provided weights included ratio adjustments to match population distributions by age, sex, and race/ethnicity in each survey, except in some state samples from BRFSS, which matched only on age and sex. Variance estimation was undertaken by using Taylor-series linearization methods to account for complex survey designs including clustering, stratification, and unequal weights (37). For CPS, which does not include variables on stratification and clusters in the public-release data set, we developed synthetic design variables following the approach of Joliffe (38), based on resorting the data and assigning consecutive observations to synthetic clusters in a way that approximates the design effects in the actual CPS sample. Following Joliffe, we used cluster sizes of 4 housing units and sorted by household income to induce intracluster correlation in self-rated health, based on the underlying association between income and health.
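The synthetic-cluster step for CPS can be sketched roughly as follows. This is a simplified reading of the description above, not Joliffe's full procedure, which may include steps (for example, sorting within geographic units) that the text does not spell out. The function name and the example incomes are hypothetical.

```python
def assign_synthetic_clusters(households, cluster_size=4):
    """Map each household id to a synthetic cluster index.

    households: iterable of (household_id, household_income) pairs.
    Households are sorted by income and consecutive groups of
    `cluster_size` units are assigned to the same cluster.
    """
    ordered = sorted(households, key=lambda h: h[1])
    return {
        household_id: position // cluster_size
        for position, (household_id, _income) in enumerate(ordered)
    }


# Made-up incomes for 10 housing units, for illustration only.
incomes = [52000, 18000, 75000, 31000, 64000, 22000, 40000, 95000, 27000, 58000]
sample = [("h%02d" % i, income) for i, income in enumerate(incomes)]
clusters = assign_synthetic_clusters(sample)
print(clusters)
```

Grouping households with similar incomes places respondents with correlated health ratings in the same cluster, which is what allows the synthetic design to mimic the variance inflation of a real clustered sample.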

For each survey year, we computed confidence intervals around age-standardized proportions using different response categories. We examined patterns by sex and by 3 broad age groups: 20–49 years, 50–64 years, and 65 years or older. We also examined differences by race/ethnicity and educational level. Race/ethnicity was categorized as non-Hispanic white, non-Hispanic black, Hispanic, and other. Education was categorized as less than high school, high school, and more than high school.
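Confidence intervals of this kind rest on a design-based standard error. The sketch below shows one common form of Taylor-series linearization for a weighted proportion, under strong simplifying assumptions that are ours rather than the authors': a single stratum, clusters treated as sampled with replacement, and no age standardization. The function name and data are hypothetical, and survey software such as Stata handles the general stratified case.

```python
import math


def linearized_proportion(y, w, cluster):
    """Weighted proportion and its linearized standard error.

    y: 0/1 outcomes; w: sample weights; cluster: cluster label per respondent.
    Assumes one stratum with clusters sampled with replacement.
    """
    total_w = sum(w)
    p = sum(wi * yi for wi, yi in zip(w, y)) / total_w
    # Sum each respondent's linearized score within its cluster.
    scores = {}
    for yi, wi, ci in zip(y, w, cluster):
        scores[ci] = scores.get(ci, 0.0) + wi * (yi - p) / total_w
    n = len(scores)
    mean = sum(scores.values()) / n
    variance = n / (n - 1) * sum((z - mean) ** 2 for z in scores.values())
    return p, math.sqrt(variance)


# Made-up data: 8 respondents in 4 clusters of 2.
y = [1, 1, 0, 0, 0, 1, 0, 0]
w = [1.0] * 8
cluster = [0, 0, 1, 1, 2, 2, 3, 3]
p, se = linearized_proportion(y, w, cluster)
print(p, (p - 1.96 * se, p + 1.96 * se))
```

When responses within clusters are alike, the cluster totals vary more than independent observations would, so the standard error is larger than the simple random sampling value.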

To assess trends over the last decade overall and by sex, age, race, and education, we fit logistic regression models relating the probability of “fair” or “poor” self-ratings to calendar year over the period 1998–2007. We did not fit models to NHANES, as data are available only for 2-year periods starting in 1999–2000. We excluded CPS data from 1998 because self-reports could not be distinguished from proxy responses in that year, as noted above. Separate models were fit for each subgroup within each survey. Analogous logistic regression models were fit to the probability of reporting “excellent” health.
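A trend model of this form can be sketched as a logistic regression with calendar year as the only covariate. The example below fits such a model to grouped yearly counts by Newton's method using only the Python standard library. It ignores sample weights and the survey design, so it illustrates the model structure rather than the estimation actually used; the function name and the counts are hypothetical.

```python
import math


def fit_logistic_trend(years, events, totals, iterations=25):
    """Fit logit(p) = a + b * (year - first year) to grouped binomial data.

    events[i] of totals[i] respondents report the outcome in years[i].
    Returns (a, b); b is the log odds ratio per calendar year.
    """
    x = [year - years[0] for year in years]
    a, b = 0.0, 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, ei, ni in zip(x, events, totals):
            p = 1.0 / (1.0 + math.exp(-(a + b * xi)))
            residual = ei - ni * p        # score contribution
            info = ni * p * (1.0 - p)     # information contribution
            g0 += residual
            g1 += residual * xi
            h00 += info
            h01 += info * xi
            h11 += info * xi * xi
        det = h00 * h11 - h01 * h01
        # Newton step: solve the 2x2 system (information matrix) * step = score.
        a += (h11 * g0 - h01 * g1) / det
        b += (h00 * g1 - h01 * g0) / det
    return a, b


# Made-up yearly counts of fair/poor responses, for illustration only.
years = list(range(1999, 2008))
totals = [1000] * len(years)
events = [140, 143, 141, 146, 148, 147, 151, 153, 152]
intercept, slope = fit_logistic_trend(years, events, totals)
print(intercept, slope, math.exp(slope))
```

The exponentiated slope is the odds ratio for a 1-year increase in calendar year, the quantity on which the trend comparisons across surveys are based.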

All statistical analyses were undertaken by using Stata Release 10/SE (StataCorp LP, College Station, Texas).

RESULTS

In 2007, the age-standardized proportion of respondents reporting fair or poor health ranged from 12.0% (95% confidence interval (CI): 11.3, 12.7) in NHIS to 16.4% (95% CI: 15.9, 16.8) in BRFSS for males and from 13.5% (95% CI: 12.9, 14.1) in NHIS to 16.9% (95% CI: 16.6, 17.2) in BRFSS for females. NHANES estimates in 2005–2006 (the most recent available) were similar to those in BRFSS, but with greater uncertainty.

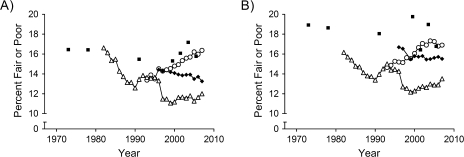

Trends in age-standardized probabilities of reporting fair or poor health are plotted in Figure 1. BRFSS shows increases of 15% among women and 22% among men in reports of fair or poor health over the period 1993–2007. NHIS, on the other hand, shows reductions in fair/poor health from 1982 to 1990, followed by slight increases for the next 2–3 years. Since 1993, changes in NHIS have been relatively modest, except for a sharp drop in 1997 coinciding with a major redesign of the survey, which preserved the exact wording but relocated the self-rated health question within the survey.

Figure 1.

Age-adjusted trends in self-rated health in males (A) and females (B) in 4 nationally representative surveys, United States, 1971–2007. Open circle, Behavioral Risk Factor Surveillance System; filled diamond, Current Population Survey; filled square, National Health and Nutrition Examination Survey; open triangle, National Health Interview Survey.

NHANES shows declines in fair/poor ratings from the first round of the survey (1971–1975) through the third round (1988–1994) in both sexes. In men, trends since 1999–2000 have been marked by rising reports of fair/poor health through 2003–2004, followed by a reversal of this pattern in 2005–2006; patterns for women have oscillated since 1999–2000. Finally, CPS indicates mostly steady reductions in fair/poor ratings for males since 1999 and flat trends for females over this period.

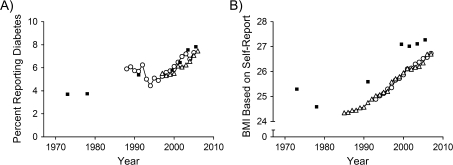

In order to consider whether differences among the surveys may apply more generally to other self-reported health-related items, we compared these results with trends in other variables. For example, Figure 2 presents results from NHIS, BRFSS, and NHANES for females on age-standardized proportions reporting diabetes, which are much more concordant than self-ratings of health. Results are similar for men (not shown). Figure 2 also presents a comparison of body mass index computed from self-reported weight and height. Although the levels and trends are similar in NHIS and BRFSS, estimates from NHANES are higher, by roughly the same increment in each year of comparison. Thus, in contrast to self-reported diabetes, self-reported body mass index appears subject to some systematic variation across surveys. Unlike self-rated health, however, the trend across surveys appears largely consistent despite variation in estimated levels.

Figure 2.

Age-adjusted trends in self-reported diabetes (A) and body mass index (BMI) (B) based on self-reported weight and height among females in 3 nationally representative surveys, United States, 1971–2007. Open circle, Behavioral Risk Factor Surveillance System; filled square, National Health and Nutrition Examination Survey; open triangle, National Health Interview Survey.

Analyses by age, race, and education

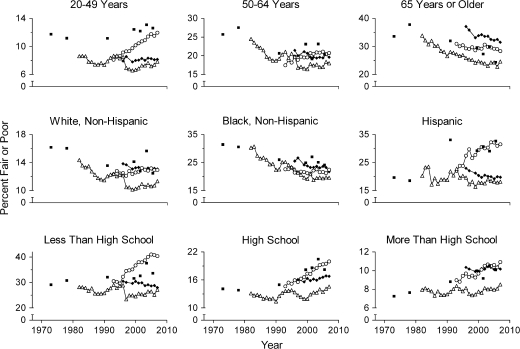

Disaggregation by age, race/ethnicity, and education reveals more subtle patterns (Figure 3). In the youngest age group, CPS and NHIS show the lowest fractions of respondents reporting fair or poor health. Conversely, in the oldest age group, the fraction reporting fair or poor health is highest in CPS. Overall, the sharpest divergence in trends across surveys appears in ages 20–49 years, with the proportion reporting fair/poor health in 2007 around 50% higher in BRFSS compared with NHIS or CPS, in contrast to relatively modest differences in 1993. In older age groups, differences in levels are smaller across surveys, in relative terms, but variation in time trends remains.

Figure 3.

Trends in self-rated health across age, race/ethnicity, and education subgroups, United States, 1971–2007. Open circle, Behavioral Risk Factor Surveillance System; filled diamond, Current Population Survey; filled square, National Health and Nutrition Examination Survey; open triangle, National Health Interview Survey.

Disaggregating by race and ethnicity, we observe the smallest inconsistencies among non-Hispanic African Americans and the largest among Hispanics. For Hispanic respondents, discrepancies among surveys have widened over time, with a nearly 2-fold difference in proportions reporting fair or poor health in NHIS versus BRFSS in 2007, compared with roughly equal proportions in the early 1990s. Levels and trends in the 4 surveys among non-Hispanic whites are moderately discrepant.

Disaggregating by educational level, the greatest discrepancies appear among those respondents without a high school diploma. The magnitudes of cross-survey differences in levels and trends between those with a high school diploma and those with at least some college are similar.

Although the poststratification weighting procedures in CPS, NHANES, and NHIS accounted for age, sex, and race/ethnicity, adjustment for race was incorporated in some states but not others in BRFSS (all states adjusted for age and sex). Education was not factored into the weights for any of the surveys. In our sample on self-rated health, we find some differences across surveys in the sample composition by race and education (Web Figure 2). Changes in these variables, however, are modest and gradual over the period of analysis, and cross-survey differences remain fairly constant over time, which suggests that discrepancies in self-rated health trends are not explained by differences in sample composition.

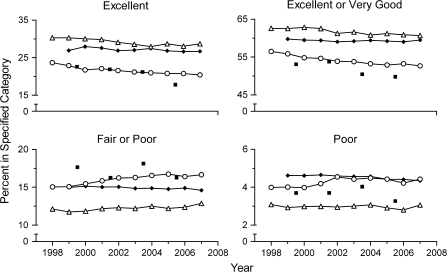

Alternative coding schemes for categorical self-ratings of health

Although researchers typically dichotomize self-rated health as “fair” or “poor” versus all other responses, we considered whether alternative approaches may yield more consistent results. Figure 4 shows trends in the 4 surveys since 1998 based on 4 different dichotomous coding schemes. (Web Figure 3 also presents trends in the average self-rated score, coding “excellent” as 5, “very good” as 4, and so on; these trends show discrepancies across surveys similar to those for “fair/poor” ratings.) The ordering of the different surveys in terms of the age-standardized responses is largely preserved across the different choices of dichotomous indicator, with NHIS producing the most favorable ratings, followed by CPS, BRFSS, and NHANES; the exception is the indicator of “poor” self-ratings, for which CPS is least favorable. Figure 4 suggests visually that the proportion of respondents rating themselves as “excellent” may yield more consistent trends across surveys than the standard choice of “fair/poor.” This possibility is evaluated formally in the statistical models described below.
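The alternative codings can be sketched as follows. The text names the “excellent,” “fair/poor,” and “poor” indicators and the 5-to-1 scoring; the fourth indicator used here (“excellent/very good”) is an assumption, as are the function name and the example data. Weights and age standardization are omitted for brevity.

```python
# Numeric scores as described in the text: "excellent" = 5 down to "poor" = 1.
SCORES = {"excellent": 5, "very good": 4, "good": 3, "fair": 2, "poor": 1}

# Dichotomous indicators; "excellent/very good" is an assumed fourth scheme.
INDICATORS = {
    "excellent": {"excellent"},
    "excellent/very good": {"excellent", "very good"},
    "fair/poor": {"fair", "poor"},
    "poor": {"poor"},
}


def summarize(responses):
    """Unweighted share of responses under each indicator, plus mean score."""
    n = len(responses)
    summary = {
        name: sum(r in categories for r in responses) / n
        for name, categories in INDICATORS.items()
    }
    summary["mean score"] = sum(SCORES[r] for r in responses) / n
    return summary


# Made-up responses, for illustration only.
example = ["excellent", "very good", "good", "fair", "poor"]
print(summarize(example))
```

All of these summaries are derived from the same 5-category item, so any difference in cross-survey consistency between them reflects which part of the response distribution is being tracked.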

Figure 4.

Age-adjusted trends in self-rated health, by category of response, United States, 1998–2007. Open circle, Behavioral Risk Factor Surveillance System; filled diamond, Current Population Survey; filled square, National Health and Nutrition Examination Survey; open triangle, National Health Interview Survey.

Estimated time trends, 1998–2007

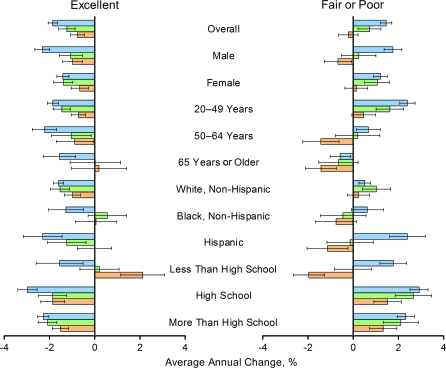

For the 3 surveys with annual reporting (CPS, NHIS, BRFSS), we modeled time trends from 1998 to 2007 using logistic regression of self-rated health (with either “excellent” or “fair/poor” ratings as the dependent variable) as a function of calendar year. Separate models were fit for each survey, by subgroup. The estimated odds ratios for calendar year in the regressions were translated into average annual rates of change in the odds of reporting either “excellent” or “fair/poor” health. For example, an odds ratio of 1.02 on year implies an average annual rate of change of (1.02 − 1.00) × 100 = 2%. Figure 5 summarizes the regression results.
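The conversion from a regression coefficient to an annual rate of change can be written out as a short sketch. The first function reproduces the arithmetic in the example above; the second, which compounds the annual change over several years, is an illustrative addition not taken from the text. Both function names are hypothetical.

```python
import math


def annual_percent_change(beta):
    """Percent change per year in the odds, given the logit-scale
    coefficient on calendar year (odds ratio = exp(beta))."""
    return (math.exp(beta) - 1.0) * 100.0


def cumulative_percent_change(beta, n_years):
    """Percent change in the odds after n_years of compounding."""
    return (math.exp(beta * n_years) - 1.0) * 100.0


beta = math.log(1.02)  # coefficient corresponding to an odds ratio of 1.02
print(annual_percent_change(beta))         # approximately 2
print(cumulative_percent_change(beta, 9))  # change from 1998 to 2007
```

Because the change applies to the odds rather than to the proportion itself, a 2% annual change in the odds corresponds to a somewhat smaller relative change in the proportion.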

Figure 5.

Average annual change in the odds of reporting “excellent” or “fair/poor” self-rated health, by sex, age, race/ethnicity, and education, United States, 1998–2007. Each bar shows the result of a separate logistic regression of self-rated health as a function of calendar year, estimated for a particular survey and population subgroup. Blue, Behavioral Risk Factor Surveillance System; green, National Health Interview Survey; orange, Current Population Survey.

Overall, and in both men and women, the regressions confirm the observation that trends in “excellent” ratings are more consistent across surveys than trends in “fair/poor” ratings. In men, CPS shows significant declines in the proportion of fair/poor ratings, in contrast to the significant increases seen in BRFSS, whereas declines in excellent ratings are seen in all surveys, albeit at varying rates. Across age groups, significant differences appear in fair/poor ratings from the 2 younger age groups, while excellent ratings are less discrepant across surveys overall. Considering differences across race and ethnic groups, using either dichotomous measure, we found that the greatest discrepancies in trends appear among Hispanic respondents, especially in fair/poor responses. Finally, comparisons across education groups indicate that, for those respondents who have completed at least high school, trends are unambiguously worse: More people report “fair/poor” health at the same time that fewer people report “excellent” health. On the other hand, trends among those without a high school diploma offer the most ambiguous conclusions in any of the subgroup analyses: In terms of both the fair/poor and excellent responses, CPS points to a strong, significant favorable trend, whereas BRFSS shows a strong, significant unfavorable trend in this group.

DISCUSSION

In this study, we undertook a comprehensive comparative analysis of self-rated health in 4 nationally representative US surveys and observed widely discrepant results overall. In addition to variation across surveys in self-rated health levels, we also noted striking inconsistencies in trends. Whereas BRFSS finds that Americans were increasingly likely to report “fair” or “poor” health over the last decade, CPS indicates the opposite trend. Unpacking these discrepancies through subgroup analyses reveals the greatest inconsistencies in trends among younger respondents, Hispanics, and those without a high school education. Our results also challenge the standard practice of focusing on the percentage of respondents with self-ratings of “fair” or “poor,” as this indicator appears prone to greater cross-survey discrepancies than other indicators constructed from the same survey responses, such as the proportion with “excellent” self-ratings.

Wide variations in levels and trends in self-rated health measured in nationally representative surveys using the same survey item demand an explanation. There are at least 3 possibilities. First, despite national sample frames and application of sample weights, the aggregated results from some surveys may not adequately reflect the national average. For example, concerns have been raised in the past about possible noncoverage and nonresponse bias in telephone surveys such as the BRFSS. Recent work, however, has indicated that the bias produced by nonresponse in random-digit telephone surveys is probably modest (39, 40). Although we observed some differences in the demographic composition of the weighted samples in the 4 surveys, these differences were stable over time and therefore cannot explain divergent time trends in self-rated health. Moreover, the consistent trends across surveys observed in other measures, such as diabetes prevalence, mirror a previous finding of consistent cross-sectional estimates in NHIS and BRFSS for 13 of the 14 different health measures examined—with self-rated health being the notable exception (41).

Second, the differences in results across survey platforms may signify a survey mode effect particular to self-rated health. The potential importance of different modes of administration has been noted previously for other specific types of questions, and indeed we observe significant differences across surveys in reported body mass index levels. To attribute divergent time trends across the survey platforms to mode effects for self-rated health, however, the mode effects would need to be acting differentially over time. In contrast to the body mass index example of parallel time trends across surveys, responses on self-rated health are evidently growing more discrepant over time. We are not aware of any existing studies that account for mode-item effects that change over time in such a divergent manner.

A third possibility is that there may be framing and ordering effects in the different questionnaires that interact with attributes of the respondents, so that biases across platforms are shifting. It is difficult to construct more precise hypotheses regarding the nature of the individual and population attributes that would progressively change framing and ordering effects over time. The major shift in NHIS responses in 1997, accompanying a relocation of the self-rated health item within the overall structure of the interview, indicates that ordering effects for the self-rated health item can be large. Cross-survey differences in the steady changes in responses over time would require a more subtle form of framing or ordering effect. These effects might derive, for example, from some changing cultural or linguistic attributes of individual respondents. The widening inconsistencies in trends among Hispanic respondents offer some evidence in favor of the potential importance of cultural or linguistic factors, but more definitive conclusions await further qualitative and quantitative investigation.

Comparing trends by educational level, we find that discrepancies across surveys are most pronounced among respondents without a high school diploma. This finding has potentially profound implications for analyses of socioeconomic disparities in health that rely on self-rated health responses. Trends in CPS show improvements among lower-educated respondents at the same time that self-ratings are worsening among more educated respondents—which has the net effect overall of reducing disparities across education groups. In contrast, BRFSS shows the sharpest declines in health among the least educated group, which implies a widening gap across socioeconomic strata.

Although our analysis of existing survey programs cannot provide a clear indication of the causes of incomparabilities across surveys and over time, it nevertheless offers an important reminder that, at the present time, substantial caution is warranted in using self-rated health to monitor trends in population health. One concrete suggestion that emerges from our study is to reconsider the standard approach of dichotomizing self-rated health as “fair/poor” versus other responses. Although some recent studies have examined the continuity of self-rated health and found evidence of symmetry in responses at the positive and negative ends of the scale (42, 43), our study indicates that trends in self-reported excellent health appear less prone to inconsistencies across surveys than trends in self-reported fair/poor health. This finding challenges the prevailing approach to using this variable in empirical studies in public health, epidemiology, and medical sociology.

Given the importance of tracking nonfatal health outcomes at the population level, what are the available options for refining these tools for future use? Two main avenues have been pursued to date. First, there has been a steady evolution of more detailed instruments that either ask multiple questions about general health (44, 45) or ask about more specific domains of health or symptoms (46–48). Population-level data for these instruments are not yet available for long periods of time or from multiple sources in the same country to test if they suffer from similar problems. Recent efforts to understand relations across various multiitem health measurement scales have characterized differences across instruments in cross-sectional analyses (49–51), but extension of these analyses to compare time trends requires further longitudinal study. Second, strategies such as anchoring vignettes (52, 53) have been proposed recently to enhance the comparability of self-reported survey responses in health and other areas. It is not yet known whether such strategies can successfully remedy the bulk of comparability problems across settings or over time.

The epidemiologic transition has advanced far enough (54–56) that, for most countries, critical questions regarding the population's health encompass not only how long people live but also their experience of health while they are alive. Although self-rated health continues to appeal as a health measure that contributes unique information on individuals’ perceptions of their own health and has strong predictive power for future outcomes, our study suggests that self-rated health may not be suitable for tracking changes in population health over time. In seeking to identify efficient measurement strategies for this latter purpose, more development work on new robust and comparable approaches is urgently needed.

Acknowledgments

Author affiliations: Department of Global Health and Population, Harvard School of Public Health, Boston, Massachusetts (Joshua A. Salomon); Harvard Initiative for Global Health, Cambridge, Massachusetts (Joshua A. Salomon, Shefali Oza, Stella Nordhagen); and Institute for Health Metrics and Evaluation, University of Washington, Seattle, Washington (Stella Nordhagen, Christopher J. L. Murray).

This work was supported in part by the National Institute on Aging (grant P01AG17625).

The authors gratefully acknowledge helpful discussions with Ali Mokdad, Dean Joliffe, Linda Martin, and Yael Benyamini.

The funders had no role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Conflict of interest: none declared.

Glossary

Abbreviations

- BRFSS: Behavioral Risk Factor Surveillance System
- CI: confidence interval
- CPS: Current Population Survey
- NHANES: National Health and Nutrition Examination Survey
- NHIS: National Health Interview Survey

References

- 1.Wilson IB, Cleary PD. Linking clinical variables with health-related quality of life. A conceptual model of patient outcomes. JAMA. 1995;273(1):59–65. [PubMed] [Google Scholar]

- 2.Revicki DA. Regulatory Issues and Patient-reported Outcomes Task Force for the International Society for Quality of Life Research. FDA draft guidance and health-outcomes research. Lancet. 2007;369(9561):540–542. doi: 10.1016/S0140-6736(07)60250-5. [DOI] [PubMed] [Google Scholar]

- 3.US Department of Health and Human Services. Healthy People 2010. Washington, DC: US Department of Health and Human Services; 2000. [Google Scholar]

- 4.Kramers PG. The ECHI project: health indicators for the European Community. Eur J Public Health. 2003;13(3 suppl):101–106. doi: 10.1093/eurpub/13.suppl_1.101. [DOI] [PubMed] [Google Scholar]

- 5.Reeve BB, Burke LB, Chiang YP, et al. Enhancing measurement in health outcomes research supported by agencies within the US Department of Health and Human Services. Qual Life Res. 2007;16(suppl 1):175–186. doi: 10.1007/s11136-007-9190-8. [DOI] [PubMed] [Google Scholar]

- 6. Arber S. Comparing inequalities in women's and men's health: Britain in the 1990s. Soc Sci Med. 1997;44(6):773–787. doi: 10.1016/s0277-9536(96)00185-2.

- 7. Mackenbach JP, Kunst AE, Cavelaars AE, et al. Socioeconomic inequalities in morbidity and mortality in Western Europe. The EU Working Group on Socioeconomic Inequalities in Health. Lancet. 1997;349(9066):1655–1659. doi: 10.1016/s0140-6736(96)07226-1.

- 8. Cagney KA, Browning CR, Wen M. Racial disparities in self-rated health at older ages: what difference does the neighborhood make? J Gerontol B Psychol Sci Soc Sci. 2005;60(4 suppl):S181–S190. doi: 10.1093/geronb/60.4.s181.

- 9. Banks J, Marmot M, Oldfield Z, et al. Disease and disadvantage in the United States and in England. JAMA. 2006;295(17):2037–2045. doi: 10.1001/jama.295.17.2037.

- 10. Martin LG, Schoeni RF, Freedman VA, et al. Feeling better? Trends in general health status. J Gerontol B Psychol Sci Soc Sci. 2007;62(1 suppl):S11–S21. doi: 10.1093/geronb/62.1.s11.

- 11. OECD Health Project. Towards High-Performing Health Systems. Paris, France: Organisation for Economic Cooperation and Development; 2004.

- 12. Schoen C, Osborn R, Doty MM, et al. Toward higher-performance health systems: adults’ health care experiences in seven countries, 2007. Health Aff (Millwood). 2007;26(6):w717–w734. doi: 10.1377/hlthaff.26.6.w717.

- 13. Hennessy CH, Moriarty DG, Zack MM, et al. Measuring health-related quality of life for public health surveillance. Public Health Rep. 1994;109(5):665–672.

- 14. Andresen EM, Catlin TK, Wyrwich KW, et al. Retest reliability of surveillance questions on health related quality of life. J Epidemiol Community Health. 2003;57(5):339–343. doi: 10.1136/jech.57.5.339.

- 15. Moriarty DG, Zack MM, Kobau R. The Centers for Disease Control and Prevention's healthy days measures—population tracking of perceived physical and mental health over time. Health Qual Life Outcomes. 2003;1(1):37. doi: 10.1186/1477-7525-1-37.

- 16. Health Interview Surveys: Towards International Harmonization of Methods and Instruments (WHO Regional Publications, European Series, No. 58). Copenhagen, Denmark: World Health Organization Regional Office for Europe; 1996.

- 17. Power C, Matthews S, Manor O. Inequalities in self rated health in the 1958 birth cohort: lifetime social circumstances or social mobility? BMJ. 1996;313(7055):449–453. doi: 10.1136/bmj.313.7055.449.

- 18. Shetterly SM, Baxter J, Mason LD, et al. Self-rated health among Hispanic vs non-Hispanic white adults: the San Luis Valley Health and Aging Study. Am J Public Health. 1996;86(12):1798–1801. doi: 10.2105/ajph.86.12.1798.

- 19. Finch BK, Hummer RA, Reindl M, et al. Validity of self-rated health among Latino(a)s. Am J Epidemiol. 2002;155(8):755–759. doi: 10.1093/aje/155.8.755.

- 20. Institute of Medicine. State of the USA Health Indicators: Letter Report. Washington, DC: The National Academies Press; 2009.

- 21. Idler EL, Angel RJ. Self-rated health and mortality in the NHANES-I Epidemiologic Follow-up Study. Am J Public Health. 1990;80(4):446–452. doi: 10.2105/ajph.80.4.446.

- 22. Fylkesnes K. Determinants of health care utilization—visits and referrals. Scand J Soc Med. 1993;21(1):40–50. doi: 10.1177/140349489302100107.

- 23. Idler EL, Benyamini Y. Self-rated health and mortality: a review of twenty-seven community studies. J Health Soc Behav. 1997;38(1):21–37.

- 24. Benyamini Y, Idler EL. Community studies reporting association between self-rated health and mortality: additional studies, 1995 to 1998. Res Aging. 1999;21(3):392–401.

- 25. Idler EL, Russell LB, Davis D. Survival, functional limitations, and self-rated health in the NHANES I Epidemiologic Follow-up Study, 1992. First National Health and Nutrition Examination Survey. Am J Epidemiol. 2000;152(9):874–883. doi: 10.1093/aje/152.9.874.

- 26. Benjamins MR, Hummer RA, Eberstein IW, et al. Self-reported health and adult mortality risk: an analysis of cause-specific mortality. Soc Sci Med. 2004;59(6):1297–1306. doi: 10.1016/j.socscimed.2003.01.001.

- 27. Bartley M. Unemployment and ill health: understanding the relationship. J Epidemiol Community Health. 1994;48(4):333–337. doi: 10.1136/jech.48.4.333.

- 28. McWilliams JM, Meara E, Zaslavsky AM, et al. Health of previously uninsured adults after acquiring Medicare coverage. JAMA. 2007;298(24):2886–2894. doi: 10.1001/jama.298.24.2886.

- 29. Subramanian SV, Kawachi I, Kennedy BP. Does the state you live in make a difference? Multilevel analysis of self-rated health in the US. Soc Sci Med. 2001;53(1):9–19. doi: 10.1016/s0277-9536(00)00309-9.

- 30. Shi L, Starfield B, Politzer R, et al. Primary care, self-rated health, and reductions in social disparities in health. Health Serv Res. 2002;37(3):529–550. doi: 10.1111/1475-6773.t01-1-00036.

- 31. Zack MM, Moriarty DG, Stroup DF, et al. Worsening trends in adult health-related quality of life and self-rated health—United States, 1993–2001. Public Health Rep. 2004;119(5):493–505. doi: 10.1016/j.phr.2004.07.007.

- 32. National Center for Health Statistics. Health, United States, 2006. Hyattsville, MD: National Center for Health Statistics; 2006.

- 33. Centers for Disease Control and Prevention, National Center for Health Statistics. National Health and Nutrition Examination Survey data. Hyattsville, MD: Centers for Disease Control and Prevention, US Department of Health and Human Services; 2007. (http://www.cdc.gov/nchs/nhanes.htm).

- 34. Centers for Disease Control and Prevention. Behavioral Risk Factor Surveillance System Survey data. Atlanta, GA: Centers for Disease Control and Prevention, US Department of Health and Human Services; 2007. (http://www.cdc.gov/brfss/index.htm).

- 35. US Department of Health and Human Services, National Center for Health Statistics. National Health Interview Survey data. Hyattsville, MD: US Department of Health and Human Services, National Center for Health Statistics; 2007. (http://www.cdc.gov/nchs/nhis.htm).

- 36. US Census Bureau. Current Population Survey, March supplement data. Suitland, MD: US Census Bureau; 2007. (http://www.census.gov/cps).

- 37. Wolter KM. Introduction to Variance Estimation. New York, NY: Springer-Verlag; 1985.

- 38. Jolliffe D. Estimating sampling variance from the Current Population Survey: a synthetic design approach to correcting standard errors. J Econ Soc Meas. 2003;28(4):239–261.

- 39. Curtin R, Presser S, Singer E. The effects of response rate changes on the index of consumer sentiment. Public Opin Q. 2000;64(4):413–428. doi: 10.1086/318638.

- 40. Keeter S, Miller C, Kohut A, et al. Consequences of reducing nonresponse in a national telephone survey. Public Opin Q. 2000;64(2):125–148. doi: 10.1086/317759.

- 41. Nelson DE, Powell-Griner E, Town M, et al. A comparison of national estimates from the National Health Interview Survey and the Behavioral Risk Factor Surveillance System. Am J Public Health. 2003;93(8):1335–1341. doi: 10.2105/ajph.93.8.1335.

- 42. Manderbacka K, Lahelma E, Martikainen P. Examining the continuity of self-rated health. Int J Epidemiol. 1998;27(2):208–213. doi: 10.1093/ije/27.2.208.

- 43. Mackenbach JP, van den Bos J, Joung IM, et al. The determinants of excellent health: different from the determinants of ill-health? Int J Epidemiol. 1994;23(6):1273–1281. doi: 10.1093/ije/23.6.1273.

- 44. Deeg DJ, Kriegsman DM. Concepts of self-rated health: specifying the gender difference in mortality risk. Gerontologist. 2003;43(3):376–386. doi: 10.1093/geront/43.3.376.

- 45. Bjorner JB, Kristensen TSO. Multi-item scales for measuring global self-rated health: investigation of construct validity using structural equations models. Res Aging. 1999;21(3):417–439.

- 46. Kaplan RM, Bush JW, Berry CC. Health status: types of validity and the index of well-being. Health Serv Res. 1976;11(4):478–507.

- 47. Ware JE, Jr, Sherbourne CD. The MOS 36-item short-form health survey (SF-36). I. Conceptual framework and item selection. Med Care. 1992;30(6):473–483.

- 48. EuroQol—a new facility for the measurement of health-related quality of life. The EuroQol Group. Health Policy. 1990;16(3):199–208. doi: 10.1016/0168-8510(90)90421-9.

- 49. Hanmer J, Lawrence WF, Anderson JP, et al. Report of nationally representative values for the noninstitutionalized US adult population for 7 health-related quality-of-life scores. Med Decis Making. 2006;26(4):391–400. doi: 10.1177/0272989X06290497.

- 50. Fryback DG, Dunham NC, Palta M, et al. US norms for six generic health-related quality-of-life indexes from the National Health Measurement Study. Med Care. 2007;45(12):1162–1170. doi: 10.1097/MLR.0b013e31814848f1.

- 51. Hanmer J, Hays RD, Fryback DG. Mode of administration is important in US national estimates of health-related quality of life. Med Care. 2007;45(12):1171–1179. doi: 10.1097/MLR.0b013e3181354828.

- 52. Salomon JA, Tandon A, Murray CJ. Comparability of self rated health: cross sectional multi-country survey using anchoring vignettes. BMJ. 2004;328(7434):258–261. doi: 10.1136/bmj.37963.691632.44.

- 53. Bago d'Uva T, Van Doorslaer E, Lindeboom M, et al. Does reporting heterogeneity bias the measurement of health disparities? Health Econ. 2008;17(3):351–375. doi: 10.1002/hec.1269.

- 54. Omran AR. The epidemiologic transition: a theory of the epidemiology of population change. Milbank Mem Fund Q. 1971;49(4):509–538.

- 55. Murray CJ, Chen LC. Understanding morbidity change. Popul Dev Rev. 1992;18(3):481–503.

- 56. Salomon JA, Murray CJL. The epidemiologic transition revisited: compositional models for causes of death by age and sex. Popul Dev Rev. 2002;28(2):205–228.
