Abstract

Neuronal models predict that retrieval of specific event information reactivates brain regions that were active during encoding of this information. Consistent with this prediction, this positron-emission tomography study showed that remembering that visual words had been paired with sounds at encoding activated some of the auditory brain regions that were engaged during encoding. After word-sound encoding, activation of auditory brain regions was also observed during visual word recognition when there was no demand to retrieve auditory information. Collectively, these observations suggest that information about the auditory components of multisensory event information is stored in auditory responsive cortex and reactivated at retrieval, in keeping with classical ideas about “redintegration,” that is, the power of part of an encoded stimulus complex to evoke the whole experience.

A fundamental principle of memory holds that encoding and retrieval processes are strongly interdependent. A large number of behavioral studies show that memory performance is enhanced if the encoding conditions match the retrieval demands. For example, although semantic analysis of the study material benefits performance on most episodic tests (1), phonemic analysis can be more effective under certain retrieval conditions (2). Similarly, the kind of cues that will be effective at the retrieval stage depends on the extent to which the cue information overlaps the encoded information (3). In addition, major shifts in the internal (4) and external (5) context between encoding and retrieval impair memory performance. The interrelatedness of encoding and retrieval is also a salient feature in models of the neural basis of episodic memory, in that successful retrieval of episodic information is seen as depending on reactivation of parts of the neural pattern associated with encoding (6, 7). However, whereas there is much support from behavioral studies that encoding and retrieval are interrelated, strong support is lacking at the neural level.

Functional brain-imaging techniques, which allow monitoring of brain activity related to encoding and retrieval separately, offer a way of studying the impact of encoding processes for subsequent retrieval at the neural level. To date, several positron-emission tomography and functional MRI studies have contrasted encoding and retrieval (8), but few studies have examined commonalities in activation patterns directly (9–13).§ Moreover, to provide strong support that the neural pattern at encoding is reactivated during retrieval of the encoded information, alternative interpretations in terms of perceptual and attentional demands need to be ruled out (14).

The present study addressed the issue of whether retrieval of specific event information is associated with activation of some of the brain regions that were engaged during encoding of this information. Specifically, at encoding, a visually presented word was paired with an auditory stimulus. The purpose of the study was to see whether auditory responsive cortex would be activated at retrieval by the visual cue.

Two encoding tasks were used in experiment 1. One task involved paired presentation of visual words and complex sounds. The other task involved presentation of visual words only. The visual + auditory task alone was expected to lead to encoding of auditory event information, and the processing of such information was expected to be associated with activation of auditory regions in temporal cortex. The encoding tasks were followed by two episodic retrieval tasks that involved presentation of visual words. In both retrieval tasks, for each word, subjects were asked to indicate whether they (i) recognized it and remembered that it had been paired with a sound at study, (ii) recognized it and thought it had been presented alone at study, or (iii) thought it was new. In one retrieval task, the words came from the visual + auditory encoding condition. In the other retrieval task, the words had been part of the visual encoding task. Thus, the retrieval conditions were identical, with the exception that only the paired condition was expected to involve retrieval of auditory event information. Of main concern was whether the paired retrieval condition, relative to the unpaired condition, would be associated with increased activity in the same brain regions that were activated during encoding of auditory information. Because there was no auditory stimulation at the retrieval stage, overlapping activity during encoding and retrieval should not be the result of overlapping perceptual demands during encoding and retrieval. Also, because the instruction was identical in both retrieval conditions, observation of overlap in activity for the paired relative to the unpaired retrieval condition should not reflect differences in selective attention across conditions.

A second experiment was designed to replicate and extend the findings from the first experiment. The extension part was concerned with what will be referred to as "incidental reactivation." Experiment 1 as well as the replication part of experiment 2 addressed "intentional reactivation" in the sense that subjects were instructed to try to remember whether the test words had been paired with sounds at study. Such instructions put focus on a potential word-sound association, and subjects intentionally attempted to retrieve auditory information for each visual word cue. It has been proposed that when different components of an event have been consolidated, retrieval of one component will lead to activation of other components as well (6, 7). According to this proposal, such activation should occur even when the test situation does not demand retrieval of the other event components. By this view, if the word-sound association is strong enough, retrieval of visual word information should "incidentally" reactivate auditory brain regions. This prediction was tested by including three visual word recognition conditions.

In all recognition conditions, subjects were asked to decide whether they recognized visually presented words as having been included in any of the preceding encoding lists. The recognition conditions differed in the presence and strength of word-sound associations (no/weak/strong association). Based on the hypothesis that incidental reactivation depends on the strength of the association between different event components, we expected that recognition of words that were strongly associated with sounds would be associated with maximal activation of auditory brain regions, that auditory activation would be less strong for words with a weak sound association, and that no/least auditory activation would be seen for the words that had not been associated with sounds at study.

Materials and Methods

Participants.

Participating in experiment 1 were 12 right-handed subjects (four females; age range = 20–30), and eight right-handed subjects participated in experiment 2. Two of the subjects in experiment 2 were removed for technical reasons; thus, the final sample consisted of six subjects (two females, age range = 18–30). Subjects were paid for their participation. Both experiments were approved by The Joint Baycrest Centre/University of Toronto Research Ethics and Scientific Review Committee, and written informed consent was obtained from all participants.

Imaging Procedures.

Positron-emission tomography scanning was done with a GEMS Scanditronix (Uppsala) PC-2048 head scanner, with bolus injections of 15O-labeled water into a left forearm vein. Positron-emission tomography data were preprocessed [realigned, stereotactically transformed (15), and smoothed to 10 mm] by using SPM-95 (16). Statistical analyses were done with SPM-96. Changes in global blood flow were corrected for by analysis of covariance.
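The global-flow correction can be illustrated with a minimal ANCOVA sketch for a single voxel. It assumes one common slope for the global covariate across conditions and ignores subject effects, so it shows the general idea only and does not reproduce how SPM-96 builds its design matrix. The function name `ancova_adjusted_effects` is hypothetical.

```python
import numpy as np

def ancova_adjusted_effects(voxel, conditions, global_flow):
    """Estimate condition effects at one voxel, adjusting for global flow.

    voxel: blood flow counts at one voxel, one value per scan.
    conditions: integer condition label (0..k-1) per scan.
    global_flow: whole-brain mean counts per scan (the covariate).
    Returns one effect per condition: the fitted count at the mean global flow.
    """
    voxel = np.asarray(voxel, dtype=float)
    conditions = np.asarray(conditions)
    g = np.asarray(global_flow, dtype=float)
    k = conditions.max() + 1
    # Design matrix: one indicator column per condition plus the
    # mean-centred global covariate (a single slope shared by all conditions).
    X = np.zeros((voxel.size, k + 1))
    X[np.arange(voxel.size), conditions] = 1.0
    X[:, k] = g - g.mean()
    beta, *_ = np.linalg.lstsq(X, voxel, rcond=None)
    return beta[:k]
```

Centring the covariate makes each returned coefficient directly interpretable as a condition mean adjusted to the average global flow.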

Behavioral Procedures.

In experiment 1 (Table 1), subjects were asked to memorize single words presented on a computer screen in a (visual) encoding condition, and single words and sounds in a (visual + auditory) encoding condition. Each word or word-sound pair was presented for 4 s (interstimulus interval = 1 s), and the encoding lists consisted of 20 items each. The stimuli consisted of concrete nouns and complex sounds (e.g., a dog barking, a drill, or a plane during takeoff). At retrieval, single visual words were presented on a computer screen (4 s per item; interstimulus interval = 1 s). Subjects pressed one button for words they recognized and thought had been presented alone at study; another button when they recognized a word and remembered that it had been paired with a sound at study; and no button when they thought a word was new. The test lists included 25 items. During the 60-s scanning window, only words (n = 12) from the (visual) encoding condition were included in one retrieval condition (unpaired), whereas the other retrieval condition (paired) included only words from the (visual + auditory) encoding condition. Before the scanning window, subjects saw two new words, two words from the same encoding condition as during the scan window, and three words from the other encoding condition. After the scanning window, subjects saw three old and three new words.
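The composition of each 25-item test list (7 prescan, 12 scan-window, and 6 postscan items) can be checked with a short sketch. The tuple labels and the function `build_test_list` are hypothetical. The text does not state the item order within each phase or the encoding source of the three old postscan words, so those words are tagged only as "old".

```python
from collections import Counter

def build_test_list(scanned, other):
    """Return a test list as (phase, source) tuples.

    scanned: encoding condition sampled during the scan window.
    other: the remaining encoding condition.
    """
    prescan = ([("prescan", "new")] * 2
               + [("prescan", scanned)] * 2
               + [("prescan", other)] * 3)
    scan = [("scan", scanned)] * 12
    # Source of the three old postscan words is not specified in the text.
    postscan = [("postscan", "old")] * 3 + [("postscan", "new")] * 3
    return prescan + scan + postscan

test_list = build_test_list("visual+auditory", "visual")
print(len(test_list), Counter(phase for phase, _ in test_list))
```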

Table 1.

Overview of design in experiment 1

| Scan | Task |
|---|---|
| 1 | Word encoding |
| — | Repetition of word encoding |
| 2 | Word-sound encoding |
| — | Repetition of word-sound encoding |
| 3 | Recognize words and remember sounds (unpaired) |
| 4 | Recognize words and remember sounds (paired) |

Note that the order of scans 1 and 2 as well as 3 and 4 was counterbalanced across subjects.

Experiment 2 included the same four conditions as in experiment 1 plus an additional encoding condition and three visual word recognition conditions (Table 2). The length of the encoding lists was increased to 42 (44 for the additional encoding condition) to generate additional test material for the three visual word recognition conditions. Otherwise, the four replicated conditions were identical to their experiment 1 counterparts. The prescan/postscan composition of the tests was the same as in the recognition tests in experiment 1. The visual word recognition conditions involved presentation of single visual words on a computer screen at the rate of 4 s per item (interstimulus interval = 1 s). Participants pressed one button when they thought the word had been studied, and the other when they thought it was new. The conditions differed in the type of words that were presented: (i) words that had not been paired with sounds at study; (ii) words for which a weak word-sound association existed (two encoding trials for each pair); and (iii) words that had a strong word-sound association (six encoding trials for each pair).

Table 2.

Overview of design in experiment 2

| Scan | Task |
|---|---|
| 1 | Word encoding—set 1a & 1b |
| — | Repetition of word encoding—set 1a & 1b |
| 2 | Word-sound encoding—set 2a & 2b |
| — | Repetition of word-sound encoding—set 2a & 2b |
| 3 | Recognize words and remember sounds—set 1a (unpaired) |
| 4 | Recognize words and remember sounds—set 2a (paired) |
| 5 | Word-sound encoding—set 2a (two presentations) |
| — | Repetition of word-sound encoding—set 2a (two presentations) |
| 6 | Yes/no word recognition—set 1b (no sound association) |
| 7 | Yes/no word recognition—set 2b (weak sound association) |
| 8 | Yes/no word recognition—set 2a (strong sound association) |

Note that the order of scans 1 and 2, 3 and 4, as well as 6–8 was counterbalanced across subjects.
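The weak (two trials) and strong (six trials) association levels follow from the encoding schedule in Table 2. The sketch below tallies word-sound encoding trials per stimulus set, assuming each presentation of a pair counts as one encoding trial; the schedule transcription and the function name are illustrative.

```python
from collections import Counter

# (scan, modality, sets presented, presentations of each pair in that scan),
# transcribed from Table 2; "r" marks the unscanned repetition of a scan.
SCHEDULE = [
    ("1", "word", ["1a", "1b"], 1),
    ("1r", "word", ["1a", "1b"], 1),
    ("2", "word-sound", ["2a", "2b"], 1),
    ("2r", "word-sound", ["2a", "2b"], 1),
    ("5", "word-sound", ["2a"], 2),
    ("5r", "word-sound", ["2a"], 2),
]

def word_sound_trials(schedule):
    """Count word-sound encoding trials per stimulus set."""
    trials = Counter()
    for _scan, modality, sets, presentations in schedule:
        if modality == "word-sound":
            for s in sets:
                trials[s] += presentations
    return trials

print(word_sound_trials(SCHEDULE))
```

Set 2a accumulates 1 + 1 + 2 + 2 = 6 word-sound trials and set 2b accumulates 1 + 1 = 2, matching the strong and weak conditions; set 1b never receives a sound.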

Results

The behavioral data from experiment 1 showed that subjects were good at recognizing the visual cues and deciding whether there was sound information associated with them (analyses were restricted to items presented during the scan intervals). On average, subjects correctly classified 76% of the words in the (paired) condition as old and having been paired with sounds at encoding (range = 0.47–1.0). Subjects correctly classified 84% of the words in the (unpaired) condition as old and not paired with sounds during encoding (range = 0.60–1.0).
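The scoring behind these figures can be sketched as follows, assuming each scan-window response is stored as "paired", "unpaired", or None (no button press, that is, judged new). The function names are hypothetical, and the numbers in the usage line are invented for illustration.

```python
from statistics import mean

def proportion_correct(responses, correct_label):
    """Share of scan-window items that received the correct response."""
    return sum(r == correct_label for r in responses) / len(responses)

def group_summary(per_subject):
    """Group mean and (min, max) range of per-subject proportions."""
    return mean(per_subject), (min(per_subject), max(per_subject))

# Hypothetical subject in the paired condition: 9 of 12 words called "paired".
print(proportion_correct(["paired"] * 9 + ["unpaired"] * 2 + [None], "paired"))
```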

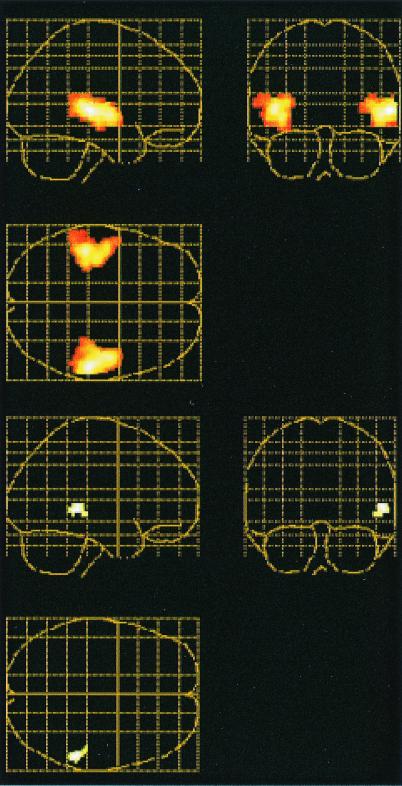

To examine whether brain regions activated during encoding were reactivated at retrieval, the (visual + auditory) and (visual) encoding conditions were contrasted, and the resulting image of brain activity (threshold: P = 0.05, uncorrected) was used as a mask for a contrast between the paired and unpaired retrieval conditions. In this way, analysis of retrieval activity was restricted to those regions that were differentially activated during encoding of words and sounds relative to encoding of words only. Within the limited search space created by the mask, retrieval activations with P ≤ 0.01 (uncorrected) were considered significant. The encoding contrast revealed differential activity (P < 0.01 corrected) in right (x, y, z = 58, −26, 4; 48, −10, 0; 54, −42, 0) and left (x, y, z = −44, −30, 4; −38, −20, −4; −46, −12, 0) temporal lobes, including primary and secondary auditory cortex (Fig. 1 Top). No other activation was significant after correction for multiple comparisons. The contrast between retrieval conditions revealed that the (paired) retrieval condition relative to the (unpaired) retrieval condition was associated with differential activation in right auditory responsive cortex (ref. 17; x, y, z = 56, −40, 0; Fig. 1 Bottom). In addition, differential activity was observed in left medial temporal lobe (x, y, z = −36, −20, −16). These findings provided evidence for overlapping activity in auditory responsive cortex during encoding and retrieval of auditory event information.

Figure 1.

Overlapping activations in auditory responsive cortex during encoding and retrieval of auditory information in experiment 1. Sagittal, coronal, and transverse views of glass brains are shown. (Top) Differential activation when encoding of visual words and sounds was contrasted with encoding of visual words. (Bottom) Differential activation when paired and unpaired retrieval were compared (the encoding activation map served as a mask for the retrieval comparison). Extent threshold = 25 voxels.
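The masking procedure described above can be sketched on voxelwise p-value arrays. This is an illustration under stated assumptions, not SPM code: it takes uncorrected p-maps as inputs, applies P < 0.05 to the encoding contrast and P ≤ 0.01 to the retrieval contrast within the resulting mask, and then applies the 25-voxel extent threshold reported in the figure captions. Clusters are defined here by face (6-neighbour) connectivity, which is an assumption; SPM's own cluster definition may differ. The function names are hypothetical.

```python
from collections import deque

import numpy as np

# Face neighbours in 3-D (6-connectivity); this connectivity rule is an assumption.
OFFSETS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def cluster_sizes(hits):
    """Return, for each True voxel, the size of its connected cluster (0 elsewhere)."""
    sizes = np.zeros(hits.shape, dtype=int)
    seen = np.zeros(hits.shape, dtype=bool)
    for start in zip(*np.nonzero(hits)):
        if seen[start]:
            continue
        seen[start] = True
        queue, members = deque([start]), []
        while queue:  # breadth-first flood fill of one cluster
            voxel = queue.popleft()
            members.append(voxel)
            for offset in OFFSETS:
                nb = tuple(int(a + b) for a, b in zip(voxel, offset))
                inside = all(0 <= c < n for c, n in zip(nb, hits.shape))
                if inside and hits[nb] and not seen[nb]:
                    seen[nb] = True
                    queue.append(nb)
        for voxel in members:
            sizes[voxel] = len(members)
    return sizes

def masked_contrast(p_encoding, p_retrieval, mask_p=0.05,
                    retrieval_p=0.01, min_extent=25):
    """Keep retrieval voxels that pass threshold inside the encoding mask."""
    mask = p_encoding < mask_p                  # encoding contrast defines the search space
    hits = mask & (p_retrieval <= retrieval_p)  # retrieval threshold applied within the mask
    return hits & (cluster_sizes(hits) >= min_extent)
```

Because the retrieval threshold is evaluated only inside the mask, a retrieval effect outside the encoding-defined regions cannot survive, however strong it is.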

The results from the replication part of experiment 2 provided additional support. Again, it was found that subjects were good at recognizing the visual cues and deciding whether there was sound information associated with them. Subjects correctly classified 73% of the words in the (paired) condition as old and having been paired with sounds (range = 0.60–0.92). Subjects correctly classified 76% of the words in the (unpaired) condition as old and not associated with sounds (range = 0.40–1.0).

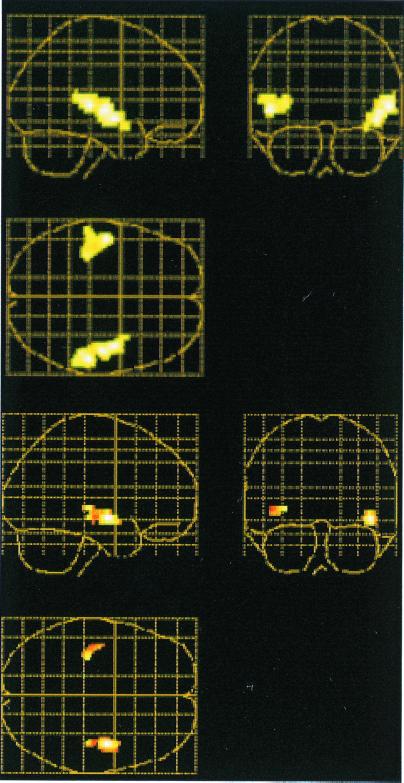

A contrast between the (visual + auditory) (scan 2 in Table 2) and (visual) encoding conditions again revealed bilateral activation (P < 0.01, corrected) in auditory responsive cortex (x, y, z = 56, −28, 4; 44, −6, −8; 48, −16, 0; −50, −14, 0; −36, −30, 8; Fig. 2 Top). As in experiment 1, the differential image from the encoding contrast served as a mask (P < 0.05, uncorrected) for the contrast between the (paired) and (unpaired) retrieval conditions. Increased activity was observed in bilateral auditory responsive cortex (x, y, z = 44, −6, −8; 42, −24, −8; 54, −24, 0; −42, −14, −4; Fig. 2 Bottom). As in experiment 1, the activation was stronger in the right hemisphere. Also, as in experiment 1, differential activity was seen in the left medial temporal lobe (x, y, z = −36, −22, −12; −34, −14, −28).

Figure 2.

Overlapping activations in auditory responsive cortex during encoding and retrieval of auditory information in experiment 2. Sagittal, coronal, and transverse views of glass brains are shown. (Top) Differential activation when encoding of visual words and sounds was contrasted with encoding of visual words. (Bottom) Differential activation when paired and unpaired retrieval were compared (the encoding activation map in the top panel served as a mask for the retrieval comparison). Extent threshold = 25 voxels.

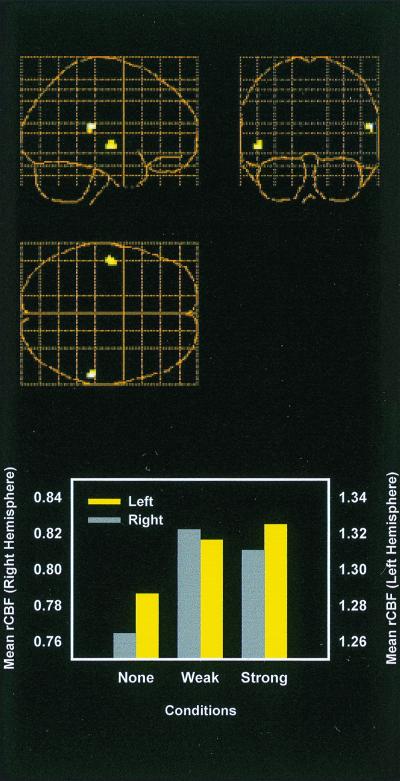

The test of incidental reactivation of auditory brain regions yielded positive results. Word recognition performance (range in parentheses) was 0.79 (0.40–1.0) in the no association condition, 0.79 (0.53–1.0) in the weak association condition, and 1.0 (1.0–1.0) in the strong association condition. An analysis that used the contrast between the (visual + auditory; scan 2 in Table 2) and (visual) encoding conditions as a mask (P < 0.05, uncorrected) for a contrast between the (strong association) and (no association) recognition conditions revealed increased activity in right (x, y, z = 60, −32, 16) and left (x, y, z = −50, −12, −4) auditory responsive cortex (Fig. 3 Top). Activity at the left voxel tended to increase as a function of the strength of the word-sound association (Fig. 3 Bottom). However, the main difference had to do with presence versus lack of word-sound association, and a weighted contrast of the strong and weak association conditions with the no association condition (with the same encoding contrast as mask) revealed bilateral activation in temporal cortex (x, y, z = −50, −12, −4; 54, −36, 12).

Figure 3.

Mean activity in auditory responsive cortex during visual word recognition. (Top) Differential activation when recognition of words with strong sound associations was contrasted with recognition of words with no sound associations (the image from the contrast of word-sound encoding with word encoding served as the mask). (Bottom) Mean activity in right (x, y, z = 60, −32, 16) and left (x, y, z = −50, −12, −4) auditory responsive cortex as a function of experimental condition. None, no word-sound association; Weak, weak word-sound association; Strong, strong word-sound association. y axis, blood flow counts.
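The two recognition contrasts in experiment 2 can be written as weight vectors over the none, weak, and strong conditions. The text does not give the weights of the "weighted contrast"; the sketch assumes (−2, +1, +1), which compares the average of the two association conditions with the no association condition and sums to zero. For simplicity it tests each contrast with a one-sample t statistic over per-subject values at a single voxel. That test is a swapped-in, random-effects-style simplification, not the SPM-96 PET model used in the study, and the data in the usage line are invented.

```python
import numpy as np

# Condition order: none, weak, strong word-sound association.
STRONG_VS_NONE = np.array([-1.0, 0.0, 1.0])
ASSOC_VS_NONE = np.array([-2.0, 1.0, 1.0])  # assumed weights; they sum to zero

def contrast_t(data, weights):
    """One-sample t for a contrast.

    data: subjects x conditions array of adjusted counts at one voxel.
    """
    values = np.asarray(data, dtype=float) @ weights  # one contrast value per subject
    se = values.std(ddof=1) / np.sqrt(values.size)
    return values.mean() / se

# Hypothetical counts for three subjects (rows) in none/weak/strong (columns).
data = [[50, 52, 53], [48, 51, 52], [51, 52, 55]]
print(contrast_t(data, STRONG_VS_NONE), contrast_t(data, ASSOC_VS_NONE))
```

With three equally spaced strength levels, the strong-versus-none weights coincide with a linear trend, so a graded increase like the one at the left voxel loads on this contrast as well.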

Discussion

We have shown that remembering that visual words were paired with sounds during encoding activates regions in right (and to a lesser extent, in left) auditory responsive cortex and that visual word recognition activates regions in auditory responsive cortex if word-sound associations are established during encoding. The auditory regions that were found to be activated at retrieval involved some of the auditory regions that were differentially activated during encoding of sounds. Hence, these findings provide support for the view that retrieval of specific event information is associated with reactivation of some of the regions that were involved during encoding of this information.

An independent but related study by Wheeler and colleagues (18), who used different methods and procedures, makes the same point not only about auditory but also about visual information. In the Wheeler et al. (18) study, over a 2-day period, subjects studied pictures and sounds that were paired with descriptive labels. During subsequent episodic retrieval of pictures and sounds based on the labels, increased activity was observed in regions of visual and auditory cortex, respectively. The activated regions comprised subsets of regions activated during separate perception tasks. These findings are in good agreement with the present results. It should be noted, however, that the auditory cortex activation was stronger in the left than in the right hemisphere in the study by Wheeler et al. (18), whereas the opposite was true in our data set. The reason for this discrepancy is unclear. A previous lesion study of auditory imagery and perception pointed to a special role of right temporal cortex (19), but an imaging study of auditory (song) imagery revealed bilateral activation (20). A possibility is that the nature of the sounds affects the laterality of activations.

Collectively, the present study and the study by Wheeler et al. (18) can be taken as support for the notion that sensory aspects of multisensory event information are stored in some of the brain regions that were activated at encoding. To represent a multisensory event, several sensory regions must be involved, and these need to be interrelated. Medial temporal lobe regions have been proposed to bind together different inputs (21), which is consistent with our observation of differential activation of left medial temporal lobe regions during encoding of word-sound pairs and subsequently during retrieval of sounds based on visual word cues. Moreover, evidence for interrelations between visual and auditory regions was provided by the observation that visual word recognition, which did not demand retrieval of auditory information, was associated with activation of auditory cortex when the words had been associated with sounds. This result, referred to as incidental reactivation, is in keeping with the idea that interregional connections allow one region to activate others during episodic memory retrieval (6, 7) and with empirical demonstrations of functional interactions between visual and auditory regions during language processing (22) and nondeclarative memory (23). It can also be related to the classical psychological idea of "redintegration" (24–26). Redintegration is closely related to the concept of association; however, whereas association refers to the relation between parts (which together form a whole), redintegration refers to the relation between any one of the constituent parts of a complex whole and the totality of the whole. Translated into the present experimental setting, a word-sound pair can be seen as a multisensory whole that includes the representation of the sound and that is redintegrated at retrieval by the unisensory presentation of the visual word.
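Redintegration, in which one part of a stored whole evokes the whole, can be illustrated with a classic Hopfield-style autoassociative network. This toy is only an analogy: it is not the model of refs. 6 and 7 and makes no claim about cortical implementation. Units 0 to 3 stand for a hypothetical "visual word" component and units 4 to 7 for a "sound" component; presenting only the visual half of a stored pattern recovers the sound half through the learned connections.

```python
import numpy as np

def store(patterns):
    """Hebbian weights for +/-1 patterns (one pattern per row), no self-connections."""
    P = np.asarray(patterns, dtype=float)
    W = P.T @ P / P.shape[1]
    np.fill_diagonal(W, 0.0)
    return W

def recall(W, cue, steps=5):
    """Synchronous threshold updates from a partial cue (0 = component absent)."""
    state = np.asarray(cue, dtype=float)
    for _ in range(steps):
        state = np.where(W @ state >= 0, 1.0, -1.0)
    return state

# Two hypothetical word-sound "events": visual half (units 0-3), sound half (4-7).
event_1 = [1, 1, 1, 1, 1, 1, -1, -1]
event_2 = [1, -1, 1, -1, -1, 1, 1, -1]
W = store([event_1, event_2])
print(recall(W, [1, 1, 1, 1, 0, 0, 0, 0]))  # visual cue for event 1 only
```

The two example patterns are chosen to be orthogonal, which keeps crosstalk between them small enough that a half-pattern cue settles on the correct whole after one update.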

Finally, although we have stressed encoding-retrieval similarities in the present study and provided support that reactivation of encoding-related activity during retrieval refers to a real physiological process in the brain, it is important to stress that the reality of reactivation does not mean that retrieval is, or is no more than, a simple “replay” of the activation in the same neuronal networks that are engaged at encoding. Rather, there is substantial evidence that episodic memory encoding and retrieval processes have different neuroanatomical correlates (8), and the present results may best be seen as providing an example where encoding and retrieval processes meet in the brain.

Acknowledgments

The contribution by Holly Cormier and the staff at the positron-emission tomography division of the Center for Addiction and Mental Health (Toronto) is gratefully acknowledged. This work was supported by a grant from the Swedish Council for Research into the Humanistic and Social Sciences (Stockholm, Sweden; to L.N.), and by Natural Sciences and Engineering Research Council of Canada Grant 8632 (to E.T.).

Footnotes

Wheeler, M. E., Petersen, S. E. & Buckner, R. L., Cognitive Neuroscience Meeting, April 9, 2000, San Francisco, CA, abstr. 60.

References

- 1. Craik F I M, Tulving E. J Exp Psychol Gen. 1975;104:268–294.

- 2. Morris C D, Bransford J D, Franks J J. J Verbal Learn Verbal Behav. 1977;16:519–533.

- 3. Tulving E, Thomson D M. Psychol Rev. 1973;80:352–373.

- 4. Ryan L, Eich E. In: Memory, Consciousness, and the Brain: The Tallinn Conference. Tulving E, editor. Philadelphia: Psychological; 1999. pp. 91–105.

- 5. Godden D, Baddeley A D. Br J Psychol. 1975;66:325–331.

- 6. McClelland J L, McNaughton B L, O'Reilly R C. Psychol Rev. 1995;102:419–457. doi: 10.1037/0033-295X.102.3.419.

- 7. Alvarez P, Squire L R. Proc Natl Acad Sci USA. 1994;91:7041–7045. doi: 10.1073/pnas.91.15.7041.

- 8. Cabeza R, Nyberg L. J Cognit Neurosci. 2000;12:1–47. doi: 10.1162/08989290051137585.

- 9. Moscovitch C, Kapur S, Kohler S, Houle S. Proc Natl Acad Sci USA. 1995;92:3721–3725. doi: 10.1073/pnas.92.9.3721.

- 10. Roland P E, Gulyás B. Cereb Cortex. 1995;5:79–93. doi: 10.1093/cercor/5.1.79.

- 11. Nyberg L, McIntosh A R, Cabeza R, Habib R, Houle S, Tulving E. Proc Natl Acad Sci USA. 1996;93:11280–11285. doi: 10.1073/pnas.93.20.11280.

- 12. Persson J, Nyberg L. Microsc Res Tech. 2000; in press.

- 13. McDermott K B, Ojemann J G, Petersen S E, Ollinger J M, Snyder A Z, Akbudak E, Conturo T E, Raichle M E. Memory. 1999;7:661–678. doi: 10.1080/096582199387797.

- 14. Köhler S, Moscovitch M, Winocur G, Houle S, McIntosh A R. Neuropsychologia. 1998;36:129–142. doi: 10.1016/s0028-3932(97)00098-5.

- 15. Talairach J, Tournoux P. Co-Planar Stereotaxic Atlas of the Human Brain. New York: Thieme; 1988.

- 16. Friston K J, Ashburner J, Frith C D, Poline J-B, Heather J D, Frackowiak R S. Hum Brain Mapp. 1996;2:165–189. doi: 10.1002/(SICI)1097-0193(1996)4:2<140::AID-HBM5>3.0.CO;2-3.

- 17. Penhune V B, Zatorre R J, MacDonald J D, Evans A C. Cereb Cortex. 1996;6:661–672. doi: 10.1093/cercor/6.5.661.

- 18. Wheeler M E, Petersen S E, Buckner R L. Proc Natl Acad Sci USA. 2000;97:11125–11129. doi: 10.1073/pnas.97.20.11125.

- 19. Zatorre R J, Halpern A R. Neuropsychologia. 1993;31:221–232. doi: 10.1016/0028-3932(93)90086-f.

- 20. Zatorre R J, Halpern A R, Perry D W, Meyer E, Evans A C. J Cognit Neurosci. 1996;8:29–46. doi: 10.1162/jocn.1996.8.1.29.

- 21. Cohen N J, Ryan J, Hunt C, Romine L, Wszalek T, Nash C. Hippocampus. 1999;9:83–98. doi: 10.1002/(SICI)1098-1063(1999)9:1<83::AID-HIPO9>3.0.CO;2-7.

- 22. Bookheimer S Y, Zeffiro T A, Blaxton T A, Gaillard W D, Malow B, Theodore W H. NeuroReport. 1998;9:2409–2413. doi: 10.1097/00001756-199807130-00047.

- 23. McIntosh A R, Cabeza R E, Lobaugh N J. J Neurophysiol. 1998;80:2790–2796. doi: 10.1152/jn.1998.80.5.2790.

- 24. Hamilton W. Lectures on Meta-Physics and Logic. Vol. 1. Boston: Gould & Lincoln; 1859.

- 25. Horowitz L M, Prytulak L S. Psychol Rev. 1969;76:519–532.

- 26. Tulving E, Madigan S A. Annu Rev Psychol. 1970;21:437–484.