Abstract

A central question in behavioral science is how we select among choice alternatives to obtain consistently the most beneficial outcomes. Three variables are particularly important when making a decision: the potential payoff, the probability of success, and the cost in terms of time and effort. A key brain region in decision making is the frontal cortex as damage here impairs the ability to make optimal choices across a range of decision types. We simultaneously recorded the activity of multiple single neurons in the frontal cortex while subjects made choices involving the three aforementioned decision variables. This enabled us to contrast the relative contribution of the anterior cingulate cortex (ACC), the orbito-frontal cortex, and the lateral prefrontal cortex to the decision-making process. Neurons in all three areas encoded value relating to choices involving probability, payoff, or cost manipulations. However, the most significant signals were in the ACC, where neurons encoded multiplexed representations of the three different decision variables. This supports the notion that the ACC is an important component of the neural circuitry underlying optimal decision making.

INTRODUCTION

A fundamental question in understanding the link between brain and behavior is how the brain computes the best course of action among competing alternatives. In particular, economists, psychologists, and behavioral ecologists have emphasized the importance of three decision variables in how humans and animals determine the value of a choice alternative (Kacelnik, 1997; Stephens & Krebs, 1986; Kahneman & Tversky, 1979): the “payoff” of a choice, the “probability” the choice will yield a particular outcome, and the “cost” in time and effort to obtain an outcome. The neuronal representation of these individual decision variables might be used to form a common neuronal currency which can subsequently guide decision making (Montague & Berns, 2002). Despite the growing interest in the neural correlates of decision making, it remains unclear how these three decision variables are represented and to what extent they are encoded by distinct neuronal populations.

A logical place to search for the encoding of these decision variables is the frontal lobe as damage within this region often causes impairments in decision making and goal-directed behavior. Three specific areas within the frontal lobe are implicated: the orbito-frontal cortex (OFC) (Fellows & Farah, 2007; Fellows, 2006; Izquierdo, Suda, & Murray, 2004; Baxter, Parker, Lindner, Izquierdo, & Murray, 2000; Bechara, Damasio, Damasio, & Anderson, 1994), the anterior cingulate cortex (ACC) (Amiez, Joseph, & Procyk, 2006; Kennerley, Walton, Behrens, Buckley, & Rushworth, 2006; Rudebeck, Buckley, Walton, & Rushworth, 2006; Rudebeck, Walton, Smyth, Bannerman, & Rushworth, 2006; Walton, Kennerley, Bannerman, Phillips, & Rushworth, 2006; Williams, Bush, Rauch, Cosgrove, & Eskandar, 2004; Hadland, Rushworth, Gaffan, & Passingham, 2003; Shima & Tanji, 1998), and the lateral prefrontal cortex (LPFC) (Miller & Cohen, 2001; Owen, 1997; Duncan, Emslie, Williams, Johnson, & Freer, 1996; Shallice & Burgess, 1991). 
Neuronal activity in these areas is often modulated by manipulations that alter the value of a trial, including reward size (Sallet et al., 2007; Amiez et al., 2006; Padoa-Schioppa & Assad, 2006; Roesch, Taylor, & Schoenbaum, 2006; Roesch & Olson, 2003, 2004; Wallis & Miller, 2003b; Leon & Shadlen, 1999), taste (Padoa-Schioppa & Assad, 2006; Schoenbaum, Setlow, Saddoris, & Gallagher, 2003; O’Doherty, Deichmann, Critchley, & Dolan, 2002; Hikosaka & Watanabe, 2000; Tremblay & Schultz, 1999; Schoenbaum, Chiba, & Gallagher, 1998; Watanabe, 1996), proximity to reward delivery (Ichihara-Takeda & Funahashi, 2007; Roesch et al., 2006; Roesch & Olson, 2005; McClure, Laibson, Loewenstein, & Cohen, 2004), outcome likelihood (Matsumoto, Matsumoto, Abe, & Tanaka, 2007; Sallet et al., 2007; Amiez et al., 2006; Brown & Braver, 2005; Knutson, Taylor, Kaufman, Peterson, & Glover, 2005), and even abstract rewards such as beauty (Kawabata & Zeki, 2004) and trust (King-Casas et al., 2005; Rilling et al., 2002). Recent studies have shown that single neurons in the ACC (Sallet et al., 2007; Amiez et al., 2006) and the OFC (Padoa-Schioppa & Assad, 2006, 2008) can reflect the integration of some of these variables to derive an abstract value signal.

Manipulations of reward appear to have a widespread effect on neural activity both within the frontal cortex and in many other regions of the brain (Shuler & Bear, 2006; McCoy & Platt, 2005; Dorris & Glimcher, 2004; Sugrue, Corrado, & Newsome, 2004; Platt & Glimcher, 1999; Schoenbaum et al., 1998). These widespread reward-related activations are unlikely to be redundant signals, and so it is particularly important to understand how they differ across areas. There is some evidence that different decision variables may be represented by distinct populations of neurons (Kobayashi et al., 2006; Roesch et al., 2006; Rudebeck, Walton, et al., 2006; Roesch & Olson, 2004). However, to date, no study has recorded from all three of these frontal areas for any one of these decision variables. Thus, one has to infer specialization of function by comparing across studies, potentially masking important differences between brain areas due to differences in subjects, behavioral paradigms, analysis methods, and selectivity criteria. In addition, many previous studies that examined value manipulations on frontal cortex activity did not require the subject to choose between alternative outcomes. Differences in frontal activity have been noted when a choice is either required or not required (Arana et al., 2003). Consequently, it is important to assess the contribution of different frontal areas to encoding different decision variables within the context of choice behavior.

One way to address these issues is to record from multiple brain regions simultaneously while subjects perform decision-making tasks. This provides a way to compare and contrast the functional contributions of different areas for different functions while capitalizing on the spatial and temporal resolution of single-unit neurophysiology. We used this multi-site, multivariable technique to examine how different frontal areas represent different aspects of decision value. We trained two male rhesus macaques (Macaca mulatta) to make choices between pictures associated with different values along three physically different decision dimensions (“payoff,” “probability,” and “cost”) and recorded the electrical activity of single neurons simultaneously from the OFC, the ACC, and the LPFC while the subjects made their choices. We sought to determine whether there was evidence for distinct populations of neurons within or between areas that encoded the different decision variables. One possible distinction is that the OFC is more important for encoding the reward outcome, whereas the ACC and/or the LPFC encodes the costs involved in obtaining that reward (Rushworth & Behrens, 2008; Lee, Rushworth, Walton, Watanabe, & Sakagami, 2007; Wallis, 2007). This is consistent with the fact that the ACC and the LPFC have stronger connections with motor areas than the OFC, whereas the OFC has stronger connections with the olfactory and gustatory cortex (Petrides & Pandya, 1999; Carmichael & Price, 1995; Dum & Strick, 1993). An alternative possibility is that neurons throughout the frontal cortex will encode value irrespective of the decision variable, consistent with recent accounts of abstract value signals in the OFC and the ACC (Padoa-Schioppa & Assad, 2006, 2008; Sallet et al., 2007; Amiez et al., 2006).

METHODS

Subjects and Neurophysiological Procedures

The subjects, two male rhesus macaques (Macaca mulatta), were 5 to 6 years of age and weighed 8 to 11 kg at the time of recording. We regulated their daily fluid intake to maintain motivation on the task. Our methods for neurophysiological recording are reported in detail elsewhere (Wallis & Miller, 2003a). Briefly, we implanted both subjects with a head positioner for restraint, and two recording chambers, the positions of which were determined using a 1.5-T magnetic resonance imaging (MRI) scanner. We recorded simultaneously from the ACC, the LPFC, and the OFC using arrays of 10 to 24 tungsten microelectrodes (FHC Instruments, Bowdoin, ME). In Subject A, we recorded from the LPFC and the OFC in the left hemisphere and the ACC, the OFC, and the LPFC from the right hemisphere. In Subject B, we recorded from the ACC and the LPFC in the left hemisphere and from the OFC and the LPFC in the right hemisphere. We determined the approximate distance to lower the electrodes from the MRI images and advanced the electrodes using custom-built, manual microdrives. We randomly sampled neurons; no attempt was made to select neurons based on responsiveness. This procedure ensured an unbiased estimate of neuronal activity, thereby allowing a fair comparison of neuronal properties between the different brain regions. Waveforms were digitized and analyzed off-line (Plexon Instruments, Dallas, TX). All procedures were in accord with the National Institutes of Health guidelines and the recommendations of the U.C. Berkeley Animal Care and Use Committee.

We reconstructed our recording locations by measuring the position of the recording chambers using stereotactic methods. These were then plotted onto the MRI sections using commercial graphics software (Adobe Illustrator, San Jose, CA). We confirmed the correspondence between the MRI sections and our recording chambers by mapping the position of sulci and gray and white matter boundaries using neurophysiological recordings. The distance of each recording location along the cortical surface from the lip of the ventral bank of the principal sulcus was then traced and measured. The positions of the other sulci, relative to the principal sulcus, were also measured in this way, allowing the construction of the unfolded cortical maps shown in Figure 6.

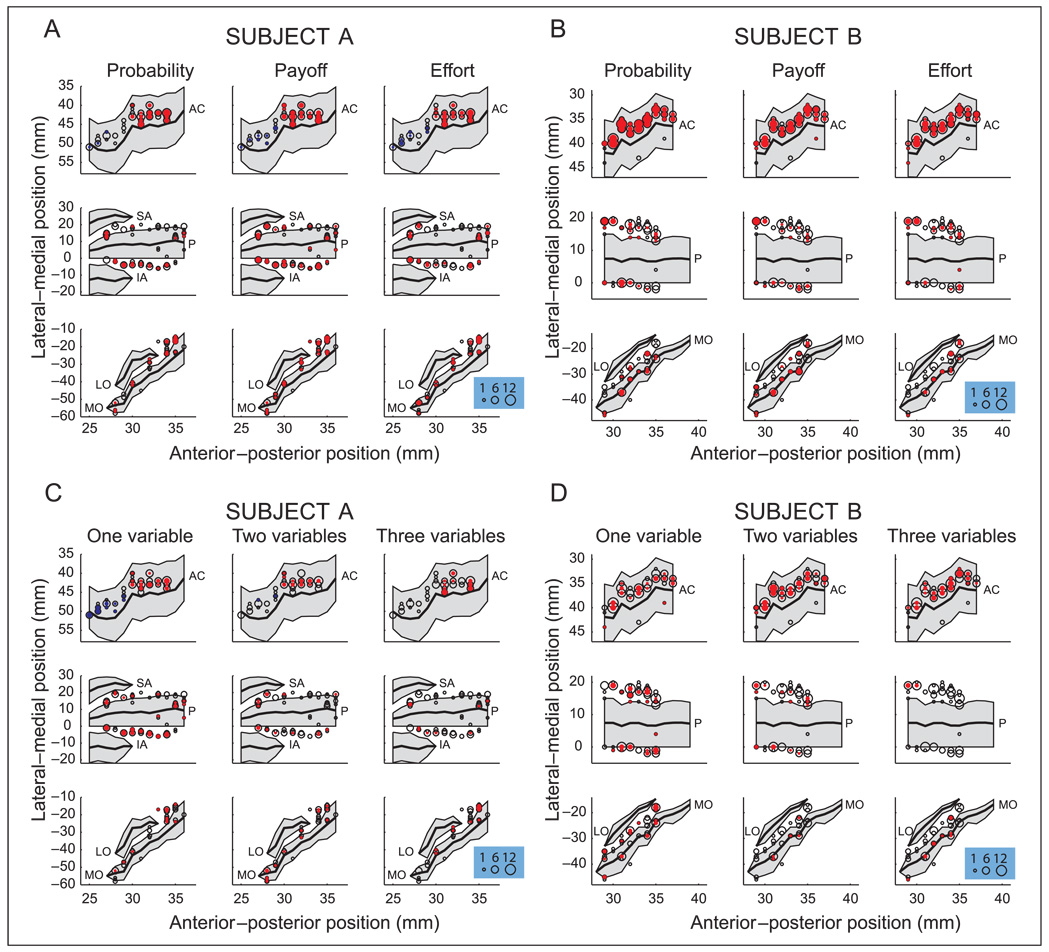

Figure 6.

Locations of all recorded neurons (open circles) and neurons selective for the different decision variables (filled circles) in (A) Subject A and (B) Subject B. Location of neurons selective for different numbers of decision variables in (C) Subject A and (D) Subject B. Red circles indicate the main dataset, whereas blue circles indicate the neurons recorded from the more posterior extent of the ACC. The size of the circles indicates the number of neurons at that location. We measured the anterior–posterior (AP) position from the interaural line (x-axis), and the lateral–medial position relative to the lip of the ventral bank of the principal sulcus (0 point on y-axis). The y-axis runs from ventral to dorsal locations for ACC and LPFC data, and from medial to lateral locations for the OFC data. Gray shading indicates unfolded sulci. See Methods for details regarding the reconstruction of the recording locations. AC = anterior cingulate sulcus; SA = superior arcuate sulcus; IA = inferior arcuate sulcus; P = principal sulcus; LO = lateral orbital sulcus; MO = medial orbital sulcus.

Behavioral Task

We used NIMH Cortex (www.cortex.salk.edu) to control the presentation of stimuli and task contingencies. We monitored eye position with an infrared system (ISCAN, Burlington, MA). Each trial began with the subject fixating a central square cue 0.3° in width (Figure 1A). If the subject maintained fixation within 1.8° of the cue for 1000 msec (fixation epoch), two pictures (2.5° in size) appeared at 5.0° to the left and right of fixation. Each picture was associated with one of the following: (i) a specific number of lever presses required to obtain a juice reward with the probability and magnitude of reward held constant (cost trials), (ii) a specific amount of juice with probability and cost held constant (payoff trials), or (iii) a specific probability of obtaining a juice reward with cost and payoff held constant (probability trials). Thus, on any given trial, choice value was manipulated along a single decision variable. After 1500 msec, the fixation cue changed color, indicating that the subject was free to indicate its choice. Choice value in this context refers to the value of the pair of stimuli available for choice on each particular trial. Although the definition of value is fraught with difficulty, we use it here purely in an operational sense to refer to a manipulation which caused our subjects to favor one choice alternative over another.

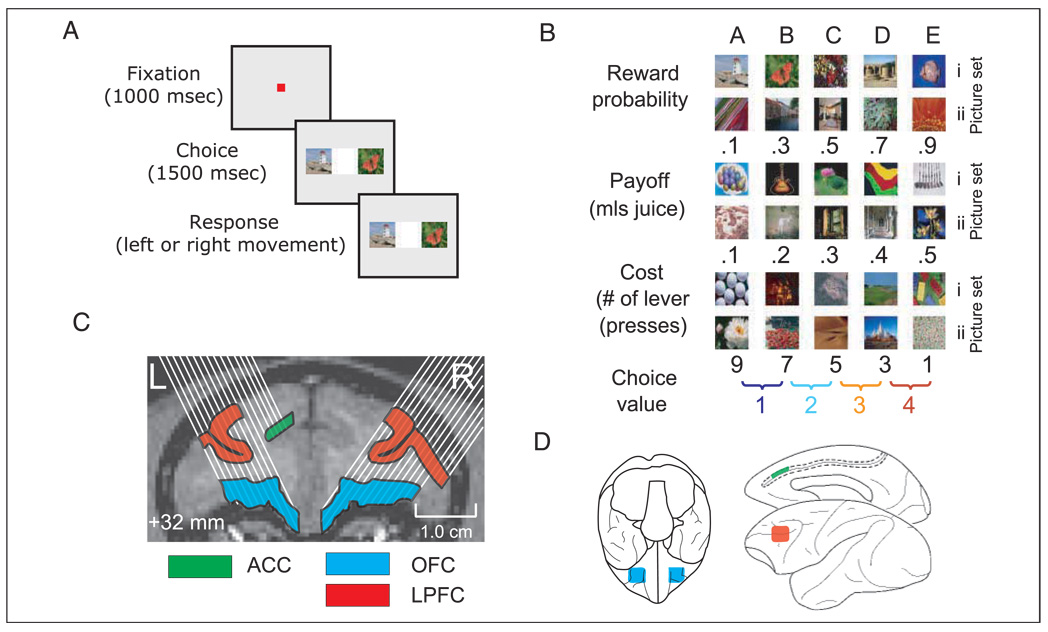

Figure 1.

(A) Sequence of events in the behavioral task. Each trial began with the monkey fixating a central cue for 1000 msec (fixation epoch), after which two pictures appeared on either side of fixation. After 1500 msec, the fixation cue changed color, indicating that the subject was free to saccade (Subject B) or move a joystick (Subject A) to indicate its choice (choice epoch). (B) The pictures and outcomes used for Subject B. We used the same outcomes, but different pictures for Subject A. (C) Magnetic resonance scan of a coronal slice through the frontal lobe of Subject B. Shaded regions denote the boundaries of the three frontal areas investigated. White lines depict potential electrode paths. The coronal slice is at the approximate center position of the recording chambers along the anterior–posterior axis. (D) Schematic showing the boundaries of the frontal areas from which we recorded on ventral, medial, and lateral views of the macaque brain. Dashed line depicts unfolded cingulate sulcus.

We used five different picture values for each decision variable and the two presented pictures were always adjacent in value (Figure 1B). Thus, each picture set involved four distinct choice values. This ensured that, aside from the pictures associated with the most or least valuable outcome, subjects chose or did not choose the pictures equally often. For example, a subject would choose Picture C (Figure 1B) on half of the trials (when it appeared with Picture B) and not choose it on the other half of the trials (when it appeared with Picture D). Thus, frequency with which a picture was selected could not account for differences in neuronal activity across decision value. Moreover, by only presenting adjacent valued pictures, we were able to control for the difference in value for each of the choices and, therefore, the conflict or difficulty in making the choice. We used two sets of pictures for each decision variable to ensure that neuronal responses were not driven by the visual properties of the pictures. We presented trials at random from a pool of 48 conditions: three decision variables, two picture sets, two responses (left/right), and four choice values. Subjects worked for ∼600 trials per day. We defined correct choices as choosing the outcome associated with the largest amount of juice, most probable juice delivery, and least amount of cost. We never punished the animal for choosing a less valuable outcome, for example, by using “timeouts” or correction procedures. Nevertheless, the subjects rapidly learned to choose the more valuable outcomes consistently, typically taking just one or two sessions to learn a set of five picture–value associations during initial behavioral training. Once each subject had learned several picture sets for each decision variable, behavioral training was completed and two picture sets for each decision variable were chosen for each subject. Only these six picture sets for each subject were used during all recording sessions.
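The condition pool described above can be enumerated in a few lines. The following is only an illustrative sketch, not the task-control code used in the experiment: the numeric ranks 1 to 5 for picture values, the labeling of each adjacent pair by its lower rank, and all variable names are assumptions introduced here.

```python
from itertools import product

# Hypothetical labels; the paper only states the number of levels per factor.
DECISION_VARIABLES = ("probability", "payoff", "cost")
PICTURE_SETS = (1, 2)
RESPONSE_SIDES = ("left", "right")  # side of the more valuable picture
N_PICTURE_VALUES = 5                # five picture values per decision variable

# Only adjacent-valued pictures are paired: (1,2), (2,3), (3,4), (4,5).
# Assumption: each pair is labeled with choice value 1..4 by its lower rank.
adjacent_pairs = [(v, v + 1) for v in range(1, N_PICTURE_VALUES)]

conditions = [
    {
        "decision": decision,
        "picture_set": picture_set,
        "better_side": side,
        "choice_value": pair[0],
        "pictures": pair,
    }
    for decision, picture_set, side, pair in product(
        DECISION_VARIABLES, PICTURE_SETS, RESPONSE_SIDES, adjacent_pairs
    )
]


def times_chosen(picture_rank):
    """Number of pairs in which this picture is the more valuable option."""
    return sum(1 for _, high in adjacent_pairs if high == picture_rank)


def times_not_chosen(picture_rank):
    """Number of pairs in which this picture is the less valuable option."""
    return sum(1 for low, _ in adjacent_pairs if low == picture_rank)


print(len(conditions))                      # 3 x 2 x 2 x 4 conditions
print(times_chosen(3), times_not_chosen(3)) # an intermediate picture
```

Under these assumptions, every intermediate picture is the better option in exactly one pair and the worse option in exactly one pair, which is the balance the design relies on; only the extreme pictures are always or never chosen.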

Subject B was required to maintain fixation within 1.8° of the central point throughout the 1000-msec fixation epoch and 1500-msec choice epoch, and indicated his final choice with an eye movement. Failure to maintain fixation resulted in a 5-sec timeout and repetition of the trial. Subject A had great difficulty not looking at the peripheral pictures and maintaining fixation. We decided to require him to fixate within 1.8° of the central point for the 1000-msec fixation epoch, but when the pictures appeared on the screen, he was free to look at them without penalty. This enabled us to analyze his eye movements during the choice epoch, which could provide us with some indication as to the processes underlying his choice. He subsequently indicated his choice by moving a joystick with an arm movement. We tailored the precise reward amounts to each subject to ensure that they received their daily fluid aliquot over the course of the recording session and to ensure that they were sufficiently motivated to perform the task. In both subjects, probability trials delivered 0.45 ml of juice, whereas cost trials yielded 0.525 ml for Subject A and 0.55 ml for Subject B. Payoff trials yielded the amounts indicated in Figure 1B.

Data Analysis

We excluded trials in which a break fixation occurred and the repetition of the trial that followed such a break (19% of trials—Subject B only) and trials where the subject chose the less valuable outcome (< 2% trials in both subjects). We constructed spike density histograms by averaging activity across the appropriate conditions using a sliding window of 100 msec. To calculate neuronal selectivity related to encoding the choice’s value, we fit a linear regression to the neuron’s firing rate observed during a 200-msec time window and the choice’s value (1 through 4; see Figure 1B). We used this to determine the percentage of the total variance in the neuronal activity that the choice’s value explained (PEV or percentage of explained variance). This measure enabled us to determine the selectivity of the neuron in a way that was independent of the absolute neuronal firing rate. This is useful when comparing neuronal populations that can differ in the baseline and dynamic range of their firing rates. We calculated PEVValue for the first 200 msec of the fixation period and then shifted this 200-msec window in 10-msec steps until we had analyzed the entire trial and calculated the PEVValue for each time point. We then used this analysis to determine the latency at which neurons exhibited selectivity. We defined a neuron as encoding the value of a choice for a given decision variable if the sliding regression analysis reached a significance level of p < .001 for three consecutive sliding time bins. We chose this criterion to produce acceptable type I error levels. We quantified this by examining how many neurons reached our criterion during the fixation period (when the subject does not know which choice will occur, and so the number of neurons reaching criterion should not exceed chance levels). Our threshold yielded 0.8% of the neurons reaching criterion during the fixation period for probability decisions, 0.7% for payoff decisions, and 0.8% for effort decisions. 
There were no neurons that reached criterion for more than one decision variable. Thus, our type I error for this analysis was acceptable: Crossings of our criterion by chance typically occurred less than 1% of the time for a 1000-msec epoch.
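The sliding regression and the three-consecutive-bin criterion can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used for the study: PEV is taken here to be 100 × r² from a simple linear regression, the latency is reported as the start time of the first window in the qualifying run, and the per-window p values are assumed to be supplied by whatever regression routine is used. All function names are hypothetical.

```python
def percent_explained_variance(values, rates):
    """PEV as 100 * r^2 from a linear regression of firing rate on choice value."""
    n = len(values)
    mean_x = sum(values) / n
    mean_y = sum(rates) / n
    sxx = sum((x - mean_x) ** 2 for x in values)
    syy = sum((y - mean_y) ** 2 for y in rates)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(values, rates))
    if sxx == 0 or syy == 0:
        return 0.0  # no variance to explain
    return 100.0 * sxy ** 2 / (sxx * syy)


def window_starts(trial_length_ms, window_ms=200, step_ms=10):
    """Start times of 200-msec windows shifted in 10-msec steps across the trial."""
    return list(range(0, trial_length_ms - window_ms + 1, step_ms))


def selectivity_latency(p_values, starts, alpha=0.001, n_consecutive=3):
    """Start time of the first run of n_consecutive windows with p < alpha, else None."""
    run = 0
    for i, p in enumerate(p_values):
        run = run + 1 if p < alpha else 0
        if run == n_consecutive:
            return starts[i - n_consecutive + 1]
    return None


# Toy example: firing rate rises linearly with choice value (1 through 4).
print(percent_explained_variance([1, 2, 3, 4], [2.0, 4.0, 6.0, 8.0]))
```

Requiring consecutive significant bins matters because adjacent 200-msec windows overlap heavily, so a single bin crossing p < .001 is a weaker guard against chance crossings than a sustained run.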

We used a similar method to analyze the encoding of the motor response. We examined the extent to which neurons encoded this factor by comparing neuronal activity on trials where the more valuable picture (and consequently, the subject’s response) was on either the left or right side of the screen using a one-way ANOVA. From this, we calculated the percentage of variance in the neuronal firing rate attributable to the motor response (PEVResponse). We did this for 200-msec windows of activity shifted in 10-msec steps until the entire trial had been analyzed, and then determined the latency at which neurons exhibited selectivity using the criterion of three consecutive time bins with p < .001.
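For the motor response, the explained variance from a one-way ANOVA can be illustrated as the ratio of between-group to total sum of squares. The text does not state which effect-size formula was used, so treating PEV as 100 × SS_between / SS_total (eta squared) is an assumption of this sketch, as are the function name and the toy firing rates.

```python
def pev_one_way(groups):
    """Percentage of variance explained by group membership (eta squared x 100)."""
    all_rates = [rate for group in groups for rate in group]
    grand_mean = sum(all_rates) / len(all_rates)
    ss_total = sum((rate - grand_mean) ** 2 for rate in all_rates)
    ss_between = sum(
        len(group) * (sum(group) / len(group) - grand_mean) ** 2
        for group in groups
    )
    if ss_total == 0:
        return 0.0  # constant firing rate: nothing to explain
    return 100.0 * ss_between / ss_total


# Toy firing rates (spikes/sec) in one 200-msec window, split by response side.
left_trials = [10.0, 12.0]
right_trials = [20.0, 22.0]
print(pev_one_way([left_trials, right_trials]))
```

The same function would be applied to each 200-msec window in turn, with the latency then read off using the three-consecutive-bin criterion.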

The present analyses focus on neuronal activity during the choice epoch. We also recorded neuronal activity during the outcome of the choice, which we will describe in a subsequent report.

RESULTS

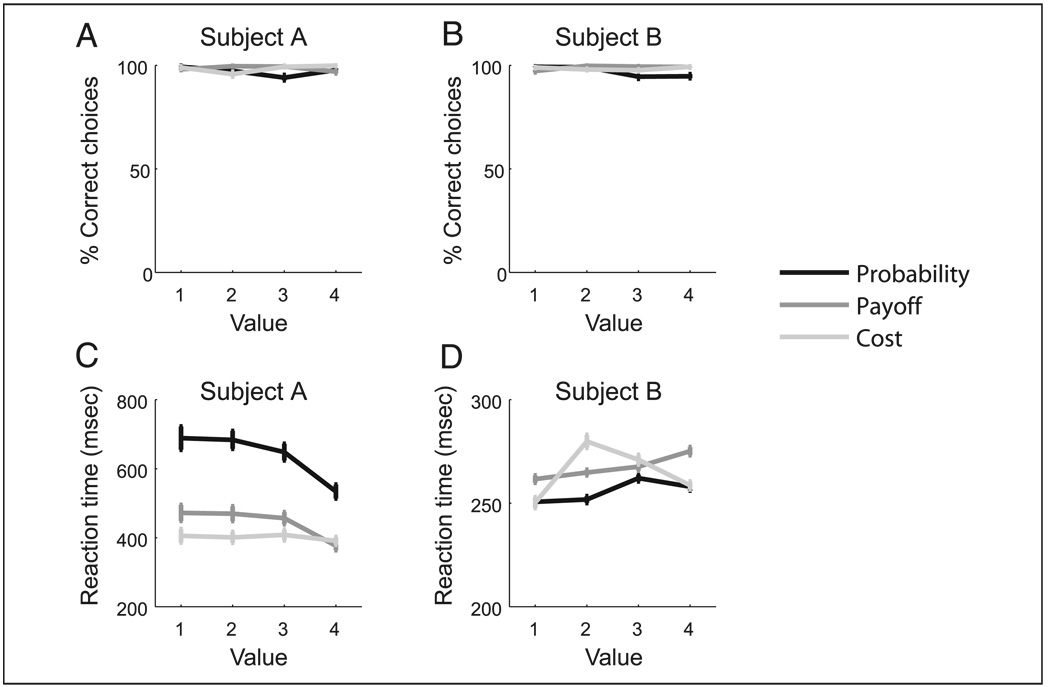

Subjects performed the task near ceiling, choosing the more valuable picture on more than 98% of the trials (Figure 2A and B). The value of the choices systematically affected both subjects’ reaction times although in opposite directions. As value increased, Subject A’s reaction times generally decreased whereas Subject B’s increased. A more detailed analysis using a two-way ANOVA with the factors of choice (four different choices) and decision (probability, payoff, and cost) revealed a significant interaction for both subjects [Subject A: F(6, 276) = 2.5, p < .05; Subject B: F(6, 408) = 15.6, p < 1 × 10⁻¹⁵]. An analysis of the simple effects showed that Subject A’s reaction times decreased linearly as value increased for probability [F(3, 276) = 11.8, p < 1 × 10⁻¹⁵] and payoff [F(3, 276) = 4.6, p < .01] decisions, but not for cost decisions [F(3, 276) = 0.1, p > .1]. In contrast, for Subject B, reaction times increased linearly with value for probability [F(3, 408) = 6.7, p < .001] and payoff decisions [F(3, 408) = 7.6, p < 1 × 10⁻¹⁵] and nonlinearly for cost decisions [F(3, 408) = 39.6, p < 1 × 10⁻¹⁵]. Thus, although decision value was manipulated objectively, behavioral evidence suggests this manipulation had a significant effect on how the subjects subjectively valued each condition.

Figure 2.

(A, B) Both subjects performed the task near ceiling, choosing the more valuable picture on more than 98% of the trials. (C, D) The value of the choices systematically affected both subjects’ reaction times although in opposite directions. As value increased, Subject A’s reaction times generally decreased, whereas Subject B’s increased.

Encoding of Decision Variables during Choice Evaluation

We recorded the activity of 610 neurons from the frontal lobe. There were 257 neurons from the LPFC defined as areas 9, 46, 45, and 47/12l (113 from Subject A and 144 from Subject B). There were 140 neurons from the OFC defined as areas 11, 13, and 47/12o (58 from Subject A and 82 from Subject B). Finally, 213 neurons were in the ACC, within area 24c in the dorsal bank of the cingulate sulcus (70 from Subject A and 143 from Subject B). For many neurons, the activity during the choice epoch (1500-msec period between picture onset and go cue) reflected the value of the available options.

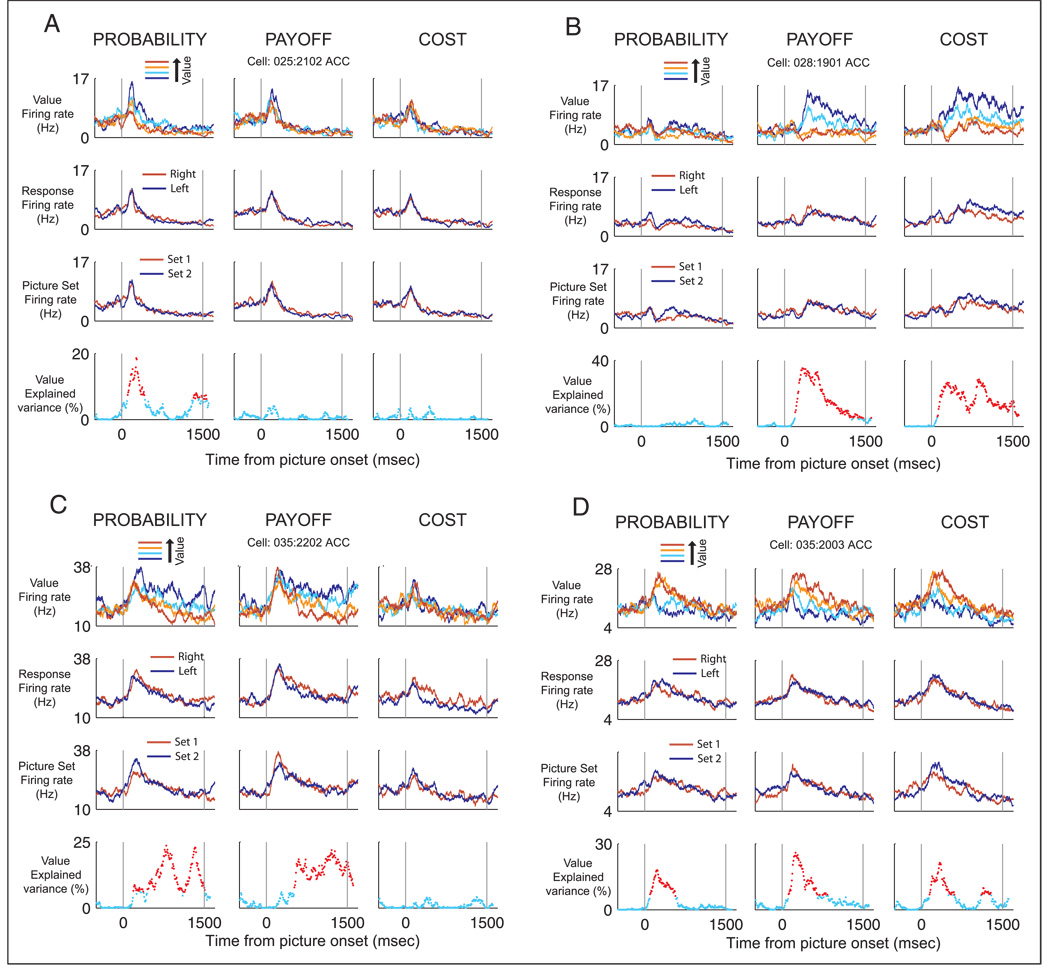

The neuron in Figure 3A encodes the value of the choice for a single decision variable. It shows an increase in firing rate as the probability of the reward decreases, but does not modulate its firing rate for choices where we manipulate payoff or cost. Other neurons showed more complex encoding, modulating their firing rate to combinations of two or more decision variables. For example, the neuron in Figure 3B shows an increase in firing rate as value decreases for manipulations of either payoff or cost but shows no change in its response when we manipulate the value of the probability decisions. Figure 3C illustrates a neuron that shows an increase in firing rate as value decreases for manipulations of probability and payoff but not cost. Figure 3D illustrates a neuron that increases its firing rate as the value of the choice increases for all three decision variables. None of the four neurons in Figure 3 are modulated by the upcoming motor response or the picture sets that we used.

Figure 3.

(A) An ACC neuron that increases its firing rate as choice values decrease for probability decisions only. The top three rows of panels display spike density histograms illustrating the mean firing rate of the neuron on trials sorted according to choice value, direction of the behavioral response, and picture set, respectively. Each column illustrates the neuron’s response to each of the decision variables. The lowest row of panels quantifies the strength of encoding of the choice’s value by calculating the percentage of explained variance based on the results of a sliding linear regression analysis (see Methods). Significant time bins are colored red. (B) An ACC neuron that encodes the choice values for payoff and cost decisions. (C) An ACC neuron that encodes the choice values for probability and payoff. (D) An ACC neuron that encodes the value of the choices for all three decision variables.

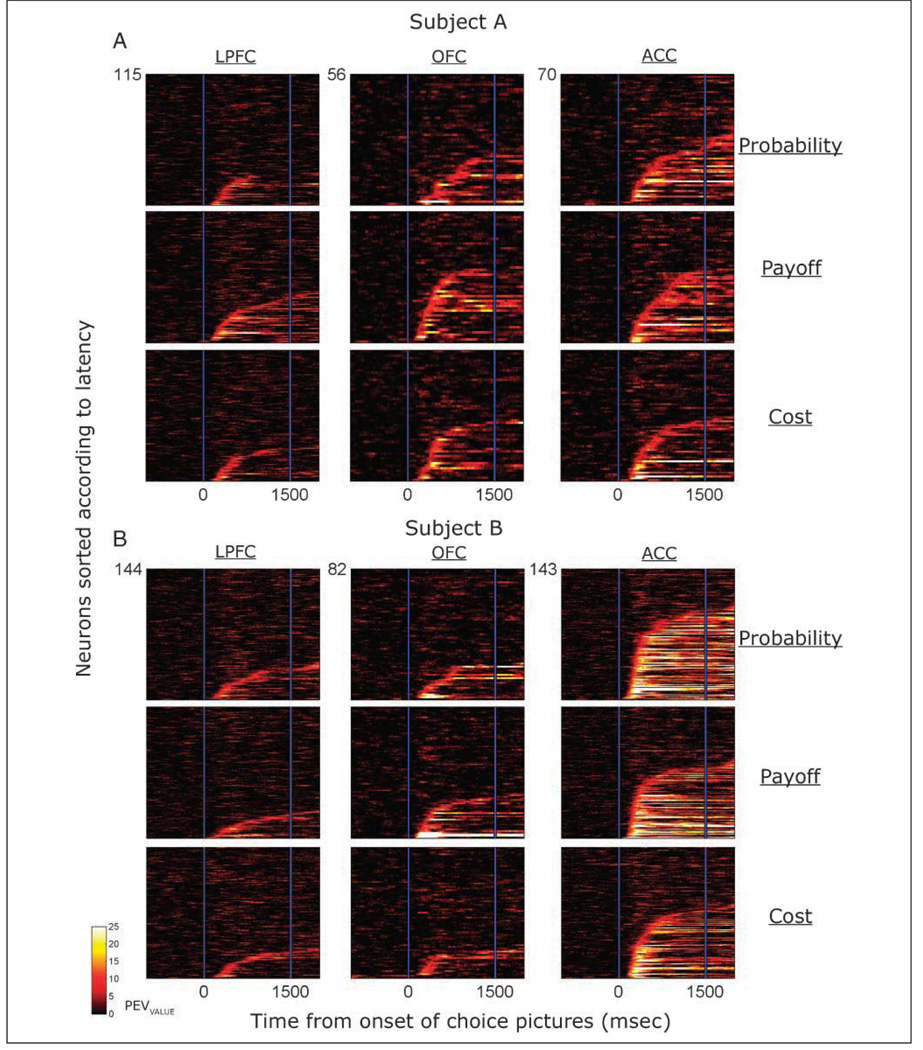

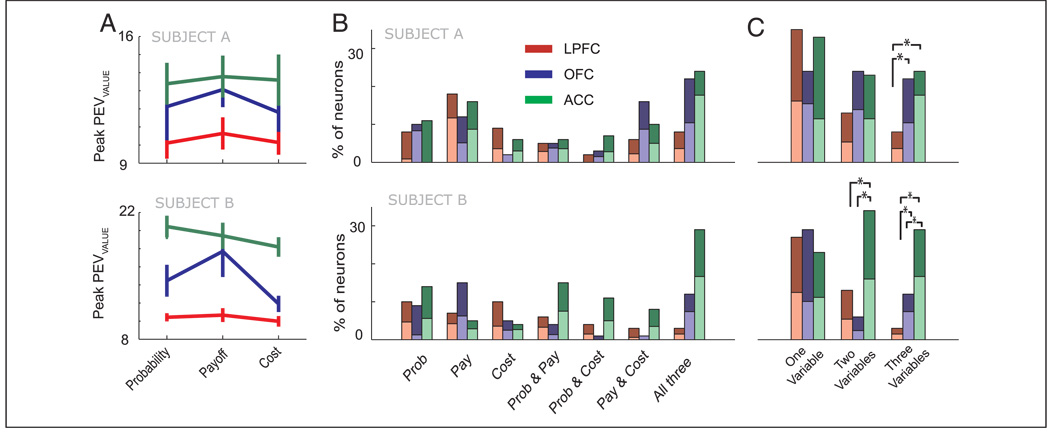

To quantify and compare the strength and time course of the encoding of the choice’s value across the different neuronal populations, we performed a sliding linear regression analysis (see Methods). Figure 3 shows that there is good correspondence between this measure and the selectivity evident in the spike density histograms. Figure 4 illustrates the time course of encoding the choice’s value for all neurons in each brain area and for each decision variable. The most prevalent selectivity was in the ACC, where 84% of the neurons reached criterion for encoding value for at least one of the decision variables, followed by the OFC (56%) and the LPFC (49%). There was no difference in these proportions between the subjects for the ACC and the LPFC, but in the OFC, selectivity was weaker in Subject B (46%) than in Subject A [71%, χ² = 7.2, p < .01]. To determine the strength of selectivity, for every neuron that exceeded our criterion value, we determined the peak selectivity reached during the choice epoch (Figure 5A). In both subjects, the ACC exhibited the strongest selectivity, followed by the OFC and then the LPFC. We compared these values using a two-way ANOVA with the factors of decision and area. There was a very significant main effect of area [F(2, 681) = 42.9, p < 1 × 10⁻¹⁶], with no significant effect of decision and no interaction. Subsequent post hoc analysis using a Tukey–Kramer test confirmed that the values for every brain area were significantly different from one another (p < .01), with the ACC showing stronger peak selectivity than the OFC and the LPFC, and the OFC showing stronger peak selectivity than the LPFC.
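The between-subject comparison of OFC proportions can be illustrated with a 2 × 2 chi-square test. This sketch rests on two assumptions that are not stated in the text: the selective-neuron counts (41 of 58 for Subject A, 38 of 82 for Subject B) are back-calculated here from the reported percentages and sample sizes, and Yates' continuity correction is applied. Under those assumptions the statistic comes out close to the reported value, but this should not be read as a reconstruction of the authors' exact procedure.

```python
def chi_square_2x2(a, b, c, d, yates=True):
    """Chi-square statistic for the 2 x 2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    diff = abs(a * d - b * c)
    if yates:
        diff = max(0.0, diff - n / 2)  # Yates' continuity correction
    return n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


# Assumed counts, inferred from 71% of 58 and 46% of 82 OFC neurons.
subject_a_selective, subject_a_total = 41, 58
subject_b_selective, subject_b_total = 38, 82

chi2_corrected = chi_square_2x2(
    subject_a_selective,
    subject_a_total - subject_a_selective,
    subject_b_selective,
    subject_b_total - subject_b_selective,
)
chi2_uncorrected = chi_square_2x2(
    subject_a_selective,
    subject_a_total - subject_a_selective,
    subject_b_selective,
    subject_b_total - subject_b_selective,
    yates=False,
)
print(round(chi2_corrected, 1), round(chi2_uncorrected, 1))  # 7.2 8.2
```

With one degree of freedom, both values exceed the 6.63 critical value for p < .01, so the qualitative conclusion does not depend on the correction.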

Figure 4.

The time course of selectivity for encoding a choice’s value across the entire population of neurons from which we recorded, sorted according to the area from which we recorded and the decision variable that was manipulated. On each plot, each horizontal line indicates the data from a single neuron, and the color code illustrates how the PEVValue changes across the course of the trial. We have sorted the neurons vertically according to the latency at which their selectivity exceeds criterion. The dark area at the top of each plot consists of neurons that did not reach the criterion during the choice epoch.

Figure 5.

(A) The mean (± standard error) of the peak selectivity reached during the choice epoch for every neuron that reached criterion. (B) Percentage of all neurons that encode the value of the choice depending on which decision variable was being manipulated. The light shading indicates the proportion of neurons that increased their firing rate as value increased, whereas the dark shading indicates those that increased their firing rate as value decreased. (C) Percentage of neurons that encode value across one, two, or three decision variables. Asterisks indicate the proportions that are significantly different from one another (χ² test, p < .05).

We classified each neuron according to whether it reached criterion for each decision variable. We saw many different types of encoding, representing every decision variable and every combination of decision variables. These different types of encoding were present in all three of the frontal lobe areas from which we recorded (Figure 5B). We grouped the different types of encoding according to how many variables were encoded (Figure 5C). In both subjects, LPFC neurons frequently encoded single decision variables, but showed little evidence of encoding multiple decision variables. In contrast, ACC neurons frequently encoded multiple decision variables. The data from the OFC showed variation between the two subjects, with less encoding of multiple variables occurring in the OFC of Subject B. Nevertheless, even in Subject B, 12% of OFC neurons encoded all three decision variables. For the majority of neurons, the relationship between firing rate and value was consistent across decision variables. For example, if a neuron showed a positive relationship between its firing rate and the value of a choice for one decision variable, it would show a similar positive relationship for other decision variables. There were exceptions, but they constituted a minority (10.0% of all neurons). Figure 5B and C show that approximately equal numbers of neurons increased their firing rate as the value increased (49%) and as the value decreased (51%).

A plausible mechanism by which these different value signals might arise is that neurons that integrate value for multiple decision variables do so by receiving information from neurons tuned to single decision variables. If this were the case, one might expect neurons that encode value for a single decision variable to do so earlier than neurons that encode value for two or more decision variables. To examine whether this was the case, we focused on the ACC because this region contained sufficient neurons that encoded one, two, or three decision variables to permit a meaningful analysis in both subjects. We compared the latencies at which neurons reached our criterion for encoding the value of the choice using a one-way ANOVA grouping the neurons according to the number of decision variables for which the neuron encoded value [there was a significant effect, F(2, 175) = 14.5, p < .00001]. A post hoc Tukey–Kramer test revealed that this was due to significantly longer latencies for encoding one decision variable (645 ± 48 msec) compared to two (460 ± 31 msec) and three decision variables (381 ± 21 msec). The latter two groups did not significantly differ from one another. The same pattern of significant results was evident if we analyzed the data from each subject separately. In sum, there was no evidence that neurons that encoded all three decision variables did so by integrating information from neurons that encoded a single decision variable.

There was no evidence that neurons encoding a particular decision variable(s) were clustered within a brain area (Figure 6). However, in Subject A, we extended our recording area posteriorly toward the cingulate motor areas and recorded the activity of 31 neurons. In this region, many neurons encoded the value of the choice, but tended to do so for only a single decision variable (12/31 or 39%; Figure 6). Only 2/31 neurons (6%) encoded value for two decision variables, and there were none that encoded value for all three variables. Thus, within the dorsal bank of the cingulate sulcus, the encoding of value across multiple decision variables appears to be a process that is confined to the anterior portion of the ACC.

Action Selection

Once the subject has determined the value of the choice alternatives, they must then select the appropriate motor response. We calculated the percentage of explained variance in the neuronal firing rate attributable to the motor response (selection of the left or right picture) across the course of the trial (see Methods). For each brain area, we determined the total number of neurons that reached criterion for encoding the motor response for any of the three decisions. The proportion of motor-selective neurons in the ACC (50%) and the LPFC (43%) did not significantly differ (χ² = 2.0, p > .1). However, the proportion in the OFC (30%) was significantly smaller than in either the ACC (χ² = 13.6, p < .001) or the LPFC (χ² = 6.4, p < .05). Motor-selective neurons were also more prevalent in Subject A (67%), who indicated his response with an arm movement, than in Subject B (27%, χ² = 97, p < 1 × 10⁻¹⁵), who indicated his response with an eye movement. Previous studies within the ACC have tended to observe more response encoding when arm movements were used (Matsumoto, Suzuki, & Tanaka, 2003; Shima & Tanji, 1998) rather than eye movements (Seo & Lee, 2007; Ito, Stuphorn, Brown, & Schall, 2003). Future experiments will examine whether these differences depend on the effector or reflect individual differences.

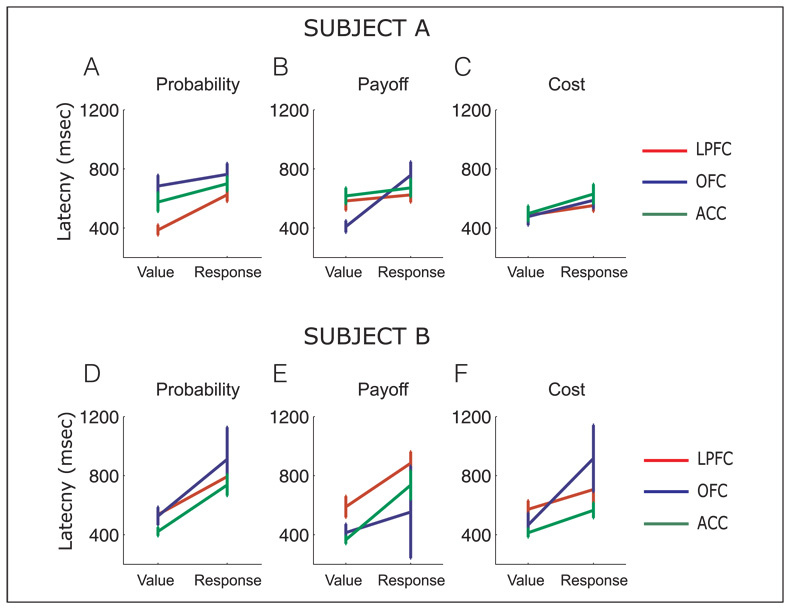

We compared the latency at which neurons encoded the value of the choice with the latency at which they encoded the motor response using a three-way ANOVA performed separately for each subject’s data (Figure 7). The factors were encoding (whether the latency was to encode value or motor information), area (LPFC, OFC, and ACC), and decision (probability, payoff, and cost). For Subject A, there was a significant effect of encoding [F(1, 601) = 21.1, p < .00001]. Neurons encoded value information with a mean latency of 529 ± 20 msec, whereas they encoded response information with a mean latency of 644 ± 19 msec. For Subject B, there was a highly significant effect of encoding [F(1, 525) = 43.7, p < 1 × 10⁻⁹]. Neurons encoded value information with a mean latency of 448 ± 15 msec, whereas they encoded response information with a mean latency of 729 ± 31 msec. There was also a significant effect of area [F(2, 525) = 8.7, p < .001]. ACC neurons encoded both value and motor information significantly more quickly than LPFC neurons (Tukey–Kramer test, p < .01), whereas OFC neurons did not significantly differ from either area.

Figure 7.

Comparison of the mean latency (± standard error) of all selective neurons to reach the criterion for selectivity for encoding of the choice’s value and the motor response, for probability, payoff, and cost decisions for Subject A (A–C) and Subject B (D–F).

In summary, these results show that neurons encoded response information later in the trial than value information, consistent with the notion that the subject has to calculate the value of the choice alternatives before that information can be used to select the appropriate response. No other main effects or interactions were significant at p < .01 in either subject. Thus, there was no evidence that a specific area encoded value or motor information for a specific decision variable before the other areas.

Other Types of Encoding

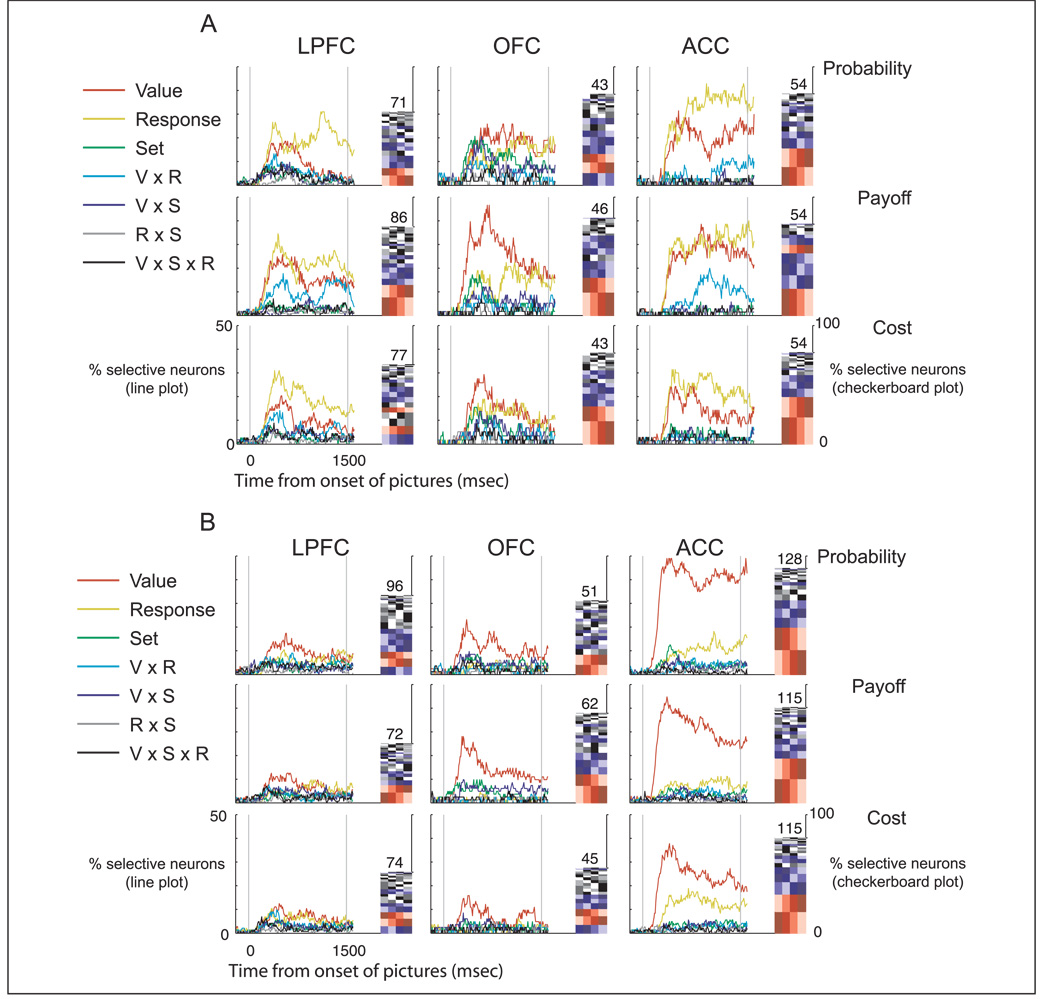

Our focus on the encoding of value and motor information was based on the prominence of this selectivity in the spike density histograms. However, to ensure that we were not overlooking other kinds of neuronal encoding, we performed a factorial analysis using a sliding three-way ANOVA for each neuron and each decision variable in turn. The factors were value (the four different choice values), response (left or right), and picture set (the two sets of pictures). Figure 8 shows, for each time point, the proportion of neurons in the population that significantly encoded a factor or interaction of factors. In every area and for every decision variable, the most prevalent encoding was either the choice’s value or the upcoming motor response, supporting our focus on these two factors.

Figure 8.

The line plots indicate the percentage of all neurons according to the three-way ANOVA that encoded a given factor or interaction of factors at each time point across the course of a trial for (A) Subject A and (B) Subject B. The checkerboard indicates the proportion of different value-encoding schemes. Each row indicates a specific ordering of values, with the values arranged from left to right according to how strongly the neuron fired. The intensity of shading indicates a specific value (with lowest to highest value shaded light to dark). The height of each row indicates the percentage of neurons that encoded value with that particular ordering. The different orderings are arranged vertically in decreasing prevalence. As an example, consider the encoding of value for probability decisions in ACC (A, top right). The most common ordering (bottom row) is those neurons that fired least to the highest value (leftmost darkest shading) and then showed a progressive increase in firing as value progressively decreased, with their highest firing rate occurring to the lowest value (rightmost lightest shading). The next most prevalent ordering (row second from bottom) is those neurons that fired least to the lowest value and then showed a progressive increase in firing as value progressively increased. We have color-coded the different orderings to highlight those of particular interest. The red shading indicates the two orderings that correspond to monotonic encoding schemes, whereas the blue shading indicates the six orderings that correspond to single transpositions from monotonic encoding. The gray-scale shadings reflect the remaining 16 orderings that were neither monotonic nor a single transposition from monotonic. The overall height of the checkerboard indicates the proportion of the neuronal population that encoded value, whereas the absolute number of neurons that did so is printed atop the checkerboard.

An advantage of using ANOVA to characterize neuronal selectivity is that it makes no assumptions regarding the precise relationship between the neuron’s firing rate and the value of the choice. We capitalized on this to examine whether a linear monotonic function best described this relationship. For every time bin in the sliding ANOVA analysis that showed a significant main effect of value, we rank-ordered the choice values according to the firing rate of the neuron. Then, for each neuron and each decision variable, in turn, we determined the most frequent ordering over the course of the trial (in 6% of the cases two or more orderings were equally frequent, in which case we used the one that occurred earliest in the trial). Figure 8 illustrates the proportion of these different orderings via the checkerboard plots. Out of the 24 possible orderings, the two most frequent were the monotonically increasing and monotonically decreasing orderings, which together were seen in approximately one third of neurons (probability = 31%; payoff = 38%; cost = 33%). The six orderings that corresponded to single transpositions from monotonic accounted for activity in over one third of neurons (probability = 35%; payoff = 34%; cost = 39%). The remaining 16 possible orderings were encoded by the remaining neurons (probability = 34%; payoff = 28%; cost = 28%). We also performed a trend analysis for every time bin in the sliding ANOVA analysis that showed a significant main effect of value. In 68% of cases, the variance in the data was fit by a linear function with no residual variance left to explain. The addition of a quadratic function significantly reduced the residual variance in 2% of the remaining cases. In sum, for the majority of neurons, the neuronal encoding of value was best described as a linear monotonic function.
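The partition of the 24 possible orderings into 2 monotonic, 6 single-transposition, and 16 remaining orderings can be checked by enumeration. The sketch below assumes that a "single transposition" means swapping two neighbouring positions of a monotonic ordering, which is the reading that yields six such orderings. The function name `classify_ordering` and the category labels are hypothetical.

```python
from itertools import permutations

def classify_ordering(order):
    """Classify a rank ordering of four choice values (1 = lowest, 4 = highest)."""
    order = tuple(order)
    ascending = tuple(sorted(order))
    descending = ascending[::-1]
    if order in (ascending, descending):
        return "monotonic"
    # Assumption: a single transposition swaps two adjacent positions
    # of either the ascending or the descending ordering.
    for mono in (ascending, descending):
        for i in range(len(mono) - 1):
            swapped = list(mono)
            swapped[i], swapped[i + 1] = swapped[i + 1], swapped[i]
            if tuple(swapped) == order:
                return "single_transposition"
    return "other"

counts = {"monotonic": 0, "single_transposition": 0, "other": 0}
for order in permutations((1, 2, 3, 4)):
    counts[classify_ordering(order)] += 1

print(counts)  # {'monotonic': 2, 'single_transposition': 6, 'other': 16}
```

In an analysis of recorded data, `order` would be the choice values sorted by a neuron's mean firing rate in a given time bin.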

Finally, we examined whether other factors might be capable of explaining value encoding. We repeated the sliding regression analysis and determined the partial correlation between neuronal activity and decision value, with the subjects’ reaction times partialled out. For Subject A, who was allowed to move his eyes during the choice epoch, we also partialled out saccadic reaction times, number of saccades, and the total duration spent looking at the chosen or not chosen picture. For both subjects, there was little effect of these parameters on value encoding. An average of 1% of the neurons that we had previously classified as selective were now classed as nonselective.
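As an illustration of what partialling out a covariate involves, the following sketch computes a first-order partial correlation between firing rate and decision value with a single covariate (reaction time) removed. It is a simplified stand-in rather than the analysis reported here: the sliding-window structure and the additional oculomotor covariates used for Subject A are omitted, and all data values and function names are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def partial_corr(x, y, z):
    """Correlation of x and y after removing the linear effect of z from both."""
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

# Hypothetical trial-by-trial data for one neuron in one time window.
value = [1, 2, 3, 4, 1, 2, 3, 4]                      # choice value (rank)
rate = [5.1, 7.2, 8.8, 11.0, 4.9, 6.9, 9.3, 10.8]     # firing rate (Hz)
rt = [420, 400, 390, 360, 430, 395, 385, 370]         # reaction time (msec)

print(f"raw r = {pearson(rate, value):.3f}")
print(f"partial r (RT removed) = {partial_corr(rate, value, rt):.3f}")
```

If value encoding were merely a by-product of reaction time, the partial correlation would fall toward zero; a neuron whose partial correlation remains significant would still be classed as value selective.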

Value Transitivity

Although neuronal responses indicated that neurons encoded the value of a choice pair relative to other choice pairs, this was not required to solve the behavioral task. Subjects could have performed the task optimally by considering each choice pair in isolation (“if given options A and B, choose B; if given options B and C, choose C,” etc.). Such a strategy would have left them unable to solve novel choice pairings, except through a process of trial-and-error learning. We tested whether this was the case, by presenting each subject with pairs of pictures that were not adjacent in value, a pairing they had never previously encountered. The only way to perform optimally in a transitive inference test is to use the picture–value associations to determine which choice is optimal. Of the 36 novel picture pairings, both subjects chose the more valuable picture every time on the very first occasion of the transitive test. This behavioral evidence is consistent with the neuronal data and suggests that subjects did evaluate choice outcomes relative to other potential choices.
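One way to arrive at 36 novel pairings is sketched below. It assumes five ranked pictures per picture set for each decision variable (the number implied by four adjacent-value choice pairs), two picture sets, and three decision variables. The picture count is an inference from the design described in this section, and the names are hypothetical.

```python
from itertools import combinations

N_PICTURES = 5            # assumption: four adjacent-value pairs imply five ranked pictures
N_PICTURE_SETS = 2        # two sets of pictures
N_DECISION_VARIABLES = 3  # probability, payoff, and cost

def novel_pairs(n_pictures):
    """Pairs of value ranks that are not adjacent, i.e., never paired in training."""
    return [(lo, hi) for lo, hi in combinations(range(n_pictures), 2) if hi - lo > 1]

pairs = novel_pairs(N_PICTURES)
total_novel = len(pairs) * N_PICTURE_SETS * N_DECISION_VARIABLES

print(len(pairs), total_novel)  # 6 36
```

Under these assumptions, a subject relying only on memorized pair-specific rules would have no rule for any of the six nonadjacent pairs in a set, whereas a subject using picture–value associations could rank them immediately.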

DISCUSSION

During the performance of a simple choice task, many neurons throughout the frontal cortex encoded the value of the choice. There was no evidence that specific areas of the frontal cortex were specialized for processing specific decision variables. There were neurons in all three frontal areas that encoded value relating to decisions involving probability, payoff, or cost manipulations. However, there was a specialization of function with relation to the number of decision variables encoded. Neurons that encoded a single decision variable were equally prevalent throughout the frontal cortex, whereas neurons that encoded two or more decision variables were significantly more common in the ACC and the OFC compared to the LPFC. Neurons that encoded two decision variables are particularly interesting. Had we only found neurons that encoded one decision variable, we would not have evidence that neurons encode value in at least a partially abstract manner. Conversely, had we only found neurons that encoded value for all three decision variables, we could not unconfound decision value from associated processes that correlate with value, such as motivation, arousal, and attention (Roesch & Olson, 2007; Maunsell, 2004). The activity of neurons that encode one or two decision variables cannot be related to motivation, attention, or arousal because these associated cognitive processes would correlate with value regardless of how it is manipulated. These neurons signal both the value of the choice and the reason that the choice is valuable (i.e., which decision variable was manipulated).

Neurons typically encoded value as a linear monotonic function. However, as the value of the choice increased, approximately as many neurons increased their firing rate as decreased it. The reason for this bidirectional information encoding scheme is unknown, but it is evident in multiple frontal areas during perceptual and reward-related discriminations (Seo & Lee, 2007; Machens, Romo, & Brody, 2005). One possibility is that these different encoding patterns reflect different processes that guide choice, such as encoding the value of the chosen or nonchosen alternative to bias the selection process. There was no spatial organization evident in the distribution of such neurons: We even recorded neurons with positive and negative relationships between firing rate and decision value from the same electrode position. These results have broad implications for neuroscientific investigations of decision making, as methodologies that average the neuronal response across populations of neurons (e.g., functional magnetic resonance imaging and event-related potentials) may not be sensitive enough to detect these value signals. Summing together neurons with equally prevalent but opposing encoding schemes would average out the information we presently report (cf. Nakamura, Roesch, & Olson, 2005).
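The averaging argument can be illustrated with a toy population in which half of the neurons increase and half decrease their firing rate linearly with value. The baselines, slopes, and population size below are hypothetical and are not fitted to the recorded data.

```python
values = [1, 2, 3, 4]  # four choice values, arbitrary units

def firing_rate(value, baseline, slope):
    """Linear monotonic value code: rate = baseline + slope * value."""
    return baseline + slope * value

# Hypothetical population as (baseline in Hz, slope in Hz per unit value):
# 50 neurons with a positive slope and 50 with an equal negative slope.
neurons = [(10.0, 2.0)] * 50 + [(20.0, -2.0)] * 50

population_mean = [
    sum(firing_rate(v, b, s) for b, s in neurons) / len(neurons)
    for v in values
]

single_neuron = [firing_rate(v, 10.0, 2.0) for v in values]
print(single_neuron)    # [12.0, 14.0, 16.0, 18.0]
print(population_mean)  # [15.0, 15.0, 15.0, 15.0]
```

Each neuron's rate varies by 6 Hz across the four values, yet the population average is flat, which is why a measure that pools activity across such neurons could miss the value signal.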

Comparison of the Encoding of Decision Variables across Frontal Areas

This is the first time that single-neuron activity has been contrasted in the ACC, the LPFC, and the OFC in the same study. We found the strongest and most complex encoding of value information in the ACC. Eighty-four percent of ACC neurons encoded decision value for at least one decision variable and the encoding of choice was consistently the strongest in this area. In addition, significantly more ACC neurons encoded two or more decision variables compared to the OFC and the LPFC. Finally, we showed that the encoding of multiple decision variables was confined to the most anterior extent of the ACC. As we moved posteriorly, there was a marked drop off in such encoding, even though there were still many neurons that would encode value for a single decision variable. It is noteworthy that ACC connections with PFC areas 9 and 46, the amygdala, and the OFC tend to be strongest in more anterior regions of the ACC and diminish in more posterior regions of the ACC (Ongur & Price, 2000; Carmichael & Price, 1996; Bates & Goldman-Rakic, 1993; Van Hoesen, Morecraft, & Vogt, 1993; Amaral & Price, 1984). Our results are consistent with the growing evidence emphasizing the importance of the ACC in diverse aspects of decision making (Behrens, Woolrich, Walton, & Rushworth, 2007; Sallet et al., 2007; Seo & Lee, 2007; Amiez et al., 2006; Kennerley et al., 2006; Walton et al., 2006).

The ACC has also been implicated in other cognitive processes such as conflict monitoring (Botvinick, Braver, Barch, Carter, & Cohen, 2001) and error detection (Niki & Watanabe, 1979), and autonomic processes such as arousal (Critchley et al., 2003). It is difficult to explain our data in terms of these processes. First, we only presented pairs of pictures that were adjacent in value. This design equated the difference in value for all four choices of a decision variable (Figure 1B), which controlled for such potential confounds as response conflict, selection difficulty, and value difference across the four choices within a decision variable. Moreover, if reaction times are taken as a measure of selection difficulty or monitoring of conflict, there was no consistent pattern for reaction times to increase as the value of a choice increased (Figure 2). Second, subjects chose the optimal picture on >98% of trials, suggesting that error-related activity cannot account for our data. Although activity in probability trials may reflect the detection of a potentially nonrewarded response (Brown & Braver, 2005), ACC neurons were not specialized for encoding probability choices. Finally, as discussed above, although explanations in terms of motivation or arousal might account for neurons that encode value for all three decision variables, one cannot apply such an explanation to neurons that were finely tuned for encoding value for just one or two decision variables. Moreover, although response times were significantly modulated by decision value, the partial correlation analysis indicated they made little contribution to the neuronal encoding of decision value, suggesting that motivation and motor preparation cannot account for our data. Thus, the most parsimonious explanation is that neurons in the ACC are encoding the value of the choices, albeit with varying degrees of dependence on the nature by which value is manipulated.

Many studies have shown encoding of reward information in the LPFC (Kobayashi et al., 2006; Roesch & Olson, 2003; Wallis & Miller, 2003b; Leon & Shadlen, 1999; Watanabe, 1996). Likewise, in the current study, approximately one third of the LPFC neurons encoded the value of the choice. However, a key difference between the selectivity in the LPFC and the ACC was that neurons in the LPFC encoded value for just one decision variable, and rarely encoded value information for multiple decision variables. The LPFC may be recruited in more dynamic contexts than the present task, such as when optimal performance depends on tracking the history of actions and outcomes (Seo, Barraclough, & Lee, 2007; Barraclough, Conroy, & Lee, 2004).

Although the data from the OFC were more variable between the subjects, there were some consistent trends. In terms of the strength of encoding, the OFC was intermediate between the LPFC and the ACC, and it was more likely than the LPFC to encode value across multiple decision variables. However, the encoding of multiple decision variables within the OFC was more prevalent in Subject A compared to Subject B. One obvious explanation is that there might have been a discrepancy in our recording locations between the two subjects. However, this does not appear to have been the case. In both subjects, we recorded from a wide expanse of the cortex between the lateral and medial orbital sulci, encompassing areas 13l, 13m, 11l, and 11m (Carmichael & Price, 1994). There was no evidence that neurons encoding multiple decision variables were restricted to a particular location within these areas (Figure 6C and D). An alternative explanation is that the simplicity of our task allowed our subjects to solve the task using different learning systems. Intuitively, one would predict that the subjects observe the stimuli, recall the outcome associated with those stimuli, and then determine the optimal response, which would tax stimulus–outcome associations. However, it is also possible that through repeated experience with the task, subjects would learn that given a specific stimulus configuration (pair of pictures), a specific response was optimal, which would tax stimulus–response associations, rather than stimulus–outcome associations. Lesion evidence suggests that the OFC is more important for the encoding of stimulus–outcome associations than response–outcome associations (Ostlund & Balleine, 2007), thus a difference in learning strategy could conceivably produce a differential involvement of the OFC in our task.

An important avenue of future research is to specify precisely the contributions of the ACC and the OFC to choice behavior. One possibility is that the OFC is primarily involved in determining the specific value of different reinforcers, whereas the ACC may be vital in determining the value of different actions based on the history of actions and outcomes. Several pieces of evidence point to this distinction. The ACC strongly connects with cingulate motor areas and limbic structures (Carmichael & Price, 1995; Dum & Strick, 1993), placing it in a good anatomical position to use reward information to guide action selection. The OFC differs from the ACC in that it only weakly connects with motor areas, but heavily connects with all sensory areas, including visual, olfactory, and gustatory cortices (Kondo, Saleem, & Price, 2005; Cavada, Company, Tejedor, Cruz-Rizzolo, & Reinoso-Suarez, 2000; Carmichael & Price, 1995; Rolls, Yaxley, & Sienkiewicz, 1990). Studies that manipulate gustatory rewards have consistently found encoding of these rewards within the OFC (Padoa-Schioppa & Assad, 2006; Tremblay & Schultz, 1999; Watanabe, 1996; Rolls et al., 1990), but not necessarily in ACC (Rolls, Inoue, & Browning, 2003). Moreover, OFC activity is correlated with the preference ranking of different rewards (Arana et al., 2003; Tremblay & Schultz, 1999; Watanabe, 1996) and the combination of reward preference and magnitude (Padoa-Schioppa & Assad, 2006, 2008). Damage to the OFC impairs the ability to establish relative and consistent preferences when offered novel foods (Baylis & Gaffan, 1991), and humans with OFC damage show inconsistent preferences (Fellows & Farah, 2007). The use of only a single reward type (apple juice) in the current study may therefore have limited the recruitment of the OFC (relative to the ACC) as reward preference was not a variable manipulated.

Encoding of Value Scales

Although it would be possible to evaluate and compare different choice outcomes on a case-by-case basis, there are several computational advantages in assigning them to a linear value scale that generalizes across multiple decision variables (Montague & Berns, 2002). First, as the number of potential outcomes increases linearly, the number of comparisons between them increases at a combinatorial rate. For example, if you were choosing between five items on a menu, to compare any two items directly would require 10 comparisons. However, the number of comparisons increases to 45 if you have to compare 10 items directly. A value scale provides an efficient linear solution to this problem: The choice values can be positioned along the same scale and the most valuable choice readily determined. Second, it enables the subject to deal efficiently with novelty. By assigning a value to a newly experienced choice outcome, the individual knows the value of that outcome relative to all previously experienced outcomes. Indeed, our subjects coped with novel choices efficiently as indicated by transitive inference tests. Finally, a value scale may aid behavioral flexibility by facilitating the comparison of disparate behavioral outcomes. This is analogous to the reason that societies use an abstract value measure (fiat currency) to enable the comparison of disparate goods and services. For example, although they are two very physically different things, a consumer can readily determine the relative value of a car and a vacation because each can be assigned an abstract monetary value. Analogously, a multiplexed neuronal value signal could enable the brain to determine the relative value of two different actions, such as grooming a conspecific or eating a banana.
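The menu arithmetic follows from the number of unordered pairs among n items, n(n − 1)/2, whereas finding the best option on a common value scale needs only one pass through the options. The sketch below illustrates both; the menu items, their values, and the function names are hypothetical.

```python
from math import comb

def pairwise_comparisons(n_items):
    """Number of direct two-item comparisons among n_items options: n(n - 1)/2."""
    return comb(n_items, 2)

def best_on_value_scale(option_values):
    """Pick the most valuable option in a single pass over a common value scale."""
    return max(option_values, key=option_values.get)

print(pairwise_comparisons(5), pairwise_comparisons(10))  # 10 45

# Hypothetical menu with each item already placed on one abstract value scale.
menu = {"soup": 2.0, "salad": 1.5, "pasta": 3.5, "steak": 4.0, "cake": 3.0}
print(best_on_value_scale(menu))  # steak
```

Adding a sixth item to the menu would add five new pairwise comparisons but only one more position on the value scale.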

Conclusion

Our results are the first to report how individual ACC, LPFC, and OFC neurons encode information about decision value. The value signals reported here are abstract in the sense that they are encoded by many neurons irrespective of the sensory modality or physical manner in which the value is manipulated. In addition, the value signal is temporally separate from motor preparation processes, which suggests that choice value is computed before the appropriate physical action is selected. Finally, the encoding of value across multiple decision variables appears to be much stronger in the ACC than in the OFC or the LPFC. The robust anatomical connections of the ACC with frontal, limbic, and motor areas place the ACC in an ideal anatomical position to determine the value of choice alternatives based on multiple decision variables such as motivational state, action and reward history, effort, risk, and expected payoff. Ultimately, understanding the relationship between neuronal activity and decision making must begin by understanding the neuronal mechanisms that allow the individual components of a decision to be computed. The encoding of probability, payoff, and effort value by individual ACC neurons may represent an abstract neuronal currency that is a necessary component for optimal choice.

Acknowledgments

The project was funded by NIDA grant R01DA19028, NINDS grant P01NS040813 and The Hellman Family Faculty Fund to J. D. W. and NIMH training grant F32MH081521 to S. W. K. We thank David Freedman, Richard Ivry, Matthew Rushworth, and Mark Walton for valuable comments on this manuscript. S. W. K. and J. D. W. contributed to all aspects of the project. A. F. D. contributed to data analysis. A. H. L. contributed to data collection.

Footnotes

The authors have no competing interests.

REFERENCES

- Amaral DG, Price JL. Amygdalo-cortical projections in the monkey (Macaca fascicularis) Journal of Comparative Neurology. 1984;230:465–496. doi: 10.1002/cne.902300402. [DOI] [PubMed] [Google Scholar]

- Amiez C, Joseph JP, Procyk E. Reward encoding in the monkey anterior cingulate cortex. Cerebral Cortex. 2006;16:1040–1055. doi: 10.1093/cercor/bhj046. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arana FS, Parkinson JA, Hinton E, Holland AJ, Owen AM, Roberts AC. Dissociable contributions of the human amygdala and orbitofrontal cortex to incentive motivation and goal selection. Journal of Neuroscience. 2003;23:9632–9638. doi: 10.1523/JNEUROSCI.23-29-09632.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nature Neuroscience. 2004;7:404–410. doi: 10.1038/nn1209. [DOI] [PubMed] [Google Scholar]

- Bates JF, Goldman-Rakic PS. Prefrontal connections of medial motor areas in the rhesus monkey. Journal of Comparative Neurology. 1993;336:211–228. doi: 10.1002/cne.903360205. [DOI] [PubMed] [Google Scholar]

- Baxter MG, Parker A, Lindner CC, Izquierdo AD, Murray EA. Control of response selection by reinforcer value requires interaction of amygdala and orbital prefrontal cortex. Journal of Neuroscience. 2000;20:4311–4319. doi: 10.1523/JNEUROSCI.20-11-04311.2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baylis LL, Gaffan D. Amygdalectomy and ventromedial prefrontal ablation produce similar deficits in food choice and in simple object discrimination learning for an unseen reward. Experimental Brain Research. 1991;86:617–622. doi: 10.1007/BF00230535. [DOI] [PubMed] [Google Scholar]

- Bechara A, Damasio AR, Damasio H, Anderson SW. Insensitivity to future consequences following damage to human prefrontal cortex. Cognition. 1994;50:7–15. doi: 10.1016/0010-0277(94)90018-3. [DOI] [PubMed] [Google Scholar]

- Behrens TE, Woolrich MW, Walton ME, Rushworth MF. Learning the value of information in an uncertain world. Nature Neuroscience. 2007;10:1214–1221. doi: 10.1038/nn1954. [DOI] [PubMed] [Google Scholar]

- Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD. Conflict monitoring and cognitive control. Psychological Review. 2001;108:624–652. doi: 10.1037/0033-295x.108.3.624. [DOI] [PubMed] [Google Scholar]

- Brown JW, Braver TS. Learned predictions of error likelihood in the anterior cingulate cortex. Science. 2005;307:1118–1121. doi: 10.1126/science.1105783. [DOI] [PubMed] [Google Scholar]

- Carmichael ST, Price JL. Architectonic subdivision of the orbital and medial prefrontal cortex in the macaque monkey. Journal of Comparative Neurology. 1994;346:366–402. doi: 10.1002/cne.903460305. [DOI] [PubMed] [Google Scholar]

- Carmichael ST, Price JL. Sensory and premotor connections of the orbital and medial prefrontal cortex of macaque monkeys. Journal of Comparative Neurology. 1995;363:642–664. doi: 10.1002/cne.903630409. [DOI] [PubMed] [Google Scholar]

- Carmichael ST, Price JL. Connectional networks within the orbital and medial prefrontal cortex of macaque monkeys. Journal of Comparative Neurology. 1996;371:179–207. doi: 10.1002/(SICI)1096-9861(19960722)371:2<179::AID-CNE1>3.0.CO;2-#. [DOI] [PubMed] [Google Scholar]

- Cavada C, Company T, Tejedor J, Cruz-Rizzolo RJ, Reinoso-Suarez F. The anatomical connections of the macaque monkey orbitofrontal cortex. A review. Cerebral Cortex. 2000;10:220–242. doi: 10.1093/cercor/10.3.220. [DOI] [PubMed] [Google Scholar]

- Critchley HD, Mathias CJ, Josephs O, O’Doherty J, Zanini S, Dewar BK, et al. Human cingulate cortex and autonomic control: Converging neuroimaging and clinical evidence. Brain. 2003;126:2139–2152. doi: 10.1093/brain/awg216. [DOI] [PubMed] [Google Scholar]

- Dorris MC, Glimcher PW. Activity in posterior parietal cortex is correlated with the relative subjective desirability of action. Neuron. 2004;44:365–378. doi: 10.1016/j.neuron.2004.09.009. [DOI] [PubMed] [Google Scholar]

- Dum RP, Strick PL. Cingulate motor areas. In: Gabriel M, editor. Neurobiology of cingulate cortex and limbic thalamus: A comprehensive handbook. Cambridge: Birkhäuser; 1993. pp. 415–441. [Google Scholar]

- Duncan J, Emslie H, Williams P, Johnson R, Freer C. Intelligence and the frontal lobe: The organization of goal-directed behavior. Cognitive Psychology. 1996;30:257–303. doi: 10.1006/cogp.1996.0008. [DOI] [PubMed] [Google Scholar]

- Fellows LK. Deciding how to decide: Ventromedial frontal lobe damage affects information acquisition in multi-attribute decision making. Brain. 2006;129:944–952. doi: 10.1093/brain/awl017. [DOI] [PubMed] [Google Scholar]

- Fellows LK, Farah MJ. The role of ventromedial prefrontal cortex in decision making: Judgment under uncertainty or judgment per se? Cerebral Cortex. 2007;17:2669–2674. doi: 10.1093/cercor/bhl176. [DOI] [PubMed] [Google Scholar]

- Hadland KA, Rushworth MF, Gaffan D, Passingham RE. The anterior cingulate and reward-guided selection of actions. Journal of Neurophysiology. 2003;89:1161–1164. doi: 10.1152/jn.00634.2002. [DOI] [PubMed] [Google Scholar]

- Hikosaka K, Watanabe M. Delay activity of orbital and lateral prefrontal neurons of the monkey varying with different rewards. Cerebral Cortex. 2000;10:263–271. doi: 10.1093/cercor/10.3.263. [DOI] [PubMed] [Google Scholar]

- Ichihara-Takeda S, Funahashi S. Activity of primate orbito-frontal and dorsolateral prefrontal neurons: Effect of reward schedule on task-related activity. Journal of Cognitive Neuroscience. 2007;20:563–579. doi: 10.1162/jocn.2008.20047. [DOI] [PubMed] [Google Scholar]

- Ito S, Stuphorn V, Brown JW, Schall JD. Performance monitoring by the anterior cingulate cortex during saccade countermanding. Science. 2003;302:120–122. doi: 10.1126/science.1087847. [DOI] [PubMed] [Google Scholar]

- Izquierdo A, Suda RK, Murray EA. Bilateral orbital prefrontal cortex lesions in rhesus monkeys disrupt choices guided by both reward value and reward contingency. Journal of Neuroscience. 2004;24:7540–7548. doi: 10.1523/JNEUROSCI.1921-04.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kacelnik A. Normative and descriptive models of decision making: Time discounting and risk sensitivity. Ciba Foundation Symposium. 1997;208:51–67. doi: 10.1002/9780470515372.ch5. discussion 67–70. [DOI] [PubMed] [Google Scholar]

- Kahneman D, Tversky A. Prospect theory: An analysis of decision under risk. Econometrica. 1979;47:263–291. [Google Scholar]

- Kawabata H, Zeki S. Neural correlates of beauty. Journal of Neurophysiology. 2004;91:1699–1705. doi: 10.1152/jn.00696.2003. [DOI] [PubMed] [Google Scholar]

- Kennerley SW, Walton ME, Behrens TE, Buckley MJ, Rushworth MF. Optimal decision making and the anterior cingulate cortex. Nature Neuroscience. 2006;9:940–947. doi: 10.1038/nn1724. [DOI] [PubMed] [Google Scholar]

- King-Casas B, Tomlin D, Anen C, Camerer CF, Quartz SR, Montague PR. Getting to know you: Reputation and trust in a two-person economic exchange. Science. 2005;308:78–83. doi: 10.1126/science.1108062. [DOI] [PubMed] [Google Scholar]

- Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. Journal of Neuroscience. 2005;25:4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kobayashi S, Nomoto K, Watanabe M, Hikosaka O, Schultz W, Sakagami M. Influences of rewarding and aversive outcomes on activity in macaque lateral prefrontal cortex. Neuron. 2006;51:861–870. doi: 10.1016/j.neuron.2006.08.031. [DOI] [PubMed] [Google Scholar]

- Kondo H, Saleem KS, Price JL. Differential connections of the perirhinal and parahippocampal cortex with the orbital and medial prefrontal networks in macaque monkeys. Journal of Comparative Neurology. 2005;493:479–509. doi: 10.1002/cne.20796. [DOI] [PubMed] [Google Scholar]

- Lee D, Rushworth MF, Walton ME, Watanabe M, Sakagami M. Functional specialization of the primate frontal cortex during decision making. Journal of Neuroscience. 2007;27:8170–8173. doi: 10.1523/JNEUROSCI.1561-07.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leon MI, Shadlen MN. Effect of expected reward magnitude on the response of neurons in the dorsolateral prefrontal cortex of the macaque. Neuron. 1999;24:415–425. doi: 10.1016/s0896-6273(00)80854-5. [DOI] [PubMed] [Google Scholar]

- Machens CK, Romo R, Brody CD. Flexible control of mutual inhibition: A neural model of two-interval discrimination. Science. 2005;307:1121–1124. doi: 10.1126/science.1104171. [DOI] [PubMed] [Google Scholar]

- Matsumoto K, Suzuki W, Tanaka K. Neuronal correlates of goal-based motor selection in the prefrontal cortex. Science. 2003;301:229–232. doi: 10.1126/science.1084204. [DOI] [PubMed] [Google Scholar]

- Matsumoto M, Matsumoto K, Abe H, Tanaka K. Medial prefrontal cell activity signaling prediction errors of action values. Nature Neuroscience. 2007;10:647–656. doi: 10.1038/nn1890. [DOI] [PubMed] [Google Scholar]

- Maunsell JH. Neuronal representations of cognitive state: Reward or attention? Trends in Cognitive Sciences. 2004;8:261–265. doi: 10.1016/j.tics.2004.04.003. [DOI] [PubMed] [Google Scholar]

- McClure SM, Laibson DI, Loewenstein G, Cohen JD. Separate neural systems value immediate and delayed monetary rewards. Science. 2004;306:503–507. doi: 10.1126/science.1100907.

- McCoy AN, Platt ML. Risk-sensitive neurons in macaque posterior cingulate cortex. Nature Neuroscience. 2005;8:1220–1227. doi: 10.1038/nn1523.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annual Review of Neuroscience. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Montague PR, Berns GS. Neural economics and the biological substrates of valuation. Neuron. 2002;36:265–284. doi: 10.1016/s0896-6273(02)00974-1.

- Nakamura K, Roesch MR, Olson CR. Neuronal activity in macaque SEF and ACC during performance of tasks involving conflict. Journal of Neurophysiology. 2005;93:884–908. doi: 10.1152/jn.00305.2004.

- Niki H, Watanabe M. Prefrontal and cingulate unit activity during timing behavior in the monkey. Brain Research. 1979;171:213–224. doi: 10.1016/0006-8993(79)90328-7.

- O’Doherty JP, Deichmann R, Critchley HD, Dolan RJ. Neural responses during anticipation of a primary taste reward. Neuron. 2002;33:815–826. doi: 10.1016/s0896-6273(02)00603-7.

- Ongur D, Price JL. The organization of networks within the orbital and medial prefrontal cortex of rats, monkeys and humans. Cerebral Cortex. 2000;10:206–219. doi: 10.1093/cercor/10.3.206.

- Ostlund SB, Balleine BW. The contribution of orbitofrontal cortex to action selection. Annals of the New York Academy of Sciences. 2007;1121:174–192. doi: 10.1196/annals.1401.033.

- Owen AM. Cognitive planning in humans: Neuropsychological, neuroanatomical and neuropharmacological perspectives. Progress in Neurobiology. 1997;53:431–450. doi: 10.1016/s0301-0082(97)00042-7.

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- Padoa-Schioppa C, Assad JA. The representation of economic value in the orbitofrontal cortex is invariant for changes of menu. Nature Neuroscience. 2008;11:95–102. doi: 10.1038/nn2020.

- Petrides M, Pandya DN. Dorsolateral prefrontal cortex: Comparative cytoarchitectonic analysis in the human and the macaque brain and corticocortical connection patterns. European Journal of Neuroscience. 1999;11:1011–1036. doi: 10.1046/j.1460-9568.1999.00518.x.

- Platt ML, Glimcher PW. Neural correlates of decision variables in parietal cortex. Nature. 1999;400:233–238. doi: 10.1038/22268.

- Rilling J, Gutman D, Zeh T, Pagnoni G, Berns G, Kilts C. A neural basis for social cooperation. Neuron. 2002;35:395–405. doi: 10.1016/s0896-6273(02)00755-9.

- Roesch MR, Olson CR. Impact of expected reward on neuronal activity in prefrontal cortex, frontal and supplementary eye fields and premotor cortex. Journal of Neurophysiology. 2003;90:1766–1789. doi: 10.1152/jn.00019.2003.

- Roesch MR, Olson CR. Neuronal activity related to reward value and motivation in primate frontal cortex. Science. 2004;304:307–310. doi: 10.1126/science.1093223.

- Roesch MR, Olson CR. Neuronal activity in primate orbitofrontal cortex reflects the value of time. Journal of Neurophysiology. 2005;94:2457–2471. doi: 10.1152/jn.00373.2005.

- Roesch MR, Olson CR. Neuronal activity related to anticipated reward in frontal cortex: Does it represent value or reflect motivation? Annals of the New York Academy of Sciences. 2007;1121:431–446. doi: 10.1196/annals.1401.004.

- Roesch MR, Taylor AR, Schoenbaum G. Encoding of time-discounted rewards in orbitofrontal cortex is independent of value representation. Neuron. 2006;51:509–520. doi: 10.1016/j.neuron.2006.06.027.

- Rolls ET, Inoue K, Browning A. Activity of primate subgenual cingulate cortex neurons is related to sleep. Journal of Neurophysiology. 2003;90:134–142. doi: 10.1152/jn.00770.2002.

- Rolls ET, Yaxley S, Sienkiewicz ZJ. Gustatory responses of single neurons in the caudolateral orbitofrontal cortex of the macaque monkey. Journal of Neurophysiology. 1990;64:1055–1066. doi: 10.1152/jn.1990.64.4.1055.

- Rudebeck PH, Buckley MJ, Walton ME, Rushworth MF. A role for the macaque anterior cingulate gyrus in social valuation. Science. 2006;313:1310–1312. doi: 10.1126/science.1128197.

- Rudebeck PH, Walton ME, Smyth AN, Bannerman DM, Rushworth MF. Separate neural pathways process different decision costs. Nature Neuroscience. 2006;9:1161–1168. doi: 10.1038/nn1756.

- Rushworth MF, Behrens TE. Choice, uncertainty and value in prefrontal and cingulate cortex. Nature Neuroscience. 2008;11:389–397. doi: 10.1038/nn2066.

- Sallet J, Quilodran R, Rothe M, Vezoli J, Joseph JP, Procyk E. Expectations, gains, and losses in the anterior cingulate cortex. Cognitive, Affective & Behavioral Neuroscience. 2007;7:327–336. doi: 10.3758/cabn.7.4.327.

- Schoenbaum G, Chiba AA, Gallagher M. Orbitofrontal cortex and basolateral amygdala encode expected outcomes during learning. Nature Neuroscience. 1998;1:155–159. doi: 10.1038/407.

- Schoenbaum G, Setlow B, Saddoris MP, Gallagher M. Encoding predicted outcome and acquired value in orbitofrontal cortex during cue sampling depends upon input from basolateral amygdala. Neuron. 2003;39:855–867. doi: 10.1016/s0896-6273(03)00474-4.

- Seo H, Barraclough DJ, Lee D. Dynamic signals related to choices and outcomes in the dorsolateral prefrontal cortex. Cerebral Cortex. 2007;17:i110–i117. doi: 10.1093/cercor/bhm064.

- Seo H, Lee D. Temporal filtering of reward signals in the dorsal anterior cingulate cortex during a mixed-strategy game. Journal of Neuroscience. 2007;27:8366–8377. doi: 10.1523/JNEUROSCI.2369-07.2007.

- Shallice T, Burgess PW. Deficits in strategy application following frontal lobe damage in man. Brain. 1991;114:727–741. doi: 10.1093/brain/114.2.727.

- Shima K, Tanji J. Role for cingulate motor area cells in voluntary movement selection based on reward. Science. 1998;282:1335–1338. doi: 10.1126/science.282.5392.1335.

- Shuler MG, Bear MF. Reward timing in the primary visual cortex. Science. 2006;311:1606–1609. doi: 10.1126/science.1123513.

- Stephens DW, Krebs JR. Foraging theory. Princeton: Princeton University Press; 1986.

- Sugrue LP, Corrado GS, Newsome WT. Matching behavior and the representation of value in the parietal cortex. Science. 2004;304:1782–1787. doi: 10.1126/science.1094765.

- Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- Van Hoesen GW, Morecraft RJ, Vogt BA. Connections of the monkey cingulate cortex. In: Vogt BA, Gabriel M, editors. Neurobiology of cingulate cortex and limbic thalamus: A comprehensive handbook. Cambridge: Birkhäuser; 1993. pp. 249–284.

- Wallis JD. Orbitofrontal cortex and its contribution to decision-making. Annual Review of Neuroscience. 2007;30:31–56. doi: 10.1146/annurev.neuro.30.051606.094334.

- Wallis JD, Miller EK. From rule to response: Neuronal processes in the premotor and prefrontal cortex. Journal of Neurophysiology. 2003a;90:1790–1806. doi: 10.1152/jn.00086.2003.

- Wallis JD, Miller EK. Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. European Journal of Neuroscience. 2003b;18:2069–2081. doi: 10.1046/j.1460-9568.2003.02922.x.

- Walton ME, Kennerley SW, Bannerman DM, Phillips PE, Rushworth MF. Weighing up the benefits of work: Behavioral and neural analyses of effort-related decision making. Neural Networks. 2006;19:1302–1314. doi: 10.1016/j.neunet.2006.03.005.

- Watanabe M. Reward expectancy in primate prefrontal neurons. Nature. 1996;382:629–632. doi: 10.1038/382629a0.

- Williams ZM, Bush G, Rauch SL, Cosgrove GR, Eskandar EN. Human anterior cingulate neurons and the integration of monetary reward with motor responses. Nature Neuroscience. 2004;7:1370–1375. doi: 10.1038/nn1354.