Abstract

A fundamental question in human memory is how the brain represents sensory-specific information during the process of retrieval. One hypothesis is that regions of sensory cortex are reactivated during retrieval of sensory-specific information (1). Here we report findings from a study in which subjects learned a set of picture and sound items and were then given a recall test during which they vividly remembered the items while imaged by using event-related functional MRI. Regions of visual and auditory cortex were activated differentially during retrieval of pictures and sounds, respectively. Furthermore, the regions activated during the recall test comprised a subset of those activated during a separate perception task in which subjects actually viewed pictures and heard sounds. Regions activated during the recall test were found to be represented more in late than in early visual and auditory cortex. Therefore, results indicate that retrieval of vivid visual and auditory information can be associated with a reactivation of some of the same sensory regions that were activated during perception of those items.

We are readily able to remember past experiences that include vivid sensory-specific representations, such as the appearance of a recently encountered face or the sound of a new song. A fundamental question about memory is how the brain codes these rich sensory aspects of a memory during the process of retrieval. One longstanding hypothesis is that brain regions active during sensory-induced perceptions are reactivated during retrieval of such information (1–3). Evidence in support of a reactivation hypothesis comes from studies of the visual system. Penfield and Perot stimulated visual cortex in awake humans and were able to induce specific visual memories, such as the image of a familiar street (4). Single-unit studies in nonhuman primates have shown learning-induced modulation of inferior temporal neurons to visual associations such that the neurons showed response selectivity for learned visual items (5, 6). Additionally, studies of mental imagery suggest that activity in visual cortex increases during the active reconstruction of visual images (7, 8). The process by which auditory information is coded in memory is less clear. As with visual cortex, however, Penfield and Perot also discovered that they could elicit specific auditory memories from patients, such as the sound of a mother's voice, when they stimulated regions of the superior temporal lobes (4).

The goal of the present study was to identify regions of the brain associated with the retrieval of vivid visual- and auditory-specific information and to determine the extent to which these regions are a subset of regions primarily associated with modality-specific perception (encoding) of the same information. In other words, the goal is to determine to what extent sensory regions are reactivated during retrieval of sensory-specific information. To encourage vivid retrieval, a paradigm was developed in which subjects studied extensively a set of picture and sound items, each of which was paired with a descriptive label. For example, the label DOG was paired with a picture of a dog for half of the subjects and with the sound of a dog barking for the other half. After study, event-related functional MRI (9, 10) was used while subjects performed tasks requiring either the perception of presented sounds and pictures or the recall of studied sounds and pictures from memory.

Three separate tasks were examined. During the Recall task, no pictures or sounds were presented. Subjects saw only the labels of previously studied items and actively retrieved the pictures and sounds from long-term memory, indicating whether the studied item had been a sound or a picture. During the Perception task, subjects were presented with the studied (Old) items and indicated whether they were sounds or pictures. A third task was identical to the Perception task, except the items had not been studied (New). This final task was examined to determine the extent to which repeated exposure to items can mimic effects similar to those observed during retrieval of sensory-specific information.

Materials and Methods

Twenty-four subjects were recruited from the Washington University community. All subjects had normal or corrected-to-normal vision, were native English speakers, reported no history of significant neurological problems, and showed a strong right-handed preference as measured by the Edinburgh Handedness Inventory (11). Subjects were paid for participation and provided informed consent in accordance with guidelines set by the Washington University Human Studies Committee. Of the 24 subjects, 3 were removed from subsequent analysis because of excessive movement (>1 mm/run). Two additional subjects were removed for failing to comply with the behavioral procedures. A final subject was removed for technical reasons associated with structural data misalignment. Data analysis pertains to the remaining 18 subjects (7 male, 11 female; mean age 24.6 years).

Imaging Procedures.

Imaging was conducted on a Siemens 1.5-Tesla Vision System (Erlangen, Germany). Headphones were used to dampen scanner noise and to present auditory stimuli. Visual stimuli were generated on an Apple Power Macintosh G3 computer by using PsyScope (12) and were projected onto a screen positioned at the head of the magnet bore by using an AmPro model LCD-150 projector (AmPro, Melbourne, FL). Subjects viewed the stimuli by way of a mirror mounted on the head coil. Subjects responded by using a fiber-optic light-sensitive keypress interfaced with a PsyScope Button Box (Carnegie Mellon University, Pittsburgh, PA). Both a pillow placed within the head coil and a thermoplastic face mask were used to minimize head movement.

Structural images were acquired first by using a sagittal MP-RAGE T1-weighted sequence (TR = 9.7 ms, TE = 4 ms, flip angle = 10°, TI = 20 ms, TD = 500 ms). A series of functional images were then collected with an asymmetric spin-echo echo-planar imaging sequence sensitive to BOLD contrast (T2*) (TR = 2.5 s, TE = 37 ms, 3.75 × 3.75-mm in-plane resolution). During each functional run, 128 sets of 16 contiguous 8-mm-thick axial images were acquired, allowing complete brain coverage at a high signal-to-noise ratio [T. E. Conturo, R. C. McKinstry, E. Akbudak, A. Z. Snyder, T. Z. Yang & M. E. Raichle (1996) Soc. Neurosci. Abstr. 22, 7]. The first four images in each run were discarded to allow for stabilization of longitudinal magnetization. Each run duration was approximately 5 min, with a 3-min interval between runs. The imaging session lasted approximately 2 h.

Behavioral Procedures.

Subjects studied a set of 20 pictures and 20 sounds over a 2-day period. Each picture and sound was paired with a descriptive label and each study session consisted of 10 blocks of the 40 stimuli per day. Item modality was counterbalanced across subjects such that for half of the subjects a picture was associated with a particular label while for the other half a sound was paired with that label. Each picture study trial consisted of the presentation of a label for 750 ms, followed by 500 ms fixation, then by the visual item for 3 s. Each auditory study trial consisted of 750-ms label followed by 4.25-s fixation, during which the auditory stimulus was presented. Picture stimuli ranged in size from 3° to 11° visual angle and included both grayscale and color pictures. Sound stimuli were constructed such that their durations ranged from 1.0 to 2.5 s with an even distribution across that range. Instructions during study were to memorize each picture and sound thoroughly, and subjects were informed that a test would be given on the items during the scan. Subjects were unaware of the exact nature of the test until immediately before it was actually administered. All text was in 24-pt bold Geneva font (black-on-white on-screen presentation), and all visually presented items were centered on the screen. Total length of study and test trials was 5 s.

On the third day, all subjects were scanned by using functional MRI and were given both a Perception and a Recall test. During the Perception test, subjects were presented with each studied label/item pair, viewed or listened to the item, and made a right-hand button press indicating whether it was a picture or a sound. During the Recall task, subjects saw only the labels of previously studied items and were instructed to retrieve the items from long-term memory and, after fully retrieving the information, to make a button response indicating whether their memory was of a picture or sound. The purpose of the perception task was to define sensory-specific regions that might be reactivated during the Recall task. Reaction times for button presses were recorded on a Macintosh G3 computer. There were a total of four functional runs per subject. The Perception test was given during the first two runs, the Recall test during the next two. Each run comprised 60 randomly intermixed trials, including 20 visual, 20 auditory, and 20 baseline fixation trials, yielding a total of 40 visual, 40 auditory, and 40 fixation trials per test condition for each subject.
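The run structure described above can be sketched in a few lines. This is only an illustration of the trial counts, not the PsyScope script used in the study: the function names `build_run` and `build_condition` and the fixed random seed are assumptions, and any constraints the authors placed on the randomization are not given in the text.

```python
import random
from collections import Counter

TRIALS_PER_TYPE = 20  # trials of each type per run, from the text
TRIAL_TYPES = ("visual", "auditory", "fixation")


def build_run(rng):
    """Return one run: 60 trials, 20 of each type, randomly intermixed."""
    trials = [t for t in TRIAL_TYPES for _ in range(TRIALS_PER_TYPE)]
    rng.shuffle(trials)
    return trials


def build_condition(rng, n_runs=2):
    """Return the runs for one test condition (Perception or Recall)."""
    return [build_run(rng) for _ in range(n_runs)]


rng = random.Random(0)  # arbitrary seed, used only to make the sketch repeatable
perception_runs = build_condition(rng)

# Tally trial types across the two runs of the condition.
totals = Counter(t for run in perception_runs for t in run)
```

Tallying across the two runs of a condition gives 40 trials of each type, matching the totals reported for each subject.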

Six of the subjects received two additional runs each of the Perception and Recall tests (for a total of eight runs). The label/item pairs for the additional two runs of Perception were entirely new. This condition was added to investigate the effect of repeated exposure to studied items on perception of those items.

Event-Related Functional MRI Data Analysis.

Data from the functional runs were preprocessed to correct for odd/even slice intensity differences and motion artifact by using a rigid-body rotation (13). Sinc interpolation was used to account for between-slice timing differences caused by differences in acquisition order, and linear slope was removed on a voxel-by-voxel basis (14). The data were normalized to a mean magnitude value of 1,000. To permit across-subject analysis, anatomic and functional data were transformed into stereotaxic atlas space based on Talairach and Tournoux (15).
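Two of the preprocessing steps named above, intensity normalization and voxel-wise removal of linear slope, can be illustrated as follows. This is a minimal sketch under stated assumptions, not the authors' pipeline: it assumes the mean is taken over all voxels and frames of a run, it fits the slope by ordinary least squares, and the function names and toy data are hypothetical.

```python
def normalize_run(voxel_series, target=1000.0):
    """Scale a run so the mean over all voxels and frames equals `target`.

    `voxel_series` is a list of per-voxel time series (lists of floats).
    Taking the mean over the whole run is an assumption of this sketch.
    """
    n_values = sum(len(ts) for ts in voxel_series)
    grand_mean = sum(sum(ts) for ts in voxel_series) / n_values
    scale = target / grand_mean
    return [[v * scale for v in ts] for ts in voxel_series]


def remove_linear_slope(ts):
    """Subtract the least-squares linear trend while keeping the series mean."""
    n = len(ts)
    t_mean = (n - 1) / 2.0
    y_mean = sum(ts) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(ts))
    slope = sxy / sxx
    return [y - slope * (t - t_mean) for t, y in enumerate(ts)]


# Hypothetical two-voxel run with pure linear drift in each voxel.
run = [[500.0 + 2.0 * t for t in range(5)],
       [1500.0 - 1.0 * t for t in range(5)]]
scaled = normalize_run(run)
detrended = [remove_linear_slope(ts) for ts in scaled]
```

Because the toy series contain nothing but drift, each detrended series is flat at its own mean.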

Functional data were averaged selectively (9, 16) across runs on the basis of testing condition (Perception, Recall) and trial type (picture, sound, fixation). Data were then averaged across subjects and statistical activation maps constructed on the basis of comparisons between trial types by using a t-statistic (9). A set of estimated hemodynamic response curves was used as a comparison with the obtained hemodynamic responses. The estimated response curves consisted of a set of time-shifted γ functions (16, 17). Statistical activation maps were generated by comparison of Sound and Picture trials (Sound-Picture). Peak coordinates were generated with the statistical criteria of 19 or more contiguous voxels (152 mm³ volume) above P < 0.001 (see ref. 16). For significant peaks occurring within 12 mm of one another, the most significant peak was retained.
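The peak-retention rule can be sketched as a greedy pass over peaks sorted by significance. The greedy ordering is an assumption, since the text does not say how chains of nearby peaks were resolved, and the function name and example peaks are hypothetical. The 2-mm isotropic voxel size is likewise an inference from the reported cluster volume (152 mm³ / 19 voxels = 8 mm³ per voxel), not a value stated in the text.

```python
import math

VOXEL_VOLUME_MM3 = 2 * 2 * 2   # assumption: 2-mm isotropic atlas voxels
MIN_CLUSTER_VOXELS = 19        # from the text
MIN_PEAK_SEPARATION_MM = 12.0  # from the text


def prune_peaks(peaks, min_sep=MIN_PEAK_SEPARATION_MM):
    """Keep the most significant peak among peaks closer than `min_sep`.

    `peaks` is a list of ((x, y, z), significance) tuples, coordinates in mm.
    Peaks are visited from most to least significant; a peak is kept only if
    it lies at least `min_sep` from every peak already kept.
    """
    kept = []
    for coord, sig in sorted(peaks, key=lambda p: p[1], reverse=True):
        if all(math.dist(coord, k[0]) >= min_sep for k in kept):
            kept.append((coord, sig))
    return kept


# Hypothetical peaks: the first two are 6 mm apart, so only the stronger survives.
example_peaks = [((0, 0, 0), 10.0), ((6, 0, 0), 12.0), ((20, 0, 0), 8.0)]
kept = prune_peaks(example_peaks)
min_cluster_volume = MIN_CLUSTER_VOXELS * VOXEL_VOLUME_MM3
```

Under this rule the two right precuneus peaks in Table 1, at (21, −63, 48) and (23, −63, 36), are about 12.2 mm apart, so both are retained.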

To obtain time courses for regions of interest, regional analyses were performed on the averaged Sound-Picture data by using the identified peak locations as seed points. Specifically, all voxels within 12 mm of a peak location that were more significant than P < 0.001 were included in the region. Mean percent signal change was then computed for each event type (visual, auditory, fixation). For all regional analyses, baseline fixation was subtracted from Sound and Picture trials to obtain a mean regional signal change that was not contaminated by hemodynamic response overlap (9, 10, 16). Further analysis of time-course data was completed by using paired t tests (random-effects model) to compare time-course amplitude estimates (18) for picture and sound trials in both fusiform and superior temporal regions. In addition, a one-sample t test was used to determine whether the signal in each region for each condition differed significantly from zero.
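The fixation subtraction and the paired comparison across subjects can be illustrated as below. This is a sketch under stated assumptions rather than the analysis code used in the study: the baseline of 1,000 follows from the normalization step, the amplitude estimation method of ref. 18 is not reproduced, and all function names and numbers are hypothetical.

```python
import math
from statistics import mean, stdev


def percent_signal_change(event_tc, fixation_tc, baseline=1000.0):
    """Subtract the fixation time course and express the result in percent.

    `baseline` assumes data normalized to a mean magnitude of 1,000.
    """
    return [100.0 * (e - f) / baseline for e, f in zip(event_tc, fixation_tc)]


def paired_t(a, b):
    """Return the paired t statistic and degrees of freedom.

    `a` and `b` hold one amplitude estimate per subject for two conditions.
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical regional time courses (two frames) for one subject.
psc = percent_signal_change([1010.0, 1005.0], [1000.0, 1000.0])

# Hypothetical per-subject amplitude estimates for four subjects.
picture_amp = [0.5, 0.7, 0.6, 0.9]
sound_amp = [0.2, 0.3, 0.4, 0.5]
t_stat, df = paired_t(picture_amp, sound_amp)
```

With the 18 subjects analyzed here, a paired test of this form has 17 degrees of freedom, which matches the t(17) values reported in the Results.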

Results

Behavioral performance indicated that subjects were readily able to identify whether the studied items were pictures or sounds (98.8% accuracy). Moreover, two sources of behavioral data suggest that subjects were likely to be retrieving the items in a manner preserving aspects of the original perception of the item. First, on debriefing, all subjects reported a clear sense of remembering vivid details of the studied items. Second, the reaction time data from the scanned Recall test showed a significant correlation between the response duration and the actual length of the original studied sounds. Subjects took longer to respond when remembering studied sounds that were longer in duration (r = 0.32, P < 0.05). In an independent behavioral study of 24 subjects (using similar study procedures), this behavioral observation was reinforced by finding that subjects, when explicitly asked, could indicate readily the duration of studied sounds (r = 0.68, P < 0.0001) and width of studied pictures (r = 0.68, P < 0.0001). Note that during the scanned Recall test, subjects were not asked explicitly to retrieve specific item features, such as duration, as was done in the behavioral study.
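The relationship between response time and sound duration is a Pearson correlation, which can be computed as sketched here. The data below are invented solely to show the calculation and do not reproduce the reported values; the function name is hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical sound durations (s) and Recall response times (s).
durations = [1.0, 1.5, 2.0, 2.5]
response_times = [1.9, 2.4, 2.2, 2.8]
r = pearson_r(durations, response_times)
```

A positive `r` here corresponds to longer responses for longer studied sounds, the direction of the effect described above.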

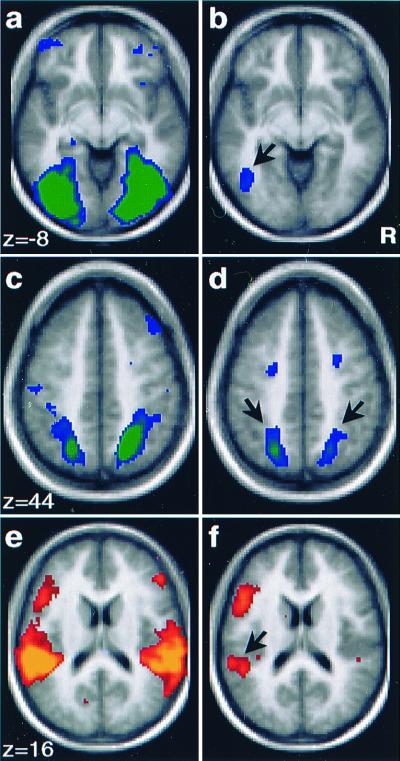

Brain activity maps, comparing picture to sound trials, were constructed separately for the Perception and Recall tasks (Fig. 1). Comparison of picture and sound trials during Perception showed activation in regions of sensory cortex, including many primary and nonprimary visual and auditory cortical regions (Fig. 1 a, c, and e). Activations in visual cortex extended from middle occipital gyrus ventrally along fusiform gyrus [near Brodmann's area (BA) 18/19; Fig. 1a] and dorsally to precuneus and parietal cortex (BA 19/7; Fig. 1c), whereas activations in auditory cortex extended from Heschl's gyrus to middle temporal gyrus (BA 41/21; Fig. 1e). Also activated in this comparison were bilateral frontal regions associated differentially with either picture or sound trials. Specifically, perception of pictures was associated with greater activation of middle frontal gyrus on the left (BA 47) and on the right (BA 10). Perception of sounds was associated with greater activation of regions near posterior inferior frontal gyrus on the left (BA 44/45) and anterior inferior frontal gyrus on the right (BA 45/46; see Fig. 1).

Figure 1.

Activation maps (Left) show brain areas differentially active during perception of pictures (a and c; activation in green) and sounds (e; activation in yellow). Regions of activity span multiple primary and secondary sensory areas. Maps (Right) show areas differentially active during Recall of pictures (b and d) and sounds (f). Areas differentially active during the Recall test represent a subset of those activated during Perception and include sensory-specific areas. The black arrow (b) indicates activity in left fusiform gyrus during Recall of pictures [atlas coordinates (15): x, y, z = −45, −69, −6]. Bilateral regions in superior occipital and parietal cortex were also activated during Recall of pictures (L: −23, −65, 40; R: 21, −63, 48). Areas active during Recall of sounds were near left superior temporal gyrus (f; region indicated by black arrow; −57, −39, 14) and localized posterior and lateral to primary auditory cortex.

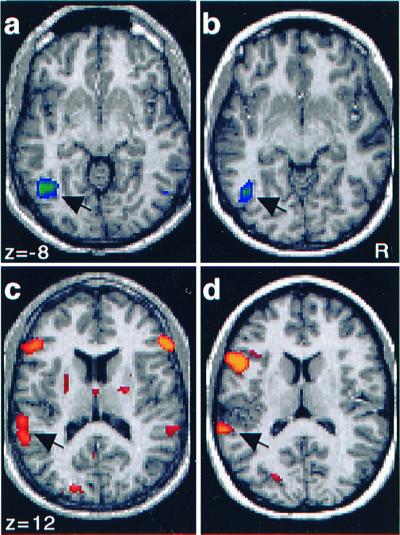

Of central importance, a subset of the regions activated during the Perception of pictures and sounds were activated significantly during Recall of pictures and sounds (Fig. 1 b, d, and f), revealing activity within distinct areas of the brain associated with sensory-specific memories. Picture Recall (compared with sound Recall) was associated with an activation near left fusiform gyrus (BA 19; Fig. 1b) and also bilateral dorsal extrastriate visual regions extending to precuneus (BA 19/7; Fig. 1d). Direct plotting of the activated visual locations in reference to estimated areal boundaries (19) showed that the visual areas were most likely beyond areas V1, V2, V3a, and V4. For reference, activation maps were constructed for two of the subjects (Fig. 2 a and b) showing significant regions of activation in left fusiform gyrus. Significant peaks (P < 0.001) obtained during picture Recall are listed in Table 1.

Figure 2.

Activation maps for individual subjects obtained by comparison of the hemodynamic response for picture and sound items during the Recall test. Areas more active during the retrieval of picture (a and b) and sound (c and d) information are shown. Recall of pictures was associated with activation in left fusiform gyrus (a and b: arrows). Recall of sounds was associated with activation of bilateral auditory cortex (c) in one individual and left auditory cortex (d) in another. Note that the activations in auditory cortex (c and d: arrows) are lateral and posterior to Heschl's gyrus.

Table 1.

Significant peak locations in Picture Recall > Sound Recall

| X | Y | Z | Significance −log(p) | BA | Anatomic label |
|---|---|---|---|---|---|
| −23 | −65 | 40 | 31.8 | 7 | L Precuneus |
| 21 | −63 | 48 | 18.8 | 7 | R Precuneus |
| 23 | −63 | 36 | 18.3 | 7 | R Precuneus |
| −17 | −73 | 48 | 14.8 | 7 | L Precuneus |
| 31 | −69 | 24 | 13.4 | 19 | R Middle Occipital |
| 29 | −1 | 58 | 13.1 | 6 | R Middle Frontal |
| −17 | −61 | 52 | 12.8 | 7 | L Parietal |
| −25 | −7 | 54 | 12.5 | 6 | L Middle Frontal |
| −45 | −69 | −6 | 12.4 | 19 | L Fusiform |
| 25 | 1 | 46 | 12.4 | 6 | R Middle Frontal |
| −39 | −79 | 18 | 11.5 | 19 | L Middle Occipital |
| −29 | −51 | 40 | 10.3 | 7 | L Parietal |
| 33 | −39 | 34 | 9.9 | 7/40 | R Parietal |

Coordinates are from the Talairach and Tournoux (15) atlas; R, Right; L, Left; BA, approximate Brodmann area, on the basis of atlas coordinates.

Recall of sounds was associated with bilateral activations near superior temporal gyrus, with left greater than right (Fig. 1f). Note that the right-sided response did not reach statistical significance by using the strict criteria described in Materials and Methods. These regions (BA 22) were located lateral and posterior to primary auditory cortex on the basis of gross anatomical landmarks (20, 21). Regional specificity relative to primary auditory cortex was determined by examination of activation and anatomic maps for the five individual subjects with the most significant effects (maps for two of the subjects are shown in Fig. 2 c and d). For each subject, distinct regions of activation were lateral and posterior to but did not include Heschl's gyrus (BA 41). Table 2 lists the significant peaks observed during Recall of sounds (compared with Recall of pictures).

Table 2.

Significant peak locations in Sound Recall > Picture Recall

| X | Y | Z | Significance −log(p) | BA | Anatomic label |
|---|---|---|---|---|---|
| −49 | 13 | 12 | 31.6 | 44/45 | L Inferior Frontal |
| −43 | 21 | 0 | 23.9 | 45 | L Inferior Frontal |
| −57 | −39 | 14 | 15.9 | 22 | L Sup. Temporal Gyrus |
| −55 | −39 | 26 | 13.5 | 40 | L Parietal |
| −51 | −5 | 46 | 12.5 | 6 | L Middle Frontal |
| 51 | 33 | 10 | 10.6 | 45/46 | R Inferior Frontal |
| −21 | −3 | 6 | 10.5 | | Putamen |
| −3 | −19 | 50 | 9.5 | 6 | L Middle Frontal |
| −9 | −7 | 66 | 9.3 | 3/1 | L Postcentral Gyrus |

Coordinates are from the Talairach and Tournoux (15) atlas; R, Right; L, Left; Sup, Superior; BA, approximate Brodmann area, on the basis of atlas coordinates.

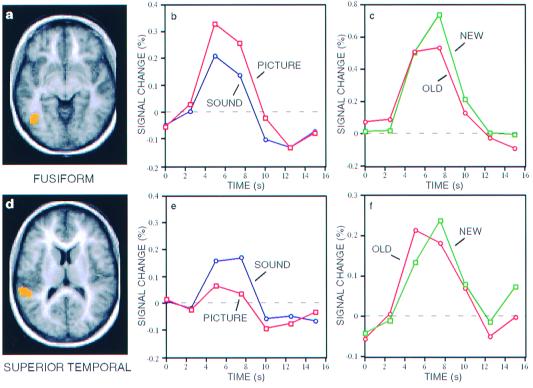

To explore further the behavior of visual and auditory regions associated with signal modulation during Recall, time courses for the averaged picture and sound (both compared with Fixation) trials were generated for the regions near fusiform (Fig. 3 a and b) and superior temporal gyri (Fig. 3 d and e). The fusiform region demonstrated an increased response to retrieved picture information as compared with sounds [t(17) = 4.41, P < 0.0005], with a clear response present during both picture [t(17) = 8.19, P < 0.0001] and sound trials [t(17) = 5.20, P < 0.0001]. This response pattern, including a response during sound Recall, was expected because of the presence of visual stimuli (label cues) during both trial types. Recall of pictures appears to significantly increase the response in fusiform gyrus beyond that elicited by the visual word cue. The superior temporal region showed an increased response to sound Recall trials [t(17) = 3.53, P < 0.005], but no detectable response to picture trials [t(17) = 0.51, P = 0.61], with a significant difference between Recall for sounds over Recall for pictures [t(17) = 2.96, P < 0.01]. These results indicate that the fusiform and superior temporal regions are activated selectively on the basis of the modality of the retrieved information.

Figure 3.

Regions in fusiform (a) and superior temporal (d) gyri (see Tables 1 and 2 for peak coordinates) associated with retrieval of pictures and sounds, respectively. Time courses in fusiform (b) and superior temporal (e) regions representing signal changes relative to fixation for Recall of pictures (open squares) and sounds (open circles). All time courses reflect an increased response, with picture > sound in fusiform gyrus and sound > picture in superior temporal gyrus. Note that a certain level of positive response in fusiform gyrus to sound trials was expected because of the presence of visually presented labels during sound trials. Time courses for regions in fusiform (c) and superior temporal (f) gyri representing signal changes relative to fixation for perception of Old (open circles) and New (open squares) items. Signal change for New items in fusiform gyrus was slightly higher than for Old items but similar in superior temporal gyrus.

Recall was also associated with bilateral frontal activation for picture (Left: BA 6; Right: BA 6/44) and sound (Left: BA 44/45; Right: BA 45/46; see Fig. 1f) items. These regions were segregated spatially by modality: recall of pictures activated regions located in dorsolateral prefrontal cortex, whereas recall of sounds activated regions in inferior prefrontal cortex.

A subgroup of six subjects was given a Perception task in which entirely new items were presented (New). Activation maps comparing picture and sound items for Perception of both Old and New items were generated for this subgroup. Similar regions were activated for both conditions, with minor variations evident on visual inspection. To determine what effect Perception of New vs. Old items had on the critical sensory regions showing modulation during Recall, time courses were plotted for the fusiform and superior temporal gyrus regions (Fig. 3 a and d). Perception of Old pictures resulted in a trend for a slightly reduced response in fusiform gyrus compared with perception of New pictures [t(5) = 2.51, P = 0.05] (Fig. 3c), perhaps revealing a subtle example of perceptual priming (22, 23), whereas perception of New and Old sounds showed a similar response in superior temporal gyrus [t(5) = 0.70, P = 0.52] (Fig. 3f). Critically, the subtle priming effect is in the opposite direction to that observed in the explicit Recall task, where remembering Old items was associated with significantly increased activation in modality-specific regions. Thus, simple repeated exposure to an item does not mimic the effects of active remembering.

Discussion

These results indicate clearly that brain areas in visual and auditory cortex are transiently active during memories that involve vivid visual and auditory content, respectively. Retrieval of pictures activates secondary visual areas, whereas retrieval of sounds activates secondary auditory areas. The visual regions activated include a left ventral fusiform region typically associated with object properties such as shape, color, and texture (24, 25) and several bilateral dorsal regions near precuneus that have been associated with the processing of spatial properties of objects (25, 26). The functional nature of the auditory regions associated with Recall is less well characterized. To determine the extent to which the regions activated during Recall comprised a subset of those activated during Perception, signal change time courses for picture and sound Recall trials were examined in earlier regions of visual and auditory cortex (lingual and Heschl's gyri, respectively; not shown in the figures). The most prominent signal modulations between retrieval of pictures vs. sounds were found in secondary cortex, whereas earlier cortex showed relatively little, if any, effect of modality. This indicates that the sensory-specific regions activated most robustly during Recall of pictures and sounds represent a distinct subset of those activated during Perception, specifically involving late rather than early sensory regions in this study.

Taken collectively, these data demonstrate clearly that vivid retrieval of sensory-specific information can involve the reactivation of sensory processing regions, supporting a reactivation hypothesis (1–3). Several open questions remain, such as whether these findings will generalize to situations where a single study episode is performed and whether earlier sensory regions can be activated when source retrieval encourages access to specific visual features. Recent evidence from Nyberg et al. (27) indicates that these results may generalize to situations in which information is encoded in a single episode.

These results are broadly consistent with findings from several other studies. Miyashita recorded from single units in nonhuman primate inferior temporal (IT) cortex and found neurons that responded preferentially to certain previously studied visual items (6). Importantly, Miyashita determined that these items had become associated with each other during study and that the neuronal responses were evidence for the formation of learned visual associations in IT. D'Esposito et al. (8) had subjects form mental images of the referents to concrete words during functional MRI and found a region of increased response in left fusiform gyrus. Concerning the auditory domain, the present results are in agreement with a recent study by Zatorre et al. using positron-emission tomography that showed a bilateral increase in blood flow in auditory cortex during recall of songs (28).

Interestingly, the regions activated during Recall showed a tendency to be left lateralized. Visual cortex activations in fusiform gyrus were strictly on the left (using our statistical criteria), whereas the more dorsal occipitoparietal activations were bilateral. Auditory activations were bilateral at a lower statistical threshold (Fig. 2 c and d) with only the left region reaching the strict criteria applied during analysis (but see ref. 27). The reason why significant fusiform gyrus activation was limited to the left hemisphere is unclear. Lesion studies have shown that patients with posterior left hemisphere lesions have difficulty with image generation (29) and visual memory (30). Likewise, neuroimaging studies of visual mental imagery show strong left-lateralized activations (7, 8). These results suggest that left visual cortical areas are involved in the retrieval of at least certain types of visual information from long-term memory. The role of the frontal regions in the Recall task is also of considerable interest. One possible hypothesis is that prefrontal cortex coordinates top-down memory retrieval processes by which information represented in posterior cortex is accessed and manipulated in accordance with current task goals (31, 32).

Acknowledgments

We thank David Donaldson for comments and assistance with data collection. Amy Sanders assisted with data collection and Luigi Maccotta, Tom Conturo, Abraham Snyder, and Erbil Akbudak provided valuable assistance in developing data acquisition and analysis methods. Heather Drury and David Van Essen (Washington University, St. Louis, MO) generously provided CARET software. Wilma Koutstaal and Carolyn Brenner provided sound and picture stimuli. This work was supported by the McDonnell Center for Higher Brain Function, by National Institutes of Health grants MH57506 and NS32979, and by James S. McDonnell Foundation Program in Cognitive Neuroscience grant (99–63/9900003).

Abbreviation

BA, Brodmann's area

Footnotes

This paper was submitted directly (Track II) to the PNAS office.

References

1. James W. Principles of Psychology. New York: Holt; 1893.
2. Damasio A R. Cognition. 1989;33:25–62. doi: 10.1016/0010-0277(89)90005-x.
3. Kosslyn S M. Image and Brain: The Resolution of the Imagery Debate. Cambridge, MA: MIT Press; 1994.
4. Penfield W, Perot P. Brain. 1963;86:595–697. doi: 10.1093/brain/86.4.595.
5. Desimone R. Science. 1992;258:245–246. doi: 10.1126/science.1411523.
6. Miyashita Y. Nature (London) 1988;335:817–820. doi: 10.1038/335817a0.
7. Kosslyn S M, Thompson W L, Kim I J, Alpert N M. Nature (London) 1995;378:496–498. doi: 10.1038/378496a0.
8. D'Esposito M, Detre J A, Aguirre G K, Stallcup M, Alsop D C, Tippet L J, Farah M J. Neuropsychologia. 1997;35:725–730. doi: 10.1016/s0028-3932(96)00121-2.
9. Dale A M, Buckner R L. Hum Brain Mapp. 1997;5:329–340. doi: 10.1002/(SICI)1097-0193(1997)5:5<329::AID-HBM1>3.0.CO;2-5.
10. Wagner A D, Schacter D L, Rotte M, Koutstaal W, Maril A, Dale A M, Rosen B R, Buckner R L. Science. 1998;281:1188–1191. doi: 10.1126/science.281.5380.1188.
11. Raczkowski D, Kalat J W, Nebes R. Neuropsychologia. 1974;6:43–47. doi: 10.1016/0028-3932(74)90025-6.
12. Cohen J D, MacWhinney B, Flatt M, Provost J. Behav Res Methods Instruments Comput. 1993;25:257–271.
13. Snyder A Z. In: Quantification of Brain Function using PET. Bailey D, Jones T, editors. San Diego: Academic; 1996. pp. 131–137.
14. Bandettini P A, Jesmanowicz A, Wong E C, Hyde J S. Magn Reson Med. 1993;30:161–173. doi: 10.1002/mrm.1910300204.
15. Talairach J, Tournoux P. Co-Planar Stereotaxic Atlas of the Human Brain. New York: Thieme; 1988.
16. Buckner R L, Goodman J, Burock M, Rotte M, Koutstaal W, Schacter D, Rosen B, Dale A M. Neuron. 1998;20:285–296. doi: 10.1016/s0896-6273(00)80456-0.
17. Boynton G M, Engel S A, Glover G H, Heeger D J. J Neurosci. 1996;16:4207–4221. doi: 10.1523/JNEUROSCI.16-13-04207.1996.
18. Miezin F M, Maccotta L, Ollinger J M, Petersen S E, Buckner R L. NeuroImage. 2000;11:735–759. doi: 10.1006/nimg.2000.0568.
19. Van Essen D C, Drury H A. J Neurosci. 1997;17:7079–7102. doi: 10.1523/JNEUROSCI.17-18-07079.1997.
20. Rademacher J, Caviness V S, Jr, Steinmetz H, Galaburda A M. Cereb Cortex. 1993;3:313–329. doi: 10.1093/cercor/3.4.313.
21. Damasio H. Human Brain Anatomy in Computerized Images. New York: Oxford Univ. Press; 1995.
22. Schacter D L, Buckner R L. Neuron. 1998;20:185–195. doi: 10.1016/s0896-6273(00)80448-1.
23. Wiggs C L, Martin A. Curr Opin Neurobiol. 1998;8:227–233. doi: 10.1016/s0959-4388(98)80144-x.
24. Miller E K, Li L, Desimone R. Science. 1991;254:1377–1379. doi: 10.1126/science.1962197.
25. Corbetta M, Miezin F M, Dobmeyer S, Shulman G L, Petersen S E. J Neurosci. 1991;11:2383–2402. doi: 10.1523/JNEUROSCI.11-08-02383.1991.
26. Newcombe F, Ratcliff G, Damasio H. Neuropsychologia. 1987;25:149–161. doi: 10.1016/0028-3932(87)90127-8.
27. Nyberg L, Habib R, McIntosh A R, Tulving E. Proc Natl Acad Sci USA. 2000;97:11120–11124. doi: 10.1073/pnas.97.20.11120.
28. Zatorre R J, Halpern A R, Perry D W, Meyer E, Evans A C. J Cognit Neurosci. 1996;8:29–46. doi: 10.1162/jocn.1996.8.1.29.
29. Farah M J, Levine D N, Calvanio R. Brain Cognit. 1988;8:147–164. doi: 10.1016/0278-2626(88)90046-2.
30. Grossi D, Modafferi A, Pelosi L, Trojano L. Brain Cognit. 1989;10:18–27. doi: 10.1016/0278-2626(89)90072-9.
31. Goldman-Rakic P S. In: The Prefrontal Cortex: Executive and Cognitive Functions. Roberts A C, Robbins T W, Weiskrantz L, editors. Oxford: Oxford Univ. Press; 1998. pp. 87–102.
32. Miller E K. Nat Rev Neurosci. in press.