Abstract

Children with reading impairments have deficits in phonological awareness, phonemic categorization, speech-in-noise perception, and psychophysical tasks such as frequency and temporal discrimination. Many of these children also exhibit abnormal encoding of speech stimuli in the auditory brainstem, even though responses to click stimuli are normal. In typically developing children the auditory brainstem response reflects acoustic differences between contrastive stop consonants. The current study investigated whether this subcortical differentiation of stop consonants was related to reading ability and speech-in-noise performance. Across a group of children with a wide range of reading ability, the subcortical differentiation of 3 speech stimuli ([ba], [da], [ga]) was found to be correlated with phonological awareness, reading, and speech-in-noise perception, with better performers exhibiting greater differences among responses to the 3 syllables. When subjects were categorized into terciles based on phonological awareness and speech-in-noise performance, the top-performing third in each grouping had greater subcortical differentiation than the bottom third. These results are consistent with the view that the neural processes underlying phonological awareness and speech-in-noise perception depend on reciprocal interactions between cognitive and perceptual processes.

Keywords: brainstem, dyslexia, electrophysiology, experience-dependent plasticity, learning impairment

Learning impairments, primarily reading disorders, are among the most prevalently diagnosed exceptionalities in school-aged children, affecting ≈ 5% to 7% of the population (1). These impairments coincide with a number of perceptual deficits including inordinate difficulty perceiving speech in noise as well as neural encoding deficits in the auditory system. In typically developing children, differences in contrastive speech stimuli are encoded subcortically (2), but the possible relationship between subcortical encoding of stimulus differences and reading ability has not been previously explored.

Behavioral Impairments.

Children with reading impairments often show deficits in phonological processing, which may be caused by degraded phonological representations or an inability to access these representations effectively (3–5). This population also exhibits impairments in speech sound discrimination (e.g., of contrastive syllables) relative to controls matched for age and reading level (6, 7), suggesting that the impairments are not simply caused by a maturational delay. These effects are especially prevalent for place of articulation and voice onset time contrasts, which reflect dynamic spectral and temporal contrasts, respectively. Perceptual discrimination deficits seem to be limited to the rapid spectral transitions between consonants and vowels and are not found for steady-state vowels or when formant transitions are lengthened (8–10). Moreover, when presented with between- and within-phonemic category judgments, typically developing children successfully discriminate between categories (e.g., [da] vs. [ba]), whereas children with reading impairments do not (11). Reading-impaired children also show deficits on a number of nonphonetic psychophysical measures, including frequency discrimination (12, 13), sound sequencing (14), and temporal judgments (15, 16), and are affected more than normal-learning children by backward masking of tones (17, 18).

Additionally, children with reading impairments and those at risk for developing impairments have poorer speech-in-noise perception than their typically developing peers and show a greater deterioration in performance with decreasing signal-to-noise ratio (19, 20). Poor speech-in-noise perception and poor temporal discrimination are predictive of reading deficits and language delays, respectively, in young children (20, 21). Moreover, dyslexic adults have difficulty grouping streams of tones into distinct auditory events (22). These results, along with evidence of higher thresholds for visual discrimination in noise (23), suggest that a subset of children with reading disorders may have a reduced ability to overcome the adverse effects of noise on sensory stimuli. Ahissar and colleagues propose that, unlike typically developing children, children with reading disorders are unable to profit from the repetition of stimuli, indicating a deficit in forming a perceptual anchor (4) that may preclude separating target stimuli from background noise. On this account, adults and children with reading disorders may have a perceptual attention deficit and trouble attending to target stimuli in the presence of background noise. Taken together, these lines of evidence suggest that reading and learning impairments arise from the interplay of multiple factors rather than from a single mechanism.

Deficits in Neural Encoding of Speech.

Deficits in phonological awareness, reading, and temporal resolution are linked to abnormal neural representation of sound in the auditory system (18, 24–32). Relative to normal-learning children, children with reading impairments show reduced cortical asymmetry of language processing (31, 33, 78) and reduced amplitude or delayed latencies of the mismatch negativity, a cortical response reflecting preconscious neural representation of stimulus differences (27, 32). Children with the weakest mismatch negativity responses also are the most likely to have the lowest literacy scores (27) and the poorest performance on categorical discrimination tasks using the same stimuli (32). Additionally, for poor readers, cortical encoding deficits are found for rapidly presented stimuli and stimuli with rapid frequency changes (similar to formant transitions) (28, 34), mimicking their impaired behavioral performance in temporal order judgments and supporting the hypothesis of a temporal processing deficit (16).

Abnormalities in neural encoding also are found subcortically. The auditory brainstem response to speech closely mimics the spectrotemporal features of the stimulus (35–37) and demonstrates experience-related plasticity (38–41). Phase-locking to speech formant structure below ≈1000 Hz, the upper limit of brainstem phase-locking (42), can be observed in both the spectral and temporal domains. Higher formant information, the dynamic frequency content of the speech signal determined by the shape of the vocal tract, is reflected in transient response elements via absolute peak latency (2). Approximately 40% of children diagnosed with a reading impairment show delayed response timing for the transient elements and reduced spectral magnitude corresponding to the harmonic components of a stop consonant speech stimulus ([da]) relative to age-based norms (18, 24–27, 43, 44). In a large group of children encompassing a broad spectrum of reading abilities, these subcortical measures are correlated with reading performance, with earlier latencies and larger harmonic amplitudes indicating better reading performance (44). Additionally, children with specific language impairments have reduced brainstem encoding of frequency sweeps that mimic formant transitions of consonant-vowel syllables in both frequency range and slope (45). Moreover, speech discrimination in noise is correlated with transient response timing and harmonic encoding of speech presented in background noise (24), and cortical response degradation in noise is greatest for children with delayed responses in the brainstem (43). Interestingly, the representation of the fundamental frequency (F0) does not seem to differ between reading-impaired and normal-learning children (18, 24, 26, 36, 44). Thus, the subcortical encoding deficits seen for children with reading disorders are limited to the fast temporal (transient) and dynamic spectrotemporal elements of the signal and do not include the F0. These elements are crucial for distinguishing speech sounds (46, 47), and, importantly, they are the particular aspects of speech that children with reading impairments have the most difficulty discriminating (6, 7).

Neural Differentiation of Speech.

Phonemic contrasts are represented in both the cortex and the auditory brainstem. In the cortex, temporal characteristics such as voice onset time are encoded in Heschl's gyrus (48), and the encoding of these features is enhanced after auditory discrimination training (49). In addition, tonotopicity is used to represent place of articulation (i.e., the initial formant frequencies of consonants) and the formant content of vowels in humans and nonhuman primates (50–52).

Differential encoding of speech stimuli extends to the auditory brainstem. For example, stepwise alteration of voice onset time elicits systematic latency shifts of single-neuron responses in brainstem nuclei, especially for neurons whose characteristic frequency corresponds to the first formant of the stimulus (53). In humans, spectral differences above the phase-locking capabilities of the auditory brainstem are represented by latency shifts in the auditory brainstem responses of typically developing children (2). When 3 contrastive speech syllables are presented ([ga], [da], and [ba]), the stimulus with the highest second and third formants ([ga]) elicits earlier responses than the stimulus with the mid-frequency formants ([da]), which in turn elicits earlier responses than the stimulus with the lowest starting frequencies ([ba]) (2). These effects are most evident in the minor voltage fluctuations between the prominent (major) response peaks. The latency pattern is almost universally present in individuals, and discrepancies in the latency pattern are systematic, with confusions between the 2 stimuli with descending formant trajectories ([da] and [ga]) but not with the stimulus with a rising formant trajectory ([ba]) (2), confirming that these peaks are linked to the formant structure. Thus, the auditory brainstem response closely mimics the acoustics of a stimulus and response timing reflects stimulus features that cannot be encoded directly through phase-locking.

Given previously established links between subcortical encoding of speech and reading ability, the current study sought to identify relationships between the subcortical differentiation of voiced stop consonants and reading ability. Specifically, we sought to establish a relationship between reading ability and the subcortical temporal encoding of contrastive speech sounds known to pose phonological difficulties for poor readers. Children with reading impairments also have deficits in hearing speech in noise, which may be caused by poor representation of target speech stimuli in the brainstem. Our second aim, therefore, was to identify relationships between speech-in-noise perception and subcortical differentiation of contrastive speech sounds. Electrophysiological responses to 3 speech stimuli that differed in place of articulation ([ba], [da], [ga]) were collected in children with a wide range of reading abilities, including typically developing individuals and those diagnosed with reading disorders. We hypothesized that subcortical differentiation of the 3 speech stimuli would be associated with reading and reading-related indices and with speech-in-noise performance, with better performers having greater subcortical differentiation.

Results

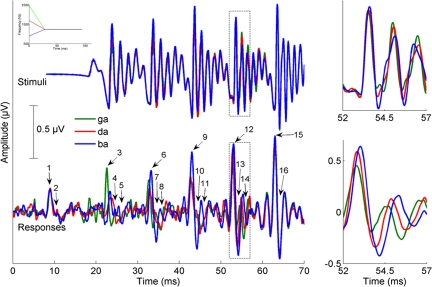

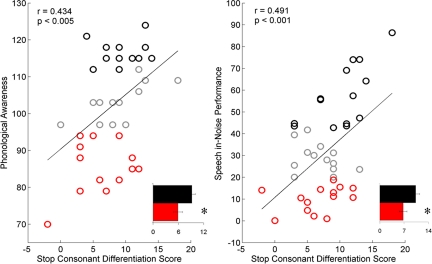

In accordance with previous findings (2), the [ga] < [da] < [ba] latency progression was seen in major and minor peaks but not in onset or endpoint peaks (Fig. 1). As hypothesized, subcortical differentiation of voiced stop consonants correlated with reading, phonological awareness, and speech-in-noise perception only for the response peaks reflecting the spectrotemporally changing formant structure (minor) and not for those reflecting the fundamental frequency (major), the onset of the stimulus (onset), or the end of the formant transition period (endpoint). Specifically, the stop consonant differentiation score for the minor peaks was positively correlated with phonological awareness (PA) [Comprehensive Test of Phonological Processing (CTOPP) Phonological Awareness r = 0.434, P = 0.004], reading fluency [Test of Silent Word Reading Fluency (TOSWRF) r = 0.399, P = 0.008], and speech perception in noise [Hearing in Noise Test-Right (HINT-R) percentile r = 0.492, P = 0.001] (Fig. 2). To rule out effects of intellectual ability, attention deficits, and age on the relationships with the minor peak stop consonant differentiation score, the correlational analyses were repeated with age, nonverbal IQ, and measures of hyperactive and inattentive behavior as covariates. The strength of the relationships decreased slightly but remained significant, except for reading fluency (PA: r = 0.306, P = 0.059; TOSWRF: r = 0.248, P = 0.13; HINT-R: r = 0.510, P = 0.001).
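The covariate-adjusted correlations are partial correlations. One standard way to compute a partial correlation is to regress both variables on the covariates (plus an intercept) and take the Pearson correlation of the two sets of residuals. The pure-Python sketch below illustrates that computation; it is not the SPSS routine used in the study, and the function names and toy data are hypothetical.

```python
def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def solve(a, b):
    """Solve the square linear system a @ beta = b by Gauss-Jordan elimination."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Partial pivoting for numerical stability.
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def residualize(y, covariates):
    """Residuals of y after ordinary least squares on an intercept plus covariates.

    covariates: a list of columns, e.g. [ages, iqs, hyperactive, inattentive].
    """
    X = [[1.0] + [col[i] for col in covariates] for i in range(len(y))]
    k = len(X[0])
    xtx = [[sum(row[p] * row[q] for row in X) for q in range(k)] for p in range(k)]
    xty = [sum(row[p] * yi for row, yi in zip(X, y)) for p in range(k)]
    beta = solve(xtx, xty)
    return [yi - sum(bj * v for bj, v in zip(beta, row)) for row, yi in zip(X, y)]


def partial_corr(x, y, covariates):
    """Correlation between x and y after partialling the covariates out of both."""
    return pearson(residualize(x, covariates), residualize(y, covariates))


# Hypothetical toy data: x and y share a covariate-independent component e.
z = [1, 2, 3, 4, 5, 6]
e = [0.5, -1.0, 0.3, 0.8, -0.2, -0.4]
x = [zi + ei for zi, ei in zip(z, e)]
y = [3 + 2 * zi + ei for zi, ei in zip(z, e)]
print(round(partial_corr(x, y, [z]), 6))  # 1.0
```

With the 4 covariates used here, each would be one column in `covariates`, and the partial correlation is tested on n − 2 − 4 degrees of freedom.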

Fig. 1.

Timing differences present in the responses (Bottom Panels) are absent in the stimuli (Top Panels). For the stimuli and responses, [ba] is plotted in blue, [da] in red, and [ga] in green. (Left) The time-domain grand averages of high-pass-filtered responses from typically developing children (n = 20) are plotted below the [ba], [da], and [ga] stimuli. The peaks analyzed in the present study are marked on the responses (onset: 1, 2; major: 3, 4, 6, 7, 9, 10, 12, 13; minor: 5, 8, 11, 14; endpoint: 15, 16). For visual coherence, the stimuli have been shifted in time by 8 ms to account for the neural conduction lag. (Right) The 52- to 57-ms region of the responses and time-adjusted stimuli has been magnified to highlight latency differences found among the responses (Bottom) that are not present in the stimuli (Top). These latency differences are thought to reflect the differing second formants of the stimuli schematically plotted in the inset (Top Left).

Fig. 2.

Subcortical differentiation is related to phonological awareness and speech-in-noise performance. The relationships between the stop consonant differentiation score (minor peaks) and phonological awareness (Left) and speech-in-noise performance (Right) are plotted. Performers in the top and bottom terciles for each measure are marked in black and red, respectively. The stop consonant differentiation scores of performers in the top (black) and bottom (red) terciles on each measure are plotted in the insets (means ± 1 standard error). *, P < 0.05.

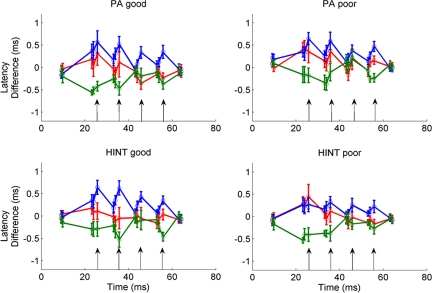

Between-group analyses of stop consonant differentiation scores for the minor peaks also were conducted on the highest and lowest performing terciles (n = 14 each) for PA and HINT-R, the 2 behavioral measures that remained significant after controlling for age, attentive behavior, and IQ. Similar analyses were not conducted for the major, onset, or endpoint differentiation scores because they did not correlate with any behavioral measures. The good PA group (top third: PA range 112–124, mean stop consonant differentiation score = 9.21) had higher stop consonant differentiation scores than the poor PA group (bottom third: PA range 70–94, mean stop consonant differentiation score = 5.93; t (26) = 2.414, P = 0.023). Similarly, the good HINT-R group (top third: range 42.8–86.4, mean stop consonant differentiation score = 10.28) had greater stop consonant differentiation scores than the poor HINT-R group (bottom third: range 0.1–18.8, mean stop consonant differentiation score = 6.71; t (26) = 2.287, P = 0.031), although the effect was only marginally significant after correcting for multiple comparisons (see Figs. 2 and 3).
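The tercile comparisons are independent-samples t-tests; with 14 children per group, the pooled-variance test has 14 + 14 − 2 = 26 degrees of freedom, matching the reported t (26). A minimal sketch of the statistic follows, assuming equal variances (Student's test); the function name and toy scores are hypothetical. A P value additionally requires the t distribution, for example via `scipy.stats.ttest_ind`, whose default `equal_var=True` computes the same pooled statistic.

```python
from statistics import mean, variance


def pooled_t_test(a, b):
    """Student's independent-samples t statistic with pooled variance.

    Returns (t, df), where df = len(a) + len(b) - 2.
    """
    na, nb = len(a), len(b)
    df = na + nb - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    sp2 = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    se = (sp2 * (1 / na + 1 / nb)) ** 0.5
    return (mean(a) - mean(b)) / se, df


# Hypothetical toy differentiation scores, 14 per group as in the tercile comparison.
top = [9, 11, 8, 12, 10, 9, 7, 13, 10, 8, 11, 9, 10, 12]
bottom = [6, 5, 8, 7, 4, 6, 9, 5, 7, 6, 8, 5, 7, 6]
t, df = pooled_t_test(top, bottom)
print(f"t({df}) = {t:.3f}")
```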

Fig. 3.

Children who are poor performers on measures of phonological awareness (PA) and speech-in-noise perception (HINT) have reduced subcortical differentiation of 3 stop consonant stimuli relative to good performers. Normalized latency differences are plotted over time for the good performers (Left) and poor performers (Right) for phonological awareness (Top) and speech-in-noise perception (Bottom). There is better separation among [ga] (green), [ba] (blue), and [da] (red) responses for the good performers. Response latencies were normalized by subtracting the grand average latency of responses to all 3 stimuli from individual peak latencies (see Methods and ref. 2). Minor peaks are marked with arrows.

Discussion

Preconscious cortical differentiation of place of articulation contrasts has been related previously to reading ability (27, 32). In the present study, subcortical differentiation of contrastive stop consonant syllables was linked to performance on measures of phonological awareness and speech-in-noise perception for peaks corresponding to the minor voltage fluctuations between primary response peaks. These peaks reflect the formant transitions, which differ across stimuli, and are suggestive of the manner by which the brainstem represents frequencies above phase-locking limits (2), making them likely predictors of reading measures. The current study is the first to demonstrate an unambiguous relationship between reading ability and subcortical temporal encoding of contrastive speech sounds that present phonological challenges for poor readers. When children were categorized into terciles based on phonological awareness or speech-in-noise perception, the children in the top third in both groupings exhibited greater subcortical differentiation across the minor peaks than did the children in the bottom third.

The current study also discovered a relationship between subcortical differentiation of stop consonants and the perception of speech in noise in conditions that mimic real-world listening situations (e.g., noisy classrooms). This study builds on previous work identifying broad relationships between perception of signals in noise and brainstem encoding (24). The current study indicates that the subcortical differentiation of stop consonants, known to pose particular perceptual challenges in noise (54), is related to the ability to perceive speech in noise.

Relationships identified in the current study were limited to the minor peaks, thought to be most representative of the differing stimulus formant trajectories (i.e., continuous formant frequency changes over time) (2, 36). By showing that children with poorer phonological awareness and speech-in-noise perception have smaller or absent latency differences among responses, the current study reinforces the notion that formant information is encoded less precisely in the reading-impaired population (18, 24–27, 43, 45). On the other hand, the major peaks are largely driven by the fundamental frequency of the stimulus (36), and previous work has found that children with reading impairments do not differ from normal-reading children in the encoding of fundamental frequency in stop consonants (24, 26, 44).

The phonological deficits experienced by children with reading impairments have been ascribed to both the perception of and the use of phonology (3–5, 16, 17, 21, 55, 56), and the relationships between phonological skills and neural transcription of sound observed in the current study are compatible with both “bottom-up” and “top-down” mechanisms. Poor temporal discrimination is reflected in poor neural synchrony and abnormal transient elements of the brainstem response to speech (18), which in the present study reflect the formant trajectories of the stimuli. The current results are also consistent with the seminal work by Tallal and colleagues demonstrating that perceptual deficits seen in children with learning impairments are limited to short, spectrally dynamic elements (i.e., formant transitions) and do not affect steady-state vowels (8–10). An inability to represent formant differences in the brainstem could lead to deficient phonological representations (16, 17, 21, 55, 56) and contribute to poor reading. Brainstem processes also are shaped by corticofugal “top-down” influences, leading to the subcortical malleability observed with lifelong experience and short-term training (39–41, 57–60; see refs. 38 and 61 for reviews of language and music work, respectively). In animal models, behaviorally relevant stimuli can elicit selective cortical, subcortical, and even cochlear tuning of receptive fields via corticofugal mechanisms (62–67). Similar plasticity is found in the human auditory brainstem, where attentional context can affect online brainstem encoding of speech (68). Children with reading disorders often have impaired phonological retrieval and limited short-term memory storage (3–5), which, over time, may lead to a failure to sharpen the subcortical auditory system and thus to the deficient brainstem responses to speech stimuli and reduced differentiation of stop consonants observed here. We speculate that reading and learning impairments are caused by mutually maladaptive interactions between corticofugal tuning and basic perceptual deficits.

The relationship between speech-in-noise performance and subcortical differentiation of stop consonants can be viewed within a similar framework. Children with reading impairments demonstrate decreased lower-brainstem efferent modulation relative to normal-learning children, similar to deficits observed in speech-in-noise perception (69). Moreover, improved performance on speech-in-noise perception tasks after training coincided with increases in efferent modulation of peripheral hearing mechanisms both in normal-reading adults and in children with learning impairments (60). As proposed by Ahissar and colleagues, cortical influences on brainstem function may result from a backward search for more concrete representations during adverse listening conditions such as speech in noise (70). Impoverished phonological representations or an inability to access and manipulate them effectively would result in a mismatch between the proposed higher-level (phonological) and lower-level (auditory brainstem) representations of the stimulus (70). Because lifelong experience, short-term training, and online changes in directed attention and context can affect the auditory brainstem response to speech (38–41, 57–60, 68), it is possible that repeated failures to increase the signal-to-noise ratio in adverse listening conditions, caused by mismatches between poor phonological retrieval and deficient brainstem encoding, negatively affect brainstem encoding of speech. As with phonological deficits, deficits in speech-in-noise perception in reading-impaired children probably are the consequence of interactions among perceptual and cognitive factors.

Conclusion

The current study identified relationships between subcortical differentiation of 3 stop consonants and phonological awareness, reading performance, and speech-in-noise performance in a group of children on a continuum of reading ability. These relationships are consistent with the view that improper utilization of phonology, probably through a combination of deficits in phonological perception and working memory, is manifested in deficient encoding of sound elements important for phoneme identification in the auditory brainstem. Behavioral training, which could enhance task-directed auditory attention, could be an effective vehicle for improving brainstem differentiation of contrastive stimuli in children with poor phonological awareness and speech-in-noise perception.

Materials and Methods

Participants.

Participants were 43 children, ages 8–13 years (mean age = 10.4 years, 20 girls). Of the children, 23 carried an external diagnosis of learning or reading disorders, and of those all but 1 attended a private school for children with severe reading impairments. Additionally, 11 of these children were diagnosed with comorbid attention disorders. All other children were recruited from area schools. All participants had normal hearing, defined as air conduction thresholds < 20 dB hearing level (HL) at octave frequencies from 250 to 8,000 Hz, no evident air–bone conduction gap, and click-evoked brainstem response latencies within normal limits (the 100-μs click stimulus was presented at 80 dB sound pressure level (SPL) at a rate of 31/s). We required all children to have clinically normal click-evoked auditory brainstem responses to ensure that observed differences between groups could not be attributed to neurological or audiological impairments.

All children also had normal or corrected-to-normal vision and normal IQ scores (> 85, the boundary of the “below average” range) on the Wechsler Abbreviated Scale of Intelligence (71). The Attention Deficit Hyperactivity Disorder Rating Scale IV (72) was included as a measure of attentive behavior. Age- and sex-normed hyperactive and inattentive subtype scores were used as covariates in the correlational analyses. Children were compensated monetarily for their time. All procedures were approved by the Institutional Review Board of Northwestern University.

Reading-Related Measures.

Phonological awareness and processing were assessed with subtests of the CTOPP (73). The 6 subtests (Elision, Blending Words, Rapid Number Naming, Rapid Letter Naming, Nonword Repetition, Number Repetition) yield 3 cluster scores (PA, Phonological Memory, and Rapid Naming), which were used for the current analyses. Children also completed the Test of Word Reading Efficiency (TOWRE), which consists of 2 subtests, Sight and Phoneme (74), that assess the ability to read real words and nonwords, respectively, under a time constraint. The TOSWRF also was administered; it requires children to parse continuous strings of letters into words under a time constraint (75).

Speech-in-Noise Perception Measures.

Speech-in-noise perception was evaluated using the HINT (Bio-logic Systems Corp.), which yields both a threshold signal-to-noise ratio and an age-normed percentile score. Children repeated sentences presented in speech-shaped background noise as the program adaptively adjusted the signal-to-noise ratio until the child repeated ≈50% of the sentences correctly. The speech stimuli were always presented from the front, and the noise was presented from the front, from 90° to the left, or from 90° to the right in 3 separate conditions. Percentile scores for each of the 3 noise conditions and a composite score for all 3 conditions were used in the present analyses.
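An adaptive rule that lowers the signal-to-noise ratio after a correct repetition and raises it after an error is a 1-down/1-up staircase, which converges on the level yielding ≈50% correct. The sketch below illustrates the idea with a simulated listener; the step size, starting level, trial count, averaging window, and function names are hypothetical and are not the HINT's actual parameters.

```python
from typing import Callable, List


def run_staircase(respond: Callable[[float], bool], start_snr_db: float = 0.0,
                  step_db: float = 2.0, n_trials: int = 20) -> List[float]:
    """1-down/1-up track: lower the SNR after a correct response and raise it
    after an error, so the track hovers around the 50%-correct SNR.

    Returns the SNR presented on each trial.
    """
    snr = start_snr_db
    track = []
    for _ in range(n_trials):
        track.append(snr)
        snr += -step_db if respond(snr) else step_db
    return track


def threshold_db(track: List[float], n_last: int = 10) -> float:
    """Estimate the threshold as the mean SNR over the last n_last trials."""
    tail = track[-n_last:]
    return sum(tail) / len(tail)


# Hypothetical deterministic listener who repeats a sentence correctly
# whenever the SNR is at or above -3 dB.
track = run_staircase(lambda snr: snr >= -3.0)
print(threshold_db(track))  # -3.0
```

A real listener responds probabilistically, so the track fluctuates more, but the same rule still centers it on the 50% point of the psychometric function.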

Electrophysiological Stimuli and Recording Parameters.

Speech stimuli were synthesized in KLATT (76). Stimuli were 170 ms long with a 50-ms formant transition and a 120-ms steady-state vowel. Voicing onset was at 5 ms. Stimuli ([ba], [da], [ga]) differed from each other only in the second formant (F2) trajectories during the formant transition period (see supporting information (SI) Fig. S1). The fundamental frequency and the fourth, fifth, and sixth formants were constant across time at 100, 3300, 3750, and 4900 Hz, respectively, and within the formant transition period the first and third formants were dynamic, rising from 400 to 720 Hz and falling from 2580 to 2500 Hz, respectively. In the [ga] stimulus, F2 fell sharply from 2480 to 1240 Hz; in the [da] stimulus, F2 fell from 1700 to 1240 Hz; and in the [ba] stimulus, F2 rose from 900 to 1240 Hz (Fig. 1, Inset). The F2 transitions resolved to 1240 Hz during the steady-state vowel portion. Stimuli of alternating polarity were presented at 80 dB SPL with an interstimulus interval of 60 ms through an insert earphone (ER-3, Etymotic Research) to the right ear with Neuroscan Stim 2 (Compumedics) and were interleaved with 5 other speech stimuli differing in both spectral and temporal characteristics. Responses were collected with a vertical electrode montage (forehead ground, Cz active, and ipsilateral earlobe reference) with Neuroscan Acquire 4.3 (Neuroscan Scan, Compumedics) and digitized at 20,000 Hz.
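To make the place-of-articulation contrast concrete, the sketch below encodes the F2 end points given above and interpolates F2 across the 50-ms transition. The straight-line trajectory is an assumption for illustration only (only start and end frequencies are specified), and the constant and function names are hypothetical. The ordering of F2 onsets, [ga] > [da] > [ba], mirrors the expected response latency ordering [ga] < [da] < [ba].

```python
# F2 start points (Hz) of the 50-ms formant transition, from the stimulus description.
F2_ONSET_HZ = {"ga": 2480.0, "da": 1700.0, "ba": 900.0}
F2_VOWEL_HZ = 1240.0   # shared steady-state F2 of the vowel
TRANSITION_MS = 50.0
DURATION_MS = 170.0


def f2_hz(syllable: str, t_ms: float) -> float:
    """F2 at time t_ms, ASSUMING a straight-line transition from onset to vowel.

    The linear shape is an illustrative assumption; only the end points are given.
    """
    if not 0.0 <= t_ms <= DURATION_MS:
        raise ValueError("t_ms lies outside the 170-ms stimulus")
    if t_ms >= TRANSITION_MS:
        return F2_VOWEL_HZ
    frac = t_ms / TRANSITION_MS
    return F2_ONSET_HZ[syllable] + frac * (F2_VOWEL_HZ - F2_ONSET_HZ[syllable])


# The 3 syllables converge on the same F2, so their F2 separation shrinks
# over the transition and vanishes in the vowel.
for t in (0, 25, 50):
    print(t, [f2_hz(s, t) for s in ("ga", "da", "ba")])
```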

Data Reduction and Analysis.

Data reduction and preliminary analysis procedures followed those of Johnson et al. (2). Responses were offline bandpass filtered from 70 to 2,000 Hz (12 dB/octave roll-off) and averaged over a 230-ms window spanning 40 ms before and 190 ms after stimulus onset. Separate averages were created for each stimulus polarity, and then the 2 averages were added together (77). The final baseline-corrected average consisted of 6,000 artifact-free sweeps (3,000 sweeps per polarity; artifacts defined as activity exceeding ±35 μV). To aid in peak identification, final averages were additionally high-pass filtered at 300 Hz (12 dB/octave slope). In total, 16 peaks were identified: the onset peak and trough (onset peaks), the 8 major response peaks and troughs (major peaks), the 4 minor troughs following the major troughs (minor peaks), and the first peak and trough during the response to the steady-state vowel (endpoint peaks). For stimuli that were phonetically similar to those used in the present study, we previously found that the major and minor response peaks to [ga] occurred earlier than those to [da], which also occurred earlier than those to [ba] over the formant transition region, reflecting the second and third formant frequency differences in those stimuli (2). These differences were not found in the onset response. The latency differences gradually diminished over the course of the first 60 ms of the response, reflecting the diminishing differences among frequencies in the stimuli over time. Importantly, as is the case in the current study, there were no timing differences in the previously used stimuli (2) that might have accounted for these response latency differences.
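The averaging steps can be sketched as follows, assuming each sweep is a list of samples in microvolts whose first 800 samples (40 ms at 20,000 Hz) precede stimulus onset. The 70–2,000 Hz bandpass and 300-Hz high-pass filters are omitted because they require a signal-processing library, and all names are hypothetical. Averaging the 2 polarities separately and then combining them largely cancels components that invert with stimulus polarity, such as stimulus artifact and the cochlear microphonic.

```python
from typing import List

Sweep = List[float]  # one epoch of EEG samples, in microvolts

ARTIFACT_LIMIT_UV = 35.0
FS_HZ = 20_000
PRESTIM_SAMPLES = 40 * FS_HZ // 1000  # 40-ms prestimulus baseline = 800 samples


def reject_artifacts(sweeps: List[Sweep], limit: float = ARTIFACT_LIMIT_UV) -> List[Sweep]:
    """Keep only sweeps whose absolute amplitude never exceeds the limit."""
    return [s for s in sweeps if max(abs(v) for v in s) <= limit]


def average(sweeps: List[Sweep]) -> Sweep:
    """Point-by-point mean across sweeps (raises ZeroDivisionError if none remain)."""
    n = len(sweeps)
    return [sum(samples) / n for samples in zip(*sweeps)]


def baseline_correct(wave: Sweep, n_prestim: int) -> Sweep:
    """Subtract the mean of the prestimulus samples from every sample."""
    base = sum(wave[:n_prestim]) / n_prestim
    return [v - base for v in wave]


def combine_polarities(pos: List[Sweep], neg: List[Sweep],
                       n_prestim: int = PRESTIM_SAMPLES) -> Sweep:
    """Average each polarity separately, then combine the 2 sub-averages.

    Dividing by 2 keeps the result on the scale of a single average; adding the
    sub-averages, as described in the text, differs only by this constant factor.
    """
    avg_pos = average(reject_artifacts(pos))
    avg_neg = average(reject_artifacts(neg))
    combined = [(a + b) / 2 for a, b in zip(avg_pos, avg_neg)]
    return baseline_correct(combined, n_prestim)
```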

Given the expected latency pattern of [ga] < [da] < [ba], the responses in the present study were analyzed with a 3-step metric that takes into account both the presence of the expected pattern and the magnitude of the latency differences. Stop consonant differentiation scores were calculated for each of the 16 onset, major, minor, and endpoint peaks.

Step 1.

For each of the 3 pairwise latency comparisons ([ba] vs. [da], [ba] vs. [ga], [da] vs. [ga]) for a given peak, the peak was given a score of 1 if the expected pattern was present and a score of 0 if it was not, resulting in an interim score ranging from 0 to 3.

Step 2.

(i) For each peak, the mean latency difference (Λ) and its standard deviation were calculated for each stimulus pair (e.g., [ba] minus [da]) across the children who did not carry an external diagnosis of a learning or reading disorder (n = 20).

(ii) +Λ′ and −Λ′, defined as Λ + 0.67 standard deviations and Λ − 0.67 standard deviations, respectively, were computed. The range between −Λ′ and +Λ′ encompasses the middle 50% of cases in a normally distributed population.

(iii) For each peak and each pairwise comparison, if the pattern was present (Step 1) and the latency difference was greater than +Λ′, then an additional point was added to the interim score. A point was subtracted if the pattern was absent and the latency difference was less than −Λ′ (a latency difference in the wrong direction outside normal variance). Additionally, a point was subtracted if the pattern was present but the latency difference was less than −Λ′. In this case it was assumed that, although the pattern was shown, the latency difference between the 2 responses was so small that it was equivalent to there being no difference between the responses.

(iv) The scores derived from Steps 1 and 2iii were summed across all 3 pairwise comparisons ([ba] vs. [da], [ba] vs. [ga], [da] vs. [ga]), resulting in a minimum score of −3 (earned by not showing the pattern in any comparison and having clear latency differences in the wrong direction) and a maximum score of 6 (earned by showing the pattern for all 3 comparisons and having clear latency differences in the expected direction).

Step 3.

The individual differentiation scores then were summed separately for the major peaks (n = 8) and minor peaks (n = 4) to give maximum scores of 48 and 24 and minimum scores of −24 and −12, respectively.
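Steps 1–3 can be expressed compactly in code. The sketch below is one reading of the rules above, not the authors' implementation: each pair difference is computed as the later-expected minus the earlier-expected latency, a difference of exactly 0 is treated as the pattern being absent, and the norms use the sample standard deviation. All names and the toy latencies are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple

Latencies = Dict[str, float]  # stimulus -> peak latency (ms), one child, one peak
Bounds = Dict[Tuple[str, str], Tuple[float, float]]

# Expected order is [ga] < [da] < [ba], so for each (later, earlier) pair the
# difference latency[later] - latency[earlier] should be positive.
PAIRS = [("ba", "da"), ("ba", "ga"), ("da", "ga")]
Z = 0.67  # Λ ± 0.67 SD spans the middle ~50% of a normal distribution


def norm_bounds(td_children: List[Latencies]) -> Bounds:
    """Step 2(i)-(ii): (−Λ′, +Λ′) per pair from typically developing children."""
    bounds: Bounds = {}
    for later, earlier in PAIRS:
        diffs = [c[later] - c[earlier] for c in td_children]
        lam, sd = mean(diffs), stdev(diffs)  # sample SD (an assumption)
        bounds[(later, earlier)] = (lam - Z * sd, lam + Z * sd)
    return bounds


def peak_score(child: Latencies, bounds: Bounds) -> int:
    """Steps 1 and 2(iii)-(iv) for one peak; the result lies between -3 and 6."""
    score = 0
    for pair in PAIRS:
        d = child[pair[0]] - child[pair[1]]
        lo, hi = bounds[pair]
        if d > 0:             # Step 1: expected order present (ties count as absent)
            score += 1
            if d > hi:        # clear difference in the expected direction
                score += 1
            elif d < lo:      # order present but difference too small to count
                score -= 1
        elif d < lo:          # wrong direction, outside normal variation
            score -= 1
    return score


def differentiation_score(child_peaks: List[Latencies], peak_bounds: List[Bounds]) -> int:
    """Step 3: sum per-peak scores over one peak class (e.g., the 4 minor peaks)."""
    return sum(peak_score(c, b) for c, b in zip(child_peaks, peak_bounds))


# Hypothetical toy latencies (ms) for a single peak.
td = [{"ga": 10.0, "da": 10.2, "ba": 10.4},
      {"ga": 10.0, "da": 10.3, "ba": 10.6},
      {"ga": 10.1, "da": 10.3, "ba": 10.5}]
b = norm_bounds(td)
print(peak_score({"ga": 10.0, "da": 10.4, "ba": 10.9}, b))   # 6: clear, correctly ordered
print(peak_score({"ga": 10.6, "da": 10.3, "ba": 10.0}, b))   # -3: clearly reversed
print(peak_score({"ga": 10.0, "da": 10.05, "ba": 10.1}, b))  # 0: ordered but too close
```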

For the onset and endpoint differentiation scores, because there was no expected latency pattern (2), and because this finding was replicated for typically developing children in the current study, latency deviation in either direction was penalized. Any deviation greater than +Λ′ or less than −Λ′ (with Λ ≈ 0) earned −1 point, resulting in a maximum score of 0 (within normal latency limits for all comparisons) and a minimum score of −3 (showing deviation outside latency norms on all 3 comparisons). Scores then were summed across onset (n = 2) and endpoint (n = 2) peaks, yielding a maximum score of 0 and a minimum score of −6 in both cases.
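The onset and endpoint rule can be sketched in the same way: norms are computed from the typically developing children, but any pair difference outside the ±0.67 SD band costs 1 point regardless of direction. Names and toy latencies are hypothetical, and the use of the sample standard deviation is an assumption.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple

Latencies = Dict[str, float]  # stimulus -> peak latency (ms), one child, one peak
Bounds = Dict[Tuple[str, str], Tuple[float, float]]
PAIRS = [("ba", "da"), ("ba", "ga"), ("da", "ga")]
Z = 0.67


def norm_bounds(td_children: List[Latencies]) -> Bounds:
    """(−Λ′, +Λ′) per pair from typically developing children (Λ ≈ 0 expected here)."""
    bounds: Bounds = {}
    for later, earlier in PAIRS:
        diffs = [c[later] - c[earlier] for c in td_children]
        lam, sd = mean(diffs), stdev(diffs)
        bounds[(later, earlier)] = (lam - Z * sd, lam + Z * sd)
    return bounds


def onset_endpoint_peak_score(child: Latencies, bounds: Bounds) -> int:
    """Deduct 1 point per pair whose difference falls outside the band (0 to -3)."""
    score = 0
    for pair in PAIRS:
        d = child[pair[0]] - child[pair[1]]
        lo, hi = bounds[pair]
        if d < lo or d > hi:
            score -= 1
    return score


def summed_score(child_peaks: List[Latencies], peak_bounds: List[Bounds]) -> int:
    """Sum over the 2 onset (or 2 endpoint) peaks, giving a score from -6 to 0."""
    return sum(onset_endpoint_peak_score(c, b) for c, b in zip(child_peaks, peak_bounds))


# Hypothetical toy onset latencies (ms) for one peak.
td = [{"ga": 6.0, "da": 6.1, "ba": 6.0},
      {"ga": 6.1, "da": 6.0, "ba": 6.0},
      {"ga": 6.0, "da": 6.0, "ba": 6.1}]
b = norm_bounds(td)
print(onset_endpoint_peak_score({"ga": 6.0, "da": 6.0, "ba": 6.0}, b))  # 0
print(onset_endpoint_peak_score({"ga": 6.0, "da": 6.3, "ba": 6.6}, b))  # -3
```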

For graphing purposes in Fig. 3, normalized latency values were calculated as described in ref. 2. For each peak, the response latencies of the typically developing children (n = 20) were averaged across all 3 stimuli, and this grand average latency was subtracted from each child's latency for that peak. Thus, peak latencies earlier than the grand average (such as responses to [ga]) have negative normalized values, and peak latencies later than the grand average (such as responses to [ba]) have positive normalized values. Responses to [da] occurred at roughly the same latency as the grand average and thus have normalized latencies near zero.
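The normalization for Fig. 3 can be sketched for a single peak as follows. It assumes that the grand average is the mean over all typically developing children and all 3 stimuli pooled (equal to the mean of the 3 per-stimulus averages when every child contributes a response to each stimulus); the function name and latencies are hypothetical.

```python
from statistics import mean


def normalized_latencies(td: dict[str, list[float]], child: dict[str, float]) -> dict[str, float]:
    """Subtract the typically developing grand average (all children, all 3 stimuli)
    from one child's latency for each stimulus, for a single peak."""
    grand_average = mean(lat for lats in td.values() for lat in lats)
    return {stim: lat - grand_average for stim, lat in child.items()}


# Hypothetical latencies (ms) for one peak, for illustration only.
td = {"ba": [10.0, 10.2], "da": [9.6, 9.8], "ga": [9.2, 9.4]}
child = {"ba": 10.1, "da": 9.7, "ga": 9.3}
print(normalized_latencies(td, child))  # roughly ba +0.4, da 0, ga -0.4
```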

Pearson's correlations between stop consonant differentiation scores and behavioral measures of reading, phonological awareness, and speech-in-noise perception were computed in SPSS (SPSS Inc.). Three outlying scores on psychoeducational measures were set to 2 standard deviations below the test's normed mean (a standard score of 100). No outliers were present for the speech-in-noise measures. IQ, attentional behavior, and age were included as covariates in the correlational analyses. Additionally, children were divided into terciles based on CTOPP PA score and HINT noise right percentile, because those measures were the most strongly related to subcortical differentiation, even after controlling for covariates. Table S1 gives performance on behavioral measures for each group. Stop consonant differentiation scores were compared between the top and bottom terciles (n = 14) of each measure using independent t-tests. Because of multiple comparisons in the correlations, alpha was set at 0.01. Because the t-tests were conducted only on the stop consonant differentiation score for the minor peaks, alpha for these comparisons was set at 0.025.


Acknowledgments

We thank Bharath Chandrasekaran for his review of the manuscript. We especially thank the members of the Auditory Neuroscience Laboratory who aided in data collection and the children and their families who participated in the study. This work was funded by the National Institutes of Health (RO1 DC01510) and the Hugh Knowles Center of Northwestern University.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/cgi/content/full/0901123106/DCSupplemental.

References

- 1. Shapiro B, Church RP, Lewis MEB. In: Children with Disabilities. 6th Ed. Batshaw ML, Pellegrino L, Roizen NJ, editors. Baltimore, Maryland: Paul H. Brookes Publishing Co.; 2007. pp. 367–385.

- 2. Johnson K, et al. Brainstem encoding of voiced consonant-vowel stop syllables. Clinical Neurophysiology. 2008;119:2623–2635. doi: 10.1016/j.clinph.2008.07.277.

- 3. Ramus F, Szenkovits G. What phonological deficit? Q J Exp Psychol. 2008;61:129–141. doi: 10.1080/17470210701508822.

- 4. Ahissar M. Dyslexia and the anchoring deficit hypothesis. Trends in Cognitive Sciences. 2007;11:458–465. doi: 10.1016/j.tics.2007.08.015.

- 5. Gibbs S. Phonological awareness: An investigation into the developmental role of vocabulary and short-term memory. Educ Psychol. 2004;24:13–25.

- 6. Hazan V, Adlard A. Speech perception in children with specific reading difficulties (dyslexia). Q J Exp Psychol. 1998;51A:153–177. doi: 10.1080/713755750.

- 7. Maassen B, Groenen P, Crul T, Assman-Hulsmans C, Gabreels F. Identification and discrimination of voicing and place-of-articulation in developmental dyslexia. Clinical Linguistics & Phonetics. 2001;15:319–339.

- 8. Tallal P, Piercy M. Developmental aphasia: Rate of auditory processing and selective impairment of consonant perception. Neuropsychologia. 1974;12:83–93. doi: 10.1016/0028-3932(74)90030-x.

- 9. Tallal P, Piercy M. Developmental aphasia: The perception of brief vowels and extended stop consonants. Neuropsychologia. 1975;13:69–74. doi: 10.1016/0028-3932(75)90049-4.

- 10. Tallal P, Stark RE. Speech acoustic-cue discrimination abilities of normally developing and language-impaired children. J Acoust Soc Am. 1981;69:568–574. doi: 10.1121/1.385431.

- 11. Serniclaes W, Sprenger-Charolles L. Categorical perception of speech sounds and dyslexia. Current Psychology Letters. 2003;10(1).

- 12. Baldeweg T, Richardson A, Watkins S, Foale C, Gruzelier J. Impaired auditory frequency discrimination in dyslexia detected with mismatch evoked potentials. Ann Neurol. 1999;45:495–503. doi: 10.1002/1531-8249(199904)45:4<495::aid-ana11>3.0.co;2-m.

- 13. Halliday LF, Bishop DVM. Auditory frequency discrimination in children with dyslexia. Journal of Research in Reading. 2006;29:213–228.

- 14. Wright BA, Bowen RW, Zecker SG. Nonlinguistic perceptual deficits associated with reading and language disorders. Curr Opin Neurobiol. 2000;10:482–486. doi: 10.1016/s0959-4388(00)00119-7.

- 15. Rey V, De Martino S, Espesser R, Habib M. Temporal processing and phonological impairment in dyslexia: Effect of phoneme lengthening on order judgment of two consonants. Brain and Language. 2002;80:576–591. doi: 10.1006/brln.2001.2618.

- 16. Tallal P, Miller SL, Jenkins WM, Merzenich MM. In: Foundations of Reading Acquisition and Dyslexia: Implications for Early Intervention. Blachman B, editor. Mahwah, NJ: Lawrence Erlbaum Assoc. Inc.; 1997. pp. 49–66.

- 17. Wright BA, et al. Deficits in auditory temporal and spectral resolution in language-impaired children. Nature. 1997;387:176–178. doi: 10.1038/387176a0.

- 18. Johnson K, Nicol T, Zecker SG, Kraus N. Auditory brainstem correlates of perceptual timing deficits. J Cognit Neurosci. 2007;19:376–385. doi: 10.1162/jocn.2007.19.3.376.

- 19. Bradlow AR, Kraus N, Hayes E. Speaking clearly for children with learning disabilities: Sentence perception in noise. Journal of Speech, Language, and Hearing Research. 2003;46:80–97. doi: 10.1044/1092-4388(2003/007).

- 20. Boets B, Ghesquière P, van Wieringen A, Wouters J. Speech perception in preschoolers at family risk for dyslexia: Relations with low-level auditory processing and phonological ability. Brain and Language. 2007;101:19–30. doi: 10.1016/j.bandl.2006.06.009.

- 21. Benasich AA, Tallal P. Infant discrimination of rapid auditory cues predicts later language development. Behav Brain Res. 2002;136:31–49. doi: 10.1016/s0166-4328(02)00098-0.

- 22. Helenius P, Uutela K, Hari R. Auditory stream segregation in dyslexic adults. Brain. 1999;122:907–913. doi: 10.1093/brain/122.5.907.

- 23. Sperling AJ, Lu Z-L, Manis FR, Seidenberg MS. Deficits in perceptual noise exclusion in developmental dyslexia. Nature Neuroscience. 2005;8:862–863. doi: 10.1038/nn1474.

- 24. Cunningham J, Nicol T, Zecker SG, Kraus N. Neurobiologic responses to speech in noise in children with learning problems: Deficits and strategies for improvement. Clinical Neurophysiology. 2001;112:758–767. doi: 10.1016/s1388-2457(01)00465-5.

- 25. King C, Warrier CM, Hayes E, Kraus N. Deficits in auditory brainstem encoding of speech sounds in children with learning problems. Neurosci Lett. 2002;319:111–115. doi: 10.1016/s0304-3940(01)02556-3.

- 26. Wible B, Nicol T, Kraus N. Atypical brainstem representation of onset and formant structure of speech sounds in children with language-based learning problems. Biol Psychol. 2004;67:299–317. doi: 10.1016/j.biopsycho.2004.02.002.

- 27. Banai K, Nicol T, Zecker SG, Kraus N. Brainstem timing: Implications for cortical processing and literacy. J Neurosci. 2005;25:9850–9857. doi: 10.1523/JNEUROSCI.2373-05.2005.

- 28. Nagarajan SS, et al. Cortical auditory signal processing in poor readers. Proc Natl Acad Sci USA. 1999;96:6483–6488. doi: 10.1073/pnas.96.11.6483.

- 29. Molfese D. Predicting dyslexia at 8 years of age using neonatal brain responses. Brain and Language. 2000;72:238–245. doi: 10.1006/brln.2000.2287.

- 30. Molfese DL, et al. Reading and cognitive abilities: Longitudinal studies of brain and behavior changes in young children. Annals of Dyslexia. 2002;52:99–119.

- 31. Abrams DA, Nicol T, Zecker SG, Kraus N. Auditory brainstem timing predicts cerebral dominance for speech sounds. J Neurosci. 2006;26:11131–11137. doi: 10.1523/JNEUROSCI.2744-06.2006.

- 32. Kraus N, et al. Auditory neurophysiologic responses and discrimination deficits in children with learning problems. Science. 1996;273:971–973. doi: 10.1126/science.273.5277.971.

- 33. Cunningham J, Nicol T, Zecker SG, Kraus N. Speech-evoked neurophysiologic responses in children with learning problems: Development and behavioral correlates of perception. Ear and Hearing. 2000;21:554–568. doi: 10.1097/00003446-200012000-00003.

- 34. Gaab N, Gabrieli JDE, Deutsch GK, Tallal P, Temple E. Neural correlates of rapid auditory processing are disrupted in children with developmental dyslexia and ameliorated with training: An fMRI study. Restorative Neurology and Neuroscience. 2007;25:295–310.

- 35. Galbraith GC, Arbagey PW, Branski R, Comerci N, Rector PM. Intelligible speech encoded in the human brain stem frequency-following response. NeuroReport. 1995;6:2363–2367. doi: 10.1097/00001756-199511270-00021.

- 36. Kraus N, Nicol T. Brainstem origins for cortical “what” and “where” pathways in the auditory system. Trends Neurosci. 2005;28:176–181. doi: 10.1016/j.tins.2005.02.003.

- 37. Chandrasekaran B, Kraus N. The scalp-recorded brainstem response to speech: Neural origins. Psychophysiology. In press. doi: 10.1111/j.1469-8986.2009.00928.x.

- 38. Tzounopoulos T, Kraus N. Learning to encode timing: Mechanisms of plasticity in the auditory brainstem. Neuron. 2009;62:463–469. doi: 10.1016/j.neuron.2009.05.002.

- 39. Krishnan A, Xu Y, Gandour J, Cariani P. Encoding of pitch in the human brainstem is sensitive to language experience. Cognitive Brain Res. 2005;25:161–168. doi: 10.1016/j.cogbrainres.2005.05.004.

- 40. Musacchia G, Sams M, Skoe E, Kraus N. Musicians have enhanced subcortical auditory and audiovisual processing of speech and music. Proc Natl Acad Sci USA. 2007;104:15894–15898. doi: 10.1073/pnas.0701498104.

- 41. Wong PCM, Skoe E, Russo N, Dees T, Kraus N. Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nature Neuroscience. 2007;10:420–422. doi: 10.1038/nn1872.

- 42. Liu L-F, Palmer AR, Wallace MN. Phase-locked responses to pure tones in the inferior colliculus. J Neurophysiol. 2006;95:1926–1935. doi: 10.1152/jn.00497.2005.

- 43. Wible B, Nicol T, Kraus N. Correlation between brainstem and cortical auditory processes in normal and language-impaired children. Brain. 2005;128:417–423. doi: 10.1093/brain/awh367.

- 44. Banai K, et al. Reading and subcortical auditory function. Cereb Cortex. 2009 Mar 17. doi: 10.1093/cercor/bhp024.

- 45. Basu M, Krishnan A, Weber-Fox C. Brainstem correlates of temporal auditory processing in children with specific language impairment. Developmental Science. 2009 May 28. doi: 10.1111/j.1467-7687.2009.00849.x.

- 46. Summerfield Q, Haggard M. On the dissociation of spectral and temporal cues to the voicing distinction in initial stop consonants. J Acoust Soc Am. 1977;62:436–448. doi: 10.1121/1.381544.

- 47. Delattre PC, Liberman AM, Cooper FS. Acoustic loci and transitional cues for consonants. J Acoust Soc Am. 1955;27:769–773.

- 48. Steinschneider M, Volkov IO, Noh MD, Garell PC, Howard MA III. Temporal encoding of the voice onset time phonetic parameter by field potentials recorded directly from human auditory cortex. J Neurophysiol. 1999;82:2346–2357. doi: 10.1152/jn.1999.82.5.2346.

- 49. Tremblay K, Kraus N, McGee TJ, Ponton CW, Otis B. Central auditory plasticity: Changes in the N1–P2 complex after speech-sound training. Ear and Hearing. 2001;22:79–90. doi: 10.1097/00003446-200104000-00001.

- 50. Obleser J, Elbert TR, Lahiri A, Eulitz C. Cortical representation of vowels reflects acoustic dissimilarity determined by formant frequencies. Cognitive Brain Res. 2003;15:207–213. doi: 10.1016/s0926-6410(02)00193-3.

- 51. Steinschneider M, Reser D, Schroeder CE, Arezzo JC. Tonotopic organization of responses reflecting stop consonant place of articulation in primary auditory cortex (A1) of the monkey. Brain Res. 1995;674:147–152. doi: 10.1016/0006-8993(95)00008-e.

- 52. McGee T, Kraus N, King C, Nicol T. Acoustic elements of speechlike stimuli are reflected in surface recorded responses over the guinea pig temporal lobe. J Acoust Soc Am. 1996;99:3606–3614. doi: 10.1121/1.414958.

- 53. Sinex DG, Chen G-D. Neural responses to the onset of voicing are unrelated to other measures of temporal resolution. J Acoust Soc Am. 2000;107:486–495. doi: 10.1121/1.428316.

- 54. Miller GA, Nicely PE. An analysis of perceptual confusions among some English consonants. J Acoust Soc Am. 1955;27:338–352.

- 55. Elliott LL, Hammer MA, Scholl ME. Fine-grained auditory discrimination in normal children and children with language-learning problems. Journal of Speech and Hearing Research. 1989;32:112–119. doi: 10.1044/jshr.3201.112.

- 56. Benasich AA, Thomas JJ, Choudhury N, Leppanen PHT. The importance of rapid auditory processing abilities to early language development: Evidence from converging methodologies. Developmental Psychobiology. 2002;40:278–292. doi: 10.1002/dev.10032.

- 57. Krishnan A, Swaminathan J, Gandour J. Experience-dependent enhancement of linguistic pitch representation in the brainstem is not specific to a speech context. J Cognit Neurosci. 2009;21:1092–1105. doi: 10.1162/jocn.2009.21077.

- 58. Russo N, Nicol T, Zecker SG, Hayes E, Kraus N. Auditory training improves neural timing in the human brainstem. Behav Brain Res. 2005;156:95–103. doi: 10.1016/j.bbr.2004.05.012.

- 59. Song JH, Skoe E, Wong PCM, Kraus N. Plasticity in the adult human auditory brainstem following short-term linguistic training. J Cognit Neurosci. 2008;20:1892–1902. doi: 10.1162/jocn.2008.20131.

- 60. deBoer J, Thornton ARD. Neural correlates of perceptual learning in the auditory brainstem: Efferent activity predicts and reflects improvement at a speech-in-noise discrimination task. J Neurosci. 2008;28:4929–4937. doi: 10.1523/JNEUROSCI.0902-08.2008.

- 61. Kraus N, Skoe E, Parbery-Clark A, Ashley R. Training-induced malleability in neural encoding of pitch, timbre, and timing: Implications for language and music. Ann N Y Acad Sci. In press. doi: 10.1111/j.1749-6632.2009.04549.x.

- 62. Beitel RE, Schreiner C, Cheung SW, Wang X, Merzenich MM. Reward-dependent plasticity in the primary auditory cortex of adult monkeys trained to discriminate temporally modulated signals. Proc Natl Acad Sci USA. 2003;100:11070–11075. doi: 10.1073/pnas.1334187100.

- 63. Luo F, Wang Q, Kashani A, Yan J. Corticofugal modulation of initial sound processing in the brain. J Neurosci. 2008;28:11615–11621. doi: 10.1523/JNEUROSCI.3972-08.2008.

- 64. Suga N, Gao E, Zhang Y, Ma X, Olsen JF. The corticofugal system for hearing: Recent progress. Proc Natl Acad Sci USA. 2000;97:11807–11814. doi: 10.1073/pnas.97.22.11807.

- 65. Xiao Z, Suga N. Modulation of cochlear hair cells by the auditory cortex in the mustached bat. Nature Neuroscience. 2002;5:57–63. doi: 10.1038/nn786.

- 66. Gao E, Suga N. Experience-dependent plasticity in the auditory cortex and the inferior colliculus of bats: Role of the corticofugal system. Proc Natl Acad Sci USA. 2000;97:8081–8086. doi: 10.1073/pnas.97.14.8081.

- 67. Atiani S, Elhilali M, David SV, Fritz J, Shamma S. Task difficulty and performance induce diverse adaptive patterns in gain and shape of primary auditory cortical receptive fields. Neuron. 2009;61:467–480. doi: 10.1016/j.neuron.2008.12.027.

- 68. Galbraith GC, Bhuta SM, Choate AK, Kitahara JM, Mullen TA Jr. Brain stem frequency-following response to dichotic vowels during attention. NeuroReport. 1998;9:1889–1893. doi: 10.1097/00001756-199806010-00041.

- 69. Muchnik C, et al. Reduced medial olivocochlear bundle system function in children with auditory processing disorders. Audiology & Neuro-Otology. 2004;9:107–114. doi: 10.1159/000076001.

- 70. Nahum M, Nelken I, Ahissar M. Low-level information and high-level perception: The case of speech in noise. PLoS Biol. 2008;6:978–991. doi: 10.1371/journal.pbio.0060126.

- 71. Wechsler Abbreviated Scale of Intelligence (WASI). San Antonio, TX: The Psychological Corporation; 1999.

- 72. DuPaul GJ, Power TJ, Anastopoulos AD, Reid R. ADHD Rating Scale IV. New York: Guilford Press; 1998.

- 73. Wagner RK, Torgesen JK, Rashotte CA. Comprehensive Test of Phonological Processing (CTOPP). Austin, TX: Pro-Ed; 1999.

- 74. Torgesen JK, Wagner RK, Rashotte CA. Test of Word Reading Efficiency (TOWRE). Austin, TX: Pro-Ed; 1999.

- 75. Mather N, Hammill DD, Allen EA, Roberts R. Test of Silent Word Reading Fluency (TOSWRF). Austin, TX: Pro-Ed; 2004.

- 76. Klatt DH. Software for a cascade/parallel formant synthesizer. J Acoust Soc Am. 1980;67:971–995.

- 77. Gorga M, Abbas P, Worthington D. In: The Auditory Brainstem Response. Jacobsen J, editor. San Diego, CA: College-Hill Press; 1985. pp. 49–62.

- 78. Abrams D, Nicol T, Zecker S, Kraus N. Abnormal cortical processing of the syllable rate of speech in poor readers. J Neurosci. 2009;29:7686–7693. doi: 10.1523/JNEUROSCI.5242-08.2009.
